
Random Assignment in Experiments | Introduction & Examples

Published on March 8, 2021 by Pritha Bhandari. Revised on June 22, 2023.

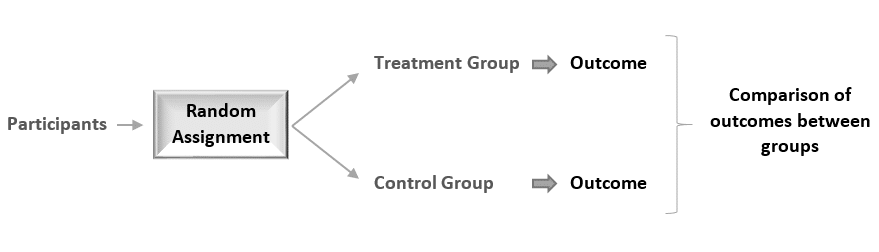

In experimental research, random assignment is a way of placing participants from your sample into different treatment groups using randomization.

With simple random assignment, every member of the sample has a known or equal chance of being placed in a control group or an experimental group. Studies that use simple random assignment are also called completely randomized designs.

Random assignment is a key part of experimental design. It helps you ensure that all groups are comparable at the start of a study: any differences between them are due to random factors, not research biases like sampling bias or selection bias.


Random assignment is an important part of control in experimental research, because it helps strengthen the internal validity of an experiment and avoid biases.

In experiments, researchers manipulate an independent variable to assess its effect on a dependent variable, while controlling for other variables. To do so, they often use different levels of an independent variable for different groups of participants.

This is called a between-groups or independent measures design.

You use three groups of participants that are each given a different level of the independent variable:

- a control group that’s given a placebo (no dosage, to control for a placebo effect),

- an experimental group that’s given a low dosage,

- a second experimental group that’s given a high dosage.

Random assignment helps you make sure that the treatment groups don’t differ in systematic ways at the start of the experiment, as this can seriously affect (and even invalidate) your work.

If you don’t use random assignment, you may not be able to rule out alternative explanations for your results.

- participants recruited from cafes are placed in the control group,

- participants recruited from local community centers are placed in the low dosage experimental group,

- participants recruited from gyms are placed in the high dosage group.

With this type of assignment, it’s hard to tell whether the participant characteristics are the same across all groups at the start of the study. Gym-users may tend to engage in more healthy behaviors than people who frequent cafes or community centers, and this would introduce a healthy user bias in your study.

Although random assignment helps even out baseline differences between groups, it doesn’t always make them completely equivalent. There may still be extraneous variables that differ between groups, and there will always be some group differences that arise from chance.

Most of the time, the random variation between groups is low, and, therefore, it’s acceptable for further analysis. This is especially true when you have a large sample. In general, you should always use random assignment in experiments when it is ethically possible and makes sense for your study topic.
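The claim that chance differences between groups shrink as the sample grows can be checked with a small simulation. The sketch below is illustrative only: the baseline trait, the function name `mean_imbalance`, and the sample sizes are assumptions made for this example, not part of any real study.

```python
import random
import statistics

def mean_imbalance(n_participants, n_trials=200, seed=0):
    """Average absolute gap in a baseline trait between two randomly assigned groups."""
    rng = random.Random(seed)
    gaps = []
    for _ in range(n_trials):
        # Hypothetical baseline trait (e.g., a standardized health score).
        trait = [rng.gauss(0, 1) for _ in range(n_participants)]
        # Random assignment: shuffle, then split the sample in half.
        rng.shuffle(trait)
        half = n_participants // 2
        control, treatment = trait[:half], trait[half:]
        gaps.append(abs(statistics.mean(control) - statistics.mean(treatment)))
    return statistics.mean(gaps)

small = mean_imbalance(20)
large = mean_imbalance(2000)
print(f"n=20:   average baseline gap = {small:.3f}")
print(f"n=2000: average baseline gap = {large:.3f}")
```

In runs like this, the average baseline gap for the larger sample should come out much smaller than for the smaller one, which is the sense in which random variation between groups is "low" for large samples.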


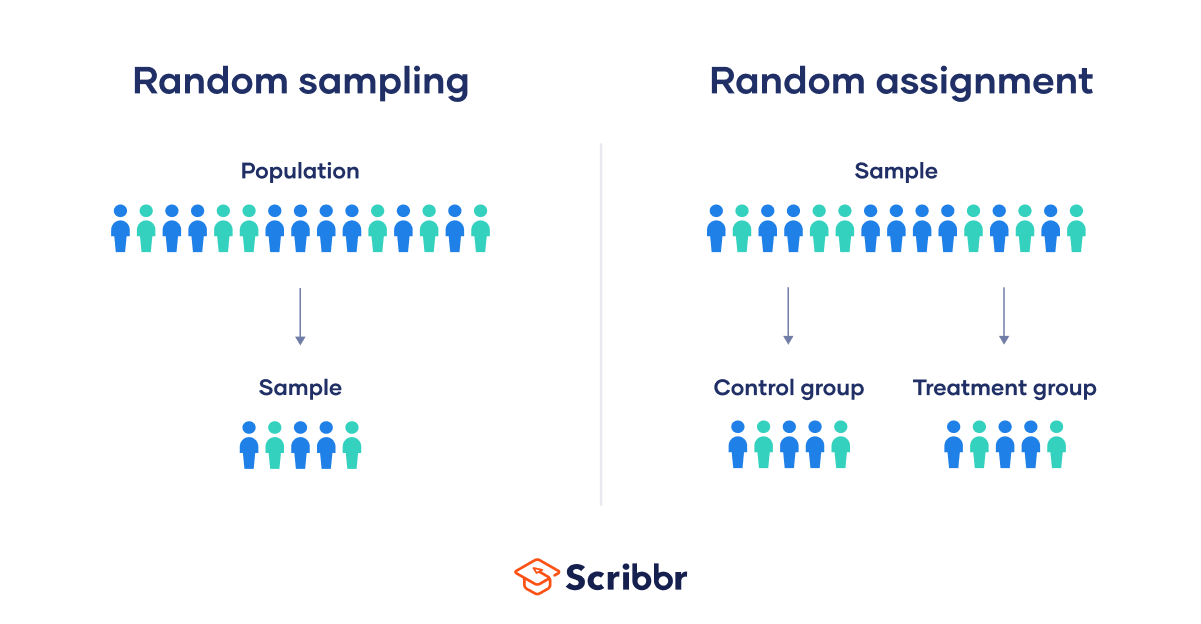

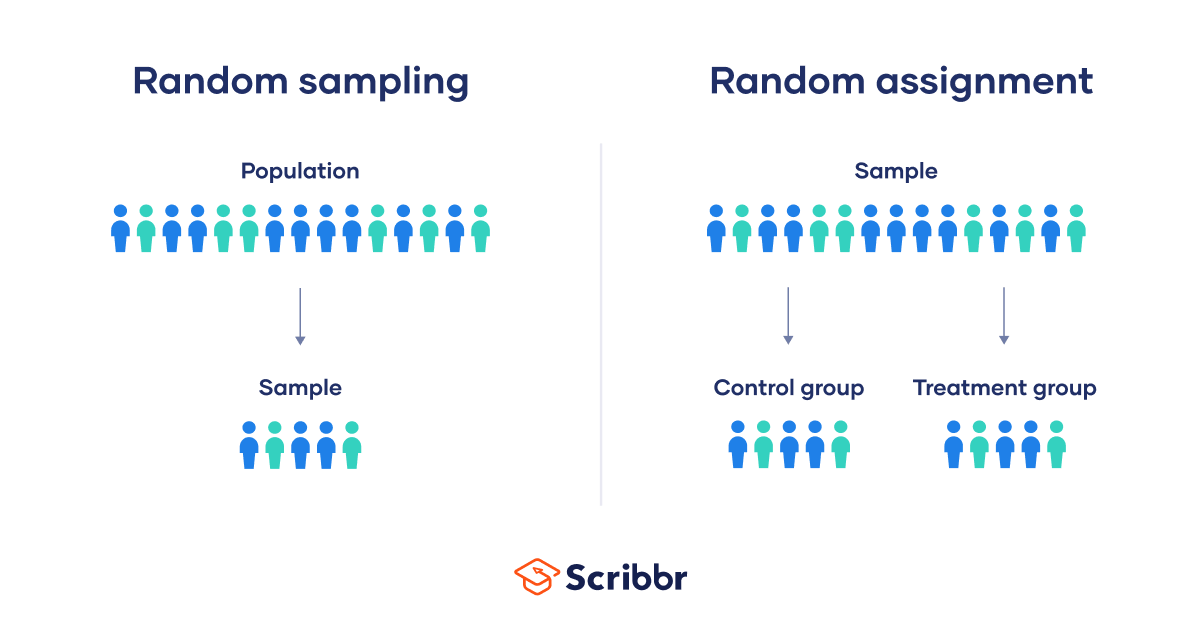

Random sampling and random assignment are both important concepts in research, but it’s important to understand the difference between them.

Random sampling (also called probability sampling or random selection) is a way of selecting members of a population to be included in your study. In contrast, random assignment is a way of sorting the sample participants into control and experimental groups.

While random sampling is used in many types of studies, random assignment is only used in between-subjects experimental designs.

Some studies use both random sampling and random assignment, while others use only one or the other.

Random sampling enhances the external validity or generalizability of your results, because it helps ensure that your sample is unbiased and representative of the whole population. This allows you to make stronger statistical inferences.

You use a simple random sample to collect data. Because you have access to the whole population (all employees), you can assign all 8000 employees a number and use a random number generator to select 300 employees. These 300 employees are your full sample.
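As a minimal sketch of this sampling step, the snippet below numbers 8000 employees and draws 300 of them without replacement. The variable names and the fixed seed are assumptions chosen for illustration.

```python
import random

rng = random.Random(42)  # fixed seed (an arbitrary choice) so the draw can be reproduced

# Assign every employee a number from 1 to 8000.
employee_ids = list(range(1, 8001))

# Draw 300 distinct employees; sample() selects without replacement.
sample_ids = rng.sample(employee_ids, k=300)

print(len(sample_ids), len(set(sample_ids)))  # 300 300
```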

Random assignment enhances the internal validity of the study, because it helps ensure that there are no systematic differences between the participants in each group. This helps you conclude that the outcomes can be attributed to the independent variable.

- a control group that receives no intervention.

- an experimental group that has a remote team-building intervention every week for a month.

You use random assignment to place participants into the control or experimental group. To do so, you take your list of participants and assign each participant a number. Again, you use a random number generator to place each participant in one of the two groups.
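One way to carry out this step in code is to shuffle the numbered participant list and split it in half, which is a common variant that also keeps the two groups the same size. This is a sketch under that assumption; the function name `assign_two_groups` is hypothetical.

```python
import random

def assign_two_groups(participant_ids, seed=None):
    """Shuffle the participant numbers and split them into two equal-sized groups."""
    rng = random.Random(seed)
    shuffled = list(participant_ids)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return {
        "control": sorted(shuffled[:half]),
        "experimental": sorted(shuffled[half:]),
    }

# 300 sampled participants, numbered 1..300 for the purpose of this example.
groups = assign_two_groups(range(1, 301), seed=7)
print(len(groups["control"]), len(groups["experimental"]))  # 150 150
```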

To use simple random assignment, you start by giving every member of the sample a unique number. Then, you can use computer programs or manual methods to randomly assign each participant to a group.

- Random number generator: Use a computer program to generate random numbers from the list for each group.

- Lottery method: Place all numbers individually in a hat or a bucket, and draw numbers at random for each group.

- Flip a coin: When you only have two groups, for each number on the list, flip a coin to decide if they’ll be in the control or the experimental group.

- Roll a die: When you have three groups, for each number on the list, roll a die to decide which of the groups they will be in. For example, assume that rolling 1 or 2 lands them in a control group; 3 or 4 in an experimental group; and 5 or 6 in a second control or experimental group.

This type of random assignment is the most powerful method of placing participants in conditions, because each individual has an equal chance of being placed in any one of your treatment groups.
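The random number generator approach generalizes to any number of groups. The sketch below shuffles the participant numbers and deals them out in turn, so every participant has the same chance of landing in each group. The function name and the group labels (borrowed from the dosage example) are assumptions for illustration.

```python
import random

def assign_to_groups(participant_ids, group_names, seed=None):
    """Randomly assign participants to groups of (nearly) equal size."""
    rng = random.Random(seed)
    shuffled = list(participant_ids)
    rng.shuffle(shuffled)  # the shuffle is the only source of randomness
    assignment = {name: [] for name in group_names}
    for position, pid in enumerate(shuffled):
        # Deal shuffled participants to the groups in turn, like dealing cards.
        assignment[group_names[position % len(group_names)]].append(pid)
    return assignment

groups = assign_to_groups(range(1, 31), ["placebo", "low dosage", "high dosage"], seed=1)
for name, members in groups.items():
    print(name, len(members))
```

Dealing from a shuffled list keeps group sizes balanced, whereas an independent coin flip or die roll per participant can leave the groups unequal in size.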

Random assignment in block designs

In more complicated experimental designs, random assignment is only used after participants are grouped into blocks based on some characteristic (e.g., test score or demographic variable). These groupings mean that you need a larger sample to achieve high statistical power.

For example, a randomized block design involves placing participants into blocks based on a shared characteristic (e.g., college students versus graduates), and then using random assignment within each block to assign participants to every treatment condition. This helps you assess whether the characteristic affects the outcomes of your treatment.
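A randomized block design can be sketched as "group first, then randomize within each group." The code below assumes each participant is a dictionary with an `id` and a blocking field; the function name `block_randomize` and the example data are hypothetical.

```python
import random
from collections import defaultdict

def block_randomize(participants, block_key, conditions, seed=None):
    """Randomly assign participants to conditions separately within each block."""
    rng = random.Random(seed)
    blocks = defaultdict(list)
    for person in participants:
        blocks[person[block_key]].append(person)

    assignment = {}
    for members in blocks.values():
        members = list(members)
        rng.shuffle(members)  # randomize order within this block only
        for position, person in enumerate(members):
            assignment[person["id"]] = conditions[position % len(conditions)]
    return assignment

# Illustrative data: 10 college students and 10 graduates.
participants = [
    {"id": i, "education": "student" if i <= 10 else "graduate"}
    for i in range(1, 21)
]
assignment = block_randomize(participants, "education", ["control", "treatment"], seed=3)
print(assignment)
```

Because the shuffle happens inside each block, every block contributes equally to every condition, which is what allows the blocking characteristic to be compared across conditions.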

In an experimental matched design, you use blocking and then match up individual participants from each block based on specific characteristics. Within each matched pair or group, you randomly assign each participant to one of the conditions in the experiment and compare their outcomes.
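A simplified sketch of matched-pair assignment follows. It assumes matching on a single numeric characteristic (here a hypothetical `score` field) by pairing neighbours in sorted order, which is only one of several ways to form matches; the function name and data are illustrative.

```python
import random

def matched_pair_assignment(participants, match_key, seed=None):
    """Pair participants with similar values, then randomize within each pair."""
    rng = random.Random(seed)
    ordered = sorted(participants, key=lambda person: person[match_key])
    assignment = {}
    # Take neighbours two at a time; with an odd count, the last person stays unassigned.
    for i in range(0, len(ordered) - 1, 2):
        pair = [ordered[i], ordered[i + 1]]
        rng.shuffle(pair)  # chance decides who in the pair gets which condition
        assignment[pair[0]["id"]] = "control"
        assignment[pair[1]["id"]] = "treatment"
    return assignment

# Illustrative participants with made-up test scores.
participants = [
    {"id": i, "score": score}
    for i, score in enumerate([55, 91, 62, 78, 88, 59, 74, 67], start=1)
]
assignment = matched_pair_assignment(participants, "score", seed=5)
print(assignment)
```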

Sometimes, it’s not relevant or ethical to use simple random assignment, so groups are assigned in a different way.

When comparing different groups

Sometimes, differences between participants are the main focus of a study, for example, when comparing men and women or people with and without health conditions. Participants are not randomly assigned to different groups, but instead assigned based on their characteristics.

In this type of study, the characteristic of interest (e.g., gender) is an independent variable, and the groups differ based on the different levels (e.g., men, women, etc.). All participants are tested the same way, and then their group-level outcomes are compared.

When it’s not ethically permissible

When studying unhealthy or dangerous behaviors, random assignment is often not ethically permissible. For example, if you’re studying heavy drinkers and social drinkers, it’s unethical to randomly assign participants to one of the two groups and ask them to drink large amounts of alcohol for your experiment.

When you can’t assign participants to groups, you can also conduct a quasi-experimental study. In a quasi-experiment, you study the outcomes of pre-existing groups who receive treatments that you may not have any control over (e.g., heavy drinkers and social drinkers). These groups aren’t randomly assigned, but may be considered comparable when some other variables (e.g., age or socioeconomic status) are controlled for.



In experimental research, random assignment is a way of placing participants from your sample into different groups using randomization. With this method, every member of the sample has a known or equal chance of being placed in a control group or an experimental group.

Random selection, or random sampling, is a way of selecting members of a population for your study’s sample.

In contrast, random assignment is a way of sorting the sample into control and experimental groups.

Random sampling enhances the external validity or generalizability of your results, while random assignment improves the internal validity of your study.

Random assignment is used in experiments with a between-groups or independent measures design. In this research design, there’s usually a control group and one or more experimental groups. Random assignment helps ensure that the groups are comparable.

In general, you should always use random assignment in this type of experimental design when it is ethically possible and makes sense for your study topic.

To implement random assignment, assign a unique number to every member of your study’s sample.

Then, you can use a random number generator or a lottery method to randomly assign each number to a control or experimental group. You can also do so manually, by flipping a coin or rolling a die to randomly assign participants to groups.


Bhandari, P. (2023, June 22). Random Assignment in Experiments | Introduction & Examples. Scribbr. Retrieved August 21, 2024, from https://www.scribbr.com/methodology/random-assignment/


Random Assignment in Psychology: Definition & Examples

Julia Simkus

Editor at Simply Psychology

BA (Hons) Psychology, Princeton University

Julia Simkus is a graduate of Princeton University with a Bachelor of Arts in Psychology. She is currently studying for a Master's Degree in Counseling for Mental Health and Wellness in September 2023. Julia's research has been published in peer reviewed journals.


Saul McLeod, PhD

Editor-in-Chief for Simply Psychology

BSc (Hons) Psychology, MRes, PhD, University of Manchester

Saul McLeod, PhD., is a qualified psychology teacher with over 18 years of experience in further and higher education. He has been published in peer-reviewed journals, including the Journal of Clinical Psychology.

Olivia Guy-Evans, MSc

Associate Editor for Simply Psychology

BSc (Hons) Psychology, MSc Psychology of Education

Olivia Guy-Evans is a writer and associate editor for Simply Psychology. She has previously worked in healthcare and educational sectors.

In psychology, random assignment refers to the practice of allocating participants to different experimental groups in a study in a completely unbiased way, ensuring each participant has an equal chance of being assigned to any group.

In experimental research, random assignment, or random placement, organizes participants from your sample into different groups using randomization.

Random assignment uses chance procedures to ensure that each participant has an equal opportunity of being assigned to either a control or experimental group.

The control group does not receive the treatment in question, whereas the experimental group does receive the treatment.

When using random assignment, neither the researcher nor the participant can choose the group to which the participant is assigned. This ensures that any differences between and within the groups are not systematic at the onset of the study.

In a study to test the success of a weight-loss program, investigators randomly assigned a pool of participants to one of two groups.

Group A participants participated in the weight-loss program for 10 weeks and took a class where they learned about the benefits of healthy eating and exercise.

Group B participants read a 200-page book that explains the benefits of weight loss.

The researchers found that those who participated in the program and took the class were more likely to lose weight than those in the other group that received only the book.

Importance

Random assignment helps ensure that the groups in an experiment are comparable before the independent variable is applied.

In experiments, researchers will manipulate an independent variable to assess its effect on a dependent variable, while controlling for other variables. Random assignment increases the likelihood that the treatment groups are the same at the onset of a study.

Thus, any changes that result from the independent variable can be assumed to be a result of the treatment of interest. This is particularly important for eliminating sources of bias and strengthening the internal validity of an experiment.

Random assignment is the best method for inferring a causal relationship between a treatment and an outcome.

Random Selection vs. Random Assignment

Random selection (also called probability sampling or random sampling) is a way of randomly selecting members of a population to be included in your study.

On the other hand, random assignment is a way of sorting the sample participants into control and treatment groups.

Random selection ensures that everyone in the population has an equal chance of being selected for the study. Once the pool of participants has been chosen, experimenters use random assignment to assign participants into groups.

Random assignment is only used in between-subjects experimental designs, while random selection can be used in a variety of study designs.

Random Assignment vs Random Sampling

Random sampling refers to selecting participants from a population so that each individual has an equal chance of being chosen. This method enhances the representativeness of the sample.

Random assignment, on the other hand, is used in experimental designs once participants are selected. It involves allocating these participants to different experimental groups or conditions randomly.

This helps ensure that any differences in results across groups are due to manipulating the independent variable, not preexisting differences among participants.

When to Use Random Assignment

Random assignment is used in experiments with a between-groups or independent measures design.

In these research designs, researchers will manipulate an independent variable to assess its effect on a dependent variable, while controlling for other variables.

There is usually a control group and one or more experimental groups. Random assignment helps ensure that the groups are comparable at the onset of the study.

How to Use Random Assignment

There are a variety of ways to assign participants into study groups randomly. Here are a handful of popular methods:

- Random Number Generator: Give each member of the sample a unique number; use a computer program to randomly generate a number from the list for each group.

- Lottery: Give each member of the sample a unique number. Place all numbers in a hat or bucket and draw numbers at random for each group.

- Flipping a Coin: Flip a coin for each participant to decide if they will be in the control group or experimental group (this method can only be used when you have just two groups).

- Roll a Die: For each number on the list, roll a die to decide which of the groups they will be in. For example, assume that rolling 1, 2, or 3 places them in a control group and rolling 4, 5, or 6 lands them in an experimental group.
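The die-roll method can be mimicked in code by drawing an integer from 1 to 6 for each participant. The sketch below assumes a mapping in which rolls of 1 to 3 go to the control group and rolls of 4 to 6 go to the experimental group, so the two outcomes are equally likely and do not overlap; the function name is hypothetical.

```python
import random

def roll_die_assignment(participant_ids, seed=None):
    """Assign each participant by an independent simulated die roll."""
    rng = random.Random(seed)
    assignment = {}
    for pid in participant_ids:
        roll = rng.randint(1, 6)  # inclusive on both ends, like a six-sided die
        assignment[pid] = "control" if roll <= 3 else "experimental"
    return assignment

assignment = roll_die_assignment(range(1, 41), seed=11)
n_control = sum(1 for group in assignment.values() if group == "control")
print("control:", n_control, "experimental:", len(assignment) - n_control)
```

Because each roll is independent, the two groups will not necessarily end up the same size, which is one reason the lottery or shuffled-list methods are sometimes preferred for small samples.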

When is Random Assignment not used?

- When it is not ethically permissible: Randomization is only ethical if the researcher has no evidence that one treatment is superior to the other or that one treatment might have harmful side effects.

- When answering non-causal questions: If the researcher is just interested in predicting the probability of an event, the causal relationship between the variables is not important and observational designs would be more suitable than random assignment.

- When studying the effect of variables that cannot be manipulated: Some risk factors cannot be manipulated and so it would not make any sense to study them in a randomized trial. For example, we cannot randomly assign participants into categories based on age, gender, or genetic factors.

Drawbacks of Random Assignment

While randomization assures an unbiased assignment of participants to groups, it does not guarantee the equality of these groups. There could still be extraneous variables that differ between groups or group differences that arise from chance. Additionally, there is still an element of luck with random assignments.

Thus, researchers cannot produce perfectly equal groups for each specific study. Differences between the treatment group and control group might still exist, and the results of a randomized trial may sometimes be wrong, but this is absolutely okay.

Scientific evidence is a long and continuous process, and the groups will tend to be equal in the long run when data is aggregated in a meta-analysis.

Additionally, external validity (i.e., the extent to which the researcher can use the results of the study to generalize to the larger population) can be limited in randomized experiments.

Random assignment is challenging to implement outside of controlled laboratory conditions and might not represent what would happen in the real world at the population level.

Random assignment can also be more costly than simple observational studies, where an investigator is just observing events without intervening with the population.

Randomization also can be time-consuming and challenging, especially when participants refuse to receive the assigned treatment or do not adhere to recommendations.

What is the difference between random sampling and random assignment?

Random sampling refers to randomly selecting a sample of participants from a population. Random assignment refers to randomly assigning participants to treatment groups from the selected sample.

Does random assignment increase internal validity?

Yes, random assignment helps ensure that there are no systematic differences between the participants in each group, enhancing the study’s internal validity.

Does random assignment reduce sampling error?

No. Random assignment determines how participants who are already in the sample are allocated to a control group or an experimental group, so it does not make the sample more representative of the population.

Sampling error arises because a sample only approximates the population from which it is drawn. Random sampling, not random assignment, is the way to minimize sampling error.

When is random assignment not possible?

Random assignment is not possible when the experimenters cannot control the treatment or independent variable.

For example, if you want to compare how men and women perform on a test, you cannot randomly assign subjects to these groups.

Participants are not randomly assigned to different groups in this study, but instead assigned based on their characteristics.

Does random assignment eliminate confounding variables?

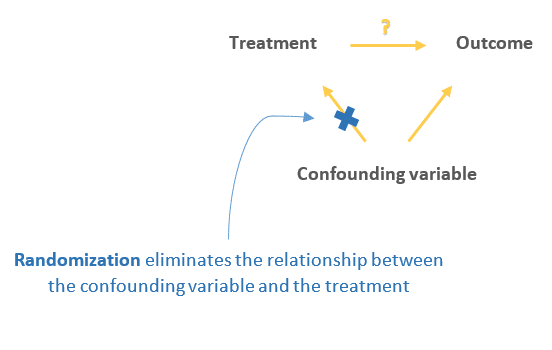

Not completely, but it reduces their influence. Random assignment distributes confounding variables at random among the study groups, which on average breaks any systematic relationship between a confounding variable and the treatment. Chance differences between groups can still remain.

Why is random assignment of participants to treatment conditions in an experiment used?

Random assignment is used to ensure that all groups are comparable at the start of a study. This allows researchers to conclude that the outcomes of the study can be attributed to the intervention at hand and to rule out alternative explanations for study results.

Further Reading

- Bogomolnaia, A., & Moulin, H. (2001). A new solution to the random assignment problem. Journal of Economic Theory, 100(2), 295-328.

- Krause, M. S., & Howard, K. I. (2003). What random assignment does and does not do. Journal of Clinical Psychology, 59(7), 751-766.


Statistics By Jim

Making statistics intuitive

Random Assignment in Experiments

By Jim Frost

Random assignment uses chance to assign subjects to the control and treatment groups in an experiment. This process helps ensure that the groups are equivalent at the beginning of the study, which makes it safer to assume the treatments caused any differences between groups that the experimenters observe at the end of the study.

At this point, you might be wondering about all of those studies that use statistics to assess the effects of different treatments. There’s a critical separation between significance and causality:

- Statistical procedures determine whether an effect is significant.

- Experimental designs determine how confidently you can assume that a treatment causes the effect.

In this post, learn how using random assignment in experiments can help you identify causal relationships.

Correlation, Causation, and Confounding Variables

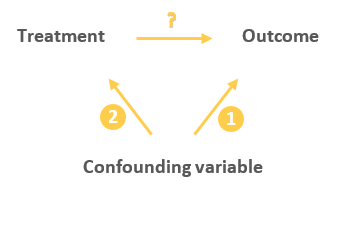

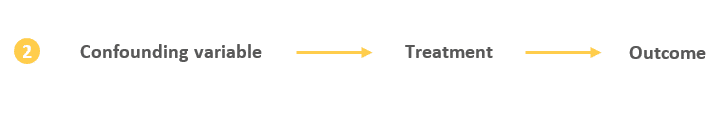

Random assignment helps you separate causation from correlation and rule out confounding variables. As a critical component of the scientific method, experiments typically set up contrasts between a control group and one or more treatment groups. The idea is to determine whether the effect, which is the difference between a treatment group and the control group, is statistically significant. If the effect is significant, group assignment correlates with different outcomes.

However, as you have no doubt heard, correlation does not necessarily imply causation. In other words, the experimental groups can have different mean outcomes, but the treatment might not be causing those differences even though the differences are statistically significant.

The difficulty in definitively stating that a treatment caused the difference is due to potential confounding variables or confounders. Confounders are alternative explanations for differences between the experimental groups. Confounding variables correlate with both the experimental groups and the outcome variable. In this situation, confounding variables can be the actual cause for the outcome differences rather than the treatments themselves. As you’ll see, if an experiment does not account for confounding variables, they can bias the results and make them untrustworthy.


Example of Confounding in an Experiment

Suppose an experiment on vitamin supplements compares two groups:

- Control group: Does not consume vitamin supplements.

- Treatment group: Regularly consumes vitamin supplements.

Imagine we measure a specific health outcome. After the experiment is complete, we perform a 2-sample t-test to determine whether the mean outcomes for these two groups are different. Assume the test results indicate that the mean health outcome in the treatment group is significantly better than the control group.
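To make the test step concrete, here is a sketch that computes the test statistic and degrees of freedom for Welch's version of the 2-sample t-test (the variant that does not assume equal variances), using only the standard library. The outcome values are made-up illustrative numbers, not data from any study, and the function name `welch_t` is an assumption.

```python
import math
import statistics

def welch_t(sample_a, sample_b):
    """Return Welch's t statistic and approximate degrees of freedom."""
    mean_a, mean_b = statistics.mean(sample_a), statistics.mean(sample_b)
    # Squared standard error of each group mean (sample variance / n).
    se2_a = statistics.variance(sample_a) / len(sample_a)
    se2_b = statistics.variance(sample_b) / len(sample_b)
    t = (mean_a - mean_b) / math.sqrt(se2_a + se2_b)
    # Welch-Satterthwaite approximation for the degrees of freedom.
    df = (se2_a + se2_b) ** 2 / (
        se2_a ** 2 / (len(sample_a) - 1) + se2_b ** 2 / (len(sample_b) - 1)
    )
    return t, df

# Hypothetical health scores (higher is better), invented for this example.
treatment = [72, 75, 78, 80, 74, 77]
control = [70, 68, 73, 71, 69, 72]
t_stat, df = welch_t(treatment, control)
print(f"t = {t_stat:.2f}, df = {df:.1f}")
```

Turning the statistic into a p-value requires the t distribution; in practice a statistics package would handle that step (for example, SciPy's `scipy.stats.ttest_ind` with `equal_var=False`).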

Why can’t we assume that the vitamins improved the health outcomes? After all, only the treatment group took the vitamins.


Alternative Explanations for Differences in Outcomes

The answer to that question depends on how we assigned the subjects to the experimental groups. If we let the subjects decide which group to join based on their existing vitamin habits, it opens the door to confounding variables. It’s reasonable to assume that people who take vitamins regularly also tend to have other healthy habits. These habits are confounders because they correlate with both vitamin consumption (experimental group) and the health outcome measure.

Random assignment prevents this self sorting of participants and reduces the likelihood that the groups start with systematic differences.

In fact, studies have found that supplement users are more physically active, have healthier diets, have lower blood pressure, and so on compared to those who don’t take supplements. If subjects who already take vitamins regularly join the treatment group voluntarily, they bring these healthy habits disproportionately to the treatment group. Consequently, these habits will be much more prevalent in the treatment group than the control group.

The healthy habits are the confounding variables—the potential alternative explanations for the difference in our study’s health outcome. It’s entirely possible that these systematic differences between groups at the start of the study might cause the difference in the health outcome at the end of the study—and not the vitamin consumption itself!

If our experiment doesn’t account for these confounding variables, we can’t trust the results. While we obtained statistically significant results with the 2-sample t-test for health outcomes, we don’t know for sure whether the vitamins, the systematic difference in habits, or some combination of the two caused the improvements.
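A small simulation can illustrate how self-selection produces a misleading difference. In the sketch below, vitamins are given no effect at all: the outcome depends only on healthy habits. All probabilities, effect sizes, and names are assumptions chosen for illustration.

```python
import random
import statistics

def simulate(n=5000, self_select=True, seed=0):
    """Return mean(treatment) - mean(control) when vitamins have NO true effect."""
    rng = random.Random(seed)
    treatment, control = [], []
    for _ in range(n):
        healthy_habits = rng.random() < 0.5
        # The outcome depends only on habits (assumed +1.0 boost), never on vitamins.
        outcome = rng.gauss(0, 1) + (1.0 if healthy_habits else 0.0)
        if self_select:
            # Assumed behavior: people with healthy habits mostly choose vitamins.
            p_treatment = 0.8 if healthy_habits else 0.2
        else:
            # Random assignment: a fair coin toss for everyone.
            p_treatment = 0.5
        if rng.random() < p_treatment:
            treatment.append(outcome)
        else:
            control.append(outcome)
    return statistics.mean(treatment) - statistics.mean(control)

print(f"self-selected groups:     difference = {simulate(self_select=True):.2f}")
print(f"randomly assigned groups: difference = {simulate(self_select=False):.2f}")
```

With self-selection, the treatment group should show a clearly better mean outcome even though the simulated vitamins do nothing, while under random assignment the difference should stay close to zero.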

Learn why many randomized clinical experiments use a placebo to control for the Placebo Effect.

Experiments Must Account for Confounding Variables

Your experimental design must account for confounding variables to avoid their problems. Scientific studies commonly use the following methods to handle confounders:

- Use control variables to keep them constant throughout an experiment.

- Statistically control for them in an observational study.

- Use random assignment to reduce the likelihood that systematic differences exist between experimental groups when the study begins.

Let’s take a look at how random assignment works in an experimental design.

Random Assignment Can Reduce the Impact of Confounding Variables

Note that random assignment is different than random sampling. Random sampling is a process for obtaining a sample that accurately represents a population.

Random assignment uses a chance process to assign subjects to experimental groups. Using random assignment requires that the experimenters can control the group assignment for all study subjects. For our study, we must be able to assign our participants to either the control group or the supplement group. Clearly, if we don’t have the ability to assign subjects to the groups, we can’t use random assignment!

Additionally, the process must have an equal probability of assigning a subject to any of the groups. For example, in our vitamin supplement study, we can use a coin toss to assign each subject to either the control group or supplement group. For more complex experimental designs, we can use a random number generator or even draw names out of a hat.

Random Assignment Distributes Confounders Equally

The random assignment process distributes confounding properties amongst your experimental groups equally. In other words, randomness helps eliminate systematic differences between groups. For our study, flipping the coin tends to equalize the distribution of subjects with healthier habits between the control and treatment group. Consequently, these two groups should start roughly equal for all confounding variables, including healthy habits!

Random assignment is a simple, elegant solution to a complex problem. For any given study area, there can be a long list of confounding variables that you could worry about. However, using random assignment, you don’t need to know what they are, how to detect them, or even measure them. Instead, use random assignment to equalize them across your experimental groups so they’re not a problem.

Because random assignment helps ensure that the groups are comparable when the experiment begins, you can be more confident that the treatments caused the post-study differences. Random assignment helps increase the internal validity of your study.

Comparing the Vitamin Study With and Without Random Assignment

Let’s compare two scenarios involving our hypothetical vitamin study. We’ll assume that the study obtains statistically significant results in both cases.

Scenario 1: We don’t use random assignment and, unbeknownst to us, subjects with healthier habits disproportionately end up in the supplement treatment group. The experimental groups differ by both healthy habits and vitamin consumption. Consequently, we can’t determine whether it was the habits or vitamins that improved the outcomes.

Scenario 2: We use random assignment and, consequently, the treatment and control groups start with roughly equal levels of healthy habits. The intentional introduction of vitamin supplements in the treatment group is the primary difference between the groups. Consequently, we can more confidently assert that the supplements caused an improvement in health outcomes.

For both scenarios, the statistical results could be identical. However, the methodology behind the second scenario makes a stronger case for a causal relationship between vitamin supplement consumption and health outcomes.

How important is it to use the correct methodology? Well, if the relationship between vitamins and health outcomes is not causal, then consuming vitamins won’t cause your health outcomes to improve regardless of what the study indicates. Instead, it’s probably all the other healthy habits!

Learn more about Randomized Controlled Trials (RCTs) that are the gold standard for identifying causal relationships because they use random assignment.

Drawbacks of Random Assignment

Random assignment helps reduce the chances of systematic differences between the groups at the start of an experiment and, thereby, mitigates the threats of confounding variables and alternative explanations. However, the process does not always equalize all of the confounding variables. Its random nature tends to eliminate systematic differences, but it doesn’t always succeed.

Sometimes random assignment is impossible because the experimenters cannot control the treatment or independent variable. For example, if you want to determine how individuals with and without depression perform on a test, you cannot randomly assign subjects to these groups. The same difficulty occurs when you’re studying differences between genders.

In other cases, there might be ethical issues. For example, in a randomized experiment, the researchers would want to withhold treatment for the control group. However, if the treatments are vaccinations, it might be unethical to withhold the vaccinations.

Other times, random assignment might be possible, but it is very challenging. For example, with vitamin consumption, it’s generally thought that if vitamin supplements cause health improvements, it’s only after very long-term use. It’s hard to enforce random assignment with a strict regimen for usage in one group and non-usage in the other group over the long run. Or imagine a study about smoking. The researchers would find it difficult to assign subjects to the smoking and non-smoking groups randomly!

Fortunately, if you can’t use random assignment to help reduce the problem of confounding variables, there are different methods available. The other primary approach is to perform an observational study and incorporate the confounders into the statistical model itself. For more information, read my post Observational Studies Explained .
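As a sketch of what "incorporating a confounder into the model" can look like, the Python example below compares a naive group difference with an estimate adjusted for a measured confounder. The data are simulated and every name (`slope`, `residuals`, `naive_and_adjusted`) is hypothetical. The adjustment uses residual-on-residual regression, which gives the same treatment coefficient as a multiple regression of the outcome on both the treatment and the confounder. It only works here because the confounder is measured and enters the outcome linearly, which is an assumption of the simulation.

```python
import random


def slope(x, y):
    """Ordinary least squares slope of y on x (with an intercept)."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    return sxy / sxx


def residuals(x, y):
    """What is left of y after removing its linear relationship with x."""
    b = slope(x, y)
    mx, my = sum(x) / len(x), sum(y) / len(y)
    return [(yi - my) - b * (xi - mx) for xi, yi in zip(x, y)]


def naive_and_adjusted(n=20000, seed=2):
    rng = random.Random(seed)
    habits = [rng.gauss(0, 1) for _ in range(n)]  # measured confounder
    # Healthier subjects are more likely to take the supplement.
    vitamin = [1.0 if h + rng.gauss(0, 1) > 0 else 0.0 for h in habits]
    # The supplement has no true effect in this simulation.
    outcome = [h + rng.gauss(0, 1) for h in habits]
    naive = slope(vitamin, outcome)  # equals the raw difference in means
    adjusted = slope(residuals(habits, vitamin), residuals(habits, outcome))
    return naive, adjusted


if __name__ == "__main__":
    naive, adjusted = naive_and_adjusted()
    print("naive estimate:   ", round(naive, 3))
    print("adjusted estimate:", round(adjusted, 3))
```

Unlike random assignment, this kind of adjustment cannot protect against confounders that were never measured.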

November 13, 2019 at 4:59 am

Hi Jim, I have a question of randomly assigning participants to one of two conditions when it is an ongoing study and you are not sure of how many participants there will be. I am using this random assignment tool for factorial experiments. http://methodologymedia.psu.edu/most/rannumgenerator It asks you for the total number of participants but at this point, I am not sure how many there will be. Thanks for any advice you can give me, Floyd

May 28, 2019 at 11:34 am

Jim, can you comment on the validity of using the following approach when we can’t use random assignment? I’m in education, and we have an ACT prep course that we offer. We can’t force students to take it and we can’t keep them from taking it either. But we want to know if it’s working. Let’s say that by senior year all students who are going to take the ACT have taken it. Let’s also say that I’m only including students who have taken it twice (so I can show growth between first and second time taking it). What I’ve done to address confounders is to go back to say 8th or 9th grade (prior to anyone taking the ACT or the ACT prep course) and run an analysis showing the two groups are not significantly different to start with. Is this valid? If the ACT prep students were higher achievers in 8th or 9th grade, I could not assume my prep course is effecting greater growth, but if they were not significantly different in 8th or 9th grade, I can assume the significant difference in ACT growth (from first to second testing) is due to the prep course. Yes or no?

May 26, 2019 at 5:37 pm

Nice post! I think the key to understanding scientific research is to understand randomization. And most people don’t get it.

May 27, 2019 at 9:48 pm

Thank you, Anoop!

I think randomness in an experiment is a funny thing. The issue of confounding factors is a serious problem. You might not even know what they are! But, use random assignment and, voila, the problem usually goes away! If you can’t use random assignment, suddenly you have a whole host of issues to worry about, which I’ll be writing about in more detail in my upcoming post about observational experiments!

Institution for Social and Policy Studies

Why Randomize?

About Randomized Field Experiments

Randomized field experiments allow researchers to scientifically measure the impact of an intervention on a particular outcome of interest.

What is a randomized field experiment?

In a randomized experiment, a study sample is divided into one group that will receive the intervention being studied (the treatment group) and another group that will not receive the intervention (the control group). For instance, a study sample might consist of all registered voters in a particular city. This sample will then be randomly divided into treatment and control groups. Perhaps 40% of the sample will be on a campaign’s Get-Out-the-Vote (GOTV) mailing list and the other 60% of the sample will not receive the GOTV mailings. The outcome measured, voter turnout, can then be compared in the two groups. The difference in turnout will reflect the effectiveness of the intervention.
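The 40%/60% division described above can be sketched in a few lines of Python. This is only an illustration: the `split_sample` function, the voter IDs, and the seed are hypothetical, and a real study would document its own randomization procedure.

```python
import random


def split_sample(ids, treat_fraction=0.4, seed=None):
    """Randomly divide a sample into treatment and control groups."""
    rng = random.Random(seed)
    shuffled = list(ids)      # copy so the caller's list is left untouched
    rng.shuffle(shuffled)     # every ordering is equally likely
    n_treat = round(len(shuffled) * treat_fraction)
    return shuffled[:n_treat], shuffled[n_treat:]


if __name__ == "__main__":
    voters = [f"voter-{i:04d}" for i in range(1000)]  # hypothetical voter file
    treatment, control = split_sample(voters, treat_fraction=0.4, seed=42)
    print(len(treatment), "voters receive GOTV mailings")
    print(len(control), "voters receive no mailings")
```

Shuffling the whole list and cutting it at a fixed point gives exact group sizes, whereas flipping a weighted coin for each voter would only give roughly 40% in treatment.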

What does random assignment mean?

The key to randomized experimental research design is in the random assignment of study subjects – for example, individual voters, precincts, media markets or some other group – into treatment or control groups. Randomization has a very specific meaning in this context. It does not refer to haphazard or casual choosing of some and not others. Randomization in this context means that care is taken to ensure that no pattern exists between the assignment of subjects into groups and any characteristics of those subjects. Every subject is as likely as any other to be assigned to the treatment (or control) group.

Randomization is generally achieved by employing a computer program containing a random number generator. Randomization procedures differ based upon the research design of the experiment. Individuals or groups may be randomly assigned to treatment or control groups. Some research designs stratify subjects by geographic, demographic or other factors prior to random assignment in order to maximize the statistical power of the estimated effect of the treatment (e.g., GOTV intervention). Information about the randomization procedure is included in each experiment summary on the site.
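When groups rather than individuals are assigned, the random draw happens at the group level and every member inherits the group's condition. The following Python sketch shows one way this might look for precincts. The `assign_precincts` function and the voter-to-precinct mapping are hypothetical, and the even split between conditions is an assumption of the example.

```python
import random


def assign_precincts(voter_precinct, seed=None):
    """Assign whole precincts to a condition; voters inherit it.

    voter_precinct maps each voter ID to the precinct it belongs to.
    """
    rng = random.Random(seed)
    precincts = sorted(set(voter_precinct.values()))
    rng.shuffle(precincts)
    # First half of the shuffled precincts receives the treatment.
    treated = set(precincts[: len(precincts) // 2])
    return {
        voter: ("treatment" if precinct in treated else "control")
        for voter, precinct in voter_precinct.items()
    }


if __name__ == "__main__":
    # Hypothetical sample: 60 voters spread over 6 precincts.
    mapping = {f"voter{i}": f"precinct{i % 6}" for i in range(60)}
    arms = assign_precincts(mapping, seed=3)
    print(arms["voter0"], arms["voter6"])  # same precinct, same condition
```

Because voters in the same precinct always share a condition, the analysis of such a design usually has to account for the grouping.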

What are the advantages of randomized experimental designs?

Randomized experimental design yields the most accurate analysis of the effect of an intervention (e.g., a voter mobilization phone drive or a visit from a GOTV canvasser) on voter behavior. By randomly assigning subjects to be in the group that receives the treatment or to be in the control group, researchers can measure the effect of the mobilization method regardless of other factors that may make some people or groups more likely to participate in the political process.

To provide a simple example, say we are testing the effectiveness of a voter education program on high school seniors. If we allow students from the class to volunteer to participate in the program, and we then compare the volunteers’ voting behavior against those who did not participate, our results will reflect something other than the effects of the voter education intervention. This is because there are, no doubt, qualities about those volunteers that make them different from students who do not volunteer. And, most important for our work, those differences may very well correlate with propensity to vote. Instead of letting students self-select, or even letting teachers select students (as teachers may have biases in who they choose), we could randomly assign all students in a given class to be in either a treatment or control group. This would ensure that those in the treatment and control groups differ solely due to chance.

The value of randomization may also be seen in the use of walk lists for door-to-door canvassers. If canvassers choose which houses they will go to and which they will skip, they may choose houses that seem more inviting or they may choose houses that are placed closely together rather than those that are more spread out. These differences could conceivably correlate with voter turnout. Or if house numbers are chosen by selecting those on the first half of a ten-page list, they may be clustered in neighborhoods that differ in important ways from neighborhoods in the second half of the list.

Random assignment controls for both known and unknown variables that can creep in with other selection processes to confound analyses. Randomized experimental design is a powerful tool for drawing valid inferences about cause and effect. The use of randomized experimental design should allow a degree of certainty that the research findings cited in studies that employ this methodology reflect the effects of the interventions being measured and not some other underlying variable or variables.

The Definition of Random Assignment According to Psychology

Random assignment refers to the use of chance procedures in psychology experiments to ensure that each participant has the same opportunity to be assigned to any given group in a study. The goal is to eliminate potential bias in the experiment at the outset. Participants are randomly assigned to different groups, such as the treatment group versus the control group. In clinical research, randomized clinical trials are known as the gold standard for meaningful results.

Simple random assignment techniques might involve tactics such as flipping a coin, drawing names out of a hat, rolling dice, or assigning random numbers to a list of participants. It is important to note that random assignment differs from random selection .

While random selection refers to how participants are randomly chosen from a target population as representatives of that population, random assignment refers to how those chosen participants are then assigned to experimental groups.

Random Assignment In Research

To determine if changes in one variable will cause changes in another variable, psychologists must perform an experiment. Random assignment is a critical part of the experimental design that helps ensure the reliability of the study outcomes.

Researchers often begin by forming a testable hypothesis predicting that one variable of interest will have some predictable impact on another variable.

The variable that the experimenters will manipulate in the experiment is known as the independent variable , while the variable that they will then measure for different outcomes is known as the dependent variable. While there are different ways to look at relationships between variables, an experiment is the best way to get a clear idea if there is a cause-and-effect relationship between two or more variables.

Once researchers have formulated a hypothesis, conducted background research, and chosen an experimental design, it is time to find participants for their experiment. How exactly do researchers decide who will be part of an experiment? As mentioned previously, this is often accomplished through something known as random selection.

Random Selection

In order to generalize the results of an experiment to a larger group, it is important to choose a sample that is representative of the qualities found in that population. For example, if the total population is 60% female and 40% male, then the sample should reflect those same percentages.

Choosing a representative sample is often accomplished by randomly picking people from the population to be participants in a study. Random selection means that everyone in the group stands an equal chance of being chosen to minimize any bias. Once a pool of participants has been selected, it is time to assign them to groups.

By randomly assigning the participants into groups, the experimenters can be fairly sure that the groups will have similar characteristics, on average, before the independent variable is applied.

Participants might be randomly assigned to the control group , which does not receive the treatment in question. The control group may receive a placebo or receive the standard treatment. Participants may also be randomly assigned to the experimental group , which receives the treatment of interest. In larger studies, there can be multiple treatment groups for comparison.

There are simple methods of random assignment, like rolling a die. There are also more complex techniques that use random number generators to reduce human error.

There can also be random assignment to groups with pre-established rules or parameters. For example, if you want to have an equal number of men and women in each of your study groups, you might separate your sample into two groups (by sex) before randomly assigning each of those groups into the treatment group and control group.
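The approach just described, splitting the sample by sex first and then randomizing within each subgroup, is often called stratified random assignment. Below is a minimal Python sketch of the idea. The `stratified_assign` function, the participant tuples, and the half-and-half split are assumptions made for illustration.

```python
import random
from collections import defaultdict


def stratified_assign(participants, stratum_of, seed=None):
    """Randomly assign participants to groups separately within each stratum."""
    rng = random.Random(seed)
    strata = defaultdict(list)
    for person in participants:
        strata[stratum_of(person)].append(person)

    assignment = {}
    for members in strata.values():
        rng.shuffle(members)          # chance decides the order within a stratum
        half = len(members) // 2
        for person in members[:half]:
            assignment[person] = "treatment"
        for person in members[half:]:
            assignment[person] = "control"
    return assignment


if __name__ == "__main__":
    # Hypothetical sample: 10 women and 10 men, stored as (id, sex) tuples.
    sample = [(f"P{i:02d}", "F" if i < 10 else "M") for i in range(20)]
    groups = stratified_assign(sample, stratum_of=lambda p: p[1], seed=7)
    for person in sample[:3]:
        print(person, "->", groups[person])
```

Each group ends up with the same number of women and men, while chance still decides which individuals land in which group.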

Random assignment is essential because it increases the likelihood that the groups are the same at the outset. With all characteristics being equal between groups, other than the application of the independent variable, any differences found between group outcomes can be more confidently attributed to the effect of the intervention.

Example of Random Assignment

Imagine that a researcher is interested in learning whether or not drinking caffeinated beverages prior to an exam will improve test performance. After randomly selecting a pool of participants, each person is randomly assigned to either the control group or the experimental group.

The participants in the control group consume a placebo drink prior to the exam that does not contain any caffeine. Those in the experimental group, on the other hand, consume a caffeinated beverage before taking the test.

Participants in both groups then take the test, and the researcher compares the results to determine if the caffeinated beverage had any impact on test performance.
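The article does not say how the researcher compares the results. One option that leans directly on random assignment is a permutation (randomization) test: if the drink made no difference, the group labels are arbitrary, so reshuffling them shows how large a difference chance alone tends to produce. The Python sketch below uses made-up test scores, and the `permutation_p_value` function is hypothetical.

```python
import random
import statistics


def permutation_p_value(treated, control, n_permutations=5000, seed=0):
    """Two-sided permutation test for a difference in group means."""
    rng = random.Random(seed)
    observed = statistics.mean(treated) - statistics.mean(control)
    pooled = list(treated) + list(control)
    n_treated = len(treated)
    extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)  # re-deal the group labels at random
        diff = (statistics.mean(pooled[:n_treated])
                - statistics.mean(pooled[n_treated:]))
        if abs(diff) >= abs(observed):
            extreme += 1
    # Add one to numerator and denominator so the estimate is never zero.
    return (extreme + 1) / (n_permutations + 1)


if __name__ == "__main__":
    # Hypothetical exam scores, invented for this example only.
    caffeine = [78, 85, 90, 72, 88, 81, 79, 93]
    placebo = [74, 80, 69, 77, 83, 70, 75, 72]
    print("p-value:", permutation_p_value(caffeine, placebo))
```

A small p-value would suggest that a difference this large is unlikely to come from the random assignment alone.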

A Word From Verywell

Random assignment plays an important role in the psychology research process. It helps eliminate possible sources of bias, so that differences in outcomes can be attributed to the treatment rather than to pre-existing differences between the groups. Generalizing the results of a tested sample to a larger population depends mainly on how that sample was chosen, which is the job of random selection.

Random assignment helps ensure that the groups in the experiment are comparable at the outset. Through the use of this technique, psychology researchers are able to study complex phenomena and contribute to our understanding of the human mind and behavior.

By Kendra Cherry, MSEd Kendra Cherry, MS, is a psychosocial rehabilitation specialist, psychology educator, and author of the "Everything Psychology Book."

Chapter 6: Data Collection Strategies

6.1.1 Random Assignation

As previously mentioned, one of the characteristics of a true experiment is that researchers use a random process to decide which participants are tested under which conditions. Random assignation is a powerful research technique that addresses the assumption of pre-test equivalence – that the experimental and control group are equal in all respects before the administration of the independent variable (Palys & Atchison, 2014). Random assignation is the primary way that researchers attempt to control extraneous variables across conditions. Random assignation is associated with experimental research methods.

In its strictest sense, random assignment should meet two criteria. One is that each participant has an equal chance of being assigned to each condition (e.g., a 50% chance of being assigned to each of two conditions). The second is that each participant is assigned to a condition independently of other participants. Thus, one way to assign participants to two conditions would be to flip a coin for each one. If the coin lands on the heads side, the participant is assigned to Condition A, and if it lands on the tails side, the participant is assigned to Condition B. For three conditions, one could use a computer to generate a random integer from 1 to 3 for each participant. If the integer is 1, the participant is assigned to Condition A; if it is 2, the participant is assigned to Condition B; and, if it is 3, the participant is assigned to Condition C. In practice, a full sequence of conditions (one for each participant expected to be in the experiment) is usually created ahead of time, and each new participant is assigned to the next condition in the sequence as he or she is tested.

However, one problem with coin flipping and other strict procedures for random assignment is that they are likely to result in unequal sample sizes in the different conditions. Unequal sample sizes are generally not a serious problem, and you should never throw away data you have already collected to achieve equal sample sizes. However, for a fixed number of participants, it is statistically most efficient to divide them into equal-sized groups. It is standard practice, therefore, to use a kind of modified random assignment that keeps the number of participants in each group as similar as possible.

One approach is block randomization. In block randomization, all the conditions occur once in the sequence before any of them is repeated. Then they all occur again before any of them is repeated again. Within each of these “blocks,” the conditions occur in a random order. Again, the sequence of conditions is usually generated before any participants are tested, and each new participant is assigned to the next condition in the sequence. When the procedure is computerized, the computer program often handles the random assignment, which is obviously much easier. You can also find programs online to help you carry out random assignation. For example, the Research Randomizer website will generate block randomization sequences for any number of participants and conditions.

Random assignation is not guaranteed to control all extraneous variables across conditions. It is always possible that, just by chance, the participants in one condition might turn out to be substantially older, less tired, more motivated, or less depressed on average than the participants in another condition. However, there are some reasons that this may not be a major concern. One is that random assignment works better than one might expect, especially for large samples. Another is that the inferential statistics that researchers use to decide whether a difference between groups reflects a difference in the population take the “fallibility” of random assignment into account. Yet another reason is that even if random assignment does result in a confounding variable and therefore produces misleading results, this confound is likely to be detected when the experiment is replicated. The upshot is that random assignment to conditions, although not infallible in terms of controlling extraneous variables, is always considered a strength of a research design.

Note: Do not confuse random assignation with random sampling. Random sampling is a method for selecting a sample from a population; we will talk about this in Chapter 7.

Research Methods for the Social Sciences: An Introduction Copyright © 2020 by Valerie Sheppard is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License, except where otherwise noted.
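The difference between strict random assignment and the block randomization described in this chapter can be sketched in Python as follows. Both function names are hypothetical, and this is not the procedure used by the Research Randomizer website, only an illustration of the idea.

```python
import random


def simple_random_sequence(conditions, n_participants, seed=None):
    """Strict random assignment: an independent draw for each participant."""
    rng = random.Random(seed)
    return [rng.choice(conditions) for _ in range(n_participants)]


def block_randomized_sequence(conditions, n_participants, seed=None):
    """Every condition occurs once, in random order, within each block."""
    rng = random.Random(seed)
    sequence = []
    while len(sequence) < n_participants:
        block = list(conditions)
        rng.shuffle(block)        # random order inside this block
        sequence.extend(block)
    # Trim the final block if n_participants is not a multiple of the block size.
    return sequence[:n_participants]


if __name__ == "__main__":
    conditions = ["A", "B", "C"]
    simple = simple_random_sequence(conditions, 9, seed=1)
    blocked = block_randomized_sequence(conditions, 9, seed=1)
    print("simple :", simple, {c: simple.count(c) for c in conditions})
    print("blocked:", blocked, {c: blocked.count(c) for c in conditions})
```

The simple sequence may give unequal group sizes, while the blocked sequence always gives each condition three participants when nine are tested. Each new participant would then be given the next condition in the pre-generated sequence.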
Random Assignment in Psychology (Definition + 40 Examples)

Have you ever wondered how researchers discover new ways to help people learn, make decisions, or overcome challenges? A hidden hero in this adventure of discovery is a method called random assignment, a cornerstone in psychological research that helps scientists uncover the truths about the human mind and behavior.

Random Assignment is a process used in research where each participant has an equal chance of being placed in any group within the study. This technique is essential in experiments as it helps to eliminate biases, ensuring that the different groups being compared are similar in all important aspects. By doing so, researchers can be confident that any differences observed are likely due to the variable being tested, rather than other factors.

In this article, we’ll explore the intriguing world of random assignment, diving into its history, principles, real-world examples, and the impact it has had on the field of psychology.

History of Random Assignment

Stepping back in time, we delve into the origins of random assignment, which finds its roots in the early 20th century. The pioneering mind behind this innovative technique was Sir Ronald A. Fisher, a British statistician and biologist. Fisher introduced the concept of random assignment in the 1920s, aiming to improve the quality and reliability of experimental research. His contributions laid the groundwork for the method's evolution and its widespread adoption in various fields, particularly in psychology.

Fisher’s groundbreaking work on random assignment was motivated by his desire to control for confounding variables – those pesky factors that could muddy the waters of research findings. By assigning participants to different groups purely by chance, he realized that the influence of these confounding variables could be minimized, paving the way for more accurate and trustworthy results.
Early Studies Utilizing Random Assignment

Following Fisher's initial development, random assignment started to gain traction in the research community. Early studies adopting this methodology focused on a variety of topics, from agriculture (which was Fisher’s primary field of interest) to medicine and psychology. The approach allowed researchers to draw stronger conclusions from their experiments, bolstering the development of new theories and practices.

One notable early study utilizing random assignment was conducted in the field of educational psychology. Researchers were keen to understand the impact of different teaching methods on student outcomes. By randomly assigning students to various instructional approaches, they were able to isolate the effects of the teaching methods, leading to valuable insights and recommendations for educators.

Evolution of the Methodology

As the decades rolled on, random assignment continued to evolve and adapt to the changing landscape of research. Advances in technology introduced new tools and techniques for implementing randomization, such as computerized random number generators, which offered greater precision and ease of use.

The application of random assignment expanded beyond the confines of the laboratory, finding its way into field studies and large-scale surveys. Researchers across diverse disciplines embraced the methodology, recognizing its potential to enhance the validity of their findings and contribute to the advancement of knowledge.

From its humble beginnings in the early 20th century to its widespread use today, random assignment has proven to be a cornerstone of scientific inquiry. Its development and evolution have played a pivotal role in shaping the landscape of psychological research, driving discoveries that have improved lives and deepened our understanding of the human experience.
Principles of Random Assignment

Delving into the heart of random assignment, we uncover the theories and principles that form its foundation. The method is steeped in the basics of probability theory and statistical inference, ensuring that each participant has an equal chance of being placed in any group, thus fostering fair and unbiased results.

Basic Principles of Random Assignment

Understanding the core principles of random assignment is key to grasping its significance in research. There are three principles: equal probability of selection, reduction of bias, and ensuring representativeness.

The first principle, equal probability of selection, ensures that every participant has an identical chance of being assigned to any group in the study. This randomness is crucial as it mitigates the risk of bias and establishes a level playing field.

The second principle focuses on the reduction of bias. Random assignment acts as a safeguard, ensuring that the groups being compared are alike in all essential aspects before the experiment begins. This similarity between groups allows researchers to attribute any differences observed in the outcomes directly to the independent variable being studied.

Lastly, ensuring representativeness is a vital principle. When participants are assigned randomly, the resulting groups are more likely to be representative of the larger population. This characteristic is crucial for the generalizability of the study’s findings, allowing researchers to apply their insights broadly.

Theoretical Foundation

The theoretical foundation of random assignment lies in probability theory and statistical inference. Probability theory deals with the likelihood of different outcomes, providing a mathematical framework for analyzing random phenomena. In the context of random assignment, it helps in ensuring that each participant has an equal chance of being placed in any group.
Statistical inference, on the other hand, allows researchers to draw conclusions about a population based on a sample of data drawn from that population. It is the mechanism through which the results of a study can be generalized to a broader context. Random assignment enhances the reliability of statistical inferences by reducing biases and ensuring that the sample is representative.

Differentiating Random Assignment from Random Selection

It’s essential to distinguish between random assignment and random selection, as the two terms, while related, have distinct meanings in the realm of research. Random assignment refers to how participants are placed into different groups in an experiment, aiming to control for confounding variables and help determine causes.

In contrast, random selection pertains to how individuals are chosen to participate in a study. This method is used to ensure that the sample of participants is representative of the larger population, which is vital for the external validity of the research. While both methods are rooted in randomness and probability, they serve different purposes in the research process.

Understanding the theories, principles, and distinctions of random assignment illuminates its pivotal role in psychological research. This method, anchored in probability theory and statistical inference, serves as a beacon of reliability, guiding researchers in their quest for knowledge and ensuring that their findings stand the test of validity and applicability.

Methodology of Random Assignment

Implementing random assignment in a study is a meticulous process that involves several crucial steps. The initial step is participant selection, where individuals are chosen to partake in the study. This stage is critical to ensure that the pool of participants is diverse and representative of the population the study aims to generalize to.

Once the pool of participants has been established, the actual assignment process begins.
In this step, each participant is allocated randomly to one of the groups in the study. Researchers use various tools, such as random number generators or computerized methods, to ensure that this assignment is genuinely random and free from biases.

Monitoring and adjusting form the final step in the implementation of random assignment. Researchers need to continuously observe the groups to ensure that they remain comparable in all essential aspects throughout the study. If any significant discrepancies arise, adjustments might be necessary to maintain the study’s integrity and validity.

Tools and Techniques Used

The evolution of technology has introduced a variety of tools and techniques to facilitate random assignment. Random number generators, whether manual (such as coin flips or random number tables) or computerized, are commonly used to assign participants to different groups. These generators ensure that each individual has an equal chance of being placed in any group, upholding the principle of equal probability of selection.

In addition to random number generators, researchers often use specialized computer software designed for statistical analysis and experimental design. These programs offer features that allow for precise and efficient random assignment, minimizing the risk of human error and enhancing the study’s reliability.

Ethical Considerations

The implementation of random assignment is not devoid of ethical considerations. Informed consent is a fundamental ethical principle that researchers must uphold. Informed consent means that every participant should be fully informed about the nature of the study, the procedures involved, and any potential risks or benefits, ensuring that they voluntarily agree to participate.

Beyond informed consent, researchers must conduct a thorough risk and benefit analysis. The potential benefits of the study should outweigh any risks or harms to the participants.
Safeguarding the well-being of participants is paramount, and any study employing random assignment must adhere to established ethical guidelines and standards.

Conclusion of Methodology

The methodology of random assignment, while seemingly straightforward, is a multifaceted process that demands precision, fairness, and ethical integrity. From participant selection to assignment and monitoring, each step is crucial to ensure the validity of the study’s findings. The tools and techniques employed, coupled with a steadfast commitment to ethical principles, underscore the significance of random assignment as a cornerstone of robust psychological research.

Benefits of Random Assignment in Psychological Research

The impact and importance of random assignment in psychological research cannot be overstated. It is fundamental for ensuring that a study is accurate, allowing researchers to determine whether their manipulation actually caused the results they saw, and making sure the findings can be applied to the real world.

Facilitating Causal Inferences

When participants are randomly assigned to different groups, researchers can be more confident that the observed effects are due to the independent variable being changed, and not other factors. This ability to determine the cause is called causal inference.

This confidence allows researchers to draw causal relationships, which are foundational for theory development and application in psychology.

Ensuring Internal Validity

One of the foremost impacts of random assignment is its ability to enhance the internal validity of an experiment.

Internal validity refers to the extent to which a researcher can assert that changes in the dependent variable are solely due to manipulations of the independent variable, and not due to confounding variables.
By ensuring that each participant has an equal chance of being in any condition of the experiment, random assignment helps control for participant characteristics that could otherwise confound the results.

Enhancing Generalizability

Beyond internal validity, random assignment also plays a role in enhancing the generalizability of research findings. When the sample itself is representative, random assignment keeps each group representative of the larger population as well, which allows researchers to apply their findings more broadly. This representative nature is essential for the practical application of research, impacting policy, interventions, and psychological therapies.

Limitations of Random Assignment

Potential for Implementation Issues

While the principles of random assignment are robust, the method can face implementation issues.

One of the most common problems is logistical constraints. Some studies, due to their nature or the specific population being studied, find it challenging to implement random assignment effectively. For instance, in educational settings, logistical issues such as class schedules and school policies might prevent the random allocation of students to different teaching methods.

Ethical Dilemmas

Random assignment, while methodologically sound, can also present ethical dilemmas. In some cases, withholding a potentially beneficial treatment from one of the groups of participants can raise serious ethical questions, especially in medical or clinical research where participants' well-being might be directly affected. Researchers must navigate these ethical waters carefully, balancing the pursuit of knowledge with the well-being of participants.

Generalizability Concerns

Even when implemented correctly, random assignment does not always guarantee generalizable results.
The types of people in the participant pool, the specific context of the study, and the nature of the variables being studied can all influence the extent to which the findings can be applied to the broader population. Researchers must be cautious in making broad generalizations from studies, even those employing strict random assignment.

Practical and Real-World Limitations

In the real world, many variables cannot be manipulated for ethical or practical reasons, limiting the applicability of random assignment. For instance, researchers cannot randomly assign individuals to different levels of intelligence, socioeconomic status, or cultural backgrounds. This limitation necessitates the use of other research designs, such as correlational or observational studies, when exploring relationships involving such variables.

Response to Critiques

In response to these critiques, proponents of random assignment argue that the method, despite its limitations, remains one of the most reliable ways to establish cause and effect in experimental research. They acknowledge the challenges and ethical considerations but emphasize the rigorous frameworks in place to address them. The ongoing discussion around the limitations and critiques of random assignment contributes to the evolution of the method, keeping it relevant and applicable in psychological research.

While random assignment is a powerful tool in experimental research, it is not without its critiques and limitations. Implementation issues, ethical dilemmas, generalizability concerns, and real-world limitations can pose significant challenges. However, the continued discourse and refinement around these issues underline the method's enduring significance in the pursuit of knowledge in psychology. When it is implemented carefully and ethically, random assignment remains an important part of studying how people act and think.
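The assignment step described in the methodology section can be made concrete with a short sketch. The Python below is one possible approach, not a prescribed procedure: it assumes a hypothetical list of participant IDs, shuffles it with a seeded random number generator, and deals participants into groups in turn so that group sizes differ by at most one. The function name `randomly_assign`, the group labels, and the seed value are illustrative assumptions.

```python
import random


def randomly_assign(participants, group_names, seed=None):
    """Shuffle participants, then deal them into groups in turn.

    Every participant has the same chance of landing in any group,
    and group sizes differ by at most one.
    """
    rng = random.Random(seed)      # a seeded generator makes the allocation reproducible
    shuffled = list(participants)  # copy so the caller's list is left untouched
    rng.shuffle(shuffled)

    groups = {name: [] for name in group_names}
    for position, person in enumerate(shuffled):
        # Position in the shuffled order decides the group.
        target = group_names[position % len(group_names)]
        groups[target].append(person)
    return groups


# Hypothetical sample of 30 participant IDs (illustrative only).
sample_ids = list(range(1, 31))
allocation = randomly_assign(sample_ids, ["control", "treatment"], seed=2024)

for name, members in allocation.items():
    print(name, len(members), sorted(members))
```

Recording the seed lets someone else reproduce and audit the allocation later. Note that this sketch covers only assignment; how the 30 participants were recruited in the first place is a separate random selection question.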
Real-World Applications and Examples

Random assignment has been employed in many studies across various fields of psychology, leading to significant discoveries and advancements. Here are some real-world applications and examples illustrating the diversity and impact of this method:

Let's get into some specific examples. You'll need to know one term though, and that is "control group." A control group is a set of participants in a study who do not receive the treatment or intervention being tested, serving as a baseline to compare with the group that does, in order to assess the effectiveness of the treatment.

Each of these studies exemplifies how random assignment is utilized in various fields and settings, shedding light on the multitude of ways it can be applied to glean valuable insights and knowledge.

Real-world Impact of Random Assignment

Random assignment is like a key tool in the world of learning about people's minds and behaviors. It’s super important and helps in many different areas of our everyday lives. It helps make better rules, creates new ways to help people, and is used in lots of different fields.

Health and Medicine

In health and medicine, random assignment has helped doctors and scientists make lots of discoveries. It’s a big part of tests that help create new medicines and treatments.

By putting people into different groups by chance, scientists can really see if a medicine works. This has led to new ways to help people with all sorts of health problems, like diabetes, heart disease, and mental health issues like depression and anxiety.

Schools and education have also learned a lot from random assignment. Researchers have used it to look at different ways of teaching, what kind of classrooms are best, and how technology can help learning. This knowledge has helped make better school rules, develop what we learn in school, and find the best ways to teach students of all ages and backgrounds.

Workplace and Organizational Behavior

Random assignment helps us understand how people act at work and what makes a workplace good or bad. Studies have looked at different kinds of workplaces, how bosses should act, and how teams should be put together. This has helped companies make better rules and create places to work that are helpful and make people happy.

Environmental and Social Changes

Random assignment is also used to see how changes in the community and environment affect people. Studies have looked at community projects, changes to the environment, and social programs to see how they help or hurt people’s well-being.
This has led to better community projects, efforts to protect the environment, and programs to help people in society.

Technology and Human Interaction

In our world where technology is always changing, studies with random assignment help us see how tech like social media, virtual reality, and other online tools affect how we act and feel. This has helped make better and safer technology and rules about using it so that everyone can benefit.

The effects of random assignment go far and wide, way beyond just a science lab. It helps us understand lots of different things, leads to new and improved ways to do things, and really makes a difference in the world around us. From making healthcare and schools better to creating positive changes in communities and the environment, the real-world impact of random assignment shows just how important it is in helping us learn and make the world a better place.

So, what have we learned? Random assignment is like a super tool in learning about how people think and act. It's like a detective helping us find clues and solve mysteries in many parts of our lives. From creating new medicines to helping kids learn better in school, and from making workplaces happier to protecting the environment, it’s got a big job!

This method isn’t just something scientists use in labs; it reaches out and touches our everyday lives. It helps make positive changes and teaches us valuable lessons. Whether we are talking about technology, health, education, or the environment, random assignment is there, working behind the scenes, making things better and safer for all of us.

In the end, the simple act of putting people into groups by chance helps us make big discoveries and improvements. It’s like throwing a small stone into a pond and watching the ripples spread out far and wide. Thanks to random assignment, we are always learning, growing, and finding new ways to make our world a happier and healthier place for everyone!

Random Assignment
