Which descriptive statistics tool should you choose?

This article will help you choose the right descriptive statistics tool for your data. Each tool is available in Excel using the XLSTAT software.

The purpose of descriptive statistics

Describing data is an essential part of statistical analysis: it aims to provide a complete picture of the data before moving on to exploratory analysis or predictive modeling. The statistical methods used for this purpose are called descriptive statistics. They include both numerical tools (e.g. measures of central tendency such as the mean, mode and median, or measures of variability) and graphical tools (e.g. histograms, box plots, scatter plots), which summarize the dataset and extract important information such as central tendencies and variability. Moreover, we can use descriptive statistics to explore the association between two or several variables (bivariate or multivariate analysis).

For example, let’s say we have a data table which represents the results of a survey on the average amount of money people spend on online shopping per month. Rows correspond to respondents and columns to the amount of money spent as well as the age group they belong to. Our goal is to extract important information from the survey and detect potential differences between the age groups. For this, we can simply summarize the results per group using common descriptive statistics, such as:

- The mean and the median, which reflect the central tendency.

- The standard deviation, the variance, and the coefficient of variation, which reflect the dispersion.
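As a rough illustration of this per-group summary outside of Excel, here is a minimal Python sketch. The age groups, the amounts and the helper name `summarize_by_group` are hypothetical and only serve the example; the standard deviation and variance use the sample (n – 1) convention.

```python
import statistics
from collections import defaultdict

# Hypothetical survey rows: (age group, average monthly online spending)
responses = [
    ("18-29", 120.0), ("18-29", 80.0), ("18-29", 100.0),
    ("30-49", 200.0), ("30-49", 150.0), ("30-49", 250.0),
]

def summarize_by_group(rows):
    """Compute central tendency and dispersion statistics for each group."""
    groups = defaultdict(list)
    for group, amount in rows:
        groups[group].append(amount)

    summary = {}
    for group, values in groups.items():
        mean = statistics.mean(values)
        std_dev = statistics.stdev(values)  # sample standard deviation (n - 1)
        summary[group] = {
            "mean": mean,
            "median": statistics.median(values),
            "variance": statistics.variance(values),  # sample variance (n - 1)
            "std_dev": std_dev,
            "cv": std_dev / mean,  # coefficient of variation
        }
    return summary

summary = summarize_by_group(responses)
for group, stats in summary.items():
    print(group, {name: round(value, 2) for name, value in stats.items()})
```

Comparing the rows of the printed summary is what lets us spot differences between age groups, both in typical spending and in how much spending varies within each group.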

In another example, using qualitative data, we consider a survey on commuting. Rows correspond to respondents and columns to the mode of transportation as well as to the city they live in. Our goal is to describe transportation preferences when commuting to work per city using:

- The mode, reflecting the most frequent mode of commuting (the most frequent category).

- The frequencies, reflecting how many times each mode of commuting appears as an answer.

- The relative frequencies (percentages), which are the frequencies divided by the total number of answers.

- Bar charts and stacked bars, which graphically illustrate the relative frequencies by category.
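The numerical part of this summary can be sketched in a few lines of Python. The answers below are made up and represent a single city; `frequency_table` is a hypothetical helper name, not an XLSTAT function.

```python
from collections import Counter

# Hypothetical answers from respondents living in one city
answers = ["car", "bus", "car", "bike", "car", "bus", "train", "car"]

def frequency_table(values):
    """Map each category to (frequency, relative frequency)."""
    counts = Counter(values)
    total = len(values)
    return {category: (n, n / total) for category, n in counts.most_common()}

table = frequency_table(answers)
# The mode is the category with the highest frequency
mode_category = max(table, key=lambda category: table[category][0])

for category, (count, share) in table.items():
    print(f"{category:<6} {count:>2} {share:6.1%}")
print("mode:", mode_category)
```

Running the same function once per city would give the per-city tables that a stacked bar chart displays.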

A guide to choosing a descriptive statistics tool according to the situation

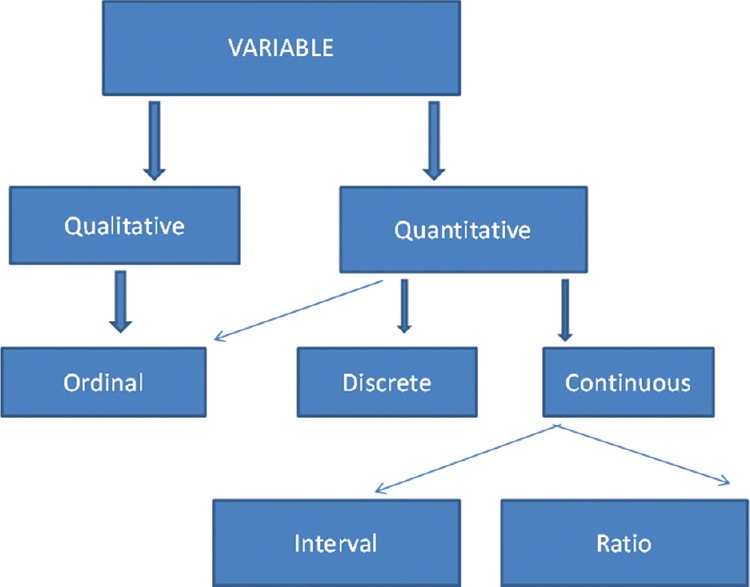

In order to choose the right descriptive statistics tool, we need to consider the types and the number of variables we have as well as the objective of the study. Based on these three criteria we have generated a grid that will help you decide which tool to use according to your situation. The first column of the grid refers to data types:

Quantitative dataset: containing variables that describe quantities of the objects of interest. The values are numbers. The weight of an infant is an example of a quantitative variable.

Qualitative dataset: containing variables that describe qualities of the objects of interest (categorical or nominal data). The values are called categories, also referred to as levels or modalities. The gender of an infant is an example of a qualitative variable; its possible values are the categories male and female.

Mixed dataset: containing both types of variables.

The second column indicates the number of variables. The proposed tools can handle either the description of one variable (univariate analysis) or the description of the relationships between two (bivariate analysis) or several variables. The grid provides an intuitive example for each situation as well as a link to a tutorial explaining how to apply each XLSTAT tool using a demo file.

Descriptive Statistics grid

Please note that the list below is not exhaustive. However, it contains the most commonly used descriptive statistics, all available in Excel using the XLSTAT add-on.

| Data type | Number of variables | Objective | Example question | Tools |
|---|---|---|---|---|
| Quantitative | One variable (univariate analysis) | Estimate a frequency distribution | How many people per age class attended this event? (Here the investigated variable is age in a quantitative form.) | |
| | | Measure the central tendency of one sample | What is the average grade in a classroom? | Scattergram, strip plot |
| | | Measure the dispersion of one sample | How widely or narrowly are the grade scores dispersed around the mean score in a classroom? | Quartiles; scattergram, strip plot |
| | | Characterize the shape of a distribution | Is the employee wage distribution in a company symmetric? | |
| | | Visually check whether a sample follows a given distribution | What is the theoretical percentage of students who obtained a better grade than a given threshold? | |
| | | Measure the position of a value within a sample | What data point can be used to split the sample into 95% of low values and 5% of high values? | |
| | | Detect extreme values | Is the height of 184 cm an extreme value in this group of students? | |
| | Two variables (bivariate analysis) | Describe the association between two variables | Does plant biomass increase or decrease with soil Pb content? | |
| | Several variables | Describe the association between multiple variables | What is the evolution of the life expectancy, the fertility rate and the size of population over the last 10 years in this country? | (up to 3 variables to describe over time) or (up to 3 variables to describe) |
| | | Describe the association between three variables under specific conditions | How to visualize the proportions of three ice cream ingredients in several ice cream samples? | |
| | Two matrices of several variables | Describe the association between two matrices | Does the evaluation of a series of products differ from one panel to another? | |
| Qualitative | One variable (univariate analysis) | Compute the frequencies of different categories | How many clients said they were satisfied with the service and how many said they were not? | |
| | | Detect the most frequent category | Which is the most frequent hair color in this country? | |
| | Two variables (bivariate analysis) | Measure the association between two variables | Does the presence of a trace element change according to the presence of another trace element? | Stacked or clustered bars |
| Mixed (quantitative & qualitative) | Two variables (bivariate analysis) | Describe the relationship between a binary and a continuous variable | Is the concentration of a molecule in rats linked to the rats' sex (M/F)? | |
| | | Describe the relationship between a categorical and a continuous variable | Does sepal length differ between three flower species? | |
| | Several variables (multivariate analysis) | Describe the relationship between one categorical and two quantitative variables | Does the amount of money spent on this commercial website change according to the age class and the salary of the customers? | (with groups) |

How to run descriptive statistics in XLSTAT?

In XLSTAT, you will find a large variety of descriptive statistics tools in the Describing data menu. The most popular feature is Descriptive Statistics. All you have to do is select your data on the Excel sheet, then set up the dialog box and click OK. It's simple and quick. If you do not have XLSTAT, you can download our free 14-day version.

Outputs for quantitative data

Statistics : Min./max. value, 1st quartile, median, 3rd quartile, range, sum, mean, geometric mean, harmonic mean, kurtosis (Pearson), skewness (Pearson), kurtosis, skewness, CV (standard deviation/mean), sample variance, estimated variance, standard deviation of a sample, estimated standard deviation, mean absolute deviation, standard deviation of the mean.

Graphs : box plots, scattergrams, strip plots, Q-Q plots, P-P plots, stem and leaf plots. It is possible to group together the various box plots, scattergrams and strip plots on the same chart, sort them by mean and color them by group to compare them.

Outputs for qualitative data

Statistics : No. of categories, mode, mode frequency, mode weight, % mode, relative frequency of the mode, frequency, weight of the category, percentage of the category, relative frequency of the category

Graphs : Bar charts, pie charts, double pie charts, doughnuts, stacked bars, multiple bars

XLSTAT has developed a series of statistics tutorials that will provide you with a theoretical background on inferential statistics, data modeling, clustering, multivariate data analysis and more. These guides will also help you choose an appropriate statistical method to investigate the question you are asking.

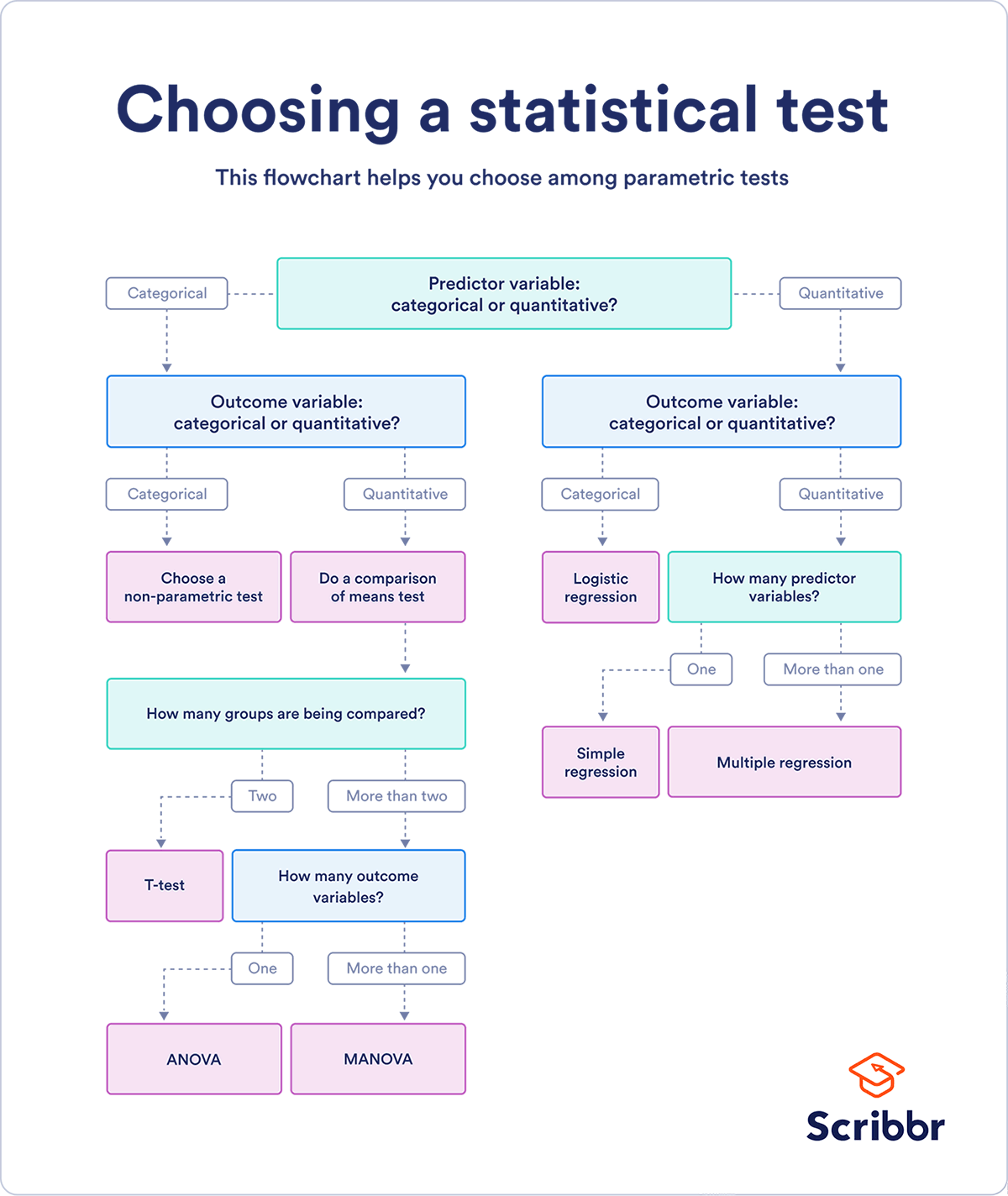

Which statistical test to use?

Which statistical model should you use?

Which multivariate data analysis method to choose?

Which clustering method should you choose?

Choosing an appropriate time series analysis method

Comparison of supervised machine learning algorithms

Source: Introductory Statistics: Exploring the World Through Data, Robert Gould and Colleen Ryan


Quant Analysis 101: Descriptive Statistics

Everything You Need To Get Started (With Examples)

By: Derek Jansen (MBA) | Reviewers: Kerryn Warren (PhD) | October 2023

If you’re new to quantitative data analysis, one of the first terms you’re likely to hear being thrown around is descriptive statistics. In this post, we’ll unpack the basics of descriptive statistics, using straightforward language and loads of examples. So grab a cup of coffee and let’s crunch some numbers!

Overview: Descriptive Statistics

- What are descriptive statistics?

- Descriptive vs inferential statistics

- Why the descriptives matter

- The “Big 7” descriptive statistics

- Key takeaways

At the simplest level, descriptive statistics summarise and describe relatively basic but essential features of a quantitative dataset – for example, a set of survey responses. They provide a snapshot of the characteristics of your dataset and allow you to better understand, roughly, how the data are “shaped” (more on this later). For example, a descriptive statistic could include the proportion of males and females within a sample or the percentages of different age groups within a population.

Another common descriptive statistic is the humble average (which in statistics-talk is called the mean). For example, if you undertook a survey and asked people to rate their satisfaction with a particular product on a scale of 1 to 10, you could then calculate the average rating. This is a very basic statistic, but as you can see, it gives you some idea of how the data are shaped.

What about inferential statistics?

Now, you may have also heard the term inferential statistics being thrown around, and you’re probably wondering how that’s different from descriptive statistics. Simply put, descriptive statistics describe and summarise the sample itself, while inferential statistics use the data from a sample to make inferences or predictions about a population.

Put another way, descriptive statistics help you understand your dataset, while inferential statistics help you make broader statements about the population, based on what you observe within the sample. If you’re keen to learn more, we cover inferential stats in another post.

Why do descriptive statistics matter?

While descriptive statistics are relatively simple from a mathematical perspective, they play a very important role in any research project. All too often, students skim over the descriptives and run ahead to the seemingly more exciting inferential statistics, but this can be a costly mistake.

The reason for this is that descriptive statistics help you, as the researcher, comprehend the key characteristics of your sample without getting lost in vast amounts of raw data. In doing so, they provide a foundation for your quantitative analysis. Additionally, they enable you to quickly identify potential issues within your dataset – for example, suspicious outliers, missing responses and so on. Just as importantly, descriptive statistics inform the decision-making process when it comes to choosing which inferential statistics you’ll run, as each inferential test has specific requirements regarding the shape of the data.

Long story short, it’s essential that you take the time to dig into your descriptive statistics before looking at more “advanced” inferentials. It’s also worth noting that, depending on your research aims and questions, descriptive stats may be all that you need in any case. So, don’t discount the descriptives!

The “Big 7” descriptive statistics

With the what and why out of the way, let’s take a look at the most common descriptive statistics. Beyond the counts, proportions and percentages we mentioned earlier, we have what we call the “Big 7” descriptives. These can be divided into two categories – measures of central tendency and measures of dispersion.

Measures of central tendency

True to the name, measures of central tendency describe the centre or “middle section” of a dataset. In other words, they provide some indication of what a “typical” data point looks like within a given dataset. The three most common measures are:

The mean, which is the mathematical average of a set of numbers – in other words, the sum of all numbers divided by the count of all numbers.

The median, which is the middlemost number in a set of numbers, when those numbers are ordered from lowest to highest.

The mode, which is the most frequently occurring number in a set of numbers (in any order). Naturally, a dataset can have one mode, no mode (no number occurs more than once) or multiple modes.
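If you’d like to see the three measures side by side, including the one-mode, no-mode and multiple-mode cases, here’s a small Python sketch using the standard `statistics` module. The function name `central_tendency` and the three tiny datasets are made up for illustration, and treating “every value occurs once” as “no mode” is our own choice that follows the definition above.

```python
import statistics

def central_tendency(values):
    """Return the mean, median and list of modes for a list of numbers."""
    modes = statistics.multimode(values)  # all values tied for most frequent
    if len(set(values)) == len(values):
        # Every value occurs exactly once, so we report "no mode".
        modes = []
    return {
        "mean": statistics.mean(values),
        "median": statistics.median(values),
        "modes": modes,
    }

one_mode = central_tendency([2, 3, 3, 5, 7])
no_mode = central_tendency([1, 2, 3, 4])
two_modes = central_tendency([1, 1, 2, 2, 3])

for label, result in (("one mode", one_mode), ("no mode", no_mode), ("two modes", two_modes)):
    print(label, result)
```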

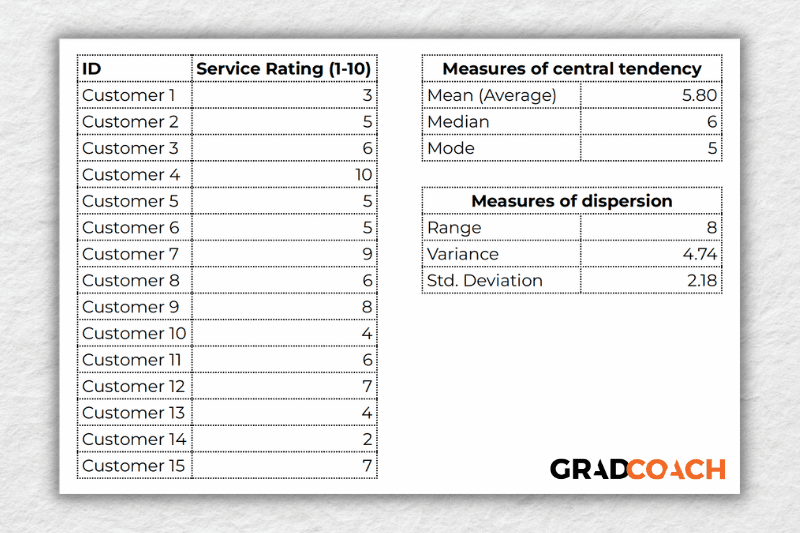

To make this a little more tangible, let’s look at a sample dataset, along with the corresponding mean, median and mode. This dataset reflects the service ratings (on a scale of 1 – 10) from 15 customers.

As you can see, the mean of 5.8 is the average rating across all 15 customers. Meanwhile, 6 is the median. In other words, if you were to list all the responses in order from low to high, Customer 8 would be in the middle (with their service rating being 6). Lastly, the number 5 is the most frequent rating (appearing 3 times), making it the mode.

Together, these three descriptive statistics give us a quick overview of how these customers feel about the service levels at this business. In other words, most customers feel rather lukewarm and there’s certainly room for improvement. From a more statistical perspective, this also means that the data tend to cluster around the 5-6 mark, since the mean and the median are fairly close to each other.

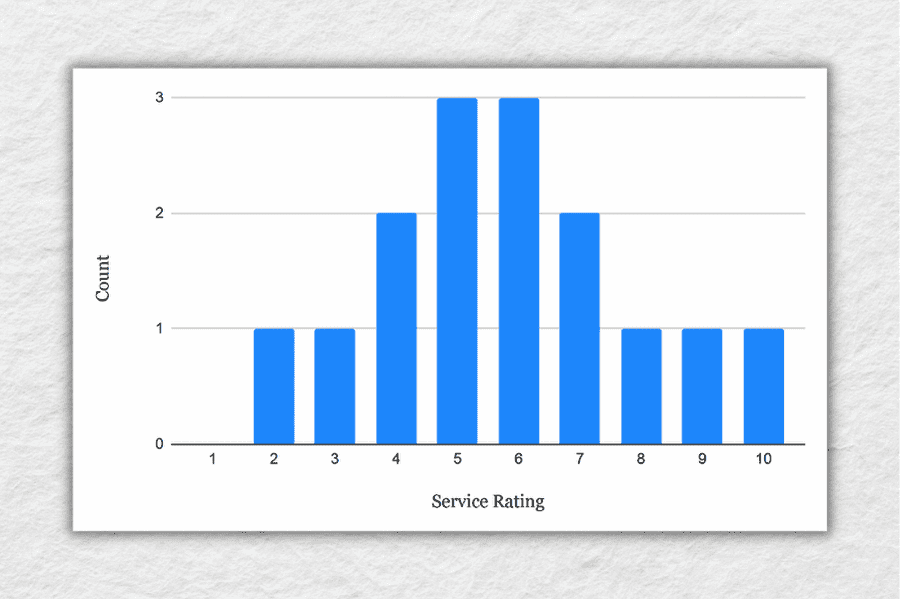

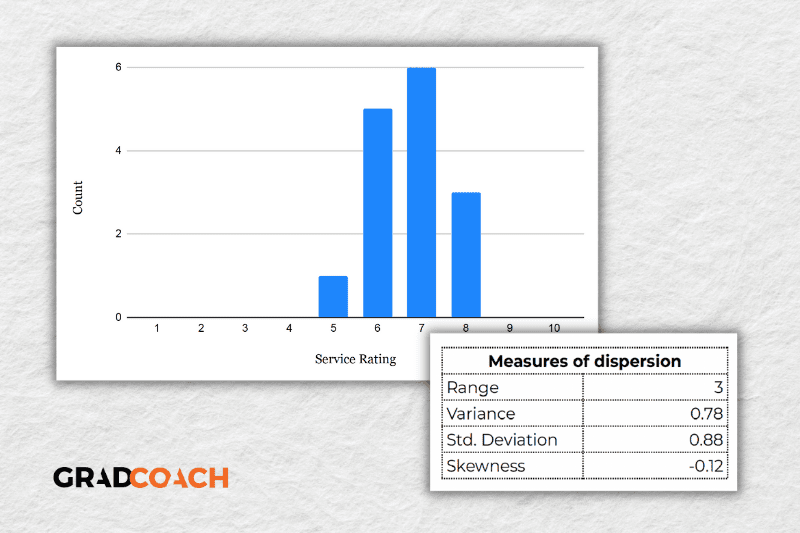

To take this a step further, let’s look at the frequency distribution of the responses. In other words, let’s count how many times each rating was received, and then plot these counts onto a bar chart.

As you can see, the responses tend to cluster toward the centre of the chart, creating something of a bell-shaped curve. In statistical terms, a distribution with this shape is called a normal distribution.

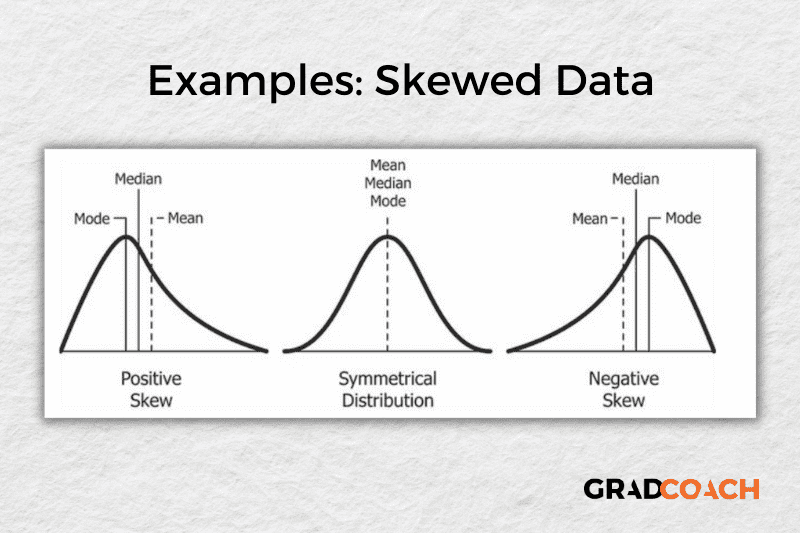

As you delve into quantitative data analysis, you’ll find that normal distributions are very common, but they’re certainly not the only type of distribution. In some cases, the data can lean toward the left or the right of the chart (i.e., toward the low end or high end). This lean is reflected by a measure called skewness, and it’s important to pay attention to this when you’re analysing your data, as this will have an impact on what types of inferential statistics you can use on your dataset.
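To make skewness a little less abstract, here’s a minimal Python sketch of one common way to calculate it: the third central moment divided by the standard deviation cubed (using the population formulas). Keep in mind that statistics packages often apply a sample-size correction, so their figures may differ slightly from this one. The three small datasets are made up.

```python
def skewness(values):
    """Moment-based skewness: third central moment / (second central moment ** 1.5).

    Assumes the values are not all identical (otherwise the spread is zero).
    """
    n = len(values)
    mean = sum(values) / n
    m2 = sum((x - mean) ** 2 for x in values) / n  # population variance
    m3 = sum((x - mean) ** 3 for x in values) / n  # third central moment
    return m3 / m2 ** 1.5

symmetric = [1, 2, 3, 4, 5]          # no lean
long_high_tail = [1, 1, 2, 2, 3, 10]  # bulk at the low end, tail toward high values
long_low_tail = [1, 8, 9, 9, 10, 10]  # bulk at the high end, tail toward low values

for label, data in (("symmetric", symmetric),
                    ("long high tail", long_high_tail),
                    ("long low tail", long_low_tail)):
    print(label, round(skewness(data), 2))
```

A result near zero suggests a roughly symmetric distribution, a positive result means the tail stretches toward the high values, and a negative result means the tail stretches toward the low values.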

Measures of dispersion

While the measures of central tendency provide insight into how “centred” the dataset is, it’s also important to understand how dispersed that dataset is. In other words, you need to know to what extent the data cluster toward the centre – specifically, the mean. In some cases, the majority of the data points will sit very close to the centre, while in other cases, they’ll be scattered all over the place. Enter the measures of dispersion, of which there are three:

Range, which measures the difference between the largest and smallest number in the dataset. In other words, it indicates how spread out the dataset really is.

Variance, which measures how much each number in a dataset varies from the mean (average). More technically, it calculates the average of the squared differences between each number and the mean. A higher variance indicates that the data points are more spread out, while a lower variance suggests that the data points are closer to the mean.

Standard deviation, which is the square root of the variance. It serves the same purposes as the variance, but is a bit easier to interpret as it presents a figure that is in the same unit as the original data. You’ll typically present this statistic alongside the means when describing the data in your research.

Again, let’s look at our sample dataset to make this all a little more tangible.

As you can see, the range of 8 reflects the difference between the highest rating (10) and the lowest rating (2). The standard deviation of 2.18 tells us that results within the dataset typically sit about 2.18 points away from the mean (of 5.8), reflecting a relatively dispersed set of data.

For the sake of comparison, let’s look at another much more tightly grouped (less dispersed) dataset.

As you can see, all the ratings lie between 5 and 8 in this dataset, resulting in a much smaller range, variance and standard deviation. You might also notice that the data are clustered toward the right side of the graph – in other words, the data are skewed. If we calculate the skewness for this dataset, we get a result of -0.12. The negative sign confirms this right lean: the bulk of the ratings sit at the high end, with a thinner tail stretching toward the low end.

In summary, range, variance and standard deviation all provide an indication of how dispersed the data are. These measures are important because they help you interpret the measures of central tendency within context. In other words, if your measures of dispersion are all fairly high numbers, you need to interpret your measures of central tendency with some caution, as the results are not particularly centred. Conversely, if the data are all tightly grouped around the mean (i.e., low dispersion), the mean becomes a much more “meaningful” statistic.

Key Takeaways

We’ve covered quite a bit of ground in this post. Here are the key takeaways:

- Descriptive statistics, although relatively simple, are a critically important part of any quantitative data analysis.

- Measures of central tendency include the mean (average), median and mode.

- Skewness indicates whether a dataset leans to one side or another

- Measures of dispersion include the range, variance and standard deviation


This post is an extract from our bestselling short course, Methodology Bootcamp.


Descriptive Statistics – Types, Methods and Examples


Descriptive Statistics

Descriptive statistics is a branch of statistics that deals with the summarization and description of collected data. This type of statistics is used to simplify and present data in a manner that is easy to understand, often through visual or numerical methods. Descriptive statistics is primarily concerned with measures of central tendency, variability, and distribution, as well as graphical representations of data.

Here are the main components of descriptive statistics:

- Measures of Central Tendency : These provide a summary statistic that represents the center point or typical value of a dataset. The most common measures of central tendency are the mean (average), median (middle value), and mode (most frequent value).

- Measures of Dispersion or Variability : These provide a summary statistic that represents the spread of values in a dataset. Common measures of dispersion include the range (difference between the highest and lowest values), variance (average of the squared differences from the mean), standard deviation (square root of the variance), and interquartile range (difference between the upper and lower quartiles).

- Measures of Position : These are used to understand the distribution of values within a dataset. They include percentiles and quartiles.

- Graphical Representations : Data can be visually represented using various methods like bar graphs, histograms, pie charts, box plots, and scatter plots. These visuals provide a clear, intuitive way to understand the data.

- Measures of Association : These measures provide insight into the relationships between variables in the dataset, such as correlation and covariance.

Descriptive Statistics Types

Descriptive statistics can be classified into two types:

Measures of Central Tendency

These measures help describe the center point or average of a data set. There are three main types:

- Mean : The average value of the dataset, obtained by adding all the data points and dividing by the number of data points.

- Median : The middle value of the dataset, obtained by ordering all data points and picking out the one in the middle (or the average of the two middle numbers if the dataset has an even number of observations).

- Mode : The most frequently occurring value in the dataset.

Measures of Variability (or Dispersion)

These measures describe the spread or variability of the data points in the dataset. There are four main types:

- Range : The difference between the largest and smallest values in the dataset.

- Variance : The average of the squared differences from the mean.

- Standard Deviation : The square root of the variance, giving a measure of dispersion that is in the same units as the original dataset.

- Interquartile Range (IQR) : The range between the first quartile (25th percentile) and the third quartile (75th percentile), which provides a measure of variability that is resistant to outliers.

Descriptive Statistics Formulas

Here are some of the most commonly used formulas in descriptive statistics:

Mean (μ or x̄) :

The average of all the numbers in the dataset. It is computed by summing all the observations and dividing by the number of observations.

Formula : μ = Σx/N (population mean) or x̄ = Σx/n (sample mean), where Σx is the sum of all observations, N is the size of the population, and n is the size of the sample

Median :

The middle value in the dataset when the observations are arranged in ascending or descending order. If there is an even number of observations, the median is the average of the two middle numbers.

Mode :

The most frequently occurring number in the dataset. There’s no formula for this as it’s determined by observation.

Range :

The difference between the highest (max) and lowest (min) values in the dataset.

Formula : Range = max – min

Variance (σ² or s²) :

The average of the squared differences from the mean. Variance is a measure of how spread out the numbers in the dataset are.

Population Variance formula : σ² = Σ(x – μ)² / N

Sample Variance formula : s² = Σ(x – x̄)² / (n – 1)

(where x is each individual observation, μ is the population mean, x̄ is the sample mean, N is the size of the population, and n is the size of the sample)

Standard Deviation (σ or s) :

The square root of the variance. It measures the amount of variability or dispersion for a set of data.

Population Standard Deviation formula : σ = √σ²

Sample Standard Deviation formula : s = √s²

Interquartile Range (IQR) :

The range between the first quartile (Q1, 25th percentile) and the third quartile (Q3, 75th percentile). It measures statistical dispersion, or how far apart the data points are.

Formula : IQR = Q3 – Q1
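The dispersion formulas above can be translated almost line by line into Python. The following is a minimal sketch on a made-up dataset; the function names are illustrative. Note that quartiles can be computed under several conventions, and this sketch relies on the default ("exclusive") method of `statistics.quantiles`, so other software may report a slightly different IQR.

```python
import math
import statistics

def population_variance(xs):
    """sigma^2 = sum((x - mu)^2) / N"""
    mu = sum(xs) / len(xs)
    return sum((x - mu) ** 2 for x in xs) / len(xs)

def sample_variance(xs):
    """s^2 = sum((x - x_bar)^2) / (n - 1)"""
    x_bar = sum(xs) / len(xs)
    return sum((x - x_bar) ** 2 for x in xs) / (len(xs) - 1)

def interquartile_range(xs):
    """IQR = Q3 - Q1, using the default quartile method of statistics.quantiles."""
    q1, _median, q3 = statistics.quantiles(xs, n=4)
    return q3 - q1

data = [2, 4, 4, 4, 5, 5, 7, 9]  # hypothetical observations

print("range:", max(data) - min(data))
print("population variance:", population_variance(data))
print("population std dev:", math.sqrt(population_variance(data)))
print("sample variance:", round(sample_variance(data), 4))
print("sample std dev:", round(math.sqrt(sample_variance(data)), 4))
print("IQR:", interquartile_range(data))
```

The sample variance is always a little larger than the population variance computed on the same numbers, because it divides by n – 1 rather than N.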

Descriptive Statistics Methods

Here are some of the key methods used in descriptive statistics:

Tabulation

This method involves arranging data into a table format, making it easier to understand and interpret. Tables often show the frequency distribution of variables.

Graphical Representation

This method involves presenting data visually to help reveal patterns, trends, outliers, or relationships between variables. There are many types of graphs used, such as bar graphs, histograms, pie charts, line graphs, box plots, and scatter plots.

Calculation of Central Tendency Measures

This involves determining the mean, median, and mode of a dataset. These measures indicate where the center of the dataset lies.

Calculation of Dispersion Measures

This involves calculating the range, variance, standard deviation, and interquartile range. These measures indicate how spread out the data is.

Calculation of Position Measures

This involves determining percentiles and quartiles, which tell us about the position of particular data points within the overall data distribution.

Calculation of Association Measures

This involves calculating statistics like correlation and covariance to understand relationships between variables.
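As an illustration, the sample covariance and the Pearson correlation coefficient can be computed directly from their definitions. This is a minimal sketch with made-up data (hours studied and test scores); the function names are illustrative.

```python
import math

def sample_covariance(xs, ys):
    """Average product of deviations from the means, divided by n - 1."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    return sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / (n - 1)

def pearson_correlation(xs, ys):
    """Covariance rescaled by both standard deviations; lies between -1 and 1."""
    std_x = math.sqrt(sample_covariance(xs, xs))  # covariance of x with itself is its variance
    std_y = math.sqrt(sample_covariance(ys, ys))
    return sample_covariance(xs, ys) / (std_x * std_y)

hours = [1, 2, 3, 4, 5]          # hypothetical hours studied
scores = [52, 55, 61, 64, 68]    # hypothetical test scores

print("covariance:", sample_covariance(hours, scores))
print("correlation:", round(pearson_correlation(hours, scores), 3))
```

Covariance depends on the units of both variables, whereas correlation is unitless, which is why correlation is usually the easier of the two to interpret.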

Summary Statistics

Often, a collection of several descriptive statistics is presented together in what’s known as a “summary statistics” table. This provides a comprehensive snapshot of the data at a glance.

Descriptive Statistics Examples

Here are two examples of descriptive statistics in use:

Example 1: Student Grades

Let’s say a teacher has the following set of grades for 7 students: 85, 90, 88, 92, 78, 88, and 94. The teacher could use descriptive statistics to summarize this data:

- Mean (average) : (85 + 90 + 88 + 92 + 78 + 88 + 94)/7 = 615/7 ≈ 87.86

- Median (middle value) : First, rearrange the grades in ascending order (78, 85, 88, 88, 90, 92, 94). The median grade is 88.

- Mode (most frequent value) : The grade 88 appears twice, more frequently than any other grade, so it’s the mode.

- Range (difference between highest and lowest) : 94 (highest) – 78 (lowest) = 16

- Variance and Standard Deviation : These would be calculated using the appropriate formulas, providing a measure of the dispersion of the grades.
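For readers who want to check these figures, the whole example can be reproduced with Python's standard `statistics` module. This sketch treats the seven grades as a sample, so the variance and standard deviation use the n – 1 formulas; treating them as a full population would give slightly smaller values.

```python
import statistics

grades = [85, 90, 88, 92, 78, 88, 94]

mean = statistics.mean(grades)          # 615 / 7
median = statistics.median(grades)      # middle value of the sorted grades
mode = statistics.mode(grades)          # most frequent grade
grade_range = max(grades) - min(grades)
variance = statistics.variance(grades)  # sample variance (n - 1)
std_dev = statistics.stdev(grades)      # sample standard deviation

print(f"mean={mean:.2f} median={median} mode={mode} range={grade_range}")
print(f"variance={variance:.2f} std_dev={std_dev:.2f}")
```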

Example 2: Survey Data

A researcher conducts a survey on the number of hours of TV watched per day by people in a particular city. They collect data from 1,000 respondents and can use descriptive statistics to summarize this data:

- Mean : Calculate the average hours of TV watched by adding all the responses and dividing by the total number of respondents.

- Median : Sort the data and find the middle value.

- Mode : Identify the most frequently reported number of hours watched.

- Histogram : Create a histogram to visually display the frequency of responses. This could show, for example, that the majority of people watch 2-3 hours of TV per day.

- Standard Deviation : Calculate this to find out how much variation there is from the average.
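The histogram step amounts to grouping the responses into bins and counting how many fall into each one. Here is a minimal sketch with a handful of made-up responses instead of 1,000; the one-hour bin width and the function name `bin_counts` are assumptions for illustration.

```python
from collections import Counter

# Hypothetical responses: hours of TV watched per day
hours = [0.5, 1.0, 1.5, 2.0, 2.2, 2.5, 2.8, 3.0, 3.5, 4.0, 5.5]

def bin_counts(values, width=1.0):
    """Count values per bin [low, low + width), including empty bins in between."""
    counts = Counter(int(v // width) for v in values)
    return {
        (i * width, (i + 1) * width): counts.get(i, 0)
        for i in range(min(counts), max(counts) + 1)
    }

bins = bin_counts(hours)
for (low, high), count in bins.items():
    # Each '#' stands for one respondent in that bin
    print(f"[{low:.0f}, {high:.0f}) {'#' * count}")
```

The tallest row of the printed chart corresponds to the most common range of viewing time, which is the information a histogram is meant to convey.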

Importance of Descriptive Statistics

Descriptive statistics are fundamental in the field of data analysis and interpretation, as they provide the first step in understanding a dataset. Here are a few reasons why descriptive statistics are important:

- Data Summarization : Descriptive statistics provide simple summaries about the measures and samples you have collected. With a large dataset, it’s often difficult to identify patterns or tendencies just by looking at the raw data. Descriptive statistics provide numerical and graphical summaries that can highlight important aspects of the data.

- Data Simplification : They simplify large amounts of data in a sensible way. Each descriptive statistic reduces lots of data into a simpler summary, making it easier to understand and interpret the dataset.

- Identification of Patterns and Trends : Descriptive statistics can help identify patterns and trends in the data, providing valuable insights. Measures like the mean and median can tell you about the central tendency of your data, while measures like the range and standard deviation tell you about the dispersion.

- Data Comparison : By summarizing data into measures such as the mean and standard deviation, it’s easier to compare different datasets or different groups within a dataset.

- Data Quality Assessment : Descriptive statistics can help identify errors or outliers in the data, which might indicate issues with data collection or entry.

- Foundation for Further Analysis : Descriptive statistics are typically the first step in data analysis. They help create a foundation for further statistical or inferential analysis. In fact, advanced statistical techniques often assume that one has first examined their data using descriptive methods.

When to use Descriptive Statistics

Descriptive statistics can be used in a wide range of situations, including:

- Understanding a New Dataset : When you first encounter a new dataset, using descriptive statistics is a useful first step to understand the main characteristics of the data, such as the central tendency, dispersion, and distribution.

- Data Exploration in Research : In the initial stages of a research project, descriptive statistics can help to explore the data, identify trends and patterns, and generate hypotheses for further testing.

- Presenting Research Findings : Descriptive statistics can be used to present research findings in a clear and understandable way, often using visual aids like graphs or charts.

- Monitoring and Quality Control : In fields like business or manufacturing, descriptive statistics are often used to monitor processes, track performance over time, and identify any deviations from expected standards.

- Comparing Groups : Descriptive statistics can be used to compare different groups or categories within your data. For example, you might want to compare the average scores of two groups of students, or the variance in sales between different regions.

- Reporting Survey Results : If you conduct a survey, you would use descriptive statistics to summarize the responses, such as calculating the percentage of respondents who agree with a certain statement.

Applications of Descriptive Statistics

Descriptive statistics are widely used in a variety of fields to summarize, represent, and analyze data. Here are some applications:

- Business : Businesses use descriptive statistics to summarize and interpret data such as sales figures, customer feedback, or employee performance. For instance, they might calculate the mean sales for each month to understand trends, or use graphical representations like bar charts to present sales data.

- Healthcare : In healthcare, descriptive statistics are used to summarize patient data, such as age, weight, blood pressure, or cholesterol levels. They are also used to describe the incidence and prevalence of diseases in a population.

- Education : Educators use descriptive statistics to summarize student performance, like average test scores or grade distribution. This information can help identify areas where students are struggling and inform instructional decisions.

- Social Sciences : Social scientists use descriptive statistics to summarize data collected from surveys, experiments, and observational studies. This can involve describing demographic characteristics of participants, response frequencies to survey items, and more.

- Psychology : Psychologists use descriptive statistics to describe the characteristics of their study participants and the main findings of their research, such as the average score on a psychological test.

- Sports : Sports analysts use descriptive statistics to summarize athlete and team performance, such as batting averages in baseball or points per game in basketball.

- Government : Government agencies use descriptive statistics to summarize data about the population, such as census data on population size and demographics.

- Finance and Economics : In finance, descriptive statistics can be used to summarize past investment performance or economic data, such as changes in stock prices or GDP growth rates.

- Quality Control : In manufacturing, descriptive statistics can be used to summarize measures of product quality, such as the average dimensions of a product or the frequency of defects.

Limitations of Descriptive Statistics

While descriptive statistics are a crucial part of data analysis and provide valuable insights about a dataset, they do have certain limitations:

- Lack of Depth : Descriptive statistics provide a summary of your data, but they can oversimplify the data, resulting in a loss of detail and potentially significant nuances.

- Vulnerability to Outliers : Some descriptive measures, like the mean, are sensitive to outliers. A single extreme value can significantly skew your mean, making it less representative of your data.

- Inability to Make Predictions : Descriptive statistics describe what has been observed in a dataset. They don’t allow you to make predictions or generalizations about unobserved data or larger populations.

- No Insight into Correlations : While some descriptive statistics can hint at potential relationships between variables, they don’t provide detailed insights into the nature or strength of these relationships.

- No Causality or Hypothesis Testing : Descriptive statistics cannot be used to determine cause and effect relationships or to test hypotheses. For these purposes, inferential statistics are needed.

- Can Mislead : When used improperly, descriptive statistics can be used to present a misleading picture of the data. For instance, choosing to only report the mean without also reporting the standard deviation or range can hide a large amount of variability in the data.

About the author

Muhammad Hassan

Researcher, Academic Writer, Web developer


Chapter 14 Quantitative Analysis: Descriptive Statistics

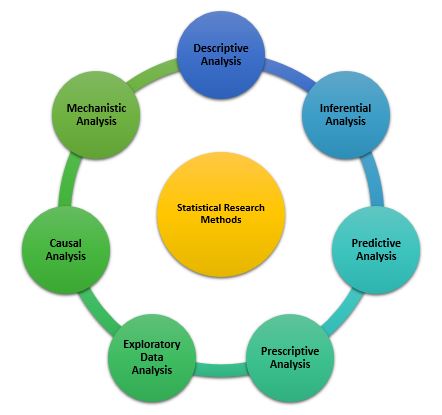

Numeric data collected in a research project can be analyzed quantitatively using statistical tools in two different ways. Descriptive analysis refers to statistically describing, aggregating, and presenting the constructs of interest or associations between these constructs. Inferential analysis refers to the statistical testing of hypotheses (theory testing). In this chapter, we will examine statistical techniques used for descriptive analysis, and the next chapter will examine statistical techniques for inferential analysis. Much of today’s quantitative data analysis is conducted using software programs such as SPSS or SAS. Readers are advised to familiarize themselves with one of these programs for understanding the concepts described in this chapter.

Data Preparation

In research projects, data may be collected from a variety of sources: mail-in surveys, interviews, pretest or posttest experimental data, observational data, and so forth. These data must be converted into a machine-readable, numeric format, such as a spreadsheet or a text file, so that they can be analyzed by computer programs like SPSS or SAS. Data preparation usually involves the following steps.

Data coding. Coding is the process of converting data into numeric format. A codebook should be created to guide the coding process. A codebook is a comprehensive document containing a detailed description of each variable in a research study, items or measures for that variable, the format of each item (numeric, text, etc.), the response scale for each item (i.e., whether it is measured on a nominal, ordinal, interval, or ratio scale; whether such scale is a five-point, seven-point, or some other type of scale), and how to code each value into a numeric format. For instance, if we have a measurement item on a seven-point Likert scale with anchors ranging from “strongly disagree” to “strongly agree”, we may code that item as 1 for strongly disagree, 4 for neutral, and 7 for strongly agree, with the intermediate anchors in between. Nominal data such as industry type can be coded in numeric form using a coding scheme such as: 1 for manufacturing, 2 for retailing, 3 for financial, 4 for healthcare, and so forth (of course, nominal data cannot be analyzed statistically). Ratio scale data such as age, income, or test scores can be coded as entered by the respondent. Sometimes, data may need to be aggregated into a different form than the format used for data collection. For instance, for measuring a construct such as “benefits of computers,” if a survey provided respondents with a checklist of benefits that they could select from (i.e., they could choose as many of those benefits as they wanted), then the total number of checked items can be used as an aggregate measure of benefits. Note that many other forms of data, such as interview transcripts, cannot be converted into a numeric format for statistical analysis.
Coding is especially important for large complex studies involving many variables and measurement items, where the coding process is conducted by different people, to help the coding team code data in a consistent manner, and also to help others understand and interpret the coded data.
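As a minimal sketch of how a codebook can drive coding, the Python snippet below stores two coding schemes as dictionaries and applies them. The labels of the intermediate Likert anchors, the checklist items, and the helper names `code_response` and `count_checked` are assumptions made for illustration only.

```python
# Hypothetical codebook: maps each text response to its numeric code.
# The intermediate anchor labels are assumed; only the end points and
# "neutral" come from the text.
LIKERT_7 = {
    "strongly disagree": 1,
    "disagree": 2,
    "somewhat disagree": 3,
    "neutral": 4,
    "somewhat agree": 5,
    "agree": 6,
    "strongly agree": 7,
}
INDUSTRY = {"manufacturing": 1, "retailing": 2, "financial": 3, "healthcare": 4}


def code_response(raw, scheme):
    """Return the numeric code for a raw text response, or None if unknown."""
    return scheme.get(raw.strip().lower())


def count_checked(checklist):
    """Aggregate a checklist (item -> bool) into the number of checked items."""
    return sum(1 for checked in checklist.values() if checked)


likert_code = code_response("Strongly Agree", LIKERT_7)
industry_code = code_response("retailing", INDUSTRY)
benefits = count_checked({"saves time": True, "fewer errors": False, "lower cost": True})
print(likert_code, industry_code, benefits)
```

Keeping the scheme in one shared structure is one way to help several coders apply the same codes consistently.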

Data entry. Coded data can be entered into a spreadsheet, database, text file, or directly into a statistical program like SPSS. Most statistical programs provide a data editor for entering data. However, these programs store data in their own native format (e.g., SPSS stores data as .sav files), which makes it difficult to share that data with other statistical programs. Hence, it is often better to enter data into a spreadsheet or database, where they can be reorganized as needed, shared across programs, and subsets of data can be extracted for analysis. Smaller data sets with fewer than 65,000 observations and 256 items can be stored in a spreadsheet such as Microsoft Excel, while larger data sets with millions of observations will require a database. Each observation can be entered as one row in the spreadsheet and each measurement item can be represented as one column. The entered data should be frequently checked for accuracy, via occasional spot checks on a set of items or observations, during and after entry. Furthermore, while entering data, the coder should watch out for obvious evidence of bad data, such as the respondent selecting the “strongly agree” response to all items irrespective of content, including reverse-coded items. If so, such data can be entered but should be excluded from subsequent analysis.

Missing values. Missing data is an inevitable part of any empirical data set. Respondents may not answer certain questions if they are ambiguously worded or too sensitive. Such problems should be detected during pretests and corrected before the main data collection process begins. During data entry, some statistical programs automatically treat blank entries as missing values, while others require a specific numeric value such as -1 or 999 to be entered to denote a missing value. During data analysis, the default mode of handling missing values in most software programs is to simply drop the entire observation containing even a single missing value, in a technique called listwise deletion. Such deletion can significantly shrink the sample size and make it extremely difficult to detect small effects. Hence, some software programs allow the option of replacing missing values with an estimated value via a process called imputation. For instance, if the missing value is one item in a multi-item scale, the imputed value may be the average of the respondent’s responses to the remaining items on that scale. If the missing value belongs to a single-item scale, many researchers use the average of other respondents’ responses to that item as the imputed value. Such imputation may be biased if the missing value is of a systematic nature rather than a random nature. Two methods that can produce relatively unbiased estimates for imputation are the maximum likelihood procedures and multiple imputation methods, both of which are supported in popular software programs such as SPSS and SAS.
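The difference between listwise deletion and simple mean imputation can be illustrated with a small Python sketch. The response values, the use of `None` as the missing-value marker, and the helper names are assumptions for illustration; this is not how any particular statistical package implements these options.

```python
MISSING = None  # assumed marker for a missing answer

# Hypothetical responses: one row per respondent, three items of one scale.
responses = [
    [5, 6, 7],
    [4, MISSING, 6],
    [2, 3, 1],
]


def listwise_delete(rows):
    """Keep only rows that contain no missing value."""
    return [row for row in rows if MISSING not in row]


def impute_row_mean(row):
    """Replace missing items with the mean of the respondent's answered items."""
    answered = [v for v in row if v is not MISSING]
    if not answered:
        return row  # nothing to base an estimate on
    mean = sum(answered) / len(answered)
    return [mean if v is MISSING else v for v in row]


complete_cases = listwise_delete(responses)
imputed = [impute_row_mean(row) for row in responses]
print(len(complete_cases))   # one respondent is dropped entirely
print(imputed[1])            # the gap is filled with the respondent's own mean
```

Deletion shrinks the sample from three respondents to two, while imputation keeps all three at the cost of an estimated value.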

Data transformation. Sometimes, it is necessary to transform data values before they can be meaningfully interpreted. For instance, reverse coded items, where items convey the opposite meaning of that of their underlying construct, should be reversed (e.g., in a 1-7 interval scale, 8 minus the observed value will reverse the value) before they can be compared or combined with items that are not reverse coded. Other kinds of transformations may include creating scale measures by adding individual scale items, creating a weighted index from a set of observed measures, and collapsing multiple values into fewer categories (e.g., collapsing incomes into income ranges).
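The transformations described above can be sketched in a few lines of Python. The item values and the income cut-offs are hypothetical, and the function names are chosen only for this example.

```python
def reverse_code(value, scale_min=1, scale_max=7):
    """Reverse a score on a scale; on a 1-7 scale this equals 8 minus the value."""
    return scale_min + scale_max - value


def income_range(income):
    """Collapse an income into a coarse category (cut-offs are hypothetical)."""
    if income < 25_000:
        return "low"
    if income < 75_000:
        return "middle"
    return "high"


items = [2, 6, 7]                  # hypothetical answers; the second item is reverse-coded
items[1] = reverse_code(items[1])  # 8 - 6
scale_score = sum(items)           # scale measure built by adding the items
print(items, scale_score, income_range(40_000))
```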

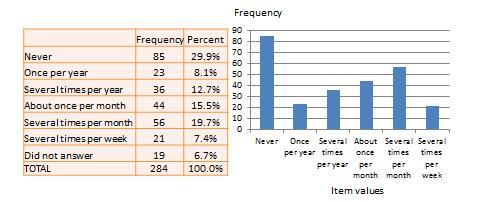

Univariate Analysis

Univariate analysis, or analysis of a single variable, refers to a set of statistical techniques that can describe the general properties of one variable. Univariate statistics include: (1) frequency distribution, (2) central tendency, and (3) dispersion. The frequency distribution of a variable is a summary of the frequency (or percentages) of individual values or ranges of values for that variable. For instance, we can measure how many times a sample of respondents attend religious services (as a measure of their “religiosity”) using a categorical scale: never, once per year, several times per year, about once a month, several times per month, several times per week, and an optional category for “did not answer.” If we count the number (or percentage) of observations within each category (except “did not answer” which is really a missing value rather than a category), and display it in the form of a table as shown in Figure 14.1, what we have is a frequency distribution. This distribution can also be depicted in the form of a bar chart, as shown on the right panel of Figure 14.1, with the horizontal axis representing each category of that variable and the vertical axis representing the frequency or percentage of observations within each category.

Figure 14.1. Frequency distribution of religiosity.
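A frequency distribution of this kind can be tabulated with a short Python sketch. The answers below are invented for illustration and are not the data behind Figure 14.1; `None` is assumed to stand for "did not answer".

```python
from collections import Counter

# Hypothetical responses; None stands for "did not answer".
answers = ["never", "once per year", "never", None,
           "about once a month", "never", "once per year"]

valid = [a for a in answers if a is not None]   # treat non-answers as missing
counts = Counter(valid)
percentages = {cat: 100 * n / len(valid) for cat, n in counts.items()}

# One row per category: label, frequency, percentage of valid answers.
for category, n in counts.most_common():
    print(f"{category:20s} {n:3d} {percentages[category]:5.1f}%")
```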

With very large samples where observations are independent and random, the frequency distribution tends to follow a plot that looks like a bell-shaped curve (a smoothed bar chart of the frequency distribution) similar to that shown in Figure 14.2, where most observations are clustered toward the center of the range of values, with fewer and fewer observations toward the extreme ends of the range. Such a curve is called a normal distribution.

Central tendency is an estimate of the center of a distribution of values. There are three major estimates of central tendency: mean, median, and mode. The arithmetic mean (often simply called the “mean”) is the simple average of all values in a given distribution. Consider a set of eight test scores: 15, 22, 21, 18, 36, 15, 25, 15. The arithmetic mean of these values is (15 + 22 + 21 + 18 + 36 + 15 + 25 + 15)/8 = 20.875. Other types of means include the geometric mean (the nth root of the product of n numbers in a distribution) and the harmonic mean (the reciprocal of the arithmetic mean of the reciprocals of the values in a distribution), but these means are not very popular for statistical analysis of social research data.

The second measure of central tendency, the median, is the middle value within a range of values in a distribution. This is computed by sorting all values in a distribution in increasing order and selecting the middle value. In case there are two middle values (if there is an even number of values in a distribution), the average of the two middle values represents the median. In the above example, the sorted values are: 15, 15, 15, 18, 21, 22, 25, 36. The two middle values are 18 and 21, and hence the median is (18 + 21)/2 = 19.5.

Lastly, the mode is the most frequently occurring value in a distribution of values. In the previous example, the most frequently occurring value is 15, which is the mode of the above set of test scores. Note that any value that is estimated from a sample, such as the mean, median, mode, or any of the later estimates, is called a statistic.
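The three estimates can be checked for the eight test scores with Python's standard `statistics` module. This is only a quick sketch for verifying hand calculations.

```python
import statistics

scores = [15, 22, 21, 18, 36, 15, 25, 15]

mean = statistics.mean(scores)      # sum of the scores divided by 8
median = statistics.median(scores)  # average of the two middle sorted values
mode = statistics.mode(scores)      # most frequently occurring value

print(sorted(scores))
print(mean, median, mode)
```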

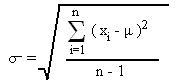

Dispersion refers to the way values are spread around the central tendency, for example, how tightly or how widely the values are clustered around the mean. Two common measures of dispersion are the range and standard deviation. The range is the difference between the highest and lowest values in a distribution. The range in our previous example is 36-15 = 21.

The range is particularly sensitive to the presence of outliers. For instance, if the highest value in the above distribution was 85 and the other values remained the same, the range would be 85-15 = 70. Standard deviation, the second measure of dispersion, corrects for such outliers by using a formula that takes into account how close or how far each value is from the distribution mean.
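A minimal sketch of both dispersion measures for the eight test scores follows. It assumes the sample form of the standard deviation (divisor n − 1) and shows the population form (divisor n) alongside for comparison; which form applies depends on the analysis.

```python
import statistics

scores = [15, 22, 21, 18, 36, 15, 25, 15]

value_range = max(scores) - min(scores)      # 36 - 15
sample_sd = statistics.stdev(scores)         # divisor n - 1 (assumed form)
population_sd = statistics.pstdev(scores)    # divisor n, for comparison

# Replacing the top score with an outlier inflates the range directly.
with_outlier = [85 if s == 36 else s for s in scores]
outlier_range = max(with_outlier) - min(with_outlier)   # 85 - 15

print(value_range, outlier_range)
print(round(sample_sd, 2), round(population_sd, 2))
```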

Figure 14.2. Normal distribution.

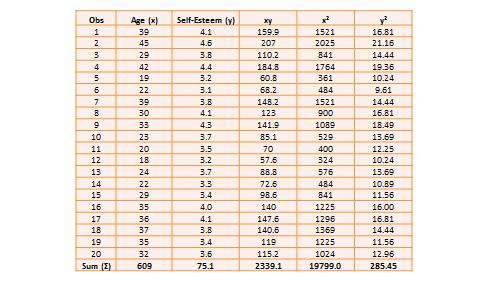

Table 14.1. Hypothetical data on age and self-esteem.

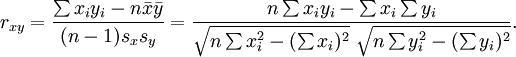

The two variables in this dataset are age (x) and self-esteem (y). Age is a ratio-scale variable, while self-esteem is an average score computed from a multi-item self-esteem scale measured using a 7-point Likert scale, ranging from “strongly disagree” to “strongly agree.” The histogram of each variable is shown on the left side of Figure 14.3. The formula for calculating bivariate correlation is:

Figure 14.3. Histogram and correlation plot of age and self-esteem.

After computing bivariate correlation, researchers are often interested in knowing whether the correlation is significant (i.e., a real one) or caused by mere chance. Answering such a question would require testing the following hypothesis:

H 0 : r = 0

H 1 : r ≠ 0

H 0 is called the null hypothesis, and H 1 is called the alternative hypothesis (sometimes also represented as H a ). Although they may seem like two hypotheses, H 0 and H 1 actually represent a single hypothesis since they are direct opposites of each other. We are interested in testing H 1 rather than H 0 . Also note that H 1 is a non-directional hypothesis since it does not specify whether r is greater than or less than zero. Directional hypotheses will be specified as H 0 : r ≤ 0; H 1 : r > 0 (if we are testing for a positive correlation). Significance testing of a directional hypothesis is done using a one-tailed t-test, while that for a non-directional hypothesis is done using a two-tailed t-test.

In statistical testing, the alternative hypothesis cannot be tested directly. Rather, it is tested indirectly by rejecting the null hypothesis with a certain level of probability. Statistical testing is always probabilistic, because we are never sure if our inferences, based on sample data, apply to the population, since our sample never equals the population. The probability that a statistical inference is caused by pure chance is called the p-value. The p-value is compared with the significance level (α), which represents the maximum level of risk that we are willing to take that our inference is incorrect. For most statistical analysis, α is set to 0.05. A p-value less than α=0.05 indicates that we have enough statistical evidence to reject the null hypothesis, and thereby, indirectly accept the alternative hypothesis. If p>0.05, then we do not have adequate statistical evidence to reject the null hypothesis or accept the alternative hypothesis.

The easiest way to test the above hypothesis is to look up critical values of r from statistical tables available in any standard textbook on statistics or on the Internet (most software programs also perform significance testing). The critical value of r depends on our desired significance level (α = 0.05), the degrees of freedom (df), and whether the desired test is a one-tailed or two-tailed test. The degrees of freedom is the number of values that can vary freely in any calculation of a statistic. In the case of correlation, the df simply equals n – 2, or for the data in Table 14.1, df is 20 – 2 = 18. There are two different statistical tables for one-tailed and two-tailed tests. In the two-tailed table, the critical value of r for α = 0.05 and df = 18 is 0.44. For our computed correlation of 0.79 to be significant, it must be larger than the critical value of 0.44 or less than -0.44. Since our computed value of 0.79 is greater than 0.44, we conclude that there is a significant correlation between age and self-esteem in our data set, or in other words, the odds are less than 5% that this correlation is a chance occurrence. Therefore, we can reject the null hypothesis that r = 0, which is an indirect way of saying that the alternative hypothesis r ≠ 0 is probably correct.
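The table lookup can be cross-checked with a short Python sketch. It relies on the standard relationship between r and the t statistic, t = r·√(df / (1 − r²)), and takes the two-tailed critical t for α = 0.05 and df = 18 (2.101) from a standard t table. The function names are chosen for this example, and `pearson_r` is a generic implementation rather than a reproduction of the calculation in Table 14.1.

```python
import math


def pearson_r(x, y):
    """Pearson correlation of two equal-length numeric sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def critical_r(t_crit, df):
    """Convert a critical t value into the equivalent critical r."""
    return t_crit / math.sqrt(t_crit ** 2 + df)


T_CRIT_TWO_TAILED = 2.101   # tabulated t for alpha = 0.05, df = 18 (two-tailed)
df = 20 - 2
r_crit = critical_r(T_CRIT_TWO_TAILED, df)
r_observed = 0.79           # correlation reported in the text
t_observed = r_observed * math.sqrt(df / (1 - r_observed ** 2))

print(round(r_crit, 2))                 # critical r, rounded as in printed tables
print(abs(r_observed) > r_crit)         # True means significant at alpha = 0.05
```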

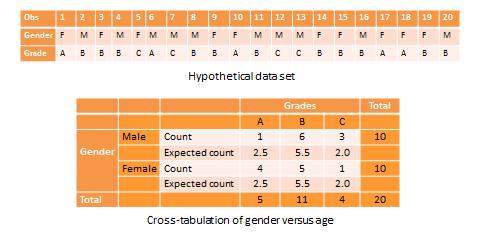

Most research studies involve more than two variables. If there are n variables, then we will have a total of n*(n-1)/2 possible correlations between these n variables. Such correlations are easily computed using a software program like SPSS, rather than manually using the formula for correlation (as we did in Table 14.1), and represented using a correlation matrix, as shown in Table 14.2. A correlation matrix is a matrix that lists the variable names along the first row and the first column, and depicts bivariate correlations between pairs of variables in the appropriate cell in the matrix. The values along the principal diagonal (from the top left to the bottom right corner) of this matrix are always 1, because any variable is always perfectly correlated with itself. Further, since correlations are non-directional, the correlation between variables V1 and V2 is the same as that between V2 and V1. Hence, the lower triangular matrix (values below the principal diagonal) is a mirror reflection of the upper triangular matrix (values above the principal diagonal), and therefore, we often list only the lower triangular matrix for simplicity. If the correlations involve variables measured using interval scales, then these correlations are called Pearson product moment correlations.
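The structure of a correlation matrix (ones on the diagonal, symmetric off-diagonal cells, n*(n-1)/2 distinct pairs) can be sketched in Python. The three variables and their values are hypothetical and unrelated to Table 14.2.

```python
from itertools import combinations
import math

# Hypothetical scores for three variables measured on the same five cases.
data = {
    "V1": [1, 2, 3, 4, 5],
    "V2": [2, 4, 5, 4, 5],
    "V3": [5, 3, 4, 2, 1],
}


def pearson_r(x, y):
    """Pearson correlation of two equal-length numeric sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


names = list(data)
pairs = list(combinations(names, 2))       # n * (n - 1) / 2 distinct pairs
matrix = {(a, a): 1.0 for a in names}      # principal diagonal
for a, b in pairs:
    # Correlations are non-directional, so both cells get the same value.
    matrix[(a, b)] = matrix[(b, a)] = pearson_r(data[a], data[b])

# Print only the lower triangle, including the diagonal.
for i, row in enumerate(names):
    print(row, " ".join(f"{matrix[(row, col)]:6.2f}" for col in names[: i + 1]))
```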

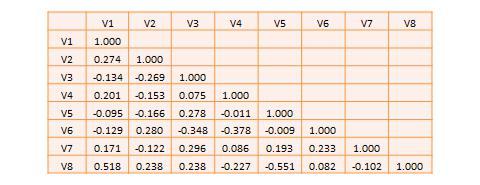

Another useful way of presenting bivariate data is cross-tabulation (often abbreviated to cross-tab, and sometimes more formally called a contingency table). A cross-tab is a table that describes the frequency (or percentage) of all combinations of two or more nominal or categorical variables. As an example, let us assume that we have the following observations of gender and grade for a sample of 20 students, as shown in Table 14.3. Gender is a nominal variable (male/female or M/F), and grade is a categorical variable with three levels (A, B, and C). A simple cross-tabulation of the data may display the joint distribution of gender and grades (i.e., how many students of each gender are in each grade category, as a raw frequency count or as a percentage) in a 2 x 3 matrix. This matrix will help us see if A, B, and C grades are equally distributed across male and female students. The cross-tab data in Table 14.3 shows that the distribution of A grades is biased heavily toward female students: in a sample of 10 male and 10 female students, five female students received the A grade compared to only one male student. In contrast, the distribution of C grades is biased toward male students: three male students received a C grade, compared to only one female student. However, the distribution of B grades was somewhat uniform, with six male students and five female students. The last row and the last column of this table are called marginal totals because they indicate the totals across each category and are displayed along the margins of the table.

Table 14.2. A hypothetical correlation matrix for eight variables.

Table 14.3. Example of cross-tab analysis.

Although we can see a distinct pattern of grade distribution between male and female students in Table 14.3, is this pattern real or “statistically significant”? In other words, do the above frequency counts differ from those that may be expected from pure chance? To answer this question, we should compute the expected count of observations in each cell of the 2 x 3 cross-tab matrix. This is done by multiplying the marginal column total and the marginal row total for each cell and dividing it by the total number of observations. For example, for the male/A grade cell, expected count = 5 * 10 / 20 = 2.5. In other words, we were expecting 2.5 male students to receive an A grade, but in reality, only one student received the A grade. Whether this difference between expected and actual count is significant can be tested using a chi-square test. The chi-square statistic is computed by summing, across all cells, the squared difference between the observed and expected counts divided by the expected count. We can then compare this number to the critical value associated with a desired probability level (p < 0.05) and the degrees of freedom, which is simply (m-1)*(n-1), where m and n are the number of rows and columns respectively. In this example, df = (2 – 1) * (3 – 1) = 2. From standard chi-square tables in any statistics book, the critical chi-square value for p=0.05 and df=2 is 5.99. The computed chi-square value, based on our observed data, is 1.00, which is less than the critical value. Hence, we must conclude that the observed grade pattern is not statistically different from the pattern that can be expected by pure chance.
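The expected-count and chi-square calculation can be sketched in Python using the standard Pearson chi-square statistic, the sum of (observed − expected)² / expected over all cells. The cell counts below are illustrative assumptions chosen so that the male/A expected count is 2.5; they are not the cells of Table 14.3, so the resulting statistic is not the value reported in the text.

```python
# Illustrative counts only (rows: male, female; columns: A, B, C).
# They are assumed for this sketch and are not the cells of Table 14.3.
observed = [
    [1, 6, 3],
    [4, 5, 1],
]

row_totals = [sum(row) for row in observed]
col_totals = [sum(col) for col in zip(*observed)]
total = sum(row_totals)

# Expected count = row total * column total / grand total.
expected = [[r * c / total for c in col_totals] for r in row_totals]

# Pearson chi-square: sum of (observed - expected)^2 / expected over all cells.
chi_square = sum(
    (o - e) ** 2 / e
    for obs_row, exp_row in zip(observed, expected)
    for o, e in zip(obs_row, exp_row)
)
df = (len(observed) - 1) * (len(observed[0]) - 1)
CRITICAL_5PCT_DF2 = 5.99   # tabulated critical value for p = 0.05, df = 2

print(expected[0][0], df, round(chi_square, 2))
print(chi_square > CRITICAL_5PCT_DF2)   # False: pattern not significant at 0.05
```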

- Social Science Research: Principles, Methods, and Practices. Authored by : Anol Bhattacherjee. Provided by : University of South Florida. Located at : http://scholarcommons.usf.edu/oa_textbooks/3/ . License : CC BY-NC-SA: Attribution-NonCommercial-ShareAlike

Child Care and Early Education Research Connections

Descriptive Statistics

This page describes graphical and pictorial methods of descriptive statistics and the three most common measures of descriptive statistics (central tendency, dispersion, and association).

Descriptive statistics can be useful for two purposes: 1) to provide basic information about variables in a dataset and 2) to highlight potential relationships between variables. The three most common descriptive statistics can be displayed graphically or pictorially and are measures of:

- Central tendency
- Dispersion
- Association

Graphical/Pictorial Methods

There are several graphical and pictorial methods that enhance researchers' understanding of individual variables and the relationships between variables. Graphical and pictorial methods provide a visual representation of the data. Some of these methods include:

- Histograms
- Scatter plots
- Geographic Information Systems (GIS)
- Sociograms

Histograms

- Visually represent the frequencies with which values of variables occur
- Each value of a variable is displayed along the bottom of a histogram, and a bar is drawn for each value
- The height of the bar corresponds to the frequency with which that value occurs

Scatter plots

- Display the relationship between two quantitative or numeric variables by plotting one variable against the value of another variable
- For example, one axis of a scatter plot could represent height and the other could represent weight. Each person in the data would receive one data point on the scatter plot that corresponds to his or her height and weight

Geographic Information Systems (GIS)

- A GIS is a computer system capable of capturing, storing, analyzing, and displaying geographically referenced information; that is, data identified according to location
- Using a GIS program, a researcher can create a map to represent data relationships visually

Sociograms

- Display networks of relationships among variables, enabling researchers to identify the nature of relationships that would otherwise be too complex to conceptualize


Measures of central tendency are the most basic and, often, the most informative description of a population's characteristics. They describe the "average" member of the population of interest. There are three measures of central tendency:

- Mean -- the sum of a variable's values divided by the total number of values
- Median -- the middle value of a variable
- Mode -- the value that occurs most often

Example: The incomes of five randomly selected people in the United States are $10,000, $10,000, $45,000, $60,000, and $1,000,000.

- Mean Income = (10,000 + 10,000 + 45,000 + 60,000 + 1,000,000) / 5 = $225,000
- Median Income = $45,000
- Modal Income = $10,000

The mean is the most commonly used measure of central tendency. Medians are generally used when a few values are extremely different from the rest of the values (this is called a skewed distribution). For example, the median income is often the best measure of the average income because, while most individuals earn between $0 and $200,000, a handful of individuals earn millions.


Measures of dispersion provide information about the spread of a variable's values. There are four key measures of dispersion:

- Range
- Variance
- Standard deviation
- Skew

Range is simply the difference between the smallest and largest values in the data. The interquartile range is the difference between the values at the 75th percentile and the 25th percentile of the data.

Variance is the most commonly used measure of dispersion. It is calculated by taking the average of the squared differences between each value and the mean.

Standard deviation , another commonly used statistic, is the square root of the variance.

Skew is a measure of whether some values of a variable are extremely different from the majority of the values. For example, income is skewed because most people make between $0 and $200,000, but a handful of people earn millions. A variable is positively skewed if the extreme values are higher than the majority of values. A variable is negatively skewed if the extreme values are lower than the majority of values.

Example: The incomes of five randomly selected people in the United States are $10,000, $10,000, $45,000, $60,000, and $1,000,000:

- Range = 1,000,000 - 10,000 = 990,000
- Variance = [(10,000 - 225,000)² + (10,000 - 225,000)² + (45,000 - 225,000)² + (60,000 - 225,000)² + (1,000,000 - 225,000)²] / 5 = 150,540,000,000
- Standard Deviation = Square Root (150,540,000,000) = 387,995
- Skew = Income is positively skewed
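These figures can be reproduced with Python's `statistics` module. The sketch uses the population forms `pvariance` and `pstdev`, because the example divides by the number of values (5). Comparing the mean with the median is used here only as a rough indicator of skew, not as a formal skewness coefficient.

```python
import statistics

incomes = [10_000, 10_000, 45_000, 60_000, 1_000_000]

mean = statistics.mean(incomes)
median = statistics.median(incomes)
income_range = max(incomes) - min(incomes)
variance = statistics.pvariance(incomes)   # divides by n, as in the example
std_dev = statistics.pstdev(incomes)       # square root of that variance

# A mean far above the median is a simple sign of positive skew.
positively_skewed = mean > median

print(mean, median, income_range)
print(variance, round(std_dev), positively_skewed)
```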


Measures of association indicate whether two variables are related. Two measures are commonly used:

- Chi-square
- Correlation

As a measure of association between variables, chi-square tests are used on nominal data (i.e., data that are put into classes: e.g., gender [male, female] and type of job [unskilled, semi-skilled, skilled]) to determine whether they are associated*

A chi-square is called significant if there is an association between two variables, and nonsignificant if there is not an association

To test for associations, a chi-square is calculated in the following way: Suppose a researcher wants to know whether there is a relationship between gender and two types of jobs, construction worker and administrative assistant. To perform a chi-square test, the researcher counts up the number of female administrative assistants, the number of female construction workers, the number of male administrative assistants, and the number of male construction workers in the data. These counts are compared with the number that would be expected in each category if there were no association between job type and gender (this expected count is based on statistical calculations). If there is a large difference between the observed values and the expected values, the chi-square test is significant, which indicates there is an association between the two variables.

*The chi-square test can also be used as a measure of goodness of fit, to test if data from a sample come from a population with a specific distribution, as an alternative to Anderson-Darling and Kolmogorov-Smirnov goodness-of-fit tests. As such, the chi square test is not restricted to nominal data; with non-binned data, however, the results depend on how the bins or classes are created and the size of the sample

A correlation coefficient is used to measure the strength of the relationship between numeric variables (e.g., weight and height)

The most common correlation coefficient is Pearson's r , which can range from -1 to +1.

If the coefficient is between 0 and 1, as one variable increases, the other also increases. This is called a positive correlation. For example, height and weight are positively correlated because taller people usually weigh more

If the correlation coefficient is between -1 and 0, as one variable increases the other decreases. This is called a negative correlation. For example, age and hours slept per night are negatively correlated because older people usually sleep fewer hours per night



Introduction to Descriptive Statistics

Submitted: 04 July 2023 Reviewed: 20 July 2023 Published: 07 September 2023

DOI: 10.5772/intechopen.1002475

From the Edited Volume

Recent Advances in Biostatistics

B. Santhosh Kumar


This chapter offers a comprehensive exploration of descriptive statistics, tracing its historical development from Condorcet’s “average” concept to Galton and Pearson’s contributions. Emphasizing its pivotal role in academia, descriptive statistics serve as a fundamental tool for summarizing and analyzing data across disciplines. The chapter underscores how descriptive statistics drive research inspiration and guide analysis, and provide a foundation for advanced statistical techniques. It delves into their historical context, highlighting their organizational and presentational significance. Furthermore, the chapter accentuates the advantages of descriptive statistics in academia, including their ability to succinctly represent complex data, aid decision-making, and enhance research communication. It highlights the potency of visualization in discerning data patterns and explores emerging trends like large dataset analysis, Bayesian statistics, and nonparametric methods. Sources of variance intrinsic to descriptive statistics, such as sampling fluctuations, measurement errors, and outliers, are discussed, stressing the importance of considering these factors in data interpretation.

- academic research

- data analysis

- data visualization

- decision-making

- research methodology

- data summarization

Author Information

Olubunmi Alabi *

- African University of Science and Technology, Abuja, Nigeria

Tosin Bukola

- University of Greenwich, London, United Kingdom

*Address all correspondence to: [email protected]

1. Introduction

Descriptive statistics got their start when the French mathematician and philosopher Condorcet established the idea of the “average” as a means to summarize data. Yet the widespread use of descriptive statistics in academic study did not begin until the 19th century. Francis Galton, who was interested in the study of human features and attributes, was one of the major forerunners of descriptive statistics. Galton introduced statistical concepts that are still frequently applied in academic research today, such as correlation and regression. In the late 19th and early 20th centuries, the English statistician and mathematician Karl Pearson helped popularize the term “normal distribution” for the bell-shaped curve that characterizes the distribution of many natural phenomena. Pearson also developed several measures of correlation and introduced the chi-square test, which evaluates the significance of differences between observed and expected frequencies. In the middle of the 20th century, the development of electronic computers sparked a revolution in statistical analysis, alongside the growing use of methods such as multivariate analysis and factor analysis. Descriptive statistics is the analysis and summarization of data to gain insights into its characteristics and distribution [ 1 ].

Descriptive statistics help researchers generate study ideas and guide further analysis by allowing them to explore data patterns and trends [ 2 ]. They came to be used more often in academic research because they helped researchers better comprehend their datasets and served as a basis for more sophisticated statistical techniques. Descriptive statistics are used to summarize and analyze data in a variety of academic areas, including psychology, sociology, economics, education, and epidemiology [ 3 ]. They continue to be a crucial research tool in academia today, giving researchers a way to compile and analyze data from many fields. Thanks to the development of new statistical techniques and computer tools, it is now simpler than ever to analyze and understand data, enabling researchers to make better-informed judgments based on their results. Descriptive statistics can support hypothesis generation and exploratory analysis by identifying trends, patterns, and correlations between variables in large datasets [ 4 ]. They are also important in data-driven decision-making because they allow stakeholders to make informed decisions based on reliable data [ 5 ].

2. Background

The history of descriptive statistics may be traced back to the 17th century, when early pioneers like John Graunt and William Petty laid the groundwork for statistical analysis [ 6 ]. Descriptive statistics is a fundamental concept in academia that is widely used across many disciplines, including social sciences, economics, medicine, engineering, and business. It provides a basis for understanding data by organizing, summarizing, and presenting information effectively [ 7 ]. In academia, descriptive statistics are used to summarize and analyze data, providing insights into the patterns, trends, and characteristics of a dataset. In academic research, they are often used as a preliminary analysis technique to gain a better understanding of the dataset before applying more complex statistical methods. Descriptive statistics lay the groundwork for inferential statistics, which help researchers draw inferences about a population based on observed sample data [ 8 ]. Descriptive statistics also aid in the identification and analysis of outliers, which can give useful insights into unusual observations or data collection problems [ 9 ].

Descriptive statistics enable researchers to synthesize both quantitative and qualitative data, allowing for a thorough examination of the variables involved [ 10 ]. They provide valuable information about the central tendency, variability, and distribution of the data, allowing researchers to make informed decisions about the appropriate statistical techniques to use. Descriptive statistics are an essential component of survey research methodology, allowing researchers to efficiently summarize and display survey results [ 11 ]. Beyond summarizing data, descriptive statistics may be used to spot outliers, that is, observations that depart dramatically from the trend of the data as a whole. Finding outliers can help researchers spot issues or abnormalities in the data so they can make the necessary corrections. In academic research, descriptive statistics are frequently employed to address research questions and evaluate hypotheses. For instance, they can be used to compare the average scores of two groups to see how much they differ, although judging whether that difference is statistically significant requires an inferential test. Descriptive statistics may also be used to find patterns and correlations in the data in order to create new hypotheses or support existing ideas.
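As an illustration of the ideas above, the following minimal sketch summarizes two groups and flags outliers using Python's standard `statistics` module. The scores are made up, the helper names `summarize` and `iqr_outliers` are hypothetical, and the 1.5 × IQR fence is one common convention assumed here rather than a rule taken from this chapter.

```python
import statistics


def summarize(values):
    """Central tendency and variability for one group of numbers."""
    return {
        "n": len(values),
        "mean": statistics.mean(values),
        "median": statistics.median(values),
        "stdev": statistics.stdev(values),  # sample standard deviation
    }


def iqr_outliers(values, k=1.5):
    """Values lying more than k * IQR outside the quartiles (assumed rule)."""
    q1, _, q3 = statistics.quantiles(values, n=4)  # default 'exclusive' method
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]


# Made-up test scores for two groups; group_b contains one suspicious value.
group_a = [72, 75, 78, 80, 81, 83, 85]
group_b = [60, 64, 66, 68, 70, 71, 120]

for name, scores in (("A", group_a), ("B", group_b)):
    stats = summarize(scores)
    print(
        f"group {name}: mean={stats['mean']:.2f} "
        f"median={stats['median']} stdev={stats['stdev']:.2f} "
        f"outliers={iqr_outliers(scores)}"
    )
```

In this example the single extreme score in group B pulls its mean well above its median and inflates its standard deviation, which is the kind of discrepancy that prompts a closer look at the data before any formal comparison of the groups.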

Several sources of variation can affect the descriptive statistics of a data set:

- Sampling variation: descriptive statistics are often calculated from a sample of data rather than the entire population, so their values vary depending on the particular sample that is selected.

- Measurement variation: different measurement methods can produce different results, leading to variation in descriptive statistics. For example, if a scale is used to measure the weight of objects, slight differences in how the scale is used can produce slightly different measurements.

- Data entry errors: mistakes made during the data entry process can distort descriptive statistics. Even small errors, such as transposing two digits, can significantly affect the results.

- Outliers: extreme values that fall outside the expected range can skew descriptive statistics, making the data appear more or less extreme than they actually are.

- Natural variation: the inherent variability in the data itself. For example, if a data set contains measurements of the heights of trees, the heights will naturally vary from tree to tree.

It is important to understand these sources of variation when interpreting and using descriptive statistics in academia. Properly accounting for them helps ensure that the descriptive statistics accurately reflect the underlying data.
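Two of these sources, sampling variation and data entry errors, are easy to demonstrate numerically. The sketch below is illustrative only: the population of tree heights is simulated, and the seed, sample size, and the mistyped value are all assumptions chosen for the example.

```python
import random
import statistics

# Hypothetical population: 1,000 simulated tree heights in metres.
rng = random.Random(42)  # fixed seed so the run is repeatable
population = [rng.gauss(15.0, 3.0) for _ in range(1000)]

# Sampling variation: each random sample of 20 trees gives a different mean.
sample_means = [
    statistics.mean(rng.sample(population, 20)) for _ in range(5)
]
print("population mean:", round(statistics.mean(population), 2))
print("sample means:   ", [round(m, 2) for m in sample_means])

# Data entry error: 18 is mistyped as 81 (two digits transposed).
correct = [12, 15, 18, 14, 16]
mistyped = [12, 15, 81, 14, 16]
print("mean:  ", statistics.mean(correct), "->", statistics.mean(mistyped))
print("median:", statistics.median(correct), "->", statistics.median(mistyped))
```

In the data entry example, the single transposed value moves the mean from 15 to 27.6 while the median stays at 15, which is one reason the median is often reported alongside the mean when data quality is uncertain.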

Some emerging patterns in descriptive statistics in academia include:

- Big data analysis: with the increasing availability of large data sets, researchers are using descriptive statistics to identify patterns and trends in the data. Big data analysis techniques, such as machine learning and data mining, are becoming more common in academic research.

- Visualization techniques: advances in data visualization are enabling researchers to identify patterns in data sets more easily. For example, heat maps and scatter plots can be used to visualize the relationship between different variables.

- Bayesian statistics: an emerging area of academic research that uses probability theory to make inferences about data. Bayesian methods can improve estimates of descriptive quantities, particularly when dealing with complex data sets.

- Non-parametric statistics: these methods are becoming increasingly popular, particularly for data sets that do not meet the assumptions of traditional parametric statistical tests. Non-parametric tests do not require the data to be normally distributed and can be more robust to outliers.

- Open science practices: practices such as pre-registration and data sharing are becoming more common. They make it easier for researchers to replicate and verify the results of descriptive statistical analyses, which can improve the quality and reliability of research findings.

Overall, the emerging patterns in descriptive statistics in academia reflect the increasing availability of data, the need for more accurate and robust statistical techniques, and a growing emphasis on transparency and openness in research practices.

3. Benefits of descriptive statistics

The advantages of descriptive statistics extend beyond research and academia, with applications in commercial decision-making, public policy, and strategic planning [ 12 ]. The benefits of descriptive statistics include providing a clear and concise summary of data, aiding decision-making, and facilitating effective communication of findings [ 13 ]. In academia, these benefits include:

- Summarization of data: descriptive statistics allow researchers to quickly and efficiently summarize large data sets, providing a snapshot of the key characteristics of the data. This can help researchers identify patterns and trends and simplify complex data sets.

- Better decision-making: descriptive statistics can help researchers make data-driven decisions. For example, if a researcher is comparing the effectiveness of two different treatments, descriptive statistics can indicate which treatment appears more effective based on the data.

- Visualization of data: descriptive statistics can be used to create visualizations of data, which can make it easier to communicate research findings to others. Histograms, bar charts, and scatterplots are examples of data visualization techniques that may be used to graphically depict data in order to detect trends, outliers, and correlations [ 14 ]. Visualizations can also reveal patterns and trends that might not be immediately apparent from raw data.

- Hypothesis testing: descriptive statistics are often used in hypothesis testing, which allows researchers to determine whether a particular hypothesis about a data set is supported by the data. This can help to validate research findings and increase confidence in the conclusions drawn from the data.

- Improved data quality: descriptive statistics can help to identify errors or inconsistencies in the data, which helps researchers improve its quality. This can lead to more accurate research findings and a better understanding of the underlying phenomena.

Overall, the benefits of descriptive statistics in academia are many and varied. They help researchers summarize large data sets, make data-driven decisions, visualize data, validate research findings, and improve the quality of the data. By using descriptive statistics, researchers can gain valuable insights into complex data sets and make more informed decisions based on the data.
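The counting step behind a histogram can be sketched without any plotting library. The example below is a minimal illustration with made-up integer scores; the helper name `bin_counts` and the bin width of 10 are assumptions, and bins that contain no values are simply omitted from the result.

```python
from collections import Counter


def bin_counts(values, bin_width):
    """Return sorted (bin_start, count) pairs for equal-width bins."""
    # Floor each value to the start of its bin, then count per bin.
    counts = Counter((v // bin_width) * bin_width for v in values)
    return sorted(counts.items())


# Made-up exam scores (integers) used purely for illustration.
scores = [52, 55, 61, 64, 67, 68, 70, 71, 73, 75, 78, 82, 85, 91]
bins = bin_counts(scores, bin_width=10)

# Print a simple text histogram: one '#' per observation in the bin.
for start, count in bins:
    print(f"{start:>3}-{start + 9:<3} {'#' * count}")
```

Even in this text form, the shape of the distribution is visible at a glance: most of the made-up scores fall in the 60s and 70s, with few at either end.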

4. Practical applications of descriptive statistics