
Content Analysis | Guide, Methods & Examples

Published on July 18, 2019 by Amy Luo. Revised on June 22, 2023.

Content analysis is a research method used to identify patterns in recorded communication. To conduct content analysis, you systematically collect data from a set of texts, which can be written, oral, or visual:

- Books, newspapers and magazines

- Speeches and interviews

- Web content and social media posts

- Photographs and films

Content analysis can be both quantitative (focused on counting and measuring) and qualitative (focused on interpreting and understanding). In both types, you categorize or “code” words, themes, and concepts within the texts and then analyze the results.


What is content analysis used for?

Researchers use content analysis to find out about the purposes, messages, and effects of communication content. They can also make inferences about the producers and audience of the texts they analyze.

Content analysis can be used to quantify the occurrence of certain words, phrases, subjects or concepts in a set of historical or contemporary texts.

Quantitative content analysis example

To research the importance of employment issues in political campaigns, you could analyze campaign speeches for the frequency of terms such as “unemployment,” “jobs,” and “work” and use statistical analysis to find differences over time or between candidates.
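As a rough illustration of the counting step, the Python sketch below tallies whole-word matches for a fixed term list and groups the totals by candidate or by year. The speeches, candidate names, and helper names (`term_counts`, `totals_by`) are hypothetical, and this is not how any particular content analysis program works internally.

```python
import re
from collections import Counter

# Hypothetical mini-corpus of (candidate, year, speech text) records; in a real
# study these would be the campaign speeches you sampled.
SPEECHES = [
    ("Candidate A", 2016, "Jobs, jobs, jobs. We will bring work back and end unemployment."),
    ("Candidate B", 2016, "Our plan for the economy creates jobs and good work."),
    ("Candidate A", 2020, "Unemployment is falling, but too many people still lack work."),
]

# Terms to count. Matching is case-insensitive and on whole words only,
# so "working" or "jobless" are NOT counted unless added to this list.
TERMS = ("unemployment", "jobs", "work")


def term_counts(text, terms=TERMS):
    """Count whole-word occurrences of each term in a single text."""
    words = Counter(re.findall(r"[a-z']+", text.lower()))
    return {term: words[term] for term in terms}


def totals_by(speeches, group_of):
    """Sum term counts per group; group_of picks the grouping value from a record."""
    totals = {}
    for record in speeches:
        group = group_of(record)
        totals.setdefault(group, Counter()).update(term_counts(record[2]))
    return totals


by_candidate = totals_by(SPEECHES, lambda record: record[0])
by_year = totals_by(SPEECHES, lambda record: record[1])
```

Raw counts depend on how long each speech is, so a real analysis would usually normalize by total word count before comparing groups and then apply a statistical test, such as a chi-square test, to judge whether the differences are larger than chance.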

In addition, content analysis can be used to make qualitative inferences by analyzing the meaning and semantic relationship of words and concepts.

Qualitative content analysis example

To gain a more qualitative understanding of employment issues in political campaigns, you could locate the word “unemployment” in speeches, identify what other words or phrases appear next to it (such as “economy,” “inequality,” or “laziness”), and analyze the meanings of these relationships to better understand the intentions and targets of different campaigns.
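A simple way to start this kind of reading is a keyword-in-context (KWIC) listing, which lines up every occurrence of a word with its neighbors. This is a minimal sketch under assumed inputs: the sample sentence, the `keyword_in_context` helper, and the three-word window are illustrative choices, not part of the method itself.

```python
import re


def keyword_in_context(text, keyword, window=3):
    """Return (left context, keyword, right context) for each occurrence.

    window is the number of words kept on each side (an arbitrary choice here).
    """
    words = re.findall(r"[A-Za-z']+", text)
    hits = []
    for i, word in enumerate(words):
        if word.lower() == keyword.lower():
            left = " ".join(words[max(0, i - window):i])
            right = " ".join(words[i + 1:i + 1 + window])
            hits.append((left, word, right))
    return hits


# Hypothetical speech excerpt.
speech = ("Rising unemployment reflects a weak economy. Some say unemployment "
          "comes from laziness, but inequality tells another story.")
hits = keyword_in_context(speech, "unemployment")
for left, kw, right in hits:
    print(f"{left:>30} [{kw}] {right}")
```

The listing only arranges the evidence; deciding what the neighboring words say about a campaign's intentions remains an interpretive judgment.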

Because content analysis can be applied to a broad range of texts, it is used in a variety of fields, including marketing, media studies, anthropology, cognitive science, psychology, and many social science disciplines. It has various possible goals:

- Finding correlations and patterns in how concepts are communicated

- Understanding the intentions of an individual, group or institution

- Identifying propaganda and bias in communication

- Revealing differences in communication in different contexts

- Analyzing the consequences of communication content, such as the flow of information or audience responses


Advantages of content analysis

- Unobtrusive data collection

You can analyze communication and social interaction without the direct involvement of participants, so your presence as a researcher doesn’t influence the results.

- Transparent and replicable

When done well, content analysis follows a systematic procedure that can easily be replicated by other researchers, yielding results with high reliability.

- Highly flexible

You can conduct content analysis at any time, in any location, and at low cost – all you need is access to the appropriate sources.

Disadvantages of content analysis

- Reductive

Focusing on words or phrases in isolation can sometimes be overly reductive, disregarding context, nuance, and ambiguous meanings.

- Subject to interpretation

Content analysis almost always involves some level of subjective interpretation, which can affect the reliability and validity of the results and conclusions, leading to various types of research bias and cognitive bias.

- Time intensive

Manually coding large volumes of text is extremely time-consuming, and it can be difficult to automate effectively.

How to conduct content analysis

If you want to use content analysis in your research, you need to start with a clear, direct research question.

Example research question for content analysis

Is there a difference in how the US media represents younger politicians compared to older ones in terms of trustworthiness?

Next, you follow these five steps.

1. Select the content you will analyze

Based on your research question, choose the texts that you will analyze. You need to decide:

- The medium (e.g. newspapers, speeches or websites) and genre (e.g. opinion pieces, political campaign speeches, or marketing copy)

- The inclusion and exclusion criteria (e.g. newspaper articles that mention a particular event, speeches by a certain politician, or websites selling a specific type of product)

- The parameters in terms of date range, location, etc.

If only a small number of texts meet your criteria, you might analyze all of them. If there is a large volume of texts, you can select a sample.

2. Define the units and categories of analysis

Next, you need to determine the level at which you will analyze your chosen texts. This means defining:

- The unit(s) of meaning that will be coded. For example, are you going to record the frequency of individual words and phrases, the characteristics of people who produced or appear in the texts, the presence and positioning of images, or the treatment of themes and concepts?

- The set of categories that you will use for coding. Categories can be objective characteristics (e.g. aged 30-40, lawyer, parent) or more conceptual (e.g. trustworthy, corrupt, conservative, family-oriented).

Your units of analysis are the politicians who appear in each article and the words and phrases that are used to describe them. Based on your research question, you have to categorize based on age and the concept of trustworthiness. To get more detailed data, you also code for other categories such as their political party and the marital status of each politician mentioned.

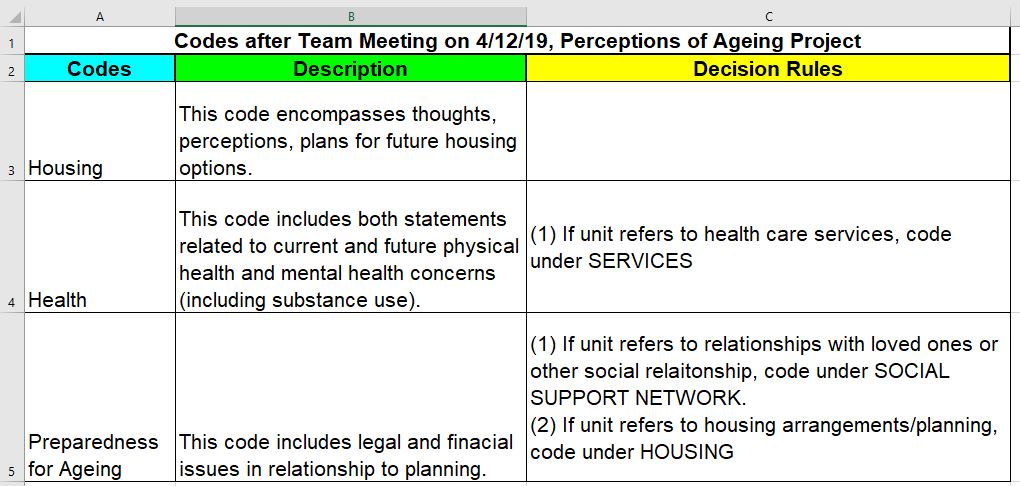

3. Develop a set of rules for coding

Coding involves organizing the units of meaning into the previously defined categories. Especially with more conceptual categories, it’s important to clearly define the rules for what will and won’t be included to ensure that all texts are coded consistently.

Coding rules are especially important if multiple researchers are involved, but even if you’re coding all of the text by yourself, recording the rules makes your method more transparent and reliable.

In considering the category “younger politician,” you decide which titles qualify someone as a politician (senator, governor, councilor, mayor) and which ages count as “younger.” With “trustworthy,” you decide which specific words or phrases related to trustworthiness (e.g. honest and reliable) will be coded in this category.
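Writing the rules down can be as concrete as a codebook that maps each category to the terms that count as evidence for it. The sketch below assumes a purely term-based rule; the `CODEBOOK` contents (including the extra terms “trustworthy” and “dependable”) and the `apply_codebook` helper are hypothetical.

```python
import re

# Hypothetical codebook: each category lists the whole words that count as
# evidence for it. Recording it like this makes the coding rules explicit.
CODEBOOK = {
    "politician": ["senator", "governor", "councilor", "mayor"],
    "trustworthy": ["honest", "reliable", "trustworthy", "dependable"],
}


def apply_codebook(text, codebook=CODEBOOK):
    """Return the set of categories whose terms appear as whole words in text."""
    words = set(re.findall(r"[a-z]+", text.lower()))
    return {category for category, terms in codebook.items() if words & set(terms)}


categories = apply_codebook("The mayor, an honest and reliable figure, spoke first.")
```

The rule would miss “trusted,” because that word is not listed, which is exactly why the rules need to be spelled out and tested before the main coding. Characteristics such as age usually cannot be read off the words at all and would be recorded from other information about each politician.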

4. Code the text according to the rules

You go through each text and record all relevant data in the appropriate categories. This can be done manually or aided by computer programs, such as QSR NVivo, Atlas.ti and Diction, which can help speed up the process of counting and categorizing words and phrases.

Following your coding rules, you examine each newspaper article in your sample. You record the characteristics of each politician mentioned, along with all words and phrases related to trustworthiness that are used to describe them.
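When more than one researcher codes the same material, it is common to check how consistently the rules were applied. One widely used statistic is Cohen's kappa, which corrects raw percentage agreement for the agreement expected by chance; Krippendorff's alpha is a common alternative that also handles more than two coders. The sketch assumes two coders who each assigned exactly one category per unit; the coder labels below are hypothetical.

```python
from collections import Counter


def cohens_kappa(coder_a, coder_b):
    """Cohen's kappa for two coders who each assigned one category per unit."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("both coders must code the same, non-empty set of units")
    n = len(coder_a)
    # Share of units where both coders chose the same category.
    observed = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    # Agreement expected by chance, from each coder's category frequencies.
    freq_a, freq_b = Counter(coder_a), Counter(coder_b)
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if expected == 1:
        return 1.0  # both coders used one identical category for every unit
    return (observed - expected) / (1 - expected)


# Hypothetical codes assigned to six newspaper passages by two coders.
coder_a = ["trust", "trust", "neutral", "distrust", "trust", "neutral"]
coder_b = ["trust", "neutral", "neutral", "distrust", "trust", "trust"]
kappa = cohens_kappa(coder_a, coder_b)
```

Here the coders agree on four of six passages, but because chance agreement is also fairly high, kappa comes out around 0.45. What counts as acceptable agreement varies by field, and low values usually signal that the coding rules need refining.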

5. Analyze the results and draw conclusions

Once coding is complete, the collected data is examined to find patterns and draw conclusions in response to your research question. You might use statistical analysis to find correlations or trends, discuss your interpretations of what the results mean, and make inferences about the creators, context and audience of the texts.

Let’s say the results reveal that words and phrases related to trustworthiness appeared in the same sentence as an older politician more frequently than they did in the same sentence as a younger politician. From these results, you conclude that national newspapers present older politicians as more trustworthy than younger politicians, and infer that this might have an effect on readers’ perceptions of younger people in politics.
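The sentence-level comparison in this example can be sketched as a co-occurrence count. This assumes the politicians' names and age groups were already recorded during coding; `TRUST_TERMS`, `AGE_GROUP`, the sample article, and the `trust_cooccurrence` helper are hypothetical, and splitting sentences on end punctuation is crude (abbreviations such as “Sen.” would break it).

```python
import re
from collections import Counter

# Hypothetical inputs: trust-related terms from the coding rules, and the age
# group recorded for each politician during coding.
TRUST_TERMS = {"honest", "reliable", "trustworthy"}
AGE_GROUP = {"Smith": "older", "Jones": "younger"}


def trust_cooccurrence(article, trust_terms=TRUST_TERMS, age_group=AGE_GROUP):
    """Count sentences where a trust term appears with a politician of each age group."""
    counts = Counter()
    # Crude sentence split on ., ! or ? followed by whitespace.
    for sentence in re.split(r"(?<=[.!?])\s+", article):
        words = re.findall(r"[A-Za-z]+", sentence)
        if not {word.lower() for word in words} & trust_terms:
            continue  # no trust-related wording in this sentence
        for name, group in age_group.items():
            if name in words:
                counts[group] += 1
    return counts


article = ("Senator Smith is honest and reliable. Mayor Jones spoke briefly. "
           "Voters called Smith trustworthy. Jones was described as reliable.")
result = trust_cooccurrence(article)
```

Because older politicians might simply be mentioned more often, comparing these counts against the total number of sentences that mention each group is a sensible check before drawing the conclusion above.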


Other interesting articles

If you want to know more about statistics, methodology, or research bias, make sure to check out some of our other articles with explanations and examples.

Statistics

- Normal distribution

- Measures of central tendency

- Chi-square tests

- Confidence interval

- Quartiles & Quantiles

Methodology

- Cluster sampling

- Stratified sampling

- Thematic analysis

- Cohort study

- Peer review

- Ethnography

Research bias

- Implicit bias

- Cognitive bias

- Conformity bias

- Hawthorne effect

- Availability heuristic

- Attrition bias

- Social desirability bias

Cite this Scribbr article


Luo, A. (2023, June 22). Content Analysis | Guide, Methods & Examples. Scribbr. Retrieved August 29, 2024, from https://www.scribbr.com/methodology/content-analysis/


Qualitative Content Analysis in Practice

Margrit Schreier, Jacobs University Bremen, Germany

Description

In one of the first books to focus on qualitative content analysis, Margrit Schreier takes students step by step through:

- creating a coding frame

- segmenting the material

- trying out the coding frame

- evaluating the trial coding

- carrying out the main coding

- what comes after qualitative content analysis

- making use of software when conducting qualitative content analysis

Each part of the process is described in detail, and research examples are provided to illustrate each step. Frequently asked questions are answered, the most important points are summarized, and end-of-chapter questions provide an opportunity to revise these points. After reading the book, students are fully equipped to conduct their own qualitative content analysis.

This book provides a well written, clear and detailed account of QCA, highlighting the value of this research method for the analysis of social, political and psychological phenomena.

Tereza Capelos, University of Surrey

Schreier writes clearly and with authority, positioning QCA in relation to other qualitative research methods and emphasising the hands-on aspects of the analysis process. She offers numerous illuminating examples and helpful pedagogical tools for the reader. This book will thus be most welcomed by students at different levels as well as by researchers.

Ulla Hällgren Graneheim, Umeå University, Sweden

This book has been written for students but would be of value to anyone considering using the analysis method to help them reduce and make sense of a large volume of textual data. [...] The content is detailed and presented in text-book style with key points, definitions and beginners’ mistakes scattered throughout and frequently asked questions and end of chapter questions. These break up the text but also help when skimming. In addition, and what I found particularly valuable, was the liberal use of examples drawn from published papers. These really help to clarify and bring to life the issues raised.

Schreier provides several helpful educational tools, such as mid-chapter definitions, summaries and key-points....this is an excellent introductory or reference book for all students of content analysis

This book makes a valuable supplementary reading text about applied content analysis for my course in ethnography.

I will use this as a recommended text, and not adopt as a main or compulsory text. This is so given the book's particular focus on QCA, and the more complex treatment of research issues in general, which will be of use to a limited number of students but will be a good resource potentially for some select few taking my spring 2014 senior thesis class and NOT the fall basic / intro research methods class. Thank you.


Chapter 17. Content Analysis

Introduction

Content analysis is a term that is used to mean both a method of data collection and a method of data analysis. Archival and historical works can be the source of content analysis, but so too can the contemporary media coverage of a story, blogs, comment posts, films, cartoons, advertisements, brand packaging, and photographs posted on Instagram or Facebook. Really, almost anything can be the “content” to be analyzed. This is a qualitative research method because the focus is on the meanings and interpretations of that content rather than strictly numerical counts or variables-based causal modeling. [1] Qualitative content analysis (sometimes referred to as QCA) is particularly useful when attempting to define and understand prevalent stories or communication about a topic of interest—in other words, when we are less interested in what particular people (our defined sample) are doing or believing and more interested in what general narratives exist about a particular topic or issue. This chapter will explore different approaches to content analysis and provide helpful tips on how to collect data, how to turn that data into codes for analysis, and how to go about presenting what is found through analysis. It is also a nice segue between our data collection methods (e.g., interviewing, observation) chapters and chapters 18 and 19, whose focus is on coding, the primary means of data analysis for most qualitative data. In many ways, the methods of content analysis are quite similar to the method of coding.

Although the body of material (“content”) to be collected and analyzed can be nearly anything, most qualitative content analysis is applied to forms of human communication (e.g., media posts, news stories, campaign speeches, advertising jingles). The point of the analysis is to understand this communication, to systematically and rigorously explore its meanings, assumptions, themes, and patterns. Historical and archival sources may be the subject of content analysis, but there are other ways to analyze (“code”) this data when not overly concerned with the communicative aspect (see chapters 18 and 19). This is why we tend to consider content analysis its own method of data collection as well as a method of data analysis. Still, many of the techniques you learn in this chapter will be helpful to any “coding” scheme you develop for other kinds of qualitative data. Just remember that content analysis is a particular form with distinct aims and goals and traditions.

An Overview of the Content Analysis Process

The First Step: Selecting Content

Figure 17.1 is a display of possible content for content analysis. The first step in content analysis is making smart decisions about what content you want to analyze and clearly connecting this content to your research question or general focus of research. Why are you interested in the messages conveyed in this particular content? What will the identification of patterns here help you understand? Content analysis can be fun to do, but in order to make it research, you need to fit it into a research plan.

| | | | |
|---|---|---|---|
| News stories | Blogs | Comment posts | Lyrics |
| Letters to the editor | Films | Cartoons | Advertisements |
| Brand packaging | Logos | Instagram photos | Tweets |
| Photographs | Graffiti | Street signs | Personalized license plates |
| Avatars (names, shapes, presentations) | Nicknames | Band posters | Building names |

Figure 17.1. A Non-exhaustive List of “Content” for Content Analysis

To take one example, let us imagine you are interested in gender presentations in society and how presentations of gender have changed over time. There are various forms of content out there that might help you document changes. You could, for example, begin by creating a list of magazines that are coded as being for “women” (e.g., Women’s Daily Journal) and magazines that are coded as being for “men” (e.g., Men’s Health). You could then select a date range that is relevant to your research question (e.g., 1950s–1970s) and collect magazines from that era. You might create a “sample” by deciding to look at three issues for each year in the date range and a systematic plan for what to look at in those issues (e.g., advertisements? Cartoons? Titles of articles? Whole articles?). You are not just going to look at some magazines willy-nilly. That would not be systematic enough to allow anyone to replicate or check your findings later on. Once you have a clear plan of what content is of interest to you and what you will be looking at, you can begin by creating a record of everything you are including as your content. This might mean a list of each advertisement you look at or of the title of each story in those magazines, along with its publication date. You may decide to include multiple kinds of content in your research plan. For each kind, you want a clear plan for collecting, sampling, and documenting.
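If you choose the three issues at random within each year, fixing the random seed lets anyone reproduce exactly the same sample. The sketch below assumes a hypothetical catalogue of twelve monthly issues per year and an arbitrary seed; drawing a simple random sample within each year is one reasonable reading of “three issues for each year,” not the only one, and `sample_issues` is a hypothetical helper.

```python
import random


def sample_issues(issues_by_year, per_year=3, seed=1950):
    """Draw a fixed number of issues per year, reproducibly."""
    rng = random.Random(seed)  # fixed seed so others can reproduce the sample
    sample = {}
    for year in sorted(issues_by_year):
        issues = issues_by_year[year]
        k = min(per_year, len(issues))  # take all issues if fewer are available
        sample[year] = sorted(rng.sample(issues, k))
    return sample


# Hypothetical catalogue: twelve monthly issues per year, 1950-1952.
catalogue = {
    year: [f"{year}-{month:02d}" for month in range(1, 13)]
    for year in range(1950, 1953)
}
plan = sample_issues(catalogue)
```

Saving the resulting `plan` alongside your data also serves as the record of included content described above.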

The Second Step: Collecting and Storing

Once you have a plan, you are ready to collect your data. This may entail downloading from the internet, creating a Word document or PDF of each article or picture, and storing these in a folder designated by the source and date (e.g., “Men’s Health advertisements, 1950s”). Sølvberg (2021), for example, collected posted job advertisements for three kinds of elite jobs (economic, cultural, professional) in Sweden. But collecting might also mean going out and taking photographs yourself, as in the case of graffiti, street signs, or even what people are wearing. Chaise LaDousa, an anthropologist and linguist, took photos of “house signs,” which are signs, often creative and sometimes offensive, hung by college students living in communal off-campus houses. These signs were a focal point of college culture, sending messages about the values of the students living in them. Some of the names will give you an idea: “Boot ’n Rally,” “The Plantation,” “Crib of the Rib.” The students might find these signs funny and benign, but LaDousa (2011) argued convincingly that they also reproduced racial and gender inequalities. The data here already existed (they were big signs on houses), but the researcher had to collect the data by taking photographs.

In some cases, your content will be in physical form but not amenable to photographing, as in the case of films or unwieldy physical artifacts you find in the archives (e.g., undigitized meeting minutes or scrapbooks). In this case, you need to create some kind of detailed log (fieldnotes even) of the content that you can reference. In the case of films, this might mean watching the film and writing down details for key scenes that become your data. [2] For scrapbooks, it might mean taking notes on what you are seeing, quoting key passages, describing colors or presentation style. As you might imagine, this can take a lot of time. Be sure you budget this time into your research plan.

Researcher Note

A note on data scraping: Data scraping, sometimes known as screen scraping or frame grabbing, is a way of extracting data generated by another program, as when a scraping tool grabs information from a website. This may help you collect data that is on the internet, but you need to be ethical in how you employ the scraper. A student once helped me scrape thousands of stories from the Time magazine archives at once (although it took several hours for the scraping process to complete). These stories were freely available, so the scraping process simply sped up the laborious process of copying each article of interest and saving it to my research folder. Scraping tools can sometimes be used to circumvent paywalls. Be careful here!
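One modest safeguard when scraping is to honor a site's robots.txt rules and to pause between requests. The sketch below uses Python's standard `urllib.robotparser` and `urllib.request`; the user-agent string, delay, and helper names are hypothetical. Respecting robots.txt does not by itself settle copyright, terms-of-service, or paywall questions.

```python
import time
import urllib.request
import urllib.robotparser

USER_AGENT = "content-analysis-study"  # hypothetical identifier for your scraper


def allowed_by_robots(robots_lines, url, user_agent=USER_AGENT):
    """Check one URL against a site's robots.txt rules (already fetched as lines)."""
    parser = urllib.robotparser.RobotFileParser()
    parser.parse(robots_lines)
    return parser.can_fetch(user_agent, url)


def polite_fetch(urls, robots_lines, delay_seconds=5.0):
    """Download pages that robots.txt allows, pausing between requests."""
    pages = {}
    for url in urls:
        if not allowed_by_robots(robots_lines, url):
            continue  # skip anything the site asks crawlers not to fetch
        request = urllib.request.Request(url, headers={"User-Agent": USER_AGENT})
        with urllib.request.urlopen(request, timeout=30) as response:
            pages[url] = response.read().decode("utf-8", errors="replace")
        time.sleep(delay_seconds)  # avoid overloading the site
    return pages


# Hypothetical robots.txt content that blocks a subscriber-only section.
robots = ["User-agent: *", "Disallow: /subscriber/"]
```

Saving each downloaded page to your research folder right away, named by source and date, keeps the collection consistent with the storage plan described above.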

The Third Step: Analysis

There is often an assumption among novice researchers that once you have collected your data, you are ready to write about what you have found. Actually, you haven’t yet found anything, and if you try to write up your results, you will probably be staring sadly at a blank page. Between the collection and the writing comes the difficult task of systematically and repeatedly reviewing the data in search of patterns and themes that will help you interpret the data, particularly its communicative aspect (e.g., What is it that is being communicated here, with these “house signs” or in the pages of Men’s Health?).

The first time you go through the data, keep an open mind on what you are seeing (or hearing), and take notes about your observations that link up to your research question. In the beginning, it can be difficult to know what is relevant and what is extraneous. Sometimes, your research question changes based on what emerges from the data. Use the first round of review to consider this possibility, but then commit yourself to following a particular focus or path. If you are looking at how gender gets made or re-created, don’t follow the white rabbit down a hole about environmental injustice unless you decide that this really should be the focus of your study or that issues of environmental injustice are linked to gender presentation. In the second round of review, be very clear about emerging themes and patterns. Create codes (more on these in chapters 18 and 19) that will help you simplify what you are noticing. For example, “men as outdoorsy” might be a common trope you see in advertisements. Whenever you see this, mark the passage or picture. In your third (or fourth or fifth) round of review, begin to link up the tropes you’ve identified, looking for particular patterns and assumptions. You’ve drilled down to the details, and now you are building back up to figure out what they all mean. Start thinking about theory—either theories you have read about and are using as a frame of your study (e.g., gender as performance theory) or theories you are building yourself, as in the Grounded Theory tradition. Once you have a good idea of what is being communicated and how, go back to the data at least one more time to look for disconfirming evidence. Maybe you thought “men as outdoorsy” was of importance, but when you look hard, you note that women are presented as outdoorsy just as often. You just hadn’t paid attention. 
It is very important, as any kind of researcher but particularly as a qualitative researcher, to test yourself and your emerging interpretations in this way.

The Fourth and Final Step: The Write-Up

Only after you have fully completed analysis, with its many rounds of review and analysis, will you be able to write about what you found. The interpretation exists not in the data but in your analysis of the data. Before writing your results, you will want to very clearly describe how you chose the data here and all the possible limitations of this data (e.g., historical-trace problem or power problem; see chapter 16). Acknowledge any limitations of your sample. Describe the audience for the content, and discuss the implications of this. Once you have done all of this, you can put forth your interpretation of the communication of the content, linking to theory where doing so would help your readers understand your findings and what they mean more generally for our understanding of how the social world works. [3]

Analyzing Content: Helpful Hints and Pointers

Although every data set is unique and each researcher will have a different and unique research question to address with that data set, there are some common practices and conventions. When reviewing your data, what do you look at exactly? How will you know if you have seen a pattern? How do you note or mark your data?

Let’s start with the last question first. If your data is stored digitally, there are various ways you can highlight or mark up passages. You can, of course, do this with literal highlighters, pens, and pencils if you have print copies. But there are also qualitative software programs to help you store the data, retrieve the data, and mark the data. This can simplify the process, although it cannot do the work of analysis for you.

Qualitative software can be very expensive, so the first thing to do is to find out if your institution (or program) has a universal license its students can use. If they do not, most programs have special student licenses that are less expensive. The two most used programs at this moment are probably ATLAS.ti and NVivo. Both can cost more than $500 [4] but provide everything you could possibly need for storing data, content analysis, and coding. They also have a lot of customer support, and you can find many official and unofficial tutorials on how to use the programs’ features on the web. Dedoose, created by academic researchers at UCLA, is a decent program that lacks many of the bells and whistles of the two big programs. Instead of paying all at once, you pay monthly, as you use the program. The monthly fee is relatively affordable (less than $15), so this might be a good option for a small project. HyperRESEARCH is another basic program created by academic researchers, and it is free for small projects (those that have limited cases and material to import). You can pay a monthly fee if your project expands past the free limits. I have personally used all four of these programs, and they each have their pluses and minuses.

Regardless of which program you choose, you should know that none of them will actually do the hard work of analysis for you. They are incredibly useful for helping you store and organize your data, and they provide abundant tools for marking, comparing, and coding your data so you can make sense of it. But making sense of it will always be your job alone.

So let’s say you have some software, and you have uploaded all of your content into the program: video clips, photographs, transcripts of news stories, articles from magazines, even digital copies of college scrapbooks. Now what do you do? What are you looking for? How do you see a pattern? The answers to these questions will depend partially on the particular research question you have, or at least the motivation behind your research. Let’s go back to the idea of looking at gender presentations in magazines from the 1950s to the 1970s. Here are some things you can look at and code in the content: (1) actions and behaviors, (2) events or conditions, (3) activities, (4) strategies and tactics, (5) states or general conditions, (6) meanings or symbols, (7) relationships/interactions, (8) consequences, and (9) settings. Table 17.1 lists these with examples from our gender presentation study.

Table 17.1. Examples of What to Note During Content Analysis

| What can be noted/coded | Example from Gender Presentation Study |
|---|---|
| Actions and behaviors | |
| Events or conditions | |
| Activities | |
| Strategies and tactics | |
| States/conditions | |
| Meanings/symbols | |
| Relationships/interactions | |
| Consequences | |
| Settings | |

One thing to note about the examples in table 17.1: sometimes we note (mark, record, code) a single example, while other times, as in “settings,” we are recording a recurrent pattern. To help you spot patterns, it is useful to mark every setting, including a notation on gender. Using software can help you do this efficiently. You can then call up “setting by gender” and note this emerging pattern. There’s an element of counting here, which we normally think of as quantitative data analysis, but we are using the count to identify a pattern that will be used to help us interpret the communication. Content analyses often include counting as part of the interpretive (qualitative) process.
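The “setting by gender” tally amounts to a cross-tabulation of two codes. In this sketch the coded segments are invented for illustration, and `crosstab` is a hypothetical helper rather than a feature of any particular qualitative software package.

```python
from collections import Counter

# Hypothetical coded segments: one record per marked advertisement, with the
# codes assigned for the depicted person's gender and the setting.
coded_segments = [
    {"year": 1955, "gender": "man", "setting": "outdoors"},
    {"year": 1955, "gender": "man", "setting": "office"},
    {"year": 1955, "gender": "woman", "setting": "kitchen"},
    {"year": 1956, "gender": "woman", "setting": "kitchen"},
    {"year": 1956, "gender": "woman", "setting": "outdoors"},
]


def crosstab(segments, row_code, column_code):
    """Count how often each (row, column) combination of codes occurs."""
    return Counter((seg[row_code], seg[column_code]) for seg in segments)


setting_by_gender = crosstab(coded_segments, "setting", "gender")
for (setting, gender), count in sorted(setting_by_gender.items()):
    print(f"{setting:<10} {gender:<6} {count}")
```

In this toy data the “outdoors” setting appears with both genders, the kind of result that should prompt a second look before treating a trope as gender-specific.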

In your own study, you may not need or want to look at all of the elements listed in table 17.1. Even in our imagined example, some are more useful than others. For example, “strategies and tactics” is a bit of a stretch here. In studies that are looking specifically at, say, policy implementation or social movements, this category will prove much more salient.

Another way to think about “what to look at” is to consider aspects of your content in terms of units of analysis. You can drill down to the specific words used (e.g., the adjectives commonly used to describe “men” and “women” in your magazine sample) or move up to the more abstract level of concepts used (e.g., the idea that men are more rational than women). Counting for the purpose of identifying patterns is particularly useful here. How many times is that idea of women’s irrationality communicated? How is it communicated (in comic strips, fictional stories, editorials, etc.)? Does the incidence of the concept change over time? Perhaps the “irrational woman” was everywhere in the 1950s, but by the 1970s, it is no longer showing up in stories and comics. By tracing its usage and prevalence over time, you might come up with a theory or story about gender presentation during the period. Table 17.2 provides more examples of using different units of analysis for this work along with suggestions for effective use.
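Tracing a concept over time works best as a share of the items examined in each period, since the number of issues or stories may differ from decade to decade. The coded items below are hypothetical, as is the `incidence_by_decade` helper; the sketch assumes each item was coded simply as showing the concept or not.

```python
from collections import Counter

# Hypothetical coding results: (year of the story or comic, whether the
# "irrational woman" concept was coded in it).
coded_items = [
    (1952, True), (1955, True), (1958, False),
    (1963, True), (1967, False), (1969, False),
    (1972, False), (1976, False), (1978, False),
]


def incidence_by_decade(items):
    """Share of examined items per decade in which the concept was coded."""
    examined, present = Counter(), Counter()
    for year, coded in items:
        decade = year // 10 * 10  # e.g. 1952 -> 1950
        examined[decade] += 1
        if coded:
            present[decade] += 1
    return {decade: present[decade] / examined[decade] for decade in sorted(examined)}


trend = incidence_by_decade(coded_items)
```

A declining share like this one would be a starting point for interpretation, not a finding in itself; the next step is returning to the coded passages to understand how and why the portrayal changed.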

Table 17.2. Examples of Unit of Analysis in Content Analysis

| Unit of Analysis | How Used... |
|---|---|
| Words | |
| Themes | |
| Characters | |
| Paragraphs | |
| Items | |
| Concepts | |
| Semantics | |

Every qualitative content analysis is unique in its particular focus and particular data used, so there is no single correct way to approach analysis. You should now have a better idea, however, of what kinds of things to look for and how to look for them. The next two chapters will take you further into the coding process, the primary analytical tool for qualitative research in general.

Further Readings

Cidell, Julie. 2010. “Content Clouds as Exploratory Qualitative Data Analysis.” Area 42(4):514–523. A demonstration of using visual “content clouds” as a form of exploratory qualitative data analysis using transcripts of public meetings and content of newspaper articles.

Hsieh, Hsiu-Fang, and Sarah E. Shannon. 2005. “Three Approaches to Qualitative Content Analysis.” Qualitative Health Research 15(9):1277–1288. Distinguishes three distinct approaches to QCA: conventional, directed, and summative. Uses hypothetical examples from end-of-life care research.

Jackson, Romeo, Alex C. Lange, and Antonio Duran. 2021. “A Whitened Rainbow: The In/Visibility of Race and Racism in LGBTQ Higher Education Scholarship.” Journal Committed to Social Change on Race and Ethnicity (JCSCORE) 7(2):174–206.* Using a “critical summative content analysis” approach, examines research published on LGBTQ people between 2009 and 2019.

Krippendorff, Klaus. 2018. Content Analysis: An Introduction to Its Methodology. 4th ed. Thousand Oaks, CA: SAGE. A very comprehensive textbook on both quantitative and qualitative forms of content analysis.

Mayring, Philipp. 2022. Qualitative Content Analysis: A Step-by-Step Guide. Thousand Oaks, CA: SAGE. Formulates an eight-step approach to QCA.

Messinger, Adam M. 2012. “Teaching Content Analysis through ‘Harry Potter.’” Teaching Sociology 40(4):360–367. This is a fun example of a relatively brief foray into content analysis using the music found in Harry Potter films.

Neuendorf, Kimberly A. 2002. The Content Analysis Guidebook. Thousand Oaks, CA: SAGE. A helpful guide to content analysis in general, but be warned that this textbook definitely favors quantitative over qualitative approaches to content analysis.

Schreier, Margrit. 2012. Qualitative Content Analysis in Practice. Thousand Oaks, CA: SAGE. Arguably the most accessible guidebook for QCA, written by a professor based in Germany.

Weber, Matthew A., Shannon Caplan, Paul Ringold, and Karen Blocksom. 2017. “Rivers and Streams in the Media: A Content Analysis of Ecosystem Services.” Ecology and Society 22(3).* Examines the content of a blog hosted by National Geographic and articles published in The New York Times and the Wall Street Journal for stories on rivers and streams (e.g., water quality, flooding).

- There are ways of handling content analysis quantitatively, however. Some practitioners therefore specify qualitative content analysis (QCA). In this chapter, all content analysis is QCA unless otherwise noted.

- Note that some qualitative software allows you to upload whole films or film clips for coding. You will still have to get access to the film, of course.

- See chapter 20 for more on the final presentation of research.

- Actually, ATLAS.ti is an annual license, while NVivo is a perpetual license, but both are going to cost you at least $500 to use. Student rates may be lower. And don’t forget to ask your institution or program if they already have a software license you can use.

A method of both data collection and data analysis in which a given content (textual, visual, graphic) is examined systematically and rigorously to identify meanings, themes, patterns and assumptions. Qualitative content analysis (QCA) is concerned with gathering and interpreting an existing body of material.

Introduction to Qualitative Research Methods Copyright © 2023 by Allison Hurst is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License , except where otherwise noted.

- v.23(1); 2018 Feb

Directed qualitative content analysis: the description and elaboration of its underpinning methods and data analysis process

Qualitative content analysis consists of conventional, directed and summative approaches to data analysis. They are used to provide descriptive knowledge and understanding of the phenomenon under study. However, the method underpinning directed qualitative content analysis is insufficiently delineated in international literature. This paper aims to describe and integrate the process of data analysis in directed qualitative content analysis. Various international databases were used to retrieve articles related to directed qualitative content analysis. A review of the literature led to the integration and elaboration of a stepwise method of data analysis for directed qualitative content analysis. The proposed 16-step method gives a detailed description of the analytical steps to be taken in directed qualitative content analysis and addresses the current gap in international literature regarding the practical process of qualitative data analysis. An example of “the resuscitation team members' motivation for cardiopulmonary resuscitation” based on Victor Vroom's expectancy theory is also presented. The directed qualitative content analysis method proposed in this paper is a reliable, transparent, and comprehensive method for qualitative researchers. It can increase the rigour of qualitative data analysis, make the comparison of the findings of different studies possible and yield practical results.

Introduction

Qualitative content analysis (QCA) is a research approach for the description and interpretation of textual data using the systematic process of coding. The final product of data analysis is the identification of categories, themes and patterns ( Elo and Kyngäs, 2008 ; Hsieh and Shannon, 2005 ; Zhang and Wildemuth, 2009 ). Researchers in the field of healthcare commonly use QCA for data analysis ( Berelson, 1952 ). QCA was described and used as early as the first half of the 20th century ( Schreier, 2014 ). The focus of QCA is the development of knowledge and understanding of the study phenomenon. Because QCA uses language and contextual clues to make meaning in the communication process, it requires a close review of the content gleaned from interviews or observations ( Downe-Wamboldt, 1992 ; Hsieh and Shannon, 2005 ).

QCA is classified into conventional (inductive), directed (deductive) and summative methods ( Hsieh and Shannon, 2005 ; Mayring, 2000 , 2014 ). Inductive QCA, the most popular approach to data analysis, helps with the development of theories, schematic models or conceptual frameworks ( Elo and Kyngäs, 2008 ; Graneheim and Lundman, 2004 ; Vaismoradi et al., 2013 , 2016 ), which should then be refined, tested or further developed using directed QCA ( Elo and Kyngäs, 2008 ). Directed QCA is a common method of data analysis in healthcare research ( Elo and Kyngäs, 2008 ), but insufficient knowledge is available about how this method is applied ( Elo and Kyngäs, 2008 ; Hsieh and Shannon, 2005 ). This may hamper the use of directed QCA by novice qualitative researchers and account for its lower uptake compared with the inductive method ( Elo and Kyngäs, 2008 ; Mayring, 2000 ). Therefore, this paper aims to describe and integrate the methods applied in directed QCA.

International databases such as PubMed (including Medline), Scopus, Web of Science and ScienceDirect were searched to retrieve papers related to QCA and directed QCA. Keywords such as ‘directed content analysis’, ‘deductive content analysis’ and ‘qualitative content analysis’ yielded 13,738 potentially eligible papers. Applying inclusion criteria such as ‘focused on directed qualitative content analysis’ and ‘published in peer-reviewed journals’, and removing duplicates, left 30 papers. However, only two of these papers described the methodological process of directed QCA. Ancestry and manual searches within these 30 papers identified the pioneers who first described this method in international literature. A further search for papers published by these pioneers yielded four more papers and one monograph dealing with directed QCA ( Figure 1 ).

Figure 1. The search strategy for the identification of papers.

Finally, the authors of this paper integrated and elaborated a comprehensive and stepwise method of directed QCA based on the commonalities of methods discussed in the included papers. Also, the experiences of the current authors in the field of qualitative research were incorporated into the suggested stepwise method of data analysis for directed QCA ( Table 1 ).

Table 1. The suggested steps for directed content analysis.

| Steps | References |
|---|---|
| Preparation phase | |
| 1. Acquiring the necessary general skills | |
| 2. Selecting the appropriate sampling strategy | Inferred by the authors of the present paper from |
| 3. Deciding on the analysis of manifest and/or latent content | |
| 4. Developing an interview guide | Inferred by the authors of the present paper from |
| 5. Conducting and transcribing interviews | |
| 6. Specifying the unit of analysis | |
| 7. Being immersed in data | |
| Organisation phase | |
| 8. Developing a formative categorisation matrix | Inferred by the authors of the present paper from |
| 9. Theoretically defining the main categories and subcategories | |
| 10. Determining coding rules for main categories | |
| 11. Pre-testing the categorisation matrix | Inferred by the authors of the present paper from |
| 12. Choosing and specifying the anchor samples for each main category | |
| 13. Performing the main data analysis | |
| 14. Inductive abstraction of main categories from preliminary codes | |
| 15. Establishment of links between generic categories and main categories | Suggested by the authors of the present paper |
| Reporting phase | |
| 16. Reporting all steps of directed content analysis and findings | |

Although the included papers about directed QCA were the most cited ones in international literature, none of them provided sufficient detail on how to conduct the data analysis process. This might hamper the use of this method by novice qualitative researchers and hinder its application by nurse researchers compared with inductive QCA. As can be seen in Figure 1 , the search yielded five articles that explain the directed QCA method. Each is described below, along with its strengths and weaknesses. The authors incorporated these strengths into their suggested method, as shown in Table 1 .

The methods suggested for directed QCA in the international literature

The method suggested by Hsieh and Shannon (2005)

Hsieh and Shannon (2005) developed two strategies for conducting directed QCA. The first strategy consists of reading the textual data and highlighting those parts of the text that, on first impression, appear to be related to the predetermined codes dictated by a theory or prior research findings. The highlighted texts are then coded using the predetermined codes.

The second strategy differs only in that coding begins straight away, without first highlighting the text. In both strategies, the qualitative researcher should return to the text and reanalyse it after the initial coding process ( Hsieh and Shannon, 2005 ). The current authors believe that the second strategy provides an opportunity to recognise missed text related to the predetermined codes, as well as newly emerging codes. It also enhances the trustworthiness of findings.

As an important part of the method suggested by Hsieh and Shannon (2005) , the term ‘code’ was used for the different levels of abstraction, but a more precise definition of this term seems to be crucial. For instance, they stated that ‘data that cannot be coded are identified and analyzed later to determine if they represent a new category or a subcategory of an existing code’ (2005: 1282).

It seems that the first ‘code’ in the above sentence indicates the lowest level of abstraction, which can be derived directly from raw data. However, the ‘code’ at the end of the sentence refers to a higher level of abstraction, because it denotes a category or subcategory.

Furthermore, the interchangeable and inconsistent use of the terms ‘predetermined code’ and ‘category’ could confuse novice qualitative researchers. Moreover, Hsieh and Shannon (2005) did not specify exactly which parts of the text (highlighted, coded or the whole text) should be considered during the reanalysis after the initial coding process. This lack of specification risks leaving content that was missed during the initial coding process undetected, especially if the second review of the text is restricted to highlighted sections. A final important omission in this method is the lack of an explicit description of the process through which new codes emerge during the reanalysis of the text. Such a clarification is crucial, because detecting subtle links between newly emerging codes and the predetermined ones is not straightforward.

The method suggested by Elo and Kyngäs (2008)

Elo and Kyngäs (2008) suggested ‘structured’ and ‘unconstrained’ methods, or paths, for directed QCA. In both, once a ‘categorisation matrix’ has been developed as the framework for data collection and analysis, the whole content is reviewed and coded. The use of an unconstrained matrix allows some categories to be developed inductively through the steps of ‘grouping’, ‘categorisation’ and ‘abstraction’. The use of a structured method requires a structured matrix against which data are strictly coded. Hypotheses suggested by previous studies are often tested using this method ( Elo and Kyngäs, 2008 ).
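The practical difference between the two paths can be sketched in Python. This is a minimal illustration under stated assumptions: each text unit is taken to have already received a tentative category from a human coder, the matrix and units are hypothetical, and `apply_matrix` follows the first reading of the structured path (only aspects that fit the matrix are kept), which, as discussed below, is not the only reading of Elo and Kyngäs.

```python
# Hypothetical categorisation matrix: main categories taken from a prior theory.
MATRIX = ("expectancy", "instrumentality", "valence")


def apply_matrix(coded_units, matrix, structured=True):
    """Sort (text unit, tentative category) pairs against a categorisation matrix.

    The tentative category is assumed to come from a human coder.
    structured=True: only units that fit the matrix are kept.
    structured=False (unconstrained): units that do not fit are returned
    separately so that new categories can be developed inductively.
    """
    fitted = {category: [] for category in matrix}
    outside = []
    for unit, category in coded_units:
        if category in fitted:
            fitted[category].append(unit)
        else:
            outside.append((unit, category))
    return fitted, ([] if structured else outside)


# Hypothetical text units with tentative categories.
units = [
    ("I doubt this patient can be resuscitated.", "expectancy"),
    ("The team expects me to lead the code.", "team pressure"),
    ("Saving a life matters more than anything to me.", "valence"),
]
fitted, outside = apply_matrix(units, MATRIX, structured=False)
print(outside)  # units left for inductive grouping, categorisation and abstraction
```

In the structured call the ‘team pressure’ unit is simply dropped; in the unconstrained call it is kept aside as raw material for a new, inductively developed category.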

The current authors believe that the label of ‘data gathering by the content’ (p. 110) in the unconstrained matrix path can be misleading. It refers to the data coding step rather than data collection. Also, in the description of the structured path there is an obvious discrepancy with regard to the selection of the portions of the content that fit or do not fit the matrix: ‘… if the matrix is structured, only aspects that fit the matrix of analysis are chosen from the data …’; ‘… when using a structured matrix of analysis, it is possible to choose either only the aspects from the data that fit the categorization frame or, alternatively, to choose those that do not’ ( Elo and Kyngäs, 2008 : 111–112).

Figure 1 in Elo and Kyngäs's paper ( 2008 : 110) clearly distinguished between the structured and unconstrained paths. However, while the first sentence in the above quotation clearly explains the use of the structured matrix, it is not clear whether the second sentence refers to the use of the structured or the unconstrained matrix.

The method suggested by Zhang and Wildemuth (2009)

Drawing on the method suggested by Hsieh and Shannon (2005) , Zhang and Wildemuth (2009) suggested an eight-step method: (1) preparation of data, (2) definition of the unit of analysis, (3) development of categories and the coding scheme, (4) testing the coding scheme on a text sample, (5) coding the whole text, (6) assessment of the coding's consistency, (7) drawing conclusions from the coded data, and (8) reporting the methods and findings ( Zhang and Wildemuth, 2009 ). Only in the third step, the description of the process of category development, did Zhang and Wildemuth (2009) briefly distinguish between inductive and deductive content analysis. On first impression, the only difference between the two approaches seems to be the origin from which categories are developed. In addition, the process of connecting the preliminary codes extracted from raw data with predetermined categories is described. Furthermore, it is not clear whether this linking should be established from categories to primary codes, or vice versa.

The method suggested by Mayring ( 2000 , 2014 )

Mayring ( 2000 , 2014 ) suggested a seven-step method for directed QCA that clearly differentiated between inductive and deductive methods: (1) determination of the research question and theoretical background, (2) definition of the category system, such as main categories and subcategories, based on previous theory and research, (3) establishing a guideline for coding, considering definitions, anchor examples and coding rules, (4) reading the whole text, determining preliminary codes, adding anchor examples and coding rules, (5) revision of the categories and coding guideline after working through 10–50% of the data, (6) reworking data if needed, or listing the final categories, and (7) analysing and interpreting based on the category frequencies and contingencies.

Mayring suggested that coding rules should be defined so that parts of the text can be assigned unambiguously to a particular category. He also recommended describing each category with concrete parts of the text that serve as typical examples of it, known as ‘anchor samples’ ( Mayring, 2000 , 2014 ). The current authors believe that these suggestions help clarify directed QCA and enhance its trustworthiness.
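Mayring's recommendation can be pictured as a coding guideline in which every category carries a definition, anchor samples and coding rules. The Python sketch below is illustrative only: the `CodingGuidelineEntry` class and its `is_usable` check are hypothetical, the values for ‘expectancy’ are borrowed from the CPR example presented later in this paper, and the ‘valence’ entry is a deliberately incomplete draft.

```python
from dataclasses import dataclass, field


@dataclass
class CodingGuidelineEntry:
    """One row of a coding guideline: category, definition, anchor samples, rules."""

    category: str
    definition: str
    anchor_samples: list[str] = field(default_factory=list)
    coding_rules: list[str] = field(default_factory=list)

    def is_usable(self) -> bool:
        """Illustrative check: the row needs a definition, an anchor sample and a rule."""
        return bool(self.definition and self.anchor_samples and self.coding_rules)


expectancy = CodingGuidelineEntry(
    category="expectancy",
    definition=(
        "Subjective probability that an individual's efforts lead to an acceptable "
        "level of performance or to the desired outcome"
    ),
    anchor_samples=[
        "... the patient with advanced metastatic cancer who requires CPR ... "
        "I do not envision a successful resuscitation for him."
    ],
    coding_rules=[
        "Expectancy in CPR is a subjective probability formed in the rescuer's mind.",
        "It concerns the effort-performance or effort-outcome association perceived by the rescuer.",
    ],
)

# Hypothetical draft row: defined, but without anchor samples or coding rules yet.
draft = CodingGuidelineEntry(category="valence", definition="Value placed on outcomes")

print(expectancy.is_usable(), draft.is_usable())
```

The check simply flags rows that cannot yet guide coders, such as a category with a definition but no anchor sample or coding rule.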

However, when using the term ‘preliminary coding’, Mayring ( 2000 , 2014 ) did not clarify whether these codes are created inductively or deductively. In addition, Mayring tended to apply a quantitative approach implicitly in steps 5 and 7, which is incongruent with the qualitative paradigm. Furthermore, nothing was stated about the possibility of developing new categories from the textual material: ‘… theoretical considerations can lead to a further categories or rephrasing of categories from previous studies, but the categories are not developed out of the text material like in inductive category formation …’ ( Mayring, 2014 : 97).

Integration and clarification of methods for directed QCA

Directed QCA takes different paths depending on whether the categorisation matrix contains concepts at higher or lower levels of abstraction. In matrices with low levels of abstraction, linking raw data to predetermined categories is not difficult, and the methods suggested in the international nursing literature seem appropriate and helpful. For instance, Elo and Kyngäs (2008) introduced ‘mental well-being threats’ based on the categories of ‘dependence’, ‘worries’, ‘sadness’ and ‘guilt’. Hsieh and Shannon (2005) developed the categories of ‘denial’, ‘anger’, ‘bargaining’, ‘depression’ and ‘acceptance’ when elucidating the stages of grief. Low-level abstractions such as these make it easy to link raw data to categories. The difficulty with directed QCA begins when the categorisation matrix contains concepts with high levels of abstraction. The gap regarding how to connect highly abstract categories to the raw data should be bridged by a transparent and comprehensive analysis strategy. Therefore, the authors of this paper integrated the methods of directed QCA outlined in the international literature and elaborated them using the phases of ‘preparation’, ‘organisation’ and ‘reporting’ proposed by Elo and Kyngäs (2008) . The experiences of the current authors in the field of qualitative research were also incorporated into their suggested stepwise method of data analysis. The method is presented using the example of “team members’ motivation for cardiopulmonary resuscitation (CPR)” based on Victor Vroom's expectancy theory ( Assarroudi et al., 2017 ). In this example, interview transcriptions were treated as the unit of analysis, because interviews are the most common method of data collection in qualitative studies ( Gill et al., 2008 ).

Suggested method of directed QCA by the authors of this paper

This method consists of 16 steps and three phases, described below: preparation phase (steps 1–7), organisation phase (steps 8–15), and reporting phase (step 16).

The preparation phase:

- The acquisition of general skills . In the first step, qualitative researchers should develop skills including self-critical thinking, analytical abilities, continuous self-reflection, sensitive interpretive skills, creative thinking, scientific writing, data gathering and self-scrutiny ( Elo et al., 2014 ). Furthermore, they should attain sufficient scientific and content-based mastery of the method chosen for directed QCA. In the proposed example, qualitative researchers can achieve this mastery through conducting investigations in original sources related to Victor Vroom's expectancy theory. Main categories pertaining to Victor Vroom's expectancy theory were ‘expectancy’, ‘instrumentality’ and ‘valence’. This theory defined ‘expectancy’ as the perceived probability that efforts could lead to good performance. ‘Instrumentality’ was the perceived probability that good performance led to desired outcomes. ‘Valence’ was the value that the individual personally placed on outcomes ( Vroom, 1964 , 2005 ).

- Selection of the appropriate sampling strategy . Qualitative researchers need to select sampling strategies that give access to key informants on the study phenomenon ( Elo et al., 2014 ). Sampling methods such as purposive, snowball and convenience sampling ( Coyne, 1997 ) can be used while seeking maximum variation in socio-demographic and phenomenal characteristics ( Sandelowski, 1995 ). The sampling process ends when information ‘redundancy’ or ‘saturation’ is reached; in other words, when all aspects of the phenomenon under study have been explored in detail and no additional data are revealed in subsequent interviews ( Cleary et al., 2014 ). In this example, nurses and physicians who are members of the CPR team should be selected, ensuring diversity in variables including age, gender, length of work experience, number of CPR procedures performed, CPR in different patient groups and motivation levels for CPR.

- Deciding on the analysis of manifest and/or latent content . Based on the study's aim, qualitative researchers decide whether manifest content, latent content or both should be analysed. Manifest content is limited to the transcribed interview text, whereas latent content includes both the researchers' interpretations of the available text and participants' silences, pauses, sighs, laughter, posture, etc. ( Elo and Kyngäs, 2008 ). Considering both types of content in data analysis is recommended, because a deep understanding of the data is preferred in directed QCA ( Thomas and Magilvy, 2011 ).

- Developing an interview guide . The interview guide contains open-ended questions based on the study's aims, followed by directed questions about the main categories extracted from the existing theory or previous research ( Hsieh and Shannon, 2005 ). Directed questions guide how to conduct interviews when using directed or conventional methods. In this example, an open-ended question was ‘What is in your mind when you are called for performing CPR?’, and the directed question for the main category of ‘expectancy’ could be ‘How does the expectancy of the successful CPR procedure motivate you to resuscitate patients?’

- Conducting and transcribing interviews . An interview guide is used to conduct interviews for directed QCA. After each interview session, the entire interview is transcribed verbatim immediately ( Poland, 1995 ) and with utmost care ( Seidman, 2013 ). Two recorders should be used to ensure data backup ( DiCicco-Bloom and Crabtree, 2006 ). (For more details concerning skills required for conducting successful qualitative interviews, see Edenborough, 2002 ; Kramer, 2011 ; Schostak, 2005 ; Seidman, 2013 ).

- Specifying the unit of analysis . The unit of analysis may be a person, a program, an organisation, a class, a community, a state, a country, an interview, or a diary written by the researchers ( Graneheim and Lundman, 2004 ). When data are collected through interviews, the interview transcriptions are usually treated as the units of analysis. In this example, interview transcriptions and field notes are treated as the units of analysis.

- Immersion in data . The transcribed interviews are read and reviewed several times with the following questions in mind: ‘Who is telling?’, ‘Where is this happening?’, ‘When did it happen?’, ‘What is happening?’, and ‘Why?’ ( Elo and Kyngäs, 2008 ). These questions help researchers become immersed in the data and extract related meanings ( Elo and Kyngäs, 2008 ; Elo et al., 2014 ).
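The stopping rule in the sampling step (no additional data revealed in subsequent interviews) can be tracked with simple bookkeeping if interviews are coded as they are collected. The Python sketch below assumes that; its codes and the `window` of two consecutive interviews are hypothetical choices. It only registers whether new code labels appear, whereas saturation as described above also requires every aspect of the phenomenon to be explored in detail, so the result can support, but not replace, the researcher's judgment.

```python
def saturation_point(codes_per_interview, window=2):
    """Return the 1-based number of the interview at which `window` consecutive
    interviews have added no new codes, or None if that never happens."""
    if window < 1:
        raise ValueError("window must be at least 1")
    seen = set()
    quiet_run = 0  # consecutive interviews that added no new code
    for number, codes in enumerate(codes_per_interview, start=1):
        new_codes = set(codes) - seen
        seen |= set(codes)
        quiet_run = 0 if new_codes else quiet_run + 1
        if quiet_run == window:
            return number
    return None


# Hypothetical preliminary codes from five CPR-team interviews.
interviews = [
    {"low chance in advanced heart failure", "team pressure"},
    {"low chance in advanced heart failure", "sense of duty"},
    {"higher chance with ventricular fibrillation", "sense of duty"},
    {"team pressure", "sense of duty"},
    {"higher chance with ventricular fibrillation"},
]
print(saturation_point(interviews))
```

Here interviews 4 and 5 add nothing new, so the sketch returns 5; with only the first four interviews it returns `None`, signalling that more interviews would be needed under this rule.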

The organisation phase:

- Developing a formative categorisation matrix . The main categories derived from the existing theory or previous research form the categorisation matrix. In this example, the matrix contains ‘expectancy’, ‘instrumentality’ and ‘valence’ from Victor Vroom's expectancy theory, along with a column for other inductively emerged categories.

Table 2. The categorisation matrix of the team members' motivation for CPR.

| Motivation for CPR | | | |
|---|---|---|---|
| Expectancy | Instrumentality | Valence | Other inductively emerged categories |

CPR: cardiopulmonary resuscitation.

- Theoretical definition of the main categories and subcategories . Derived from the existing theory or previous research, the theoretical definitions of categories should be accurate and objective ( Mayring, 2000 , 2014 ). As for this example, ‘expectancy’ as a main category could be defined as the “subjective probability that the efforts by an individual led to an acceptable level of performance (effort–performance association) or to the desired outcome (effort–outcome association)” ( Van Eerde and Thierry, 1996 ; Vroom, 1964 ).

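- Determining coding rules for main categories . Coding rules specify when a part of the text should be assigned to a particular main category ( Mayring, 2000 , 2014 ). In this example, the coding rules for ‘expectancy’ were:

  - Expectancy in CPR was a subjective probability formed in the rescuer's mind.

  - This subjective probability should be related to the effort–performance or effort–outcome association perceived by the rescuer.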

- The pre-testing of the categorisation matrix . The categorisation matrix should be tested in a pilot study. This is an essential step, particularly if more than one researcher is involved in the coding process. In this step, qualitative researchers should independently and tentatively code the text, and discuss the difficulties in using the categorisation matrix and any differences in their interpretations of the unit of analysis. The categorisation matrix may be further modified as a result of such discussions ( Elo et al., 2014 ). This can also increase inter-coder reliability ( Vaismoradi et al., 2013 ) and the trustworthiness of the study.

- Choosing and specifying the anchor samples for each main category . An anchor sample is an explicit and concise exemplification, or the identifier of a main category, selected from meaning units ( Mayring, 2014 ). An anchor sample for ‘expectancy’ as the main category of this example could be as follows: ‘… the patient with advanced metastatic cancer who requires CPR … I do not envision a successful resuscitation for him.’
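The pilot coding of the categorisation matrix is where differences between coders surface. The paper does not prescribe an agreement statistic; Cohen's kappa is one widely used option, and the Python sketch below computes it for two coders alongside a list of the units they disagreed on. The pilot codes are hypothetical, and given the authors' reservations about quantitative procedures in QCA, the number is best treated as a prompt for the discussion described in the pre-testing step rather than a substitute for it.

```python
from collections import Counter


def cohens_kappa(coder_a, coder_b):
    """Cohen's kappa for two coders who each assigned one category per unit."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("both coders must code the same, non-empty list of units")
    n = len(coder_a)
    observed = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    freq_a, freq_b = Counter(coder_a), Counter(coder_b)
    # Agreement expected by chance from each coder's category proportions.
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if expected == 1:  # both coders used one identical category throughout
        return 1.0
    return (observed - expected) / (1 - expected)


def disagreements(coder_a, coder_b):
    """Indices of units the coders categorised differently, for discussion."""
    return [i for i, (a, b) in enumerate(zip(coder_a, coder_b)) if a != b]


# Hypothetical pilot coding of six meaning units against the matrix.
coder_a = ["expectancy", "expectancy", "valence", "instrumentality", "valence", "other"]
coder_b = ["expectancy", "valence", "valence", "instrumentality", "valence", "other"]
print(f"kappa = {cohens_kappa(coder_a, coder_b):.2f}")  # kappa = 0.77
print("discuss units:", disagreements(coder_a, coder_b))  # discuss units: [1]
```

The coders agree on five of six units (observed agreement 0.83), but part of that agreement is expected by chance, which is why kappa comes out lower.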

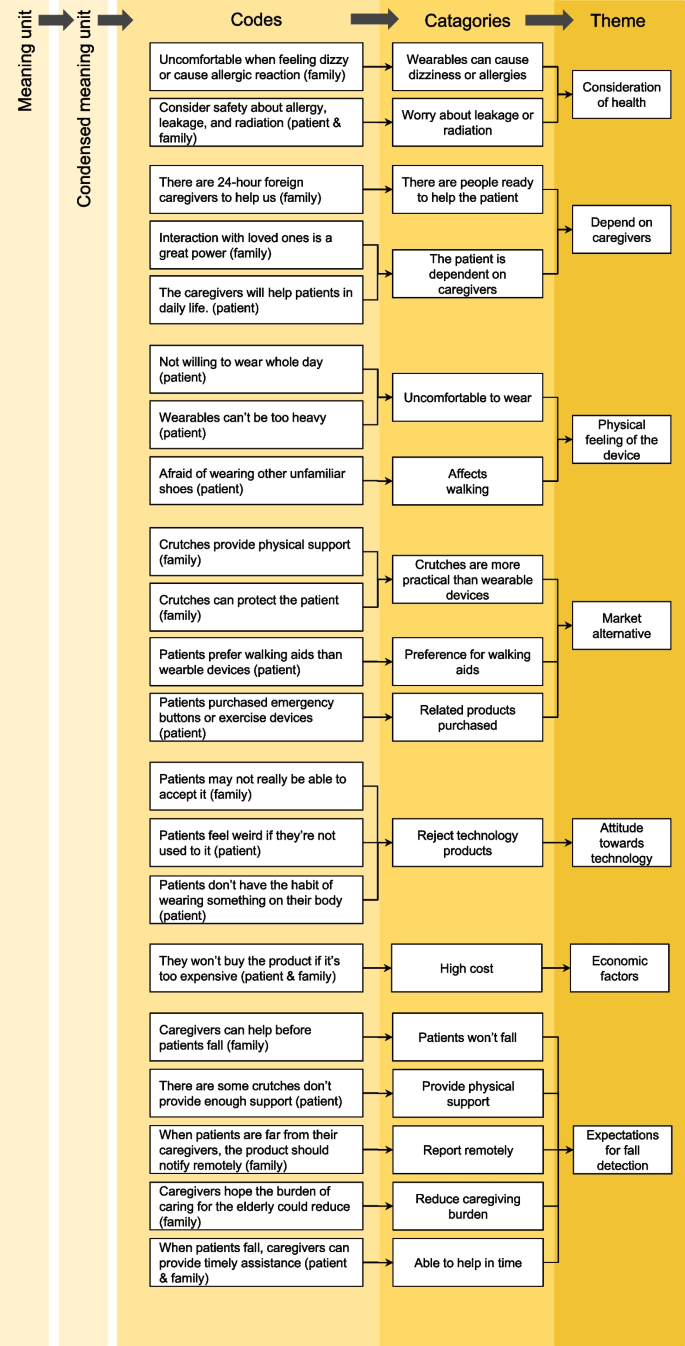

Table 3. An example of steps taken for the abstraction of the phenomenon of expectancy (main category).

| Meaning unit | Summarised meaning unit | Preliminary code | Group of codes | Subcategory | Generic category | Main category |
|---|---|---|---|---|---|---|
| The patient with advanced heart failure: I do not envisage a successful resuscitation for him | No expectation for the resuscitation of those with advanced heart failure | Cardiovascular conditions that decrease the chance of successful resuscitation | Estimation of the functional capacity of vital organs | Scientific estimation of life capacity | Estimation of the chances of successful CPR | Expectancy |
| Patients are rarely resuscitated, especially those who experience a cardiogenic shock following a heart attack | Low possibility of resuscitation of patients with a cardiogenic shock | | | | | |
| When ventricular fibrillation is likely, a chance of resuscitation still exists even after performing CPR for 30 minutes | The higher chance of resuscitation among patients with ventricular fibrillation | Cardiovascular conditions that increase the chance of successful resuscitation | | | | |
| Patients with sudden cardiac arrest are more likely to be resuscitated through CPR | The higher chance of resuscitation among patients with sudden cardiac arrest | | | | | |
| Estimation of the severity of the patient's complications | | | | | | |
| Estimation of remaining life span | | | | | | |
| Intuitive estimation of the chances of successful resuscitation | | | | | | |
| Uncertainty in the estimation | | | | | | |
| Time considerations in resuscitation | | | | | | |
| Estimation of self-efficacy | | | | | | |

CPR: cardiopulmonary resuscitation

- The inductive abstraction of main categories from preliminary codes . Preliminary codes are grouped and categorised according to their meanings, similarities and differences. The products of this categorisation process are known as ‘generic categories’ ( Elo and Kyngäs, 2008 ) ( Table 3 ).

- The establishment of links between generic categories and main categories . Constant comparison of generic categories and main categories results in a conceptual and logical link between them, nesting generic categories within the pre-existing main categories and, where needed, creating new main categories. The constant comparison technique is applied to data analysis throughout the study ( Zhang and Wildemuth, 2009 ) ( Table 3 ).
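The last two organisation steps can be pictured as operations on a hierarchy: each preliminary code is carried upward to a generic category, and each generic category is then nested under a main category or set aside as a candidate new main category. The Python sketch below assumes the linking decisions have already been made by the researcher through constant comparison; `nest_generic_categories` and the ‘Sense of professional duty’ category are hypothetical, while the chain in `TRACE` reproduces the first row of the expectancy abstraction table above. Printing the chain also illustrates the traceable path from raw data to the matrix that the reporting phase asks for.

```python
# One chain of abstraction, copied from the first row of the expectancy table.
TRACE = [
    ("Meaning unit", "The patient with advanced heart failure: "
                     "I do not envisage a successful resuscitation for him"),
    ("Summarised meaning unit", "No expectation for the resuscitation of those "
                                "with advanced heart failure"),
    ("Preliminary code", "Cardiovascular conditions that decrease the chance "
                         "of successful resuscitation"),
    ("Group of codes", "Estimation of the functional capacity of vital organs"),
    ("Subcategory", "Scientific estimation of life capacity"),
    ("Generic category", "Estimation of the chances of successful CPR"),
    ("Main category", "Expectancy"),
]


def nest_generic_categories(links, main_categories):
    """Nest generic categories under the main categories they were linked to.

    `links` maps each generic category to the main category a researcher linked
    it to by constant comparison, or to None when it fits none of them.
    Unlinked generic categories are returned as candidates for new main categories.
    """
    nested = {main: [] for main in main_categories}
    new_main = []
    for generic, main in links.items():
        if main in nested:
            nested[main].append(generic)
        else:
            new_main.append(generic)
    return nested, new_main


links = {
    "Estimation of the chances of successful CPR": "Expectancy",
    "Sense of professional duty (hypothetical)": None,
}
nested, new_main = nest_generic_categories(links, ["Expectancy", "Instrumentality", "Valence"])

for depth, (level, label) in enumerate(TRACE):
    print("  " * depth + f"{level}: {label}")
print(nested)
print(new_main)
```

Keeping the unlinked generic categories in a separate list mirrors the possibility, noted in the step above, of creating new main categories rather than forcing data into the existing matrix.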

The reporting phase:

- Reporting all steps of directed QCA and findings . This includes a detailed description of the data analysis process and the enumeration of findings ( Elo and Kyngäs, 2008 ). Findings should be systematically presented in such a way that the association between the raw data and the categorisation matrix is clearly shown and easily followed. Detailed descriptions of the sampling process, data collection, analysis methods and participants' characteristics should be presented. The trustworthiness criteria adopted along with the steps taken to fulfil them should also be outlined. Elo et al. (2014) developed a comprehensive and specific checklist for reporting QCA studies.

Trustworthiness

Multiple terms are used in the international literature regarding the validation of qualitative studies ( Creswell, 2013 ). The terms ‘validity’, ‘reliability’, and ‘generalizability’ in quantitative studies are equivalent to ‘credibility’, ‘dependability’, and ‘transferability’ in qualitative studies, respectively ( Polit and Beck, 2013 ). These terms, along with the additional concept of confirmability, were introduced by Lincoln and Guba (1985) . Polit and Beck added the term ‘authenticity’ to the list. Collectively, they are the different aspects of trustworthiness in all types of qualitative studies ( Polit and Beck, 2013 ).

To enhance the trustworthiness of a directed QCA study, researchers should thoroughly delineate the three phases of ‘preparation’, ‘organisation’, and ‘reporting’ ( Elo et al., 2014 ). Describing these phases shows in detail how categories are developed from the data ( Elo and Kyngäs, 2008 ; Graneheim and Lundman, 2004 ; Vaismoradi et al., 2016 ). To accomplish this, appendices, tables and figures may be used to depict the reduction process ( Elo and Kyngäs, 2008 ; Elo et al., 2014 ). Furthermore, an honest account of different realities during data analysis should be provided ( Polit and Beck, 2013 ). The authors of this paper believe that adopting this 16-step method can enhance the trustworthiness of directed QCA.

Directed QCA is used to validate, refine and/or extend a theory or theoretical framework in a new context ( Elo and Kyngäs, 2008 ; Hsieh and Shannon, 2005 ). The purpose of this paper is to provide a comprehensive, systematic, yet simple and applicable method for directed QCA to facilitate its use by novice qualitative researchers.

Despite current misconceptions about the simplicity of QCA and directed QCA, knowledge development is required to conduct them ( Elo and Kyngäs, 2008 ). Directed QCA is often performed on a considerable amount of textual data ( Pope et al., 2000 ). Nevertheless, few studies have discussed the multiple steps that need to be taken to conduct it. In this paper, we have integrated and elaborated the essential steps of directed QCA pointed to by international qualitative researchers, such as ‘preliminary coding’, ‘theoretical definition’ ( Mayring, 2000 , 2014 ), ‘coding rule’, ‘anchor sample’ ( Mayring, 2014 ), ‘inductive analysis in directed qualitative content analysis’ ( Elo and Kyngäs, 2008 ), and ‘pretesting the categorization matrix’ ( Elo et al., 2014 ). Moreover, the authors have added a detailed discussion regarding ‘the use of inductive abstraction’ and ‘linking between generic categories and main categories’.

Directed QCA is becoming more important as knowledge and theories derived from inductive QCA accumulate and the need to test these theories grows. The directed QCA method proposed in this paper is a reliable, transparent and comprehensive method that may increase the rigour of data analysis, allow the findings of different studies to be compared, and yield practical results.

Abdolghader Assarroudi (PhD, MScN, BScN) is Assistant Professor in Nursing, Department of Medical‐Surgical Nursing, School of Nursing and Midwifery, Sabzevar University of Medical Sciences, Sabzevar, Iran. His main areas of research interest are qualitative research, instrument development study and cardiopulmonary resuscitation.

Fatemeh Heshmati Nabavi (PhD, MScN, BScN) is Assistant Professor in Nursing, Department of Nursing Management, School of Nursing and Midwifery, Mashhad University of Medical Sciences, Mashhad, Iran. Her main areas of research interest are medical education, nursing management and qualitative study.

Mohammad Reza Armat (MScN, BScN) graduated from the Mashhad University of Medical Sciences in 1991 with a Bachelor of Science degree in nursing. He completed his Master of Science degree in nursing at Tarbiat Modarres University in 1995. He is an instructor in North Khorasan University of Medical Sciences, Bojnourd, Iran. Currently, he is a PhD candidate in nursing at the Mashhad School of Nursing and Midwifery, Mashhad University of Medical Sciences, Iran.

Abbas Ebadi (PhD, MScN, BScN) is Professor in Nursing, Behavioral Sciences Research Centre, School of Nursing, Baqiyatallah University of Medical Sciences, Tehran, Iran. His main areas of research interest are instrument development and qualitative study.

Mojtaba Vaismoradi (PhD, MScN, BScN) is a doctoral nurse researcher at the Faculty of Nursing and Health Sciences, Nord University, Bodø, Norway. He works in Nord’s research group ‘Healthcare Leadership’ under the supervision of Prof. Terese Bondas. Currently, this team focuses on conducting meta‐synthesis studies in collaboration with international qualitative research experts. His main areas of research interest are patient safety, elderly care and methodological issues in qualitative descriptive approaches. He is an associate editor of the journals BMC Nursing and SAGE Open in the UK.

Key points for policy, practice and/or research

- In this paper, the essential steps of directed qualitative content analysis identified by international qualitative researchers were described and integrated.

- A detailed discussion regarding the use of inductive abstraction, and linking between generic categories and main categories, was presented.

- The 16-step method of directed qualitative content analysis proposed in this paper is reliable, transparent, comprehensive and systematic, yet simple and applicable. It can increase the rigour of data analysis and make directed qualitative content analysis easier for novice qualitative researchers to use.

Declaration of conflicting interests

The author(s) declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

The author(s) received no financial support for the research, authorship, and/or publication of this article.

- Assarroudi A, Heshmati Nabavi F, Ebadi A, et al. (2017) Professional rescuers' experiences of motivation for cardiopulmonary resuscitation: A qualitative study. Nursing & Health Sciences 19(2): 237–243.

- Berelson B. (1952) Content Analysis in Communication Research. Glencoe, IL: Free Press.

- Cleary M, Horsfall J, Hayter M. (2014) Data collection and sampling in qualitative research: Does size matter? Journal of Advanced Nursing 70(3): 473–475.

- Coyne IT. (1997) Sampling in qualitative research. Purposeful and theoretical sampling; merging or clear boundaries? Journal of Advanced Nursing 26(3): 623–630.

- Creswell JW. (2013) Research Design: Qualitative, Quantitative, and Mixed Methods Approaches, 4th edn. Thousand Oaks, CA: SAGE Publications.

- DiCicco-Bloom B, Crabtree BF. (2006) The qualitative research interview. Medical Education 40(4): 314–321.

- Downe-Wamboldt B. (1992) Content analysis: Method, applications, and issues. Health Care for Women International 13(3): 313–321.

- Edenborough R. (2002) Effective Interviewing: A Handbook of Skills and Techniques, 2nd edn. London: Kogan Page.

- Elo S, Kyngäs H. (2008) The qualitative content analysis process. Journal of Advanced Nursing 62(1): 107–115.

- Elo S, Kääriäinen M, Kanste O, et al. (2014) Qualitative content analysis: A focus on trustworthiness. SAGE Open 4(1): 1–10.

- Gill P, Stewart K, Treasure E, et al. (2008) Methods of data collection in qualitative research: Interviews and focus groups. British Dental Journal 204(6): 291–295.

- Graneheim UH, Lundman B. (2004) Qualitative content analysis in nursing research: Concepts, procedures and measures to achieve trustworthiness. Nurse Education Today 24(2): 105–112.

- Hsieh H-F, Shannon SE. (2005) Three approaches to qualitative content analysis. Qualitative Health Research 15(9): 1277–1288.

- Kramer EP. (2011) 101 Successful Interviewing Strategies. Boston, MA: Course Technology, Cengage Learning.

- Lincoln YS, Guba EG. (1985) Naturalistic Inquiry. Beverly Hills, CA: SAGE Publications.

- Mayring P. (2000) Qualitative Content Analysis. Forum: Qualitative Social Research 1(2). Available at: http://www.qualitative-research.net/fqs-texte/2-00/02-00mayring-e.htm (accessed 10 March 2005).

- Mayring P. (2014) Qualitative content analysis: Theoretical foundation, basic procedures and software solution. Klagenfurt: Monograph. Available at: http://nbn-resolving.de/urn:nbn:de:0168-ssoar-395173 (accessed 10 May 2015).

- Poland BD. (1995) Transcription quality as an aspect of rigor in qualitative research. Qualitative Inquiry 1(3): 290–310.

- Polit DF, Beck CT. (2013) Essentials of Nursing Research: Appraising Evidence for Nursing Practice, 7th edn. China: Lippincott Williams & Wilkins.

- Pope C, Ziebland S, Mays N. (2000) Analysing qualitative data. BMJ 320(7227): 114–116.

- Sandelowski M. (1995) Sample size in qualitative research. Research in Nursing & Health 18(2): 179–183.

- Schostak J. (2005) Interviewing and Representation in Qualitative Research. London: McGraw-Hill/Open University Press.

- Schreier M. (2014) Qualitative content analysis. In: Flick U. (ed.) The SAGE Handbook of Qualitative Data Analysis. Thousand Oaks, CA: SAGE Publications Ltd, pp. 170–183.

- Seidman I. (2013) Interviewing as Qualitative Research: A Guide for Researchers in Education and the Social Sciences, 3rd edn. New York: Teachers College Press.

- Thomas E, Magilvy JK. (2011) Qualitative rigor or research validity in qualitative research. Journal for Specialists in Pediatric Nursing 16(2): 151–155.

- Vaismoradi M, Jones J, Turunen H, et al. (2016) Theme development in qualitative content analysis and thematic analysis. Journal of Nursing Education and Practice 6(5): 100–110.

- Vaismoradi M, Turunen H, Bondas T. (2013) Content analysis and thematic analysis: Implications for conducting a qualitative descriptive study. Nursing & Health Sciences 15(3): 398–405.

- Van Eerde W, Thierry H. (1996) Vroom's expectancy models and work-related criteria: A meta-analysis. Journal of Applied Psychology 81(5): 575.

- Vroom VH. (1964) Work and Motivation. New York: Wiley.

- Vroom VH. (2005) On the origins of expectancy theory. In: Smith KG, Hitt MA. (eds) Great Minds in Management: The Process of Theory Development. Oxford: Oxford University Press, pp. 239–258.

- Zhang Y, Wildemuth BM. (2009) Qualitative analysis of content. In: Wildemuth B. (ed.) Applications of Social Research Methods to Questions in Information and Library Science. Westport, CT: Libraries Unlimited, pp. 308–319.


18.5 Content analysis

Learning objectives

Learners will be able to…

- Explain defining features of content analysis as a strategy for analyzing qualitative data

- Determine when content analysis can be most effectively used

- Formulate an initial content analysis plan (if appropriate for your research proposal)

What are you trying to accomplish with content analysis?

Much like with thematic analysis, if you elect to use content analysis to analyze your qualitative data, you will be deconstructing the artifacts that you have sampled and looking for similarities across these deconstructed parts. Also consistent with thematic analysis, you will be seeking to bring together these similarities in the discussion of your findings to tell a collective story of what you learned across your data. While the distinction between thematic analysis and content analysis is somewhat murky, if you are looking to distinguish between the two, content analysis:

- Places greater emphasis on determining the unit of analysis. To quickly distinguish: when we discussed sampling in Chapter 10, we also used the term “unit of analysis.” As a reminder, when we are talking about sampling, unit of analysis refers to the entity that a researcher wants to say something about at the end of her study (an individual, group, or organization). However, when we are conducting a content analysis, the term refers to the ‘chunk’ or segment of data you will examine to reflect a particular idea. This may be a line, a paragraph, a section, an image or part of an image, a scene, etc., depending on the type of artifact you are dealing with and the level at which you want to subdivide it.

- Is also more adept at bringing together a variety of forms of artifacts in the same study. While other approaches can certainly accomplish this, content analysis more readily allows the researcher to deconstruct, label and compare different kinds of ‘content’. For example, perhaps you have developed a new advocacy training for community members. To evaluate your training, you want to analyze a variety of products that participants create after the workshop, including written products (e.g. letters to their representatives, community newsletters), audio/visual products (e.g. interviews with leaders, photos hosted in a local art exhibit on the topic) and performance products (e.g. hosting town hall meetings, facilitating rallies). Content analysis gives you the capacity to examine evidence across these different formats.
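To illustrate how content analysis can hold units of analysis from different kinds of artifacts side by side, the Python sketch below gives every unit the same hypothetical record shape (artifact, locator, content, codes), splits a written artifact into paragraph or line units, and adds a photo unit described in words. Codes are assigned by hand, as a researcher would assign them. The `Unit` record, the helper functions and the advocacy-training examples are assumptions made for illustration only.

```python
from dataclasses import dataclass, field


@dataclass
class Unit:
    """Hypothetical unit-of-analysis record shared by all artifact types."""
    artifact_id: str
    artifact_type: str   # e.g. "letter", "photo", "town hall recording"
    locator: str         # where the chunk sits within its artifact
    content: str         # text, or a written description for non-text media
    codes: set[str] = field(default_factory=set)


def segment_text(artifact_id, artifact_type, text, level="paragraph"):
    """Split a written artifact into units at the chosen level."""
    if level == "paragraph":
        chunks = text.split("\n\n")
    elif level == "line":
        chunks = text.splitlines()
    else:
        raise ValueError(f"unsupported level: {level!r}")
    chunks = [c.strip() for c in chunks if c.strip()]
    return [Unit(artifact_id, artifact_type, f"{level} {i}", chunk)
            for i, chunk in enumerate(chunks, start=1)]


def units_by_code(units):
    """Group units from any artifact type under each code they carry."""
    index = {}
    for unit in units:
        for code in unit.codes:
            index.setdefault(code, []).append(unit)
    return index


letter = ("Please fund a youth center in our district.\n\n"
          "Our teens have no safe place to meet after school.")
letter_units = segment_text("letter-01", "letter", letter)
photo_unit = Unit("photo-07", "photo", "left half of image",
                  "Residents holding a sign reading 'Fund our youth'")

# Codes are assigned by the researcher; here they are set by hand.
letter_units[0].codes.add("call to action")
letter_units[1].codes.add("unmet community need")
photo_unit.codes.add("call to action")

index = units_by_code(letter_units + [photo_unit])
for unit in index["call to action"]:
    print(unit.artifact_type, unit.locator, "->", unit.content)
```

Because the `locator` field is free text, the same record can point to a paragraph, a region of an image or a scene in a recording, which is what lets units from different formats be compared under one code.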

For a more in-depth discussion comparing these two approaches, including their philosophical differences, check out this article by Vaismoradi, Turunen, and Bondas (2013).[1]

Variations in the approach

There are also significant variations among different content analysis approaches. Some of these approaches are more concerned with quantifying (counting) how many times a code representing a specific concept or idea appears. These are more quantitative and deductive in nature. Other approaches look for codes to emerge from the data to help describe some idea or event. These are more qualitative and inductive. Hsieh and Shannon (2005) [2] describe three approaches that help clarify some of these differences:

- Conventional Content Analysis. Starts with a general idea or phenomenon you want to explore (for which there is limited existing research) and lets coding categories emerge from the raw data. These coding categories help us understand the different dimensions, patterns, and trends that may exist within the raw data collected in our research.

- Directed Content Analysis. Starts with a theory or existing research from which you develop your initial codes (there is some existing research, but it is incomplete in some respects) and uses these codes to guide your initial analysis of the raw data, fleshing out a more detailed understanding of the codes and, ultimately, the focus of your study.

- Summative Content Analysis. Starts by examining how many times and where codes appear in your data, but then seeks to develop an understanding, or an “interpretation of the underlying context” (p. 1277), of how they are being used. As you might have guessed, this approach is more likely to be used if you’re studying a topic that already has some existing research to provide a starting point for the analysis.
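The counting step of summative content analysis, recording how many times and where a code appears, can be sketched in Python as follows. This sketch assumes that codes are operationalized as keywords matched as whole words, case-insensitively, and that each unit is a plain-text string; the function names and the two example speech excerpts are hypothetical. The keyword-in-context helper only retrieves the surrounding text; interpreting the underlying context remains the researcher's task.

```python
import re
from collections import Counter


def count_and_locate(units, keywords):
    """Count whole-word, case-insensitive keyword hits per unit and record
    each hit as (unit id, keyword, character offset)."""
    counts = {uid: Counter() for uid in units}
    locations = []
    for uid, text in units.items():
        for kw in keywords:
            pattern = re.compile(rf"\b{re.escape(kw)}\b", re.IGNORECASE)
            for match in pattern.finditer(text):
                counts[uid][kw] += 1
                locations.append((uid, kw, match.start()))
    return counts, locations


def keyword_in_context(text, start, length, width=30):
    """Return the text surrounding one hit, to support interpreting its context."""
    return text[max(0, start - width): start + length + width]


# Hypothetical speech excerpts, invented for illustration.
units = {
    "speech_A": "Jobs, jobs, jobs. We will bring work back to this town.",
    "speech_B": "Unemployment is falling, but good jobs are still scarce.",
}
keywords = ["jobs", "work", "unemployment"]

counts, locations = count_and_locate(units, keywords)
totals = sum(counts.values(), Counter())  # aggregate counts across all units
print(totals)
for uid, kw, start in locations:
    print(uid, kw, "...", keyword_in_context(units[uid], start, len(kw)), "...")
```

Comparing `counts` across units covers the ‘how many’ and ‘where’; the snippets from `keyword_in_context` are raw material for the interpretive step that distinguishes summative content analysis from simple word counting.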