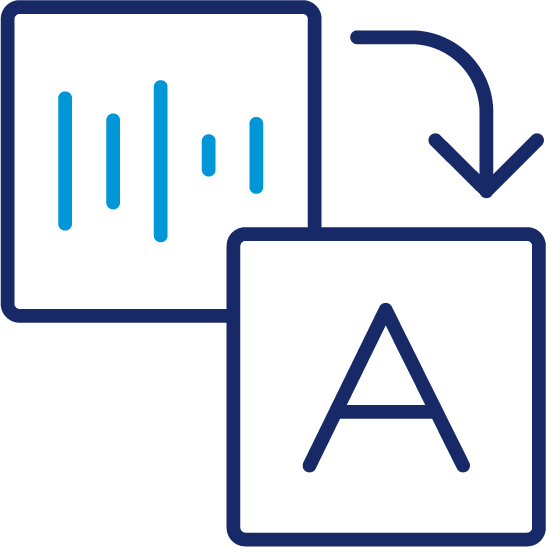

Speech recognition, also known as automatic speech recognition (ASR), computer speech recognition or speech-to-text, is a capability that enables a program to process human speech into a written format.

While speech recognition is commonly confused with voice recognition, speech recognition focuses on the translation of speech from a verbal format to a text one whereas voice recognition just seeks to identify an individual user’s voice.

IBM has had a prominent role within speech recognition since its inception, releasing “Shoebox” in 1962. This machine had the ability to recognize 16 different words, advancing the initial work from Bell Labs from the 1950s. However, IBM didn’t stop there, but continued to innovate over the years, launching the VoiceType Simply Speaking application in 1996. This speech recognition software had a 42,000-word vocabulary, supported English and Spanish, and included a spelling dictionary of 100,000 words.

While speech technology had a limited vocabulary in the early days, it is utilized in a wide range of industries today, such as automotive, technology, and healthcare. Its adoption has only continued to accelerate in recent years due to advancements in deep learning and big data. Research shows that this market is expected to be worth USD 24.9 billion by 2025.


Many speech recognition applications and devices are available, but the more advanced solutions use AI and machine learning. They integrate grammar, syntax, structure, and composition of audio and voice signals to understand and process human speech. Ideally, they learn as they go, evolving responses with each interaction.

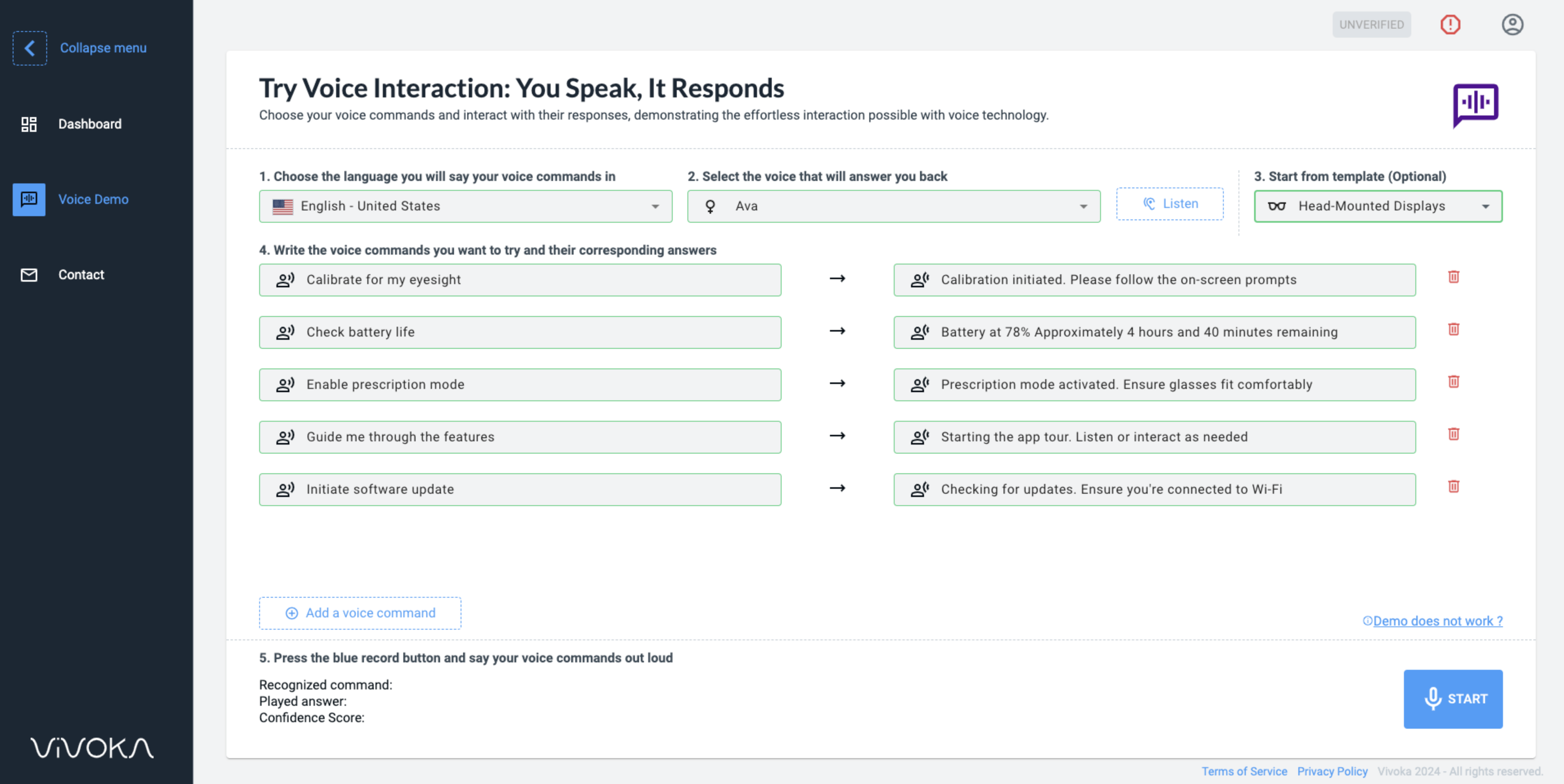

The best systems also allow organizations to customize and adapt the technology to their specific requirements, from language and nuances of speech to brand recognition. For example:

- Language weighting: Improve precision by weighting specific words that are spoken frequently (such as product names or industry jargon), beyond terms already in the base vocabulary.

- Speaker labeling: Output a transcription that cites or tags each speaker’s contributions to a multi-participant conversation.

- Acoustics training: Attend to the acoustical side of the business. Train the system to adapt to an acoustic environment (like the ambient noise in a call center) and speaker styles (like voice pitch, volume and pace).

- Profanity filtering: Use filters to identify certain words or phrases and sanitize speech output.
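As a rough illustration of the last item, profanity filtering can be applied as a post-processing step on the transcript. The sketch below is only one possible approach: the block list `BLOCKED_WORDS` and the function name `mask_profanity` are hypothetical, and a production system would use a curated lexicon rather than two sample words.

```python
import re

# Hypothetical block list for illustration; real systems load a curated lexicon.
BLOCKED_WORDS = {"darn", "heck"}


def mask_profanity(transcript, blocked=BLOCKED_WORDS):
    """Replace every blocked word in the transcript with asterisks."""

    def mask(match):
        word = match.group(0)
        # Compare case-insensitively, but only mask whole words.
        return "*" * len(word) if word.lower() in blocked else word

    # Match runs of letters and apostrophes so punctuation is left untouched.
    return re.sub(r"[A-Za-z']+", mask, transcript)


print(mask_profanity("Well, darn it, what the Heck happened?"))
```

Because whole words are compared, a word such as "heckle" is left alone even though it contains a blocked word.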

Meanwhile, speech recognition continues to advance. Companies, like IBM, are making inroads in several areas, the better to improve human and machine interaction.

The vagaries of human speech have made development challenging. It’s considered to be one of the most complex areas of computer science – involving linguistics, mathematics and statistics. Speech recognizers are made up of a few components, such as the speech input, feature extraction, feature vectors, a decoder, and a word output. The decoder leverages acoustic models, a pronunciation dictionary, and language models to determine the appropriate output.

Speech recognition technology is evaluated on its accuracy, measured as word error rate (WER), and on its speed. A number of factors can impact word error rate, such as pronunciation, accent, pitch, volume, and background noise. Reaching human parity, meaning an error rate on par with that of two humans speaking, has long been the goal of speech recognition systems. Research from Lippmann estimates the human word error rate to be around 4 percent, but it’s been difficult to replicate the results from this paper.

Various algorithms and computation techniques are used to recognize speech into text and improve the accuracy of transcription. Below are brief explanations of some of the most commonly used methods:

- Natural language processing (NLP): While NLP isn’t necessarily a specific algorithm used in speech recognition, it is the area of artificial intelligence which focuses on the interaction between humans and machines through language, both speech and text. Many mobile devices incorporate speech recognition into their systems to conduct voice search (for example, Siri) or provide more accessibility around texting.

- Hidden Markov models (HMM): Hidden Markov models build on the Markov chain model, which stipulates that the probability of the next state depends only on the current state, not on the states before it. While a Markov chain model is useful for observable events, such as text inputs, hidden Markov models allow us to incorporate hidden events, such as part-of-speech tags, into a probabilistic model. They are utilized as sequence models within speech recognition, assigning labels to each unit in the sequence (words, syllables, sentences and so on). These labels create a mapping with the provided input, allowing the model to determine the most appropriate label sequence.

- N-grams: This is the simplest type of language model (LM), which assigns probabilities to sentences or phrases. An N-gram is a sequence of N words. For example, “order the pizza” is a trigram or 3-gram and “please order the pizza” is a 4-gram. Grammar and the probability of certain word sequences are used to improve recognition and accuracy.

- Neural networks: Primarily leveraged for deep learning algorithms, neural networks process training data by mimicking the interconnectivity of the human brain through layers of nodes. Each node is made up of inputs, weights, a bias (or threshold) and an output. If that output value exceeds a given threshold, it “fires” or activates the node, passing data to the next layer in the network. Neural networks learn this mapping function through supervised learning, adjusting based on the loss function through the process of gradient descent. While neural networks tend to be more accurate and can accept more data, this comes at a performance efficiency cost as they tend to be slower to train compared to traditional language models.

- Speaker Diarization (SD): Speaker diarization algorithms identify and segment speech by speaker identity. This helps programs better distinguish individuals in a conversation and is frequently applied at call centers to distinguish customers from sales agents.
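To make the N-gram idea from the list above concrete, here is a minimal sketch of a bigram (2-gram) language model. It assumes a tiny hypothetical training corpus and add-one smoothing, neither of which comes from the article; the function names are illustrative only. The point is that a word order seen in training scores higher than a scrambled one, which is how a recognizer can prefer plausible transcriptions.

```python
from collections import Counter


def tokenize(sentence):
    """Lowercase, split on whitespace, and add sentence boundary markers."""
    return ["<s>"] + sentence.lower().split() + ["</s>"]


def train_bigram_model(sentences):
    """Count how often each word and each adjacent word pair occurs."""
    contexts, bigrams, vocab = Counter(), Counter(), set()
    for sentence in sentences:
        tokens = tokenize(sentence)
        vocab.update(tokens)
        contexts.update(tokens[:-1])             # every token that has a successor
        bigrams.update(zip(tokens, tokens[1:]))  # adjacent pairs
    return contexts, bigrams, len(vocab)


def sentence_probability(sentence, model):
    """Multiply add-one-smoothed bigram probabilities along the sentence."""
    contexts, bigrams, vocab_size = model
    tokens = tokenize(sentence)
    probability = 1.0
    for prev, word in zip(tokens, tokens[1:]):
        probability *= (bigrams[(prev, word)] + 1) / (contexts[prev] + vocab_size)
    return probability


# Hypothetical toy corpus, for illustration only.
corpus = ["please order the pizza", "order the pizza now", "please order the salad"]
model = train_bigram_model(corpus)

likely = sentence_probability("order the pizza", model)
unlikely = sentence_probability("order pizza the", model)
print(likely > unlikely)
```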

A wide range of industries are utilizing different applications of speech technology today, helping businesses and consumers save time and even lives. Some examples include:

Automotive: Speech recognizers improve driver safety by enabling voice-activated navigation systems and search capabilities in car radios.

Technology: Virtual agents are increasingly integrated into our daily lives, particularly on our mobile devices. We use voice commands to access them through our smartphones, such as Google Assistant or Apple’s Siri for tasks like voice search, or through our speakers, such as Amazon’s Alexa or Microsoft’s Cortana to play music. They’ll only continue to integrate into the everyday products that we use, fueling the “Internet of Things” movement.

Healthcare: Doctors and nurses leverage dictation applications to capture and log patient diagnoses and treatment notes.

Sales: Speech recognition technology has a couple of applications in sales. It can help a call center transcribe thousands of phone calls between customers and agents to identify common call patterns and issues. AI chatbots can also talk to people via a webpage, answering common queries and solving basic requests without needing to wait for a contact center agent to be available. In both instances, speech recognition systems help reduce time to resolution for consumer issues.

Security: As technology integrates into our daily lives, security protocols are an increasing priority. Voice-based authentication adds a viable level of security.


Speech Recognition: Everything You Need to Know in 2024


Speech recognition, also known as automatic speech recognition (ASR), enables seamless communication between humans and machines. This technology empowers organizations to transform human speech into written text. Speech recognition technology can revolutionize many business applications, including customer service, healthcare, finance and sales.

In this comprehensive guide, we will explain speech recognition, exploring how it works, the algorithms involved, and the use cases of various industries.

If you require training data for your speech recognition system, here is a guide to finding the right speech data collection services.

What is speech recognition?

Speech recognition, also known as automatic speech recognition (ASR), speech-to-text (STT), and computer speech recognition, is a technology that enables a computer to recognize and convert spoken language into text.

Speech recognition technology uses AI and machine learning models to accurately identify and transcribe different accents, dialects, and speech patterns.

What are the features of speech recognition systems?

Speech recognition systems have several components that work together to understand and process human speech. Key features of effective speech recognition are:

- Audio preprocessing: After you have obtained the raw audio signal from an input device, you need to preprocess it to improve the quality of the speech input. The main goal of audio preprocessing is to capture relevant speech data by removing any unwanted artifacts and reducing noise.

- Feature extraction: This stage converts the preprocessed audio signal into a more informative representation. This makes raw audio data more manageable for machine learning models in speech recognition systems.

- Language model weighting: Language weighting gives more weight to certain words and phrases, such as product references, in audio and voice signals. This makes those keywords more likely to be recognized in subsequent speech by speech recognition systems.

- Acoustic modeling: It enables speech recognizers to capture and distinguish phonetic units within a speech signal. Acoustic models are trained on large datasets containing speech samples from a diverse set of speakers with different accents, speaking styles, and backgrounds.

- Speaker labeling: It enables speech recognition applications to determine the identities of multiple speakers in an audio recording. It assigns unique labels to each speaker in an audio recording, allowing the identification of which speaker was speaking at any given time.

- Profanity filtering: The process of removing offensive, inappropriate, or explicit words or phrases from audio data.

What are the different speech recognition algorithms?

Speech recognition uses various algorithms and computation techniques to convert spoken language into written language. The following are some of the most commonly used speech recognition methods:

- Hidden Markov Models (HMMs): A hidden Markov model is a statistical model commonly used in traditional speech recognition systems. HMMs capture the relationship between the acoustic features and model the temporal dynamics of speech signals.

- Estimate the probability of word sequences in the recognized text

- Convert colloquial expressions and abbreviations in a spoken language into a standard written form

- Map phonetic units obtained from acoustic models to their corresponding words in the target language.

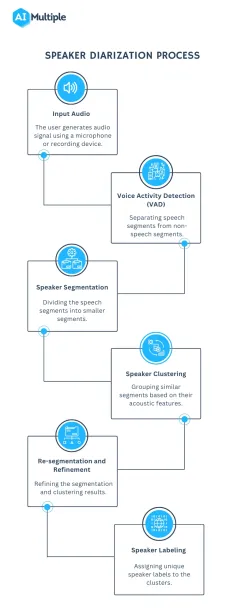

- Speaker Diarization (SD): Speaker diarization, or speaker labeling, is the process of identifying and attributing speech segments to their respective speakers (Figure 1). It allows for speaker-specific voice recognition and the identification of individuals in a conversation.

Figure 1: A flowchart illustrating the speaker diarization process

- Dynamic Time Warping (DTW): Speech recognition algorithms use the Dynamic Time Warping (DTW) algorithm to find an optimal alignment between two sequences that may differ in speed or length (Figure 2).

Figure 2: A speech recognizer using dynamic time warping to determine the optimal distance between elements

- Deep neural networks: Neural networks process and transform input data by simulating the non-linear frequency perception of the human auditory system.

- Connectionist Temporal Classification (CTC): It is a training objective introduced by Alex Graves in 2006. CTC is especially useful for sequence labeling tasks and end-to-end speech recognition systems. It allows the neural network to discover the relationship between input frames and align input frames with output labels.
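Of the methods listed above, dynamic time warping is compact enough to sketch in a few lines. The example below is an illustrative implementation rather than code from any particular recognizer. It assumes each utterance has already been reduced to a one-dimensional sequence of feature values (real systems compare multi-dimensional feature vectors), and the "yes" and "no" templates are made-up numbers.

```python
def dtw_distance(a, b):
    """Return the minimum cumulative alignment cost between sequences a and b."""
    n, m = len(a), len(b)
    inf = float("inf")
    # cost[i][j] = best cost of aligning the first i items of a with the first j of b.
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            local = abs(a[i - 1] - b[j - 1])
            # Either sequence may be stretched (repeat an item) or both may advance.
            cost[i][j] = local + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    return cost[n][m]


# Hypothetical one-dimensional templates for two words.
template_yes = [1, 3, 4, 3, 1]
template_no = [4, 2, 1, 2, 4]

# The same shape as "yes", but spoken more slowly (values repeated).
spoken = [1, 1, 3, 3, 4, 4, 3, 1]

print(dtw_distance(spoken, template_yes))
print(dtw_distance(spoken, template_no))
```

The slower utterance aligns with the "yes" template at zero cost, because warping absorbs the difference in speaking rate, while the "no" template remains at a larger distance.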

Speech recognition vs voice recognition

Speech recognition is commonly confused with voice recognition, yet they refer to distinct concepts. Speech recognition converts spoken words into written text, focusing on identifying the words and sentences spoken by a user, regardless of the speaker’s identity.

On the other hand, voice recognition is concerned with recognizing or verifying a speaker’s voice, aiming to determine the identity of an unknown speaker rather than focusing on understanding the content of the speech.

What are the challenges of speech recognition with solutions?

While speech recognition technology offers many benefits, it still faces a number of challenges that need to be addressed. Some of the main limitations of speech recognition include:

Acoustic Challenges:

- Assume a speech recognition model has been primarily trained on American English accents. If a speaker with a strong Scottish accent uses the system, they may encounter difficulties due to pronunciation differences. For example, the word “water” is pronounced differently in the two accents. If the system is not familiar with the Scottish pronunciation, it may struggle to recognize the word “water.”

Solution: Addressing these challenges is crucial to enhancing speech recognition applications’ accuracy. To overcome pronunciation variations, it is essential to expand the training data to include samples from speakers with diverse accents. This approach helps the system recognize and understand a broader range of speech patterns.

- For instance, you can use data augmentation techniques to reduce the impact of noise on audio data. Data augmentation helps train speech recognition models with noisy data to improve model accuracy in real-world environments.

Figure 3: Examples of a target sentence (“The clown had a funny face”) in the background noise of babble, car and rain.
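The noise-based data augmentation described above can be sketched as follows. This is a simplified illustration: it mixes synthetic Gaussian noise into a clean signal at a chosen signal-to-noise ratio (SNR), whereas the babble, car and rain conditions in Figure 3 would use recorded noise. The function name `add_noise` and the test tone are assumptions made for the example.

```python
import math
import random


def add_noise(signal, snr_db, rng=None):
    """Return a copy of signal with Gaussian noise mixed in at the given SNR (dB)."""
    rng = rng or random.Random()
    signal_power = sum(s * s for s in signal) / len(signal)
    # SNR(dB) = 10 * log10(signal_power / noise_power), solved for noise_power.
    noise_power = signal_power / (10 ** (snr_db / 10))
    sigma = math.sqrt(noise_power)
    return [s + rng.gauss(0.0, sigma) for s in signal]


# A synthetic 440 Hz tone sampled at 8 kHz stands in for clean speech.
sample_rate = 8000
clean = [math.sin(2 * math.pi * 440 * t / sample_rate) for t in range(sample_rate)]

# One clean recording yields several noisy training variants.
augmented = [add_noise(clean, snr_db, random.Random(seed))
             for seed, snr_db in enumerate([20, 10, 5])]
print(len(augmented), len(augmented[0]))
```

Lower SNR values produce harder training examples, so a range of values is usually generated for each clean recording.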

Linguistic Challenges:

- Out-of-vocabulary words: Since the speech recognizer’s model has not been trained on out-of-vocabulary (OOV) words, it may recognize them as different words or fail to transcribe them when it encounters them.

Figure 4: An example of detecting OOV word

Solution: Word Error Rate (WER) is a common metric that is used to measure the accuracy of a speech recognition or machine translation system. The word error rate can be computed as:

Figure 5: Demonstrating how to calculate word error rate (WER)
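In its usual form, WER is the number of word substitutions (S), deletions (D) and insertions (I) needed to turn the system's output into the reference transcript, divided by the number of words in the reference (N): WER = (S + D + I) / N. The sketch below computes it with a word-level edit distance. The function name and the sample hypothesis are illustrative, and the normalization (lowercasing and whitespace splitting) is a simplifying assumption.

```python
def word_error_rate(reference, hypothesis):
    """Compute WER = (substitutions + deletions + insertions) / reference length."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")

    # previous[j] = edit distance between the processed reference words and hyp[:j].
    previous = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        current = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = previous[j - 1] + (ref_word != hyp_word)
            deletion = previous[j] + 1       # reference word missing from hypothesis
            insertion = current[j - 1] + 1   # extra word in hypothesis
            current.append(min(substitution, deletion, insertion))
        previous = current
    return previous[-1] / len(ref)


reference = "the clown had a funny face"
hypothesis = "the clown had funny vase"  # one deletion ("a"), one substitution
print(round(word_error_rate(reference, hypothesis), 3))
```

Because insertions count as errors, WER can exceed 1.0 when the hypothesis is much longer than the reference.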

- Homophones: Homophones are words that are pronounced identically but have different meanings, such as “to,” “too,” and “two”. Solution: Semantic analysis allows speech recognition programs to select the appropriate homophone based on its intended meaning in a given context. Addressing homophones improves the ability of the speech recognition process to understand and transcribe spoken words accurately.

Technical/System Challenges:

- Data privacy and security: Speech recognition systems involve processing and storing sensitive and personal information, such as financial information. An unauthorized party could use the captured information, leading to privacy breaches.

Solution: You can encrypt sensitive and personal audio information transmitted between the user’s device and the speech recognition software. Another technique for addressing data privacy and security in speech recognition systems is data masking. Data masking algorithms mask and replace sensitive speech data with structurally identical but acoustically different data.

Figure 6: An example of how data masking works

- Limited training data: Limited training data directly impacts the performance of speech recognition software. With insufficient training data, the speech recognition model may struggle to generalize across different accents or recognize less common words.

Solution: To improve the quality and quantity of training data, you can expand the existing dataset using data augmentation and synthetic data generation technologies.

13 speech recognition use cases and applications

In this section, we will explain how speech recognition revolutionizes the communication landscape across industries and changes the way businesses interact with machines.

Customer Service and Support

- Interactive Voice Response (IVR) systems: Interactive voice response (IVR) is a technology that automates the process of routing callers to the appropriate department. It understands customer queries and routes calls to the relevant departments. This reduces the call volume for contact centers and minimizes wait times. IVR systems address simple customer questions without human intervention by employing pre-recorded messages or text-to-speech technology. Automatic Speech Recognition (ASR) allows IVR systems to comprehend and respond to customer inquiries and complaints in real time.

- Customer support automation and chatbots: According to a survey, 78% of consumers interacted with a chatbot in 2022, but 80% of respondents said using chatbots increased their frustration level.

- Sentiment analysis and call monitoring: Speech recognition technology converts spoken content from a call into text. After speech-to-text processing, natural language processing (NLP) techniques analyze the text and assign a sentiment score to the conversation, such as positive, negative, or neutral. By integrating speech recognition with sentiment analysis, organizations can address issues early on and gain valuable insights into customer preferences.

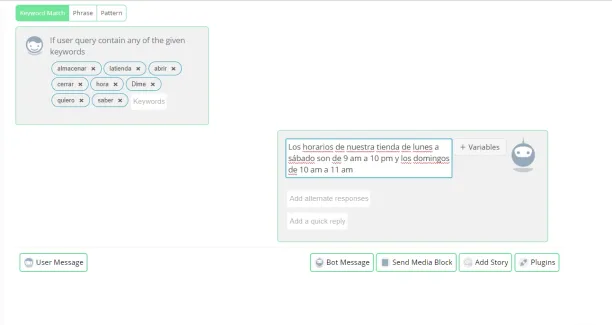

- Multilingual support: Speech recognition software can be trained in various languages to recognize and transcribe the language spoken by a user accurately. By integrating speech recognition technology into chatbots and Interactive Voice Response (IVR) systems, organizations can overcome language barriers and reach a global audience (Figure 7). Multilingual chatbots and IVR automatically detect the language spoken by a user and switch to the appropriate language model.

Figure 7: Showing how a multilingual chatbot recognizes words in another language

- Customer authentication with voice biometrics: Voice biometrics use speech recognition technologies to analyze a speaker’s voice and extract features such as accent and speed to verify their identity.

Sales and Marketing:

- Virtual sales assistants: Virtual sales assistants are AI-powered chatbots that assist customers with purchasing and communicate with them through voice interactions. Speech recognition allows virtual sales assistants to understand the intent behind spoken language and tailor their responses based on customer preferences.

- Transcription services: Speech recognition software records audio from sales calls and meetings and then converts the spoken words into written text using speech-to-text algorithms.

Automotive:

- Voice-activated controls: Voice-activated controls allow users to interact with devices and applications using voice commands. Drivers can operate features like climate control, phone calls, or navigation systems.

- Voice-assisted navigation: Voice-assisted navigation provides real-time voice-guided directions by utilizing the driver’s voice input for the destination. Drivers can request real-time traffic updates or search for nearby points of interest using voice commands without physical controls.

Healthcare:

- Recording the physician’s dictation

- Transcribing the audio recording into written text using speech recognition technology

- Editing the transcribed text for better accuracy and correcting errors as needed

- Formatting the document in accordance with legal and medical requirements.

- Virtual medical assistants: Virtual medical assistants (VMAs) use speech recognition, natural language processing, and machine learning algorithms to communicate with patients through voice or text. Speech recognition software allows VMAs to respond to voice commands, retrieve information from electronic health records (EHRs) and automate the medical transcription process.

- Electronic Health Records (EHR) integration: Healthcare professionals can use voice commands to navigate the EHR system, access patient data, and enter data into specific fields.

Technology:

- Virtual agents: Virtual agents utilize natural language processing (NLP) and speech recognition technologies to understand spoken language and convert it into text. Speech recognition enables virtual agents to process spoken language in real-time and respond promptly and accurately to user voice commands.


How Does Speech Recognition Work? (9 Simple Questions Answered)

- by Team Experts

- July 2, 2023 July 3, 2023

Discover the Surprising Science Behind Speech Recognition – Learn How It Works in 9 Simple Questions!

Speech recognition is the process of converting spoken words into written or machine-readable text. It is achieved through a combination of natural language processing, audio inputs, machine learning, and voice recognition. Speech recognition systems analyze speech patterns to identify phonemes, the basic units of sound in a language. Acoustic modeling is used to match the phonemes to words, and word prediction algorithms are used to determine the most likely words based on context analysis. Finally, the words are converted into text.

What is Natural Language Processing and How Does it Relate to Speech Recognition?

- How do audio inputs enable speech recognition?

- What role does machine learning play in speech recognition?

- How does voice recognition work?

- What are the different types of speech patterns used for speech recognition?

- How is acoustic modeling used for accurate phoneme detection in speech recognition systems?

- What is word prediction and why is it important for effective speech recognition technology?

- How can context analysis improve accuracy of automatic speech recognition systems?

- Common mistakes and misconceptions

Natural language processing (NLP) is a branch of artificial intelligence that deals with the analysis and understanding of human language. It is used to enable machines to interpret and process natural language, such as speech, text, and other forms of communication. NLP is used in a variety of applications, including automated speech recognition, voice recognition technology, language models, text analysis, text-to-speech synthesis, natural language understanding, natural language generation, semantic analysis, syntactic analysis, pragmatic analysis, sentiment analysis, and speech-to-text conversion. NLP is closely related to speech recognition, as it is used to interpret and understand spoken language in order to convert it into text.

Audio inputs enable speech recognition by providing digital audio recordings of spoken words. These recordings are then analyzed to extract acoustic features of speech, such as pitch, frequency, and amplitude. Feature extraction techniques, such as spectral analysis of sound waves, are used to identify and classify phonemes. Natural language processing (NLP) and machine learning models are then used to interpret the audio recordings and recognize speech. Neural networks and deep learning architectures are used to further improve the accuracy of voice recognition. Finally, Automatic Speech Recognition (ASR) systems are used to convert the speech into text, and noise reduction techniques and voice biometrics are used to improve accuracy.
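To give a feel for what extracting acoustic features involves, the sketch below splits a signal into short overlapping frames and computes two simple features per frame: average energy (related to amplitude) and the dominant frequency found with a naive discrete Fourier transform. It is a teaching sketch under stated assumptions, with a synthetic tone in place of recorded speech and hypothetical function names. Production systems typically use optimized FFT routines and richer features such as MFCCs.

```python
import cmath
import math


def frame_signal(samples, frame_length, hop_length):
    """Split samples into overlapping frames of equal length."""
    last_start = len(samples) - frame_length
    return [samples[start:start + frame_length]
            for start in range(0, last_start + 1, hop_length)]


def frame_energy(frame):
    """Average power of the frame, a rough proxy for loudness."""
    return sum(x * x for x in frame) / len(frame)


def dominant_frequency(frame, sample_rate):
    """Frequency (Hz) of the strongest bin in a naive DFT of the frame."""
    n = len(frame)
    best_bin, best_magnitude = 0, 0.0
    for k in range(1, n // 2):  # skip the DC bin, stay below the Nyquist frequency
        coefficient = sum(x * cmath.exp(-2j * math.pi * k * t / n)
                          for t, x in enumerate(frame))
        if abs(coefficient) > best_magnitude:
            best_bin, best_magnitude = k, abs(coefficient)
    return best_bin * sample_rate / n


# Synthetic 1 kHz tone at an 8 kHz sample rate stands in for recorded speech.
sample_rate = 8000
tone = [math.sin(2 * math.pi * 1000 * t / sample_rate) for t in range(400)]

frames = frame_signal(tone, frame_length=200, hop_length=100)
features = [(frame_energy(f), dominant_frequency(f, sample_rate)) for f in frames]
print(len(frames), features[0][1])
```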

Machine learning plays a key role in speech recognition, as it is used to develop algorithms that can interpret and understand spoken language. Natural language processing, pattern recognition techniques, artificial intelligence, neural networks, acoustic modeling, language models, statistical methods, feature extraction, hidden Markov models (HMMs), deep learning architectures, voice recognition systems, speech synthesis, and automatic speech recognition (ASR) are all used to create machine learning models that can accurately interpret and understand spoken language. Natural language understanding is also used to further refine the accuracy of the machine learning models.

Voice recognition works by using machine learning algorithms to analyze the acoustic properties of a person’s voice. This includes using voice recognition software to identify phonemes, speaker identification, text normalization, language models, noise cancellation techniques, prosody analysis, contextual understanding, artificial neural networks, voice biometrics, speech synthesis, and deep learning. The data collected is then used to create a voice profile that can be used to identify the speaker.

The different types of speech patterns used for speech recognition include prosody, contextual speech recognition, speaker adaptation, language models, hidden Markov models (HMMs), neural networks, Gaussian mixture models (GMMs), discrete wavelet transform (DWT), Mel-frequency cepstral coefficients (MFCCs), vector quantization (VQ), dynamic time warping (DTW), continuous density hidden Markov model (CDHMM), support vector machines (SVM), and deep learning.

Acoustic modeling is used for accurate phoneme detection in speech recognition systems by utilizing statistical models such as Hidden Markov Models (HMMs) and Gaussian Mixture Models (GMMs). Feature extraction techniques such as Mel-frequency cepstral coefficients (MFCCs) are used to extract relevant features from the audio signal. Context-dependent models are also used to improve accuracy. Training techniques such as maximum likelihood estimation are used to fit the models, and the Viterbi algorithm is used to find the most likely sequence of states. In recent years, neural networks and deep learning algorithms have been used to improve accuracy, as well as natural language processing techniques.
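As a hedged illustration of how the Viterbi algorithm works with a hidden Markov model, the sketch below finds the most likely sequence of hidden states for a short sequence of observations. The two states ("vowel", "consonant"), the two observation symbols ("loud", "quiet") and all probabilities are invented for the example. A real acoustic model has many more states and continuous feature vectors rather than two symbols.

```python
def viterbi(observations, states, start_p, trans_p, emit_p):
    """Return the most likely hidden state sequence for the observations."""
    # Each column maps state -> (best path probability, previous state on that path).
    columns = [{s: (start_p[s] * emit_p[s][observations[0]], None) for s in states}]
    for obs in observations[1:]:
        column = {}
        for s in states:
            column[s] = max(
                (columns[-1][p][0] * trans_p[p][s] * emit_p[s][obs], p)
                for p in states
            )
        columns.append(column)

    # Backtrack from the most probable final state.
    state = max(states, key=lambda s: columns[-1][s][0])
    path = [state]
    for column in reversed(columns[1:]):
        state = column[state][1]
        path.append(state)
    path.reverse()
    return path


# Hypothetical toy model: every number here is made up for illustration.
states = ("vowel", "consonant")
start_p = {"vowel": 0.4, "consonant": 0.6}
trans_p = {
    "vowel": {"vowel": 0.3, "consonant": 0.7},
    "consonant": {"vowel": 0.6, "consonant": 0.4},
}
emit_p = {
    "vowel": {"loud": 0.8, "quiet": 0.2},
    "consonant": {"loud": 0.3, "quiet": 0.7},
}

print(viterbi(["quiet", "loud", "quiet"], states, start_p, trans_p, emit_p))
```

For long utterances, practical implementations work with log probabilities to avoid numerical underflow. Plain products are used here to keep the sketch short.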

Word prediction is a feature of natural language processing and artificial intelligence that uses machine learning algorithms to predict the next word or phrase a user is likely to type or say. It is used in automated speech recognition systems to improve the accuracy of the system by reducing the amount of user effort and time spent typing or speaking words. Word prediction also enhances the user experience by providing faster response times and increased efficiency in data entry tasks. Additionally, it reduces errors due to incorrect spelling or grammar, and improves the understanding of natural language by machines. By using word prediction, speech recognition technology can be more effective, providing improved accuracy and enhanced ability for machines to interpret human speech.

Context analysis can improve the accuracy of automatic speech recognition systems by utilizing language models, acoustic models, statistical methods, and machine learning algorithms to analyze the semantic, syntactic, and pragmatic aspects of speech. This analysis can include word-level, sentence-level, and discourse-level context, as well as utterance understanding and ambiguity resolution. By taking into account the context of the speech, the accuracy of the automatic speech recognition system can be improved.

- Misconception: Speech recognition requires a person to speak in a robotic, monotone voice. Correct Viewpoint: Speech recognition technology is designed to recognize natural speech patterns and does not require users to speak in any particular way.

- Misconception: Speech recognition can understand all languages equally well. Correct Viewpoint: Different speech recognition systems are designed for different languages and dialects, so the accuracy of the system will vary depending on which language it is programmed for.

- Misconception: Speech recognition only works with pre-programmed commands or phrases. Correct Viewpoint: Modern speech recognition systems are capable of understanding conversational language as well as specific commands or phrases that have been programmed into them by developers.

Speech Recognition

What Is Speech Recognition?

Speech recognition is the technology that allows a computer to recognize human speech and process it into text. It’s also known as automatic speech recognition (ASR), speech-to-text, or computer speech recognition.

Speech recognition systems rely on technologies like artificial intelligence (AI) and machine learning (ML) to learn from large samples of speech, including different languages, accents, and dialects. AI is used to identify patterns of speech, words, and language to transcribe them into a written format.

In this blog post, we’ll take a deeper dive into speech recognition and look at how it works, its real-world applications, and how platforms like aiOla are using it to change the way we work.

Basic Speech Recognition Concepts

To start understanding speech recognition and all its applications, we need to first look at what it is and isn’t. While speech recognition is more than just the sum of its parts, it’s important to look at each of the parts that contribute to this technology to better grasp how it can make a real impact. Let’s take a look at some common concepts.

Speech Recognition vs. Speech Synthesis

Unlike speech recognition, which converts spoken language into a written format through a computer, speech synthesis does the same in reverse. In other words, speech synthesis is the creation of artificial speech derived from a written text, where a computer uses an AI-generated voice to simulate spoken language. For example, think of the language voice assistants like Siri or Alexa use to communicate information.

Phonetics and Phonology

Phonetics studies the physical sound of human speech, such as its acoustics and articulation. Alternatively, phonology looks at the abstract representation of sounds in a language including their patterns and how they’re organized. These two concepts need to be carefully weighed for speech AI algorithms to understand sound and language as a human might.

Acoustic Modeling

In acoustic modeling, the acoustic characteristics of audio and speech are analyzed. In speech recognition systems, this process is essential because it captures the audio features of each word, such as its frequency content, its duration, and the sounds it contains.

Language Modeling

Language modeling algorithms look at details like the likelihood of word sequences in a language. This type of modeling helps make speech recognition systems more accurate as it mimics real spoken language by looking at the probability of word combinations in phrases.
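As a rough illustration of the idea, the sketch below estimates word-pair (bigram) probabilities from a tiny made-up corpus and uses them to score two candidate transcriptions. The corpus, the function names, and the add-one smoothing are assumptions chosen for this example, not a description of any particular product's language model.

```python
from collections import Counter

def train_bigram(sentences):
    """Count bigrams and their left-hand contexts over whitespace tokens."""
    contexts, bigrams, vocab = Counter(), Counter(), set()
    for sentence in sentences:
        tokens = ["<s>"] + sentence.lower().split()  # <s> marks sentence start
        vocab.update(tokens[1:])
        contexts.update(tokens[:-1])
        bigrams.update(zip(tokens, tokens[1:]))
    return contexts, bigrams, vocab

def sentence_score(sentence, contexts, bigrams, vocab):
    """Product of add-one smoothed bigram probabilities P(word | previous word)."""
    tokens = ["<s>"] + sentence.lower().split()
    score = 1.0
    for prev, word in zip(tokens, tokens[1:]):
        score *= (bigrams[(prev, word)] + 1) / (contexts[prev] + len(vocab))
    return score

# Hypothetical toy corpus, purely for illustration.
corpus = ["recognize speech", "recognize speech quickly", "wreck a nice beach"]
contexts, bigrams, vocab = train_bigram(corpus)

score_good = sentence_score("recognize speech", contexts, bigrams, vocab)
score_bad = sentence_score("recognize beach", contexts, bigrams, vocab)
print(score_good > score_bad)
```

The word pair that actually appears in the corpus scores higher than the similar-sounding alternative, which is how a language model nudges a recognizer toward plausible word sequences.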

Speaker-Dependent vs. Speaker-Independent Systems

A system that’s dependent on a speaker is trained on the unique voice and speech patterns of a specific user, meaning the system might be highly accurate for that individual but not as much for other people. By contrast, a system that’s independent of a speaker can recognize speech for any number of speakers, and while more versatile, may be slightly less accurate.

How Does Speech Recognition Work?

There are a few different stages to speech recognition, each one providing another layer to how language is processed by a computer. Here are the different steps that make up the process.

- First, raw audio input undergoes a process called preprocessing, where background noise is removed to enhance sound quality and make recognition more manageable.

- Next, the audio goes through feature extraction, where algorithms identify distinct characteristics of sounds and words.

- Then, these extracted features go through acoustic modeling, which, as we described earlier, is the stage where the system matches the features to the most likely sounds and words. Acoustic models are trained on extensive datasets, allowing them to learn the acoustic patterns of different spoken words.

- At the same time, language modeling looks at the structure and probability of words in a sequence, which helps provide context.

- After this, the output goes into a decoding stage, where the speech recognition system matches the extracted features against the acoustic and language models to determine the most likely word sequence.

- Finally, the audio and corresponding textual output go through post-processing, which refines the output by correcting errors and improving coherence to create a more accurate transcription.

When it comes to advanced systems, all of these stages are done nearly instantaneously, making this process almost invisible to the average user. All of these stages together have made speech recognition a highly versatile tool that can be used in many different ways, from virtual assistants to transcription services and beyond.

Types of Speech Recognition Systems

Speech recognition technology is used in many different ways today, transforming the way humans and machines interact and work together. From professional settings to helping us make our lives a little easier, this technology can take on many forms. Here are some of them.

Virtual Assistants

In 2022, 62% of US adults used a voice assistant on various mobile devices. Siri, Google Assistant, and Alexa are all examples of speech recognition in our daily lives. These applications respond to vocal commands and can interact with humans through natural language in order to complete tasks like sending messages, answering questions, or setting reminders.

Voice Search

Search engines like Google can be searched using voice instead of typing in a query, often with voice assistants. This allows users to conveniently search for a quick answer without sorting through content when they need to be hands-free, like when driving or multitasking. This technology has become so popular over the last few years that now 50% of US-based consumers use voice search every single day.

Transcription Services

Speech recognition has completely changed the transcription industry. It has enabled transcription services to automate the process of turning speech into text, increasing efficiency in many fields like education, legal services, healthcare, and even journalism.

Accessibility

With speech recognition, technologies that may have seemed out of reach are now accessible to people with disabilities. For example, for people with motor impairments or who are visually impaired, AI voice-to-text technology can help with the hands-free operation of things like keyboards, writing assistance for dictation, and voice commands to control devices.

Automotive Systems

Speech recognition is keeping drivers safer by giving them hands-free control over in-car features. Drivers can make calls, adjust the temperature, navigate, or even control the music by issuing voice commands to a speech-activated system, without ever removing their hands from the wheel.

How Does aiOla Use Speech Recognition?

aiOla’s AI-powered speech platform is revolutionizing the way certain industries work by bringing advanced speech recognition technology to companies in fields like aviation, fleet management, food safety, and manufacturing.

Traditionally, many processes in these industries were manual, forcing organizations to use a lot of time, budget, and resources to complete mission-critical tasks like inspections and maintenance. However, with aiOla’s advanced speech system, these otherwise labor and resource-intensive tasks can be reduced to a matter of minutes using natural language.

Rather than manually writing to record data during inspections, inspectors can speak about what they’re verifying and the data gets stored instantly. Similarly, through dissecting speech, aiOla can help with predictive maintenance of essential machinery, allowing food manufacturers to produce safer items and decrease downtime.

Since aiOla’s speech recognition platform understands over 100 languages and countless accents, dialects, and industry-specific jargon, the system is highly accurate and can help turn speech into action to go a step further and automate otherwise manual tasks.

Embracing Speech Recognition Technology

Looking ahead, we can only expect the technology that relies on speech recognition to improve and become more embedded into our day-to-day. Indeed, the market for this technology is expected to grow to $19.57 billion by 2030. Whether it’s refining virtual assistants, improving voice search, or applying speech recognition to new industries, this technology is here to stay and enhance our personal and professional lives.

aiOla, while also a relatively new technology, is already making waves in industries like manufacturing, fleet management, and food safety. Through technological advancements in speech recognition, we only expect aiOla’s capabilities to continue to grow and support a larger variety of businesses and organizations.

Schedule a demo with one of our experts to see how aiOla’s AI speech recognition platform works in action.

What is speech recognition software?

Speech recognition software is a technology that enables computers to convert speech into written words. This is done through algorithms that analyze audio signals along with AI, ML, and other technologies.

What is a speech recognition example?

A relatable example of speech recognition is asking a virtual assistant like Siri on a mobile device to check the day’s weather or set an alarm. While speech recognition can complete a lot more advanced tasks, this exemplifies how this technology is commonly used in everyday life.

What is speech recognition in AI?

Speech recognition in AI refers to how artificial intelligence processes are used to aid in recognizing voice and language using advanced models and algorithms trained on vast amounts of data.

What are some different types of speech recognition?

A few different types of speech recognition include speaker-dependent and speaker-independent systems, command and control systems, and continuous speech recognition.

What is the difference between voice recognition and speech recognition?

Speech recognition converts spoken language into text, while voice recognition works to identify a speaker’s unique vocal characteristics for authentication purposes. In essence, voice recognition is more tied to identity rather than transcription.


Essential Guide to Automatic Speech Recognition Technology

Over the past decade, AI-powered speech recognition systems have slowly become part of our everyday lives, from voice search to virtual assistants in contact centers, cars, hospitals, and restaurants. These speech recognition developments are made possible by deep learning advancements.

Developers across many industries now use automatic speech recognition (ASR) to increase business productivity, application efficiency, and even digital accessibility. This post discusses ASR, how it works, use cases, advancements, and more.

What is automatic speech recognition?

Speech recognition technology is capable of converting spoken language (an audio signal) into written text that is often used as a command.

Today’s most advanced software can accurately process varying language dialects and accents. For example, ASR is commonly seen in user-facing applications such as virtual agents, live captioning, and clinical note-taking. Accurate speech transcription is essential for these use cases.

Developers in the speech AI space also use alternative terminologies to describe speech recognition such as ASR, speech-to-text (STT), and voice recognition.

ASR is a critical component of speech AI, which is a suite of technologies designed to help humans converse with computers through voice.

Why natural language processing is used in speech recognition

Developers are often unclear about the role of natural language processing (NLP) models in the ASR pipeline. Aside from being applied in language models, NLP is also used to augment generated transcripts with punctuation and capitalization at the end of the ASR pipeline.

After the transcript is post-processed with NLP, the text is used for downstream language modeling tasks:

- Sentiment analysis

- Text analytics

- Text summarization

- Question answering

Speech recognition algorithms

Speech recognition algorithms can be implemented in a traditional way using statistical algorithms or by using deep learning techniques such as neural networks to convert speech into text.

Traditional ASR algorithms

Hidden Markov models (HMM) and dynamic time warping (DTW) are two examples of traditional statistical techniques for performing speech recognition.

Using a set of transcribed audio samples, an HMM is trained to predict word sequences by varying the model parameters to maximize the likelihood of the observed audio sequence.
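To make "the likelihood of the observed audio sequence" more concrete, here is a minimal sketch of the forward algorithm, which computes that likelihood for a small discrete HMM. The two states, the "lo"/"hi" observation symbols, and every probability below are invented for illustration. Real systems work with continuous acoustic features and learn these parameters from data, and the training step itself is not shown.

```python
from itertools import product

def forward_likelihood(obs, start_p, trans_p, emit_p):
    """Return P(obs) under a discrete HMM using the forward algorithm."""
    states = list(start_p)
    # alpha[s] = probability of the observations so far, ending in state s
    alpha = {s: start_p[s] * emit_p[s][obs[0]] for s in states}
    for symbol in obs[1:]:
        alpha = {
            s: emit_p[s][symbol] * sum(alpha[r] * trans_p[r][s] for r in states)
            for s in states
        }
    return sum(alpha.values())

# Hypothetical toy model: two hidden states, two observable symbols.
start_p = {"s1": 0.6, "s2": 0.4}
trans_p = {"s1": {"s1": 0.7, "s2": 0.3}, "s2": {"s1": 0.4, "s2": 0.6}}
emit_p = {"s1": {"lo": 0.9, "hi": 0.1}, "s2": {"lo": 0.2, "hi": 0.8}}

likelihood = forward_likelihood(["lo", "lo", "hi"], start_p, trans_p, emit_p)

# Sanity check: likelihoods of all length-3 sequences should sum to 1.
total = sum(
    forward_likelihood(list(seq), start_p, trans_p, emit_p)
    for seq in product(["lo", "hi"], repeat=3)
)
print(likelihood, total)
```

Training adjusts the start, transition, and emission tables so that this likelihood becomes as large as possible on the transcribed examples.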

DTW is a dynamic programming algorithm that finds the best possible word sequence by calculating the distance between time series: one representing the unknown speech and others representing the known words.
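The following is a minimal sketch of DTW used for isolated-word matching. It assumes each utterance has already been reduced to a one-dimensional sequence of numbers, and the "yes"/"no" templates are made-up values. Real systems compare sequences of feature vectors rather than single numbers.

```python
def dtw_distance(a, b):
    """Dynamic time warping distance between two numeric sequences."""
    inf = float("inf")
    n, m = len(a), len(b)
    # cost[i][j] = best alignment cost of a[:i] against b[:j]
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            step = abs(a[i - 1] - b[j - 1])
            cost[i][j] = step + min(
                cost[i - 1][j],      # a advances, b repeats
                cost[i][j - 1],      # b advances, a repeats
                cost[i - 1][j - 1],  # both advance
            )
    return cost[n][m]

def recognize(unknown, templates):
    """Pick the template word with the smallest DTW distance."""
    return min(templates, key=lambda word: dtw_distance(unknown, templates[word]))

# Hypothetical stored templates and a slower rendition of "yes".
templates = {"yes": [1, 3, 4, 3, 1], "no": [4, 2, 1, 1, 2]}
unknown = [1, 1, 3, 3, 4, 3, 1]

print(recognize(unknown, templates))
```

Because the alignment may repeat elements, the stretched input still matches its template exactly, which is the property that makes DTW tolerant of differences in speaking rate.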

Deep learning ASR algorithms

For the last few years, developers have been interested in deep learning for speech recognition because statistical algorithms are less accurate. In fact, deep learning algorithms work better at understanding dialects, accents, context, and multiple languages, and they transcribe accurately even in noisy environments.

Some of the most popular state-of-the-art speech recognition acoustic models are QuartzNet, Citrinet, and Conformer. In a typical speech recognition pipeline, you can choose and switch any acoustic model that you want based on your use case and performance.

Implementation tools for deep learning models

Several tools are available for developing deep learning speech recognition models and pipelines, including Kaldi, Mozilla DeepSpeech, NVIDIA NeMo, NVIDIA Riva, NVIDIA TAO Toolkit, and services from Google, Amazon, and Microsoft.

Kaldi, DeepSpeech, and NeMo are open-source toolkits that help you build speech recognition models. TAO Toolkit and Riva are closed-source SDKs that help you develop customizable pipelines that can be deployed in production.

Cloud service providers like Google, AWS, and Microsoft offer generic services that you can easily plug and play with.

Deep learning speech recognition pipeline

An ASR pipeline consists of the following components:

- Spectrogram generator that converts raw audio to spectrograms.

- Acoustic model that takes the spectrograms as input and outputs a matrix of probabilities over characters over time.

- Decoder (optionally coupled with a language model) that generates possible sentences from the probability matrix.

- Punctuation and capitalization model that formats the generated text for easier human consumption.

A typical deep learning pipeline for speech recognition includes the following components:

- Data preprocessing

- Neural acoustic model

- Decoder (optionally coupled with an n-gram language model)

- Punctuation and capitalization model

Figure 1 shows an example of a deep learning speech recognition pipeline.

Datasets are essential in any deep learning application. Neural networks are loosely modeled on the human brain: the more data you use to teach the model, the more it learns. The same is true for the speech recognition pipeline.

A few popular speech recognition datasets are:

- LibriSpeech

- Fisher English Training Speech

- Mozilla Common Voice (MCV)

- 2000 HUB 5 English Evaluation Speech

- AN4 (includes recordings of people spelling out addresses and names)

- AISHELL-1/AISHELL-2 Mandarin speech corpus

Data processing is the first step. It includes data preprocessing and augmentation techniques such as speed/time/noise/impulse perturbation and time stretch augmentation, fast Fourier transforms (FFT) using windowing, and normalization techniques.

For example, in Figure 2, the mel spectrogram is generated from a raw audio waveform after applying FFT using the windowing technique.
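As a rough sketch of the windowing step, the code below slices a signal into overlapping frames, applies a Hann window to each, and computes a power spectrum per frame. It uses a naive discrete Fourier transform from the standard library instead of an optimized FFT, and the frame length, hop size, sample rate, and test tone are all assumptions for the example. It also stops short of a full mel filterbank and only shows the commonly used Hz-to-mel conversion formula.

```python
import cmath
import math

def hann(n):
    """Symmetric Hann window of length n."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / (n - 1)) for i in range(n)]

def power_spectrum(frame):
    """Naive DFT (O(n^2)); returns squared magnitudes for bins 0..n/2."""
    n = len(frame)
    spectrum = []
    for k in range(n // 2 + 1):
        acc = sum(frame[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        spectrum.append(abs(acc) ** 2)
    return spectrum

def spectrogram(signal, frame_len=64, hop=32):
    """One power spectrum per overlapping, windowed frame."""
    window = hann(frame_len)
    frames = []
    for start in range(0, len(signal) - frame_len + 1, hop):
        frame = [signal[start + i] * window[i] for i in range(frame_len)]
        frames.append(power_spectrum(frame))
    return frames

def hz_to_mel(hz):
    """Common Hz-to-mel conversion formula."""
    return 2595.0 * math.log10(1.0 + hz / 700.0)

# Hypothetical input: a 1 kHz tone sampled at 8 kHz.
sample_rate = 8000
signal = [math.sin(2 * math.pi * 1000 * t / sample_rate) for t in range(512)]

spec = spectrogram(signal)
peak_bin = max(range(len(spec[0])), key=lambda k: spec[0][k])
peak_hz = peak_bin * sample_rate / 64
print(len(spec), peak_hz, round(hz_to_mel(peak_hz)))
```

Each row of `spec` is one column of the spectrogram image. A mel spectrogram would additionally pool these frequency bins into bands spaced evenly on the mel scale.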

We can also use perturbation techniques to augment the training dataset. Figures 3 and 4 represent techniques like noise perturbation and masking being used to increase the size of the training dataset in order to avoid problems like overfitting.
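The sketch below illustrates two such augmentations under simple assumptions: adding Gaussian noise to a waveform at a chosen signal-to-noise ratio, and zeroing out a run of spectrogram frames. The function names, the 10 dB target, and the toy inputs are hypothetical, and toolkits implement these ideas with more options than shown here.

```python
import math
import random

def add_noise(signal, snr_db, seed=0):
    """Return a copy of signal with white Gaussian noise at the given SNR."""
    rng = random.Random(seed)  # seeded so the example is repeatable
    signal_power = sum(x * x for x in signal) / len(signal)
    noise_power = signal_power / (10 ** (snr_db / 10))
    sigma = math.sqrt(noise_power)
    return [x + rng.gauss(0.0, sigma) for x in signal]

def time_mask(frames, start, width):
    """Zero out `width` consecutive spectrogram frames beginning at `start`."""
    return [
        [0.0] * len(frame) if start <= i < start + width else list(frame)
        for i, frame in enumerate(frames)
    ]

def measured_snr_db(clean, noisy):
    """Signal-to-noise ratio actually achieved, in decibels."""
    signal_power = sum(c * c for c in clean)
    noise_power = sum((n - c) ** 2 for c, n in zip(clean, noisy))
    return 10 * math.log10(signal_power / noise_power)

# Hypothetical clean signal: a 440 Hz tone at 8 kHz.
clean = [math.sin(2 * math.pi * 440 * t / 8000) for t in range(4000)]
noisy = add_noise(clean, snr_db=10)
achieved = measured_snr_db(clean, noisy)

masked = time_mask([[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]], start=1, width=1)
print(round(achieved, 1), masked)
```

Each augmented copy is paired with the original transcript, so the model sees more varied audio without any extra labeling.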

The output of the data preprocessing stage is a spectrogram/mel spectrogram, which is a visual representation of the strength of the audio signal over time.

Mel spectrograms are then fed into the next stage: a neural acoustic model. QuartzNet, Citrinet, ContextNet, Conformer-CTC, and Conformer-Transducer are examples of cutting-edge neural acoustic models. Multiple ASR models exist for several reasons, such as the need for real-time performance, higher accuracy, memory size, and compute cost for your use case.

However, Conformer-based models are becoming more popular due to their improved accuracy and ability to comprehend. The acoustic model returns the probability of characters/words at each time stamp.

Figure 5 shows the output of the acoustic model, with time stamps.

The acoustic model’s output is fed into the decoder along with the language model. Decoders include beam search and greedy decoders, and language model options include n-gram language models, KenLM, and neural scoring. The decoder generates the top candidate words, which are then passed to the language model to predict the correct sentence.

In Figure 6, the decoder selects the next best word based on the probability score. Based on the final highest score, the correct word or sentence is selected and sent to the punctuation and capitalization model.
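A greedy decoder can be sketched in a few lines. The version below assumes a CTC-style acoustic model, where one output index is reserved for a "blank" symbol and repeated characters are collapsed. The alphabet and the probability matrix are invented for illustration, and no language model is involved.

```python
def greedy_ctc_decode(prob_matrix, alphabet, blank=0):
    """Pick the most likely symbol per time step, then collapse repeats and blanks."""
    best_path = [max(range(len(row)), key=row.__getitem__) for row in prob_matrix]
    decoded = []
    previous = None
    for index in best_path:
        # Keep a symbol only when it differs from the previous step and is not blank.
        if index != previous and index != blank:
            decoded.append(alphabet[index])
        previous = index
    return "".join(decoded)

# Hypothetical alphabet; index 0 is the CTC blank.
alphabet = ["_", "c", "a", "t"]

# One row per time step, one column per symbol (made-up probabilities).
prob_matrix = [
    [0.10, 0.80, 0.05, 0.05],  # c
    [0.10, 0.70, 0.10, 0.10],  # c (repeat, collapsed)
    [0.90, 0.05, 0.03, 0.02],  # blank
    [0.10, 0.10, 0.70, 0.10],  # a
    [0.10, 0.05, 0.05, 0.80],  # t
    [0.20, 0.05, 0.05, 0.70],  # t (repeat, collapsed)
]

text = greedy_ctc_decode(prob_matrix, alphabet)
print(text)
```

A beam search decoder keeps several candidate paths at each step instead of only the single best one, which gives a language model the chance to rescore them.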

The ASR pipeline generates text with no punctuation or capitalization.

Finally, a punctuation and capitalization model is used to improve the text quality for better readability. Bidirectional Encoder Representations from Transformers (BERT) models are commonly used to generate punctuated text.

Figure 7 shows a simple example of a before-and-after punctuation and capitalization model.

Speech recognition industry impact

There are many unique applications for ASR. For example, speech recognition could help industries such as finance, telecommunications, and unified communications as a service (UCaaS) to improve customer experience, operational efficiency, and return on investment (ROI).

Speech recognition is applied in the finance industry for applications such as call center agent assist and trade floor transcripts. ASR is used to transcribe conversations between customers and call center agents or trade floor agents. The generated transcriptions can then be analyzed and used to provide real-time recommendations to agents. This contributes to an 80% reduction in post-call time.

Furthermore, the generated transcripts are used for downstream tasks:

- Intent and entity recognition

Telecommunications

Contact centers are critical components of the telecommunications industry. With contact center technology, you can reimagine the telecommunications customer center, and speech recognition helps with that.

As previously discussed in the finance call center use case, ASR is used in telecom contact centers to transcribe conversations between customers and contact center agents, analyze them, and make recommendations to agents in real time. T-Mobile uses ASR for quick customer resolution, for example.

Unified communications as a service

COVID-19 increased demand for UCaaS solutions, and vendors in the space began focusing on the use of speech AI technologies such as ASR to create more engaging meeting experiences.

For example, ASR can be used to generate live captions in video conferencing meetings. Captions generated can then be used for downstream tasks such as meeting summaries and identifying action items in notes.

Future of ASR technology

Speech recognition is not as easy as it sounds. Developing speech recognition is full of challenges, ranging from accuracy to customization for your use case to real-time performance. On the other hand, businesses and academic institutions are racing to overcome some of these challenges and advance the use of speech recognition capabilities.

ASR challenges

Some of the challenges in developing and deploying speech recognition pipelines in production include the following:

- Lack of tools and SDKs that offer state-of-the-art (SOTA) ASR models makes it difficult for developers to take advantage of the best speech recognition technology.

- Limited customization capabilities make it hard for developers to fine-tune on domain-specific and context-specific jargon, multiple languages, dialects, and accents so that applications understand and speak like their users.

- Restricted deployment support; for example, depending on the use case, the software should be capable of being deployed in any cloud, on-premises, edge, and embedded.

- Real-time speech recognition pipelines; for instance, in a call center agent assist use case, we cannot wait several seconds for conversations to be transcribed before using them to empower agents.

For more information about the major pain points that developers face when adding speech-to-text capabilities to applications, see Solving Automatic Speech Recognition Deployment Challenges.

ASR advancements

Numerous advancements in speech recognition are occurring on both the research and software development fronts. To begin, research has resulted in the development of several new cutting-edge ASR architectures, E2E speech recognition models, and self-supervised or unsupervised training techniques.

On the software side, there are a few tools that enable quick access to SOTA models, and then there are different sets of tools that enable the deployment of models as services in production.

Key takeaways

Speech recognition continues to grow in adoption due to advancements in deep learning-based algorithms that have made ASR as accurate as human recognition. Also, breakthroughs like multilingual ASR help companies make their apps available worldwide, and moving algorithms from cloud to on-device saves money, protects privacy, and speeds up inference.

NVIDIA offers Riva, a speech AI SDK, to address several of the challenges discussed above. With Riva, you can quickly access the latest SOTA research models tailored for production purposes. You can customize these models to your domain and use case, deploy on any cloud, on-premises, edge, or embedded, and run them in real-time for engaging natural interactions.

Learn how your organization can benefit from speech recognition skills with the free ebook, Building Speech AI Applications.


Speech recognition software

by Chris Woodford . Last updated: August 17, 2023.

It's just as well people can understand speech. Imagine if you were like a computer: friends would have to "talk" to you by prodding away at a plastic keyboard connected to your brain by a long, curly wire. If you wanted to say "hello" to someone, you'd have to reach out, chatter your fingers over their keyboard, and wait for their eyes to light up; they'd have to do the same to you. Conversations would be a long, slow, elaborate nightmare: a silent dance of fingers on plastic; strange, abstract, and remote. We'd never put up with such clumsiness as humans, so why do we talk to our computers this way?

Scientists have long dreamed of building machines that can chatter and listen just like humans. But although computerized speech recognition has been around for decades, and is now built into most smartphones and PCs, few of us actually use it. Why? Possibly because we never even bother to try it out, working on the assumption that computers could never pull off a trick so complex as understanding the human voice. It's certainly true that speech recognition is a complex problem that's challenged some of the world's best computer scientists, mathematicians, and linguists. How well are they doing at cracking the problem? Will we all be chatting to our PCs one day soon? Let's take a closer look and find out!

Photo: A court reporter dictates notes into a laptop with a noise-cancelling microphone and speech-recognition software. Photo by Micha Pierce courtesy of US Marine Corps and DVIDS.

What is speech?

Language sets people far above our creeping, crawling animal friends. While the more intelligent creatures, such as dogs and dolphins, certainly know how to communicate with sounds, only humans enjoy the rich complexity of language. With just a couple of dozen letters, we can build any number of words (most dictionaries contain tens of thousands) and express an infinite number of thoughts.

Photo: Speech recognition has been popping up all over the place for quite a few years now. Even my old iPod Touch (dating from around 2012) has a built-in "voice control" program that lets you pick out music just by saying "Play albums by U2," or whatever band you're in the mood for.

When we speak, our voices generate little sound packets called phones (which correspond to the sounds of letters or groups of letters in words); so speaking the word cat produces phones that correspond to the sounds "c," "a," and "t." Although you've probably never heard of these kinds of phones before, you might well be familiar with the related concept of phonemes: simply speaking, phonemes are the basic LEGO™ blocks of sound that all words are built from. Although the difference between phones and phonemes is complex and can be very confusing, this is one "quick-and-dirty" way to remember it: phones are actual bits of sound that we speak (real, concrete things), whereas phonemes are ideal bits of sound we store (in some sense) in our minds (abstract, theoretical sound fragments that are never actually spoken).

Computers and computer models can juggle around with phonemes, but the real bits of speech they analyze are always phones. When we listen to speech, our ears catch phones flying through the air and our leaping brains flip them back into words, sentences, thoughts, and ideas, so quickly that we often know what people are going to say before the words have fully fled from their mouths. Instant, easy, and quite dazzling, our amazing brains make this seem like a magic trick. And it's perhaps because listening seems so easy to us that we think computers (in many ways even more amazing than brains) should be able to hear, recognize, and decode spoken words as well. If only it were that simple!

Why is speech so hard to handle?

The trouble is, listening is much harder than it looks (or sounds): there are all sorts of different problems going on at the same time... When someone speaks to you in the street, there's the sheer difficulty of separating their words (what scientists would call the acoustic signal) from the background noise, especially in something like a cocktail party, where the "noise" is similar speech from other conversations. When people talk quickly, and run all their words together in a long stream, how do we know exactly when one word ends and the next one begins? (Did they just say "dancing and smile" or "dance, sing, and smile"?) There's the problem of how everyone's voice is a little bit different, and the way our voices change from moment to moment. How do our brains figure out that a word like "bird" means exactly the same thing when it's trilled by a ten-year-old girl or boomed by her forty-year-old father? What about words like "red" and "read" that sound identical but mean totally different things (homophones, as they're called)? How does our brain know which word the speaker means? What about sentences that are misheard to mean radically different things? There's the age-old military example of "send reinforcements, we're going to advance" being misheard for "send three and fourpence, we're going to a dance" (and all of us can probably think of song lyrics we've hilariously misunderstood the same way; I always chuckle when I hear Kate Bush singing about "the cattle burning over your shoulder"). On top of all that stuff, there are issues like syntax (the grammatical structure of language) and semantics (the meaning of words) and how they help our brain decode the words we hear, as we hear them. Weighing up all these factors, it's easy to see that recognizing and understanding spoken words in real time (as people speak to us) is an astonishing demonstration of blistering brainpower.

It shouldn't surprise or disappoint us that computers struggle to pull off the same dazzling tricks as our brains; it's quite amazing that they get anywhere near!

Photo: Using a headset microphone like this makes a huge difference to the accuracy of speech recognition: it reduces background sound, making it much easier for the computer to separate the signal (the all-important words you're speaking) from the noise (everything else).

How do computers recognize speech?

Speech recognition is one of the most complex areas of computer science, partly because it's interdisciplinary: it involves a mixture of extremely complex linguistics, mathematics, and computing itself. If you read through some of the technical and scientific papers that have been published in this area (a few are listed in the references below), you may well struggle to make sense of the complexity. My objective is to give a rough flavor of how computers recognize speech, so, without any apology whatsoever, I'm going to simplify hugely and miss out most of the details.

Broadly speaking, there are four different approaches a computer can take if it wants to turn spoken sounds into written words:

1: Simple pattern matching

Ironically, the simplest kind of speech recognition isn't really anything of the sort. You'll have encountered it if you've ever phoned an automated call center and been answered by a computerized switchboard. Utility companies often have systems like this that you can use to leave meter readings, and banks sometimes use them to automate basic services like balance inquiries, statement orders, checkbook requests, and so on. You simply dial a number, wait for a recorded voice to answer, then either key in or speak your account number before pressing more keys (or speaking again) to select what you want to do. Crucially, all you ever get to do is choose one option from a very short list, so the computer at the other end never has to do anything as complex as parsing a sentence (splitting a string of spoken sound into separate words and figuring out their structure), much less trying to understand it; it needs no knowledge of syntax (language structure) or semantics (meaning). In other words, systems like this aren't really recognizing speech at all: they simply have to be able to distinguish between ten different sound patterns (the spoken words zero through nine) either using the bleeping sounds of a Touch-Tone phone keypad (technically called DTMF) or the spoken sounds of your voice.

From a computational point of view, there's not a huge difference between recognizing phone tones and spoken numbers "zero", "one," "two," and so on: in each case, the system could solve the problem by comparing an entire chunk of sound to similar stored patterns in its memory. It's true that there can be quite a bit of variability in how different people say "three" or "four" (they'll speak in a different tone, more or less slowly, with different amounts of background noise) but the ten numbers are sufficiently different from one another for this not to present a huge computational challenge. And if the system can't figure out what you're saying, it's easy enough for the call to be transferred automatically to a human operator.
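To give a feel for how little "recognition" the keypad case needs, here is a minimal sketch that identifies a DTMF key by measuring the energy at each of the standard keypad frequencies and picking the strongest row and column tone. It uses the Goertzel algorithm, a common choice for this job that is brought in here for illustration. The sample rate, tone length, and helper names are assumptions.

```python
import math

ROW_FREQS = [697, 770, 852, 941]   # standard DTMF row tones (Hz)
COL_FREQS = [1209, 1336, 1477]     # standard DTMF column tones (Hz)
KEYPAD = ["123", "456", "789", "*0#"]

def goertzel_power(samples, freq, sample_rate):
    """Signal power at one target frequency (Goertzel algorithm)."""
    coeff = 2 * math.cos(2 * math.pi * freq / sample_rate)
    s_prev = s_prev2 = 0.0
    for x in samples:
        s = x + coeff * s_prev - s_prev2
        s_prev2, s_prev = s_prev, s
    return s_prev2 ** 2 + s_prev ** 2 - coeff * s_prev * s_prev2

def detect_key(samples, sample_rate=8000):
    """Return the keypad character whose row and column tones are strongest."""
    row = max(range(4), key=lambda i: goertzel_power(samples, ROW_FREQS[i], sample_rate))
    col = max(range(3), key=lambda i: goertzel_power(samples, COL_FREQS[i], sample_rate))
    return KEYPAD[row][col]

def make_tone(key, sample_rate=8000, n=400):
    """Synthesize a clean DTMF tone for testing (hypothetical helper)."""
    row = next(r for r, line in enumerate(KEYPAD) if key in line)
    col = KEYPAD[row].index(key)
    f1, f2 = ROW_FREQS[row], COL_FREQS[col]
    return [
        math.sin(2 * math.pi * f1 * t / sample_rate)
        + math.sin(2 * math.pi * f2 * t / sample_rate)
        for t in range(n)
    ]

detected = detect_key(make_tone("5"))
print(detected)
```

Matching spoken digits works on the same principle of comparing an incoming chunk of sound against a handful of stored patterns, although voices vary far more than keypad tones do.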

Photo: Voice-activated dialing on cellphones is little more than simple pattern matching. You simply train the phone to recognize the spoken version of a name in your phonebook. When you say a name, the phone doesn't do any particularly sophisticated analysis; it simply compares the sound pattern with ones you've stored previously and picks the best match. No big deal—which explains why even an old phone like this 2001 Motorola could do it.

2: Pattern and feature analysis

Automated switchboard systems generally work very reliably because they have such tiny vocabularies: usually, just ten words representing the ten basic digits. The vocabulary that a speech system works with is sometimes called its domain. Early speech systems were often optimized to work within very specific domains, such as transcribing doctor's notes, computer programming commands, or legal jargon, which made the speech recognition problem far simpler (because the vocabulary was smaller and technical terms were explicitly trained beforehand). Much like humans, modern speech recognition programs are so good that they work in any domain and can recognize tens of thousands of different words. How do they do it?

Most of us have relatively large vocabularies, made from hundreds of common words ("a," "the," "but" and so on, which we hear many times each day) and thousands of less common ones (like "discombobulate," "crepuscular," "balderdash," or whatever, which we might not hear from one year to the next). Theoretically, you could train a speech recognition system to understand any number of different words, just like an automated switchboard: all you'd need to do would be to get your speaker to read each word three or four times into a microphone, until the computer generalized the sound pattern into something it could recognize reliably.

The trouble with this approach is that it's hugely inefficient. Why learn to recognize every word in the dictionary when all those words are built from the same basic set of sounds? No-one wants to buy an off-the-shelf computer dictation system only to find they have to read three or four times through a dictionary, training it up to recognize every possible word they might ever speak, before they can do anything useful. So what's the alternative? How do humans do it? We don't need to have seen every Ford, Chevrolet, and Cadillac ever manufactured to recognize that an unknown, four-wheeled vehicle is a car: having seen many examples of cars throughout our lives, our brains somehow store what's called a prototype (the generalized concept of a car, something with four wheels, big enough to carry two to four passengers, that creeps down a road) and we figure out that an object we've never seen before is a car by comparing it with the prototype. In much the same way, we don't need to have heard every person on Earth read every word in the dictionary before we can understand what they're saying; somehow we can recognize words by analyzing the key features (or components) of the sounds we hear. Speech recognition systems take the same approach.

The recognition process

Practical speech recognition systems start by listening to a chunk of sound (technically called an utterance) read through a microphone. The first step involves digitizing the sound (so the up-and-down, analog wiggle of the sound waves is turned into digital format, a string of numbers) using a piece of hardware (or software) called an analog-to-digital (A/D) converter (for a basic introduction, see our article on analog versus digital technology). The digital data is converted into a spectrogram (a graph showing how the component frequencies of the sound change in intensity over time) using a mathematical technique called a Fast Fourier Transform (FFT), then broken into a series of overlapping chunks called acoustic frames, each one typically lasting 1/25 to 1/50 of a second. These are digitally processed in various ways and analyzed to find the components of speech they contain. Assuming we've separated the utterance into words, and identified the key features of each one, all we have to do is compare what we have with a phonetic dictionary (a list of known words and the sound fragments or features from which they're made) and we can identify what's probably been said. (Probably is always the word in speech recognition: no-one but the speaker can ever know exactly what was said.)
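The framing and frequency-analysis steps can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not a production pipeline: the 8000 Hz sample rate, the 25-millisecond frame, the half-frame overlap, and the function names are all choices made for the example, and the sketch cuts the signal into frames first and then transforms each frame, which is one common ordering. To stay dependency-free it uses a plain (slow) discrete Fourier transform in place of a true FFT; the two give the same numbers, the FFT just gets there faster. A pure 440 Hz tone stands in for digitized speech.

```python
import cmath
import math

SAMPLE_RATE = 8000   # assumed sampling rate in Hz
FRAME_LEN = 200      # 200 samples = 25 ms, inside the 1/50 to 1/25 second range
HOP = 100            # each frame starts half a frame after the last, so frames overlap


def split_frames(samples, frame_len=FRAME_LEN, hop=HOP):
    """Cut the digitized signal into overlapping acoustic frames."""
    return [samples[start:start + frame_len]
            for start in range(0, len(samples) - frame_len + 1, hop)]


def magnitude_spectrum(frame):
    """Naive DFT: strength of each frequency component in one frame."""
    n = len(frame)
    return [abs(sum(x * cmath.exp(-2j * math.pi * k * i / n)
                    for i, x in enumerate(frame)))
            for k in range(n // 2 + 1)]


def spectrogram(samples):
    """One spectrum per frame: frequency content as it changes over time."""
    return [magnitude_spectrum(frame) for frame in split_frames(samples)]


# Stand-in for real digitized audio: 0.2 seconds of a 440 Hz tone.
signal = [math.sin(2 * math.pi * 440 * i / SAMPLE_RATE) for i in range(1600)]
spec = spectrogram(signal)

# The strongest frequency bin in each frame, converted back to hertz.
peak_bins = [row.index(max(row)) for row in spec]
peak_hz = [b * SAMPLE_RATE / FRAME_LEN for b in peak_bins]
print(len(spec), "frames; strongest frequency in first frame:", peak_hz[0], "Hz")
```

Real speech produces a much busier picture than a single tone, with several strong frequencies per frame that shift from one frame to the next; it is those patterns that later stages try to match to sounds.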

Seeing speech

In theory, since spoken languages are built from only a few dozen phonemes (English uses about 46, while Spanish has only about 24), you could recognize any possible spoken utterance just by learning to pick out phones (or similar key features of spoken language such as formants, which are prominent frequencies that can be used to help identify vowels). Instead of having to recognize the sounds of (maybe) 40,000 words, you'd only need to recognize the 46 basic component sounds (or however many there are in your language), though you'd still need a large phonetic dictionary listing the phonemes that make up each word. This method of analyzing spoken words by identifying phones or phonemes is often called the beads-on-a-string model: a chunk of unknown speech (the string) is recognized by breaking it into phones or bits of phones (the beads); figure out the phones and you can figure out the words.
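Here is a toy version of the beads-on-a-string idea, assuming the phones have already been identified correctly (which is the hard part). The tiny dictionary, the simplified ARPAbet-style phone symbols, and the `segment` function are all hypothetical and exist only for this sketch; a real phonetic dictionary would hold tens of thousands of entries.

```python
# Hypothetical mini phonetic dictionary: phone sequence -> words it could be.
PHONETIC_DICT = {
    ("HH", "AH", "L", "OW"): ["hello"],
    ("W", "ER", "L", "D"): ["world"],
    ("T", "UW"): ["two", "to", "too"],
    ("K", "AE", "T"): ["cat"],
    ("K", "AE", "T", "S"): ["cats"],
}
MAX_WORD_LEN = max(len(phones) for phones in PHONETIC_DICT)


def segment(phones):
    """Return every way to split the phone string into dictionary words.

    Each result is a list with one entry per word position; each entry is
    the list of words that share that pronunciation.
    """
    if not phones:
        return [[]]  # one valid way to split nothing: no words at all
    results = []
    for size in range(1, min(MAX_WORD_LEN, len(phones)) + 1):
        head = tuple(phones[:size])
        if head in PHONETIC_DICT:
            for rest in segment(phones[size:]):
                results.append([PHONETIC_DICT[head]] + rest)
    return results


print(segment("HH AH L OW W ER L D".split()))
print(segment("T UW K AE T S".split()))
```

The second call shows a limit of the approach: the phones alone cannot say whether the first word is "two," "to," or "too," so something beyond the dictionary is needed to choose between them.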

Most speech recognition programs get better as you use them because they learn as they go along using feedback you give them, either deliberately (by correcting mistakes) or by default (if you don't correct any mistakes, you're effectively saying everything was recognized perfectly—which is also feedback). If you've ever used a program like one of the Dragon dictation systems, you'll be familiar with the way you have to correct your errors straight away to ensure the program continues to work with high accuracy. If you don't correct mistakes, the program assumes it's recognized everything correctly, which means similar mistakes are even more likely to happen next time. If you force the system to go back and tell it which words it should have chosen, it will associate those corrected words with the sounds it heard—and do much better next time.

Screenshot: With speech dictation programs like Dragon NaturallySpeaking, shown here, it's important to go back and correct your mistakes if you want your words to be recognized accurately in future.

3: Statistical analysis

In practice, recognizing speech is much more complex than simply identifying phones and comparing them to stored patterns, and for a whole variety of reasons:

- Speech is extremely variable: different people speak in different ways (even though we're all saying the same words and, theoretically, they're all built from a standard set of phonemes).
- You don't always pronounce a certain word in exactly the same way; even if you did, the way you spoke a word (or even part of a word) might vary depending on the sounds or words that came before or after.
- As a speaker's vocabulary grows, the number of similar-sounding words grows too: the digits zero through nine all sound different when you speak them, but "zero" sounds like "hero," "one" sounds like "none," "two" could mean "two," "to," or "too"... and so on. So recognizing numbers is a tougher job for voice dictation on a PC, with a general 50,000-word vocabulary, than for an automated switchboard with a very specific, 10-word vocabulary containing only the ten digits.
- The more speakers a system has to recognize, the more variability it's going to encounter and the bigger the likelihood of making mistakes.

For something like an off-the-shelf voice dictation program (one that listens to your voice and types your words on the screen), simple pattern recognition is clearly going to be a bit hit and miss. The basic principle of recognizing speech by identifying its component parts certainly holds good, but we can do an even better job of it by taking into account how language really works. In other words, we need to use what's called a language model.

When people speak, they're not simply muttering a series of random sounds. Every word you utter depends on the words that come before or after. For example, unless you're a contrary kind of poet, the word "example" is much more likely to follow words like "for," "an," "better," "good", "bad," and so on than words like "octopus," "table," or even the word "example" itself. Rules of grammar make it unlikely that a noun like "table" will be spoken before another noun ("table example" isn't something we say) while—in English at least—adjectives ("red," "good," "clear") come before nouns and not after them ("good example" is far more probable than "example good"). If a computer is trying to figure out some spoken text and gets as far as hearing "here is a ******* example," it can be reasonably confident that ******* is an adjective and not a noun. So it can use the rules of grammar to exclude nouns like "table" and the probability of pairs like "good example" and "bad example" to make an intelligent guess. If it's already identified a "g" sound instead of a "b", that's an added clue.
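The word-pair part of this reasoning can be sketched as a very small "bigram" model, which simply counts how often one word follows another. Everything here is an assumption for illustration: the five-sentence corpus is made up, and the names `bigram_prob` and `score_fill` are hypothetical. Real language models are trained on vastly more text and use more sophisticated statistics.

```python
from collections import Counter

# Made-up training text; a real model would use millions of sentences.
CORPUS = [
    "here is a good example",
    "here is a bad example",
    "this is a good example",
    "put it on the table",
    "for example here is a table",
]

context_counts = Counter()  # how often each word is followed by anything
bigram_counts = Counter()   # how often each specific pair of words occurs
for sentence in CORPUS:
    words = sentence.split()
    context_counts.update(words[:-1])
    bigram_counts.update(zip(words, words[1:]))


def bigram_prob(prev, word):
    """Estimated probability that `word` comes straight after `prev`."""
    if context_counts[prev] == 0:
        return 0.0
    return bigram_counts[(prev, word)] / context_counts[prev]


def score_fill(before, candidate, after):
    """How plausible is `candidate` in the gap between two known words?"""
    return bigram_prob(before, candidate) * bigram_prob(candidate, after)


# "here is a ******* example": which candidate fits the gap best?
candidates = ["good", "bad", "table"]
best = max(candidates, key=lambda w: score_fill("a", w, "example"))
print(best)
```

In this toy corpus "table" is never followed by "example," so it scores zero, while "good" beats "bad" simply because it appears more often after "a." A recognizer would combine scores like these with the acoustic evidence (the "g" or "b" sound it thinks it heard).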

Virtually all modern speech recognition systems also use a bit of complex statistical hocus-pocus to help figure out what's being said. The probability of one phone following another, the probability of bits of silence occurring in between phones, and the likelihood of different words following other words are all factored in. Ultimately, the system builds what's called a hidden Markov model (HMM) of each speech segment, which is the computer's best guess at which beads are sitting on the string, based on all the things it's managed to glean from the sound spectrum and all the bits and pieces of phones and silence that it might reasonably contain. It's called a Markov model (or Markov chain), for Russian mathematician Andrey Markov, because it's a sequence of different things (bits of phones, words, or whatever) that change from one to the next with a certain probability. Confusingly, it's referred to as a "hidden" Markov model even though it's worked out in great detail and anything but hidden! "Hidden," in this case, simply means the contents of the model aren't observed directly but figured out indirectly from the sound spectrum. From the computer's viewpoint, speech recognition is always a probabilistic "best guess" and the right answer can never be known until the speaker either accepts or corrects the words that have been recognized. (Markov models can be processed with an extra bit of computer jiggery pokery called the Viterbi algorithm, but that's beyond the scope of this article.)
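For curious readers, here is a compact sketch of the Viterbi algorithm on a deliberately tiny hidden Markov model. The model is invented for illustration: two hidden states ("sil" for silence and "speech"), two possible observations ("quiet" and "loud"), and made-up probabilities. A real recognizer would have states for bits of phones and would observe acoustic features rather than two labels, and it would normally work with logarithms of probabilities to avoid very small numbers.

```python
def viterbi(observations, states, start_p, trans_p, emit_p):
    """Find the most probable sequence of hidden states for the observations."""
    # best[t][s] = (probability of the best path ending in state s at step t,
    #               the state that path was in at step t - 1)
    best = [{s: (start_p[s] * emit_p[s][observations[0]], None) for s in states}]
    for obs in observations[1:]:
        column = {}
        for s in states:
            prev, prob = max(
                ((p, best[-1][p][0] * trans_p[p][s]) for p in states),
                key=lambda pair: pair[1],
            )
            column[s] = (prob * emit_p[s][obs], prev)
        best.append(column)

    # Start from the most probable final state and follow the links backward.
    state = max(states, key=lambda s: best[-1][s][0])
    path = [state]
    for column in reversed(best[1:]):
        state = column[state][1]
        path.append(state)
    path.reverse()
    return path


# Toy model; every number below is an assumption made for the example.
STATES = ["sil", "speech"]
START_P = {"sil": 0.8, "speech": 0.2}
TRANS_P = {"sil": {"sil": 0.7, "speech": 0.3},
           "speech": {"sil": 0.2, "speech": 0.8}}
EMIT_P = {"sil": {"quiet": 0.9, "loud": 0.1},
          "speech": {"quiet": 0.2, "loud": 0.8}}

path = viterbi(["quiet", "loud", "loud", "quiet"], STATES, START_P, TRANS_P, EMIT_P)
print(path)
```

The hidden states are never seen directly; the algorithm infers the likeliest sequence from what was observed, which is the sense of "hidden" described above.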

4: Artificial neural networks

HMMs have dominated speech recognition since the 1970s—for the simple reason that they work so well. But they're by no means the only technique we can use for recognizing speech. There's no reason to believe that the brain itself uses anything like a hidden Markov model. It's much more likely that we figure out what's being said using dense layers of brain cells that excite and suppress one another in intricate, interlinked ways according to the input signals they receive from our cochleas (the parts of our inner ear that recognize different sound frequencies).

Back in the 1980s, computer scientists developed "connectionist" computer models that could mimic how the brain learns to recognize patterns, which became known as artificial neural networks (sometimes called ANNs). A few speech recognition scientists explored using neural networks, but the dominance and effectiveness of HMMs relegated alternative approaches like this to the sidelines. More recently, scientists have explored using ANNs and HMMs side by side and found they give significantly higher accuracy than HMMs used alone.

Artwork: Neural networks are hugely simplified, computerized versions of the brain (or a tiny part of it) that have inputs (where you feed in information), outputs (where results appear), and hidden units (connecting the two). If you train them with enough examples, they learn by gradually adjusting the strength of the connections between the different layers of units. Once a neural network is fully trained, if you show it an unknown example, it will attempt to recognize what it is based on the examples it's seen before.
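The idea of learning by adjusting connection strengths can be shown with the simplest possible case: a single artificial neuron (a perceptron) with two inputs and no hidden units. This is far simpler than the layered networks used for speech, and the training data is a made-up toy task (fire only when both input features are present), so treat it as a sketch of the principle and nothing more. The function names are hypothetical.

```python
def predict(weights, bias, inputs):
    """Fire (output 1) if the weighted sum of the inputs is above zero."""
    activation = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if activation > 0 else 0


def train_perceptron(examples, epochs=20):
    """Nudge each connection strength whenever the output is wrong."""
    weights = [0, 0]
    bias = 0
    for _ in range(epochs):
        for inputs, target in examples:
            error = target - predict(weights, bias, inputs)  # -1, 0, or +1
            weights = [w + error * x for w, x in zip(weights, inputs)]
            bias += error
    return weights, bias


# Toy training set: the output should be 1 only when both features are present.
EXAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

weights, bias = train_perceptron(EXAMPLES)
predictions = [predict(weights, bias, inputs) for inputs, _ in EXAMPLES]
print(predictions)
```

Each wrong answer shifts the weights a little in the direction that would have made the answer right. Networks with hidden units are trained on the same principle, although the way the corrections are shared out between layers is more involved.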

Speech recognition: a summary

Artwork: A summary of some of the key stages of speech recognition and the computational processes happening behind the scenes.

What can we use speech recognition for?

We've already touched on a few of the more common applications of speech recognition, including automated telephone switchboards and computerized voice dictation systems. But there are plenty more examples where those came from.

Many of us (whether we know it or not) have cellphones with voice recognition built into them. Back in the late 1990s, state-of-the-art mobile phones offered voice-activated dialing, where, in effect, you recorded a sound snippet for each entry in your phonebook, such as the spoken word "Home," or whatever, that the phone could then recognize when you spoke it in future. A few years later, systems like SpinVox became popular, helping mobile phone users make sense of voice messages by converting them automatically into text (although a sneaky BBC investigation eventually claimed that some of its state-of-the-art automated speech recognition was actually being done by humans in developing countries!).

Today's smartphones make speech recognition even more of a feature. Apple's Siri, Google Assistant ("Hey Google..."), and Microsoft's Cortana are smartphone "personal assistant apps" that listen to what you say, figure out what you mean, then attempt to do what you ask, whether it's looking up a phone number or booking a table at a local restaurant. They work by linking speech recognition to complex natural language processing (NLP) systems, so they can figure out not just what you say, but what you actually mean, and what you really want to happen as a consequence. Pressed for time and hurtling down the street, mobile users theoretically find this kind of system a boon, at least if you believe the hype in the TV advertisements that Google and Microsoft have been running to promote their systems. (Google quietly incorporated speech recognition into its search engine some time ago, so you can Google just by talking to your smartphone, if you really want to.) If you have one of the latest voice-powered electronic assistants, such as Amazon's Echo/Alexa or Google Home, you don't need a computer of any kind (desktop, tablet, or smartphone): you just ask questions or give simple commands in your natural language to a thing that resembles a loudspeaker... and it answers straight back.

Screenshot: When I asked Google "does speech recognition really work," it took it three attempts to recognize the question correctly.

Will speech recognition ever take off?