
Statistical Treatment of Data – Explained & Example

- By DiscoverPhDs

- September 8, 2020

‘Statistical treatment’ is when you apply a statistical method to a data set to draw meaning from it. Statistical treatment can be either descriptive statistics, which summarise the main features of the data (such as its centre, its spread and the relationships between variables), or inferential statistics, which use the collected sample data to test hypotheses and draw inferences about the wider population.

Introduction to Statistical Treatment in Research

Every research student, regardless of whether they are a biologist, computer scientist or psychologist, must have a basic understanding of statistical treatment if their study is to be reliable.

This is because designing experiments and collecting data are only a small part of conducting research. The other components, which are often not so well understood by new researchers, are the analysis, interpretation and presentation of the data. These are just as important, if not more so, because this is where meaning is extracted from the study.

What is Statistical Treatment of Data?

Statistical treatment of data is when you apply some form of statistical method to a data set to transform it from a group of meaningless numbers into meaningful output.

Statistical treatment of data involves the use of statistical methods such as:

- regression,

- conditional probability,

- standard deviation and

- distribution range.

These statistical methods allow us to investigate the statistical relationships within the data and to identify possible errors in the study.
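To make one of the listed methods concrete, here is a minimal Python sketch of conditional probability estimated from a table of counts. The counts, category names and the `conditional_probability` helper are invented for illustration and do not come from any study.

```python
# Hypothetical counts from a 2x2 table: exposure group by outcome.
counts = {
    ("exposed", "outcome"): 30,
    ("exposed", "no_outcome"): 70,
    ("unexposed", "outcome"): 10,
    ("unexposed", "no_outcome"): 90,
}

def conditional_probability(counts, outcome, given):
    """Estimate P(outcome | given) as count(given and outcome) / count(given)."""
    joint = counts[(given, outcome)]
    marginal = sum(n for (group, _), n in counts.items() if group == given)
    return joint / marginal

p_exposed = conditional_probability(counts, "outcome", "exposed")      # 30 / 100
p_unexposed = conditional_probability(counts, "outcome", "unexposed")  # 10 / 100
print(f"P(outcome | exposed) = {p_exposed:.2f}")
print(f"P(outcome | unexposed) = {p_unexposed:.2f}")
```

Comparing the two conditional probabilities is a first, descriptive look at whether the outcome is related to exposure; a formal test would come later.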

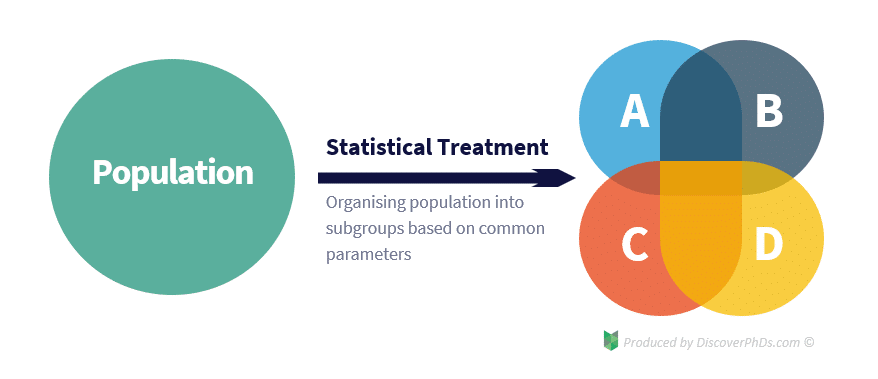

In addition to being able to identify trends, statistical treatment also allows us to organise and process our data in the first place. This is because when carrying out statistical analysis of our data, it is generally more useful to draw several conclusions for each subgroup within our population than to draw a single, more general conclusion for the whole population. However, to do this, we need to be able to classify the population into different subgroups so that we can later break down our data in the same way before analysing it.

Statistical Treatment Example – Quantitative Research

For a statistical treatment of data example, consider a medical study that is investigating the effect of a drug on the human population. As the drug can affect different people in different ways based on parameters such as gender, age and race, the researchers would want to group the data into different subgroups based on these parameters to determine how each one affects the effectiveness of the drug. Categorising the data in this way is an example of performing basic statistical treatment.
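A minimal Python sketch of this subgrouping step, assuming the data have already been collected as (subgroup, outcome) pairs. The age bands, scores and the `summarise_by_group` helper are hypothetical; a real study would use its own parameters and would follow the grouping with an appropriate statistical test.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical records: (age band, improvement score after taking the drug).
records = [
    ("18-40", 12.0), ("18-40", 15.0), ("18-40", 9.0),
    ("41-65", 7.0), ("41-65", 5.0), ("41-65", 6.0),
    ("65+", 3.0), ("65+", 4.0),
]

def summarise_by_group(records):
    """Group scores by subgroup label and return {label: (n, mean score)}."""
    groups = defaultdict(list)
    for label, score in records:
        groups[label].append(score)
    return {label: (len(scores), mean(scores)) for label, scores in groups.items()}

for label, (n, avg) in summarise_by_group(records).items():
    print(f"{label}: n={n}, mean improvement={avg:.1f}")
```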

Types of Errors

A fundamental part of statistical treatment is using statistical methods to identify possible outliers and errors. No matter how careful we are, all experiments are subject to inaccuracies resulting from two types of errors: systematic errors and random errors.

Systematic errors are errors associated with either the equipment being used to collect the data or with the method in which they are used. Random errors are errors that occur unknowingly or unpredictably in the experimental configuration, such as internal deformations within specimens or small voltage fluctuations in measurement testing instruments.
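The difference between the two error types can be illustrated with a small simulation. This is a sketch under simplifying assumptions: the true value is known, the systematic error is a constant offset (for example, a miscalibrated instrument), and the random error is Gaussian noise. The names `TRUE_VALUE` and `measure` are illustrative.

```python
import random
from statistics import fmean

TRUE_VALUE = 10.0  # the quantity being measured (assumed known for the simulation)

def measure(n, bias, noise_sd, rng):
    """Simulate n readings = true value + constant bias (systematic) + Gaussian noise (random)."""
    return [TRUE_VALUE + bias + rng.gauss(0.0, noise_sd) for _ in range(n)]

rng = random.Random(42)
for n in (5, 500, 50_000):
    readings = measure(n, bias=0.5, noise_sd=1.0, rng=rng)
    error = fmean(readings) - TRUE_VALUE
    print(f"n={n:>6}: mean={fmean(readings):.3f}, error={error:+.3f}")
```

As n grows, the error of the average settles near +0.5: averaging more readings shrinks the random error but cannot remove the systematic offset, which has to be found by checking equipment and method.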

These experimental errors, in turn, can lead to two types of conclusion errors: type I errors and type II errors. A type I error is a false positive which occurs when a researcher rejects a true null hypothesis. On the other hand, a type II error is a false negative which occurs when a researcher fails to reject a false null hypothesis.
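A simulation makes both definitions concrete: when the null hypothesis is true and we test at α = 0.05, roughly 5% of studies still reject it (type I errors), and when it is false, some studies fail to reject it (type II errors). The sketch assumes a two-sided z-test with a known standard deviation of 1, which is a simplification; the function names and the effect size of 0.3 are illustrative.

```python
import random
from statistics import NormalDist, fmean

def rejects_null(sample, mu0=0.0, sigma=1.0, alpha=0.05):
    """Two-sided z-test (sigma assumed known): True if H0 'mean == mu0' is rejected."""
    z = (fmean(sample) - mu0) / (sigma / len(sample) ** 0.5)
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return p_value < alpha

def rejection_rate(true_mean, n=30, trials=2000, seed=1):
    """Fraction of simulated studies (n normal observations each) that reject H0: mean == 0."""
    rng = random.Random(seed)
    hits = sum(
        rejects_null([rng.gauss(true_mean, 1.0) for _ in range(n)])
        for _ in range(trials)
    )
    return hits / trials

type_i = rejection_rate(true_mean=0.0)       # H0 true: every rejection is a false positive
type_ii = 1 - rejection_rate(true_mean=0.3)  # H0 false: every non-rejection is a false negative
print(f"type I rate ~ {type_i:.3f}, type II rate ~ {type_ii:.3f}")
```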


Research Paper Statistical Treatment of Data: A Primer

We can all agree that analyzing and presenting data effectively in a research paper is critical, yet often challenging.

This primer on statistical treatment of data will equip you with the key concepts and procedures to accurately analyze and clearly convey research findings.

You'll discover the fundamentals of statistical analysis and data management, the common quantitative and qualitative techniques, how to visually represent data, and best practices for writing the results - all framed specifically for research papers.


Introduction to Statistical Treatment in Research

Statistical analysis is a crucial component of both quantitative and qualitative research. Properly treating data enables researchers to draw valid conclusions from their studies. This primer provides an introductory guide to fundamental statistical concepts and methods for manuscripts.

Understanding the Importance of Statistical Treatment

Careful statistical treatment demonstrates the reliability of results and ensures findings are grounded in robust quantitative evidence. From determining appropriate sample sizes to selecting suitable analytical tests, statistical rigor adds credibility. Both quantitative and qualitative papers benefit from precise data handling.

Objectives of the Primer

This primer aims to equip researchers with best practices for:

Statistical tools to apply during different research phases

Techniques to manage, analyze, and present data

Methods to demonstrate the validity and reliability of measurements

By covering fundamental concepts ranging from descriptive statistics to measurement validity, it enables both novice and experienced researchers to incorporate proper statistical treatment.

Navigating the Primer: Key Topics and Audience

The primer spans introductory topics including:

Research planning and design

Data collection, management, analysis

Result presentation and interpretation

While useful for researchers at any career stage, earlier-career scientists with limited statistical exposure will find it particularly valuable as they prepare manuscripts.

How do you write a statistical method in a research paper?

Statistical methods are a critical component of research papers, allowing you to analyze, interpret, and draw conclusions from your study data. When writing the statistical methods section, you need to provide enough detail so readers can evaluate the appropriateness of the methods you used.

Here are some key things to include when describing statistical methods in a research paper:

Type of Statistical Tests Used

Specify the types of statistical tests performed on the data, including:

Parametric vs nonparametric tests

Descriptive statistics (means, standard deviations)

Inferential statistics (t-tests, ANOVA, regression, etc.)

Statistical significance level (often α = 0.05)

For example: We used t-tests and one-way ANOVA to compare means across groups, with statistical significance set at p < 0.05.
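The t statistic behind such a comparison is short enough to compute by hand. The sketch below is Student's two-sample t-test with pooled variance (equal variances assumed); the scores and the `pooled_t_statistic` helper are hypothetical. Turning t into a p-value requires the t distribution, which Python's standard library does not provide, so that step would normally be done in statistical software (for example SciPy's `scipy.stats.ttest_ind`).

```python
from statistics import mean, variance

def pooled_t_statistic(a, b):
    """Student's two-sample t statistic (equal variances assumed) and its degrees of freedom."""
    n1, n2 = len(a), len(b)
    # Pooled variance: weighted average of the two sample variances (n - 1 denominators).
    sp2 = ((n1 - 1) * variance(a) + (n2 - 1) * variance(b)) / (n1 + n2 - 2)
    se = (sp2 * (1 / n1 + 1 / n2)) ** 0.5
    return (mean(a) - mean(b)) / se, n1 + n2 - 2

treatment = [78, 74, 81, 77, 80]  # hypothetical scores
control = [71, 69, 73, 70, 72]
t, df = pooled_t_statistic(treatment, control)
print(f"t({df}) = {t:.2f}")  # t(8) = 4.95
```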

Analysis of Subgroups

If you examined subgroups or additional variables, describe the methods used for these analyses.

For example: We stratified data by gender and used chi-square tests to analyze differences between subgroups.
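For a 2x2 table (two subgroups by a yes/no outcome), the Pearson chi-square statistic can be sketched as below. With one degree of freedom the chi-square statistic is the square of a standard normal variable, so the p-value equals `erfc(sqrt(chi2 / 2))`. The counts are hypothetical, and this version applies no continuity correction (SciPy's `scipy.stats.chi2_contingency`, for comparison, applies Yates' correction to 2x2 tables by default).

```python
from math import erfc, sqrt

def chi_square_2x2(table):
    """Pearson chi-square statistic (no continuity correction) and p-value for a 2x2 table.

    table = [[a, b], [c, d]]; with 1 degree of freedom the p-value is erfc(sqrt(chi2 / 2)).
    """
    rows = [sum(r) for r in table]
    cols = [sum(c) for c in zip(*table)]
    total = sum(rows)
    chi2 = 0.0
    for i in range(2):
        for j in range(2):
            expected = rows[i] * cols[j] / total  # count expected if rows and columns are independent
            chi2 += (table[i][j] - expected) ** 2 / expected
    return chi2, erfc(sqrt(chi2 / 2))

# Hypothetical counts: rows = subgroup (e.g. gender), columns = responded / did not respond.
chi2, p = chi_square_2x2([[30, 20], [18, 32]])
print(f"chi2(1) = {chi2:.2f}, p = {p:.3f}")
```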

Software and Versions

List any statistical software packages used for analysis, including version numbers. Common programs include SPSS, SAS, R, and Stata.

For example: Data were analyzed using SPSS version 25 (IBM Corp, Armonk, NY).

The key is to give readers enough detail to assess the rigor and appropriateness of your statistical methods. The methods should align with your research aims and design. Keep explanations clear and concise using consistent terminology throughout the paper.

What are the five statistical treatments in research?

Five statistical treatments commonly used in academic research papers are:

Mean

The mean, or average, describes the central tendency of a dataset. It provides a single value that summarizes the center of a distribution of numbers. Calculating means allows researchers to characterize typical observations within a sample.

Standard Deviation

Standard deviation measures the amount of variability in a dataset. A low standard deviation indicates observations are clustered closely around the mean, while a high standard deviation signifies the data is more spread out. Reporting standard deviations helps readers contextualize means.
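Both measures are available in Python's standard `statistics` module. The sketch below uses made-up scores and reports them in the "M = …, SD = …" form often seen in papers. It also shows the sample (n - 1) versus population (n) standard deviation, a common source of small discrepancies between software packages.

```python
from statistics import mean, pstdev, stdev

scores = [4.0, 8.0, 6.0, 5.0, 7.0]  # hypothetical observations

m = mean(scores)
s = stdev(scores)        # sample standard deviation (divides by n - 1)
sigma = pstdev(scores)   # population standard deviation (divides by n)
print(f"M = {m:.2f}, SD = {s:.2f} (population SD = {sigma:.2f})")
# M = 6.00, SD = 1.58 (population SD = 1.41)
```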

Regression Analysis

Regression analysis models the relationship between independent and dependent variables. It generates an equation that predicts changes in the dependent variable based on changes in the independent variables. Regressions are useful for exploring hypothesized relationships between variables, although on their own they show association rather than causation.
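For a single predictor, the least-squares regression equation can be computed directly from means and sums of squares. This is a minimal sketch with hypothetical dose and response values; the `fit_line` name is illustrative, and real analyses would also report standard errors and check residuals.

```python
from statistics import mean

def fit_line(x, y):
    """Ordinary least-squares fit of y = intercept + slope * x for one predictor."""
    x_bar, y_bar = mean(x), mean(y)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    return intercept, slope

dose = [1, 2, 3, 4, 5]                  # hypothetical independent variable
response = [2.1, 3.9, 6.2, 7.8, 10.1]   # hypothetical dependent variable
b0, b1 = fit_line(dose, response)
print(f"response = {b0:.2f} + {b1:.2f} * dose")  # response = 0.05 + 1.99 * dose
```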

Hypothesis Testing

Hypothesis testing evaluates assumptions about population parameters based on statistics calculated from a sample. Common hypothesis tests include t-tests, ANOVA, and chi-squared tests. They quantify how likely a difference at least as large as the one observed would be if chance alone (the null hypothesis) were at work.
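The question "could chance alone produce this difference?" can be seen directly in a permutation test, sketched below. It is a swapped-in, distribution-free alternative to the tests named above, chosen because it needs nothing beyond Python's standard library. The group data are hypothetical.

```python
import random
from statistics import mean

def permutation_test(a, b, n_permutations=10_000, seed=0):
    """Two-sided permutation test for a difference in means.

    Returns the proportion of random relabellings whose absolute mean difference
    is at least as large as the observed one (an approximate p-value).
    """
    rng = random.Random(seed)
    observed = abs(mean(a) - mean(b))
    pooled = list(a) + list(b)
    extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)  # pretend the group labels carry no information
        diff = abs(mean(pooled[:len(a)]) - mean(pooled[len(a):]))
        if diff >= observed:
            extreme += 1
    # Add one to numerator and denominator so the estimate is never exactly zero.
    return (extreme + 1) / (n_permutations + 1)

group_a = [12, 15, 14, 16, 13]  # hypothetical scores
group_b = [9, 10, 8, 11, 10]
print(f"p ~ {permutation_test(group_a, group_b):.4f}")
```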

Sample Size Determination

Sample size calculations identify the minimum number of observations needed to detect effects of a given size at a desired statistical power. Appropriate sampling ensures studies can uncover true relationships within the constraints of resource limitations.
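A common textbook approximation for comparing two group means uses the normal distribution: n per group = 2(z₁₋α/₂ + z_power)² σ² / δ², where δ is the smallest difference worth detecting and σ the assumed common standard deviation. The sketch below implements that formula; the function name and inputs are illustrative. Dedicated power software, which uses the t distribution, typically gives a slightly larger n.

```python
from math import ceil
from statistics import NormalDist

def n_per_group(delta, sigma, alpha=0.05, power=0.80):
    """Approximate n per group to detect a mean difference `delta` (two-sided test,
    common SD `sigma`) using n = 2 * (z_{1-alpha/2} + z_{power})**2 * sigma**2 / delta**2.
    """
    z = NormalDist().inv_cdf
    n = 2 * (z(1 - alpha / 2) + z(power)) ** 2 * sigma ** 2 / delta ** 2
    return ceil(n)

print(n_per_group(delta=0.5, sigma=1.0))  # 63 per group under these assumptions
```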

These five statistical analysis methods form the backbone of most quantitative research processes. Correct application allows researchers to characterize data trends, model predictive relationships, and make probabilistic inferences regarding broader populations. Expertise in these techniques is fundamental for producing valid, reliable, and publishable academic studies.

How do you know what statistical treatment to use in research?

The selection of appropriate statistical methods for the treatment of data in a research paper depends on three key factors:

The Aim and Objective of the Study

The aim and objectives that the study seeks to achieve will determine the type of statistical analysis required.

Descriptive research presenting characteristics of the data may only require descriptive statistics like measures of central tendency (mean, median, mode) and dispersion (range, standard deviation).

Studies aiming to establish relationships or differences between variables need inferential statistics like correlation, t-tests, ANOVA, regression etc.

Predictive modeling research requires methods like regression, discriminant analysis, logistic regression etc.

Thus, clearly identifying the research purpose and objectives is the first step in planning appropriate statistical treatment.

Type and Distribution of Data

The type of data (categorical, numerical) and its distribution (normal, skewed) also guide the choice of statistical techniques.

Parametric tests have assumptions related to normality and homogeneity of variance.

Non-parametric methods are distribution-free and better suited for non-normal or categorical data.

Testing data distribution and characteristics is therefore vital.
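One quick screen of a distribution's shape is its skewness, sketched below as the moment coefficient g1 = m3 / m2^1.5 (0 for a perfectly symmetric sample, positive for a long right tail). This is only a rough check under that formula; formal normality tests such as Shapiro-Wilk (for example `scipy.stats.shapiro`) and plots such as histograms or Q-Q plots are the usual tools. The data are hypothetical.

```python
from statistics import mean

def skewness(data):
    """Moment coefficient of skewness g1 = m3 / m2**1.5 (0 for a symmetric sample)."""
    m = mean(data)
    m2 = sum((x - m) ** 2 for x in data) / len(data)
    m3 = sum((x - m) ** 3 for x in data) / len(data)
    return m3 / m2 ** 1.5

symmetric = [1, 2, 3, 4, 5]
right_skewed = [1, 1, 2, 2, 3, 10]
print(f"{skewness(symmetric):.2f}, {skewness(right_skewed):.2f}")
```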

Nature of Observations

Statistical methods also differ based on whether the observations are paired or unpaired.

Analyzing changes within one group requires paired tests like paired t-test, Wilcoxon signed-rank test etc.

Comparing between two or more independent groups needs unpaired tests like independent t-test, ANOVA, Kruskal-Wallis test etc.

Thus the nature of observations is pivotal in selecting suitable statistical analyses.
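Pairing matters because each participant acts as their own control: the paired t-test works on the within-subject differences. A minimal sketch with hypothetical before/after scores for the same five participants (the `paired_t_statistic` name is illustrative):

```python
from statistics import mean, stdev

def paired_t_statistic(before, after):
    """Paired t statistic: mean of within-subject differences divided by its standard error."""
    diffs = [post - pre for pre, post in zip(before, after)]
    n = len(diffs)
    return mean(diffs) / (stdev(diffs) / n ** 0.5), n - 1

before = [70, 65, 80, 75, 60]  # hypothetical scores, same participants measured twice
after = [74, 68, 83, 80, 62]
t, df = paired_t_statistic(before, after)
print(f"t({df}) = {t:.2f}")
```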

In summary, clearly defining the research objectives, testing the collected data, and understanding the observational units guides proper statistical treatment and interpretation.
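The three factors can be combined into a rough lookup, sketched below as a teaching aid rather than a rule. The function `suggest_test` and its categories are hypothetical; the Mann-Whitney U, McNemar, Friedman and repeated-measures ANOVA entries go beyond the tests named in the text, and real choices also depend on design details this sketch ignores.

```python
def suggest_test(outcome, groups, paired, normal):
    """Rough, hypothetical mapping from design features to a commonly used test.

    outcome: "numerical" or "categorical"; groups: number of groups compared (2 or more);
    paired: True if the same units are measured repeatedly;
    normal: True if parametric assumptions look reasonable.
    """
    if outcome == "categorical":
        return "McNemar test" if paired else "chi-square test"
    if groups == 2:
        if paired:
            return "paired t-test" if normal else "Wilcoxon signed-rank test"
        return "independent t-test" if normal else "Mann-Whitney U test"
    if paired:
        return "repeated-measures ANOVA" if normal else "Friedman test"
    return "one-way ANOVA" if normal else "Kruskal-Wallis test"

print(suggest_test("numerical", groups=3, paired=False, normal=False))  # Kruskal-Wallis test
```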

What are statistical techniques in a research paper?

Statistical methods are essential tools in scientific research papers. They allow researchers to summarize, analyze, interpret and present data in meaningful ways.

Some key statistical techniques used in research papers include:

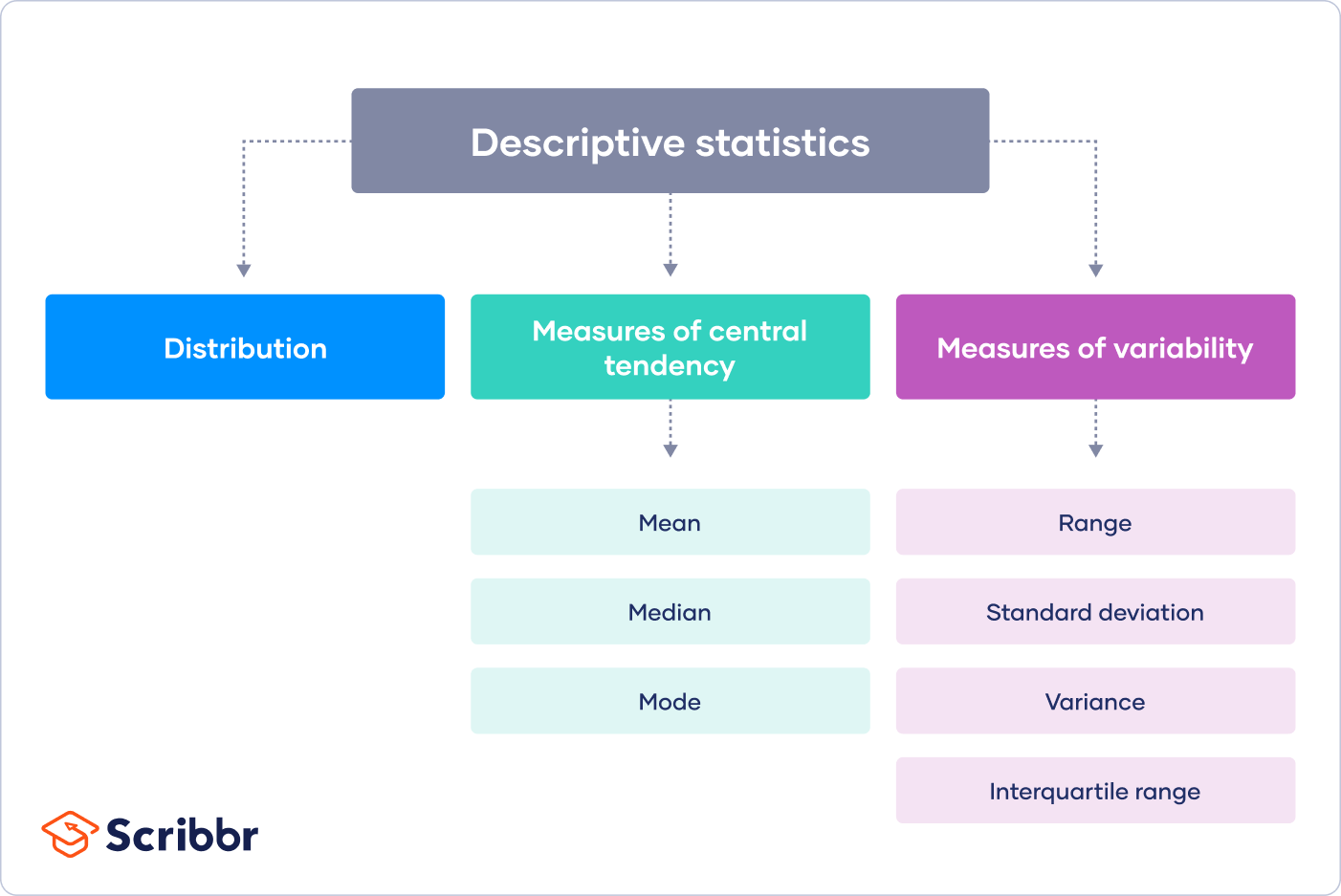

Descriptive statistics: These provide simple summaries of the sample and the measures. Common examples include measures of central tendency (mean, median, mode), measures of variability (range, standard deviation) and graphs (histograms, pie charts).

Inferential statistics: These help make inferences and predictions about a population from a sample. Common techniques include estimation of parameters, hypothesis testing, correlation and regression analysis.

Analysis of variance (ANOVA): This technique allows researchers to compare means across multiple groups and determine whether the differences between them are statistically significant.

Factor analysis: This technique identifies underlying relationships between observed variables and latent constructs. It reduces a large set of variables to a smaller number of factors.

Structural equation modeling: This technique estimates hypothesized (often causal) relationships among latent and observed variables. It is widely used for testing theoretical models in the social sciences.
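The ANOVA idea (is the variation between group means large relative to the variation within groups?) reduces to a single F statistic. A minimal one-way ANOVA sketch with three small hypothetical groups; obtaining the p-value from F requires the F distribution, normally supplied by statistical software.

```python
from statistics import mean

def one_way_anova_f(*groups):
    """One-way ANOVA F statistic with (between, within) degrees of freedom."""
    all_values = [x for g in groups for x in g]
    grand_mean = mean(all_values)
    group_means = [mean(g) for g in groups]
    k, n = len(groups), len(all_values)
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means))
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, group_means) for x in g)
    df_between, df_within = k - 1, n - k
    f = (ss_between / df_between) / (ss_within / df_within)
    return f, df_between, df_within

f, df1, df2 = one_way_anova_f([5, 6, 7], [8, 9, 10], [11, 12, 13])
print(f"F({df1}, {df2}) = {f:.1f}")  # F(2, 6) = 27.0
```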

Proper statistical treatment and presentation of data are crucial for the integrity of any quantitative research paper. Statistical techniques help establish validity, account for errors, test hypotheses, build models and derive meaningful insights from the research.

Fundamental Concepts and Data Management

Exploring basic statistical terms.

Understanding key statistical concepts is essential for effective research design and data analysis. This includes defining key terms like:

Statistics: The science of collecting, organizing, analyzing, and interpreting numerical data to draw conclusions or make predictions.

Variables: Characteristics or attributes of the study participants that can take on different values.

Measurement: The process of assigning numbers to variables based on a set of rules.

Sampling: Selecting a subset of a larger population to estimate characteristics of the whole population.

Data types: Quantitative (numerical) or qualitative (categorical) data.

Descriptive vs. inferential statistics: Descriptive statistics summarize data, while inferential statistics allow conclusions to be drawn from the sample about the larger population.

Ensuring Validity and Reliability in Measurement

When selecting measurement instruments, it is critical they demonstrate:

Validity: The extent to which the instrument measures what it intends to measure.

Reliability: The consistency of measurement over time and across raters.

Researchers should choose instruments aligned to their research questions and study methodology.
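Consistency "across raters" is often summarized with Cohen's kappa, which compares observed agreement between two raters with the agreement expected by chance: κ = (p_o - p_e) / (1 - p_e). Kappa is a swapped-in example of one reliability index, not the only option (test-retest reliability, for instance, is usually assessed with a correlation). The ratings below are hypothetical.

```python
from collections import Counter

def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa: agreement between two raters corrected for chance agreement."""
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    # Chance agreement: probability that both raters independently pick the same category.
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / n ** 2
    return (observed - expected) / (1 - expected)

a = ["yes", "yes", "no", "no", "yes", "no", "yes", "no"]
b = ["yes", "no", "no", "no", "yes", "no", "yes", "yes"]
print(f"kappa = {cohens_kappa(a, b):.2f}")  # kappa = 0.50
```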

Data Management Essentials

Proper data management requires:

Ethical collection procedures respecting autonomy, justice, beneficence and non-maleficence.

Handling missing data through deletion, imputation or modeling procedures.

Data cleaning by identifying and fixing errors, inconsistencies and duplicates.

Data screening via visual inspection and statistical methods to detect anomalies.
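Two of these steps, removing exact duplicates and handling missing values, can be sketched in a few lines. The records and helper names are hypothetical. Listwise deletion and mean imputation are shown only because they are the simplest options: mean imputation shrinks variance, so model-based methods such as multiple imputation are often preferred in practice.

```python
from statistics import mean

# Hypothetical records: (participant_id, score); None marks a missing value.
raw = [("p1", 12.0), ("p2", None), ("p3", 15.0), ("p3", 15.0), ("p4", 9.0)]

def drop_duplicates(rows):
    """Keep the first occurrence of each identical row, preserving order."""
    seen, unique = set(), []
    for row in rows:
        if row not in seen:
            seen.add(row)
            unique.append(row)
    return unique

def complete_cases(rows):
    """Listwise deletion: discard rows with a missing score."""
    return [r for r in rows if r[1] is not None]

def mean_impute(rows):
    """Replace missing scores with the mean of the observed scores."""
    fill = mean(score for _, score in rows if score is not None)
    return [(pid, fill if score is None else score) for pid, score in rows]

clean = drop_duplicates(raw)
print(complete_cases(clean))  # [('p1', 12.0), ('p3', 15.0), ('p4', 9.0)]
print(mean_impute(clean))     # p2 filled with 12.0
```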

Data Management Techniques and Ethical Considerations

Ethical data management includes:

Obtaining informed consent from all participants.

Anonymization and encryption to protect privacy.

Secure data storage and transfer procedures.

Responsible use of statistical tools free from manipulation or misrepresentation.

Adhering to ethical guidelines preserves public trust in the integrity of research.
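One common technique for the privacy step is to replace direct identifiers with keyed hashes before analysis. This is pseudonymisation rather than full anonymisation: records can still be linked, and whoever holds the key could re-identify them by hashing candidate IDs, so the key must be stored separately and securely. The function name and key below are hypothetical placeholders.

```python
import hashlib
import hmac

def pseudonymise(participant_id: str, secret_key: bytes) -> str:
    """Replace an identifier with a truncated keyed hash (HMAC-SHA256).

    The same ID and key always give the same pseudonym, so records remain linkable,
    but someone without the key cannot feasibly map pseudonyms back to IDs.
    """
    digest = hmac.new(secret_key, participant_id.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]

key = b"replace-with-a-securely-stored-random-key"  # hypothetical placeholder
print(pseudonymise("participant-042", key))
```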

Statistical Methods and Procedures

This section provides an introduction to key quantitative analysis techniques and guidance on when to apply them to different types of research questions and data.

Descriptive Statistics and Data Summarization

Descriptive statistics summarize and organize data characteristics such as central tendency, variability, and distributions. Common descriptive statistical methods include:

Measures of central tendency (mean, median, mode)

Measures of variability (range, interquartile range, standard deviation)

Graphical representations (histograms, box plots, scatter plots)

Frequency distributions and percentages

These methods help describe and summarize the sample data so researchers can spot patterns and trends.
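Several of these summaries come straight from Python's standard library. The sketch below computes the median, mode and interquartile range for a hypothetical sample of ages, then a frequency distribution with percentages for hypothetical survey responses. Note that `statistics.quantiles` uses the "exclusive" method by default, so quartiles may differ slightly from other software.

```python
from collections import Counter
from statistics import median, mode, quantiles

ages = [23, 25, 25, 31, 34, 38, 41, 45, 52, 67]  # hypothetical sample
q1, q2, q3 = quantiles(ages, n=4)  # default "exclusive" method
print(f"median = {median(ages)}, mode = {mode(ages)}, IQR = {q3 - q1:.2f}")
# median = 36.0, mode = 25, IQR = 21.75

responses = ["agree", "neutral", "agree", "disagree", "agree", "neutral"]
for category, count in Counter(responses).most_common():
    print(f"{category}: {count} ({100 * count / len(responses):.1f}%)")
```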

Inferential Statistics for Generalizing Findings

While descriptive statistics summarize sample data, inferential statistics help generalize findings to the larger population. Common techniques include:

Hypothesis testing with t-tests and ANOVA

Correlation and regression analysis

Nonparametric tests

These methods allow researchers to draw conclusions and make predictions about the broader population based on the sample data.
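Estimation with a confidence interval is another way to generalize from sample to population. The sketch below uses a percentile bootstrap: resample the data with replacement many times and take the middle 95% of the resampled means. This is a swapped-in technique chosen because it needs only the standard library; t-based intervals are the more common textbook choice. The measurements are hypothetical.

```python
import random
from statistics import mean

def bootstrap_ci(sample, n_resamples=5000, level=0.95, seed=0):
    """Percentile bootstrap confidence interval for the population mean."""
    rng = random.Random(seed)
    boot_means = sorted(
        mean(rng.choices(sample, k=len(sample))) for _ in range(n_resamples)
    )
    lower_idx = int((1 - level) / 2 * n_resamples)
    upper_idx = int((1 + level) / 2 * n_resamples) - 1
    return boot_means[lower_idx], boot_means[upper_idx]

sample = [4.1, 5.3, 6.0, 4.8, 5.5, 6.2, 5.1, 4.9, 5.7, 5.0]  # hypothetical measurements
low, high = bootstrap_ci(sample)
print(f"mean = {mean(sample):.2f}, 95% CI approx. [{low:.2f}, {high:.2f}]")
```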

Selecting the Right Statistical Tools

Choosing the appropriate analyses involves assessing:

The research design and questions asked

Type of data (categorical, continuous)

Data distributions

Statistical assumptions required

Matching the correct statistical tests to these elements helps ensure accurate results.

Statistical Treatment of Data for Quantitative Research

For quantitative research, common statistical data treatments include:

Testing data reliability and validity

Checking assumptions of statistical tests

Transforming non-normal data

Identifying and handling outliers

Applying appropriate analyses for the research questions and data type
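A widely used convention for flagging outliers is Tukey's fences: values more than 1.5 × IQR below the first quartile or above the third quartile. The sketch applies it to hypothetical reaction times. Flagged values should be investigated (data-entry error? genuine extreme?) rather than deleted automatically. A log transform, shown at the end, is one common way to reduce right skew.

```python
from math import log
from statistics import quantiles

def iqr_outliers(data, k=1.5):
    """Flag values outside [Q1 - k*IQR, Q3 + k*IQR] (Tukey's fences)."""
    q1, _, q3 = quantiles(data, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [x for x in data if x < low or x > high]

reaction_times = [310, 295, 320, 305, 300, 315, 290, 1250]  # hypothetical, in ms
print(iqr_outliers(reaction_times))  # [1250]

# Log-transform right-skewed values before a parametric analysis (one common option).
log_times = [log(t) for t in reaction_times]
```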

Examples and case studies help demonstrate correct application of statistical tests.

Approaches to Qualitative Data Analysis

Qualitative data is analyzed through methods like:

Thematic analysis

Content analysis

Discourse analysis

Grounded theory

These help researchers discover concepts and patterns within non-numerical data to derive rich insights.

Data Presentation and Research Method

Crafting effective visuals for data presentation.

When presenting analyzed results and statistics in a research paper, well-designed tables, graphs, and charts are key for clearly showcasing patterns in the data to readers. Adhering to formatting standards like APA helps ensure professional data presentation. Consider these best practices:

Choose the appropriate visual type based on the type of data and relationship being depicted. For example, use bar charts to compare categories and line graphs to show trends over time.

Label the x-axis, y-axis and legend clearly. Include informative captions.

Use consistent, readable fonts and sizing. Avoid clutter with unnecessary elements. White space can aid readability.

Order data logically, for example from largest to smallest value or chronologically.

Include clear statistical notations, like error bars, where applicable.

Following academic standards for visuals lends credibility while making interpretation intuitive for readers.

Writing the Results Section with Clarity

When writing the quantitative Results section, aim for clarity by balancing statistical reporting with interpretation of findings. Consider this structure:

Open with an overview of the analysis approach and measurements used.

Break down results into logical subsections, for example one for each hypothesis or construct measured.

Report exact statistics first, followed by interpretation of their meaning. For example, “Participants exposed to the intervention had significantly higher average scores (M=78, SD=3.2) compared to controls (M=71, SD=4.1), t(115)=3.42, p = 0.001. This suggests the intervention increased scores.”

Use the past tense to report findings, and keep the language formal and scientific.

Include tables/figures where they aid understanding or visualization.

Writing results clearly gives readers deeper context around statistical findings.

Highlighting Research Method and Design

With a results section full of statistics, it's vital to communicate key aspects of the research method and design. Consider including:

A brief overview of the study variables, materials and apparatus used, which helps reproducibility.

Descriptions of sampling techniques and data collection procedures, which support transparency.

Explanations of the measurement approaches and data analyses performed, which bolster methodological rigor.

Notes on control variables and attempts to limit bias, which demonstrate awareness of limitations.

Covering these methodological details shows readers the care taken in designing the study and analyzing the results obtained.

Acknowledging Limitations and Addressing Biases

Honestly recognizing methodological weaknesses and limitations goes a long way toward establishing credibility in the discussion section. Consider transparently noting:

Measurement errors and biases that may have impacted findings.

Limitations around sampling methods that constrain generalizability.

Caveats related to statistical assumptions and the analysis techniques applied.

Attempts made to control or account for biases, and directions for future research.

Rather than detracting from the work's value, acknowledging limitations demonstrates academic integrity regarding the research performed. It also gives readers deeper insight into interpreting the reported results and findings.

Conclusion: Synthesizing Statistical Treatment Insights

Recap of statistical treatment fundamentals.

Statistical treatment of data is a crucial component of high-quality quantitative research. Proper application of statistical methods and analysis principles enables valid interpretations and inferences from study data. Key fundamentals covered include:

Descriptive statistics to summarize and describe the basic features of study data

Inferential statistics to make probabilistic judgments about a population, including statistical significance, based on sample data

Using appropriate statistical tools aligned to the research design and objectives

Following established practices for measurement techniques, data collection, and reporting

Adhering to these core tenets ensures research integrity and allows findings to withstand scientific scrutiny.

Key Takeaways for Research Paper Success

When incorporating statistical treatment into a research paper, keep these best practices in mind:

Clearly state the research hypothesis and variables under examination

Select reliable and valid quantitative measures for assessment

Determine appropriate sample size to achieve statistical power

Apply correct analytical methods suited to the data type and distribution

Comprehensively report methodology procedures and statistical outputs

Interpret results in context of the study limitations and scope

Following these guidelines will bolster confidence in the statistical treatment and strengthen the research quality overall.

Encouraging Continued Learning and Application

As statistical techniques continue to advance, it is imperative for researchers to actively further their statistical literacy. Regularly reviewing new methodological developments and learning advanced tools will augment analytical capabilities. Persistently putting enhanced statistical knowledge into practice through research projects and manuscript preparation will cement these competencies. Statistical treatment mastery is a journey requiring persistent effort, but one that pays dividends in research proficiency.

Antonio Carlos Filho @acfilho_dev

Statistical Treatment


What is Statistical Treatment?

Statistical treatment can mean a few different things:

- In Data Analysis: Applying any statistical method, like regression or calculating a mean, to data.

- In Factor Analysis: Any combination of factor levels is called a treatment.

- In a Thesis or Experiment: A summary of the procedure, including statistical methods used.

1. Statistical Treatment in Data Analysis

The term “statistical treatment” is a catch-all term meaning the application of any statistical method to your data. Treatments are divided into two groups: descriptive statistics, which summarize your data as a graph or summary statistic, and inferential statistics, which make predictions and test hypotheses about the population your data come from. Treatments could include:

- Finding standard deviations and sample standard errors,

- Finding T-scores or Z-scores,

- Calculating correlation coefficients.
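Each of these three treatments is a short calculation. The sketch below computes z-scores (using the sample standard deviation), the standard error of the mean, and Pearson's correlation coefficient for hypothetical study hours and exam marks. The function names are illustrative.

```python
from statistics import mean, stdev

def z_scores(data):
    """Standardise each value: (x - mean) / sample standard deviation."""
    m, s = mean(data), stdev(data)
    return [(x - m) / s for x in data]

def standard_error(data):
    """Standard error of the mean: sample SD / sqrt(n)."""
    return stdev(data) / len(data) ** 0.5

def pearson_r(x, y):
    """Pearson correlation coefficient from deviations about the means."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5

hours = [2, 4, 6, 8, 10]      # hypothetical study hours
marks = [55, 60, 70, 75, 90]  # hypothetical exam marks
print([round(z, 2) for z in z_scores(marks)])
print(f"SE of mean mark = {standard_error(marks):.2f}")
print(f"r = {pearson_r(hours, marks):.3f}")
```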

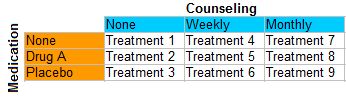

2. Treatments in Factor Analysis
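As an illustration of the definition that any combination of factor levels is a treatment, the following minimal Python sketch enumerates the treatments in a hypothetical experiment with two factors. The factor names and levels are invented.

```python
from itertools import product

# Hypothetical experiment: each treatment is one combination of factor levels.
factors = {
    "temperature": ["low", "high"],
    "fertiliser": ["none", "A", "B"],
}

treatments = [dict(zip(factors, levels)) for levels in product(*factors.values())]
for t in treatments:
    print(t)
print(len(treatments), "treatments")  # 2 levels x 3 levels = 6
```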

3. Treatments in a Thesis or Experiment

Sometimes you might be asked to include a treatment as part of a thesis. This is asking you to summarize the data and analysis portion of your experiment, including measurements and formulas used. For example, the following experimental summary is from “Statistical Treatment” in Acta Physiologica Scandinavica:

“Each of the test solutions was injected twice in each subject…30-42 values were obtained for the intensity, and a like number for the duration, of the pain induced by the solution. The pain values reported in the following are arithmetical means for these 30-42 injections.”

The author goes on to provide formulas for the mean, the standard deviation and the standard error of the mean.
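For reference, the standard textbook forms of these three quantities are given below. The 1961 paper's own notation is not reproduced in the source, so this assumes the common convention of an n - 1 divisor for the sample standard deviation, with observations x_1, …, x_n.

```latex
\bar{x} = \frac{1}{n}\sum_{i=1}^{n} x_i,
\qquad
s = \sqrt{\frac{1}{n-1}\sum_{i=1}^{n}\left(x_i - \bar{x}\right)^2},
\qquad
\mathrm{SE}_{\bar{x}} = \frac{s}{\sqrt{n}}
```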

Vogt, W. P. (2005). Dictionary of Statistics & Methodology: A Nontechnical Guide for the Social Sciences. SAGE.

Wheelan, C. (2014). Naked Statistics. W. W. Norton & Company.

Unknown author (1961). Chapter 3: Statistical Treatment. Acta Physiologica Scandinavica, Volume 51, Issue s179 (December), pp. 16–20.


Open Access

Ten Simple Rules for Effective Statistical Practice

Affiliation Department of Statistics, Machine Learning Department, and Center for the Neural Basis of Cognition, Carnegie Mellon University, Pittsburgh, Pennsylvania, United States of America

Affiliation Department of Biostatistics, Bloomberg School of Public Health, Johns Hopkins University, Baltimore, Maryland, United States of America

Affiliation Department of Statistics, North Carolina State University, Raleigh, North Carolina, United States of America

Affiliation Department of Statistics, Harvard University, Cambridge, Massachusetts, United States of America

Affiliation Department of Statistics and Department of Electrical Engineering and Computer Science, University of California Berkeley, Berkeley, California, United States of America


Affiliation Department of Statistical Sciences, University of Toronto, Toronto, Ontario, Canada

- Robert E. Kass,

- Brian S. Caffo,

- Marie Davidian,

- Xiao-Li Meng,

- Bin Yu,

- Nancy Reid

Published: June 9, 2016

- https://doi.org/10.1371/journal.pcbi.1004961


Citation: Kass RE, Caffo BS, Davidian M, Meng X-L, Yu B, Reid N (2016) Ten Simple Rules for Effective Statistical Practice. PLoS Comput Biol 12(6): e1004961. https://doi.org/10.1371/journal.pcbi.1004961

Editor: Fran Lewitter, Whitehead Institute, UNITED STATES

Copyright: © 2016 Kass et al. This is an open access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original author and source are credited.

Funding: BSC's research is partially supported by the National Institutes of Health grant EB012547: www.nibib.nih.gov. MD's research is partially supported by the National Institutes of Health grant NIH P01 CA142538: www.nih.gov. REK's research is partially supported by the National Institute of Mental Health R01 MH064537: www.nimh.nih.gov. NR's research is partially supported by the Natural Sciences and Engineering Research Council of Canada grant RGPIN-2015-06390: www.nserc.ca. BY's research is partially supported by the National Science Foundation grant CCF-0939370: www.nsf.gov. XLM's research is partially supported by the National Science Foundation (www.nsf.gov) DMS 1513492. The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

Competing interests: The authors have declared that no competing interests exist.

Introduction

Several months ago, Phil Bourne, the initiator and frequent author of the wildly successful and incredibly useful “Ten Simple Rules” series, suggested that some statisticians put together a Ten Simple Rules article related to statistics. (One of the rules for writing a PLOS Ten Simple Rules article is to be Phil Bourne [1]. In lieu of that, we hope effusive praise for Phil will suffice.)

Implicit in the guidelines for writing Ten Simple Rules [1] is “know your audience.” We developed our list of rules with researchers in mind: researchers having some knowledge of statistics, possibly with one or more statisticians available in their building, or possibly with a healthy do-it-yourself attitude and a handful of statistical packages on their laptops. We drew on our experience in both collaborative research and teaching, and, it must be said, from our frustration at being asked, more than once, to “take a quick look at my student’s thesis/my grant application/my referee’s report: it needs some input on the stats, but it should be pretty straightforward.”

There are some outstanding resources available that explain many of these concepts clearly and in much more detail than we have been able to do here: among our favorites are Cox and Donnelly [2], Leek [3], Peng [4], Kass et al. [5], Tukey [6], and Yu [7].

Every article on statistics requires at least one caveat. Here is ours: we refer in this article to “science” as a convenient shorthand for investigations using data to study questions of interest. This includes social science, engineering, digital humanities, finance, and so on. Statisticians are not shy about reminding administrators that statistical science has an impact on nearly every part of almost all organizations.

Rule 1: Statistical Methods Should Enable Data to Answer Scientific Questions

A big difference between inexperienced users of statistics and expert statisticians appears as soon as they contemplate the uses of some data. While it is obvious that experiments generate data to answer scientific questions, inexperienced users of statistics tend to take for granted the link between data and scientific issues and, as a result, may jump directly to a technique based on data structure rather than scientific goal. For example, if the data were in a table, as for microarray gene expression data, they might look for a method by asking, “Which test should I use?” while a more experienced person would, instead, start with the underlying question, such as, “Where are the differentiated genes?” and, from there, would consider multiple ways the data might provide answers. Perhaps a formal statistical test would be useful, but other approaches might be applied as alternatives, such as heat maps or clustering techniques. Similarly, in neuroimaging, understanding brain activity under various experimental conditions is the main goal; illustrating this with nice images is secondary. This shift in perspective from statistical technique to scientific question may change the way one approaches data collection and analysis. After learning about the questions, statistical experts discuss with their scientific collaborators the ways that data might answer these questions and, thus, what kinds of studies might be most useful. Together, they try to identify potential sources of variability and what hidden realities could break the hypothesized links between data and scientific inferences; only then do they develop analytic goals and strategies. This is a major reason why collaborating with statisticians can be helpful, and also why the collaborative process works best when initiated early in an investigation. See Rule 3.

Rule 2: Signals Always Come with Noise

Grappling with variability is central to the discipline of statistics. Variability comes in many forms. In some cases variability is good, because we need variability in predictors to explain variability in outcomes. For example, to determine if smoking is associated with lung cancer, we need variability in smoking habits; to find genetic associations with diseases, we need genetic variation. Other times variability may be annoying, such as when we get three different numbers when measuring the same thing three times. This latter variability is usually called “noise,” in the sense that it is either not understood or thought to be irrelevant. Statistical analyses aim to assess the signal provided by the data, the interesting variability, in the presence of noise, or irrelevant variability.

A starting point for many statistical procedures is to introduce a mathematical abstraction: outcomes, such as patients being diagnosed with specific diseases or receiving numerical scores on diagnostic tests, will vary across the set of individuals being studied, and statistical formalism describes such variation using probability distributions. Thus, for example, a data histogram might be replaced, in theory, by a probability distribution, thereby shifting attention from the raw data to the numerical parameters that determine the precise features of the probability distribution, such as its shape, its spread, or the location of its center. Probability distributions are used in statistical models, with the model specifying the way signal and noise get combined in producing the data we observe, or would like to observe. This fundamental step makes statistical inferences possible. Without it, every data value would be considered unique, and we would be left trying to figure out all the detailed processes that might cause an instrument to give different values when measuring the same thing several times. Conceptualizing signal and noise in terms of probability within statistical models has proven to be an extremely effective simplification, allowing us to capture the variability in data in order to express uncertainty about quantities we are trying to understand. The formalism can also help by directing us to look for likely sources of systematic error, known as bias.

Big data makes these issues more important, not less. For example, Google Flu Trends debuted to great excitement in 2008, but turned out to overestimate the prevalence of influenza by nearly 50%, largely due to bias caused by the way the data were collected; see Harford [8], for example.

Rule 3: Plan Ahead, Really Ahead

When substantial effort will be involved in collecting data, statistical issues may not be captured in an isolated statistical question such as, “What should my n be?” As we suggested in Rule 1, rather than focusing on a specific detail in the design of the experiment, someone with a lot of statistical experience is likely to step back and consider many aspects of data collection in the context of overall goals and may start by asking, “What would be the ideal outcome of your experiment, and how would you interpret it?” In trying to determine whether observations of X and Y tend to vary together, as opposed to independently, key issues would involve the way X and Y are measured, the extent to which the measurements represent the underlying conceptual meanings of X and Y, the many factors that could affect the measurements, the ability to control those factors, and whether some of those factors might introduce systematic errors (bias).

In Rule 2 we pointed out that statistical models help link data to goals by shifting attention to theoretical quantities of interest. For example, in making electrophysiological measurements from a pair of neurons, a neurobiologist may take for granted a particular measurement methodology along with the supposition that these two neurons will represent a whole class of similar neurons under similar experimental conditions. On the other hand, a statistician will immediately wonder how the specific measurements get at the issue of co-variation; what the major influences on the measurements are, and whether some of them can be eliminated by clever experimental design; what causes variation among repeated measurements, and how quantitative knowledge about sources of variation might influence data collection; and whether these neurons may be considered to be sampled from a well-defined population, and how the process of picking that pair could influence subsequent statistical analyses. A conversation that covers such basic issues may reveal possibilities an experimenter has not yet considered.

Asking questions at the design stage can save headaches at the analysis stage: careful data collection can greatly simplify analysis and make it more rigorous. Or, as Sir Ronald Fisher put it: “To consult the statistician after an experiment is finished is often merely to ask him to conduct a post mortem examination. He can perhaps say what the experiment died of” [9]. As a good starting point for reading on planning of investigations, see Chapters 1 through 4 of [2].

Rule 4: Worry about Data Quality

Well-trained experimenters understand instinctively that, when it comes to data analysis, “garbage in produces garbage out.” However, the complexity of modern data collection requires many assumptions about the function of technology, often including data pre-processing technology. It is highly advisable to approach pre-processing with care, as it can have profound effects that easily go unnoticed.

Even with pre-processed data, further considerable effort may be needed prior to analysis; this is variously called “data cleaning,” “data munging,” or “data carpentry.” Hands-on experience can be extremely useful, as data cleaning often reveals important concerns about data quality, in the best case confirming that what was measured is indeed what was intended to be measured and, in the worst case, ensuring that losses are cut early.

Units of measurement should be understood and recorded consistently. It is important that missing data values can be recognized as such by relevant software. For example, 999 may signify the number 999, or it could be code for “we have no clue.” There should be a defensible rule for handling situations such as “non-detects,” and data should be scanned for anomalies such as variable 27 having half its values equal to 0.00027. Try to understand as much as you can how these data arrived at your desk or disk. Why are some data missing or incomplete? Did they get lost through some substantively relevant mechanism? Understanding such mechanisms can help to avoid some seriously misleading results. For example, in a developmental imaging study of attention deficit hyperactivity disorder, might some data have been lost from children with the most severe hyperactivity because they could not sit still in the MR scanner?
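A minimal Python sketch of two of these checks follows: it recodes a missing-value code to `None` and flags any single value that makes up a suspiciously large share of a variable. The code 999, the 50% threshold, the function names and the data are all illustrative assumptions; the real missing-value code has to come from whoever produced the data.

```python
from collections import Counter

# Hypothetical missing-value code: confirm the real one with the data provider.
MISSING_CODES = {999}


def decode_missing(values, missing_codes=MISSING_CODES):
    """Replace missing-value codes with None so software cannot mistake them for data."""
    return [None if v in missing_codes else v for v in values]


def dominant_values(values, threshold=0.5):
    """Return {value: share} for any value that makes up at least `threshold`
    of the non-missing entries (e.g. half of a variable equal to 0.00027)."""
    present = [v for v in values if v is not None]
    if not present:
        return {}
    counts = Counter(present)
    n = len(present)
    return {v: c / n for v, c in counts.items() if c / n >= threshold}


raw = [12.1, 999, 0.00027, 0.00027, 9.8, 0.00027, 999, 0.00027, 11.4]
clean = decode_missing(raw)
print(clean.count(None), "missing values")
print(dominant_values(clean))
```

Neither check decides anything on its own; both simply point to entries worth asking the data provider about.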

Once the data have been wrestled into a convenient format, have a look! Tinkering around with the data, also known as exploratory data analysis, is often the most informative part of the analysis. Exploratory plots can reveal data quality issues and outliers. Simple summaries, such as means, standard deviations, and quantiles, can help refine thinking and offer face validity checks for hypotheses. Many studies, especially when going in completely new scientific directions, are exploratory by design; the area may be too novel to include clear a priori hypotheses. Working with the data informally can help generate new hypotheses and ideas. However, it is also important to acknowledge the specific ways data are selected prior to formal analyses and to consider how such selection might affect conclusions. And it is important to remember that using a single set of data to both generate and test hypotheses is problematic. See Rule 9.
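One way to produce such quick summaries is sketched below. It computes the mean, standard deviation and quartiles with Python's `statistics` module and flags values outside Tukey's 1.5 × IQR fences. The fence rule is a common convention assumed here, not one the article prescribes, and flagged values are candidates for a closer look, not for automatic deletion. The data are made up.

```python
import statistics


def quick_summary(values):
    """Mean, SD, quartiles, and values outside Tukey's 1.5 x IQR fences."""
    mean = statistics.mean(values)
    sd = statistics.stdev(values)
    q1, median, q3 = statistics.quantiles(values, n=4)  # default 'exclusive' method
    iqr = q3 - q1
    low_fence, high_fence = q1 - 1.5 * iqr, q3 + 1.5 * iqr
    flagged = [v for v in values if v < low_fence or v > high_fence]
    return {"mean": mean, "sd": sd, "quartiles": (q1, median, q3), "flagged": flagged}


summary = quick_summary([1, 2, 3, 4, 5, 6, 7, 8, 9, 100])
print(summary["flagged"])  # [100]
```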

Rule 5: Statistical Analysis Is More Than a Set of Computations

Statistical software provides tools to assist analyses, not define them. The scientific context is critical, and the key to principled statistical analysis is to bring analytic methods into close correspondence with scientific questions. See Rule 1. While it can be helpful to include references to a specific algorithm or piece of software in the Methods section of a paper, this should not be a substitute for an explanation of the choice of statistical method in answering a question. A reader will likely want to consider the fundamental issue of whether the analytic technique is appropriately linked to the substantive questions being answered. Don’t make the reader puzzle over this: spell it out clearly.

At the same time, a structured algorithmic approach to the steps in your analysis can be very helpful in making this analysis reproducible by yourself at a later time, or by others with the same or similar data. See Rule 10.

Rule 6: Keep it Simple

All else being equal, simplicity trumps complexity. This rule has been rediscovered and enshrined in operating procedures across many domains and variously described as “Occam’s razor,” “KISS,” “less is more,” and “simplicity is the ultimate sophistication.” The principle of parsimony can be a trusted guide: start with simple approaches and only add complexity as needed, and then only add as little as seems essential.

Having said this, scientific data have detailed structure, and simple models can’t always accommodate important intricacies. The common assumption of independence is often incorrect and nearly always needs careful examination. See Rule 8. Large numbers of measurements, interactions among explanatory variables, nonlinear mechanisms of action, missing data, confounding, sampling biases, and so on, can all require an increase in model complexity.

Keep in mind that good design, implemented well, can often allow simple methods of analysis to produce strong results. See Rule 3. Simple models help us to create order out of complex phenomena, and simple models are well suited for communication to our colleagues and the wider world.

Rule 7: Provide Assessments of Variability

Nearly all biological measurements, when repeated, exhibit substantial variation, and this creates uncertainty in the result of every calculation based on the data. A basic purpose of statistical analysis is to help assess uncertainty, often in the form of a standard error or confidence interval, and one of the great successes of statistical modeling and inference is that it can provide estimates of standard errors from the same data that produce estimates of the quantity of interest. When reporting results, it is essential to supply some notion of statistical uncertainty. A common mistake is to calculate standard errors without taking into account the dependencies among data or variables, which usually means a substantial underestimate of the real uncertainty. See Rule 8.
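A contrived example with made-up numbers shows how ignoring dependence can understate uncertainty. Recording every measurement twice adds no information, yet the naive formula s/√n treats the copies as independent and reports a smaller standard error. Averaging within each sample first restores the honest answer. The function name and data are hypothetical.

```python
import math
import statistics


def naive_standard_error(values):
    """Standard error of the mean, s / sqrt(n); valid only for independent values."""
    return statistics.stdev(values) / math.sqrt(len(values))


# Hypothetical measurements on five independent samples.
samples = [4.1, 5.3, 3.8, 6.0, 4.7]

# The same five measurements, each recorded twice (e.g. duplicate readings of
# one sample). The copies are perfectly dependent and add no new information.
duplicated = [x for x in samples for _ in range(2)]

print(naive_standard_error(samples))     # the honest answer for 5 samples
print(naive_standard_error(duplicated))  # smaller, but falsely reassuring

# Respecting the dependence: average within each sample first, then use n = 5.
per_sample_means = [statistics.mean(duplicated[i:i + 2]) for i in range(0, len(duplicated), 2)]
print(naive_standard_error(per_sample_means))
```

Real dependence is rarely this blatant (repeated scans of one subject, cells from one animal, neighbouring time points), but the effect on the naive standard error is the same in kind.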

Remember that every number obtained from the data by some computation would change somewhat, even if the measurements were repeated on the same biological material. If you are using new material, the natural variability among samples adds to the measurement variability. If you are collecting data on a different day, in a different lab, or under a slightly changed protocol, there are now three more potential sources of variability to be accounted for. In microarray analysis, batch effects are well known to introduce extra variability, and several methods are available to adjust for them. Extra variability means extra uncertainty in the conclusions, and this uncertainty needs to be reported. Such reporting is invaluable for planning the next investigation.

It is a very common feature of big data that uncertainty assessments tend to be overly optimistic (Cox [10], Meng [11]). For an instructive, and beguilingly simple, quantitative analysis most relevant to surveys, see the “data defect” section of [11]. Big data is not always as big as it looks: a large number of measurements on a small number of samples requires very careful estimation of the standard error, not least because these measurements are quite likely to be dependent.

Rule 8: Check Your Assumptions

Every statistical inference involves assumptions, which are based on substantive knowledge and some probabilistic representation of data variation—this is what we call a statistical model. Even the so-called “model-free” techniques require assumptions, albeit less restrictive assumptions, so this terminology is somewhat misleading.

The most common statistical methods involve an assumption of linear relationships. For example, the ordinary correlation coefficient, also called the Pearson correlation, is a measure of linear association. Linearity often works well as a first approximation or as a depiction of a general trend, especially when the amount of noise in the data makes it difficult to distinguish between linear and nonlinear relationships. However, for any given set of data, the appropriateness of the linear model is an empirical issue and should be investigated.
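A short Python sketch shows what “linear association” means in practice. The Pearson coefficient below is computed directly from its definition. For y = x² on a symmetric range of x it is exactly zero, even though y is completely determined by x. The data are made up for illustration.

```python
import math


def pearson_r(x, y):
    """Ordinary (Pearson) correlation coefficient: a measure of *linear* association."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


x = [-3, -2, -1, 0, 1, 2, 3]
print(pearson_r(x, [2 * v + 1 for v in x]))  # perfectly linear: 1.0
print(pearson_r(x, [v ** 2 for v in x]))     # fully determined by x, yet 0.0
```

A scatter plot would reveal the U shape at once, which is one reason to look at the data before summarizing it with a single coefficient.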

In many ways, a more worrisome, and very common, assumption in statistical analysis is that multiple observations in the data are statistically independent. This is worrisome because relatively small deviations from this assumption can have drastic effects. When measurements are made across time, for example, the temporal sequencing may be important; if it is, specialized methods appropriate for time series need to be considered.
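One quick, informal screen for temporal dependence is the lag-1 autocorrelation of measurements (or residuals) taken in time order. The sketch below assumes equally spaced observations. There is no universal cut-off, and a large value is a cue to consider time-series methods, not a formal test. The function name and series are hypothetical.

```python
def lag1_autocorrelation(series):
    """Sample lag-1 autocorrelation: how strongly each value tracks the previous one.
    Values near 0 are consistent with independence; values far from 0 are a warning sign."""
    n = len(series)
    m = sum(series) / n
    num = sum((a - m) * (b - m) for a, b in zip(series, series[1:]))
    den = sum((v - m) ** 2 for v in series)
    return num / den


drifting = list(range(1, 21))   # e.g. a slow instrument drift over time
alternating = [1, -1] * 10      # e.g. readings that overshoot and then correct
print(round(lag1_autocorrelation(drifting), 2))     # 0.85
print(round(lag1_autocorrelation(alternating), 2))  # -0.95
```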

In addition to nonlinearity and statistical dependence, missing data, systematic biases in measurements, and a variety of other factors can cause violations of statistical modeling assumptions, even in the best experiments. Widely available statistical software makes it easy to perform analyses without careful attention to inherent assumptions, and this risks inaccurate, or even misleading, results. It is therefore important to understand the assumptions embodied in the methods you are using and to do whatever you can to understand and assess those assumptions. At a minimum, you will want to check how well your statistical model fits the data. Visual displays and plots of data and residuals from fitting are helpful for evaluating the relevance of assumptions and the fit of the model, and some basic techniques for assessing model fit are available in most statistical software. Remember, though, that several models can “pass the fit test” on the same data. See Rule 1 and Rule 6.

Rule 9: When Possible, Replicate!

Every good analyst examines the data at great length, looking for patterns of many types and searching for predicted and unpredicted results. This process often involves dozens of procedures, including many alternative visualizations and a host of numerical slices through the data. Eventually, some particular features of the data are deemed interesting and important, and these are often the results reported in the resulting publication.

When statistical inferences, such as p-values, follow extensive looks at the data, they no longer have their usual interpretation. Ignoring this reality is dishonest: it is like painting a bull’s eye around the landing spot of your arrow. This is known in some circles as p-hacking, and much has been written about its perils and pitfalls: see, for example, [12] and [13].

Recently there has been a great deal of criticism of the use of p-values in science, largely related to the misperception that results can’t be worthy of publication unless “p is less than 0.05.” The recent statement from the American Statistical Association (ASA) [14] presents a detailed view of the merits and limitations of the p-value.

Statisticians tend to be aware of the most obvious kinds of data snooping, such as choosing particular variables for a reported analysis, and there are methods that can help adjust results in these cases; the False Discovery Rate method of Benjamini and Hochberg [15] is the basis for several of these.
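To show the mechanics, here is a minimal Python sketch of the Benjamini–Hochberg step-up procedure as it is usually stated. It is not code from [15], and for real analyses an established implementation such as R's `p.adjust(p, method = "BH")` is a safer choice. The p-values are made up.

```python
def benjamini_hochberg(p_values, q=0.05):
    """Flag which hypotheses to reject while controlling the false discovery rate at q.

    Step-up rule: sort the m p-values, find the largest rank k with
    p_(k) <= (k / m) * q, and reject the k hypotheses with the smallest p-values.
    """
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])  # indices, smallest p first
    k_max = 0
    for rank, idx in enumerate(order, start=1):
        if p_values[idx] <= rank / m * q:
            k_max = rank  # keep the largest qualifying rank, not the first failure
    rejected = [False] * m
    for idx in order[:k_max]:
        rejected[idx] = True
    return rejected  # flags in the same order as the input


p = [0.001, 0.008, 0.039, 0.041, 0.042, 0.06, 0.074, 0.205, 0.212, 0.216]
print(benjamini_hochberg(p))
```

Here a plain p < 0.05 cut-off would declare five results significant, while the procedure keeps two. The loop deliberately keeps the largest qualifying rank: for `[0.03, 0.04]` both hypotheses are rejected, even though 0.03 alone exceeds its own threshold of 0.025.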

For some analyses, there may be a case that some kinds of preliminary data manipulation are likely to be innocuous. In other situations, analysts may build into their work an informal check by trusting only extremely small p-values. For example, in high energy physics, the requirement of a “5-sigma” result is at least partly an approximate correction for what is called the “look-elsewhere effect.”
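To put numbers on this, the sketch below converts a number of standard deviations into a one-sided tail probability. It also estimates the chance that at least one of many independent null results reaches a given threshold. It assumes a Gaussian test statistic and independent looks, which is a simplification; real look-elsewhere corrections in physics are more involved.

```python
import math


def one_sided_p(sigma):
    """Upper-tail probability of a standard normal beyond `sigma` standard deviations."""
    return 0.5 * math.erfc(sigma / math.sqrt(2))


def chance_any_exceeds(sigma, n_looks):
    """Chance that at least one of n_looks independent null results reaches `sigma`."""
    return 1 - (1 - one_sided_p(sigma)) ** n_looks


print(f"{one_sided_p(1.645):.3f}")           # 0.050, the familiar 5% level
print(f"{one_sided_p(5):.2e}")               # 2.87e-07 for a 5-sigma result
print(f"{chance_any_exceeds(3, 1000):.2f}")  # 0.74: a 3-sigma fluke somewhere is likely
```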

The only truly reliable solution to the problem posed by data snooping is to record the statistical inference procedures that produced the key results, together with the features of the data to which they were applied, and then to replicate the same analysis using new data. Independent replications of this type often go a step further by introducing modifications to the experimental protocol, so that the replication will also provide some degree of robustness to experimental details.

Ideally, replication is performed by an independent investigator. The scientific results that stand the test of time are those that get confirmed across a variety of different, but closely related, situations. In the absence of experimental replications, appropriate forms of data perturbation can be helpful (Yu [16]). In many contexts, complete replication is very difficult or impossible, as in large-scale experiments such as multi-center clinical trials. In such cases, a minimum standard would be to follow Rule 10.

Rule 10: Make Your Analysis Reproducible

In our current framework for publication of scientific results, the independent replication discussed in Rule 9 is not practical for most investigators. A different standard, which is easier to achieve, is reproducibility: given the same set of data, together with a complete description of the analysis, it should be possible to reproduce the tables, figures, and statistical inferences. However, even this lower standard can face multiple barriers, such as different computing architectures, software versions, and settings.

One can dramatically improve the ability to reproduce findings by being very systematic about the steps in the analysis (see Rule 5), by sharing the data and code used to produce the results, and by following Goodman et al. [17]. Modern reproducible research tools like Sweave [18], knitr [19], and iPython [20] notebooks take this a step further and combine the research report with the code. Reproducible research is itself an ongoing area of research and a very important area that we all need to pay attention to.
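As a small example of being systematic, the sketch below gives a randomized step its own seeded generator. It returns the result together with the seed, sample size and Python version. The bootstrap standard error serves only as an example of an analysis that uses random numbers, and the seed value is arbitrary. Recording the version matters because Python guarantees a stable sequence for a given seed only for `random()` itself, not for every helper such as `choices()`.

```python
import platform
import random
import statistics
import sys


def bootstrap_se_of_mean(values, n_resamples=2000, seed=12345):
    """Bootstrap standard error of the mean, driven by its own seeded generator."""
    rng = random.Random(seed)  # private generator: unaffected by other code using `random`
    means = [statistics.mean(rng.choices(values, k=len(values))) for _ in range(n_resamples)]
    return statistics.stdev(means)


def run_analysis(values, seed=12345):
    """Return the result together with what is needed to reproduce it."""
    return {
        "bootstrap_se": bootstrap_se_of_mean(values, seed=seed),
        "seed": seed,
        "n": len(values),
        "python": sys.version.split()[0],
        "platform": platform.platform(),
    }


data = [4.1, 5.3, 3.8, 6.0, 4.7]  # hypothetical measurements
first, second = run_analysis(data), run_analysis(data)
assert first == second  # same data + same seed + same code -> same output
print(first)
```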

Mark Twain popularized the saying, “There are three kinds of lies: lies, damned lies, and statistics.” It is true that data are frequently used selectively to give arguments a false sense of support. Knowingly misusing data or concealing important information about the way data and data summaries have been obtained is, of course, highly unethical. More insidious, however, are the widespread instances of claims made about scientific hypotheses based on well-intentioned yet faulty statistical reasoning. One of our chief aims here has been to emphasize succinctly many of the origins of such problems and ways to avoid the pitfalls.

A central and common task for us as research investigators is to decipher what our data are able to say about the problems we are trying to solve. Statistics is a language constructed to assist this process, with probability as its grammar. While rudimentary conversations are possible without good command of the language (and are conducted routinely), principled statistical analysis is critical in grappling with many subtle phenomena to ensure that nothing serious will be lost in translation and to increase the likelihood that your research findings will stand the test of time. To achieve full fluency in this mathematically sophisticated language requires years of training and practice, but we hope the Ten Simple Rules laid out here will provide some essential guidelines.

Among the many articles reporting on the ASA’s statement on p-values, we particularly liked a quote from biostatistician Andrew Vickers in [21]: “Treat statistics as a science, not a recipe.” This is a great candidate for Rule 0.

Acknowledgments

We consulted many colleagues informally about this article, but the opinions expressed here are unique to our small committee of authors. We’d like to give a shout out to xkcd.com for conveying statistical ideas with humor, to the Simply Statistics blog as a reliable source for thoughtful commentary, to FiveThirtyEight for bringing statistics to the world (or at least to the media), to Phil Bourne for suggesting that we put together this article, and to Steve Pierson of the American Statistical Association for getting the effort started.

References

- 2. Cox DR, Donnelly CA (2011) Principles of Applied Statistics. Cambridge: Cambridge University Press.

- 3. Leek JT (2015) The Elements of Data Analytic Style. Leanpub, https://leanpub.com/artofdatascience .

- 4. Peng R (2014) The Art of Data Science. Leanpub, https://leanpub.com/artofdatascience .

- 5. Kass RE, Eden UT, Brown EN (2014) Analysis of Neural Data. Springer: New York.

- 11. Meng XL (2014) A trio of inference problems that could win you a Nobel prize in statistics (if you help fund it). In: Lin X, Genest C, Banks DL, Molenberghs G, Scott DW, Wang J-L, editors. Past, Present, and Future of Statistical Science. Boca Raton: CRC Press. pp. 537–562.

- 13. Aschwanden C (2015) Science isn’t broken. August 11 2015 http://fivethirtyeight.com/features/science-isnt-broken/

- 16. Yu B (2015) Data wisdom for data science. April 13 2015 http://www.odbms.org/2015/04/data-wisdom-for-data-science/

- 18. Leisch F (2002) Sweave: Dynamic generation of statistical reports using literate data analysis. In: Härdle W, Rönz H, editors. Compstat: Proceedings in Computational Statistics. Heidelberg: Springer-Verlag. pp. 575–580.

- 19. Xie Y (2014) Dynamic Documents with R and knitr. Boca Raton: CRC Press.


Statistical Treatment Of Data

Statistical treatment of data is essential in order to make use of the data in the right form. Raw data collection is only one aspect of any experiment; the organization of data is equally important so that appropriate conclusions can be drawn. This is what statistical treatment of data is all about.


There are many techniques involved in statistics that treat data in the required manner. Statistical treatment of data is essential in all experiments, whether social, scientific or any other form. Statistical treatment of data greatly depends on the kind of experiment and the desired result from the experiment.

For example, in a survey about a mayoral election, variables such as age, gender and occupation may influence a person's decision to vote for a particular candidate. The data therefore need to be broken down and analyzed by these variables.

An important aspect of statistical treatment of data is the handling of errors. All experiments invariably produce errors and noise. Both systematic and random errors need to be taken into consideration.

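Depending on the type of experiment being performed, Type I and Type II errors also need to be handled. These are false positives (rejecting a null hypothesis that is actually true) and false negatives (failing to reject a null hypothesis that is actually false). They are important to understand and control in order to make sense of the result of the experiment. They cannot be eliminated entirely, and for a fixed sample size, reducing one typically increases the other.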

Treatment of Data and Distribution

Matching data to commonly known distributions is a tremendous help and is closely related to statistical treatment of data. This is because distributions such as the normal probability distribution occur so commonly in nature that they underlie many medical, social and physical experiments.

Therefore, if the data are known to be normally distributed, statistical treatment is easier for the researcher, because a large body of established theory already applies. Care should always be taken, however, not to assume that all data are normally distributed; normality should always be checked with appropriate testing.
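A rough numerical stand-in for a normal Q-Q plot is sketched below. It compares each sorted observation with the value a normal distribution of the same mean and standard deviation would predict at that rank. This is an informal check, not a formal test; formal tests such as Shapiro–Wilk are available in libraries such as SciPy (`scipy.stats.shapiro`). The simulated data and seeds are arbitrary.

```python
import random
from statistics import NormalDist, mean, stdev


def max_qq_gap(data):
    """Largest gap, in SD units, between each sorted observation and the value a
    normal distribution with the same mean and SD predicts at that rank."""
    xs = sorted(data)
    n = len(xs)
    fitted = NormalDist(mean(xs), stdev(xs))
    # Plotting positions (i - 0.5) / n keep every probability strictly inside (0, 1).
    predicted = [fitted.inv_cdf((i - 0.5) / n) for i in range(1, n + 1)]
    return max(abs(obs - pred) for obs, pred in zip(xs, predicted)) / fitted.stdev


normal_like = NormalDist(50, 10).samples(500, seed=1)
rng = random.Random(1)
right_skewed = [rng.expovariate(1.0) for _ in range(500)]
print(round(max_qq_gap(normal_like), 2))   # small
print(round(max_qq_gap(right_skewed), 2))  # much larger: the data are skewed
```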

Statistical treatment of data also involves describing the data. A common way to do this is through measures of central tendency such as the mean, median and mode, which summarize where the data are concentrated. Range, uncertainty and standard deviation help to describe how the data are spread. For example, two distributions with the same mean can have very different standard deviations, which show how closely the data points cluster around the mean.
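A small illustration of that last point uses two made-up sets of scores that share a mean of 50 but differ greatly in spread:

```python
import statistics

# Two hypothetical sets of scores with the same mean but very different spread.
tight = [48, 49, 50, 50, 51, 52]
spread = [20, 35, 50, 50, 65, 80]

for name, scores in [("tight", tight), ("spread", spread)]:
    # Same mean (50); sample standard deviations of about 1.41 and 21.21.
    print(name, statistics.mean(scores), round(statistics.stdev(scores), 2))
```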

Statistical treatment of data is an important aspect of all experimentation today and a thorough understanding is necessary to conduct the right experiments with the right inferences from the data obtained.


Siddharth Kalla (Apr 10, 2009). Statistical Treatment Of Data. Retrieved Aug 23, 2024 from Explorable.com: https://explorable.com/statistical-treatment-of-data

You Are Allowed To Copy The Text

The text in this article is licensed under the Creative Commons-License Attribution 4.0 International (CC BY 4.0) .

This means you're free to copy, share and adapt any parts (or all) of the text in the article, as long as you give appropriate credit and provide a link/reference to this page.

That is it. You don't need our permission to copy the article; just include a link/reference back to this page. You can use it freely (with some kind of link), and we're also okay with people reprinting in publications like books, blogs, newsletters, course-material, papers, wikipedia and presentations (with clear attribution).


Descriptive Statistics | Definitions, Types, Examples

Published on July 9, 2020 by Pritha Bhandari . Revised on June 21, 2023.

Descriptive statistics summarize and organize characteristics of a data set. A data set is a collection of responses or observations from a sample or entire population.

In quantitative research, after collecting data, the first step of statistical analysis is to describe characteristics of the responses, such as the average of one variable (e.g., age), or the relation between two variables (e.g., age and creativity).

The next step is inferential statistics, which help you decide whether your data support or refute your hypothesis and whether your results can be generalized to a larger population.

Table of contents

Types of descriptive statistics, frequency distribution, measures of central tendency, measures of variability, univariate descriptive statistics, bivariate descriptive statistics, other interesting articles, frequently asked questions about descriptive statistics.

There are 3 main types of descriptive statistics:

- The distribution concerns the frequency of each value.

- The central tendency concerns the averages of the values.

- The variability or dispersion concerns how spread out the values are.

You can apply these to assess only one variable at a time, in univariate analysis, or to compare two or more, in bivariate and multivariate analysis.

The examples below use survey data on how often participants did each of the following activities:

- Go to a library

- Watch a movie at a theater

- Visit a national park


A data set is made up of a distribution of values, or scores. In tables or graphs, you can summarize the frequency of every possible value of a variable in numbers or percentages. This is called a frequency distribution.

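Frequency distributions are usually presented in one of two kinds of table:

- Simple frequency distribution table

- Grouped frequency distribution table

In a simple frequency distribution table, each value of a variable is listed with its count: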

| Gender | Number |
|---|---|
| Male | 182 |
| Female | 235 |
| Other | 27 |

From this table, you can see that more women than men or people with another gender identity took part in the study. In a grouped frequency distribution, you can group numerical response values and add up the number of responses for each group. You can also convert each of these numbers to percentages.

| Library visits in the past year | Percent |
|---|---|
| 0–4 | 6% |
| 5–8 | 20% |
| 9–12 | 42% |
| 13–16 | 24% |
| 17+ | 8% |
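Both kinds of table can be produced with a few lines of Python. The sketch below counts exact values for a simple frequency distribution and uses the bins from the table above for a grouped one. It is applied to the six library-visit responses used later in this article (15, 3, 12, 0, 24, 3), so its percentages will not match the table, which summarizes the full sample. The function names are illustrative.

```python
from collections import Counter

# Bins copied from the table above: (label, lowest value, highest value);
# None marks the open-ended "17+" bin.
BINS = [("0–4", 0, 4), ("5–8", 5, 8), ("9–12", 9, 12), ("13–16", 13, 16), ("17+", 17, None)]


def bin_label(value):
    """Name of the bin that contains a whole-number response."""
    for label, low, high in BINS:
        if value >= low and (high is None or value <= high):
            return label
    raise ValueError(f"{value} is outside every bin")


def grouped_frequency(values):
    """(label, count, percent) for each bin, in table order, including empty bins."""
    counts = Counter(bin_label(v) for v in values)
    n = len(values)
    return [(label, counts[label], 100 * counts[label] / n) for label, _, _ in BINS]


responses = [15, 3, 12, 0, 24, 3]
print(Counter(responses))  # simple frequency distribution: count of each exact value
for label, count, percent in grouped_frequency(responses):
    print(f"{label:>6} {count:>3} {percent:5.1f}%")
```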

Measures of central tendency estimate the center, or average, of a data set. The mean, median and mode are 3 ways of finding the average.

Here we will demonstrate how to calculate the mean, median, and mode using the first 6 responses of our survey.

The mean, or M, is the most commonly used method for finding the average.

To find the mean, simply add up all response values and divide the sum by the total number of responses. The total number of responses or observations is called N.

| Data set | 15, 3, 12, 0, 24, 3 |
|---|---|
| Sum of all values | 15 + 3 + 12 + 0 + 24 + 3 = 57 |
| Total number of responses | N = 6 |
| Mean | Divide the sum of values by N to find M: 57/6 = 9.5 |
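The same calculation in Python, as a minimal sketch (the standard library's `statistics.mean` does this for you):

```python
def mean(values):
    """Arithmetic mean M: the sum of all responses divided by N."""
    n = len(values)  # N, the total number of responses
    if n == 0:
        raise ValueError("the mean of an empty data set is undefined")
    return sum(values) / n


print(mean([15, 3, 12, 0, 24, 3]))  # 57 / 6 = 9.5
```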

The median is the value that’s exactly in the middle of a data set.

To find the median, order each response value from the smallest to the biggest. Then, the median is the number in the middle. If there are two numbers in the middle, find their mean.

| Ordered data set | 0, 3, 3, 12, 15, 24 |
|---|---|
| Middle numbers | 3, 12 |
| Median | Find the mean of the two middle numbers: (3 + 12)/2 = 7.5 |
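A minimal Python version of the median rule, covering both odd and even N (`statistics.median` in the standard library behaves the same way):

```python
def median(values):
    """Middle value of the ordered data; the mean of the two middle values when N is even."""
    ordered = sorted(values)
    n = len(ordered)
    if n == 0:
        raise ValueError("the median of an empty data set is undefined")
    mid = n // 2
    if n % 2 == 1:
        return ordered[mid]
    return (ordered[mid - 1] + ordered[mid]) / 2


print(median([15, 3, 12, 0, 24, 3]))  # (3 + 12) / 2 = 7.5
```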

The mode is simply the most popular or most frequent response value. A data set can have no mode, one mode, or more than one mode.

To find the mode, order your data set from lowest to highest and find the response that occurs most frequently.

| Ordered data set | 0, 3, 3, 12, 15, 24 |
|---|---|
| Mode | Find the most frequently occurring response: 3 |
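Below is a sketch of the mode that reports every tied value. One assumption to flag: when no value repeats, this version returns an empty list to match the “no mode” wording above, whereas Python's `statistics.multimode` would return every value in that case.

```python
from collections import Counter


def modes(values):
    """All values tied for the highest frequency; [] when no value repeats."""
    counts = Counter(values)
    if not counts:
        return []
    top = max(counts.values())
    if top == 1 and len(counts) > 1:
        return []  # assumed convention: no value repeats, so no mode
    return sorted(v for v, c in counts.items() if c == top)


print(modes([15, 3, 12, 0, 24, 3]))  # [3]
print(modes([1, 1, 2, 2, 5]))        # [1, 2] (two modes)
print(modes([4, 7, 9]))              # [] (no mode)
```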

Measures of variability give you a sense of how spread out the response values are. The range, standard deviation and variance each reflect different aspects of spread.

The range gives you an idea of how far apart the most extreme response scores are. To find the range, simply subtract the lowest value from the highest value. For the six survey responses above, the range is 24 – 0 = 24.

Standard deviation

The standard deviation (s or SD) measures the typical amount of variability in your data set: roughly, how far each score lies from the mean. (Strictly, it is the square root of the sum of squared deviations divided by N – 1, as the steps below show.) The larger the standard deviation, the more variable the data set is.

There are six steps for finding the standard deviation:

- List each score and find their mean.

- Subtract the mean from each score to get the deviation from the mean.

- Square each of these deviations.

- Add up all of the squared deviations.

- Divide the sum of the squared deviations by N – 1.

- Find the square root of the number you found.

| Raw data | Deviation from mean | Squared deviation |
|---|---|---|
| 15 | 15 – 9.5 = 5.5 | 30.25 |
| 3 | 3 – 9.5 = -6.5 | 42.25 |
| 12 | 12 – 9.5 = 2.5 | 6.25 |
| 0 | 0 – 9.5 = -9.5 | 90.25 |
| 24 | 24 – 9.5 = 14.5 | 210.25 |
| 3 | 3 – 9.5 = -6.5 | 42.25 |
| M = 9.5 | Sum = 0 | Sum of squares = 421.5 |

Step 5: 421.5/5 = 84.3

Step 6: √84.3 = 9.18
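
The six steps above can be traced in a short Python sketch, using the same six responses. `sample_sd` is a hypothetical helper written for illustration; the standard-library `statistics.stdev`, which also divides by N – 1, is used only as a cross-check.

```python
import math
import statistics

def sample_sd(values):
    """Sample standard deviation, following the six listed steps."""
    n = len(values)
    mean = sum(values) / n                    # Step 1: find the mean
    deviations = [x - mean for x in values]   # Step 2: deviation of each score from the mean
    squared = [d ** 2 for d in deviations]    # Step 3: square each deviation
    sum_sq = sum(squared)                     # Step 4: add up the squared deviations (421.5)
    variance = sum_sq / (n - 1)               # Step 5: divide by N - 1 (84.3)
    return math.sqrt(variance)                # Step 6: take the square root

visits = [15, 3, 12, 0, 24, 3]
sd = sample_sd(visits)
print(round(sd, 2))                           # 9.18
print(round(statistics.stdev(visits), 2))     # 9.18 (library cross-check)
```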

The variance is the average of squared deviations from the mean. Variance reflects the degree of spread in the data set. The more spread the data, the larger the variance is in relation to the mean.

To find the variance, simply square the standard deviation. The symbol for variance is s². For this data set, s² = 421.5/5 = 84.3, the value already found in Step 5.
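
A short Python sketch, assuming the standard-library `statistics` module (not mentioned in the original), showing that squaring the sample standard deviation gives the variance from Step 5:

```python
import math
import statistics

visits = [15, 3, 12, 0, 24, 3]

sd = statistics.stdev(visits)   # sample standard deviation (divides by N - 1)
variance = sd ** 2              # s² is the square of the standard deviation
print(round(variance, 1))       # 84.3

# statistics.variance computes s² directly and agrees with squaring s.
assert math.isclose(variance, statistics.variance(visits))
```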


Univariate descriptive statistics focus on only one variable at a time. It’s important to examine data from each variable separately using multiple measures of distribution, central tendency and spread. Programs like SPSS and Excel can be used to easily calculate these.

| Visits to the library | |
|---|---|
| N | 6 |
| Mean | 9.5 |
| Median | 7.5 |
| Mode | 3 |
| Standard deviation | 9.18 |
| Variance | 84.3 |
| Range | 24 |
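
SPSS and Excel are the tools named above; as an assumed alternative, Python's standard-library `statistics` module can reproduce the whole summary table in a few lines. `statistics.multimode` needs Python 3.8 or later, and it returns a list because a data set can have more than one mode.

```python
import statistics

visits = [15, 3, 12, 0, 24, 3]

# Each entry mirrors one row of the summary table.
summary = {
    "N": len(visits),
    "Mean": statistics.mean(visits),
    "Median": statistics.median(visits),
    "Mode": statistics.multimode(visits),                 # all most-frequent values
    "Standard deviation": round(statistics.stdev(visits), 2),
    "Variance": round(statistics.variance(visits), 1),
    "Range": max(visits) - min(visits),
}

for name, value in summary.items():
    print(f"{name}: {value}")
```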

If you consider only the mean as a measure of central tendency, outliers can skew your impression of the “middle” of the data set; the median and mode are less affected by them.
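
To illustrate how an outlier pulls the mean more than the median, the sketch below adds one hypothetical extreme response (120 visits) that is not part of the original data, and uses Python's standard-library `statistics` module.

```python
import statistics

visits = [15, 3, 12, 0, 24, 3]
# Hypothetical extra respondent with an extreme value (not in the original data).
with_outlier = visits + [120]

mean_shift = statistics.mean(with_outlier) - statistics.mean(visits)
median_shift = statistics.median(with_outlier) - statistics.median(visits)

print(f"mean:   {statistics.mean(visits):.1f} -> {statistics.mean(with_outlier):.1f}")      # 9.5 -> 25.3
print(f"median: {statistics.median(visits):.1f} -> {statistics.median(with_outlier):.1f}")  # 7.5 -> 12.0
```

One extreme value moves the mean by almost 16 visits but the median by only 4.5.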

Likewise, because the range is sensitive to outliers, you should also consider the standard deviation and variance to get easily comparable measures of spread.

If you’ve collected data on more than one variable, you can use bivariate or multivariate descriptive statistics to explore whether there are relationships between them.

In bivariate analysis, you simultaneously study the frequency and variability of two variables to see if they vary together. You can also compare the central tendency of the two variables before performing further statistical tests.

Multivariate analysis is the same as bivariate analysis but with more than two variables.

Contingency table

In a contingency table, each cell represents the intersection of two variables. Usually, the independent variable (e.g., gender) is listed along the vertical axis and the dependent variable (e.g., activities) along the horizontal axis. You read “across” the table to see how the independent and dependent variables relate to each other.

Number of visits to the library in the past year

| Group | 0–4 | 5–8 | 9–12 | 13–16 | 17+ |
|---|---|---|---|---|---|
| Children | 32 | 68 | 37 | 23 | 22 |
| Adults | 36 | 48 | 43 | 83 | 25 |

Interpreting a contingency table is easier when the raw data is converted to percentages. Percentages make the rows comparable with each other by making it seem as if each group had exactly 100 observations or participants. When creating a percentage-based contingency table, add the total N for each group (each level of the independent variable) at the end of its row.

Visits to the library in the past year (percentages)

| Group | 0–4 | 5–8 | 9–12 | 13–16 | 17+ | N |
|---|---|---|---|---|---|---|
| Children | 18% | 37% | 20% | 13% | 12% | 182 |
| Adults | 15% | 20% | 18% | 35% | 11% | 235 |
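
Converting counts into row percentages is simple arithmetic: divide each cell by its row total N and multiply by 100. A minimal Python sketch, assuming whole-number rounding as in the percentage table (the helper `row_percentages` is our own, and the bin labels use plain hyphens):

```python
# Counts from the contingency table (visits to the library in the past year).
bins = ["0-4", "5-8", "9-12", "13-16", "17+"]
counts = {
    "Children": [32, 68, 37, 23, 22],
    "Adults": [36, 48, 43, 83, 25],
}

def row_percentages(row):
    """Return each cell as a whole-number percentage of the row total, plus that total N."""
    n = sum(row)
    return [round(100 * c / n) for c in row], n

print("| Group | " + " | ".join(bins) + " | N |")
for group, row in counts.items():
    pcts, n = row_percentages(row)
    print(f"| {group} | " + " | ".join(f"{p}%" for p in pcts) + f" | {n} |")
```

Because each cell is rounded separately, a row may not add up to exactly 100%: the adult row sums to 99%.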

From this table, it is clearer that similar proportions of children and adults (12% and 11%) go to the library 17 or more times a year. Additionally, children most commonly went to the library between 5 and 8 times, while for adults the most common range was 13 to 16 times.

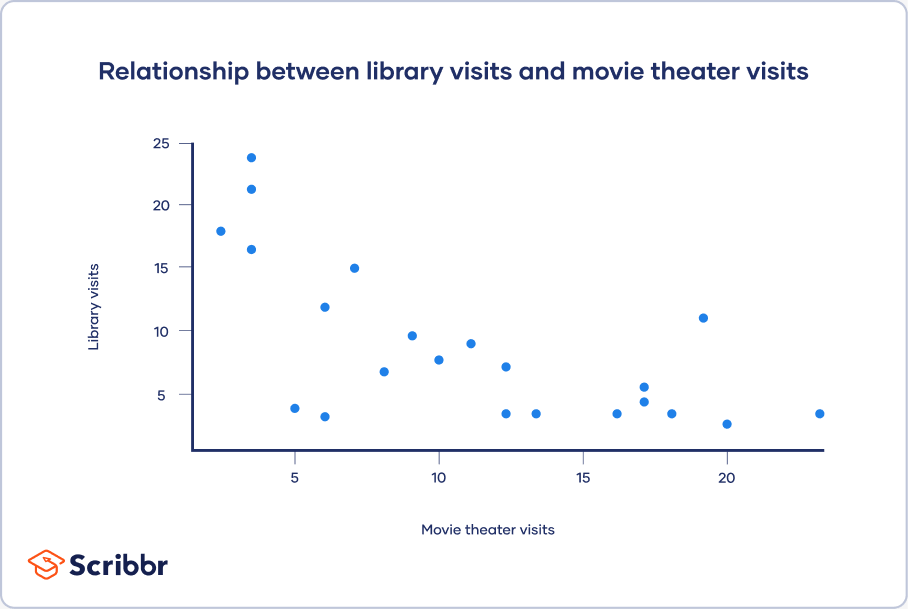

Scatter plots

A scatter plot is a chart that shows you the relationship between two or three variables. It’s a visual representation of the strength of a relationship.

In a scatter plot, you plot one variable along the x-axis and another one along the y-axis. Each data point is represented by a point in the chart.

From your scatter plot, you see that as the number of movies seen at movie theaters increases, the number of visits to the library decreases. Based on your visual assessment of a possible linear relationship, you perform further tests of correlation and regression.
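
The article gives no data for this scatter plot, so the sketch below uses made-up (hypothetical) numbers only to show how a follow-up correlation and regression check could be computed: Pearson's correlation coefficient r and the least-squares slope. `pearson_r_and_slope` is a hypothetical helper, not a library function.

```python
import math

# Hypothetical data (not from the article): movies seen at theaters vs. library visits.
movies = [0, 2, 4, 6, 8, 10]
library = [20, 17, 13, 10, 6, 3]

def pearson_r_and_slope(x, y):
    """Return Pearson's r and the least-squares slope of y on x."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))  # sum of co-deviations
    sxx = sum((a - mx) ** 2 for a in x)                   # squared deviations of x
    syy = sum((b - my) ** 2 for b in y)                   # squared deviations of y
    r = sxy / math.sqrt(sxx * syy)
    slope = sxy / sxx
    return r, slope

r, slope = pearson_r_and_slope(movies, library)
print(f"r = {r:.3f}, slope = {slope:.2f}")  # r = -0.999, slope = -1.73
```

An r close to −1 together with a negative slope matches the pattern described above; with real data, you would also check whether the correlation is statistically significant.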


Descriptive statistics summarize the characteristics of a data set. Inferential statistics allow you to test a hypothesis or assess whether your data is generalizable to the broader population.

The 3 main types of descriptive statistics concern the frequency distribution, central tendency, and variability of a dataset.

- Distribution refers to the frequencies of different responses.

- Measures of central tendency give you the average for each response.

- Measures of variability show you the spread or dispersion of your dataset.

Descriptive statistics can also be grouped by how many variables they describe:

- Univariate statistics summarize only one variable at a time.

- Bivariate statistics compare two variables.

- Multivariate statistics compare more than two variables.

Cite this Scribbr article


Bhandari, P. (2023, June 21). Descriptive Statistics | Definitions, Types, Examples. Scribbr. Retrieved August 21, 2024, from https://www.scribbr.com/statistics/descriptive-statistics/


Pritha Bhandari


Effective Use of Statistics in Research – Methods and Tools for Data Analysis

Remember that sense of dread you feel when you are asked to analyze your data? Now that you have all the required raw data, you need to test your hypothesis statistically. Presenting your numerical data with appropriate statistics will also help break the stereotype of the biology student who can’t do math.

Statistical methods are essential for scientific research. In fact, they run through the whole research process: planning, designing, collecting data, analyzing, interpreting and reporting findings. Without statistical analysis, the results of a research project remain raw data with little meaning. Applying statistics is therefore essential to justify research findings. In this article, we discuss how statistical methods can help biologists draw meaningful conclusions from their studies.


Role of Statistics in Biological Research

Statistics is a branch of science that deals with the collection, organization and analysis of data, and with drawing inferences from a sample to the whole population. Moreover, it helps researchers design a study more carefully and provides a logical basis for accepting or rejecting a hypothesis. Biology, meanwhile, studies living organisms and their complex pathways, which are highly dynamic and difficult to explain by reasoning alone. Statistics helps here by describing and explaining the patterns in the data, taking into account the sample sizes used. In short, statistics reveals the trends in a study.

Biological researchers often neglect statistics when planning their research and mainly use statistical tools at the end of their experiment. This can produce a complicated set of results that is hard to analyze with statistical tools. Instead, statistics can help a researcher approach the study in a stepwise manner, as follows:

1. Establishing a Sample Size

Usually, a biological experiment starts with choosing samples and deciding on the right number of replicate experiments. Basic statistics covers random sampling and the law of large numbers. It shows how drawing a sufficiently large random sample from a large pool helps researchers extrapolate their findings and reduce experimental bias and error.
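
One standard way to see why larger samples reduce error is the standard error of the mean, s/√n, which shrinks as the sample size n grows. The sketch below is illustrative only: it assumes, hypothetically, that the standard deviation stays at 9.18 (the library-visit example) while n increases.

```python
import math

def standard_error(sd, n):
    """Standard error of the mean: sd / sqrt(n)."""
    return sd / math.sqrt(n)

sd = 9.18  # assumed: the spread stays at the library example's SD as n grows
for n in (6, 24, 96, 384):
    print(f"n = {n:>3}: SE = {standard_error(sd, n):.2f}")
```

Quadrupling the sample size halves the standard error, because n enters under a square root.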

2. Testing of Hypothesis

When conducting a statistical study with a large sample pool, biological researchers must make sure that a conclusion is statistically significant. To achieve this, a researcher must state a hypothesis before examining the distribution of the data. Statistics then helps interpret whether the data cluster near the mean or spread across the distribution. These patterns help analyze the sample and determine whether it supports the hypothesis.

3. Data Interpretation Through Analysis

When dealing with large data sets, statistics assists in data analysis. This helps researchers draw sound conclusions from their experiments and observations. Drawing conclusions manually or from visual observation alone may give erroneous results; a thorough statistical analysis instead takes into account all the relevant statistical measures and the variance in the sample to provide a detailed interpretation of the data. As a result, researchers can present detailed data to support their conclusions.

Types of Statistical Research Methods That Aid in Data Analysis

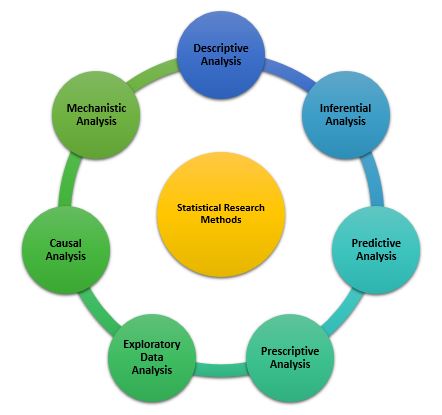

Statistical analysis is the process of organizing samples of data into patterns or trends that help researchers anticipate situations and draw appropriate research conclusions. Based on the type of data, statistical analyses are of the following types:

1. Descriptive Analysis

Descriptive statistical analysis organizes and summarizes large data sets in graphs and tables. It involves processes such as tabulation, measures of central tendency, measures of dispersion or variance, and skewness measurements.
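
Skewness, one of the descriptive measures listed above, can be computed from central moments. A sketch using the moment-based (Fisher-Pearson) coefficient g₁ = m₃ / m₂^1.5, applied to the six library-visit responses from earlier; some packages report a bias-adjusted version instead, so their values can differ.

```python
visits = [15, 3, 12, 0, 24, 3]

def skewness(values):
    """Moment-based (Fisher-Pearson) skewness g1 = m3 / m2**1.5, without bias adjustment."""
    n = len(values)
    mean = sum(values) / n
    m2 = sum((x - mean) ** 2 for x in values) / n  # second central moment
    m3 = sum((x - mean) ** 3 for x in values) / n  # third central moment
    return m3 / m2 ** 1.5

print(round(skewness(visits), 2))  # 0.52
```

A positive value indicates a longer tail toward high values, here driven by the response of 24 visits.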

2. Inferential Analysis

Inferential statistical analysis allows researchers to extrapolate data acquired from a small sample to the complete population. This analysis helps draw conclusions and make decisions about the whole population on the basis of sample data. It is highly recommended for research projects that work with a smaller sample size but aim to extrapolate conclusions to a large population.
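
A confidence interval for the mean is a common example of inferential analysis. The sketch below treats the six library-visit responses as a sample from a larger population and assumes the data are roughly normal; the critical value 2.571 is the two-sided 95% value of Student's t distribution with 5 degrees of freedom, hard-coded from a t table rather than computed.

```python
import math
import statistics

visits = [15, 3, 12, 0, 24, 3]  # treated here as a sample from a larger population

n = len(visits)
mean = statistics.mean(visits)
se = statistics.stdev(visits) / math.sqrt(n)  # standard error of the mean

T_CRIT = 2.571  # two-sided 95% critical value of Student's t, n - 1 = 5 degrees of freedom
low, high = mean - T_CRIT * se, mean + T_CRIT * se
print(f"95% CI for the population mean: {low:.1f} to {high:.1f}")  # -0.1 to 19.1
```

With only six observations the interval is very wide (its lower end even falls slightly below zero, which is impossible for visit counts), a reminder that extrapolating from small samples carries considerable uncertainty.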

3. Predictive Analysis

Predictive analysis is used to predict future events. It is used by marketing companies, insurance organizations, online service providers, data-driven marketers and financial corporations.

4. Prescriptive Analysis

Prescriptive analysis examines data to determine what should be done next. It is widely used in business analysis to find the best possible outcome for a situation. It is closely related to descriptive and predictive analysis; however, prescriptive analysis focuses on recommending the most appropriate course of action among the available options.

5. Exploratory Data Analysis

EDA is generally the first step of the data analysis process and is conducted before any other statistical analysis technique. It focuses on analyzing patterns in the data to recognize potential relationships. EDA is used to discover unknown associations within data, inspect the collected data for missing values and gain as much insight as possible.

6. Causal Analysis

Causal analysis helps determine why things happen the way they do. It helps identify the root cause of failures, or simply the underlying reason why something could happen. For example, causal analysis is used to understand what will happen to a given variable if another variable changes.

7. Mechanistic Analysis

This is the least common type of statistical analysis. Mechanistic analysis is used in big data analytics and the biological sciences. It seeks to understand how changes in individual variables cause corresponding changes in other variables, while excluding external influences.

Important Statistical Tools In Research

Many researchers in the biological field find statistical analysis the most daunting part of completing research. However, statistical tools can help researchers understand what to do with their data and how to interpret the results, making the process as easy as possible.

1. Statistical Package for the Social Sciences (SPSS)

It is a widely used software package for research on human behavior. SPSS can compile descriptive statistics as well as graphical depictions of results. Moreover, it includes the option to create scripts that automate analysis or carry out more advanced statistical processing.

2. R Foundation for Statistical Computing