
21. Qualitative research dissemination

Chapter outline.

- Ethical responsibility and cultural respectfulness (8 minute read time)

- Critical considerations (5 minute read time)

- Informing your dissemination plan (11 minute read time)

- Final product taking shape (10 minute read time)

Content warning: Examples in this chapter contain references to research as a potential tool to stigmatize or oppress vulnerable groups, mistreatment and inequalities experienced by Native American tribes, sibling relationships, caregiving, child welfare, criminal justice and recidivism, first generation college students, Covid-19, school culture and race, health (in)equity, physical and sensory abilities, and transgender youth.

Your sweat and hard work have paid off! You’ve planned your study, collected your data, and completed your analysis. But alas, no rest for the weary student researcher. Now you need to share your findings. As researchers, we generally have some ideas where and with whom we desire to share our findings, but these plans may evolve and change during our research process. Communicating our findings with a broader audience is a critical step in the research process, so make sure not to treat this like an afterthought. Remember, research is about making a contribution to collective knowledge-building in the area of study that you are interested in. Indeed, research is of no value if there is no audience to receive it. You worked hard…get those findings out there!

In planning for this phase of research, we can consider a variety of methods for sharing our study findings. Among other options, we may choose to write our findings up as an article in a professional journal, provide a report to an organization, give testimony to a legislative group, or create a presentation for a community event. We will explore these options in a bit more detail below in section 21.4 where we talk more about different types of qualitative research products. We also want to think about our intended audience.

For your research, answer these two key questions as you are planning for dissemination:

- Who are you trying to reach with your findings? In other words, who needs to hear the results of your study?

- What do you hope your audience will take away after learning about your study?

21.1 Ethical responsibility and cultural respectfulness

Learning Objectives

Learners will be able to…

- Identify key ethical considerations in developing their qualitative research dissemination plan

- Conceptualize how research dissemination may impact diverse groups, both presently and into the future

Have you ever been misrepresented or portrayed in a negative light? It doesn’t feel good. It especially doesn’t feel good when the person portraying us has power, control and influence. While you might not feel powerful, research can be a powerful tool, and can be used and abused for many ends. Once research is out in the world, it is largely out of our control, so we need to approach dissemination with care. Be thoughtful about how you represent your work and take time to think through the potential implications it may have, both intended and unintended, for the people it represents.

As alluded to in the paragraph above, research comes with hefty responsibilities. You aren’t off the hook if you are conducting quantitative research. While quantitative research deals with numbers, these numbers still represent people and their relationships to social problems. However, with qualitative research, we are often dealing with a smaller sample and trying to learn more from them. As such, our job often carries additional weight as we think about how we will represent our findings and the people they reflect. Furthermore, we probably hope that our research has an impact; that in some way, it leads to change around some issue. This is especially true for social work researchers. Our research often deals with oppressed groups, social problems, and inequality. However, it’s hard to predict the implications that our research may have. This suggests that we need to be especially thoughtful about how we present our research to others.

Two of the core values of social work are respecting the inherent dignity and worth of each person and practicing with integrity, which includes behaving in a trustworthy manner [1]. As social work researchers, to uphold these values, we need to consider how we are representing the people we are researching. Our work needs to honestly and accurately reflect our findings, but it also needs to be sensitive and respectful to the people it represents. In Chapter 8 we discussed research ethics and introduced the concept of beneficence, or the idea that research needs to support the welfare of participants. Beneficence is particularly important as we think about our findings becoming public and how the public will receive, interpret and use this information. Thus, both as social workers and researchers, we need to be conscientious of how dissemination of our findings takes place.

As you think about the people in your sample and the communities or groups to which they belong, consider some of these questions:

- How are participants being portrayed in my research?

- What characteristics or findings are being shared or highlighted in my research that may directly or indirectly be associated with participants?

- Have the groups that I am researching been stigmatized, stereotyped, and/or misrepresented in the past? If so, how does my research potentially reinforce or challenge these representations?

- How might my research be perceived or interpreted by members of the community or group it represents?

- In what ways does my research honor the dignity and worth of participants?

Qualitative research often has a voyeuristic quality to it, as we are seeking a window into participants’ lives by exploring their experiences, beliefs, and values. As qualitative researchers, we have a role as stewards or caretakers of data. We need to be mindful of how data are gathered, maintained, and most germane to our conversation here, how data are used. We need to craft research products that honor and respect individual participants (micro), our collective sample as a whole (meso), and the communities that our research may represent (macro).

As we prepare to disseminate our findings, our ethical responsibilities as researchers also involve honoring the commitments we have made during the research process. We need to think back to our early phases of the research process, including our initial conversations with research partners and other stakeholders who helped us to coordinate our research activities. If we made any promises along the way about how the findings would be presented or used, we need to uphold them here. Additionally, we need to abide by what we committed to in our informed consent. Part of our informed consent involves letting participants know how findings may be used. We need to present our findings according to these commitments. We of course also have a commitment to represent our research honestly.

As an extension of our ethical responsibilities as researchers, we need to consider the impact that our findings may have, as well as our need to be socially conscientious researchers. As scouts, we were taught to leave our campsite in a better state than when we arrived. I think it is helpful to think of research in these terms. Think about the group(s) that may be represented by your research; what impact might your findings have on the lives of members of this group? Will it leave their lives in a better state than before you conducted your research? As a responsible researcher, you need to be thoughtful, aware and realistic about how your research findings might be interpreted and used by others. As social workers, while we hope that findings will be used to improve the lives of our clients, we can’t ignore that findings can also be used to further oppress or stigmatize vulnerable groups; research is not apolitical and we should not be naive about this. It is worth mentioning the concept of sustainable research here. Sustainable research involves conducting research projects that have a long-term, sustainable impact for the social groups we work with. As researchers, this means that we need to actively plan for how our research will continue to benefit the communities we work with into the future. This can be supported by staying involved with these communities, routinely checking in and seeking input from community members, and making sure to share our findings in ways that community members can access, understand, and use. Nate Olson provides a very inspiring TED Talk about the importance of building resilient communities. As you consider your research project, think about it in these terms.

Key Takeaways

- As you think about how best to share your qualitative findings, remember that these findings represent people. As such, we have a responsibility as social work researchers to ensure that our findings are presented in honest, respectful, and culturally sensitive ways.

- Since this phase of research deals with how we are going to share our findings with the public, we need to actively consider the potential implications of our research and how it may be interpreted and used.

Is your work, in some way, helping to contribute to a resilient and sustainable community? It may not be a big tangible project as described in Olson’s TED Talk, but is it providing a resource for change and growth to a group of people, either directly or indirectly? Does it promote sustainability amongst the social networks that might be impacted by the research you are conducting?

21.2 Critical considerations

- Identify how issues of power and control are present in the dissemination of qualitative research findings

- Begin to examine and account for their own role in the qualitative research process, and address this in their findings

This is the part of our research that is shared with the public and because of this, issues like reciprocity, ownership, and transparency are relevant. We need to think about who will have access to the tangible products of our research and how that research will get used. As researchers, we likely benefit directly from research products; perhaps it helps us to advance our career, obtain a good grade, or secure funding. Our research participants often benefit indirectly by advancing knowledge about a topic that may be relevant or important to them, but often don’t experience the same direct tangible benefits that we do. However, a participatory perspective challenges us to involve community members from the outset in discussions about what changes would be most meaningful to their communities and what research products would be most helpful in accomplishing those changes. This is especially important as it relates to the role of research as a tool to support empowerment.

Ownership of research products is also important as an issue of power and control. We will discuss a range of venues for presenting your qualitative research, some of which are more amenable to shared ownership than others. For instance, if you are publishing your findings in an academic journal, you will need to sign an agreement with that publisher about how the information in that article can be used and who has access to it. Similarly, if you are presenting findings at a national conference, travel and other conference-related expenses and requirements may make access to these research products prohibitive. In these instances, the researcher and the organization(s) they negotiate with (e.g. the publishing company, the conference organizing body) share control. However, disseminating qualitative findings in a public space, public record, or community-owned resource means that more equitable ownership might be negotiated. It might not always be possible to reach an equitable or reciprocal arrangement, however. Transparency about who owns the products of research is important if you are working with community partners. To support this, establishing a Memorandum of Understanding (MOU) or Memorandum of Agreement (MOA) early in the research process is important. This document should clearly articulate roles, responsibilities, and a number of other details, such as ownership of research products between the researcher and the partnering group(s).

Resources for learning more about MOUs and MOAs

Center for Community Health and Development, University of Kansas. (n.d.). Community toolbox: Section 9. Understanding and writing contracts and memoranda of agreement [Webpage]. https://ctb.ku.edu/en/table-of-contents/structure/organizational-structure/understanding-writing-contracts-memoranda-agreement/main

Collaborative Center for Health Equity, University of Wisconsin-Madison. (n.d.). Standard agreement for research with community organizations [Template]. https://d1uqjtzsuwlnsf.cloudfront.net/wp-content/uploads/sites/163/2018/08/CCHE-UW-MOU-sample.pdf

Office of Research, UC Davis. (n.d.). Research MOUs [Webpage]. https://research.ucdavis.edu/proposals-grants-contracts/international-agreements/memorandum-understanding/

Office of Research, The University of Texas at Dallas. (n.d.). Types of agreements [Webpage]. https://research.utdallas.edu/researchers/contracts/types-of-agreements

In our discussion about qualitative research, we have also frequently identified the need for the qualitative researcher to account for their role throughout the research process. Part of this accounting can specifically apply to qualitative research products. This is our opportunity to demonstrate to our audience that we have been reflective throughout the course of the study and how this has influenced the work we did. Some qualitative research studies include a positionality statement within the final product. This is often toward the beginning of the report or the presentation and includes information about the researchers’ identity and worldview, particularly details relevant to the topic being studied. This can include why you are invested in the study, what experiences have shaped how you have come to think about the topic, and any positions or assumptions you make with respect to the topic. This is another way to encourage transparency. It can also be a means of relinquishing or at least acknowledging some of our power in the research process, as it can provide one modest way for us, as the researcher, to be a bit more exposed or vulnerable, although this is a far cry from making the risks of research equitable between the researcher and the researched. However, the positionality statement can be a place to integrate our identities: who we are as an individual, a researcher, and a social work practitioner. Granted, for some of us that might be volumes, but we need to condense this down to a brief but informative statement – don’t let it eclipse the research! It should just be enough to inform the audience and allow them to draw their own conclusions about who is telling the story of this research and how well they can be trusted. This student provides a helpful discussion of the positionality statement that she developed for her study.

Reviewing your reflexive journal (discussed in Chapter 20 as a tool to enhance qualitative rigor) can help in identifying underlying assumptions and positions you might have grounded in your reactions throughout the research process. These insights can be integrated into your positionality statement. Please take a few minutes to watch this informative video of a student further explaining what a positionality statement is and providing a good example of one.

- The products of qualitative research often benefit the researcher disproportionately when compared to research participants or the communities they represent. Whenever possible, we can seek out ways to disseminate research that address this imbalance and support more tangible and direct benefits to community members.

- Openly positioning ourselves in our dissemination plans can be an important way for qualitative researchers to be transparent and account for our role.

21.3 Informing your dissemination plan

- Appraise important dimensions of planning that will inform their research dissemination plan, including: audience, purpose, context and content

- Apply this appraisal to key decisions they will need to make when designing their qualitative research product(s)

This section will offer you a general overview of points to consider as you form the dissemination plan for your research. We will start with considerations regarding your audience, then turn our attention to the purpose of your research, and finally consider the importance of attending to both content and context as you plan for your final research product(s).

Perhaps the most important consideration you have as you plan how to present your work is your audience. Research is a product that is meant to be consumed, and because of this, we need to be conscious of our consumers. We will speak more extensively about knowing your audience in Chapter 24, devoted to both sharing and consuming research. Regardless of who your audience is (e.g. community members, classmates, research colleagues, practicing social workers, state legislators), there will be common elements that will be important to convey. While the way you present them will vary greatly according to who is listening, Table 21.1 offers a brief review of the elements that you will want your audience to leave with.

| Element | Key question |
| --- | --- |
| Aim | What did my research aim to accomplish and why is it important? |
| Process | How did I go about conducting my research and what did I do to ensure quality? |
| Concepts | What are the main ideas that someone needs to know to make sense of my topic and how are they integrated into my research plan? |
| Findings | What were my results and under what circumstances are they valid? |
| Connection | How are my findings connected to what else we know about this topic and why are they important? |

Once we determine who our audience is, we can further tailor our dissemination plan to that specific group. Of course, we may be presenting our findings in more than one venue, and in that case, we will have multiple plans that will meet the needs of each specific audience.

It’s a good idea to pitch your plan first. However you plan to present your findings, you will want to have someone preview your work before you share it with a wider audience. Ideally, whoever previews it will be a person from your target audience or at least someone who knows them well. Getting feedback can go a long way in helping us with the clarity with which we convey our ideas and the impact they have on our audience. This might involve giving a practice speech, having someone review your article or report, or practicing discussing your research one-on-one, as you would with a poster presentation. Let’s talk about some specific audiences that you may be targeting and their unique needs or expectations.

Below I will go through some brief considerations for each of these different audiences. I have tried to focus this discussion on elements that are specific to qualitative studies since we do revisit this topic in Chapter 24.

Research community

When presenting your findings to an academic audience or other research-related community, it is probably safe to make a few assumptions. This audience is likely to have a general understanding of the research process and what it entails. For this reason, you will have to do less explaining of research-related terms and concepts. However, compared to other audiences, you will probably have to provide a bit more detail about what steps you took in your research process, especially as they relate to qualitative rigor, because this group will want to know about how your research was carried out and how you arrived at your decisions throughout the research process. Additionally, you will want to make a clear connection between which qualitative design you chose and your research question; that is, a methodological justification. Researchers will also want to have a good idea about how your study fits within the wider body of scientific knowledge that it is related to and what future studies you feel are needed based on your findings. You are likely to encounter this audience if you are disseminating through a peer-reviewed journal article, presenting at a research conference, or giving an invited talk in an academic setting.

Professional community

We often find ourselves presenting our research to other professionals, such as social workers in the field. While this group may have some working knowledge of research, they are likely to be much more focused on how your research is related to the work they do and the clients they serve. While you will need to convey your design accurately, this audience is most likely to be invested in what you learned and what it means (especially for practice). You will want to set the stage for the discussion by doing a good job expressing your connection to and passion for the topic (a positionality statement might be particularly helpful here), what we know about the issue, and why it is important to their professional lives. You will want to give good contextual information for your qualitative findings so that practitioners can know if these findings might apply to people they work with. Also, since social work practitioners generally place emphasis on person-centered practice, hearing the direct words of participants (quotes) whenever possible is likely to be impactful as we present qualitative results. Where academics and researchers will want to know about implications for future research, professionals will want to know about implications for how this information could help transform services in the future or understand the clients they serve.

Lay community

The lay community are people who don’t necessarily have specialized training or knowledge of the subject, but may be interested or invested for some other reason; perhaps the issue you are studying affects them or a loved one. Since this is the general public, you should expect to spend the most time explaining scientific knowledge and research processes and terminology in accessible terms. Furthermore, you will want to invest some time establishing a personal connection to the topic (like I talked about for the professional community). They will likely want to know why you are interested and why you are a credible source for this information. While this group may not be experts on research, as potential members of the group(s) that you may be researching, you do want to remember that they are experts in their own community. As such, you will want to be especially mindful of approaching how you present findings with a sense of cultural humility (although hopefully you have this in mind across all audiences). It will be good to discuss what steps you took to ensure that your findings accurately reflect what participants shared with you (rigor). You will want to be most clear with this group about what they should take away, without overstating your findings.

Regardless of who your audience is, remember that you are an ambassador. You may represent a topic, a population, an organization, or the whole institution of research, or any combination of these. Make sure to present your findings honestly, ethically, and clearly. Furthermore, I’m assuming that the research you are conducting is important because you have spent a lot of time and energy to arrive at your findings. Make sure that this importance comes through in your dissemination. Tell a compelling story with your research!

Who needs to hear the message of your qualitative research?

- Example. If you are presenting your research about caregiver fatigue to a caregiver support group, you won’t need to spend time describing the role of caregivers because your audience will have lived experience.

- Example. If you are presenting your research findings to a group of academics, you wouldn’t have to explain what a sampling frame is, but if you are sharing it with a group of community members from a local housing coalition, you will need to help them understand what this is (or maybe use a phrase that is more meaningful to them).

- Example. If you are speaking to a group of child welfare workers about your study examining trauma-informed communication strategies, they are probably going to want to know how these strategies might impact the work that they do.

- Example. If you are sharing your findings at a meeting with a council member, it may be especially meaningful to share direct quotes from constituents.

Being clear about the purpose of your research from the outset is immeasurably helpful. What are you hoping to accomplish with your study? We can certainly look to the overarching purpose of qualitative research, that being to develop/expand/challenge/explore understanding of some topic. But, what are you specifically attempting to accomplish with your study? Two of the main reasons we conduct research are to raise awareness about a topic and to create change around some issue. Let’s say you are conducting a study to better understand the experience of recidivism in the criminal justice system. This is an example of a study whose main purpose is to better understand and raise awareness around a particular social phenomenon (recidivism). On the other hand, you could also conduct a study that examines the use of strengths-based strategies by probation officers to reduce recidivism. This would fall into the category of research promoting a specific change (the use of strengths-based strategies among probation officers). I would wager that your research topic falls into one of these two very broad categories. If this is the case, how would you answer the corresponding questions below?

Are you seeking to raise awareness of a particular issue with your research? If so,

- Whose awareness needs raising?

- What will “speak” most effectively to this group?

- How can you frame your research so that it has the most impact?

Are you seeking to create a specific change with your research? If so,

- What will that change look like?

- How can your research best support that change occurring?

- Who has the power to create that change and what will be most compelling in reaching them?

How you answer these questions will help to inform your dissemination plan. For instance, your dissemination plan will likely look very different if you are trying to persuade a group of legislators to pass a bill versus trying to share a new model or theory with academic colleagues. Considering your purposes will help you to convey the message of your research most effectively and efficiently. We invest a lot of ourselves in our research, so make sure to keep your sights focused on what you hope to accomplish with it!

Content and context

As a reminder, qualitative research often has a dual responsibility for conveying both content and context. You can think of content as the actual data that is shared with us or that we obtain, while context is the circumstances under which that data sharing occurs. Content conveys the message and context provides us the clues with which we can decode and make sense of that message.

While quantitative research may provide some contextual information, especially in describing its sample, context rarely receives as much attention or detail there as it does in qualitative studies. Because of this, you will want to plan for how you will attend to both the content and context of your study in planning for your dissemination.

- Research is an intentional act; you are trying to accomplish something with it. To be successful, you need to approach dissemination planfully.

- Planning the most effective way of sharing our qualitative findings requires looking beyond what is convenient or even conventional, and requires us to consider a number of factors, including our audience, the purpose or intent of our research and the nature of both the content and the context that we are trying to convey.

21.4 Final product taking shape

- Evaluate the various means of disseminating research and consider their applicability for your research project

- Determine appropriate building blocks for designing your qualitative research product

As we have discussed, qualitative research takes many forms. It should then come as no surprise that qualitative research products also come in many different packages. To help guide you as the final products of your research take shape, we will discuss some of the building blocks or elements that you are likely to include as tools in sharing your qualitative findings. These are the elements that will allow you to flesh out the details of your dissemination plan.

Building blocks

There are many building blocks that are at our disposal as we formulate our qualitative research product(s). Quantitative researchers have charts, graphs, tables, and narrative descriptions of numerical output. These tools allow the quantitative researcher to tell the story of their research with numbers. As qualitative researchers, we are tasked with telling the story of our research findings as well, but our tools look different. While this isn’t an exhaustive list of tools that are at our disposal as qualitative researchers, a number of commonly used elements in sharing qualitative findings are discussed here. Depending on your study design and the type of data you are working with, you may use one or some combination of the building blocks discussed below.

Themes are a very common element when presenting qualitative research findings. They may be called themes, but they may also go by other names: categories, dimensions, main ideas, etc. Themes offer the qualitative researcher a way to share ideas that emerged from your analysis that were shared by multiple participants or across multiple sources of data. They help us to distill the large amounts of qualitative data that we might be working with into more concise and manageable pieces of information that are more consumable for our audience. When integrating themes into your qualitative research product, you will want to offer your audience: the title of the theme (try to make this as specific/meaningful as possible), a brief description or definition of the theme, any accompanying dimensions or sub-themes that may be relevant, and examples (when appropriate).

Quotes offer you the opportunity to share participants’ exact words with your audience. Of course, we can’t only rely on quotes, because we need to knit the information that is shared into one cohesive description of our findings and an endless list of quotes is unlikely to support this. Because of this, you will want to be judicious in selecting your quotes. Choose quotes that can stand on their own, best reflect the sentiment that is being captured by the theme or category of findings that you are discussing, and are likely to speak to and be understood by your audience. Quotes are a great way to help your findings come alive or to give them greater depth and significance. If you are using quotes, be sure to do so in a balanced manner – don’t only use them in some sections but not others, or use a large number to support one theme and only one or two for another. Finally, we often provide some brief demographic information in a parenthetical reference following a quote so our reader knows a little bit about the person who shared the information. This helps to provide some context for the quote.

Kohli and Pizarro (2016) [2] provide a good example of a qualitative study using quotes to exemplify their themes. In their study, they gathered data through short-answer questionnaires and in-depth interviews from racial-justice oriented teachers of Color. Their study explored the experiences and motivations of these teachers and the environments in which they worked. As you might guess, the words of the teacher-participants were especially powerful and the quotes provided in the results section were very informative and important in helping to fulfill the aim of the research study. Take a few minutes to review this article. Note how the authors provide a good amount of detail as to what each of the themes meant and how they used the quotes to demonstrate and support each theme. The quotes help bring the themes to life and anchor the results in the actual words of the participants (suggesting greater trustworthiness in the findings).

Figure 21.1 below offers a more extensive example of a theme being reported along with supporting quotes from a study conducted by Karabanow, Gurman, and Naylor (2012) [3]. This study focused on the role of work activities in the lives of “Guatemalan street youth”. One of the important themes had to do with the intersection of work and identity for this group. In this example, brief quotes are woven into the body of the description of the theme, and longer quotes (one or more full sentences) demonstrate important aspects of the description.

| Work, be it formal or informal, is beneficial for street youth not only for its financial benefits; but it helps youth to develop and rebuild their sense of self, to break away from destructive patterns, and ultimately contributes to any goals of exiting street life. Although many of the participants were aware that society viewed them as “lazy” or “useless,” they tended to see themselves as contributing members of society earning a valid and honest living. One participant said, “Well, a lot of people say, right? ‘The kid doesn’t want to do anything. Lazy kid’ right? And I wouldn’t like for people to say that about me, I’d rather do something so that they don’t say that I’m lazy. I want to be someone important in life.” This youth makes an interesting and important connection in this statement: he intrinsically associates “being someone” with “doing something” – he accepts the work-based identity that characterizes much of contemporary capitalist society. Many of the interviews subtly enforced this idea that in the informal economy, as in the formal economy, “who one is” is largely dependent on “what one does.” This demonstrates two important ideas: that street youth working in the informal sector are surprisingly ‘mainstream’ in their underlying beliefs and ambitions, and that work – be it formal or informal – plays a crucial role in allowing street youth, who have often dealt with trauma, isolation and low self-esteem, to rebuild a sense of self-worth. 
Many of the youth involved in this study dream of futures that echo traditional ideals: “to have my family all together…to have a home, or rather to have a nice house…to have a good job.” Several explained that this future is unattainable without hard work; many viewed those who “do nothing” as people who “waste their time” and think that “your life isn’t important to you.” On the other hand, those who value their lives and wish to attain a future of “peace and tranquility” must “look for work, that’s what needs to be done to have that future because if God allows it, in the future maybe you can find a partner, form a family and live peacefully.” For these youth, working – be it in the formal or informal sector – is essential to a feeling of “moving forward ( ).” This movement forward begins with self-esteem. Although the focus of this study was not the troubled pasts of the participants, many alluded to the difficulties they have faced and the various traumas that forced them onto the streets. Several of the youth noted that working was a catalyst in rebuilding positive feelings about oneself: one explained, “[When I’m working,] I feel happy, powerful…Sometimes when I go out to sell, I feel happy.” Another said: For me, when I’m working I feel free because I know that I’m earning my money in an honest way, not stealing right. Because when you’re stealing, you don’t feel free, right? Now when you’re working, you’re free, they can’t arrest you or anything because you’re selling. Now if you’re stealing and everything, you don’t feel free. But when you’re selling you feel free, out of danger. This feeling of being “free” or “powerful” rests on the idea that money is “earned” and not stolen; being able to earn money is associated with being “someone,” with being a valid and contributing member of society. In addition, work helps street youth to break away from destructive patterns. 
One participant spoke of her experience working full time at a café: For me, working means to be busy, to not just be there….It helps us meet other people, like new people and not to be always in the same scene. Because if you’re not busy, you feel really bored and you might want to, I don’t know, go back to the same thing you were in before…you even forget your problems because you’re keeping busy, you’re talking to other people, people who don’t know you. For this participant, a formal job was beneficial in that it supplied her with a daily routine and allowed her to interact with non-street people – these factors helped to separate her from the destructive lifestyle of the street, and helped her to “move forward.” Although these benefits are indeed most obvious with formal employment, many participants spoke of the positive effects of informal work as well, although to varying degrees. In Guatemala, since the informal economy accounts for over half of the country’s GNP, there is a wide range of under-the-table informal work available. These jobs frequently bring youth out of the street context and, therefore, provide similar benefits to a formal job, as described by the above participant. As to informal work that takes place on the street, such as hawking or car watching, the benefits of work are present, although to a different degree. Even hawking, for example, gives young workers a routine and a chance to interact with non-street people. As one young man continuously emphasized throughout his interview, “work helps you to keep your mind busy, to be in another mind-set, right? To not be thinking the same thing all the time: ‘Oh, drugs, drugs, drugs…’” As explained earlier, the code of the hawking world dictates that vendors cannot sell while high – just like a formal job, hawking helps to distance youth workers from some of their destructive street habits. 
However, as one participant thoughtfully noted, it is difficult to break these habits when one is still highly embroiled in street culture; “it depended on who was around me because if they were in the same problems as I was, I stopped working and I started doing the same as they did. And if I was surrounded by serious people, then I got my act together.” While certain types of informal work, like cleaning or waitressing, can help youth to distance themselves from destructive patterns, others, such as car watching and selling, may not do enough to separate youth from their peers. While the routine and activity do have positive effects, they often are not sufficient. Among some of the participants, there was the sentiment that informal work could function as a transition stage towards exiting the street; it could “change your life.” One participant said “there are lots of vendors who’ve gotten off the streets, if you make an effort, you go out to sell, you can get off the street. Like myself, when I was selling, I mean working, I got off the street, I went home and I managed to stay there quite a long time.” One might credit this success to several factors: first, the money the seller may have been able to save and accumulate; second, the routine of selling may have helped the seller to break from destructive patterns, such as drug use, and also prepared the seller for the demands of formal sector employment; and, thirdly, selling may have enabled the seller to develop the necessary confidence and sense of self to attempt exiting the street. |

Pictures or videos

If our data collection involves the use of photographs, drawings, videos or other artistic expression of participants or collection of artifacts, we may very well include selections of these in our dissemination of qualitative findings. In fact, if we failed to include these, it would seem a bit inauthentic. For the same reason we include quotes as direct representations of participants’ contributions, it is a good idea to provide direct reference to other visual forms of data that support or demonstrate our findings. We might incorporate narrative descriptions of these elements or quotes from participants that help to interpret their meaning. Integrating pictures and quotes is especially common if we are conducting a study using a Photovoice approach, as we discussed in Chapter 17, where a main goal of the research technique is to bring together participant-generated visuals with collaborative interpretation.

Take some time to explore the website linked here. It is the webpage for The Philadelphia Collaborative for Health Equity’s PhotoVoice Exhibit Gallery and offers a good demonstration of research that brings together pictures and text.

Graphic or figure

Qualitative researchers will often create a graphic or figure to visually reflect how the various pieces of your findings come together or relate to each other. Using a visual representation can be especially compelling for people who are visual learners. When you are using a visual representation, you will want to: label all elements clearly; include all the components or themes that are part of your findings; pay close attention to where you place and how you orient each element (as their spatial arrangement carries meaning); and finally, offer a brief but informative explanation that helps your reader to interpret your representation. A special subcategory of visual representation is process. These are especially helpful to lay out a sequential relationship within your findings or a model that has emerged out of your analysis. A process or model will show the ‘flow’ of ideas or knowledge in our findings, the logic of how one concept proceeds to the next and what each step of the model entails.

Noonan and colleagues (2004) [4] conducted a qualitative study that examined the career development of high achieving women with physical and sensory disabilities. Through the analysis of their interviews, they built a model of career development based on these women’s experiences with a figure that helps to conceptually illustrate the model. They place the ‘dynamic self’ in the center, surrounded by a dotted (permeable) line, with a number of influences outside the line (i.e. family influences, disability impact, career attitudes and behaviors, sociopolitical context, developmental opportunities and social support) and arrows directed inward and outward between each influence and the dynamic self to demonstrate mutual influence/exchange between them. The image is included in the results section of their study and brings together “core categories” and demonstrates how they work together in the emergent theory or how they relate to each other. Because so many of our findings are dynamic, like those of Noonan and colleagues, showing interaction and exchange between ideas, figures can be especially helpful in conveying this as we share our results.

Going one step further than the graphic or figure discussed above, qualitative researchers may decide to combine and synthesize findings into one integrated representation, known as a composite. In the case of the graphic or figure, the individual elements still maintain their distinctiveness, but are brought together to reflect how they are related. In a composite, however, rather than just showing that they are related (static), the audience actually gets to ‘see’ the elements interacting (dynamic). The integrated and interactive findings of a composite can take many forms. It might be a written narrative, such as a fictionalized case study that reflects or highlights the many aspects that emerged during analysis. It could be a poem, dance, painting or any other performance or medium. Ultimately, a composite offers an audience a meaningful and comprehensive expression of our findings. If you are choosing to utilize a composite, there is an underlying assumption that is conveyed: you are suggesting that the findings of your study are best understood holistically. When each finding is discussed individually, it loses some of its potency or significance, so a composite is required to bring them together. As an example of a composite, consider that you are conducting research with a number of First Nations Peoples in Canada. After consulting with a number of Elders and learning about the importance of oral traditions and the significance of storytelling, you collaboratively determine that the best way to disseminate your findings will be to create and share a story as a means of presenting your research findings. The use of composites also assumes that the ‘truths’ revealed in our data can take many forms. The Transgender Youth Project, hosted by the Mandala Center for Change, is an example of legislative theatre combining research, artistic expression, and political advocacy and a good example of action-oriented research.

While you haven’t heard much about numbers in our qualitative chapters, I’m going to break with tradition and speak briefly about them here. For many qualitative projects we do include some numeric information in our final product(s), mostly in the way of counts. Counts usually show up in the way of frequency of demographic characteristics of our sample or characteristics regarding our artifacts, if they aren’t people. These may be included as a table or they may be integrated into the narrative we provide, but in either case, our goal in including this information is to offer the reader information so they can better understand who or what our sample is representing. The other time we sometimes include count information is in respect to the frequency and coverage of the themes or categories that are represented in our data. Frequency information about a theme can help the reader to know how often an idea came up in our analysis, while coverage can help them to know how widely dispersed this idea was (e.g. did nearly everyone mention this, or was it a small group of participants).
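To make the difference between frequency and coverage concrete, here is a minimal Python sketch. It assumes our coded data can be represented as simple (participant, theme) pairs, one pair per coded segment. The participant IDs, theme labels, and the `theme_counts` function are all hypothetical and only meant for illustration; qualitative analysis software may report these numbers in a different way.

```python
from collections import Counter

# Hypothetical coded segments: one (participant_id, theme) pair per coded excerpt.
coded_segments = [
    ("P1", "work and identity"),
    ("P1", "work and identity"),
    ("P2", "work and identity"),
    ("P2", "breaking destructive patterns"),
    ("P3", "work and identity"),
    ("P4", "breaking destructive patterns"),
]


def theme_counts(segments, total_participants):
    """Return frequency and coverage for each theme.

    frequency: how many coded segments carry the theme.
    coverage: the share of all participants who mentioned the theme at least once.
    """
    frequency = Counter(theme for _, theme in segments)

    # Collect the distinct participants who contributed to each theme.
    participants_by_theme = {}
    for participant_id, theme in segments:
        participants_by_theme.setdefault(theme, set()).add(participant_id)

    return {
        theme: {
            "frequency": frequency[theme],
            "participants": len(participants_by_theme[theme]),
            "coverage": len(participants_by_theme[theme]) / total_participants,
        }
        for theme in frequency
    }


summary = theme_counts(coded_segments, total_participants=4)
for theme, counts in summary.items():
    print(theme, counts)
```

In this made-up sample, "work and identity" was coded four times (frequency) and came from three of the four participants (coverage), so the two numbers answer different questions. Passing the total number of participants separately is a deliberate choice: a participant who never mentioned any theme still counts toward the denominator.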

- There are a wide variety of means by which you can deliver your qualitative research to the public. Choose one that takes into account the various considerations that we have discussed above and also honors the ethical commitments that we outlined early in this chapter.

- Presenting qualitative research requires some amount of creativity. Utilize the building blocks discussed in this chapter to help you consider how to most authentically and effectively convey your message to a wider audience.

What means of delivery will you be choosing for your dissemination plan?

What building blocks will best convey your qualitative results to your audience?

- National Association of Social Workers. (2017). NASW code of ethics. Retrieved from https://www.socialworkers.org/About/Ethics/Code-of-Ethics/Code-of-Ethics-English

- Kohli, R., & Pizarro, M. (2016). Fighting to educate our own: Teachers of Color, relational accountability, and the struggle for racial justice. Equity & Excellence in Education, 49(1), 72-84.

- Karabanow, J., Gurman, E., & Naylor, T. (2012). Street youth labor as an expression of survival and self-worth. Critical Social Work, 13(2).

- Noonan, B. M., Gallor, S. M., Hensler-McGinnis, N. F., Fassinger, R. E., Wang, S., & Goodman, J. (2004). Challenge and success: A qualitative study of the career development of highly achieving women with physical and sensory disabilities. Journal of Counseling Psychology, 51(1), 68.

- Ede, L., & Starrin, B. (2014). Unresolved conflicts and shaming processes: Risk factors for long-term sick leave for mental-health reasons. Nordic Journal of Social Research, 5, 39-54.

how you plan to share your research findings

One of the three values indicated in the Belmont report. An obligation to protect people from harm by maximizing benefits and minimizing risks.

A written agreement between parties that want to participate in a collaborative project.

A research journal that helps the researcher to reflect on and consider their thoughts and reactions to the research process and how it may be shaping the study

Context is the circumstances surrounding an artifact, event, or experience.

Rigor is the process through which we demonstrate, to the best of our ability, that our research is empirically sound and reflects a scientific approach to knowledge building.

Content is the substance of the artifact (e.g. the words, picture, scene). It is what can actually be observed.

Graduate research methods in social work Copyright © 2020 by Matthew DeCarlo, Cory Cummings, Kate Agnelli is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License , except where otherwise noted.

How to disseminate your research

Published: 01 January 2019

Version: Version 1.0 - January 2019

This guide is for researchers who are applying for funding or have research in progress. It is designed to help you to plan your dissemination and give your research every chance of being utilised.

What does NIHR mean by dissemination?

Effective dissemination is simply about getting the findings of your research to the people who can make use of them, to maximise the benefit of the research without delay.

Research is of no use unless it gets to the people who need to use it

Professor Chris Whitty, Chief Scientific Adviser for the Department of Health

Principles of good dissemination

Stakeholder engagement: Work out who your primary audience is; engage with them early and keep in touch throughout the project, ideally involving them from the planning of the study to the dissemination of findings. This should create ‘pull’ for your research i.e. a waiting audience for your outputs. You may also have secondary audiences and others who emerge during the study, to consider and engage.

Format: Produce targeted outputs that are in an appropriate format for the user. Consider a range of tailored outputs for decision makers, patients, researchers, clinicians, and the public at national, regional, and/or local levels as appropriate. Use plain English which is accessible to all audiences.

Utilise opportunities: Build partnerships with established networks; use existing conferences and events to exchange knowledge and raise awareness of your work.

Context: Understand the service context of your research, and get influential opinion leaders on board to act as champions.

Timing: Dissemination should not be limited to the end of a study. Consider whether any findings can be shared earlier.

Remember to contact your funding programme for guidance on reporting outputs.

Your dissemination plan: things to consider

What do you want to achieve, for example, raise awareness and understanding, or change practice? How will you know if you are successful and made an impact? Be realistic and pragmatic.

Identify your audience(s) so that you know who you will need to influence to maximise the uptake of your research e.g. commissioners, patients, clinicians and charities. Think who might benefit from using your findings. Understand how and where your audience looks for/receives information. Gain an insight into what motivates your audience and the barriers they may face.

Remember to feed back study findings to participants, such as patients and clinicians; they may also wish to participate in the dissemination of the research and can provide a powerful voice.

When will dissemination activity occur? Identify and plan critical time points, consider external influences, and utilise existing opportunities, such as upcoming conferences. Build momentum throughout the entire project life-cycle; for example, consider timings for sharing findings.

Think about the expertise you have in your team and whether you need additional help with dissemination. Consider whether your dissemination plan would benefit from liaising with others, for example, NIHR Communications team, your institution’s press office, PPI members. What funds will you need to deliver your planned dissemination activity? Include this in your application (or talk to your funding programme).

Partners / Influencers: think about who you will engage with to amplify your message. Involve stakeholders in research planning from an early stage to ensure that the evidence produced is grounded, relevant, accessible and useful.

Messaging: consider the main message of your research findings. How can you frame this so it will resonate with your target audience? Use the right language and focus on the possible impact of your research on their practice or daily life.

Channels: use the most effective ways to communicate your message to your target audience(s) e.g. social media, websites, conferences, traditional media, journals. Identify and connect with influencers in your audience who can champion your findings.

Coverage and frequency: how many people are you trying to reach? How often do you want to communicate with them to achieve the required impact?

Potential risks and sensitivities: be aware of the relevant current cultural and political climate. Consider how your dissemination might be perceived by different groups.

Think about what the risks are to your dissemination plan e.g. intellectual property issues. Contact your funding programme for advice.

More advice on dissemination

We want to ensure that the research we fund has the maximum benefit for patients, the public and the NHS. Generating meaningful research impact requires engaging with the right people from the very beginning of planning your research idea.

More advice from the NIHR on knowledge mobilisation and dissemination.

What you need to know about research dissemination

Last updated

5 March 2024

In this article, we'll tell you what you need to know about research dissemination.

- Understanding research dissemination

Research that never gets shared has limited benefits. Research dissemination involves sharing research findings with the relevant audiences so the research’s impact and utility can reach its full potential.

When done effectively, dissemination gets the research into the hands of those it can most positively impact. This may include:

Politicians

Industry professionals

The general public

What it takes to effectively disseminate research will depend greatly on the audience the research is intended for. When planning for research dissemination, it pays to understand some guiding principles and best practices so the right audience can be targeted in the most effective way.

- Core principles of effective dissemination

Effective dissemination of research findings requires careful planning. Before planning can begin, researchers must think about the core principles of research dissemination and how their research and its goals fit into those constructs.

Research dissemination principles can best be described using the 3 Ps of research dissemination.

The first P is purpose. This pillar of research dissemination is about clarifying the objective. What is the goal of disseminating the information? Is the research meant to:

Persuade policymakers?

Influence public opinion?

Support strategic business decisions?

Contribute to academic discourse?

Knowing the purpose of sharing the information makes it easy to accurately target it and align the language used with the target audience.

The process includes the methods that will be used and the steps taken when it comes time to disseminate the findings. This includes the channels by which the information will be shared, the format it will be shared in, and the timing of the dissemination.

By planning out the process and taking the time to understand the process, researchers will be better prepared and more flexible should changes arise.

The target audience is whom the research is aimed at. Because different audiences require different approaches and language styles, identifying the correct audience is a huge factor in the successful dissemination of findings.

By tailoring the research dissemination to the needs and preferences of a specific audience, researchers increase the chances of the information being received, understood, and used.

- Types of research dissemination

There are many options for researchers to get their findings out to the world. The type of desired dissemination plays a big role in choosing the medium and the tone to take when sharing the information.

Some common types include:

Academic dissemination: Sharing research findings in academic journals, which typically involves a peer-review process.

Policy-oriented dissemination: Creating documents that summarize research findings in a way that's understandable to policymakers.

Public dissemination: Using television and other media outlets to communicate research findings to the public.

Educational dissemination: Developing curricula for education settings that incorporate research findings.

Digital and online dissemination: Using digital platforms to present research findings to a global audience.

Strategic business presentation: Creating a presentation for a business group to use research insights to shape business strategy.

- Major components of information dissemination

While the three Ps provide a convenient overview of what needs to be considered when planning research dissemination, they are not a complete picture.

Here’s a more comprehensive list of what goes into the dissemination of research results:

Audience analysis : Identifying the target audience and researching their needs, preferences, and knowledge level so content can be tailored to them.

Content development: Creating the content in a way that accurately reflects the findings and presents them in a way that is relevant to the target audience.

Channel selection: Choosing the channel or channels through which the research will be disseminated and ensuring they align with the preferences and needs of the target audience.

Timing and scheduling: Evaluating factors such as current events, publication schedules, and project milestones to develop a timeline for the dissemination of the findings.

Resource allocation: With the basics mapped out, financial, human, and technological resources can be set aside for the project to facilitate the dissemination process.

Impact assessment and feedback: During the dissemination, methods should be in place to measure how successful the strategy has been in disseminating the information.

Ethical considerations and compliance: Research findings often include sensitive or confidential information. Any legal and ethical guidelines should be followed.

- Crafting a dissemination blueprint

With the three Ps providing a foundation and the components outlined above giving structure to the dissemination, researchers can then dive deeper into the important steps in crafting an impactful and informative presentation.

Let’s take a look at the core steps.

1. Identify your audience

To identify the right audience for research dissemination, researchers must gather as much detail as possible about the different target audience segments.

By gathering detailed information about the preferences, personalities, and information-consumption habits of the target audience, researchers can craft messages that resonate effectively.

As a simple example, academic findings might be highly detailed for scholarly journals and simplified for the general public. Further refinements can be made based on the cultural, educational, and professional background of the target audience.

2. Create the content

Creating compelling content is at the heart of effective research dissemination. Researchers must distill complex findings into a format that's engaging and easy to understand. In addition to the format of the presentation and the language used, content includes the visual or interactive elements that will make up the supporting materials.

Depending on the target audience, this may include complex technical jargon and charts or a more narrative approach with approachable infographics. For non-specialist audiences, the challenge is to provide the required information in a way that's engaging for the layperson.

3. Take a strategic approach to dissemination

There's no single best solution for all research dissemination needs. What’s more, technology and how target audiences interact with it is constantly changing. Developing a strategic approach to sharing research findings requires exploring the various methods and channels that align with the audience's preferences.

Each channel has a unique reach and impact, and a particular set of best practices to get the most out of it. Researchers looking to have the biggest impact should carefully weigh up the strengths and weaknesses of the channels they've decided upon and craft a strategy that best uses that knowledge.

4. Manage the timeline and resources

Time constraints are an inevitable part of research dissemination. Deadlines for publications can be months apart, conferences may only happen once a year, etc. Any avenue used to disseminate the research must be carefully planned around to avoid missed opportunities.

In addition to properly planning and allocating time, there are other resources to consider. The appropriate number of people must be assigned to work on the project, and they must be given adequate financial and technological resources. To best manage these resources, regular reviews and adjustments should be made.

- Tailoring communication of research findings

We’ve already mentioned the importance of tailoring a message to a specific audience. Here are some examples of how to reach some of the most common target audiences of research dissemination.

Making formal presentations

Content should always be professional, well-structured, and supported by data and visuals when making formal presentations. The depth of information provided should match the expertise of the audience, explaining key findings and implications in a way they'll understand. To be persuasive, a clear narrative and confident delivery are required.

Communication with stakeholders

Stakeholders often don't have the same level of expertise that more direct peers do. The content should strike a balance between providing technical accuracy and being accessible enough for everyone. Time should be taken to understand the interests and concerns of the stakeholders and align the message accordingly.

Engaging with the public

Members of the public will have the lowest level of expertise. Not everyone in the public will have a technical enough background to understand the finer points of your message. Try to minimize confusion by using relatable examples and avoiding any jargon. Visual aids are important, as they can help the audience to better understand a topic.

- 10 commandments for impactful research dissemination

In addition to the details above, there are a few tips that researchers can keep in mind to boost the effectiveness of dissemination:

Master the three Ps to ensure clarity, focus, and coherence in your presentation.

Establish and maintain a public profile for all the researchers involved.

When possible, encourage active participation and feedback from the audience.

Use real-time platforms to enable communication and feedback from viewers.

Leverage open-access platforms to reach as many people as possible.

Make use of visual aids and infographics to share information effectively.

Take into account the cultural diversity of your audience.

Rather than considering only one dissemination medium, consider the best tool for a particular job, given the audience and research to be delivered.

Continually assess and refine your dissemination strategies as you gain more experience.

Doing Research in Counselling and Psychotherapy

Student resources: Disseminating the findings of your research study

It is very important to find appropriate ways to disseminate the findings of your research – projects that sit on office or library shelves and are seldom or never read represent a tragic loss to the profession.

A key dimension of research dissemination is to be actively involved with potential audiences for your work, and help them to understand what it means to them. These dialogues also represent invaluable learning experiences for researchers, in terms of developing new ideas and appreciating the methodological limitations of their work. An inspiring example of how to do this can be found in:

Granek, L., & Nakash, O. (2016). The impact of qualitative research on the “real world”: Knowledge translation as education, policy, clinical training, and clinical practice. Journal of Humanistic Psychology, 56(4), 414–435.

A further key dimension of research dissemination lies in the act of writing. There are a number of challenges associated with writing counselling and psychotherapy research papers, such as the need to adhere to journal formats, and the need (sometimes) to weave personal reflective writing into a predominantly third-person standard academic style. The items in the following sections explore these challenges from a variety of perspectives.

Suggestions for becoming a more effective academic writer

Sources of advice on how to ease the pain of writing:

Gioia, D. (2019). Gioia’s rules of the game. Journal of Management Inquiry, 28(1), 113–115.

Greenhalgh, T. (2019). Twitter women’s tips on academic writing: A female response to Gioia’s rules of the game. Journal of Management Inquiry, 28(4), 484–487.

Roulston, K. (2019). Learning how to write successfully from academic writers. The Qualitative Report, 24(7), 1778–1781.

Writing tips from the student centre, University of Berkeley

The transition from being a therapist to being a researcher

Finlay, L. (2020). How to write a journal article: Top tips for the novice writer. European Journal for Qualitative Research in Psychotherapy, 10, 28–40.

McBeath, A., Bager-Charleson, S., & Abarbanel, A. (2019). Therapists and academic writing: “Once upon a time psychotherapy practitioners and researchers were the same people”. European Journal for Qualitative Research in Psychotherapy, 9, 103–116.

McPherson, A. (2020). Dissertation to published article: A journey from shame to sharing. European Journal for Qualitative Research in Psychotherapy, 10, 41–52.

Journal article style requirements of the American Psychological Association (including a section on writing quantitative papers)

Writing qualitative reports

Jonsen, K., Fendt, J., & Point, S. (2018). Convincing qualitative research: What constitutes persuasive writing? Organizational Research Methods, 21(1), 30–67.

Ponterotto, J.G. & Grieger, I. (2007). Effectively communicating qualitative research. The Counseling Psychologist, 35, 404–430.

Smith, L., Rosenzweig, L. & Schmidt, M. (2010). Best practices in the reporting of participatory action research: Embracing both the forest and the trees. The Counseling Psychologist, 38, 1115–1138.

Staller, K.M. & Krumer-Nevo, M. (2013). Successful qualitative articles: A tentative list of cautionary advice. Qualitative Social Work, 12, 247–253.

Clark, A.M. & Thompson, D.R. (2016). Five tips for writing qualitative research in high-impact journals: Moving from #BMJnoQual. International Journal of Qualitative Methods, 15, 1–3.

Gustafson, D. L., Parsons, J. E., & Gillingham, B. (2019). Writing to transgress: Knowledge production in feminist participatory action research. Forum Qualitative Sozialforschung/Forum: Qualitative Social Research, 20. DOI: 10.17169/fqs-20.2.3164

Caulley, D.N. (2008). Making qualitative reports less boring: The techniques of writing creative nonfiction. Qualitative Inquiry, 14, 424–449.


Chapter 15: Sharing Your Research

15.3 Disseminating Findings

Presenting your work, as discussed in Section “Presenting Your Research”, is one way of disseminating your research findings. In this section, we will focus on disseminating the written results of your research. Dissemination refers to “a planned process that involves consideration of target audiences and the settings in which research findings are to be received and, where appropriate, communicating and interacting with wider policy and…service audiences in ways that will facilitate research uptake in decision-making processes and practice” (Wilson, Petticrew, Calnan, & Nazareth, 2010, p. 93). In other words, dissemination of research findings involves careful planning, consideration of target audiences, and communication with those audiences. Writing up the results of your research and having others take notice of them are two entirely different propositions. In fact, the general rule of thumb is that people will not take notice unless you help and encourage them to do so. To paraphrase the classic line from the film Field of Dreams, just because you build it does not mean they will come.

Disseminating your findings successfully requires determining who your audience is, where they are, and how to reach them. When considering who your audience is, think about who is likely to take interest in your work. Your audience might include those who do not express enthusiastic interest but might nevertheless benefit from an awareness of your research. Your research participants and those who share some characteristics in common with your participants are likely to have some interest in what you’ve discovered in the course of your research. Other scholars who study similar topics are another obvious audience for your work. Perhaps there are policy makers who should take note of your work. Organizations that do work in an area related to the topic of your research are another possibility. Finally, any and all inquisitive and engaged members of the public represent a possible audience for your work.

The location of your audience should be fairly obvious once you have determined who you would like your audience to be. You know where your research participants are because you have studied them. You can find interested scholars on your campus (e.g., perhaps you could offer to present your findings at some campus event), at professional conferences, and via publications such as professional organizations’ newsletters (an often-overlooked source for sharing findings in brief form), and scholarly journals. Policymakers include your state and federal representatives, who, at least in theory, should be available to hear a constituent speak on matters of policy interest. Perhaps you are already aware of organizations that work in an area related to your research topic, but if not, a simple web search should help you identify possible organizational audiences for your work. Disseminating your findings to the public more generally could take any number of forms, including a letter to the editor of the local newspaper, or a blog.

Finally, determining how to reach your audiences will vary according to which audience you wish to reach. Your strategy should be determined by the norms of the audience. For example, scholarly journals provide author submission instructions that clearly define requirements for anyone wishing to disseminate their work via a particular journal. The same is true for newspaper editorials; check your newspaper’s website for details about how to format and submit letters to the editor. If you wish to reach out to your political representatives, a call to their offices or, again, a simple web search should tell you how to do that.

Whether or not you act on all these suggestions is ultimately your decision. But if you have conducted high-quality research, and you have findings that are likely to be of interest to any constituents besides yourself, it is your duty as a scholar and a sociologist to share those findings. Disseminating findings involves the following three steps:

- Determine who your audience is.

- Identify where your audience is.

- Discover how best to reach them.

Research Methods for the Social Sciences: An Introduction Copyright © 2020 by Valerie Sheppard is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License, except where otherwise noted.


9 Dissemination of Qualitative Findings


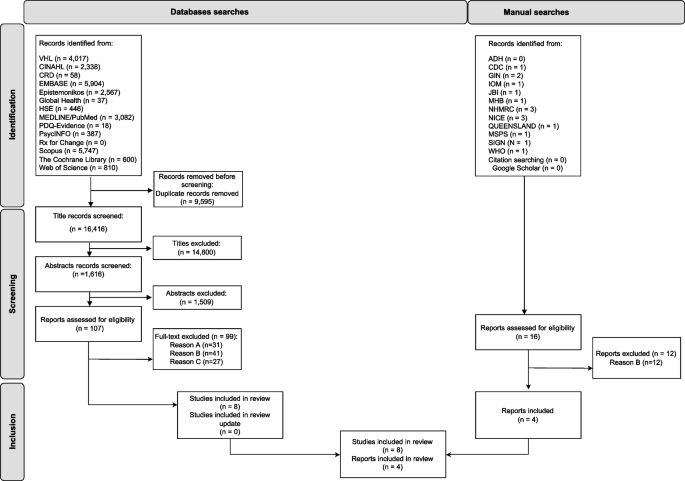

This chapter discusses strategies for disseminating the results of qualitative analysis. It begins with a discussion of the documentation of qualitative methods through the construction of a natural history of the methodology. The chapter then turns to approaches to presenting qualitative data in written reports. These include the narrative style of presenting data, the construction of descriptive tables, and the presentation of data in graphic formats. Next comes a discussion of how qualitative results fit into various modes of data dissemination, such as professional reports, public presentations, and web-based applications. The discussion of data presentation closes by considering writing styles that make qualitative research interesting and memorable to audiences.


Qualitative Research: An Introduction to Methods and Designs by


Dissemination of Findings

Biography and life story research is scientifically and practically useful for dissemination. Scholars employ several formats to write a biography and life story research report, making this research methodology beneficial for multiple types of audiences. These formats include journal articles, books written as biographies for both the general reader and the scholar, and book chapters in theory and methods handbooks. This range allows for the general reader to gain insight into the psychology of lives, and for a professional to gain theoretical knowledge as well as some technical insight into the practice of therapy, healing, and liberation. Disseminated biography and life story findings are also useful as case materials ...


How To Write The Results/Findings Chapter

For qualitative studies (dissertations & theses).

By: Jenna Crossley (PhD). Expert Reviewed By: Dr. Eunice Rautenbach | August 2021

So, you’ve collected and analysed your qualitative data, and it’s time to write up your results chapter. But where do you start? In this post, we’ll guide you through the qualitative results chapter (also called the findings chapter), step by step.

Overview: Qualitative Results Chapter

- What (exactly) the qualitative results chapter is

- What to include in your results chapter