Indian Dermatol Online J, v.12(2); Mar-Apr 2021

Practical Guidelines to Develop and Evaluate a Questionnaire

Kamal Kishore

Department of Biostatistics, Post Graduate Institute of Medical Education and Research (PGIMER), Chandigarh, India

Vidushi Jaswal

1 Department of Psychology, MCM DAV College for Women, Chandigarh, India

Vinay Kulkarni

2 Department of Dermatology, PRAYAS Health Group, Amrita Clinic, Karve Road, Pune, Maharashtra, India

Dipankar De

3 Department of Dermatology, Post Graduate Institute of Medical Education and Research (PGIMER), Chandigarh, India

Life expectancy is gradually increasing owing to continuously improving medical and nonmedical interventions. Longer life is desirable, but it brings issues related to quality of life, disease perception, cognitive health, and mental health. Consequently, building questionnaires and collecting data through them have become an active area of research. However, questionnaire development can be challenging and suboptimal without careful planning and a user-friendly guide to the literature. Given the intricacies of constructing a questionnaire, researchers need to plan, document, and follow systematic steps to build a reliable and valid instrument. Additionally, questionnaire development is technical and jargon-filled, and it is not part of most graduate and postgraduate training. This article therefore attempts to build an understanding of questionnaire fundamentals, their technical challenges, and the sequence of steps needed to build a reliable and valid questionnaire.

Introduction

Questionnaires are increasingly used to understand and measure patients' perception of medical and nonmedical care. With the growing interest in the quality of life associated with chronic diseases, both the use and the variety of questionnaires have surged. Questionnaires are also known as scales or instruments. Their main advantage is that they capture information about unobservable characteristics such as attitudes, beliefs, intentions, or behaviors. Multiple items measuring specific domains of interest are required to obtain this hidden (latent) information from participants. However, each question or item needs to be validated and evaluated both individually and as part of the whole scale.

Item formulation is an integral part of scale construction. The literature describes several approaches for framing items, such as the Thurstone, Rasch, Guttman, and Likert methods. The Thurstone scale is labor intensive and time-consuming, and in practice it performs no better than the Likert scale.[ 1 ] The Guttman method measures cumulative attributes of respondents with a group of items ordered from the “easiest” to the “most difficult.” For example, for a given stem, a participant may have to choose from the options (a) stand, (b) walk, (c) jog, and (d) run. The method requires a strict ordering of items. The Rasch method adds a stochastic component to the Guttman method and laid the foundation for item response theory, a modern and powerful technique for scale construction. Each approach has its own advantages and disadvantages. However, Likert scales, which are based on classical test theory, are well established and preferred by researchers to capture intrinsic characteristics. Therefore, this article discusses only the psychometric properties required to build a Likert scale.

A hallmark of scientific research is that it meets rigorous scientific standards. A questionnaire evaluates characteristics whose values can change substantially with time, place, and person. Error variance, along with systematic variation, plays a significant part in ascertaining unobservable characteristics. Therefore, instruments that measure human traits must be evaluated rigorously. In the context of questionnaire development and validation, such evaluations are known as psychometric evaluations. Scientific standards are available for selecting items, subscales, and entire scales, and researchers can broadly divide these criteria into reliability and validity.

Despite their increasing use, scales are often grossly misunderstood by academicians. A further complication is that many authors in the past did not adhere to rigorous standards, and questionnaire-based research was consequently criticized as a soft science.[ 2 ] Scale construction is also not part of most graduate and postgraduate training. Given this background, the primary objective of this article is to sensitize researchers to the intricacies and importance of each step of scale construction. A further aim is to make researchers aware of, and motivate them to use, multiple metrics to assess psychometric properties. Table 1 provides a glossary of essential terms used in the context of questionnaires.

Table 1: Glossary of important terms used in the context of psychometric scales

| Term | Definition |
|---|---|
| Psychometrics | The science of quantitatively assessing abilities that are not directly observable, e.g., confidence, intelligence |
| Reliability | The degree to which an instrument measures consistently, e.g., does a weighing machine give similar results under consistent conditions? |
| Validity | The ability of an instrument to correctly represent the intended measure, e.g., does a weighing machine give accurate results? |
| Likert scale | A psychometric scale consisting of multiple items selected through a systematic evaluation of reliability and validity, e.g., a quality-of-life score |
| Likert item | A statement with a fixed set of response choices for expressing the level of agreement or disagreement |
| Latent variable | A concept or underlying construct that cannot be measured directly; also known as an unobserved variable, e.g., health and socioeconomic status |
| Manifest variable | A variable that can be measured directly; also known as an observed variable, e.g., blood pressure and income |
| Double-barreled item | A question that addresses two or more separate issues but allows only one answer, e.g., do you like the house and the locality? |
| Negative item | An item worded in the opposite direction from most of the items on a scale |
| Factor loading | The correlation coefficient between an observed variable and a factor. It quantifies the strength of the relationship between a latent variable (factor) and the manifest variables and is key to understanding the relative importance of items in the final questionnaire; an item with a high factor loading is more important than others |
| Cross-loading | An observed variable loading above a threshold value on two or more factors, e.g., education level with loadings >0.35 on both the teaching and research domains. Items with cross-loadings are candidates for deletion from a questionnaire |
| Reverse scoring | Reversing the scores of negatively worded items so that all items point in the same direction and their positive and negative loadings on a factor do not cancel out, e.g., recoding the maximum rating (strongly agree = 5) as the minimum (strongly agree = 1) or vice versa |
| Floor and ceiling effects | The inability of a scale to discriminate between participants because a high proportion of them obtain the worst/minimum or best/maximum score, e.g., more than 80% of responses to a five-option Likert item fall on a single option. Such an item discriminates poorly between participants and is a candidate for deletion |
| Eigenvalue | An indicator of the amount of variance explained by a factor. The factor with the highest eigenvalue explains the most variance and is, in practice, the most important factor. The eigenvalue is obtained as the column sum of squared factor loadings |
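Two of the glossary entries are operational enough to compute directly. The following is a minimal NumPy sketch, assuming items coded 1 to 5 and an unrotated loading matrix with items in rows and factors in columns; the function names and the loading values are hypothetical, chosen only for illustration. Strictly, the column sum of squared loadings equals the eigenvalue only for unrotated loadings; after rotation the same quantity is usually reported as the "sum of squared loadings."

```python
import numpy as np

def reverse_score(scores, min_code=1, max_code=5):
    """Reverse-code a Likert item: with codes 1-5, 5 becomes 1, 4 becomes 2, and so on."""
    return (min_code + max_code) - np.asarray(scores)

def sum_squared_loadings(loadings):
    """Column sum of squared loadings, one value per factor."""
    loadings = np.asarray(loadings, dtype=float)
    return (loadings ** 2).sum(axis=0)

# Hypothetical unrotated loadings: 4 items (rows) on 2 factors (columns)
loadings = np.array([
    [0.80, 0.10],
    [0.75, 0.05],
    [0.20, 0.70],
    [0.10, 0.65],
])

print(reverse_score([1, 2, 3, 4, 5]))   # [5 4 3 2 1]
print(sum_squared_loadings(loadings))
```

Here the first factor's value is 0.64 + 0.5625 + 0.04 + 0.01 = 1.2525 and the second's is 0.925, so the first factor explains more of the items' variance.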

The process of building a questionnaire starts with item generation, continues with questionnaire development, and concludes with rigorous scientific evaluation. Figure 1 summarizes the systematic steps and the tasks at each stage of building a good questionnaire. Some essential requirements are not directly part of scale development and evaluation, yet they improve the utility of the instrument; these indirect but necessary conditions are documented and discussed under a miscellaneous category. We therefore discuss the questionnaire development process under three broad domains: questionnaire development, questionnaire evaluation, and miscellaneous properties.

Figure 1: Flowchart demonstrating the various steps involved in the development of a questionnaire

Questionnaire Development

Developing the list of items is an essential and mandatory prerequisite for a good questionnaire. At this stage, the researcher decides whether to frame items in formats such as Guttman, Rasch, or Likert.[ 2 ] The researcher also carefully identifies appropriate members of the expert panel for assessing face and content validity. Broadly, scale development involves six steps.

Selecting appropriate questions (items) to capture the latent trait is crucial. An exhaustive list of items is the primary requisite and lays the foundation of a good questionnaire. Building it requires considerable work: literature searches, qualitative studies, discussions with colleagues, other experts, and general and targeted respondents, and a review of other questionnaires in and around the area of interest. General and targeted participants can also advise on items, wording, and the flow of the questionnaire, as they will be the potential respondents.

The pool of questions needs to be arranged and reworded to eliminate ambiguity, technical jargon, and loaded wording. Double-barreled, long, and negatively worded questions should be avoided. Arrange all items systematically to form a preliminary draft of the questionnaire. After generating the initial draft, review the instrument for the flow of items, face validity, and content validity before sending it to experts. The researcher needs to assess whether the items in the scale are comprehensive (content validity) and appear to measure what they are supposed to measure (face validity). For example, is a scale intended to measure stress actually measuring stress, or is it measuring depression instead? There is no uniformity in the selection of an expert panel; however, there is general agreement on using a group of 5–15 experts.[ 3 ] These experts ascertain the face and content validity of the questionnaire, which are subjective and objective measures of validity, respectively.

It is advisable to prepare an appealing, jargon-free, and nontechnical cover letter explaining the purpose of the instrument and describing it. It is also better to include the reason(s) for selecting each expert, the scoring format, and explanations of the response categories of the scale. It is advantageous to contact experts by telephone, face to face, or electronically to request their participation before mailing the questionnaire, and to explain at the outset that the process unfolds over several phases. The time allowed to respond can vary from hours to weeks; at least 7 days is recommended. A nonresponse should be followed up with a reminder email or call. This stage usually takes two to three rounds, so it is essential to engage with experts regularly; otherwise there is a risk of nonresponse. Table 2 gives general advice for preparing a cover letter, which researchers can adapt to their own studies. Readers can consult Rubio and coauthors for more details on drafting a cover letter.[ 4 ]

Table 2: General overview and instructions for rating, to be included in the cover letter accompanying the questionnaire

| Content | Explanation |
|---|---|
| Construct | Definition of the characteristics being measured |
| Purpose | To evaluate content and face validity |
| How | Please rate each item for its representativeness and clarity on a scale from 1 to 4. Evaluate the comprehensiveness of the entire instrument in measuring the domain. Please add, delete, or modify any item as per your understanding |

| | Importance (CVR) | Representativeness (CVI) | Clarity (CVI) |
|---|---|---|---|
| Scoring | 0 = Not necessary; 1 = Useful; 2 = Essential | 1 = Not representative; 2 = Needs major revisions to be representative; 3 = Needs minor revisions to be representative; 4 = Representative | 1 = Not clear; 2 = Needs major revisions to be clear; 3 = Needs minor revisions to be clear; 4 = Clear |
| Formula | $\mathrm{CVR} = \dfrac{n_e - N/2}{N/2}$ | $\mathrm{CVI}_R = n_R/N$ | $\mathrm{CVI}_C = n_C/N$ |
| Where | $n_e$ = number of experts rating the item as essential; $N$ = total number of experts | $\mathrm{CVI}_R$ = CVI for representativeness; $n_R$ = number of experts rating the item as representative (3 or 4); $N$ = total number of experts | $\mathrm{CVI}_C$ = CVI for clarity; $n_C$ = number of experts rating the item as clear (3 or 4); $N$ = total number of experts |

The responses from each round help in rewording, rephrasing, and reordering the items in the scale, and a few questions may need to be deleted over the rounds. Therefore, it is better to evaluate the content validity ratio (CVR), content validity index (CVI), and interrater agreement before deleting any question from the instrument. Readers can consult the formulae in Table 2 for calculating CVR and CVI. CVR is calculated for each item, and CVI can be computed for each item as well as summarized for the overall scale. Researchers need to consult the Lawshe table to determine the cutoff value for CVR, as it depends on the number of experts in the panel.[ 5 ] A CVI >0.80 is recommended. Researchers interested in more detail on CVR and CVI can read the excellent articles by Zamanzadeh et al. and Rubio et al.[ 4 , 6 ] It is crucial to compute CVR, CVI, and kappa agreement for each item from the experts' ratings of importance, representativeness, and clarity. CVR and CVI do not account for chance agreement, whereas interrater agreement (IRA) measures do; it is therefore better to report CVR, CVI, and IRA together.
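The per-item arithmetic can be sketched in a few lines of Python. The sketch below implements the Table 2 formulas, CVR = (n_e − N/2)/(N/2) and I-CVI = n/N, for a hypothetical panel of eight experts. For the chance-adjusted agreement it assumes the modified kappa described by Polit and colleagues, k* = (I-CVI − P_c)/(1 − P_c) with P_c = C(N, A)·0.5^N, where A is the number of experts agreeing; this is one common option, not necessarily the specific kappa intended by the text. All function names and counts are hypothetical.

```python
from math import comb

def cvr(n_essential, n_experts):
    """Lawshe's content validity ratio, (n_e - N/2) / (N/2), ranging from -1 to 1."""
    half = n_experts / 2
    return (n_essential - half) / half

def item_cvi(n_relevant, n_experts):
    """Item-level CVI: proportion of experts rating the item 3 or 4."""
    return n_relevant / n_experts

def modified_kappa(n_relevant, n_experts):
    """I-CVI adjusted for chance agreement, assuming each rating is a fair coin flip."""
    p_chance = comb(n_experts, n_relevant) * 0.5 ** n_experts
    return (item_cvi(n_relevant, n_experts) - p_chance) / (1 - p_chance)

# Hypothetical panel of 8 experts: (n rating "essential", n rating 3 or 4)
ratings = {"Q1": (7, 8), "Q2": (5, 6), "Q3": (3, 4)}
for item, (n_e, n_r) in ratings.items():
    print(item,
          f"CVR={cvr(n_e, 8):.2f}",
          f"I-CVI={item_cvi(n_r, 8):.2f}",
          f"kappa={modified_kappa(n_r, 8):.2f}")
```

With these counts, Q1 gives CVR 0.75 and I-CVI 1.00, whereas Q2 (I-CVI 0.75) and Q3 (I-CVI 0.50) fall below the recommended 0.80. Each CVR would still need to be compared with the Lawshe critical value for a panel of eight.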

Researchers need to address subtle issues before administering the questionnaire to respondents for pilot testing. The introduction and format of the scale play a crucial role in reducing doubts and maximizing responses. The front page of the questionnaire should give an overview of the research without using technical words. It should also include the roles and responsibilities of participants, the contact details of the researchers, the relevant research ethics (such as voluntary participation, confidentiality and withdrawal, and risks and benefits), and informed consent for participation in the study. It is also better to print the anchors (levels of the Likert item) at the top, the bottom, or both on each page for ease of response. Readers can refer to Table 3 for details.

Table 3: A random set of questions with anchors in the top and bottom rows

| Items | Strongly disagree (SD) | Disagree (D) | Neutral (N) | Agree (A) | Strongly agree (SA) |
|---|---|---|---|---|---|
| Duration of disease (since onset) | SD | D | N | A | SA |
| Number of relapse(s) of the disease | SD | D | N | A | SA |
| Duration of oral erosions (present episode) | SD | D | N | A | SA |
| Number of relapse(s) of oral lesions | SD | D | N | A | SA |
| Persistence of oral lesions after subsidence of cutaneous lesions | SD | D | N | A | SA |
| Change in size of existing lesion in last 1 week | SD | D | N | A | SA |
| Development of new lesions in last 1 week | SD | D | N | A | SA |
| Difficulty in eating normal food | SD | D | N | A | SA |
| Difficulty in eating food according to their consistency | SD | D | N | A | SA |
| Inability to eat spicy food | SD | D | N | A | SA |
| Inability to drink fruit juices | SD | D | N | A | SA |
| Excessive salivation/drooling | SD | D | N | A | SA |
| Difficulty in speaking | SD | D | N | A | SA |
| Difficulty in brushing teeth | SD | D | N | A | SA |
| Difficulty in swallowing | SD | D | N | A | SA |
| Restricted mouth opening | SD | D | N | A | SA |
| | Strongly disagree | Disagree | Neutral | Agree | Strongly agree |

Pilot testing the instrument in the target population is an essential requirement before testing it on a large sample. It helps eliminate or revise poorly worded items. At this stage, floor and ceiling effects can be used to eliminate poorly discriminating items. In addition, random interviews with 5–10 participants can help resolve problems such as difficulty, relevance, confusion, and the order of questions before the questionnaire is tested on the study population. The general recommendation is to recruit 30–100 participants for pilot testing.[ 4 ] Inter-question (item) correlation (IQC) and Cronbach's α can be assessed at this stage. Values below 0.3 for IQC and below 0.7 for reliability are suspicious, and the corresponding items are candidates for elimination from the questionnaire. Cronbach's α, a measure of internal consistency, together with the IQC, informs the researcher at this initial stage about how well the items measure the latent attribute. This process is important for refining and finalizing the questionnaire before it is administered to study participants.
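The two item screens mentioned for the pilot stage can be sketched with pandas. This is an illustration under stated assumptions, not a prescribed procedure: responses are coded 1 to 5, an item is flagged when a single option attracts more than 80% of responses (following the floor and ceiling definition in Table 1), and the IQC of an item is taken as its average Pearson correlation with every other item. The helper names and the pilot data are hypothetical.

```python
import numpy as np
import pandas as pd

def floor_ceiling(df, min_code=1, max_code=5, threshold=0.80):
    """Share of answers at the lowest code, the highest code, and the most common code."""
    report = pd.DataFrame({
        "floor": (df == min_code).mean(),
        "ceiling": (df == max_code).mean(),
        "modal_share": df.apply(lambda col: col.value_counts(normalize=True).max()),
    })
    # Flag items where a single option attracts more than `threshold` of responses
    report["flag"] = report["modal_share"] > threshold
    return report

def mean_interitem_r(df):
    """Average Pearson correlation of each item with every other item."""
    r = df.corr().to_numpy(copy=True)
    np.fill_diagonal(r, np.nan)  # ignore each item's correlation with itself
    return pd.Series(np.nanmean(r, axis=1), index=df.columns)

# Hypothetical pilot responses (10 participants, codes 1-5)
pilot = pd.DataFrame({
    "Q1": [1, 2, 2, 3, 3, 4, 4, 5, 5, 5],
    "Q2": [1, 1, 2, 3, 3, 4, 4, 4, 5, 5],
    "Q3": [2, 2, 3, 3, 3, 4, 5, 4, 5, 5],
    "Q4": [5, 5, 5, 5, 5, 5, 5, 5, 5, 4],
})
print(floor_ceiling(pilot))
print(mean_interitem_r(pilot) < 0.3)  # True marks a suspicious item
```

In this made-up example, Q4 fails both screens: 90% of its responses sit at the ceiling, and its average correlation with the other items is negative.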

Questionnaire Evaluation

Up to this stage, the preliminary items and questionnaire have addressed issues of reliability, validity, and overall appeal in the target population. However, researchers need to rigorously evaluate the psychometric properties of the preliminary instrument before finally adopting it. The first step is to calculate an appropriate sample size for administering the preliminary questionnaire in the target group. Unlike the previous stage, these measures need not be evaluated in a sequential order; nevertheless, they are critical for evaluating the reliability and validity of the questionnaire.

Correct data entry is the first requirement for evaluating a manually administered questionnaire. The data should be entered into an appropriate spreadsheet and then cleaned of cosmetic and logical errors. Finally, prepare a master sheet for analysis and a data dictionary for reference to the coding. Readers interested in more detail can read the “Biostatistics Series.”[ 7 , 8 ] Data entry for a questionnaire is like that for other cross-sectional study designs: rows represent participants and columns represent variables. It is better to label the items by their item numbers, for two reasons. First, finding suitable variable names for many questions is tedious and time-consuming. Second, item numbers help quickly identify the contributing and non-contributing items of the scale during the assessment of psychometric properties. Readers can see Table 4 for more detail.

Table 4: A sample data entry format

(a) Illustration of master sheet

| Participant | Age | Religion | Family | Height | Weight | Q1 | Q2 | Q3 |
|---|---|---|---|---|---|---|---|---|
| 1 | 25 | 1 | 1 | 185.0 | 85.0 | 1 | 5 | 2 |
| 2 | 26 | 3 | 1 | 155.0 | 63.0 | 2 | 5 | 1 |
| 3 | 22 | 2 | 2 | 155.0 | 57.0 | 4 | 2 | 1 |
| 4 | 35 | 2 | 1 | 158.5 | 67.5 | 3 | 2 | 2 |
| 5 | 49 | 1 | 2 | 175.0 | 64.0 | 2 | 4 | 3 |
| 6 | 40 | 4 | 1 | 159.0 | 78.0 | 2 | 4 | 3 |

Qi → ith question in the questionnaire, where i = 1, 2, 3, …

(b) Illustration of data dictionary

| Variable | Description | Coding | Type |
|---|---|---|---|
| Participant | A random serial number assigned to the participant | None | String |
| Age | Age in years | None (30-70 years) | Interval |
| Religion | Religion of the participant | 1=Hindu, 2=Sikh, 3=Muslim, 4=Others | Nominal |
| Qi | Level of agreement with the question | 1=Strongly disagree, 2=Disagree, 3=Neutral, 4=Agree, 5=Strongly agree | Ordinal |
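Checking the master sheet against the data dictionary is one way to catch logical errors during cleaning. The pandas sketch below copies a subset of the Table 4 columns (Height, Weight, and Family are omitted) and encodes the dictionary's coding rules as hypothetical validation functions; `find_invalid` is an illustrative helper, not a standard routine.

```python
import pandas as pd

# Subset of the master sheet in Table 4 (items stored by number, not by name)
master = pd.DataFrame({
    "Participant": [1, 2, 3, 4, 5, 6],
    "Age": [25, 26, 22, 35, 49, 40],
    "Religion": [1, 3, 2, 2, 1, 4],
    "Q1": [1, 2, 4, 3, 2, 2],
    "Q2": [5, 5, 2, 2, 4, 4],
    "Q3": [2, 1, 1, 2, 3, 3],
})

# Allowed values per variable, following the data dictionary in Table 4
rules = {
    "Age": lambda s: s.between(30, 70),
    "Religion": lambda s: s.isin([1, 2, 3, 4]),
    **{f"Q{i}": (lambda s: s.isin([1, 2, 3, 4, 5])) for i in range(1, 4)},
}

def find_invalid(df, rules):
    """Return (participant, variable, value) for every entry that breaks a rule."""
    problems = []
    for var, is_valid in rules.items():
        bad = df.loc[~is_valid(df[var]), ["Participant", var]]
        for pid, value in bad.itertuples(index=False):
            problems.append((pid, var, value))
    return problems

print(find_invalid(master, rules))
```

Applied to the illustrative rows above, the age rule flags participants 1 to 3, whose ages lie below the 30 to 70 year range stated in the dictionary. This is exactly the kind of inconsistency the cleaning step is meant to surface before analysis.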

Descriptive statistics

Spreadsheets are easy and flexible for routine data entry and cleaning, but they lack features for advanced statistical analysis. The master sheet therefore needs to be exported to appropriate statistical software. Descriptive analysis is the usual first step and helps in understanding the fundamental characteristics of the data. Report appropriate descriptive measures: the mean and standard deviation for symmetric continuous data, and the median and interquartile/interdecile range for asymmetric continuous data.[ 9 ] Use exploratory tabular and graphical displays to inspect the distribution of the items in the questionnaire; a stacked bar chart is a handy tool for this purpose. Linearity and the absence of extreme multicollinearity should also be ascertained at this stage, and any IQC >0.7 warrants further inspection for deletion or modification. Help from a good biostatistician is of great assistance in data analysis and reporting.
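A minimal pandas sketch of this descriptive step, assuming hypothetical responses from eight participants: it reports the mean (SD) and median (IQR) of each item, lists item pairs whose absolute correlation exceeds 0.7 as candidates for inspection, and builds the response-count table that a stacked bar chart would display. The helper names are illustrative.

```python
import pandas as pd

def describe_items(df):
    """Mean (SD) and median (IQR) of each item; choose per item based on its symmetry."""
    q1, q3 = df.quantile(0.25), df.quantile(0.75)
    return pd.DataFrame({
        "mean": df.mean(), "sd": df.std(),
        "median": df.median(), "iqr": q3 - q1,
    })

def high_correlation_pairs(df, cutoff=0.7):
    """Item pairs whose absolute correlation exceeds the cutoff (possible redundancy)."""
    r = df.corr()
    cols = list(r.columns)
    return [(cols[i], cols[j], round(float(r.iloc[i, j]), 2))
            for i in range(len(cols))
            for j in range(i + 1, len(cols))
            if abs(r.iloc[i, j]) > cutoff]

# Hypothetical responses of 8 participants (codes 1-5)
items = pd.DataFrame({
    "Q1": [1, 2, 3, 4, 5, 2, 3, 4],
    "Q2": [1, 2, 3, 4, 5, 2, 3, 5],
    "Q3": [3, 1, 4, 2, 3, 5, 2, 3],
})
print(describe_items(items).round(2))
print(high_correlation_pairs(items))  # [('Q1', 'Q2', 0.97)]

# Counts of each response option per item: the data behind a stacked bar chart
print(items.apply(lambda col: col.value_counts()).fillna(0).astype(int))
```

Q1 and Q2 are nearly duplicates here, so the pair would be inspected for deletion or modification. The transposed count table can be passed to pandas' `DataFrame.plot.bar(stacked=True)` if Matplotlib is installed.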

Missing data analysis

Missing data are the rule, not the exception, and most researchers encounter missing values in their data. There are usually three approaches to analyzing incomplete data. The first is to “take all,” that is, to use all available data for analysis. In the second, the analyst removes participants, variables, or both with gross missingness from the analysis. The third consists of estimating the percentage and type of missingness; the typically recommended threshold for missingness is 5%.[ 10 ] There are broadly three types of missingness: missing completely at random, missing at random, and not missing at random. After identifying the missingness mechanism, impute the data with single or multiple imputation approaches. Readers can refer to an excellent article by Graham for more details about missing data.[ 11 ]
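Estimating the percentage of missingness per item, and contrasting the first two approaches, takes only a few lines of pandas. The sketch assumes the 5% threshold mentioned above and uses hypothetical data. Formal tests of the mechanism (such as Little's MCAR test) and imputation need dedicated tools and are not shown.

```python
import numpy as np
import pandas as pd

def missingness_report(df, threshold=0.05):
    """Percentage of missing responses per item, flagged when above the threshold."""
    share = df.isna().mean()
    return pd.DataFrame({
        "pct_missing": (share * 100).round(1),
        "above_threshold": share > threshold,
    })

# Hypothetical responses with one skipped answer
responses = pd.DataFrame({
    "Q1": [1, 2, np.nan, 4, 5, 3, 2, 4, 5, 1],
    "Q2": [2, 2, 3, 4, 5, 3, 2, 4, 5, 1],
})
report = missingness_report(responses)
print(report)

complete_cases = responses.dropna()  # deletion: drop participants with any missing item
pairwise_r = responses.corr()        # "take all": pandas uses pairwise-complete observations
```

Q1 has 10% missingness and exceeds the threshold, so its missingness mechanism would need to be examined before deciding between deletion and imputation.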

Sample size

An optimal sample size is a vital requisite for building a good questionnaire, and the literature offers many guidelines for recruiting one. These approaches fall broadly into three domains: subject to variables ratio (SVR), minimum sample size, and factor loadings (FL). Because factor analysis (FA) is a crucial component of questionnaire design, recent recommendations are to use FLs to determine sample size. Readers can consult Table 5 for sample size recommendations under each domain, and Beavers and colleagues for more detail.[ 12 ] Because an adequate sample is one that yields stable factors, the sample size is validated by analyzing the questionnaire data after collection. The Kaiser–Meyer–Olkin (KMO) criterion for testing sampling adequacy is available in most statistical software packages; a higher KMO value indicates a sufficient sample for a stable factor solution.
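Statistical packages compute KMO for you, but the overall statistic is simple enough to sketch with NumPy. It compares squared correlations with squared partial correlations (obtained from the inverse of the correlation matrix) over all item pairs, so it approaches 1 when items share common factors. The simulated one-factor data and the `kmo` helper are hypothetical. On Kaiser's commonly quoted scale, values below 0.5 are regarded as unacceptable.

```python
import numpy as np

def kmo(data):
    """Overall Kaiser-Meyer-Olkin measure of sampling adequacy (0 to 1)."""
    r = np.corrcoef(data, rowvar=False)
    inv = np.linalg.inv(r)
    # Partial correlation of each pair of items, controlling for all other items
    scale = np.sqrt(np.outer(np.diag(inv), np.diag(inv)))
    partial = -inv / scale
    off_diag = ~np.eye(r.shape[0], dtype=bool)
    r2 = np.sum(r[off_diag] ** 2)
    p2 = np.sum(partial[off_diag] ** 2)
    return r2 / (r2 + p2)

# Hypothetical data: 300 respondents, 6 items driven by one common factor
rng = np.random.default_rng(0)
factor = rng.normal(size=(300, 1))
items = 0.7 * factor + np.sqrt(1 - 0.7 ** 2) * rng.normal(size=(300, 6))
noise = rng.normal(size=(300, 6))  # unrelated items, for comparison

print(round(kmo(items), 2), round(kmo(noise), 2))
```

The correlated items should give a KMO near 0.9, whereas the unrelated items hover around 0.5.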

Table 5: Sample size recommendations in the literature

| Subject to variables ratio | Minimum sample size | Factor loading |
|---|---|---|
| Minimum 100 participants + SVR ≥5 | At least 300 participants | At least 4 items with FL >0.60 (minimum 100 participants) |
| 51 participants + number of variables | At least 200 participants | At least 10 items with FL >0.40 (minimum 150 participants) |
| At least SVR >5 | At least 150-300 participants | Items with 0.30 ≤ FL ≤ 0.40 (minimum 300 participants) |

SVR→Subject to variable ratio, FL→Factor loading
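The subject-to-variables rules in Table 5 reduce to simple arithmetic on the number of items. The Python sketch below assumes that "minimum 100 participants + SVR ≥5" means both conditions must hold and that "SVR >5" is strict. The helper name and the 30-item draft are hypothetical.

```python
def svr_minimums(n_items):
    """Minimum sample sizes implied by the subject-to-variables rules in Table 5."""
    return {
        "at least 100 and SVR >= 5": max(100, 5 * n_items),
        "51 + number of variables": 51 + n_items,
        "SVR > 5": 5 * n_items + 1,  # strictly more than 5 subjects per item
    }

# Hypothetical 30-item draft questionnaire
minimums = svr_minimums(30)
print(minimums)
print("Most conservative:", max(minimums.values()))
```

For 30 items, the rules give 150, 81, and 151 participants. The absolute minimums in the middle column and the KMO check after data collection still apply.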

Correlation measures

The strength of the relationships between items is an essential requisite for a stable factor solution, so the correlation matrix is calculated and inspected for this purpose. Recommendations for the minimum correlation coefficient vary; however, a value greater than 0.3 is considered essential.[ 13 ] Lower correlations fail to form stable factors because the items share too little common variance. The determinant of the correlation matrix and Bartlett's test of sphericity can be used to ascertain whether the data are factorable. The determinant is a single value ranging from zero to one; a nonzero determinant indicates that the matrix is not singular and that factors can be extracted. However, the determinant is small in most studies and not easy to interpret. Therefore, Bartlett's test of sphericity is routinely used to test whether the correlation matrix differs significantly from an identity matrix, that is, whether the items are sufficiently intercorrelated to be factored.
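Both checks follow from the correlation matrix R. A minimal sketch, assuming NumPy and SciPy and hypothetical simulated data, uses the standard Bartlett statistic χ² = −[(n − 1) − (2p + 5)/6]·ln|R| with p(p − 1)/2 degrees of freedom, where n is the number of respondents and p the number of items. A small p-value indicates that R is unlikely to be an identity matrix.

```python
import numpy as np
from scipy.stats import chi2

def bartlett_sphericity(data):
    """Determinant of R, Bartlett's chi-square, and p-value (H0: R is an identity matrix)."""
    n, p = data.shape
    r = np.corrcoef(data, rowvar=False)
    det = np.linalg.det(r)
    statistic = -(n - 1 - (2 * p + 5) / 6) * np.log(det)
    dof = p * (p - 1) / 2
    return det, statistic, chi2.sf(statistic, dof)

# Hypothetical data: 200 respondents, 5 items sharing one common factor
rng = np.random.default_rng(42)
factor = rng.normal(size=(200, 1))
items = 0.6 * factor + 0.8 * rng.normal(size=(200, 5))

det, stat, p_value = bartlett_sphericity(items)
print(f"determinant={det:.3f}, chi2={stat:.1f}, p={p_value:.2g}")
```

The determinant lies between 0 and 1, and the test strongly rejects sphericity because the items were generated to correlate.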

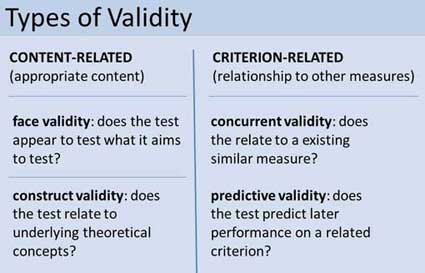

Physical quantities such as height and weight are observable and measurable with instruments, yet many such tools need regular calibration to remain precise and accurate. In questionnaire development, the equivalent standardization is provided by reliability and validity. Validity is the property indicating that an instrument measures what it is supposed to measure. Validation is a continuous process that begins with identifying the domains and continues through generalization. Various measures establish the validity of an instrument, and readers can consult Table 6 for the different types of validity and their metrics.

Table 6: Scientific standards to evaluate and report for constructing a good scale

| Psychometric property | Component | Definition | Indices |
|---|---|---|---|
| Validity | Content validity | The items address all relevant aspects of the construct | Content validity ratio; content validity indices; interrater agreement |
| | Face validity | The test appears to measure what it is intended to measure | Expert opinion (qualitative) |
| | Construct validity | Strong correlation with measures of the same construct and weak correlation with measures of different constructs | Exploratory factor analysis; correlation coefficient |
| | Criterion validity | The correlation between a predictor measure (e.g., teamwork) and a criterion measure (e.g., actual performance in a team) | Correlation coefficient |
| | Convergent validity | The correlation between a scale and conceptually similar scales or subscales | Correlation coefficient; multitrait-multimethod matrix |
| Reliability | Internal consistency | The cohesiveness of items in consistently measuring the same variable | Coefficient α; coefficient β; coefficient Ω |
| | Test-retest | Consistency of scores for stable characteristics measured at different times | Correlation coefficient; intraclass correlation coefficient |
| | Alternate forms | Consistency of scores in the same sample across similar tests | Correlation coefficient |
| Descriptive analysis | Tabular display | Display of essential data characteristics in rows and columns | Mean (SD); median (IQR) |
| | Graphical display | Visual display of large data to exhibit trends, patterns, and relationships | Box plot; bar graph |
| Missing mechanism | MCAR | Missingness is independent of both observed and unobserved data | Little’s MCAR test |
| | MAR | Missingness is related to observed but not unobserved data | Listing and Schlittgen (LS) test |
| | NMAR | Missingness is related to unobserved data | No standard test (based on assumptions) |
| Factorability | Sample size | Minimum number of participants required to measure study outcomes | KMO criterion |
| | Correlation matrix | A matrix displaying the intercorrelations among the variables | Determinant |
| | Sphericity | Whether the correlation matrix differs from an identity matrix, i.e., whether the items are intercorrelated | Bartlett’s test |

MCAR: Missing completely at random; MAR: Missing at random; NMAR: Not missing at random; KMO: Kaiser-Meyer-Olkin; SD: Standard deviation; IQR: Interquartile range

Exploratory FA

FA assumes that there are underlying constructs (factors) that cannot be measured directly. The investigator therefore collects an exhaustive list of observed variables or responses representing these constructs. Researchers expect the variables or questions in the questionnaire to correlate among themselves and to load on a small number of corresponding factors. FA can be broadly divided into exploratory factor analysis (EFA) and confirmatory factor analysis. EFA is applied to the master sheet after assessing the descriptive statistics (tabular and graphical displays), missingness mechanism, sample size adequacy, IQC, and Bartlett's test in step 7 [ Figure 1 ]. EFA is used at the initial stages of questionnaire construction to extract factors, and identifying an adequate number of factors is especially important for building a sound scale. The factors represent latent variables that explain variance in the observed data; the first and last factors explain the maximum and minimum variance, respectively. There are multiple factor selection criteria, each with its own advantages and disadvantages, so it is better to use more than one approach for retaining factors during the initial extraction phase. Readers can consult Sindhuja et al. for a practical application of multiple factor selection criteria.[ 14 ]

Kaiser's criterion

Kaiser's criterion is one of the most popular factor retention criteria. It is based on the variance explained by each factor, expressed as its eigenvalue: a factor with an eigenvalue greater than one is a candidate for retention.[ 15 ] An eigenvalue greater than one means that the factor explains more variance than a single standardized observed variable. However, there is a dearth of scientifically rigorous studies justifying this cutoff, and many authors have highlighted that the Kaiser criterion can both over-extract and under-extract factors.[ 16 , 17 ] Therefore, investigators need to calculate and consider other measures for extracting factors.
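Kaiser's rule amounts to counting the eigenvalues of the item correlation matrix that exceed one. The NumPy sketch below applies it to hypothetical simulated data in which items 1 to 3 load on one factor and items 4 to 6 on another; `kaiser_criterion` is an illustrative helper name.

```python
import numpy as np

def kaiser_criterion(data):
    """Eigenvalues of the correlation matrix (largest first) and how many exceed 1."""
    r = np.corrcoef(data, rowvar=False)
    eigenvalues = np.linalg.eigvalsh(r)[::-1]  # eigvalsh returns ascending order
    return eigenvalues, int(np.sum(eigenvalues > 1))

# Hypothetical data: 500 respondents; items 1-3 load on factor A, items 4-6 on factor B
rng = np.random.default_rng(1)
factors = rng.normal(size=(500, 2))
loadings = np.array([[0.8, 0], [0.8, 0], [0.8, 0], [0, 0.8], [0, 0.8], [0, 0.8]])
data = factors @ loadings.T + 0.6 * rng.normal(size=(500, 6))

eigenvalues, n_retain = kaiser_criterion(data)
print(np.round(eigenvalues, 2), n_retain)
```

With two clearly separated factors, the first two eigenvalues are well above 1 and the rest are well below, so the rule retains two factors. Real data are rarely this clean, which is why the text recommends combining criteria.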

Cattell's scree plot

Cattell's scree plot, popularly known simply as the scree plot, is another widespread eigenvalue-based factor selection criterion. It plots the eigenvalues on the y-axis against the factor number on the x-axis, with factors ordered from the highest to the lowest eigenvalue from left to right. The plot usually forms an elbow that indicates the cutoff point for factor extraction: the point at which the curve first begins to straighten out indicates the maximum number of factors to retain. A significant disadvantage of the scree plot is that locating the “elbow” depends on the researcher's subjective perception. Researchers can see Figure 2 for detail.

Figure 2: A hypothetical example showing the researcher's dilemma in selecting 6, 10, or 15 factors through a scree plot
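A scree plot can be drawn with a few lines of Matplotlib. The sketch below assumes NumPy and Matplotlib are available and uses hypothetical simulated two-factor data. The dashed line at an eigenvalue of 1 is only a visual reference for Kaiser's criterion, and `scree_plot` is an illustrative helper. Locating the elbow remains a visual judgment.

```python
import matplotlib
matplotlib.use("Agg")  # draw to a file; no display needed
import matplotlib.pyplot as plt
import numpy as np

def scree_plot(data, path="scree_plot.png"):
    """Save a scree plot of correlation-matrix eigenvalues and return them."""
    r = np.corrcoef(data, rowvar=False)
    eigenvalues = np.linalg.eigvalsh(r)[::-1]  # largest first
    factor_numbers = np.arange(1, len(eigenvalues) + 1)
    fig, ax = plt.subplots()
    ax.plot(factor_numbers, eigenvalues, marker="o")
    ax.axhline(1.0, linestyle="--", color="grey", label="Eigenvalue = 1")
    ax.set_xlabel("Factor number")
    ax.set_ylabel("Eigenvalue")
    ax.set_title("Scree plot")
    ax.legend()
    fig.savefig(path)
    plt.close(fig)
    return eigenvalues

# Hypothetical two-factor data: 500 respondents, 6 items
rng = np.random.default_rng(1)
factors = rng.normal(size=(500, 2))
loadings = np.array([[0.8, 0], [0.8, 0], [0.8, 0], [0, 0.8], [0, 0.8], [0, 0.8]])
data = factors @ loadings.T + 0.6 * rng.normal(size=(500, 6))
print(np.round(scree_plot(data), 2))
```

For these data, the curve drops sharply after the second factor and flattens from the third onward, so the elbow suggests two factors.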

Percentage of variance

The percentage of variance extracted is another criterion for deciding the number of factors to retain. Literature recommendations vary from a minimum of 50% to 70% or more.[ 12 ] However, when there are many manifest (observed) variables, the number of factors and items needed to reach such a target can increase dramatically. In practice, the percentage of variance explained should be used judiciously together with FLs. FLs greater than 0.4 are preferred, although some authors recommend a value higher than 0.30.[ 3 , 15 , 18 ]
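Because the eigenvalues of a correlation matrix sum to the number of items, each eigenvalue divided by that sum gives the share of total variance a factor explains. The NumPy sketch below computes percentage and cumulative variance and returns the smallest number of factors reaching a target. The 60% target is an assumed value within the 50–70% range cited above, and the data and helper names are hypothetical.

```python
import numpy as np

def variance_explained(data):
    """Percentage and cumulative percentage of total variance per factor."""
    r = np.corrcoef(data, rowvar=False)
    eigenvalues = np.linalg.eigvalsh(r)[::-1]    # largest first
    pct = 100 * eigenvalues / eigenvalues.sum()  # the sum equals the number of items
    return pct, np.cumsum(pct)

def factors_for_target(data, target=60.0):
    """Smallest number of factors whose cumulative variance reaches the target (%)."""
    _, cumulative = variance_explained(data)
    return min(int(np.searchsorted(cumulative, target)) + 1, len(cumulative))

# Hypothetical two-factor data: 500 respondents, 6 items
rng = np.random.default_rng(1)
factors = rng.normal(size=(500, 2))
loadings = np.array([[0.8, 0], [0.8, 0], [0.8, 0], [0, 0.8], [0, 0.8], [0, 0.8]])
data = factors @ loadings.T + 0.6 * rng.normal(size=(500, 6))

pct, cumulative = variance_explained(data)
print(np.round(cumulative, 1), factors_for_target(data, 60.0))
```

Here, two factors explain roughly three quarters of the variance. In line with the text, the retained solution would still be checked against the size of the factor loadings.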

Very simple structure

The very simple structure (VSS) approach combines theory, psychometrics, and statistical analysis. The VSS criterion compares the fit of a simplified model, in which each item keeps only its largest loading(s), with the original correlations. It plots this goodness-of-fit value as a function of the number of factors, rather than relying on statistical significance. The number of factors that maximizes the VSS criterion suggests the optimal number to extract, and the criterion allows comparison across different numbers of factors for varying levels of item complexity.[ 19 ] However, it is not efficient for factorially complex data.

Parallel analysis

Parallel analysis (PA) is a robust, statistical theory-based technique for identifying the appropriate number of factors. Among the criteria discussed here, it is the only one that accounts for the probability that a factor is due to chance. PA simulates random data sets with the same number of items and sample size as the original data and uses the 95th percentile of their eigenvalues as a cutoff line on the scree plot. Factors whose eigenvalues lie above the cutoff line are unlikely to be due to chance. PA is the most robust empirical technique for retaining the appropriate number of factors,[ 16 , 20 ] and it is also robust to distributional assumptions of the data. However, it should be used cautiously when an eigenvalue lies near the 95th percentile cutoff line. Since each technique has its own advantages and disadvantages, researchers should base their decision on multiple criteria.
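The sketch below illustrates Horn-style parallel analysis as described above: it simulates uncorrelated normal data of the same size, takes the 95th percentile of each simulated eigenvalue, and retains factors until an observed eigenvalue first fails to exceed its cutoff. The function names, the 200 simulations, and the fixed seed are illustrative assumptions, not settings taken from the article.

```python
import numpy as np


def eigenvalues_desc(data):
    """Eigenvalues of the item correlation matrix, largest first."""
    return np.linalg.eigvalsh(np.corrcoef(data, rowvar=False))[::-1]


def parallel_analysis(data, n_sims=200, percentile=95, seed=0):
    """Compare observed eigenvalues with percentile cutoffs from random data."""
    rng = np.random.default_rng(seed)
    n, p = data.shape
    observed = eigenvalues_desc(data)
    simulated = np.empty((n_sims, p))
    for s in range(n_sims):
        # Uncorrelated normal data with the same sample size and number of items
        simulated[s] = eigenvalues_desc(rng.standard_normal((n, p)))
    cutoff = np.percentile(simulated, percentile, axis=0)
    above = observed > cutoff
    # Retain factors up to the first observed eigenvalue that fails to beat its cutoff
    n_factors = p if above.all() else int(np.argmin(above))
    return n_factors, observed, cutoff
```

Returning the observed eigenvalues and cutoffs alongside the count makes it easy to inspect borderline cases, which the text above advises treating with caution.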

Reliability

Reliability, an essential requirement of a scale, is also known as reproducibility, repeatability, and consistency. It indicates whether the instrument consistently measures the attribute under identical conditions. The reliability of a scale can be improved by increasing its systematic component and decreasing its random (error) component. Reliability can be further divided into several types, each measured with different indices. Reliability is important, but it is secondary to validity; therefore, it is ideal to calculate and report reliability after validity. However, there are no hard and fast rules, except that both are necessary and important measures. Readers may consult Table 6 for multiple types of reliability indices.
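The idea of systematic and random components can be written compactly under classical test theory, a framework we assume here for illustration (the paragraph above does not name one). An observed score X is modeled as a true (systematic) score T plus random error E, assumed uncorrelated, and reliability is the share of observed variance due to T:

```latex
X = T + E, \qquad
\rho_{XX'} = \frac{\sigma_T^{2}}{\sigma_X^{2}} = \frac{\sigma_T^{2}}{\sigma_T^{2} + \sigma_E^{2}}
```

Increasing the true-score variance relative to the error variance pushes the reliability coefficient toward 1.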

Internal consistency

Cronbach's alpha (α), also known as the α-coefficient, is one of the most commonly used statistics for reporting internal consistency reliability. Internal consistency, based on interitem correlations, indicates how cohesive the items in a questionnaire are. However, the α-coefficient is sample-specific; thus, the literature recommends calculating and reporting it in every study. Ideally, a value of α >0.70 is preferred; however, α >0.60 is also accepted when constructing a new scale.[ 21 , 22 ] Adding items to the scale generally increases the α-coefficient; however, α can decrease when uncorrelated items are added or correlated items are deleted. The corrected item-total correlation is another popular measure of internal consistency; a value <0.3 indicates that the item is unrelated to the rest of the scale. Some studies claim that coefficient beta (β) and omega (ω) are better indices than coefficient-α, but few studies report these indices.[ 23 ]
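Both statistics are straightforward to compute from a respondents-by-items score matrix. The sketch uses the standard formula α = k/(k − 1) × (1 − Σ item variances / variance of total score) and correlates each item with the sum of the remaining items; the function names are hypothetical.

```python
import numpy as np


def cronbach_alpha(items):
    """Cronbach's alpha for an (n_respondents x k_items) score matrix."""
    items = np.asarray(items, dtype=float)
    k = items.shape[1]
    item_variances = items.var(axis=0, ddof=1).sum()
    total_variance = items.sum(axis=1).var(ddof=1)
    return k / (k - 1) * (1 - item_variances / total_variance)


def corrected_item_total(items):
    """Correlation of each item with the sum of the remaining items."""
    items = np.asarray(items, dtype=float)
    total = items.sum(axis=1)
    return np.array([
        np.corrcoef(items[:, j], total - items[:, j])[0, 1]
        for j in range(items.shape[1])
    ])
```

Items whose corrected item-total correlation falls below 0.3 would be candidates for review, in line with the threshold above.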

Test–retest

Test–retest reliability measures the stability of an instrument, that is, the consistency of its scores over time. However, the appropriate interval between repeated measurements is debatable. Test–retest reliability is measured and reported with Pearson's product-moment correlation or the intraclass correlation coefficient. A correlation >0.70 indicates high reliability.[ 21 ] Changes in study conditions over time (e.g., patients recovering after an intervention) can decrease test–retest reliability. Therefore, researchers should report the interval between repeated measurements along with test–retest reliability.
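The two coefficients can disagree. The sketch below assumes the ICC(2,1) form of Shrout and Fleiss (two-way random effects, absolute agreement, single measurement); the article does not specify which ICC form to use, so this choice is an assumption. Scores are arranged with subjects in rows and occasions (test, retest) in columns.

```python
import numpy as np


def pearson_r(x, y):
    """Pearson product-moment correlation between two score vectors."""
    return float(np.corrcoef(x, y)[0, 1])


def icc_2_1(scores):
    """ICC(2,1) for an (n_subjects x k_occasions) score matrix."""
    scores = np.asarray(scores, dtype=float)
    n, k = scores.shape
    grand = scores.mean()
    ss_rows = k * ((scores.mean(axis=1) - grand) ** 2).sum()  # between subjects
    ss_cols = n * ((scores.mean(axis=0) - grand) ** 2).sum()  # between occasions
    ss_total = ((scores - grand) ** 2).sum()
    ss_error = ss_total - ss_rows - ss_cols
    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))
    return (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    )
```

If every retest score is exactly 5 points higher than the test score, Pearson's r is still 1, whereas this absolute-agreement ICC drops sharply (to 1/6 for test scores 1 through 5), because the ICC also penalizes systematic shifts between occasions.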

Parallel forms and split-half reliability

Parallel-form reliability is also known as alternate-form reliability. There are two ways to assess it. In the first, different but similar items make up alternative forms of the test. Both forms are assumed to measure the same phenomenon or underlying construct. This approach addresses two problems of test–retest reliability: the time between administrations and participants' familiarity with the test. In the second approach, the researcher randomly divides the total items of an instrument into two halves; parallel-form reliability calculated from the two halves is known as split-half reliability. However, randomly divided halves may not be similar. Both parallel-form and split-half reliability are reported with a correlation coefficient, and values higher than 0.80 are recommended.[ 24 ] Generating two equivalent tests is challenging in clinical studies; therefore, researchers rarely report reliability from two analogous but separate tests.
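A random split-half estimate can be sketched as follows. The Spearman-Brown correction, which projects the correlation between two half-length tests to the full test length, is a standard adjustment that the paragraph above does not mention; we include it as an assumption, and the function name and seed are hypothetical. Changing the seed changes the split, which illustrates why randomly divided halves may not be similar.

```python
import numpy as np


def split_half_reliability(items, seed=0):
    """Random split-half correlation and its Spearman-Brown corrected value."""
    items = np.asarray(items, dtype=float)
    rng = np.random.default_rng(seed)
    order = rng.permutation(items.shape[1])
    half = len(order) // 2
    half_a = items[:, order[:half]].sum(axis=1)
    half_b = items[:, order[half:]].sum(axis=1)
    r_halves = np.corrcoef(half_a, half_b)[0, 1]
    # Spearman-Brown: reliability of a test twice the length of each half
    return float(r_halves), float(2 * r_halves / (1 + r_halves))
```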

General Questionnaire Properties

The major issues regarding the reliability and validity of scale development have already been discussed. However, many other subtle issues affect the development of a good questionnaire, ranging from the choice of Likert items, the length of the instrument, and the cover letter to web-based versus paper-based data collection and the weighting of the scale. Researchers should think through all of these issues carefully to build a good questionnaire.

Likert items

Likert items are fixed-choice ordinal items that capture attitudes, beliefs, and various other latent domains. The response categories are then assigned numerical ranks for further analysis; the numbering can start from either 0 or 1, and the choice makes no difference. The Likert scale is primarily bipolar, as its opposite ends endorse contrary ideas.[ 2 ] Such items express opinions on a continuum from strong disagreement to strong agreement. Adjectival scales, in contrast, are unipolar scales that measure a variable such as pain intensity (no pain/mild pain/moderate pain/severe pain) in one direction. However, the Likert scale (most likely–least likely) can measure almost any attribute. The Likert scale can have either an odd or an even number of categories, although odd numbers are more popular. The number of categories can vary anywhere from 3 to 11,[ 2 ] although scales with 5 or 7 categories have displayed better statistical properties for discriminating between responses.[ 2 , 24 ]
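Why the starting numeral does not matter can be checked directly: coding from 0 instead of 1 subtracts the same constant from every response, which leaves correlations (and therefore correlation-based statistics) unchanged. The label set and the `code_responses` helper below are hypothetical.

```python
import numpy as np

# Hypothetical 5-point Likert labels, ordered from strong disagreement to strong agreement
LABELS = ["Strongly disagree", "Disagree", "Neutral", "Agree", "Strongly agree"]


def code_responses(responses, start=1):
    """Convert rows of Likert labels into integer ranks beginning at `start`."""
    mapping = {label: start + i for i, label in enumerate(LABELS)}
    return np.array([[mapping[answer] for answer in row] for row in responses])


if __name__ == "__main__":
    responses = [
        ["Agree", "Strongly agree"],
        ["Disagree", "Neutral"],
        ["Neutral", "Disagree"],
        ["Strongly disagree", "Disagree"],
    ]
    zero_based = code_responses(responses, start=0)
    one_based = code_responses(responses, start=1)
    # Same interitem correlation under either coding
    print(np.corrcoef(zero_based.T)[0, 1], np.corrcoef(one_based.T)[0, 1])
```

Raw total scores do shift by the number of items, so the chosen convention should still be reported.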

Length of questionnaire

A good questionnaire needs enough items to capture the construct of interest; therefore, investigators need to collect as many questions as possible. However, a longer scale increases both time and cost, and the response rate decreases as questionnaire length increases.[ 25 ] What counts as lengthy is debatable, ranging from more than 4 pages to 12 pages in various studies,[ 26 ] but longer scales also increase the false-positive rate.[ 27 ]

Translating a questionnaire

Often, reliable and valid questionnaires already exist. However, experts need to assess two immediate and important criteria before using them: the cultural sensitivity and the language of the scale. Sensitive questions on sexual preferences, political orientation, societal structure, and religion may be open for discussion in certain societies, religions, and cultures, whereas in others they may be taboo or prone to misreporting. Such questions need to be reframed with regional sentiments and culture in mind. Further, a questionnaire in a different language needs to be translated by a minimum of two independent bilingual translators. The translated questionnaire then needs to be translated back into the original language by a minimum of two independent bilingual experts who are different from those who produced the forward translation. This process of converting the original questionnaire into the target language and then back into the original language is known as forward and backward translation. The subsequent steps for a translated questionnaire, such as an expert panel group, pilot testing, and assessment of reliability and validity, remain the same as for constructing a new scale.

Web-based or paper-based

Broadly, paper and electronic formats are the two modes of administering a questionnaire to participants. Both have advantages and disadvantages, and the response rate is a significant issue in self-administered scales. The main benefits of the electronic format are reductions in cost, time, and data cleaning requirements. In contrast, paper-based administration improves external generalizability, offers the familiar feel of paper, and does not require internet access. According to Greenlaw and Welty, the response rate improves when both options are available to participants; however, cost and time increase compared with using the electronic format alone.[ 27 ]

Item order and weights

There are multiple ways to order the items in a questionnaire, and item order becomes more critical for a lengthy questionnaire. Opinions differ on whether to group or mix the items in an instrument.[ 24 ] Grouping inflates intra-scale correlation, whereas mixing inflates inter-scale correlation.[ 28 ] Both approaches have been shown empirically to give similar results for scales of at least 20 items. The questions related to a particular domain can be assigned either equal or unequal weights. There are two mechanisms for assigning unequal weights in a questionnaire. In the first, researchers attach different importance to individual items. In the second, investigators frame more or fewer questions according to the importance of each subscale in the scale.
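The first weighting mechanism amounts to a weighted sum of item scores. In this minimal sketch, the `weighted_score` helper and the example weights are hypothetical; with no weights supplied, every item counts equally.

```python
import numpy as np


def weighted_score(item_scores, weights=None):
    """Total score per respondent; equal weights unless `weights` is given."""
    item_scores = np.asarray(item_scores, dtype=float)
    if weights is None:
        weights = np.ones(item_scores.shape[1])
    return item_scores @ np.asarray(weights, dtype=float)


if __name__ == "__main__":
    scores = [[1, 2, 3], [3, 3, 3]]
    print(weighted_score(scores))             # equal weights
    print(weighted_score(scores, [2, 1, 1]))  # first item counted twice
```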

The fundamental triad of science is accuracy, precision, and objectivity. The increasing use of questionnaires in the medical sciences requires rigorous scientific evaluation before they are adopted for routine use. Unlike clinical trials, systematic reviews, and observational studies, which have reporting guidelines such as CONSORT, PRISMA, and STROBE, questionnaire development, evaluation, and reporting have no standard guidelines. In this article, we emphasize a systematic and structured approach to building a good questionnaire. Failure to meet questionnaire development standards may lead to biased, unreliable, and inaccurate study findings. Therefore, the general guidelines given in this article can be used to develop and validate an instrument before routine use.

Financial support and sponsorship

Conflicts of interest

There are no conflicts of interest.


Construct Validity | Definition, Types, & Examples

Published on February 17, 2022 by Pritha Bhandari . Revised on June 22, 2023.

Construct validity is about how well a test measures the concept it was designed to evaluate. It’s crucial to establishing the overall validity of a method.

Assessing construct validity is especially important when you’re researching something that can’t be measured or observed directly, such as intelligence, self-confidence, or happiness. You need multiple observable or measurable indicators to measure those constructs or run the risk of introducing research bias into your work.

Construct validity is one of four types of measurement validity. The other three are:

- Content validity: Is the test fully representative of what it aims to measure?

- Face validity: Does the content of the test appear to be suitable to its aims?

- Criterion validity: Do the results accurately measure the concrete outcome they are designed to measure?


A construct is a theoretical concept, theme, or idea based on empirical observations. It’s a variable that’s usually not directly measurable.

Some common constructs include:

- Self-esteem

- Logical reasoning

- Academic motivation

- Social anxiety

Constructs can range from simple to complex. For example, a concept like hand preference is easily assessed:

- A simple survey question : Ask participants which hand is their dominant hand.

- Observations : Ask participants to perform simple tasks, such as picking up an object or drawing a cat, and observe which hand they use to execute the tasks.

A more complex concept, like social anxiety, requires more nuanced measurements, such as psychometric questionnaires and clinical interviews.

Simple constructs tend to be narrowly defined, while complex constructs are broader and made up of dimensions. Dimensions are different parts of a construct that are coherently linked to make it up as a whole.

As a construct, social anxiety is made up of several dimensions.

- Psychological dimension: Intense fear and anxiety

- Physiological dimension: Physical stress indicators

- Behavioral dimension: Avoidance of social settings


Construct validity concerns the extent to which your test or measure accurately assesses what it’s supposed to.

In research, it’s important to operationalize constructs into concrete and measurable characteristics based on your idea of the construct and its dimensions.

Be clear on how you define your construct and how the dimensions relate to each other before you collect or analyze data. This helps you ensure that any measurement method you use accurately assesses the specific construct you’re investigating as a whole, and it helps you avoid biases and mistakes like omitted variable bias or information bias.

For example, a questionnaire designed to measure social anxiety might include questions such as:

- How often do you avoid entering a room when everyone else is already seated?

- Do other people tend to describe you as quiet?

- When talking to new acquaintances, how often do you worry about saying something foolish?

- To what extent do you fear giving a talk in front of an audience?

- How often do you avoid making eye contact with other people?

- Do you prefer to have a small number of close friends over a big group of friends?

When designing or evaluating a measure, it’s important to consider whether it really targets the construct of interest or whether it assesses separate but related constructs.

It’s crucial to differentiate your construct from related constructs and make sure that every part of your measurement technique is solely focused on your specific construct.

- Does your questionnaire solely measure social anxiety?

- Are all aspects of social anxiety covered by the questions?

- Do your questions avoid measuring other related constructs like shyness or introversion?

There are two main types of construct validity.

- Convergent validity: The extent to which your measure corresponds to measures of related constructs

- Discriminant validity: The extent to which your measure is unrelated or negatively related to measures of distinct constructs

Convergent validity

Convergent validity is the extent to which measures of the same or similar constructs actually correspond to each other.

In research studies, you expect measures of related constructs to correlate with one another. If you have two related scales, people who score highly on one scale tend to score highly on the other as well.

Discriminant validity

Conversely, discriminant validity means that two measures of constructs that should be unrelated, very weakly related, or negatively related actually turn out that way in practice.

You check for discriminant validity the same way as convergent validity: by comparing results for different measures and assessing whether or how they correlate.

How do you select unrelated constructs? It’s good to pick constructs that are theoretically distinct or opposing concepts within the same category.

For example, if your construct of interest is a personality trait (e.g., introversion), it’s appropriate to pick a completely opposing personality trait (e.g., extroversion). You can expect results for your introversion test to be negatively correlated with results for a measure of extroversion.

Alternatively, you can pick non-opposing unrelated concepts and check there are no correlations (or weak correlations) between measures.
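Checking convergent and discriminant validity comes down to two correlations. The sketch below simulates hypothetical data: a latent social anxiety score, a new scale and an established related measure that both reflect it with noise, and a measure of an unrelated construct. All names and the simulation itself are illustrative assumptions.

```python
import numpy as np


def validity_correlations(new_scale, related_measure, unrelated_measure):
    """Return (convergent r, discriminant r) for a new scale's total scores."""
    convergent = np.corrcoef(new_scale, related_measure)[0, 1]
    discriminant = np.corrcoef(new_scale, unrelated_measure)[0, 1]
    return float(convergent), float(discriminant)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n = 500
    latent = rng.standard_normal(n)  # hypothetical true social anxiety
    new_scale = latent + 0.5 * rng.standard_normal(n)
    established = latent + 0.5 * rng.standard_normal(n)  # related, established measure
    unrelated = rng.standard_normal(n)  # measure of a distinct construct
    conv, disc = validity_correlations(new_scale, established, unrelated)
    print(f"convergent r = {conv:.2f}, discriminant r = {disc:.2f}")
```

A high convergent correlation together with a near-zero discriminant correlation is the pattern that supports construct validity; for an opposing construct, such as extroversion versus introversion, one would instead expect a clearly negative correlation.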

You often focus on assessing construct validity after developing a new measure. It’s best to test out a new measure with a pilot study, but there are other options.

- A pilot study is a trial run of your study. You test out your measure with a small sample to check its feasibility, reliability, and validity. This helps you figure out whether you need to tweak or revise your measure to make sure you’re accurately testing your construct.

- Statistical analyses are often applied to test validity with data from your measures. You test convergent and discriminant validity with correlations to see if results from your test are positively or negatively related to those of other established tests.

- You can also use regression analyses to assess whether your measure is actually predictive of outcomes that you expect it to predict theoretically. A regression analysis that supports your expectations strengthens your claim of construct validity.
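A simple predictive check is an ordinary least squares regression of a theoretically expected outcome on scale scores. In this sketch, the `predictive_regression` helper is hypothetical, and the outcome (for example, a count of avoided social events) is only an illustrative assumption; it returns the intercept, slope, and R².

```python
import numpy as np


def predictive_regression(scale_scores, outcome):
    """OLS of an outcome on scale scores; returns (intercept, slope, R^2)."""
    x = np.asarray(scale_scores, dtype=float)
    y = np.asarray(outcome, dtype=float)
    design = np.column_stack([np.ones_like(x), x])  # intercept column + scores
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    fitted = design @ coef
    r_squared = 1 - ((y - fitted) ** 2).sum() / ((y - y.mean()) ** 2).sum()
    return float(coef[0]), float(coef[1]), float(r_squared)
```

A slope in the expected direction with a meaningful R² supports the claim that the measure predicts what theory says it should.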


It’s important to recognize and counter threats to construct validity for a robust research design. The most common threats are:

- Poor operationalization

- Experimenter expectancies

- Subject bias

A big threat to construct validity is poor operationalization of the construct.

A good operational definition of a construct helps you measure it accurately and precisely every time. Your measurement protocol is clear and specific, and it can be used under different conditions by other people.

Without a good operational definition, you may have random or systematic error, which compromises your results and can lead to information bias. Your measure may not be able to accurately assess your construct.

Experimenter expectancies about a study can bias your results. It’s best to be aware of this research bias and take steps to avoid it.

To combat this threat, use researcher triangulation and involve people who don’t know the hypothesis in taking measurements in your study. Since they don’t have strong expectations, they are unlikely to bias the results.

When participants hold expectations about the study, their behaviors and responses are sometimes influenced by their own biases. This can threaten your construct validity because you may not be able to accurately measure what you’re interested in.

You can mitigate subject bias by using masking (blinding) to hide the true purpose of the study from participants. By giving them a cover story for your study, you can lower the effect of subject bias on your results and prevent them from guessing the point of your research, which can lead to demand characteristics, social desirability bias, and a Hawthorne effect.


Construct validity is about how well a test measures the concept it was designed to evaluate. It’s one of four types of measurement validity; the others are content validity, face validity, and criterion validity.


When designing or evaluating a measure, construct validity helps you ensure you’re actually measuring the construct you’re interested in. If you don’t have construct validity, you may inadvertently measure unrelated or distinct constructs and lose precision in your research.

Construct validity is often considered the overarching type of measurement validity, because it covers all of the other types. You need to have face validity, content validity, and criterion validity to achieve construct validity.



Bhandari, P. (2023, June 22). Construct Validity | Definition, Types, & Examples. Scribbr. Retrieved August 26, 2024, from https://www.scribbr.com/methodology/construct-validity/


Validity in research: a guide to measuring the right things

Last updated: 27 February 2023

Reviewed by Cathy Heath


Validity is necessary for all types of studies ranging from market validation of a business or product idea to the effectiveness of medical trials and procedures. So, how can you determine whether your research is valid? This guide can help you understand what validity is, the types of validity in research, and the factors that affect research validity.


What is validity?

In the most basic sense, validity is the quality of being based on truth or reason. Valid research strives to eliminate the effects of unrelated information and the circumstances under which evidence is collected.

Validity in research is the ability to conduct an accurate study with the right tools and conditions to yield acceptable and reliable data that can be reproduced. Researchers rely on carefully calibrated tools for precise measurements. However, collecting accurate information can be more of a challenge.

To achieve and maintain validity, studies must be conducted in environments that don't sway the results. Validity can be compromised by asking the wrong questions or relying on limited data.

Why is validity important in research?

Research is used to improve life for humans. Every product and discovery, from innovative medical breakthroughs to advanced new products, depends on accurate research to be dependable. Without it, the results couldn't be trusted, and products would likely fail. Businesses would lose money, and patients couldn't rely on medical treatments.

While wasting money on a lousy product is a concern, a lack of validity paints a much grimmer picture in medicine or in the manufacture of automobiles and airplanes, for example. Whether you're launching an exciting new product or conducting scientific research, validity can determine success or failure.

What is reliability?

Reliability is the ability of a method to yield consistency. If the same result can be consistently achieved by using the same method to measure something, the measurement method is said to be reliable. For example, a thermometer that shows the same temperatures each time in a controlled environment is reliable.

While high reliability is a part of measuring validity, it's only part of the puzzle. If the reliable thermometer hasn't been properly calibrated and reliably measures temperatures two degrees too high, it doesn't provide a valid (accurate) measure of temperature.

Similarly, if a researcher uses a thermometer to measure weight, the results won't be accurate because it's the wrong tool for the job.
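The thermometer example separates two kinds of error: random error (spread across repeated readings) limits reliability, while systematic error (bias from the true value) limits validity. The sketch summarizes both for a set of hypothetical readings; the `reliability_and_bias` helper and the numbers are illustrative only.

```python
import numpy as np


def reliability_and_bias(readings, true_value):
    """Spread (random error) and bias (systematic error) of repeated readings."""
    readings = np.asarray(readings, dtype=float)
    spread = readings.std(ddof=1)          # small spread -> consistent (reliable)
    bias = readings.mean() - true_value    # nonzero bias -> inaccurate (not valid)
    return float(spread), float(bias)


if __name__ == "__main__":
    # Hypothetical miscalibrated thermometer in a room held at 20 degrees
    spread, bias = reliability_and_bias([22.0, 22.1, 21.9], true_value=20.0)
    print(f"spread: {spread:.2f}, bias: {bias:.2f}")
```

Here the spread is about 0.1 degrees (reliable) while the bias is about 2 degrees (not valid), matching the miscalibrated thermometer described above.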

How are reliability and validity assessed?

While measuring reliability is a part of measuring validity, there are distinct ways to assess both measurements for accuracy.

How is reliability measured?

Reliability is assessed with measures of consistency and stability, including:

Consistency and stability of the same measure when repeated across multiple times and conditions

Consistency and stability of the measure across different test subjects

Consistency and stability of results from different parts of a test designed to measure the same thing

How is validity measured?

Since validity refers to how accurately a method measures what it is intended to measure, it can be difficult to assess the accuracy. Validity can be estimated by comparing research results to other relevant data or theories.

The adherence of a measure to existing knowledge of how the concept is measured

The ability to cover all aspects of the concept being measured

The relation of the result in comparison with other valid measures of the same concept

What are the types of validity in a research design?

Research validity is broadly grouped into two categories: internal and external. Yet, this grouping doesn't clearly define the different types of validity. Research validity can be divided into seven distinct types.

Face validity: The extent to which a test appears valid simply because of the appropriateness or relevance of the testing method, included information, or tools used.

Content validity: The determination that the measure used in research covers the full domain of the content.

Construct validity: The assessment of the suitability of the measurement tool to measure the activity being studied.

Internal validity: The assessment of how your research environment affects measurement results. High internal validity means that factors other than those being studied can’t explain an observed cause-and-effect relationship.

External validity: The extent to which the study will be accurate beyond the sample and the level to which it can be generalized to other settings, populations, and measures.

Statistical conclusion validity: The determination of whether a relationship exists between procedures and outcomes (appropriate sampling and measuring procedures along with appropriate statistical tests).

Criterion-related validity: A measurement of the quality of your testing methods against a criterion measure (like a “gold standard” test) that is measured at the same time.

Examples of validity

Like different types of research and the various ways to measure validity, examples of validity can vary widely. These include:

A questionnaire may be considered valid because each question addresses specific and relevant aspects of the study subject.

In a brand assessment study, researchers can use comparison testing to verify the results of an initial study. For example, the results from a focus group response about brand perception are considered more valid when they match those of a questionnaire answered by current and potential customers.

A test to measure a class of students' understanding of the English language contains reading, writing, listening, and speaking components to cover the full scope of how language is used.

Factors that affect research validity

Certain factors can affect research validity in both positive and negative ways. By understanding the factors that improve validity and those that threaten it, you can enhance the validity of your study. These include:

Random selection of participants vs. the selection of participants that are representative of your study criteria

Blinding with interventions the participants are unaware of (like the use of placebos)

Manipulating the experiment by inserting a variable that will change the results

Randomly assigning participants to treatment and control groups to avoid bias

Following specific procedures during the study to avoid unintended effects

Conducting a study in the field instead of a laboratory for more accurate results

Replicating the study with different factors or settings to compare results

Using statistical methods to adjust for inconclusive data

What are the common validity threats in research, and how can their effects be minimized or nullified?

Research validity can be difficult to achieve because of internal and external threats that produce inaccurate results. These factors can jeopardize validity.

History: Events that occur between an early and later measurement

Maturation: The passage of time in a study can include data on actions that would have naturally occurred outside of the settings of the study

Repeated testing: Taking a test repeatedly can change the outcome of subsequent tests

Selection of subjects: Unconscious bias which can result in the selection of uniform comparison groups

Statistical regression: Choosing subjects based on extremes doesn't yield an accurate outcome for the majority of individuals

Attrition: When the sample group is diminished significantly during the course of the study

Maturation: When subjects mature during the study, and natural maturation is attributed to the effects of the study

While some validity threats can be minimized or wholly nullified, removing all threats from a study is impossible. For example, random selection can remove unconscious bias and statistical regression.

Researchers can even hope to avoid attrition by using smaller study groups. Yet, smaller study groups could potentially affect the research in other ways. The best way for researchers to prevent validity threats is through careful environmental planning and reliable data-gathering methods.

How to ensure validity in your research

Researchers should be mindful of the importance of validity in the early planning stages of any study to avoid inaccurate results. Researchers must take the time to consider tools and methods, as well as how closely the testing environment matches the natural environment in which the results will be used.

The following steps can be used to ensure validity in research:

Choose appropriate methods of measurement

Use appropriate sampling to choose test subjects

Create an accurate testing environment

How do you maintain validity in research?

Accurate research is usually conducted over a period of time with different test subjects. To maintain validity across an entire study, you must take specific steps to ensure that gathered data has the same levels of accuracy.

Consistency is crucial for maintaining validity in research. When researchers apply methods consistently and standardize the circumstances under which data is collected, validity can be maintained across the entire study.

Is there a need for validation of the research instrument before its implementation?

An essential part of validity is choosing the right research instrument or method for accurate results. Consider the thermometer that is reliable but still produces inaccurate results. You're unlikely to achieve research validity without steps such as calibration and the assessment of content and construct validity.

Understanding research validity for more accurate results

Without validity, research can't provide the accuracy necessary to deliver a useful study. By getting a clear understanding of validity in research, you can take steps to improve your research skills and achieve more accurate results.


Validity In Psychology Research: Types & Examples

Saul McLeod, PhD

Editor-in-Chief for Simply Psychology

BSc (Hons) Psychology, MRes, PhD, University of Manchester

Saul McLeod, PhD., is a qualified psychology teacher with over 18 years of experience in further and higher education. He has been published in peer-reviewed journals, including the Journal of Clinical Psychology.


Olivia Guy-Evans, MSc

Associate Editor for Simply Psychology

BSc (Hons) Psychology, MSc Psychology of Education

Olivia Guy-Evans is a writer and associate editor for Simply Psychology. She has previously worked in healthcare and educational sectors.

In psychology research, validity refers to the extent to which a test or measurement tool accurately measures what it’s intended to measure. It ensures that the research findings are genuine and not due to extraneous factors.

Validity can be broadly categorized into internal and external validity.

The concept of validity was formulated by Kelley (1927, p. 14), who stated that a test is valid if it measures what it claims to measure. For example, a test of intelligence should measure intelligence and not something else (such as memory).

Internal and External Validity In Research

Internal validity refers to whether the effects observed in a study are due to the manipulation of the independent variable and not some other confounding factor.

In other words, internal validity concerns whether there is a causal relationship between the independent and dependent variables.

Internal validity can be improved by controlling extraneous variables, using standardized instructions, counterbalancing, and eliminating demand characteristics and investigator effects.
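Counterbalancing can be sketched in code. The example below assumes a within-subjects design with hypothetical conditions A, B and C, and uses a simple cyclic Latin square (one common counterbalancing scheme, swapped in here for illustration; the source does not specify a scheme), so each condition appears in each serial position exactly once across orders. Participants are then assigned to orders in rotation.

```python
def latin_square_orders(conditions: list[str]) -> list[list[str]]:
    """Build a cyclic Latin square: row i is the condition list rotated by i.

    Each condition appears exactly once in every serial position across rows,
    which balances simple order effects (practice, fatigue) across conditions.
    """
    n = len(conditions)
    return [[conditions[(row + col) % n] for col in range(n)] for row in range(n)]


def assign_orders(participants: list[str], conditions: list[str]) -> dict[str, list[str]]:
    """Assign participants to Latin-square orders in rotation."""
    orders = latin_square_orders(conditions)
    return {p: orders[i % len(orders)] for i, p in enumerate(participants)}


conditions = ["A", "B", "C"]  # hypothetical experimental conditions
participants = [f"P{i}" for i in range(1, 7)]
for pid, order in assign_orders(participants, conditions).items():
    print(pid, order)
```

A cyclic square balances serial position but not carryover, since A always comes immediately before B; designs concerned with carryover effects often use a balanced (Williams) Latin square instead.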

External validity refers to the extent to which the results of a study can be generalized to other settings (ecological validity), other people (population validity), and over time (historical validity).

External validity can be improved by conducting experiments in more natural settings and by using random sampling to select participants.
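Random sampling can be illustrated with Python's standard library. This is a minimal sketch assuming a hypothetical sampling frame of 1,000 participant IDs; `random.Random.sample` draws without replacement, so every member of the frame has the same chance of selection. A fixed seed is used only so that the draw can be reproduced and documented.

```python
import random


def draw_simple_random_sample(frame: list[str], n: int, seed: int) -> list[str]:
    """Draw a simple random sample of size n, without replacement, from the frame."""
    if n > len(frame):
        raise ValueError("sample size cannot exceed the size of the sampling frame")
    rng = random.Random(seed)  # seeded so the selection can be audited and repeated
    return rng.sample(frame, n)


# Hypothetical sampling frame of 1,000 eligible people
frame = [f"ID{i:04d}" for i in range(1000)]
sample = draw_simple_random_sample(frame, n=50, seed=2024)
print(len(sample), sample[:5])
```

Random selection supports generalization only to the population the frame represents; if the frame itself leaves out some groups, the sample inherits that gap.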

Types of Validity In Psychology

Two main categories of validity are used to assess the validity of a test (e.g., a questionnaire, interview, or IQ test): content and criterion validity.

- Content validity refers to the extent to which a test or measurement represents all aspects of the intended content domain. It assesses whether the test items adequately cover the topic or concept.

- Criterion validity assesses the performance of a test based on its correlation with a known external criterion or outcome. It can be further divided into concurrent (measured at the same time) and predictive (measuring future performance) validity.

Face Validity

Face validity is simply whether the test appears (at face value) to measure what it claims to. This is the least sophisticated measure of content-related validity, and is a superficial and subjective assessment based on appearance.

Tests wherein the purpose is clear, even to naïve respondents, are said to have high face validity. Accordingly, tests wherein the purpose is unclear have low face validity (Nevo, 1985).

A direct measure of face validity is obtained by asking people to rate the validity of a test as it appears to them. Raters could use a Likert scale to assess face validity.

For example:

- The test is extremely suitable for a given purpose

- The test is very suitable for that purpose

- The test is adequate

- The test is inadequate

- The test is irrelevant and, therefore, unsuitable
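Ratings on a scale like the one above are usually coded numerically before they are summarized. The sketch below assumes a hypothetical coding from 5 (extremely suitable) down to 1 (irrelevant) and reports the median, a common choice for ordinal data, alongside the mean; the ratings themselves are invented for illustration.

```python
import statistics

# Hypothetical numeric coding of the five response options (5 = best)
FACE_VALIDITY_SCALE = {
    "extremely suitable": 5,
    "very suitable": 4,
    "adequate": 3,
    "inadequate": 2,
    "irrelevant": 1,
}


def score_ratings(labels: list[str]) -> dict[str, float]:
    """Convert rater labels to numeric scores and summarize them."""
    scores = [FACE_VALIDITY_SCALE[label] for label in labels]
    return {
        "median": statistics.median(scores),
        "mean": statistics.mean(scores),
        # share of raters who judged the test at least adequate
        "prop_adequate_or_better": sum(s >= 3 for s in scores) / len(scores),
    }


ratings = ["very suitable", "adequate", "extremely suitable", "very suitable", "inadequate"]
print(score_ratings(ratings))
```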

It is important to select suitable people to rate a test (e.g., questionnaire, interview, IQ test, etc.). For example, individuals who actually take the test would be well placed to judge its face validity.

Also, people who work with the test could offer their opinion (e.g., employers, university administrators). Finally, the researcher could use members of the general public with an interest in the test (e.g., parents of testees, politicians, teachers, etc.).

The face validity of a test can be considered a robust construct only if a reasonable level of agreement exists among raters.
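The source does not say how agreement among raters should be quantified. One established option for a single item rated by several people is the r_wg index (James, Demaree & Wolf, 1984), which compares the observed variance of the ratings with the variance expected if raters answered at random, assumed here to be a uniform distribution over the A response options, (A^2 - 1)/12. The sketch below assumes the five-point coding 1 to 5 and hypothetical ratings; values near 1 indicate strong agreement.

```python
import statistics


def rwg(ratings: list[int], n_options: int) -> float:
    """Within-group agreement index r_wg for a single item.

    r_wg = 1 - (observed variance / variance under a uniform random-responding null),
    where the null variance for A equally spaced options is (A**2 - 1) / 12.
    """
    if len(ratings) < 2:
        raise ValueError("need at least two raters")
    observed_var = statistics.variance(ratings)  # sample variance (n - 1 denominator)
    null_var = (n_options ** 2 - 1) / 12
    # Observed variance can exceed the null; truncating at 0 is a common convention
    return max(0.0, 1 - observed_var / null_var)


close_agreement = [4, 4, 5, 4, 4]    # hypothetical ratings on a 1-5 scale
wide_disagreement = [1, 5, 2, 5, 3]
print(round(rwg(close_agreement, 5), 2), round(rwg(wide_disagreement, 5), 2))
```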

It should be noted that the term face validity should be avoided when the rating is done by an “expert”; in that case, the term content validity is more appropriate.

Having face validity does not mean that a test really measures what the researcher intends to measure, only that, in the judgment of raters, it appears to do so. Consequently, it is a crude and basic measure of validity.

A test item such as “I have recently thought of killing myself” has obvious face validity as an item measuring suicidal cognitions and may be useful when measuring symptoms of depression.

However, items with clear face validity are more vulnerable to social desirability bias. Individuals may manipulate their responses to deny or hide problems, or exaggerate behaviors to present a positive image of themselves.

It is possible for a test item to lack face validity but still have general validity and measure what it claims to measure. This is good because it reduces demand characteristics and makes it harder for respondents to manipulate their answers.

For example, the test item “I believe in the second coming of Christ” would lack face validity as a measure of depression (as the purpose of the item is unclear).

This item appeared on the first version of The Minnesota Multiphasic Personality Inventory (MMPI) and loaded on the depression scale.

Because most of the original normative sample of the MMPI were devout Christians, only a depressed Christian would be expected to think Christ is not coming back. Thus, for this particular religious sample, the item does have general validity but not face validity.

Construct Validity

Construct validity assesses how well a test or measure represents and captures an abstract theoretical concept, known as a construct. It indicates the degree to which the test accurately reflects the construct it intends to measure, often evaluated through relationships with other variables and measures theoretically connected to the construct.

Construct validity was introduced by Cronbach and Meehl (1955). This type of content-related validity refers to the extent to which a test captures a specific theoretical construct or trait, and it overlaps with some of the other aspects of validity.

Construct validity does not concern the simple, factual question of whether a test measures an attribute.

Instead, it is about the complex question of whether test score interpretations are consistent with a nomological network involving theoretical and observational terms (Cronbach & Meehl, 1955).

To test for construct validity, it must be demonstrated that the phenomenon being measured actually exists. So, the construct validity of a test for intelligence, for example, depends on a model or theory of intelligence.

Construct validity entails demonstrating the power of such a construct to explain a network of research findings and to predict further relationships.

The more evidence a researcher can demonstrate for a test’s construct validity, the better. However, there is no single method of determining the construct validity of a test.

Instead, different methods and approaches are combined to present the overall construct validity of a test. For example, factor analysis and correlational methods can be used.

Convergent Validity

Convergent validity is a subtype of construct validity. It assesses the degree to which two measures that theoretically should be related are in fact related.

It demonstrates that measures of similar constructs are highly correlated. It helps confirm that a test accurately measures the intended construct by showing its alignment with other tests designed to measure the same or similar constructs.

For example, suppose two different scales, Scale A and Scale B, are used to measure self-esteem. If both scales effectively measure self-esteem, then individuals who score high on Scale A should also score high on Scale B, and those who score low on Scale A should score similarly low on Scale B.

If the scores from these two scales show a strong positive correlation, then this provides evidence for convergent validity because it indicates that both scales seem to measure the same underlying construct of self-esteem.
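The Scale A and Scale B comparison comes down to a correlation between paired scores. The sketch below computes Pearson's r by hand for transparency (Python 3.10+ also provides `statistics.correlation`); the scores are invented for illustration, and what counts as a "strong" correlation is a judgment the text leaves open.

```python
import math


def pearson_r(x: list[float], y: list[float]) -> float:
    """Pearson product-moment correlation between two paired score lists."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two paired lists with at least two observations")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


# Hypothetical self-esteem totals for the same eight people on two scales
scale_a = [12, 18, 25, 30, 22, 15, 28, 20]
scale_b = [14, 17, 27, 29, 20, 13, 30, 22]
print(f"r = {pearson_r(scale_a, scale_b):.2f}")
```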

Concurrent Validity (i.e., occurring at the same time)

Concurrent validity evaluates how well a test’s results correlate with the results of a previously established and accepted measure, when both are administered at the same time.

It helps in determining whether a new measure is a good reflection of an established one without waiting to observe outcomes in the future.

If the new test is validated by comparison with a currently existing criterion, we have concurrent validity.

Very often, a new IQ or personality test might be compared with an older but similar test known to have good validity already.

Predictive Validity

Predictive validity assesses how well a test predicts a criterion that will occur in the future. It measures the test’s ability to foresee the performance of an individual on a related criterion measured at a later point in time. It gauges the test’s effectiveness in predicting subsequent real-world outcomes or results.

For example, a prediction may be made on the basis of a new intelligence test that high scorers at age 12 will be more likely to obtain university degrees several years later. If the prediction is borne out, then the test has predictive validity.
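The intelligence-test example can be checked with a simple follow-up analysis. The sketch below uses invented data: each hypothetical participant has a test score at age 12 and a later outcome coded 1 (obtained a degree) or 0 (did not). It splits the group at the median score and compares the proportion obtaining a degree in each half; a clearly higher rate among high scorers is consistent with predictive validity. The median split is a deliberately simple choice for illustration; a correlation or regression on the full score range would usually be preferred in practice.

```python
import statistics


def degree_rates_by_median_split(scores: list[float], degree: list[int]) -> tuple[float, float]:
    """Return (rate among high scorers, rate among low scorers).

    High scorers are those strictly above the median score; everyone else is low.
    """
    if len(scores) != len(degree):
        raise ValueError("scores and outcomes must be paired")
    cut = statistics.median(scores)
    high = [d for s, d in zip(scores, degree) if s > cut]
    low = [d for s, d in zip(scores, degree) if s <= cut]
    if not high or not low:
        raise ValueError("median split left one group empty")
    return sum(high) / len(high), sum(low) / len(low)


# Hypothetical data: test score at age 12, degree obtained later (1 = yes, 0 = no)
scores_at_12 = [85, 92, 100, 104, 110, 115, 121, 128, 95, 108]
got_degree = [0, 0, 1, 0, 1, 1, 1, 1, 0, 0]
high_rate, low_rate = degree_rates_by_median_split(scores_at_12, got_degree)
print(f"degree rate: high scorers {high_rate:.0%}, low scorers {low_rate:.0%}")
```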

Cronbach, L. J., & Meehl, P. E. (1955). Construct validity in psychological tests. Psychological Bulletin, 52, 281-302.

Hathaway, S. R., & McKinley, J. C. (1943). Manual for the Minnesota Multiphasic Personality Inventory. New York: Psychological Corporation.

Kelley, T. L. (1927). Interpretation of educational measurements. New York: Macmillan.

Nevo, B. (1985). Face validity revisited. Journal of Educational Measurement, 22(4), 287-293.
