- 06 April 2024

Exclusive: official investigation reveals how superconductivity physicist faked blockbuster results

- Dan Garisto


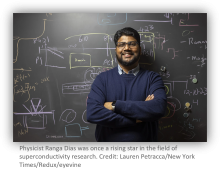

Physicist Ranga Dias was once a rising star in the field of superconductivity research. Credit: Lauren Petracca/New York Times/Redux/eyevine

Ranga Dias, the physicist at the centre of the room-temperature superconductivity scandal, committed data fabrication, falsification and plagiarism, according to an investigation commissioned by his university. Nature’s news team discovered the bombshell investigation report in court documents.

Nature 628, 481-483 (2024)

doi: https://doi.org/10.1038/d41586-024-00976-y

The author of this story is related to Robert Garisto, the chief editor of PRL. The two have had no contact about this story.



Research Integrity

US Office of Research Integrity received 269 allegations of research misconduct last fiscal year

Office closed 36 cases and released nine findings of research misconduct during the period

By Dalmeet Singh Chawla, special to C&EN

February 24, 2023


The US Office of Research Integrity (ORI) received a total of 269 complaints of alleged research misconduct between Oct. 1, 2021 and Sept. 30, 2022, a new report released by the agency reveals.

During the period, the agency closed 42 cases and released nine findings of research misconduct (one involving a single person but two institutions); 10 other investigated cases yielded no such findings. The ORI declined to pursue the remaining 22 cases. In the nine cases with guilty findings, seven were cases of falsification and fabrication, one was falsification alone, and one was of plagiarism.

The ORI defines research misconduct as “fabrication, falsification, or plagiarism in proposing, performing, or reviewing research, or in reporting research results.” In two of the nine cases, the researchers were banned from federal research funding for a certain period of time, and four papers have been requested to be retracted or corrected.

In the last fiscal year, the ORI continued 33 cases from previous years and opened 38 new ones. The ORI has also awarded three grants totaling just under $450,000 to researchers conducting studies in the area of research integrity.

In September 2022, the ORI released a request for information, asking institutions, funders, and concerned individuals for their views on the ORI’s plans to revise the 2005 Public Health Service Policies on Research Misconduct. In the new report, the ORI reveals that 31 institutions, organizations, and individuals submitted comments, which the agency will use to develop a notice for public comment.


Findings of Research Misconduct

A Notice by the Health and Human Services Department on 03/17/2022


- Agencies: Department of Health and Human Services, Office of the Secretary

- Document Citation: 87 FR 15256

- Document Number: 2022-05659

- Document Type: Notice

- Pages: 15256-15257 (2 pages)

- Publication Date: 03/17/2022


Department of Health and Human Services

Office of the Secretary

AGENCY: Office of the Secretary, HHS.

ACTION: Notice.

SUMMARY: Findings of research misconduct have been made against Shuo Chen, Ph.D. (Respondent), formerly a postdoctoral researcher, Department of Physics, University of California, Berkeley (UCB). Respondent engaged in research misconduct in research reported in a grant application submitted for U.S. Public Health Service (PHS) funds, specifically National Institute of Neurological Disorders and Stroke (NINDS), National Institutes of Health (NIH), grant application K99 NS116562-01. The administrative actions, including supervision for a period of one (1) year, were implemented beginning on February 28, 2022, and are detailed below.

FOR FURTHER INFORMATION CONTACT: Wanda K. Jones, Dr.P.H., Acting Director, Office of Research Integrity, 1101 Wootton Parkway, Suite 240, Rockville, MD 20852, (240) 453-8200.

SUPPLEMENTARY INFORMATION: Notice is hereby given that the Office of Research Integrity (ORI) has taken final action in the following case:

Shuo Chen, Ph.D., University of California, Berkeley: Based on the report of an investigation conducted by UCB and additional analysis conducted by ORI in its oversight review, ORI found that Dr. Shuo Chen, formerly a postdoctoral researcher, Department of Physics, UCB, engaged in research misconduct in research reported in a grant application submitted for PHS funds, specifically NINDS, NIH, grant application K99 NS116562-01.

ORI found that Respondent engaged in research misconduct by intentionally, knowingly, and/or recklessly falsifying data and methods by altering, reusing, and relabeling source two-photon microscopy and electrophysiological data to represent images of mouse hippocampal neurons in the following grant application:

- K99 NS116562-01, “Investigation into network dynamics of hippocampal replay sequences by ultrafast voltage imaging,” submitted to NINDS, NIH, on June 25, 2019.

ORI found that Respondent intentionally, knowingly, and/or recklessly falsified two-photon microscopy and in vivo electrophysiological activity images, figure legends, and text descriptions of hippocampal neurons from a mouse running on a treadmill in a head-fixed virtual reality (VR) set up. Specifically:

- Respondent reused an image of visual cortex neurons to represent fluorescence calcium imaging of hippocampal neurons in Figure 6d and its associated text and figure legend of K99 NS116562-01.

- Respondent reused in vivo electrophysiological data from control mice of spatial receptive fields for all recorded place cells during linear track exploration sessions from Supplemental Figure 1b from Nat Neurosci. 2018 Jul;21(7):996-1003 (doi: 10.1038/s41593-018-0163-8) to represent several sessions of two-photon hippocampal calcium imaging of progressive place fields, obtained from multiple mice running on a treadmill in a head-fixed VR set up, in Figure 6e and its associated text and figure legend of K99 NS116562-01.

Respondent neither admits nor denies ORI's findings of research misconduct. The parties entered into a Voluntary Settlement Agreement (Agreement) to conclude this matter without further expenditure of time, finances, or other resources. The settlement is not an admission of liability on the part of the Respondent.

Respondent voluntarily agreed to the following:

(1) Respondent will have his research supervised for a period of one (1) year beginning on February 28, 2022 (the “Supervision Period”). Prior to the submission of an application for PHS support for a research project on which Respondent's participation is proposed and prior to Respondent's participation in any capacity in PHS-supported research, Respondent will submit a plan for supervision of Respondent's duties to ORI for approval. The supervision plan must be designed to ensure the integrity of Respondent's research. Respondent will not participate in any PHS-supported research until such a supervision plan is approved by ORI. Respondent will comply with the agreed-upon supervision plan.

(2) The requirements for Respondent's supervision plan are as follows:

i. A committee of 2-3 senior faculty members at the institution who are familiar with Respondent's field of research, but not including Respondent's supervisor or collaborators, will provide oversight and guidance during the Supervision Period. The committee will review primary data from Respondent's laboratory on a quarterly basis and submit a report to ORI at six (6) month intervals setting forth the committee meeting dates and Respondent's compliance with appropriate research standards and confirming the integrity of Respondent's research.

ii. The committee will conduct an advance review of each application for PHS funds, or report, manuscript, or abstract involving PHS-supported research in which Respondent is involved. The review will include a discussion with Respondent of the primary data represented in those documents and will include a certification to ORI that the data presented in the proposed application, report, manuscript, or abstract is supported by the research record.

(3) During the Supervision Period, Respondent will ensure that any institution employing him submits, in conjunction with each application for PHS funds, or report, manuscript, or abstract involving PHS-supported research in which Respondent is involved, a certification to ORI that the data provided by Respondent are based on actual experiments or are otherwise legitimately derived and that the data, procedures, and methodology are accurately reported in the application, report, manuscript, or abstract.

(4) If no supervision plan is provided to ORI, Respondent will provide certification to ORI at the conclusion of the Supervision Period that his participation was not proposed on a research project for which an application for PHS support was submitted and that he has not participated in any capacity in PHS-supported research.

(5) During the Supervision Period, Respondent will exclude himself voluntarily from serving in any advisory or consultant capacity to PHS including, but not limited to, service on any PHS advisory committee, board, and/or peer review committee.

Dated: March 14, 2022.

Wanda K. Jones,

Acting Director, Office of Research Integrity, Office of the Assistant Secretary for Health.

[FR Doc. 2022-05659 Filed 3-16-22; 8:45 am]

BILLING CODE 4150-31-P


Top Harvard Medical School Neuroscientist Accused of Research Misconduct

Top Harvard Medical School neuroscientist Khalid Shah allegedly falsified data and plagiarized images across 21 papers, data manipulation expert Elisabeth M. Bik said.

In an analysis shared with The Crimson, Bik alleged that Shah, the vice chair of research in the department of neurosurgery at Brigham and Women’s Hospital, presented images from other scientists’ research as his own original experimental data.

Though Bik alleged 44 instances of data falsification in papers spanning 2001 to 2023, she said the “most damning” concerns appeared in a 2022 paper by Shah and 32 other authors in Nature Communications, for which Shah was the corresponding author.

Shah is the latest prominent scientist to have his research face scrutiny by Bik, who has emerged as a leading figure among scientists concerned with research integrity.

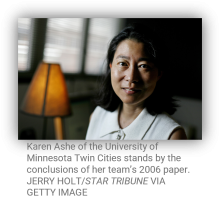

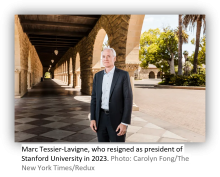

She contributed to data falsification allegations against four top scientists at the Dana-Farber Cancer Institute — leading to the retraction of six and correction of 31 papers — and independently reviewed research misconduct allegations reported by the Stanford Daily against former Stanford president Marc T. Tessier-Lavigne, which played a part in his resignation last summer.

Bik said that after being notified of the allegations by one of Shah’s former colleagues, she used the AI software ImageTwin and reverse image searches to identify duplicates across papers. Bik said she plans on detailing the specific allegations in a forthcoming blog post.

In interviews, Matthew S. Schrag, an assistant professor of neurology at Vanderbilt University Medical Center, and Mike Rossner, the president of Image Data Integrity — who reviewed the allegations at The Crimson’s request — said they had merit and raised serious concerns about the integrity of the papers in question.

Shah did not respond to a request for comment for this article.

In an emailed statement Wednesday, Paul J. Anderson, the chief academic officer of Mass General Brigham, which oversees Brigham and Women’s Hospital, did not comment on the specific allegations against Shah but said the hospital “is committed to preserving the highest standards of biomedical research and fostering scientific innovation.”

“We take very seriously any questions, concerns, or allegations regarding research conducted at our hospitals and undertake a robust and confidential process to assess and respond to any claims that are brought to our attention in accordance with hospital policy and federal regulations,” Anderson wrote.

Bik said the 2022 paper contained lifted images from seven papers authored by other scientists and the websites of two scientific vendors.

The 2022 paper contains an image which Bik said was taken from imaging by R&D Systems, a company which manufactures antibodies for scientific research. An apparently identical image to the one contained in the 2022 paper appears in a 2018 R&D Systems catalog entry obtained by The Crimson.

R&D Systems is not credited for the image in the 2022 article.

Schrag said that not only were the images repeated, but that “the vendor is saying this is a different antibody than the one that the authors are saying it is.”

“This is a really unusual sort of thing that I cannot imagine how this happens by accident,” Schrag added.

Rossner added he had never seen an allegation of duplication of this sort in 22 years.

“If I were either a research integrity officer or a journal editor, I would want to see the source data,” Rossner said.

Nature Communications, which published the 2022 article, did not respond to a request for comment.

The allegation against the earliest paper — published in 2001, on which Shah is listed as first author — claims that two blots have been copied, magnified, and pasted into two other blots within the same figure.

According to Schrag, this manipulation would change the findings of the study, as it suggests production of a larger abundance of proteins.

The remaining 19 papers contain blot and image duplications within figures in the same paper or repeated from earlier papers authored by Shah, Bik alleged.

In an emailed statement Wednesday, HMS spokesperson Ekaterina D. Pesheva declined to comment on the allegations against Shah, citing a policy against commenting on individual research integrity concerns due to federal and institutional regulations.

She wrote that a research integrity officer will typically respond to concerns of research misconduct to determine whether it “warrants a formal inquiry” led by HMS and the “respective affiliated institution” under federal research misconduct regulations.

“Please note that until proven otherwise, any and all concerns remain simply concerns, and it is critical for the review process to unfold as intended,” Pesheva wrote.

Of the 18 scientific journals which published the articles questioned by Bik, spokespeople for seven (Oncogene, Biophysical Journal, PLOS One, Proceedings of the National Academy of Sciences, Cancer Biology and Therapy, Nature Scientific Reports, and Clinical Cancer Research) said they were aware of and investigating the allegations.

The other eleven journals, including Nature Communications, did not respond to requests for comment.

Correction: February 2, 2024

A previous version of this article misspelled the name of Harvard Medical School spokesperson Ekaterina D. Pesheva.

Staff writer Veronica H. Paulus can be reached on X @VeronicaHPaulus.

Staff writer Akshaya Ravi can be reached on X @akshayaravi22.


May 9, 2019

In Fraud We Trust: Top 5 Cases of Misconduct in University Research

There’s a thin line between madness and immorality. The idea of the “mad scientist” has taken on a charming, even glorified image in popular culture. From the campy portrayal of Nikola Tesla in the first issue of Superman, to Dr. Frankenstein, to Dr. Emmett Brown of Back to the Future, there’s no question Hollywood has softened the idea of the mad scientist. So, I will not paint the scientists involved in these five cases of research fraud as such. The immoral actions of these researchers didn’t just affect their own lives, but also the lives and careers of innocent students, patients, and colleagues. Academic fraud is not only a crime, it is a threat to the intellectual integrity upon which the evolution of knowledge rests. It also compromises the integrity of the institution, as any institution will take a blow to its reputation for allowing academic misconduct to go unnoticed under its watch. Here, you will find five of the most notorious cases of fraud in university research from just the last few years.

Fraud in Psychology Research

In 2011, a Dutch psychologist named Diederik Stapel was found to have committed academic fraud in a number of publications over the course of ten years, spanning three different universities: the University of Groningen, the University of Amsterdam, and Tilburg University.

Among the dozens of studies in question, most notably, he falsified data in a study which analyzed racial stereotyping and the effects of advertisements on personal identity. The journal Science published the study, which claimed that one particular race stereotyped and discriminated against another particular race more in a chaotic, messy environment than in an organized, structured one. Stapel produced another study which claimed that the average person determined employment applicants to be more competent if they had a male voice. Both studies were found to be contaminated with false, manipulated data.

Psychologists discovered Stapel’s falsified work and reported that it did not stand up to scrutiny. Moreover, they concluded that Stapel took advantage of a loose system, under which researchers were able to work in almost total secrecy and subtly manipulate data to reach their conclusions with little fear of being contested. A host of newspapers published Stapel’s research all over the world. He even oversaw and administered over a dozen doctoral theses, all of which have been rendered invalid, thereby compromising the integrity of former students’ degrees.

“I have failed as a scientist and a researcher. I feel ashamed for it and have great regret,” lamented Stapel to the New York Times.

Duke University Cancer Research Fraud

In 2010, Dr. Anil Potti left Duke University after allegations of research fraud surfaced. The fraud came in waves. First, Dr. Potti flagrantly lied about being a Rhodes Scholar to obtain hundreds of thousands of dollars in grant money from the American Cancer Society. Then, Dr. Potti was caught outright falsifying data in his research, after he discovered that one of his theories for personalized cancer treatment had been disproven. This theory was intended to justify clinical trials for over a hundred patients. Because it was disproven, the trials could no longer take place. Dr. Potti falsified data in order to continue with these trials and obtain further funding.

Over a dozen papers that he published were retracted from various medical journals, including the New England Journal of Medicine.

Dr. Potti had been working on personalized cancer treatment he hailed as “the holy grail of cancer.” There are a lot of people whose bodies fail to respond to more traditional cancer treatments. Personalized treatments, however, offer hope because patients receive treatments that are tailored to their own unique body constitution and the type of tumors they have. Because of this, patients flocked to Duke to register for trials for these drugs. They were even told there was an 80% chance that they would find the right drug for them. Patients were so excited that there was renewed hope for their cancer treatment that they trusted Dr. Potti’s trials and drugs. Sadly, many of these cancer patients suffered from unusual side effects like blood clots and damaged joints. The patients who took part in these trials filed a lawsuit against Duke, alleging that the institution had administered improper chemotherapy to participants.

Duke settled these lawsuits with the families of the patients. You can read details of the case here.

Plagiarism in Kansas

Mahesh Visvanathan and Gerald Lushington, two computer scientists from the University of Kansas, confessed to accusations of plagiarism. They copied large chunks of their research from the works of other scientists in their field. The plagiarism was so ubiquitous that even the summary statement of their presentation was lifted from another scientist’s article in a renowned journal.

Visvanathan and Lushington oversaw a program at the University of Kansas in which researchers reviewed and processed large amounts of data for DNA analysis. In this case, Visvanathan committed the plagiarism and Lushington knowingly refrained from reporting it to the university. Learn more about this case here .

Columbia University Research Misconduct

The year was 2010. Bengü Sezen was finally caught falsifying data after ten years of continuously committing fraud. Her fraudulent activity was so blatant that she even made up fake people and organizations in an effort to support her research results. Sezen was found guilty of committing over 20 acts of research misconduct, with about ten research papers recalled for retraction due to plagiarism and outright fabrication.

Sezen’s doctoral thesis was fabricated entirely in order to produce her desired results. Additionally, her misconduct greatly affected the careers of other young scientists who worked with her. These scientists dedicated a large portion of their graduate careers trying to reproduce Sezen’s desired results.

Columbia University moved to revoke her Ph.D. in chemistry. Sezen fled the country during the investigation. Read further details about this case here.

Penn State Fraud

In 2012, Craig Grimes ripped off the U.S. government to the tune of $3 million. He pleaded guilty to wire fraud, money laundering, and engaging in fraudulent statements to attain grant money.

Grimes bamboozled the National Institutes of Health (NIH) and the National Science Foundation (NSF) into granting him $1.2 million for research on gases in blood, which helps detect disorders in infants. Sadly, the U.S. Attorney’s Office revealed that Grimes never carried out this research, and instead used the majority of the funds for personal expenditures. In addition to that $1.2 million, Grimes also falsified information that helped him obtain $1.9 million in grant money via the American Recovery and Reinvestment Act. Consequently, a federal judge sentenced Grimes to 41 months in prison and ordered him to pay back over $660,000 to Penn State, the NIH, and the NSF.

Check out the details about this case here .


Stanford University’s president investigated over research misconduct allegations

Thursday December 1, 2022 10:46 am

Betty Márquez Rosales

Stanford University’s president, Marc Tessier-Lavigne, is the focus of an investigation alleging multiple manipulated images were included in at least four neurobiology papers that he co-authored.

The investigation was announced soon after The Daily, the university student newspaper, reported the allegations, which have been raised repeatedly over several years and most recently highlighted by Elizabeth Bik, a biologist who also investigates science misconduct.

The university’s Board of Trustees, which Tessier-Lavigne is a member of, is overseeing the investigation, but a Stanford spokeswoman confirmed that he “will not be involved in the Board of Trustees’ oversight of the review,” according to The Chronicle of Higher Education.

In 2015, Tessier-Lavigne submitted corrections to Science, where two of the papers in question were published. Science, however, did not publish them “due to an error on our part,” the Chronicle confirmed.

The timeline for the investigation remains unclear.

Retraction Watch

Tracking retractions as a window into the scientific process

A U.S. federal science watchdog made just three findings of misconduct in 2021. We asked them why.

Retraction Watch readers are likely familiar with the U.S. Office of Research Integrity (ORI), the agency that oversees institutional investigations into misconduct in research funded by the NIH, as well as focusing on education programs.

Earlier this month, ORI released data on its case closures dating back to 2006. We’ve charted those data in the graphics below. In 2021, ORI made just 3 findings of misconduct, a drop from 10 — roughly the average over the past 15 years — in 2020. Such cases can take years.

As the first chart makes clear, a similar dip in ORI findings of misconduct occurred in 2016. That was then-director Kathy Partin’s first year in the role, and a time of some turmoil at the agency. In an interview with us then, Partin referred multiple times to the agency being short-staffed. Partin was removed from the post in 2017 and became intramural research integrity officer at the NIH in 2018.

ORI — as has often been the case over the past two decades — is once again without a permanent director. The most recent permanent director, Elisabeth (Lis) Handley, became Principal Deputy Assistant Secretary for Health in the Office of the Assistant Secretary for Health in July 2021.

We asked ORI to explain what’s behind the figures. A spokesperson responded on their behalf.

Data: ORI; graphic by Retraction Watch

What is ORI’s explanation for the fact that there were so few findings in 2021?

After ORI conducts a thorough oversight review for cases in which the institution has submitted its final investigation report, ORI may or may not concur with the institutional findings (that research misconduct occurred or did not occur). ORI also must decide whether to pursue or decline to pursue (DTP) separate administrative actions. ORI may conclude that the misconduct lacks significance (e.g., no published papers or grants) or that PHS funds were not involved and closes the case as a DTP. ORI’s DTP closure of a case is not an exoneration of the respondent, and an institution can implement its own administrative actions based on its determination of the respondent’s research, scientific, or professional misconduct. In CY 2021, ORI closed 41 cases (Finding of Research Misconduct, No Finding of Research Misconduct, and DTP), compared to 48 in CY 2020 (Finding of Research Misconduct, No Finding of Research Misconduct, and DTP).

Per ORI, a declined to pursue (DTP) closure “involves a case in which an institution found that research misconduct occurred and may implement administrative actions against the respondent, but during its oversight review, ORI determined that a separate PHS finding of research misconduct was not warranted.” A “no-misconduct closure involves a case in which the institution conducted an investigation and determined that research misconduct did not occur. Based on a preponderance of the evidence during its oversight review, ORI concurred with the institution’s determination.” And an accession “involves a case that was resolved during the assessment or inquiry stage of the institutional proceeding. Generally, during its assessment or inquiry into the allegation(s), the institution determined that there was insufficient evidence to warrant proceeding to an investigation. Based on its subsequent review of the institution’s assessment or inquiry report, ORI concurred with the institution.” Accessions include cases in which ORI decided the case was outside of its jurisdiction.

What is ORI’s explanation for why accessions nearly doubled, but findings declined by more than half?

As nearly every labor sector has experienced, institutional workflows were altered over the past two years of COVID-related building closures and staffing issues. These closures in part affected the ability of some institutions to complete their proceedings within the time limitations as specified in the federal regulations. Although the number of allegations received over that time has changed little, the delays in receiving completed institutional reports meant that ORI could focus on the full range of potential accession closures. ORI notes that some allegations may involve many published papers or grant applications with multiple respondents from various institutions, further enumerating the complexity of a case and completing its review. The number of accessions for any calendar year does not necessarily reflect the year in which ORI received the allegation, when the institution started or completed its research misconduct proceeding, or when ORI initiated its oversight review. Generally, an accession closure involves an institutional proceeding that did not progress to an investigation, and ORI’s oversight review concurred with the institution’s determination that there was insufficient evidence to warrant proceeding to an investigation. In other cases, ORI may not have jurisdiction (does not involve PHS funded-research or is outside the 42 C.F.R. Part 93 definition of research misconduct). ORI would close such a case while the institution proceeds to an investigation under its own (or other funding agency’s) authority and relevant regulations. ORI closed 52 accessions in CY 2021 after thorough oversight review of the associated allegations and institutional outcomes. The increase in accession closures reflects tireless work carried out at ORI and by institutions.

Is ORI concerned about how long cases typically take to be adjudicated?

ORI recognizes the importance of and is focused on fully addressing allegations of potential research misconduct in the most effective and efficient way possible in accordance with 42 C.F.R. Part 93. ORI also recognizes that ensuring due process and a full, fair, and independent examination of allegations is in the best interest of all involved. It is important to remember that institutional processes take time. Sometimes the investigation must be expanded in scope to consider other possible research misconduct committed by the same or additional respondents or to examine additional papers and grant applications that were not part of the initial allegations. A thorough oversight review, which ORI undertakes when the institutions complete their work, also takes time. ORI hopes to expand its use of technology for file submission, reporting of allegations, and information processing to improve overall processes, efficiencies, and case closure rates in the coming years.

22 thoughts on “A U.S. federal science watchdog made just three findings of misconduct in 2021. We asked them why.”

An example of the type of thing ORI does not consider misconduct, is self-plagiarism, such as the case I reported here… https://psblab.org/?p=611 Essentially, according to ORI it’s perfectly OK to just publish the exact same data multiple times across different journals, thus gaming the metrics system by which all academics are judged. 42 C.F.R. Part 93.103 needs to be rewritten!

Clearly ORI needs more funding and more staff. I am wondering if some sort of a tax on institutions receiving NIH grants could be levied to fund it?

Even cases that have been resolved by the DOJ are still pending at ORI. One example would be the Sam W. Lee case (misconduct in grant proposals) which was settled with the DOJ but there is still no finding of misconduct from ORI. Another case that I have been waiting to see resolved is the Xianglin Shi/Zhuo Zhang case at the University of Kentucky which will be interesting reading because to my knowledge ORI have never found two respondents to be responsible for the same research misconduct.

Scotus (re the interesting funding idea): ORI was (is?) actually funded out of a fixed percentage of the annual NIH budget, nice because that’s generally politically immunized. But irrespective of congressional intent, ORI is administratively within – and its budget controlled by – OASH (Office of the Assistant Secretary for Health) . . . . which is itself separately at the mercy of the congressional appropriation process. So OASH controls ORI spending, and you can figure out the rest!

Having read the spokesperson’s comments, MEGO (My Eyes Glaze Over).

This RW story is based on the ORI spokesperson’s account, so one wouldn’t think that this had anything to do with the fact that -in its infinite wisdom (and just before the pandemic) – HHS downgraded ORI’s facilities by moving out of its 7th floor space down to the 2nd floor, eliminating and replacing individual offices with open carrels in that process. (Or so I was told then and had asked RW to look into at the time.)

Hard enough to work on confidential files, and d**n near impossible to review physical case evidence which must be secured in the file from. OK, so let’s add Covid to the mix, and it is not hard to see how the geniuses at HHS really screwed over ORI investigations. The facts are easier to understand than the spokesperson’s fine explanation

Fascinating. Thank you for the insight into the weaponization of open-plan offices.

I had heard of the idea of open offices before I left in 2013, but no one then thought it would be implemented by HHS since it was deemed so crazy and unworkable. (BTW: for clarification, my “file from” is ‘iPadese’ for “file room”.). But this had to be a short sighted decision from upstairs at HHS, fully independently from the troops doing investigations.

Given that it was made during the Trump administration, I think there’s a good chance it was done for the sole purpose of frustrating and demoralizing government workers.

Honestly the shocking thing to me about this is the idea that anybody in the government not in senior leadership still had individual offices.

It’s been almost a decade since I left federal service but at the time the cubicles were disappearing in favor of a completely open office, and there was serious talk of hot-desking.

Jake, what do you mean by “hot-desking” and would that in an open environment protect confidential phone conversations or the need for spreading across your desk (and complete office) the original physical evidence in reviewing these cases? Would a “hot desk” protect the scientist-investigator from even a less-than-clever respondent’s lawyer impeaching their credibility in an appeals hearing: “So Dr, how can we be sure my client’s data hasn’t been altered, since he swears those are not accurate”? Finally (and I don’t know the answer to this question), do the professional scientific staff that the NIH recruits work in cubicles, and at what GS level?

Hot-desking is where nobody has an assigned desk. First-come, first-serve at the start of every day. It could be combined with open-plan, cubicles, OR even private offices, but let’s face it, it’s most likely to be combined with open plan. During the workday, it’s no less private (could anything get LESS private than open-plan?), but the lack of assigned spaces means that you can’t just lock up your desk drawer or filing cabinet at the end of the day. I have no idea where confidential materials would be stored overnight. Maybe take them home? Yeah, sounds good, I can’t see anything going wrong…

Thanks Adele, I had not heard that term, which describes a come-and-go, pick-up-and-leave, portable work space. I don’t know how they have managed to make things work in the ‘new’ ORI facilities, but at my time (1993-2013) we (all 8-10 of us) worked on multiple case files at one time, confidential records spread, strewn, and stashed all over our individual secure and locked office spaces. I was told the only individual space was the computer forensics room we established. I can fully understand why the HHS spokesperson was unnamed. RW should look into who pushed those decisions downtown.

NSF moved into a new building in 2018. The initial plan was for open office but that was nixed, the argument being that program officers needed privacy when conducting panels, telephone calls with PIs, etc. The support/administrative staff, however, were put into cubicles. And – and I’m not making this up – low grade levels got cubicles with 66” barriers, high grade levels got 80” barriers.

Can somebody explain how come Fazlul Sarkar, one of the most disgraced “scientists” due to the extent of his research misconduct and retractions, cannot be found in the ORI summaries? At the time his papers were flagged, he had at least 4 R01s and also DOD funding. No investigation of Sarkar from ORI? No punishment for the millions of dollars that were wasted in fraudulent research? No accountability? How is this possible? Something doesn’t add up.

http://retractionwatch.com/2020/08/07/cancer-researcher-hit-with-10-year-ban-on-federal-us-funding-for-nearly-100-faked-images/

Wang was initially Sarkar’s PhD student and then he became his postdoc. Why was he targeted by ORI and not the advisor? The PI of the R01 and several DOD grants was Sarkar, and Zhang was not a co-author in many of the retracted papers. I don’t believe Zhang was held responsible by the investigation at Wayne, only Sarkar. It seems that Sarkar got away with the crime and the student paid the price. All very strange.

I spoke with a staffer with the House Investigations and Oversight Committee on Science, Space, and Technology late in 2021 and while he didn’t share any details, my impression was that they might be working on legislation aimed at addressing some of this and on research integrity in general.

In my discussion with him, I shared a list of ideas that might help with research funded by NIH and other US agencies. As I’m not a researcher (which he knew), the list may be misguided, but here it is:

More clear-cut guidance from the ORI about ethics in publishing. Perhaps adopt these as a code of ethics: https://www.nature.com/nature-portfolio/editorial-policies . While this may only be enforced on NIH-funded papers, having this as US policy would nudge US institutions (at least) to follow suit.

Require research to be published in open-access journals; public pays for it, public should get access

Require data retention for 10 years with a custodian (can be institution or for-profit data warehouse) pre-identified in grant requests and published paper

Require data transparency – with anonymization for patient data

Agreement that funded researchers must submit to audit or examination upon request, or grant money is clawed back

Whistleblower protections in writing before grants awarded

Perhaps adopt the “Bik scale” https://scienceintegritydigest.com/2020/01/08/oops-i-did-it-again/

In my experience working on ORI images with Journals, an institutional data retention requirement (by the funders such as the NIH) would be a key first step. But start with the key motivation in ‘science.’ That of course is “retraction” (of occasional discussion by RW watchers), and that solely is the purview of academic/research institutions and (IMHO) not a call for government. But a funding requirement for data retention would make the key step much easier for Journals.

Specifically, Journals can already exercise common sense (and one in their own self interest), by simply requiring the corresponding author to accept, pre-publication, an immediate retraction ‘for cause’, namely if 1) a legitimate question is raised in post publication peer review and 2) the data is then found to be ‘missing.’ No debate about the results, easy-peasey, and all within academic prerogatives. If the journals stepped up to the plate, 70% of ORI’s investigative work (that involving images cases) would evaporate.

Such an institutional data retention requirement by funders would facilitate what publishers can already be doing to protect their investment and inspire coauthors to review the primary data.

The NIH has a new policy on data management that will go into effect in 2023

https://grants.nih.gov/grants/guide/notice-files/NOT-OD-21-013.html

The policy will require “storing data of sufficient quality to validate and replicate scientific findings” with an expectation that data will be digital or digitized.

Use of a data management system would establish the provenance of data and make it much simpler to identify fraud. Based on the amount of gel and western blot fraud I can only imagine how many bogus graphs and tables of data the fraudsters are churning out.

There are literally hundreds, if not thousands, of fake, fraudulent, or otherwise “misconducted” research papers published every year. Everybody knows this. It is also clear that many of these cases are extremely obvious, even if others might be harder to identify. The ORI finds three (3) cases in a whole year… That seems like an institution that has no reason to exist if it can’t work more effectively than that.

I suggest you (re?)read the federal regulations defining the limits of ORI’s jurisdiction and authority.

(Indulge my aggressive mock cynicism here:) Historically, the ORI was created to sweep up after the annual NIH appropriation funding parade passed each year, much to the then dismay of the academic research community. Are you saying it is ORI’s (and government’s) task to police failures to take the really simple steps that academics, research institutions, and journals could painlessly and easily implement to ensure their standards keep up with research practices in the digital age?

In addition, ORI lost last year one of its top (for 12 years) scientist-investigators, described by the former ORI Director as having “brought incredible tenacity, a willingness to teach others and to share her knowledge, strategic understanding of the importance of working with others like our Office of General Counsel and RIOs on the successful outcome of cases, and a keen and strong intellect to our work. The outcomes she achieved with her cases are something I wish the public knew about public servants like her. She fought hard to make sure that biomedical research results can be counted upon, by painstakingly chasing down fabrication, falsification and plagiarism in hundreds of cases across multiple disciplines and with thousands and thousands of images.” https://ori.hhs.gov/blog/personnel-announcement-ori-director

Thanks, Anne.


Deceiving scientific research, misconduct events are possibly a more common practice than foreseen

Alonzo Alfaro-Núñez

1 Department of Clinical Biochemistry, Naestved Hospital, Ringstedgade 57a, 4700 Naestved, Denmark

2 Section for Evolutionary Genomics, GLOBE Institute, University of Copenhagen, Øster Farimagsgade 5, 1353 Copenhagen K, Denmark

Associated Data

Not applicable.

Today, scientists and academic researchers experience enormous pressure to publish innovative and ground-breaking results in prestigious journals. This pressure may distort the general understanding of how scientific research should be conducted: the basic rules of transparency, duplication of data and co-authorship rights might be compromised. As a result, acts of misconduct may occur more frequently than foreseen, since these experiences are rarely shared or discussed openly among researchers.

While there are some concerns about the health and transparency implications of such normalised pressure on researchers, there is a general acceptance that researchers must put up with it in order to survive in the competitive world of science. This is even more the case for junior and mid-senior researchers who have recently started their adventure into the universe of independent research. Only the slightest fraction manages to endure, after many years of furious and cruel rivalry, to obtain a long-term or, even less probably, a permanent position. There is a vicious circle: an excellent publication record is needed in order to obtain research funding, but how can one produce pioneering research during these first years without funding? Many may argue that this is a necessary process to ensure good-quality scientific investigation. Possibly, but perseverance and resilience may not be the only values needed when rejections are received consecutively for years.

There is a general culture in which scientists rarely share previous bad experiences, particularly if they were associated with misconduct, as these may not be considered a relevant or hot topic by readers in the scientific community. In what follows, a recent misconduct experience is shared, along with a few additional reflections and suggestions on this topic, in the hope that other researchers might be spared unnecessary and unpleasant times.

Scientists are under great pressure to publish not only high-quality research, but also a larger number of publications, the more the merrier, within the first years of their career in order to survive in the competitive world of science. This pressure might push young, less experienced researchers to take “shortcuts” that may in turn lead to misconduct. The aim of this article is to report a case of misconduct not just to the concerned stakeholders, but also to the research community as a whole, in the hope that other researchers might avoid similar experiences. Moreover, some basic recommendations, based on the existing literature and the present experience, are shared as a reminder of the basic rules of transparency, duplication of data and authorship rights, to help prevent acts of misconduct.

Welcoming collaboration

During the first months of 2021, already in the second year of the COVID-19 pandemic, with most research institutes and labs in Europe and all over the world still in lockdown [1], I received an email from a young researcher overseas. This young fellow is based in Bangladesh, South Asia, a country with which I had never collaborated before. He was interested in a potential collaboration, had many ideas, and proved to be a very energetic person, writing to me on a daily basis and even several times a day during the first weeks.

There were obviously some suspicions about the nature of this collaboration, but a basic background check was done, and this fellow seemed to be legitimate. Thus, after a few weeks of discussing research ideas back and forth, I welcomed the collaboration. Thereafter, for the first few months many ideas were elaborated and discussed, and so we began to draft two review manuscripts simultaneously. In no time, it felt like a potentially long-standing collaboration was born. However, it also required additional time because of the linguistic and cultural barrier. It appeared that sometimes the main message was getting lost in translation, and this was reflected in the text of the various manuscript versions. We repeatedly discussed the importance of transparency, the correct use of previously published data and the general rules of authorship and citation, especially when producing a new review document. Nevertheless, these errors were corrected, he assured me that he fully understood, and I trusted him.

After some time, enthusiasm started to decline and the highly motivated collaborator started to rush to complete the work regardless of the quality, especially as a third manuscript was now also in play. I was not willing to sacrifice quality, so I started using more of my personal time to complete the different manuscripts; I felt committed. After six months or so, the first of the three manuscripts was ready, and it was submitted to a high-impact peer-reviewed journal, for a special issue on a topic to which I had been invited months earlier. A few months later, the second manuscript followed the same steps.

By the middle of April 2022, the first of the manuscripts had just been accepted, the second one was already in its second round of review, and the third and last of the manuscripts was ready for submission. I cannot deny the satisfaction I felt at a good job properly done in record time (by my personal standards).

Deceptive surprise

In the last hours before submitting our final manuscript, the mandatory final inspection was done. However, I noticed something odd: two new citations had been added at the last minute, a change I had not approved. Even more curious, the two citations had the new collaborator’s name on them. Immediately, I searched for the two mysterious documents, which turned out to be a book chapter and another peer-reviewed publication. To my surprise, the titles of these two new works were very similar, indeed nearly identical, to the topic we had just finished, and his name appeared as the first author. Neither document was open access, and both had recently been published, one of them less than a week earlier. Furthermore, our manuscript, the same document I was supposed to submit that same day, had six figures and four tables, all generated by our collaborative work. The book chapter had exactly the same figures and tables, just in a different order, and the data and content were nearly identical. The wording of the text was different, and there were also some other co-authors from his region, but the content and underlying idea were the same.

Over the next hours, I went back to the other two manuscripts. My fears were confirmed: my new collaborator had been systematically committing fraud, replicating our manuscripts with the same data and publishing them on his own, using my very ideas and sentences.

I confronted him; I wanted an explanation, a reason for these actions. I copied all the other co-authors on these communications. The three manuscripts had been built on international collaboration in which other parties had actively participated, and now we were all compromised. His first reaction was that he had not been aware that this was an illegal action, and then, silence. No satisfactory answer was ever received and, more importantly, some of the other co-authors seemed neither to care nor to be surprised.

The aftermath of deception

Over the following days, I wrote several emails describing the misconduct to the editors of the different journals, to the preprint services and especially to the main affiliations of this fraudulent person. The two manuscripts were withdrawn from the respective journals right away. Together with the third manuscript, none of the documents will ever be published. There is a long history and documentation showing that withdrawals and retractions of scientific manuscripts may be the most relevant form of silently reporting scientific misconduct [ 2 , 3 ], and now I was part of it. Editors from the journals and publishing houses where the duplicated documents had been published responded that they would investigate the case. However, after several months of waiting, and despite multiple complaint letters providing all the evidence of the misconduct, no official sanctions have been taken by any of the journals, and the documents remain available online. Editors have the responsibility to pursue scientific misconduct in submitted or published manuscripts; however, editors are not responsible for conducting investigations or deciding whether scientific misconduct occurred [ 4 ].

The response from the preprint services was clear and conclusive: regardless of the evidence provided, documents published online as preprints cannot and will not be removed. Our names will now remain associated with this person for posterity, another wonderful discovery. The release of early results as preprints, without peer review, is an old and well-known concern [ 5 – 7 ]. For the last few years I had been in favour of the early release of preprints, but this experience has made me reconsider and change this position entirely. I find it unacceptable that, even when given all the evidence of research misconduct, fraud and especially duplication of data, most preprint services do not allow a preprint to be retracted.

As for the consequences or sanctions imposed on this “researcher” by his own affiliated institutions, they remain unknown, as no reply has been received so far. Additionally, some of his personal collaborators, who had been included as co-authors during the editing of the manuscripts because it was claimed they had “intellectually contributed” to the study, contacted me during the first weeks after the withdrawal. These collaborators were unhappy with the decision and complained, asking: “Was it really necessary to retract the documents entirely, in particular one manuscript already accepted and a second one in review? Why was this decision not put to a vote among the co-authors?” They did not consider the misconduct a sufficient reason for withdrawing and claimed, “It had been a rushed and wrong decision”. The answer was simple: it was a clear act of research misconduct, the data had been duplicated and misused, and my decision could not be clouded by the grief of losing three publications. Besides, I was the last and corresponding author of all three manuscripts, and thus the responsibility and final decision rested with me. Furthermore, as a curious additional detail, all the editors associated with the journals where the two duplicated manuscripts were published are from the same region as this person. Taken together, these facts lead me to conclude that misconduct may be relatively more common in some other parts of the world, and that research culture may play an important role in these practices, but we are still afraid to discuss it [ 8 ]. There are no rigorous or systematic controls to prevent a single person from manipulating the same datasets, duplicating them with slight modifications to the text, and publishing them in different journals, especially if the time between submissions is minimal. There are thousands of journals, with many more thousands of editors, on countless online platforms. Decisions over whether to retract or modify a study are more likely to take years than months; during this time the study could harmfully misinform [ 9 ] and damage the reputation of researchers [ 3 ], if any sanction is taken at all in the end [ 10 ]. On this basis, the author who duplicated our work and published it on his own may simply get away with it: two extra fraudulent copy-and-paste publications and zero consequences.

Hundreds of hours of work and nearly a year of effort were lost in an instant. Like many others, I am seeking to establish myself as an independent researcher producing good science, and I believe I work and interact with researchers who share similar values of honesty, openness and accountability. Yet every aspect of science, from the framing of a research idea to the publication of a manuscript, is susceptible to influences that can lead to misconduct [ 11 ]. Having withdrawn three manuscripts at once, all now associated with misconduct, my research colleagues and I will suffer the consequences of the current academic culture of “publish or perish” [ 12 ].

Recommendations to avoid unpleasant research events

With two official retractions at the editorial offices of two major journals and three preprints that I cannot get rid of, all associated with fraud and scientific misconduct, I am probably the least qualified person, with the least authority, to provide any feedback, let alone a short list of recommendations to prevent misconduct in research. Nevertheless, here I am. There are many general guidelines and basic rules for preventing, avoiding and reporting misconduct [ 3 , 13 – 15 ]; interested readers who want to explore this further can find them in the reference list below. Using these guidelines as a backbone, I present three main recommendations in the lines below.

The first and possibly most important recommendation, despite the experience shared above: always welcome collaboration, but only after a thorough background check. This may sound contradictory, but contemporary science is based on collaboration and the interdisciplinary combination of fields [ 16 ]; one bad experience and one “rotten apple” cannot be allowed to disrupt the development of scientific research. Of course, it is essential to be vigilant and to carefully investigate the background interests [ 9 ] and history of each new door that opens along the way. Welcome collaboration cautiously.

A second recommendation: investigate the institution and location of prospective collaborators. As stated above, the cultural background [ 8 ], and thus the location of the collaborating institutions, may play a very important role in the final outcome. Most countries across Europe and the U.S. have well-defined guidelines [ 3 , 10 ], which vary considerably on each principle and are ultimately regulated by each institution’s research policies. However, there may be regions of the world where policies and regulations concerning misconduct, and its implications and consequences, are not yet well established [ 17 ]. Avoid those.

My third recommendation, and possibly the most relevant of all: do not take for granted that other researchers are fully aware that some actions may constitute misconduct. My biggest mistake was to believe that other researchers knew or cared about the basic rules on duplication of data, transparency and respect for authorship rights. Ignorance still accounts for a large portion of research misconduct [ 11 , 18 ]. Never assume that others know and respect the broad spectrum of misconduct actions.

Two additional personal recommendations. Stay away from review manuscripts and book chapters; avoid them at all costs. Consider very carefully whether to share your results as an early-release online preprint.

Conclusions

There is much to change in the existing research environment to prevent situations like this from continuing or ever happening again. Young scientists need to be inspired and motivated to produce by example, guided by principles of integrity, ethical values, transparency and respect, and not by the current trend of rejection and extreme pressure. The pressure to secure external funds and to publish in top-tier journals stands as the most common stressor contributing to research misconduct [ 15 , 19 ]. The same research culture that creates this pressure to publish and obtain funds also contributes to the practice of silence, which leads researchers to ignore and avoid the topic of misconduct. While there is general concern, and scientific journals attempt to take situations like this seriously, there should also be a more open space to share experiences and to inform junior and even senior researchers about this kind of predatory theft of research.

Manipulation and duplication of data to inflate academic records is a desperate and shameless act, and it truly constitutes scientific misconduct and fraud. Unfortunately, misconduct in research is generally increasing [ 13 ] and ultimately accounts for the majority of withdrawals in modern scientific publications [ 20 ]. I would like to believe that even good people can do bad things under extreme pressure. Nevertheless, would this justify misconduct and fraud? Never!

Acknowledgements

Special thanks to Esther Agnete Jensen, Therese Kronevald, Stina Christensen, Aksel Skovgaard, Morten Juel, Jesper Clausager Madsen and Alonso A. Aguirre for their support and advice. The author would also like to thank the three anonymous reviewers for their comments, feedback and improvements.

Author contributions

The author read and approved the final manuscript.

Authors’ information

Web of Science Researcher ID H-2972-2019.

This research received support from the Department of Clinical Biochemistry at Naestved Hospital, Region Sjaelland.

Availability of data and materials

Declarations

The author gives full consent for publication.

The author declares no competing interests.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

A compilation of articles regarding Research Misconduct issues.

This page offers news-worthy topics for the Responsible Conduct of Research and Research Misconduct. Note: Due to the nature of web page evolution, some links may be broken.

Science stands on shaky shoulders with research misconduct

Research misconduct poisons the well of scientific literature, but finding systemic ways to change the current “publish or perish” culture will help.

July 4, 2024 Drug Discovery News (DDN) Stephanie DeMarco, PhD

I distinctly remember the day I saw a western blot band stretched, rotated, and pasted into another panel. Zoomed out, it looked like a perfectly normal blot; the imposter band sat amongst the others like it had always been there.

Sitting at a long table with the other graduate students on my training grant, I watched as our professor showed us example after example of images from published scientific papers that had been manipulated to embellish the data. I really appreciate that course and the other research integrity courses I took during my research training for teaching me and my peers how to spot bad science and what to do when we encounter it. It made me a better scientist when I was in the lab, and now, it makes me a better journalist.

When bad science infiltrates the publication record, researchers unwittingly build their own research programs around shaky science. Not only does this waste researchers’ time and money, but it affects real people’s lives.

Implementing statcheck during peer review is related to a steep decline in statistical reporting inconsistencies

June 20, 2024 PsyArXiv Preprints Michele B. Nuijten and Jelte Wicherts