Introduction to Statistics

(15 reviews)

David Lane, Rice University

Copyright Year: 2003

Publisher: David Lane

Language: English

Reviewed by Terri Torres, professor, Oregon Institute of Technology on 8/17/23

Comprehensiveness rating: 5

This author covers all the topics that would be covered in an introductory statistics course plus some. I could imagine using it for two courses at my university, which is on the quarter system. I would rather have the problem of too many topics than too few.

Content Accuracy rating: 5

Yes, Lane is both thorough and accurate.

Relevance/Longevity rating: 5

What is covered is what is usually covered in an introductory statistics book. The only additional topic I might cover, given sufficient time, is bootstrapping.

Clarity rating: 5

The book is clear and well-written. For the trickier topics, simulations are included to help with understanding.

Consistency rating: 5

Each topic is organized in a way that is consistent with the previous one.

Modularity rating: 5

The text is organized in a way that easily enables navigation.

Organization/Structure/Flow rating: 5

The text is organized like most statistics texts.

Interface rating: 5

Easy navigation.

Grammatical Errors rating: 5

I didn't see any grammatical errors.

Cultural Relevance rating: 5

Nothing is included that is culturally insensitive.

The videos that accompany this text are short and easy to watch and understand. Videos should be long enough to teach, but not so long that they are tiresome. This text includes almost everything (videos, simulations, and case studies), all nicely organized in one spot. In addition, Lane has promised to send an instructor's manual and slide deck.

Reviewed by Professor Sandberg, Professor, Framingham State University on 6/29/21

This text covers all the usual topics in an Introduction to Statistics for college students. In addition, it has some additional topics that are useful.

I did not find any errors.

Some of the examples are dated, and the frequent use of male/female examples needs updating in terms of current gender splits.

I found it was easy to read and understand and I expect that students would also find the writing clear and the explanations accessible.

Even with different authors for different chapters, the writing is consistent.

The text is well organized into sections making it easy to assign individual topics and sections.

The topics are presented in the usual order. Regression comes later in the text, but opinions differ about whether to present it early with descriptive statistics for bivariate data or later with inferential statistics.

I had no problem navigating the text online.

The writing is grammatically correct.

I saw no issues that would be offensive.

I did like this text. It seems like it would be a good choice for most introductory statistics courses. I liked that the Monty Hall problem was included in the probability section. The author offers to provide an instructor's manual, PowerPoint slides and additional questions. These additional resources are very helpful and not always available with online OER texts.

Reviewed by Emilio Vazquez, Associate Professor, Trine University on 4/23/21

This appears to be an excellent textbook for an Introductory Course in Statistics. It covers subjects in enough depth to fulfill the needs of a beginner in Statistics work yet is not so complex as to be overwhelming.

I found no errors in their discussions. I did not work out all of the questions and answers, but my sampling did not reveal any errors.

Some of the examples may need updating as time passes, but they are still relevant at this time.

This is a statistics text, so it is a little dry. I found that the derivation of some of the formulas was not explained. However, the background is there to allow the instructor to derive these in class if desired.

The text is consistent throughout, using the same verbiage in various sections.

The text does lend itself to reasonable reading assignments. For example, the chapter on Summarizing Distributions (Chapter 3) covers Central Tendency and its associated components in an easy 20 pages, with Measures of Variability making up most of the rest of the chapter and covering approximately another 20 pages. Exercises are available at the end of each chapter, making it easy for the instructor to assign reading and exercises to be discussed in class.

The textbook flows easily from Descriptive to Inferential Statistics, with chapters on Sampling and Estimation preceding chapters on hypothesis testing.

I had no problems with navigation.

All textbooks have a few errors, but certainly nothing glaring or making the text difficult.

I saw no issues, and I am part of a cultural minority in the US.

Overall, I found this to be an excellent in-depth overview of Statistical Theory, Concepts and Analysis. The length of the textbook appears to be more than adequate for a one-semester course in Introduction to Statistics. As I no longer teach a full statistics course but simply a few lectures as part of our Research Curriculum, I am recommending this book to my students as a good reference, especially as it is available online and in Open Access.

Reviewed by Audrey Hickert, Assistant Professor, Southern Illinois University Carbondale on 3/29/21

All of the major topics of an introductory-level statistics course for social science are covered. Background areas include levels of measurement and research design basics. Descriptive statistics include all major measures of central tendency and dispersion/variation. Building blocks for inferential statistics include sampling distributions, the standard normal curve (z scores), and hypothesis testing sections. Inferential statistics include how to calculate confidence intervals, as well as how to conduct one-sample tests of the population mean (Z- and t-tests), two-sample tests of the difference in population means (Z- and t-tests), the chi-square test of independence, correlation, and regression. The book doesn't include full probability distribution tables (e.g., t or Z), but those can be easily found online in many places.

I did not find any errors or issues of inaccuracy. When a particular method or practice is debated in the field, the authors acknowledge it (and provide citations in some circumstances).

Relevance/Longevity rating: 4

Basic statistics are standard, so the core information will remain relevant in perpetuity. Some of the examples are dated (e.g., salaries from 1999), but not problematic.

Clarity rating: 4

All of the key terms, formulas, and logic for statistical tests are clearly explained. The book sometimes uses different notation than other entry-level books. For example, the variance formula uses "M" for mean, rather than x-bar.

The explanations are consistent and build from and relate to corresponding sections that are listed in each unit.

Modularity is a strength of this text in both the PDF and interactive online format. Students can easily navigate to the necessary sections and each starts with a “Prerequisites” list of other sections in the book for those who need the additional background material. Instructors could easily compile concise sub-sections of the book for readings.

The presentation of topics differs somewhat from the standard introductory social science statistics textbooks I have used before. However, the modularity allows the instructor and student to work through the discrete sections in the desired order.

Interface rating: 4

For the most part, the display of all images/charts is good and navigation is straightforward. One concern is that the organization of the Table of Contents does not exactly match the organizational outline at the start of each chapter in the PDF version. For example, sometimes there are more detailed sub-headings at the start of a chapter and occasionally slightly different section headings/titles. There are also inconsistencies in section listings at the start of chapters vs. the start of sub-sections.

The text is easy to read and free from any obvious grammatical errors.

Although some of the examples are outdated, I did not come across any that were offensive. One example of an outdated reference is using descriptive data on “Men per 100 Women” in U.S. cities as “useful if we are looking for an opposite-sex partner”.

This is a good introductory-level statistics textbook if you have a course with students who may be intimidated by longer texts with more detailed information. Just the core basics are provided here, and it is easy to select the sections you need. It is a good text if you plan to supplement with an array of your own materials (lectures, practice, etc.) that are specifically tailored to your discipline (e.g., criminal justice and criminology). Be advised that some formulas use different notation than other standard texts, so you will need to point that out to students if they differ from your lectures or assessment materials.

Reviewed by Shahar Boneh, Professor, Metropolitan State University of Denver on 3/26/21, updated 4/22/21

The textbook is indeed quite comprehensive. It can accommodate any style of introductory statistics course.

The text seems to be statistically accurate.

It is a little too extensive, which requires instructors to cover it selectively and has the potential to confuse the students.

It is written clearly.

Consistency rating: 4

The terminology is fairly consistent. There is room for some improvement.

By the nature of the subject, the topics have to be presented in a sequential and coherent order. However, the book breaks things down quite effectively.

Organization/Structure/Flow rating: 3

Some of the topics are interleaved and not presented in the order I would like to cover them.

Good interface.

The grammar is ok.

The book seems to be culturally neutral, and not offensive in any way.

I really liked the simulations that go with the book. Parts of the book are a little too advanced for students who are learning statistics for the first time.

Reviewed by Julie Gray, Adjunct Assistant Professor, University of Texas at Arlington on 2/26/21

The textbook is for beginner-level students. The concept development is appropriate; there is always room to grow to a higher level, but for an introduction, the basics are what is needed. This is a well-thought-through OER textbook project by Dr. Lane and colleagues. It is obvious that several iterations have only made it better.

I found all the material accurate.

Essentially, statistical concepts at the introductory level are accepted as universal. This suggests that the relevance of this textbook will continue for a long time.

The book is well written for introducing beginners to statistical concepts. The figures, tables, and animated examples reinforce the clarity of the written text.

Yes, the information is consistent; material introduced in early chapters ties in well with later chapters that build on it and add more understanding of the topic.

Modularity rating: 4

The book is well-written with attention to modularity where possible. Due to the nature of statistics, that is not always possible. The content is presented in the order that I usually teach these concepts.

The organization of the book is good. I particularly like the sample lecture slide presentations and the problem set with solutions for use in quizzes and exams. These are available by writing to the author. It is wonderful to have access to these helpful resources for instructors to use in preparation.

I did not find any interface issues.

The book is well written. In my reading I did not notice grammatical errors.

For this subject and in the examples given, I did not notice any cultural issues.

For the field of social work where qualitative data is as common as quantitative, the importance of giving students the rationale or the motivation to learn the quantitative side is understated. To use this text as an introductory statistics OER textbook in a social work curriculum, the instructor will want to bring in field-relevant examples to engage and motivate students. The field needs data-driven decision making and evidence-based practices to become more ubiquitous than not. Preparing future social workers by teaching introductory statistics is essential to meet that goal.

Reviewed by Mamata Marme, Assistant Professor, Augustana College on 6/25/19

Comprehensiveness rating: 4

This textbook offers a fairly comprehensive summary of what should be discussed in an introductory course in Statistics. The statistical literacy exercises are particularly interesting. It would be helpful to have the statistical tables attached in the same package, even though they are available online.

The terminology and notation used in the textbook are pretty standard. The content is accurate.

The statistical literacy examples are up to date but will need to be updated fairly regularly to keep the textbook fresh. The applications within the chapter are accessible and can be used fairly easily over a couple of editions.

The textbook does not necessarily explain the derivation of some of the formulae, and this will need to be augmented by the instructor in class discussion. What is beneficial is that each topic is discussed in multiple ways, using graphs, calculations, and explanations of the results. Statistics textbooks have to cover a wide variety of topics with a fair amount of depth. To do this concisely is difficult. There is a fine line between being concise and clear, which this textbook does well, and being somewhat dry. It may be up to the instructor to bring case studies into the readings as we go through the topics rather than wait until the end of the chapter.

The textbook uses standard notation and terminology. The heading section of each chapter is closely tied to topics that are covered. The end of chapter problems and the statistical literacy applications are closely tied to the material covered.

The authors have done a good job treating each chapter as if it stands alone. The lack of connection to past references may create a sense of disconnect between the topics discussed.

The text's "modularity" does make the flow of the material a little disconnected. It would be better if there were accountability for what a student should already have learnt in a different section. The earlier material is easy to find but not consistently referred to in the text.

I had no problem with the interface. The online version is more visually interesting than the pdf version.

I did not see any grammatical errors.

Cultural Relevance rating: 4

I am not sure how to evaluate this. The examples are mostly based on the American experience, and the data alluded to are mostly domestic. However, I am not sure whether that creates a problem in understanding the methodology.

Overall, this textbook will cover most of the topics in a survey of statistics course.

Reviewed by Alexandra Verkhovtseva, Professor, Anoka-Ramsey Community College on 6/3/19

This is a comprehensive enough text, considering that it is not easy to create a comprehensive statistics textbook. It is suitable for an introductory statistics course for non-math majors. It contains twenty-one chapters, covering the wide range of intro stats topics (and some more), plus the case studies and the glossary.

The content is pretty accurate, I did not find any biases or errors.

The book contains fairly recent data presented in the form of exercises, examples and applications. The topics are up-to-date, and appropriate technology is used for examples, applications, and case studies.

The language is simple and clear, which is a good thing, since students are usually scared of this class, and instructors are looking for something to put them at ease. I would, however, try to make it a little more interesting, exciting, or maybe even funny.

Consistency is good, the book has a great structure. I like how each chapter has prerequisites and learner outcomes, this gives students a good idea of what to expect. Material in this book is covered in good detail.

The text can be easily divided into sub-sections, some of which can be omitted if needed. The chapter on regression is covered towards the end (chapter 14), but part of it can be covered sooner in the course.

The book contains well-organized chapters that make reading through it easy and understandable. The order of chapters and sections is clear and logical.

The online version has many functions and is easy to navigate. This book also comes with a PDF version. There is no distortion of images or charts. The text is clean and clear, the examples provided contain appropriate format of data presentation.

No grammatical errors found.

The text uses simple and clear language, which is helpful for non-native speakers. I would include more culturally-relevant examples and case studies. Overall, good text.

In all, this book is a good learning experience. It contains tools and techniques that are free and easy to use and also easy to modify, for both students and instructors. I very much appreciate this opportunity to use this textbook at no cost for our students.

Reviewed by Dabrina Dutcher, Assistant Professor, Bucknell University on 3/4/19

This is a reasonably thorough first-semester statistics book for most classes. It would have worked well for the general statistics courses I have taught in the past but is not as suitable for specialized introductory statistics courses for engineers or business applications. That is OK; they have separate texts for that! The only sections that feel somewhat light in terms of content are the confidence intervals and ANOVA sections. Given that these topics are often sort of crammed in at the end of many introductory classes, that might not be problematic for many instructors. It should also be pointed out that while there are a couple of chapters on probability, this book presents most formulas as "black boxes" rather than worrying about the derivation or origin of the formulas. The probability sections do not include any significant combinatorics work, which is sometimes included at this level.

I did not find any errors in the formulas presented but I did not work many end-of-chapter problems to gauge the accuracy of their answers.

There isn't much changing in the introductory stats world, so I have no concerns about the book becoming outdated rapidly. The examples and problems still feel relevant and reasonably modern. My only concern is that the statistical tools most often referenced in the book are TI-83/84-type calculators. As students increasingly buy TI-89s or TI-Nspires, these sections of the book may lose relevance faster than other parts.

Solid. The book gives a list of key terms and their definitions at the end of each chapter which is a nice feature. It also has a formula review at the end of each chapter. I can imagine that these are heavily used by students when studying! Formulas are easy to find and read and are well defined. There are a few areas that I might have found frustrating as a student. For example, the explanation for the difference in formulas for a population vs sample standard deviation is quite weak. Again, this is a book that focuses on sort of a "black-box" approach but you may have to supplement such sections for some students.

I did not detect any problems with inconsistent symbol use or switches in terminology.

Modularity rating: 3

This low rating should not be taken as an indicator of an issue with this book but would be true of virtually any statistics book. Different books still use different variable symbols even for basic calculated statistics. So trying to use a chapter of this book without some sort of symbol/variable cheat-sheet would likely be frustrating to the students.

However, I think it would be possible to skip some chapters or use the chapters in a different order without any loss of functionality.

This book uses a very standard order for the material. The chapter on regression comes later than it does in some texts, but it doesn't really matter since that chapter never seems to fit smoothly anywhere.

There are numerous end of chapter problems, some with answers, available in this book. I'm vacillating on whether these problems would be more useful if they were distributed after each relevant section or are better clumped at the end of the whole chapter. That might be a matter of individual preference.

I did not detect any problems.

I found no errors. However, there were several sections where the punctuation seemed non-ideal. This did not affect the overall usability of the book, though.

I'm not sure how well this book would work internationally as many of the examples contain domestic (American) references. However, I did not see anything offensive or biased in the book.

Reviewed by Ilgin Sager, Assistant Professor, University of Missouri - St. Louis on 1/14/19

As the title implies, this is a brief introductory textbook. It covers the fundamentals of introductory statistics; however, it is not a comprehensive text on the subject. A teacher can use this book as the sole text of an introductory statistics course. The prose format of definitions and theorems makes theoretical concepts accessible to non-math-major students. The textbook covers all chapters required in a course at this level.

It is accurate; the subject matter in the examples is up to date and timeless, and wouldn't need to be revised in future editions. There are no errors except a few typographical ones, and there are no logic errors or incorrect explanations.

This text will remain up to date for a long time, since its examples and exercises are timeless. The information is presented clearly and simply, and the exercises help the reader follow it.

The material is presented in a clear, concise manner. The text is easily readable for the first-time statistics student.

The structure of the text is very consistent. Topics are presented with examples, followed by exercises. Problem sets are appropriate for the level of learner.

When earlier material needs to be referenced, it is easy to find. There is no trouble reading the book and finding results, and it has a consistent scheme. The book is divided very well into sections.

The text presents the information in a logical order.

The learner can easily follow the material; there are no interface problems.

There are no logic errors or incorrect explanations; the few typographical errors can simply be ignored.

Not applicable for this textbook.

Reviewed by Suhwon Lee, Associate Teaching Professor, University of Missouri on 6/19/18

This book is pretty comprehensive for being a brief introductory book. It covers all necessary content areas for an introduction to Statistics course for non-math majors. The textbook provides an effective index, plenty of exercises, review questions, and practice tests. It provides references and case studies. The glossary and index sections are very helpful for students and can be used as a great resource.

Content appears to be accurate throughout. Being an introductory book, the book is unbiased and straight to the point. The terminology is standard.

The content in the textbook is up to date. Because its contents are well structured, it will be very easy to update or change at any point in time.

The author does a great job of explaining nearly every new term or concept. The book is easy to follow, clear and concise. The graphics are good to follow. The language in the book is easily understandable. I found most instructions in the book to be very detailed and clear for students to follow.

Overall consistency is good. It is consistent in terms of terminology and framework. The writing is straightforward and standardized throughout the text and it makes reading easier.

The authors do a great job of partitioning the text and labeling sections with appropriate headings. The table of contents is well organized and easily divisible into reading sections and it can be assigned at different points within the course.

Organization/Structure/Flow rating: 4

Overall, the topics are arranged in an order that follows the natural progression of a statistics course, with some exceptions. They are addressed logically and given adequate coverage.

The text is free of any issues. There are no navigation problems nor any display issues.

The text contains no grammatical errors.

For the most part, the text is not culturally insensitive or offensive in any way. Some examples might need to cite their sources or be used differently to reflect current inclusive teaching strategies.

Overall, it's well written and a good resource as an introduction to statistical methods. Some materials may not need to be covered in a one-semester course. The various examples and quizzes can be a great resource for instructors.

Reviewed by Jenna Kowalski, Mathematics Instructor, Anoka-Ramsey Community College on 3/27/18

The text includes the introductory statistics topics covered in a college-level semester course. An effective index and glossary are included, with functional hyperlinks.

Content Accuracy rating: 3

The content of this text is accurate and error-free, based on a random sampling of various pages throughout the text. Several examples included information without formal citation, leading the reader to potential bias and discrimination. These examples should be corrected to reflect current values of inclusive teaching.

The text contains relevant information that is current and will not become outdated in the near future. The statistical formulas and calculations have been used for centuries. The examples are direct applications of the formulas and accurately assess the conceptual knowledge of the reader.

The text is very clear and direct with the language used. The jargon does require a basic mathematical and/or statistical foundation to interpret, but this foundational requirement should be met with course prerequisites and placement testing. Graphs, tables, and visual displays are clearly labeled.

The terminology and framework of the text is consistent. The hyperlinks are working effectively, and the glossary is valuable. Each chapter contains modules that begin with prerequisite information and upcoming learning objectives for mastery.

The modules are clearly defined and can be used in conjunction with other modules, or individually to exemplify a choice topic. With the prerequisite information stated, the reader understands what prior mathematical understanding is required to successfully use the module.

The topics are presented well, but I recommend placing Sampling Distributions, Advanced Graphs, and Research Design ahead of Probability in the text. I think this rearranged version of the index would better align with current Introductory Statistics texts. The structure is very organized with the prerequisite information stated and upcoming learner outcomes highlighted. Each module is well-defined.

Adding an option of returning to the previous page would be of great value to the reader. While progressing through the text systematically, this is not an issue, but when the reader chooses to skip modules and read select pages then returning to the previous state of information is not easily accessible.

No grammatical errors were found while reviewing select pages of this text at random.

Cultural Relevance rating: 3

Several examples contained data that were not formally cited. These examples need to be corrected to reflect current inclusive teaching strategies. For example, one question stated that “while men are XX times more likely to commit murder than women, …” This data should be cited, otherwise the information can be interpreted as biased and offensive.

An included solutions manual for the exercises would be valuable to educators who choose to use this text.

Reviewed by Zaki Kuruppalil, Associate Professor, Ohio University on 2/1/18

This is a comprehensive book on statistical methods, their settings, and, most importantly, the interpretation of the results. With the advent of computers and software, complex statistical analysis can be done very easily. But the challenge is knowing how to set up the case, how to set parameters (for example, confidence intervals), and what their implications are for the interpretation of the results. If not done properly, this could lead to deceptive inferences, inadvertently or purposely. This book does a great job of explaining the above using many examples and real-world case studies. If you are looking for a book to learn and apply statistical methods, this is a great one. I think the author could consider revising the title of the book to reflect the above, as it is more than just an introduction to statistics, perhaps including a phrase such as "practical guide".

The contents of the book seem accurate. Some plots and calculations were randomly selected and checked for accuracy.

The book's topics are up to date and, in my opinion, will not become obsolete in the near future. I think the smartest thing the author has done is not tying the book to any particular software such as Minitab or SPSS. Whatever the software, standard deviation is calculated the same way. The only noticeable exceptions are the Java applet used for calculating Z values on page 261 and the excerpt of SPSS analysis provided for ANOVA calculations on page 416.

The contents and examples cited are clear and explained in simple language. Data analysis and presentation of the results, including mathematical calculations and graphical explanations using charts, tables, figures, etc., are presented with clarity.

Terminology is consistent. The framework for each chapter is also consistent: each chapter begins with a set of defined topics, and each topic is divided into modules, each with a set of learning objectives and prerequisite chapters.

The textbook is divided into chapters, each further divided into modules. Each module has detailed learning objectives and lists its prerequisites. So you can extract a portion of the book and use it as a standalone to teach certain topics or as a learning guide to apply a relevant topic.

The topics are well thought out and presented in a logical fashion, as if being introduced to someone who is learning the content. However, there are some issues with the table of contents and page numbers; for example, chapter 17 starts on page 597, not 598. Also, some tables and figures do not have a number; for instance, the graph shown on page 114 is unnumbered. It would also have been better if the chapter number were included in table and figure identifiers, for example Figure 4-5. In some cases, for instance on page 109, a figure and its title are on two different pages.

No major issues. My only suggestion is that, since each chapter has several modules, some way of tracing where you currently are, such as a header, would certainly help.

Grammatical Errors rating: 4

Easy to read and phrased correctly in most cases. There are minor grammatical errors, such as missing prepositions. In some cases the author seems to have the habit of using a period after the decimal, for instance on pages 464 and 467: For X = 1, Y' = (0.425)(1) + 0.785 = 1.21. For X = 2, Y' = (0.425)(2) + 0.785 = 1.64.

However, it contains some statements (even though given as examples) that could be perceived as subjective, and the author could consider citing their sources. For example, from page 11: "Statistics include numerical facts and figures. For instance: • The largest earthquake measured 9.2 on the Richter scale. • Men are at least 10 times more likely than women to commit murder. • One in every 8 South Africans is HIV positive. • By the year 2020, there will be 15 people aged 65 and over for every new baby born."

Solutions for the exercises would be a great teaching resource to have.

Reviewed by Randy Vander Wal, Professor, The Pennsylvania State University on 2/1/18


As a text for an introductory course, standard topics are covered. It was nice to see topics such as power, sampling, research design and distribution-free methods covered, as these are often omitted in abbreviated texts. Each module introduces the topic, provides appropriate graphics, illustrations or worked examples, and concludes with many exercises. An instructor’s manual is available by contacting the author. A comprehensive glossary provides definitions for all the major terms and concepts. The case studies give examples of practical applications of statistical analyses, and many of them contain the actual raw data. Note that the online e-book provides several calculators for the essential distributions and tests. These are provided in lieu of printed tables, which are not included in the pdf. (Such tables are readily available on the web.)

The content is accurate and error free. Notation is standard and terminology is used accurately, as are the videos and verbal explanations therein. Online links work properly as do all the calculators. The text appears neutral and unbiased in subject and content.

The text achieves contemporary relevance by ending each section with a Statistical Literacy example, drawn from contemporary headlines and issues. Of course, the core topics are time proven. There is no obvious material that may become “dated”.

The text is very readable. While the pdf text may appear “sparse” because it lacks varied colored inset boxes, pictures, etc., the essential illustrations and descriptions are provided. Meanwhile, the online version of the same content appears streamlined and uncluttered, which enhances the value of the active links. Moreover, the videos provide nice short segments of “active” instruction that are clear and concise. Despite being a mathematical text, the text is not overly burdened by formulas and numbers but rather has a “readable feel”.

The terminology and symbol use are consistent throughout the text and with common use in the field. The pdf text and online version are also consistent in content, with the online e-book offering much greater functionality.

The chapters and topics may be used in a selective manner. Certain chapters have no pre-requisite chapter and in all cases, those required are listed at the beginning of each module. It would be straightforward to select portions of the text and reorganize as needed. The online version is highly modular offering students both ease of navigation and selection of topics.

Chapter topics are arranged appropriately. In an introductory statistics course, there is a logical flow given the buildup to the normal distribution, the concept of sampling distributions, confidence intervals, hypothesis testing, regression and additional parametric and non-parametric tests. The normal distribution is central to an introductory course. Necessary precursor topics are covered in this text, its use in significance and hypothesis testing follows, and more advanced topics, including multi-factor ANOVA, come thereafter.

Each chapter is structured with several modules, each beginning with pre-requisite chapter(s), learning objectives and concluding with Statistical Literacy sections providing a self-check question addressing the core concept, along with answer, followed by an extensive problem set. The clear and concise learning objectives will be of benefit to students and the course instructor. No solutions or answer key is provided to students. An instructor’s manual is available by request.

The online interface works well. In fact, I was pleasantly surprised by its options and functionality. The pdf appears somewhat sparse compared with publisher texts, lacking pictures, colored boxes, etc. But the online version has many active links providing definitions and graphic illustrations for key terms and topics. This can really facilitate learning by making such “refreshers” integral to the new material. Most sections also have short videos that are professionally done, with narration and smooth graphics. In this way, the text is interactive and flexible, offering varied tools for students. Note that the interactive e-book works on both iOS and OS X.

The text in pdf form appeared to be free of grammatical errors, as did the online version's text, graphics and videos.

This text contains no culturally insensitive or offensive content. The focus of the text is on concepts and explanation.

The text would be a great resource for students. The full content would be ambitious for a one-semester course, so such use would be unlikely. The text is clearly geared towards students with no background in statistics or calculus. The text could be used in two styles of course. For first-year students, the early chapters on graphs and distributions would be the starting point, omitting the later chapters on chi-square, transformations, distribution-free tests and effect size. Alternatively, for upper-level students the introductory chapters could be bypassed and the later chapters covered to completion.

This text adopts a descriptive style of presentation, with topics well and fully explained, much like the “For Dummies” series. Because of this, it may seem a bit “wordy”, but this can serve students well and notably complements PowerPoint slides, which are generally sparse on written content. This text could be used as the primary text for regular lectures or as a reference for a “flipped” class. The e-book videos are an enabling tool if this approach is adopted.

Reviewed by David Jabon, Associate Professor, DePaul University on 8/15/17


This text covers all the standard topics in a semester-long introductory course in statistics. It is particularly well indexed and very easy to navigate. There is a comprehensive hyperlinked glossary.

The material is completely accurate. There are no errors. The terminology is standard with one exception: in a number of places the book calls what most people call the interquartile range the "H-spread." Ideally, the term "interquartile range" would be used in place of every reference to "H-spread." "Interquartile range" is simply a better, more descriptive term for the concept it describes. It is also more commonly used nowadays.

This book came out a number of years ago, but the material is still up to date. Some more recent case studies have been added.

The writing is very clear. There are also videos for almost every section. The section on boxplots uses a lot of technical terms that I don't find very helpful for my students (hinge, H-spread, upper adjacent value).

The text is internally consistent with one exception that I noted (the use of the synonymous words "H-spread" and "interquartile range").

The textbook is broken into very short sections, almost to a fault. Each section is at most two pages long. However, at the end of each of these sections there are a few multiple-choice questions to test yourself. These questions are a very appealing feature of the text.

The organization, in particular the ordering of the topics, is rather standard with a few exceptions. Boxplots are introduced in Chapter 2, before the discussion of measures of center and dispersion. Most books introduce them as part of the discussion of summarizing data with measures of center and dispersion. Some statistics instructors may not like the way the text lumps all of the sampling distributions into a single chapter (sampling distribution of the mean, sampling distribution of the difference between means, sampling distribution of a proportion, sampling distribution of r). I have tried this approach, and I now like it, but it is a very challenging chapter for students.

The book's interface has no features that distracted me. Overall the text is very clean and spare, with no additional distracting visual elements.

The book contains no grammatical errors.

The book's cultural relevance comes out in the case studies. As of this writing there are 33 such case studies, and they cover a wide range of issues from health to racial, ethnic, and gender disparity.

Each chapter has a nice set of exercises with selected answers. The thirty-three case studies are excellent and can be supplemented with other online case studies. An instructor's manual and PowerPoint slides can be obtained by emailing the author. There are direct links to online simulations within the text. This is a very high-quality textbook in every way.

Table of Contents

- 1. Introduction

- 2. Graphing Distributions

- 3. Summarizing Distributions

- 4. Describing Bivariate Data

- 5. Probability

- 6. Research Design

- 7. Normal Distributions

- 8. Advanced Graphs

- 9. Sampling Distributions

- 10. Estimation

- 11. Logic of Hypothesis Testing

- 12. Testing Means

- 14. Regression

- 15. Analysis of Variance

- 16. Transformations

- 17. Chi Square

- 18. Distribution-Free Tests

- 19. Effect Size

- 20. Case Studies

- 21. Glossary

Ancillary Material

- Ancillary materials are available by contacting the author or publisher.

About the Book

Introduction to Statistics is a resource for learning and teaching introductory statistics. This work is in the public domain. Therefore, it can be copied and reproduced without limitation. However, we would appreciate a citation where possible. Please cite as: Online Statistics Education: A Multimedia Course of Study (http://onlinestatbook.com/). Project Leader: David M. Lane, Rice University. Instructor's manual, PowerPoint Slides, and additional questions are available.

About the Contributors

David Lane is an Associate Professor in the Departments of Psychology, Statistics, and Management at Rice University. Lane is the principal developer of this resource, although many others have made substantial contributions. This site was developed at Rice University, University of Houston-Clear Lake, and Tufts University.


What is Statistics? Importance, Scope, Limitations

- Post last modified: 30 July 2022


- Post category: Business Statistics

What is Statistics?

Statistics may be defined as the collection, presentation, analysis and interpretation of numerical data.

Statistics is a set of decision-making techniques which helps businessmen in making suitable policies from the available data. In fact, every businessman needs a sound background of statistics as well as of mathematics.

The purpose of statistics and mathematics is to manipulate, summarize and investigate data so that useful decisions can be reached.

Table of Contents

- 1 What is Statistics?

- 2 Statistics Meaning

- 3 Statistics Definition

- 4.1 Uses of Statistics in Business

- 4.2 Uses of Mathematics for Decision Making

- 4.3 Uses of Statistics in Economics

- 5.1 Condensation

- 5.2 Comparison

- 5.3 Forecast

- 5.4 Testing of hypotheses

- 5.5 Preciseness

- 5.6 Expectation

- 6.1 Importance of Statistics in Business and Industry

- 6.2 Importance in the Field of Science and Research

- 6.3 Importance in the Field of Banking

- 6.4 Importance to the State

- 6.5 Importance in planning

- 7.1 Presents facts in numerical figures

- 7.2 Presents complex facts in a simplified form

- 7.3 Studies relationship between two or more phenomena

- 7.4 Helps in the formulation of policies

- 7.5 Helps in forecasting

- 7.6 Provides techniques for testing of hypothesis

- 7.7 Provides techniques for making decisions under uncertainty

- 8.1 Statistics Suits to the Study of Quantitative Data Only

- 8.2 Statistical Results are not Exact

- 8.3 Statistics Deals with Aggregates Only

- 8.4 Statistics is Useful for Experts Only

- 8.5 Statistics does not Provide Solutions to the Problems

Statistics Meaning

The term ‘statistics’ is derived from the Latin word ‘status’, the Italian word ‘statista’ or the German word ‘statistik’.

All these words mean ‘political state’. In ancient times, states needed to collect statistical data, mainly on the number of young men who could be recruited into the army and on the total amount of land revenue that could be collected. For this reason, statistics was also called ‘Political Arithmetic’.

Statistics Definition

Statistics has been defined in different ways by different authors.

Uses of Statistics in Business Decision Making

Uses of Statistics in Business

The following are the main uses of statistics in various business activities:

- With the help of statistical methods, quantitative information about production, sale, purchase, finance, etc. can be obtained. This type of information helps businessmen in formulating suitable policies.

- By using the techniques of time series analysis which are based on statistical methods, the businessman can predict the effect of a large number of variables with a fair degree of accuracy.

- In business decision theory, statistical techniques are used in taking business decisions, which helps a business operate with less uncertainty.

- Nowadays, a large part of modern business is being organised around systems of statistical analysis and control.

- By using ‘Bayesian Decision Theory’, businessmen can select the optimal decision by directly evaluating the payoff of each alternative course of action.
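Time series analysis covers many techniques. As one illustration only, here is a minimal sketch of a simple moving-average forecast, which assumes that the next period's value can be estimated as the mean of the last few observations. The function name, the sales figures and the window size are all hypothetical.

```python
from collections.abc import Sequence


def moving_average_forecast(series: Sequence[float], window: int) -> float:
    """Forecast the next period as the mean of the last `window` observations."""
    if not 1 <= window <= len(series):
        raise ValueError("window must be between 1 and len(series)")
    recent = series[-window:]          # most recent observations only
    return sum(recent) / window


# Hypothetical monthly sales (units); the 3-month window is also an assumption
sales = [120, 132, 128, 141, 150, 147]
print(moving_average_forecast(sales, 3))  # prints 146.0
```

Here the forecast for month 7 is the average of months 4 to 6. A longer window smooths out more noise but reacts more slowly to a genuine change in trend.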

Uses of Mathematics for Decision Making

- The number of defects in a roll of paper, a bale of cloth or a sheet of photographic film can be judged by means of a control chart based on the normal distribution.

- In statistical quality control, we analyse data using principles based on the normal curve.
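The article does not name a specific chart. Defects per unit (per roll, bale or sheet) are commonly tracked on a c-chart. Its usual 3-sigma limits, c̄ ± 3√c̄, rely on treating the defect count as approximately normal. Below is a minimal sketch under that assumption. The defect counts and the function name are hypothetical.

```python
import math


def c_chart_limits(defect_counts: list[int]) -> tuple[float, float, float]:
    """Return (LCL, center line, UCL) for a c-chart of defects per unit."""
    c_bar = sum(defect_counts) / len(defect_counts)   # average defects per unit
    sigma = math.sqrt(c_bar)                          # Poisson: variance equals the mean
    lcl = max(0.0, c_bar - 3 * sigma)                 # a count cannot fall below zero
    ucl = c_bar + 3 * sigma
    return lcl, c_bar, ucl


# Hypothetical defects found in 10 rolls of paper
counts = [4, 6, 3, 5, 7, 4, 5, 2, 6, 8]
lcl, center, ucl = c_chart_limits(counts)
out_of_control = [i for i, c in enumerate(counts) if c < lcl or c > ucl]
print(f"LCL={lcl:.2f}, CL={center:.2f}, UCL={ucl:.2f}, flagged rolls={out_of_control}")
```

Any roll whose count falls outside the limits would be flagged for investigation. With these figures the lower limit is truncated at zero because a count cannot be negative.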

Uses of Statistics in Economics

Statistics is the basis of economics. The consumer’s maximum satisfaction can be determined on the basis of data pertaining to income and expenditure. The various laws of demand depend on the data concerning price and quantity. The price of a commodity is well determined on the basis of data relating to its buyers, sellers, etc.
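Price and quantity data are often summarised as a price elasticity of demand. Here is a minimal sketch using the standard midpoint (arc) formula, with hypothetical prices and quantities. The article itself does not prescribe this measure; it is shown only as one common way such data are used.

```python
def arc_elasticity(p1: float, q1: float, p2: float, q2: float) -> float:
    """Price elasticity of demand between two (price, quantity) points, midpoint method."""
    pct_change_q = (q2 - q1) / ((q1 + q2) / 2)   # % change in quantity, relative to the midpoint
    pct_change_p = (p2 - p1) / ((p1 + p2) / 2)   # % change in price, relative to the midpoint
    return pct_change_q / pct_change_p


# Hypothetical: price rises from 10 to 12, quantity demanded falls from 100 to 80
e = arc_elasticity(10, 100, 12, 80)
print(round(e, 3))
```

The negative sign reflects the law of demand: quantity falls as price rises. An absolute value above 1, as here, indicates that demand is elastic over this price range.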

Functions of Statistics

Statistics can be well-defined as a branch of research which is concerned with the development and application of techniques for collecting, organising, presenting, analysing and interpreting data in such a manner that the reliability of conclusions may be evaluated in terms of probability statements.

Statistical methods and processes are useful for business development and are therefore applied to vast amounts of numerical data, on the principle that “behind every figure, there’s a story”.

Some key functions of statistics are as follows:

Condensation

Statistics can be used to compress a large amount of data into small meaningful information; for example, aggregated sales forecast, BSE indices, GDP growth rate, etc. It is almost impossible to get a complete idea of the profitability of a company by looking at the records of its income and expenditure. Financial ratios such as return on investment, earnings per share, profit margins, etc., however, can be easily remembered and thus can be used in quick decision making.
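To illustrate condensation, here is a minimal sketch that reduces a few hypothetical income-statement figures to the kind of ratios mentioned above. It assumes simplified definitions: profit margin = net profit / revenue, ROI = net profit / total investment, and EPS = net profit / shares outstanding, ignoring preferred dividends. The `Financials` class and all figures are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Financials:
    revenue: float
    net_profit: float
    total_investment: float
    shares_outstanding: int


def summary_ratios(f: Financials) -> dict[str, float]:
    """Condense raw figures into a few easy-to-remember ratios."""
    return {
        "profit_margin_pct": 100 * f.net_profit / f.revenue,
        "roi_pct": 100 * f.net_profit / f.total_investment,
        "eps": f.net_profit / f.shares_outstanding,
    }


# Hypothetical company figures (in currency units)
company = Financials(revenue=2_000_000, net_profit=150_000,
                     total_investment=1_200_000, shares_outstanding=50_000)
print(summary_ratios(company))
```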

Comparison

Statistics facilitates comparing different quantities. For example, as of January 22, 2021, the price-to-earnings ratio of ITC was 19.54, while HUL was quoting a price-to-earnings ratio of 71 times; by comparison, HUL is overvalued.

Forecast

Statistics helps in forecasting by examining the trend of a variable. Forecasting is essential for planning and decision-making, and predictions or forecasts based on intuition alone can be disastrous for any business.

For example, to decide the production capacity for a vehicle-manufacturing plant, we need to predict the demand for the product mix, supply of components, cost of manpower, competitor strategy, etc., over the next 5 to 10 years, before committing an investment.

Testing of hypotheses

Hypotheses are statements about population parameters, based on knowledge from the literature, that a researcher would like to test for validity in the light of new information. Drawing inferences about a population from sample estimates always involves an element of risk.
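The element of risk can be made concrete with a significance test. Here is a minimal sketch of a two-sided one-sample z-test. It assumes that the population standard deviation is known and uses a 5% significance level. The fill-weight claim and the data are hypothetical. When the standard deviation is unknown, a t-test would normally be used instead.

```python
import math
from statistics import NormalDist, fmean


def one_sample_z_test(sample: list[float], mu0: float, sigma: float) -> tuple[float, float]:
    """Return (z statistic, two-sided p-value) for H0: population mean == mu0."""
    n = len(sample)
    z = (fmean(sample) - mu0) / (sigma / math.sqrt(n))   # standardised distance from mu0
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))         # probability in both tails
    return z, p_value


# Hypothetical: a supplier claims the mean fill weight is 500 g, with known sigma = 4 g
weights = [497.0, 499.5, 496.0, 498.5, 501.0, 495.5, 497.5, 498.0, 499.0, 496.5]
z, p = one_sample_z_test(weights, mu0=500.0, sigma=4.0)
alpha = 0.05
print(f"z={z:.2f}, p={p:.4f}, reject H0: {p < alpha}")
```

The significance level α = 0.05 is the risk, fixed in advance, of rejecting a null hypothesis that is actually true. Here p is about 0.09, so the claim of a 500 g mean is not rejected at that level.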

Preciseness

Statistics visualises and presents facts precisely in a quantitative form. Facts and information conveyed in quantitative terms are more convincing than qualitative statements. For example, ‘increase in profit margin is less in the year 2020 than in the year 2019’ does not convey precise and complete information.

On the other hand, statistics summarise the information more precisely. For example, ‘profit margin is 5% of the turnover in the year 2020 against 7% in the year 2019’.

Expectation

Statistics can act as the basic building block for framing clear plans and policies. For example, how much raw material to import in a year, how much to expand capacity, or how many people to recruit depends on the expected value of the outcomes of decisions taken under different situations.
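The expected value mentioned above is a probability-weighted average of outcomes. Here is a minimal sketch for a raw-material decision, assuming three hypothetical demand scenarios with known probabilities. The function name and all figures are illustrative.

```python
import math


def expected_value(scenarios: dict[str, tuple[float, float]]) -> float:
    """Probability-weighted average of (value, probability) pairs."""
    total_p = sum(p for _, p in scenarios.values())
    if not math.isclose(total_p, 1.0):
        raise ValueError("scenario probabilities must sum to 1")
    return sum(value * p for value, p in scenarios.values())


# Hypothetical raw-material requirement (tonnes) under three demand scenarios
scenarios = {
    "low demand": (800.0, 0.2),
    "normal demand": (1000.0, 0.5),
    "high demand": (1300.0, 0.3),
}
print(expected_value(scenarios))
```

With these figures the expected requirement is 1,050 tonnes (0.2 × 800 + 0.5 × 1,000 + 0.3 × 1,300).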

Importance of Statistics

Statistics has become an essential part of various business activities in today’s life, as the following points make clear.

Statistics is important in the following major areas:

Importance of Statistics in Business and Industry

In the past, business decisions were made on personal judgement alone. Today, they are based on several mathematical and statistical techniques, and the best decision is arrived at by using all these techniques.

For example, by using hypothesis testing, we can reject or fail to reject a null hypothesis, which is an assumption made about the population or universe.

- By using ‘Bayesian Decision Theory’ or ‘Decision Theory’, we can select the optimal decision by directly evaluating the payoff of each alternative course of action.

Mathematics and statistics have become ingredients of various decision problems, as the following points show:

- In Selecting Alternative Course of Action: The process of business decision-making involves selecting a single action from a set of alternative actions. When there are two or more alternative courses of action and only one can be chosen, statistical decision theory helps select the required course of action by applying Bayesian decision theory, and thus saves a lot of time.

- In Removing Uncertainty: Uncertainty is very common in decision-making problems, because the outcome of a course of action is often not known to us. When an event has many possible outcomes, we cannot predict with certainty what will happen. By applying the concepts of joint and conditional probability, the uncertainty about the event can be measured and reduced.

- In Calculating E.O.L., C.O.L., etc.: In business, opportunity losses are very common. An opportunity loss is the difference between the highest possible profit for an event and the profit actually obtained from the action taken. The expected opportunity loss (E.O.L.) and conditional opportunity loss (C.O.L.) can easily be calculated by using the concept of maximum and minimum criteria of pay-off.
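Here is a minimal sketch of the expected monetary value (EMV), C.O.L. and E.O.L. calculations for a hypothetical plant-size decision. It assumes known state probabilities. The payoffs, probabilities and names are illustrative only.

```python
# Hypothetical payoff table: profit for each action under each demand state
states = ["low", "medium", "high"]
probs = [0.3, 0.5, 0.2]
payoffs = {
    "small plant": [40, 50, 55],
    "medium plant": [20, 70, 90],
    "large plant": [-30, 60, 150],
}


def emv(action: str) -> float:
    """Expected monetary value: probability-weighted payoff of an action."""
    return sum(p * v for p, v in zip(probs, payoffs[action]))


# Highest achievable payoff in each state (column maximum)
best_in_state = [max(row[j] for row in payoffs.values()) for j in range(len(states))]


def eol(action: str) -> float:
    """Expected opportunity loss: probability-weighted C.O.L. of an action."""
    col = [best - v for best, v in zip(best_in_state, payoffs[action])]  # conditional opportunity losses
    return sum(p * loss for p, loss in zip(probs, col))


for a in payoffs:
    print(f"{a}: EMV={emv(a):.1f}, EOL={eol(a):.1f}")
print("max EMV:", max(payoffs, key=emv), "| min EOL:", min(payoffs, key=eol))
```

With these figures the medium plant has both the highest EMV (59) and the lowest EOL (18). The two rules always agree, because for every action EMV + EOL equals the same constant, the expected payoff with perfect information (77 here).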

Importance in the Field of Science and Research

Statistics has great significance in the physical and natural sciences. It is widely used in verifying scientific laws and phenomena.

For example, statistics is used to formulate standards of body temperature, pulse rate, blood pressure, etc. The success of modern computers depends on the conclusions drawn on the basis of statistics.

Importance in the Field of Banking

In the banking industry, bankers have to relate demand deposits, time deposits, credit, etc. Bankers determine their credit policies on the basis of data relating to demand and time deposits, and these credit policies are based on the theory of probability.
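The article does not say which probability methods banks use. One standard building block is Bayes' rule, which revises a probability (here, that a borrower will default) when new information arrives. Here is a minimal sketch with hypothetical rates. The function name and every figure are illustrative assumptions.

```python
def posterior(prior: float, p_e_given_h: float, p_e_given_not_h: float) -> float:
    """P(H | E) by Bayes' rule; P(E) comes from the law of total probability."""
    joint_h = prior * p_e_given_h                  # P(H and E)
    joint_not_h = (1 - prior) * p_e_given_not_h    # P(not H and E)
    return joint_h / (joint_h + joint_not_h)


# Hypothetical: 5% of borrowers default (H). A missed instalment (E) is seen in
# 60% of borrowers who later default and in 10% of those who do not.
p_default = posterior(prior=0.05, p_e_given_h=0.60, p_e_given_not_h=0.10)
print(round(p_default, 3))
```

In this hypothetical case, a missed instalment raises the estimated default probability from 5% to 24%.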

Importance to the State

We know that the subject of statistics originated for helping the ancient rulers in the assessment of their military and economic strength. Gradually its scope was enlarged to tackle other problems relating to political activities of the State.

In the modern era, the role of the State has increased, and governments around the world also take care of the welfare of their people. These governments therefore require much more information in the form of numerical figures, both to fulfil their welfare objectives and to run their administration efficiently.

Importance in planning

Planning is indispensable for achieving a faster rate of growth through the best use of a nation’s resources, and it requires a good deal of statistical data on various aspects of the economy.

One of the aims of planning could be to achieve a specified rate of growth of the economy. Using statistical techniques, it is possible to assess the amounts of various resources available in the economy and accordingly determine whether the specified rate of growth is sustainable or not.

Scope of Statistics

The main scope of statistics covers the following:

Presents facts in numerical figures

The first function of statistics is to present a given problem in terms of numerical figures. Numerical presentation helps us better understand the nature of a problem.

Presents complex facts in a simplified form

Generally, a problem to be investigated is represented by a large mass of numerical figures which are very difficult to understand and remember. Using various statistical methods, this large mass of data can be presented in a simplified form.

Studies relationship between two or more phenomena

Statistics can be used to investigate whether two or more phenomena are related. For example, the relationship between income and consumption, demand and supply, etc.

Helps in the formulation of policies

Statistical analysis of data is the starting point in the formulation of policies in various economic, business and government activities. For example, using statistical techniques a firm can know the tastes and preferences of the consumers and decide to make its product accordingly.

Helps in forecasting

The success of planning by the Government or of a business depends to a large extent upon the accuracy of their forecasts. Statistics provides a scientific basis for making such forecasts.

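Provides techniques for testing of hypothesis

A hypothesis is a statement about some characteristic of a population (or universe). Statistical tests use sample data to judge whether such a statement is consistent with the evidence.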

Provides techniques for making decisions under uncertainty

Many times we face an uncertain situation where any one of the many alternatives may be adopted. A businessman might face a situation of uncertain investment opportunities in which he can lose or gain.

He may be interested in knowing whether or not to undertake a particular investment. Answers to such problems are provided by the statistical techniques of decision-making under uncertainty.
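The article does not name specific techniques. Three standard criteria for decisions under uncertainty, where no probabilities are available, are maximin, maximax and minimax regret. Here is a minimal sketch with hypothetical payoffs for an investment decision.

```python
# Hypothetical payoffs (profit) for each choice under three market conditions;
# no probabilities are assumed, which is what makes this "uncertainty".
payoffs = {
    "invest": [-50, 20, 120],
    "invest partly": [-10, 15, 60],
    "do not invest": [0, 0, 0],
}

maximin = max(payoffs, key=lambda a: min(payoffs[a]))   # pessimistic: best worst case
maximax = max(payoffs, key=lambda a: max(payoffs[a]))   # optimistic: best best case

# Minimax regret: regret = best payoff in that condition minus the payoff obtained
n_states = len(next(iter(payoffs.values())))
best = [max(row[j] for row in payoffs.values()) for j in range(n_states)]
max_regret = {a: max(b - v for b, v in zip(best, row)) for a, row in payoffs.items()}
minimax_regret = min(max_regret, key=max_regret.get)

print(maximin, "|", maximax, "|", minimax_regret)
```

With these figures, a cautious investor (maximin) would not invest and an optimistic one (maximax) would invest fully. Minimax regret also favours investing, because that option's worst regret (50) is the smallest.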

Limitations of Statistics

- Statistics is considered to be a science as well as an art, which is used as an instrument of research in almost every sphere of our activities.

Some of the limitations of statistics are as follows:

Statistics Suits to the Study of Quantitative Data Only

Statistics deals with the study of quantitative data only. By using the methods of statistics, the problems regarding production, income, price, wage, height, weight etc. can be studied. Such characteristics are quantitative in nature.

Characteristics like honesty, goodwill, duty, character, beauty, intelligence, efficiency and integrity are not capable of quantitative measurement and hence cannot be dealt with directly by statistical methods. These characteristics are qualitative in nature.

For such characteristics, only comparison is possible. The use of statistical methods is limited to quantitative characteristics and to those qualitative characteristics that can be expressed numerically.

Statistical Results are not Exact

Statistical analysis is performed under certain conditions. It is not always possible, or even advisable, to consider the entire population during a statistical investigation.

Statistical investigations therefore call for the use of samples, and results obtained from samples may not hold exactly for the entire population. Data collected for a statistical enquiry may also not be 100 percent accurate. Statistical results are true only on average.
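Here is a minimal simulation of why sample results hold only on average. It assumes a synthetic population of 5,000 student weights drawn from a normal distribution (mean 65 kg, SD 10 kg). All figures are hypothetical, and the fixed seed only makes the run repeatable.

```python
import random
from statistics import fmean

random.seed(42)  # fixed seed so the run is reproducible

# Hypothetical population: weights (kg) of 5,000 students
population = [random.gauss(65, 10) for _ in range(5000)]
true_mean = fmean(population)

# Draw several simple random samples of 50 students, without replacement
for _ in range(5):
    sample = random.sample(population, 50)
    print(f"sample mean = {fmean(sample):.2f}  (population mean = {true_mean:.2f})")
```

Each sample mean lands near the population mean but rarely on it, and different samples give different answers.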

Statistics Deals with Aggregates Only

Statistics does not recognise individual items. Consider the statement, “The weight of Mr X in the college is 70 kg”. This statement does not constitute statistical data. Statistical methods are not going to investigate anything about this statement. Whereas, if the weights of all the students of the college are given, the statistical methods may be applied to analyse that data.

According to Tippett, “Statistics is essentially totalitarian because it is not concerned with individual values, but only with classes”. Statistics is used to study group characteristics of aggregates.

Statistics is Useful for Experts Only

Statistics is both a science and an art. It is systematic and finds applications in studying problems in economics, business, astronomy, physics, medicine, etc. Statistical methods are sophisticated in nature, and not everyone can be expected to possess the expertise required to understand them and apply them to practical problems. This is the job of an expert who is well-versed in statistical methods.

Statistics does not Provide Solutions to the Problems

Statistical methods are used to explore the essentials of problems, not to invent solutions to them. For example, the methods of statistics may reveal that the average result of a particular class in a college has been deteriorating for the last ten years, i.e., the trend of the result is downward, but statistics cannot provide a solution to this problem.

It cannot help in taking remedial steps to improve the result of that class. Statistics should be taken as a means and not as an end. The methods of statistics are used to study the various aspects of the data.

- Statistics is a set of decision-making techniques which helps businessmen in making suitable policies from the available data.

- “Statistics may be defined as the collection, presentation, analysis and interpretation of numerical data.”

- With the help of statistical methods, quantitative information about production, sale, purchase, finance etc. can be obtained.

- Statistics is the basis of economics. The consumer’s maximum satisfaction can be determined on the basis of data pertaining to income and expenditure.

- W.I. King says, “Statistics is a most useful servant but only of great value to those who understand its proper use.”

- Yule and Kendall have rightly said that “statistical methods are most dangerous tools in the hands of inexperts.”

- The statistical methods are used to explore the essentials of problems.



What Is Statistics?

Understanding statistics.

- Descriptive & Inferential Statistics

Mean, Median, and Mode

Understanding statistical data.

- Levels of Measurement

- Sampling Techniques

Uses of Statistics

The bottom line.


Statistics: Definition, Types, and Importance


Katrina Ávila Munichiello is an experienced editor, writer, fact-checker, and proofreader with more than fourteen years of experience working with print and online publications.


What Is Statistics?

Statistics is a branch of applied mathematics that involves the collection, description, and analysis of quantitative data and the drawing of conclusions from it. The mathematical theories behind statistics rely heavily on differential and integral calculus, linear algebra, and probability theory.

People who do statistics are referred to as statisticians. They’re particularly concerned with determining how to draw reliable conclusions about large groups and general events from the behavior and other observable characteristics of small samples. These small samples represent a portion of the large group or a limited number of instances of a general phenomenon.

Key Takeaways

- Statistics is the study and manipulation of data, including ways to gather, review, analyze, and draw conclusions from data.

- The two major areas of statistics are descriptive and inferential statistics.

- Statistics can be communicated at different levels, ranging from a non-numerical descriptor (nominal level) to a number measured in reference to a true zero point (ratio level).

- Several sampling techniques can be used to compile statistical data, including simple random, systematic, stratified, or cluster sampling.

- Statistics are present in almost every department of every company and are an integral part of investing.

Dennis Madamba / Investopedia

Understanding Statistics

Statistics are used in virtually all scientific disciplines, such as the physical and social sciences as well as in business, medicine, the humanities, government, and manufacturing. Statistics is fundamentally a branch of applied mathematics that developed from the application of mathematical tools, including calculus and linear algebra, to probability theory.

In practice, statistics is the idea that we can learn about the properties of large sets of objects or events (a population) by studying the characteristics of a smaller number of similar objects or events (a sample). Gathering comprehensive data about an entire population is too costly, difficult, or impossible in many cases, so statisticians start with a sample that can be conveniently or affordably observed.

Statisticians measure and gather data about the individuals or elements of a sample and analyze this data to generate descriptive statistics. They can then use these observed characteristics of the sample data, which are properly called “statistics,” to make inferences or educated guesses about the unmeasured characteristics of the broader population, known as the parameters.

Statistics informally dates back centuries. An early record of correspondence between French mathematicians Pierre de Fermat and Blaise Pascal in 1654 is often cited as an early example of statistical probability analysis.

Descriptive and Inferential Statistics

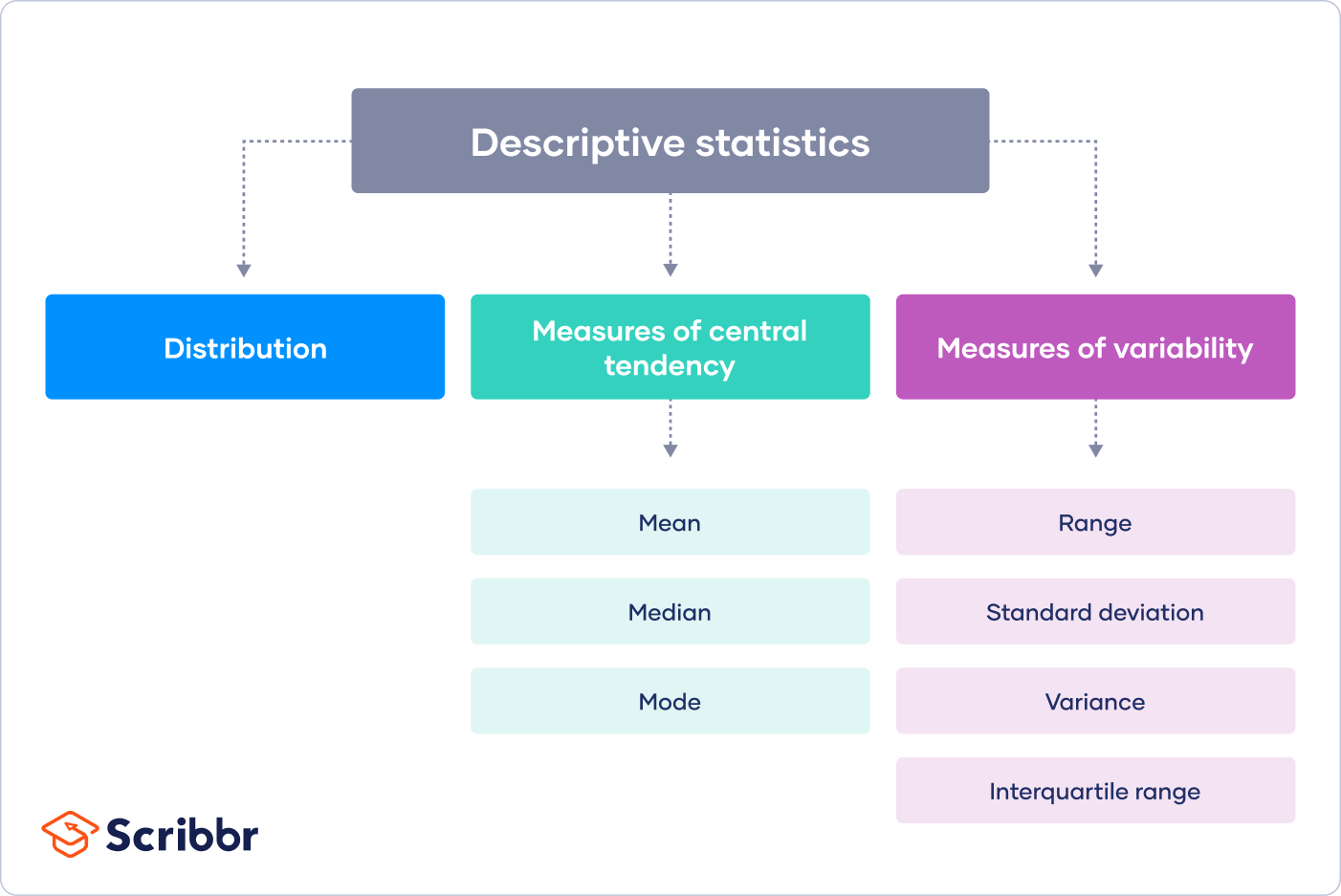

The two major areas of statistics are known as descriptive statistics, which describe the properties of sample and population data, and inferential statistics, which use those properties to test hypotheses and draw conclusions. Descriptive statistics include mean (average), variance, skewness, and kurtosis. Inferential statistics include linear regression analysis, analysis of variance (ANOVA), logit/probit models, and null hypothesis testing.

Descriptive Statistics

Descriptive statistics mostly focus on the central tendency, variability, and distribution of sample data. Central tendency refers to an estimate of the characteristics of a typical element of a sample or population. It includes descriptive statistics such as the mean, median, and mode.

Variability refers to a set of statistics that show how much difference there is among the elements of a sample or population along the characteristics measured. It includes metrics such as range, variance, and standard deviation.
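These variability measures are straightforward to compute. The sketch below uses Python's standard `statistics` module on a small hypothetical data set (the numbers are made up for illustration). It shows both the sample versions, which divide by n − 1 and are used when estimating a population, and the population versions, which divide by n.

```python
from statistics import pstdev, pvariance, stdev, variance

# Hypothetical measurements of one characteristic across a small sample.
data = [4, 8, 6, 5, 3, 7]

# Range: the spread between the largest and smallest observations.
data_range = max(data) - min(data)

# Sample variance and standard deviation divide by n - 1 (estimating a population).
sample_var = variance(data)
sample_sd = stdev(data)

# Population versions divide by n (treating the data as the entire population).
population_var = pvariance(data)
population_sd = pstdev(data)

print(data_range, sample_var, round(sample_sd, 4))  # prints: 5 3.5 1.8708
```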

The distribution refers to the overall “shape” of the data, which can be depicted on a chart such as a histogram or a dot plot, and includes properties such as the probability distribution function, skewness, and kurtosis. Descriptive statistics can also describe differences between observed characteristics of the elements of a data set. They can help us understand the collective properties of the elements of a data sample and form the basis for testing hypotheses and making predictions using inferential statistics.

Inferential Statistics

Inferential statistics is a tool that statisticians use to draw conclusions about the characteristics of a population, drawn from the characteristics of a sample. It is also used to determine how certain they can be of the reliability of those conclusions. Based on the sample size and distribution, statisticians can calculate the probability that statistics, which measure the central tendency, variability, distribution, and relationships between characteristics within a data sample, provide an accurate picture of the corresponding parameters of the whole population from which the sample is drawn.

Inferential statistics are used to make generalizations about large groups, such as estimating average demand for a product by surveying the buying habits of a sample of consumers or attempting to predict future events. This might mean projecting the future return of a security or asset class based on returns in a sample period.
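One common way to express how reliable a sample estimate is would be a confidence interval. The sketch below illustrates that idea under stated assumptions and is not a method taken from this article: the survey data are hypothetical, and it uses a normal approximation (z ≈ 1.96 for 95% confidence), which is only rough for a sample this small.

```python
from math import sqrt
from statistics import NormalDist, mean, stdev

# Hypothetical survey: units of a product each sampled consumer bought last month.
sample = [3, 5, 2, 4, 6, 3, 4, 5, 2, 4, 3, 5]

n = len(sample)
m = mean(sample)
se = stdev(sample) / sqrt(n)  # standard error of the sample mean

# 95% interval using the normal approximation; for small samples a
# t critical value would give a somewhat wider, more appropriate interval.
z = NormalDist().inv_cdf(0.975)
low, high = m - z * se, m + z * se
print(f"mean={m:.2f}, 95% CI=({low:.2f}, {high:.2f})")
```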

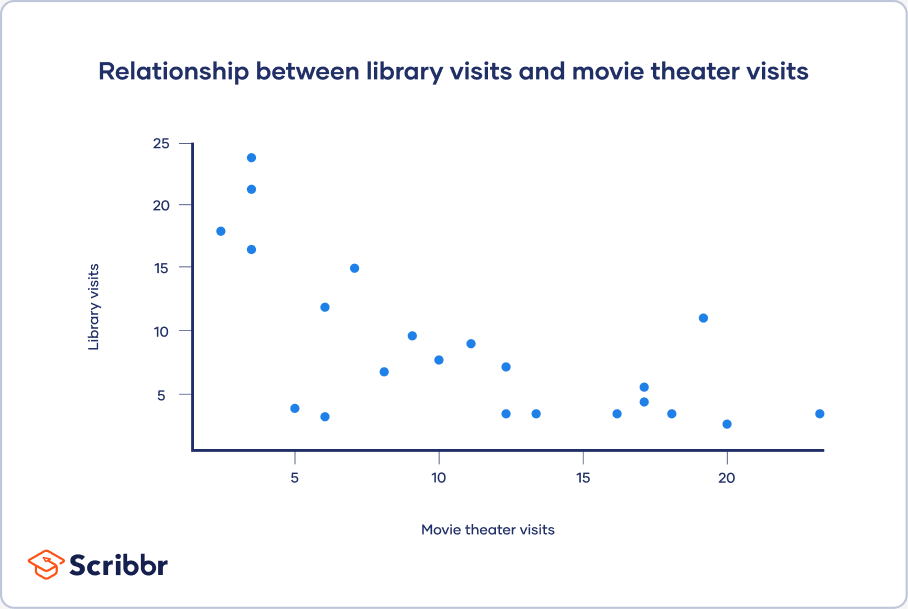

Regression analysis is a widely used technique of statistical inference. It is used to determine the strength and nature of the relationship (the correlation) between a dependent variable and one or more explanatory (independent) variables. The output of a regression model is often analyzed for statistical significance, meaning that a result generated by testing or experimentation is unlikely to have occurred randomly or by chance alone. In other words, statistical significance suggests that a pattern in the data reflects a real effect rather than random noise, although on its own it does not prove what causes that effect.

Having statistical significance is important for academic disciplines or practitioners that rely heavily on analyzing data and research.
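To make the “strength and nature of the relationship” concrete, here is a minimal ordinary least squares sketch for a single explanatory variable. The data and the `least_squares` helper are hypothetical. The sketch reports the slope, intercept, and Pearson correlation coefficient r; it does not test statistical significance, which would require an additional step such as a t-test on the slope.

```python
from math import sqrt


def least_squares(x, y):
    """Fit y = intercept + slope * x by ordinary least squares; also return Pearson r."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    # Sums of cross-products and squared deviations from the means.
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    sxx = sum((xi - mx) ** 2 for xi in x)
    syy = sum((yi - my) ** 2 for yi in y)
    slope = sxy / sxx
    intercept = my - slope * mx
    r = sxy / sqrt(sxx * syy)  # strength and direction of the linear relationship
    return slope, intercept, r


# Hypothetical data: advertising spend (x) vs. units sold (y).
x = [1, 2, 3, 4, 5]
y = [2, 4, 5, 4, 5]
slope, intercept, r = least_squares(x, y)
print(round(slope, 3), round(intercept, 3), round(r, 3))  # prints: 0.6 2.2 0.775
```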

Mean, Median, and Mode

The terms “mean,” “median,” and “mode” fall under the umbrella of central tendency. They describe an element that’s typical in a given sample group. You can find the mean by adding the numbers in the group and dividing the result by the number of observations in the data set.

The median is the middle number when the values in the set are arranged in order. Half of the remaining numbers are higher than the median, and half are lower. (With an even number of values, the median is the average of the two middle values.) The median home value in a neighborhood would be $350,000 if five homes were located there and valued at $500,000, $400,000, $350,000, $325,000, and $300,000: two values are higher, and two are lower.

The mode is the value that appears most frequently in the data set.
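The three measures can be checked with Python's standard `statistics` module. This sketch reuses the five home values from the median example; because none of those values repeats, the mode is shown on a separate, hypothetical list of shoe sizes.

```python
from statistics import mean, median, mode

# Home values from the median example (in dollars).
home_values = [500_000, 400_000, 350_000, 325_000, 300_000]

# Mean: sum of the observations divided by how many there are.
avg = mean(home_values)    # 375000
# Median: the middle value once the data are sorted.
mid = median(home_values)  # 350000

# Mode needs a repeated value to be meaningful; this list is hypothetical.
shoe_sizes = [9, 10, 10, 8, 11, 10, 9]
most_common = mode(shoe_sizes)  # 10 (appears three times)

print(avg, mid, most_common)
```

Note that the mean ($375,000) sits above the median ($350,000) here because the single $500,000 home pulls the average upward, while the median ignores how far the extreme values lie from the middle.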

Understanding Statistical Data

The root of statistics is variables. A variable is a characteristic or attribute of an item that can be counted or measured. For example, a car can have variables such as make, model, year, mileage, color, or condition. By combining the variables across a set of data, such as the colors of all cars in a given parking lot, statistics allows us to better understand trends and outcomes.

There are two main types of variables:

First, qualitative variables are specific attributes that are often non-numeric. Many of the variables in the car example are qualitative. Other examples of qualitative variables in statistics are gender, eye color, or city of birth. Qualitative data is most often used to determine what percentage of observations falls into each category of a qualitative variable. Qualitative analysis often does not rely on numbers. For example, trying to determine what percentage of women own a business analyzes qualitative data.
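Turning a qualitative variable into percentages is a matter of counting how often each category occurs. A minimal sketch, assuming a hypothetical list of car colors observed in one parking lot:

```python
from collections import Counter

# Hypothetical colors of cars observed in one parking lot (qualitative data).
colors = ["red", "blue", "black", "blue", "white", "blue", "black", "red"]

counts = Counter(colors)  # number of cars in each color category
total = len(colors)

# Share of each category as a percentage of all observations.
percentages = {color: 100 * n / total for color, n in counts.items()}
print(percentages)
```

The categories themselves carry no numeric meaning; only the counts and shares computed from them do.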

The second type of variable in statistics is the quantitative variable. Quantitative variables are measured numerically, and their numbers only carry meaning when they are paired with a description of what is being measured. As with quantitative analysis generally, this information is rooted in numbers. In the car example above, the mileage driven is a quantitative variable, but the number 60,000 holds no value unless it is understood to be the total number of miles driven.

Quantitative variables can be further broken into two categories. First, discrete variables can take only certain separate values, which implies that there are gaps between possible values. The number of points scored in a football game is a discrete variable because:

- There can be no decimals.

- It is impossible for a team to score only one point.

Statistics also makes use of continuous quantitative variables. These values run along a scale. Whereas discrete variables are limited to separate values, continuous variables can take any value within a range and are often measured to decimal places. Any value within possible limits can be obtained when measuring the height of football players, and heights can be measured down to 1/16th of an inch, if not further.

Statisticians can hold various titles and positions within a company. The average total compensation for a statistician with one to three years of experience was $81,885 as of December 2023. This increased to $109,288 with 15 years of experience.

Statistical Levels of Measurement

Analyzing variables and outcomes leads to different levels of measurement. Statistics can quantify outcomes in four ways.

Nominal-level Measurement

There’s no numerical or quantitative value, and qualities are not ranked. Nominal-level measurements are instead simply labels or categories assigned to other variables. It’s easiest to think of nominal-level measurements as non-numerical facts about a variable.

Example: The name of the U.S. president elected in 2020 was Joseph Robinette Biden Jr.

Ordinal-level Measurement

Outcomes can be arranged in order, but the distances between positions are not equal or meaningful. Although they may be numerical, ordinal-level measurements can’t be meaningfully subtracted from each other because only the position of each data point matters. Ordinal levels are often used in nonparametric statistics and compared against the total variable group.

Example: American Fred Kerley was the second-fastest man at the 2020 Tokyo Olympics based on 100-meter sprint times.

Interval-level Measurement

Outcomes can be arranged in order, and differences between data values now have meaning. Two data points are often used to compare the passing of time or changing conditions within a data set. However, there is no true “starting point” for the range of data values: calendar dates and temperatures in degrees Fahrenheit or Celsius have no meaningful intrinsic zero, so ratios between values are not meaningful.

Example: Inflation hit 8.6% in May 2022. The last time inflation was that high was in December 1981.

Ratio-level Measurement

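Outcomes can be arranged in order, and differences between data values have meaning. In addition, there is a true starting point, or “zero value,” that represents the absence of the quantity being measured. As a result, the ratio between data values has meaning, including each value’s distance from zero.

Example: The lowest meteorological temperature recorded was -128.6 degrees Fahrenheit in Antarctica in 1983. (Strictly speaking, Fahrenheit and Celsius readings are interval-level because their zero points are arbitrary; the same temperature expressed in kelvins, a scale that starts at absolute zero, is ratio-level.)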
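The practical difference between interval and ratio levels shows up when you divide one value by another. A minimal sketch with two hypothetical temperatures: on the Fahrenheit scale (interval level) the ratio looks like 2.0 but has no physical meaning, whereas converting to kelvins (ratio level, zero at absolute zero) gives a meaningful ratio.

```python
def fahrenheit_to_kelvin(f):
    """Convert degrees Fahrenheit to kelvins, whose zero is absolute zero."""
    return (f - 32) * 5 / 9 + 273.15


# Two hypothetical afternoon temperatures.
warm_f, cool_f = 80.0, 40.0

# Interval scale: 80 °F is not "twice as hot" as 40 °F, because 0 °F is an
# arbitrary point rather than an absence of heat.
naive_ratio = warm_f / cool_f

# Ratio scale: kelvins start at a true zero, so this ratio is meaningful.
kelvin_ratio = fahrenheit_to_kelvin(warm_f) / fahrenheit_to_kelvin(cool_f)

print(naive_ratio, round(kelvin_ratio, 3))  # prints: 2.0 1.08
```

Differences, by contrast, are meaningful on both scales: a 40 °F gap is a gap of about 22.2 K.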

Statistics Sampling Techniques

Often, it's not possible to gather data from every data point within a population to gather statistical information. Statistics relies instead on different sampling techniques to create a representative subset of the population that’s easier to analyze. In statistics, there are several primary types of sampling.

Simple Random Sampling

Simple random sampling calls for every member within the population to have an equal chance of being selected for analysis. The entire population is used as the basis for sampling, and any random generator based on chance can select the sample items. For example, 100 individuals are lined up and 10 are chosen at random.
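The example of choosing 10 of 100 individuals can be sketched with Python's standard `random` module. The ID numbers are hypothetical, and the fixed seed is there only to make the run reproducible.

```python
import random

# Population: 100 individuals, identified by hypothetical ID numbers 1-100.
population = list(range(1, 101))

rng = random.Random(42)  # fixed seed only so the example is reproducible

# Random.sample draws without replacement, so every individual has the
# same chance of ending up in the sample and nobody is picked twice.
sample = rng.sample(population, 10)
print(sorted(sample))
```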

Systematic Sampling

Systematic sampling calls for a random sample as well, but its technique is slightly modified to make it easier to conduct. A single random number is generated to determine the starting point, and individuals are then selected at a specified regular interval until the sample size is complete. For example, if 100 individuals are lined up and numbered, and the random starting point is the seventh individual, every ninth individual after that (i.e., the 7th, 16th, 25th, etc.) is selected until 10 sample items have been selected.
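A minimal sketch of that procedure, assuming the population is already a numbered list. The `systematic_sample` helper and its parameters are hypothetical; passing `start=7` reproduces the article's example, while omitting it draws the starting point at random from the first interval.

```python
import random


def systematic_sample(population, interval, sample_size, start=None, rng=None):
    """Select every `interval`-th member, beginning at a (random) 1-based start."""
    if start is None:
        # Random starting position within the first interval.
        start = (rng or random.Random()).randint(1, interval)
    picks = population[start - 1::interval]  # convert 1-based start to 0-based index
    if len(picks) < sample_size:
        raise ValueError("population too small for this interval and sample size")
    return picks[:sample_size]


# The article's example: 100 numbered individuals, start at the 7th, take every 9th.
individuals = list(range(1, 101))
chosen = systematic_sample(individuals, interval=9, sample_size=10, start=7)
print(chosen)  # prints: [7, 16, 25, 34, 43, 52, 61, 70, 79, 88]
```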

Stratified Sampling

Stratified sampling calls for more control over your sample. The population is divided into subgroups based on similar characteristics. Then you calculate how many people from each subgroup would represent the entire population. For example, 100 individuals are grouped by gender and race. Then a sample from each subgroup is taken in proportion to how representative that subgroup is of the population.
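A minimal sketch of proportional stratified sampling, assuming each member's subgroup can be read off a label. The `stratified_sample` helper and the two group labels are hypothetical stand-ins for real characteristics such as gender or race. Each stratum's share of the sample is rounded, so with less convenient numbers the total can differ slightly from the requested size.

```python
import random
from collections import defaultdict


def stratified_sample(members, stratum_of, sample_size, rng):
    """Proportional stratified sampling: each stratum contributes in proportion to its size."""
    strata = defaultdict(list)
    for member in members:
        strata[stratum_of(member)].append(member)
    sample = []
    for group in strata.values():
        # Number drawn from this stratum, proportional to its share of the population.
        k = round(sample_size * len(group) / len(members))
        sample.extend(rng.sample(group, k))  # simple random sample within the stratum
    return sample


# Hypothetical population of 100: 60 people in "group A", 40 in "group B".
people = [("group A", i) for i in range(60)] + [("group B", i) for i in range(40)]
sample = stratified_sample(people, lambda p: p[0], 10, random.Random(1))
print(sum(1 for p in sample if p[0] == "group A"), "from A;",
      sum(1 for p in sample if p[0] == "group B"), "from B")
```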

Cluster Sampling

Cluster sampling also uses subgroups, but each subgroup should be representative of the population. Entire subgroups are randomly selected, rather than randomly selecting individuals within each subgroup.
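The contrast with stratified sampling is that randomness is applied to whole clusters rather than to individuals. A minimal sketch, assuming a hypothetical population already organized into 10 clusters (for example, city blocks) of 10 people each:

```python
import random

# Hypothetical population: 10 clusters, each holding 10 (cluster, person) pairs.
clusters = {c: [(c, p) for p in range(10)] for c in range(10)}

rng = random.Random(7)  # fixed seed only for reproducibility

# Randomly choose whole clusters...
chosen_clusters = rng.sample(sorted(clusters), 3)

# ...and include every member of each chosen cluster (no sampling within clusters).
sample = [person for c in chosen_clusters for person in clusters[c]]
print(sorted(chosen_clusters), len(sample))
```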

Uses of Statistics

Not sure which Major League Baseball player should have won Most Valuable Player last year? Statistics, which are often used to determine value, are frequently cited when the award for best player is announced. These statistics can include batting average, number of home runs hit, and stolen bases.

The Bottom Line

Statistics is prominent in finance, investing, business, and a wide scope of sectors. Much of the information you see and the data you’re given is derived from statistics, which are used in all facets of a business.