The Significance of Validity and Reliability in Quantitative Research

Key Takeaways:

- Types of validity to consider during quantitative research include internal, external, construct, and statistical

- Types of reliability that apply to quantitative research include test re-test, inter-rater, internal consistency, and parallel forms

- There are numerous challenges to achieving validity and reliability in quantitative research, but the right techniques can help overcome them

Quantitative research is used to investigate and analyze data to draw meaningful conclusions. Validity and reliability are two critical concepts in quantitative analysis that ensure the accuracy and consistency of the research results. Validity refers to the extent to which the research measures what it intends to measure, while reliability refers to the consistency and reproducibility of the research results over time. Ensuring validity and reliability is crucial in conducting high-quality research, as it increases confidence in the findings and conclusions drawn from the data.

This article aims to provide an in-depth analysis of the significance of validity and reliability in quantitative research. It will explore the different types of validity and reliability, their interrelationships, and the associated challenges and limitations.

In this Article:

- The role of validity in quantitative research

- The role of reliability in quantitative research

- Validity and reliability: how they differ and interrelate

- Challenges and limitations of ensuring validity and reliability

- Overcoming challenges and limitations to achieve validity and reliability

- Explore trusted quantitative solutions

The Role of Validity in Quantitative Research

Validity is crucial in maintaining the credibility and reliability of quantitative research outcomes. Therefore, it is critical to establish that the variables being measured in a study align with the research objectives and accurately reflect the phenomenon being investigated.

Several types of validity apply to various study designs; let’s take a deeper look at each one below:

Internal validity is concerned with the extent to which a study establishes a causal relationship between the independent and dependent variables. In other words, internal validity determines whether the changes observed in the dependent variable result from changes in the independent variable or from some other factor.

External validity refers to the degree to which the findings of a study can be generalized to other populations and contexts. External validity helps ensure the results of a study are not limited to the specific people or context in which the study was conducted.

Construct validity refers to the degree to which a research study accurately measures the theoretical construct it intends to measure. Construct validity helps provide alignment between the study’s measures and the theoretical concept it aims to investigate.

Finally, statistical validity refers to the accuracy of the statistical tests used to analyze the data. Establishing statistical validity provides confidence that the conclusions drawn from the data are reliable and accurate.

To safeguard the validity of a study, researchers must carefully design their research methodology, select appropriate measures, and control for extraneous variables that may impact the results. Validity is especially crucial in fields such as medicine, where inaccurate research findings can have severe consequences for patients and healthcare practices.

The Role of Reliability in Quantitative Research

Ensuring the consistency and reproducibility of research outcomes over time is crucial in quantitative research, and this is where the concept of reliability comes into play. Reliability is vital to building trust in the research findings and their ability to be replicated in diverse contexts.

Similar to validity, multiple types of reliability are pertinent to different research designs. Let’s take a closer look at each of these types of reliability below:

Test-retest reliability refers to the consistency of the results obtained when the same test is administered to the same group of participants at different times. This type of reliability is essential when researchers need to administer the same test multiple times to assess changes in behavior or attitudes over time.
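As a rough illustration of how test-retest consistency is often quantified, the sketch below correlates scores from two administrations of the same test. The scores are made-up example data, and using the Pearson correlation here is an assumption for illustration rather than a method prescribed by this article.

```python
from math import sqrt

def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length score lists."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)

# Hypothetical scores for six participants who took the same test twice.
time_1 = [12, 18, 25, 30, 22, 15]
time_2 = [13, 17, 27, 29, 21, 16]

test_retest_r = pearson_r(time_1, time_2)
print(f"Test-retest correlation: {test_retest_r:.2f}")
```

A correlation close to 1 suggests that participants keep roughly the same relative standing across the two administrations; how high is "high enough" depends on the field and the construct.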

Inter-rater reliability refers to the consistency of results when different raters or observers assess the same behavior or phenomenon. This type of reliability is vital when researchers must rely on several individuals to rate or observe the same thing.

Internal consistency reliability refers to the degree to which the items or questions in a test or questionnaire measure the same construct. This type of reliability is important in studies where researchers use multiple items or questions to assess a particular construct, such as knowledge or quality of life.

Lastly, parallel forms reliability refers to the consistency of the results obtained when two different versions of the same test are administered to the same group of participants. This type of reliability is important when researchers administer different versions of the same test to assess the consistency of the results.

Reliability in research is like the consistency of a medical test. Just as a reliable medical test produces consistent results that physicians can trust when making decisions about patient care, a highly reliable study produces consistent findings that researchers can trust when drawing conclusions about a particular phenomenon. To ensure reliability in a study, researchers must carefully select appropriate measures and establish protocols for administering the measures consistently. They must also take steps to control for extraneous variables that may impact the results.

Validity and Reliability: How They Differ and Interrelate

Validity and reliability are two critical concepts in quantitative research that largely determine the quality of research studies. Although the terms are often used interchangeably, they refer to different aspects of research. Validity is the extent to which a research study measures what it claims to measure without being affected by extraneous factors or bias. In contrast, reliability is the degree to which the research results are consistent and stable over time and across different samples, methods, and evaluators.

Designing a research study that is both valid and reliable is essential for producing high-quality and trustworthy research findings. Finding this balance requires significant expertise, skill, and attention to detail. Ultimately, the goal is to produce research findings that are not only valid and reliable but also impactful and influential for the organization requesting them. Achieving this requires a deep understanding of the nuances of research methodology and a commitment to rigor in all aspects of the research process.

Challenges and Limitations of Ensuring Validity and Reliability

Ensuring validity and reliability in quantitative research is not without its challenges. Some of the factors to consider include:

1. Measuring Complex Constructs or Variables

One of the main challenges is the difficulty of accurately measuring complex constructs or variables. For instance, constructs such as intelligence or personality are multi-dimensional, which makes it hard to capture all of their aspects accurately.

2. Limitations of Data Collection Instruments

The measures or instruments used to collect data can also be limited in their sensitivity or specificity. This can impact the study’s validity and reliability, as inaccurate or imprecise measures can lead to incorrect conclusions and unreliable results. For example, a scale that measures depression but does not include all relevant symptoms may not accurately capture the construct being studied.

3. Sources of Error and Bias in Data Collection

The data collection process itself can introduce sources of error or bias, which can impact the validity and reliability of the study. For instance, measurement errors can occur due to the limitations of the measuring instrument or human error during data collection. In addition, response bias can arise when participants provide socially desirable answers, while sampling bias can occur when the sample is not representative of the studied population.

4. The Complexity of Achieving Meaningful and Accurate Research Findings

There are also inherent limitations to validity and reliability in research studies. For example, achieving internal validity by controlling for extraneous variables does not always ensure external validity, that is, the ability to generalize findings to other populations or settings. This can be a limitation for researchers who wish to apply their findings to a larger population or different contexts.

Additionally, while reliability is essential for producing consistent and reproducible results, it does not guarantee the accuracy or truth of the findings. Even if a study has reliable results, those results may still be inaccurate. These limitations remind us that research is a complex process, and achieving validity and reliability is just one part of the larger puzzle of producing accurate and meaningful research.

Overcoming Challenges and Limitations to Achieve Validity and Reliability

Researchers can adopt various measures and techniques to overcome the challenges and limitations in ensuring validity and reliability in research studies.

One such approach is to use multiple measures or instruments to assess the same construct. Comparing the results helps identify commonalities and differences across measures, thereby providing a more comprehensive understanding of the construct being studied.

Inter-rater reliability checks can also be conducted to ensure different raters or observers consistently interpret and rate the same data. This can reduce measurement errors and improve the reliability of the results. Additionally, data-cleaning techniques can be used to identify and remove any outliers or errors in the data.
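One common data-cleaning step of this kind can be sketched as follows. The example flags values that fall outside the interquartile-range "fences" (1.5 times the IQR beyond the quartiles). Both the 1.5 multiplier and the response-time data are assumptions chosen for illustration, and flagged values would normally be reviewed before anything is removed.

```python
from statistics import quantiles

def flag_outliers(values, k=1.5):
    """Return values lying more than k * IQR below Q1 or above Q3."""
    q1, _, q3 = quantiles(values, n=4)   # quartile cut points
    iqr = q3 - q1
    lower, upper = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < lower or v > upper]

# Hypothetical survey completion times in minutes; 48 looks like an entry error.
completion_minutes = [4, 5, 5, 6, 7, 5, 6, 4, 48, 5]

outliers = flag_outliers(completion_minutes)
print("Flagged for review:", outliers)
```

Flagging rather than silently deleting keeps the decision with the researcher, since an extreme value can be a genuine observation rather than an error.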

Finally, researchers can use appropriate statistical methods to assess the validity and reliability of their measures. For example, factor analysis identifies the underlying factors contributing to the construct being studied, while test-retest analysis helps evaluate the consistency of results over time. By adopting these measures and techniques, researchers can increase the overall quality and usefulness of their findings.

The backbone of any quantitative research lies in the validity and reliability of the data collected. These factors ensure the data accurately reflects the intended research objectives and is consistent and reproducible. By carefully balancing the interrelationship between validity and reliability and using appropriate techniques to overcome challenges, researchers protect the credibility and impact of their work. This is essential in producing high-quality research that can withstand scrutiny and drive progress.

Are you seeking a reliable and valid way to collect, analyze, and report your quantitative data? Sago’s comprehensive quantitative solutions provide you the peace of mind to conduct research and draw meaningful conclusions.

Reliability vs Validity in Research | Differences, Types & Examples

Published on 3 May 2022 by Fiona Middleton. Revised on 10 October 2022.

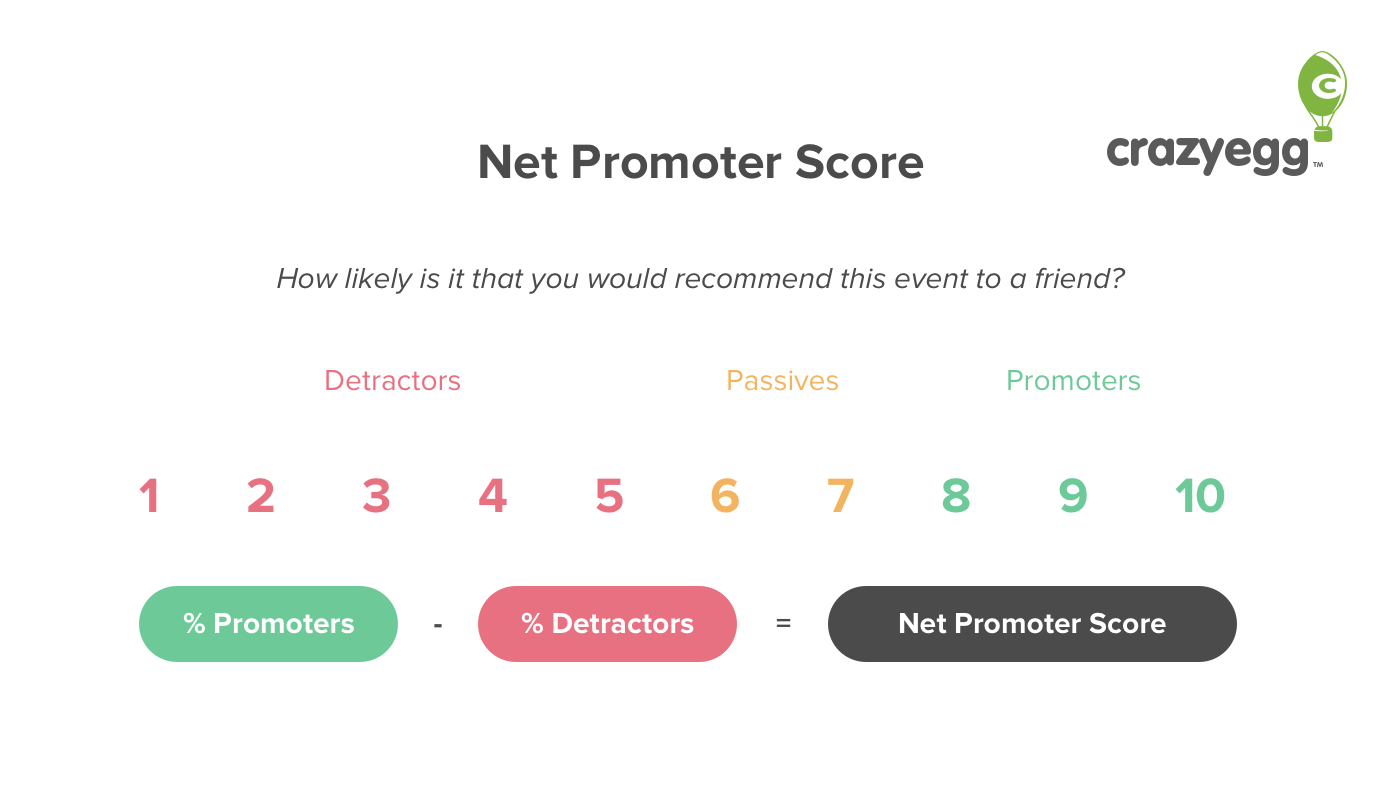

Reliability and validity are concepts used to evaluate the quality of research. They indicate how well a method, technique, or test measures something. Reliability is about the consistency of a measure, and validity is about the accuracy of a measure.

It’s important to consider reliability and validity when you are creating your research design, planning your methods, and writing up your results, especially in quantitative research.

| | Reliability | Validity |
|---|---|---|
| What does it tell you? | The extent to which the results can be reproduced when the research is repeated under the same conditions. | The extent to which the results really measure what they are supposed to measure. |
| How is it assessed? | By checking the consistency of results across time, across different observers, and across parts of the test itself. | By checking how well the results correspond to established theories and other measures of the same concept. |
| How do they relate? | A reliable measurement is not always valid: the results might be reproducible, but they’re not necessarily correct. | A valid measurement is generally reliable: if a test produces accurate results, they should be reproducible. |

Table of contents

- Understanding reliability vs validity

- How are reliability and validity assessed

- How to ensure validity and reliability in your research

- Where to write about reliability and validity in a thesis

Reliability and validity are closely related, but they mean different things. A measurement can be reliable without being valid. However, if a measurement is valid, it is usually also reliable.

What is reliability?

Reliability refers to how consistently a method measures something. If the same result can be consistently achieved by using the same methods under the same circumstances, the measurement is considered reliable.

What is validity?

Validity refers to how accurately a method measures what it is intended to measure. If research has high validity, that means it produces results that correspond to real properties, characteristics, and variations in the physical or social world.

High reliability is one indicator that a measurement is valid. If a method is not reliable, it probably isn’t valid.

However, reliability on its own is not enough to ensure validity. Even if a test is reliable, it may not accurately reflect the real situation.

Validity is harder to assess than reliability, but it is even more important. To obtain useful results, the methods you use to collect your data must be valid: the research must be measuring what it claims to measure. This ensures that your discussion of the data and the conclusions you draw are also valid.

Reliability can be estimated by comparing different versions of the same measurement. Validity is harder to assess, but it can be estimated by comparing the results to other relevant data or theory. Methods of estimating reliability and validity are usually split up into different types.

Types of reliability

Different types of reliability can be estimated through various statistical methods.

| Type of reliability | What does it assess? | Example |
|---|---|---|
| Test-retest | The consistency of a measure across time: do you get the same results when you repeat the measurement? | A group of participants complete a questionnaire designed to measure personality traits. If they repeat the questionnaire days, weeks, or months apart and give the same answers, this indicates high test-retest reliability. |
| Inter-rater | The consistency of a measure across raters or observers: do you get the same results when different people conduct the same measurement? | Based on an assessment criteria checklist, five examiners submit substantially different results for the same student project. This indicates that the assessment checklist has low inter-rater reliability (for example, because the criteria are too subjective). |
| Internal consistency | The consistency of the measurement itself: do you get the same results from different parts of a test that are designed to measure the same thing? | You design a questionnaire to measure self-esteem. If you randomly split the results into two halves, there should be a strong correlation between the two sets of results. If the two results are very different, this indicates low internal consistency. |
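The split-half idea in the internal consistency example can be sketched in a few lines. This is a minimal illustration under stated assumptions: the responses are invented, the split is made at the item level, and the Spearman-Brown step (which adjusts for each half being only half as long as the full questionnaire) is a common companion to split-half estimates rather than something this article describes.

```python
import random
from math import sqrt

def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length score lists."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)

def split_half_reliability(responses, seed=0):
    """Return (half-test correlation, Spearman-Brown corrected estimate)."""
    n_items = len(responses[0])
    items = list(range(n_items))
    random.Random(seed).shuffle(items)   # random but reproducible split
    half_a, half_b = items[: n_items // 2], items[n_items // 2:]
    scores_a = [sum(row[i] for i in half_a) for row in responses]
    scores_b = [sum(row[i] for i in half_b) for row in responses]
    r = pearson_r(scores_a, scores_b)
    return r, 2 * r / (1 + r)

# Hypothetical data: six respondents (rows) answering six 1-5 scale items (columns).
responses = [
    [4, 5, 4, 5, 4, 4],
    [2, 1, 2, 2, 1, 2],
    [3, 3, 4, 3, 3, 3],
    [5, 5, 5, 4, 5, 5],
    [1, 2, 1, 1, 2, 1],
    [3, 4, 3, 3, 4, 4],
]

r_half, r_corrected = split_half_reliability(responses)
print(f"Half-test correlation: {r_half:.2f}, corrected: {r_corrected:.2f}")
```

Because the result depends on which items land in which half, it can be worth repeating the split with several seeds and comparing the estimates.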

Types of validity

The validity of a measurement can be estimated based on three main types of evidence. Each type can be evaluated through expert judgement or statistical methods.

| Type of validity | What does it assess? | Example |
|---|---|---|
| Construct | The adherence of a measure to existing theory and knowledge of the concept being measured. | A self-esteem questionnaire could be assessed by measuring other traits known or assumed to be related to the concept of self-esteem (such as social skills and optimism). Strong correlation between the scores for self-esteem and associated traits would indicate high construct validity. |
| Content | The extent to which the measurement covers all aspects of the concept being measured. | A test that aims to measure a class of students’ level of Spanish contains reading, writing, and speaking components, but no listening component. Experts agree that listening comprehension is an essential aspect of language ability, so the test lacks content validity for measuring the overall level of ability in Spanish. |
| Criterion | The extent to which the result of a measure corresponds to other valid measures of the same concept. | A survey is conducted to measure the political opinions of voters in a region. If the results accurately predict the later outcome of an election in that region, this indicates that the survey has high criterion validity. |

To assess the validity of a cause-and-effect relationship, you also need to consider internal validity (the design of the experiment) and external validity (the generalisability of the results).

The reliability and validity of your results depend on creating a strong research design, choosing appropriate methods and samples, and conducting the research carefully and consistently.

Ensuring validity

If you use scores or ratings to measure variations in something (such as psychological traits, levels of ability, or physical properties), it’s important that your results reflect the real variations as accurately as possible. Validity should be considered in the very earliest stages of your research, when you decide how you will collect your data.

- Choose appropriate methods of measurement

Ensure that your method and measurement technique are of high quality and targeted to measure exactly what you want to know. They should be thoroughly researched and based on existing knowledge.

For example, to collect data on a personality trait, you could use a standardised questionnaire that is considered reliable and valid. If you develop your own questionnaire, it should be based on established theory or the findings of previous studies, and the questions should be carefully and precisely worded.

- Use appropriate sampling methods to select your subjects

To produce valid generalisable results, clearly define the population you are researching (e.g., people from a specific age range, geographical location, or profession). Ensure that you have enough participants and that they are representative of the population.

Ensuring reliability

Reliability should be considered throughout the data collection process. When you use a tool or technique to collect data, it’s important that the results are precise, stable, and reproducible.

- Apply your methods consistently

Plan your method carefully to make sure you carry out the same steps in the same way for each measurement. This is especially important if multiple researchers are involved.

For example, if you are conducting interviews or observations, clearly define how specific behaviours or responses will be counted, and make sure questions are phrased the same way each time.

- Standardise the conditions of your research

When you collect your data, keep the circumstances as consistent as possible to reduce the influence of external factors that might create variation in the results.

For example, in an experimental setup, make sure all participants are given the same information and tested under the same conditions.

It’s appropriate to discuss reliability and validity in various sections of your thesis, dissertation, or research paper. Showing that you have taken them into account in planning your research and interpreting the results makes your work more credible and trustworthy.

| Section | Discuss |
|---|---|
| Literature review | What have other researchers done to devise and improve methods that are reliable and valid? |
| Methodology | How did you plan your research to ensure reliability and validity of the measures used? This includes the chosen sample set and size, sample preparation, external conditions, and measuring techniques. |
| Results | If you calculate reliability and validity, state these values alongside your main results. |
| Discussion | This is the moment to talk about how reliable and valid your results actually were. Were they consistent, and did they reflect true values? If not, why not? |
| Conclusion | If reliability and validity were a big problem for your findings, it might be helpful to mention this here. |

Cite this Scribbr article

Middleton, F. (2022, October 10). Reliability vs Validity in Research | Differences, Types & Examples. Scribbr. Retrieved 5 August 2024, from https://www.scribbr.co.uk/research-methods/reliability-or-validity/

Statistics By Jim

Making statistics intuitive

Reliability vs Validity: Differences & Examples

By Jim Frost

Reliability and validity are criteria by which researchers assess measurement quality. Measuring a person or item involves assigning scores to represent an attribute. This process creates the data that we analyze. However, to provide meaningful research results, that data must be good. And not all data are good!

For data to be good enough to allow you to draw meaningful conclusions from a research study, they must be reliable and valid. What are the properties of good measurements? In a nutshell, reliability relates to the consistency of measures, and validity addresses whether the measurements are quantifying the correct attribute.

In this post, learn about reliability vs. validity, their relationship, and the various ways to assess them.

Learn more about Experimental Design: Definition, Types, and Examples.

Reliability

Reliability refers to the consistency of the measure. High reliability indicates that the measurement system produces similar results under the same conditions. If you measure the same item or person multiple times, you want to obtain comparable values. They are reproducible.

If you take measurements multiple times and obtain very different values, your data are unreliable. Numbers are meaningless if repeated measures do not produce similar values. What’s the correct value? No one knows! This inconsistency hampers your ability to draw conclusions and understand relationships.

Suppose you have a bathroom scale that displays very inconsistent results from one time to the next. It’s very unreliable. It would be hard to use your scale to determine your correct weight and to know whether you are losing weight.

Inadequate data collection procedures and low-quality or defective data collection tools can produce unreliable data. Additionally, some characteristics are more challenging to measure reliably. For example, the length of an object is concrete. On the other hand, psychological constructs such as conscientiousness, depression, and self-esteem can be trickier to measure reliably.

When assessing studies, evaluate data collection methodologies and consider whether any issues undermine their reliability.

Validity

Validity refers to whether the measurements reflect what they’re supposed to measure. This concept is a broader issue than reliability. Researchers need to consider whether they’re measuring what they think they’re measuring. Or do the measurements reflect something else? Does the instrument measure what it says it measures? It’s a question that addresses the appropriateness of the data rather than whether measurements are repeatable.

Validity is a smaller concern for tangible measurements like height and weight. You might have a biased bathroom scale if it tends to read too high or too low—but it still measures weight. Validity is a bigger concern in the social sciences, where you can measure elusive concepts such as positive outlook and self-esteem. If you’re assessing the psychological construct of conscientiousness, you need to confirm that the instrument poses questions that appraise this attribute rather than, say, obedience.

Reliability vs Validity

A measurement must be reliable first before it has a chance of being valid. After all, if you don’t obtain consistent measurements for the same object or person under similar conditions, it can’t be valid. If your scale displays a different weight every time you step on it, it’s unreliable, and it is also invalid.

So, having reliable measurements is the first step towards having valid measures. Reliability is necessary for validity, but it is insufficient by itself.

Suppose you have a reliable measurement. You step on your scale a few times in a short period, and it displays very similar weights. It’s reliable. But the weight might be incorrect.

Just because you can measure the same object multiple times and get consistent values, it does not necessarily indicate that the measurements reflect the desired characteristic.
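The bathroom-scale scenario can be made concrete with a small sketch. The readings and the "true" weight below are invented for illustration; in practice the true value would come from a trusted reference measurement.

```python
from statistics import mean, stdev

# Hypothetical: five readings taken minutes apart on the same scale, in kg.
readings = [72.4, 72.6, 72.5, 72.5, 72.4]
true_weight = 70.0   # assumed reference value from a calibrated scale

spread = stdev(readings)             # small spread: readings agree with each other
bias = mean(readings) - true_weight  # large bias: readings are consistently off

print(f"Spread between readings: {spread:.2f} kg")
print(f"Average error vs. reference: {bias:+.2f} kg")
```

The small spread is what makes the scale look reliable, while the consistent offset from the reference is what makes its readings incorrect. Repeated measurements alone cannot reveal that offset; an outside reference is needed.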

How can you determine whether measurements are both valid and reliable? Assessing reliability vs. validity is the topic for the rest of this post!

| Reliability | Validity |
|---|---|
| Similar measurements for the same person/item under the same conditions. | Measurements reflect what they’re supposed to measure. |
| Stability of results across time, between observers, within the test. | Measures have appropriate relationships to theories, similar measures, and different measures. |
| Unreliable measurements typically cannot be valid. | Valid measurements are also reliable. |

How to Assess Reliability

Reliability relates to measurement consistency. To evaluate reliability, analysts assess consistency over time, within the measurement instrument, and between different observers. These types of consistency are known as test-retest, internal, and inter-rater reliability. Typically, appraising these forms of reliability involves taking multiple measures of the same person, object, or construct and assessing scatterplots and correlations of the measurements. Reliable measurements have high correlations because the scores are similar.

Test-Retest Reliability

Analysts often assume that measurements should be consistent across a short time. If you measure your height twice over a couple of days, you should obtain roughly the same measurements.

To assess test-retest reliability, the experimenters typically measure a group of participants on two occasions within a few days. Usually, you’ll evaluate the reliability of the repeated measures using scatterplots and correlation coefficients. You expect to see high correlations and tight lines on the scatterplot when the characteristic you measure is consistent over a short period, and you have a reliable measurement system.

This type of reliability establishes the degree to which a test can produce stable, consistent scores across time. However, in practice, measurement instruments are never entirely consistent.

Keep in mind that some characteristics should not be consistent across time. A good example is your mood, which can change from moment to moment. A test-retest assessment of mood is not likely to produce a high correlation even though it might be a useful measurement instrument.

Internal Reliability

This type of reliability assesses consistency across items within a single instrument. Researchers evaluate internal reliability when they’re using instruments such as surveys or personality inventories. In these instruments, multiple items relate to a single construct. Questions that measure the same characteristic should have a high correlation. People who indicate they are risk-takers should also note that they participate in dangerous activities. If items that supposedly measure the same underlying construct have a low correlation, they are not consistent with each other and might not measure the same thing.

Inter-Rater Reliability

This type of reliability assesses consistency across different observers, judges, or evaluators. When various observers produce similar measurements for the same item or person, their scores are highly correlated. Inter-rater reliability is essential when the subjectivity or skill of the evaluator plays a role. For example, assessing the quality of a writing sample involves subjectivity. Researchers can employ rating guidelines to reduce subjectivity. Comparing the scores from different evaluators for the same writing sample helps establish the measure’s reliability. Learn more about inter-rater reliability.
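When raters assign categories rather than numeric scores, a correlation is less natural, and one widely used alternative is Cohen’s kappa, which compares observed agreement with the agreement expected by chance. The sketch below is an illustration only: kappa is not discussed in this post, and the pass/fail ratings are invented.

```python
from collections import Counter

def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters labelling the same items."""
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    # Chance agreement: probability both raters pick the same category at random.
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / n ** 2
    return (observed - expected) / (1 - expected)

# Hypothetical: two evaluators grade the same ten writing samples.
rater_a = ["pass", "pass", "fail", "pass", "fail", "fail", "pass", "pass", "fail", "pass"]
rater_b = ["pass", "pass", "fail", "pass", "pass", "fail", "pass", "fail", "fail", "pass"]

kappa = cohens_kappa(rater_a, rater_b)
print(f"Cohen's kappa: {kappa:.2f}")
```

Here the raters agree on 8 of 10 samples, but because some of that agreement would occur by chance, kappa comes out lower than the raw 80% agreement rate.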

Related post : Interpreting Correlation

Cronbach’s Alpha

Cronbach’s alpha measures the internal consistency, or reliability, of a set of survey items. Use this statistic to help determine whether a collection of items consistently measures the same characteristic. Learn more about Cronbach’s Alpha .
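For readers who want to see the calculation, here is a minimal sketch of Cronbach's alpha using the standard formula alpha = k/(k-1) × (1 − sum of item variances / variance of total scores). The ratings are invented for illustration, and the function name `cronbach_alpha` is an assumption of this sketch rather than part of any particular package.

```python
from statistics import variance

def cronbach_alpha(items):
    """Cronbach's alpha.

    items: list of item columns; each column holds one score per respondent,
    with respondents in the same order in every column.
    """
    k = len(items)
    if k < 2:
        raise ValueError("need at least two items")
    # Total score per respondent (sum across items).
    totals = [sum(scores) for scores in zip(*items)]
    item_variance_sum = sum(variance(column) for column in items)
    return (k / (k - 1)) * (1 - item_variance_sum / variance(totals))

# Hypothetical 1-5 ratings: three items answered by five respondents.
items = [
    [4, 2, 5, 3, 1],
    [5, 2, 4, 3, 1],
    [4, 1, 5, 3, 2],
]
alpha = cronbach_alpha(items)
print(f"Cronbach's alpha: {alpha:.3f}")
```

Sample variances are used for both the items and the totals so that the ratio is consistent.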

Gage R&R Studies

These studies evaluate a measurement system’s reliability and identify sources of variation that can help you target improvement efforts effectively. Learn more about Gage R&R Studies .

How to Assess Validity

Validity is more difficult to evaluate than reliability. After all, with reliability, you only assess whether the measures are consistent across time, within the instrument, and between observers. On the other hand, evaluating validity involves determining whether the instrument measures the correct characteristic. This process frequently requires examining relationships between these measurements, other data, and theory. Validating a measurement instrument requires you to use a wide range of subject-area knowledge and different types of constructs to determine whether the measurements from your instrument fit in with the bigger picture!

An instrument with high validity produces measurements that correctly fit the larger picture with other constructs. Validity assesses whether the web of empirical relationships aligns with the theoretical relationships.

The measurements must have a positive relationship with other measures of the same construct. Additionally, they need to correlate in the correct direction (positively or negatively) with the theoretically correct constructs. Finally, the measures should have no relationship with unrelated constructs.
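The three expectations above can be checked with simple correlations. The sketch below is one way to do it, assuming you have scores from a new scale, an established measure of the same construct, a measure of a theoretically opposing construct, and an unrelated measure. All of the data and variable names are hypothetical.

```python
from math import sqrt

def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(ss_x * ss_y)

# Hypothetical scores for the same six respondents on four instruments.
new_scale = [10, 14, 8, 18, 12, 16]
same_construct = [11, 15, 9, 17, 12, 15]       # established measure of the same construct
opposing_construct = [20, 15, 22, 10, 18, 13]  # theory predicts a negative relationship
unrelated_construct = [5, 7, 6, 6, 7, 5]       # theory predicts no relationship

r_same = pearson_r(new_scale, same_construct)
r_opposing = pearson_r(new_scale, opposing_construct)
r_unrelated = pearson_r(new_scale, unrelated_construct)

print(f"same construct:      {r_same:+.2f}")
print(f"opposing construct:  {r_opposing:+.2f}")
print(f"unrelated construct: {r_unrelated:+.2f}")
```

With real data the unrelated correlation will rarely be exactly zero. The pattern to look for is strong positive, strong negative, and weak, in that order.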

If you need more detailed information, read my post that focuses on Measurement Validity . In that post, I cover the various types, how to evaluate them, and provide examples.

Experimental validity relates to experimental designs and methods. To learn about that topic, read my post about Internal and External Validity .

Whew, that’s a lot of information about reliability vs. validity. Using these concepts, you can determine whether a measurement instrument produces good data!


Validity vs. Reliability in Research: What's the Difference?

Introduction

- What is the difference between reliability and validity in a study?

- What is an example of reliability and validity?

- How to ensure validity and reliability in your research

- Critiques of reliability and validity

In research, validity and reliability are crucial for producing robust findings. They provide a foundation that assures scholars, practitioners, and readers alike that the research's insights are both accurate and consistent. However, the nuanced nature of qualitative data often blurs the lines between these concepts, making it imperative for researchers to discern their distinct roles.

This article seeks to illuminate the intricacies of reliability and validity, highlighting their significance and distinguishing their unique attributes. By understanding these critical facets, qualitative researchers can ensure their work not only resonates with authenticity but also trustworthiness.

In the domain of research, whether qualitative or quantitative , two concepts often arise when discussing the quality and rigor of a study: reliability and validity . These two terms, while interconnected, have distinct meanings that hold significant weight in the world of research.

Reliability, at its core, speaks to the consistency of a study. If a study or test measures the same concept repeatedly and yields the same results, it demonstrates a high degree of reliability. A common method for assessing reliability is through internal consistency reliability, which checks if multiple items that measure the same concept produce similar scores.

Another method often used is inter-rater reliability , which gauges the consistency of scores given by different raters. This approach is especially amenable to qualitative research , and it can help researchers assess the clarity of their code system and the consistency of their codings . For a study to be more dependable, it's imperative to ensure a sufficient measurement of reliability is achieved.

On the other hand, validity is concerned with accuracy. It looks at whether a study truly measures what it claims to. Within the realm of validity, several types exist. Construct validity, for instance, verifies that a study measures the intended abstract concept or underlying construct. If a study aims to measure self-esteem and accurately captures this abstract trait, it demonstrates strong construct validity.

Content validity ensures that a test or study comprehensively represents the entire domain of the concept it seeks to measure. For instance, if a test aims to assess mathematical ability, it should cover arithmetic, algebra, geometry, and more to showcase strong content validity.

Criterion validity is another form of validity that ensures that the scores from a test correlate well with a measure from a related outcome. A subset of this is predictive validity, which checks if the test can predict future outcomes. For instance, if an aptitude test can predict future job performance, it can be said to have high predictive validity.

The distinction between reliability and validity becomes clear when one considers the nature of their focus. While reliability is concerned with consistency and reproducibility, validity zeroes in on accuracy and truthfulness.

A research tool can be reliable without being valid. For instance, a faulty instrument might consistently give the same bad readings (reliable but not valid). Conversely, the same test administered multiple times could sometimes hit the mark and at other times miss it entirely, producing different test scores each time. This would make it accurate in some instances but not reliable.

For a study to be robust, it must achieve both reliability and validity. Reliability ensures the study's findings are reproducible while validity confirms that it accurately represents the phenomena it claims to. Ensuring both in a study means the results are both dependable and accurate, forming a cornerstone for high-quality research.

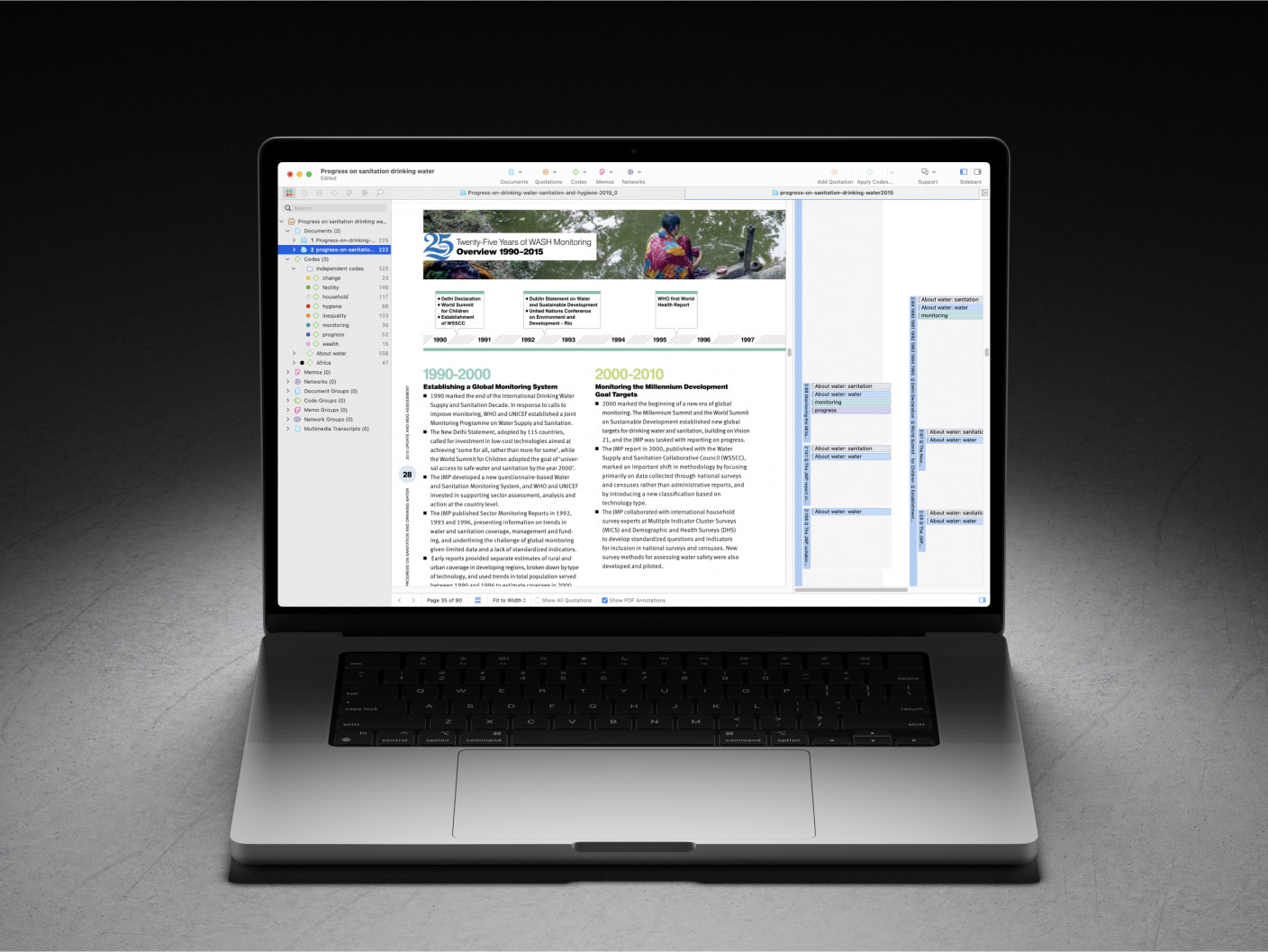

Understanding the nuances of reliability and validity becomes clearer when contextualized within a real-world research setting. Imagine a qualitative study where a researcher aims to explore the experiences of teachers in urban schools concerning classroom management. The primary method of data collection is semi-structured interviews .

To ensure the reliability of this qualitative study, the researcher crafts a consistent list of open-ended questions for the interview. This ensures that, while each conversation might meander based on the individual’s experiences, there remains a core set of topics related to classroom management that every participant addresses.

The essence of reliability in this context isn't necessarily about garnering identical responses but rather about achieving a consistent approach to data collection and subsequent interpretation . As part of this commitment to reliability, two researchers might independently transcribe and analyze a subset of these interviews. If they identify similar themes and patterns in their independent analyses, it suggests a consistent interpretation of the data, showcasing inter-rater reliability .

Validity , on the other hand, is anchored in ensuring that the research genuinely captures and represents the lived experiences and sentiments of teachers concerning classroom management. To establish content validity, the list of interview questions is thoroughly reviewed by a panel of educational experts. Their feedback ensures that the questions encompass the breadth of issues and concerns related to classroom management in urban school settings.

As the interviews are conducted, the researcher pays close attention to the depth and authenticity of responses. After the interviews, member checking could be employed, where participants review the researcher's interpretation of their responses to ensure that their experiences and perspectives have been accurately captured. This strategy helps in affirming the study's construct validity, ensuring that the abstract concept of "experiences with classroom management" has been truthfully and adequately represented.

In this example, we can see that while the interview study is rooted in qualitative methods and subjective experiences, the principles of reliability and validity can still meaningfully inform the research process. They serve as guides to ensure the research's findings are both dependable and genuinely reflective of the participants' experiences.

Ensuring validity and reliability in research, irrespective of its qualitative or quantitative nature, is pivotal to producing results that are both trustworthy and robust. Here's how you can integrate these concepts into your study to ensure its rigor:

Reliability is about consistency. One of the most straightforward ways to gauge it in quantitative research is using test-retest reliability. It involves administering the same test to the same group of participants on two separate occasions and then comparing the results.

A high degree of similarity between the two sets of results indicates good reliability. This can often be measured using a correlation coefficient, where a value closer to 1 indicates a strong positive consistency between the two test iterations.

Validity, on the other hand, ensures that the research genuinely measures what it intends to. There are various forms of validity to consider. Convergent validity ensures that two measures of the same construct or those that should theoretically be related, are indeed correlated. For example, two different measures assessing self-esteem should show similar results for the same group, highlighting that they are measuring the same underlying construct.

Face validity is the most basic form of validity and is gauged by the sheer appearance of the measurement tool. If, at face value, a test seems like it measures what it claims to, it has face validity. This is often the first step and is usually followed by more rigorous forms of validity testing.

Criterion-related validity, another name for the criterion validity discussed earlier, evaluates how well the outcomes of a particular test or measurement correlate with another related measure. For example, if a new tool is developed to measure reading comprehension, its results can be compared with those of an established reading comprehension test to assess its criterion-related validity. If the results show a strong correlation, it's a sign that the new tool is valid.

Ensuring both validity and reliability requires deliberate planning, meticulous testing, and constant reflection on the study's methods and results. This might involve using established scales or measures with proven validity and reliability, conducting pilot studies to refine measurement tools, and always staying cognizant of the fact that these two concepts are important considerations for research robustness.

While reliability and validity are foundational concepts in many traditional research paradigms, they have not escaped scrutiny, especially from critical and poststructuralist perspectives. These critiques often arise from the fundamental philosophical differences in how knowledge, truth, and reality are perceived and constructed.

From a poststructuralist viewpoint, the very pursuit of a singular "truth" or an objective reality is questionable. In such a perspective, multiple truths exist, each shaped by its own socio-cultural, historical, and individual contexts.

Reliability, with its emphasis on consistent replication, might then seem at odds with this understanding. If truths are multiple and shifting, how can consistency across repeated measures or observations be a valid measure of anything other than the research instrument's stability?

Validity, too, faces critique. In seeking to ensure that a study measures what it purports to measure, there's an implicit assumption of an observable, knowable reality. Poststructuralist critiques question this foundation, arguing that reality is too fluid, multifaceted, and influenced by power dynamics to be pinned down by any singular measurement or representation.

Moreover, the very act of determining "validity" often requires an external benchmark or "gold standard." This brings up the issue of who determines this standard and the power dynamics and potential biases inherent in such decisions.

Another point of contention is the way these concepts can inadvertently prioritize certain forms of knowledge over others. For instance, privileging research that meets stringent reliability and validity criteria might marginalize more exploratory, interpretive, or indigenous research methods. These methods, while offering deep insights, might not align neatly with traditional understandings of reliability and validity, potentially relegating them to the periphery of "accepted" knowledge production.

To be sure, reliability and validity serve as guiding principles in many research approaches. However, it's essential to recognize their limitations and the critiques posed by alternative epistemologies. Engaging with these critiques doesn't diminish the value of reliability and validity but rather enriches our understanding of the multifaceted nature of knowledge and the complexities of its pursuit.

The 4 Types of Reliability in Research | Definitions & Examples

Published on August 8, 2019 by Fiona Middleton . Revised on June 22, 2023.

Reliability tells you how consistently a method measures something. When you apply the same method to the same sample under the same conditions, you should get the same results. If not, the method of measurement may be unreliable or bias may have crept into your research.

There are four main types of reliability. Each can be estimated by comparing different sets of results produced by the same method.

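| Type of reliability | Measures the consistency of… |

|---|---|

| Test-retest | The same test over time. |

| Interrater | The same test conducted by different people. |

| Parallel forms | Different versions of a test which are designed to be equivalent. |

| Internal consistency | The individual items of a test. |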

Table of contents

- Test-retest reliability

- Interrater reliability

- Parallel forms reliability

- Internal consistency

- Which type of reliability applies to my research?

- Other interesting articles

- Frequently asked questions about types of reliability

Test-retest reliability measures the consistency of results when you repeat the same test on the same sample at a different point in time. You use it when you are measuring something that you expect to stay constant in your sample.

Why it’s important

Many factors can influence your results at different points in time: for example, respondents might experience different moods, or external conditions might affect their ability to respond accurately.

Test-retest reliability can be used to assess how well a method resists these factors over time. The smaller the difference between the two sets of results, the higher the test-retest reliability.

How to measure it

To measure test-retest reliability, you conduct the same test on the same group of people at two different points in time. Then you calculate the correlation between the two sets of results.

Test-retest reliability example

You devise a questionnaire to measure the IQ of a group of participants (a property that is unlikely to change significantly over time). You administer the test two months apart to the same group of people, but the results are significantly different, so the test-retest reliability of the IQ questionnaire is low.

Improving test-retest reliability

- When designing tests or questionnaires , try to formulate questions, statements, and tasks in a way that won’t be influenced by the mood or concentration of participants.

- When planning your methods of data collection , try to minimize the influence of external factors, and make sure all samples are tested under the same conditions.

- Remember that changes or recall bias can be expected to occur in the participants over time, and take these into account.

Interrater reliability (also called interobserver reliability) measures the degree of agreement between different people observing or assessing the same thing. You use it when data is collected by researchers assigning ratings, scores or categories to one or more variables , and it can help mitigate observer bias .

People are subjective, so different observers’ perceptions of situations and phenomena naturally differ. Reliable research aims to minimize subjectivity as much as possible so that a different researcher could replicate the same results.

When designing the scale and criteria for data collection, it’s important to make sure that different people will rate the same variable consistently with minimal bias . This is especially important when there are multiple researchers involved in data collection or analysis.

To measure interrater reliability, different researchers conduct the same measurement or observation on the same sample. Then you calculate the correlation between their different sets of results. If all the researchers give similar ratings, the test has high interrater reliability.

Interrater reliability example

A team of researchers observes the progress of wound healing in patients. To record the stages of healing, rating scales are used, with a set of criteria to assess various aspects of wounds. The results of different researchers assessing the same set of patients are compared, and there is a strong correlation between all sets of results, so the test has high interrater reliability.
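The article describes comparing raters with a correlation. When ratings are categories rather than numbers (for example, named healing stages), a commonly used alternative is Cohen's kappa, which corrects the raw agreement rate for agreement expected by chance. The sketch below swaps in that statistic. The stage labels and ratings are hypothetical.

```python
from collections import Counter

def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters assigning one category per subject."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("need two non-empty rating lists of equal length")
    n = len(rater_a)
    # Proportion of subjects on which the two raters agree.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Agreement expected by chance, from each rater's category frequencies.
    counts_a = Counter(rater_a)
    counts_b = Counter(rater_b)
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if expected == 1:
        return 1.0  # both raters used the same single category throughout
    return (observed - expected) / (1 - expected)

# Hypothetical healing-stage ratings for ten patients from two researchers.
rater_a = ["early", "early", "mid", "mid", "late", "late", "mid", "early", "late", "mid"]
rater_b = ["early", "mid", "mid", "mid", "late", "late", "mid", "early", "late", "late"]

kappa = cohens_kappa(rater_a, rater_b)
print(f"Cohen's kappa: {kappa:.3f}")
```

Here the raters agree on 8 of 10 patients, but kappa is lower than 0.8 because some of that agreement would be expected by chance alone.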

Improving interrater reliability

- Clearly define your variables and the methods that will be used to measure them.

- Develop detailed, objective criteria for how the variables will be rated, counted or categorized.

- If multiple researchers are involved, ensure that they all have exactly the same information and training.

Parallel forms reliability measures the correlation between two equivalent versions of a test. You use it when you have two different assessment tools or sets of questions designed to measure the same thing.

If you want to use multiple different versions of a test (for example, to avoid respondents repeating the same answers from memory), you first need to make sure that all the sets of questions or measurements give reliable results.

The most common way to measure parallel forms reliability is to produce a large set of questions to evaluate the same thing, then divide these randomly into two question sets.

The same group of respondents answers both sets, and you calculate the correlation between the results. High correlation between the two indicates high parallel forms reliability.

Parallel forms reliability example

A set of questions is formulated to measure financial risk aversion in a group of respondents. The questions are randomly divided into two sets, and the respondents are randomly divided into two groups. Both groups take both tests: group A takes test A first, and group B takes test B first. The results of the two tests are compared, and the results are almost identical, indicating high parallel forms reliability.

Improving parallel forms reliability

- Ensure that all questions or test items are based on the same theory and formulated to measure the same thing.

Internal consistency assesses the correlation between multiple items in a test that are intended to measure the same construct.

You can calculate internal consistency without repeating the test or involving other researchers, so it’s a good way of assessing reliability when you only have one data set.

When you devise a set of questions or ratings that will be combined into an overall score, you have to make sure that all of the items really do reflect the same thing. If responses to different items contradict one another, the test might be unreliable.

Two common methods are used to measure internal consistency.

- Average inter-item correlation : For a set of measures designed to assess the same construct, you calculate the correlation between the results of all possible pairs of items and then calculate the average.

- Split-half reliability : You randomly split a set of measures into two sets. After testing the entire set on the respondents, you calculate the correlation between the two sets of responses.
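Both methods can be sketched in a few lines. The example below assumes each item is stored as a list of scores with respondents in the same order. The data, the function names, and the fixed random seed are choices made for this illustration only.

```python
import random
from itertools import combinations
from math import sqrt

def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(ss_x * ss_y)

def average_inter_item_correlation(items):
    """Mean Pearson correlation over all possible pairs of items."""
    pairs = list(combinations(items, 2))
    return sum(pearson_r(a, b) for a, b in pairs) / len(pairs)

def split_half_correlation(items, seed=0):
    """Randomly split the items into two halves and correlate the half scores."""
    order = list(range(len(items)))
    random.Random(seed).shuffle(order)  # seeded so the split is repeatable
    half = len(order) // 2
    first, second = order[:half], order[half:]
    respondents = range(len(items[0]))
    score_first = [sum(items[i][r] for i in first) for r in respondents]
    score_second = [sum(items[i][r] for i in second) for r in respondents]
    return pearson_r(score_first, score_second)

# Hypothetical 1-5 ratings: four items answered by six respondents.
items = [
    [4, 2, 5, 3, 1, 4],
    [5, 2, 4, 3, 1, 4],
    [4, 1, 5, 3, 2, 5],
    [5, 2, 5, 2, 1, 4],
]

avg_r = average_inter_item_correlation(items)
split_r = split_half_correlation(items)
print(f"average inter-item correlation: {avg_r:.3f}")
print(f"split-half correlation:         {split_r:.3f}")
```

The split-half value depends on which items land in which half, so a different seed can give a somewhat different number.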

Internal consistency example

A group of respondents are presented with a set of statements designed to measure optimistic and pessimistic mindsets. They must rate their agreement with each statement on a scale from 1 to 5. If the test is internally consistent, an optimistic respondent should generally give high ratings to optimism indicators and low ratings to pessimism indicators. The correlation is calculated between all the responses to the “optimistic” statements, but the correlation is very weak. This suggests that the test has low internal consistency.

Improving internal consistency

- Take care when devising questions or measures: those intended to reflect the same concept should be based on the same theory and carefully formulated.

It’s important to consider reliability when planning your research design , collecting and analyzing your data, and writing up your research. The type of reliability you should calculate depends on the type of research and your methodology .

| What is my methodology? | Which form of reliability is relevant? |

|---|---|

| Measuring a property that you expect to stay the same over time. | Test-retest |

| Multiple researchers making observations or ratings about the same topic. | Interrater |

| Using two different tests to measure the same thing. | Parallel forms |

| Using a multi-item test where all the items are intended to measure the same variable. | Internal consistency |

If possible and relevant, you should statistically calculate reliability and state this alongside your results .

Reliability and validity are both about how well a method measures something:

- Reliability refers to the consistency of a measure (whether the results can be reproduced under the same conditions).

- Validity refers to the accuracy of a measure (whether the results really do represent what they are supposed to measure).

If you are doing experimental research, you also have to consider the internal and external validity of your experiment.

You can use several tactics to minimize observer bias .

- Use masking (blinding) to hide the purpose of your study from all observers.

- Triangulate your data with different data collection methods or sources.

- Use multiple observers and ensure interrater reliability.

- Train your observers to make sure data is consistently recorded between them.

- Standardize your observation procedures to make sure they are structured and clear.

Reproducibility and replicability are related terms.

- A successful reproduction shows that the data analyses were conducted in a fair and honest manner.

- A successful replication shows that the reliability of the results is high.

Research bias affects the validity and reliability of your research findings , leading to false conclusions and a misinterpretation of the truth. This can have serious implications in areas like medical research where, for example, a new form of treatment may be evaluated.

Cite this Scribbr article

Middleton, F. (2023, June 22). The 4 Types of Reliability in Research | Definitions & Examples. Scribbr. Retrieved August 5, 2024, from https://www.scribbr.com/methodology/types-of-reliability/

Reliability and Validity – Definitions, Types & Examples

Published by Alvin Nicolas at August 16th, 2021 , Revised On October 26, 2023

A researcher must test the collected data before making any conclusion. Every research design needs to be concerned with reliability and validity to measure the quality of the research.

What is Reliability?

Reliability refers to the consistency of the measurement. Reliability shows how trustworthy the score of a test is. If the collected data shows the same results after being tested using various methods and sample groups, the information is reliable. Reliability is a precondition for validity, but a reliable method does not by itself guarantee valid results.

Example: If you weigh yourself on a weighing scale throughout the day, you’ll get the same results. These are considered reliable results obtained through repeated measures.

Example: Suppose a teacher gives students a math test and repeats it the next week with the same questions. If the students get the same scores, then the reliability of the test is high.

What is Validity?

Validity refers to the accuracy of the measurement. Validity shows how suitable a specific test is for a particular situation. If the results are accurate according to the researcher’s situation, explanation, and prediction, then the research is valid.

If the method of measuring is accurate, then it’ll produce accurate results. A reliable method is not necessarily valid. In contrast, if a method is not reliable, it’s not valid.

Example: Your weighing scale shows different results each time you weigh yourself within a day even after handling it carefully, and weighing before and after meals. Your weighing machine might be malfunctioning. It means your method had low reliability. Hence you are getting inaccurate or inconsistent results that are not valid.

Example: Suppose a questionnaire is distributed among a group of people to check the quality of a skincare product, and the same questionnaire is repeated with many groups. If you get the same response from the various participants, the questionnaire has high reliability, which is a precondition for its validity.

Most of the time, validity is difficult to measure even though the process of measurement is reliable. It isn’t easy to interpret the real situation.

Example: If the weighing scale shows the same result, let’s say 70 kg each time, even if your actual weight is 55 kg, then it means the weighing scale is malfunctioning. Because it shows consistent results, the scale is reliable, but the readings cannot be considered valid. It means the method has low validity.
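The weighing-scale example can be put into numbers: the spread of repeated readings speaks to consistency, and the gap between the average reading and the true value speaks to accuracy. The readings below are invented, and the variable names are assumptions of this sketch.

```python
from statistics import mean, stdev

true_weight_kg = 55.0
# Hypothetical repeated readings from the malfunctioning scale.
readings_kg = [70.0, 70.1, 69.9, 70.0, 70.0]

spread_kg = stdev(readings_kg)                # small spread: readings are consistent
bias_kg = mean(readings_kg) - true_weight_kg  # large bias: readings are inaccurate

print(f"spread of readings: {spread_kg:.2f} kg")
print(f"average error:      {bias_kg:+.1f} kg")
```

A small spread together with a large average error is the pattern of a measurement that is consistent but inaccurate.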

Internal Vs. External Validity

One of the key features of randomised designs is that they have significantly high internal and external validity.

Internal validity is the ability to draw a causal link between your treatment and the dependent variable of interest. It means the observed changes should be due to the experiment conducted, and any external factor should not influence the variables .

Example: external factors such as age, level, height, and grade.

External validity is the ability to identify and generalise your study outcomes to the population at large. The relationship between the study’s situation and the situations outside the study is considered external validity.

Also, read about Inductive vs Deductive reasoning in this article.

Threats to Internal Validity

| Threat | Definition | Example |

|---|---|---|

| Confounding factors | Unexpected events during the experiment that are not a part of treatment. | You attribute the increased weight of your experiment participants to a lack of physical activity, but it was actually due to the consumption of coffee with sugar. |

| Maturation | The influence on the dependent variable due to the passage of time. | During a long-term experiment, subjects may feel tired, bored, and hungry. |

| Testing | The results of one test affect the results of another test. | Participants of the first experiment may react differently during the second experiment. |

| Instrumentation | Changes in the instrument’s calibration. | A change in the measuring instrument may give different results instead of the expected results. |

| Statistical regression | Groups selected on the basis of extreme scores are not as extreme on subsequent testing. | Students who failed the pre-final exam are likely to pass the final exams; they might be more confident and conscious than earlier. |

| Selection bias | Choosing comparison groups without randomisation. | A group of trained and efficient teachers is selected to teach children communication skills instead of randomly selecting them. |

| Experimental mortality | Due to the extension of the time of the experiment, participants may leave the experiment. | Due to multi-tasking and various competition levels, the participants may leave the competition because they are dissatisfied with the time-extension even if they were doing well. |

Threats to External Validity

| Threat | Definition | Example |

|---|---|---|

| Reactive/interactive effects of testing | The participants of the pre-test may become aware of the next experiment. The treatment may not be effective without the pre-test. | Students who failed the pre-final exam are likely to pass the final exams; they might be more confident and conscious than earlier. |

| Selection of participants | A group of participants selected with specific characteristics and the treatment of the experiment may work only on the participants possessing those characteristics | If an experiment is conducted specifically on the health issues of pregnant women, the same treatment cannot be given to male participants. |

How to Assess Reliability and Validity?

Reliability can be measured by comparing the consistency of the procedure and its results. There are various methods to measure validity and reliability. Reliability can be measured through various statistical methods depending on the type of reliability, as explained below:

Types of Reliability

| Type of reliability | What does it measure? | Example |

|---|---|---|

| Test-retest | It measures the consistency of results at different points in time. It identifies whether the results are the same after repeated measures. | Suppose a questionnaire is distributed among a group of people to check the quality of a skincare product, and the same questionnaire is given to the same group again at a later date. If you get the same responses both times, the questionnaire has high test-retest reliability. |

| Inter-rater | It measures the consistency of results obtained at the same time by different raters (researchers). | Suppose five researchers measure the academic performance of the same student using questions from all the academic subjects and report widely differing results. This shows that the questionnaire has low inter-rater reliability. |

| Parallel forms | It measures equivalence. It involves different forms of the same test given to the same participants. | Suppose the same researcher conducts two different forms of a test on the same topic with the same students, for example a written test and an oral test. If the results are the same, the parallel-forms reliability of the test is high; if they differ, it is low. |

| Inter-item (internal consistency) | It measures the consistency of the measurement itself. | The results of the same test are split into two halves and compared with each other. If the two halves differ greatly, the internal consistency of the test is low. |
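
Two of the checks in the table above can be expressed as simple correlations. The following is a minimal sketch rather than a definitive procedure: it assumes numeric scores, uses the Pearson correlation for the test-retest comparison, and applies the Spearman-Brown correction to the split-half comparison. The function names and all of the scores are hypothetical and serve only as illustration.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length lists with at least 2 values")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    # Note: raises ZeroDivisionError if either list has no variation.
    return cov / sqrt(var_x * var_y)


def split_half_reliability(item_scores):
    """Split-half reliability with the Spearman-Brown correction.

    item_scores holds one list per participant, one score per item.
    Items at even and odd positions form the two halves.
    """
    half_a = [sum(row[0::2]) for row in item_scores]
    half_b = [sum(row[1::2]) for row in item_scores]
    r_half = pearson_r(half_a, half_b)
    # Spearman-Brown: estimate reliability of the full-length test.
    return 2 * r_half / (1 + r_half)


# Hypothetical scores: the same five people, tested twice.
first_round = [12, 15, 9, 20, 17]
second_round = [13, 14, 10, 19, 18]
test_retest_r = pearson_r(first_round, second_round)

# Hypothetical questionnaire: five participants, four items each.
responses = [
    [4, 5, 4, 5],
    [2, 1, 2, 2],
    [3, 3, 4, 3],
    [5, 4, 5, 5],
    [1, 2, 1, 1],
]
split_half = split_half_reliability(responses)

print(f"test-retest r = {test_retest_r:.2f}")
print(f"split-half reliability = {split_half:.2f}")
```

Values close to 1 suggest consistent measurement. What counts as "high enough" depends on the field and the instrument, so no cut-off is built into the sketch.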

Types of Validity

As discussed above, the reliability of a measurement alone cannot establish its validity. Validity is difficult to measure even when the method is reliable. The following types of tests are conducted to measure validity.

| Type of validity | What does it measure? | Example |

|---|---|---|

| Content validity | It shows whether all the aspects of the test/measurement are covered. | A language test designed to measure writing, reading, listening, and speaking skills has high content validity. |

| Face validity | It concerns whether a test, or the procedure of the test, appears valid on the surface. | Consider the type of questions included in the question paper, the time and marks allotted, and the number of questions and their categories. Does it look like a good question paper for measuring the academic performance of students? |

| Construct validity | It shows whether the test is measuring the correct construct (ability/attribute, trait, skill). | Is a test conducted to measure communication skills actually measuring communication skills? |

| Criterion validity | It shows whether the test scores obtained are similar to other measures of the same concept. | The results of a pre-final exam for graduates accurately predict the results of the later final exam. This shows that the test has high criterion validity. |

How to Increase Reliability?

- Use an appropriate questionnaire to measure the competency level.

- Ensure a consistent environment for participants

- Make the participants familiar with the criteria of assessment.

- Train the participants appropriately.

- Analyse the research items regularly to avoid poor performance.

How to Increase Validity?

Ensuring validity is not easy either. The following measures help to ensure it:

- Minimising reactivity should be the first concern.

- The Hawthorne effect should be reduced.

- The respondents should be motivated.

- The intervals between the pre-test and post-test should not be lengthy.

- Dropout rates should be kept as low as possible.

- The inter-rater reliability should be ensured.

- Control and experimental groups should be matched with each other.
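
One item in the list above, ensuring inter-rater reliability, can be checked numerically. The sketch below is an illustration under stated assumptions: it assumes two raters assigning categorical labels to the same items and uses Cohen's kappa, one common agreement statistic, which the text above does not itself prescribe. The function name and the ratings are hypothetical.

```python
from collections import Counter


def cohens_kappa(ratings_a, ratings_b):
    """Cohen's kappa for two raters labelling the same items."""
    if len(ratings_a) != len(ratings_b) or not ratings_a:
        raise ValueError("need two non-empty rating lists of equal length")
    n = len(ratings_a)
    # Proportion of items on which the two raters agree.
    observed = sum(a == b for a, b in zip(ratings_a, ratings_b)) / n
    # Agreement expected by chance, from each rater's label frequencies.
    counts_a = Counter(ratings_a)
    counts_b = Counter(ratings_b)
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if expected == 1:
        # Both raters used a single identical label for every item.
        return 1.0
    return (observed - expected) / (1 - expected)


# Hypothetical ratings of eight answers by two researchers.
rater_1 = ["pass", "pass", "fail", "pass", "fail", "fail", "pass", "pass"]
rater_2 = ["pass", "pass", "fail", "fail", "fail", "fail", "pass", "pass"]

kappa = cohens_kappa(rater_1, rater_2)
print(f"Cohen's kappa = {kappa:.2f}")
```

Kappa corrects raw agreement for the agreement two raters would reach by chance, which is why it is often preferred over a simple percentage. With more than two raters, or with numeric scores, a different statistic would be needed.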

How to Implement Reliability and Validity in your Thesis?

According to experts, it is helpful to address reliability and validity explicitly, especially in a thesis or dissertation, where these concepts are widely used. A way to implement them is given below:

| Segments | Explanation |

|---|---|

| | All the planning about reliability and validity will be discussed here, including the chosen samples and size and the techniques used to measure reliability and validity. |

| | Please talk about the level of reliability and validity of your results and their influence on values. |

Frequently Asked Questions

What is reliability and validity in research?

Reliability in research refers to the consistency and stability of measurements or findings. Validity relates to the accuracy and truthfulness of results, measuring what the study intends to. Both are crucial for trustworthy and credible research outcomes.

What is validity?

Validity in research refers to the extent to which a study accurately measures what it intends to measure. It ensures that the results are truly representative of the phenomena under investigation. Without validity, research findings may be irrelevant, misleading, or incorrect, limiting their applicability and credibility.

What is reliability?

Reliability in research refers to the consistency and stability of measurements over time. If a study is reliable, repeating the experiment or test under the same conditions should produce similar results. Without reliability, findings become unpredictable and lack dependability, potentially undermining the study’s credibility and generalisability.

What is reliability in psychology?

In psychology, reliability refers to the consistency of a measurement tool or test. A reliable psychological assessment produces stable and consistent results across different times, situations, or raters. It ensures that an instrument’s scores are not due to random error, making the findings dependable and reproducible in similar conditions.

What is test-retest reliability?

Test-retest reliability assesses the consistency of measurements taken by a test over time. It involves administering the same test to the same participants at two different points in time and comparing the results. A high correlation between the scores indicates that the test produces stable and consistent results over time.

What is the difference between reliability and validity?

Reliability refers to the consistency and repeatability of measurements, ensuring results are stable over time. Validity indicates how well an instrument measures what it’s intended to measure, ensuring accuracy and relevance. While a test can be reliable without being valid, a valid test must inherently be reliable. Both are essential for credible research.

Are interviews reliable and valid?

Interviews can be both reliable and valid, but they are susceptible to biases. The reliability and validity depend on the design, structure, and execution of the interview. Structured interviews with standardised questions improve reliability. Validity is enhanced when questions accurately capture the intended construct and when interviewer biases are minimised.

Are IQ tests valid and reliable?

IQ tests are generally considered reliable, producing consistent scores over time. Their validity, however, is a subject of debate. While they effectively measure certain cognitive skills, whether they capture the entirety of “intelligence” or predict success in all life areas is contested. Cultural bias and over-reliance on tests are also concerns.

Are questionnaires reliable and valid?

Questionnaires can be both reliable and valid if well-designed. Reliability is achieved when they produce consistent results over time or across similar populations. Validity is ensured when questions accurately measure the intended construct. However, factors like poorly phrased questions, respondent bias, and lack of standardisation can compromise their reliability and validity.
Validity and Reliability

This chapter provides an in-depth look into the concepts of reliability and validity. The inherent lack of total reliability in planning research gets further explained by specific challenges. Several different ways to measure reliability (inter-rater reliability, equivalency reliability, and internal consistency) and validity (face, construct, internal, and external validities) are presented. The chapter concludes with two examples of planning research studies that exemplify the concepts of reliability and validity.

Reliability Vs Validity

Reliability and validity are two important concepts in research that are used to evaluate the quality of measurement instruments or research studies.

Reliability

Reliability refers to the degree to which a measurement instrument or research study produces consistent and stable results over time, across different observers or raters, or under different conditions. In other words, reliability is the extent to which a measurement instrument or research study produces results that are free from random error. A reliable measurement instrument or research study should produce similar results each time it is used or conducted, regardless of who is using it or conducting it.

Validity

Validity, on the other hand, refers to the degree to which a measurement instrument or research study accurately measures what it is supposed to measure or tests what it is supposed to test. In other words, validity is the extent to which a measurement instrument or research study measures or tests what it claims to measure or test. A valid measurement instrument or research study should produce results that accurately reflect the concept or construct being measured or tested.

Difference Between Reliability Vs Validity

Here’s a comparison table that highlights the differences between reliability and validity:

About the author: Muhammad Hassan, Researcher, Academic Writer, Web developer

Reliability and validity in research

This article examines reliability and validity as ways to demonstrate the rigour and trustworthiness of quantitative and qualitative research. The authors discuss the basic principles of reliability and validity for readers who are new to research.

Qualitative vs. Quantitative Data: 7 Key Differences

Qualitative data is information you can describe with words rather than numbers. Quantitative data is information represented in a measurable way using numbers. One type of data isn’t better than the other. To conduct thorough research, you need both. But knowing the difference between them is important if you want to harness the full power of both qualitative and quantitative data. In this post, we’ll explore seven key differences between these two types of data.

#1. The Type of Data

The single biggest difference between quantitative and qualitative data is that one deals with numbers, and the other deals with concepts and ideas. The words “qualitative” and “quantitative” are really similar, which can make it hard to keep track of which one is which. I like to think of them this way: