🚀 Chart your career path in cutting-edge domains.

Talk to a career counsellor for personalised guidance 🤝.

100+ Real-Life Examples of Reinforcement Learning And It's Challenges

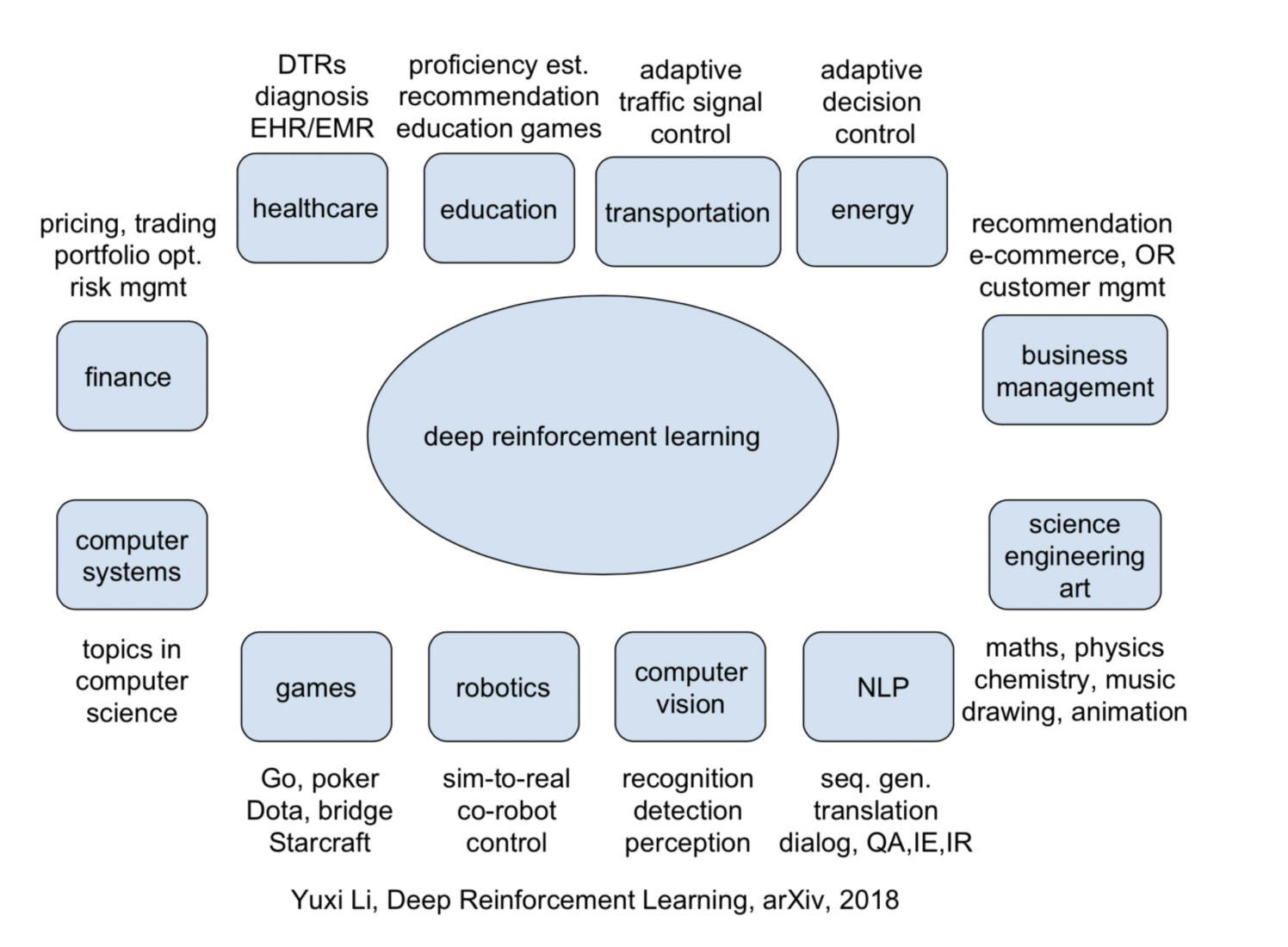

The blog features diverse case studies in reinforcement learning, showcasing its practical applications. These studies highlight how reinforcement learning algorithms enable machines to learn and make decisions by interacting with their environment.

From robotics and gaming to recommendation systems, each case study demonstrates the power of reinforcement learning in optimizing actions and achieving desired outcomes. With real-world examples, the blog illustrates the versatility and potential of reinforcement learning in revolutionizing various industries and solving complex problems through intelligent decision-making.

Table of Content

What are machine learning and reinforcement learning.

- Why has the need for Reinforcement Learning rise?

Supply Chain

Agriculture, autonomous vehicles, manufacturing, hospitality, advertising, cybersecurity.

- 100+ Real-Life Applications of Reinforcement Learning

Top 6 Challenges for Reinforcement Learning

- Future of Reinforcement Learning

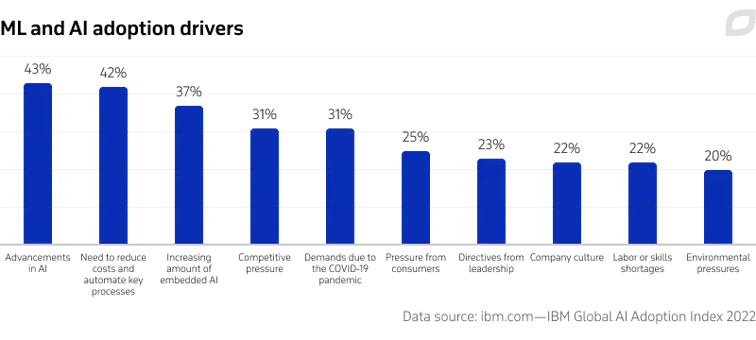

The Global Machine Learning Market Size is expected to reach $302.62 billion by 2030 at a rate of 38.1%.

The convergence of ample data and powerful computing resources facilitates the widespread adoption and growth of machine learning in industries like healthcare, finance, and autonomous systems.

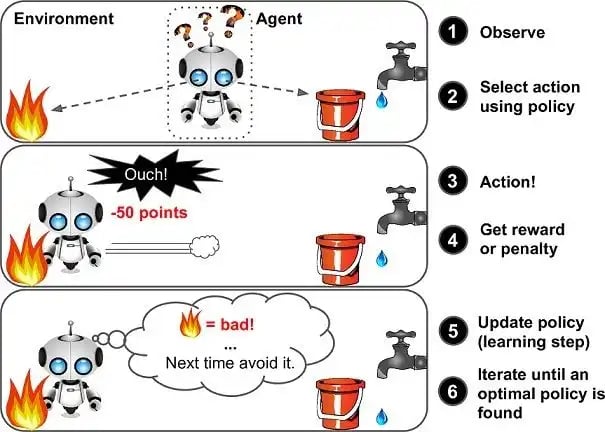

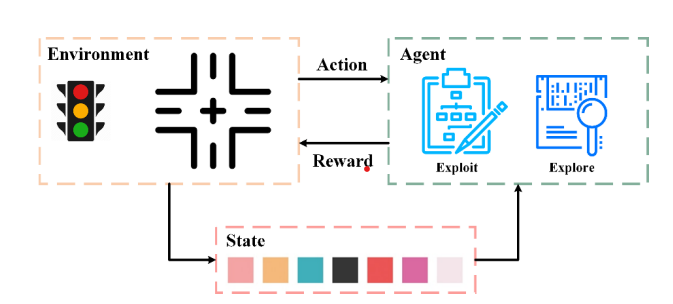

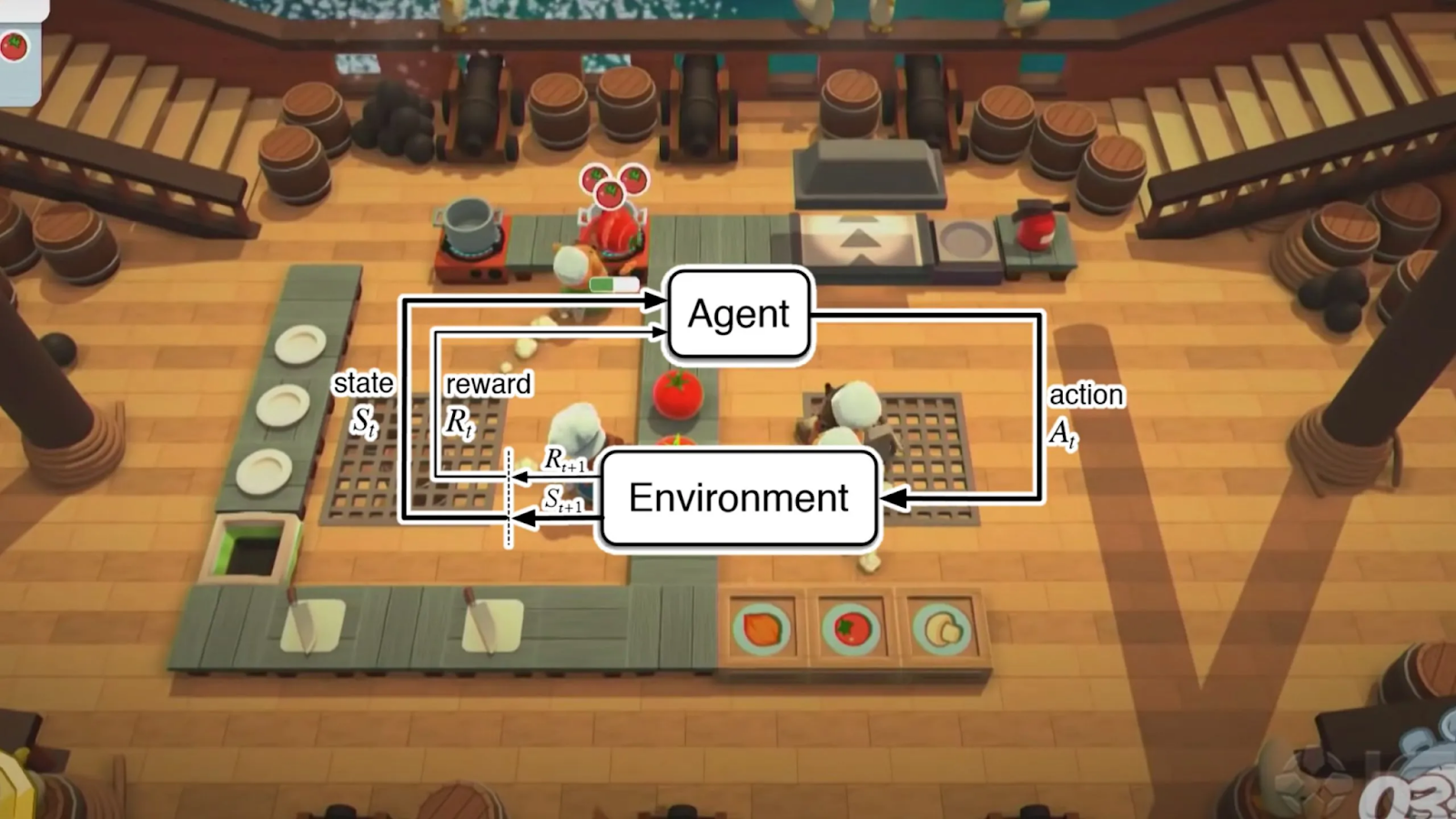

Machine learning (ML) enables computers to carry out certain tasks intelligently by learning from examples or data rather than by following pre-programmed rules, allowing computers to carry out complex procedures. While ML algorithms excel at supervised or unsupervised learning tasks, Reinforcement Learning (RL) is designed to handle sequential decision-making problems where an agent interacts with an environment. RL algorithms learn from trial and error, receiving feedback in the form of rewards or penalties to optimize their behavior over time. Example - Imagine you are playing a game and you want to win. RL is like figuring out the best moves by playing the game over and over again and getting feedback. You learn which actions give you good results (like getting points) and which actions give you bad results (like losing points).

Why is the need for Reinforcement Learning rising?

The need for RL has arisen due to the limitations of traditional machine learning approaches.

While supervised and unsupervised learning techniques are effective in tasks with labeled or unlabeled data, they struggle with problems involving sequential decision-making and dynamic environments. RL addresses these challenges by introducing a framework where an agent learns to make optimal decisions through trial and error interactions with the environment.

RL is particularly valuable in domains where actions have delayed consequences and where an agent must learn to balance short-term rewards with long-term goals. RL empowers machines to adapt and improve their behavior based on feedback, making it a crucial tool for solving complex problems where sequential decision-making and real-time adaptation are necessary.

100+ Real-Life Examples of Reinforcement Learning

Along with RL real world examples, there are perspectives of renowned researchers and experts in the field of Reinforcement Learning. These quotes reflect their insights and expertise on the subject showcasing its potential.

OpenAI Five , learned to play the complex multiplayer online battle game Dota 2 at a high level. It competed against professional human players and showcased advanced strategic decision-making.

DeepMind used RL techniques to train AlphaGo to play the ancient board game Go. By playing millions of games against itself, AlphaGo improved its strategies and went on to defeat world champions, demonstrating the power of RL in mastering complex games.

Project Malmo is an RL platform developed by Microsoft that integrates with the popular game Minecraft. It allows researchers to use RL techniques to train agents within the Minecraft environment. RL agents can learn to navigate, build structures, and interact with the game world, showcasing adaptive and intelligent behavior.

Ubisoft has implemented RL techniques in the development of Assassin's Creed game series. RL algorithms are used to train AI agents that control non-player characters (NPCs) in the game. These AI agents learn to exhibit realistic and diverse behaviors, enhancing the immersion and realism of the game world.

DeepMind 's AI system mastered the real-time strategy game StarCraft II using RL techniques. The RL agent learned to strategize, manage resources, and make tactical decisions to outperform human players.

- Massachusetts General Hospital uses RL to optimize the personalized dosing of blood thinning medications, such as warfarin, for patients. The RL agent learns from patient data to recommend individualized doses, reducing the risk of adverse events and improving treatment outcomes.

- IBM Watson is an RL-based clinical decision support system that assists oncologists in cancer treatment decision-making. It analyzes patient data and medical literature to provide evidence-based treatment recommendations, aiding physicians in creating personalized care plans.

- Google employed RL techniques to develop Flu Trends, a system that uses search queries to monitor and predict flu outbreaks. The RL agent learned from historical flu data to detect patterns and provide real-time estimates of flu activity, assisting in disease monitoring and control efforts.

- Mount Sinai developed an RL-based system to personalize insulin dosing for patients with diabetes. The RL agent learned from patient glucose monitoring data to optimize insulin delivery, resulting in improved glucose control and better management of the disease.

- The da Vinci Surgical System , widely used in robotic-assisted surgeries, employs RL techniques. The RL agent learns from expert surgeon demonstrations to assist surgeons in performing minimally invasive procedures with enhanced precision and dexterity.

Tesco , a multinational retailer, uses RL for assortment planning. RL agents learn from sales data, customer preferences, and market trends to optimize product assortments, ensuring that the right products are available at the right stores, improving customer satisfaction and sales.

Kroger , a grocery store chain, leverages RL to optimize store layouts. The RL agent learns from customer foot traffic patterns, sales data, and product relationships to determine the optimal arrangement of products, improving customer flow and maximizing sales.

Shopify , an e-commerce platform, utilizes RL algorithms for fraud detection. The RL agent learns from historical transaction data and user behavior patterns to identify and prevent fraudulent activities, protecting merchants and customers from financial losses.

Amazon utilizes RL algorithms to dynamically optimize pricing for its products. The RL agent learns from customer behavior, competitor prices, and market conditions to adjust prices in real-time, maximizing revenue and maintaining competitiveness.

Alibaba employs RL techniques to optimize its supply chain operations. RL agents learn from historical data, transportation logistics, and demand forecasts to optimize warehouse operations, inventory allocation, and delivery routes, improving efficiency and reducing costs.

Procter & Gamble (P&G) utilizes RL algorithms to optimize its inventory management. RL agents learn from demand patterns, lead times, and stock levels to determine optimal reorder points and quantities, minimizing stockouts and excess inventory.

UPS utilizes RL algorithms to optimize delivery routes. RL agents learn from real-time traffic data, package volumes, and customer time windows to dynamically adjust route plans, reducing fuel consumption and improving delivery efficiency.

Proximus , a Belgian telecommunications company, uses RL for supplier selection and negotiation. RL agents learn from supplier performance data, pricing models, and contract terms to optimize supplier selection and negotiate favorable agreements.

DHL applies RL techniques in its transportation management operations. RL agents learn from historical shipment data, traffic conditions, and delivery constraints to optimize transport routing, load consolidation, and mode selection, enhancing overall logistics efficiency.

Zara , a global fashion retailer, leverages RL for order fulfillment. RL agents learn from order characteristics, inventory availability, and production capacities to determine optimal sourcing and allocation strategies, ensuring timely order fulfillment.

Cisco employs RL techniques in supply chain risk management. RL agents learn from historical supply chain disruption data, market conditions, and risk indicators to assess and mitigate potential risks, enabling proactive risk management strategies.

Siemens implemented RL algorithms for robotic assembly tasks in manufacturing. RL agents learn to grasp and manipulate objects, perform assembly operations, and adapt to variations in object position and orientation, improving the efficiency and flexibility of robotic assembly lines

Harvard researchers employed RL techniques to coordinate and control a large swarm of small robots called Kilobots. RL agents learn to communicate and collaborate with other Kilobots, self-organizing into desired formations and performing collective tasks.

The ARM-H Robot developed at the University of Cambridge uses RL to adapt to changes in its physical structure. The RL agent learns to control the robot's movements, compensating for changes in joint stiffness or wear, allowing the robot to maintain precise and robust control.

NVIDIA 's Jetson AGX Xavier platform employs RL for autonomous flight control of drones. RL agents learn to navigate and perform complex maneuvers in dynamic environments, such as obstacle avoidance and optimal flight path planning.

OpenAI developed a robotic system called Dactyl that uses RL to learn dexterous manipulation skills. The RL agent learns to control the robot's fingers and manipulate objects through trial and error, achieving impressive levels of object manipulation and fine-grained control.

Fendt's Xaver is a precision fertilizer application system that utilizes RL techniques. RL agents learn from soil nutrient levels, plant growth stages, and field characteristics to optimize fertilizer application rates, reducing fertilizer waste and minimizing environmental impact.

LettUs Grow employs RL techniques for greenhouse climate control. RL agents learn from sensor data, plant growth models, and environmental conditions to optimize factors such as temperature, humidity, and lighting, creating ideal growing conditions and maximizing crop quality.

Cargill's Dairy Enteligen platform utilizes RL algorithms for livestock management. RL agents learn from sensor data, animal behavior, and health indicators to optimize feeding schedules, detect anomalies, and improve overall herd health and productivity.

John Deere's GreenON platform utilizes RL algorithms for crop yield optimization. RL agents learn from historical yield data, weather conditions, and field characteristics to generate optimal planting recommendations, maximizing crop yield and profitability.

Bonirob , developed by Deepfield Robotics, utilizes RL algorithms for precision irrigation. RL agents learn from sensor data, crop water requirements, and soil conditions to optimize irrigation scheduling, ensuring efficient water usage and reducing water waste.

American Express employs RL techniques for customer churn prediction. RL agents learn from customer transaction data, usage patterns, and behavior to identify customers at risk of churning, enabling proactive retention strategies and personalized offers.

Uber uses RL algorithms for dynamic pricing of its ride-sharing services. RL agents learn from supply-demand dynamics, traffic conditions, and user behavior to set optimal prices in real-time, maximizing revenue while balancing rider demand and driver availability.

Jump Trading , a proprietary trading firm, utilizes RL in high-frequency trading strategies. RL agents learn from tick-level market data, order book dynamics, and latency considerations to execute trades rapidly and exploit short-term market inefficiencies.

PayPal employs RL algorithms for fraud detection and prevention. RL agents learn from transaction data, user behavior patterns, and fraud indicators to identify suspicious activities, reducing fraudulent transactions and protecting customer accounts.

Citadel Securities , a leading market maker, utilizes RL algorithms in their algorithmic trading strategies. RL agents learn from market data, order book dynamics, and historical trade patterns to make real-time trading decisions, optimizing trade execution and liquidity provision.

LOXM is an RL-based algorithmic trading system developed by JP Morgan. It learns optimal trading strategies, dynamically adjusting trade execution parameters to achieve better performance in stock trading.

- Lemonade an insurance company, uses RL to automate and optimize claims handling processes. The RL agent learns to assess claims, verify information, and process payments efficiently, improving speed and accuracy.

Waymo , a leading autonomous vehicle company, uses RL for self-driving cars. RL agents learn from sensor data, such as cameras and lidar, to make driving decisions like lane keeping, adaptive cruise control, and object detection, improving safety and efficiency.

Tesla 's Autopilot system incorporates RL for collision avoidance. RL agents learn from sensor data and human driver behavior to make real-time decisions, such as emergency braking or evasive maneuvers, to avoid potential collisions on the road.

BMW developed a Remote Valet Parking Assistant using RL. RL agents learn from sensor data, parking lot maps, and vehicle dynamics to autonomously navigate and park the vehicle in tight parking spaces without human intervention.

Lyft employs RL algorithms for optimizing ride-hailing services. RL agents learn from historical demand patterns, traffic conditions, and driver availability to allocate drivers efficiently, reduce wait times, and improve overall service quality.

Roborace is an autonomous racing competition that utilizes RL techniques. RL agents learn from race track data, vehicle dynamics, and optimal racing lines to autonomously control race cars, competing against each other at high speeds.

Wing , a subsidiary of Alphabet, utilizes RL for autonomous delivery drones. RL agents learn from sensor data, airspace regulations, and package delivery requirements to autonomously navigate and deliver packages to specified locations.

Engie , a global energy company, employs RL algorithms for energy trading and pricing. RL agents learn from historical market data, supply-demand dynamics, and price signals to optimize trading strategies, maximize profitability, and manage energy portfolios.

Tesla utilizes RL techniques for energy storage optimization in their Powerpack and Powerwall systems. RL agents learn from electricity price data, demand patterns, and renewable energy generation forecasts to optimize energy storage scheduling, reducing costs and improving grid stability.

Opus One Solutions uses RL algorithms for demand response management. RL agents learn from customer consumption data, grid conditions, and price signals to optimize demand response actions, encouraging customers to adjust their energy usage during peak times and balance grid loads.

PG&E has implemented RL algorithms for microgrid control. RL agents learn from renewable energy generation, storage capacity, and load profiles to optimize microgrid operations, ensuring efficient energy distribution and minimizing reliance on the main grid.

Vattenfall , a leading European energy company, utilizes RL algorithms for wind farm control. RL agents learn from wind forecasts, turbine characteristics, and grid constraints to optimize turbine operation and power output, maximizing energy generation and grid integration.

ALLEGRO is an RL-based intelligent tutoring system that helps students learn algebra concepts. It adapts to individual student needs, providing personalized instruction, feedback, and exercises based on their performance and learning progress.

ALEKS (Assessment and Learning in Knowledge Spaces): ALEKS is an adaptive learning platform that utilizes RL techniques. It assesses students' knowledge in various subjects, such as math, science, and languages, and provides personalized learning paths based on their strengths and weaknesses. The RL agent continually adjusts the difficulty of the questions and the sequence of topics to optimize the learning experience.

Intelligent Tutoring Systems : RL can be used to develop intelligent tutoring systems that adapt the learning experience based on student performance and progress. The RL agent can adjust the difficulty of the questions, provide personalized hints or feedback, and dynamically generate new learning materials to optimize the student's learning trajectory.

edX , employs its own recommender system to personalize course recommendations for learners. The system considers user preferences, enrollment history, and course interactions to generate relevant suggestions.

DeepMind developed RL algorithms and the DeepMind Controls Suite to optimize industrial control systems. These algorithms learn to control complex systems like robots and machinery to improve efficiency, reduce energy consumption, and minimize defects.

Baxter , developed by Rethink Robotics, is a collaborative robot that has been trained using RL algorithms. It is designed to perform various tasks in manufacturing environments, such as assembly, packaging, and machine tending.

ABB's YuMi robot is another collaborative robot that has been trained using RL techniques. It is designed for assembly and small parts handling applications in manufacturing industries.

Fanuc , a leading robotics company, has applied RL to their industrial robots to improve their performance in various manufacturing tasks, including welding, material handling, and assembly.

Universal Robots ' collaborative robots, UR3, UR5, and UR10, have been trained using RL algorithms. These robots are designed for a wide range of manufacturing applications, such as pick-and-place operations, machine tending, and quality inspection.

KUKA's iiwa robot , a collaborative robot with sensitive touch capabilities, has been trained using RL techniques. It is used in manufacturing for tasks such as assembly, quality control, and material handling.

RL-based Autonomous Bellhop Robot is a hypothetical autonomous robot designed to assist with luggage transportation within hotels. RL algorithms could enable the robot to learn optimal routes, interact with guests, and navigate through complex environments.

A room service cart equipped with sensors and RL algorithms to optimize the delivery route and timing. The system could learn from historical data and feedback to dynamically adjust the delivery process based on factors like guest preferences, room occupancy, and real-time information.

An HVAC system in hotels that utilizes RL techniques to learn and adapt its temperature and airflow settings based on guest comfort and occupancy patterns. The system could optimize energy consumption while maintaining a comfortable environment for guests.

A food preparation system in hotel kitchens that leverages RL algorithms to optimize ingredient selection, cooking times, and recipes based on guest preferences and nutritional requirements. The system could continuously learn and improve its food preparation techniques.

Booking.com applies RL techniques to dynamically adjust hotel room prices based on factors like demand, seasonality, and competitor prices. The RL agent learns optimal pricing strategies to maximize revenue and occupancy rates.

Google's Duplex is an RL-based virtual assistant developed by Google. It can make phone calls to schedule appointments or make reservations on behalf of users, engaging in natural and human-like conversations to accomplish tasks.

ORION is a routing optimization system that uses RL algorithms to optimize package delivery routes. The RL agent learns to consider factors like traffic patterns, delivery time windows, and package prioritization, minimizing distances and improving efficiency.

Wing (owned by Alphabet Inc.) have been developing and deploying RL-based systems for autonomous drone delivery services. RL can be used to train autonomous delivery drones to optimize their flight paths, navigation, and delivery strategies

Fetch Robotics have developed autonomous mobile robots that utilize RL algorithms to optimize order fulfillment processes in warehouses. These robots learn to navigate the warehouse, locate items, and pick and transport them efficiently.

Celect (acquired by Nike) apply RL techniques to optimize pricing and revenue management in logistics. Their systems use RL algorithms to learn from historical sales data, market conditions, and customer preferences to dynamically adjust prices, promotions, and inventory allocation.

FourKites provide intelligent fleet management solutions that leverage RL algorithms. These solutions optimize logistics operations by learning from real-time data on vehicle locations, traffic conditions, and customer demands to optimize route planning, load balancing, and delivery schedules.

Criteo utilize RL algorithms to optimize bidding strategies in programmatic advertising. Their systems learn from historical data and feedback to dynamically adjust bid amounts based on factors such as user profiles, ad placement, and conversion probabilities.

Google's Smart Display Campaigns leverage RL techniques to optimize ad selection and personalization. RL algorithms learn from user interactions and historical data to dynamically choose the most relevant ad creatives, messages, and targeting options for individual users.

Facebook's Ad Placement Optimization (APO) system utilizes RL algorithms to optimize ad placement decisions across its advertising network. The system learns from user interactions, contextual factors, and historical performance data to dynamically select the most effective ad placements to maximize reach, engagement, and conversions.

Content Recommendation Engines are used by RL techniques advertising platforms such as Taboola. These engines learn from user feedback, engagement data, and contextual signals to dynamically recommend relevant content and advertisements to users, optimizing user experience and ad performance.

Intrusion Detection Systems : Companies like Darktrace utilize RL algorithms in their cybersecurity solutions for real-time intrusion detection. RL agents learn from network traffic patterns and system behavior to detect anomalies, identify potential threats, and take proactive measures to mitigate attacks.

Malware Detection : Cybereason's cybersecurity platform leverages RL techniques for malware detection and prevention. RL algorithms analyze patterns and characteristics of known malware to identify and block emerging threats, even without prior knowledge of specific malware signatures.

Adaptive Firewall Managemen t: Companies like Deep Instinct employ RL algorithms to optimize firewall configurations and rule management. RL agents learn from network traffic and attack patterns to dynamically adjust firewall rules and prioritize security policies for more effective protection against evolving threats.

Vulnerability Assessment and Patch Management : RL techniques can be applied to automate vulnerability assessment and patch management processes. Companies like Tenable utilize RL algorithms to analyze vulnerabilities, prioritize patching efforts, and optimize resource allocation for mitigating security risks.

Adaptive Authentication Systems : Adaptive authentication systems, such as those offered by BioCatch, employ RL algorithms to detect and prevent fraudulent activities by continuously learning and adapting to user behavior patterns. RL agents identify anomalies, unauthorized access attempts, and fraudulent activities to strengthen authentication processes.

RoboCup is an international robotics competition that includes a soccer league where teams of autonomous robots compete against each other. RL algorithms have been used by various teams to train their robotic players, with notable examples including the teams from Carnegie Mellon University and the University of Texas.

IBM's SlamTracker is an RL-based system used in tennis. It analyzes historical tennis match data and player statistics to predict the outcomes of future matches. The system employs RL algorithms to continuously learn and improve its predictions.

Catapult Sports , a sports analytics company, developed OptimEye, a wearable device used in various sports, including soccer, rugby, and basketball. OptimEye uses RL algorithms to analyze player movements, acceleration, and other metrics, providing insights to optimize training regimens and prevent injuries.

STRIVR is a company that uses virtual reality (VR) technology to provide immersive training experiences for athletes. By combining RL techniques with VR, STRIVR enables athletes to simulate game scenarios and make decisions in real-time, helping them improve their skills and decision-making abilities.

Sportlogiq is a sports analytics company that applies RL algorithms to analyze video footage of hockey games. Their system tracks player movements, evaluates game situations, and provides insights to coaches and teams, helping them develop effective strategies and improve performance.

Boeing's Autonomous Aerial Refueling : Boeing has been working on an RL-based system called the Autonomous Aerial Refueling (AAR) system. It uses RL algorithms to enable unmanned aircraft to autonomously perform aerial refueling operations, ensuring precise and safe refueling maneuvers.

NASA's Autonomous Systems : NASA has been actively researching RL for autonomous systems in aviation. They have developed RL algorithms to train autonomous drones and aerial vehicles for tasks such as collision avoidance, path planning, and autonomous landing.

Airbus Skywise Predictive Maintenance : Airbus has implemented RL techniques in their Skywise Predictive Maintenance platform. This platform utilizes RL algorithms to analyze aircraft sensor data, historical maintenance records, and operational data to predict component failures and optimize maintenance schedules, reducing maintenance costs and minimizing disruptions.

Thales Autopilot System : Thales, a global aerospace and defense company, has incorporated RL algorithms into their autopilot system. The RL-based autopilot system learns from pilot inputs and flight data to optimize aircraft control, adjust to different flight conditions, and enhance flight performance.

General Electric's Digital Twin Technology : General Electric (GE) utilizes RL in their digital twin technology for aircraft engines. By creating a virtual replica of the engine and using RL algorithms, GE can optimize engine operation, fuel efficiency, and maintenance schedules, leading to improved performance and reduced costs.

These examples demonstrate the broad applicability of RL in various industries, highlighting its potential for optimizing decision-making, automation, and resource management.

Exploration vs. Exploitation : Balancing exploration and exploitation is a fundamental challenge in RL. Agents must explore the environment to learn optimal policies while also exploiting what they have already learned.

example - Imagine a robot learning to navigate a maze. The robot needs to explore different paths to find the exit (exploration), but it also needs to exploit the known paths to reach the goal quickly. Striking the right balance is crucial because the robot may waste time exploring unnecessary paths or get stuck in suboptimal routes if it only exploits known paths.

Sample Efficiency : RL algorithms often require a substantial number of interactions with the environment to learn effective policies. This high sample complexity can be a significant challenge, especially in real-world applications.

example - Suppose an RL algorithm is used to optimize energy usage in a building. Collecting data on energy consumption and environmental factors can be challenging and time-consuming. With limited data, the algorithm may require a long training period to learn effective energy-saving policies. Improving sample efficiency would involve finding ways to make the algorithm learn faster and make better decisions with fewer data samples.

Generalization : RL algorithms often struggle with generalizing their learned policies to unseen situations or environments. The policies that agents learn in specific settings may not transfer well to different contexts, requiring additional training or adaptation.

example - Consider an RL agent trained to play a specific video game level. If the agent is then tested on a new, unseen level with different obstacles and layouts, it may struggle to perform well. The agent needs to generalize its learned strategies and adapt them to the new level, understanding the underlying principles of the game rather than memorizing specific actions for each level.

Credit Assignment : It is often difficult to attribute the success or failure of an episode to specific actions, making it challenging to learn from past experiences and make effective policy updates.

example - Imagine train ing an RL algorithm to control a robot arm in a manufacturing environment. The algorithm needs to learn to perform tasks like picking and placing objects. Determining which specific actions or arm movements led to successful outcomes (e.g., correctly picking up an object) can be challenging, especially when rewards are sparse or delayed.

Safety and Ethics : In RL applications that involve physical systems or have real-world consequences, ensuring safety and ethical behavior is of paramount importance. Guaranteeing safe and ethical behavior throughout the learning process is a complex challenge that requires careful design and monitoring.

Scalability and Complexity : RL faces challenges when scaling to large-scale or high-dimensional problems. As the complexity of the state and action spaces increases, RL algorithms may struggle to explore and learn effectively. Developing scalable algorithms that can handle complex environments efficiently is an ongoing research area.

Addressing these challenges requires continued research and innovation in RL algorithms, exploration of new techniques such as meta-learning and transfer learning, and collaborations between researchers, practitioners, and policymakers to ensure responsible and beneficial deployment of RL systems.

Future of Reinforcement Learning

The future of Reinforcement Learning (RL) holds significant promise as the field continues to advance and find application in various domains. Here are some potential aspects that could shape the future of RL:

Improved Algorithms : Researchers will continue to develop more sophisticated RL algorithms, focusing on areas such as sample efficiency, generalization, and scalability.

Advances in algorithms, such as meta-learning, imitation learning, and hierarchical RL, may enable faster learning, better transferability, and handling of complex problems.

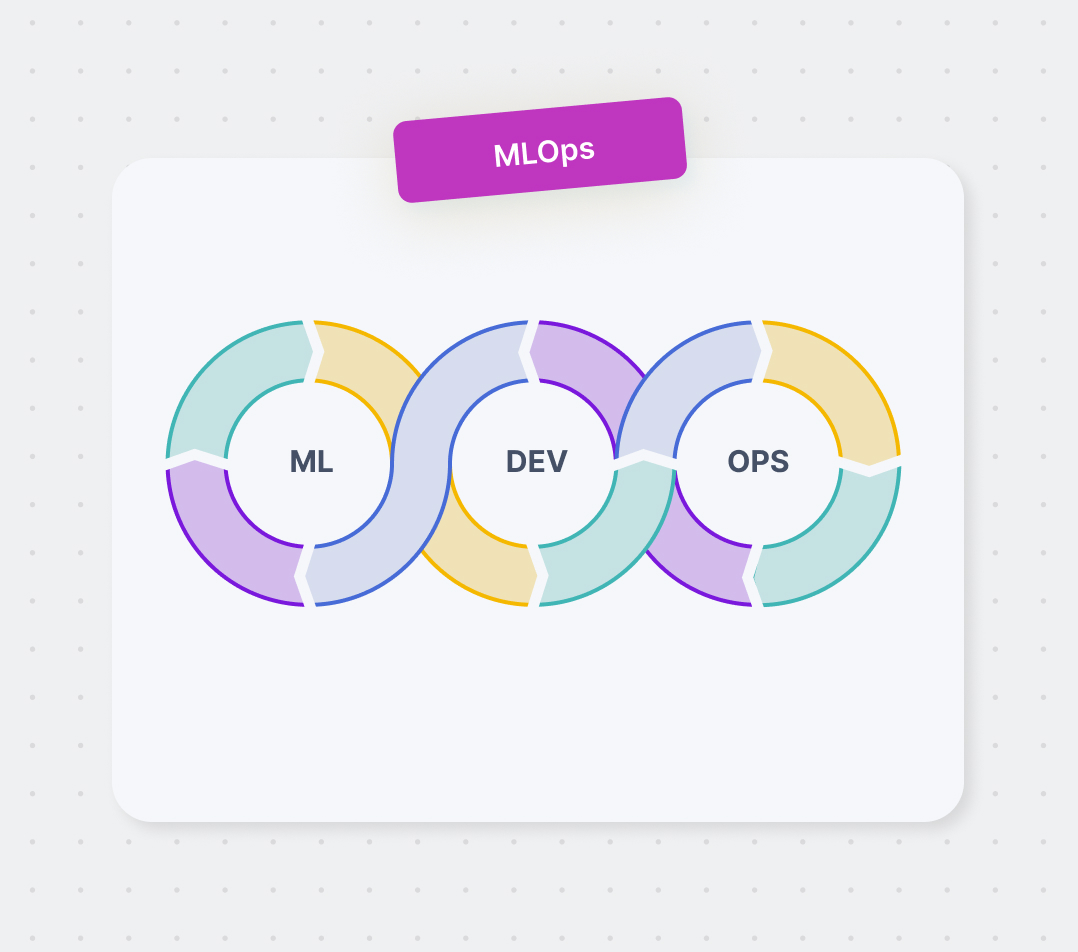

Combination with Other Technologie s: RL will likely be integrated with other emerging technologies, such as deep learning, natural language processing, and computer vision.

Combining RL with these fields can enable more sophisticated and intelligent systems that can understand and interact with the world in a more human-like manner.

Human-AI Collaboration : RL can facilitate human-AI collaboration, where humans and AI systems work together to solve complex problems.

RL algorithms can learn from human demonstrations and feedback, allowing humans to guide and influence the learning process. This collaboration can enhance decision-making, creativity, and problem-solving across multiple domains.

Transfer Learning and Lifelong Learning : RL systems that can transfer knowledge and skills learned in one task to another related task (transfer learning) and adapt to new environments and tasks (lifelong learning) will be of significant interest. These capabilities will enable RL agents to acquire knowledge more efficiently and be adaptable to evolving scenarios.

Multi-Agent RL and Cooperative Systems : The future of RL involves exploring multi-agent settings, where multiple RL agents interact and cooperate to achieve common goals. This can lead to the development of intelligent systems that can collaborate, negotiate, and solve complex tasks in coordination with other agents.

As RL continues to progress, it is expected to have a transformative impact on various aspects of technology, industry, and society, paving the way for intelligent and autonomous systems that can learn, adapt, and make decisions in dynamic and complex environments.

Final Thoughts

Reinforcement Learning is indeed an exciting and valuable area of study, particularly in domains mentioned. With the above examples it is shown how reinforcement learning is evolving every day and is creating endless opportunities. Thus, if one wants to make a career from the reinforcement learning opportunities then it is advisable to join a professional data science course. WHY?

Refer > A Quick Guide to Choosing The Best Data Science Bootcamp for Your Career

Here are a few reasons why joining a data science course can be beneficial:

Comprehensive Skill Development : Data science courses often cover a wide range of topics, including data analysis, machine learning, statistics, and data visualization. These foundational skills are valuable across various domains and provide a well-rounded understanding of data-driven problem-solving.

Diverse Career Opportunities : Data science encompasses various subfields such as machine learning, natural language processing, computer vision, and more. By pursuing a data science course, you gain exposure to these different areas and increase your employability in a broader range of roles.

Fundamental Understanding : Data science courses typically teach the underlying principles and techniques that power RL and other machine learning methods. Having a strong foundation in data science allows you to better understand and apply RL algorithms effectively.

Real-World Applications : While RL has shown promise in areas like robotics and game playing, many real-world applications still rely on other data science techniques. By joining a data science course, you can learn about these techniques and apply them to a wide range of practical problems across industries.

Flexibility and Adaptability : Staying up to date with the latest developments is crucial. By joining a data science course, you can acquire a flexible skill set that allows you to adapt to emerging trends, including RL or other cutting-edge techniques.

If you are looking for a course that helps you achieve your career goals and aspirations, join OdinSchool's Data Science Course .

About the Author

Mechanical engineer turned wordsmith, Pratyusha, holds an MSIT from IIIT, seamlessly blending technical prowess with creative flair in her content writing. By day, she navigates complex topics with precision; by night, she's a mom on a mission, juggling bedtime stories and brainstorming sessions with equal delight.

Related Posts

Rebooting at 38: Subramanian's Success Story of Grit and Upskilling

Discover the 15-year career journey filled with courage and adventure of Subramanian Ayyappan!

Beyond Expectations: Siba Ranjan's Path to Success at Genpact

Siba Ranjan Jena is now a successful Business Analyst at Genpact .

Unlocking Success: AON Analyst's Middle-Class Climb to a 124% Salary Hike!

Discover the importance of structured learning in the pursuit of success in the data science field.

Join OdinSchool's Data Science Bootcamp

With job assistance.

9 Reinforcement Learning Real-Life Applications

“Most human and animal learning can be said to fall into unsupervised learning. It has been wisely said that if intelligence was a cake, unsupervised learning could be the cake, supervised learning would be the icing on the cake, and reinforcement learning would be the cherry on the top.”

It seems intriguing, right?

Reinforcement Learning is the closest to human learning.

Just like we humans learn from the dynamic environment we live in and our actions determine whether we are rewarded or punished, so do Reinforcement Learning agents whose ultimate aim is to maximize the rewards.

Isn’t it what we are looking?

We want the AI agents to be as intelligent and decisive as us.

Reinforcement Learning techniques are the base of all the solutions, from self-driving cars to surgeons being replaced by medical AI bots. It has become the main driver of emerging technologies and, quite frankly, that’s just the tip of the iceberg.

💡 Pro Tip: Read more on Neural Network architecture, which is a major governing factor of the Deep Reinforcement Learning algorithms.

In this article, we’ll discuss ten different Reinforcement Learning applications and learn how they are shaping the future of AI across all industries.

Here’s what we’ll cover:

- Autonomous cars

Datacenters cooling

Traffic light control, image processing.

Use computer vision and LLMs for quality control automation

Ready to streamline AI product deployment right away? Check out:

- V7 Model Training

- V7 Workflows

- V7 Auto Annotation

- V7 Dataset Management

Autonomous cars

Vehicle driving in an open context environment should be backed by the machine learning model trained with all possible scenes and scenarios in the real world.

The collection of these varieties of scenes is a complicated problem to solve. How can we ensure that a self-driving car has already learned all possible scenarios and safely masters every situation?

The answer to this is Reinforcement Learning .

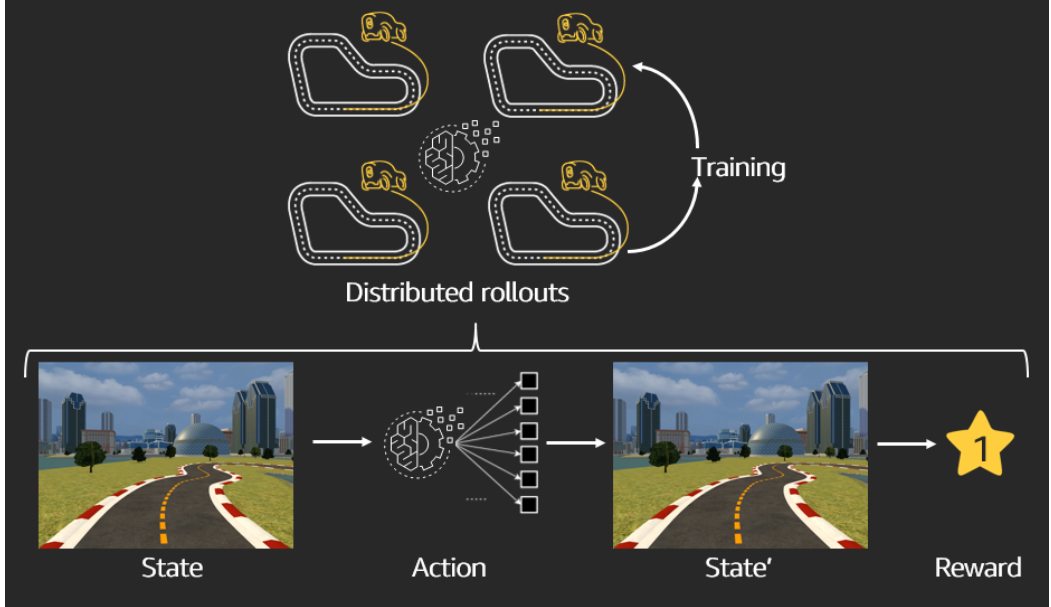

Reinforcement Learning models are trained in a dynamic environment by learning a policy from its own experiences following the principles of exploration and exploitation that minimize disruption to traffic. Self-driving cars have many aspects to consider depending on which it makes optimal decisions.

Driving zones, traffic handling, maintaining the speed limit, avoiding collisions are significant factors.

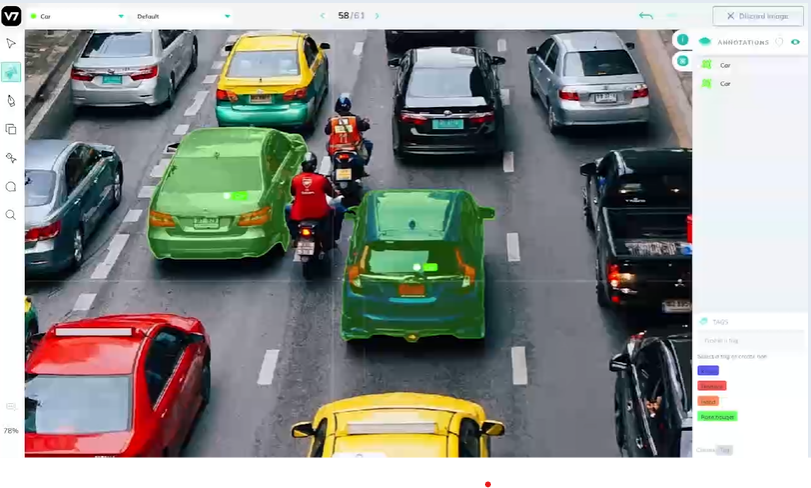

💡 Pro Tip: Have a look at our Open Datasets repository or upload your own multimodal traffic data to V7, annotate it , and train deep Neural Networks in less than an hour!

Many simulation environments are available for testing Reinforcement Learning models for autonomous vehicle technologies.

DeepTraffic is an open-source environment that combines the powers of Reinforcement Learning, Deep Learning , and Computer Vision to build algorithms used for autonomous driving launched by MIT. It simulates autonomous vehicles such as drones, cars, etc.

Carla is another excellent alternative that has been developed to support the development, training and validation of autonomous driving systems. It replicates the urban layouts, buildings, vehicles to train the self-driving cars in real-time simulated environments very close to reality.

💡 Pro-tip: Have a look at 27+ Most Popular Computer Vision Applications and Use Cases and start your first Reinforcement learning project.

Autonomous driving uses Reinforcement Learning with the help of these synthetic environments to target the significant problems of Trajectory optimization and Dynamic pathing.

Reinforcement Learning agents are trained in these dynamic environments to optimize trajectories. The agents learn motion planning, route changing, decision and position of parking and speed control, etc.

A paper on Confidence based Reinforcement Learning proposes an effective solution to use Reinforcement Learning with a baseline rule-based policy with a high confidence score.

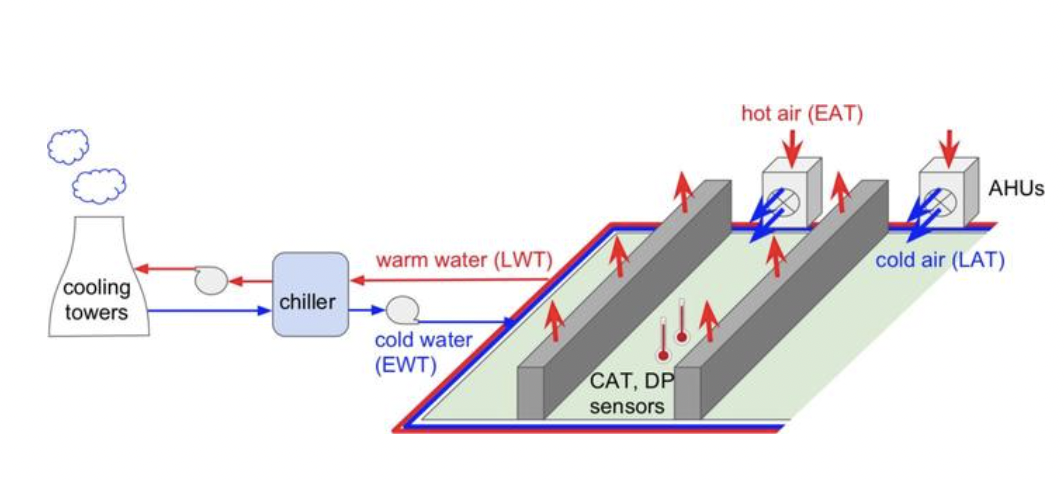

We are in this era where AI can help us tackle some of the world’s most challenging physical problems—such as energy consumption. With the entire world at the edge of virtualization and cloud-based applications, large-scale commercial and industrial systems like data centers have a large energy consumption to keep the servers running.

Interesting Fact: Google data centers using machine learning algorithms have reduced the amount of energy for cooling by up to 40 percent.

Researchers in this domain have proved that a few hours of exploration enables data-driven, model-based learning.

This approach of a Reinforcement Learning agent with little or no prior knowledge can effectively and safely regulate conditions on a server floor efficiently compared to the existing PID controllers. The data collected by thousands of sensors within the data centers have attributes like temperatures, power, setpoints, etc.—that are fed to be used to train the deep neural networks for datacentre cooling.

Due to the difficulty of directly solving this problem through conventional machine learning algorithms due to the lack of varied datasets, deep Q-learning Network (DQN)- based methods are broadly used to conquer this challenge.

With the increase of urbanization and the increase in the number of cars per household, traffic congestion has become an enormous problem, especially in metropolitan areas.

Reinforcement Learning is a trending data-driven approach for adaptive traffic signal control. These models are trained with the objective of learning a policy using a value function that optimally controls the traffic light based on the current status of the traffic.

The decision-making needs to be dynamic depending upon the arrival rate of traffic from different directions, which ought to vary at different times of the day. The conventional way of handling traffic seems to be limited due to this non-stationary behavior. Also, the policy π trained for an intersection with x lanes cannot be re-used in an intersection with y lanes.

Reinforcement Learning (RL) is a trending approach due to its data-driven nature for adaptive traffic signal control in complex urban traffic networks.

There are some limitations in applying deep Reinforcement Learning algorithms to transportation networks, like an exploration-exploitation dilemma, multi-agent training schemes, continuous action spaces, signal coordination, etc.

💡 Pro tip: Take a step back and revise the concepts of quality training data to improve your model’s accuracy.

Choosing medicines is hard. It is even more challenging when the patient has been on medication for years, and no improvements have been seen.

Recent research shows that a patient suffering from chronic disease tries different medicines before giving up. We must find the right treatments and map them to the right person.

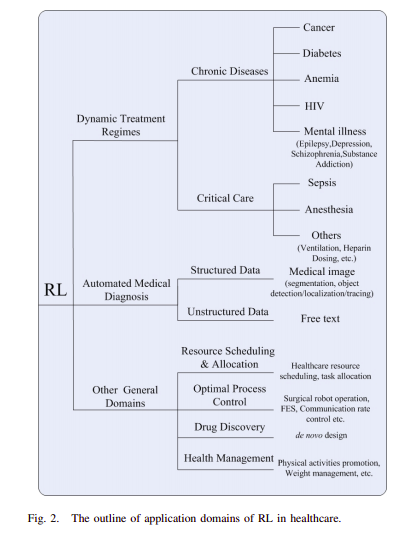

The healthcare sector has always been an early adopter and a significant beneficiary of technological advancements. This industry has seen a significant tilt towards Reinforcement Learning in the past few years, especially in implementing dynamic treatment regimes ( DTRs ) for patients suffering from long-term illnesses.

It has also found its application in automated medical diagnosis, health resource scheduling, drug discovery and development, and health management.

Automated medical diagnosis

Deep Reinforcement Learning (DRL) augments the Reinforcement Learning framework, which learns a sequence of actions that maximizes the expected reward, using deep neural networks' representative power.

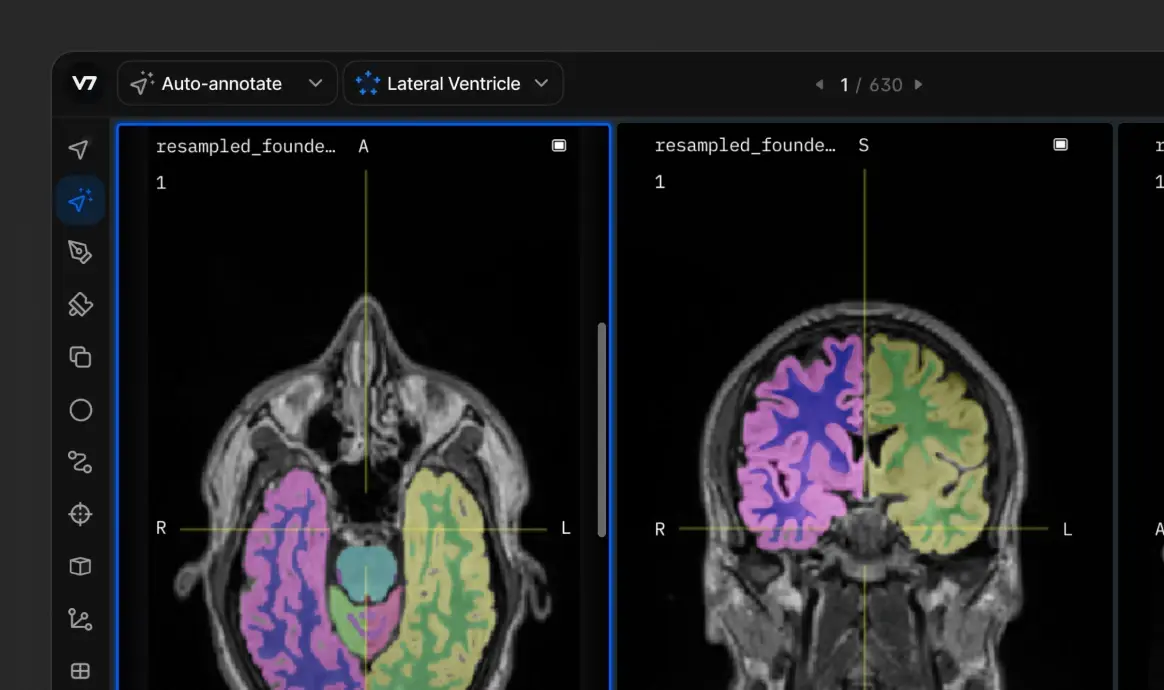

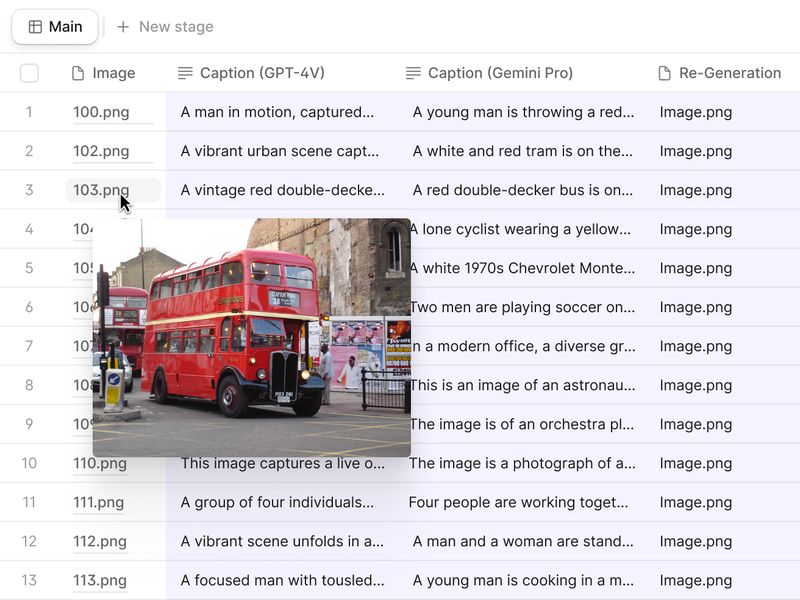

Reinforcement Learning has taken over medical report generation, identification of nodules/tumors and blood vessel blockage, analysis of these reports, etc. Refer to this paper for more insights into this problem space and the solutions offered by the Reinforcement Learning approach.

💡 Pro-tip: Have a look at our healthcare datasets for computer vision and start annotating medical data today.

Dtrs (dynamic treatment regimes).

DTRs involve sequential healthcare decisions – including treatment type, drug dosages, and appointment timing – tailored to an individual patient based on their medical history and conditions over time. This input data is fed to the algorithm outputting treatment options to provide the patient’s most desirable environmental state.

The tricky thing is that patients suffering from chronic long-term diseases like HIV develop resistance to drugs, so the drugs need to be switched over time, making the treatment sequence important. When physicians need to adapt treatment for individual patients, they may refer to past trials, systematic reviews, and analyses. However, the specific use-case data may not be available for many ICU conditions.

Many patients admitted to ICUs might also be too ill for inclusion in clinical trials. We need other methods to aid ICU clinical decisions, including sizeable observational data sets. Given the dynamic nature of critically ill patients, one machine learning method called reinforcement learning (RL) is particularly suitable for ICU settings.

Robotic surgeries

A powerful Reinforcement Learning application in decision-making is the use of surgical bots that can minimize errors and any variations and will eventually help increase the surgeons' efficiency. One such robot is Da Vinci , which allows surgeons to perform complex procedures with greater flexibility and control than conventional approaches.

The critical features served are aiding surgeons with advanced instruments, translating hand movements of the surgeons in real-time, and delivering a 3D high-definition view of the surgical area.

Reinforcement Learning is data-intensive and is well-versed in interacting with a dynamic and initially unknown environment. The current solutions offered in Image Processing by supervised and unsupervised neural networks focus more on the classification of the objects identified. However, they do not acknowledge the interdependency among different entities and the deviation from the human perception procedure.

It is used in the following subfields of Image Processing.

Object detection and Localization

The RL approach learns multiple searching policies by maximizing the long-term reward, starting with the entire image as a proposal, allowing the agent to discover multiple objects sequentially.

It offers more diversity in search paths and can find multiple objects in a single feed and generate bounding boxes or polygons. This paper on Active Object Localization with Deep Reinforcement Learning validates its effectiveness.

💡 Pro tip: Check out our guide to YOLO: Real-Time Object Detection.

Scene understanding.

Artificial vision systems based on deep convolutional neural networks consume large, labeled datasets to learn functions that map the sequence of images to human-generated scene descriptions. Reinforcement Learning offers rich and generalizable simulation engines for physical scene understanding.

This paper shows a new model based on pixel-wise rewards (pixelRL) for image processing. In pixelRL, an agent is attached to each pixel responsible for changing the pixel value by taking action. It is an effective learning method that significantly improves the performance by considering the future states of the own pixel and neighbor pixels.

Reinforcement learning is one of the most modern machine learning technologies in which learning is carried out through interaction with the environment. It is used in computer vision tasks like feature detection, image segmentation , object recognition , and tracking .

Here are some other examples where Reinforcement Learning is used in image processing:-

- Robots equipped with visual sensors from which they learn the state of the surrounding environment

- Scanners to understand the text

- Image pre-processing and segmentation of medical images like CT Scans

- Traffic analysis and real-time road processing by video segmentation and frame-by-frame image processing

- CCTV cameras for traffic and crowd analytics etc.

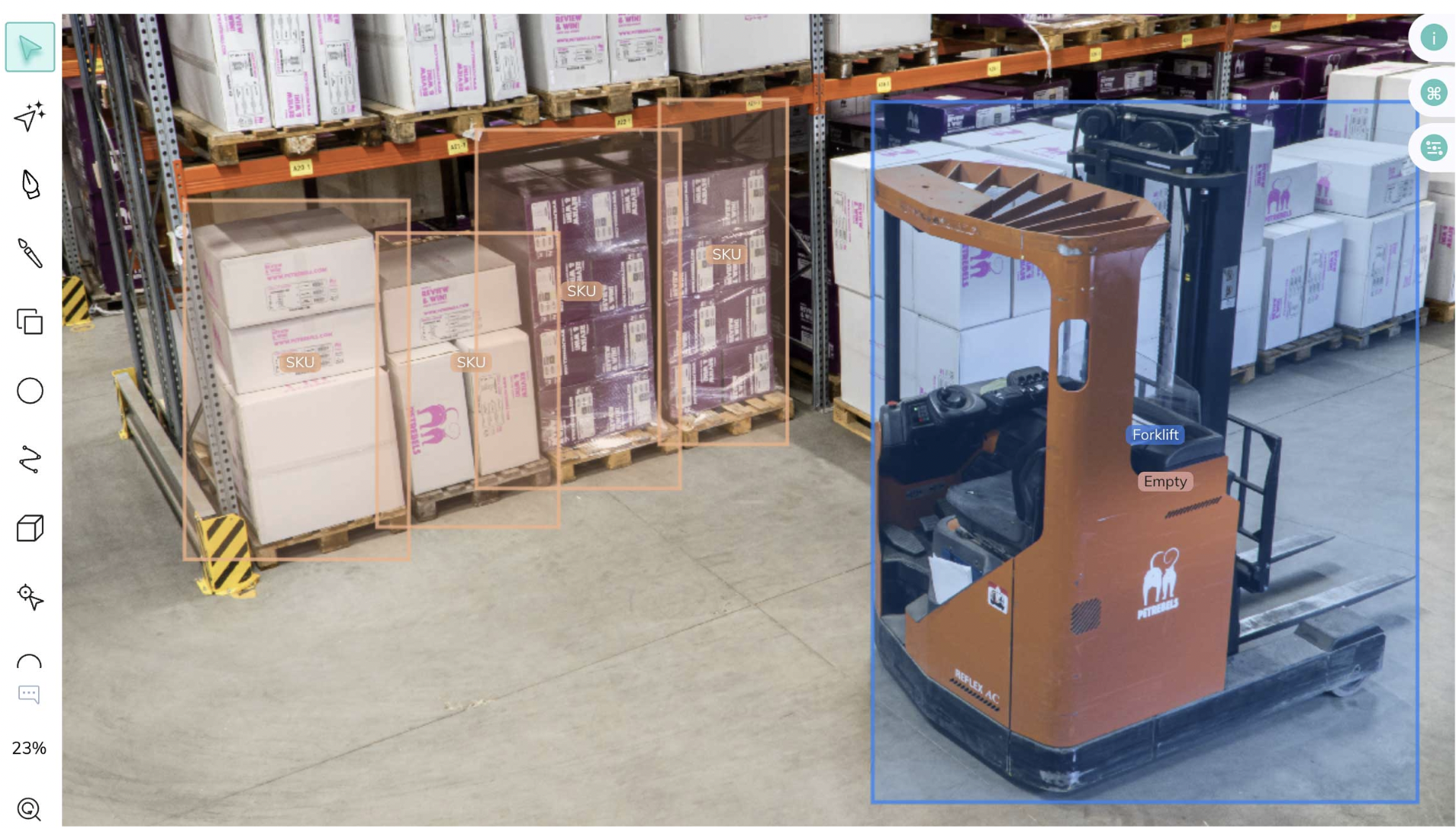

Robots operate in a highly dynamic and ever-changing environment, making it impossible to predict what will happen next. Reinforcement Learning provides a considerable advantage in these scenarios to make the robots robust enough and help acquire complex behaviors adaptively in different scenarios.

It aims to remove the need for time-consuming and tedious checks and replaces them with computer vision systems ensuring higher levels of quality control on the production assembly line.

💡 Pro tip: Read these guides on data cleaning and data preprocessing.

Robots are used in warehouse navigation mainly for part supplies, quality testing, packaging, automizing the complete process in the environment where other humans, vehicles, and devices are also involved.

All these scenarios are complex to handle by the traditional machine learning paradigm. The robot should be intelligent and responsive enough to walk through these complex environments. It is trained to have object manipulation knowledge for grasping objects of different sizes and shapes depending upon the texture and mass of the object embedded with the power of image processing and computer vision.

Let us quickly walk through some of the use-cases in this field of robotics that Reinforcement Learning offers solutions for.

Product assembly

Computer vision is used by multiple manufacturers to help improve their product assembly process and to completely automate this and remove the manual intervention from this entire flow. One central area in the product assembly is object detection and object tracking.

Defect Inspection

A deep Reinforcement learning model is trained using multimodal data to easily identify missing pieces, dents, cracks, scratches, and overall damage, with the images spanning millions of data points .

Using V7’s software, you can train object detection, instance segmentation , and image classification models to spot defects and anomalies.

💡 Pro tip: Learn more about training defect inspection models with V7

Inventory management.

The inventory management in big companies and warehouses has become automated with the inventions in the field of computer vision to track stock in real-time. Deep reinforcement learning agents can locate empty containers, and ensure that restocking is fully optimised.

💡 Pro tip: Want to learn more? Check out AI in manufacturing.

Automate repetitive tasks and complex processes with AI

Language understanding uses Reinforcement Learning because of its inherent nature of decision making. The agent tries to understand the state of the sentence and tries to form an action set maximizing the value it would add.

The problem is complex because the state space is huge; the action space is vast too. Reinforcement Learning is used in multiple areas of NLP like text summarization, question answering, translation, dialogue generation, machine translation etc.

Reinforcement Learning agents can be trained to understand a few sentences of the document and use it to answer the corresponding questions. Reinforcement Learning with a combination of RNN is used to generate the answers for those questions as shown in this paper.

💡 Pro tip: Don't forget to have a look at Supervised Learning vs. Unsupervised Learning .

Research led by Salesforce introduced a new training method that combines standard supervised word prediction and reinforcement learning (RL), showing improvement over previous state-of-the-art models for summarization as shown here in this paper .

Robots in industries or healthcare working towards reducing manual intervention use reinforcement learning to map natural language instructions to sequences of executable actions.

During training, the learner repeatedly constructs action sequences, executes those actions, and observes the resulting rewards. A reward function works in the backend that defines the quality of these executed actions. This paper demonstrates that this method can rival supervised learning techniques while requiring only a few annotated training examples.

💡 Pro-tip: Read this guide on test, train and validation split for better results.

Another interesting research in this area is led by the researchers of Stanford University, Ohio State University, and Microsoft Research on Deep RL for dialogue generation .

The deep RL finds application in a chatbot dialogue . Conversations are simulated using two virtual agents and the quality is improved in progressive iterations.

Reinforcement Learning is used in various marketing spheres to develop techniques that maximize customer growth and strive for a balance between long-term and short-term rewards.

Let us go through the various scenarios where real-time bidding via Reinforcement Learning is used in the marketing space.

Customized Recommendations for customers

Personalized product suggestions give customers what they want. The Reinforcement Learning bot is trained to handle situations where challenging barriers like reputation, limited customer data, and consumers evolving mindset are dealt.

It dynamically learns the customer's requirements and analyses the behavior to serve high-quality recommendations. This increases the ROI and profit margins for the company.

Creating the most beneficial content for advertisement

Coming up with the best marketing pitch that attracts a broader audience is challenging. Models based on Q-Learning are trained on a reward basis and develop an inherent knowledge of positive actions and the desired results. The Reinforcement Learning model will find the advertisement that the users are more likely to click on, thus increasing the customer footprint.

Identifying interest areas of customers with store’s CCTV to deliver better advertisements and offers.

Reinforcement Learning For Consumers And Brands

Without the power of AI, there is a big hurdle in optimizing the reach of advertisements to the customers.

Analyzing which advertisement would suit the need at a given scenario is very hard by naive methods; it paves the way for Reinforcement Learning models. The algorithm meets associated user preferences and dynamically chooses the perfect frequency for buyers.

As a result, increased online conversions are transforming browsing into business.

Reinforcement Learning has taken over the traditional methods of creating video games.

As compared to traditional video games where we need to have a complex behavioral tree to craft the logic of game, training a Reinforcement Learning model is much simpler. Here, the agent is set to learn by itself in the simulated game environment by performing the necessary sequence of actions to achieve the desired behavior.

💡Pro-Tip: Looking to speed up your annotation process? Check out V7—Automated Image Annotation.

In Reinforcement Learning, the agent should be trained for all the aspects of the game like path finding, defense, attack and creating situation based strategies to make the game interesting for the opponent.

Depending upon the intelligence the bot has obtained, levels of the game are set.

Google DeepMind is a live example of Game Optimization.

We have seen in AlphaGo, a RL trained agent beat the strongest Go player in history scoring a goal that was considered impossible at that time. It is known to be a very challenging game for Artifical Intelligence.

AlphaGo, a computer program, created by DeepMind a Google company, uses an amalgamation of the advanced search tree and deep neural networks. These neural networks take the Go board as an input derive features through different network layers containing millions of neuron-like connections.

Reinforcement Learning agents are also used in bug detection and game testing. This is due to its ability to run a ton of iterations without human input, stress testing, and creating situations for potential bugs.

Newer games companies such as Ubisoft have recently utilized Reinforcement Learning to decrease the number of active bugs found within the game. RL agents are trained in the game environment using exploration and exploitation techniques to test some of its game mechanics in an attempt to fix them.

Reinforcement Learning Applications: Key Takeaways

Finally, here's a quick recap of everything we've learned:

- Reinforcement Learning involves training a model so that they produce a sequence of decisions. It is either trained using a positive mechanism where the models are rewarded for actions to be more likely to generate it in the future. On the other hand, negative Reinforcement Learning adds punishment so that they don't produce the current sequence of results again.

- Reinforcement Learning has changed the dynamics of various sectors like Healthcare, Robotics, Gaming, Retail, Marketing, and many more.

- Various companies have started managing the marketing campaigns digitally with Reinforcement Learning due to its fundamental ability to increase the profit margins by predicting the choices and behavior of customers towards the products/services.

- Healthcare is another sector where Reinforcement Learning is used to help doctors discover the treatment type, suggest appropriate doses of drugs and timings for taking such doses.

- Reinforcement Learning approaches are used in the field of Game Optimization and simulating synthetic environments for game creation.

- Reinforcement Learning also finds application in self-driving cars to train an agent for optimizing trajectories and dynamically planning the most efficient path.

- RL can be used for NLP use cases such as text summarization, question & answers, machine translation.

💡 Read next:

A Step-by-Step Guide to Text Annotation [+Free OCR Tool]

The Complete Guide to CVAT—Pros & Cons

5 Alternatives to Scale AI

The Ultimate Guide to Semi-Supervised Learning

9 Essential Features for a Bounding Box Annotation Tool

Mean Average Precision (mAP) Explained: Everything You Need to Know

The Complete Guide to Ensemble Learning

The Beginner’s Guide to Contrastive Learning

Pragati is a software developer at Microsoft, and a deep learning enthusiast. She writes about the fundamental mathematics behind deep neural networks.

“Collecting user feedback and using human-in-the-loop methods for quality control are crucial for improving Al models over time and ensuring their reliability and safety. Capturing data on the inputs, outputs, user actions, and corrections can help filter and refine the dataset for fine-tuning and developing secure ML solutions.”

Related articles

Explore Neptune Scale: tracker for foundation models -> Tour a live project 📈

Model-Based and Model-Free Reinforcement Learning: Pytennis Case Study

Reinforcement learning is a field of Artificial Intelligence in which you build an intelligent system that learns from its environment through interaction and evaluates what it learns in real-time.

A good example of this is self-driving cars, or when DeepMind built what we know today as AlphaGo, AlphaStar, and AlphaZero.

AlphaZero is a program built to master the games of chess, shogi and go (AlphaGo is the first program that beat a human Go master). AlphaStar plays the video game StarCraft II.

In this article, we’ll compare model-free vs model-based reinforcement learning. Along the way, we will explore:

- Fundamental concepts of Reinforcement Learning a) Markov decision processes / Q-Value / Q-Learning / Deep Q Network

- Difference between model-based and model-free reinforcement learning.

- Discrete mathematical approach to playing tennis – model-free reinforcement learning.

- Tennis game using Deep Q Network – model-based reinforcement learning.

Comparison/Evaluation

- References to learn more

SEE RELATED ARTICLES

7 Applications of Reinforcement Learning in Finance and Trading 10 Real-Life Applications of Reinforcement Learning Best Reinforcement Learning Tutorials, Examples, Projects, and Courses

Fundamental concepts of Reinforcement Learning

Any reinforcement learning problem includes the following elements:

- Agent – the program controlling the object of concern (for instance, a robot).

- Environment – this defines the outside world programmatically. Everything the agent(s) interacts with is part of the environment. It’s built for the agent to make it seem like a real-world case. It’s needed to prove the performance of an agent, meaning if it will do well once implemented in a real world application.

- Rewards – this gives us a score of how the algorithm performs with respect to the environment. It’s represented as 1 or 0. ‘1’ means that the policy network made the right move, ‘0’ means wrong move. In other words, rewards represent gains and losses.

- Policy – the algorithm used by the agent to decide its actions. This is the part that can be model-based or model-free.

Every problem that needs an RL solution starts with simulating an environment for the agent. Next, you build a policy network that guides the actions of the agent. The agent can then evaluate the policy if its corresponding action resulted in a gain or a loss.

The policy is our main discussion point for this article. Policy can be model-based or model-free. When building, our concern is how to optimize the policy network via policy gradient (PG).

PG algorithms directly try to optimize the policy to increase rewards. To understand these algorithms, we must take a look at Markov decision processes (MDP).

Markov decision processes / Q-Value / Q-Learning / Deep Q Network

MDP is a process with a fixed number of states, and it randomly evolves from one state to another at each step. The probability for it to evolve from state A to state B is fixed.

A lot of Reinforcement Learning problems with discrete actions are modeled as Markov decision processes , with the agent having no initial clue on the next transition state. The agent also has no idea on the rewarding principle, so it has to explore all possible states to begin to decode how to adjust to a perfect rewarding system. This will lead us to what we call Q Learning.

The Q-Learning algorithm is adapted from the Q-Value Iteration algorithm, in a situation where the agent has no prior knowledge of preferred states and rewarding principles. Q-Values can be defined as an optimal estimate of a state-action value in an MDP.

It is often said that Q-Learning doesn’t scale well to large (or even medium) MDPs with many states and actions. The solution is to approximate the Q-Value of any state-action pair (s,a). This is called Approximate Q-Learning.

DeepMind proposed the use of deep neural networks, which work much better, especially for complex problems – without the use of any feature engineering. A deep neural network used to estimate Q-Values is called a deep Q-network (DQN). Using DQN for approximated Q-learning is called Deep Q-Learning.

Difference between model-based and model-free Reinforcement Learning

RL algorithms can be mainly divided into two categories – model-based and model-free .

Model-based , as it sounds, has an agent trying to understand its environment and creating a model for it based on its interactions with this environment. In such a system, preferences take priority over the consequences of the actions i.e. the greedy agent will always try to perform an action that will get the maximum reward irrespective of what that action may cause.

On the other hand, model-free algorithms seek to learn the consequences of their actions through experience via algorithms such as Policy Gradient, Q-Learning, etc. In other words, such an algorithm will carry out an action multiple times and will adjust the policy (the strategy behind its actions) for optimal rewards, based on the outcomes.

Think of it this way, if the agent can predict the reward for some action before actually performing it thereby planning what it should do, the algorithm is model-based. While if it actually needs to carry out the action to see what happens and learn from it, it is model-free.

This results in different applications for these two classes, for e.g. a model-based approach may be the perfect fit for playing chess or for a robotic arm in the assembly line of a product, where the environment is static and getting the task done most efficiently is our main concern. However, in the case of real-world applications such as self-driving cars, a model-based approach might prompt the car to run over a pedestrian to reach its destination in less time (maximum reward), but a model-free approach would make the car wait till the road is clear (optimal way out).

To better understand this, we will explain everything with an example. In the example, we’ll build model-free and model-based RL for tennis games . To build the model, we need an environment for the policy to get implemented. However we won’t build the environment in this article, we’ll import one to use for our program.

Pytennis environment

We’ll use the Pytennis environment to build a model-free and model-based RL system.

A tennis game requires the following:

- 2 players which implies 2 agents.

- A tennis lawn – main environment.

- A single tennis ball.

- Movement of the agents left-right (or right-left direction).

The Pytennis environment specifications are:

- There are 2 agents (2 players) with a ball.

- There’s a tennis field of dimension (x, y) – (300, 500)

- The ball was designed to move on a straight line, such that agent A decides a target point between x1 (0) and x2 (300) of side B (Agent B side), therefore it displays the ball 50 different times with respect to an FPS of 20. This makes the ball move in a straight line from source to destination. This also applies to agent B.

- Movement of Agent A and Agent B is bound between (x1= 100, to x2 = 600).

- Movement of the ball is bound along the y-axis (y1 = 100 to y2 = 600).

- Movement of the ball is bound along the x-axis (x1 = 100, to x2 = 600).

Pytennis is an environment that mimics real-life tennis situations. As shown below, the image on the left is a model-free Pytennis game, and the one on the right is model-based .

Discrete mathematical approach to playing tennis – model-free Reinforcement Learning

Why “discrete mathematical approach to playing tennis”? Because this method is a logical implementation of the Pytennis environment.

The code below shows us the implementation of the ball movement on the lawn. You can find the source code here .

Here is how this works once the networks are initialized (Network A for Agent A and Network B for Agent B):

Each network is bounded by the directions of ball movement. Network A represents Agent A, which defines the movement of the ball from Agent A to any position between 100 and 300 along the x-axis at Agent B. This also applies to Network B (Agent B).

When the network is started, the .network method discretely generates 50 y-points (between y1 = 100 and y2 = 600), and corresponding x-points (between x1 which happens to be the location of the ball from Agent A to a randomly selected point x2 on Agent B side) for network A. This also applies to Network B (Agent B).

To automate the movement of each agent, the opposing agent has to move in a corresponding direction with respect to the ball. This can only be done by setting the x position of the ball to be the x position of the opposing agent, as in the code below.

Meanwhile the source agent has to move back to its default position from its current position. The code below illustrates this.

Now, to make the agents play with each other recursively, this has to run in a loop. After every 50 counts (50 frame display of the ball), the opposing player is made the next player. The code below puts all of it together in a loop.

And this is basic model-free reinforcement learning. It’s model-free because you need no form of learning or modelling for the 2 agents to play simultaneously and accurately.

Tennis game using Deep Q Network – model-based Reinforcement Learning

A typical example of model-based reinforcement learning is the Deep Q Network. Source code to this work is available here .

The code below illustrates the Deep Q Network, which is the model architecture for this work.

In this case, we need a policy network to control the movement of each agent as they move along the x-axis. Since the values are continuous, that is from (x1 = 100 to x2 = 300), we can’t have a model that predicts or works with 200 states.

To simplify this problem, we can split x1 and x2 into 10 states / 10 actions, and define an upper and lower bound for each state.

Note that we have 10 actions, because from a state there are 10 possibilities.

The code below illustrates the definition of both upper and lower bounds for each state.

The Deep Neural Network (DNN) used experimentally for this work is a network of 1 input (which represents the previous state), 2 hidden layers of 64 neurons each, and an output layer of 10 neurons (binary selection from 10 different states). This is shown below:

Now that we have a DQN model that predicts the next state/action of the model, and the Pytennis environment already sorted out the ball movement in a straight line, let’s go ahead and write a function that carries out an action by an agent, based on the DQN model prediction regarding it’s next state.

The detailed code below illustrates how agent A makes a decision on where to direct the ball (on Agent B’s side and vice-versa). This code also evaluates agent B, if it was able to receive the ball.

From the code above, function stepA gets executed when AgentA has to play. While playing, AgentA uses the next action predicted by DQN to estimate the target (x2 position, at Agent B, from the current position of the ball, x1, which is on it’s own side), by using the ball trajectory network developed by the Pytennis environment to make its own move.

Agent A, for example, is able to get a precise point x2 on Agent’s B side by using the function randomVal , as shown above, to randomly select a coordinate x2 bounded by the action given by DQN.

Finally, function stepA evaluates the response of AgentB to target point x2 by using the function evaluate_action . The function evaluate_action defines if AgentB should be penalized or rewarded. Just as this is described for AgentA to AgentB, it applies for AgentB to AgentA (same code by different variable names).

Now that we have the policy, reward, environment, states and actions correctly defined, we can go ahead and recursively make the two agents play the game with each other.

The code below shows how turns are taken by each agent after 50 ball displays. Note that for each ball display, the DQN is making a decision on where to toss the ball for the next agent to play.

Having played this game model-free and model-based, here are some differences that we need to be aware of:

If you’re interested, the videos below show these two techniques in action playing tennis games:

1. Model-free

2. Model-based

Tennis might be simple compared to self-driving cars, but hopefully this example showed you a few things about RL that you didn’t know.

The main difference between model-free and model-based RL is the policy network, which is required for model-based RL and unnecessary in model-free.

It’s worth noting that oftentimes, model-based RL takes a massive amount of time for the DNN to learn the states perfectly without getting it wrong.

But every technique has its drawbacks and advantages, choosing the right one depends on what exactly you need your program to do.

Thanks for reading, I left a few additional references for you to follow if you want to explore this topic more.

- AlphaGo documentary: https://www.youtube.com/watch?v=WXuK6gekU1Y

- List of reinforcement learning environments: https://medium.com/@mauriciofadelargerich/reinforcement-learning-environments-cff767bc241f

- Create your own reinforcement learning environment: https://towardsdatascience.com/create-your-own-reinforcement-learning-environment-beb12f4151ef

- Types of RL Environments: https://subscription.packtpub.com/book/big_data_and_business_intelligence/9781838649777/1/ch01lvl1sec14/types-of-rl-environment

- Model-based Deep Q Network: https://github.com/elishatofunmi/pytennis-Deep-Q-Network-DQN

- Discrete mathematics approach youtube video: https://youtu.be/iUYxZ2tYKHw

- Deep Q Network approach YouTube video: https://youtu.be/FCwGNRiq9SY

- Model-free discrete mathematics implementation: https://github.com/elishatofunmi/pytennis-Discrete-Mathematics-Approach-

- Hands-on Machine Learning with scikit-learn and TensorFlow: https://www.amazon.com/Hands-Machine-Learning-Scikit-Learn-TensorFlow/dp/1491962291

Was the article useful?

More about model-based and model-free reinforcement learning: pytennis case study, check out our product resources and related articles below:, observability in llmops: different levels of scale, llm observability: fundamentals, practices, and tools, 3 takes on end-to-end for the mlops stack: was it worth it, adversarial machine learning: defense strategies, explore more content topics:, manage your model metadata in a single place.

Join 50,000+ ML Engineers & Data Scientists using Neptune to easily log, compare, register, and share ML metadata.

Exploring Reinforcement Learning: A Case Study Applied to the Popular Snake Game

- Conference paper

- First Online: 28 February 2022

- Cite this conference paper

- Russell Sammut Bonnici 14 ,

- Chantelle Saliba 14 ,

- Giulia Elena Caligari 14 &

- Mark Bugeja 14

Part of the book series: Lecture Notes in Networks and Systems ((LNNS,volume 382))

Included in the following conference series:

- The International Conference on Intelligent Systems & Networks

Reinforcement Learning is a machine learning approach in which an agent interacts with their environment to gather information, and make an informed decision based on the accumulated information. In this research, we investigate the applicability of various reinforcement learning techniques for Snake, a video game popular on the Nokia 3310 mobile phone. Q-Learning (Quality-Learning), SARSA (State-Action Reward State-Action) and PPO (Proximal Policy Optimization), were implemented and evaluated for Snake. Q-Learning and SARSA did not generate optimal results due to the large environment of the game. Meanwhile, PPO was implemented with three varying approaches for input; a vector, CNN and raycasting based approach. PPO, in conjunction with raycasting, resulted in the best performance, with the snake agent learning for both collecting food and avoiding obstacles. Furthermore, A* Pathfinding was tested and it achieved a performance better than Q-Learning and SARSA but not better than PPO as it was less adaptable to large environments. In the future, agents in large dynamic game environments, may benefit further from utilizing PPO.

This is a preview of subscription content, log in via an institution to check access.

Access this chapter

Subscribe and save.

- Get 10 units per month

- Download Article/Chapter or eBook

- 1 Unit = 1 Article or 1 Chapter

- Cancel anytime

- Available as PDF

- Read on any device

- Instant download

- Own it forever

- Available as EPUB and PDF

- Compact, lightweight edition

- Dispatched in 3 to 5 business days

- Free shipping worldwide - see info

Tax calculation will be finalised at checkout

Purchases are for personal use only

Institutional subscriptions

Similar content being viewed by others

Leaving the NavMesh: An Ablative Analysis of Deep Reinforcement Learning for Complex Navigation in 3D Virtual Environments

Evaluating Human-like Behaviors of Video-Game Agents Autonomously Acquired with Biological Constraints

Mario Fast Learner: Fast and Efficient Solutions for Super Mario Bros

Anunpattana, P., Panumate, C., Iida, H.: Finding comfortable settings of snake game using game refinement measurement. In: Advances in Computer Science and Ubiquitous Computing, pp. 66–73 (2016)

Google Scholar

Jost, J.,, Li, W.: Reinforcement learning in complementarity game and population dynamics. Phys. Rev. E, Stat. Nonlinear Soft Matter Phys. 89 (2), 022113 (2014). ISSN: 15393755. http://search.proquest.com/docview/1639976188/

Kaelbling, L.P., Littman, M.L., Moore, A.W.: Reinforcement learning: a survey. In: CoRR cs.AI/9605103 (1996). https://arxiv.org/abs/cs/9605103

Sutton, R.S., Barto, A.G.: Reinforcement learning: an introduction. IEEE Trans. Neural Netw. 16 , 285–286 (1988)

MATH Google Scholar

Amiri, R., Mehrpouyan, H., Fridman, L., Mallik, R.K., Nallanathan, A., Matolak, D.: A machine learning approach for power allocation in HetNets considering QoS. In: 2018 IEEE International Conference on Communications (ICC), pp 1–7, May 2018. IEEE. https://ieeexplore.ieee.org/abstract/document/8422864

Zheng, Y.: Reinforcement learning and video games (2019). arXiv: 1909.04751 [cs.LG]

Silver, D., et al.: Mastering Chess and Shogi by self-play with a general reinforcement learning algorithm (2017). arXiv: 1712.01815 [cs.AI]

Mnih, V., et al.: Playing Atari with Deep Reinforcement Learning (2013). arXiv: 1312.5602 [cs.LG]

Ma, B., Tang, M.X., Zhang, J.: Exploration of reinforcement learning to SNAKE (2016)

Wei, Z., et al.: Autonomous agents in Snake Game via Deep Reinforcement Learning. In: 2018 IEEE International conference on Agents (ICA), pp 20–25, July 2018. https://doi.org/10.1109/AGENTS.2018.8460004.

Watkins, CJCH.: Learning from delayed rewards (1989)