High-Impact Articles

Journal of Survey Statistics and Methodology, sponsored by the American Association for Public Opinion Research and the American Statistical Association, began publishing in 2013. Its objective is to publish cutting edge scholarly articles on statistical and methodological issues for sample surveys, censuses, administrative record systems, and other related data.

OUP has granted free access to the articles on this page, which represent some of the most cited, most read, and most discussed articles from recent years. These articles are just a sample of the impressive body of research from Journal of Survey Statistics and Methodology.

- Online ISSN 2325-0992

- Copyright © 2024 American Association for Public Opinion Research

Articles on Surveys

Displaying 1 - 20 of 114 articles.

The number of religious ‘nones’ has soared, but not the number of atheists – and as social scientists, we wanted to know why

Christopher P. Scheitle, West Virginia University and Katie Corcoran, West Virginia University

Gen Zers and millennials are still big fans of books – even if they don’t call themselves ‘readers’

Kathi Inman Berens, Portland State University and Rachel Noorda, Portland State University

Honey bees are surprisingly abundant, research shows – but most are wild, not managed in hives

Francis Ratnieks, University of Sussex and Oliver Visick, University of Sussex

Gen Z and millennials have an unlikely love affair with their local libraries

US food insecurity surveys aren’t getting accurate data regarding Latino families

Cassandra M. Johnson, Texas State University; Amanda C. McClain, San Diego State University, and Katherine Dickin, Cornell University

What are young Australians most worried about? Finding affordable housing, they told us

Lucas Walsh, Monash University; Blake Cutler, Monash University; Thuc Bao Huynh, Monash University, and Zihong Deng, Monash University

Potentially faulty data spotted in surveys of drug use and other behaviors among LGBQ youth

Joseph Cimpian, New York University

Most Americans support NASA – but don’t think it should prioritize sending people to space

Mariel Borowitz, Georgia Institute of Technology and Teasel Muir-Harmony, Georgetown University

Americans remain hopeful about democracy despite fears of its demise – and are acting on that hope

Ray Block Jr, Penn State; Andrene Wright, Penn State, and Mia Angelica Powell, Penn State

US birth rates are at record lows – even though the number of kids most Americans say they want has held steady

Sarah Hayford, The Ohio State University and Karen Benjamin Guzzo, University of North Carolina at Chapel Hill

LGBTQ Americans are 9 times more likely to be victimized by a hate crime

Andrew Ryan Flores, American University; Ilan Meyer, University of California, Los Angeles, and Rebecca Stotzer, University of Hawaii

Mussels are disappearing from the Thames and growing smaller – and it’s partly because the river is cleaner

Isobel Ollard, University of Cambridge

What psychology tells us about the failure of the emergency services at the Manchester Arena bombing

Nicola Power, Lancaster University

Who sees what you flush? Wastewater surveillance for public health is on the rise, but a new survey reveals many US adults are still unaware

Rochelle H. Holm, University of Louisville

Eating lots of meat is bad for the environment – but we don’t know enough about how consumption is changing

Kerry Smith, University of Reading and Emma Garnett, University of Oxford

We asked Ukrainians living on the front lines what was an acceptable peace – here’s what they told us

Gerard Toal, Virginia Tech and Karina Korostelina, George Mason University

More than 1 in 5 US adults don’t want children

Zachary P. Neal, Michigan State University and Jennifer Watling Neal, Michigan State University

A window into the number of trans teens living in America

Jody L. Herman, University of California, Los Angeles; Andrew Ryan Flores, American University, and Kathryn K. O’Neill, University of California, Los Angeles

Are Australians socially inclusive? 5 things we learned after surveying 11,000 people for half a decade

Kun Zhao, Monash University and Liam Smith, Monash University

Climate change, the environment and the cost of living top the #SetTheAgenda poll

Misha Ketchell, The Conversation

Statistics articles from across Nature Portfolio

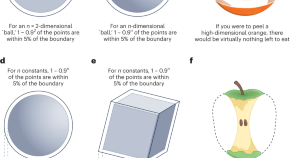

Statistics is the application of mathematical concepts to understanding and analysing large collections of data. A central tenet of statistics is to describe the variations in a data set or population using probability distributions. This analysis aids understanding of what underlies these variations and enables predictions of future changes.

Latest Research and Reviews

Grasshopper platform-assisted design optimization of Fujian rural earthen buildings considering low-carbon emissions reduction

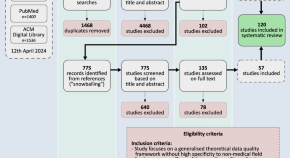

The METRIC-framework for assessing data quality for trustworthy AI in medicine: a systematic review

- Daniel Schwabe

- Katinka Becker

- Tobias Schaeffter

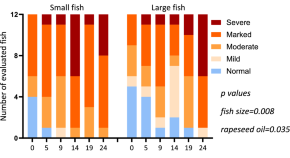

Effects of dietary fish to rapeseed oil ratio on steatosis symptoms in Atlantic salmon (Salmo salar L) of different sizes

- D. Siciliani

- Å. Krogdahl

A model-free and distribution-free multi-omics integration approach for detecting novel lung adenocarcinoma genes

- Shaofei Zhao

Intrinsic dimension as a multi-scale summary statistics in network modeling

- Iuri Macocco

- Antonietta Mira

- Alessandro Laio

A new possibilistic-based clustering method for probability density functions and its application to detecting abnormal elements

- Hung Tran-Nam

- Thao Nguyen-Trang

- Ha Che-Ngoc

News and Comment

Efficient learning of many-body systems

The Hamiltonian describing a quantum many-body system can be learned using measurements in thermal equilibrium. Now, a learning algorithm applicable to many natural systems has been found that requires exponentially fewer measurements than existing methods.

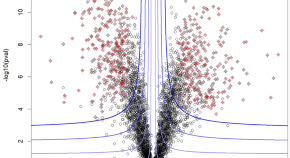

Fudging the volcano-plot without dredging the data

Selecting omic biomarkers using both their effect size and their differential status significance (i.e., selecting the “volcano-plot outer spray”) has long been equally biologically relevant and statistically troublesome. However, recent proposals are paving the way to resolving this dilemma.

- Thomas Burger

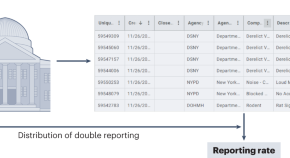

Disentangling truth from bias in naturally occurring data

A technique that leverages duplicate records in crowdsourcing data could help to mitigate the effects of biases in research and services that are dependent on government records.

- Daniel T. O’Brien

Sciama’s argument on life in a random universe and distinguishing apples from oranges

Dennis Sciama has argued that the existence of life depends on many quantities—the fundamental constants—so in a random universe life should be highly unlikely. However, without full knowledge of these constants, his argument implies a universe that could appear to be ‘intelligently designed’.

- Zhi-Wei Wang

- Samuel L. Braunstein

A method for generating constrained surrogate power laws

A paper in Physical Review X presents a method for numerically generating data sequences that are as likely to be observed under a power law as a given observed dataset.

- Zoe Budrikis

Connected climate tipping elements

Tipping elements are regions that are vulnerable to climate change and capable of sudden drastic changes. Now research establishes long-distance linkages between tipping elements, with the network analysis offering insights into their interactions on a global scale.

- Valerie N. Livina

Numbers, Facts and Trends Shaping Your World

Views on America’s global role diverge widely by age and party

War in Ukraine: Wide partisan differences on U.S. responsibility and support

Asian Americans: A survey data snapshot

Asian Americans are the fastest-growing major racial or ethnic group in the country. Here’s how they describe their own identities, their views of the U.S. and their ancestral homelands, their political and religious affiliations, and more.

- Learn more about: Chinese Americans | Filipino Americans | Indian Americans | Japanese Americans | Korean Americans | Vietnamese Americans

How Americans Get Local Political News

Experiences of U.S. adults who don’t have children

Latest Publications

How do states fill vacancies in the U.S. Senate? It depends on the state

In the event that a Senate seat becomes vacant, governors in 45 states have the power to appoint a temporary replacement.

Majority of Americans support more nuclear power in the country

Americans remain more likely to favor expanding solar power (78%) and wind power (72%) than nuclear power (56%).

A third of adults under age 35 say it is extremely or very important that the U.S. play an active role in world affairs.

What do Americans think about fewer people choosing to have children?

The share of U.S. adults younger than 50 without children who say they are unlikely to ever have children rose from 37% in 2018 to 47% in 2023.

Election 2024

How Latino voters view the 2024 presidential election

While Latino voters have favored Democratic candidates in presidential elections for many decades, the margin of support has varied.

10 facts about Republicans in the U.S.

Third-party and independent candidates for president often fall short of early polling numbers

Americans’ views of government’s role: Persistent divisions and areas of agreement

Cultural issues and the 2024 election

Quiz: Test your polling knowledge

The hardships and dreams of Asian Americans living in poverty

What public K-12 teachers want Americans to know about teaching

How people in 24 countries think democracy can improve

International Affairs

72% of Americans say the U.S. used to be a good example of democracy, but isn’t anymore

A median of 40% of adults across 34 other countries surveyed in 2024 say U.S. democracy used to be a good example for other countries to follow.

Most People in 35 Countries Say China Has a Large Impact on Their National Economy

Large majorities in nearly all 35 nations surveyed say China has a great deal or a fair amount of influence on their country’s economic conditions.

In some countries, immigration accounted for all population growth between 2000 and 2020

In 14 countries and territories, immigration accounted for more than 100% of population growth during this period.

NATO Seen Favorably in Member States; Confidence in Zelenskyy Down in Europe, U.S.

NATO is seen more positively than not across 13 member states. And global confidence in Ukraine’s leader has become more mixed since last year.

Internet & Technology

How Americans navigate politics on TikTok, X, Facebook and Instagram

X stands out as a place people go to keep up with politics. Still, some users see political posts on Facebook, TikTok and Instagram, too.

How Americans Get News on TikTok, X, Facebook and Instagram

X is still more of a news destination than these other platforms, but the vast majority of users on all four see news-related content.

72% of U.S. high school teachers say cellphone distraction is a major problem in the classroom

Some 72% of high school teachers say that students being distracted by cellphones is a major problem in their classroom.

Race & Ethnicity

What the data says about immigrants in the U.S.

In 2022, roughly 10.6 million immigrants living in the U.S. were born in Mexico, making up 23% of all U.S. immigrants.

The State of the Asian American Middle Class

The share of Asian Americans in the U.S. middle class has held steady since 2010, while the share in the upper-income tier has grown.

An Early Look at Black Voters’ Views on Biden, Trump and Election 2024

Black voters are more confident in Biden than Trump when it comes to having the qualities needed to serve another term.

A Majority of Latinas Feel Pressure To Support Their Families or To Succeed at Work

Many juggle cultural expectations and gender roles from both Latin America and the U.S., like doing housework and succeeding at work.

Asian Americans, Charitable Giving and Remittances

Overall, 64% of Asian American adults say they gave to a U.S. charitable organization in the 12 months before the survey. One-in-five say they gave to a charity in their Asian ancestral homeland during that time. And 27% say they sent money to someone living there.

U.S. Surveys

Pew Research Center has deep roots in U.S. public opinion research. Launched as a project focused primarily on U.S. policy and politics in the early 1990s, the Center has grown over time to study a wide range of topics vital to explaining America to itself and to the world.

International Surveys

Pew Research Center regularly conducts public opinion surveys in countries outside the United States as part of its ongoing exploration of attitudes, values and behaviors around the globe.

Data Science

Pew Research Center’s Data Labs uses computational methods to complement and expand on the Center’s existing research agenda.

Demographic Research

Pew Research Center tracks social, demographic and economic trends, both domestically and internationally.

Our Experts

“A record 23 million Asian Americans trace their roots to more than 20 countries … and the U.S. Asian population is projected to reach 46 million by 2060.”

Neil G. Ruiz, Head of New Research Initiatives

Methods 101 Videos

Methods 101: Random Sampling

The first video in Pew Research Center’s Methods 101 series helps explain random sampling – a concept that lies at the heart of all probability-based survey research – and why it’s important.

Methods 101: Survey Question Wording

Methods 101: Mode Effects

Methods 101: What Are Nonprobability Surveys?

Signature Reports

- Race and LGBTQ issues in K-12 schools

- Representative democracy remains a popular ideal, but people around the world are critical of how it’s working

- Americans’ dismal views of the nation’s politics

- Measuring religion in China

- Diverse cultures and shared experiences shape Asian American identities

- Parenting in America today

Editor’s Pick

- Who are you? The art and science of measuring identity

- Electric vehicle charging infrastructure in the U.S.

- 8 in 10 Americans say religion is losing influence in public life

- How Americans view weight-loss drugs and their potential impact on obesity in the U.S.

- Most Americans continue to say their side in politics is losing more often than it is winning

Immigration & Migration

- How Temporary Protected Status has expanded under the Biden administration

- Key facts about Asian Americans living in poverty

- Latinos’ views on the migrant situation at the U.S.-Mexico border

- Migrant encounters at the U.S.-Mexico border hit a record high at the end of 2023

- What we know about unauthorized immigrants living in the U.S.

Social Media

- 6 facts about Americans and TikTok

- WhatsApp and Facebook dominate the social media landscape in middle-income nations

- How teens and parents approach screen time

- Majorities in most countries surveyed say social media is good for democracy

- A declining share of adults, and few teens, support a U.S. TikTok ban

About Pew Research Center

Pew Research Center is a nonpartisan fact tank that informs the public about the issues, attitudes and trends shaping the world. It conducts public opinion polling, demographic research, media content analysis and other empirical social science research. Pew Research Center does not take policy positions. It is a subsidiary of The Pew Charitable Trusts.

© 2024 Pew Research Center

Understanding and Evaluating Survey Research

- December 2015

- Journal of the Advanced Practitioner in Oncology 6(2):168-171

The Scientist spoke with Maximilien Chaumon about his database showing how COVID-19 related lockdowns warped more than 2,800 people’s perception of time.

Survey Research | Definition, Examples & Methods

Published on August 20, 2019 by Shona McCombes. Revised on June 22, 2023.

Survey research means collecting information about a group of people by asking them questions and analyzing the results. To conduct an effective survey, follow these six steps:

- Determine who will participate in the survey

- Decide the type of survey (mail, online, or in-person)

- Design the survey questions and layout

- Distribute the survey

- Analyze the responses

- Write up the results

Surveys are a flexible method of data collection that can be used in many different types of research.

Table of contents

- What are surveys used for?

- Step 1: Define the population and sample

- Step 2: Decide on the type of survey

- Step 3: Design the survey questions

- Step 4: Distribute the survey and collect responses

- Step 5: Analyze the survey results

- Step 6: Write up the survey results

- Other interesting articles

- Frequently asked questions about surveys

What are surveys used for?

Surveys are used as a method of gathering data in many different fields. They are a good choice when you want to find out about the characteristics, preferences, opinions, or beliefs of a group of people.

Common uses of survey research include:

- Social research: investigating the experiences and characteristics of different social groups

- Market research: finding out what customers think about products, services, and companies

- Health research: collecting data from patients about symptoms and treatments

- Politics: measuring public opinion about parties and policies

- Psychology: researching personality traits, preferences and behaviours

Surveys can be used in both cross-sectional studies, where you collect data just once, and in longitudinal studies, where you survey the same sample several times over an extended period.

Step 1: Define the population and sample

Before you start conducting survey research, you should already have a clear research question that defines what you want to find out. Based on this question, you need to determine exactly who you will target to participate in the survey.

Populations

The target population is the specific group of people that you want to find out about. This group can be very broad or relatively narrow. For example:

- The population of Brazil

- US college students

- Second-generation immigrants in the Netherlands

- Customers of a specific company aged 18-24

- British transgender women over the age of 50

Your survey should aim to produce results that can be generalized to the whole population. That means you need to carefully define exactly who you want to draw conclusions about.

Several common research biases can arise if your survey is not generalizable, particularly sampling bias and selection bias. The presence of these biases has serious repercussions for the validity of your results.

It’s rarely possible to survey the entire population of your research – it would be very difficult to get a response from every person in Brazil or every college student in the US. Instead, you will usually survey a sample from the population.

The sample size you need depends on how big the population is, as well as on the margin of error and confidence level you are aiming for. You can use an online sample size calculator to work out how many responses you need.
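
As a rough illustration of what such a calculator does, here is a minimal Python sketch based on Cochran's formula for estimating a proportion, with a finite population correction. The function name and the default values (95% confidence, 5% margin of error, an assumed proportion of 0.5) are assumptions chosen for illustration, not taken from any particular calculator.

```python
import math
from statistics import NormalDist

def required_sample_size(population_size, margin_of_error=0.05,
                         confidence=0.95, p=0.5):
    """Estimate how many responses are needed to estimate a proportion.

    Hypothetical helper: assumes simple random sampling and full response.
    """
    # z-score for a two-sided confidence level (about 1.96 for 95%)
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    # Cochran's formula for a very large population
    n0 = (z ** 2) * p * (1 - p) / margin_of_error ** 2
    # Finite population correction shrinks n for small populations
    n = n0 / (1 + (n0 - 1) / population_size)
    return math.ceil(n)

small = required_sample_size(1_000)        # 278
large = required_sample_size(1_000_000)    # 384
```

Under these assumptions the required sample grows only slightly once the population is large, so the chosen margin of error usually matters more than the population size.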

There are many sampling methods that allow you to generalize to broad populations. In general, though, the sample should aim to be representative of the population as a whole. The larger and more representative your sample, the more valid your conclusions. Again, beware of various types of sampling bias as you design your sample, particularly self-selection bias, nonresponse bias, undercoverage bias, and survivorship bias.
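
One sampling method that aims for representativeness is stratified sampling with proportional allocation, where each subgroup contributes to the sample in proportion to its share of the population. The sketch below is illustrative only: the function name, the student population, and the two strata are hypothetical.

```python
import random

def stratified_sample(population, stratum_of, sample_size, seed=0):
    """Draw a proportionally allocated stratified random sample (sketch)."""
    rng = random.Random(seed)
    # Group population members by stratum
    strata = {}
    for person in population:
        strata.setdefault(stratum_of(person), []).append(person)
    sample = []
    for members in strata.values():
        # Allocate draws in proportion to the stratum's population share;
        # rounding means the total can differ slightly from sample_size
        k = round(sample_size * len(members) / len(population))
        sample.extend(rng.sample(members, min(k, len(members))))
    return sample

# Hypothetical population: 700 undergraduates and 300 graduate students
population = ([("undergrad", i) for i in range(700)]
              + [("grad", i) for i in range(300)])
sample = stratified_sample(population, stratum_of=lambda p: p[0], sample_size=100)
```

Here the sample contains 70 undergraduates and 30 graduate students, mirroring the 70/30 split in the assumed population.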

Step 2: Decide on the type of survey

There are two main types of survey:

- A questionnaire, where a list of questions is distributed by mail, online or in person, and respondents fill it out themselves.

- An interview, where the researcher asks a set of questions by phone or in person and records the responses.

Which type you choose depends on the sample size and location, as well as the focus of the research.

Questionnaires

Sending out a paper survey by mail is a common method of gathering demographic information (for example, in a government census of the population).

- You can easily access a large sample.

- You have some control over who is included in the sample (e.g. residents of a specific region).

- The response rate is often low, and the results are at risk for biases like self-selection bias.

Online surveys are a popular choice for students doing dissertation research, due to the low cost and flexibility of this method. There are many online tools available for constructing surveys, such as SurveyMonkey and Google Forms.

- You can quickly access a large sample without constraints on time or location.

- The data is easy to process and analyze.

- The anonymity and accessibility of online surveys mean you have less control over who responds, which can lead to biases like self-selection bias.

If your research focuses on a specific location, you can distribute a written questionnaire to be completed by respondents on the spot. For example, you could approach the customers of a shopping mall or ask all students to complete a questionnaire at the end of a class.

- You can screen respondents to make sure only people in the target population are included in the sample.

- You can collect time- and location-specific data (e.g. the opinions of a store’s weekday customers).

- The sample size will be smaller, so this method is less suitable for collecting data on broad populations and is at risk for sampling bias.

Oral interviews are a useful method for smaller sample sizes. They allow you to gather more in-depth information on people’s opinions and preferences. You can conduct interviews by phone or in person.

- You have personal contact with respondents, so you know exactly who will be included in the sample in advance.

- You can clarify questions and ask for follow-up information when necessary.

- The lack of anonymity may cause respondents to answer less honestly, and there is more risk of researcher bias.

Like questionnaires, interviews can be used to collect quantitative data: the researcher records each response as a category or rating and statistically analyzes the results. But they are more commonly used to collect qualitative data: the interviewees’ full responses are transcribed and analyzed individually to gain a richer understanding of their opinions and feelings.

Step 3: Design the survey questions

Next, you need to decide which questions you will ask and how you will ask them. It’s important to consider:

- The type of questions

- The content of the questions

- The phrasing of the questions

- The ordering and layout of the survey

Open-ended vs closed-ended questions

There are two main forms of survey questions: open-ended and closed-ended. Many surveys use a combination of both.

Closed-ended questions give the respondent a predetermined set of answers to choose from. A closed-ended question can include:

- A binary answer (e.g. yes/no or agree/disagree)

- A scale (e.g. a Likert scale with five points ranging from strongly agree to strongly disagree)

- A list of options with a single answer possible (e.g. age categories)

- A list of options with multiple answers possible (e.g. leisure interests)

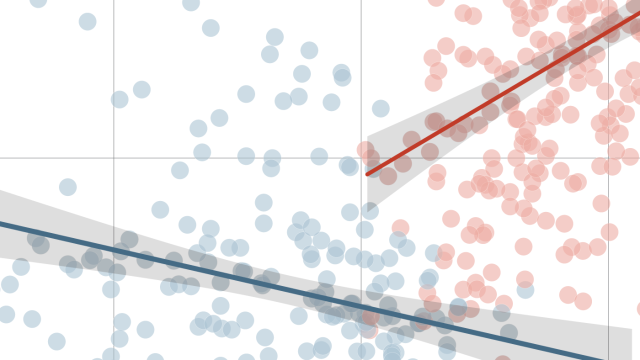

Closed-ended questions are best for quantitative research. They provide you with numerical data that can be statistically analyzed to find patterns, trends, and correlations.
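
To make the step from answer options to numerical data concrete, the following sketch maps the labels of a five-point agreement scale to numeric codes and tallies them. The exact labels, the 1 to 5 coding, and the sample answers are assumptions made for illustration.

```python
from collections import Counter

# Assumed five-point agreement scale; labels and numeric codes are illustrative
LIKERT_CODES = {
    "strongly disagree": 1,
    "disagree": 2,
    "neither agree nor disagree": 3,
    "agree": 4,
    "strongly agree": 5,
}

def encode_responses(responses):
    """Convert text answers to numeric codes, skipping unrecognised answers."""
    return [LIKERT_CODES[r.strip().lower()] for r in responses
            if r.strip().lower() in LIKERT_CODES]

responses = ["Agree", "Strongly agree", "Disagree", "agree ", "n/a"]
codes = encode_responses(responses)   # [4, 5, 2, 4]
counts = Counter(codes)               # how often each code was chosen
```

Silently skipping unrecognised answers keeps the sketch short; in a real analysis you would probably want to count and inspect them instead.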

Open-ended questions are best for qualitative research. This type of question has no predetermined answers to choose from. Instead, the respondent answers in their own words.

Open questions are most common in interviews, but you can also use them in questionnaires. They are often useful as follow-up questions to ask for more detailed explanations of responses to the closed questions.

The content of the survey questions

To ensure the validity and reliability of your results, you need to carefully consider each question in the survey. All questions should be narrowly focused with enough context for the respondent to answer accurately. Avoid questions that are not directly relevant to the survey’s purpose.

When constructing closed-ended questions, ensure that the options cover all possibilities. If you include a list of options that isn’t exhaustive, you can add an “other” field.

Phrasing the survey questions

In terms of language, the survey questions should be as clear and precise as possible. Tailor the questions to your target population, keeping in mind their level of knowledge of the topic. Avoid jargon or industry-specific terminology.

Survey questions are at risk for biases like social desirability bias, the Hawthorne effect, or demand characteristics. It’s critical to use language that respondents will easily understand, and avoid words with vague or ambiguous meanings. Make sure your questions are phrased neutrally, with no indication that you’d prefer a particular answer or emotion.

Ordering the survey questions

The questions should be arranged in a logical order. Start with easy, non-sensitive, closed-ended questions that will encourage the respondent to continue.

If the survey covers several different topics or themes, group together related questions. You can divide a questionnaire into sections to help respondents understand what is being asked in each part.

If a question refers back to or depends on the answer to a previous question, they should be placed directly next to one another.

Step 4: Distribute the survey and collect responses

Before you start, create a clear plan for where, when, how, and with whom you will conduct the survey. Determine in advance how many responses you require and how you will gain access to the sample.

When you are satisfied that you have created a strong research design suitable for answering your research questions, you can conduct the survey through your method of choice – by mail, online, or in person.

Step 5: Analyze the survey results

There are many methods of analyzing the results of your survey. First you have to process the data, usually with the help of a computer program to sort all the responses. You should also clean the data by removing incomplete or incorrectly completed responses.
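
A minimal sketch of the cleaning step might look like the following. It assumes each response is stored as a dictionary and that a response counts as incomplete when any required question is missing or blank; the field names and records are hypothetical.

```python
def clean_responses(responses, required_fields):
    """Keep only responses that answer every required question (sketch)."""
    cleaned = []
    for response in responses:
        answers = [response.get(field) for field in required_fields]
        # Treat missing keys, None, and blank strings as unanswered
        if all(a is not None and str(a).strip() != "" for a in answers):
            cleaned.append(response)
    return cleaned

raw = [
    {"id": 1, "age_group": "18-24", "satisfaction": 4},
    {"id": 2, "age_group": "", "satisfaction": 5},   # blank answer
    {"id": 3, "age_group": "25-34"},                 # missing answer
]
cleaned = clean_responses(raw, required_fields=["age_group", "satisfaction"])
```

Only the first record survives here. Whether to drop incomplete responses entirely or keep their partial answers is a judgment call that depends on the analysis.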

If you asked open-ended questions, you will have to code the responses by assigning labels to each response and organizing them into categories or themes. You can also use more qualitative methods, such as thematic analysis, which is especially suitable for analyzing interviews.
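
Coding is normally a matter of human judgment, but a crude first pass can be sketched with keyword matching. The codebook, its categories, and the example answers below are all hypothetical, and the results of such a pass would still need to be reviewed by hand.

```python
from collections import Counter

# Hypothetical codebook: each category (code) is linked to indicative keywords
CODEBOOK = {
    "price": ["expensive", "cheap", "cost", "price"],
    "service": ["staff", "service", "helpful", "rude"],
}

def code_response(text, codebook=CODEBOOK):
    """Return the sorted list of categories whose keywords appear in a response."""
    lowered = text.lower()
    return sorted(code for code, keywords in codebook.items()
                  if any(keyword in lowered for keyword in keywords))

answers = [
    "The staff were helpful but it was too expensive.",
    "Great price!",
    "Nothing to add.",
]
coded = [code_response(a) for a in answers]
# Count how many responses touch on each theme
theme_counts = Counter(code for codes in coded for code in codes)
```

A response can receive several codes or none at all, which is why the result for each answer is a list rather than a single label.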

Statistical analysis is usually conducted using programs like SPSS or Stata. The same set of survey data can be subject to many analyses.

Finally, when you have collected and analyzed all the necessary data, you will write it up as part of your thesis, dissertation, or research paper.

In the methodology section, you describe exactly how you conducted the survey. You should explain the types of questions you used, the sampling method, when and where the survey took place, and the response rate. You can include the full questionnaire as an appendix and refer to it in the text if relevant.

Then introduce the analysis by describing how you prepared the data and the statistical methods you used to analyze it. In the results section, you summarize the key results from your analysis.

In the discussion and conclusion, you give your explanations and interpretations of these results, answer your research question, and reflect on the implications and limitations of the research.


A questionnaire is a data collection tool or instrument, while a survey is an overarching research method that involves collecting and analyzing data from people using questionnaires.

A Likert scale is a rating scale that quantitatively assesses opinions, attitudes, or behaviors. It is made up of 4 or more questions that measure a single attitude or trait when response scores are combined.

To use a Likert scale in a survey, you present participants with Likert-type questions or statements, and a continuum of items, usually with 5 or 7 possible responses, to capture their degree of agreement.

Individual Likert-type questions are generally considered ordinal data, because the response options have a clear rank order but are not necessarily evenly spaced.

Overall Likert scale scores are sometimes treated as interval data. These scores are considered to have directionality and even spacing between them.

The type of data determines what statistical tests you should use to analyze your data.
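To make the distinction between item-level and scale-level data concrete, here is a small Python sketch. The responses are invented, and the 1 to 5 coding (1 = strongly disagree, 5 = strongly agree) is an assumption for the example.

```python
from statistics import mean, median

# Made-up data: each row is one respondent's answers to four
# Likert-type items, coded 1 (strongly disagree) to 5 (strongly agree).
responses = [
    [4, 5, 4, 3],
    [2, 1, 2, 2],
    [5, 4, 4, 5],
]

# Overall Likert scale score: combine the item scores per respondent.
scores = [sum(items) for items in responses]

# A single item is ordinal, so the median is a safe summary.
first_item_median = median(items[0] for items in responses)

# The combined score is sometimes treated as interval data,
# in which case a mean is commonly reported.
mean_score = mean(scores)

print(scores, first_item_median, round(mean_score, 2))
```

Whether the mean of the combined score is appropriate depends on whether you are willing to treat the scale as interval data.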

The priorities of a research design can vary depending on the field, but you usually have to specify:

- Your research questions and/or hypotheses

- Your overall approach (e.g., qualitative or quantitative)

- The type of design you’re using (e.g., a survey, experiment, or case study)

- Your sampling methods or criteria for selecting subjects

- Your data collection methods (e.g., questionnaires, observations)

- Your data collection procedures (e.g., operationalization, timing and data management)

- Your data analysis methods (e.g., statistical tests or thematic analysis)


McCombes, S. (2023, June 22). Survey Research | Definition, Examples & Methods. Scribbr. Retrieved August 7, 2024, from https://www.scribbr.com/methodology/survey-research/


Are you considering the survey participant’s experience?

After a hiatus from participating in online panel surveys, researcher Ben Tolchinsky revisited the practice to assess changes in the survey experience since the mid-2000s. Joining 16 panels and taking hundreds of surveys on various devices, the author conducted a qualitative assessment to determine if the experience had improved. This article details the findings from Tolchinsky’s survey participation.

Evaluating the survey participant experience

Editor’s note: Ben Tolchinsky is owner of CBT Insights, Atlanta.

When was the last time you participated in an online panel survey?

For me, it had been a while. Early in my career, in the mid-2000s, I joined a number of online panels so that I could stay “on the pulse” of online surveys and the industry. I developed three beliefs from this experience:

- Surveys are too long.

- Surveys are boring.

- Surveys are unrewarding.

I’ve maintained these beliefs over the years, not having encountered anything that would change them. However, despite my beliefs and best efforts, I’ve contributed my share of lengthy and boring surveys. Since that early experiment, I occasionally participate in surveys, mostly from companies of which I’m a customer.

Because of my beliefs, I’ve often wondered and worried about the health of the lifeblood of our industry: survey participation. Despite my concerns, my sample needs have always been met.

Early in 2024, I had an itch to evaluate the survey participant experience once again. Has it changed? Has it improved? Are surveys shorter, more enjoyable and more rewarding than they were in the mid-2000s? I wasn’t optimistic.

To help answer my questions, I joined 16 panels (see Table A), and over the course of many months, I participated in hundreds of online surveys on my desktop PC, laptop, iPhone and iPad. All surveys were taken on the Microsoft Edge browser, and I did not use any survey apps.

In this article I’ll look at each experience, in order, that a panelist encounters when participating in an online survey. Although the sample size of surveys taken is large, I did not record and quantify my experience. My conclusion is based on a qualitative assessment of my collective experiences.

Survey recruitment

Much like years ago, I received e-mail invitations to take surveys. And much like years ago, the number of e-mail invitations varied widely by panel, with as few as one per week to as many as 80 per week (see Table A). From my participant perspective, fewer invitations made me feel “fortunate” to have been invited to the survey, whereas the larger number of invitations seemed excessive to me. Others may appreciate the frequent notifications of survey availability.

However, unlike my experience from years ago, the invitation rarely took me to the survey advertised in the e-mail, instead placing me in a “router” that would either find a survey for me in about 15-to-30 seconds or take me to a set of general screening questions before putting me in the router.

I should note that two of the 16 panels operated more like those from years past. For these two, I would receive an invitation for an advertised survey and then be taken directly to that survey.

However, unlike in the past, I would be presented with another survey opportunity if I was disqualified.

The other unique aspect of the recruitment process for me was that most panels have a survey dashboard that I could visit at any time or be returned to after completing or disqualifying from a survey. On the dashboard, I could choose from several surveys ranging in length and reward. If I wanted a shorter survey, I could typically find one with a smaller reward. And if I was ambitious or motivated by larger rewards, I could typically find one ranging from 20 to even 45 or 60 minutes. Dashboards can also stimulate participation in specific surveys by increasing the number of rewards points per minute, which are easily comparable across surveys.

I recall many complaints in the past from panelists who did not like being disqualified from a survey and then having no other opportunities until a new invitation arrived (at which point the same thing would often happen). Although dashboards have pros (maximizing panelist utility) and cons (an impact on sample representativeness) for researchers, today’s recruitment approaches have eliminated this participant pain point.

Survey screening

I recall attending conferences where panel companies and others would discuss the need to utilize panelist demographic information so that participants did not have to answer the same demographic questions repeatedly while attempting to qualify for a survey.

Unfortunately, this need has not been met.

Hence, the process of attempting to qualify for a survey requires “routing” from one survey to the next, answering the same demographic questions repeatedly until finally qualifying or running out of available surveys. It can be incredibly frustrating, even laughable at times.

There were two other interesting aspects of the screening process:

- This is not social or political commentary, but it surprised me how many ways there now are to ask for a participant’s gender – one question had 13 options. Perhaps those who qualify for these responses are appreciative, but I think the many variations draw unwanted attention to a complex issue. Perhaps a standard will emerge.

- Bot detectors are now commonplace, appearing in most surveys. They’re not necessarily problematic, but those who aren’t familiar with them or their purpose might question why the survey is asking which one of the photos is an apple. At times, I had to prove I was a human two or three times.

It should be noted here that I answered every screening and survey question honestly, as a consumer, except for one. I, like most panelists, know how to dodge the security screener, and I did not give myself up each time.

Survey length

Although I don’t have data points, I believe that it’s generally agreed that many surveys are too long and that survey participants much prefer shorter surveys to lengthy surveys. In my experience, practitioners (including me) generally agree that surveys really shouldn’t exceed 15, maybe 20, minutes – largely to maintain broad survey participation and to ensure data quality. At conferences, practitioners usually debate which stakeholder – the corporate buyers, the market research suppliers, the panel companies – should own and enforce the effort to shorten surveys. A partnership among leading companies from each stakeholder is typically sought, but it is seemingly an impossible task.

Based on my experiment, I don’t believe anything has changed. While there are 5-, 10- and 15-minute surveys, there are also many 20-, 30-, 45- and even 60-minute surveys. Because of this experiment, I did not shy away from the lengthier surveys. I tried to complete them – I really did – but I often couldn’t. They seemed to go on and on with no end in sight. Furthermore, once my eyes began to glaze over and the frustration set in (around the 20-minute mark), I could no longer vouch for the quality of my responses, so it is probably better that I often gave up. If I was motivated by the incentive/reward, perhaps I would have marched on.

It wasn’t just the survey length. It was the repetitiveness, the length of the attribute batteries, the endless detailed questions about brands I know little about or everyday experiences I can barely recall. I’m a researcher, and I know why we do this, but please take a 30-minute or longer survey and see if you disagree.

Survey enjoyment

Based on my previous account, this assessment will not surprise you. When comparing my experience in the mid-2000s to my recent experience, I noticed only minor improvements on this dimension to a minority of the surveys I took. The improvements generally came in the form of easier administration of attributes and other types of batteries that require repetition.

Sometimes the attributes would appear one by one, and on occasion, I could drag-and-drop responses into rank order or onto a fixed scale.

I didn’t expect to be entertained, but I expected the experience to be an ounce or two more enjoyable (or less boring) than previously based simply on advancements in technology. I’ve seen wonderful examples of unique and/or gamified question types that are seemingly more “enjoyable” and engaging, but I didn’t encounter any of these, not once. I have little doubt that it would encourage more/repeat participation, increase the likelihood of completion and produce higher quality data – particularly for lengthier surveys. But the industry appears uninterested in making the necessary investments to achieve these benefits.

Survey quality

Survey quality was the biggest surprise for me. In my opinion, survey quality has declined. The issues that led me to this conclusion were neither egregious nor rampant. Many were of the basic variety, including misspellings, missing words and poor punctuation. More concerning issues included poorly worded questions, leading questions, unanswerable questions, confusing or incorrect scales, missing or failed programming logic and more. The most common (and frustrating) issue was the failure to include a “none of these” or “don’t know” option – when this occurred, I was forced to randomly select a response, and I shuddered at the thought of someone presenting this data.

Perhaps it shouldn’t have been a surprise. Software as a service (SaaS) survey platforms have increased in popularity due to cost and speed improvements by allowing essentially anyone in an organization to write and deploy a survey. I am not going to list the pros and cons of SaaS survey platforms, but it seems apparent to me that some, even many, surveys are not drafted by an experienced and trained practitioner.

I notice the issues, but I suspect that most panelists either don’t notice them or don’t care. And maybe that’s the point. I’m beginning to believe that “good enough” is now the standard and that organizations believe benefits of SaaS platforms outweigh some amount of bias that no one will ever know or care about.

There was one pleasant surprise, however. Surveys taken on my iPhone browser were better than I expected. Some were optimized for the smartphone and others required a bit of pinching and shifting, whereas only a small number were no different than on a computer browser and unreadable for my worsening eyesight.

Survey incentives and rewards

As previously stated, it is my belief that surveys are unrewarding. As shown in Table A, on three of the 16 panels, it could take as few as four or five surveys to earn a $5 reward. Not bad. Keep in mind, however, that those four or five surveys need to be of the 20-minute+ variety, and the participant must qualify for and complete the surveys – not always easy tasks. But at least the reward feels attainable and tangible. Participants no longer have to use their earned currency for magazine subscriptions – today’s rewards are cash or gift cards (Amazon is almost always one of the choices).

As you will also see in Table A, most panels require significantly more completed surveys to earn the baseline reward. In my estimation, it is quite challenging to earn a reward, which signifies “unrewarding” or at least mildly rewarding to me.

The survey participant experience

Based on this experiment, I’ve come to three conclusions. The first is that, in my opinion, surveys remain very much the same as in the mid-2000s – too long, boring and unrewarding.

The second conclusion is more revealing. It seems apparent to me that the MR industry does not need to improve the participant experience, or it would have improved it by now. Long, boring and unrewarding surveys are completed every day, and if quotas are consistently met, why does anything need to change? Perhaps I should be pleased that the lifeblood of our industry – survey participation – is theoretically sufficiently healthy.

My third conclusion is that I must be an ideologue who yearns for something better but is not pragmatic. I get it … if it ain’t broke, don’t fix it.


Autonomous Cars and Consumer Choices: A Stated Preference Approach

- Published: 06 August 2024


- Hiroaki Miyoshi (ORCID: orcid.org/0000-0003-1466-0475)

This study aims to identify the factors that influence consumers' choice of autonomous cars in their new car purchase behavior. We surveyed stated preferences for autonomous driving technology and then analyzed the survey data using a multinomial logit model to examine how various factors (including price, consumer attributes, and respondents' evaluations of autonomous car characteristics) influence consumers' choice of car technology. The results of the analysis indicate that experience with adaptive cruise control (ACC), as well as expectations about the enjoyment that autonomous cars will bring to consumers' daily lives, have a significant impact on their decision.
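For readers unfamiliar with the model named in the abstract, the following Python sketch shows how a multinomial logit turns utilities into choice probabilities. It is only an illustration: the coefficients and prices are invented, not the paper's estimates, and the utility specification is a simplifying assumption. The three car classes are the ones named in the paper's notes.

```python
import math

def mnl_probabilities(utilities):
    """Multinomial logit: P(i) = exp(V_i) / sum over j of exp(V_j)."""
    top = max(utilities.values())  # subtract the max for numerical stability
    exp_v = {alt: math.exp(v - top) for alt, v in utilities.items()}
    total = sum(exp_v.values())
    return {alt: e / total for alt, e in exp_v.items()}

# Assumed coefficients, chosen only to make the example concrete.
BETA_PRICE = -0.5          # utility per million yen of purchase price
BETA_ACC_EXPERIENCE = 0.8  # added to Level-3 and Level-4 cars for ACC users

# Assumed prices in million yen for the three car classes.
PRICES = {"ADAS-equipped": 3.0, "Level-3": 3.5, "Level-4": 4.5}

def choice_probabilities(has_acc_experience):
    """Build each alternative's utility, then apply the logit formula."""
    utilities = {}
    for car, price in PRICES.items():
        v = BETA_PRICE * price
        if has_acc_experience and car != "ADAS-equipped":
            v += BETA_ACC_EXPERIENCE
        utilities[car] = v
    return mnl_probabilities(utilities)

with_acc = choice_probabilities(True)
without_acc = choice_probabilities(False)
print(with_acc)
print(without_acc)
```

Under these assumed values, a positive ACC-experience coefficient shifts probability from the ADAS-equipped car toward the two autonomous classes, which is the kind of effect the abstract describes.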


[ 13 ] provides a comprehensive review of the literature on stated preference/choice studies related to automated vehicles.

Contents of SP surveys in this study are described in detail in [ 14 ].

To facilitate understanding, the three car classes (ADAS-equipped cars, Level-3 cars, and Level-4 cars) were described to survey respondents respectively as assisted-driving cars , partially autonomous cars , and highly autonomous cars .

Note that subclass R3 is not used in the survey.

For this portion of the survey, a range of highways permitting autonomous driving was indicated, and survey respondents were shown one of the following three options, selected at random: (1) Major highways (Tomei, etc.), (2) Highways with two lanes in each direction, (3) All highways.

This refers to the frequency with which take-over requests (TORs) are issued. Survey respondents were shown one of the following four options, selected at random: (1) once per hour, (2) once per day, (3) once per week, (4) once per month.

This refers to the length of time within which a human driver must respond to a take-over request. Survey respondents were shown one of the following four options, selected at random: (1) immediately, (2) within 3 seconds, (3) within 10 seconds, (4) within 30 seconds.

This refers to the frequency of minimum-risk maneuver (MRM) incidents. Survey respondents were shown one of the following four options, selected at random: (1) once per day, (2) once per month, (3) once per year, (4) once every two years.
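The random assignment of attribute levels described in the four notes above could be sketched as follows. The levels are copied from the notes; the attribute names, the independent uniform draws, and the seed are assumptions for illustration, and the survey's actual randomization procedure may have differed.

```python
import random

# Levels as listed in the notes; the dictionary keys are hypothetical names.
ATTRIBUTE_LEVELS = {
    "highway_range": [
        "Major highways (Tomei, etc.)",
        "Highways with two lanes in each direction",
        "All highways",
    ],
    "tor_frequency": [
        "once per hour", "once per day", "once per week", "once per month",
    ],
    "tor_response_time": [
        "immediately", "within 3 seconds", "within 10 seconds",
        "within 30 seconds",
    ],
    "mrm_frequency": [
        "once per day", "once per month", "once per year",
        "once every two years",
    ],
}

def draw_profile(rng):
    """Pick one level per attribute, independently and uniformly."""
    return {name: rng.choice(levels)
            for name, levels in ATTRIBUTE_LEVELS.items()}

rng = random.Random(2024)  # fixed seed so the draw is reproducible
profile = draw_profile(rng)
print(profile)
```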

See [ 17 ] for further information except (B-4).

This survey queried household income in a multiple-choice format, with a single option for all income levels above 20 million yen. To exclude artifacts of this question design from the explanatory variable for household income, we introduce a dummy variable indicating income above 20 million yen.
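A minimal Python sketch of the dummy-variable idea in this note follows. Only the top-coded category (above 20 million yen) comes from the note; the other brackets, the midpoint values, and the feature names are hypothetical.

```python
TOP_CODE = "above 20 million yen"

# Hypothetical brackets with assumed representative values (million yen).
BRACKET_VALUES = {
    "under 5 million yen": 2.5,
    "5 to 10 million yen": 7.5,
    "10 to 20 million yen": 15.0,
    TOP_CODE: 20.0,  # placeholder; the dummy absorbs the top-coding effect
}

def income_features(bracket):
    """Return the income variable plus a dummy flagging the top bracket."""
    return {
        "income": BRACKET_VALUES[bracket],
        "income_top_coded": int(bracket == TOP_CODE),
    }

print(income_features("5 to 10 million yen"))
print(income_features(TOP_CODE))
```

With the dummy included as a separate regressor, the coefficient on the income variable is not distorted by the arbitrary value assigned to the open-ended top bracket.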

For example, gasoline cars with an engine displacement above 2000 cc are classified as standard-sized cars.

Abbreviations

Advanced driver-assistance systems

Autonomous emergency braking

Driving assistant

Take-over request

Minimum-risk maneuver

Adaptive cruise control

Nordhoff, S., Kyriakidis, M., Van Arem, B., Happee, R.: A multi-level model on automated vehicle acceptance (MAVA): a review-based study. Theor. Issues Ergon. Sci. 20 (6), 682–710 (2019). https://doi.org/10.1080/1463922x.2019.1621406


Venkatesh, V., Morris, M.G., Davis, G.B., Davis, F.D.: User acceptance of information technology: toward a unified view. MIS Q. 27 (3), 425–478 (2003). https://doi.org/10.2307/30036540

Venkatesh, V., Thong, J.Y.L., Xu, X.: Unified theory of acceptance and use of technology: a synthesis and the road ahead. J. Assoc. Inf. Syst. 17 (5), 328–376 (2016). https://doi.org/10.17705/1jais.00428

Bansal, P., Kockelman, K.M., Singh, A.: Assessing public opinions of and interest in new vehicle technologies: an Austin perspective. Transp. Res. Part C: Emerg. Technol. 67 , 1–14 (2016). https://doi.org/10.1016/j.trc.2016.01.019

Jiang, Y., Zhang, J., Wang, Y., Wang, W.: Capturing ownership behavior of autonomous vehicles in Japan based on a stated preference survey and a mixed logit model with repeated choices. Int. J. Sustain. Transp. 13 (10), 788–801 (2018). https://doi.org/10.1080/15568318.2018.1517841

Liu, P., Guo, Q., Ren, F., Wang, L., Xu, Z.: Willingness to pay for self-driving vehicles: Influences of demographic and psychological factors. Transp. Res. Part C: Emerg. Technol. 100 , 306–317 (2019). https://doi.org/10.1016/j.trc.2019.01.022

Liu, P., Yang, R., Xu, Z.: Public acceptance of fully automated driving: effects of social trust and risk/benefit perceptions. Risk Anal. 39 (2), 326–341 (2019). https://doi.org/10.1111/risa.13143

Shabanpour, R., Golshani, N., Shamshiripour, A., Mohammadian, A.: Eliciting preferences for adoption of fully automated vehicles using best-worst analysis. Transp. Res. Part C: Emerg. Technol. 93 , 463–478 (2018). https://doi.org/10.1016/j.trc.2018.06.014

Tan, L., Ma, C., Xu, X., Xu, J.: Choice behavior of autonomous vehicles based on logistic models. Sustainability 12 (1) (2019). https://doi.org/10.3390/su12010054

Daziano, R.A., Sarrias, M., Leard, B.: Are consumers willing to pay to let cars drive for them? Analyzing response to autonomous vehicles. Transp. Res. Part C: Emerg. Technol. 78 , 150–164 (2017). https://doi.org/10.1016/j.trc.2017.03.003

Haboucha, C.J., Ishaq, R., Shiftan, Y.: User preferences regarding autonomous vehicles. Transp. Res. Part C: Emerg. Technol. 78 , 37–49 (2017). https://doi.org/10.1016/j.trc.2017.01.010

Potoglou, D., Whittle, C., Tsouros, I., Whitmarsh, L.: Consumer intentions for alternative fuelled and autonomous vehicles: a segmentation analysis across six countries. Transp. Res. Part D: Transp. Environ. 79 , 1–17 (2020). https://doi.org/10.1016/j.trd.2020.102243

Gkartzonikas, C., Gkritza, K.: What have we learned? A review of stated preference and choice studies on autonomous vehicles. Transp. Res. Part C: Emerg. Technol. 98 , 323–337 (2019). https://doi.org/10.1016/j.trc.2018.12.003

University of Tokyo, and Doshisha University (commissioned by NEDO), Research on assessment of the impact of automated driving on society and the economy and on measures to promote deployment (In Japanese), 2023 available at https://www.sip-adus.go.jp/rd/ . Accessed 30 Nov 2023

Suda, Y., Miyoshi, H.: Development of assessment methodology for socioeconomic impacts of automated driving including traffic accident reduction. In: SIP 2nd Phase: Automated Driving for Universal Services-Final Results Report (2018-2022), pp. 173–179. (2022). Available at https://www.sip-adus.go.jp/rd/rd_page04.php . Accessed 30 Nov 2023


Nishihori, Y., Morikawa, T.: Analysis of the important factors affecting the acceptance of autonomous vehicles before and after test ride: Considering the awareness difference for technology and contents of test ride. J City Plann Inst Jpn 54 (3), 696–702 (2019). Available at https://cir.nii.ac.jp/crid/1390845702311790720 . Accessed 30 Nov 2023 (In Japanese)

Miyaki, Y.: Surveys and evaluations for fostering public acceptance. In: SIP 2nd Phase: Automated Driving for Universal Services-SIP 2nd Phase —Mid-Term Results Report (2018–2020), 021, pp. 124-129. Available at https://www.sip-adus.go.jp/rd/rd_page03.php . Accessed 30 Nov 2023

Kyriakidis, M., Happee, R., de Winter, J.C.F.: Public opinion on automated driving: results of an international questionnaire among 5000 respondents. Transp. Res. Part F: Traff. Psychol. Behav. 32 , 127–140 (2015). https://doi.org/10.1016/j.trf.2015.04.014

Dong, X., DiScenna, M., Guerra, E.: Transit user perceptions of driverless buses. Transportation 46 (1), 35–50 (2017). https://doi.org/10.1007/s11116-017-9786-y

Krueger, R., Rashidi, T.H., Rose, J.M.: Preferences for shared autonomous vehicles. Transp. Res. Part C: Emerg. Technol. 69 , 343–355 (2016). https://doi.org/10.1016/j.trc.2016.06.015

Menon, N.: Autonomous vehicles: An empirical assessment of consumers' perceptions, intended adoption, and impacts on household vehicle ownership, University of South Florida, available at https://digitalcommons.usf.edu . Accessed 30 Oct 2023

Nordhoff, S., de Winter, J., Kyriakidis, M., van Arem, B., Happee, R.: Acceptance of driverless vehicles: results from a large cross-national questionnaire study. J. Adv. Transp. 1–22 (2018). https://doi.org/10.1155/2018/5382192

Revelle, W.R.: psych: Procedures for personality and psychological research (Version 2.3.9) [Computer software]. 2023, available at https://cran.r-project.org/web/packages/psych/psych.pdf (accessed September 30, 2023)

Croissant, Y.: Estimation of multinomial logit models in R: The mlogit Packages. J. Stat. Softw. 95 (11), 1–41 (2020). https://doi.org/10.18637/jss.v095.i11

Taniguchi, A., Enoch, M., Theofilatos, A., Ieromonachou, P.: Understanding acceptance of autonomous vehicles in Japan, UK, and Germany. Urban Plann. Transp. Res. 10 (1), 514–535 (2022). https://doi.org/10.1080/21650020.2022.2135590

Miyaki, Y.: Public attitudes towards automated driving. Trends in the Sciences 27 (2), 100–104 (2022). https://doi.org/10.5363/tits.27.2_100 . (In Japanese)


Acknowledgements

This paper is based on results obtained from a project, JPNP18012, commissioned by the New Energy and Industrial Technology Development Organization (NEDO). For the survey discussed in this paper, the questions regarding consumer acceptance that appear on the survey page titled “Survey questions probing awareness and opinions of autonomous driving technology” are questions that were previously used to gauge social acceptance within another project in JPNP18012. We extend our heartfelt gratitude to NEDO and Yukiko Miyaki, executive chief researcher at Dai-Ichi Research Institute Inc., for granting permission to use this material. The survey was conducted with the participation of Shoji Watanabe, a researcher at Doshisha University. We sincerely appreciate his efforts in conducting the survey. We are also grateful for the many valuable suggestions we received from researchers at the University of Tokyo.

The online surveys conducted for this study were reviewed and approved (case number 2021-09) by the ethics committee of Doshisha University’s Institute for Technology, Enterprise and Competitiveness.

Author information

Authors and affiliations.

Faculty of Policy Studies, Doshisha University, Karasuma-higashi-iru, Imadegawa-dori, Kamigyo-ku, Kyoto, 602-8580, Japan

Hiroaki Miyoshi


Corresponding author

Correspondence to Hiroaki Miyoshi.

Ethics declarations

Conflict of interest.

The authors declare that they have no conflict of interest.


About this article

Miyoshi, H. Autonomous Cars and Consumer Choices: A Stated Preference Approach. Int. J. ITS Res. (2024). https://doi.org/10.1007/s13177-024-00408-1


Received: 09 December 2023

Revised: 23 April 2024

Accepted: 10 June 2024

Published: 06 August 2024

DOI: https://doi.org/10.1007/s13177-024-00408-1


- Autonomous car

- Social acceptance

- Consumer expectation

- Stated preference

- Factor analysis

- Multinomial logit model

- Adaptive cruise control


Invest in team sports say 41% of experts with women’s sport on track for continued growth - new PwC Global Sports Survey

- Press Release

- 02 Aug 2024

Teams and leagues (41%), gaming (22%) and tech (17%) are the most attractive sectors to invest in

85% of sports experts predict double digit growth in women’s sport

59% of sports organisations do not have a strategy for GenAI incorporation into their business model

PwC today unveiled the 8th edition of its Global Sports Survey, showcasing optimistic prospects for the business of sport. The survey, which gathered insights from 411 sports leaders worldwide, examined the current state of the sports industry and the leaders' expectations for the next three to five years. Although the outlook is cautiously optimistic, the report indicates positive sentiment across all regions.

With the continued growth of big US sports and global football, as well as the emergence of sports such as Padel and Pickleball, there are significant growth opportunities across the sector.

Clive Reeves, PwC Global Sports Leader comments:

"The report provides valuable insights into the state of the sports industry and its future prospects, from some of the top leaders and sports strategists globally. Despite economic uncertainties, the industry remains optimistic and poised for growth. This summer in particular highlights the pulling power that sports continue to have - from the Men’s UEFA Euro 2024 to the Paris Olympic and Paralympic Games."

"We believe this survey will be an invaluable resource for businesses and industry leaders, sparking crucial discussions and debates on how the sports industry can seize growth opportunities and navigate potential challenges."

Investment in the sports industry

The sports sector is becoming highly sought-after due to continued revenue growth (based on media rights and sponsorship deals) and more sustainable business models emerging. Sports experts surveyed are optimistic about institutional investors' prospects, especially with growth in the sports market. While growth isn't universal, certain investors are well-positioned to seize opportunities in this evolving landscape. The 411 experts surveyed by PwC identified teams and leagues (41%), gaming (22%) and tech (17%) as the top investment areas.

The scarcity of investable sports assets has driven up valuations in recent transactions, prompting shifts in investment strategies and structures. Relaxed ownership regulations in the US and the continued rise in asset valuations are attracting ever more diverse investors. Sports leaders surveyed reported the growing popularity of minority investment and joint venture investment (both 36%). Dedicated sports investment funds and athlete-backed funds are becoming more active. They are partnering with operators to unlock value and seek synergies across portfolios, with athlete funds in particular using their pulling power to attract bigger audiences.

Major sporting events

44% of sports executives believe financial concerns are a key barrier to hosting a large event. Despite citing the investment and infrastructure benefits (25%) and the tourism benefits (24%) of hosting such events, experts still believe that they can lack public support (19%) and suitable facilities to host in (15%). The majority of those surveyed believe that new hosting models (64%) and the utilisation of existing venues (60%) are needed to help future major sporting events be delivered in a sustainable way.

86% of experts also believe a multi-location model is best for hosting such events - great news for the upcoming EURO 2028 across the UK and Ireland, as well as the future Olympics and Paralympics.

Women’s sport

Women's sports are experiencing a surge in interest and significant growth potential, driven by record-breaking events like the FIFA Women's World Cup (Summer 2023) and the NCAA women's basketball tournament (March 2024). Sports executives believe women’s sports will see double-digit growth over the next three to five years, highlighting the significant potential still to be realised.

Sports leaders surveyed highlight that increased media coverage has been crucial for this growth and stress the importance of continued focus from broadcasters and media outlets. To attract new audiences, 18% of respondents cite increased promotion (advertising, ticket prices), 16% emphasise live broadcasting of women’s sports events, and 13% mention enhancing the matchday experience (food options, accessibility). Additionally, 12% advocate for improved athlete storytelling, and another 12% emphasise the need for family-friendly scheduling.

GenAI & Sports

Generative AI (GenAI) and other innovative technologies present significant growth opportunities for sporting organisations, but their adoption and impact vary across the industry. Sports tech and media companies are poised to benefit the most, focusing on content creation, distribution and fan engagement. Yet 59% of sports leaders reported not having a clear GenAI strategy, highlighting barriers such as funding and capability requirements, thus offering early adopters a chance to gain a competitive edge.

Media (26%), technology (21%) and fantasy/betting (16%) are the industry stakeholders that stand to benefit most from GenAI. An enhanced ability to create content more quickly and at lower cost could unlock further commercial opportunities, including new pricing and fan engagement models. With many GenAI use cases still emerging, sports organisations will likely adopt a ‘wait and see’ approach. As more solutions come to market, organisations will have a greater opportunity to develop an overarching strategy and implementation plan.

Survey results suggest that teams, leagues and federations have been slower to embrace GenAI - 67% of respondents in this category do not yet have a plan for GenAI, while 15% do not see it as relevant for the business.

At PwC, our purpose is to build trust in society and solve important problems. We’re a network of firms in 151 countries with over 364,000 people who are committed to delivering quality in assurance, advisory and tax services. Find out more and tell us what matters to you by visiting us at www.pwc.com. PwC refers to the PwC network and/or one or more of its member firms, each of which is a separate legal entity. Please see www.pwc.com/structure for further details.

© 2024 PwC. All rights reserved.

Media Enquiries

Press office, PwC United Kingdom

Gemma-Louise Bond

Corporate Affairs Manager - Retail, consumer and leisure, PwC United Kingdom

Tel: +44 (0)7483 147794

2024:Q2 Quarterly Highlights

Labor market conditions improved slightly for recent college graduates in the second quarter of 2024. The unemployment rate edged down to 4.5 percent and the underemployment rate inched lower to 40.5 percent.

This web feature tracks employment data for recent college graduates across the United States since 1990, allowing for a historical perspective on the experience of those moving into the labor market.

The feature can be used to:

- compare the unemployment rate for recent college graduates with that of other groups

- monitor the underemployment rate of recent college graduates

A table tracks outcomes by college major with the latest available annual data.

How to cite this report:

Federal Reserve Bank of New York, The Labor Market for Recent College Graduates, https://nyfed.org/collegelabor.

The data do not represent official estimates of the Federal Reserve Bank of New York, its President, the Federal Reserve System, or the Federal Open Market Committee.