- About AssemblyAI

The top free Speech-to-Text APIs, AI Models, and Open Source Engines

This post compares the best free Speech-to-Text APIs and AI models on the market today, including APIs that have a free tier. We’ll also look at several free open-source Speech-to-Text engines and explore why you might choose an API vs. an open-source library, or vice versa.

Choosing the best Speech-to-Text API , AI model, or open-source engine to build with can be challenging. You need to compare accuracy, model design, features, support options, documentation, security, and more.

This post examines the best free Speech-to-Text APIs and AI models on the market today, including ones that have a free tier, to help you make an informed decision. We’ll also look at several free open-source Speech-to-Text engines and explore why you might choose an API or AI model vs. an open-source library, or vice versa.

Looking for a powerful speech-to-text API or AI model?

Learn why AssemblyAI is the leading Speech AI partner.

Free Speech-to-Text APIs and AI Models

APIs and AI models are more accurate, easier to integrate, and come with more out-of-the-box features than open-source options. However, large-scale use of APIs and AI models can come with a higher cost than open-source options.

If you’re looking to use an API or AI model for a small project or a trial run, many of today’s Speech-to-Text APIs and AI models have a free tier. This means that the API or model is free for anyone to use up to a certain volume per day, per month, or per year.

Let’s compare three of the most popular Speech-to-Text APIs and AI models with a free tier: AssemblyAI, Google, and AWS Transcribe.

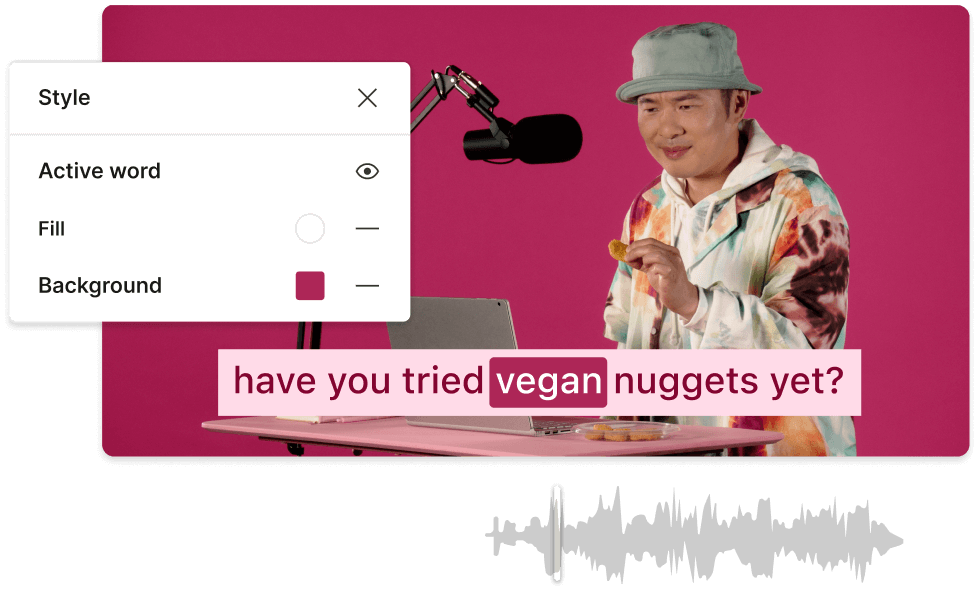

AssemblyAI offers speech AI models via an API that product teams and developers can use to build powerful AI solutions based on voice data for their users.

AssemblyAI offers cutting-edge AI models such as Speaker Diarization , Topic Detection, Entity Detection , Automated Punctuation and Casing , Content Moderation , Sentiment Analysis , Text Summarization , and more. These AI models help users get more out of voice data, with continuous improvements being made to accuracy .

The company offers a $50 credit to get users started with speech-to-text.

AssemblyAI also offers Speech Understanding models, including Audio Intelligence models and LeMUR. LeMUR enables users to leverage Large Language Models (LLMs) to pull valuable information from their voice data—including answering questions, generating summaries and action items, and more.

Its high accuracy and diverse collection of AI models built by AI experts make AssemblyAI a sound option for developers looking for a free Speech-to-Text API. The API also supports virtually every audio and video file format out-of-the-box for easier transcription.

AssemblyAI offers two options for Speech-to-Text: "Best" and "Nano. " Best is the default model, which gives users access to the company's most accurate and advanced Speech-to-Text offering to help users capture the nuances of voice data. The company's Nano tier offers high-quality Speech-to-Text at an accessible price point for users that require cost efficiency.

AssemblyAI has expanded the languages it supports to include 17 different languages for its Best offering and 102 languages for its Nano offering, with additional languages released monthly. See the full list here .

AssemblyAI’s easy-to-use models also allow for quick set-up and transcription in any programming language. You can copy/paste code examples in your preferred language directly from the AssemblyAI Docs or use the AssemblyAI Python SDK or another one of its ready-to-use integrations .

- Free to test in the AI playground , plus $50 credits with an API sign-up

- Speech-to-Text Best – $0.37 per hour

- Speech-to-Text Nano – $0.12 per hour

- Streaming Speech-to-Text – $0.47 per hour

- Speech Understanding – varies

- Volume pricing is also available

See the full pricing list here .

- High accuracy

- Breadth of AI models available, built by AI experts

- Continuous model iteration and improvement

- Developer-friendly documentation and SDKs

- Pay as you go and custom plans

- White glove support

- Strict security and privacy practices

- Models are not open-source

Google Speech-to-Text is a well-known speech transcription API. Google gives users 60 minutes of free transcription, with $300 in free credits for Google Cloud hosting.

Google only supports transcribing files already in a Google Cloud Bucket, so the free credits won’t get you very far. Google also requires you to sign up for a GCP account and project — whether you're using the free tier or paid.

With good accuracy and 125+ languages supported, Google is a decent choice if you’re willing to put in some initial work.

- 60 minutes of free transcription

- $300 in free credits for Google Cloud hosting

- Decent accuracy

- Multi-language support

- Only supports transcription of files in a Google Cloud Bucket

- Difficult to get started

- Lower accuracy than other similarly-priced APIs

- AWS Transcribe

AWS Transcribe offers one hour free per month for the first 12 months of use.

Like Google, you must create an AWS account first if you don’t already have one. AWS also has lower accuracy compared to alternative APIs and only supports transcribing files already in an Amazon S3 bucket.

However, if you’re looking for a specific feature, like medical transcription, AWS has some options. Its Transcribe Medical API is a medical-focused ASR option that is available today.

- One hour free per month for the first 12 months of use

- Tiered pricing , based on usage, ranges from $0.02400 to $0.00780

- Integrates into existing AWS ecosystem

- Medical language transcription

- Difficult to get started from scratch

- Only supports transcribing files already in an Amazon S3 bucket

Open-Source Speech Transcription engines

An alternative to APIs and AI models, open-source Speech-to-Text libraries are completely free--with no limits on use. Some developers also see data security as a plus, since your data doesn’t have to be sent to a third party or the cloud.

There is work involved with open-source engines, so you must be comfortable putting in a lot of time and effort to get the results you want, especially if you are trying to use these libraries at scale. Open-source Speech-to-Text engines are typically less accurate than the APIs discussed above.

If you want to go the open-source route, here are some options worth exploring:

DeepSpeech is an open-source embedded Speech-to-Text engine designed to run in real-time on a range of devices, from high-powered GPUs to a Raspberry Pi 4. The DeepSpeech library uses end-to-end model architecture pioneered by Baidu.

DeepSpeech also has decent out-of-the-box accuracy for an open-source option and is easy to fine-tune and train on your own data.

- Easy to customize

- Can use it to train your own model

- Can be used on a wide range of devices

- Lack of support

- No model improvement outside of individual custom training

- Heavy lift to integrate into production-ready applications

Kaldi is a speech recognition toolkit that has been widely popular in the research community for many years.

Like DeepSpeech, Kaldi has good out-of-the-box accuracy and supports the ability to train your own models. It’s also been thoroughly tested—a lot of companies currently use Kaldi in production and have used it for a while—making more developers confident in its application.

- Can use it to train your own models

- Active user base

- Can be complex and expensive to use

- Uses a command-line interface

Flashlight ASR (formerly Wav2Letter)

Flashlight ASR, formerly Wav2Letter, is Facebook AI Research’s Automatic Speech Recognition (ASR) Toolkit. It is also written in C++ and usesthe ArrayFire tensor library.

Like DeepSpeech, Flashlight ASR is decently accurate for an open-source library and is easy to work with on a small project.

- Customizable

- Easier to modify than other open-source options

- Processing speed

- Very complex to use

- No pre-trained libraries available

- Need to continuously source datasets for training and model updates, which can be difficult and costly

- SpeechBrain

SpeechBrain is a PyTorch-based transcription toolkit. The platform releases open implementations of popular research works and offers a tight integration with Hugging Face for easy access.

Overall, the platform is well-defined and constantly updated, making it a straightforward tool for training and finetuning.

- Integration with Pytorch and Hugging Face

- Pre-trained models are available

- Supports a variety of tasks

- Even its pre-trained models take a lot of customization to make them usable

- Lack of extensive docs makes it not as user-friendly, except for those with extensive experience

Coqui is another deep learning toolkit for Speech-to-Text transcription. Coqui is used in over twenty languages for projects and also offers a variety of essential inference and productionization features.

The platform also releases custom-trained models and has bindings for various programming languages for easier deployment.

- Generates confidence scores for transcripts

- Large support comunity

- No longer updated and maintained by Coqui

Whisper by OpenAI, released in September 2022, is comparable to other current state-of-the-art open-source options.

Whisper can be used either in Python or from the command line and can also be used for multilingual translation.

Whisper has five different models of varying sizes and capabilities, depending on the use case, including v3 released in November 2023 .

However, you’ll need a fairly large computing power and access to an in-house team to maintain, scale, update, and monitor the model to run Whisper at a large scale, making the total cost of ownership higher compared to other options.

As of March 2023, Whisper is also now available via API . On-demand pricing starts at $0.006/minute.

- Multilingual transcription

- Can be used in Python

- Five models are available, each with different sizes and capabilities

- Need an in-house research team to maintain and update

- Costly to run

Which free Speech-to-Text API, AI model, or Open Source engine is right for your project?

The best free Speech-to-Text API, AI model, or open-source engine will depend on our project. Do you want something that is easy-to-use, has high accuracy, and has additional out-of-the-box features? If so, one of these APIs might be right for you:

Alternatively, you might want a completely free option with no data limits—if you don’t mind the extra work it will take to tailor a toolkit to your needs. If so, you might choose one of these open-source libraries:

Whichever you choose, make sure you find a product that can continually meet the needs of your project now and what your project may develop into in the future.

Want to get started with an API?

Get a free API key for AssemblyAI.

Popular posts

AssemblyAI's C# .NET SDK + Latest Tutorials

Developer Educator

Build a Discord Voice Bot to Add ChatGPT to Your Voice Channel

Featured writer

What is speech recognition? A comprehensive guide

Announcement

Introducing the AssemblyAI C# .NET SDK

Navigation Menu

Search code, repositories, users, issues, pull requests..., provide feedback.

We read every piece of feedback, and take your input very seriously.

Saved searches

Use saved searches to filter your results more quickly.

To see all available qualifiers, see our documentation .

- Notifications You must be signed in to change notification settings

Cross-platform, real-time, offline speech recognition plugin for Unreal Engine. Based on Whisper OpenAI technology, whisper.cpp.

gtreshchev/RuntimeSpeechRecognizer

Folders and files.

| Name | Name | |||

|---|---|---|---|---|

| 117 Commits | ||||

Repository files navigation

Runtime Speech Recognizer

High-performance OpenAI's Whisper speech recognition Explore the docs » Marketplace . Releases Discord support chat

Key features

- Fast recognition speed

- English-only and multilingual models available, with multilingual supporting 100 languages

- Different model sizes available (from 75 Mb to 2.9 Gb)

- Automatic download of language models in the Editor

- Optional translation of recognized speech to English

- Customizable properties

- Easy selection of model size and language in settings

- No static libraries or external dependencies

- Cross-platform compatibility (Windows, Mac, Linux, Android, iOS, etc)

Additional information

The implementation is based on whisper.cpp .

Unreal® is a trademark or registered trademark of Epic Games, Inc. in the United States of America and elsewhere.

Unreal® Engine, Copyright 1998 – 2024, Epic Games, Inc. All rights reserved.

Contributors 2

Speech to Text - Voice Typing & Transcription

Take notes with your voice for free, or automatically transcribe audio & video recordings. amazingly accurate, secure & blazing fast..

~ Proudly serving millions of users since 2015 ~

I need to >

Dictate Notes

Start taking notes, on our online voice-enabled notepad right away, for free. Learn more.

Transcribe Recordings

Automatically transcribe (& optionally translate) recordings, audio and video files, YouTubes and more, in no time. Learn more.

Speechnotes is a reliable and secure web-based speech-to-text tool that enables you to quickly and accurately transcribe & translate your audio and video recordings, as well as dictate your notes instead of typing, saving you time and effort. With features like voice commands for punctuation and formatting, automatic capitalization, and easy import/export options, Speechnotes provides an efficient and user-friendly dictation and transcription experience. Proudly serving millions of users since 2015, Speechnotes is the go-to tool for anyone who needs fast, accurate & private transcription. Our Portfolio of Complementary Speech-To-Text Tools Includes:

Voice typing - Chrome extension

Dictate instead of typing on any form & text-box across the web. Including on Gmail, and more.

Transcription API & webhooks

Speechnotes' API enables you to send us files via standard POST requests, and get the transcription results sent directly to your server.

Zapier integration

Combine the power of automatic transcriptions with Zapier's automatic processes. Serverless & codeless automation! Connect with your CRM, phone calls, Docs, email & more.

Android Speechnotes app

Speechnotes' notepad for Android, for notes taking on your mobile, battle tested with more than 5Million downloads. Rated 4.3+ ⭐

iOS TextHear app

TextHear for iOS, works great on iPhones, iPads & Macs. Designed specifically to help people with hearing impairment participate in conversations. Please note, this is a sister app - so it has its own pricing plan.

Audio & video converting tools

Tools developed for fast - batch conversions of audio files from one type to another and extracting audio only from videos for minimizing uploads.

Our Sister Apps for Text-To-Speech & Live Captioning

Complementary to Speechnotes

Reads out loud texts, files & web pages

Listen on the go to any written content, from custom texts to websites & e-books, for free.

Speechlogger

Live Captioning & Translation

Live captions & simultaneous translation for conferences, online meetings, webinars & more.

Need Human Transcription? We Can Offer a 10% Discount Coupon

We do not provide human transcription services ourselves, but, we partnered with a UK company that does. Learn more on human transcription and the 10% discount .

Dictation Notepad

Start taking notes with your voice for free

Speech to Text online notepad. Professional, accurate & free speech recognizing text editor. Distraction-free, fast, easy to use web app for dictation & typing.

Speechnotes is a powerful speech-enabled online notepad, designed to empower your ideas by implementing a clean & efficient design, so you can focus on your thoughts. We strive to provide the best online dictation tool by engaging cutting-edge speech-recognition technology for the most accurate results technology can achieve today, together with incorporating built-in tools (automatic or manual) to increase users' efficiency, productivity and comfort. Works entirely online in your Chrome browser. No download, no install and even no registration needed, so you can start working right away.

Speechnotes is especially designed to provide you a distraction-free environment. Every note, starts with a new clear white paper, so to stimulate your mind with a clean fresh start. All other elements but the text itself are out of sight by fading out, so you can concentrate on the most important part - your own creativity. In addition to that, speaking instead of typing, enables you to think and speak it out fluently, uninterrupted, which again encourages creative, clear thinking. Fonts and colors all over the app were designed to be sharp and have excellent legibility characteristics.

Example use cases

- Voice typing

- Writing notes, thoughts

- Medical forms - dictate

- Transcribers (listen and dictate)

Transcription Service

Start transcribing

Fast turnaround - results within minutes. Includes timestamps, auto punctuation and subtitles at unbeatable price. Protects your privacy: no human in the loop, and (unlike many other vendors) we do NOT keep your audio. Pay per use, no recurring payments. Upload your files or transcribe directly from Google Drive, YouTube or any other online source. Simple. No download or install. Just send us the file and get the results in minutes.

- Transcribe interviews

- Captions for Youtubes & movies

- Auto-transcribe phone calls or voice messages

- Students - transcribe lectures

- Podcasters - enlarge your audience by turning your podcasts into textual content

- Text-index entire audio archives

Key Advantages

Speechnotes is powered by the leading most accurate speech recognition AI engines by Google & Microsoft. We always check - and make sure we still use the best. Accuracy in English is very good and can easily reach 95% accuracy for good quality dictation or recording.

Lightweight & fast

Both Speechnotes dictation & transcription are lightweight-online no install, work out of the box anywhere you are. Dictation works in real time. Transcription will get you results in a matter of minutes.

Super Private & Secure!

Super private - no human handles, sees or listens to your recordings! In addition, we take great measures to protect your privacy. For example, for transcribing your recordings - we pay Google's speech to text engines extra - just so they do not keep your audio for their own research purposes.

Health advantages

Typing may result in different types of Computer Related Repetitive Strain Injuries (RSI). Voice typing is one of the main recommended ways to minimize these risks, as it enables you to sit back comfortably, freeing your arms, hands, shoulders and back altogether.

Saves you time

Need to transcribe a recording? If it's an hour long, transcribing it yourself will take you about 6! hours of work. If you send it to a transcriber - you will get it back in days! Upload it to Speechnotes - it will take you less than a minute, and you will get the results in about 20 minutes to your email.

Saves you money

Speechnotes dictation notepad is completely free - with ads - or a small fee to get it ad-free. Speechnotes transcription is only $0.1/minute, which is X10 times cheaper than a human transcriber! We offer the best deal on the market - whether it's the free dictation notepad ot the pay-as-you-go transcription service.

Dictation - Free

- Online dictation notepad

- Voice typing Chrome extension

Dictation - Premium

- Premium online dictation notepad

- Premium voice typing Chrome extension

- Support from the development team

Transcription

$0.1 /minute.

- Pay as you go - no subscription

- Audio & video recordings

- Speaker diarization in English

- Generate captions .srt files

- REST API, webhooks & Zapier integration

Compare plans

| Dictation Free | Dictation Premium | Transcription | |

|---|---|---|---|

| Unlimited dictation | ✅ | ✅ | |

| Online notepad | ✅ | ✅ | |

| Voice typing extension | ✅ | ✅ | |

| Editing | ✅ | ✅ | ✅ |

| Ads free | ✅ | ✅ | |

| Transcribe recordings | ✅ | ||

| Transcribe Youtubes | ✅ | ||

| API & webhooks | ✅ | ||

| Zapier | ✅ | ||

| Export to captions | ✅ | ||

| Extra security | ✅ | ✅ | |

| Support from the development team | ✅ | ✅ |

Privacy Policy

We at Speechnotes, Speechlogger, TextHear, Speechkeys value your privacy, and that's why we do not store anything you say or type or in fact any other data about you - unless it is solely needed for the purpose of your operation. We don't share it with 3rd parties, other than Google / Microsoft for the speech-to-text engine.

Privacy - how are the recordings and results handled?

- transcription service.

Our transcription service is probably the most private and secure transcription service available.

- HIPAA compliant.

- No human in the loop. No passing your recording between PCs, emails, employees, etc.

- Secure encrypted communications (https) with and between our servers.

- Recordings are automatically deleted from our servers as soon as the transcription is done.

- Our contract with Google / Microsoft (our speech engines providers) prohibits them from keeping any audio or results.

- Transcription results are securely kept on our secure database. Only you have access to them - only if you sign in (or provide your secret credentials through the API)

- You may choose to delete the transcription results - once you do - no copy remains on our servers.

- Dictation notepad & extension

For dictation, the recording & recognition - is delegated to and done by the browser (Chrome / Edge) or operating system (Android). So, we never even have access to the recorded audio, and Edge's / Chrome's / Android's (depending the one you use) privacy policy apply here.

The results of the dictation are saved locally on your machine - via the browser's / app's local storage. It never gets to our servers. So, as long as your device is private - your notes are private.

Payments method privacy

The whole payments process is delegated to PayPal / Stripe / Google Pay / Play Store / App Store and secured by these providers. We never receive any of your credit card information.

More generic notes regarding our site, cookies, analytics, ads, etc.

- We may use Google Analytics on our site - which is a generic tool to track usage statistics.

- We use cookies - which means we save data on your browser to send to our servers when needed. This is used for instance to sign you in, and then keep you signed in.

- For the dictation tool - we use your browser's local storage to store your notes, so you can access them later.

- Non premium dictation tool serves ads by Google. Users may opt out of personalized advertising by visiting Ads Settings . Alternatively, users can opt out of a third-party vendor's use of cookies for personalized advertising by visiting https://youradchoices.com/

- In case you would like to upload files to Google Drive directly from Speechnotes - we'll ask for your permission to do so. We will use that permission for that purpose only - syncing your speech-notes to your Google Drive, per your request.

SpeechTexter is a free multilingual speech-to-text application aimed at assisting you with transcription of notes, documents, books, reports or blog posts by using your voice. This app also features a customizable voice commands list, allowing users to add punctuation marks, frequently used phrases, and some app actions (undo, redo, make a new paragraph).

SpeechTexter is used daily by students, teachers, writers, bloggers around the world.

It will assist you in minimizing your writing efforts significantly.

Voice-to-text software is exceptionally valuable for people who have difficulty using their hands due to trauma, people with dyslexia or disabilities that limit the use of conventional input devices. Speech to text technology can also be used to improve accessibility for those with hearing impairments, as it can convert speech into text.

It can also be used as a tool for learning a proper pronunciation of words in the foreign language, in addition to helping a person develop fluency with their speaking skills.

Accuracy levels higher than 90% should be expected. It varies depending on the language and the speaker.

No download, installation or registration is required. Just click the microphone button and start dictating.

Speech to text technology is quickly becoming an essential tool for those looking to save time and increase their productivity.

Powerful real-time continuous speech recognition

Creation of text notes, emails, blog posts, reports and more.

Custom voice commands

More than 70 languages supported

SpeechTexter is using Google Speech recognition to convert the speech into text in real-time. This technology is supported by Chrome browser (for desktop) and some browsers on Android OS. Other browsers have not implemented speech recognition yet.

Note: iPhones and iPads are not supported

List of supported languages:

Afrikaans, Albanian, Amharic, Arabic, Armenian, Azerbaijani, Basque, Bengali, Bosnian, Bulgarian, Burmese, Catalan, Chinese (Mandarin, Cantonese), Croatian, Czech, Danish, Dutch, English, Estonian, Filipino, Finnish, French, Galician, Georgian, German, Greek, Gujarati, Hebrew, Hindi, Hungarian, Icelandic, Indonesian, Italian, Japanese, Javanese, Kannada, Kazakh, Khmer, Kinyarwanda, Korean, Lao, Latvian, Lithuanian, Macedonian, Malay, Malayalam, Marathi, Mongolian, Nepali, Norwegian Bokmål, Persian, Polish, Portuguese, Punjabi, Romanian, Russian, Serbian, Sinhala, Slovak, Slovenian, Southern Sotho, Spanish, Sundanese, Swahili, Swati, Swedish, Tamil, Telugu, Thai, Tsonga, Tswana, Turkish, Ukrainian, Urdu, Uzbek, Venda, Vietnamese, Xhosa, Zulu.

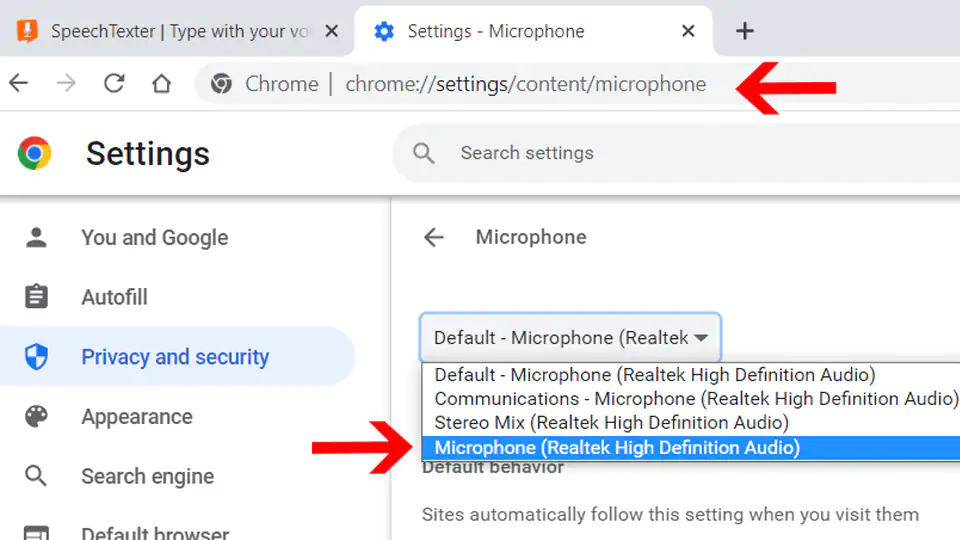

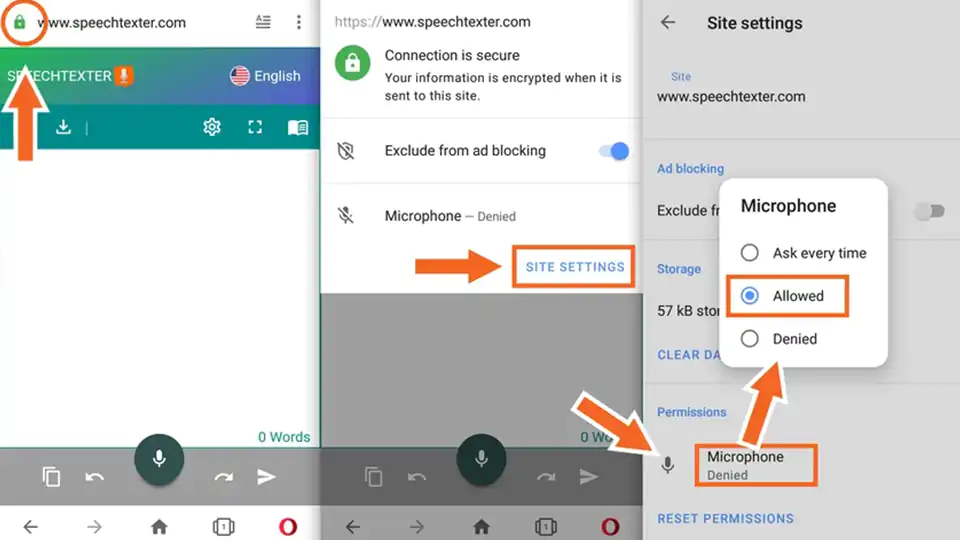

Instructions for web app on desktop (Windows, Mac, Linux OS)

Requirements: the latest version of the Google Chrome [↗] browser (other browsers are not supported).

1. Connect a high-quality microphone to your computer.

2. Make sure your microphone is set as the default recording device on your browser.

To go directly to microphone's settings paste the line below into Chrome's URL bar.

chrome://settings/content/microphone

To capture speech from video/audio content on the web or from a file stored on your device, select 'Stereo Mix' as the default audio input.

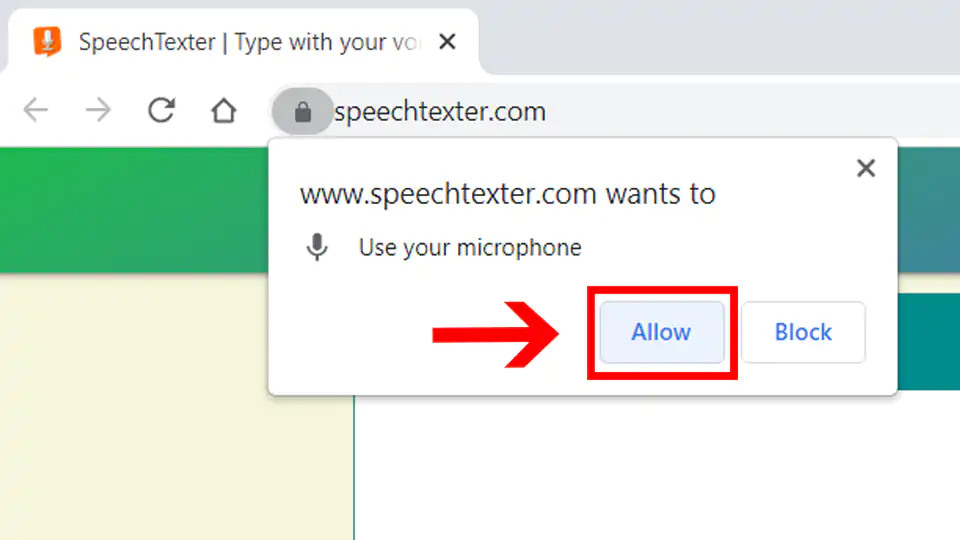

3. Select the language you would like to speak (Click the button on the top right corner).

4. Click the "microphone" button. Chrome browser will request your permission to access your microphone. Choose "allow".

5. You can start dictating!

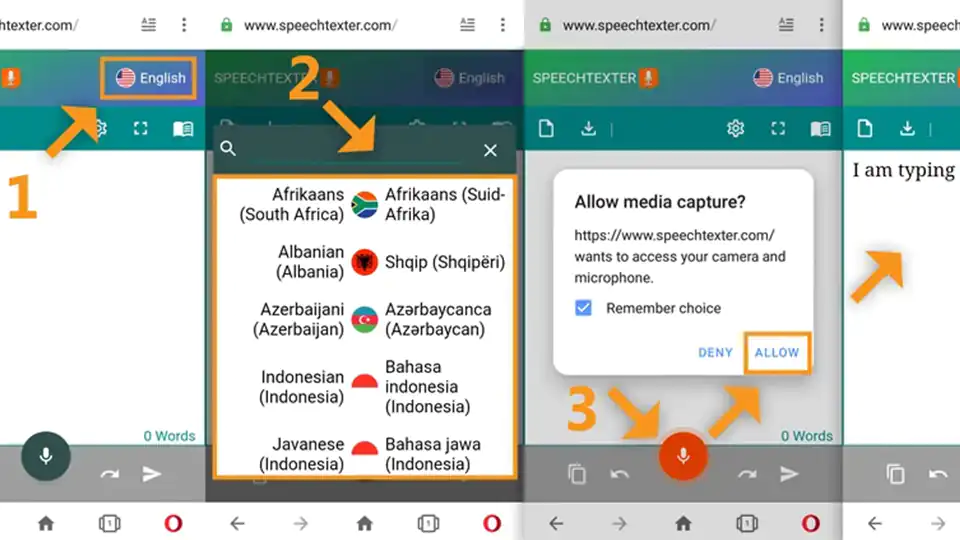

Instructions for the web app on a mobile and for the android app

Requirements: - Google app [↗] installed on your Android device. - Any of the supported browsers if you choose to use the web app.

Supported android browsers (not a full list): Chrome browser (recommended), Edge, Opera, Brave, Vivaldi.

1. Tap the button with the language name (on a web app) or language code (on android app) on the top right corner to select your language.

2. Tap the microphone button. The SpeechTexter app will ask for permission to record audio. Choose 'allow' to enable microphone access.

3. You can start dictating!

Common problems on a desktop (Windows, Mac, Linux OS)

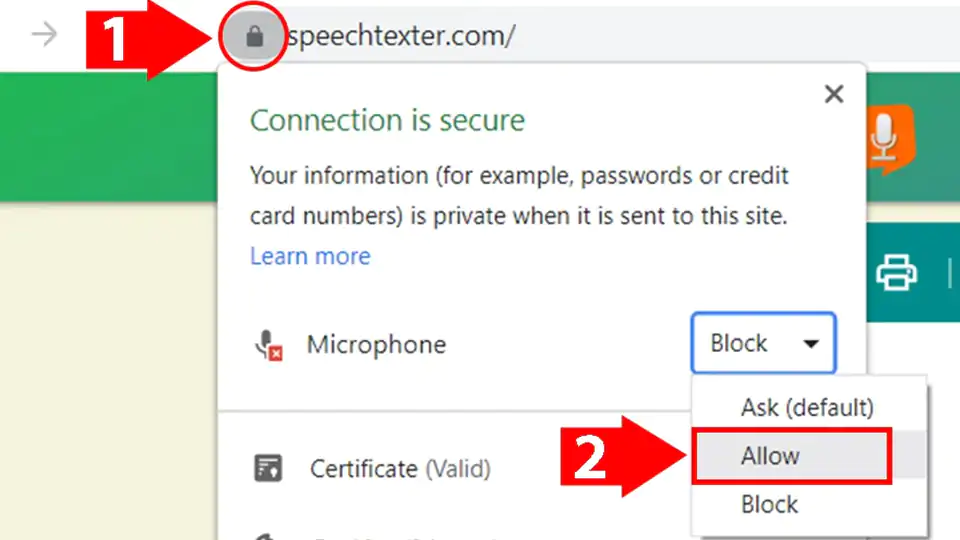

Error: 'speechtexter cannot access your microphone'..

Please give permission to access your microphone.

Click on the "padlock" icon next to the URL bar, find the "microphone" option, and choose "allow".

Error: 'No speech was detected. Please try again'.

If you get this error while you are speaking, make sure your microphone is set as the default recording device on your browser [see step 2].

If you're using a headset, make sure the mute switch on the cord is off.

Error: 'Network error'

The internet connection is poor. Please try again later.

The result won't transfer to the "editor".

The result confidence is not high enough or there is a background noise. An accumulation of long text in the buffer can also make the engine stop responding, please make some pauses in the speech.

The results are wrong.

Please speak loudly and clearly. Speaking clearly and consistently will help the software accurately recognize your words.

Reduce background noise. Background noise from fans, air conditioners, refrigerators, etc. can drop the accuracy significantly. Try to reduce background noise as much as possible.

Speak directly into the microphone. Speaking directly into the microphone enhances the accuracy of the software. Avoid speaking too far away from the microphone.

Speak in complete sentences. Speaking in complete sentences will help the software better recognize the context of your words.

Can I upload an audio file and get the transcription?

No, this feature is not available.

How do I transcribe an audio (video) file on my PC or from the web?

Playback your file in any player and hit the 'mic' button on the SpeechTexter website to start capturing the speech. For better results select "Stereo Mix" as the default recording device on your browser, if you are accessing SpeechTexter and the file from the same device.

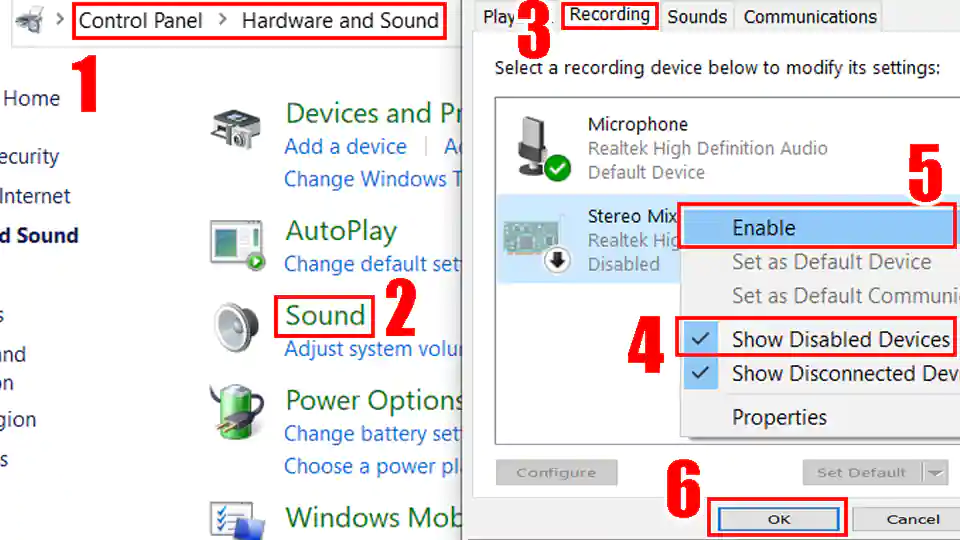

I don't see the "Stereo mix" option (Windows OS)

"Stereo Mix" might be hidden or it's not supported by your system. If you are a Windows user go to 'Control panel' → Hardware and Sound → Sound → 'Recording' tab. Right-click on a blank area in the pane and make sure both "View Disabled Devices" and "View Disconnected Devices" options are checked. If "Stereo Mix" appears, you can enable it by right clicking on it and choosing 'enable'. If "Stereo Mix" hasn't appeared, it means it's not supported by your system. You can try using a third-party program such as "Virtual Audio Cable" or "VB-Audio Virtual Cable" to create a virtual audio device that includes "Stereo Mix" functionality.

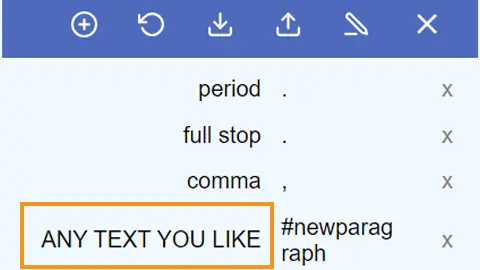

How to use the voice commands list?

The voice commands list allows you to insert the punctuation, some text, or run some preset functions using only your voice. On the first column you enter your voice command. On the second column you enter a punctuation mark or a function. Voice commands are case-sensitive. Available functions: #newparagraph (add a new paragraph), #undo (undo the last change), #redo (redo the last change)

To use the function above make a pause in your speech until all previous dictated speech appears in your note, then say "insert a new paragraph" and wait for the command execution.

Found a mistake in the voice commands list or want to suggest an update? Follow the steps below:

- Navigate to the voice commands list [↑] on this website.

- Click on the edit button to update or add new punctuation marks you think other users might find useful in your language.

- Click on the "Export" button located above the voice commands list to save your list in JSON format to your device.

Next, send us your file as an attachment via email. You can find the email address at the bottom of the page. Feel free to include a brief description of the mistake or the updates you're suggesting in the email body.

Your contribution to the improvement of the services is appreciated.

Can I prevent my custom voice commands from disappearing after closing the browser?

SpeechTexter by default saves your data inside your browser's cache. If your browsers clears the cache your data will be deleted. However, you can export your custom voice commands to your device and import them when you need them by clicking the corresponding buttons above the list. SpeechTexter is using JSON format to store your voice commands. You can create a .txt file in this format on your device and then import it into SpeechTexter. An example of JSON format is shown below:

{ "period": ".", "full stop": ".", "question mark": "?", "new paragraph": "#newparagraph" }

I lost my dictated work after closing the browser.

SpeechTexter doesn't store any text that you dictate. Please use the "autosave" option or click the "download" button (recommended). The "autosave" option will try to store your work inside your browser's cache, where it will remain until you switch the "text autosave" option off, clear the cache manually, or if your browser clears the cache on exit.

Common problems on the Android app

I get the message: 'speech recognition is not available'..

'Google app' from Play store is required for SpeechTexter to work. download [↗]

Where does SpeechTexter store the saved files?

Version 1.5 and above stores the files in the internal memory.

Version 1.4.9 and below stores the files inside the "SpeechTexter" folder at the root directory of your device.

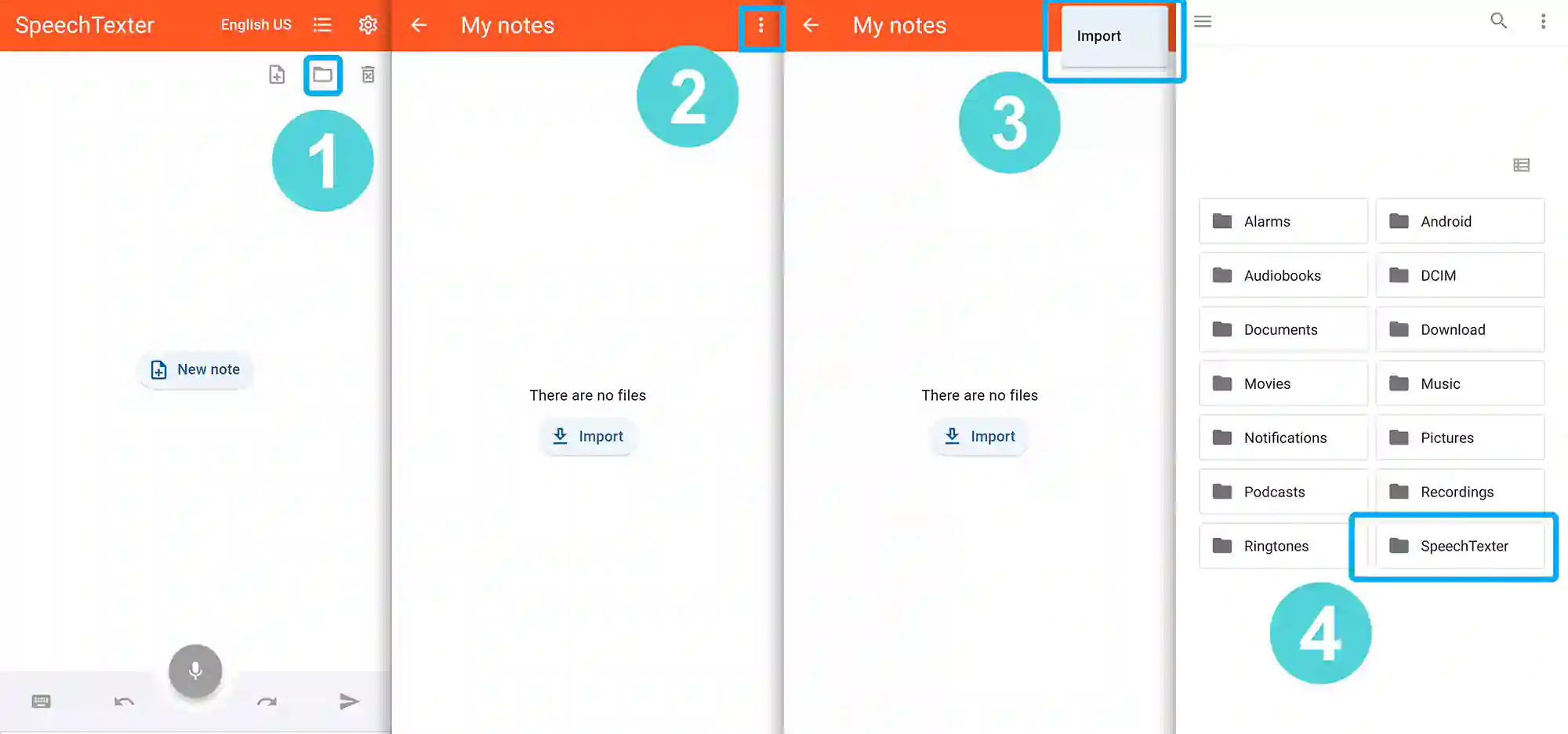

After updating the app from version 1.x.x to version 2.x.x my files have disappeared

As a result of recent updates, the Android operating system has implemented restrictions that prevent users from accessing folders within the Android root directory, including SpeechTexter's folder. However, your old files can still be imported manually by selecting the "import" button within the Speechtexter application.

Common problems on the mobile web app

Tap on the "padlock" icon next to the URL bar, find the "microphone" option and choose "allow".

- TERMS OF USE

- PRIVACY POLICY

- Play Store [↗]

copyright © 2014 - 2024 www.speechtexter.com . All Rights Reserved.

This browser is no longer supported.

Upgrade to Microsoft Edge to take advantage of the latest features, security updates, and technical support.

Quickstart: Recognize and convert speech to text

- 3 contributors

Some of the features described in this article might only be available in preview. This preview is provided without a service-level agreement, and we don't recommend it for production workloads. Certain features might not be supported or might have constrained capabilities. For more information, see Supplemental Terms of Use for Microsoft Azure Previews .

In this quickstart, you try real-time speech to text in Azure AI Studio .

Prerequisites

- Azure subscription - Create one for free .

- Some AI services features are free to try in AI Studio. For access to all capabilities described in this article, you need to connect AI services to your hub in AI Studio .

Try real-time speech to text

Go to the Home page in AI Studio and then select AI Services from the left pane.

Select Speech from the list of AI services.

Select Real-time speech to text .

In the Try it out section, select your hub's AI services connection. For more information about AI services connections, see connect AI services to your hub in AI Studio .

Select Show advanced options to configure speech to text options such as:

- Language identification : Used to identify languages spoken in audio when compared against a list of supported languages. For more information about language identification options such as at-start and continuous recognition, see Language identification .

- Speaker diarization : Used to identify and separate speakers in audio. Diarization distinguishes between the different speakers who participate in the conversation. The Speech service provides information about which speaker was speaking a particular part of transcribed speech. For more information about speaker diarization, see the real-time speech to text with speaker diarization quickstart.

- Custom endpoint : Use a deployed model from custom speech to improve recognition accuracy. To use Microsoft's baseline model, leave this set to None. For more information about custom speech, see Custom Speech .

- Output format : Choose between simple and detailed output formats. Simple output includes display format and timestamps. Detailed output includes more formats (such as display, lexical, ITN, and masked ITN), timestamps, and N-best lists.

- Phrase list : Improve transcription accuracy by providing a list of known phrases, such as names of people or specific locations. Use commas or semicolons to separate each value in the phrase list. For more information about phrase lists, see Phrase lists .

Select an audio file to upload, or record audio in real-time. In this example, we use the Call1_separated_16k_health_insurance.wav file that's available in the Speech SDK repository on GitHub . You can download the file or use your own audio file.

You can view the real-time speech to text results in the Results section.

Reference documentation | Package (NuGet) | Additional samples on GitHub

In this quickstart, you create and run an application to recognize and transcribe speech to text in real-time.

To instead transcribe audio files asynchronously, see What is batch transcription . If you're not sure which speech to text solution is right for you, see What is speech to text?

- An Azure subscription. You can create one for free .

- Create a Speech resource in the Azure portal.

- Get the Speech resource key and region. After your Speech resource is deployed, select Go to resource to view and manage keys.

Set up the environment

The Speech SDK is available as a NuGet package and implements .NET Standard 2.0. You install the Speech SDK later in this guide. For any other requirements, see Install the Speech SDK .

Set environment variables

You need to authenticate your application to access Azure AI services. This article shows you how to use environment variables to store your credentials. You can then access the environment variables from your code to authenticate your application. For production, use a more secure way to store and access your credentials.

We recommend Microsoft Entra ID authentication with managed identities for Azure resources to avoid storing credentials with your applications that run in the cloud.

If you use an API key, store it securely somewhere else, such as in Azure Key Vault . Don't include the API key directly in your code, and never post it publicly.

For more information about AI services security, see Authenticate requests to Azure AI services .

To set the environment variables for your Speech resource key and region, open a console window, and follow the instructions for your operating system and development environment.

- To set the SPEECH_KEY environment variable, replace your-key with one of the keys for your resource.

- To set the SPEECH_REGION environment variable, replace your-region with one of the regions for your resource.

If you only need to access the environment variables in the current console, you can set the environment variable with set instead of setx .

After you add the environment variables, you might need to restart any programs that need to read the environment variables, including the console window. For example, if you're using Visual Studio as your editor, restart Visual Studio before you run the example.

Edit your .bashrc file, and add the environment variables:

After you add the environment variables, run source ~/.bashrc from your console window to make the changes effective.

Edit your .bash_profile file, and add the environment variables:

After you add the environment variables, run source ~/.bash_profile from your console window to make the changes effective.

For iOS and macOS development, you set the environment variables in Xcode. For example, follow these steps to set the environment variable in Xcode 13.4.1.

- Select Product > Scheme > Edit scheme .

- Select Arguments on the Run (Debug Run) page.

- Under Environment Variables select the plus (+) sign to add a new environment variable.

- Enter SPEECH_KEY for the Name and enter your Speech resource key for the Value .

To set the environment variable for your Speech resource region, follow the same steps. Set SPEECH_REGION to the region of your resource. For example, westus .

For more configuration options, see the Xcode documentation .

Recognize speech from a microphone

Follow these steps to create a console application and install the Speech SDK.

Open a command prompt window in the folder where you want the new project. Run this command to create a console application with the .NET CLI.

This command creates the Program.cs file in your project directory.

Install the Speech SDK in your new project with the .NET CLI.

Replace the contents of Program.cs with the following code:

To change the speech recognition language, replace en-US with another supported language . For example, use es-ES for Spanish (Spain). If you don't specify a language, the default is en-US . For details about how to identify one of multiple languages that might be spoken, see Language identification .

Run your new console application to start speech recognition from a microphone:

Make sure that you set the SPEECH_KEY and SPEECH_REGION environment variables . If you don't set these variables, the sample fails with an error message.

Speak into your microphone when prompted. What you speak should appear as text:

Here are some other considerations:

This example uses the RecognizeOnceAsync operation to transcribe utterances of up to 30 seconds, or until silence is detected. For information about continuous recognition for longer audio, including multi-lingual conversations, see How to recognize speech .

To recognize speech from an audio file, use FromWavFileInput instead of FromDefaultMicrophoneInput :

For compressed audio files such as MP4, install GStreamer and use PullAudioInputStream or PushAudioInputStream . For more information, see How to use compressed input audio .

Clean up resources

You can use the Azure portal or Azure Command Line Interface (CLI) to remove the Speech resource you created.

The Speech SDK is available as a NuGet package and implements .NET Standard 2.0. You install the Speech SDK later in this guide. For other requirements, see Install the Speech SDK .

Create a new C++ console project in Visual Studio Community named SpeechRecognition .

Select Tools > Nuget Package Manager > Package Manager Console . In the Package Manager Console , run this command:

Replace the contents of SpeechRecognition.cpp with the following code:

Build and run your new console application to start speech recognition from a microphone.

Reference documentation | Package (Go) | Additional samples on GitHub

Install the Speech SDK for Go. For requirements and instructions, see Install the Speech SDK .

Follow these steps to create a GO module.

Open a command prompt window in the folder where you want the new project. Create a new file named speech-recognition.go .

Copy the following code into speech-recognition.go :

Run the following commands to create a go.mod file that links to components hosted on GitHub:

Build and run the code:

Reference documentation | Additional samples on GitHub

To set up your environment, install the Speech SDK . The sample in this quickstart works with the Java Runtime .

Install Apache Maven . Then run mvn -v to confirm successful installation.

Create a new pom.xml file in the root of your project, and copy the following code into it:

Install the Speech SDK and dependencies.

Follow these steps to create a console application for speech recognition.

Create a new file named SpeechRecognition.java in the same project root directory.

Copy the following code into SpeechRecognition.java :

To recognize speech from an audio file, use fromWavFileInput instead of fromDefaultMicrophoneInput :

Reference documentation | Package (npm) | Additional samples on GitHub | Library source code

You also need a .wav audio file on your local machine. You can use your own .wav file (up to 30 seconds) or download the https://crbn.us/whatstheweatherlike.wav sample file.

To set up your environment, install the Speech SDK for JavaScript. Run this command: npm install microsoft-cognitiveservices-speech-sdk . For guided installation instructions, see Install the Speech SDK .

Recognize speech from a file

Follow these steps to create a Node.js console application for speech recognition.

Open a command prompt window where you want the new project, and create a new file named SpeechRecognition.js .

Install the Speech SDK for JavaScript:

Copy the following code into SpeechRecognition.js :

In SpeechRecognition.js , replace YourAudioFile.wav with your own .wav file. This example only recognizes speech from a .wav file. For information about other audio formats, see How to use compressed input audio . This example supports up to 30 seconds of audio.

Run your new console application to start speech recognition from a file:

The speech from the audio file should be output as text:

This example uses the recognizeOnceAsync operation to transcribe utterances of up to 30 seconds, or until silence is detected. For information about continuous recognition for longer audio, including multi-lingual conversations, see How to recognize speech .

Recognizing speech from a microphone is not supported in Node.js. It's supported only in a browser-based JavaScript environment. For more information, see the React sample and the implementation of speech to text from a microphone on GitHub.

The React sample shows design patterns for the exchange and management of authentication tokens. It also shows the capture of audio from a microphone or file for speech to text conversions.

Reference documentation | Package (PyPi) | Additional samples on GitHub

The Speech SDK for Python is available as a Python Package Index (PyPI) module . The Speech SDK for Python is compatible with Windows, Linux, and macOS.

- For Windows, install the Microsoft Visual C++ Redistributable for Visual Studio 2015, 2017, 2019, and 2022 for your platform. Installing this package for the first time might require a restart.

- On Linux, you must use the x64 target architecture.

Install a version of Python from 3.7 or later . For other requirements, see Install the Speech SDK .

Follow these steps to create a console application.

Open a command prompt window in the folder where you want the new project. Create a new file named speech_recognition.py .

Run this command to install the Speech SDK:

Copy the following code into speech_recognition.py :

To change the speech recognition language, replace en-US with another supported language . For example, use es-ES for Spanish (Spain). If you don't specify a language, the default is en-US . For details about how to identify one of multiple languages that might be spoken, see language identification .

This example uses the recognize_once_async operation to transcribe utterances of up to 30 seconds, or until silence is detected. For information about continuous recognition for longer audio, including multi-lingual conversations, see How to recognize speech .

To recognize speech from an audio file, use filename instead of use_default_microphone :

Reference documentation | Package (download) | Additional samples on GitHub

The Speech SDK for Swift is distributed as a framework bundle. The framework supports both Objective-C and Swift on both iOS and macOS.

The Speech SDK can be used in Xcode projects as a CocoaPod , or downloaded directly and linked manually. This guide uses a CocoaPod. Install the CocoaPod dependency manager as described in its installation instructions .

Follow these steps to recognize speech in a macOS application.

Clone the Azure-Samples/cognitive-services-speech-sdk repository to get the Recognize speech from a microphone in Swift on macOS sample project. The repository also has iOS samples.

Navigate to the directory of the downloaded sample app ( helloworld ) in a terminal.

Run the command pod install . This command generates a helloworld.xcworkspace Xcode workspace containing both the sample app and the Speech SDK as a dependency.

Open the helloworld.xcworkspace workspace in Xcode.

Open the file named AppDelegate.swift and locate the applicationDidFinishLaunching and recognizeFromMic methods as shown here.

In AppDelegate.m , use the environment variables that you previously set for your Speech resource key and region.

To make the debug output visible, select View > Debug Area > Activate Console .

Build and run the example code by selecting Product > Run from the menu or selecting the Play button.

After you select the button in the app and say a few words, you should see the text that you spoke on the lower part of the screen. When you run the app for the first time, it prompts you to give the app access to your computer's microphone.

This example uses the recognizeOnce operation to transcribe utterances of up to 30 seconds, or until silence is detected. For information about continuous recognition for longer audio, including multi-lingual conversations, see How to recognize speech .

Objective-C

The Speech SDK for Objective-C shares client libraries and reference documentation with the Speech SDK for Swift. For Objective-C code examples, see the recognize speech from a microphone in Objective-C on macOS sample project in GitHub.

Speech to text REST API reference | Speech to text REST API for short audio reference | Additional samples on GitHub

You also need a .wav audio file on your local machine. You can use your own .wav file up to 60 seconds or download the https://crbn.us/whatstheweatherlike.wav sample file.

Open a console window and run the following cURL command. Replace YourAudioFile.wav with the path and name of your audio file.

You should receive a response similar to what is shown here. The DisplayText should be the text that was recognized from your audio file. The command recognizes up to 60 seconds of audio and converts it to text.

For more information, see Speech to text REST API for short audio .

Follow these steps and see the Speech CLI quickstart for other requirements for your platform.

Run the following .NET CLI command to install the Speech CLI:

Run the following commands to configure your Speech resource key and region. Replace SUBSCRIPTION-KEY with your Speech resource key and replace REGION with your Speech resource region.

Run the following command to start speech recognition from a microphone:

Speak into the microphone, and you see transcription of your words into text in real-time. The Speech CLI stops after a period of silence, 30 seconds, or when you select Ctrl + C .

To recognize speech from an audio file, use --file instead of --microphone . For compressed audio files such as MP4, install GStreamer and use --format . For more information, see How to use compressed input audio .

To improve recognition accuracy of specific words or utterances, use a phrase list . You include a phrase list in-line or with a text file along with the recognize command:

To change the speech recognition language, replace en-US with another supported language . For example, use es-ES for Spanish (Spain). If you don't specify a language, the default is en-US .

For continuous recognition of audio longer than 30 seconds, append --continuous :

Run this command for information about more speech recognition options such as file input and output:

Learn more about speech recognition

Was this page helpful?

Additional resources

Speech to Text Converter

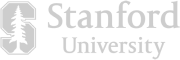

Descript instantly turns speech into text in real time. Just start recording and watch our AI speech recognition transcribe your voice—with 95% accuracy—into text that’s ready to edit or export.

How to automatically convert speech to text with Descript

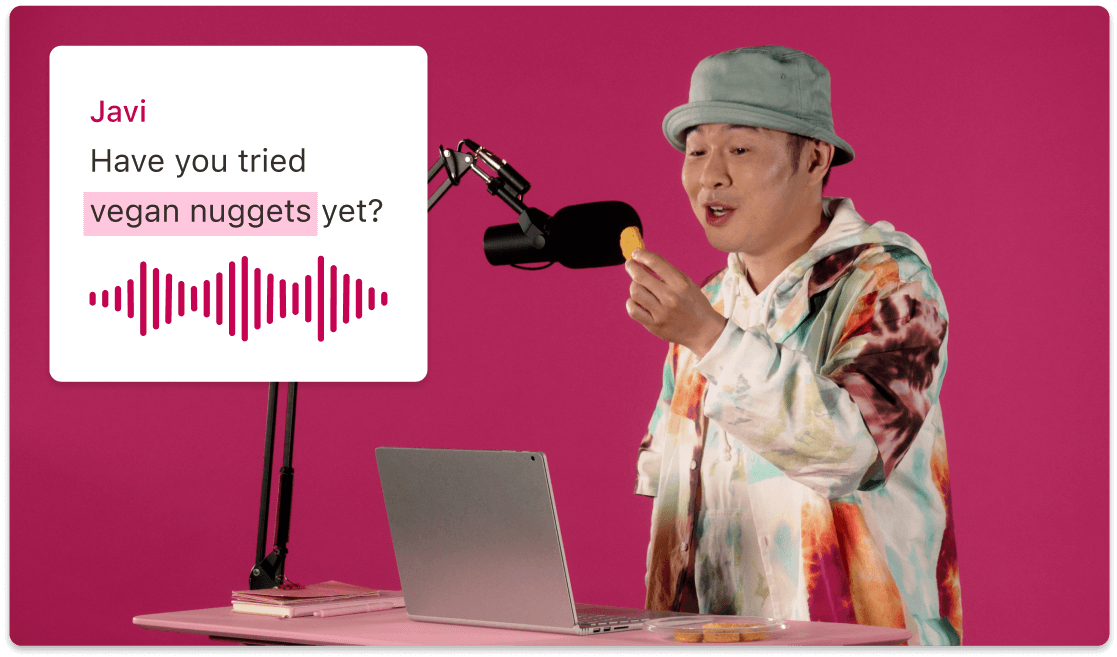

Create a project in Descript, select record, and choose your microphone input to start a recording session. Or upload a voice file to convert the audio to text.

As you speak into your mic, Descript’s speech-to-text software turns what you say into text in real time. Don’t worry about filler words or mistakes; Descript makes it easy to find and remove those from both the generated text and recorded audio.

Enter Correct mode (press the C key) to edit, apply formatting, highlight sections, and leave comments on your speech-to-text transcript. Filler words will be highlighted, which you can remove by right clicking to remove some or all instances. When ready, export your text as HTML, Markdown, Plain text, Word file, or Rich Text format.

Download the app for free

More articles and resources.

How to write a transcript: 9 tips for beginners

What is a video crossfade effect?

New one-click integrations with Riverside, SquadCast, Restream, Captivate

Other tools from descript, voice cloning, video collage maker, advertising video maker, facebook video maker, youtube video summarizer, rotate video, marketing video maker.

Speech to Text

- 3 Create a new project Drag your file into the box above, or click Select file and import it from your computer or wherever it lives.

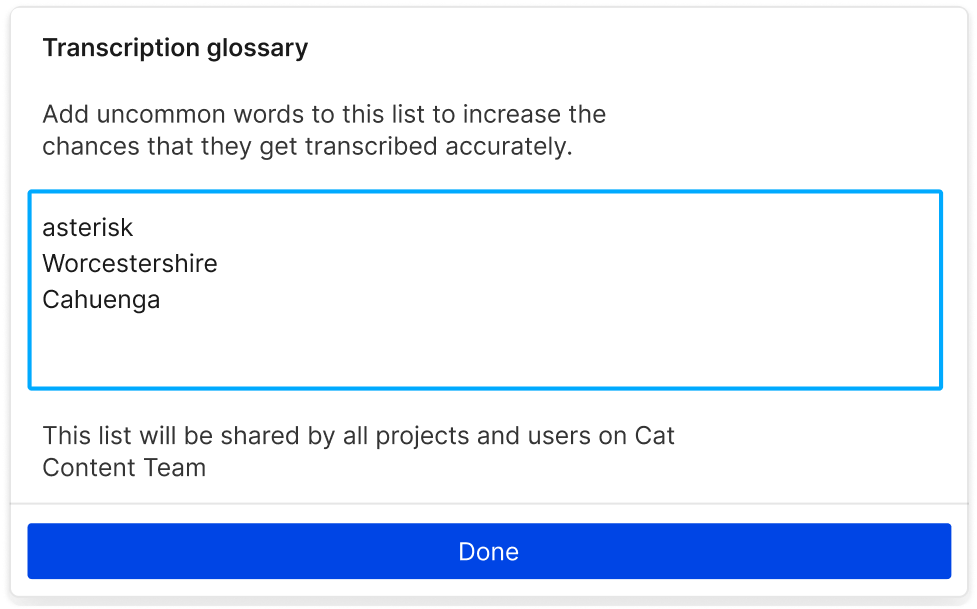

Expand Descript’s online voice recognition powers with an expandable transcription glossary to recognize hard-to-translate words like names and jargon.

Record yourself talking and turn it into text, audio, and video that’s ready to edit in Descript’s timeline. You can format, search, highlight, and other actions you’d perform in a Google Doc, while taking advantage of features like text-to-speec h, captions, and more.

Go from speech to text in over 22 different languages, plus English. Transcribe audio in French , Spanish , Italian, German and other languages from around the world. Finnish? Oh we’re just getting started.

Yes, basic real-time speech to text conversion is included for free with most modern devices (Android, Mac, etc.) Descript also offers a 95% accurate text-to-speech converter for up to 1 hour per month for free.

Speech-to-text conversion works by using AI and large quantities of diverse training data to recognize the acoustic qualities of specific words, despite the different speech patterns and accents people have, to generate it as text.

Yes! Descript‘s AI-powered Overdub feature lets you not only turn speech to text but also generate human-sounding speech from a script in your choice of AI stock voices.

Descript supports speech-to-text conversion in Catalan, Finnish, Lithuanian, Slovak, Croatian, French (FR), Malay, Slovenian, Czech, German, Norwegian, Spanish (US), Danish, Hungarian, Polish, Swedish, Dutch, Italian, Portuguese (BR), Turkish.

Descript’s included AI transcription offers up to 95% accurate speech to text generation. We also offer a white glove pay-per-word transcription service and 99% accuracy. Expanding your transcription glossary makes the automatic transcription more accurate over time.

- Español – América Latina

- Português – Brasil

- Cloud Speech-to-Text

- Documentation

Speech-to-Text supported languages

This page lists all languages supported by Cloud Speech-to-Text. Language is specified within a recognition request's languageCode parameter. For more information about sending a recognition request and specifying the language of the transcription, see the how-to guides about performing speech recognition. For more information about the class tokens available for each language, see the class tokens page .

Try it for yourself

If you're new to Google Cloud, create an account to evaluate how Speech-to-Text performs in real-world scenarios. New customers also get $300 in free credits to run, test, and deploy workloads.

The table below lists the models available for each language. Cloud Speech-to-Text offers multiple recognition models , each tuned to different audio types. The default and command_and_search recognition models support all available languages. The command_and_search model is optimized for short audio clips, such as voice commands or voice searches. The default model can be used to transcribe any audio type.

Some languages are supported by additional models, optimized for additional audio types: enhanced phone_call , and enhanced video . These models can recognize speech captured from these audio sources more accurately than the default model. See the enhanced models page for more information. If any of these additional models are available for your language, they will be listed with the default and command_and_search models for your language. If only the default and command_and_search models are listed with your language, no additional models are currently available.

Use only the language codes shown in the following table. The following language codes are officially maintained and monitored externally by Google. Using other language codes can result in breaking changes.

| ( ) ( ) | ( ) ( ) ( ) ( ) |

| Name | BCP-47 | Model | Automatic punctuation | Diarization | Model adaptation | Word-level confidence | Profanity filter | Spoken punctuation | Spoken emojis | |

|---|---|---|---|---|---|---|---|---|---|---|

| Afrikaans (South Africa) | af-ZA | False | ||||||||

| Afrikaans (South Africa) | af-ZA | True | ||||||||

| Afrikaans (South Africa) | af-ZA | False | ||||||||

| Albanian (Albania) | sq-AL | False | ||||||||

| Albanian (Albania) | sq-AL | True | ||||||||

| Albanian (Albania) | sq-AL | False | ||||||||

| Amharic (Ethiopia) | am-ET | False | ||||||||

| Amharic (Ethiopia) | am-ET | True | ||||||||

| Amharic (Ethiopia) | am-ET | False | ||||||||

| Arabic (Algeria) | ar-DZ | False | ||||||||

| Arabic (Algeria) | ar-DZ | True | ||||||||

| Arabic (Algeria) | ar-DZ | False | ||||||||

| Arabic (Algeria) | ar-DZ | False | ✔ | |||||||

| Arabic (Algeria) | ar-DZ | True | ✔ | |||||||

| Arabic (Algeria) | ar-DZ | False | ✔ | |||||||

| Arabic (Algeria) | ar-DZ | True | ✔ | |||||||

| Arabic (Bahrain) | ar-BH | False | ||||||||

| Arabic (Bahrain) | ar-BH | True | ||||||||

| Arabic (Bahrain) | ar-BH | False | ||||||||

| Arabic (Bahrain) | ar-BH | False | ✔ | |||||||

| Arabic (Bahrain) | ar-BH | True | ✔ | |||||||

| Arabic (Bahrain) | ar-BH | False | ✔ | |||||||

| Arabic (Bahrain) | ar-BH | True | ✔ | |||||||

| Arabic (Egypt) | ar-EG | False | ||||||||

| Arabic (Egypt) | ar-EG | True | ||||||||

| Arabic (Egypt) | ar-EG | False | ||||||||

| Arabic (Egypt) | ar-EG | False | ✔ | |||||||

| Arabic (Egypt) | ar-EG | True | ✔ | |||||||

| Arabic (Egypt) | ar-EG | False | ✔ | |||||||

| Arabic (Egypt) | ar-EG | True | ✔ | |||||||

| Arabic (Iraq) | ar-IQ | False | ||||||||

| Arabic (Iraq) | ar-IQ | True | ||||||||

| Arabic (Iraq) | ar-IQ | False | ||||||||

| Arabic (Iraq) | ar-IQ | False | ✔ | |||||||

| Arabic (Iraq) | ar-IQ | True | ✔ | |||||||

| Arabic (Iraq) | ar-IQ | False | ✔ | |||||||

| Arabic (Iraq) | ar-IQ | True | ✔ | |||||||

| Arabic (Israel) | ar-IL | False | ||||||||

| Arabic (Israel) | ar-IL | True | ||||||||

| Arabic (Israel) | ar-IL | False | ||||||||

| Arabic (Israel) | ar-IL | False | ✔ | |||||||

| Arabic (Israel) | ar-IL | True | ✔ | |||||||

| Arabic (Israel) | ar-IL | False | ✔ | |||||||

| Arabic (Israel) | ar-IL | True | ✔ | |||||||

| Arabic (Jordan) | ar-JO | False | ||||||||

| Arabic (Jordan) | ar-JO | True | ||||||||

| Arabic (Jordan) | ar-JO | False | ||||||||

| Arabic (Jordan) | ar-JO | False | ✔ | |||||||

| Arabic (Jordan) | ar-JO | True | ✔ | |||||||

| Arabic (Jordan) | ar-JO | False | ✔ | |||||||

| Arabic (Jordan) | ar-JO | True | ✔ | |||||||

| Arabic (Kuwait) | ar-KW | False | ||||||||

| Arabic (Kuwait) | ar-KW | True | ||||||||

| Arabic (Kuwait) | ar-KW | False | ||||||||

| Arabic (Kuwait) | ar-KW | False | ✔ | |||||||

| Arabic (Kuwait) | ar-KW | True | ✔ | |||||||

| Arabic (Kuwait) | ar-KW | False | ✔ | |||||||

| Arabic (Kuwait) | ar-KW | True | ✔ | |||||||

| Arabic (Lebanon) | ar-LB | False | ||||||||

| Arabic (Lebanon) | ar-LB | True | ||||||||

| Arabic (Lebanon) | ar-LB | False | ||||||||

| Arabic (Lebanon) | ar-LB | False | ✔ | |||||||

| Arabic (Lebanon) | ar-LB | True | ✔ | |||||||

| Arabic (Lebanon) | ar-LB | False | ✔ | |||||||

| Arabic (Lebanon) | ar-LB | True | ✔ | |||||||

| Arabic (Mauritania) | ar-MR | False | ||||||||

| Arabic (Mauritania) | ar-MR | True | ||||||||

| Arabic (Mauritania) | ar-MR | False | ||||||||

| Arabic (Mauritania) | ar-MR | False | ✔ | |||||||

| Arabic (Mauritania) | ar-MR | True | ✔ | |||||||

| Arabic (Mauritania) | ar-MR | False | ✔ | |||||||

| Arabic (Mauritania) | ar-MR | True | ✔ | |||||||

| Arabic (Morocco) | ar-MA | False | ||||||||

| Arabic (Morocco) | ar-MA | True | ||||||||

| Arabic (Morocco) | ar-MA | False | ||||||||

| Arabic (Morocco) | ar-MA | False | ✔ | |||||||

| Arabic (Morocco) | ar-MA | True | ✔ | |||||||

| Arabic (Morocco) | ar-MA | False | ✔ | |||||||

| Arabic (Morocco) | ar-MA | True | ✔ | |||||||

| Arabic (Oman) | ar-OM | False | ||||||||

| Arabic (Oman) | ar-OM | True | ||||||||

| Arabic (Oman) | ar-OM | False | ||||||||

| Arabic (Oman) | ar-OM | False | ✔ | |||||||

| Arabic (Oman) | ar-OM | True | ✔ | |||||||

| Arabic (Oman) | ar-OM | False | ✔ | |||||||

| Arabic (Oman) | ar-OM | True | ✔ | |||||||

| Arabic (Qatar) | ar-QA | False | ||||||||

| Arabic (Qatar) | ar-QA | True | ||||||||

| Arabic (Qatar) | ar-QA | False | ||||||||

| Arabic (Qatar) | ar-QA | False | ✔ | |||||||

| Arabic (Qatar) | ar-QA | True | ✔ | |||||||

| Arabic (Qatar) | ar-QA | False | ✔ | |||||||

| Arabic (Qatar) | ar-QA | True | ✔ | |||||||

| Arabic (Saudi Arabia) | ar-SA | False | ||||||||

| Arabic (Saudi Arabia) | ar-SA | True | ||||||||

| Arabic (Saudi Arabia) | ar-SA | False | ||||||||

| Arabic (Saudi Arabia) | ar-SA | False | ✔ | |||||||

| Arabic (Saudi Arabia) | ar-SA | True | ✔ | |||||||

| Arabic (Saudi Arabia) | ar-SA | False | ✔ | |||||||

| Arabic (Saudi Arabia) | ar-SA | True | ✔ | |||||||

| Arabic (State of Palestine) | ar-PS | False | ||||||||

| Arabic (State of Palestine) | ar-PS | True | ||||||||

| Arabic (State of Palestine) | ar-PS | False | ||||||||

| Arabic (State of Palestine) | ar-PS | False | ✔ | |||||||

| Arabic (State of Palestine) | ar-PS | True | ✔ | |||||||

| Arabic (State of Palestine) | ar-PS | False | ✔ | |||||||

| Arabic (State of Palestine) | ar-PS | True | ✔ | |||||||

| Arabic (Syria) | ar-SY | False | ||||||||

| Arabic (Syria) | ar-SY | True | ||||||||

| Arabic (Syria) | ar-SY | False | ||||||||

| Arabic (Tunisia) | ar-TN | False | ||||||||

| Arabic (Tunisia) | ar-TN | True | ||||||||

| Arabic (Tunisia) | ar-TN | False | ||||||||

| Arabic (Tunisia) | ar-TN | False | ✔ | |||||||

| Arabic (Tunisia) | ar-TN | True | ✔ | |||||||

| Arabic (Tunisia) | ar-TN | False | ✔ | |||||||

| Arabic (Tunisia) | ar-TN | True | ✔ | |||||||

| Arabic (United Arab Emirates) | ar-AE | False | ||||||||

| Arabic (United Arab Emirates) | ar-AE | True | ||||||||

| Arabic (United Arab Emirates) | ar-AE | False | ||||||||

| Arabic (United Arab Emirates) | ar-AE | False | ✔ | |||||||

| Arabic (United Arab Emirates) | ar-AE | True | ✔ | |||||||

| Arabic (United Arab Emirates) | ar-AE | False | ✔ | |||||||

| Arabic (United Arab Emirates) | ar-AE | True | ✔ | |||||||

| Arabic (Yemen) | ar-YE | False | ||||||||

| Arabic (Yemen) | ar-YE | True | ||||||||

| Arabic (Yemen) | ar-YE | False | ||||||||

| Arabic (Yemen) | ar-YE | False | ✔ | |||||||

| Arabic (Yemen) | ar-YE | True | ✔ | |||||||

| Arabic (Yemen) | ar-YE | False | ✔ | |||||||

| Arabic (Yemen) | ar-YE | True | ✔ | |||||||

| Armenian (Armenia) | hy-AM | False | ||||||||

| Armenian (Armenia) | hy-AM | True | ||||||||

| Armenian (Armenia) | hy-AM | False | ||||||||

| Azerbaijani (Azerbaijan) | az-AZ | False | ||||||||

| Azerbaijani (Azerbaijan) | az-AZ | True | ||||||||

| Azerbaijani (Azerbaijan) | az-AZ | False | ||||||||

| Basque (Spain) | eu-ES | False | ||||||||

| Basque (Spain) | eu-ES | True | ||||||||

| Basque (Spain) | eu-ES | False | ||||||||

| Bengali (Bangladesh) | bn-BD | False | ||||||||

| Bengali (Bangladesh) | bn-BD | True | ||||||||

| Bengali (Bangladesh) | bn-BD | False | ||||||||

| Bengali (Bangladesh) | bn-BD | False | ||||||||

| Bengali (Bangladesh) | bn-BD | True | ||||||||

| Bengali (Bangladesh) | bn-BD | False | ||||||||

| Bengali (Bangladesh) | bn-BD | True | ||||||||

| Bengali (India) | bn-IN | False | ||||||||

| Bengali (India) | bn-IN | True | ||||||||

| Bengali (India) | bn-IN | False | ||||||||

| Bosnian (Bosnia and Herzegovina) | bs-BA | False | ||||||||

| Bosnian (Bosnia and Herzegovina) | bs-BA | True | ||||||||

| Bosnian (Bosnia and Herzegovina) | bs-BA | False | ||||||||

| Bulgarian (Bulgaria) | bg-BG | False | ||||||||

| Bulgarian (Bulgaria) | bg-BG | True | ||||||||

| Bulgarian (Bulgaria) | bg-BG | False | ||||||||

| Bulgarian (Bulgaria) | bg-BG | False | ||||||||

| Bulgarian (Bulgaria) | bg-BG | True | ||||||||

| Bulgarian (Bulgaria) | bg-BG | False | ||||||||

| Bulgarian (Bulgaria) | bg-BG | True | ||||||||

| Burmese (Myanmar) | my-MM | False | ||||||||

| Burmese (Myanmar) | my-MM | True | ||||||||

| Burmese (Myanmar) | my-MM | False | ||||||||

| Catalan (Spain) | ca-ES | False | ||||||||

| Catalan (Spain) | ca-ES | True | ||||||||

| Catalan (Spain) | ca-ES | False | ||||||||

| Chinese (Simplified, China) | cmn-Hans-CN | False | ✔ | |||||||

| Chinese (Simplified, China) | cmn-Hans-CN | True | ✔ | |||||||

| Chinese (Simplified, China) | cmn-Hans-CN | False | ✔ | |||||||

| Chinese (Simplified, Hong Kong) | cmn-Hans-HK | False | ✔ | |||||||

| Chinese (Simplified, Hong Kong) | cmn-Hans-HK | True | ✔ | |||||||

| Chinese (Simplified, Hong Kong) | cmn-Hans-HK | False | ✔ | |||||||

| Chinese (Traditional, Taiwan) | cmn-Hant-TW | False | ✔ | |||||||

| Chinese (Traditional, Taiwan) | cmn-Hant-TW | True | ✔ | |||||||

| Chinese (Traditional, Taiwan) | cmn-Hant-TW | False | ✔ | |||||||

| Chinese, Cantonese (Traditional Hong Kong) | yue-Hant-HK | False | ||||||||

| Chinese, Cantonese (Traditional Hong Kong) | yue-Hant-HK | True | ||||||||

| Chinese, Cantonese (Traditional Hong Kong) | yue-Hant-HK | False | ||||||||

| Croatian (Croatia) | hr-HR | False | ||||||||

| Croatian (Croatia) | hr-HR | True | ||||||||

| Croatian (Croatia) | hr-HR | False | ||||||||

| Czech (Czech Republic) | cs-CZ | False | ✔ | |||||||

| Czech (Czech Republic) | cs-CZ | True | ✔ | |||||||

| Czech (Czech Republic) | cs-CZ | False | ✔ | |||||||

| Czech (Czech Republic) | cs-CZ | False | ✔ | |||||||

| Czech (Czech Republic) | cs-CZ | True | ✔ | |||||||

| Czech (Czech Republic) | cs-CZ | False | ✔ | |||||||

| Czech (Czech Republic) | cs-CZ | True | ✔ | |||||||

| Danish (Denmark) | da-DK | False | ✔ | |||||||

| Danish (Denmark) | da-DK | True | ✔ | |||||||

| Danish (Denmark) | da-DK | False | ✔ | |||||||

| Danish (Denmark) | da-DK | False | ✔ | |||||||

| Danish (Denmark) | da-DK | True | ✔ | |||||||

| Danish (Denmark) | da-DK | False | ✔ | |||||||

| Danish (Denmark) | da-DK | True | ✔ | |||||||

| Dutch (Belgium) | nl-BE | False | ||||||||

| Dutch (Belgium) | nl-BE | True | ||||||||

| Dutch (Belgium) | nl-BE | False | ||||||||

| Dutch (Belgium) | nl-BE | False | ✔ | |||||||

| Dutch (Belgium) | nl-BE | False | ✔ | |||||||

| Dutch (Netherlands) | nl-NL | False | ||||||||

| Dutch (Netherlands) | nl-NL | True | ||||||||

| Dutch (Netherlands) | nl-NL | False | ||||||||

| Dutch (Netherlands) | nl-NL | False | ✔ | |||||||

| Dutch (Netherlands) | nl-NL | True | ✔ | |||||||

| Dutch (Netherlands) | nl-NL | False | ✔ | |||||||

| Dutch (Netherlands) | nl-NL | True | ✔ | |||||||

| Dutch (Netherlands) | nl-NL | False | ✔ | |||||||

| Dutch (Netherlands) | nl-NL | True | ✔ | |||||||

| Dutch (Netherlands) | nl-NL | False | ✔ | |||||||

| Dutch (Netherlands) | nl-NL | True | ✔ | |||||||

| English (Australia) | en-AU | False | ✔ | |||||||

| English (Australia) | en-AU | True | ✔ | |||||||

| English (Australia) | en-AU | False | ✔ | |||||||

| English (Australia) | en-AU | False | ✔ | |||||||

| English (Australia) | en-AU | True | ✔ | |||||||

| English (Australia) | en-AU | False | ✔ | |||||||

| English (Australia) | en-AU | True | ✔ | |||||||

| English (Australia) | en-AU | False | ✔ | |||||||

| English (Australia) | en-AU | False | ✔ | |||||||

| English (Australia) | en-AU | True | ✔ | |||||||

| English (Australia) | en-AU | False | ✔ | |||||||

| English (Australia) | en-AU | True | ✔ | |||||||

| English (Canada) | en-CA | False | ||||||||

| English (Canada) | en-CA | True | ||||||||

| English (Canada) | en-CA | False | ||||||||

| English (Canada) | en-CA | False | ✔ | |||||||

| English (Canada) | en-CA | True | ✔ | |||||||

| English (Canada) | en-CA | False | ✔ | |||||||

| English (Canada) | en-CA | True | ✔ | |||||||

| English (Ghana) | en-GH | False | ||||||||

| English (Ghana) | en-GH | True | ||||||||

| English (Ghana) | en-GH | False | ||||||||

| English (Hong Kong) | en-HK | False | ||||||||

| English (Hong Kong) | en-HK | True | ||||||||

| English (Hong Kong) | en-HK | False | ||||||||

| English (Hong Kong) | en-HK | False | ✔ | |||||||

| English (Hong Kong) | en-HK | False | ✔ | |||||||

| English (India) | en-IN | False | ✔ | |||||||

| English (India) | en-IN | True | ✔ | |||||||

| English (India) | en-IN | False | ✔ | |||||||

| English (India) | en-IN | False | ✔ | |||||||

| English (India) | en-IN | True | ✔ | |||||||

| English (India) | en-IN | False | ✔ | |||||||

| English (India) | en-IN | True | ✔ | |||||||

| English (India) | en-IN | False | ✔ | |||||||

| English (India) | en-IN | True | ✔ | |||||||

| English (India) | en-IN | False | ✔ | |||||||

| English (India) | en-IN | True | ✔ | |||||||

| English (Ireland) | en-IE | False | ||||||||

| English (Ireland) | en-IE | True | ||||||||

| English (Ireland) | en-IE | False | ||||||||

| English (Ireland) | en-IE | False | ✔ | |||||||

| English (Ireland) | en-IE | False | ✔ | |||||||

| English (Kenya) | en-KE | False | ||||||||

| English (Kenya) | en-KE | True | ||||||||

| English (Kenya) | en-KE | False | ||||||||

| English (New Zealand) | en-NZ | False | ||||||||

| English (New Zealand) | en-NZ | True | ||||||||

| English (New Zealand) | en-NZ | False | ||||||||

| English (New Zealand) | en-NZ | False | ✔ | |||||||

| English (New Zealand) | en-NZ | False | ✔ | |||||||

| English (Nigeria) | en-NG | False | ||||||||

| English (Nigeria) | en-NG | True | ||||||||

| English (Nigeria) | en-NG | False | ||||||||

| English (Pakistan) | en-PK | False | ||||||||

| English (Pakistan) | en-PK | True | ||||||||

| English (Pakistan) | en-PK | False | ||||||||

| English (Pakistan) | en-PK | False | ✔ | |||||||

| English (Pakistan) | en-PK | False | ✔ | |||||||

| English (Philippines) | en-PH | False | ||||||||

| English (Philippines) | en-PH | True | ||||||||

| English (Philippines) | en-PH | False | ||||||||

| English (Singapore) | en-SG | False | ✔ | |||||||

| English (Singapore) | en-SG | True | ✔ | |||||||

| English (Singapore) | en-SG | False | ✔ | |||||||

| English (Singapore) | en-SG | False | ✔ | |||||||

| English (Singapore) | en-SG | False | ✔ | |||||||

| English (South Africa) | en-ZA | False | ||||||||

| English (South Africa) | en-ZA | True | ||||||||

| English (South Africa) | en-ZA | False | ||||||||

| English (Tanzania) | en-TZ | False | ||||||||

| English (Tanzania) | en-TZ | True | ||||||||

| English (Tanzania) | en-TZ | False | ||||||||

| English (United Kingdom) | en-GB | False | ✔ | |||||||

| English (United Kingdom) | en-GB | True | ✔ | |||||||

| English (United Kingdom) | en-GB | False | ✔ | |||||||

| English (United Kingdom) | en-GB | False | ✔ | |||||||

| English (United Kingdom) | en-GB | True | ✔ | |||||||

| English (United Kingdom) | en-GB | False | ✔ | |||||||

| English (United Kingdom) | en-GB | True | ✔ | |||||||

| English (United Kingdom) | en-GB | False | ✔ | |||||||

| English (United Kingdom) | en-GB | False | ✔ | |||||||

| English (United Kingdom) | en-GB | True | ✔ | |||||||

| English (United Kingdom) | en-GB | False | ✔ | |||||||

| English (United Kingdom) | en-GB | True | ✔ | |||||||

| English (United States) | en-US | False | ✔ | |||||||

| English (United States) | en-US | True | ✔ | |||||||

| English (United States) | en-US | False | ✔ | |||||||

| English (United States) | en-US | False | ✔ | |||||||

| English (United States) | en-US | True | ✔ | |||||||

| English (United States) | en-US | False | ✔ | |||||||

| English (United States) | en-US | True | ✔ | |||||||

| English (United States) | en-US | False | ✔ | |||||||

| English (United States) | en-US | False | ✔ | |||||||

| English (United States) | en-US | False | ✔ | |||||||

| English (United States) | en-US | False | ✔ | |||||||

| English (United States) | en-US | True | ✔ | |||||||

| English (United States) | en-US | False | ✔ | |||||||

| English (United States) | en-US | True | ✔ | |||||||

| English (United States) | en-US | False | ✔ | |||||||

| Estonian (Estonia) | et-EE | False | ||||||||

| Estonian (Estonia) | et-EE | True | ||||||||

| Estonian (Estonia) | et-EE | False | ||||||||

| Filipino (Philippines) | fil-PH | False | ||||||||

| Filipino (Philippines) | fil-PH | True | ||||||||

| Filipino (Philippines) | fil-PH | False | ||||||||

| Finnish (Finland) | fi-FI | False | ✔ | |||||||

| Finnish (Finland) | fi-FI | True | ✔ | |||||||

| Finnish (Finland) | fi-FI | False | ✔ | |||||||

| Finnish (Finland) | fi-FI | False | ✔ | |||||||

| Finnish (Finland) | fi-FI | True | ✔ | |||||||

| Finnish (Finland) | fi-FI | False | ✔ | |||||||

| Finnish (Finland) | fi-FI | True | ✔ | |||||||

| French (Belgium) | fr-BE | False | ||||||||

| French (Belgium) | fr-BE | True | ||||||||

| French (Belgium) | fr-BE | False | ||||||||

| French (Belgium) | fr-BE | False | ✔ | |||||||

| French (Belgium) | fr-BE | False | ✔ | |||||||

| French (Canada) | fr-CA | False | ||||||||

| French (Canada) | fr-CA | True | ||||||||

| French (Canada) | fr-CA | False | ||||||||

| French (Canada) | fr-CA | False | ||||||||

| French (Canada) | fr-CA | True | ||||||||

| French (Canada) | fr-CA | False | ||||||||

| French (Canada) | fr-CA | True | ||||||||

| French (Canada) | fr-CA | False | ||||||||

| French (Canada) | fr-CA | False | ||||||||

| French (Canada) | fr-CA | True | ||||||||

| French (Canada) | fr-CA | False | ||||||||

| French (Canada) | fr-CA | True | ||||||||

| French (France) | fr-FR | False | ✔ | |||||||

| French (France) | fr-FR | True | ✔ | |||||||

| French (France) | fr-FR | False | ✔ | |||||||

| French (France) | fr-FR | False | ✔ | |||||||

| French (France) | fr-FR | True | ✔ | |||||||

| French (France) | fr-FR | False | ✔ | |||||||

| French (France) | fr-FR | True | ✔ | |||||||

| French (France) | fr-FR | False | ✔ | |||||||

| French (France) | fr-FR | False | ✔ | |||||||

| French (France) | fr-FR | True | ✔ | |||||||

| French (France) | fr-FR | False | ✔ | |||||||

| French (France) | fr-FR | True | ✔ | |||||||

| French (Switzerland) | fr-CH | False | ||||||||

| French (Switzerland) | fr-CH | True | ||||||||

| French (Switzerland) | fr-CH | False | ||||||||

| French (Switzerland) | fr-CH | False | ✔ | |||||||

| French (Switzerland) | fr-CH | False | ✔ | |||||||

| Galician (Spain) | gl-ES | False | ||||||||

| Galician (Spain) | gl-ES | True | ||||||||

| Galician (Spain) | gl-ES | False | ||||||||

| Georgian (Georgia) | ka-GE | False | ||||||||

| Georgian (Georgia) | ka-GE | True | ||||||||

| Georgian (Georgia) | ka-GE | False | ||||||||

| German (Austria) | de-AT | False | ||||||||

| German (Austria) | de-AT | True | ||||||||

| German (Austria) | de-AT | False | ||||||||

| German (Austria) | de-AT | False | ✔ | |||||||

| German (Austria) | de-AT | False | ✔ | |||||||

| German (Germany) | de-DE | False | ✔ | |||||||