Data Analysis in Research: Types & Methods

Content Index

- What is data analysis in research?

- Why analyze data in research?

- Types of data in research

- Finding patterns in the qualitative data

- Methods used for data analysis in qualitative research

- Preparing data for analysis

- Methods used for data analysis in quantitative research

- Considerations in research data analysis

What is data analysis in research?

Definition of data analysis in research: According to LeCompte and Schensul, research data analysis is a process used by researchers to reduce data to a story and interpret it to derive insights. The data analysis process reduces a large chunk of data into smaller fragments that make sense.

Three essential things occur during the data analysis process. The first is data organization. The second is data reduction through summarization and categorization, which helps find patterns and themes in the data for easy identification and linking. The third is the data analysis itself, which researchers do in both a top-down and a bottom-up fashion.

On the other hand, Marshall and Rossman describe data analysis as a messy, ambiguous, and time-consuming but creative and fascinating process through which a mass of collected data is brought to order, structure and meaning.

We can say that “the data analysis and data interpretation is a process representing the application of deductive and inductive logic to the research and data analysis.”

Why analyze data in research?

Researchers rely heavily on data because they have a story to tell or research problems to solve. Research starts with a question, and data is nothing but an answer to that question. But what if there is no question to ask? It is possible to explore data even without a problem. We call this ‘Data Mining’, and it often reveals interesting patterns within the data that are worth exploring.

Irrespective of the type of data researchers explore, their mission and their audience’s vision guide them to find the patterns that shape the story they want to tell. One of the essential things expected from researchers while analyzing data is to stay open and remain unbiased toward unexpected patterns, expressions, and results. Remember, data analysis sometimes tells the most unforeseen yet exciting stories, ones that were not expected when the analysis began. Therefore, rely on the data you have at hand and enjoy the journey of exploratory research.

Types of data in research

Every kind of data describes things once a specific value is assigned to it. For analysis, you need to organize these values, then process and present them in a given context, to make them useful. Data can come in different forms; here are the primary data types.

- Qualitative data: When the data presented consists of words and descriptions, we call it qualitative data. Although you can observe this data, it is subjective and harder to analyze in research, especially for comparison. Example: anything describing taste, experience, texture, or an opinion is considered qualitative data. This type of data is usually collected through focus groups, personal qualitative interviews, qualitative observation, or open-ended questions in surveys.

- Quantitative data: Any data expressed in numbers or numerical figures is called quantitative data. This type of data can be distinguished into categories, grouped, measured, calculated, or ranked. Example: answers to questions about age, rank, cost, length, weight, scores, etc. all come under this type of data. You can present such data in graphs or charts, or apply statistical analysis methods to it. Outcomes Measurement Systems (OMS) questionnaires in surveys are a significant source of numeric data.

- Categorical data: It is data presented in groups. However, an item included in the categorical data cannot belong to more than one group. Example: a person responding to a survey by stating their lifestyle, marital status, smoking habit, or drinking habit provides categorical data. A chi-square test is a standard method used to analyze this data.
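The chi-square test mentioned for categorical data can be sketched in a few lines. The example below is only an illustration: the 2x2 table of counts (smoking habit by marital status) is invented, and the function name `chi_square_statistic` is an assumption, not something from a particular library. It computes the test statistic and degrees of freedom; comparing the statistic with a critical value (3.841 for one degree of freedom at the 5% level) is left to the reader.

```python
def chi_square_statistic(table):
    """Return (chi-square statistic, degrees of freedom) for a table of counts."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    total = sum(row_totals)

    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count if the two variables were independent.
            expected = row_totals[i] * col_totals[j] / total
            chi2 += (observed - expected) ** 2 / expected

    dof = (len(table) - 1) * (len(table[0]) - 1)
    return chi2, dof


# Hypothetical counts: rows = married / single, columns = smoker / non-smoker.
observed_counts = [
    [30, 10],
    [20, 40],
]

chi2, dof = chi_square_statistic(observed_counts)
print(f"chi-square = {chi2:.2f}, degrees of freedom = {dof}")
```

A large statistic relative to the critical value suggests the two categorical variables are not independent in the sample.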

Data analysis in qualitative research

Data analysis in qualitative research works a little differently from the analysis of numerical data, because qualitative data is made up of words, descriptions, images, objects, and sometimes symbols. Getting insight from such complicated information is a complicated process. Hence it is typically used for exploratory research and data analysis.

Finding patterns in the qualitative data

Although there are several ways to find patterns in textual information, a word-based method is the most relied-upon and widely used technique for research and data analysis. Notably, the data analysis process in qualitative research is manual. Here the researchers usually read the available data and find repetitive or commonly used words.

For example, while studying data collected from African countries to understand the most pressing issues people face, researchers might find “food” and “hunger” are the most commonly used words and will highlight them for further analysis.
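Although the text describes this as a manual process, the word-counting step can be roughly sketched in code. The responses and the stop-word list below are invented for illustration, and `word_frequencies` is a hypothetical helper name; a real study would use its own data and a fuller stop-word list.

```python
import re
from collections import Counter

# Hypothetical open-ended survey responses (invented for illustration).
responses = [
    "Food prices keep rising and hunger is common in my village",
    "We need clean water and more food",
    "Hunger affects the children most, then lack of food at school",
]

# Small, assumed stop-word list; real projects use a fuller one.
STOP_WORDS = {"and", "is", "in", "my", "we", "the", "of", "at", "then", "more", "keep"}


def word_frequencies(texts):
    """Count how often each non-stop word appears across all texts."""
    counts = Counter()
    for text in texts:
        words = re.findall(r"[a-z']+", text.lower())
        counts.update(w for w in words if w not in STOP_WORDS)
    return counts


frequencies = word_frequencies(responses)
top_words = frequencies.most_common(2)
print(top_words)  # [('food', 3), ('hunger', 2)]
```

The most frequent words are only candidates for further analysis; the researcher still has to read them in context.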

The keyword context is another widely used word-based technique. In this method, the researcher tries to understand the concept by analyzing the context in which the participants use a particular keyword.

For example, researchers studying the concept of ‘diabetes’ amongst respondents might analyze the context of when and how each respondent has used or referred to the word ‘diabetes.’
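A minimal sketch of the keyword-in-context idea follows. The sample answer is invented, and `keyword_in_context` with its `window` parameter is a hypothetical helper written for this illustration, not an established API. It lists every occurrence of a keyword together with a few words on either side.

```python
def keyword_in_context(text, keyword, window=3):
    """Return (left context, matched word, right context) for each keyword hit."""
    words = text.split()
    hits = []
    for i, word in enumerate(words):
        # Strip surrounding punctuation before comparing, case-insensitively.
        if word.strip(".,;:!?\"'").lower() == keyword.lower():
            left = " ".join(words[max(0, i - window):i])
            right = " ".join(words[i + 1:i + 1 + window])
            hits.append((left, word, right))
    return hits


# Hypothetical respondent answer.
answer = "My father has diabetes, so I worry that diabetes runs in our family."

contexts = keyword_in_context(answer, "diabetes")
for left, word, right in contexts:
    print(f"{left} [{word}] {right}")
```

Reading the surrounding words side by side makes it easier to see whether respondents mention the keyword as a personal condition, a family concern, or something else.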

The scrutiny-based technique is also one of the highly recommended text analysis methods used to identify patterns in qualitative data. Compare and contrast is the widely used method under this technique; it examines how pieces of text are similar to or different from each other.

For example: To find out the “importance of a resident doctor in a company,” the collected data is divided into people who think it is necessary to hire a resident doctor and those who think it is unnecessary. Compare and contrast is the best method for analyzing polls that use single-answer question types.

Metaphors can be used to reduce the data pile and find patterns in it so that it becomes easier to connect data with theory.

Variable Partitioning is another technique used to split variables so that researchers can find more coherent descriptions and explanations from the enormous data.

Methods used for data analysis in qualitative research

There are several techniques to analyze data in qualitative research; here are some commonly used methods:

- Content Analysis: It is widely accepted and the most frequently employed technique for data analysis in research methodology. It can be used to analyze documented information from text, images, and sometimes physical items. When and where to use this method depends on the research questions.

- Narrative Analysis: This method is used to analyze content gathered from various sources such as personal interviews, field observation, and surveys. Most of the time, the stories or opinions people share are analyzed to find answers to the research questions.

- Discourse Analysis: Similar to narrative analysis, discourse analysis is used to analyze interactions with people. However, this method considers the social context within which the communication between the researcher and respondent takes place. In addition, discourse analysis also takes the respondent's lifestyle and day-to-day environment into account when deriving any conclusion.

- Grounded Theory: When you want to explain why a particular phenomenon happened, grounded theory is the best approach for analyzing qualitative data. Grounded theory is applied to study data about a host of similar cases occurring in different settings. When researchers use this method, they might alter explanations or produce new ones until they arrive at some conclusion.

Data analysis in quantitative research

Preparing data for analysis

The first stage in research and data analysis is to prepare the data for analysis so that the nominal data can be converted into something meaningful. Data preparation consists of the phases below.

Phase I: Data Validation

Data validation is done to understand whether the collected data sample meets the pre-set standards or is a biased data sample. It is divided into four different stages:

- Fraud: To ensure an actual human being records each response to the survey or the questionnaire

- Screening: To make sure each participant or respondent is selected or chosen in compliance with the research criteria

- Procedure: To ensure ethical standards were maintained while collecting the data sample

- Completeness: To ensure that the respondent has answered all the questions in an online survey, or that the interviewer has asked all the questions devised in the questionnaire.
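Of the four stages above, the completeness check is the easiest to automate. The sketch below assumes responses are stored as dictionaries; the question IDs (`q1_age`, `q2_city`, `q3_satisfaction`) and the sample records are hypothetical and only serve to show the idea.

```python
# Hypothetical question IDs that every respondent must answer.
REQUIRED_QUESTIONS = ["q1_age", "q2_city", "q3_satisfaction"]

# Invented sample responses; None or "" means the question was skipped.
responses = [
    {"id": 1, "q1_age": 34, "q2_city": "Austin", "q3_satisfaction": 4},
    {"id": 2, "q1_age": 27, "q2_city": "", "q3_satisfaction": 5},
    {"id": 3, "q1_age": None, "q2_city": "Pune", "q3_satisfaction": None},
]


def missing_questions(response):
    """Return the required questions this response left unanswered."""
    return [q for q in REQUIRED_QUESTIONS if response.get(q) in (None, "")]


# Map each incomplete response ID to the questions it is missing.
incomplete = {
    r["id"]: missing_questions(r) for r in responses if missing_questions(r)
}
print(incomplete)  # {2: ['q2_city'], 3: ['q1_age', 'q3_satisfaction']}
```

Whether incomplete responses are dropped, followed up, or kept with gaps is a study design decision that the code does not make for you.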

Phase II: Data Editing

More often than not, an extensive research data sample comes loaded with errors. Respondents sometimes fill in some fields incorrectly or skip them accidentally. Data editing is a process wherein the researchers have to confirm that the provided data is free of such errors. They need to conduct necessary checks and outlier checks to edit the raw data and make it ready for analysis.

Phase III: Data Coding

Of the three phases, this is the most critical phase of data preparation. It involves grouping and assigning values to the survey responses. If a survey is completed with a sample size of 1,000, the researcher might create age brackets to distinguish the respondents based on their age. It then becomes easier to analyze small data buckets rather than deal with the massive data pile.
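The age-bracket example can be sketched as follows. The bracket boundaries, the labels, and the sample ages are assumptions chosen for illustration; a real study would pick brackets that suit its research questions.

```python
from collections import Counter

# Assumed bracket boundaries (inclusive); choose ones that suit the study.
AGE_BRACKETS = [(18, 24), (25, 34), (35, 44), (45, 54), (55, 64)]


def code_age(age):
    """Assign an age to its bracket label, e.g. 31 -> '25-34'."""
    for low, high in AGE_BRACKETS:
        if low <= age <= high:
            return f"{low}-{high}"
    return "65+" if age >= 65 else "under 18"


# Hypothetical respondent ages.
ages = [19, 23, 31, 38, 42, 47, 58, 66, 71, 29]

bracket_counts = Counter(code_age(a) for a in ages)
for label, count in sorted(bracket_counts.items()):
    print(label, count)
```

Once every response carries a bracket label, the later analysis can work with a handful of groups instead of individual ages.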

Methods used for data analysis in quantitative research

After the data is prepared for analysis, researchers can use different research and data analysis methods to derive meaningful insights. Statistical analysis is the most favored approach for analyzing numerical data. In statistical analysis, distinguishing between categorical data and numerical data is essential, as categorical data involves distinct categories or labels, while numerical data consists of measurable quantities. Statistical methods are classified into two groups: first, ‘descriptive statistics’, used to describe data; second, ‘inferential statistics’, which help in comparing the data.

Descriptive statistics

This method is used to describe the basic features of various types of data in research. It presents the data in such a meaningful way that patterns in the data start making sense. Nevertheless, descriptive analysis does not go beyond summarizing the data; any conclusions drawn are still based on the hypothesis researchers have formulated so far. Here are a few major types of descriptive analysis methods.

Measures of Frequency

- Count, Percent, Frequency

- It is used to denote how often a particular event occurs.

- Researchers use it when they want to showcase how often a response is given.

Measures of Central Tendency

- Mean, Median, Mode

- The method is widely used to summarize a distribution by its central points.

- Researchers use this method when they want to showcase the most common or the average response.

Measures of Dispersion or Variation

- Range, Variance, Standard deviation

- Range = the difference between the highest and lowest points.

- Variance and standard deviation are based on the difference between each observed score and the mean.

- It is used to identify the spread of scores by stating intervals.

- Researchers use this method to show how spread out the data is. It helps them identify the extent to which the data is spread out and how that spread affects the mean.

Measures of Position

- Percentile ranks, Quartile ranks

- It relies on standardized scores helping researchers to identify the relationship between different scores.

- It is often used when researchers want to compare scores with the average count.
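The four families of measures above can be computed with Python's standard `statistics` module. The scores below are invented for illustration, and the sketch uses sample (not population) variance and the module's default quartile method; other tools may use slightly different conventions.

```python
import statistics
from collections import Counter

# Hypothetical test scores, already sorted for readability.
scores = [62, 75, 75, 81, 88, 90, 94]

# Measures of frequency: count and percent for each distinct score.
frequency = Counter(scores)
percent = {value: 100 * count / len(scores) for value, count in frequency.items()}

# Measures of central tendency.
mean = statistics.mean(scores)
median = statistics.median(scores)
mode = statistics.mode(scores)

# Measures of dispersion.
value_range = max(scores) - min(scores)
variance = statistics.variance(scores)  # sample variance
std_dev = statistics.stdev(scores)      # sample standard deviation

# Measures of position: the three quartile cut points.
quartiles = statistics.quantiles(scores, n=4)

print(f"mean={mean:.2f} median={median} mode={mode}")
print(f"range={value_range} variance={variance:.2f} std_dev={std_dev:.2f}")
print(f"quartiles={quartiles}")
```

Each number describes only this sample; none of them says anything yet about a wider population.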

For quantitative research, descriptive analysis often gives absolute numbers, but those numbers alone are never sufficient to demonstrate the rationale behind them. Nevertheless, it is necessary to think of the best method for research and data analysis to suit your survey questionnaire and the story researchers want to tell. For example, the mean is the best way to demonstrate students’ average scores in schools. It is better to rely on descriptive statistics when researchers intend to keep the research or outcome limited to the provided sample without generalizing it. For example, when you want to compare the average votes cast in two different cities, descriptive statistics are enough.

Descriptive analysis is also called a ‘univariate analysis’ since it is commonly used to analyze a single variable.

Inferential statistics

Inferential statistics are used to make predictions about a larger population after research and data analysis of a sample collected from that population. For example, you can ask about 100 audience members at a movie theater whether they like the movie they are watching. Researchers then use inferential statistics on the collected sample to reason that about 80-90% of people like the movie.
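The movie theater example can be made concrete with a confidence interval for a proportion. The sketch below assumes that 85 of the 100 people asked said they liked the movie (an invented figure) and uses the simple normal-approximation (Wald) interval, which is only one of several possible methods and works best for reasonably large samples.

```python
import math
from statistics import NormalDist


def proportion_confidence_interval(successes, n, confidence=0.95):
    """Normal-approximation (Wald) confidence interval for a proportion."""
    p_hat = successes / n
    # z-value that leaves (1 - confidence) / 2 in each tail.
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    margin = z * math.sqrt(p_hat * (1 - p_hat) / n)
    return p_hat - margin, p_hat + margin


# Hypothetical sample: 85 of 100 audience members liked the movie.
low, high = proportion_confidence_interval(85, 100)
print(f"95% CI: {low:.1%} to {high:.1%}")
```

With these assumed numbers the interval runs from roughly 78% to 92%, which is the kind of range a statement like "about 80-90% of people like the movie" summarizes.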

Here are two significant areas of inferential statistics.

- Estimating parameters: It takes statistics from the sample research data and demonstrates something about the population parameter.

- Hypothesis test: It’s about using sample research data to answer the survey research questions. For example, researchers might be interested to understand if the new shade of lipstick recently launched is good or not, or if the multivitamin capsules help children to perform better at games.

These are sophisticated analysis methods used to showcase the relationship between different variables instead of describing a single variable. It is often used when researchers want something beyond absolute numbers to understand the relationship between variables.

Here are some of the commonly used methods for data analysis in research.

- Correlation: When researchers are not conducting experimental or quasi-experimental research but are interested in understanding the relationship between two or more variables, they opt for correlational research methods.

- Cross-tabulation: Also called contingency tables, cross-tabulation is used to analyze the relationship between multiple variables. Suppose the provided data has age and gender categories presented in rows and columns. A two-dimensional cross-tabulation makes data analysis and research easier by showing the number of males and females in each age category.

- Regression analysis: To understand the strength of the relationship between variables, researchers commonly rely on regression analysis, which is also a type of predictive analysis. In this method, you have an essential factor called the dependent variable and one or more independent variables. You undertake efforts to find out the impact of the independent variables on the dependent variable. The values of both independent and dependent variables are assumed to have been ascertained in an error-free, random manner.

- Frequency tables: A frequency table lists each distinct value or category of a variable along with the number of times it occurs in the data. It is a simple way to see which responses are most and least common.

- Analysis of variance: This statistical procedure is used for testing the degree to which two or more groups vary or differ in an experiment. A considerable degree of variation means research findings were significant. In many contexts, the terms ANOVA testing and variance analysis are used interchangeably.
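Several of the methods listed above can be sketched with the standard library alone. All data below is invented, and the helper names (`pearson_r`, `least_squares`, `anova_f`) are hypothetical; the sketch covers a two-way cross-tabulation, Pearson correlation, simple one-variable linear regression, and the F statistic of a one-way ANOVA. It does not compute p-values, which in practice usually come from a statistics package.

```python
import math
from collections import Counter
from statistics import mean

# Cross-tabulation: count respondents in each (age bracket, gender) cell.
respondents = [
    ("18-34", "female"), ("18-34", "male"), ("35-54", "female"),
    ("18-34", "female"), ("35-54", "male"), ("35-54", "female"),
]
crosstab = Counter(respondents)


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length lists."""
    mx, my = mean(xs), mean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def least_squares(xs, ys):
    """Slope and intercept of the simple regression line y = slope * x + intercept."""
    mx, my = mean(xs), mean(ys)
    slope = (sum((x - mx) * (y - my) for x, y in zip(xs, ys))
             / sum((x - mx) ** 2 for x in xs))
    return slope, my - slope * mx


def anova_f(groups):
    """F statistic for a one-way analysis of variance."""
    values = [v for g in groups for v in g]
    grand_mean = mean(values)
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    ss_within = sum((v - mean(g)) ** 2 for g in groups for v in g)
    df_between = len(groups) - 1
    df_within = len(values) - len(groups)
    return (ss_between / df_between) / (ss_within / df_within)


# Hypothetical data: hours studied (independent) vs. test score (dependent).
hours = [1, 2, 3, 4, 5]
scores = [52, 55, 61, 64, 68]
r = pearson_r(hours, scores)
slope, intercept = least_squares(hours, scores)

# Hypothetical ratings from three independent groups.
f_stat = anova_f([[4, 5, 6], [6, 7, 8], [8, 9, 10]])

print(crosstab[("18-34", "female")], round(r, 3), round(slope, 2), f_stat)
```

The correlation and the regression slope describe the same relationship from two angles: the first says how tightly the points follow a line, the second says how much the dependent variable changes per unit of the independent one.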

Considerations in research data analysis

- Researchers must have the necessary research skills to analyze and manipulate the data, and should be trained to demonstrate a high standard of research practice. Ideally, researchers must possess more than a basic understanding of the rationale for selecting one statistical method over another to obtain better data insights.

- Usually, research and data analytics projects differ by scientific discipline; therefore, getting statistical advice at the beginning of analysis helps design a survey questionnaire, select data collection methods, and choose samples.

- The primary aim of data research and analysis is to derive ultimate insights that are unbiased. Any mistake or bias in collecting data, selecting an analysis method, or choosing an audience sample is likely to lead to a biased inference.

- No amount of sophistication in research data and analysis is enough to rectify poorly defined objective outcome measurements. Whether the design is at fault or the intentions are unclear, a lack of clarity might mislead readers, so avoid the practice.

- The motive behind data analysis in research is to present accurate and reliable data. As far as possible, avoid statistical errors, and find a way to deal with everyday challenges like outliers, missing data, data altering, data mining, or developing graphical representation.

The sheer amount of data generated daily is frightening, especially now that data analysis has taken center stage. In 2018, the total data supply amounted to 2.8 trillion gigabytes. Hence, it is clear that enterprises willing to survive in the hypercompetitive world must possess an excellent capability to analyze complex research data, derive actionable insights, and adapt to new market needs.

QuestionPro is an online survey platform that empowers organizations in data analysis and research and provides them a medium to collect data by creating appealing surveys.

MORE LIKE THIS

Jotform vs SurveyMonkey: Which Is Best in 2024

Aug 15, 2024

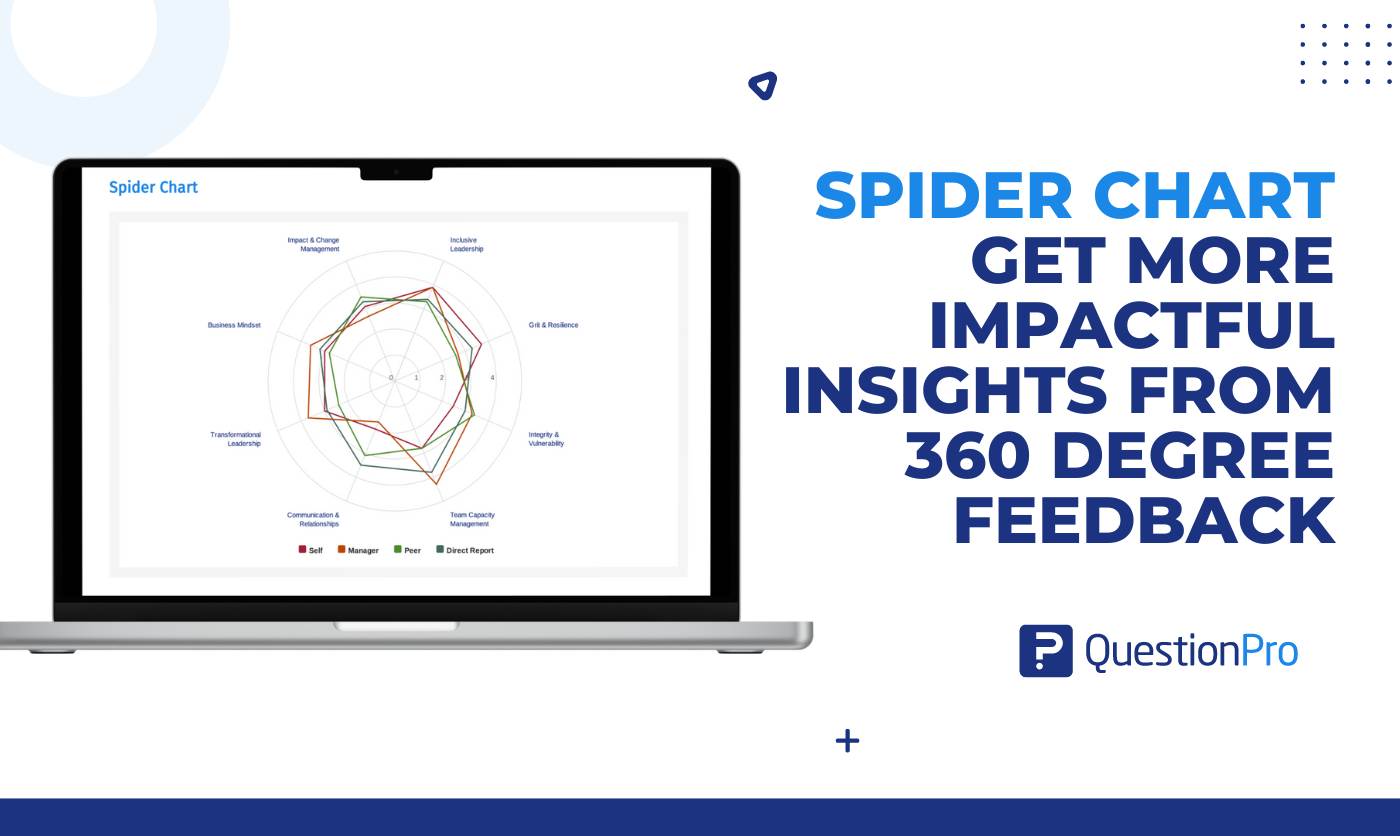

360 Degree Feedback Spider Chart is Back!

Aug 14, 2024

Jotform vs Wufoo: Comparison of Features and Prices

Aug 13, 2024

Product or Service: Which is More Important? — Tuesday CX Thoughts

Other categories.

- Academic Research

- Artificial Intelligence

- Assessments

- Brand Awareness

- Case Studies

- Communities

- Consumer Insights

- Customer effort score

- Customer Engagement

- Customer Experience

- Customer Loyalty

- Customer Research

- Customer Satisfaction

- Employee Benefits

- Employee Engagement

- Employee Retention

- Friday Five

- General Data Protection Regulation

- Insights Hub

- Life@QuestionPro

- Market Research

- Mobile diaries

- Mobile Surveys

- New Features

- Online Communities

- Question Types

- Questionnaire

- QuestionPro Products

- Release Notes

- Research Tools and Apps

- Revenue at Risk

- Survey Templates

- Training Tips

- Tuesday CX Thoughts (TCXT)

- Uncategorized

- What’s Coming Up

- Workforce Intelligence

Educational resources and simple solutions for your research journey

How to Write the Methods Section of a Research Paper

Writing a research paper is both an art and a skill, and knowing how to write the methods section of a research paper is the first crucial step in mastering scientific writing. If, like the majority of early career researchers, you believe that the methods section is the simplest to write and needs little in the way of careful consideration or thought, this article will help you understand that it is not 1.

We have all probably asked our supervisors, coworkers, or search engines “ how to write a methods section of a research paper ” at some point in our scientific careers, so you are not alone if that’s how you ended up here. Even for seasoned researchers, selecting what to include in the methods section from a wealth of experimental information can occasionally be a source of distress and perplexity.

Additionally, journal specifications may, in some cases, make it a requirement rather than a choice to provide a selective yet descriptive account of the experimental procedure. Hence, knowing these nuances of how to write the methods section of a research paper is critical to its success. The methods section of the research paper is not supposed to be the detail-heavy, dull section that some researchers tend to write; rather, it should be the central component of the study that justifies the validity and reliability of the research.

Are you still unsure of how the methods section of a research paper forms the basis of every investigation? Consider the last article you read but ignore the methods section and concentrate on the other parts of the paper . Now think whether you could repeat the study and be sure of the credibility of the findings despite knowing the literature review and even having the data in front of you. You have the answer!

Having established the importance of the methods section, the next question is how to write the methods section of a research paper so that it unifies the overall study. The purpose of the methods section, which was earlier called Materials and Methods, is to describe how the authors went about answering the “research question” at hand. Here, the objective is to tell a coherent story that gives a detailed account of how the study was conducted, the rationale behind specific experimental procedures, the experimental setup, objects (variables) involved, the research protocol employed, tools utilized to measure, calculations and measurements, and the analysis of the collected data 2.

In this article, we will take a deep dive into this topic and provide a detailed overview of how to write the methods section of a research paper . For the sake of clarity, we have separated the subject into various sections with corresponding subheadings.

Table of Contents

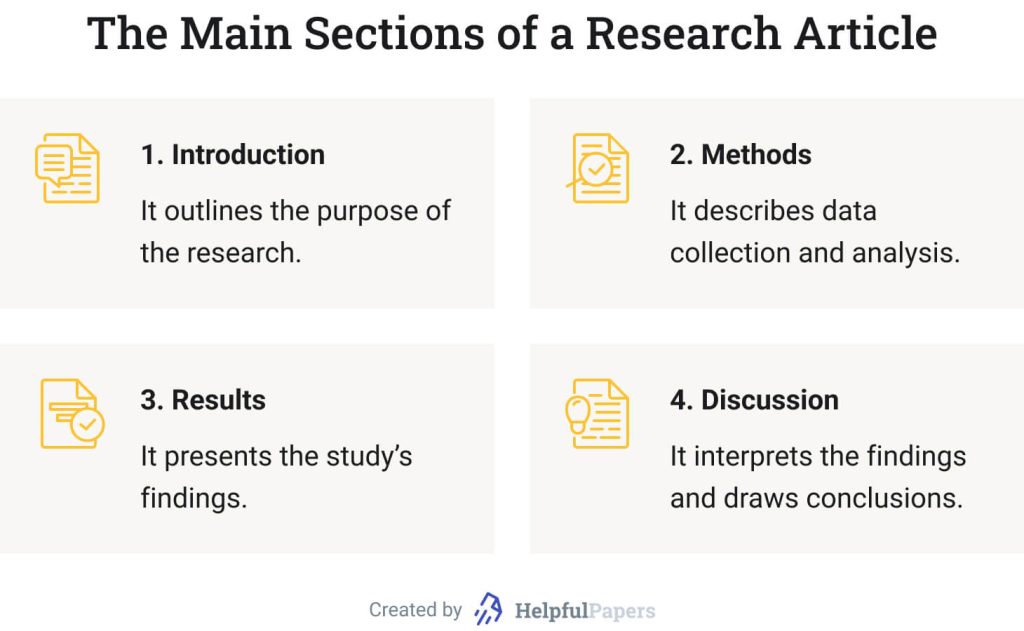

What is the methods section of a research paper ?

The methods section is a fundamental section of any paper since it typically discusses the ‘what’, ‘how’, ‘which’, and ‘why’ of the study, which is necessary to arrive at the final conclusions. In a research article, the introduction, which serves to set the foundation for comprehending the background and results, is usually followed by the methods section, which precedes the results and discussion sections. The methods section must explicitly state what was done, how it was done, which equipment, tools and techniques were utilized, how the measurements/calculations were taken, and why specific research protocols, software, and analytical methods were employed.

Why is the methods section important?

The primary goal of the methods section is to provide pertinent details about the experimental approach so that the reader may put the results in perspective and, if necessary, replicate the findings 3. This section offers readers the chance to evaluate the reliability and validity of any study. In short, it also serves as the study’s blueprint, assisting researchers who might be unsure about any other portion in establishing the study’s context and validity. The methods section plays a crucial role in determining the fate of the article; an incomplete or unreliable methods section can frequently result in early rejection and may lead to numerous rounds of modifications during the publication process. This is because reviewers often use the methods section to assess the reliability and validity of the research protocol and the data analysis employed to address the research topic. In other words, the purpose of the methods section is to demonstrate the research acumen and subject-matter expertise of the author(s) in their field.

Structure of methods section of a research paper

Similar to the research paper, the methods section also follows a defined structure; this may be dictated by the guidelines of a specific journal or can be presented in a chronological or thematic manner based on the study type. When writing the methods section , authors should keep in mind that they are telling a story about how the research was conducted. They should only report relevant information to avoid confusing the reader and include details that would aid in connecting various aspects of the entire research activity together. It is generally advisable to present experiments in the order in which they were conducted. This facilitates the logical flow of the research and allows readers to follow the progression of the study design.

It is also essential to clearly state the rationale behind each experiment and how the findings of earlier experiments informed the design or interpretation of later experiments. This allows the readers to understand the overall purpose of the study design and the significance of each experiment within that context. However, depending on the particular research question and method, it may make sense to present information in a different order; therefore, authors must select the best structure and strategy for their individual studies.

In cases where there is a lot of information, divide the sections into subheadings to cover the pertinent details. If the journal guidelines pose restrictions on the word limit , additional important information can be supplied in the supplementary files. A simple rule of thumb for sectioning the method section is to begin by explaining the methodological approach ( what was done ), describing the data collection methods ( how it was done ), providing the analysis method ( how the data was analyzed ), and explaining the rationale for choosing the methodological strategy. This is described in detail in the upcoming sections.

How to write the methods section of a research paper

Contrary to widespread assumption, the methods section of a research paper should be prepared once the study is complete to prevent missing any key parameter. Hence, please make sure that all relevant experiments are done before you start writing a methods section. The next step for authors is to look up any applicable academic style manuals or journal-specific standards to ensure that the methods section is formatted correctly. The methods section of a research paper typically comprises materials and methods; while writing this section, authors usually arrange the information under each category.

The materials category describes the samples, materials, treatments, and instruments, while experimental design, sample preparation, data collection, and data analysis are a part of the method category. According to the nature of the study, authors should include additional subsections within the methods section, such as ethical considerations like the Declaration of Helsinki (for studies involving human subjects), demographic information of the participants, and any other crucial information that can affect the output of the study. Simply put, the methods section has two major components: content and format. Here is an easy checklist for you to consider if you are struggling with how to write the methods section of a research paper.

- Explain the research design, subjects, and sample details

- Include information on inclusion and exclusion criteria

- Mention ethical or any other permission required for the study

- Include information about materials, experimental setup, tools, and software

- Add details of data collection and analysis methods

- Incorporate how research biases were avoided or confounding variables were controlled

- Evaluate and justify the experimental procedure selected to address the research question

- Provide precise and clear details of each experiment

- Flowcharts, infographics, or tables can be used to present complex information

- Use past tense to show that the experiments have been done

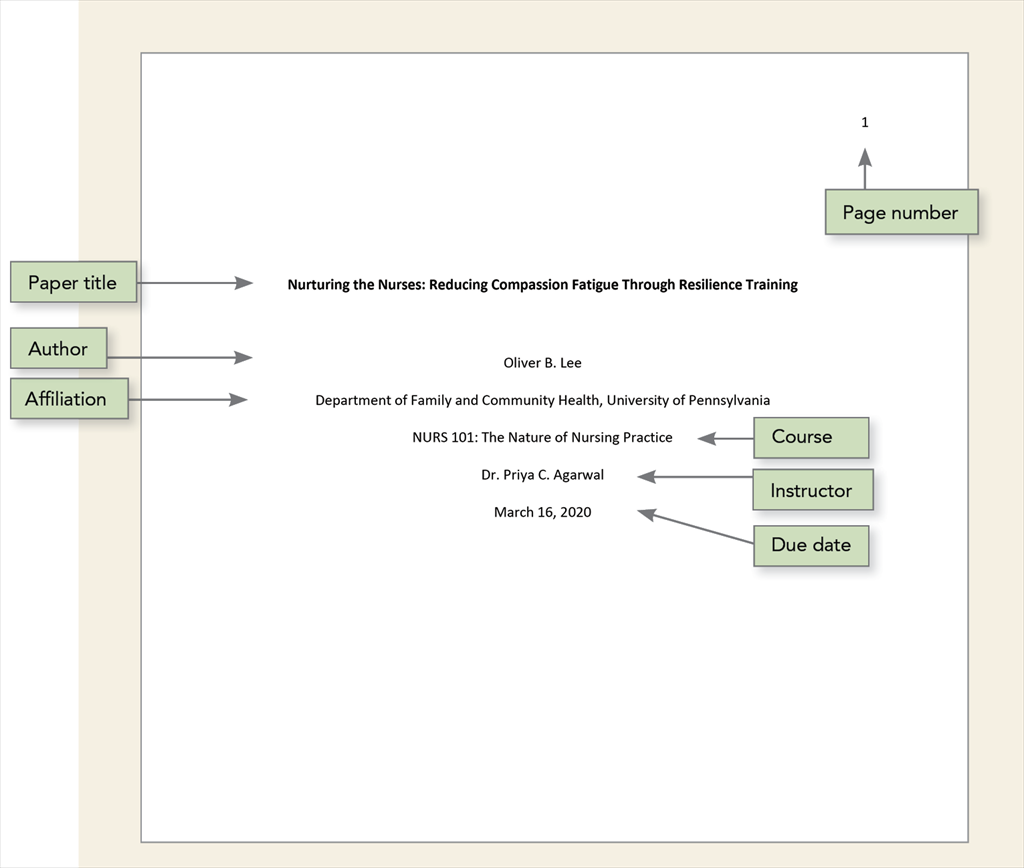

- Follow academic style guides (such as APA or MLA ) to structure the content

- Citations should be included as per standard protocols in the field

Now that you know how to write the methods section of a research paper, let’s address another challenge researchers face while writing the methods section: what to include in it. It is not always obvious how much information is too much when deciding what to include in the methods section of a paper. In the next section, we examine this issue and explore potential solutions.

What to include in the methods section of a research paper

The technical nature of the methods section occasionally makes it harder to present the information clearly and concisely while staying within the study context. Many young researchers tend to veer off subject significantly, and they frequently commit the sin of becoming bogged down in itty bitty details, making the text harder to read and impairing its overall flow. However, the best way to write the methods section is to start with the crucial components of the experiments. If you have trouble deciding which elements are essential, consider whether leaving an element out would make it more challenging to comprehend the context or replicate the results; if it would, keep it. The top-down approach helps to ensure all relevant information is incorporated and vital information is not lost in technicalities. Next, remember to add details that are significant to assess the validity and reliability of the study. Here is a simple checklist for you to follow (bonus tip: you can also make a checklist for your own study to avoid missing any critical information while writing the methods section).

- Structuring the methods section: Authors should diligently follow journal guidelines and adhere to the specific author instructions provided when writing the methods section. Journals typically have specific guidelines for formatting the methods section; for example, Frontiers in Plant Science advises arranging the materials and methods section by subheading and citing relevant literature. There are several standardized checklists available for different study types in the biomedical field, including CONSORT (Consolidated Standards of Reporting Trials) for randomized clinical trials, PRISMA (Preferred Reporting Items for Systematic reviews and Meta-Analyses) for systematic reviews and meta-analyses, and STROBE (STrengthening the Reporting of OBservational studies in Epidemiology) for cohort, case-control, and cross-sectional studies. Before starting the methods section, consult the checklist available in your field that can function as a guide.

- Organizing different sections to tell a story: Once you are sure of the format required for structuring the methods section, the next step is to present the sections in a logical manner; as mentioned earlier, the sections can be organized according to chronology or themes. In the chronological arrangement, you should discuss the methods in the order in which the experiments were carried out. For example, the methods section of a research paper on an animal study should ideally first include information about the species, weight, sex, strain, and age. Next, the number of animals, their initial conditions, and their living and housing conditions should be mentioned. Then, it should describe how the groups were assigned and the intervention (drug treatment, stress, or other) given to each group, and finally, the details of tools and techniques used to measure, collect, and analyze the data. Experiments involving animal or human subjects should additionally include an ethics approval statement. It is best to arrange the section using the thematic approach when discussing distinct experiments that do not follow a sequential order.

- Define and explain the objects and procedure: The experimental procedure should be clearly stated in the methods section. Samples, necessary preparations (of samples, treatments, and drugs), and methods of manipulation need to be included. All variables (control, dependent, independent, and confounding) must be clearly defined, particularly if the confounding variables can affect the outcome of the study.

- Match the order of the methods section with the order of results: Though not mandatory, organizing the manuscript in a logical and coherent manner can improve the readability and clarity of the paper. This can be done by following a consistent structure throughout the manuscript; readers can easily navigate through the different sections and understand the methods and results in relation to each other. Using experiment names as headings for both the methods and results sections can also make it simpler for readers to locate specific information and corroborate it if needed.

- Relevant information must always be included: The methods section should cover all experiments conducted, with their details clearly described. If your target journal has strict word limits, ask whether additional information can be provided in supplemental files or external repositories. For example, Nature Communications encourages authors to deposit their step-by-step protocols in an open repository, Protocol Exchange, which allows the protocols to be linked to the manuscript upon publication. Providing access to detailed protocols also helps to increase the transparency and reproducibility of the research.

- It’s all in the details: The methods section should meticulously list all the materials, tools, instruments, and software used for the different experiments. Specify the testing equipment on which data was obtained, together with its manufacturer’s information (location, city, and state), and describe any stimuli used to manipulate the variables. Provide specifics on the research process you employed; if it was a standard protocol, cite previous studies that also used the protocol. Include any protocol modifications that were made, as well as any other factors that were taken into account when planning the study or gathering data. Authors should explain any new or modified techniques. Typically, readers evaluate the reliability and validity of the procedures using the cited literature, and a widely accepted checklist helps to support the credibility of the methodology. Note: Authors should include a statement on sample size estimation (if applicable), which is often missed. It shows the reader how many subjects are required to detect the expected change in the outcome variables at a given significance level and statistical power.
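To make the note on sample size estimation concrete, here is a minimal sketch of one common textbook calculation: the per-group sample size for a two-sided comparison of two means under a normal approximation. The function name, the default significance level and power, and the example effect size and standard deviation are illustrative assumptions, not values from any particular study or guideline.

```python
import math
from statistics import NormalDist


def sample_size_two_means(delta, sigma, alpha=0.05, power=0.80):
    """Per-group n for a two-sided, two-sample comparison of means.

    Normal-approximation formula:
        n = 2 * (z_{1 - alpha/2} + z_{power})^2 * sigma^2 / delta^2

    delta: smallest difference in means worth detecting (assumed by the researcher)
    sigma: common standard deviation of the outcome (assumed by the researcher)
    """
    if delta == 0:
        raise ValueError("delta must be non-zero")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for the test
    z_beta = NormalDist().inv_cdf(power)           # quantile matching the desired power
    n = 2 * (z_alpha + z_beta) ** 2 * sigma ** 2 / delta ** 2
    return math.ceil(n)  # round up: a fraction of a subject cannot be recruited


# Hypothetical example: detect a 5-unit difference when the SD is 10.
n_per_group = sample_size_two_means(delta=5, sigma=10)
print(f"Subjects needed per group: {n_per_group}")
```

A methods section would normally report the inputs (effect size, variability, significance level, power) alongside the resulting number, so that readers can repeat the calculation.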

- Write for the audience: While explaining the details in the methods section , authors should be mindful of their target audience, as some of the rationale or assumptions on which specific procedures are based might not always be obvious to the audience, particularly for a general audience. Therefore, when in doubt, the objective of a procedure should be specified either in relation to the research question or to the entire protocol.

- Data interpretation and analysis : Information on data processing, statistical testing, levels of significance, and analysis tools and software should be added. Mention if the recommendations and expertise of an experienced statistician were followed. Also, evaluate and justify the preferred statistical method used in the study and its significance.

What NOT to include in the methods section of a research paper

To address “how to write the methods section of a research paper”, authors should pay careful attention not only to what to include but also to what to leave out. Here is a list of don’ts when writing the methods section:

- Do not elaborate on specifics of standard methods/procedures: You should refrain from adding unnecessary details of experiments and practices that are well established and cited previously. Instead, simply cite relevant literature or mention if the manufacturer’s protocol was followed.

- Do not add unnecessary details : Do not include minute details of the experimental procedure and materials/instruments used that are not significant for the outcome of the experiment. For example, there is no need to mention the brand name of the water bath used for incubation.

- Do not discuss the results: The methods section is not to discuss the results or refer to the tables and figures; save it for the results and discussion section. Also, focus on the methods selected to conduct the study and avoid diverting to other methods or commenting on their pros or cons.

- Do not make the section bulky : For extensive methods and protocols, provide the essential details and share the rest of the information in the supplemental files. The writing should be clear yet concise to maintain the flow of the section.

We hope that by this point, you understand how crucial it is to write a thoughtful and precise methods section and the ins and outs of how to write the methods section of a research paper . To restate, the entire purpose of the methods section is to enable others to reproduce the results or verify the research. We sincerely hope that this post has cleared up any confusion and given you a fresh perspective on the methods section .

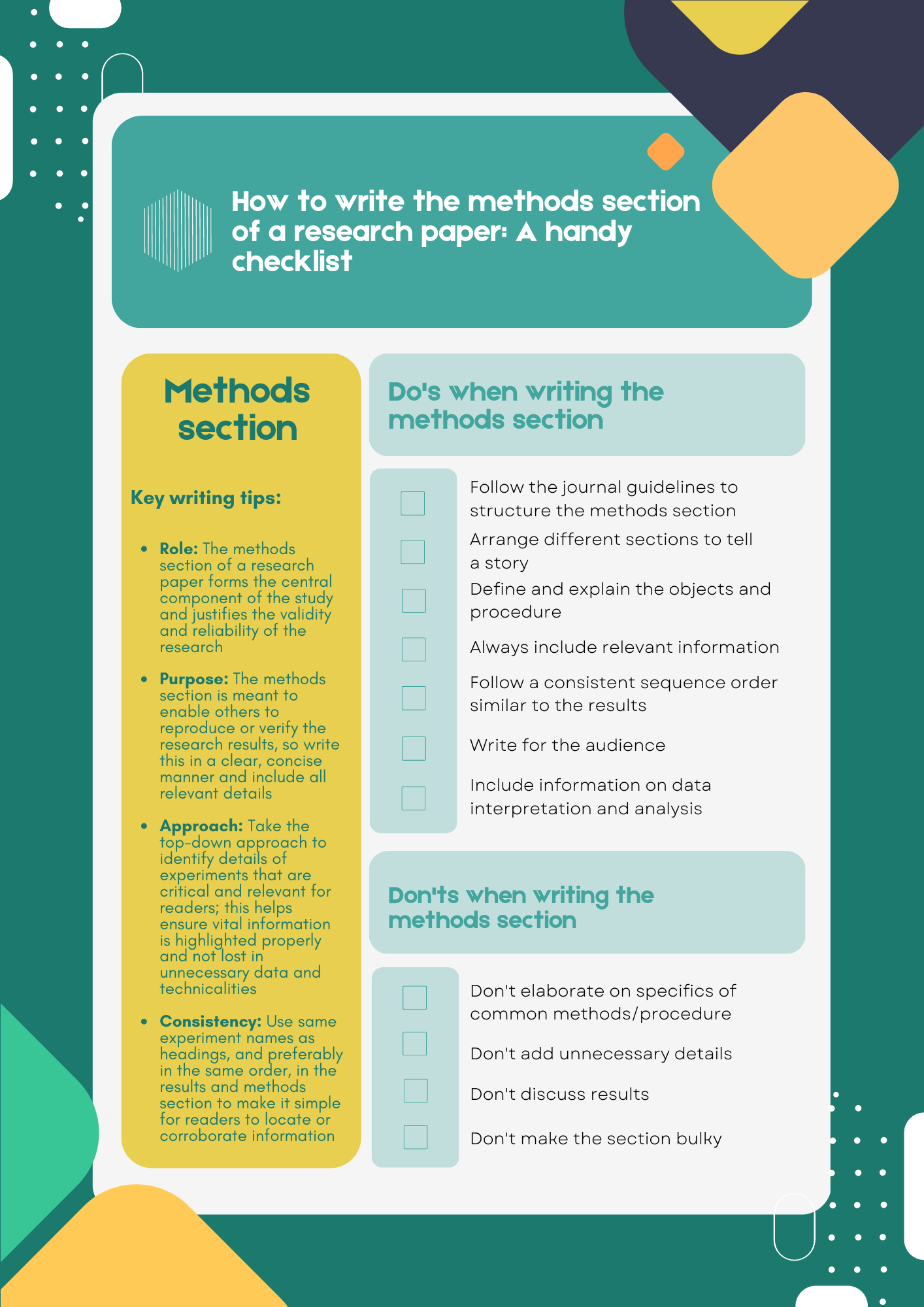

As a parting gift, we’re leaving you with a handy checklist that will help you understand how to write the methods section of a research paper . Feel free to download this checklist and use or share this with those who you think may benefit from it.

References

- Bhattacharya, D. How to write the Methods section of a research paper. Editage Insights, 2018. https://www.editage.com/insights/how-to-write-the-methods-section-of-a-research-paper (2018).

- Kallet, R. H. How to Write the Methods Section of a Research Paper. Respiratory Care 49, 1229–1232 (2004). https://pubmed.ncbi.nlm.nih.gov/15447808/

- Grindstaff, T. L. & Saliba, S. A. AVOIDING MANUSCRIPT MISTAKES. Int J Sports Phys Ther 7, 518–524 (2012). https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3474299/

What Is a Research Methodology? | Steps & Tips

Published on 25 February 2019 by Shona McCombes . Revised on 10 October 2022.

Your research methodology discusses and explains the data collection and analysis methods you used in your research. A key part of your thesis, dissertation, or research paper, the methodology chapter explains what you did and how you did it, allowing readers to evaluate the reliability and validity of your research.

It should include:

- The type of research you conducted

- How you collected and analysed your data

- Any tools or materials you used in the research

- Why you chose these methods

- Your methodology section should generally be written in the past tense .

- Academic style guides in your field may provide detailed guidelines on what to include for different types of studies.

- Your citation style might provide guidelines for your methodology section (e.g., an APA Style methods section ).

Table of contents

- How to write a research methodology

- Why is a methods section important?

- Step 1: Explain your methodological approach

- Step 2: Describe your data collection methods

- Step 3: Describe your analysis method

- Step 4: Evaluate and justify the methodological choices you made

- Tips for writing a strong methodology chapter

- Frequently asked questions about methodology

Your methods section is your opportunity to share how you conducted your research and why you chose the methods you chose. It’s also the place to show that your research was rigorously conducted and can be replicated .

It gives your research legitimacy and situates it within your field, and also gives your readers a place to refer to if they have any questions or critiques in other sections.

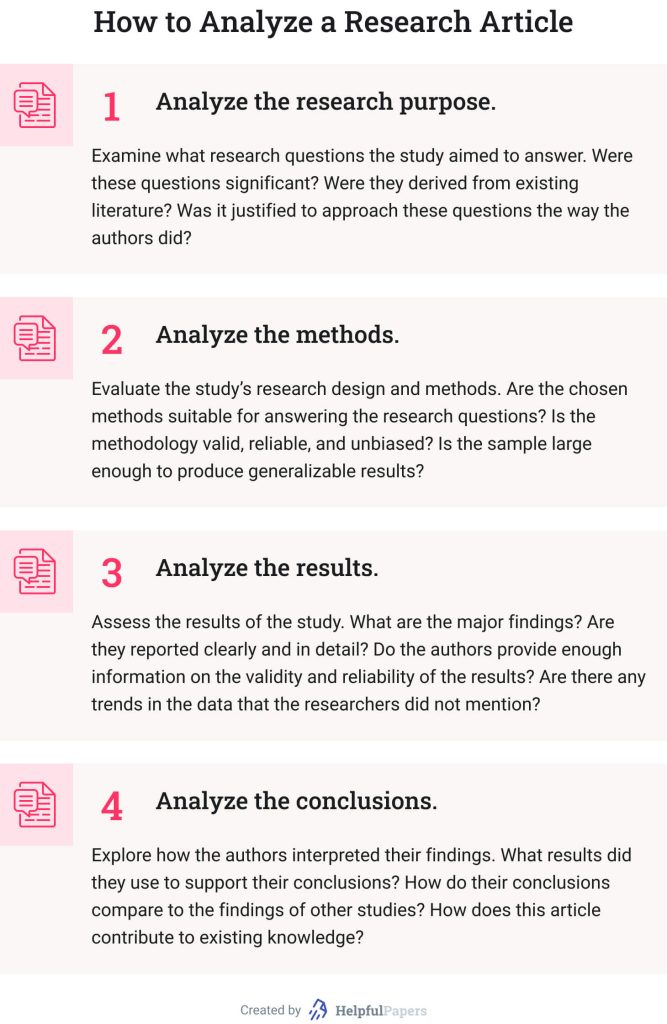

You can start by introducing your overall approach to your research. You have two options here.

Option 1: Start with your “what”

What research problem or question did you investigate?

- Aim to describe the characteristics of something?

- Explore an under-researched topic?

- Establish a causal relationship?

And what type of data did you need to achieve this aim?

- Quantitative data , qualitative data , or a mix of both?

- Primary data collected yourself, or secondary data collected by someone else?

- Experimental data gathered by controlling and manipulating variables, or descriptive data gathered via observations?

Option 2: Start with your “why”

Depending on your discipline, you can also start with a discussion of the rationale and assumptions underpinning your methodology. In other words, why did you choose these methods for your study?

- Why is this the best way to answer your research question?

- Is this a standard methodology in your field, or does it require justification?

- Were there any ethical considerations involved in your choices?

- What are the criteria for validity and reliability in this type of research ?

Once you have introduced your reader to your methodological approach, you should share full details about your data collection methods .

Quantitative methods

For your results to be considered generalisable, you should describe your quantitative research methods in enough detail for another researcher to replicate your study.

Here, explain how you operationalised your concepts and measured your variables. Discuss your sampling method or inclusion/exclusion criteria, as well as any tools, procedures, and materials you used to gather your data.

Surveys: Describe where, when, and how the survey was conducted.

- How did you design the questionnaire?

- What form did your questions take (e.g., multiple choice, Likert scale )?

- Were your surveys conducted in-person or virtually?

- What sampling method did you use to select participants?

- What was your sample size and response rate?

Experiments: Share full details of the tools, techniques, and procedures you used to conduct your experiment.

- How did you design the experiment ?

- How did you recruit participants?

- How did you manipulate and measure the variables ?

- What tools did you use?

Existing data: Explain how you gathered and selected the material (such as datasets or archival data) that you used in your analysis.

- Where did you source the material?

- How was the data originally produced?

- What criteria did you use to select material (e.g., date range)?

The survey consisted of 5 multiple-choice questions and 10 questions measured on a 7-point Likert scale.

The goal was to collect survey responses from 350 customers visiting the fitness apparel company’s brick-and-mortar location in Boston on 4–8 July 2022, between 11:00 and 15:00.

Here, a customer was defined as a person who had purchased a product from the company on the day they took the survey. Participants were given 5 minutes to fill in the survey anonymously. In total, 408 customers responded, but not all surveys were fully completed. Due to this, 371 survey results were included in the analysis.
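The figures reported in this example can be checked with a little arithmetic. The sketch below uses only the numbers stated above; the variable names are illustrative. Note that it computes the share of received responses that were analysed, not a response rate in the strict sense, because the example does not say how many customers were approached.

```python
# Figures taken from the survey example above.
target_responses = 350
responses_received = 408
responses_analysed = 371

# Incomplete surveys that were left out of the analysis.
excluded = responses_received - responses_analysed

# Share of received responses that were complete enough to analyse.
completion_rate = responses_analysed / responses_received

# Did the analysed sample reach the stated goal?
target_met = responses_analysed >= target_responses

print(f"Excluded incomplete surveys: {excluded}")
print(f"Share of responses analysed: {completion_rate:.1%}")
print(f"Target of {target_responses} met: {target_met}")
```

Reporting these counts together lets readers see how much data was lost between collection and analysis.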

Qualitative methods

In qualitative research , methods are often more flexible and subjective. For this reason, it’s crucial to robustly explain the methodology choices you made.

Be sure to discuss the criteria you used to select your data, the context in which your research was conducted, and the role you played in collecting your data (e.g., were you an active participant or a passive observer?).

Interviews or focus groups: Describe where, when, and how the interviews were conducted.

- How did you find and select participants?

- How many participants took part?

- What form did the interviews take ( structured , semi-structured , or unstructured )?

- How long were the interviews?

- How were they recorded?

Participant observation: Describe where, when, and how you conducted the observation or ethnography.

- What group or community did you observe? How long did you spend there?

- How did you gain access to this group? What role did you play in the community?

- How long did you spend conducting the research? Where was it located?

- How did you record your data (e.g., audiovisual recordings, note-taking)?

Existing data: Explain how you selected case study materials for your analysis.

- What type of materials did you analyse?

- How did you select them?

In order to gain better insight into possibilities for future improvement of the fitness shop’s product range, semi-structured interviews were conducted with 8 returning customers.

Here, a returning customer was defined as someone who usually bought products at least twice a week from the store.

Surveys were used to select participants. Interviews were conducted in a small office next to the cash register and lasted approximately 20 minutes each. Answers were recorded by note-taking, and seven interviews were also filmed with consent. One interviewee preferred not to be filmed.

Mixed methods

Mixed methods research combines quantitative and qualitative approaches. If a standalone quantitative or qualitative study is insufficient to answer your research question, mixed methods may be a good fit for you.

Mixed methods are less common than standalone analyses, largely because they require a great deal of effort to pull off successfully. If you choose to pursue mixed methods, it’s especially important to robustly justify your methods here.

Next, you should indicate how you processed and analysed your data. Avoid going into too much detail: you should not start introducing or discussing any of your results at this stage.

In quantitative research , your analysis will be based on numbers. In your methods section, you can include:

- How you prepared the data before analysing it (e.g., checking for missing data, removing outliers, transforming variables)

- Which software you used (e.g., SPSS, Stata or R)

- Which statistical tests you used (e.g., two-tailed t test, simple linear regression)
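The items in this list can be illustrated with a short sketch: dropping rows with missing values, removing outliers, and fitting a simple linear regression. The dataset, the variable names, and the choice of the 1.5 × IQR rule for outliers are assumptions made for illustration; your own methods section should state whichever rules and software you actually used.

```python
from statistics import mean, quantiles

# Hypothetical raw data: (hours_per_week, score); None marks a missing value.
raw = [(1, 2.1), (2, 3.9), (3, None), (4, 8.2),
       (5, 9.8), (6, 12.1), (7, 95.0), (8, 16.0)]


def prepare(rows):
    """Drop rows with missing values, then remove y-outliers (1.5 * IQR rule)."""
    complete = [(x, y) for x, y in rows if x is not None and y is not None]
    ys = [y for _, y in complete]
    q1, _, q3 = quantiles(ys, n=4)  # quartiles of the outcome variable
    iqr = q3 - q1
    low, high = q1 - 1.5 * iqr, q3 + 1.5 * iqr
    return [(x, y) for x, y in complete if low <= y <= high]


def fit_line(rows):
    """Ordinary least squares for y = intercept + slope * x."""
    xs = [x for x, _ in rows]
    ys = [y for _, y in rows]
    mx, my = mean(xs), mean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in rows)
    sxx = sum((x - mx) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = my - slope * mx
    return slope, intercept


clean = prepare(raw)
slope, intercept = fit_line(clean)
print(f"Rows kept: {len(clean)} of {len(raw)}")
print(f"slope = {slope:.2f}, intercept = {intercept:.2f}")
```

Whatever tools you use, the point is the same: state each preparation step and each test explicitly enough that a reader could repeat them on the same data.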

In qualitative research, your analysis will be based on language, images, and observations (often involving some form of textual analysis ).

Specific methods might include:

- Content analysis : Categorising and discussing the meaning of words, phrases and sentences

- Thematic analysis : Coding and closely examining the data to identify broad themes and patterns

- Discourse analysis : Studying communication and meaning in relation to their social context

Mixed methods combine the above two research methods, integrating both qualitative and quantitative approaches into one coherent analytical process.

Above all, your methodology section should clearly make the case for why you chose the methods you did. This is especially true if you did not take the most standard approach to your topic. In this case, discuss why other methods were not suitable for your objectives, and show how this approach contributes new knowledge or understanding.

In any case, it should be overwhelmingly clear to your reader that you set yourself up for success in terms of your methodology’s design. Show how your methods should lead to results that are valid and reliable, while leaving the analysis of the meaning, importance, and relevance of your results for your discussion section .

- Quantitative: Lab-based experiments cannot always accurately simulate real-life situations and behaviours, but they are effective for testing causal relationships between variables .

- Qualitative: Unstructured interviews usually produce results that cannot be generalised beyond the sample group , but they provide a more in-depth understanding of participants’ perceptions, motivations, and emotions.

- Mixed methods: Despite issues systematically comparing differing types of data, a solely quantitative study would not sufficiently incorporate the lived experience of each participant, while a solely qualitative study would be insufficiently generalisable.

Remember that your aim is not just to describe your methods, but to show how and why you applied them. Again, it’s critical to demonstrate that your research was rigorously conducted and can be replicated.

1. Focus on your objectives and research questions

The methodology section should clearly show why your methods suit your objectives and convince the reader that you chose the best possible approach to answering your problem statement and research questions .

2. Cite relevant sources

Your methodology can be strengthened by referencing existing research in your field. This can help you to:

- Show that you followed established practice for your type of research

- Discuss how you decided on your approach by evaluating existing research

- Present a novel methodological approach to address a gap in the literature

3. Write for your audience

Consider how much information you need to give, and avoid getting too lengthy. If you are using methods that are standard for your discipline, you probably don’t need to give a lot of background or justification.

Regardless, your methodology should be a clear, well-structured text that makes an argument for your approach, not just a list of technical details and procedures.

Methodology refers to the overarching strategy and rationale of your research. Developing your methodology involves studying the research methods used in your field and the theories or principles that underpin them, in order to choose the approach that best matches your objectives.

Methods are the specific tools and procedures you use to collect and analyse data (e.g. interviews, experiments , surveys , statistical tests ).

In a dissertation or scientific paper, the methodology chapter or methods section comes after the introduction and before the results , discussion and conclusion .

Depending on the length and type of document, you might also include a literature review or theoretical framework before the methodology.

Quantitative research deals with numbers and statistics, while qualitative research deals with words and meanings.

Quantitative methods allow you to test a hypothesis by systematically collecting and analysing data, while qualitative methods allow you to explore ideas and experiences in depth.

A sample is a subset of individuals from a larger population. Sampling means selecting the group that you will actually collect data from in your research.

For example, if you are researching the opinions of students in your university, you could survey a sample of 100 students.

Statistical sampling allows you to test a hypothesis about the characteristics of a population. There are various sampling methods you can use to ensure that your sample is representative of the population as a whole.
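As a minimal sketch of the student survey example, the snippet below draws a simple random sample of 100 from a hypothetical list of student IDs. The population size, the ID format, and the seed are assumptions for illustration; simple random sampling is only one of the sampling methods mentioned above.

```python
import random

# Hypothetical sampling frame: one ID per enrolled student.
population = [f"student_{i:05d}" for i in range(1, 20001)]

# A fixed seed makes the draw reproducible, which helps when documenting methods.
rng = random.Random(42)

# Simple random sample without replacement: every student is equally likely to be chosen.
sample = rng.sample(population, k=100)

print(f"Sampled {len(sample)} of {len(population)} students")
```

Recording the sampling frame, the method, and the seed (if software was used) gives readers what they need to judge how representative the sample is.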

McCombes, S. (2022, October 10). What Is a Research Methodology? | Steps & Tips. Scribbr. Retrieved 12 August 2024, from https://www.scribbr.co.uk/thesis-dissertation/methodology/

Quantitative Analysis: the guide for beginners

- University of Valencia

Qualitative Data Analysis Methods 101:

The “big 6” methods + examples.

By: Kerryn Warren (PhD) | Reviewed By: Eunice Rautenbach (D.Tech) | May 2020 (Updated April 2023)

Qualitative data analysis methods. Wow, that’s a mouthful.

If you’re new to the world of research, qualitative data analysis can look rather intimidating. So much bulky terminology and so many abstract, fluffy concepts. It certainly can be a minefield!

Don’t worry – in this post, we’ll unpack the most popular analysis methods , one at a time, so that you can approach your analysis with confidence and competence – whether that’s for a dissertation, thesis or really any kind of research project.

What (exactly) is qualitative data analysis?

To understand qualitative data analysis, we need to first understand qualitative data – so let’s step back and ask the question, “what exactly is qualitative data?”.

Qualitative data refers to pretty much any data that’s “not numbers” . In other words, it’s not the stuff you measure using a fixed scale or complex equipment, nor do you analyse it using complex statistics or mathematics.

So, if it’s not numbers, what is it?

Words, you guessed? Well… sometimes , yes. Qualitative data can, and often does, take the form of interview transcripts, documents and open-ended survey responses – but it can also involve the interpretation of images and videos. In other words, qualitative isn’t just limited to text-based data.

So, how’s that different from quantitative data, you ask?

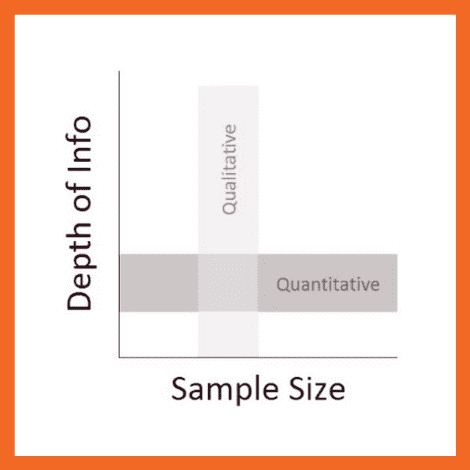

Simply put, qualitative research focuses on words, descriptions, concepts or ideas – while quantitative research focuses on numbers and statistics . Qualitative research investigates the “softer side” of things to explore and describe , while quantitative research focuses on the “hard numbers”, to measure differences between variables and the relationships between them. If you’re keen to learn more about the differences between qual and quant, we’ve got a detailed post over here .

So, qualitative analysis is easier than quantitative, right?

Not quite. In many ways, qualitative data can be challenging and time-consuming to analyse and interpret. At the end of your data collection phase (which itself takes a lot of time), you’ll likely have many pages of text-based data or hours upon hours of audio to work through. You might also have subtle nuances of interactions or discussions that have danced around in your mind, or that you scribbled down in messy field notes. All of this needs to work its way into your analysis.

Making sense of all of this is no small task and you shouldn’t underestimate it. Long story short – qualitative analysis can be a lot of work! Of course, quantitative analysis is no piece of cake either, but it’s important to recognise that qualitative analysis still requires a significant investment in terms of time and effort.

In this post, we’ll explore qualitative data analysis by looking at some of the most common analysis methods we encounter. We’re not going to cover every possible qualitative method and we’re not going to go into heavy detail – we’re just going to give you the big picture. That said, we will of course include links to loads of extra resources so that you can learn more about whichever analysis method interests you.

Without further delay, let’s get into it.

The “Big 6” Qualitative Analysis Methods

There are many different types of qualitative data analysis, all of which serve different purposes and have unique strengths and weaknesses . We’ll start by outlining the analysis methods and then we’ll dive into the details for each.

The 6 most popular methods (or at least the ones we see at Grad Coach) are:

- Content analysis

- Narrative analysis

- Discourse analysis

- Thematic analysis

- Grounded theory (GT)

- Interpretive phenomenological analysis (IPA)

Let’s take a look at each of them…

QDA Method #1: Qualitative Content Analysis

Content analysis is possibly the most common and straightforward QDA method. At the simplest level, content analysis is used to evaluate patterns within a piece of content (for example, words, phrases or images) or across multiple pieces of content or sources of communication. For example, a collection of newspaper articles or political speeches.

With content analysis, you could, for instance, identify the frequency with which an idea is shared or spoken about – like the number of times a Kardashian is mentioned on Twitter. Or you could identify patterns of deeper underlying interpretations – for instance, by identifying phrases or words in tourist pamphlets that highlight India as an ancient country.

Because content analysis can be used in such a wide variety of ways, it’s important to go into your analysis with a very specific question and goal, or you’ll get lost in the fog. With content analysis, you’ll group large amounts of text into codes , summarise these into categories, and possibly even tabulate the data to calculate the frequency of certain concepts or variables. Because of this, content analysis provides a small splash of quantitative thinking within a qualitative method.
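The coding and tabulating steps described above can be sketched in a few lines. Everything in the example is hypothetical: the codebook, the categories, and the pamphlet snippets are invented for illustration, and keyword matching is only a crude stand-in for the careful reading a human coder would do.

```python
from collections import Counter

# Hypothetical codebook: each code is signalled by a few keywords.
codebook = {
    "heritage": ["ancient", "heritage", "tradition"],
    "nature": ["beach", "mountain", "wildlife"],
}

# Hypothetical mapping from codes to broader categories.
categories = {"heritage": "culture", "nature": "landscape"}

# Hypothetical snippets from tourist pamphlets.
documents = [
    "Explore ancient temples and living tradition.",
    "Relax on the beach or trek a mountain trail.",
    "An ancient land with a rich heritage and abundant wildlife.",
    "Discover centuries of tradition in every village.",
]


def code_document(text, codebook):
    """Return the set of codes whose keywords appear in the text."""
    lowered = text.lower()
    return {code for code, keywords in codebook.items()
            if any(keyword in lowered for keyword in keywords)}


# Count how many documents each code appears in.
code_counts = Counter()
for doc in documents:
    code_counts.update(code_document(doc, codebook))

# Roll the code counts up into categories.
category_counts = Counter()
for code, count in code_counts.items():
    category_counts[categories[code]] += count

print(dict(code_counts))
print(dict(category_counts))
```

The frequency table is the “small splash of quantitative thinking”; interpreting what the codes mean in context remains a qualitative task.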

Naturally, while content analysis is widely useful, it’s not without its drawbacks . One of the main issues with content analysis is that it can be very time-consuming , as it requires lots of reading and re-reading of the texts. Also, because of its multidimensional focus on both qualitative and quantitative aspects, it is sometimes accused of losing important nuances in communication.

Content analysis also tends to concentrate on a very specific timeline and doesn’t take into account what happened before or after that timeline. This isn’t necessarily a bad thing though – just something to be aware of. So, keep these factors in mind if you’re considering content analysis. Every analysis method has its limitations, so don’t be put off by these – just be aware of them!

QDA Method #2: Narrative Analysis

As the name suggests, narrative analysis is all about listening to people telling stories and analysing what that means . Since stories serve a functional purpose of helping us make sense of the world, we can gain insights into the ways that people deal with and make sense of reality by analysing their stories and the ways they’re told.

You could, for example, use narrative analysis to explore whether how something is being said is important. For instance, the narrative of a prisoner trying to justify their crime could provide insight into their view of the world and the justice system. Similarly, analysing the ways entrepreneurs talk about the struggles in their careers or cancer patients telling stories of hope could provide powerful insights into their mindsets and perspectives . Simply put, narrative analysis is about paying attention to the stories that people tell – and more importantly, the way they tell them.

Of course, the narrative approach has its weaknesses , too. Sample sizes are generally quite small due to the time-consuming process of capturing narratives. Because of this, along with the multitude of social and lifestyle factors which can influence a subject, narrative analysis can be quite difficult to reproduce in subsequent research. This means that it’s difficult to test the findings of some of this research.

Similarly, researcher bias can have a strong influence on the results here, so you need to be particularly careful about the potential biases you can bring into your analysis when using this method. Nevertheless, narrative analysis is still a very useful qualitative analysis method – just keep these limitations in mind and be careful not to draw broad conclusions.

QDA Method #3: Discourse Analysis

Discourse is simply a fancy word for written or spoken language or debate. So, discourse analysis is all about analysing language within its social context. In other words, analysing language – such as a conversation, a speech, etc. – within the culture and society in which it takes place. For example, you could analyse how a janitor speaks to a CEO, or how politicians speak about terrorism.

To truly understand these conversations or speeches, the culture and history of those involved in the communication are important factors to consider. For example, a janitor might speak more casually with a CEO in a company that emphasises equality among workers. Similarly, a politician might speak more about terrorism if there was a recent terrorist incident in the country.

So, as you can see, by using discourse analysis, you can identify how culture , history or power dynamics (to name a few) have an effect on the way concepts are spoken about. So, if your research aims and objectives involve understanding culture or power dynamics, discourse analysis can be a powerful method.

Because there are many social influences in terms of how we speak to each other, the potential use of discourse analysis is vast . Of course, this also means it’s important to have a very specific research question (or questions) in mind when analysing your data and looking for patterns and themes, or you might land up going down a winding rabbit hole.

Discourse analysis can also be very time-consuming as you need to sample the data to the point of saturation – in other words, until no new information and insights emerge. But this is, of course, part of what makes discourse analysis such a powerful technique. So, keep these factors in mind when considering this QDA method.
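One way to picture saturation is to track how many new codes each additional transcript contributes. The sketch below is an illustration only: the function name, the coded transcripts, and the stopping rule (two consecutive transcripts with nothing new) are assumptions, and in practice saturation is a judgement call rather than a mechanical threshold.

```python
def saturation_point(coded_items, patience=2):
    """Return the 1-based index of the last item that introduced a new code,
    once `patience` consecutive items have added nothing new.
    Returns None if that stopping rule is never met."""
    seen = set()
    streak = 0    # consecutive items without any new code
    last_new = 0  # index of the most recent item that added a code
    for index, codes in enumerate(coded_items, start=1):
        new_codes = set(codes) - seen
        seen |= new_codes
        if new_codes:
            streak = 0
            last_new = index
        else:
            streak += 1
            if streak >= patience:
                return last_new
    return None


# Hypothetical codes assigned to five conversations, in the order analysed.
coded_conversations = [
    {"power", "formality"},
    {"power", "humour"},
    {"deference"},
    {"power"},
    {"humour", "formality"},
]

print(saturation_point(coded_conversations))
```

A larger `patience` value makes the rule more conservative, at the cost of analysing more material before stopping.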

QDA Method #4: Thematic Analysis

Thematic analysis looks at patterns of meaning in a data set – for example, a set of interviews or focus group transcripts. But what exactly does that… mean? Well, a thematic analysis takes bodies of data (which are often quite large) and groups them according to similarities – in other words, themes . These themes help us make sense of the content and derive meaning from it.

Let’s take a look at an example.

With thematic analysis, you could analyse 100 online reviews of a popular sushi restaurant to find out what patrons think about the place. By reviewing the data, you would then identify the themes that crop up repeatedly within the data – for example, “fresh ingredients” or “friendly wait staff”.
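As a rough illustration of the tallying step in this example, the sketch below counts how many reviews touch on each theme. Everything in it is an assumption made for illustration: the `CODEBOOK` keywords, the function names and the sample reviews are hypothetical, and simple keyword matching is only a crude stand-in for the interpretive coding a researcher would actually do.

```python
from collections import Counter

# Hypothetical codebook: each theme is linked to a few indicative keywords.
CODEBOOK = {
    "fresh ingredients": ["fresh", "quality fish"],
    "friendly wait staff": ["friendly", "welcoming", "attentive"],
}


def code_review(review, codebook=CODEBOOK):
    """Return the set of themes whose keywords appear in one review."""
    text = review.lower()
    return {
        theme
        for theme, keywords in codebook.items()
        if any(keyword in text for keyword in keywords)
    }


def tally_themes(reviews, codebook=CODEBOOK):
    """Count how many reviews mention each theme (at most once per review)."""
    counts = Counter()
    for review in reviews:
        counts.update(code_review(review, codebook))
    return counts


# Made-up reviews standing in for the 100 online reviews
reviews = [
    "The salmon was so fresh, and our waiter was friendly.",
    "Attentive staff, but the rice was bland.",
    "Fresh fish every time.",
    "Friendly and welcoming service.",
]

theme_counts = tally_themes(reviews)
for theme, count in theme_counts.most_common():
    print(f"{theme}: {count} of {len(reviews)} reviews")
```

In a real project, the codebook itself would emerge from reading the data, and themes would be refined as you go; a tally like this only helps once that interpretive work is done.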

So, as you can see, thematic analysis can be pretty useful for finding out about people’s experiences, views, and opinions. Therefore, if your research aims and objectives involve understanding people’s experience or view of something, thematic analysis can be a great choice.

Since thematic analysis is a bit of an exploratory process, it’s not unusual for your research questions to develop, or even change, as you progress through the analysis. While this is somewhat natural in exploratory research, it can also be seen as a disadvantage, as it means that the data needs to be re-reviewed each time a research question is adjusted. In other words, thematic analysis can be quite time-consuming – but for a good reason. So, keep this in mind if you choose to use thematic analysis for your project and budget extra time for unexpected adjustments.

QDA Method #5: Grounded theory (GT)

Grounded theory is a powerful qualitative analysis method where the intention is to create a new theory (or theories) using the data at hand, through a series of “tests” and “revisions”. Strictly speaking, GT is more a research design type than an analysis method, but we’ve included it here as it’s often referred to as a method.

What’s most important with grounded theory is that you go into the analysis with an open mind and let the data speak for itself – rather than dragging existing hypotheses or theories into your analysis. In other words, your analysis must develop from the ground up (hence the name).

Let’s look at an example of GT in action.

Assume you’re interested in developing a theory about what factors influence students to watch a YouTube video about qualitative analysis. Using grounded theory, you’d start with this general overarching question about the given population (i.e., graduate students). First, you’d approach a small sample – for example, five graduate students in a department at a university. Ideally, this sample would be reasonably representative of the broader population. You’d interview these students to identify what factors lead them to watch the video.

After analysing the interview data, a general pattern could emerge. For example, you might notice that graduate students are more likely to watch a video about qualitative methods if they are just starting on their dissertation journey, or if they have an upcoming test about research methods.

From here, you’ll look for another small sample – for example, five more graduate students in a different department – and see whether this pattern holds true for them. If not, you’ll look for commonalities and adapt your theory accordingly. As this process continues, the theory develops. As we mentioned earlier, what’s important with grounded theory is that the theory develops from the data – not from some preconceived idea.

So, what are the drawbacks of grounded theory? Well, some argue that there’s a tricky circularity to grounded theory. For it to work, in principle, you should know as little as possible regarding the research question and population, so that you reduce the bias in your interpretation. However, in many circumstances, it’s also thought to be unwise to approach a research question without knowledge of the current literature. In other words, it’s a bit of a “chicken or the egg” situation.

Regardless, grounded theory remains a popular (and powerful) option. Naturally, it’s a very useful method when you’re researching a topic that is completely new or has very little existing research about it, as it allows you to start from scratch and work your way from the ground up.

QDA Method #6: Interpretive Phenomenological Analysis (IPA)

Interpretive. Phenomenological. Analysis. IPA. Try saying that three times fast…

Let’s just stick with IPA, okay?

IPA is designed to help you understand the personal experiences of a subject (for example, a person or group of people) concerning a major life event, an experience or a situation. This event or experience is the “phenomenon” that makes up the “P” in IPA. Such phenomena may range from relatively common events – such as motherhood, or being involved in a car accident – to those which are extremely rare – for example, someone’s personal experience in a refugee camp. So, IPA is a great choice if your research involves analysing people’s personal experiences of something that happened to them.

It’s important to remember that IPA is subject-centred. In other words, it’s focused on the experiencer. This means that, while you’ll likely use a coding system to identify commonalities, it’s important not to lose the depth of experience or meaning by trying to reduce everything to codes. Also, keep in mind that since your sample size will generally be very small with IPA, you often won’t be able to draw broad conclusions about the generalisability of your findings. But that’s okay as long as it aligns with your research aims and objectives.

Another thing to be aware of with IPA is personal bias. While researcher bias can creep into all forms of research, self-awareness is critically important with IPA, as the researcher’s own bias can have a major impact on the results. For example, a researcher who was a victim of a crime himself could insert his own feelings of frustration and anger into the way he interprets the experience of someone who was kidnapped. So, if you’re going to undertake IPA, you need to be very self-aware or you could muddy the analysis.

How to choose the right analysis method

In light of all of the qualitative analysis methods we’ve covered so far, you’re probably asking yourself the question, “How do I choose the right one?”

Much like all the other methodological decisions you’ll need to make, selecting the right qualitative analysis method largely depends on your research aims, objectives and questions. In other words, the best tool for the job depends on what you’re trying to build. For example:

- Perhaps your research aims to analyse the use of words and what they reveal about the intention of the storyteller and the cultural context of the time,

- Perhaps your research aims to develop an understanding of the unique personal experiences of people that have experienced a certain event, or

- Perhaps your research aims to develop insight regarding the influence of a certain culture on its members.

As you can probably see, each of these research aims is distinctly different, and therefore different analysis methods would be suitable for each one. For example, narrative analysis would likely be a good option for the first aim, while grounded theory wouldn’t be as relevant.

It’s also important to remember that each method has its own set of strengths, weaknesses and general limitations. No single analysis method is perfect. So, depending on the nature of your research, it may make sense to adopt more than one method (this is called triangulation). Keep in mind though that this will of course be quite time-consuming.

As we’ve seen, all of the qualitative analysis methods we’ve discussed make use of coding and theme-generating techniques, but the intent and approach of each analysis method differ quite substantially. So, it’s very important to come into your research with a clear intention before you decide which analysis method (or methods) to use.

Start by reviewing your research aims, objectives and research questions to assess what exactly you’re trying to find out – then select a qualitative analysis method that fits. Never pick a method just because you like it or have experience using it – your analysis method (or methods) must align with your broader research aims and objectives.

Let’s recap on QDA methods…

In this post, we looked at six popular qualitative data analysis methods:

- First, we looked at content analysis, a straightforward method that blends a little bit of quant into a primarily qualitative analysis.

- Then we looked at narrative analysis, which is about analysing how stories are told.

- Next up was discourse analysis – which is about analysing conversations and interactions.

- Then we moved on to thematic analysis – which is about identifying themes and patterns.

- From there, we dug into grounded theory – which is about starting from scratch with a specific question and using the data alone to build a theory in response to that question.

- And finally, we looked at IPA – which is about understanding people’s unique experiences of a phenomenon.

Of course, these aren’t the only options when it comes to qualitative data analysis, but they’re a great starting point if you’re dipping your toes into qualitative research for the first time.

If you’re still feeling a bit confused, consider our private coaching service, where we hold your hand through the research process to help you develop your best work.

87 Comments

Hi, may we use 2 data analysis methods in our qualitative research?

Thanks for your comment. Most commonly, one would use one type of analysis method, but it depends on your research aims and objectives.

Thanks, nicely explained. Can I ask: is qualitative content analysis the same as thematic analysis?

Thanks for your comment. No, QCA and thematic are two different types of analysis. This article might help clarify – https://onlinelibrary.wiley.com/doi/10.1111/nhs.12048

Hi Derek, What other theories/methods would you recommend when the data is a whole speech?

Very insightful. Please, which of these approaches could be used for research that tries to elicit students’ misconceptions about a particular concept?

What do we call a research data analysis method that one uses to advise on, or determine, the best accounting tools or techniques for a company to adopt?
