Your Data Guide

How to Perform Hypothesis Testing Using Python

Step into the intriguing world of hypothesis testing, where your natural curiosity meets the power of data to reveal truths!

This article is your key to unlocking how those everyday hunches—like guessing a group’s average income or figuring out who owns their home—can be thoroughly checked and proven with data.


I am going to take you by the hand and show you, in simple steps, how to use Python to explore a hypothesis about the average yearly income.

By the time we’re done, you’ll not only get the hang of creating and testing hypotheses but also how to use statistical tests on actual data.

Whether you’re an up-and-coming data scientist, someone with a knack for analysis, or simply keen on data, get ready to gain the skills to make informed decisions and turn insights into real-world actions.

Join me as we dive deep into the data, one hypothesis at a time!


What is a hypothesis, and how do you test it?

A hypothesis is like a guess or prediction about something specific, such as the average income or the percentage of homeowners in a group of people.

It’s based on theories, past observations, or questions that spark our curiosity.

For instance, you might predict that the average yearly income of potential customers is over $50,000 or that 60% of them own their homes.

To see if your guess is right, you gather data from a smaller group within the larger population and check if the numbers (like the average income, percentage of homeowners, etc.) from this smaller group match your initial prediction.

You also set a rule for how sure you need to be before trusting your findings, often accepting a 5% chance of wrongly rejecting your starting assumption. This threshold is called the level of significance (0.05), and it corresponds to a 95% confidence level.

There are two main types of hypotheses: the null hypothesis, which is your baseline saying there’s no change or difference, and the alternative hypothesis, which suggests there is a change or difference.

For example, if you start with the idea that the average yearly income of potential customers is $50,000 (the null hypothesis), the alternative could be that it’s not $50,000: it could be less or more, depending on what you’re trying to find out.

To test your hypothesis, you calculate a test statistic: a number that shows how far your sample data deviates from what the null hypothesis predicts.

How you calculate this depends on what you’re studying and the kind of data you have. For example, to check an average, you might use a formula that considers your sample’s average, the predicted average, the variation in your sample data, and how big your sample is.
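For a single sample mean, that formula is usually the one-sample t statistic. In the notation below (the symbols are introduced here for illustration), $\bar{x}$ is the sample mean, $\mu_0$ the hypothesized mean, $s$ the sample standard deviation, and $n$ the sample size:

$$
t = \frac{\bar{x} - \mu_0}{s / \sqrt{n}}
$$

The numerator measures how far the sample lands from the prediction, and the denominator scales that distance by the standard error, so a larger sample makes the same gap more convincing.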

This test statistic follows a known distribution (like the t-distribution or z-distribution), which helps you figure out the p-value.

The p-value tells you the probability of seeing a test statistic at least as extreme as yours if the null hypothesis were true.

A small p-value means your data strongly disagrees with the null hypothesis.

Finally, you decide on your hypothesis by comparing the p-value to your significance level.

If the p-value is smaller than or equal to the significance level, you reject the null hypothesis, meaning your data shows a significant difference that’s unlikely to be due to chance.

If the p-value is larger, you fail to reject the null hypothesis, suggesting your data doesn’t show a meaningful difference and any change might just be by chance.

We’ll go through an example that tests if the average annual income of prospective customers exceeds $50,000.

This process involves stating hypotheses, specifying a significance level, collecting and analyzing data, and drawing conclusions based on statistical tests.

Example: Testing a Hypothesis About Average Annual Income

Step 1: State the Hypotheses

Null Hypothesis (H0): The average annual income of prospective customers is $50,000.

Alternative Hypothesis (H1): The average annual income of prospective customers is more than $50,000.

Step 2: Specify the Significance Level

Significance Level: 0.05, meaning we accept a 5% chance of rejecting the null hypothesis when it is actually true (equivalently, a 95% confidence level).

Step 3: Collect Sample Data

We’ll use the ProspectiveBuyer table, assuming it's a random sample from the population.

This table has 2,059 entries, representing prospective customers' annual incomes.

Step 4: Calculate the Sample Statistic

In Python, we can use libraries like pandas and NumPy to calculate the sample mean and standard deviation.

SampleMean: 56,992.43

SampleSD: 32,079.16

SampleSize: 2,059
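A minimal sketch of this step is shown below. It assumes the table is loaded into a pandas DataFrame with an income column named `YearlyIncome`; that column name and the synthetic values are assumptions made for illustration, so the printed numbers will not match the figures above.

```python
import numpy as np
import pandas as pd

# Synthetic stand-in for the ProspectiveBuyer table (values are made up).
rng = np.random.default_rng(seed=0)
prospective_buyer = pd.DataFrame(
    {"YearlyIncome": rng.choice(np.arange(10_000, 170_001, 10_000), size=2_059)}
)

income = prospective_buyer["YearlyIncome"]

sample_mean = income.mean()
sample_sd = income.std(ddof=1)  # ddof=1 gives the sample (n - 1) standard deviation
sample_size = int(income.count())

print(f"SampleMean: {sample_mean:,.2f}")
print(f"SampleSD:   {sample_sd:,.2f}")
print(f"SampleSize: {sample_size:,}")
```

With real data, the DataFrame would come from the actual table, for example through `pd.read_csv` or `pd.read_sql`, rather than from the random generator.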

Step 5: Calculate the Test Statistic

We use the t-test formula to calculate how significantly our sample mean deviates from the hypothesized mean.

Python’s SciPy library can handle this calculation:

T-Statistic: 4.62
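As a cross-check, the one-sample t formula can be applied directly to the summary values from Step 4. The sketch below uses only the standard library. Plugging in those values gives a t-statistic of roughly 9.89 rather than the 4.62 quoted above, so at least one of the reported figures is probably a transcription slip. Either way, the statistic sits far out in the upper tail.

```python
import math

# Summary values reported in Step 4
sample_mean = 56_992.43
sample_sd = 32_079.16
sample_size = 2_059
hypothesized_mean = 50_000  # the value stated in H0

# Standard error of the mean, then the one-sample t statistic
standard_error = sample_sd / math.sqrt(sample_size)
t_statistic = (sample_mean - hypothesized_mean) / standard_error

print(f"Standard error: {standard_error:,.2f}")
print(f"T-Statistic:    {t_statistic:.2f}")
```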

Step 6: Calculate the P-Value

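The p-value is already calculated in the previous step: SciPy's ttest_1samp function returns both the test statistic and the p-value. Note that ttest_1samp reports a two-sided p-value by default. Because our alternative hypothesis is one-sided (more than $50,000), either pass alternative='greater' (available in SciPy 1.6 and later) or halve the two-sided p-value when the t-statistic is positive.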

P-Value = 0.0000021
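Steps 5 to 7 might be sketched as follows. The incomes are simulated stand-ins rather than the real ProspectiveBuyer data, so the output will differ from the figures above. The `alternative` keyword assumes SciPy 1.6 or later.

```python
import numpy as np
from scipy import stats

rng = np.random.default_rng(seed=1)
# Hypothetical incomes; a real analysis would load the ProspectiveBuyer data instead.
incomes = rng.normal(loc=57_000, scale=32_000, size=2_059)

# alternative="greater" tests H1: mean > 50,000 (requires SciPy 1.6+).
t_statistic, p_value = stats.ttest_1samp(incomes, popmean=50_000, alternative="greater")

alpha = 0.05
print(f"T-Statistic: {t_statistic:.2f}")
print(f"P-Value:     {p_value:.3g}")
print("Reject H0" if p_value <= alpha else "Fail to reject H0")
```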

Step 7: State the Statistical Conclusion

We compare the p-value with our significance level to decide on our hypothesis:

Since the p-value is less than 0.05, we reject the null hypothesis in favor of the alternative.

Conclusion:

There’s strong evidence to suggest that the average annual income of prospective customers is indeed more than $50,000.

This example illustrates how Python can be a powerful tool for hypothesis testing, enabling us to derive insights from data through statistical analysis.

How to Choose the Right Test Statistic

Choosing the right test statistic is crucial and depends on what you’re trying to find out, the kind of data you have, and how that data is spread out.

Here are some common types of test statistics and when to use them:

T-test statistic:

This one’s great for checking out the average of a group when your data follows a normal distribution or when you’re comparing the averages of two such groups.

The t-test follows a special curve called the t-distribution. This curve looks a lot like the normal bell curve but with heavier tails, which means more room for extreme values.

The t-distribution’s shape changes based on something called degrees of freedom, which is a fancy way of talking about your sample size and how many groups you’re comparing.

Z-test statistic:

Use this when you’re looking at the average of a normally distributed group or the difference between two group averages, and you already know the population standard deviation.

The z-test follows the standard normal distribution, which is your classic bell curve centered at zero and spreading out evenly on both sides.

Chi-square test statistic:

This is your go-to for checking if there’s a difference in variability within a normally distributed group or if two categorical variables are related.

The chi-square statistic follows its own distribution, which leans to the right and gets its shape from the degrees of freedom (basically, how many categories or groups you’re comparing).

F-test statistic:

This one helps you compare the variability between two groups or see if the averages of more than two groups are all the same, assuming all groups are normally distributed.

The F-test follows the F-distribution, which is also right-skewed and has two types of degrees of freedom that depend on how many groups you have and the size of each group.

In simple terms, the test you pick hinges on what you’re curious about, whether your data fits the normal curve, and if you know certain specifics, like the population’s standard deviation.

Each test has its own special curve and rules based on your sample’s details and what you’re comparing.
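To make two of these choices concrete, here is a small sketch using SciPy: a chi-square test of independence for two categorical variables, and a one-way ANOVA (an F-test) for the means of three groups. All counts and measurements are invented for illustration.

```python
import numpy as np
from scipy import stats

# Chi-square test of independence on a 2x2 table of counts
# (rows: owns home yes/no, columns: two customer segments; numbers invented).
observed = np.array([[30, 20],
                     [15, 35]])
chi2, chi2_p, dof, expected = stats.chi2_contingency(observed)
print(f"chi-square = {chi2:.2f}, dof = {dof}, p = {chi2_p:.4f}")

# One-way ANOVA (an F-test) comparing the means of three invented groups.
group_a = [52, 55, 49, 60, 58]
group_b = [61, 64, 59, 66, 63]
group_c = [50, 47, 53, 49, 51]
f_stat, f_p = stats.f_oneway(group_a, group_b, group_c)
print(f"F = {f_stat:.2f}, p = {f_p:.4f}")
```

The one-sample t-test from the income example fits the same pattern: pick the function that matches the question, then read off the statistic and the p-value.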


Hypothesis Testing with Python

Learn how to plan, implement, and interpret different kinds of hypothesis tests in Python.


About this course

In this course, you’ll learn to plan, implement, and interpret a hypothesis test in Python. Hypothesis testing is used to address questions about a population based on a subset from that population. For example, A/B testing is a framework for learning about consumer behavior based on a small sample of consumers.

This course assumes some preexisting knowledge of Python, including the NumPy and pandas libraries.

Introduction to Hypothesis Testing

Find out what you’ll learn in this course and why it’s important.

Hypothesis testing: Testing a Sample Statistic

Learn about hypothesis testing and implement binomial and one-sample t-tests in Python.

Hypothesis Testing: Testing an Association

Learn about hypothesis tests that can be used to evaluate whether there is an association between two variables.

Experimental Design

Learn to design an experiment to make a decision using a hypothesis test.

Hypothesis Testing Projects

Practice your hypothesis testing skills with some additional projects!


Frequently asked questions about Hypothesis Testing with Python

What is hypothesis testing?

After drawing conclusions from data, you have to make sure they are correct. Hypothesis testing uses statistical methods to validate those results.


Statistical Hypothesis Testing: A Comprehensive Guide

We’ve all heard it: “go to college to get a good job.” The assumption is that higher education leads straight to higher incomes. Elite Indian institutes like the IITs and IIMs are even judged based on the average starting salaries of their graduates. But is this direct connection between schooling and income actually true?

Intuitively, it seems believable. But how can we really prove this assumption that more school = more money? Is there hard statistical evidence either way? Turns out, there are methods to scientifically test widespread beliefs like this – what statisticians call hypothesis testing.

In this article, we’ll dig into the concept of hypothesis testing and the tools to rigorously question conventional wisdom: null and alternate hypotheses, one and two-tailed tests, paired sample tests, and more.

Statistical hypothesis testing allows researchers to make inferences about populations based on sample data. It involves setting up a null hypothesis, choosing a confidence level, calculating a p-value, and conducting tests such as two-tailed, one-tailed, or paired sample tests to draw conclusions.

What is Hypothesis Testing?

Statistical Hypothesis Testing is a method used to make inferences about a population based on sample data. Before we move ahead and understand what Hypothesis Testing is, we need to understand some basic terms.

Null Hypothesis

The Null Hypothesis is generally where we start our journey. Null Hypotheses are statements that are generally accepted or statements that you want to challenge. Since it is generally accepted that income level is positively correlated with quality of education, this will be our Null Hypothesis. It is denoted by H0.

H0: Income levels are positively correlated with quality of education.

Alternate Hypothesis

The Alternate Hypothesis is the opposite of the Null Hypothesis. It is what we want to prove as a researcher and is not generally accepted by society. It is denoted by Ha. The alternate hypothesis for the example above is given below.

Ha: Income levels are negatively correlated with the quality of education.

Confidence Level (1-α)

The confidence level is the proportion of times, over repeated sampling, that an interval built this way would contain the true parameter value. The most common confidence levels are 95% and 99%. A 95% confidence level corresponds to a significance level α of 0.05, meaning we accept a 5% chance of rejecting a null hypothesis that is actually true. It is denoted by 1-α.

p-value (p)

The p-value represents the probability of obtaining test results at least as extreme as the results actually observed, under the assumption that the null hypothesis is correct. A lower p-value means the observed result would be less likely if the null hypothesis were true. If our p-value is less than α, we reject the null hypothesis; otherwise we fail to reject it.

Types of Hypothesis Tests

Since we are equipped with the basic terms, let’s go ahead and conduct some hypothesis tests.

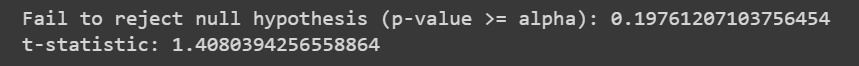

Conducting a Two-Tailed Hypothesis Test

In a two-tailed hypothesis test, the effect can lie in either direction, i.e. the true value may be either more than or less than the hypothesized value. For example, a medical researcher testing out the effects of a placebo wants to know whether it increases or decreases blood pressure. Let’s look at its Python implementation.

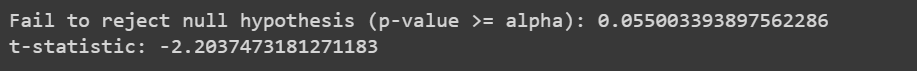

In the above code, we want to know if the group study method is an effective way to study or not. Therefore our null and alternate hypotheses are as follows.

- H0: The group study method is not an effective way to study.

- Ha: The group study method is an effective way to study.

Since the p-value is greater than α, we fail to reject the null hypothesis. In other words, the data does not provide enough evidence that the group study method is an effective way to study.
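A two-tailed one-sample t-test along these lines might look like the following sketch. The marks and the benchmark mean of 70 are invented for illustration and are not the data behind the result quoted above.

```python
import numpy as np
from scipy import stats

# Invented marks for ten students who used the group study method.
group_study_marks = np.array([72, 68, 75, 70, 66, 74, 71, 69, 73, 67])
benchmark_mean = 70  # assumed class average without group study
alpha = 0.05

# The default alternative is "two-sided": marks may be higher or lower.
t_stat, p_value = stats.ttest_1samp(group_study_marks, popmean=benchmark_mean)

print(f"t = {t_stat:.3f}, p = {p_value:.3f}")
if p_value < alpha:
    print("Reject H0")
else:
    print("Fail to reject H0")
```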


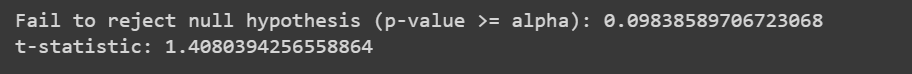

In a one-tailed hypothesis test, we have certain expectations in which way our observed value will move i.e. higher or lower. For example, our researchers want to know if a particular medicine lowers our cholesterol level. Let’s look at its Python code.

Here our null and alternate hypotheses are given below.

- H0: The group study method does not increase our marks.

- Ha: The group study method increases our marks.

Since the p-value is greater than α, we fail to reject the null hypothesis. The data does not provide enough evidence that the group study method increases our marks.
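A one-tailed version can be sketched with the `alternative` keyword of `ttest_1samp`, which assumes SciPy 1.6 or later. The marks and benchmark are again invented. When the t-statistic is positive, the one-sided p-value is half of the two-sided one.

```python
import numpy as np
from scipy import stats

marks = np.array([72, 68, 75, 70, 66, 74, 71, 69, 73, 67])  # invented marks
benchmark_mean = 70  # assumed average without group study
alpha = 0.05

# alternative="greater" puts the whole rejection region in the upper tail
# (the keyword needs SciPy 1.6 or later).
t_stat, p_one_sided = stats.ttest_1samp(marks, popmean=benchmark_mean, alternative="greater")
_, p_two_sided = stats.ttest_1samp(marks, popmean=benchmark_mean)

print(f"t = {t_stat:.3f}")
print(f"one-sided p = {p_one_sided:.3f}, two-sided p = {p_two_sided:.3f}")
print("Reject H0" if p_one_sided < alpha else "Fail to reject H0")
```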

A paired sample test compares two sets of observations taken on the same subjects, such as measurements before and after a treatment, and tests whether the average difference is zero. For example, we need to know whether the reaction time of our participants increases after consuming caffeine. Let’s look at another example with a Python code as well.

Similar to the above hypothesis tests, we consider the group study method here as well. Our null and alternate hypotheses are as follows.

- H0: The group study method does not provide us with significant differences in our scores.

- Ha: The group study method gives us significant differences in our scores.

Since the p-value is greater than α, we fail to reject the null hypothesis.
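A paired test of this kind can be sketched with `scipy.stats.ttest_rel`. The before and after scores below are invented for the same eight hypothetical students.

```python
import numpy as np
from scipy import stats

# Invented scores for the same eight students before and after group study.
before = np.array([65, 70, 58, 72, 68, 61, 75, 66])
after = np.array([66, 69, 60, 73, 67, 63, 74, 68])
alpha = 0.05

# ttest_rel works on the per-student differences (after - before).
t_stat, p_value = stats.ttest_rel(after, before)

print(f"mean difference = {np.mean(after - before):.2f}")
print(f"t = {t_stat:.3f}, p = {p_value:.3f}")
print("Reject H0" if p_value < alpha else "Fail to reject H0")
```

Because each student serves as their own control, the paired test is equivalent to a one-sample t-test on the differences against zero.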

Here you go! Now you are equipped to perform statistical hypothesis testing on different samples and draw out different conclusions. You need to collect data and decide on null and alternate hypotheses. Furthermore, based on the predetermined hypothesis, you need to decide on which type of test to perform. Statistical hypothesis testing is one of the most powerful tools in the world of research.

Now that you have a grasp on statistical hypothesis testing, how will you apply these concepts to your own research or data analysis projects? What hypotheses are you eager to test?


What Is Hypothesis Testing? Types and Python Code Example

Curiosity has always been a part of human nature. Since the beginning of time, this has been one of the most important tools for birthing civilizations. Still, our curiosity grows — it tests and expands our limits. Humanity has explored the plains of land, water, and air. We've built underwater habitats where we could live for weeks. Our civilization has explored various planets. We've explored land to an unlimited degree.

These things were possible because humans asked questions and searched until they found answers. However, for us to get these answers, a proven method must be used and followed through to validate our results. Historically, philosophers assumed the earth was flat and you would fall off when you reached the edge. While philosophers like Aristotle argued that the earth was spherical based on the formation of the stars, they could not prove it at the time.

This is because they didn't have adequate resources to explore space or mathematically prove Earth's shape. It was a Greek mathematician named Eratosthenes who calculated the earth's circumference with incredible precision. He used scientific methods to show that the Earth was not flat. Since then, other methods have been used to prove the Earth's spherical shape.

When there are questions or statements that are yet to be tested and confirmed based on some scientific method, they are called hypotheses. Basically, we have two types of hypotheses: null and alternate.

A null hypothesis is one's default belief or argument about a subject matter. In the case of the earth's shape, the null hypothesis was that the earth was flat.

An alternate hypothesis is a belief or argument a person might try to establish. Aristotle and Eratosthenes argued that the earth was spherical.

Other examples of a random alternate hypothesis include:

- The weather may have an impact on a person's mood.

- More people wear suits on Mondays compared to other days of the week.

- Children are more likely to be brilliant if both parents are in academia, and so on.

What is Hypothesis Testing?

Hypothesis testing is the act of testing whether a hypothesis or inference is true. When an alternate hypothesis is introduced, we test it against the null hypothesis to know which is correct. Let's use a plant experiment by a 12-year-old student to see how this works.

The hypothesis is that a plant will grow taller when given a certain type of fertilizer. The student takes two samples of the same plant, fertilizes one, and leaves the other unfertilized. He measures the plants' height every few days and records the results in a table.

After a week or two, he compares the final height of both plants to see which grew taller. If the plant given fertilizer grew taller, the hypothesis is supported. If not, the hypothesis is not supported. This simple experiment shows how to form a hypothesis, test it experimentally, and analyze the results.

In hypothesis testing, there are two types of error: Type I and Type II.

When we reject the null hypothesis in a case where it is correct, we've committed a Type I error. Type II errors occur when we fail to reject the null hypothesis when it is incorrect.

In our plant experiment above, if fertilizer in truth has no effect on growth but the student concludes from his measurements that it helps, he has committed a Type I error.

However, if fertilizer really does help but the student concludes that both plants grew the same, or that the one without fertilizer grew taller, he has committed a Type II error because he has failed to reject a false null hypothesis.
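One way to see what a Type I error rate means is to simulate many experiments in which the null hypothesis is true and count how often a test rejects it anyway. The sketch below does this with invented plant heights and a two-sample t-test. The rejection rate should land near the chosen significance level of 0.05.

```python
import numpy as np
from scipy import stats

rng = np.random.default_rng(seed=0)
alpha = 0.05
n_experiments = 2_000

false_positives = 0
for _ in range(n_experiments):
    # The null hypothesis is true here: both groups share the same distribution.
    fertilized = rng.normal(loc=20.0, scale=3.0, size=30)
    unfertilized = rng.normal(loc=20.0, scale=3.0, size=30)
    _, p_value = stats.ttest_ind(fertilized, unfertilized)
    if p_value < alpha:
        false_positives += 1  # rejecting a true null is a Type I error

type_i_rate = false_positives / n_experiments
print(f"Observed Type I error rate: {type_i_rate:.3f}")
```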

What are the Steps in Hypothesis Testing?

The following steps explain how we can test a hypothesis:

Step #1 - Define the Null and Alternative Hypotheses

Before making any test, we must first define what we are testing and what the default assumption is about the subject. In this article, we'll be testing if the average weight of 10-year-old children is more than 32kg.

Our null hypothesis is that 10-year-old children weigh 32 kg on average. Our alternate hypothesis is that the average weight is more than 32 kg. H0 denotes a null hypothesis, while H1 denotes an alternate hypothesis.

Step #2 - Choose a Significance Level

The significance level is the threshold we use to decide whether a result is statistically significant. It gives credibility to our hypothesis test by ensuring we are not just luck-dependent but have enough evidence to support our claims. We usually set our significance level before conducting our tests. The quantity we compare against it is known as the p-value.

A lower p-value means that there is stronger evidence against the null hypothesis. A significance level of 0.05 is widely accepted in most fields of science. A p-value is not the probability that a hypothesis is true; it is the probability of seeing a result at least as extreme as ours if the null hypothesis were true. For our test, our significance level will be 0.05.

Step #3 - Collect Data and Calculate a Test Statistic

You can obtain your data from online data stores or conduct your research directly. Data can be scraped or researched online. The methodology might depend on the research you are trying to conduct.

We can calculate our test using any of the appropriate hypothesis tests. This can be a T-test, Z-test, Chi-squared, and so on. There are several hypothesis tests, each suiting different purposes and research questions. In this article, we'll use the T-test to run our hypothesis, but I'll explain the Z-test, and chi-squared too.

The T-test compares means when we don't know the population standard deviation: either a sample mean against a fixed value (one-sample) or the means of two groups (two-sample). It's a parametric test, meaning it makes assumptions about the distribution of the data. These assumptions include that the data is approximately normally distributed and, for the standard two-sample version, that the variances of the two groups are equal. In a more simple and practical sense, imagine that we have test scores in a class for males and females, but we don't know how different or similar these scores are. We can use a t-test to see if there's a real difference.

The Z-test is used for the same kinds of comparison when the population standard deviation is known. It is also a parametric test and assumes that the data is normally distributed, or that the sample is large enough for the sample mean to be approximately normal. Because the population standard deviations are supplied, it does not need to estimate them from the sample. In our class test example, if we already know how spread out the scores are in both groups, we can use the z-test to see if there's a difference in the average scores.

The Chi-squared test is used to test for an association between two or more categorical variables. It is usually classed as a non-parametric test because it does not assume the data follow a particular distribution such as the normal, although it does assume independent observations and reasonably large expected counts. It can be used to test a variety of hypotheses, including whether two or more groups have equal proportions.
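SciPy has no dedicated one-sample z-test function, but the statistic is simple to compute by hand and convert into a p-value with the standard normal distribution. The sketch below uses invented summary figures and assumes the population standard deviation is known.

```python
import math
from scipy import stats

# Invented summary figures: 50 test scores with a known population SD.
sample_mean = 74.0
hypothesized_mean = 72.0
population_sd = 8.0  # assumed known, which is what makes a z-test applicable
n = 50

z_stat = (sample_mean - hypothesized_mean) / (population_sd / math.sqrt(n))
p_two_sided = 2 * stats.norm.sf(abs(z_stat))  # sf is the upper-tail probability

print(f"z = {z_stat:.3f}, two-sided p = {p_two_sided:.4f}")
```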

Step #4 - Decide on the Null Hypothesis Based on the Test Statistic and Significance Level

After conducting our test and calculating the test statistic and its p-value, we compare the p-value to the predetermined significance level. If the p-value is below the significance level (equivalently, if the test statistic falls beyond the critical value), we reject the null hypothesis, indicating that there is sufficient evidence to support our alternative hypothesis.

On the other hand, if the p-value is not below the significance level, we fail to reject the null hypothesis, signifying that we do not have enough statistical evidence to conclude in favor of the alternative hypothesis.

Step #5 - Interpret the Results

Depending on the decision made in the previous step, we can interpret the result in the context of our study and the practical implications. For our case study, we can interpret whether we have significant evidence to support our claim that the average weight of 10 year old children is more than 32kg or not.

For our test, we are generating random dummy data for the weight of the children. We'll use a t-test to evaluate whether our hypothesis is correct or not.

For a better understanding, let's look at what each block of code does.

The first block is the import statement, where we import numpy and scipy.stats. NumPy is a Python library used for scientific computing. It has a large library of functions for working with arrays. SciPy is a library for scientific and mathematical computing. It has a stats module for performing statistical functions, and that's what we'll be using for our t-test.

The weights of the children were generated at random since we aren't working with an actual dataset. The random module within the NumPy library provides a function for generating random integers, which is randint.

The randint function takes three arguments. The first (20) is the lower bound of the random numbers to be generated. The second (40) is the upper bound, which is exclusive, so the generated values range from 20 to 39. The third (100) specifies the number of random integers to generate. That is, we are generating random weight values for 100 children. In real circumstances, these weight samples would have been obtained by taking the weight of the required number of children needed for the test.

Using the code above, we declared our null and alternate hypotheses stating the average weight of a 10-year-old in both cases.

t_stat and p_value are the variables in which we'll store the results of our function call. stats.ttest_1samp is the function that calculates our test. It takes two arguments: the first is the data variable that stores the array of weights for children, and the second (32) is the value against which we'll test the mean of our array of weights, or of our dataset in cases where we are using a real-world dataset.

The code above prints both values for t_stat and p_value.

Lastly, we evaluated our p_value against our significance value, which is 0.05. If our p_value is less than 0.05, we reject the null hypothesis. Otherwise, we fail to reject the null hypothesis. Below is the output of this program. Our null hypothesis was rejected.
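Putting the blocks described above together, the program might look like the sketch below. It is a reconstruction from the description rather than the original listing, and the seed is an addition so the run is repeatable.

```python
import numpy as np
from scipy import stats

np.random.seed(0)  # seed added here only so the sketch is repeatable

# 100 random integer weights from 20 up to (but not including) 40
data = np.random.randint(20, 40, 100)

null_mean = 32  # H0: the average weight is 32 kg; H1: it is more than 32 kg

# One-sample t-test of the sample mean against 32 (two-sided by default)
t_stat, p_value = stats.ttest_1samp(data, null_mean)

print(f"t_stat: {t_stat:.3f}")
print(f"p_value: {p_value:.4f}")

if p_value < 0.05:
    print("Reject the null hypothesis")
else:
    print("Fail to reject the null hypothesis")
```

One caveat: integers drawn uniformly from 20 to 39 average about 29.5, so a rejection from the default two-sided test most likely reflects a mean below 32 rather than above it. To test the alternate hypothesis as stated, pass `alternative="greater"` to `ttest_1samp` (SciPy 1.6 or later).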

In this article, we discussed the importance of hypothesis testing. We highlighted how science has advanced human knowledge and civilization through formulating and testing hypotheses.

We discussed Type I and Type II errors in hypothesis testing and how they underscore the importance of careful consideration and analysis in scientific inquiry. It reinforces the idea that conclusions should be drawn based on thorough statistical analysis rather than assumptions or biases.

We also generated a sample dataset using the relevant Python libraries and used the needed functions to calculate and test our alternate hypothesis.


What Is Hypothesis Testing in Python: A Hands-On Tutorial

Jaydeep Karale

Posted On: June 5, 2024

In software testing, there is an approach known as property-based testing that leverages the concept of formal specification of code behavior and focuses on asserting properties that hold true for a wide range of inputs rather than individual test cases.

Python is an open-source programming language, and Hypothesis is a third-party Python library for property-based testing. Hypothesis testing in Python provides a framework for generating diverse and random test data, allowing development and testing teams to thoroughly test their code against a broad spectrum of inputs.

In this blog, we will explore the fundamentals of Hypothesis testing in Python using Selenium and Playwright. We’ll learn various aspects of Hypothesis testing, from basic usage to advanced strategies, and demonstrate how it can improve the robustness and reliability of the codebase.

TABLE OF CONTENTS

- What Is a Hypothesis Library?

- Decorators in Hypothesis

- Strategies in Hypothesis

- Setting Up Python Environment for Hypothesis Testing

- How to Perform Hypothesis Testing in Python

- Hypothesis Testing in Python With Selenium and Playwright

- How to Run Hypothesis Testing in Python With Date Strategy?

- How to Write Composite Strategies in Hypothesis Testing in Python?

- Frequently Asked Questions (FAQs)

Hypothesis is a property-based testing library that automates test data generation based on properties or invariants defined by the developers and testers.

In property-based testing, instead of specifying individual test cases, developers define general properties that the code should satisfy. Hypothesis then generates a wide range of input data to test these properties automatically.

Property-based testing using Hypothesis allows developers and testers to focus on defining the behavior of their code rather than writing specific test cases, resulting in more comprehensive testing coverage and the discovery of edge cases and unexpected behavior.

Writing property-based tests usually consists of deciding on guarantees our code should make – properties that should always hold, regardless of what the world throws at the code.

Examples of such guarantees can be:

- Your code shouldn’t throw an exception or should only throw a particular type of exception (this works particularly well if you have a lot of internal assertions).

- If you delete an object, it is no longer visible.

- If you serialize and then deserialize a value, you get the same value back.
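The last guarantee in the list can be written as a property in a few lines. The sketch below assumes the `hypothesis` package is installed and uses JSON as the serialization format. The test name is made up for illustration.

```python
import json

from hypothesis import given, strategies as st


@given(st.lists(st.integers()))
def test_json_round_trip(values):
    # Property: serializing and then deserializing returns the original value.
    assert json.loads(json.dumps(values)) == values


# Calling the decorated function runs it against many generated inputs.
test_json_round_trip()
```

Under pytest the function would be collected and run automatically, so the explicit call at the end is only needed when running the file as a plain script.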

Before we proceed further, it’s worthwhile to understand decorators in Python a bit since the Hypothesis library exposes decorators that we need to use to write tests.

In Python, decorators are a powerful feature that allows you to modify or extend the behavior of functions or classes without changing their source code. Decorators are essentially functions themselves, which take another function (or class) as input and return a new function (or class) with added functionality.

Decorators are denoted by the @ symbol followed by the name of the decorator function placed directly before the definition of the function or class to be modified.

Let us understand this with the help of an example:
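A minimal sketch of such a decorator is shown here. The current_user dictionary is a hypothetical stand-in for a real session or user store, and the exact behavior on failure (raising PermissionError) is an assumption made for illustration.

```python
import functools

# Hypothetical stand-in for a real session or user store.
current_user = {"name": "alice", "is_authenticated": True}


def authenticate(func):
    """Run func only when the current user is authenticated."""

    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        if not current_user["is_authenticated"]:
            raise PermissionError("User is not authenticated")
        return func(*args, **kwargs)

    return wrapper


@authenticate
def create_post(title):
    return f"Post '{title}' created by {current_user['name']}"


print(create_post("Hello, Hypothesis"))
```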

In the example above, only authenticated users are allowed to create_post() . The logic to check authentication is wrapped in its own function, authenticate() .

This function can now be applied by placing @authenticate directly above any function that needs it, and Python will automatically run the code in authenticate() before calling that function.

If we no longer need the authentication logic in the future, we can simply remove the @authenticate line without disturbing the core logic. Thus, decorators are a powerful construct in Python that allows plug-n-play of repetitive logic into any function/method.

Now that we understand the concept of Python decorators, let us look at the decorators that Hypothesis provides.

Hypothesis @given Decorator

This decorator turns a test function that accepts arguments into a randomized test. It serves as the main entry point to Hypothesis.

The @given decorator can be used to specify which arguments of a function should be parameterized over. We can use either positional or keyword arguments, but not a mixture of both.

    hypothesis.given(*_given_arguments, **_given_kwargs)

Some valid declarations of the @given decorator are:

    @given(integers(), integers())
    def a(x, y):
        pass

    @given(integers())
    def b(x, y):
        pass

    @given(y=integers())
    def c(x, y):
        pass

    @given(x=integers())
    def d(x, y):
        pass

    @given(x=integers(), y=integers())
    def e(x, **kwargs):
        pass

    @given(x=integers(), y=integers())
    def f(x, *args, **kwargs):
        pass

    class SomeTest(TestCase):
        @given(integers())
        def test_a_thing(self, x):
            pass

Some invalid declarations of @given are:

    @given(integers(), integers(), integers())
    def g(x, y):
        pass

    @given(integers())
    def h(x, *args):
        pass

    @given(integers(), x=integers())
    def i(x, y):
        pass

    @given()
    def j(x, y):
        pass

Hypothesis @example Decorator

When writing production-grade applications, the ability of Hypothesis to generate a wide range of input test data plays a crucial role in ensuring robustness.

However, there are certain inputs or scenarios that the testing team might deem mandatory for every test run. For such cases, Hypothesis provides the @example decorator, which lets us specify values that we always want to be tested. The @example decorator works for all strategies.

Let’s understand by tweaking the factorial test example.
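A sketch of what such a tweaked test could look like follows. It assumes the factorial() function used elsewhere in this blog and repeats it here so the snippet runs on its own; integer division is used so the comparison stays exact.

```python
from hypothesis import example, given, strategies as st


def factorial(num: int) -> int:
    """Return num! for a non-negative integer."""
    if num < 0:
        raise ValueError("Input must be >= 0")
    fact = 1
    for i in range(1, num + 1):
        fact *= i
    return fact


@given(st.integers(min_value=1, max_value=30))
@example(41)  # always tested, even though it lies outside the generated range
def test_factorial(num: int):
    # Property: n! divided by (n - 1)! is n.
    assert factorial(num) // factorial(num - 1) == num
```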

The above test will always run for the input value 41, along with the other test data generated by the Hypothesis st.integers() strategy.

By now, we understand that the crux of Hypothesis is to test a function against a wide range of inputs. These inputs are generated automatically, and Hypothesis lets us configure the range of inputs. Under the hood, strategies take care of generating this test data with the correct data type.

Hypothesis offers a wide range of strategies, such as integers, text, booleans, and datetimes. For more complex scenarios, which we will see a bit later in this blog, Hypothesis also lets us set up composite strategies.

While not exhaustive, here is a tabular summary of strategies available as part of the Hypothesis library.

| Strategy | Description |
|---|---|
| st.none() | Generates None values. |
| st.booleans() | Generates boolean values (True or False). |
| st.integers() | Generates integer values. |
| st.floats() | Generates floating-point values. |
| st.text() | Generates unicode text strings. |
| st.characters() | Generates single unicode characters. |
| st.lists() | Generates lists of elements. |
| st.tuples() | Generates tuples of elements. |
| st.dictionaries() | Generates dictionaries with keys and values drawn from the given strategies. |
| st.sets() | Generates sets of elements. |
| st.binary() | Generates binary data. |
| st.datetimes() | Generates datetime objects. |
| st.timedeltas() | Generates timedelta objects. |
| st.one_of() | Generates values from any one of the given strategies. |
| st.sampled_from() | Chooses values from a given sequence. |
| st.dates() | Generates date objects. |
| st.just() | Always generates the single given value. |
| st.from_regex() | Generates strings that match a given regular expression. |
| st.uuids() | Generates UUID objects. |
| st.complex_numbers() | Generates complex numbers. |
| st.fractions() | Generates fraction objects. |
| st.builds() | Builds objects using a provided constructor and strategy for each argument. |
| st.data() | Lets the test body draw additional values interactively. |
| st.shared() | Generates values that are shared between different parts of a test. |
| st.recursive() | Generates recursively structured data. |
| st.composite | Builds a strategy whose values are based on draws from other strategies. |
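Strategies can be nested and combined freely. The short sketch below is an illustration with hypothetical test names, using only the lists(), integers(), sampled_from(), and booleans() strategies from hypothesis.strategies.

```python
from hypothesis import given, strategies as st


@given(st.lists(st.integers()))
def test_sorting_is_idempotent(values):
    # lists() wraps another strategy to produce lists of its values.
    once = sorted(values)
    assert sorted(once) == once
    assert len(once) == len(values)


@given(st.sampled_from(["chrome", "firefox", "edge"]), st.booleans())
def test_browser_label(browser, headless):
    # Each positional strategy feeds one test argument.
    label = f"{browser}-{'headless' if headless else 'headed'}"
    assert label.startswith(browser)
```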

Let’s see how to set up a test environment to perform Hypothesis testing in Python.

- Create a separate virtual environment for this project using Python’s built-in venv module, for example with python -m venv followed by the environment name.

- Activate the newly created virtual environment using the activate script present within the environment.

- Install the Hypothesis library necessary for property-based testing using the pip install hypothesis command. The installed package can be viewed using the command pip list. When writing this blog, the latest version of Hypothesis is 6.102.4. For this article, we have used the Hypothesis version 6.99.6.

- Install python-dotenv , pytest, Playwright, and Selenium packages which we will need to run the tests on the cloud. We will talk about this in more detail later in the blog.

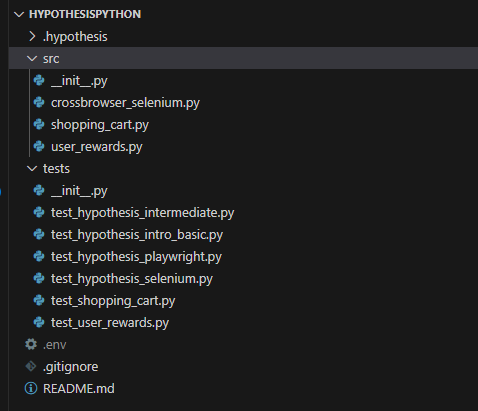

Our final project structure setup looks like below:

With the setup done, let us now understand Hypothesis testing in Python with various examples, starting with the introductory one and then working toward more complex ones.


Let’s now start writing tests to understand how we can leverage the Hypothesis library to perform Python automation.

For this, let’s look at one test scenario to understand Hypothesis testing in Python.

Test Scenario:

Implementation:

This is what the initial implementation of the function looks like:

    def factorial(num: int) -> int:
        # Factorial is undefined for negative numbers
        if num < 0:
            raise ValueError("Input must be >= 0")
        fact = 1
        for i in range(1, num + 1):
            fact *= i
        return fact

It takes in an integer as input. If the input is negative, it raises an error; if not, it uses the range() function to generate the numbers from 1 to num, iterates over them to calculate the factorial, and returns it.

Let’s now write a test using the Hypothesis library to test the above function:

    from hypothesis import given, strategies as st

    @given(st.integers(min_value=1, max_value=30))
    def test_factorial(num: int):
        fact_num_result = factorial(num)
        fact_num_minus_one_result = factorial(num - 1)
        result = fact_num_result / fact_num_minus_one_result
        assert num == result

Code Walkthrough:

Let’s now understand the step-by-step code walkthrough for Hypothesis testing in Python.

Step 1: From the Hypothesis library, we import the given decorator and the strategies module.

Step 2: Using the imported given and strategies, we set our test strategy of passing integer inputs within the range of 1 to 30 to the function under test using the min_value and max_value arguments.

Step 3: We write the actual test_factorial where the integer generated by our strategy is passed automatically by Hypothesis into the value num.

Using this value we call the factorial function once for value num and num – 1.

Next, we divide the factorial of num by the factorial of num -1 and assert if the result of the operation is equal to the original num.

Test Execution:

Let’s now execute our Hypothesis test using the pytest -v -k "test_factorial" command.

And Hypothesis confirms that our function works for the given set of inputs, i.e., for integers from 1 to 30.

We can also view detailed statistics of the Hypothesis run by passing the --hypothesis-show-statistics argument to the pytest command:

    pytest -v --hypothesis-show-statistics -k "test_factorial"

The difference between the reuse and generate phase in the output above is explained below:

- Reuse Phase: During the reuse phase, Hypothesis replays examples saved from previous runs, such as inputs that failed earlier. Shrinking a failing example down to a minimal failing case is handled by a separate shrink phase.

This phase typically has a very short runtime, as it only involves replaying existing test data. The output provides statistics about the typical runtimes and the number of passing, failing, and invalid examples encountered during this phase.

- Generate Phase: During the generate phase, Hypothesis generates new test data based on the defined strategies. This phase involves generating a wide range of inputs to test the properties defined by the developer.

The output provides statistics about the typical runtimes and the number of passing, failing, and invalid examples generated during this phase.

While this helped us understand what passing tests look like with Hypothesis, it’s also worthwhile to understand how Hypothesis can catch bugs in the code.

Let’s rewrite the factorial() function with an obvious bug, i.e., remove the check for when the input value is negative.

    def factorial(num: int) -> int:
        # if num < 0:
        #     raise ValueError("Number must be >= 0")
        fact = 1
        for i in range(1, num + 1):
            fact *= i
        return fact

We also tweak the test to remove the min_value and max_value arguments.

    @given(st.integers())
    def test_factorial(num: int):
        fact_num_result = factorial(num)
        fact_num_minus_one_result = factorial(num - 1)
        result = int(fact_num_result / fact_num_minus_one_result)
        assert num == result

Let us now rerun the test with the same command:

    pytest -v --hypothesis-show-statistics -k test_factorial

We can clearly see how Hypothesis has caught the bug immediately, which is shown in the above output. Hypothesis presents the input that resulted in the failing test under the Falsifying example section of the output.

So far, we’ve performed Hypothesis testing locally. This works nicely for unit tests, but when setting up automation for building more robust and resilient test suites, we can leverage a cloud grid like LambdaTest that supports automation testing tools like Selenium and Playwright.

LambdaTest is an AI-powered test orchestration and execution platform that enables developers and testers to perform automation testing with Selenium and Playwright at scale. It provides a remote test lab of 3000+ real environments.

How to Perform Hypothesis Testing in Python Using Cloud Selenium Grid?

Selenium is an open-source suite of tools and libraries for web automation. When combined with a cloud grid, it can help you perform Hypothesis testing in Python with Selenium at scale.

Let’s look at one test scenario to understand Hypothesis testing in Python with Selenium.

The code to set up a connection to LambdaTest Selenium Grid is stored in a crossbrowser_selenium.py file.

    import os
    from time import sleep

    import urllib3
    from dotenv import load_dotenv
    from selenium import webdriver

    # Load LT_USERNAME and LT_ACCESS_KEY from a .env file into the environment
    load_dotenv()

    username = os.getenv('LT_USERNAME', None)
    access_key = os.getenv('LT_ACCESS_KEY', None)


    class CrossBrowserSetup:
        def __init__(self):
            urllib3.disable_warnings(urllib3.exceptions.InsecureRequestWarning)
            self.remote_url = "https://" + str(username) + ":" + str(access_key) + "@hub.lambdatest.com/wd/hub"

        def add(self, browsertype):
            # Stays None unless a supported browser type is requested
            web_driver = None

            if browsertype == "Firefox":
                ff_options = webdriver.FirefoxOptions()
                ff_options.browser_version = "latest"
                ff_options.platform_name = "Windows 11"

                lt_options = {}
                lt_options["build"] = "Build: FF: Hypothesis Testing with Selenium & Pytest"
                lt_options["project"] = "Project: FF: Hypothesis Testing with Selenium & Pytest"
                lt_options["name"] = "Test: FF: Hypothesis Testing with Selenium & Pytest"
                lt_options["browserName"] = "Firefox"
                lt_options["browserVersion"] = "latest"
                lt_options["platformName"] = "Windows 11"
                lt_options["console"] = "error"
                lt_options["w3c"] = True
                lt_options["headless"] = False
                ff_options.set_capability('LT:Options', lt_options)

                web_driver = webdriver.Remote(
                    command_executor=self.remote_url,
                    options=ff_options
                )
                self.driver = web_driver
                self.driver.get("https://www.lambdatest.com")
                sleep(1)

            if web_driver is not None:
                # Mark the session as passed on the LambdaTest dashboard
                web_driver.execute_script("lambda-status=passed")
                web_driver.quit()
                return True
            else:
                return False

The test_selenium.py contains code to test the Hypothesis that tests will only run on the Firefox browser.

    from hypothesis import given, settings
    import hypothesis.strategies as strategy

    from src.crossbrowser_selenium import CrossBrowserSetup


    @settings(deadline=None)
    @given(strategy.just("Firefox"))
    # @given(strategy.just("Chrome"))
    def test_add(browsertype_1):
        cbt = CrossBrowserSetup()
        assert cbt.add(browsertype_1) is True

Let’s now understand the step-by-step code walkthrough for Hypothesis testing in Python using Selenium Grid.

Step 1: We import the necessary Selenium methods to initiate a connection to LambdaTest Selenium Grid.

The FirefoxOptions() method is used to configure the setup when connecting to LambdaTest Selenium Grid using Firefox.

Step 2: We use the load_dotenv() function from the python-dotenv package to load the LT_USERNAME and LT_ACCESS_KEY required to access the LambdaTest Selenium Grid, which are stored as environment variables.

The LT_ACCESS_KEY can be obtained from your LambdaTest Profile > Account Settings > Password & Security.

Step 3: We initialize the CrossBrowserSetup class, which prepares the remote connection URL using the username and access_key.

Step 4: The add() method is responsible for checking the browsertype and then setting the capabilities of the LambdaTest Selenium Grid.

LambdaTest offers a variety of capabilities, such as cross browser testing, which means we can test on various operating systems such as Windows, Linux, and macOS and multiple browsers such as Chrome, Firefox, Edge, and Safari.

For the purpose of this blog, we will be testing that the connection to the LambdaTest Selenium Grid should only happen if the browsertype is Firefox.

Step 5: If the connection to LambdaTest happens, add() returns True; else, it returns False.

Let’s now understand a step-by-step walkthrough of the test_selenium.py file.

Step 1: We set up the imports of the given decorator and the Hypothesis strategy. We also import the CrossBrowserSetup class.

Step 2: @settings(deadline=None) ensures the test doesn’t time out if the connection to the LambdaTest Grid takes more time.

We use the @given decorator to set the strategy to just use Firefox as the input to the test_add() argument browsertype_1. We then initialize an instance of the CrossBrowserSetup class, call the add() method with browsertype_1, and assert that it returns True.

The commented strategy @given(strategy.just('Chrome')) is to demonstrate that the add() method, when called with Chrome, returns False.

Let’s now run the test using pytest -k "test_hypothesis_selenium.py".

We can see that the test has passed, and the Web Automation Dashboard reflects that the connection to the Selenium Grid has been successful.

On opening one of the execution runs, we can see a detailed step-by-step test execution.

How to Perform Hypothesis Testing in Python Using Cloud Playwright Grid?

Playwright is a popular open-source tool for end-to-end testing developed by Microsoft. When combined with a cloud grid, it can help you perform Hypothesis testing in Python at scale.

Let’s look at one test scenario to understand Hypothesis testing in Python with Playwright.

| website. |

    import json
    import os
    import subprocess
    import urllib.parse

    from dotenv import load_dotenv
    from hypothesis import given, strategies as st
    from playwright.sync_api import sync_playwright

    load_dotenv()

    capabilities = {
        'browserName': 'Chrome',  # Browsers allowed: `Chrome`, `MicrosoftEdge`, `pw-chromium`, `pw-firefox` and `pw-webkit`
        'browserVersion': 'latest',
        'LT:Options': {
            'platform': 'Windows 11',
            'build': 'Playwright Hypothesis Demo Build',
            'name': 'Playwright Locators Test For Windows 11 & Chrome',
            'user': os.getenv('LT_USERNAME'),
            'accessKey': os.getenv('LT_ACCESS_KEY'),
            'network': True,
            'video': True,
            'visual': True,
            'console': True,
            'tunnel': False,  # Add tunnel configuration if testing locally hosted webpage
            'tunnelName': '',  # Optional
            'geoLocation': '',  # country code can be fetched from https://www.lambdatest.com/capabilities-generator/
        }
    }


    def interact_with_lambdatest(quantity):
        with sync_playwright() as playwright:
            playwrightVersion = str(subprocess.getoutput('playwright --version')).strip().split(" ")[1]
            capabilities['LT:Options']['playwrightClientVersion'] = playwrightVersion
            lt_cdp_url = 'wss://cdp.lambdatest.com/playwright?capabilities=' + urllib.parse.quote(json.dumps(capabilities))
            browser = playwright.chromium.connect(lt_cdp_url)
            page = browser.new_page()
            page.goto("https://ecommerce-playground.lambdatest.io/")
            page.get_by_role("button", name="Shop by Category").click()
            page.get_by_role("link", name="MP3 Players").click()
            page.get_by_role("link", name="HTC Touch HD HTC Touch HD HTC Touch HD HTC Touch HD").click()
            page.get_by_role("button", name="Add to Cart").click(click_count=quantity)
            page.get_by_role("link", name="Checkout ").first.click()
            unit_price = float(page.get_by_role("cell", name="$146.00").first.inner_text().replace("$", ""))
            # Report the test status back to the LambdaTest dashboard
            page.evaluate("_ => {}", "lambdatest_action: {\"action\": \"setTestStatus\", \"arguments\": {\"status\":\"" + "Passed" + "\", \"remark\": \"" + "pass" + "\"}}")
            page.close()
            total_price = quantity * unit_price
            return total_price


    quantity_strategy = st.integers(min_value=1, max_value=10)


    @given(quantity=quantity_strategy)
    def test_website_interaction(quantity):
        assert interact_with_lambdatest(quantity) == quantity * 146.00

Let’s now understand the step-by-step code walkthrough for Hypothesis testing in Python using Playwright Grid.

Step 1: To connect to the LambdaTest Playwright Grid, we need a Username and Access Key, which can be obtained from the Profile page > Account Settings > Password & Security.

We use the python-dotenv module to load the Username and Access Key, which are stored as environment variables.

Step 2: The capabilities dictionary is used to set up the Playwright Grid on LambdaTest.

We configure the Grid to use Windows 11 and the latest version of Chrome.

Step 3: The function interact_with_lambdatest interacts with the LambdaTest eCommerce Playground website to simulate adding a product to the cart and proceeding to checkout.

It starts a Playwright session and retrieves the version of Playwright being used. The LambdaTest CDP URL is created with the appropriate capabilities, and the function connects to the Chromium browser instance on LambdaTest.

A new page instance is created, and the browser navigates to the LambdaTest eCommerce Playground website. The specified product is added to the cart by clicking through the required buttons and links. The unit price of the product is extracted from the web page.

The browser page is then closed.

Step 4: We define a Hypothesis strategy quantity_strategy using st.integers to generate random integers representing product quantities. The generated integers range from 1 to 10.

Using the @given decorator from the Hypothesis library, we define a property-based test function test_website_interaction that takes a quantity parameter generated by the quantity_strategy .

Inside the test function, we use the interact_with_lambdatest function to simulate interacting with the website and calculate the total price based on the generated quantity.

We assert that the total_price returned by interact_with_lambdatest matches the expected value calculated as quantity * 146.00.

Let’s now run the test on the Playwright Cloud Grid using pytest -v -k "test_hypothesis_playwright.py".

The LambdaTest Web Automation Dashboard shows successfully passed tests.


How to Perform Hypothesis Testing in Python With Date Strategy?

In the previous test scenario, we saw a simple example where we used the integers() strategy available as part of Hypothesis. Let’s now understand another strategy, the dates() strategy, which can be effectively used to test date-based functions.

Also, the output of the Hypothesis run can be customized to produce detailed results. Often, we may wish to see an even more verbose output when executing a Hypothesis test.

To do so, we have two options: either use the @settings decorator or pass the --hypothesis-verbosity=<verbosity_level> option when performing pytest testing.

    from datetime import datetime

    from hypothesis import Verbosity, settings, given, strategies as st


    def generate_expiry_alert(expiry_date):
        current_date = datetime.now().date()
        days_until_expiry = (expiry_date - current_date).days
        return days_until_expiry <= 45


    @given(expiry_date=st.dates())
    @settings(verbosity=Verbosity.verbose, max_examples=1000)
    def test_expiry_alert_generation(expiry_date):
        alert_generated = generate_expiry_alert(expiry_date)

        # Check if the alert is generated correctly based on the expiry date
        days_until_expiry = (expiry_date - datetime.now().date()).days
        expected_alert = days_until_expiry <= 45
        assert alert_generated == expected_alert

Let’s now understand the code step-by-step.

Step 1: The function generate_expiry_alert() takes in an expiry_date as input and returns a boolean depending on whether the number of days from the current date to the expiry_date is less than or equal to 45.

Step 2: To ensure we test generate_expiry_alert() for a wide range of date inputs, we use the dates() strategy.

We also enable verbose logging and set max_examples=1000, which requests Hypothesis to generate at most 1000 date inputs.

Step 3: On the inputs generated by Hypothesis in Step 2, we call the generate_expiry_alert() function and store the returned boolean in alert_generated.

We then compare the value returned by generate_expiry_alert() with a locally calculated copy and assert that they match.

We execute the test using the below command in the verbose mode, which allows us to see the test input dates generated by the Hypothesis.

    pytest -s --hypothesis-show-statistics --hypothesis-verbosity=debug -k "test_expiry_alert_generation"

As we can see, Hypothesis ran 1000 tests, 2 with reused data and 998 with unique newly generated data, and found no issues with the code.

Now, imagine the trouble we would have had to take to write 1000 tests manually using traditional example-based testing.
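One limitation of the test above is that it recomputes the same expression as the function under test, so the two can never disagree. A possible variant, sketched here under the assumption that the function may take the current date as a parameter (the today argument and FIXED_TODAY constant are hypothetical), checks the result against the known day offset instead and also shows the min_value and max_value arguments of dates().

```python
from datetime import date, timedelta

from hypothesis import given, strategies as st

ALERT_WINDOW_DAYS = 45
FIXED_TODAY = date(2024, 1, 1)  # arbitrary reference date for this sketch


def generate_expiry_alert(expiry_date: date, today: date) -> bool:
    """Return True when expiry_date is at most 45 days after today."""
    return (expiry_date - today).days <= ALERT_WINDOW_DAYS


@given(offset=st.integers(min_value=-400, max_value=400))
def test_alert_matches_offset(offset):
    # The expected answer comes from the offset, not from the function's formula.
    expiry = FIXED_TODAY + timedelta(days=offset)
    assert generate_expiry_alert(expiry, FIXED_TODAY) == (offset <= 45)


@given(st.dates(min_value=date(2024, 1, 1), max_value=date(2024, 2, 15)))
def test_dates_inside_window_always_alert(expiry):
    # 2024-02-15 is exactly 45 days after the reference date.
    assert generate_expiry_alert(expiry, FIXED_TODAY)
```

Passing the date in also keeps the test independent of when it happens to run.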

How to Perform Hypothesis Testing in Python With Composite Strategies?

So far, we’ve been using simple standalone examples to demo the power of Hypothesis. Let’s now move on to more complicated scenarios.

| website offers customer rewards points. A class tracks the customer reward points and their spending. class. |

The implementation of the UserRewards class is stored in a user_rewards.py file for better readability.

    class UserRewards:
        def __init__(self, initial_points):
            self.reward_points = initial_points

        def get_reward_points(self):
            return self.reward_points

        def spend_reward_points(self, spent_points):
            if spent_points <= self.reward_points:
                self.reward_points -= spent_points
                return True
            else:
                return False

The tests for the UserRewards class are stored in test_user_rewards.py .

    from hypothesis import given, strategies as st

    from src.user_rewards import UserRewards

    reward_points_strategy = st.integers(min_value=0, max_value=1000)


    @given(initial_points=reward_points_strategy)
    def test_get_reward_points(initial_points):
        user_rewards = UserRewards(initial_points)
        assert user_rewards.get_reward_points() == initial_points


    @given(initial_points=reward_points_strategy, spend_amount=st.integers(min_value=0, max_value=1000))
    def test_spend_reward_points(initial_points, spend_amount):
        user_rewards = UserRewards(initial_points)
        remaining_points = user_rewards.get_reward_points()

        if spend_amount <= initial_points:
            assert user_rewards.spend_reward_points(spend_amount)
            remaining_points -= spend_amount
        else:
            assert not user_rewards.spend_reward_points(spend_amount)

        assert user_rewards.get_reward_points() == remaining_points

Let’s now understand what is happening with both the class file and the test file step-by-step, starting first with the UserRewards class.

Step 1: The class takes in a single argument initial_points to initialize the object.

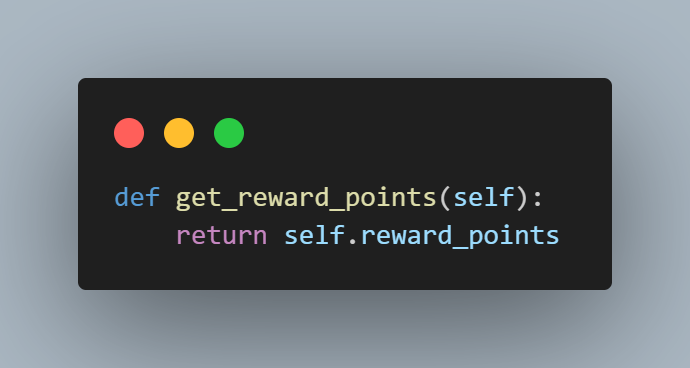

Step 2: The get_reward_points() function returns the customer’s current reward points.

Step 3: The spend_reward_points() function takes in spent_points as input. If spent_points is less than or equal to the customer’s current point balance, it subtracts spent_points from reward_points and returns True; otherwise, it returns False.

That is it for our simple UserRewards class. Next, we understand what’s happening in the test_user_rewards.py step-by-step.

Step 1: We import the @given decorator and strategies from Hypothesis and the UserRewards class.

Step 2: Since reward points will always be integers, we use the integers() Hypothesis strategy to generate sample inputs between 0 and 1000 and store the strategy in a reward_points_strategy variable.

Step 3: Using reward_points_strategy as the input, we run test_get_reward_points() on sample values between 0 and 1000.

For each input, we initialize the UserRewards class and assert that the method get_reward_points() returns the same value as the initial_points .

Step 4: To test the spend_reward_points() function, we generate two sets of sample inputs first, an initial reward_points using the reward_points_strategy we defined in Step 2 and a spend_amount which simulates spending of points.

Step 5: We write test_spend_reward_points, which takes in initial_points and spend_amount as arguments and initializes the UserRewards class with initial_points.

We also initialize a remaining_points variable to track the points remaining after the spend.

Step 6: If the spend_amount is less than or equal to the initial_points allocated to the customer, we assert that spend_reward_points returns True and update remaining_points; otherwise, we assert that spend_reward_points returns False.

Step 7: Lastly, we assert that the final remaining_points are correctly returned by get_reward_points, which should be updated after spending the reward points.

Let’s now run the test and see if Hypothesis is able to find any bugs in the code.

    pytest -s --hypothesis-show-statistics --hypothesis-verbosity=debug -k "test_user_rewards"

To test if Hypothesis indeed works, let’s make a small change to UserRewards by commenting out the logic that deducts the spent_points in the spend_reward_points() function.

We run the test suite again using the command pytest -s --hypothesis-show-statistics -k "test_user_rewards".

This time, Hypothesis highlights the failures correctly.

Thus, we can catch any bugs and potential side effects of code changes early, making it perfect for unit testing and regression testing .

To understand composite strategies a bit more, let’s now test the shopping cart functionality and see how composite strategy can help write robust tests for even the most complicated of real-world scenarios.

| and which handles the shopping cart feature of the website. |

Let’s view the implementation of the ShoppingCart class written in the shopping_cart.py file.

    from enum import Enum, auto


    class Item(Enum):
        """Item type"""
        LUNIX_CAMERA = auto()
        IMAC = auto()
        HTC_TOUCH = auto()
        CANNON_EOS = auto()
        IPOD_TOUCH = auto()
        APPLE_VISION_PRO = auto()
        COFMACBOOKFEE = auto()
        GALAXY_S24 = auto()

        def __str__(self):
            return self.name.upper()


    class ShoppingCart:
        def __init__(self):
            # Maps item name -> {"price": ..., "quantity": ...}
            self.items = {}

        def add_item(self, item: Item, price: int | float, quantity: int = 1) -> None:
            # Increase the quantity if the item is already in the cart
            if item.name in self.items:
                self.items[item.name]["quantity"] += quantity
            else:
                self.items[item.name] = {"price": price, "quantity": quantity}

        def remove_item(self, item: Item, quantity: int = 1) -> None:
            # Drop the entry entirely once its quantity would reach zero
            if item.name in self.items:
                if self.items[item.name]["quantity"] <= quantity:
                    del self.items[item.name]
                else:
                    self.items[item.name]["quantity"] -= quantity

        def get_total_price(self):
            total_price = 0
            for item in self.items.values():
                total_price += item["price"] * item["quantity"]
            return total_price

Let’s now view the tests written to verify the correct behavior of all aspects of the ShoppingCart class stored in a separate test_shopping_cart.py file.

    from typing import Callable

    from hypothesis import given, strategies as st
    from hypothesis.strategies import SearchStrategy

    from src.shopping_cart import ShoppingCart, Item


    @st.composite
    def items_strategy(draw: Callable[[SearchStrategy[Item]], Item]):
        return draw(st.sampled_from(list(Item)))


    @st.composite
    def price_strategy(draw: Callable[[SearchStrategy[int]], int]):
        return draw(st.integers(min_value=1, max_value=100))


    @st.composite
    def qty_strategy(draw: Callable[[SearchStrategy[int]], int]):
        return draw(st.integers(min_value=1, max_value=10))


    @given(items_strategy(), price_strategy(), qty_strategy())
    def test_add_item_hypothesis(item, price, quantity):
        cart = ShoppingCart()

        # Add items to cart
        cart.add_item(item=item, price=price, quantity=quantity)

        # Assert that the quantity of items in the cart is equal to the number of items added
        assert item.name in cart.items
        assert cart.items[item.name]["quantity"] == quantity


    @given(items_strategy(), price_strategy(), qty_strategy())
    def test_remove_item_hypothesis(item, price, quantity):
        cart = ShoppingCart()

        print("Adding Items")
        # Add items to cart
        cart.add_item(item=item, price=price, quantity=quantity)
        cart.add_item(item=item, price=price, quantity=quantity)
        print(cart.items)

        # Remove item from cart
        print(f"Removing Item {item}")
        quantity_before = cart.items[item.name]["quantity"]
        cart.remove_item(item=item)
        quantity_after = cart.items[item.name]["quantity"]

        # Assert that if we remove an item, the quantity of items in the cart is equal to the number of items added - 1
        assert quantity_before == quantity_after + 1


    @given(items_strategy(), price_strategy(), qty_strategy())
    def test_calculate_total_hypothesis(item, price, quantity):
        cart = ShoppingCart()

        # Add items to cart
        cart.add_item(item=item, price=price, quantity=quantity)
        cart.add_item(item=item, price=price, quantity=quantity)

        # Remove item from cart
        cart.remove_item(item=item)

        # Calculate total
        total = cart.get_total_price()
        assert total == cart.items[item.name]["price"] * cart.items[item.name]["quantity"]

Code Walkthrough of ShoppingCart class:

Let’s now understand what is happening in the ShoppingCart class step-by-step.

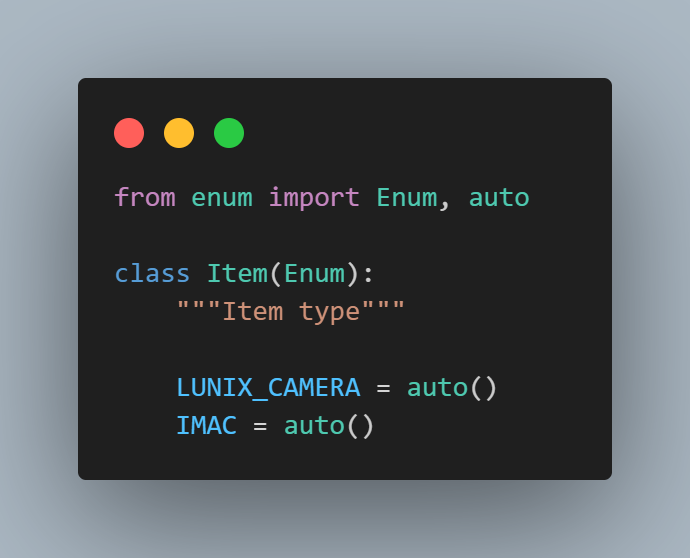

Step 1: We import the Python built-in Enum class and the auto() function.

The auto() function from the enum module automatically assigns sequential integer values to enumeration members, simplifying the process of defining enumerations with incremental values.

We define an Item enum corresponding to items available for sale on the LambdaTest eCommerce Playground website.

Step 2: We initialize the ShoppingCart class with an empty dictionary of items.

Step 3: The add_item() method takes in the item, price, and quantity as input and adds it to the shopping cart state held in the items dictionary.

Step 4: The remove_item() method takes in an item and quantity and removes it from the shopping cart state held in the items dictionary.

Step 5: The get_total_price() method iterates over the items dictionary, multiplies the quantity by the price, and returns the total_price of items in the cart.

Code Walkthrough of test_shopping_cart:

Let’s now understand step-by-step the tests written to ensure the correct working of the ShoppingCart class.

Step 1: First, we set up the imports, including the @given decorator, strategies, and the ShoppingCart class and Item enum.

SearchStrategy is the base type of all Hypothesis strategies. It represents a set of rules for generating valid inputs to test a specific property or behavior of a function or program, and we use it here only in type hints.

Step 2: We use the @st.composite decorator to define a custom Hypothesis strategy named items_strategy. This strategy takes a single argument, draw, which is a callable used to draw values from other strategies.

The st.sampled_from strategy randomly samples values from a given iterable. Within the strategy, we use draw(st.sampled_from(list(Item))) to draw a random Item instance from a list of all enum members.

Each time the items_strategy is used in a Hypothesis test, it will generate a random instance of the Item enum for testing purposes.
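As a rough illustration of how draw, st.sampled_from, and @st.composite fit together, consider the sketch below. The Color enum, order_strategy, and the test name are hypothetical and exist only for this example; the Hypothesis calls themselves (given, st.composite, st.sampled_from, st.integers) are the same ones used in the article's tests.

```python
from enum import Enum, auto

from hypothesis import given, strategies as st


class Color(Enum):
    """Hypothetical enum standing in for Item."""

    RED = auto()
    GREEN = auto()
    BLUE = auto()


@st.composite
def order_strategy(draw):
    """Draw a color and a quantity, then combine them into one tuple."""
    color = draw(st.sampled_from(list(Color)))
    quantity = draw(st.integers(min_value=1, max_value=10))
    return color, quantity


@given(order_strategy())
def test_order_is_well_formed(order):
    color, quantity = order
    assert isinstance(color, Color)
    assert 1 <= quantity <= 10


# A @given-decorated function can be called directly; Hypothesis then runs it
# against many generated inputs.
test_order_is_well_formed()
```

A composite strategy is most useful when several drawn values need to be combined into one object, as in this tuple. For a single value, passing st.sampled_from(...) straight to @given would work as well.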

Step 3: The price_strategy follows the same logic as the items_strategy but generates an integer value between 1 and 100.

Step 4: The qty_strategy follows the same logic as the items_strategy but generates an integer value between 1 and 10.

Step 5: We use the @given decorator from the Hypothesis library to define a property-based test.

The items_strategy(), price_strategy(), and qty_strategy() functions are used to generate random values for the item, price, and quantity parameters, respectively.

Inside the test function, we create a new instance of a ShoppingCart.

We then add an item to the cart using the generated values for item, price, and quantity.

Finally, we assert that the item was successfully added to the cart and that the quantity matches the generated quantity.

Step 6: We use the @given decorator from the Hypothesis library to define a property-based test.

The items_strategy(), price_strategy(), and qty_strategy() functions are used to generate random values for the item, price, and quantity parameters, respectively.

Inside the test function, we create a new instance of a ShoppingCart. We then add the same item to the cart twice to simulate two quantity additions to the cart.

We remove one instance of the item from the cart. After that, we compare the item quantity before and after removal to ensure it decreases by 1.

The test verifies the behavior of the remove_item() method of the ShoppingCart class by testing it with randomly generated inputs for item, price, and quantity.

Step 7: We use the @given decorator from the Hypothesis library to define a property-based test.

The items_strategy(), price_strategy(), and qty_strategy() functions are used to generate random values for the item, price, and quantity parameters, respectively.

We add the same item to the cart twice to ensure it’s present, then remove one instance of the item from the cart. After that, we calculate the total price of items remaining in the cart.

Finally, we assert that the total price matches the price of one item times its remaining quantity.

The test verifies the correctness of the get_total_price() method of the ShoppingCart class by testing it with randomly generated inputs for item, price, and quantity.

Let’s now run the test using the command pytest --hypothesis-show-statistics -k "test_shopping_cart".

We can verify that Hypothesis found no issues with the ShoppingCart class.

Let’s now amend the price_strategy and qty_strategy to remove the min_value and max_value arguments.

Then rerun the tests with pytest -k "test_shopping_cart".

The test run clearly reveals bugs in how the cart handles a quantity or price of 0.

This also shows that choosing test inputs carefully, so that testing is comprehensive, is key to writing robust and resilient tests.

Set min_value and max_value only when we know beforehand the bounds of the inputs the function under test will receive. If we are unsure what the inputs are, it is better to derive the strategies from the behavior of the function under test.
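One plausible way a zero quantity could trip the tests is sketched below. It assumes a removal rule that deletes the entry when the stored quantity is less than or equal to the amount removed; that rule, and both function names, are assumptions made for illustration rather than a confirmed description of the class under test.

```python
def remove_item(items: dict, name: str, quantity: int = 1) -> None:
    """Assumed rule: drop the entry when the stored quantity is <= the amount removed."""
    if name in items:
        if items[name]["quantity"] <= quantity:
            del items[name]
        else:
            items[name]["quantity"] -= quantity


def quantity_after_two_adds_and_one_removal(quantity: int):
    """Mirror the test flow: add twice, remove once, then look the item up."""
    items = {"APPLE": {"price": 5, "quantity": quantity * 2}}
    remove_item(items, "APPLE")
    return items["APPLE"]["quantity"] if "APPLE" in items else None


print(quantity_after_two_adds_and_one_removal(3))  # entry survives with a lower quantity
print(quantity_after_two_adds_and_one_removal(0))  # entry was deleted
```

With a quantity of 0, the entry disappears entirely, so a test that looks up the item after removal would fail with a KeyError instead of seeing a quantity reduced by 1. Bounded strategies never generate this input, which is why the earlier run passed.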

In this blog, we have seen in detail how Hypothesis testing in Python works using the popular Hypothesis library. Hypothesis testing falls under property-based testing and, because it generates inputs automatically, tends to surface edge cases that hand-written example tests miss.

We also explored Hypothesis strategies and how we can use the @composite decorator to write custom strategies for testing complex functionalities.

We also saw how Hypothesis testing in Python can be performed with popular test automation frameworks like Selenium and Playwright. In addition, by performing Hypothesis testing in Python with LambdaTest on Cloud Grid, we can set up effective automation tests to enhance our confidence in the code we’ve written.

What are the three types of Hypothesis tests?

There are three main types of hypothesis tests based on the direction of the alternative hypothesis:

- Right-tailed test: This tests if a parameter is greater than a certain value.

- Left-tailed test: This tests if a parameter is less than a certain value.

- Two-tailed test: This tests for any non-directional difference, either greater or lesser than the hypothesized value.
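The difference between the three tails shows up in how the p-value is computed from the same test statistic. The sketch below assumes a z statistic with a standard normal null distribution; the p_value helper and the value 1.96 are hypothetical choices for illustration.

```python
from statistics import NormalDist


def p_value(z: float, tail: str) -> float:
    """Return the p-value of a z statistic under a standard normal null distribution."""
    cdf = NormalDist().cdf
    if tail == "right":
        return 1 - cdf(z)  # probability of a value at least this large
    if tail == "left":
        return cdf(z)  # probability of a value at least this small
    if tail == "two":
        return 2 * (1 - cdf(abs(z)))  # extreme in either direction
    raise ValueError(f"unknown tail: {tail!r}")


z = 1.96  # hypothetical test statistic
for tail in ("right", "left", "two"):
    print(tail, round(p_value(z, tail), 4))
```

The same statistic can therefore be significant under a right-tailed test and far from significant under a left-tailed one, so the direction of the alternative hypothesis should be fixed before looking at the data.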

What is Hypothesis testing in the ML model?

Hypothesis testing is a statistical approach used to evaluate the performance and validity of machine learning models. It helps us determine if a pattern observed in the training data likely holds true for unseen data (generalizability).

Jaydeep is a software engineer with 10 years of experience, most recently developing and supporting applications written in Python. He has extensive experience with shell scripting and is also an AI/ML enthusiast. He is also a tech educator, creating content on Twitter, YouTube, Instagram, and LinkedIn. Link to his YouTube channel: https://www.youtube.com/@jaydeepkarale


Pytest with Eric

How to Use Hypothesis and Pytest for Robust Property-Based Testing in Python

There will always be cases you didn’t consider, making this an ongoing maintenance job. Unit testing solves only some of these issues.

Example-Based Testing vs Property-Based Testing

Project set up, getting started, prerequisites.


Simple Example

Source code.

```python
def find_largest_smallest_item(input_array: list) -> tuple:
    """
    Function to find the largest and smallest items in an array
    :param input_array: Input array
    :return: Tuple of largest and smallest items
    """
    if len(input_array) == 0:
        raise ValueError

    # Set the initial values of largest and smallest to the first item in the array
    largest = input_array[0]
    smallest = input_array[0]

    # Iterate through the array
    for i in range(1, len(input_array)):
        # If the current item is larger than the current value of largest, update largest
        if input_array[i] > largest:
            largest = input_array[i]

        # If the current item is smaller than the current value of smallest, update smallest
        if input_array[i] < smallest:
            smallest = input_array[i]

    return largest, smallest


def sort_array(input_array: list, sort_key: str) -> list:
    """
    Function to sort an array
    :param sort_key: Sort key
    :param input_array: Input array
    :return: Sorted array
    """
    if len(input_array) == 0:
        raise ValueError
    if sort_key not in input_array[0]:
        raise KeyError
    if not isinstance(input_array[0][sort_key], int):
        raise TypeError
    sorted_data = sorted(input_array, key=lambda x: x[sort_key], reverse=True)
    return sorted_data
```

```python
def reverse_string(input_string) -> str:
    """
    Function to reverse a string
    :param input_string: Input string
    :return: Reversed string
    """
    return input_string[::-1]


def complex_string_operation(input_string: str) -> str:
    """
    Function to perform a complex string operation
    :param input_string: Input string
    :return: Transformed string
    """
    # Remove Whitespace
    input_string = input_string.strip().replace(" ", "")

    # Convert to Upper Case
    input_string = input_string.upper()

    # Remove vowels
    vowels = ("A", "E", "I", "O", "U")
    for x in input_string.upper():
        if x in vowels:
            input_string = input_string.replace(x, "")

    return input_string
```

Simple Example — Unit Tests

Example-based testing.

```python
import pytest
import logging

from src.random_operations import (
    reverse_string,
    find_largest_smallest_item,
    complex_string_operation,
    sort_array,
)

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger(__name__)


# Example Based Unit Testing
def test_find_largest_smallest_item():
    assert find_largest_smallest_item([1, 2, 3]) == (3, 1)


def test_reverse_string():
    assert reverse_string("hello") == "olleh"


def test_sort_array():
    data = [
        {"name": "Alice", "age": 25},
        {"name": "Bob", "age": 30},
        {"name": "Charlie", "age": 20},
        {"name": "David", "age": 35},
    ]
    assert sort_array(data, "age") == [
        {"name": "David", "age": 35},
        {"name": "Bob", "age": 30},
        {"name": "Alice", "age": 25},
        {"name": "Charlie", "age": 20},
    ]


def test_complex_string_operation():
    assert complex_string_operation(" Hello World ") == "HLLWRLD"
```

Running The Unit Test

Property-based testing.

```python
from hypothesis import given, strategies as st
from hypothesis import assume as hypothesis_assume


# Property Based Unit Testing
@given(st.lists(st.integers(), min_size=1, max_size=25))
def test_find_largest_smallest_item_hypothesis(input_list):
    assert find_largest_smallest_item(input_list) == (max(input_list), min(input_list))


@given(
    st.lists(
        st.fixed_dictionaries({"name": st.text(), "age": st.integers()}),
    )
)
def test_sort_array_hypothesis(input_list):
    if len(input_list) == 0:
        with pytest.raises(ValueError):
            sort_array(input_list, "age")

    hypothesis_assume(len(input_list) > 0)
    assert sort_array(input_list, "age") == sorted(
        input_list, key=lambda x: x["age"], reverse=True
    )


@given(st.text())
def test_reverse_string_hypothesis(input_string):
    assert reverse_string(input_string) == input_string[::-1]


@given(st.text())
def test_complex_string_operation_hypothesis(input_string):
    assert complex_string_operation(input_string) == input_string.strip().replace(
        " ", ""
    ).upper().replace("A", "").replace("E", "").replace("I", "").replace(
        "O", ""
    ).replace(
        "U", ""
    )
```

Complex Example

Source code.

```python
import random
from enum import Enum, auto


class Item(Enum):
    """Item type"""

    APPLE = auto()
    ORANGE = auto()
    BANANA = auto()
    CHOCOLATE = auto()
    CANDY = auto()
    GUM = auto()
    COFFEE = auto()
    TEA = auto()
    SODA = auto()
    WATER = auto()

    def __str__(self):
        return self.name.upper()


class ShoppingCart:
    def __init__(self):
        """
        Creates a shopping cart object with an empty dictionary of items
        """
        self.items = {}

    def add_item(self, item: Item, price: int | float, quantity: int = 1) -> None:
        """
        Adds an item to the shopping cart
        :param quantity: Quantity of the item
        :param item: Item to add
        :param price: Price of the item
        :return: None
        """
        if item.name in self.items:
            self.items[item.name]["quantity"] += quantity
        else:
            self.items[item.name] = {"price": price, "quantity": quantity}

    def remove_item(self, item: Item, quantity: int = 1) -> None:
        """
        Removes an item from the shopping cart
        :param quantity: Quantity of the item
        :param item: Item to remove
        :return: None
        """
        if item.name in self.items:
            # Drop the entry entirely when nothing would remain after removal
            if self.items[item.name]["quantity"] <= quantity:
                del self.items[item.name]
            else:
                self.items[item.name]["quantity"] -= quantity

    def get_total_price(self):
        total = 0
        for item in self.items.values():
            total += item["price"] * item["quantity"]
        return total

    def view_cart(self) -> None:
        """
        Prints the contents of the shopping cart
        :return: None
        """
        print("Shopping Cart:")
        for item, price in self.items.items():
            print("- {}: ${}".format(item, price))

    def clear_cart(self) -> None:
        """
        Clears the shopping cart
        :return: None
        """
        self.items = {}
```

Complex Example — Unit Tests