The loci of Stroop effects: a critical review of methods and evidence for levels of processing contributing to color-word Stroop effects and the implications for the loci of attentional selection

- Open access

- Published: 13 August 2021

- Volume 86, pages 1029–1053 (2022)


- Benjamin A. Parris (ORCID: orcid.org/0000-0003-2402-2100)

- Nabil Hasshim

- Michael Wadsley

- Maria Augustinova

- Ludovic Ferrand


Despite instructions to ignore the irrelevant word in the Stroop task, it robustly influences the time it takes to identify the color, leading to performance decrements (interference) or enhancements (facilitation). The present review addresses two questions: (1) What levels of processing contribute to Stroop effects? and (2) Where does attentional selection occur? The methods used in the Stroop literature to measure the candidate varieties of interference and facilitation are critically evaluated, and the processing levels that contribute to Stroop effects are discussed. It is concluded that the literature does not provide clear evidence for a distinction between conflicting and facilitating representations at phonological, semantic and response levels (together referred to as informational conflict), because the methods do not currently permit their isolated measurement. In contrast, it is argued that the evidence for task conflict as being distinct from informational conflict is strong and, thus, that there are at least two loci of attentional selection in the Stroop task. Evidence suggests that task conflict occurs earlier, has a different developmental trajectory and is independently controlled, which supports the notion of a separate mechanism of attentional selection. The modifying effects of response modes and evidence for Stroop effects at the level of response execution are also discussed. It is argued that multiple studies claiming to have distinguished response and semantic conflict have not done so unambiguously, and that models of Stroop task performance need to be modified to account more effectively for the loci of Stroop effects.


Introduction

In his doctoral dissertation, John R. Stroop was interested in the extent to which difficulties that accompany learning, such as interference, can be reduced by practice (Stroop, 1935). For this purpose, he constructed a particular type of stimulus: words displayed in a color different from the one that they actually designated (e.g., the word red in blue font). After he failed to observe any interference from the colors on the time it took to read the words (Exp. 1), he asked his participants to identify their font color. Because the meaning of these words (e.g., red) interfered with the to-be-named target color (e.g., blue), Stroop observed that naming aloud the color of these words took longer than naming aloud the color of small squares included in his control condition (Exp. 2). In line with both his expectations and other learning experiments carried out at the time, this interference decreased substantially over the course of practice. However, daily practice did not eliminate it completely (Exp. 3). During the next thirty years, this result, and more generally this paradigm, received only modest interest from the scientific community (see, e.g., Jensen & Rohwer, 1966; MacLeod, 1992, for discussions). Things changed dramatically when the color-word stimuli that Stroop had ingeniously constructed became a prime paradigm for studying attention, and in particular selective attention (Klein, 1964).

The ability to selectively attend to and process only certain features in the environment while ignoring others is crucial in many everyday activities (e.g., Jackson & Balota, 2013). Indeed, it is this very ability that allows us to drive without being distracted by beautiful surroundings or to quickly find a friend in a hallway full of people. It is clear, then, that an ability to reduce the impact of potentially interfering information by selectively attending to the parts of the world that are consistent with our goals is essential to functioning in the world as a purposive individual. The Stroop task (Stroop, 1935), as this paradigm is now known, is a selective attention task in that it requires participants to focus on one dimension of the stimulus whilst ignoring another dimension of the very same stimulus. When the word dimension is not successfully ignored, it elicits interference: Naming aloud the color that a word is printed in takes longer when the word denotes a different color (incongruent trials, e.g., the word red displayed in color-incongruent blue font) than in a baseline condition. This difference in color-naming times is often referred to as the Stroop interference effect or the Stroop effect (see the section ‘Definitional issues’ for further development and clarification of these terms).

Evidencing its utility, the Stroop task has been widely used in clinical settings as an aid to assess disorders related to frontal lobe and executive attention impairments (e.g., in attention deficit hyperactivity disorder, Barkley, 1997; schizophrenia, Henik & Salo, 2004; dementia, Spieler et al., 1996; and anxiety, Mathews & MacLeod, 1985; see MacLeod, 1991, for an in-depth review of the Stroop task). The Stroop task is also ubiquitously used in basic and applied research, as indicated by the fact that the original paper (Stroop, 1935) is one of the most cited in the history of psychology and cognitive science (e.g., Gazzaniga et al., 2013; MacLeod, 1992). It is, however, important to understand that the Stroop task as it is currently employed in neuropsychological practice (e.g., Strauss et al., 2007), its implementations in most basic and applied research (see below), and leading accounts of the effect it produces are profoundly rooted in the idea that the Stroop effect is a unitary phenomenon, in that it is caused by the failure of a single mechanism (i.e., it has a single locus). By addressing the critical issue of whether there is a single locus or multiple loci of Stroop effects, the present review not only addresses several pending issues of theoretical and empirical importance but also critically evaluates these current practices.

The where vs. the when and the how of attentional control

The Stroop effect has been described as the gold standard measure of selective attention (MacLeod, 1992), in which a smaller Stroop interference effect is an indication of greater attentional selectivity. However, the notion that selective attention is the cognitive mechanism enabling successful performance in the Stroop task has recently been sidelined (see Algom & Chajut, 2019, for a discussion of this issue). For example, in a recent description of the Stroop task, Braem et al. (2019) noted that the size of the Stroop congruency effect is “indicative of the signal strength of the irrelevant dimension relative to the relevant dimension, as well as of the level of cognitive control applied” (p. 769). Cognitive control is a broader concept than selective attention in that it refers to the entirety of mechanisms used to control thought and behavior to ensure goal-oriented behavior (e.g., task switching, response inhibition, working memory). Its invocation in describing the Stroop task has proven to be somewhat controversial, given that it implies the operation of top-down mechanisms, which might or might not be necessary to explain certain experimental findings (Algom & Chajut, 2019; Braem et al., 2019; Schmidt, 2018). It does, however, have the benefit of hypothesizing a form of attentional control that is not a static, invariant process but a more dynamic, adaptive one, and it provides foundational hypotheses about how and when attentional control might happen. The present work, however, addresses the question that the cognitive control approach tends to eschew (see Algom & Chajut, 2019): where the conflict that causes the interference comes from. Importantly, the answer to the where question will have implications for the how and when questions.

The question of where the interference derives from has historically been referred to as the locus of the Stroop effect (e.g., Dyer, 1973; Logan & Zbrodoff, 1998; Luo, 1999; Scheibe et al., 1967; Seymour, 1977; Wheeler, 1977; see also MacLeod, 1991, and Parris, Augustinova & Ferrand, 2019). Whilst, by virtue of our interest in where attentional selection occurs, we review evidence for the early or late selection of information in the color-word Stroop task, recent models of selective attention have shown that whether selection is early or late is a function of either the attentional resources available to process the irrelevant stimulus (Lavie, 1995) or the strength of the perceptual representation of the irrelevant dimension (Tsal & Benoni, 2010). Moreover, despite its being referred to as the gold standard attentional measure and as one of the most robust findings in the field of psychology (MacLeod, 1992), Stroop effects can clearly be substantially reduced or eliminated by what appear to be small changes to the task. For example, Besner, Stolz, and Boutillier (1997) showed that the Stroop effect can be reduced and even eliminated by coloring a single letter instead of all letters of the irrelevant word (although, notably, they used button-press responses, which produce smaller Stroop effects (Sharma & McKenna, 1998) and thus make it easier to eliminate interference; see also Parris, Sharma, & Weekes, 2007). In addition, Melara and Mounts (1993) showed that by making the irrelevant words smaller to equate the discriminability of word and color, the Stroop effect can be eliminated and even reversed.

Later, Dishon-Berkovits and Algom (2000) noted that the dimensions in the Stroop task are often correlated, in that one dimension can be used to predict the other: when an experimenter matches the number of congruent (e.g., the word red presented in the color red) and incongruent trials, the irrelevant word is more often presented in its matching color than in any other color, which sets up a response contingency. They demonstrated that when this dimensional correlation was removed, the Stroop effect was substantially reduced. By showing that the Stroop effect is malleable through the modulation of dimensional uncertainty (the degree of correlation of the dimensional values and how expected their co-occurrences are) or dimensional imbalance (the relative salience of each dimension), their data and resulting model (Melara & Algom, 2003; see also Algom & Fitousi, 2016) indicate that selective attention fails because the experimental set-up of the Stroop task provides a context with little or no perceptual load or perceptual competition, in which the dimensions (word and color) are often correlated and/or asymmetrical in discriminability; it is this context that contributes to the robust nature of the Stroop effect. In other words, the Stroop task sets selective attention mechanisms up to fail, pitting as it does the intention to ignore irrelevant information against the tendency and resources to process conspicuous and correlated characteristics of the environment (Melara & Algom, 2003). But, in the same way that neuropsychological impairments teach us something about how the mind works (Shallice, 1988), it is these failures that give us an opportunity to explore the architecture of the mechanisms of selective attention in healthy and impaired populations. We therefore ask: if control does fail, where (at what levels of processing) is conflict experienced in the color-word Stroop task?
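The contingency created by matching congruent and incongruent trials can be made concrete with a little arithmetic. The Python sketch below assumes a hypothetical four-color design (red, blue, green, yellow) in which each word appears equally often in its own color and, across its incongruent trials, evenly in each of the other three colors; the names `build_matched_design` and `ink_given_word` are illustrative helpers of our own, not part of any published procedure. With congruent and incongruent trials matched, half of all trials are congruent, so each word predicts its own ink color with probability 1/2, against a chance level of 1/4 and only 1/6 for any particular other color.

```python
from collections import Counter
from fractions import Fraction

COLORS = ["red", "blue", "green", "yellow"]  # hypothetical four-color response set


def build_matched_design(colors, n_congruent_per_word):
    """Hypothetical design with equal numbers of congruent and incongruent trials.

    Each word appears n_congruent_per_word times in its own color and, in total,
    n_congruent_per_word times spread evenly over the remaining colors.
    """
    n_other = len(colors) - 1
    if n_congruent_per_word % n_other != 0:
        raise ValueError("incongruent trials must divide evenly over the other colors")
    trials = []  # list of (word, ink) pairs
    for word in colors:
        trials.extend([(word, word)] * n_congruent_per_word)
        for ink in colors:
            if ink != word:
                trials.extend([(word, ink)] * (n_congruent_per_word // n_other))
    return trials


def ink_given_word(trials, word):
    """Conditional probability of each ink color given the irrelevant word."""
    inks = Counter(ink for w, ink in trials if w == word)
    total = sum(inks.values())
    return {ink: Fraction(count, total) for ink, count in inks.items()}


trials = build_matched_design(COLORS, n_congruent_per_word=6)
proportion_congruent = Fraction(sum(w == ink for w, ink in trials), len(trials))
p_red = ink_given_word(trials, "red")
print(proportion_congruent)         # 1/2
print(p_red["red"], p_red["blue"])  # 1/2 1/6
```

In this toy design, reducing the congruent proportion to 1/4 would equalize all four conditional probabilities and so remove the word-to-color contingency.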

Given our focus on the varieties of conflict (and facilitation), the where of control, we will not concern ourselves with the how and the when of control. Manipulations and models of the Stroop task that are not designed to understand the types of conflict and facilitation that contribute to Stroop effects, such as list-wise versus item-specific congruency proportion manipulations (e.g., Botvinick et al., 2001; Bugg & Crump, 2012; Gonthier et al., 2016; Logan & Zbrodoff, 1979; Schmidt & Besner, 2008; Schmidt, Notebaert, & Van Den Bussche, 2015; see Schmidt, 2019, for a review) or memory load manipulations (e.g., De Fockert, 2013; Kalanthroff et al., 2015; Kim et al., 2005; Kim, Min, Kim & Won, 2006), will be eschewed, unless these manipulations are specifically modified in a way that permits an understanding of the processing involved in producing Stroop interference and facilitation. To reiterate the aims of the present review: we are less concerned with the evaluative function of control, which judges when and how control operates (Chuderski & Smolen, 2016), than with the regulative function of control, and specifically with the processing levels at which it might operate. In short, the present review attempts to identify whether processing at any level, other than the historically favoured level of response output, reliably leads to conflict (or facilitation) between activated representations. Before we address this question, however, we must first address the terminology used here and in the literature to describe different types of Stroop effects.

Definitional issues to consider before we begin

A word about baselines and descriptions of Stroop effects

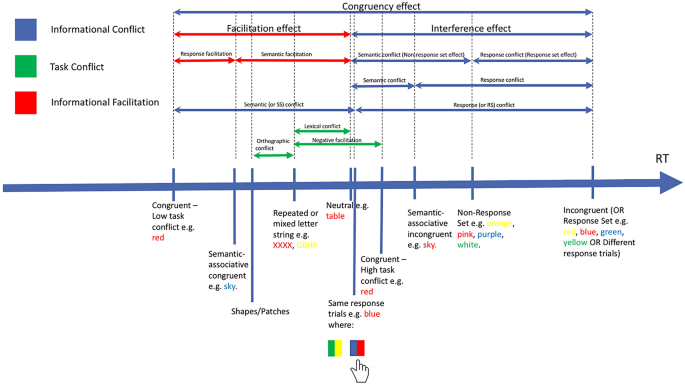

Given the number of studies that have employed the Stroop task since its inception in 1935, it is no surprise that a variety of modifications of the original task have been employed, including the introduction of new trial types (as exemplified by Klein, 1964) and new ways of responding, to measure and understand mechanisms of selective attention. This has led to disagreement over what is being measured by each manipulation, obscuring the path to theoretical enlightenment. Various trial types have been used to distinguish types of conflict and facilitation in the color-word Stroop task (see Fig. 1), although with less fervor for facilitation varieties, resulting in a lack of agreement about how one should go about indexing response conflict, semantic conflict, and other forms of conflict and facilitation. Indeed, as can be seen in Fig. 1, one person’s semantic conflict can be another person’s facilitation, a problem that arises from the selection of the baseline control condition. Differences in performance between a critical trial and a control trial might be attributed to a specific variable, but this method relies on having a suitable baseline that differs only in the specific component under test (Jonides & Mack, 1984).

Fig. 1. Examples of the various trial types that have been used to decompose the Stroop effect into various types of conflict (interference) and facilitation. This has resulted in a lack of clarity about what components are being measured. Indeed, as can be seen, one person’s semantic conflict can be another person’s facilitation, a problem that arises from the selection of the baseline control condition.

Selecting an appropriate baseline, and indeed an appropriate critical trial, to measure the specific component under test is non-trivial. For example, congruent trials, first introduced by Dalrymple-Alford and Budayr (1966, Exp. 2), have become a popular baseline condition against which to compare performance on incongruent trials. Congruent trials are commonly responded to much faster than incongruent trials, and the difference in reaction time between the two conditions has been variously referred to as the Stroop congruency effect (e.g., Egner et al., 2010), the Stroop interference effect (e.g., Leung et al., 2000), the Total Stroop Effect (Brown et al., 1998), and Color-Word Impact (Kahneman & Chajczyk, 1983). However, when compared to non-color-word neutral trials, congruent trials are often reported to be responded to faster, evidencing a facilitation effect of the irrelevant word on the task of color naming (Dalrymple-Alford, 1972; Dalrymple-Alford & Budayr, 1966). Referring to the difference between incongruent and congruent trials as Stroop interference, as is often the case in the Stroop literature, therefore fails to recognize the role of facilitation observed on congruent trials and epitomizes a wider problem. As already emphasized by MacLeod (1991), this difference corresponds to “(…) the sum of facilitation and interference, each in unknown amounts” (MacLeod, 1991, p. 168). Moreover, as will be discussed in detail later, congruent trial reaction times have been shown to be influenced by a newly discovered form of conflict, known as task conflict (Goldfarb & Henik, 2007), and are not, therefore, straightforwardly a measure of facilitation either.
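MacLeod’s point that the incongruent minus congruent difference is a sum of interference and facilitation can be illustrated with a short Python sketch. The mean RTs below are hypothetical values chosen for illustration, not data from any study, and `decompose_congruency_effect` is a helper name of our own. Because the neutral baseline cancels out of the sum, the congruency effect is the same whichever baseline is used, but the split into interference and facilitation shifts with the baseline.

```python
import math


def decompose_congruency_effect(rt_incongruent, rt_congruent, rt_neutral):
    """Split the incongruent-minus-congruent difference around a neutral baseline.

    Returns (congruency_effect, interference, facilitation), all in ms.
    """
    congruency_effect = rt_incongruent - rt_congruent
    interference = rt_incongruent - rt_neutral  # slowing relative to the baseline
    facilitation = rt_neutral - rt_congruent    # speeding relative to the baseline
    # The baseline cancels out, so the two components always sum to the total;
    # only their split depends on which baseline is chosen.
    assert math.isclose(congruency_effect, interference + facilitation)
    return congruency_effect, interference, facilitation


# Hypothetical mean RTs (ms), chosen for illustration only.
print(decompose_congruency_effect(750, 600, 650))  # (150, 100, 50)
print(decompose_congruency_effect(750, 600, 620))  # (150, 130, 20)
```

As noted above, task conflict on congruent trials means that even the neutral minus congruent term is not a pure measure of facilitation.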

Furthermore, whilst the common implementation of the Stroop task involves incongruent, congruent, and non-color-word neutral trials (or one in which the non-color-word neutral baseline is replaced by repeated letter strings, e.g., xxxx), this common format ignores the possibility that the difference between incongruent and neutral trials involves multiple processes (e.g., semantic and response level conflict). As Klein (1964) showed, the irrelevant word in the Stroop task can refer to concepts semantically associated with a color (e.g., sky), potentially offering a way to answer the question of whether selection occurs early in the processing stream, at the level of semantics, before response selection. But it is unclear whether such trials are direct measures of semantic conflict or indirect measures of response conflict.

Here, we employ the following terms. We refer to the difference between incongruent and congruent conditions as the Stroop congruency effect, because it contrasts performance in conditions with opposite congruency values. For the reasons noted above, the term Stroop interference (or just interference) is reserved for slower performance on one trial type compared to another. The word conflict will denote competing representations at any particular level that could be the cause of interference (note that interference might not result from conflict (De Houwer, 2003); in the emotional Stroop task, for example, interference could arise without conflict between competing representations (Algom et al., 2004)). When the distinction is not critical, the terms interference and conflict will be used interchangeably. The term Stroop facilitation (or just facilitation) will refer to faster performance on one trial type compared to another (unless specified otherwise). In common with the literature, facilitation will also be used to refer to the opposite of conflict; that is, it will denote facilitating representations at any level. Finally, the term Stroop effect(s) will be employed to refer more generally to all of these effects.

Levels of conflict vs. levels of selection

When considering the standard incongruent Stroop trial (e.g., red in blue), where the word dimension is a color word (e.g., red) that is incongruent with the target color dimension that is being named, and where the color red is also a potential response, one might surmise numerous levels of representation at which these two concepts might compete. Processing the color dimension of a Stroop stimulus in order to name the color would, on a simple analysis, require initial visual processing, followed by activation of the relevant semantic representation and then word-form (phonetic) encoding of the color name in preparation for a response. For this process to advance unimpeded until response, no competing representations could be activated at any of those stages. Like color naming, word reading also requires visual processing, but of letters rather than colors, which perhaps avoids creating conflict at this level, although there is evidence for competition for resources at the level of visual processing under some conditions (Kahneman & Chajczyk, 1983). Word reading also requires the computation of phonology from orthography, which color processing does not. One way interference might occur at this level is if semantic processing or word-form encoding during the processing of the color dimension also led to the unnecessary (for the purposes of providing a correct response) activation of the orthographic representation of the color name; as far as we are aware, there is no evidence for this. However, orthography does appear to lead to conflict through a different route: the presence of a word or word-like stimulus appears to activate the full mental machinery used to process words. This unintentionally activated word-reading task set conflicts with the intentionally activated color identification task set, creating task conflict. Task conflict occurs whenever an orthographically plausible letter string is presented (e.g., the word table leads to interference, as does the non-word but pronounceable letter string fanit; the letter string xxxxx less so; Levin & Tzelgov, 2016; Monsell et al., 2001).

Despite being a task in which participants do not intend to engage, irrelevant word processing would also likely involve the activation of a phonological representation of the word and of a semantic representation (and likely some word-form encoding), either of which could lead to the activation of representations competing for selection. However, just because the word is processed at a certain level (e.g., orthography or phonology) does not mean that each of these levels independently leads to conflict. Phonological information would only independently contribute to conflict if the process of color naming activated a competing representation at the same level. Otherwise, the phonological representation of the irrelevant word might simply facilitate activation of the semantic representation of the irrelevant word, thereby providing competition for the semantic representation of the relevant color. In that case, whilst phonological information would contribute to Stroop effects, no selection mechanism would be required at the phonological level. And of course, there could be conflict at the phonological processing level but no selection mechanism available there, in which case the conflict would have to be resolved later. To identify whether selection occurs at the level of phonological processing, a method would be needed to isolate phonological information from information at the semantic and response levels.

So-called late selection accounts would argue that any activated representations at these levels would result in increased activation at the response level, where selection would occur, with no competition or selection at earlier stages (e.g., Dyer, 1973; Logan & Zbrodoff, 1998; Luo, 1999; Scheibe et al., 1967; Seymour, 1977; Wheeler, 1977; see also MacLeod, 1991, and Parris, Augustinova & Ferrand, 2019a, 2019b, 2019c, for discussions of this topic). In contrast, so-called early selection accounts (De Houwer, 2003; Scheibe et al., 1967; Seymour, 1977; Stirling, 1979; Zhang & Kornblum, 1998; Zhang et al., 1999) argue for earlier and multiple sites of attentional selection, with Hock and Egeth (1970) even arguing that the perceptual encoding of the color dimension is slowed by the irrelevant word, although this has been shown to be a problematic interpretation of their results (Dyer, 1973). In Zhang and colleagues’ models, attentional selection occurred and was resolved at the stimulus identification stage, before any information was passed on to the response level, which had its own selection mechanism.

The organization of the review

It is important to emphasize at this point, then, that when considering the locus or loci of the Stroop effect, there are in fact two issues to address. The first concerns the level(s) of processing that significantly contribute to Stroop interference (and facilitation), such that a specific type of conflict actually arises at that level. The second concerns the level(s) of attentional selection: Is there, as Zhang and Kornblum (1998) and Zhang et al. (1999) have suggested, more than one level at which attentional selection occurs?

With regard to the first issue, we start below by critically evaluating the evidence for different levels of processing that putatively contribute to conflict, with the objective of assessing the methods used to index the forms of conflict and what we can learn from them. To further structure the review, we employ the distinction introduced by MacLeod and MacDonald (2000), who argued for two categories of conflict: informational conflict and the aforementioned task conflict (see also Levin & Tzelgov, 2016). Informational conflict arises from the semantic and response information that the irrelevant word conveys. This roughly corresponds to the distinction between stimulus-based and response-based conflicts (Kornblum & Lee, 1995; Kornblum et al., 1990; Zhang & Kornblum, 1998; Zhang et al., 1999). According to this approach, conflict arises due to overlap between the dimensions of the Stroop stimulus at the level of stimulus processing (Stimulus–Stimulus or S–S overlap) and at the level of response production (Stimulus–Response or S–R overlap). At the level of stimulus processing, interference can occur at the perceptual encoding, memory retrieval, conceptual encoding, and stimulus comparison stages. At the level of response production, interference can occur at response selection, motor programming, and response execution. In the Stroop task, the relevant and irrelevant dimensions both involve colors and would thus produce Stimulus–Stimulus conflict, and both overlap with the response (S–R overlap) because the response involves color classification. We also include phonological processing and word frequency in the informational conflict taxon (cf. Levin & Tzelgov, 2016). We discuss informational conflict and its varieties in the first section, entitled ‘Decomposing informational conflict’.

Task conflict, as noted above, arises when two task sets compete for resources. In the Stroop task, the task set for color identification is endogenously and purposively activated, and the task set for word reading is exogenously activated on presentation of the word. The simultaneous activation of two task sets creates conflict even before the identities of the Stroop dimensions have been processed. Therefore, this form of conflict is generated by all irrelevant words in the Stroop task, including congruent and neutral words (Monsell et al., 2001). We discuss task conflict in the section ‘Task conflict’. We then discuss the often overlooked phenomenon of Stroop facilitation in the section entitled ‘Informational facilitation’. In the section entitled ‘Other evidence relevant to the issue of locus vs. loci of the Stroop effect’, we consider the influence of response mode (vocal, manual, oculomotor) on the variety of conflict and facilitation observed, in the subsection ‘Response modes and the loci of the Stroop effect’, and we consider whether conflict and facilitation effects are resolved even once a response has been favored, in the subsection ‘Beyond response selection: Stroop effects on response execution’. In the final section, entitled ‘Locus or loci of selection?’, we use the outcome of these deliberations to discuss the second issue of whether the evidence supports attentional selection at a single locus or at multiple loci.

Decomposing informational conflict

A seminal paper by George S. Klein (Klein, 1964) represents a critical impetus for understanding different types of informational conflict. Up until Klein, all studies had utilized incongruent color-word stimuli as the irrelevant dimension. Klein was the first to manipulate the relatedness of the irrelevant word to the relevant color responses to determine the “evocative strength of the printed word” (1964, p. 577). To this end, he compared color-naming times for lists of nonsense syllables, low-frequency non-color-related words, high-frequency non-color words, words with color-related meanings (semantic associates: e.g., lemon, frog, sky), color words that were not in the set of possible response colors (non-response set stimuli), and color words that were in the set of possible response colors (response set stimuli). Response times increased linearly in the order listed above. Whilst lists of nonsense syllables vs. low-frequency words, high-frequency words vs. semantic-associative stimuli, and semantic-associative stimuli vs. non-response set stimuli did not differ, all other comparisons were significant.

It is important to underscore that, for Klein himself, there was no competition between semantic nodes or at any other stage of processing, and thus no need for attentional selection other than at the response stage. Only when both the irrelevant word and the relevant color are processed to the point of providing evidence towards different motor responses do the two sources of information compete. Said differently, whilst he questioned the effect of semantic relatedness, Klein assumed that semantic relatedness would only affect the strength of activation of alternative motor responses. Highlighting his favoring of a single late locus for attentional selection, Klein noted that words that are semantically distant from the color name would be less likely to “arouse the associated motor-response in competitive intensity” (p. 577). Although others (e.g., the early selection accounts mentioned above) have argued for competition and selection occurring earlier than response output, a historically favored view of the Stroop interference effect as resulting solely from response conflict has prevailed (MacLeod, 1991), such that so-called informational conflict (MacLeod & MacDonald, 2000) is viewed as being essentially solely response conflict. That is, the color and word dimensions are processed sufficiently to produce evidence towards different responses, and before the word dimension is incorrectly selected, mechanisms of selective attention at response output have to either inhibit the incorrect response or bias the correct response.

Response and semantic level processing

To assess the extent to which we can (or cannot) move forward from this latter view, we describe and critically evaluate methods used to dissociate and measure the potentially independent contributions of response and semantic conflict. We start by considering so-called same-response trials before going on to consider semantic-associative trials, non-response set trials and a method that has used semantic distance on the electromagnetic spectrum as a way to determine the involvement of semantic conflict in the color-word Stroop task. Indeed, this is an important first step for determining whether at this point informational conflict can (or cannot) be reliably decomposed.

Same-response trials

Same-response trials utilize a two-to-one color-response mapping and have become the most popular way of distinguishing semantic and response conflict in recent studies (e.g., Chen et al., 2011; Chen, Lei, Ding, Li, & Chen, 2013a; Chen, Tang & Chen, 2013b; Jiang et al., 2015; van Veen & Carter, 2005). First introduced by De Houwer (2003), this method maps two color responses to the same response button (see Fig. 1), which allows for a distinction between stimulus–stimulus (lexico-semantic) and stimulus–response (response) conflict.

By mapping two response options onto the same response key (e.g., both ‘blue’ and ‘yellow’ are assigned to the ‘z’ key), certain stimulus combinations (e.g., the word blue printed in yellow) are purported not to involve competition at the level of response selection; thus, any interference on same-response trials is thought to involve only semantic conflict. Any additional interference on different-response incongruent trials (e.g., the word red printed in yellow, where ‘red’ and ‘yellow’ are assigned to different response keys) is taken as an index of response conflict. Performance on congruent trials (sometimes referred to as identity trials when used in the context of the two-to-one color-response mapping paradigm, hereafter the 2:1 paradigm) is compared to performance on same-response incongruent trials to reveal interference that can be attributed only to semantic conflict, whereas the comparison of different-response incongruent with same-response incongruent trials is taken as an index of response conflict. Thus, the main advantage of using same-response incongruent trials as an index of semantic conflict is that this approach claims to remove all of the influence of response competition (De Houwer, 2003 ). Notably, according to some models of Stroop task performance, same-response incongruent trials should not produce interference because they do not involve response conflict (Cohen, Dunbar & McClelland, 1990 ; Roelofs, 2003 ).

Despite providing a seemingly convenient measure of semantic and response conflict, the studies that have employed the 2:1 paradigm share one major issue: that of an inappropriate baseline (see MacLeod, 1992 ). Same-response incongruent trials have consistently been compared to congruent trials to index semantic conflict. However, congruent trials also involve facilitation (both response and semantic facilitation; see below for more discussion of this) and, thus, the difference between these two trial types could simply be facilitation and not semantic interference, a possibility De Houwer ( 2003 ) alluded to in his original paper (see also Schmidt et al., 2018 ). And whilst same-response trials plausibly involve semantic conflict, they are also likely to involve response facilitation because, despite being semantically incongruent, the two dimensions of this type of Stroop stimulus provide evidence towards the same response. This means that both same-response and congruent trials involve response facilitation. Therefore, the difference between same-response and congruent trials would actually be semantic conflict (experienced on same-response trials) plus semantic facilitation (experienced on congruent trials), not just semantic conflict. This also has ramifications for the difference between different-response and same-response trials, since the involvement of response facilitation on same-response trials means that the comparison of these two trial types would actually be response conflict plus response facilitation, not just response conflict.
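The subtraction logic criticized above can be made explicit with a toy additive model. The sketch below is purely illustrative: it assumes (our assumption, not a claim made in the studies reviewed) that each trial type's mean RT is a shared baseline plus or minus independent conflict and facilitation components, and all magnitudes are invented. Under that assumption, the conventional "semantic" index equals semantic conflict plus semantic facilitation, and the "response" index equals response conflict plus response facilitation.

```python
# Toy additive model of the 2:1 paradigm (illustrative assumption only).
# All component magnitudes are hypothetical, in milliseconds.

def condition_means(base, sem_conflict, sem_facil, resp_conflict, resp_facil):
    """Hypothetical mean RTs for each trial type under an additive model."""
    return {
        # Non-color-word neutral baseline (e.g., 'table' in red).
        "neutral": base,
        # Word and color agree semantically and at the response level.
        "congruent": base - sem_facil - resp_facil,
        # Semantically incongruent, but word and color map to the same key.
        "same_response": base + sem_conflict - resp_facil,
        # Incongruent at both the semantic and the response level.
        "different_response": base + sem_conflict + resp_conflict,
    }


def standard_indices(rt):
    """Subtractions conventionally used to index conflict in the 2:1 paradigm."""
    return {
        # Intended as 'semantic conflict'; equals sem_conflict + sem_facil here.
        "semantic_index": rt["same_response"] - rt["congruent"],
        # Intended as 'response conflict'; equals resp_conflict + resp_facil here.
        "response_index": rt["different_response"] - rt["same_response"],
        # Neutral-baseline comparison; equals sem_conflict - resp_facil here.
        "same_response_vs_neutral": rt["same_response"] - rt["neutral"],
    }


if __name__ == "__main__":
    rt = condition_means(base=600, sem_conflict=20, sem_facil=10,
                         resp_conflict=40, resp_facil=15)
    print(standard_indices(rt))
```

With these invented values the "semantic" index is 30 ms although only 20 ms is semantic conflict, and setting `sem_conflict=0` still yields a 10 ms "semantic" index. The neutral comparison, by contrast, shrinks toward zero whenever semantic conflict and response facilitation are of similar size, so a null difference there is ambiguous under this model.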

Hasshim and Parris ( 2014 ) explored this possibility by comparing same-response incongruent trials to non-color-word neutral trials. They reasoned that this comparison could reveal faster RTs to same-response incongruent trials, thereby providing evidence for response facilitation on same-response trials. Alternatively, it could reveal faster RTs to non-color-word neutral trials, which would provide evidence for semantic interference (and would indicate that whatever response facilitation is present is masked by an opposing and greater amount of semantic conflict). Hasshim and Parris reported no statistical difference between the RTs of the two trial types and reported Bayes Factors indicating evidence in favor of the null hypothesis of no difference. This suggests that, when reaction time is the index of performance, same-response incongruent trials cannot be employed as a measure of semantic conflict, since they do not differ from non-color-word neutral trials. In a later study, the same researchers investigated whether the 2:1 paradigm could still reveal semantic conflict when a more sensitive measure of performance than RT was used (Hasshim & Parris, 2015 ). They attempted to provide evidence for semantic conflict using an oculomotor Stroop task and an early, pre-response pupillometric measure of effort, which had previously been shown to provide a reliable alternative measure of differences between conditions (Hodgson et al., 2009 ). However, in line with their previous findings, they reported Bayes Factors indicating evidence for the null hypothesis of no difference between same-response incongruent trials and non-color-word neutral trials.
These findings, therefore, suggest that the difference between same-response incongruent trials and congruent trials indexes facilitation on congruent trials, and that same-response incongruent trials are not a reliable measure of semantic conflict when reaction times or pupillometry are used as the dependent variable. Notably, Hershman and Henik ( 2020 ) included neutral trials in their study of the 2:1 paradigm, but did not report statistics comparing same-response and neutral trials (although they did report differences between same-response and congruent trials, where the latter had RTs similar to those of their neutral trials). It is clear from their Fig. 1, however, that pupil sizes for neutral and same-response trials begin to diverge at around the time the button-press response was made. This divergence becomes much larger ~500 ms post-response, indicating that a difference between the two trial types is detectable using pupillometry. Importantly, however, Hershman and Henik employed repeated letter strings as their neutral condition, which do not involve task conflict (see the section on task conflict below for more details). This means that any differences between their neutral trials and same-response trials could be entirely due to task conflict rather than semantic conflict.
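Evidence for a null difference, as in the same-response versus neutral comparisons above, is quantified with a Bayes Factor. As a rough illustration only, the sketch below uses the Bayesian Information Criterion (BIC) approximation for a paired comparison, BF01 ≈ √n · (1 + t²/(n − 1))^(−n/2), where t is the paired t statistic and n the number of participants. This approximation is a stand-in chosen for brevity and is not necessarily the Bayes Factor computation used in the studies cited; the per-participant means in the example are invented.

```python
import math
from statistics import mean, stdev


def bf01_bic_paired(x, y):
    """BIC-approximated Bayes Factor favoring H0 (no mean difference)
    for paired per-participant scores x and y. Values above 1 favor H0."""
    diffs = [a - b for a, b in zip(x, y)]
    n = len(diffs)
    # Paired t statistic: mean difference divided by its standard error.
    t = mean(diffs) / (stdev(diffs) / math.sqrt(n))
    return math.sqrt(n) * (1 + t * t / (n - 1)) ** (-n / 2)


if __name__ == "__main__":
    # Hypothetical per-participant mean RTs (ms), invented for illustration.
    same_response = [600, 620, 580, 610, 590, 605, 615, 595]
    neutral = [602, 617, 583, 608, 591, 603, 618, 594]
    print(round(bf01_bic_paired(same_response, neutral), 2))
```

The BIC approximation implies one particular default prior; default-prior Bayes Factors such as the JZS Bayes Factor, available in standard statistical packages, are more flexible and are what one would normally report. The sketch only makes the logic of "evidence for the null" concrete.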

However, despite Hasshim and Parris consistently reporting no difference between same-response and non-color-word neutral trials, Lakhzoum ( 2017 ), in an unpublished study, reported a significant difference between these two trial types. Lakhzoum’s study contained no special modifications to induce a difference between them, and had roughly similar trial and participant numbers and a similar experimental set-up to Hasshim and Parris. Yet Lakhzoum observed the effect that Hasshim and Parris have consistently failed to observe. The one clear difference between Lakhzoum ( 2017 ) and Hasshim and Parris ( 2014 , 2015 ), however, was that Lakhzoum tested French participants with stimuli presented in French, whereas Hasshim and Parris conducted their studies in English. A question for further research, then, is whether and to what extent language, including factors such as the orthographic depth of its written script, might modify the utility of same-response trials as an index of semantic conflict.

Thus, although the 2:1 paradigm has limitations, more research is needed to assess its utility for distinguishing response and semantic conflict. Notably, in both of their studies Hasshim and Parris used colored patches as the response targets (Hasshim & Parris, 2015 , used them at least initially, replacing them with white patches after the practice trials), which could have reduced the magnitude of the Stroop effect (Sugg & McDonald, 1994 ). Same-response trials cannot, for obvious reasons, be used with a vocal response, the commonly used response mode that increases Stroop effects (see the Response Modes and varieties of conflict section below), but future studies could use written word labels, a manipulation that has also been shown to increase Stroop effects (Sugg & McDonald, 1994 ) and thus might reveal a difference between same-response incongruent and non-color-word neutral conditions. At the very least, future studies employing same-response incongruent trials should also employ a neutral non-color-word baseline (as opposed to the color patches used by Shichel & Tzelgov, 2018 ) to properly index semantic conflict, and should avoid the confounding issues associated with congruent trials (see also the section on Informational Facilitation below).

As noted above, same-response incongruent trials are also likely to involve response facilitation, since both dimensions (word and color) provide evidence toward the same response. Since congruent trials and same-response incongruent trials both involve response facilitation, the difference between the two conditions likely represents semantic facilitation, not semantic conflict. As a consequence, indexing response conflict via the difference between different-response and same-response trials is also problematic. Until further work clarifies these issues, studies applying the 2:1 paradigm to understand the neural substrates of semantic and response conflict (e.g., van Veen & Carter, 2005 ) or wider issues such as anxiety (Berggren & Derakshan, 2014 ) remain difficult to interpret.

Non-response set trials

Non-response set trials are trials on which the irrelevant color word used is not part of the response set (e.g., the word ‘orange’ in blue, where orange is not a possible response option and blue is; originally introduced by Klein, 1964 ). Since the non-response set color word will activate color-processing systems, interference on such trials has been interpreted as evidence for conflict occurring at the semantic level. These trials should in theory remove the influence of response conflict because the irrelevant color word is not a possible response option and thus, conflict at the response level is not present. The difference in performance between the non-response set trials and a non-color-word neutral baseline condition (e.g., the word ‘table’ in red) is taken as evidence of interference caused by the semantic processing of the irrelevant color word (i.e., semantic conflict). In contrast, response conflict can be isolated by comparing the difference between the performance on incongruent trials and the non-response set trials. This index of response conflict has been referred to as the response set effect (Hasshim & Parris, 2018 ; Lamers et al., 2010 ) or the response set membership effect (Sharma & McKenna, 1998 ) and describes the interference that is a result of the irrelevant word denoting a color that is also a possible response option. The aim of non-response set trials is to provide a condition where the irrelevant word is semantically incongruent with the relevant color such that the resultant semantic conflict is the only form of conflict present.
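The trial taxonomy and the two subtractions described above can be sketched as follows. The response set, the extra color words, and the trial RTs are hypothetical, and the index labels follow the conventional interpretation in the text, which the following paragraph questions.

```python
from statistics import mean

# Hypothetical four-color response set plus color words outside it.
RESPONSE_SET = {"red", "blue", "green", "yellow"}
COLOR_WORDS = RESPONSE_SET | {"orange", "purple", "brown", "pink"}


def trial_type(word, ink):
    """Classify a (word, ink) trial using the taxonomy in the text."""
    word = word.lower()
    if word not in COLOR_WORDS:
        return "neutral"            # non-color word, e.g. 'table' in red
    if word == ink:
        return "congruent"
    if word in RESPONSE_SET:
        return "incongruent"        # word names another possible response
    return "non_response_set"       # color word that is never a response


def conflict_indices(trials):
    """trials: iterable of (word, ink, rt_ms); returns the two subtractions."""
    rts = {}
    for word, ink, rt in trials:
        rts.setdefault(trial_type(word, ink), []).append(rt)
    m = {kind: mean(values) for kind, values in rts.items()}
    return {
        # Conventionally read as semantic conflict (contested in the text).
        "semantic_conflict": m["non_response_set"] - m["neutral"],
        # Response set (membership) effect, read as response conflict.
        "response_set_effect": m["incongruent"] - m["non_response_set"],
    }


if __name__ == "__main__":
    trials = [
        ("table", "red", 600), ("chair", "blue", 610),
        ("orange", "blue", 640), ("purple", "red", 650),
        ("red", "blue", 700), ("green", "yellow", 720),
        ("red", "red", 560),
    ]
    print(conflict_indices(trials))
```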

It has been argued that the interference measured using non-response set trials, the non-response set effect, is an indirect measure of response conflict (Cohen et al., 1990 ; Roelofs, 2003 ) and is, thus, not a measure of semantic conflict. That is, the non-response set effect results from the semantic link between the non-response set words and the response set colors and indirect activation of the other response set colors leads to response competition with the target color. As far as we are aware there is no study that has provided or attempted to provide evidence that is inconsistent with this argument. Thus, for non-response set trials to have utility in distinguishing response and semantic conflict, future research will need to evidence the independence of these types of conflict in RTs and other dependent measures.

Semantic-associative trials

Another method that has been used to tease apart semantic and response conflict employs words that are semantically associated with colors (e.g., sky-blue, frog-green). In trials of this kind (e.g., sky printed in green), first introduced by Klein ( 1964 ), each irrelevant word is semantically related to one of the response colors. Recall that, for Klein, this was a way of investigating different magnitudes of response conflict (the indirect response conflict interpretation). The idea of comparing RTs on color-associated incongruent trials with those on color-neutral trials to specifically isolate semantic conflict (i.e., the so-called “sky-put” design) was first suggested by Neely and Kahan ( 2001 ). It was later implemented empirically by Manwell, Roberts and Besner ( 2004 ) and has since been used in multiple studies investigating Stroop interference (e.g., Augustinova & Ferrand, 2014 ; Risko et al., 2006 ; Sharma & McKenna, 1998 ; White et al., 2016 ).

Interference observed when using semantic associates tends to be smaller than when using non-response set trials (Klein, 1964 ; Sharma & McKenna, 1998 ). This suggests that semantic associates may not capture semantic interference in its entirety (or, alternatively, that non-response set trials involve some response conflict). Sharma and McKenna ( 1998 ) postulated that this is because non-response set trials involve an additional level of semantic processing which, following Neumann ( 1980 ) and La Heij, Van der Heijden, and Schreuder ( 1985 ), they called semantic relevance (because color words are also relevant in a task in which participants identify colors). However, the smaller interference observed with semantic associates compared to non-response set trials can also be conceptualized simply as reflecting a weaker semantic association with the response colors for non-color words (sky-blue) than for color words (red–blue).

As with non-response set trials, it is unclear whether semantic associates exclude the influence of response competition because they too can be modeled as indirect measures of response conflict (e.g., Roelofs, 2003 ). Since semantic-associative interference could be the result of the activation of the set of response colors to which they are associated (for instance when sky in red activates competing response set option blue), it does not allow for a clear distinction between semantic and response processes. In support of this possibility, Risko et al. ( 2006 ) reported that approximately half of the semantic-associative Stroop effect is due to response set membership and therefore response level conflict. The raw effect size of pure semantic-associative interference (after interference due to response set membership was removed) in their study was only between 6 ms (manual response, 112 participants) and 10 ms (vocal response, 30 participants).

When the same group investigated this issue with a different approach (i.e., ex-Gaussian analysis), their conclusions were quite different. White and colleagues ( 2016 ) found the semantic Stroop interference effect (the difference between semantic-associative and color-neutral trials) in the mean of the normal distribution (mu) and in the standard deviation of the normal distribution (sigma), but not in the tail of the RT distribution (tau). This finding differed from past studies that found standard Stroop interference in all three parameters (see, e.g., Heathcote et al., 1991 ). Therefore, White and colleagues reasoned that the source of the semantic (as opposed to the standard) Stroop effect is different, such that the interference associated with response competition on standard color-incongruent trials (which is seen in tau) is absent for incongruent semantic associates. However, White et al. only investigated semantic conflict. A more recent study that considered both response and semantic conflict in the same experiment found that they influence similar portions of the RT distribution (Hasshim, Downes, Bate, & Parris, 2019 ), suggesting that ex-Gaussian analysis cannot be used to distinguish the two types of conflict.
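An ex-Gaussian distribution is the sum of a normal component (mean mu, standard deviation sigma) and an independent exponential component (mean tau), so the RT mean is mu + tau, the variance is sigma² + tau², and the third central moment is 2tau³. The sketch below uses these identities to recover the three parameters by the method of moments. Published ex-Gaussian analyses typically fit the parameters by maximum likelihood instead; the method of moments is a simpler, noisier stand-in chosen here for brevity, and the simulated parameter values are arbitrary.

```python
import random


def simulate_exgauss(mu, sigma, tau, n, seed=1):
    """Draw n hypothetical RTs: Normal(mu, sigma) plus Exponential(mean tau)."""
    rng = random.Random(seed)
    return [rng.gauss(mu, sigma) + rng.expovariate(1 / tau) for _ in range(n)]


def exgauss_moments(rts):
    """Method-of-moments estimates of (mu, sigma, tau) from a list of RTs."""
    n = len(rts)
    m = sum(rts) / n
    m2 = sum((x - m) ** 2 for x in rts) / n  # variance = sigma**2 + tau**2
    m3 = sum((x - m) ** 3 for x in rts) / n  # third moment = 2 * tau**3
    # Guard against negative sample skew, which has no ex-Gaussian solution.
    tau = (max(m3, 0.0) / 2) ** (1 / 3)
    sigma = max(m2 - tau ** 2, 0.0) ** 0.5
    mu = m - tau  # mean = mu + tau
    return mu, sigma, tau


if __name__ == "__main__":
    # Arbitrary generating values (ms), chosen only for the demonstration.
    rts = simulate_exgauss(mu=500, sigma=50, tau=100, n=200_000)
    print([round(v, 1) for v in exgauss_moments(rts)])
```

With a large simulated sample the estimates land close to the generating values; with the few hundred trials per condition typical of Stroop experiments, moment-based estimates of tau and sigma are considerably less stable.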

Interestingly, Schmidt and Cheesman ( 2005 ) explored whether semantic-associative trials involve response conflict by employing the 2:1 paradigm described above. With standard Stroop stimuli, they reported the usual differences between same- and different-response incongruent trials (thought to indicate response conflict) and between congruent and same-response incongruent trials (thought to indicate semantic conflict in the 2:1 paradigm). However, with semantic-associative stimuli they observed only an effect of semantic conflict, a finding that differs from that of Risko et al. ( 2006 ), whose results indicate an effect of response conflict with semantic-associative stimuli. But, as already noted, the issues associated with employing only congruent trials as a baseline in the 2:1 paradigm and the potential response facilitation on same-response trials lessen the interpretability of this result.

Complicating matters further still, Lorentz et al. ( 2016 ) showed that the semantic-associative Stroop effect is not present in reaction time data when response contingency (a measure of how often an irrelevant word is paired with any particular color) is controlled by employing two separate contingency-matched non-color-word neutral conditions (but see Selimbegovic, Juneau, Ferrand, Spatola & Augustinova, 2019 ). There was, however, evidence for Stroop facilitation with these stimuli and for interference effects in the error data. Nevertheless, studies utilizing semantic-associative stimuli that have not controlled for response contingency might not have accurately indexed semantic-associative interference. Future research should focus on assessing the magnitude of the semantic-associative Stroop interference effect after the influences of response set membership and response contingency have been controlled.
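Response contingency, as defined above, can be tabulated directly from a trial list as the proportion of a word's presentations that occur in each ink color. The minimal sketch below does only that tabulation; the trial list is hypothetical, and it is not a reconstruction of the contingency-matching procedure used by Lorentz et al. ( 2016 ).

```python
from collections import Counter, defaultdict


def contingencies(trials):
    """Return P(ink | word) for each irrelevant word.

    trials: iterable of (word, ink) pairs from a hypothetical trial list.
    """
    counts = defaultdict(Counter)
    for word, ink in trials:
        counts[word][ink] += 1
    table = {}
    for word, inks in counts.items():
        total = sum(inks.values())
        table[word] = {ink: n / total for ink, n in inks.items()}
    return table


if __name__ == "__main__":
    # 'sky' appears in red on half of its trials, so the word predicts red;
    # 'table' is spread evenly, so it predicts no particular ink.
    trials = [
        ("sky", "red"), ("sky", "red"), ("sky", "green"), ("sky", "yellow"),
        ("table", "red"), ("table", "blue"), ("table", "green"), ("table", "yellow"),
    ]
    for word, probs in contingencies(trials).items():
        print(word, probs)
```

In a contingency-matched design, these conditional distributions would be made comparable across the critical and neutral conditions, so that no condition's words are more predictive of a particular ink than another's.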

Levin and Tzelgov ( 2016 ) also reported that they failed to observe the semantic-associative Stroop effect across multiple experiments using a vocal response (in both Hebrew and Russian). Only when the semantic associations were primed via a training protocol were semantic-associative Stroop effects observed, although they were not able to consistently report evidence for the null hypothesis of no difference. They subsequently argued that the semantic-associative Stroop effect is probably present but is a small and “unstable” contributor to Stroop interference. This is a somewhat surprising conclusion given the small but consistent effects reported by others with a vocal response (Klein, 1964 ; Risko et al., 2006 ; Scheibe et al., 1967 ; White et al., 2016 ; see Augustinova & Ferrand, 2014 , for a review). However, it seems reasonable to conclude that the semantic-associative Stroop effect is not easily observed, especially with a manual response (e.g., Sharma & McKenna, 1998 ).

Finally, any observed semantic-associative interference could be interpreted as an indirect measure of response competition (even after factors such as response set membership and response contingency are controlled). Indeed, the colors associated with the semantic-associative stimuli are also linked to the response set colors (Cohen et al., 1990 ; Roelofs, 2003 ) and, thus, semantic associates do not generate an unambiguous measure of semantic conflict, at least when only RTs are used. It therefore seems essential for future research to investigate this issue with additional, and perhaps more refined, indicators of response processing such as electromyography (EMG).

Semantics as distance on the electromagnetic spectrum

Klopfer ( 1996 ) demonstrated that RTs were slower when both dimensions of the Stroop stimulus were closely related on the electromagnetic spectrum. The electromagnetic spectrum is the range of frequencies (and corresponding wavelengths) of electromagnetic radiation, including those of visible light. The visible portion of the spectrum runs from red, with the longest wavelengths, to violet, with the shortest, with orange, yellow, green and blue (amongst others) in between. The Stroop effect has been reported to be larger when the color and word dimensions of the Stroop stimulus are close on the spectrum (e.g., blue in green) than when they are distant (e.g., blue in red; see also Laeng et al., 2005 , for an effect of color opponency on Stroop interference). In other words, Stroop interference is greater when the semantic distance in “color space” between the color denoted by the word and the target color is smaller, which makes it seemingly difficult to argue that semantic conflict does not contribute to Stroop interference. However, Kinoshita, Mills, and Norris ( 2018 ) recently failed to replicate this electromagnetic spectrum effect, indicating that more research is needed to assess whether it is robust. Even if replicated, however, this manipulation cannot escape the interpretation of semantic conflict as the indirect indexing of response conflict. Future replication attempts therefore also call for additional indicators of response processing, or the lack thereof.
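One simple way to operationalize proximity on the spectrum is the ordinal distance between two hues in spectral order. The sketch below assumes six hues (indigo omitted) and ordinal rather than wavelength distance; both choices are simplifications for illustration and are not taken from Klopfer ( 1996 ).

```python
from itertools import permutations

# Hues in spectral order, from longest (red) to shortest (violet) wavelength.
# The six-hue list is a simplifying assumption; indigo is omitted.
SPECTRUM = ["red", "orange", "yellow", "green", "blue", "violet"]


def spectral_distance(word, ink):
    """Ordinal distance between the named color and the ink color."""
    return abs(SPECTRUM.index(word) - SPECTRUM.index(ink))


def split_by_distance(pairs, cutoff=1):
    """Split incongruent (word, ink) pairs into near and far sets."""
    near = [p for p in pairs if spectral_distance(*p) <= cutoff]
    far = [p for p in pairs if spectral_distance(*p) > cutoff]
    return near, far


if __name__ == "__main__":
    incongruent = list(permutations(SPECTRUM, 2))
    near, far = split_by_distance(incongruent)
    print(spectral_distance("blue", "green"), spectral_distance("blue", "red"))
    print(len(near), "near pairs,", len(far), "far pairs")
```

Here blue in green is one step apart and blue in red four steps apart; with a cutoff of one step, the 30 incongruent pairs split into 10 near and 20 far pairs.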

Can we distinguish the contribution of response and semantic processing?

Perhaps because of the historical competition between early- and late-selection single-stage accounts of Stroop interference (Logan & Zbrodoff, 1998 ; MacLeod, 1991 ), response and semantic conflict have been the most studied and, therefore, the most often compared types of conflict. For instance, a multitude of studies indicate that semantic conflict is often preserved when response conflict is reduced by experimental manipulations including hypnosis-like suggestion (Augustinova & Ferrand, 2012 ), priming (Augustinova & Ferrand, 2014 ), response–stimulus interval (Augustinova et al., 2018a ), viewing position (Ferrand & Augustinova, 2014a ) and single-letter coloring (Augustinova & Ferrand, 2007 ; Augustinova et al., 2010 , 2015 , 2018a , 2018b ). This dissociative pattern (i.e., significant semantic conflict while response conflict is reduced or even eliminated) is often viewed as indicating two qualitatively distinct types of conflict, suggesting that these manipulations prevent response conflict. However, these studies have commonly employed semantic-associative conflict, which could be indirectly measuring response conflict; it could, therefore, be argued that what remains is not a distinct type of conflict but simply residual response conflict (Cohen et al., 1990 ; Roelofs, 2003 ). It thus remains plausible that the dissociative pattern simply reflects quantitative differences in response conflict.

As we have discussed in this section, interference generated by non-response set trials and by trials that manipulate proximity on the electromagnetic spectrum is prone to the same limitation (i.e., it can be interpreted as indirect response conflict). The 2:1 paradigm could in principle remove response conflict from the conflict equation, but the issues surrounding this manipulation need further research before we can be confident of its utility. Therefore, at this point, it seems reasonable to conclude that published research conducted so far with additional color-incongruent trial types (same-response, non-response set, or semantic-associative trials) does not permit the unambiguous conclusion that the informational conflict generated by standard color-incongruent trials (the word ‘red’ presented in blue) can be decomposed into semantic and response conflicts. More than ever, then, cumulative evidence from more time- and process-sensitive measures is required.

Other types of informational conflict: considering the role of phonological processing and word frequency

Whilst participants are asked to ignore the irrelevant word in the color-word Stroop task, it is clear that their attempts to do so are not successful. If word processing proceeds in an obligatory fashion such that before accessing the semantic representation of the irrelevant word, the letters, orthography, and phonology are also processed, interference could happen at these levels of processing. But, as anticipated by Klein ( 1964 ), just because the word is processed at these levels does not mean that each leads to level-specific conflict. To determine whether or not these different levels of processing also independently contribute to Stroop interference, various trial types and manipulations have been employed that have attempted to dissociate pre-semantic levels of processing. The most notable methods are: (1) phonological overlap between the irrelevant word and color name; (2) the use of pseudowords; and (3) manipulation of word frequency. This section attempts to identify whether pre-semantic processing of the irrelevant word reliably leads to conflict (or facilitation) at levels other than response output.

Phonological overlap between word and color name

A study by Dalrymple-Alford ( 1972 ) presented evidence for solely phonological interference in the Stroop task. Dalrymple-Alford manipulated the phonemic overlap between the irrelevant word and color name. For example, if the color to be named was red, the to-be-ignored word would be rat (sharing initial phoneme) or pod (sharing the end phoneme) or a word that shares no phoneme at all (e.g., fit ). Dalrymple-Alford reported evidence for greater interference at the initial letter than at the end letter position (similar effects were observed for facilitation). Using a more carefully designed set of stimuli (originally created by Coltheart et al., 1999 , who focused on just facilitation), Marmurek et al. ( 2006 ) also showed greater interference and facilitation at the initial letter position than the end letter position; although, in their study effects at the end letter position did not reach significance. This paradigm represents a direct measure of phonological processing that, importantly, does not have a semantic component (other than the weak conflict that would result from the activation of two semantic representations with unrelated meanings). However, in line with the interpretation by Coltheart et al. ( 1999 ), Marmurek and colleagues argued it was evidence for phonological processing of the irrelevant word that either facilitates or interferes with the production of the color name at the response output stage (see also Parris et al., 2019a , 2019b , 2019c ; Regan, 1978; Singer et al., 1975 ). Thus, whilst the word is processed phonologically, the only phonological representation with which the resulting representation could compete is that created during the phonological encoding of the color name, which would only be produced at later response processing levels. In sum, it is not possible to conclude in favor of qualitatively different conflict (or facilitation) other than that at the response level using this approach.
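The overlap manipulation can be illustrated by classifying each distractor according to where it overlaps with the color name. The sketch below is deliberately crude: it assumes that first and last letters stand in for initial and final phonemes, which works for the examples above (red with rat, pod and fit) but not in general; a real stimulus set would need phonemic transcriptions.

```python
def overlap_position(distractor, color_name):
    """Classify overlap as 'initial', 'final', or 'none'.

    Crude assumption: first/last letters stand in for first/last phonemes.
    A distractor overlapping at both ends is classed as 'initial'.
    """
    d, c = distractor.lower(), color_name.lower()
    if d[0] == c[0]:
        return "initial"
    if d[-1] == c[-1]:
        return "final"
    return "none"


if __name__ == "__main__":
    for word in ["rat", "pod", "fit"]:
        print(word, overlap_position(word, "red"))
```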

Pseudowords

A pseudoword is a non-word that is pronounceable (e.g., veglid ). In fact, some real words are so rare (e.g., helot , eft ) that to most readers they are equivalent to pseudowords. As noted above, Klein ( 1964 ) used rare words in the Stroop task and showed that they interfered less than higher-frequency words but more than consonant strings (e.g., GTBND ). Both Burt’s ( 2002 ) and Monsell et al.’s ( 2001 ) studies later supported the finding that pseudowords result in more interference than consonant strings. In recent work, Kinoshita et al. ( 2017 ) asked which aspects of the reading process are triggered by the irrelevant word stimulus to produce interference in the color-word Stroop task. They compared performance on five types of color-neutral letter strings with incongruent words: real words (e.g., hat ), pronounceable non-words (or pseudowords; e.g., hix ), consonant strings (e.g., hdk ), non-alphabetic symbol strings (e.g., &@£ ), and a row of Xs. They reported a word-likeness or pronounceability gradient, with real words and pseudowords producing an equal amount of interference (with interference increasing with string length) and more than that produced by consonant strings. Consonant strings produced more interference than symbol strings and the row of Xs, which did not differ from each other. Kinoshita and colleagues explained the absence of a lexicality effect (defined as color-neutral real words producing more interference than pseudowords) as a consequence of the pre-lexically generated phonology of the pronounceable irrelevant words interfering with the speech production processes involved in naming the color. Under this account, the phonological encoding of the color name (the segment-to-frame association processes in articulation planning) must be slowed by the computation of phonology that occurs independently of lexical status (because it happens with pronounceable pseudowords).
Notably, the authors reported evidence for pre-lexically generated phonology when participants responded vocally (by saying aloud the color name), but not when participants responded manually (by pressing a key that corresponds to the target color) suggesting the effects were the result of the need to articulate the color name.

Some pseudowords sound like color words (e.g., bloo) and are known as pseudohomophones. Besner and Stolz ( 1998 ) employed pseudohomophones as the irrelevant dimension and found substantial Stroop effects when compared to a neutral baseline (see also Lorentz et al., 2016 ; Monahan, 2001 ), suggesting that there is phonological conflict in the Stroop task. However, pseudohomophones do not involve only phonological conflict, since they share substantial orthographic overlap with their base words (e.g., bloo , yeloe , grene , wred ) and will likely activate the semantic representations of the colors indicated by the word via their shared phonology. In short, interference produced by pseudohomophones could result from phonological, orthographic, or semantic processing, but, importantly, it could also simply result from response conflict (see also the work of Tzelgov et al., 1996 , on cross-script homophones, which shows phonologically mediated semantic/response conflict, but not phonological conflict).

Taken together, this work shows a clear effect of phonological processing of the irrelevant word on Stroop task performance; and one that likely results from the pre-lexical phonological processing of the irrelevant word. Again, however, it is unclear whether the resulting competition arises at the pre-lexical level (suggesting the color name’s pre-lexical phonological representation is unnecessarily activated) or whether phonological processing of the irrelevant word leads to phonological encoding of that word that then interferes with the phonological encoding of the relevant color name. The latter seems more likely than the former.

High- vs. low-frequency words

In support of the notion that non-semantic lexical factors contribute to Stroop effects, studies have shown an effect of the word frequency of non-color-related words on Stroop interference. Word frequency refers to the likelihood of encountering a word in reading and conversation. It has long been known to contribute to word reading latency and, given that color words tend to be high-frequency words, it is possible that word frequency contributes to Stroop effects. Whilst the locus of word frequency effects in word reading is unclear, it is known that it takes longer to access the lexico-semantic (phonological/semantic) representations of low-frequency words (Gerhand & Barry, 1998 , 1999 ; Monsell et al., 1989 ).

According to influential models of the Stroop task, the magnitude of Stroop interference is determined by the strength of the connection between the irrelevant word and the response output level (Cohen et al., 1990 ; Kalanthroff et al., 2018 ; Zhang et al., 1999 ). Since high-frequency words are by definition encountered more often, their connections to the response output level should be stronger than those of low-frequency words. This leads to the prediction that color-naming times should be longer when the distractor word is of higher frequency. Evidence in support of this has been reported by Klein ( 1964 ), Fox et al. ( 1971 ) and Scheibe et al. ( 1967 ). However, Monsell et al. ( 2001 ) pointed out methodological issues in these older studies that could have confounded the results. First, these studies employed the card presentation version of the Stroop task, in which the items from each stimulus condition (e.g., all the high-frequency words) are placed on different cards and the time taken to respond to all the items on one card is recorded. This method, it was argued, could result in the adoption of different response criteria for the different cards and permits previews of the next stimulus, which could result in overlapping processing. Second, Monsell et al. noted that these studies employed a limited set of 4–5 stimuli in each condition, which were repeated numerous times on each card, possibly leading to practice effects that could nullify any effects of word frequency. After addressing these issues, Monsell et al. ( 2001 ) reported no effects of word frequency on color-naming times, although there was a non-significant tendency for low-frequency words to result in more interference than high-frequency words.
With the same methodological control as Monsell et al., but with a greater difference in frequency between the high- and low-frequency conditions, Burt (1994, 1999, 2002) has repeatedly reported that low-frequency words produce significantly more interference than high-frequency words (findings recently replicated by Navarrete et al., 2015). A recent study by Levin and Tzelgov (2016) also reported more interference for low-frequency words, although the effect was not consistent across experiments, possibly because they used a small set of words for each word class.

The repeated finding of greater interference for low-frequency words is consistent with the notion that word frequency contributes to response times in the Stroop task, but is inconsistent with the predictions of models of the class exemplified by Cohen et al. (1990). The finding of larger Stroop effects for lower-frequency words poses a potent challenge to the many models based on the Parallel Distributed Processing (PDP) connectionist framework (Cohen et al., 1990; Kalanthroff et al., 2018; Kornblum et al., 1990; Kornblum & Lee, 1995; Zhang & Kornblum, 1998; Zhang et al., 1999; see Monsell et al., 2001, for a full explanation). As noted, a fundamental tenet of these architectures implies that higher-frequency words should produce greater interference because they have stronger connection strengths with their word forms. Notably, although later studies did not support it, the absence of a word frequency effect in Monsell et al.'s data led them to conclude that another type of conflict, called task conflict, is involved in the Stroop task. It is to the topic of task conflict that we now turn.

Task conflict

The presence of task conflict in the Stroop task was first proposed in MacLeod and MacDonald's (2000) review of brain imaging studies (see also Monsell et al., 2001; see Littman et al., 2019, for a mini review). The authors proposed its existence because the anterior cingulate cortex (ACC) appeared to be more strongly activated by incongruent and congruent stimuli than by repeated letter neutral stimuli such as xxxx (e.g., Bench et al., 1993). MacLeod and MacDonald suggested that the increased ACC activation for congruent and incongruent stimuli signals the need to recruit control in response to task conflict. Since task conflict is produced by the activation of the mental machinery used for reading, interference at this level occurs with any stimulus that has an entry in the mental lexicon. Studies have used this logic to isolate task conflict from informational conflict (e.g., Entel & Tzelgov, 2018).

Congruent trials, proportion of repeated letter strings trials and negative facilitation

In contrast to color-incongruent trials, which are thought to produce both task and informational conflict, color-congruent trials are thought to produce only task conflict. Conflict of any type, by definition, increases response times; thus, reaction times on congruent trials can be expected to be longer than those on trials that do not activate a task set for word reading. Repeated color patches, symbols or letters (e.g., ■■■, xxxx or ####) have therefore been introduced as a baseline for such a comparison. Indeed, these trials are not expected to generate task conflict, as they do not activate an item in the mental lexicon. The difference between these non-linguistic baselines and congruent trials would therefore represent a measure of task conflict, which has been referred to as negative facilitation. However, a common finding in such experiments is that congruent trials still produce faster RTs than neutral non-word stimuli, that is, positive facilitation (Entel et al., 2015; see also Augustinova et al., 2019; Levin & Tzelgov, 2016; Shichel & Tzelgov, 2018), indicating that task conflict is not fully measured under such conditions. Goldfarb and Henik (2007) reasoned that this is likely because task conflict control is highly efficient, so that responses on congruent trials end up faster than those on a non-linguistic baseline, permitting the expression of positive facilitation.
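The sign convention behind positive and negative facilitation can be made concrete with a minimal sketch. The function names and reaction times below are hypothetical illustrations, not data from the studies cited; the sketch only assumes that facilitation is computed as the mean RT on the non-word baseline minus the mean RT on congruent trials, so that a negative value corresponds to negative facilitation.

```python
from statistics import mean


def facilitation_ms(congruent_rts, baseline_rts):
    """Mean non-word baseline RT minus mean congruent RT, in ms.

    > 0: positive facilitation (congruent faster than an xxxx-type baseline)
    < 0: negative facilitation (congruent slower; a signature of task conflict)
    """
    return mean(baseline_rts) - mean(congruent_rts)


def classify(effect_ms):
    """Label the net facilitation effect by its sign."""
    if effect_ms > 0:
        return "positive facilitation"
    if effect_ms < 0:
        return "negative facilitation"
    return "no facilitation"


# Hypothetical per-trial RTs (ms) for one participant; not real data.
congruent = [612, 598, 630, 605]
xxxx_baseline = [640, 652, 628, 636]

effect = facilitation_ms(congruent, xxxx_baseline)
print(effect, classify(effect))  # → 27.75 positive facilitation
```

The value is a net effect: any slowing due to task conflict and any speeding due to color congruency are summed into one number, so a positive value does not show that task conflict is absent, only that it was outweighed.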

To circumvent this issue, they attempted to reduce task conflict control by increasing the proportion of non-word neutral trials (repeated letter strings) to 75% (see also Kalanthroff et al., 2013). Increasing the proportion of non-word neutral trials should create the expectation of a low task conflict context, so that task conflict monitoring would effectively be offline. In addition, on half of the trials participants received cues indicating whether the upcoming stimulus would be a non-word or a color word, giving a further indication of whether the mechanisms that control task conflict should be engaged. On non-cued trials, when task conflict control was presumably at its nadir and task conflict therefore at its peak, RTs were slower for congruent trials than for non-word neutral trials, producing a negative facilitation effect. Goldfarb and Henik (2007) suggested that previous studies had not detected negative facilitation because resolving task conflict for congruent stimuli does not take long; thus, as mentioned above, positive facilitation had masked negative facilitation. In sum, by reducing task conflict control both globally (by increasing the proportion of neutral trials) and locally (by adding cues to half of the trials), Goldfarb and Henik were able to increase task conflict enough to demonstrate a negative facilitation effect, an effect that has been shown to be a robust, prime signature of task conflict (Goldfarb & Henik, 2006, 2007; Kalanthroff et al., 2013).

Steinhauser and Hübner (2009) manipulated task conflict control by combining the Stroop task with a task-switching paradigm, in which participants switch between naming the color and reading the irrelevant word (see Kalanthroff et al., 2013, for a discussion of task switching and task conflict). Both task sets are therefore active in this context, which means that on color-naming Stroop trials the word dimension of the stimulus will be more strongly associated with word processing than it otherwise would be. This should increase the conflict between the task set for color naming and the task set for word reading. Steinhauser and Hübner (2009) found that under these conditions participants performed worse on congruent (and incongruent) trials than on non-word neutral trials, evidencing negative facilitation, the key marker of task conflict. These results, showing increased task conflict when there is less control over the word-reading task set on color-naming trials, reaffirmed Goldfarb and Henik's (2007) finding that reducing task control on color-naming trials leads to task conflict.

Whilst both of the above methods are useful in showing that task conflict can influence the magnitude of Stroop interference and facilitation, both manipulations magnify task conflict (and likely other forms of conflict) beyond the levels present when such targeted manipulations are not used.

Repeated letter strings without a task conflict control manipulation

As has been noted, task conflict appears to be present whenever the irrelevant stimulus has an entry in the lexical system. Consequently, studies have used the difference in mean color-naming latencies between color-neutral words and repeated letter strings to index task conflict (Augustinova et al., 2018a; Levin & Tzelgov, 2016). However, Augustinova et al. argued that both types of stimuli might involve task conflict, in different quantities. This is because the processing activated by a string of repeated letters (e.g., xxx) stops at the orthographic pre-lexical level, whereas the processing activated by color-neutral words (e.g., dog) proceeds through to access to meaning (see also Augustinova et al., 2019; Ferrand et al., 2020); as such, the latter might activate the task set for word reading more strongly. Augustinova et al. (2019) reported task conflict (color-neutral minus repeated letter strings) with vocal responses but not with manual responses. Likewise, in a manual response study, Hershman et al. (2020) reported that repeated letter strings did not differ in Stroop interference from symbol strings, consonant strings and color-neutral words. All of these conditions, however, were responded to more slowly than congruent trials, evidencing facilitation on congruent trials. Levin and Tzelgov (2016) compared vocal color-naming times for repeated letter strings and shapes and found longer color-naming times for repeated letter strings, indicating some extra conflict with repeated letter strings, which they referred to as orthographic conflict; repeated letter strings could, however, also be expected to activate a task set for word reading.
The implication of this work is that whilst repeated letter strings can be used as a baseline against which to measure task conflict relative to color-neutral words, they are likely to be useful mainly with vocal responses (Augustinova et al., 2019) and, moreover, can be expected to produce some level of task conflict themselves (Levin & Tzelgov, 2016).
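The concern that repeated letter strings may themselves carry some task conflict can be made explicit with simple subtraction. The sketch below uses hypothetical condition means (not data from any study cited here) and assumes, following the logic above, that each contrast is a difference between mean color-naming times. Because the word-versus-shape contrast decomposes exactly into the word-versus-letter-string contrast plus the letter-string-versus-shape contrast (what Levin and Tzelgov called orthographic conflict), any slowing produced by letter strings is subtracted out of a letter-string-based task conflict index.

```python
# Hypothetical condition means (ms) from a vocal-response design; illustrative only.
means = {
    "color_neutral_word": 700.0,  # e.g., "dog"
    "repeated_letters": 680.0,    # e.g., "xxxx"
    "shape": 665.0,               # e.g., a row of unnamable shapes
}


def contrast(means, condition, baseline):
    """Mean RT difference in ms: condition minus baseline."""
    return means[condition] - means[baseline]


task_conflict_vs_letters = contrast(means, "color_neutral_word", "repeated_letters")
orthographic = contrast(means, "repeated_letters", "shape")
task_conflict_vs_shapes = contrast(means, "color_neutral_word", "shape")

# The shape-based estimate equals the letter-based estimate plus any extra
# slowing produced by letter strings, so the letter-string baseline
# underestimates task conflict whenever that extra slowing is positive.
assert task_conflict_vs_shapes == task_conflict_vs_letters + orthographic
print(task_conflict_vs_letters, orthographic, task_conflict_vs_shapes)  # → 20.0 15.0 35.0
```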

For a purer measure of task conflict, when eschewing the manipulations needed to produce negative facilitation, future research would do better to compare response times for color-neutral stimuli with those for shapes whilst employing a vocal response (Levin & Tzelgov, 2016; see Parris et al., 2019a, 2019b, 2019c, who reported no difference between color-neutral stimuli and unnamable/novel shapes with a manual response in an fMRI experiment). This does not mean, however, that task conflict is not measurable with manual responses in designs that eschew manipulations that produce negative facilitation: continuing their exploration of Stroop effects in pupillometric data, Hershman et al. (2020) reported larger pupils for congruent stimuli than for repeated letter strings (and also than for symbol strings, consonant strings and non-color-related words); in other words, they reported negative facilitation.

Does task conflict precede informational conflict?

The studies discussed above also suggest that task conflict occurs earlier than informational conflict. Hershman and Henik (2019) recently provided evidence that supports this supposition. Using incongruent, congruent and repeated letter string conditions, but without manipulating the task conflict context in a way that would produce negative facilitation, Hershman and Henik observed a large interference effect and a small, non-significant positive facilitation effect in RTs. However, the authors also recorded pupil dilation during task performance and reported both interference and negative facilitation in the pupil data (pupils were smaller for the repeated letter string condition than for congruent stimuli). Importantly, the pupil data began to distinguish between the repeated letter string condition and the two word conditions (incongruent and congruent) up to 500 ms before the incongruent and congruent trials diverged. In other words, task conflict appeared earlier than informational conflict in the pupil data.
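The notion of one contrast diverging before another can be sketched as follows. This is not Hershman and Henik's (2019) analysis; it is a minimal, hypothetical illustration that assumes condition-averaged pupil traces sampled every 100 ms, an arbitrary difference threshold, and a requirement that the difference persist for several consecutive samples. The traces are synthetic, chosen only so that the word conditions separate from the letter-string baseline before incongruent and congruent trials separate from each other.

```python
def divergence_onset(trace_a, trace_b, times_ms, threshold, min_run):
    """First time (ms) at which |a - b| exceeds `threshold` for at least
    `min_run` consecutive samples; None if that never happens.
    Threshold and run length are arbitrary illustrative choices."""
    run_start = None
    run_len = 0
    for t, a, b in zip(times_ms, trace_a, trace_b):
        if abs(a - b) > threshold:
            if run_len == 0:
                run_start = t  # candidate onset of a sustained difference
            run_len += 1
            if run_len >= min_run:
                return run_start
        else:
            run_len = 0  # difference not sustained; reset the run
    return None


# Synthetic, condition-averaged pupil changes (arbitrary units), 0-1500 ms.
times = list(range(0, 1600, 100))
letters = [0.0 for _ in times]                          # xxxx baseline
congruent = [0.05 if t >= 400 else 0.0 for t in times]  # word conditions rise
incongruent = [c + 0.05 if t >= 800 else c for t, c in zip(times, congruent)]

task_onset = divergence_onset(congruent, letters, times, threshold=0.02, min_run=3)
info_onset = divergence_onset(incongruent, congruent, times, threshold=0.02, min_run=3)
print(task_onset, info_onset)  # → 400 800
```

Comparing the two onsets is what "task conflict appears earlier" amounts to operationally; a real analysis would test the differences statistically across participants rather than apply a fixed threshold to averaged traces.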

Although it is not firmly established that task conflict precedes informational conflict on a single trial, recent research has shown that it certainly seems to come first developmentally. Comparing performance in 1st, 3rd and 5th graders, Ferrand and colleagues (2020) showed that 1st graders experience smaller Stroop interference effects (even when controlling for differences in processing speed) than 3rd and 5th graders. Importantly, whereas the Stroop interference effect in the older children is largely driven by response, semantic and task conflict, in the 1st graders (i.e., pre-readers) this interference effect was entirely due to task conflict. Indeed, these children produced slower color-naming latencies for all items with word distractors than for repeated letter strings, without being sensitive to color-(in)congruency or to the informational (phonological, semantic or response) conflict that it generates. The finding of task conflict's developmental precedence is consistent with evidence that visual expertise for letters (as evidenced by the aforementioned N170 tuning for print) is present even in pre-readers (Maurer et al., 2005).

A model of task conflict