Guide to Experimental Design | Overview, 5 steps & Examples

Published on December 3, 2019 by Rebecca Bevans . Revised on June 21, 2023.

Experiments are used to study causal relationships. You manipulate one or more independent variables and measure their effect on one or more dependent variables.

Experimental design means creating a set of procedures to systematically test a hypothesis. A good experimental design requires a strong understanding of the system you are studying.

There are five key steps in designing an experiment:

- Consider your variables and how they are related

- Write a specific, testable hypothesis

- Design experimental treatments to manipulate your independent variable

- Assign subjects to groups, either between-subjects or within-subjects

- Plan how you will measure your dependent variable

For valid conclusions, you also need to select a representative sample and control any extraneous variables that might influence your results. This minimizes several types of research bias, particularly sampling bias, survivorship bias, and attrition bias as time passes. If random assignment of participants to control and treatment groups is impossible, unethical, or highly difficult, consider an observational study instead.

Table of contents

- Step 1: Define your variables

- Step 2: Write your hypothesis

- Step 3: Design your experimental treatments

- Step 4: Assign your subjects to treatment groups

- Step 5: Measure your dependent variable

- Other interesting articles

- Frequently asked questions about experiments

You should begin with a specific research question. We will work with two research question examples, one from health sciences and one from ecology:

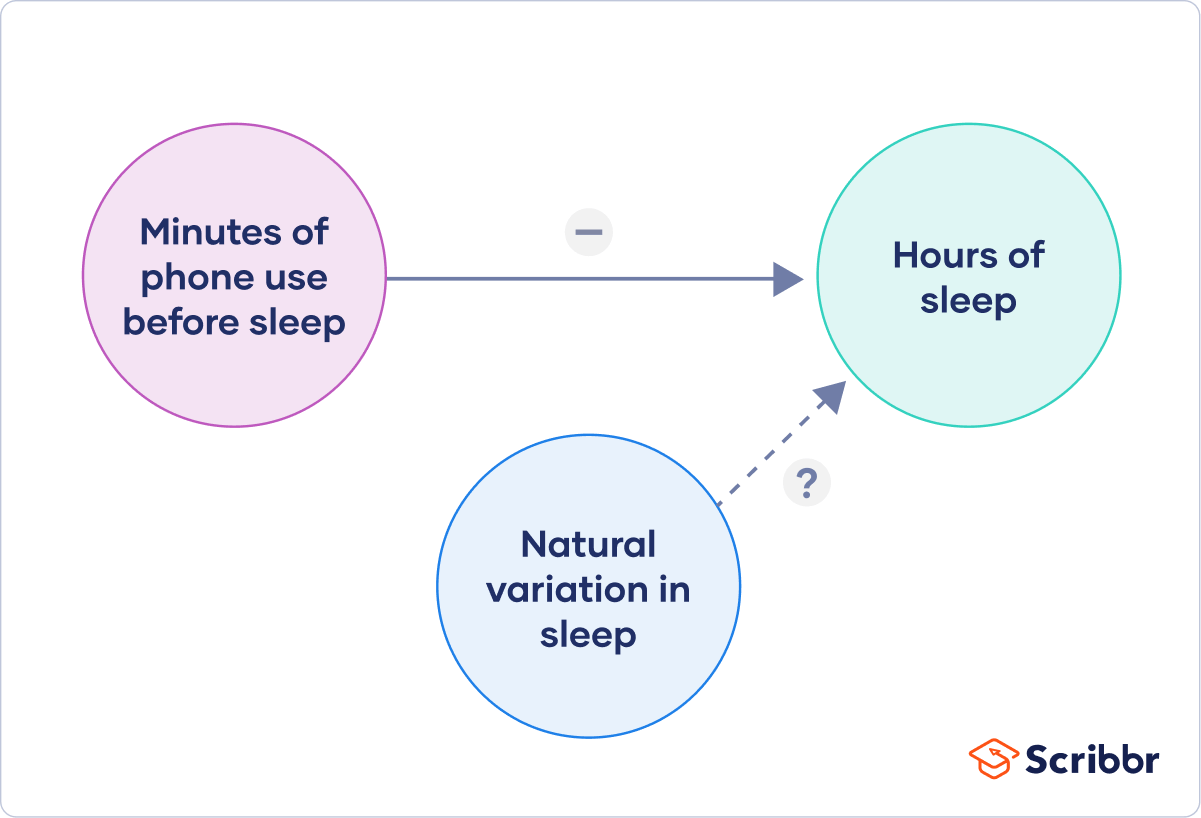

To translate your research question into an experimental hypothesis, you need to define the main variables and make predictions about how they are related.

Start by simply listing the independent and dependent variables.

| Research question | Independent variable | Dependent variable |
|---|---|---|
| Phone use and sleep | Minutes of phone use before sleep | Hours of sleep per night |
| Temperature and soil respiration | Air temperature just above the soil surface | CO2 respired from soil |

Then you need to think about possible extraneous and confounding variables and consider how you might control them in your experiment.

| Research question | Extraneous variable | How to control |
|---|---|---|
| Phone use and sleep | Variation in sleep patterns among individuals. | Measure the average difference between sleep with phone use and sleep without phone use rather than the average amount of sleep per treatment group. |
| Temperature and soil respiration | Soil moisture also affects respiration, and moisture can decrease with increasing temperature. | Monitor soil moisture and add water to make sure that soil moisture is consistent across all treatment plots. |

Finally, you can put these variables together into a diagram. Use arrows to show the possible relationships between variables and include signs to show the expected direction of the relationships.

Here we predict that increasing temperature will increase soil respiration and decrease soil moisture, while decreasing soil moisture will lead to decreased soil respiration.

Now that you have a strong conceptual understanding of the system you are studying, you should be able to write a specific, testable hypothesis that addresses your research question.

| Research question | Null hypothesis (H0) | Alternate hypothesis (Ha) |
|---|---|---|
| Phone use and sleep | Phone use before sleep does not correlate with the amount of sleep a person gets. | Increasing phone use before sleep leads to a decrease in sleep. |
| Temperature and soil respiration | Air temperature does not correlate with soil respiration. | Increased air temperature leads to increased soil respiration. |

The next steps will describe how to design a controlled experiment. In a controlled experiment, you must be able to:

- Systematically and precisely manipulate the independent variable(s).

- Precisely measure the dependent variable(s).

- Control any potential confounding variables.

If your study system doesn’t match these criteria, there are other types of research you can use to answer your research question.

How you manipulate the independent variable can affect the experiment’s external validity – that is, the extent to which the results can be generalized and applied to the broader world.

First, you may need to decide how widely to vary your independent variable. In the soil respiration example, you could increase air temperature:

- just slightly above the natural range for your study region.

- over a wider range of temperatures to mimic future warming.

- over an extreme range that is beyond any possible natural variation.

Second, you may need to choose how finely to vary your independent variable. Sometimes this choice is made for you by your experimental system, but often you will need to decide, and this will affect how much you can infer from your results. In the phone use example, you could treat phone use as:

- a categorical variable: either as binary (yes/no) or as levels of a factor (no phone use, low phone use, high phone use).

- a continuous variable (minutes of phone use measured every night).

How you apply your experimental treatments to your test subjects is crucial for obtaining valid and reliable results.

First, you need to consider the study size: how many individuals will be included in the experiment? In general, the more subjects you include, the greater your experiment’s statistical power, which determines how much confidence you can have in your results.

Then you need to randomly assign your subjects to treatment groups. Each group receives a different level of the treatment (e.g. no phone use, low phone use, high phone use).

You should also include a control group, which receives no treatment. The control group tells us what would have happened to your test subjects without any experimental intervention.

When assigning your subjects to groups, there are two main choices you need to make:

- A completely randomized design vs a randomized block design.

- A between-subjects design vs a within-subjects design.

Randomization

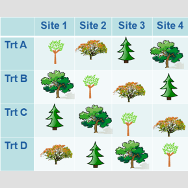

An experiment can be completely randomized or randomized within blocks (aka strata):

- In a completely randomized design, every subject is assigned to a treatment group at random.

- In a randomized block design (aka stratified random design), subjects are first grouped according to a characteristic they share, and then randomly assigned to treatments within those groups.

| Research question | Completely randomized design | Randomized block design |
|---|---|---|
| Phone use and sleep | Subjects are all randomly assigned a level of phone use using a random number generator. | Subjects are first grouped by age, and then phone use treatments are randomly assigned within these groups. |
| Temperature and soil respiration | Warming treatments are assigned to soil plots at random by using a number generator to generate map coordinates within the study area. | Soils are first grouped by average rainfall, and then treatment plots are randomly assigned within these groups. |
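The two assignment schemes in the table can be sketched in a few lines of Python. This is a minimal illustration rather than a prescribed procedure: the subject IDs, the two age groups, and the function names `completely_randomized` and `randomized_block` are all hypothetical, and the seed is fixed only so the result is reproducible.

```python
import random
from collections import defaultdict

TREATMENTS = ["none", "low", "high"]  # phone-use levels from the example


def completely_randomized(subjects, treatments, rng):
    """Shuffle all subjects, then deal them out to the treatments in turn."""
    shuffled = list(subjects)
    rng.shuffle(shuffled)
    return {s: treatments[i % len(treatments)] for i, s in enumerate(shuffled)}


def randomized_block(subjects, block_of, treatments, rng):
    """Group subjects by block, then randomize separately within each block."""
    blocks = defaultdict(list)
    for s in subjects:
        blocks[block_of[s]].append(s)
    assignment = {}
    for members in blocks.values():
        assignment.update(completely_randomized(members, treatments, rng))
    return assignment


rng = random.Random(42)  # fixed seed so the assignment is reproducible
subjects = [f"S{i:02d}" for i in range(1, 13)]
# Hypothetical age groups: the first six subjects are "18-29", the rest "30-49"
age_group = {s: ("18-29" if i < 6 else "30-49") for i, s in enumerate(subjects)}

crd = completely_randomized(subjects, TREATMENTS, rng)
rbd = randomized_block(subjects, age_group, TREATMENTS, rng)

for s in subjects:
    print(s, age_group[s], "| completely randomized:", crd[s], "| blocked:", rbd[s])
```

In the blocked version every age group contributes the same number of subjects to each phone-use level, so age cannot end up unevenly spread across treatments by chance.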

Sometimes randomization isn’t practical or ethical, so researchers create partially-random or even non-random designs. An experimental design where treatments aren’t randomly assigned is called a quasi-experimental design.

Between-subjects vs. within-subjects

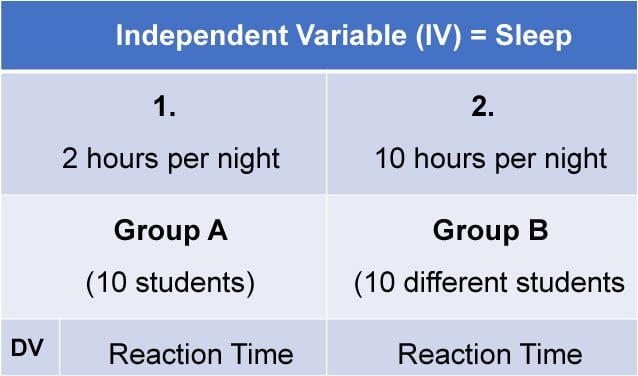

In a between-subjects design (also known as an independent measures design or classic ANOVA design), individuals receive only one of the possible levels of an experimental treatment.

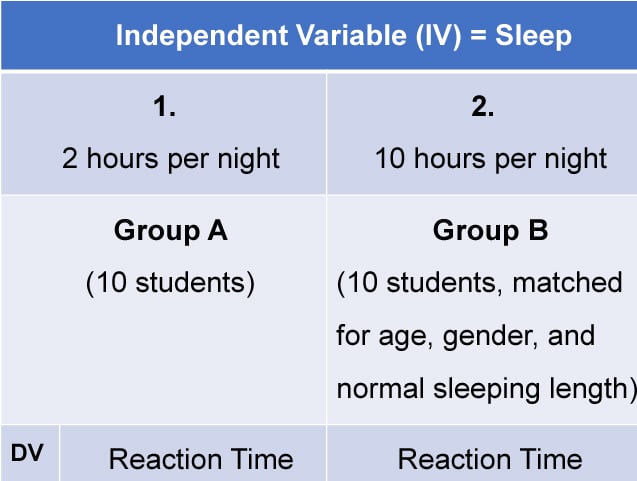

In medical or social research, you might also use matched pairs within your between-subjects design to make sure that each treatment group contains the same variety of test subjects in the same proportions.

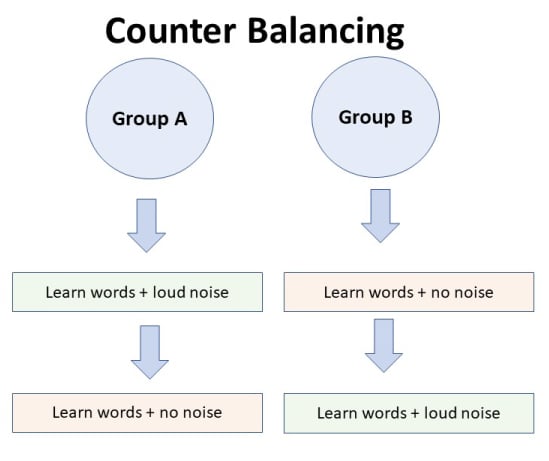

In a within-subjects design (also known as a repeated measures design), every individual receives each of the experimental treatments consecutively, and their responses to each treatment are measured.

Within-subjects or repeated measures can also refer to an experimental design where an effect emerges over time, and individual responses are measured over time in order to measure this effect as it emerges.

Counterbalancing (randomizing or reversing the order of treatments among subjects) is often used in within-subjects designs to ensure that the order of treatment application doesn’t influence the results of the experiment.

| Research question | Between-subjects (independent measures) design | Within-subjects (repeated measures) design |
|---|---|---|
| Phone use and sleep | Subjects are randomly assigned a level of phone use (none, low, or high) and follow that level of phone use throughout the experiment. | Subjects are assigned consecutively to zero, low, and high levels of phone use throughout the experiment, and the order in which they follow these treatments is randomized. |
| Temperature and soil respiration | Warming treatments are assigned to soil plots at random and the soils are kept at this temperature throughout the experiment. | Every plot receives each warming treatment (1, 3, 5, 8, and 10°C above ambient temperatures) consecutively over the course of the experiment, and the order in which they receive these treatments is randomized. |

Finally, you need to decide how you’ll collect data on your dependent variable outcomes. You should aim for reliable and valid measurements that minimize research bias or error.

Some variables, like temperature, can be objectively measured with scientific instruments. Others may need to be operationalized to turn them into measurable observations. For example, to measure hours of sleep, you could:

- Ask participants to record what time they go to sleep and get up each day.

- Ask participants to wear a sleep tracker.

How precisely you measure your dependent variable also affects the kinds of statistical analysis you can use on your data.

Experiments are always context-dependent, and a good experimental design will take into account all of the unique considerations of your study system to produce information that is both valid and relevant to your research question.

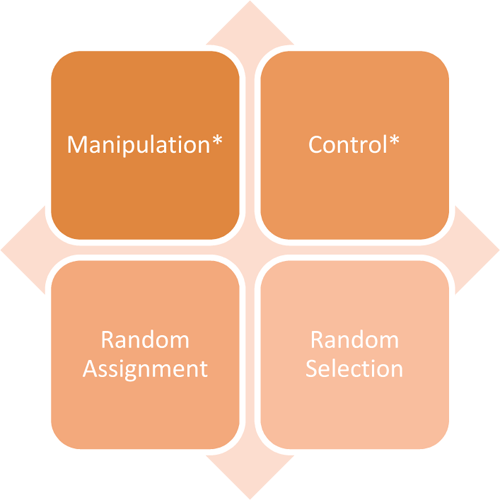

Experimental design means planning a set of procedures to investigate a relationship between variables. To design a controlled experiment, you need:

- A testable hypothesis

- At least one independent variable that can be precisely manipulated

- At least one dependent variable that can be precisely measured

When designing the experiment, you decide:

- How you will manipulate the variable(s)

- How you will control for any potential confounding variables

- How many subjects or samples will be included in the study

- How subjects will be assigned to treatment levels

Experimental design is essential to the internal and external validity of your experiment.

The key difference between observational studies and experimental designs is that a well-done observational study does not influence the responses of participants, while experiments do have some sort of treatment condition applied to at least some participants by random assignment.

A confounding variable, also called a confounder or confounding factor, is a third variable in a study examining a potential cause-and-effect relationship.

A confounding variable is related to both the supposed cause and the supposed effect of the study. It can be difficult to separate the true effect of the independent variable from the effect of the confounding variable.

In your research design, it’s important to identify potential confounding variables and plan how you will reduce their impact.

In a between-subjects design, every participant experiences only one condition, and researchers assess group differences between participants in various conditions.

In a within-subjects design, each participant experiences all conditions, and researchers test the same participants repeatedly for differences between conditions.

The word “between” means that you’re comparing different conditions between groups, while the word “within” means you’re comparing different conditions within the same group.

An experimental group, also known as a treatment group, receives the treatment whose effect researchers wish to study, whereas a control group does not. They should be identical in all other ways.

Bevans, R. (2023, June 21). Guide to Experimental Design | Overview, 5 steps & Examples. Scribbr. Retrieved August 26, 2024, from https://www.scribbr.com/methodology/experimental-design/

Three Principles of Experimental Designs

by Kim Love

Yes, absolutely! Understanding experimental design can help you recognize the questions you can and can’t answer with the data. It will also help you identify possible sources of bias that can lead to undesirable results. Finally, it will help you provide recommendations to make future studies more efficient.

The Three Rs of Experimental Design

An experiment involves one or more treatments, each with two or more conditions. The defining characteristic of an experiment is that the researcher is able to assign subjects to treatment groups.

There are three principles that underlie any experiment. These are often called the three Rs of experimental design, and they are:

- Randomization

- Replication

- Reduction of variance

Let’s look at each principle in the context of a specific experiment.

Randomization is the assignment of the subjects in the study to treatment groups in a random way. This is one of the most important aspects of an experiment.

It ensures that the only systematic difference in groups is the treatment condition. In the training experiment, this would mean that any difference in the outcomes between the two groups is due to the training. In other words, random assignment allows you to demonstrate causation.

Suppose the researcher did not randomize, and assigned men to one group and women to the other group. It should be clear that we won’t know if differences between the treatment groups come from gender or training.

Although in this example our confounding variable, gender, is obvious, that’s not always true. Randomization is the only sure way to avoid accidental confounding and its resulting bias.

Replication refers to having multiple subjects in each group. The more subjects in each group, the easier it is to determine whether any differences between the groups are due to the treatment and not the characteristics of individuals in the groups.

Suppose the training study had limited resources. Would it be enough to recruit only two people, and compare their times after training? Again, it’s probably obvious you can’t do this. The difference in outcomes would depend as much on those two people as it would on the training method.

There are many considerations that go into determining sample size. Generally, though, more subjects per group means more statistical confidence in the outcomes. Too few subjects in a group makes it very hard to find differences in the outcomes between treatment groups.
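A small simulation can make the value of replication concrete. The sketch below is illustrative only and rests on assumptions that are not part of the training study: both groups are drawn from the same standard normal distribution, so any difference between group means is pure chance, and the helper `spread_of_difference` is a hypothetical name.

```python
import random
import statistics


def spread_of_difference(n_per_group, n_repeats, rng):
    """Standard deviation of (mean A - mean B) across repeated simulated experiments.

    Both groups come from the same normal distribution (mean 0, sd 1),
    so every observed difference is due to chance alone.
    """
    diffs = []
    for _ in range(n_repeats):
        a = [rng.gauss(0, 1) for _ in range(n_per_group)]
        b = [rng.gauss(0, 1) for _ in range(n_per_group)]
        diffs.append(statistics.mean(a) - statistics.mean(b))
    return statistics.stdev(diffs)


rng = random.Random(0)  # fixed seed for reproducibility
spread = {n: spread_of_difference(n, 2000, rng) for n in (2, 10, 50)}
for n, s in spread.items():
    print(f"n per group = {n:2d}: chance differences have sd of about {s:.2f}")
```

Under these assumptions the spread of chance differences shrinks in proportion to sqrt(2/n), which is why a real treatment effect is easier to tell apart from noise when each group has more subjects.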

Reduction of Variance

Reduction of variance refers to removing or accounting for systematic difference among subjects. This allows you to measure the differences due to the treatment more precisely. There are multiple ways to approach this.

One way is to limit the population of the study so the subjects are more similar. Another way is to incorporate covariates into the analysis. These are variables outside of the experimental design that you can measure.

A third way is blocking. This refers to identifying related subjects and randomly assigning them to different treatments.

In the training experiment, not accounting for gender could make it more difficult to estimate the effects of training. There are at least three ways to account for it in the design and data collection.

- Only include one gender in the study, and limit the results of the study to that one gender.

- Measure the participants’ gender and include it in the study as a covariate.

- Include gender in the experimental design as a block. Randomly assign men and women in equal number to the two groups, and include gender in the analysis.

Application to Analysis

Although there are many types of experimental designs, the three Rs are at the heart of each of them. Advanced experimental designs simply achieve these under complicated circumstances. Understanding these principles, even without advanced knowledge, will make you a better analyst.

Always answer the following questions any time you are analyzing experimental data:

1. How was randomization applied in the experiment? This will help you understand whether you can draw causal conclusions. It will also help you recognize if the general conclusions of the study could be biased.

2. How much replication was there in the experiment? If the number of subjects was small (overall or in certain groups), this could result in a lack of findings.

3. Was variability outside of the scope of the experiment appropriately reduced? If the researcher can account for outside factors in the future, this will make the experiment more efficient.

Experimental Design: Types, Examples & Methods

Saul McLeod, PhD

Editor-in-Chief for Simply Psychology

BSc (Hons) Psychology, MRes, PhD, University of Manchester

Saul McLeod, PhD., is a qualified psychology teacher with over 18 years of experience in further and higher education. He has been published in peer-reviewed journals, including the Journal of Clinical Psychology.

Olivia Guy-Evans, MSc

Associate Editor for Simply Psychology

BSc (Hons) Psychology, MSc Psychology of Education

Olivia Guy-Evans is a writer and associate editor for Simply Psychology. She has previously worked in healthcare and educational sectors.

Experimental design refers to how participants are allocated to different groups in an experiment. Types of design include repeated measures, independent groups, and matched pairs designs.

Probably the most common way to design an experiment in psychology is to divide the participants into two groups, the experimental group and the control group, and then introduce a change to the experimental group, not the control group.

The researcher must decide how they will allocate their sample to the different experimental groups. For example, if there are 10 participants, will all 10 participants participate in both groups (e.g., repeated measures), or will the participants be split in half and take part in only one group each?

Three types of experimental designs are commonly used:

1. Independent Measures

Independent measures design, also known as between-groups, is an experimental design where different participants are used in each condition of the independent variable. This means that each condition of the experiment includes a different group of participants.

This should be done by random allocation, ensuring that each participant has an equal chance of being assigned to each group.

Independent measures involve using two separate groups of participants, one in each condition.

- Con: More people are needed than with the repeated measures design (i.e., more time-consuming).

- Pro: Avoids order effects (such as practice or fatigue) as people participate in one condition only. If a person is involved in several conditions, they may become bored, tired, and fed up by the time they come to the second condition or become wise to the requirements of the experiment!

- Con: Differences between participants in the groups may affect results, for example, variations in age, gender, or social background. These differences are known as participant variables (i.e., a type of extraneous variable).

- Control: After the participants have been recruited, they should be randomly assigned to their groups. This should ensure the groups are similar, on average (reducing participant variables).

2. Repeated Measures Design

Repeated Measures design is an experimental design where the same participants participate in each independent variable condition. This means that each experiment condition includes the same group of participants.

Repeated Measures design is also known as within-groups or within-subjects design.

- Pro: As the same participants are used in each condition, participant variables (i.e., individual differences) are reduced.

- Con: There may be order effects. Order effects refer to the order of the conditions affecting the participants’ behavior. Performance in the second condition may be better because the participants know what to do (i.e., practice effect). Or their performance might be worse in the second condition because they are tired (i.e., fatigue effect). This limitation can be controlled using counterbalancing.

- Pro: Fewer people are needed as they participate in all conditions (i.e., saves time).

- Control: To combat order effects, the researcher counterbalances the order of the conditions for the participants, alternating the order in which participants perform in different conditions of an experiment.

Counterbalancing

Suppose we used a repeated measures design in which all of the participants first learned words in “loud noise” and then learned them in “no noise.”

We expect the participants to learn better in “no noise” because of order effects, such as practice. However, a researcher can control for order effects using counterbalancing.

The sample would be split into two groups, with the experimental (A) and control (B) conditions presented in opposite orders. For example, group 1 does ‘A’ then ‘B,’ and group 2 does ‘B’ then ‘A.’ This is to eliminate order effects.

Although order effects occur for each participant, they balance each other out in the results because they occur equally in both groups.
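The counterbalancing scheme described above can be sketched as follows. This is only an illustration under assumed details: the participant labels are made up, the function name `counterbalance` is hypothetical, and the split into halves is itself randomized, which is one reasonable choice rather than the only one.

```python
import random


def counterbalance(participants, conditions, rng):
    """Randomly split participants into two halves.

    One half receives the conditions in the given order,
    the other half receives them in reverse order.
    """
    shuffled = list(participants)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    forward = list(conditions)
    backward = list(reversed(conditions))
    orders = {p: forward for p in shuffled[:half]}
    orders.update({p: backward for p in shuffled[half:]})
    return orders


rng = random.Random(1)  # fixed seed for reproducibility
participants = [f"P{i}" for i in range(1, 11)]  # hypothetical participant IDs
orders = counterbalance(participants, ["loud noise", "no noise"], rng)

for p in participants:
    print(p, "->", " then ".join(orders[p]))
```

With ten participants, five start with "loud noise" and five start with "no noise", so any practice or fatigue effect falls equally on both conditions.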

3. Matched Pairs Design

A matched pairs design is an experimental design where pairs of participants are matched in terms of key variables, such as age or socioeconomic status. One member of each pair is then placed into the experimental group and the other member into the control group.

One member of each matched pair must be randomly assigned to the experimental group and the other to the control group.

- Con: If one participant drops out, you lose two participants’ data.

- Pro: Reduces participant variables because the researcher has tried to pair up the participants so that each condition has people with similar abilities and characteristics.

- Con: Very time-consuming trying to find closely matched pairs.

- Pro: It avoids order effects, so counterbalancing is not necessary.

- Con: Impossible to match people exactly unless they are identical twins!

- Control: Members of each pair should be randomly assigned to conditions. However, this does not solve all these problems.
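As a rough sketch of how matching and random assignment fit together, the code below pairs participants with adjacent values of a single matching variable and then flips a coin within each pair. The ages, participant labels, and the function name `matched_pairs` are hypothetical, and matching on one variable by sorting is a simplification of what researchers do in practice.

```python
import random


def matched_pairs(scores, rng):
    """Pair participants with adjacent scores, then randomize within each pair.

    `scores` maps participant -> value of the matching variable.
    Returns a list of (experimental, control) tuples.
    With an odd number of participants, the last one is left unpaired.
    """
    ranked = sorted(scores, key=scores.get)
    pairs = []
    for i in range(0, len(ranked) - 1, 2):
        pair = [ranked[i], ranked[i + 1]]
        rng.shuffle(pair)  # coin flip: who goes to the experimental group
        pairs.append((pair[0], pair[1]))
    return pairs


rng = random.Random(7)  # fixed seed for reproducibility
# Hypothetical ages used as the matching variable
ages = {"P1": 19, "P2": 34, "P3": 21, "P4": 52, "P5": 33, "P6": 50}
pairs = matched_pairs(ages, rng)

for experimental, control in pairs:
    print(f"experimental: {experimental} ({ages[experimental]}), "
          f"control: {control} ({ages[control]})")
```

Each pair contains two participants of similar age, and chance alone decides which of them receives the experimental condition.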

Experimental design refers to how participants are allocated to an experiment’s different conditions (or IV levels). There are three types:

1. Independent measures / between-groups: Different participants are used in each condition of the independent variable.

2. Repeated measures / within-groups: The same participants take part in each condition of the independent variable.

3. Matched pairs: Each condition uses different participants, but they are matched in terms of important characteristics, e.g., gender, age, intelligence, etc.

Learning Check

Read about each of the experiments below. For each experiment, identify (1) which experimental design was used; and (2) why the researcher might have used that design.

1. To compare the effectiveness of two different types of therapy for depression, depressed patients were assigned to receive either cognitive therapy or behavior therapy for a 12-week period.

The researchers attempted to ensure that the patients in the two groups had similar severity of depressed symptoms by administering a standardized test of depression to each participant, then pairing them according to the severity of their symptoms.

2. To assess the difference in reading comprehension between 7 and 9-year-olds, a researcher recruited each group from a local primary school. They were given the same passage of text to read and then asked a series of questions to assess their understanding.

3. To assess the effectiveness of two different ways of teaching reading, a group of 5-year-olds was recruited from a primary school. Their level of reading ability was assessed, and then they were taught using scheme one for 20 weeks.

At the end of this period, their reading was reassessed, and a reading improvement score was calculated. They were then taught using scheme two for a further 20 weeks, and another reading improvement score for this period was calculated. The reading improvement scores for each child were then compared.

4. To assess the effect of organization on recall, a researcher randomly assigned student volunteers to two conditions.

Condition one attempted to recall a list of words that were organized into meaningful categories; condition two attempted to recall the same words, randomly grouped on the page.

Experiment Terminology

Ecological validity

The degree to which an investigation represents real-life experiences.

Experimenter effects

These are the ways that the experimenter can accidentally influence the participant through their appearance or behavior.

Demand characteristics

The clues in an experiment that lead the participants to think they know what the researcher is looking for (e.g., the experimenter’s body language).

Independent variable (IV)

The variable the experimenter manipulates (i.e., changes), which is assumed to have a direct effect on the dependent variable.

Dependent variable (DV)

Variable the experimenter measures. This is the outcome (i.e., the result) of a study.

Extraneous variables (EV)

All variables which are not independent variables but could affect the results (DV) of the experiment. Extraneous variables should be controlled where possible.

Confounding variables

Variable(s) that have affected the results (DV), apart from the IV. A confounding variable could be an extraneous variable that has not been controlled.

Random Allocation

Randomly allocating participants to independent variable conditions means that all participants should have an equal chance of taking part in each condition.

The principle of random allocation is to avoid bias in how the experiment is carried out and limit the effects of participant variables.

Order effects

Changes in participants’ performance due to their repeating the same or similar test more than once. Examples of order effects include:

(i) practice effect: an improvement in performance on a task due to repetition, for example, because of familiarity with the task;

(ii) fatigue effect: a decrease in performance of a task due to repetition, for example, because of boredom or tiredness.

Design of Experiments

Chapter 1 Principles of experimental design

Although it is obviously true that statistical tests are not the only method for arriving at the ‘truth’, it is equally true that formal experiments generally provide the most scientifically valid research result. (Bailar III 1981)

1.1 Introduction

The validity of conclusions drawn from a statistical analysis crucially hinges on the manner in which the data are acquired, and even the most sophisticated analysis will not rescue a flawed experiment. Planning an experiment and thinking about the details of data acquisition is so important for a successful analysis that R. A. Fisher—who single-handedly invented many of the experimental design techniques we are about to discuss—famously wrote

To call in the statistician after the experiment is done may be no more than asking him to perform a post-mortem examination: he may be able to say what the experiment died of. (Fisher 1938)

(Statistical) design of experiments provides the principles and methods for planning experiments and tailoring the data acquisition to an intended analysis. Design and analysis of an experiment are best considered as two aspects of the same enterprise: the goals of the analysis strongly inform an appropriate design, and the implemented design determines the possible analyses.

The primary aim of designing experiments is to ensure that valid statistical and scientific conclusions can be drawn that withstand the scrutiny of a determined skeptic. Good experimental design also considers that resources are used efficiently, and that estimates are sufficiently precise and hypothesis tests adequately powered. It protects our conclusions by excluding alternative interpretations or rendering them implausible. Three main pillars of experimental design are randomization, replication, and blocking, and we will invest substantial effort into fleshing out their effects on the subsequent analysis as well as their implementation in an experimental design.

An experimental design is always tailored towards predefined (primary) analyses and an efficient analysis and unambiguous interpretation of the experimental data is often straightforward from a good design. This does not prevent us from doing additional analyses of interesting observations after the data are acquired, but these analyses can be subjected to more severe criticisms and conclusions are more tentative.

In this chapter, we provide the wider context for using experiments in a larger research enterprise and informally introduce the main statistical ideas of experimental design. We use a comparison of two samples as our main example to study how design choices affect their comparison, but postpone a formal quantitative analysis to the next chapters.

1.2 A cautionary tale

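Table 1.1: Measured enzyme levels for samples prepared with kit A or kit B (10 mice per kit).

| Kit | | | | | | | | | | |
|---|---|---|---|---|---|---|---|---|---|---|
| A | 8.96 | 8.95 | 11.37 | 12.63 | 11.38 | 8.36 | 6.87 | 12.35 | 10.32 | 11.99 |
| B | 12.68 | 11.37 | 12.00 | 9.81 | 10.35 | 11.76 | 9.01 | 10.83 | 8.76 | 9.99 |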

For illustrating some of the issues arising in the interplay of experimental design and analysis, we consider a simple example. We are interested in comparing the enzyme levels measured in processed blood samples from laboratory mice, when the preparation is done either with a kit from a vendor A, or a kit from a competitor B. The data in Table 1.1 show measured enzyme levels of 20 mice, with samples of 10 mice prepared with kit A and the remaining 10 samples with kit B.

One option for comparing the two kits is to look at the difference in average enzyme levels: we find an average level of 10.32 for vendor A and 10.66 for vendor B. We would like to interpret their difference of -0.34 as the difference due to the two preparation kits and conclude whether the two kits give equal results, or whether measurements based on one kit are systematically different from those based on the other kit.
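The averages quoted here can be checked directly from Table 1.1. The following is a minimal Python sketch; the variable names are ours and are not part of the original analysis.

```python
from statistics import mean

# Enzyme levels from Table 1.1, one value per mouse.
kit_a = [8.96, 8.95, 11.37, 12.63, 11.38, 8.36, 6.87, 12.35, 10.32, 11.99]
kit_b = [12.68, 11.37, 12.00, 9.81, 10.35, 11.76, 9.01, 10.83, 8.76, 9.99]

mean_a = mean(kit_a)
mean_b = mean(kit_b)
difference = mean_a - mean_b  # kit A minus kit B

print(f"mean A = {mean_a:.2f}, mean B = {mean_b:.2f}, difference = {difference:.2f}")
# prints: mean A = 10.32, mean B = 10.66, difference = -0.34
```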

Such an interpretation, however, is only valid if the two groups of mice and their measurements are identical in all aspects except the sample preparation kit. If we use one strain of mice for kit A and another strain for kit B, any difference might also be attributed to inherent differences between the strains. Similarly, if the measurements using kit B were conducted much later than those using kit A, any observed difference might be attributed to changes in, e.g., mice selected, batches of chemicals used, device calibration or any number of other influences. None of these competing explanations for an observed difference can be excluded from the given data alone, but good experimental design allows us to render them (almost) arbitrarily implausible.

A second aspect of our analysis is the inherent uncertainty in our calculated difference: if we repeat the experiment, the observed difference will change each time, and this will be more pronounced for a smaller number of mice, among other factors. If we do not use a sufficient number of mice in our experiment, the uncertainty associated with the observed difference might be too large, such that random fluctuations become a plausible explanation for the observed difference. Systematic differences between the two kits, of practically relevant magnitude in either direction, might then be compatible with the data, and we cannot draw any reliable conclusions from our experiment.
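To make the dependence on the number of mice concrete, the following sketch repeats a hypothetical experiment many times and records how much the observed difference fluctuates. It assumes normally distributed enzyme levels with a common mean of 10 and a standard deviation of 2 for both kits; these values are illustrative assumptions and are not estimated from Table 1.1.

```python
import random
from statistics import mean, stdev

def simulated_differences(n_per_group, n_repeats, rng, true_mean=10.0, sd=2.0):
    """Observed mean differences when both kits share the same true mean."""
    diffs = []
    for _ in range(n_repeats):
        a = [rng.gauss(true_mean, sd) for _ in range(n_per_group)]
        b = [rng.gauss(true_mean, sd) for _ in range(n_per_group)]
        diffs.append(mean(a) - mean(b))
    return diffs

rng = random.Random(1)  # fixed seed so the simulation is repeatable
spread = {n: stdev(simulated_differences(n, 2000, rng)) for n in (5, 10, 50)}
for n, s in spread.items():
    print(f"{n:>3} mice per kit: spread of observed difference = {s:.2f}")
```

Although there is no true difference between the kits in this simulation, the observed difference scatters around zero, and the scatter shrinks as the number of mice per kit grows.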

In each case, the statistical analysis—no matter how clever—was doomed before the experiment was even started, while simple ideas from statistical design of experiments would have prevented failure and provided correct and robust results with interpretable conclusions.

1.3 The language of experimental design

By an experiment, we understand an investigation where the researcher has full control over selecting and altering the experimental conditions of interest, and we only consider investigations of this type. The selected experimental conditions are called treatments. An experiment is comparative if the responses to several treatments are to be compared or contrasted. The experimental units are the smallest subdivision of the experimental material to which a treatment can be assigned. All experimental units given the same treatment constitute a treatment group. Especially in biology, we often contrast responses to a control group to which some standard experimental conditions are applied; a typical example is using a placebo for the control group, and different drugs in the other treatment groups.

Multiple experimental units are sometimes combined into groupings or blocks; for example, mice are naturally grouped by litter, and samples by batches of chemicals used for their preparation. The values observed are called responses and are measured on the response units; these are often identical to the experimental units but need not be. More generally, we call any grouping of the experimental material a unit.

In our example, we selected the mice, used a single sample per mouse, deliberately chose the two specific vendors, and had full control over assigning a kit to a mouse. Here, the mice are the experimental units, the samples the response units, the two kits are the treatments, and the responses are the measured enzyme levels. Since we compare the average enzyme levels between treatments and choose which kit to assign to which sample, this is a comparative experiment.

In this example, we can identify experimental and response units, because we have a single response per mouse and cannot distinguish a sample from a mouse in the analysis. By contrast, if we take two samples per mouse and use the same kit for both samples, then the mice are still the experimental units, but each mouse now has two response units associated with it. If we take two samples per mouse, but apply each kit to one of the two samples, then the samples are both the experimental and response units, while the mice are blocks that group the samples. If we only use one kit and determine the average enzyme level, then this investigation is still an experiment, but is not comparative.

Finally, the design of an experiment determines the logical structure of the experiment; it consists of (i) a set of treatments; (ii) a specification of the experimental units (animals, cell lines, samples); (iii) a procedure for assigning treatments to units; and (iv) a specification of the response units and the quantity to be measured as a response.

1.4 Experiment validity

Before we embark on the more technical aspects of experimental design, we discuss three components for evaluating an experiment’s validity: construct validity, internal validity, and external validity. These criteria are well-established in, e.g., educational and psychological research, and have more recently been proposed for animal research (Würbel 2017), where experiments are increasingly scrutinized for their scientific rationale and their design and intended analyses.

1.4.1 Construct validity

Construct validity concerns the choice of the experimental system for answering our research question. Is the system even capable of providing a relevant answer to the question?

Studying the mechanisms of a particular disease, for example, might require careful choice of an appropriate animal model that shows a disease phenotype and is amenable to experimental interventions. If the animal model is a proxy for drug development for humans, biological mechanisms must be sufficiently similar between animal and human physiologies.

Another important aspect of the construct is the quantity that we intend to measure (the measurand), and its relation to the quantity or property we are interested in. For example, we might measure the concentration of the same chemical compound once in a blood sample and once in a highly purified sample, and these constitute two different measurands, whose values might not be comparable. Often, the quantity of interest (e.g., liver function) is not directly measurable (or even quantifiable) and we measure a biomarker instead. For example, pre-clinical and clinical investigations may use concentrations of proteins or counts of specific cell types from blood samples, such as the CD4+ cell count used as a biomarker for immune system function. The problem of measurements and measurands is further discussed for statistics in Hand (1996) and specifically for biological experiments in Coxon, Longstaff, and Burns (2019).

1.4.2 Internal validity

The internal validity of an experiment concerns the soundness of the scientific rationale, statistical properties such as precision of estimates, and the measures taken against risk of bias. It refers to the validity of claims within the context of the experiment. Statistical design of experiments plays a prominent role in ensuring internal validity, and we briefly discuss the main ideas here before providing the technical details and an application to our example in the subsequent sections.

Scientific rationale and research question

The scientific rationale of a study is (usually) not immediately a statistical question. Translating a scientific question into a quantitative comparison amenable to statistical analysis is no small task and often requires substantial thought. It is a substantial, if non-statistical, benefit of using experimental design that we are forced to formulate a precise-enough research question and decide on the main analyses required for answering it before we conduct the experiment. For example, the question "is there a difference between placebo and drug?" is insufficiently precise for planning a statistical analysis and determining an adequate experimental design. What exactly is the drug treatment? What concentration is used and how is it administered? How do we make sure that the placebo group is comparable to the drug group in all other aspects? What do we measure and what do we mean by “difference”? A shift in average response, a fold-change, or a change in response before and after treatment?

There are almost never enough resources to answer all conceivable scientific questions in a statistical analysis. We therefore select a few primary outcome variables whose analysis answers the most important questions and design the experiment to ensure these variables can be estimated and tested appropriately. Other, secondary outcome variables can still be measured and analyzed, but we are not willing to enlarge the experiment to ensure that reliable conclusions can be drawn from these variables.

The scientific rationale also enters the choice of a potential control group to which we compare responses. The quote

The deep, fundamental question in statistical analysis is ‘Compared to what?’ (Tufte 1997 )

from Edward Tufte highlights the importance of this choice also for the statistical analyses of an experiment’s results.

Risk of bias

Experimental bias is a systematic difference in response between experimental units in addition to the difference caused by the treatments. The experimental units in the different groups are then not equal in all aspects except the treatment applied to them, and we saw several examples in Section 1.2 .

Minimizing the risk of bias is crucial for internal validity. Experimental design offers several methods for this, such as randomization, the random assignment of treatments to units to randomly distribute other differences between the treatment groups; blinding, the hiding of treatment assignments from the researcher and potential experimental subjects to prevent conscious or unconscious biased assignments (e.g., by treating more agile mice with our favourite drug and more docile ones with the competitor’s); sampling, the random selection of units for inclusion in the experiment; and predefining the analysis plan detailing the intended analyses, including how to deal with missing data, to counteract, for example, criticisms of performing many comparisons and only reporting those with the desired outcome.

Precision and effect size

Another aspect of internal validity is the precision of estimates and the expected effect sizes. Is the experimental setup, in principle, able to detect a difference of relevant magnitude? Experimental design offers several methods for answering this question based on the expected heterogeneity of samples, the measurement error, and other sources of variation: power analysis is a technique for determining the number of samples required to reliably detect a relevant effect size and provide estimates of sufficient precision. More samples yield more precision and more power, but we have to be careful that replication is done at the right level: simply measuring a biological sample multiple times yields more measured values, but is pseudo-replication in the analysis. Replication should also ensure that the statistical uncertainties of estimates can be gauged from the data of the experiment itself and do not require additional untestable assumptions. Finally, the technique of blocking can remove a substantial proportion of the variation and thereby increase power and precision if we find a way to apply it.
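As a rough illustration of a power analysis, the sketch below uses the common normal approximation for comparing the means of two equally sized groups. This is one standard textbook approximation, not necessarily the method developed in later chapters; the function name, the standard deviation of 2, and the effect sizes are hypothetical choices.

```python
from math import ceil
from statistics import NormalDist

def samples_per_group(delta, sigma, alpha=0.05, power=0.80):
    """Approximate number of units per group to detect a mean difference `delta`.

    Normal approximation for a two-sided test with two equally sized groups:
    n = 2 * (z_{1-alpha/2} + z_{power})^2 * (sigma / delta)^2
    """
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)
    z_power = z.inv_cdf(power)
    return ceil(2 * (z_alpha + z_power) ** 2 * (sigma / delta) ** 2)

# Hypothetical inputs: standard deviation 2, relevant differences of 2 and 1.
print(samples_per_group(delta=2.0, sigma=2.0))  # 16
print(samples_per_group(delta=1.0, sigma=2.0))  # 63
```

Halving the difference we want to detect roughly quadruples the required number of units, which is why the relevant effect size should be settled before the experiment is run.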

1.4.3 External validity

The external validity of an experiment concerns its replicability and the generalizability of inferences. An experiment is replicable if its results can be confirmed by an independent new experiment, preferably by a different lab and researcher. Experimental conditions in the replicate experiment usually differ from the original experiment, which provides evidence that the observed effects are robust to such changes. A much weaker condition on an experiment is reproducibility, the property that an independent researcher draws equivalent conclusions based on the data from this particular experiment, using the same analysis techniques. Reproducibility requires publishing the raw data, details on the experimental protocol, and a detailed description of the statistical analyses, preferably with accompanying source code.

Reporting the results of an experiment so that others can reproduce and replicate them is no simple task, and requires sufficient information about the experiment and its analysis. Many scientific journals subscribe to reporting guidelines that are also helpful for planning an experiment. Two such guidelines are the ARRIVE guidelines for animal research (Kilkenny et al. 2010) and the CONSORT guidelines for clinical trials (Moher et al. 2010). Guidelines describing the minimal information required for reproducing experimental results have been developed for many types of experimental techniques, including microarrays (MIAME), RNA sequencing (MINSEQE), metabolomics (MSI) and proteomics (MIAPE) experiments, and the FAIRsharing initiative provides a more comprehensive collection (Sansone et al. 2019).

A main threat to replicability and generalizability is overly tight control of experimental conditions, such that inferences only hold for a specific lab under the very specific conditions of the original experiment. Introducing systematic heterogeneity and using multi-center studies effectively broadens the experimental conditions and therefore the inferences for which internal validity is available.

For systematic heterogeneity, experimental conditions other than treatments are systematically altered and treatment differences estimated for each condition. For example, we might split the experimental material into several batches and use a different day of analysis, sample preparation, batch of buffer, measurement device, and lab technician for each of the batches. A more general inference is then possible if the effect size, effect direction, and precision are comparable between the batches, indicating that the treatment differences are stable over the different conditions.

In multi-center experiments, the same experiment is conducted in several different labs and the results compared and merged. Already using a second laboratory increases replicability of animal studies substantially (Karp 2018), and differences between labs can be used for standardizing the treatment effects (Kafkafi et al. 2017). Multi-center approaches are very common in clinical trials and often necessary to reach the required number of patient enrollments.

Generalizability of randomized controlled trials in medicine and animal studies often suffers from overly restrictive eligibility criteria. In clinical trials, patients are often included or excluded based on co-medications and co-morbidities, and the resulting sample of eligible patients might no longer be representative of the patient population. For example, Travers et al. (2007) used the eligibility criteria of 17 randomized controlled trials of asthma treatments and found that out of 749 patients, only a median of 6% (45 patients) would be eligible for an asthma-related randomized controlled trial. This puts a question mark on the relevance of the trials’ findings for asthma patients in general.

1.5 Reducing the risk of bias

1.5.1 Randomization of treatment allocation

If systematic differences other than the treatment exist between our treatment groups, then the effect of the treatment is confounded with these other differences and our estimates of treatment effects might be biased.

We remove such unwanted systematic differences from our treatment comparisons by randomizing the allocation of treatments to experimental units. In a completely randomized design, each experimental unit has the same chance of being subjected to any of the treatments, and any differences between the experimental units other than the treatments are distributed over the treatment groups. Importantly, randomization is the only method that also protects our experiment against unknown sources of bias: we do not need to know all or even any of the potential differences and yet their impact is eliminated from the treatment comparisons by random treatment allocation.

Randomization has two effects: (i) differences unrelated to treatment become part of the residual variance rendering the treatment groups more similar; and (ii) the systematic differences are thereby eliminated as sources of bias from the treatment comparison. In short,

Randomization transforms systematic variation into random variation.

In our example, a proper randomization would select 10 out of our 20 mice fully at random, such that every mouse has the same probability, 10/20, of being picked. These ten mice are then assigned to kit A, and the remaining mice to kit B. This allocation is entirely independent of the treatments and of any properties of the mice.

To ensure completely random treatment allocation, some kind of random process needs to be employed. This can be as simple as shuffling a pack of 10 red and 10 black cards or we might use a software-based random number generator. Randomization is slightly more difficult if the number of experimental units is not known at the start of the experiment, such as when patients are recruited for an ongoing clinical trial (sometimes called rolling recruitment), and we want to have reasonable balance between the treatment groups at each stage of the trial.
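A software-based allocation for the example of 20 mice and two kits might look like the following sketch. The mouse IDs and the seed are hypothetical; recording the seed is one possible way to let others reproduce the allocation later.

```python
import random

mice = list(range(1, 21))             # hypothetical mouse IDs 1..20
rng = random.Random(2024)             # fixed seed so the allocation can be reproduced

kit_a = sorted(rng.sample(mice, 10))  # every subset of 10 mice is equally likely
kit_b = sorted(set(mice) - set(kit_a))

print("kit A:", kit_a)
print("kit B:", kit_b)
```

Drawing the complete group at once keeps the two groups at exactly 10 mice each, which a separate coin flip per mouse would not guarantee.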

Seemingly random assignments “by hand” are usually no less complicated than fully random assignments, but are always inferior. If surprising results ensue from the experiment, such assignments are subject to unanswerable criticism and suspicion of unwanted bias. Even worse are systematic allocations; they can only remove bias from known causes, and immediately raise red flags under the slightest scrutiny.

The problem of undesired assignments

Even with a fully random treatment allocation procedure, we might end up with an undesirable allocation. For our example, the treatment group of kit A might—just by chance—contain mice that are bigger or more active than those in the other treatment group. Statistical orthodoxy and some authors recommend using the design nevertheless, because only full randomization guarantees valid estimates of residual variance and unbiased estimates of effects. This argument, however, concerns the long-run properties of the procedure and seems of little help in this specific situation. Why should we care if the randomization yields correct estimates under replication of the experiment, if the particular experiment is jeopardized?

Another solution is to create a list of all possible allocations that we would accept and randomly choose one of these allocations for our experiment. The analysis should then reflect this restriction in the possible randomizations, which often renders this approach difficult to implement.

The most pragmatic method is to reject undesirable designs and compute a new randomization (Cox 1958). Undesirable allocations are unlikely to arise for large sample sizes, and we might accept a small bias in estimation for small sample sizes, when uncertainty in the estimated treatment effect is already high. In this approach, whenever we reject a particular outcome, we must also be willing to reject the outcome if we permute the treatment level labels. If we reject eight big and two small mice for kit A, then we must also reject two big and eight small mice. We must also be transparent and report a rejected allocation, so that a critic may weigh the risk of bias due to rejection against the risk of bias due to the rejected allocation.
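The rejection approach can be sketched as follows. The body weights, the acceptance threshold of 1.0, and the function name are hypothetical and serve only as an illustration. The rule uses the absolute difference in mean weight, so it is symmetric under swapping the kit labels, and rejected allocations are kept so that they can be reported.

```python
import random
from statistics import mean

def randomize_with_rejection(weights, max_diff, rng, max_tries=1000):
    """Redraw a complete randomization until mean weights differ by at most max_diff."""
    units = list(range(len(weights)))
    rejected = []
    for _ in range(max_tries):
        kit_a = sorted(rng.sample(units, len(units) // 2))
        kit_b = [u for u in units if u not in kit_a]
        diff = mean(weights[u] for u in kit_a) - mean(weights[u] for u in kit_b)
        if abs(diff) <= max_diff:          # symmetric in the labels A and B
            return kit_a, kit_b, rejected
        rejected.append((kit_a, kit_b))    # keep for transparent reporting
    raise RuntimeError("no acceptable allocation found")

# Hypothetical body weights (in grams) of 20 mice.
weights = [21.3, 24.8, 19.6, 27.1, 22.4, 25.9, 20.2, 23.7, 26.5, 18.9,
           22.8, 24.1, 21.7, 28.0, 20.9, 23.2, 25.3, 19.9, 26.8, 22.1]
kit_a, kit_b, rejected = randomize_with_rejection(weights, max_diff=1.0, rng=random.Random(7))
print(f"accepted after rejecting {len(rejected)} allocation(s)")
```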

1.5.2 Blinding

Bias in treatment comparisons is also introduced if treatment allocation is random, but responses cannot be measured entirely objectively, or if knowledge of the assigned treatment might affect the response. In clinical trials, for example, patients might (objectively) react differently when they know that they are on a placebo treatment, an effect known as cognitive bias. In animal experiments, caretakers might report more abnormal behavior for animals on a more severe treatment. Cognitive bias can be eliminated by concealing the treatment allocation from participants of a clinical trial or technicians, a technique called single-blinding.

If response measures are partially based on professional judgement (e.g., a pain score), patient or physician might unconsciously report lower scores for a placebo treatment, a phenomenon known as observer bias. Its removal requires double-blinding, where treatment allocations are additionally concealed from the experimentalist.

Blinding requires randomized treatment allocation to begin with and substantial effort might be needed to implement it. Drug companies, for example, have to go to great lengths to ensure that a placebo looks, tastes, and feels similar enough to the actual drug so that patients cannot unblind their treatment. Additionally, blinding is often done by coding the treatment conditions and samples, and statements about effect sizes and statistical significance are made before the code is revealed.
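Coding the samples can be sketched in a few lines. The code format, the function name, and the sample IDs below are hypothetical; in practice the key would be held by someone not involved in measuring or analyzing the samples.

```python
import random

def blind(allocation, rng):
    """Replace treatment labels by opaque sample codes.

    `allocation` maps a sample ID to its treatment. Returns the sorted codes
    handed to the experimenter and the key kept by a third party.
    """
    sample_ids = list(allocation)
    # Sampling without replacement guarantees that all codes are distinct.
    codes = [f"S{n:03d}" for n in rng.sample(range(1000), len(sample_ids))]
    key = {code: (sample_id, allocation[sample_id])
           for code, sample_id in zip(codes, sample_ids)}
    return sorted(codes), key

# Hypothetical allocation: mice 1-10 on kit A, mice 11-20 on kit B.
allocation = {f"mouse-{i}": ("A" if i <= 10 else "B") for i in range(1, 21)}
codes, key = blind(allocation, random.Random(11))
print(codes[:3])  # the experimenter sees only codes, never the kit
```

Sorting the codes before handing them over removes any trace of the original sample order, so the code itself carries no information about the treatment.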

In clinical trials, double-blinding creates a conflict of interest. The attending doctors do not know which patient received which treatment, and thus accumulation of side-effects cannot be linked to any treatment. For this reason, clinical trials always have a data monitoring committee, constituted of doctors, pharmacologists, and statisticians. At predefined intervals, the data from the trials is used for an intermediate analysis of efficacy and safety by members of the committee. If severe problems are detected, the committee might recommend altering or aborting the trial. The same might happen if one treatment already shows overwhelming evidence of superiority, such that it becomes unethical to withhold better treatment from the other treatment groups.

1.5.3 Analysis plan and registration

An often overlooked but nevertheless severe source of bias is what has been termed ‘researcher degrees of freedom’ or ‘a garden of forking paths’ in the data analysis. For any set of data, there are many different options for its analysis: some results might be considered outliers and discarded, assumptions are made on error distributions and appropriate test statistics, different covariates might be included into a regression model. Often, multiple hypotheses are investigated and tested, and analyses are done separately on various (overlapping) subgroups. Hypotheses formed after looking at the data require additional care in their interpretation; almost never will \(p\)-values for these ad hoc or post hoc hypotheses be statistically justifiable. Only reporting those sub-analyses that gave ‘interesting’ findings invariably leads to biased conclusions and is called cherry-picking or \(p\)-hacking (or much less flattering names). Many different measured response variables invite fishing expeditions, where patterns in the data are sought without an underlying hypothesis.
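A small calculation shows why running many analyses and reporting only the ‘interesting’ ones is misleading. Under the simplifying assumptions that all tested hypotheses are truly null and the tests are independent (assumptions made here for illustration only), the chance of at least one significant result at level \(\alpha\) among \(k\) tests is \(1-(1-\alpha)^k\).

```python
def chance_of_false_positive(n_tests, alpha=0.05):
    """Probability of at least one significant result among independent true-null tests."""
    return 1 - (1 - alpha) ** n_tests

for k in (1, 5, 20):
    print(k, round(chance_of_false_positive(k), 2))
# prints:
# 1 0.05
# 5 0.23
# 20 0.64
```

With 20 such analyses, a ‘significant’ finding is more likely than not, even when no real effect exists.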

The interpretation of a statistical analysis is always part of a larger scientific argument and we should consider the necessary computations in relation to building our scientific argument about the interpretation of the data. In addition to the statistical calculations, this interpretation requires substantial subject-matter knowledge and includes (many) non-statistical arguments. Two quotes highlight that experiment and analysis are a means to an end and not the end in itself.

There is a boundary in data interpretation beyond which formulas and quantitative decision procedures do not go, where judgment and style enter. (Abelson 1995 )

Often, perfectly reasonable people come to perfectly reasonable decisions or conclusions based on nonstatistical evidence. Statistical analysis is a tool with which we support reasoning. It is not a goal in itself. (Bailar III 1981 )

The deliberate use of statistical analyses and their interpretation for supporting a larger argument was called statistics as principled argument (Abelson 1995). Employing useless statistical analysis without reference to the actual scientific question is surrogate science (Gigerenzer and Marewski 2014) and adaptive thinking is integral to meaningful statistical analysis (Gigerenzer 2002).

There is often a grey area between exploiting researcher degrees of freedom to arrive at a desired conclusion, and creative yet informed analyses of data. One way to navigate this area is to distinguish between exploratory studies and confirmatory studies. The former have no clearly stated scientific question, but are used to generate interesting hypotheses by identifying potential associations or effects that are then further investigated. Conclusions from these studies are very tentative and must be reported honestly. In contrast, standards are much higher for confirmatory studies, which investigate a clearly defined scientific question. Here, analysis plans and pre-registration of an experiment are now the accepted means for demonstrating lack of bias due to researcher degrees of freedom.

Analysis plans

The analysis plan is written before conducting the experiment and details the measurands and estimands, the hypotheses to be tested together with a power and sample size calculation, a discussion of relevant effect sizes, detection and handling of outliers and missing data, as well as steps for data normalization such as transformations and baseline corrections. If a regression model is required, its factors and covariates are outlined. Particularly in biology, measurements below the limit of quantification require special attention in the analysis plan.

In the context of clinical trials, the problem of estimands has become a recent focus of attention. The estimand is the target of a statistical estimation procedure, for example the true average difference in enzyme levels between the two preparation kits. A main problem in many studies is that post-randomization events can change the estimand, even if the estimation procedure remains the same. For example, if kit B fails to produce usable samples for measurement in five out of ten cases because the enzyme level was too low, while kit A could handle these enzyme levels perfectly fine, then this might severely exaggerate the observed difference between the two kits. Similar problems arise in drug trials, when some patients stop taking one of the drugs due to side-effects or other complications, and data are then available for only those patients without side-effects.
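The kit example can be illustrated with the values from Table 1.1. The failure pattern is invented for illustration: we pretend that kit B yields no usable measurement for its five lowest enzyme levels, whereas kit A measures all ten samples.

```python
from statistics import mean

# Enzyme levels from Table 1.1.
kit_a = [8.96, 8.95, 11.37, 12.63, 11.38, 8.36, 6.87, 12.35, 10.32, 11.99]
kit_b = [12.68, 11.37, 12.00, 9.81, 10.35, 11.76, 9.01, 10.83, 8.76, 9.99]

# Hypothetical failure: the five lowest kit B samples are unusable and dropped.
kit_b_usable = sorted(kit_b)[5:]

full = mean(kit_a) - mean(kit_b)
observed = mean(kit_a) - mean(kit_b_usable)
print(f"all samples: {full:.2f}, usable samples only: {observed:.2f}")
# prints: all samples: -0.34, usable samples only: -1.41
```

The estimation procedure (a difference of averages) is unchanged, yet it now targets the difference between all kit A samples and only those kit B samples with high enzyme levels, which is a different estimand.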

Pre-registration

Pre-registration of experiments is an even more severe measure used in conjunction with an analysis plan and is becoming standard in clinical trials. Here, information about the trial, including the analysis plan, procedure to recruit patients, and stopping criteria, is registered at a dedicated website, such as ClinicalTrials.gov or AllTrials.net, and stored in a database. Publications based on the trial then refer to this registration, such that reviewers and readers can compare what the researchers intended to do and what they actually did. A similar portal for pre-clinical and translational research is PreClinicalTrials.eu.

Abelson, R P. 1995. Statistics as principled argument . Lawrence Erlbaum Associates Inc.

Bailar III, J. C. 1981. “Bailar’s laws of data analysis.” Clinical Pharmacology & Therapeutics 20 (1): 113–19.

Cox, D R. 1958. Planning of Experiments . Wiley-Blackwell.

Coxon, Carmen H., Colin Longstaff, and Chris Burns. 2019. “Applying the science of measurement to biology: Why bother?” PLOS Biology 17 (6): e3000338. https://doi.org/10.1371/journal.pbio.3000338 .

Fisher, R. 1938. “Presidential Address to the First Indian Statistical Congress.” Sankhya: The Indian Journal of Statistics 4: 14–17.

Gigerenzer, G. 2002. Adaptive Thinking: Rationality in the Real World . Oxford Univ Press. https://doi.org/10.1093/acprof:oso/9780195153729.003.0013 .

Gigerenzer, G, and J N Marewski. 2014. “Surrogate Science: The Idol of a Universal Method for Scientific Inference.” Journal of Management 41 (2). SAGE Publications: 421–40. https://doi.org/10.1177/0149206314547522 .

Hand, D J. 1996. “Statistics and the theory of measurement.” Journal of the Royal Statistical Society A 159 (3): 445–92. http://www.jstor.org/stable/2983326 .

Kafkafi, Neri, Ilan Golani, Iman Jaljuli, Hugh Morgan, Tal Sarig, Hanno Würbel, Shay Yaacoby, and Yoav Benjamini. 2017. “Addressing reproducibility in single-laboratory phenotyping experiments.” Nature Methods 14 (5): 462–64. https://doi.org/10.1038/nmeth.4259 .

Karp, Natasha A. 2018. “Reproducible preclinical research—Is embracing variability the answer?” PLOS Biology 16 (3): e2005413. https://doi.org/10.1371/journal.pbio.2005413 .

Kilkenny, Carol, William J Browne, Innes C Cuthill, Michael Emerson, and Douglas G Altman. 2010. “Improving Bioscience Research Reporting: The ARRIVE Guidelines for Reporting Animal Research.” PLoS Biology 8 (6): e1000412. https://doi.org/10.1371/journal.pbio.1000412 .

Moher, David, Sally Hopewell, Kenneth F Schulz, Victor Montori, Peter C Gøtzsche, P J Devereaux, Diana Elbourne, Matthias Egger, and Douglas G Altman. 2010. “CONSORT 2010 Explanation and Elaboration: updated guidelines for reporting parallel group randomised trials.” BMJ 340. BMJ Publishing Group Ltd. https://doi.org/10.1136/bmj.c869 .

Sansone, Susanna-Assunta, Peter McQuilton, Philippe Rocca-Serra, Alejandra Gonzalez-Beltran, Massimiliano Izzo, Allyson L. Lister, and Milo Thurston. 2019. “FAIRsharing as a community approach to standards, repositories and policies.” Nature Biotechnology 37 (4): 358–67. https://doi.org/10.1038/s41587-019-0080-8 .

Travers, Justin, Suzanne Marsh, Mathew Williams, Mark Weatherall, Brent Caldwell, Philippa Shirtcliffe, Sarah Aldington, and Richard Beasley. 2007. “External validity of randomised controlled trials in asthma: To whom do the results of the trials apply?” Thorax 62 (3): 219–33. https://doi.org/10.1136/thx.2006.066837 .

Tufte, E. 1997. Visual Explanations: Images and Quantities, Evidence and Narrative . 1st ed. Graphics Press.

Würbel, Hanno. 2017. “More than 3Rs: The importance of scientific validity for harm-benefit analysis of animal research.” Lab Animal 46 (4). Nature Publishing Group: 164–66. https://doi.org/10.1038/laban.1220 .

1.4 Experimental Design and Ethics

Does aspirin reduce the risk of heart attacks? Is one brand of fertilizer more effective at growing roses than another? Is fatigue as dangerous to a driver as speeding? Questions like these are answered using randomized experiments. In this module, you will learn important aspects of experimental design. Proper study design ensures the production of reliable, accurate data.

The purpose of an experiment is to investigate the relationship between two variables. In an experiment, there is the explanatory variable, which affects the response variable. In a randomized experiment, the researcher manipulates the explanatory variable and then observes the response variable. Each value of the explanatory variable used in an experiment is called a treatment.

You want to investigate the effectiveness of vitamin E in preventing disease. You recruit a group of subjects and ask them if they regularly take vitamin E. You notice that the subjects who take vitamin E exhibit better health on average than those who do not. Does this prove that vitamin E is effective in disease prevention? It does not. There are many differences between the two groups compared in addition to vitamin E consumption. People who take vitamin E regularly often take other steps to improve their health: exercise, diet, other vitamin supplements. Any one of these factors could be influencing health. As described, this study does not prove that vitamin E is the key to disease prevention.

Additional variables that can cloud a study are called lurking variables. In order to prove that the explanatory variable is causing a change in the response variable, it is necessary to isolate the explanatory variable. The researcher must design her experiment in such a way that there is only one difference between groups being compared: the planned treatments. This is accomplished by the random assignment of experimental units to treatment groups. When subjects are assigned treatments randomly, potential lurking variables tend to be spread evenly among the groups, especially when the groups are large. At this point the only systematic difference between groups is the one imposed by the researcher. Different outcomes measured in the response variable, therefore, must be a direct result of the different treatments. In this way, an experiment can prove a cause-and-effect connection between the explanatory and response variables.
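The balancing effect of random assignment can be illustrated with a small simulation. The sketch below is not part of the original study description: the lurking variable (`exercises`) and the probabilities 0.8, 0.2, and 0.5 are assumed values chosen only for illustration. It compares how unevenly exercisers are distributed when subjects choose their own treatment and when a coin flip chooses for them.

```python
import random

def simulate(n=10_000, seed=0):
    """Compare self-selected and randomly assigned groups on a lurking variable.

    Hypothetical setup: 'exercises' is a lurking variable. All probabilities
    below are assumed numbers used only for illustration.
    """
    rng = random.Random(seed)
    exercises = [rng.random() < 0.5 for _ in range(n)]

    # Self-selection: exercisers choose the supplement 80% of the time,
    # non-exercisers only 20% of the time.
    self_selected = [rng.random() < (0.8 if e else 0.2) for e in exercises]

    # Random assignment: a fair coin flip, independent of exercise habits.
    randomized = [rng.random() < 0.5 for _ in exercises]

    def exercise_rate(in_treatment, flag):
        # Share of exercisers among subjects whose treatment status equals flag.
        group = [e for e, t in zip(exercises, in_treatment) if t == flag]
        return sum(group) / len(group)

    return {
        "self_selected_gap": exercise_rate(self_selected, True)
        - exercise_rate(self_selected, False),
        "randomized_gap": exercise_rate(randomized, True)
        - exercise_rate(randomized, False),
    }

result = simulate()
print(result)
```

Under these assumptions, the self-selected groups differ sharply in how many members exercise, while the randomly assigned groups are nearly identical in that respect. The gap under randomization is close to zero rather than exactly zero, and it shrinks as the groups get larger.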

Confounding occurs when the effects of multiple factors on a response cannot be separated, for instance, if a student guesses on the even-numbered questions on an exam and sits in a favorite spot on exam day. Why does the student get a high test score on the exam? It could be the lucky guessing, sitting in the favorite spot, or both. Confounding makes it difficult to draw valid conclusions about the effect of each factor on the outcome. The way around this is to change only one factor (treatment) at a time while holding the others fixed. This way, we know which treatment really works.

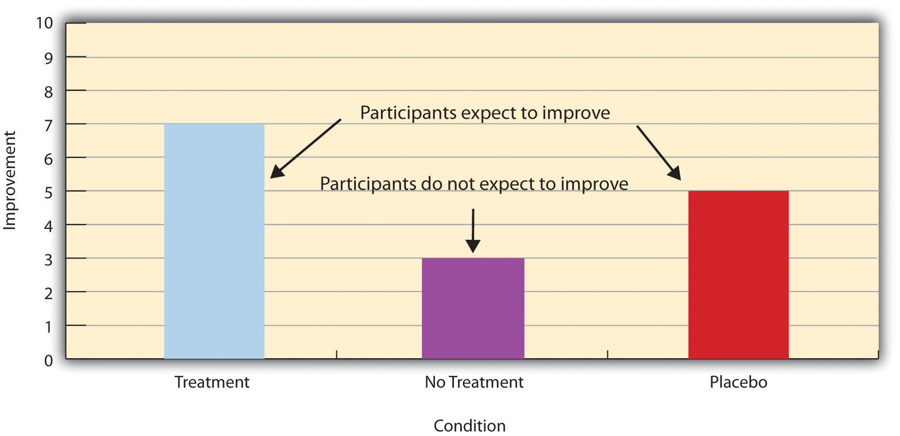

The power of suggestion can have an important influence on the outcome of an experiment. Studies have shown that the expectation of the study participant can be as important as the actual medication. In one study of performance-enhancing substances, researchers noted the following:

Results showed that believing one had taken the substance resulted in [ performance ] times almost as fast as those associated with consuming the substance itself. In contrast, taking the substance without knowledge yielded no significant performance increment. 1

When participation in a study prompts a physical response from a participant, it is difficult to isolate the effects of the explanatory variable. To counter the power of suggestion, researchers set aside one treatment group as a control group. This group is given a placebo treatment, a treatment that cannot influence the response variable. The control group helps researchers balance the effects of being in an experiment with the effects of the active treatments. Of course, if you are participating in a study and you know that you are receiving a pill that contains no actual medication, then the power of suggestion is no longer a factor. Blinding in a randomized experiment is designed to reduce bias by hiding information. When a person involved in a research study is blinded, that person does not know who is receiving the active treatment(s) and who is receiving the placebo treatment. A double-blind experiment is one in which both the subjects and the researchers involved with the subjects are blinded.
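One common way to put blinding into practice is to label treatments with opaque codes and keep the key separate from everyone who interacts with the subjects. The following is a minimal sketch of that idea, not a description of any particular trial's procedure. The function name `blind_allocation`, the `KIT-` code format, and the equal split between groups are all assumptions made for illustration.

```python
import random

def blind_allocation(subject_ids, seed=None):
    """Randomly assign subjects to 'active' or 'placebo' and hide the labels.

    Returns (blinded, key):
      blinded: subject id -> opaque kit code, safe to share with study staff
      key:     kit code -> real treatment, held by a third party until unblinding
    """
    rng = random.Random(seed)
    ids = list(subject_ids)
    rng.shuffle(ids)
    half = len(ids) // 2
    treatments = ["active"] * half + ["placebo"] * (len(ids) - half)

    # Kit codes are distinct random numbers, so they carry no hint of the treatment.
    codes = rng.sample(range(100_000, 1_000_000), len(ids))

    blinded = {sid: f"KIT-{code}" for sid, code in zip(ids, codes)}
    key = {f"KIT-{code}": t for code, t in zip(codes, treatments)}
    return blinded, key

# Hypothetical study with ten subjects numbered 1 to 10.
blinded, key = blind_allocation(range(1, 11), seed=42)
print(blinded[1])        # an opaque code such as KIT-...
print(len(key))          # 10
```

In a double-blind study, subjects and the researchers who work with them would see only `blinded`. The `key` would stay with someone outside the study team until data collection is complete.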

Sometimes, it is neither possible nor ethical for researchers to conduct experimental studies. For example, if you want to investigate whether malnutrition affects elementary school performance in children, it would not be appropriate to assign an experimental group to be malnourished. In these cases, observational studies or surveys may be used. In an observational study, the researcher does not directly manipulate the independent variable. Instead, he or she takes recordings and measurements of naturally occurring phenomena. By sorting these data into control and experimental conditions, the relationship between the dependent and independent variables can be drawn. In a survey, a researcher’s measurements consist of questionnaires that are answered by the research participants.

Example 1.20

Researchers want to investigate whether taking aspirin regularly reduces the risk of a heart attack. 400 men between the ages of 50 and 84 are recruited as participants. The men are divided randomly into two groups: one group will take aspirin, and the other group will take a placebo. Each man takes one pill each day for three years, but he does not know whether he is taking aspirin or the placebo. At the end of the study, researchers count the number of men in each group who have had heart attacks.

Identify the following values for this study: population, sample, experimental units, explanatory variable, response variable, treatments.

The population is men aged 50 to 84. The sample is the 400 men who participated. The experimental units are the individual men in the study. The explanatory variable is oral medication. The treatments are aspirin and a placebo. The response variable is whether a subject had a heart attack.

Example 1.21

The Smell & Taste Treatment and Research Foundation conducted a study to investigate whether smell can affect learning. Subjects completed mazes multiple times while wearing masks. They completed the pencil and paper mazes three times wearing floral-scented masks, and three times with unscented masks. Participants were assigned at random to wear the floral mask during the first three trials or during the last three trials. For each trial, researchers recorded the time it took to complete the maze and the subject’s impression of the mask’s scent: positive, negative, or neutral.

- Describe the explanatory and response variables in this study.

- What are the treatments?

- Identify any lurking variables that could interfere with this study.

- Is it possible to use blinding in this study?

- The explanatory variable is scent, and the response variable is the time it takes to complete the maze.

- There are two treatments: a floral-scented mask and an unscented mask.

- All subjects experienced both treatments. The order of treatments was randomly assigned so there were no differences between the treatment groups. Random assignment eliminates the problem of lurking variables.

- Subjects will clearly know whether they can smell flowers or not, so subjects cannot be blinded in this study. Researchers timing the mazes can be blinded, though. The researcher who is observing a subject will not know which mask is being worn.
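The random ordering of treatments described in this example can be sketched in a few lines of code. The number of subjects (20) and the equal split between the two orders are assumptions, since the study description says only that the order was assigned at random.

```python
import random

ORDERS = (
    ("floral", "unscented"),   # floral mask in trials 1-3
    ("unscented", "floral"),   # floral mask in trials 4-6
)

def assign_orders(subject_ids, seed=None):
    """Randomly give each subject one of the two treatment orders.

    Half of the subjects (rounded down) get the first order and the rest
    get the second, so order effects such as practice or fatigue are
    balanced across the two masks.
    """
    rng = random.Random(seed)
    ids = list(subject_ids)
    rng.shuffle(ids)
    half = len(ids) // 2
    schedule = {}
    for position, sid in enumerate(ids):
        first, second = ORDERS[0] if position < half else ORDERS[1]
        schedule[sid] = [first] * 3 + [second] * 3
    return schedule

# Hypothetical group of 20 subjects numbered 1 to 20.
schedule = assign_orders(range(1, 21), seed=7)
print(schedule[1])
```

Because every subject wears both masks, each person serves as his or her own comparison. Randomizing the order guards against the possibility that subjects simply get faster (or more tired) in later trials.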

Example 1.22

A researcher wants to study the effects of birth order on personality. Explain why this study could not be conducted as a randomized experiment. What is the main problem in a study that cannot be designed as a randomized experiment?

The explanatory variable is birth order. You cannot randomly assign a person’s birth order. Random assignment eliminates the impact of lurking variables. When you cannot assign subjects to treatment groups at random, there will be differences between the groups other than the explanatory variable.

Try It 1.22

You are concerned about the effects of texting on driving performance. Design a study to test the response time of drivers while texting and while driving only. How many seconds does it take for a driver to respond when a leading car hits the brakes?

- Describe the explanatory and response variables in the study.

- What should you consider when selecting participants?

- Your research partner wants to divide participants randomly into two groups: one to drive without distraction and one to text and drive simultaneously. Is this a good idea? Why or why not?

- How can blinding be used in this study?

The widespread misuse and misrepresentation of statistical information often gives the field a bad name. Some say that “numbers don’t lie,” but the people who use numbers to support their claims often do.

A recent investigation of the famous social psychologist Diederik Stapel has led to the retraction of his articles from some of the world’s top journals, including Journal of Experimental Social Psychology, Social Psychology, Basic and Applied Social Psychology, British Journal of Social Psychology, and the magazine Science. Diederik Stapel is a former professor at Tilburg University in the Netherlands. Over the past two years, an extensive investigation involving three universities where Stapel has worked concluded that the psychologist is guilty of fraud on a colossal scale. Falsified data taints over 55 papers he authored and 10 Ph.D. dissertations that he supervised.

Stapel did not deny that his deceit was driven by ambition. But it was more complicated than that, he told me. He insisted that he loved social psychology but had been frustrated by the messiness of experimental data, which rarely led to clear conclusions. His lifelong obsession with elegance and order, he said, led him to concoct results that journals found attractive. “It was a quest for aesthetics, for beauty—instead of the truth,” he said. He described his behavior as an addiction that drove him to carry out acts of increasingly daring fraud . 2

The committee investigating Stapel concluded that he is guilty of several practices including

- creating datasets, which largely confirmed the prior expectations,

- altering data in existing datasets,

- changing measuring instruments without reporting the change, and

- misrepresenting the number of experimental subjects.

Clearly, it is never acceptable to falsify data the way this researcher did. Sometimes, however, violations of ethics are not as easy to spot.

Researchers have a responsibility to verify that proper methods are being followed. The report describing the investigation of Stapel’s fraud states that, “statistical flaws frequently revealed a lack of familiarity with elementary statistics.” 3 Many of Stapel’s co-authors should have spotted irregularities in his data. Unfortunately, they did not know very much about statistical analysis, and they simply trusted that he was collecting and reporting data properly.

Many types of statistical fraud are difficult to spot. Some researchers simply stop collecting data once they have just enough to prove what they had hoped to prove. They don’t want to take the chance that a more extensive study would complicate their lives by producing data contradicting their hypothesis.
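A simulation can show why stopping data collection as soon as the results look favorable is misleading. The sketch below makes several simplifying assumptions that are not in the text: the data are random noise with no real effect, the standard deviation is known to be 1, a two-sided z-test at the 0.05 level is used, and the researcher checks the results after every 10 observations up to a maximum of 100.

```python
import math
import random

def p_value(sample):
    """Two-sided z-test p-value for a mean of 0, assuming a known standard deviation of 1."""
    z = sum(sample) / math.sqrt(len(sample))
    return math.erfc(abs(z) / math.sqrt(2))

def false_positive_rates(n_sims=2000, max_n=100, step=10, alpha=0.05, seed=0):
    rng = random.Random(seed)
    fixed_hits = 0
    peeking_hits = 0
    for _ in range(n_sims):
        # The null hypothesis is true: the data are pure noise around 0.
        data = [rng.gauss(0, 1) for _ in range(max_n)]

        # Honest analysis: one test on the full, pre-planned sample.
        if p_value(data) < alpha:
            fixed_hits += 1

        # Optional stopping: test after every `step` observations and
        # stop as soon as the result looks "significant".
        if any(p_value(data[:n]) < alpha for n in range(step, max_n + 1, step)):
            peeking_hits += 1
    return fixed_hits / n_sims, peeking_hits / n_sims

fixed_rate, peeking_rate = false_positive_rates()
print(f"fixed sample size:     {fixed_rate:.3f}")
print(f"stop when significant: {peeking_rate:.3f}")
```

Even though there is no real effect in the simulated data, the researcher who keeps checking and stops at the first favorable result declares a finding noticeably more often than the researcher who commits to the sample size in advance.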

Professional organizations, like the American Statistical Association, clearly define expectations for researchers. There are even laws in the federal code about the use of research data.

When a statistical study uses human participants, as in medical studies, both ethics and the law dictate that researchers should be mindful of the safety of their research subjects. The U.S. Department of Health and Human Services oversees federal regulations of research studies with the aim of protecting participants. When a university or other research institution engages in research, it must ensure the safety of all human subjects. For this reason, research institutions establish oversight committees known as Institutional Review Boards (IRB) . All planned studies must be approved in advance by the IRB. Key protections that are mandated by law include the following:

- Risks to participants must be minimized and reasonable with respect to projected benefits.

- Participants must give informed consent . This means that the risks of participation must be clearly explained to the subjects of the study. Subjects must consent in writing, and researchers are required to keep documentation of their consent.

- Data collected from individuals must be guarded carefully to protect their privacy.

These ideas may seem fundamental, but they can be very difficult to verify in practice. Is removing a participant’s name from the data record sufficient to protect privacy? Perhaps the person’s identity could be discovered from the data that remains. What happens if the study does not proceed as planned and risks arise that were not anticipated? When is informed consent really necessary? Suppose your doctor wants a blood sample to check your cholesterol level. Once the sample has been tested, you expect the lab to dispose of the remaining blood. At that point the blood becomes biological waste. Does a researcher have the right to take it for use in a study?
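The question of whether removing names is enough can be explored with a simple check: count how many records are the only one with their particular combination of remaining details. The sketch below uses made-up records, and the choice of fields treated as identifying (`zip`, `birth_year`, `sex`) is an assumption for illustration.

```python
from collections import Counter

def unique_on(records, quasi_identifiers):
    """Return the records whose combination of quasi-identifier values is unique."""
    def key(record):
        return tuple(record[field] for field in quasi_identifiers)
    counts = Counter(key(r) for r in records)
    return [r for r in records if counts[key(r)] == 1]

# Made-up records with names already removed.
records = [
    {"zip": "10001", "birth_year": 1980, "sex": "F", "cholesterol": 190},
    {"zip": "10001", "birth_year": 1980, "sex": "F", "cholesterol": 225},
    {"zip": "10001", "birth_year": 1975, "sex": "M", "cholesterol": 240},
    {"zip": "10002", "birth_year": 1992, "sex": "F", "cholesterol": 170},
]

at_risk = unique_on(records, ["zip", "birth_year", "sex"])
print(len(at_risk))  # 2
```

The two records flagged here are the only ones in the data set with their combination of ZIP code, birth year, and sex. Anyone who knows those details about a person could find that person's cholesterol level, even though no names appear in the data.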

It is important that students of statistics take time to consider the ethical questions that arise in statistical studies. How prevalent is fraud in statistical studies? You might be surprised—and disappointed. There is a website dedicated to cataloging retractions of study articles that have been proven fraudulent. A quick glance will show that the misuse of statistics is a bigger problem than most people realize.

Vigilance against fraud requires knowledge. Learning the basic theory of statistics will empower you to analyze statistical studies critically.

Example 1.23

Describe the unethical behavior in each example and describe how it could impact the reliability of the resulting data. Explain how the problem should be corrected.

A researcher is collecting data in a community.

- She selects a block where she is comfortable walking because she knows many of the people living on the street.

- No one seems to be home at four houses on her route. She does not record the addresses and does not return at a later time to try to find residents at home.

- She skips four houses on her route because she is running late for an appointment. When she gets home, she fills in the forms by selecting random answers from other residents in the neighborhood.

- By selecting a convenience sample, the researcher is intentionally selecting a sample that could be biased. Claiming that this sample represents the community is misleading. The researcher needs to select areas in the community at random.

- Intentionally omitting relevant data will create bias in the sample. Suppose the researcher is gathering information about jobs and child care. By ignoring people who are not home, she may be missing data from working families that are relevant to her study. She needs to make every effort to interview all members of the target sample.