6 repositories to share your research data.

Why share research data?

Sharing information stimulates science. When researchers choose to make their data publicly available, they are allowing their work to contribute far beyond their original findings.

The benefits of data sharing are immense. When researchers make their data public, they increase transparency and trust in their work, they enable others to reproduce and validate their findings, and ultimately, contribute to the pace of scientific discovery by allowing others to reuse and build on top of their data.

"If I have seen further it is by standing on the shoulders of Giants." Isaac Newton, 1675.

While the benefits of data sharing and open science are clear, sadly 86% of medical research data is never reused. A 2014 survey conducted by Wiley of over 2,000 researchers across different fields found that 21% of respondents did not know where to share their data and 16% did not know how to do so.

In a series of articles on Data Sharing we seek to break down this process for you and cover everything you need to know on how to share your research outputs.

In this first article, we will introduce essential concepts of public data and share six powerful platforms to upload and share datasets.

What is a Research Data Repository?

The best way to publish and share research data is with a research data repository. A repository is an online database that allows research data to be preserved across time and helps others find it.

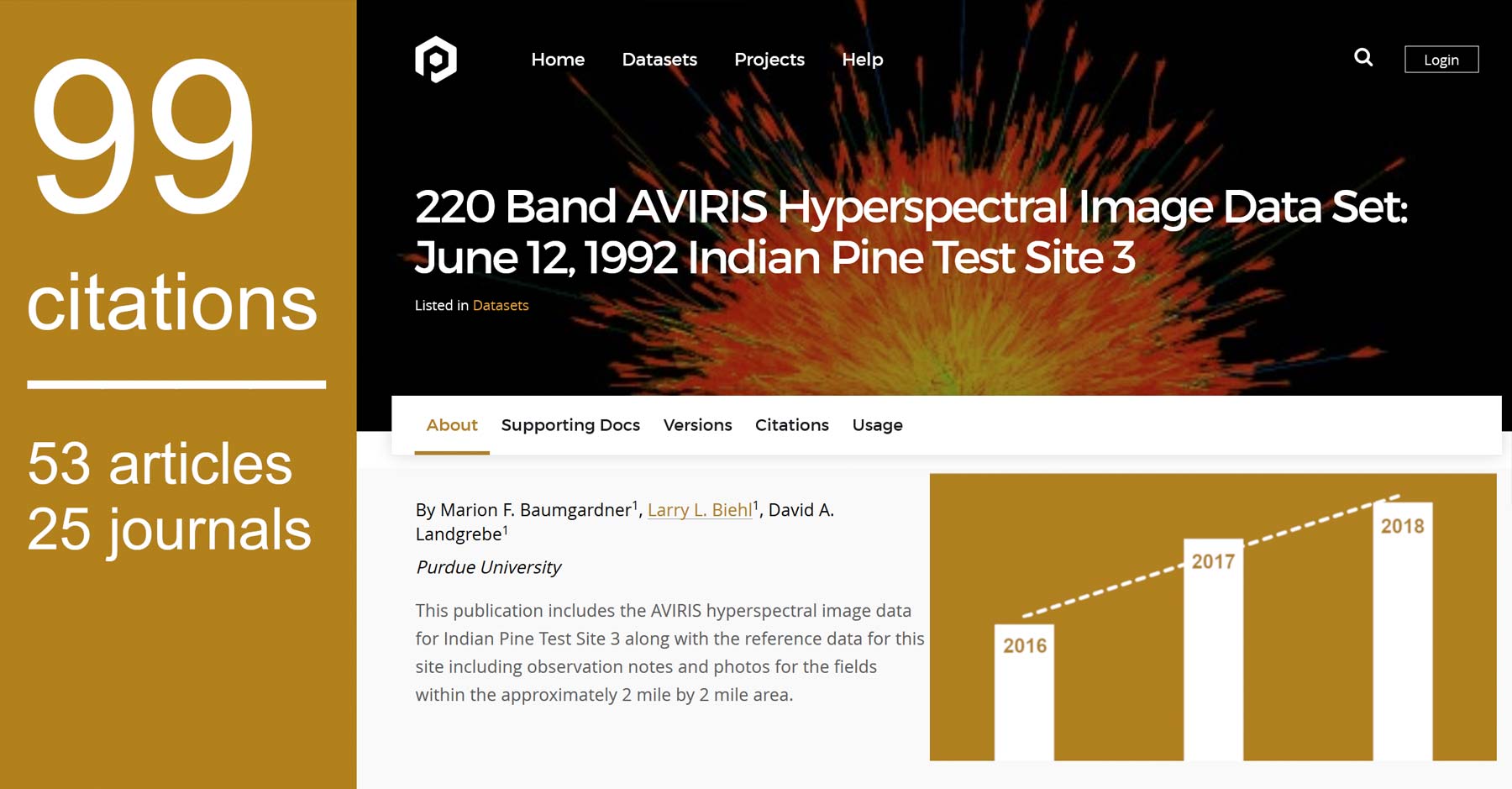

Apart from archiving research data, a repository will assign a DOI to each uploaded object and provide a web page that tells what it is, how to cite it and how many times other researchers have cited or downloaded that object.

What is a DOI?

When a researcher uploads a document to an online data repository, a digital object identifier (DOI) will be assigned. A DOI is a globally unique and persistent string (e.g. 10.6084/m9.figshare.7509368.v1) that identifies your work permanently.

A data repository can assign a DOI to any document, such as a spreadsheet, an image or a presentation, and at different levels of hierarchy, like a collection of images or a specific chapter in a book.

Each DOI is registered together with metadata that gives users relevant information about an object, such as the title, author, keywords, year of publication and the URL where that document is stored.
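To make the structure of a DOI concrete, here is a minimal Python sketch that splits a DOI into its prefix (which identifies the registrant) and its suffix (which identifies the object), and builds the resolver link through https://doi.org. The names `parse_doi` and `Doi` are illustrative, and the pattern is a common heuristic that assumes a registrant code of four to nine digits; it is not an official validator.

```python
import re
from typing import NamedTuple

# Heuristic: "10." + registrant code (assumed 4-9 digits) + "/" + suffix.
DOI_PATTERN = re.compile(r"^(10\.\d{4,9}(?:\.\d+)*)/(\S+)$")


class Doi(NamedTuple):
    prefix: str  # identifies the registrant, e.g. a repository
    suffix: str  # identifies the object, chosen by the registrant

    @property
    def url(self) -> str:
        # The doi.org resolver redirects to wherever the object is stored.
        return f"https://doi.org/{self.prefix}/{self.suffix}"


def parse_doi(text: str) -> Doi:
    """Split a DOI string into prefix and suffix, dropping common lead-ins."""
    cleaned = text.strip()
    for lead in ("https://doi.org/", "http://doi.org/", "doi:"):
        if cleaned.lower().startswith(lead):
            cleaned = cleaned[len(lead):]
            break
    match = DOI_PATTERN.match(cleaned)
    if match is None:
        raise ValueError(f"not a DOI: {text!r}")
    return Doi(match.group(1), match.group(2))


example = parse_doi("10.6084/m9.figshare.7509368.v1")
print(example.prefix)
print(example.suffix)
print(example.url)
```

Because the resolver link is derived from the identifier alone, the link keeps working even if the repository later moves the file to a different address.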

The International DOI Foundation (IDF) developed and introduced the DOI in 2000. Registration Agencies, a federation of independent organizations, register DOIs and provide the necessary infrastructure that allows researchers to declare and maintain metadata.

Key benefits of the DOI system:

- A more straightforward way to track research outputs

- Gives certainty to scientific work

- DOI's versioning system tracks changes to work over time

- Can be assigned to any document

- Enables proper indexation and citation of research outputs

Once a document has a DOI, others can easily cite it. A handy tool to convert DOIs into citations is DOI Citation Formatter.
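For readers who prefer a script, a formatted citation can also be requested from the doi.org resolver through content negotiation, which DOIs registered with agencies such as Crossref and DataCite support. The sketch below only builds the request; `citation_request` and `fetch_citation` are illustrative names, and the set of available citation styles depends on the registration agency.

```python
import urllib.request


def citation_request(doi: str, style: str = "apa") -> urllib.request.Request:
    """Build a request asking the DOI resolver for a formatted citation."""
    return urllib.request.Request(
        f"https://doi.org/{doi}",
        # This media type asks for a ready-made bibliography entry.
        headers={"Accept": f"text/x-bibliography; style={style}"},
    )


def fetch_citation(doi: str, style: str = "apa", timeout: float = 10.0) -> str:
    """Send the request (needs network access) and return the citation text."""
    request = citation_request(doi, style)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read().decode("utf-8").strip()


request = citation_request("10.6084/m9.figshare.7509368.v1")
print(request.full_url)
print(request.get_header("Accept"))
```

Calling `fetch_citation("10.6084/m9.figshare.7509368.v1")` would perform the actual lookup; it is left out of the example so that the snippet runs without a network connection.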

Six repositories to share research data

Now that we have covered the role of a DOI and a data repository, below is a list of 6 data repositories for publishing and sharing research data.

1. figshare

Figshare is an open access data repository where researchers can preserve their research outputs, such as datasets, images, and videos and make them discoverable.

Figshare allows researchers to upload any file format and assigns a digital object identifier (DOI) for citations.

Mark Hahnel launched Figshare in January 2011. Hahnel first developed the platform as a personal tool for organizing and publishing the outputs of his PhD in stem cell biology. More than 50 institutions now use this solution.

Figshare releases 'The State of Open Data' every year to assess the changing academic landscape around open research.

Free accounts on Figshare can upload files of up to 5 GB and get 20 GB of free storage.

2. Mendeley Data

Mendeley Data is an open research data repository, where researchers can store and share their data. Datasets can be shared privately between individuals, as well as publicly with the world.

Mendeley's mission is to facilitate data sharing. In their own words, "when research data is made publicly available, science benefits:

- the findings can be verified and reproduced

- the data can be reused in new ways

- discovery of relevant research is facilitated

- funders get more value from their funding investment."

Datasets uploaded to Mendeley Data go into a moderation process where they are reviewed. This ensures the content constitutes research data, is scientific, and does not contain a previously published research article.

Researchers can upload and store their work free of cost on Mendeley Data.

3. Dryad Digital Repository

Dryad is a curated general-purpose repository that makes data discoverable, freely reusable, and citable.

Most types of files can be submitted (e.g., text, spreadsheets, video, photographs, software code) including compressed archives of multiple files.

Since a guiding principle of Dryad is to make its contents freely available for research and educational use, there are no access costs for individual users or institutions. Instead, Dryad supports its operation by charging a US$120 fee each time data is published.

4. Harvard Dataverse

Harvard Dataverse is an online data repository where scientists can preserve, share, cite and explore research data.

The Harvard Dataverse repository is powered by the open-source web application Dataverse, developed by the Institute for Quantitative Social Science at Harvard.

Researchers, journals and institutions may choose to install the Dataverse web application on their own server or use Harvard's installation. Harvard Dataverse is open to all scientific data from all disciplines.

Harvard Dataverse is free and has a limit of 2.5 GB per file and 10 GB per dataset.

5. Open Science Framework

OSF is a free, open-source research management and collaboration tool designed to help researchers document their project's lifecycle and archive materials. It is built and maintained by the nonprofit Center for Open Science.

Each user, project, component, and file is given a unique, persistent uniform resource locator (URL) to enable sharing and promote attribution. Projects can also be assigned digital object identifiers (DOIs) if they are made publicly available.

OSF is a free service.

6. Zenodo

Zenodo is a general-purpose open-access repository developed under the European OpenAIRE program and operated by CERN.

Zenodo was first born as the OpenAIRE orphan records repository, with the mission to provide open science compliance to researchers without an institutional repository, irrespective of their subject area, funder or nation.

Zenodo encourages users to upload their research outputs early in the research lifecycle by allowing uploads to be kept private. Once an associated paper is published, datasets are automatically made open.

Zenodo has no restriction on the file type that researchers may upload and accepts datasets of up to 50 GB.
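As a quick way to compare the size limits quoted in this article, the following Python sketch checks which repositories could hold a given set of files. The figures are the ones stated above and may have changed since publication. Treating Figshare's 20 GB of free storage as a per-dataset cap, and reading "GB" as decimal gigabytes, are simplifying assumptions. Repositories for which the article gives no limit are left out.

```python
from dataclasses import dataclass
from typing import Optional

GB = 1000 ** 3  # assumption: decimal gigabytes


@dataclass(frozen=True)
class Limits:
    per_file_gb: Optional[float]     # None means no per-file limit is quoted
    per_dataset_gb: Optional[float]  # None means no total limit is quoted


# Limits as quoted in this article; check each repository before relying on them.
LIMITS = {
    "Figshare (free account)": Limits(per_file_gb=5, per_dataset_gb=20),
    "Harvard Dataverse": Limits(per_file_gb=2.5, per_dataset_gb=10),
    "Zenodo": Limits(per_file_gb=None, per_dataset_gb=50),
}


def repositories_that_fit(file_sizes_bytes: list[int]) -> list[str]:
    """Return the repositories whose quoted limits accommodate the files."""
    largest = max(file_sizes_bytes, default=0)
    total = sum(file_sizes_bytes)
    fitting = []
    for name, limits in LIMITS.items():
        file_ok = limits.per_file_gb is None or largest <= limits.per_file_gb * GB
        total_ok = limits.per_dataset_gb is None or total <= limits.per_dataset_gb * GB
        if file_ok and total_ok:
            fitting.append(name)
    return fitting


# Two files of 3 GB and 4 GB: too large per file for Harvard Dataverse.
print(repositories_that_fit([3 * GB, 4 * GB]))
```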

Research data can save lives, help develop solutions and maximise our knowledge. Promoting collaboration and cooperation among a global research community is the first step to reduce the burden of wasted research.

Although the waste of research data is an alarming issue with billions of euros lost every year, the future is optimistic. The pressure to reduce the burden of wasted research is pushing journals, funders and academic institutions to make data sharing a strict requirement.

We hope with this series of articles on data sharing that we can light up the path for many researchers who are weighing the benefits of making their data open to the world.

The six research data repositories shared in this article are a practical way for researchers to preserve datasets across time and maximize the value of their work.

Cover image by Copernicus Sentinel data (2019), processed by ESA, CC BY-SA 3.0 IGO.

References:

“Harvard Dataverse,” Harvard Dataverse, https://library.harvard.edu/services-tools/harvard-dataverse

“Recommended Data Repositories.” Nature, https://go.nature.com/2zdLYTz

“DOI Marketing Brochure,” International DOI Foundation, http://bit.ly/2KU4HsK

“Managing and sharing data: best practice for researchers.” UK Data Archive, http://bit.ly/2KJHE53

Wikipedia contributors, “Figshare,” Wikipedia, The Free Encyclopedia, https://en.wikipedia.org/w/index.php?title=Figshare&oldid=896290279 (accessed August 20, 2019).

Walport, M., & Brest, P. (2011). Sharing research data to improve public health. The Lancet, 377(9765), 537–539. https://doi.org/10.1016/s0140-6736(10)62234-9

Foster, E. D., & Deardorff, A. (2017). Open Science Framework (OSF). Journal of the Medical Library Association: JMLA, 105(2), 203–206. https://doi.org/10.5195/jmla.2017.88

Wikipedia contributors, "Zenodo," Wikipedia, The Free Encyclopedia, https://en.wikipedia.org/w/index.php?title=Zenodo&oldid=907771739 (accessed August 20, 2019).

Wikipedia contributors, "Dryad (repository)," Wikipedia, The Free Encyclopedia, https://en.wikipedia.org/w/index.php?title=Dryad_(repository)&oldid=879494242 (accessed August 20, 2019).

“How and Why Researchers Share Data (and Why They Don't),” Liz Ferguson, The Wiley Network, http://bit.ly/31TzVHs

“Frequently Asked Questions,” Mendeley Data, https://data.mendeley.com/faq

Diego Menchaca

Diego is the founder and CEO of Teamscope. He started Teamscope from a scribble on a table. It instantly became his passion project and a vehicle into the unknown. Diego is originally from Chile and lives in Nijmegen, the Netherlands.

Harvard Dataverse

Harvard Dataverse is an online data repository where you can share, preserve, cite, explore, and analyze research data. It is open to all researchers, both inside and out of the Harvard community.

Harvard Dataverse provides access to a rich array of datasets to support your research. It offers advanced searching and text mining in over 2,000 dataverses, 75,000 datasets, and 350,000+ files, representing institutions, groups, and individuals at Harvard and beyond.

The Harvard Dataverse repository runs on the open-source web application Dataverse, developed at the Institute for Quantitative Social Science. Dataverse helps make your data available to others, and allows you to replicate others' work more easily. Researchers, journals, data authors, publishers, data distributors, and affiliated institutions all receive academic credit and web visibility.

Why Create a Personal Dataverse?

- Easy set up

- Display your data on your personal website

- Brand it uniquely as your research program

- Makes your data more discoverable to the research community

- Satisfies data management plans

Terms to know

- A Dataverse repository is the software installation, which then hosts multiple virtual archives called dataverses.

- Each dataverse contains datasets, and each dataset contains descriptive metadata and data files (including documentation and code that accompany the data).

- As an organizing method, dataverses may also contain other dataverses.
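The nesting described in these terms can be pictured as a small tree. The Python sketch below is only a mental model: the class and field names are hypothetical and do not correspond to the Dataverse software's own data model or API.

```python
from dataclasses import dataclass, field


@dataclass
class Dataset:
    title: str
    metadata: dict[str, str] = field(default_factory=dict)  # descriptive metadata
    files: list[str] = field(default_factory=list)          # data, documentation, code


@dataclass
class Dataverse:
    name: str
    datasets: list[Dataset] = field(default_factory=list)
    children: list["Dataverse"] = field(default_factory=list)  # nested dataverses

    def all_datasets(self) -> list[Dataset]:
        """Collect datasets from this dataverse and every dataverse nested in it."""
        found = list(self.datasets)
        for child in self.children:
            found.extend(child.all_datasets())
        return found


# A lab dataverse that contains a project dataverse (all names are made up).
survey = Dataset("Survey 2019", {"author": "A. Researcher"}, ["survey.csv", "README.txt"])
pilot = Dataset("Pilot interviews", {"author": "A. Researcher"}, ["notes.pdf"])
project = Dataverse("Project X", datasets=[pilot])
lab = Dataverse("Example Lab", datasets=[survey], children=[project])

print([dataset.title for dataset in lab.all_datasets()])
```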

What is a research repository, and why do you need one?

Last updated: 31 January 2024

Reviewed by Miroslav Damyanov

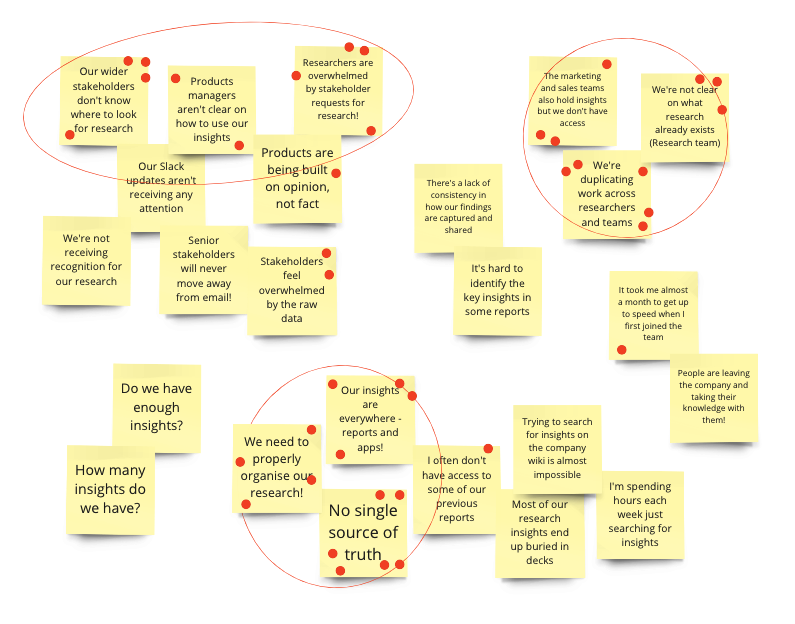

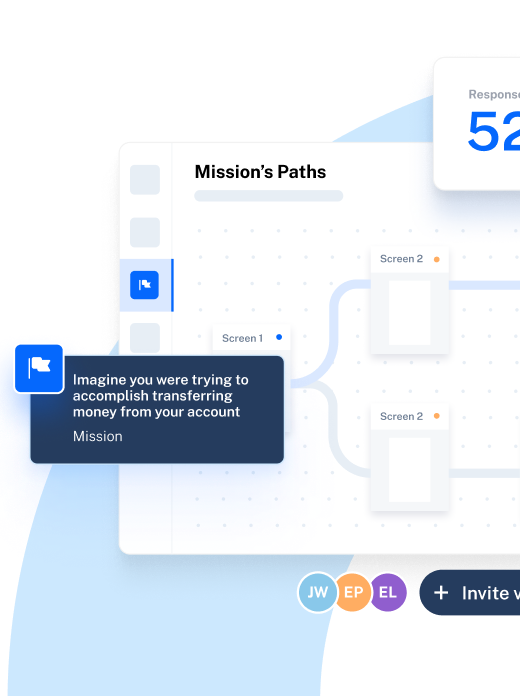

Without one organized source of truth, research can be left in silos, making it incomplete, redundant, and useless when it comes to gaining actionable insights.

A research repository can act as one cohesive place where teams can collate research in meaningful ways. This helps streamline the research process and ensures the insights gathered make a real difference.

- What is a research repository?

A research repository acts as a centralized database where information is gathered, stored, analyzed, and archived in one organized space.

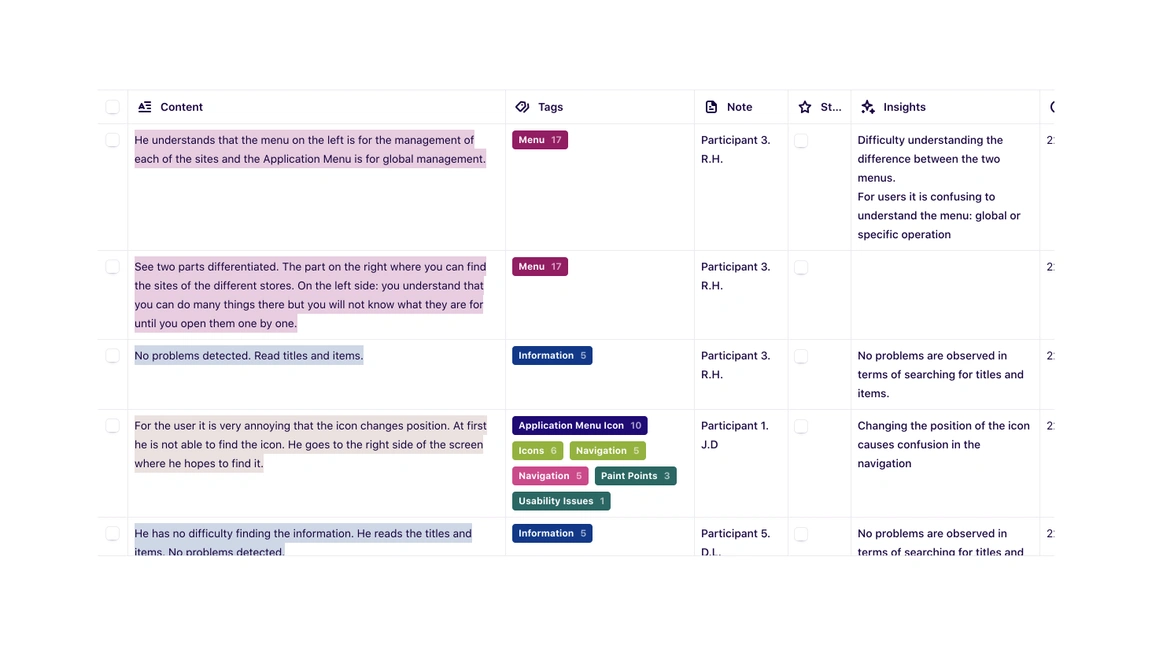

In this single source of truth, raw data, documents, reports, observations, and insights can be viewed, managed, and analyzed. This allows teams to organize raw data into themes, gather actionable insights, and share those insights with key stakeholders.

Ultimately, the research repository can make the research you gain much more valuable to the wider organization.

- Why do you need a research repository?

Information gathered through the research process can be disparate, challenging to organize, and difficult to obtain actionable insights from.

Some of the most common challenges researchers face include the following:

- Information being collected in silos

- No single source of truth

- Research being conducted multiple times unnecessarily

- No seamless way to share research with the wider team

- Reports getting lost and going unread

Without a way to store information effectively, it can become disparate and inconclusive, lacking utility. This can lead to research being completed by different teams without new insights being gathered.

A research repository can streamline the information gathered to address those key issues, improve processes, and boost efficiency. Among other things, an effective research repository can:

Optimize processes: it can ensure the process of storing, searching, and sharing information is streamlined and optimized across teams.

Minimize redundant research: when all information is stored in one accessible place for all relevant team members, the chances of research being repeated are significantly reduced.

Boost insights: having one source of truth boosts the chances of being able to properly analyze all the research that has been conducted and draw actionable insights from it.

Provide comprehensive data: there’s less risk of gaps in the data when it can be easily viewed and understood. The overall research is also likely to be more comprehensive.

Increase collaboration: given that information can be more easily shared and understood, there’s a higher likelihood of better collaboration and positive actions across the business.

- What to include in a research repository

Including the right things in your research repository from the start can help ensure that it provides maximum benefit for your team.

Here are some of the things that should be included in a research repository:

An overall structure

There are many ways to organize the data you collect. To organize it in a way that’s valuable for your organization, you’ll need an overall structure that aligns with your goals.

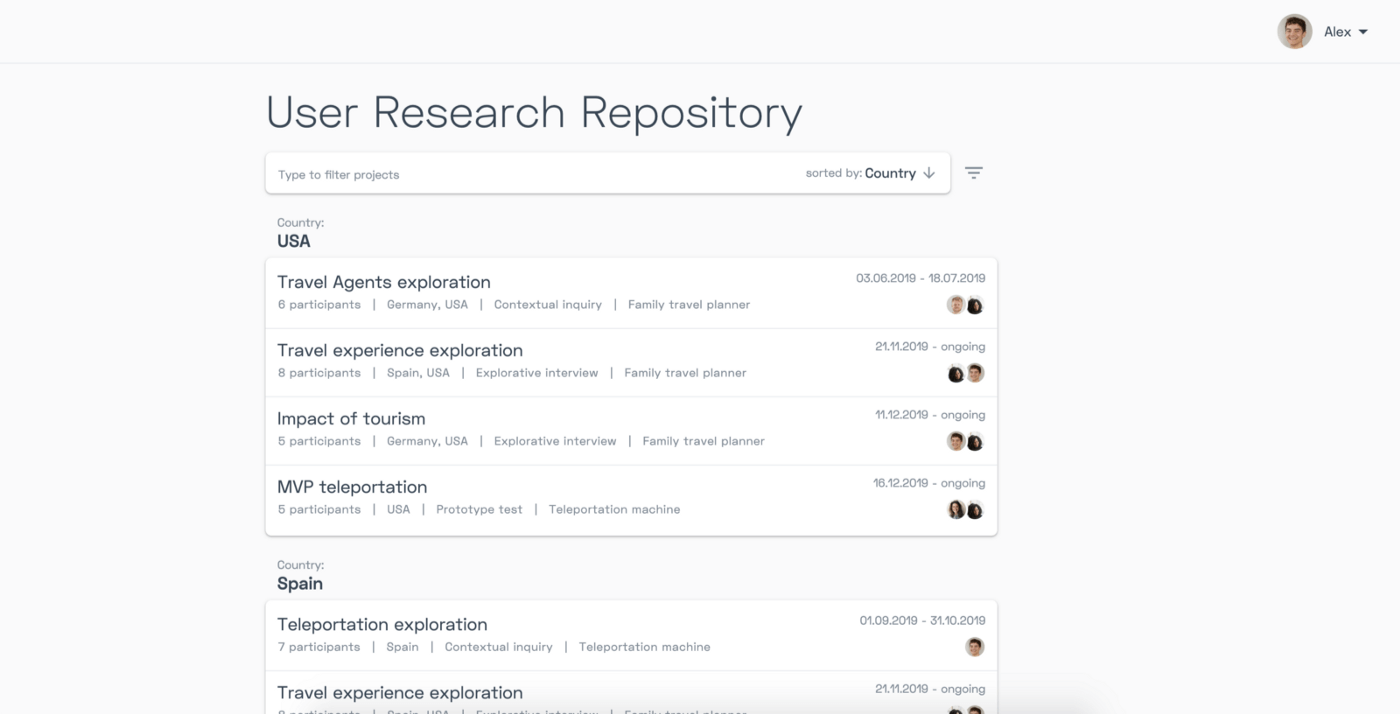

You might wish to organize projects by research type, project, department, or when the research was completed. This will help you better understand the research you’re looking at and find it quickly.

Including information about the research—such as authors, titles, keywords, a description, and dates—can make searching through raw data much faster and make the organization process more efficient.

All key data and information

It’s essential to include all of the key data you’ve gathered in the repository, including supplementary materials. This prevents information gaps, and stakeholders can easily stay informed. You’ll need to include the following information, if relevant:

- Research and journey maps

- Tools and templates (such as discussion guides, email invitations, consent forms, and participant tracking)

- Raw data and artifacts (such as videos, CSV files, and transcripts)

- Research findings and insights in various formats (including reports, decks, maps, images, and tables)

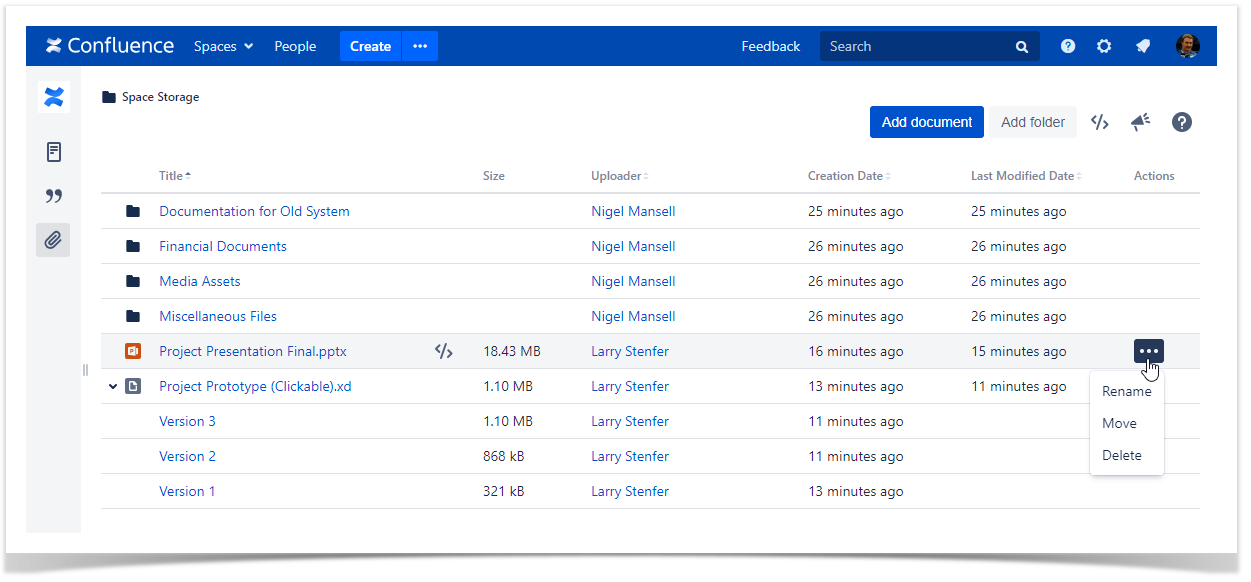

Version control

It’s important to use a system that has version control. This ensures the changes (including updates and edits) made by various team members can be viewed and reversed if needed.

- What makes a good research repository?

The following key elements make up a good research repository that’s useful for your team:

Access: all key stakeholders should be able to access the repository to ensure there’s an effective flow of information.

Actionable insights: a well-organized research repository should help you get from raw data to actionable insights faster.

Effective searchability: searching through large amounts of research can be very time-consuming. To save time, maximize search and discoverability by clearly labeling and indexing information.

Accuracy: the research in the repository must be accurately completed and organized so that it can be acted on with confidence.

Security: when dealing with data, it’s also important to consider security regulations. For example, any personally identifiable information (PII) must be protected. Depending on the information you gather, you may need password protection, encryption, and access control so that only those who need to read the information can access it.

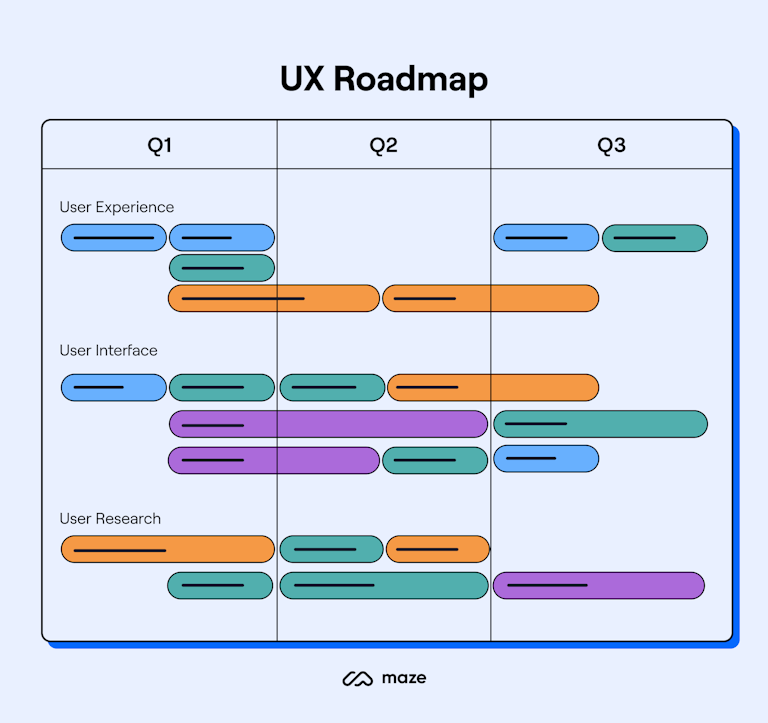

- How to create a research repository

Getting started with a research repository doesn’t have to be convoluted or complicated. Taking time at the beginning to set up the repository in an organized way can help keep processes simple further down the line.

The following six steps should simplify the process:

1. Define your goals

Before diving in, consider your organization’s goals. All research should align with these business goals, and they can help inform the repository.

As an example, your goal may be to deeply understand your customers and provide a better customer experience. Setting out this goal will help you decide what information should be collated into your research repository and how it should be organized for maximum benefit.

2. Choose a platform

When choosing a platform, consider the following:

- Will it offer a single source of truth?

- Is it simple to use?

- Is it relevant to your project?

- Does it align with your business’s goals?

3. Choose an organizational method

To ensure you’ll be able to easily search for the documents, studies, and data you need, choose an organizational method that will speed up this process.

Choosing whether to organize your data by project, date, research type, or customer segment will make a big difference later on.

4. Upload all materials

Once you have chosen the platform and organization method, it’s time to upload all the research materials you have gathered. This also means including supplementary materials and any other information that will provide a clear picture of your customers.

Keep in mind that the repository is a single source of truth. All materials that relate to the project at hand should be included.

5. Tag or label materials

Adding metadata to your materials will help ensure you can easily search for the information you need. While this process can take time (and can be tempting to skip), it will pay off in the long run.

The right labeling will help all team members access the materials they need. It will also prevent redundant research, which wastes valuable time and money.

6. Share insights

For research to be impactful, you’ll need to gather actionable insights. It’s simpler to spot trends, see themes, and recognize patterns when using a repository. These insights can be shared with key stakeholders for data-driven decision-making and positive action within the organization.
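To illustrate why the tagging step pays off, here is a minimal Python sketch of a repository whose items carry metadata and tags and can be filtered by them. It is a toy, in-memory model with hypothetical names (`ResearchItem`, `ResearchRepository`), not how any particular platform works.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class ResearchItem:
    title: str
    authors: list[str]
    created: date
    tags: set[str] = field(default_factory=set)


class ResearchRepository:
    """A toy in-memory store that finds items by their tags."""

    def __init__(self) -> None:
        self._items: list[ResearchItem] = []

    def add(self, item: ResearchItem) -> None:
        self._items.append(item)

    def search(self, *tags: str) -> list[ResearchItem]:
        """Return items carrying every requested tag, ignoring letter case."""
        wanted = {tag.lower() for tag in tags}
        return [
            item for item in self._items
            if wanted <= {tag.lower() for tag in item.tags}
        ]


repo = ResearchRepository()
repo.add(ResearchItem("Onboarding interviews", ["Ana"], date(2023, 3, 1), {"interview", "onboarding"}))
repo.add(ResearchItem("Checkout usability test", ["Ben"], date(2023, 5, 9), {"usability", "checkout"}))
repo.add(ResearchItem("Onboarding survey", ["Ana", "Ben"], date(2023, 6, 2), {"survey", "Onboarding"}))

print([item.title for item in repo.search("onboarding")])
```

Without tags, finding both onboarding studies would mean opening every document, which is the redundant effort that labeling is meant to prevent.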

- Different types of research repositories

There are many different types of research repositories used across organizations. Here are some of them:

Data repositories: these are used to store large datasets to help organizations deeply understand their customers and other information.

Project repositories: data and information related to a specific project may be stored in a project-specific repository. This can help users understand what is and isn’t related to a project.

Government repositories: research funded by governments or public resources may be stored in government repositories. This data is often publicly available to promote transparent information sharing.

Thesis repositories: academic repositories can store information relevant to theses. This allows the information to be made available to the general public.

Institutional repositories: some organizations and institutions, such as universities, hospitals, and other companies, have repositories to store all relevant information related to the organization.

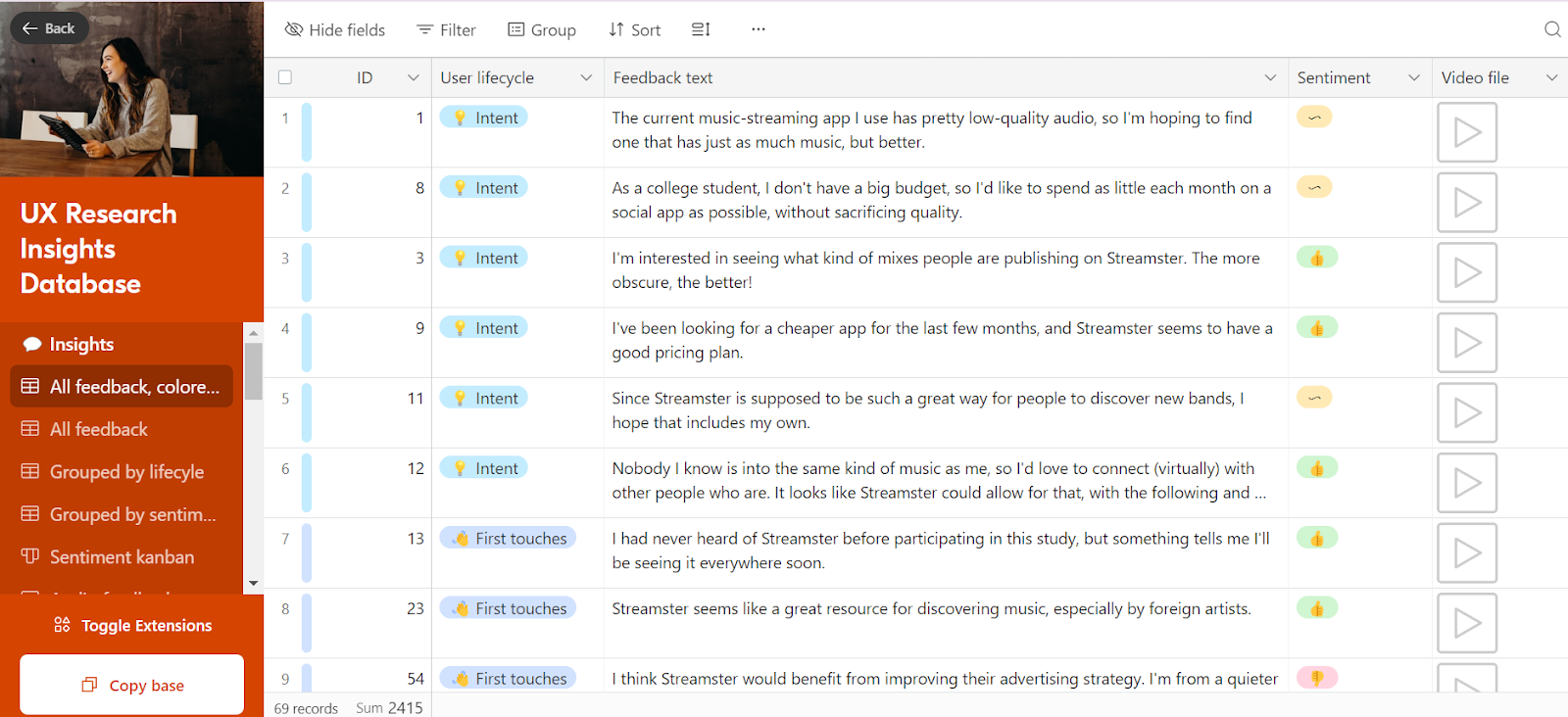

- Build your research repository in Dovetail

With Dovetail, building an insights hub is simple. It functions as a single source of truth where research can be gathered, stored, and analyzed in a streamlined way.

1. Get started with Dovetail

Dovetail is a scalable platform that helps your team easily share the insights you gather for positive actions across the business.

2. Assign a project lead

It’s helpful to have a clear project lead to create the repository. This makes it clear who is responsible and avoids duplication.

3. Create a project

To keep track of data, simply create a project. This is where you’ll upload all the necessary information.

You can create projects based on customer segments, specific products, research methods, or when the research was conducted. The project breakdown will relate back to your overall goals and mission.

4. Upload data and information

Now, you’ll need to upload all of the necessary materials. These might include data from customer interviews, sales calls, product feedback, usability testing, and more. You can also upload supplementary information.

5. Create a taxonomy

Create a taxonomy to organize the data effectively by ensuring that each piece of information will be tagged and organized.

When creating a taxonomy, consider your goals and how they relate to your customers. Ensure those tags are relevant and helpful.

6. Tag key themes

Once the taxonomy is created, tag each piece of information to ensure you can easily filter data, group themes, and spot trends and patterns.

With Dovetail, automatic clustering helps quickly sort through large amounts of information to uncover themes and highlight patterns. Sentiment analysis can also help you track positive and negative themes over time.

7. Share insights

With Dovetail, it’s simple to organize data by themes to uncover patterns and share impactful insights. You can share these insights with the wider team and key stakeholders, who can use them to make customer-informed decisions across the organization.

8. Use Dovetail as a source of truth

Use your Dovetail repository as a source of truth for new and historic data to keep data and information in one streamlined and efficient place. This will help you better understand your customers and, ultimately, deliver a better experience for them.

Open source research data repository software

Participate in a vibrant and growing community that is helping to drive the norms for sharing, preserving, citing, exploring, and analyzing research data. Contribute code extensions, documentation, testing, and/or standards. Integrate research analysis, visualization and exploration tools, or other research and data archival systems with the Dataverse Project.

Dataverse Software 6.3 Release

Dataverse 6.3 is now available. Many thanks to all of the community members who contributed code, suggestions, bug reports, and other assistance across the project.

Release Overview

This release contains a number of updates and new features including:

- New Contributor Guide. The UX Working Group released a new Dataverse Contributor Guide.

- Search Performance Improvements...

Announcing Dataverse 6.2

Dataverse 6.2 is now available! Thank you to the community members who contributed code, suggestions, bug reports, and other assistance across the project.

- Search and Facet by License. Licenses have been added to the search facets in the search side panel to filter datasets by license (e.g. CC0). Licenses can also be used to filter the Search API results.

- When Returning Datasets to Authors, Reviewers Can Add a Note to the Author.

- Open access

- Published: 29 August 2023

re3data – Indexing the Global Research Data Repository Landscape Since 2012

- Heinz Pampel ORCID: orcid.org/0000-0003-3334-2771 1 , 2 ,

- Nina Leonie Weisweiler ORCID: orcid.org/0000-0001-6967-9443 2 ,

- Dorothea Strecker ORCID: orcid.org/0000-0002-9754-3807 1 ,

- Michael Witt 3 ,

- Paul Vierkant 4 ,

- Kirsten Elger 5 ,

- Roland Bertelmann 2 ,

- Matthew Buys 4 ,

- Lea Maria Ferguson 2 ,

- Maxi Kindling 6 ,

- Rachael Kotarski ORCID: orcid.org/0000-0001-6843-7960 7 &

- Vivien Petras 1

Scientific Data volume 10, Article number: 571 (2023)

For more than ten years, re3data, a global registry of research data repositories (RDRs), has been helping scientists, funding agencies, libraries, and data centers with finding, identifying, and referencing RDRs. As the world’s largest directory of RDRs, re3data currently describes over 3,000 RDRs on the basis of a comprehensive metadata schema. The service allows searching for RDRs of any type and from all disciplines, and users can filter results based on a wide range of characteristics. The re3data RDR descriptions are available as Open Data accessible through an API and are utilized by numerous Open Science services. re3data is engaged in various initiatives and projects concerning data management and is mentioned in the policies of many scientific institutions, funding organizations, and publishers. This article reflects on the ten-year experience of running re3data and discusses ten key issues related to the management of an Open Science service that caters to RDRs worldwide.

Introduction

In the 2010s, making research data publicly accessible gained importance: Terms such as e-science 1 and cyberscience 2 were shaping discourses about scientific work in the digital age. Various discussions within the scientific community 3 , 4 , 5 , 6 , 7 , 8 resulted in an increased awareness of the value of permanent access to research data. Policy recommendations of the Organization for Economic Co-operation and Development (OECD) 9 or the European Commission 10 reflected this shift.

The need for professional data management was increasingly emphasized with the publication of the now widely recognized FAIR Data Principles 11 . Researchers, academic institutions, and funders started to address this issue in policies 12 , initiatives and networks 13 , 14 , 15 , and infrastructures 16 , 17 , 18 , 19 . For example, the National Science Foundation (NSF) in the United States published a Data Sharing Policy in 2011, in which the funding agency required beneficiaries to provide information about data handling in a Data Management Plan 20 . In Germany, the German Research Foundation (DFG) published a similar statement regarding access to research data in the 2010s 21 , 22 .

The handling of research data was also discussed in library and computing center communities: In 2009, the German Initiative for Networked Information (DINI), a network of information infrastructure providers, published a position paper on the need for research data management (RDM) at higher education institutions 23 . Through the discussions within DINI, the need for a registry of RDRs became evident. At the time, the Directory of Open Access Repositories (OpenDOAR) 24 had already established itself as a directory of subject and institutional Open Access repositories. However, there was no comparable directory for RDRs, and it remained unclear how many repositories dedicated to research data existed.

In 2011, a consortium of research institutions in Germany submitted a proposal to the German Research Foundation (DFG), asking for funding to develop ‘re3data – Registry of Research Data Repositories’ 25 . Members of the consortium were the Karlsruhe Institute of Technology (KIT), the Humboldt-Universität zu Berlin, and the Helmholtz Open Science Office at the GFZ German Research Centre for Geosciences. The DFG approved the proposal in the same year. The project aimed to develop a service that would help researchers identify suitable RDRs to store their research data. re3data went online in 2012, and already listed 400 RDRs one year later 26 .

While working on the registry, the project team in Germany became aware of a similar initiative in the USA. With support from the Institute of Museum and Library Services, Purdue and Pennsylvania State University libraries developed Databib, a ‘curated, global, online catalog of research data repositories’ 27 . Databib went online in the same year 28 . At the time, RDRs were indexed and curated by library staff at re3data partner institutions, whereas Databib had established an international editorial board to curate RDR descriptions 27 . Databib and re3data signed a Memorandum of Understanding in 2012, and, following excellent cooperation, the two services merged in 2014 29 . The merger brought together successful ideas from each service: The metadata schemas were combined, resulting in version 2.2 of the re3data metadata schema 30 , and the sets of RDR descriptions were merged. The international editorial board of Databib was expanded to include re3data editors. Development of the IT infrastructure of re3data continued, combining the expertise both services had built. For operating the service, a management duo was installed, comprising a member each from institutions representing re3data and Databib.

The two services have always been in close contact with DataCite, an international not-for-profit organization that aims to ensure that research outputs and resources are openly available and connected so that their reuse can advance knowledge across and between disciplines, now and in the future 31 . In this process, the main objective was to cover the interests of the global community of operators more comprehensively. In 2015, the DataCite Executive Board and the General Assembly decided to enter into an agreement with re3data, making re3data a DataCite partner service 29 . In 2017, re3data won the Oberly Award for Bibliography in the Agricultural or Natural Sciences from the American Library Association 32 .

Today, re3data is the largest directory of RDRs worldwide, indexing over 3,000 RDRs as of March 2023. re3data is widely used by academic institutions, funding organizations, publishers, journals, and various other stakeholders, such as the European Open Science Cloud (EOSC) and the National Research Data Infrastructure in Germany (NFDI). re3data metadata is also used to monitor and study the landscape of RDRs, and it is reused by numerous tools and services. Third-party-funded projects support the continuous development of the service. Currently, the DFG is funding the development of the service within the project re3data COREF 33 , 34 . In addition, the project partners DataCite and KIT bring the re3data perspective into EOSC projects such as FAIRsFAIR (completed) 35 and FAIR-IMPACT 29 .

This article outlines the decade-long experience of managing a widely used registry that supports a diverse and global community of stakeholders. The article is clustered around ten key issues that have emerged over time. For each of the ten issues, we first present a brief definition from the perspective of re3data. We then describe our approach to addressing the issue, and finally, we offer a reflection on our work.

This section outlines ten key issues that have emerged in the last ten years of operating re3data.

Openness

For re3data, Open Science means providing unrestricted access to the re3data metadata and schema, transparency of the indexing process, as well as open communication with the community of global RDRs.

At all times, re3data has been committed to Open Science by striving to be transparent and by sharing metadata. The openness of re3data pertains not only to the handling of its metadata and the associated infrastructure, but also to collaborative engagements with the community of research data stewards and other stakeholders in the field of research data management.

An example of this is the development of the re3data metadata schema: The initial version of the schema integrated a request for comments that allowed stakeholders to offer suggestions and improvements 26 . This participatory approach, accompanied by a public relations campaign, has yielded positive outcomes. Numerous experts engaged in the request for comments and contributed their perspective and expertise. Based on the positive feedback, we subsequently integrated a participatory phase in further updates of the metadata schema 30 , 36 .

In addition to this general commitment to openness, re3data has made its metadata available under the Creative Commons deed CC0. Because of this highly permissive license, re3data metadata is widely reused by other parties, enabling the development of new and innovative services and tools. Moreover, adaptable Jupyter Notebooks 37 have been published to facilitate the use of the re3data metadata. Additionally, workshops 38 have been arranged to support individuals in working with the notebooks and re3data metadata in general.

As a registry of RDRs, re3data also promotes Open Science by helping researchers find suitable repositories for publishing their data. For researchers who are looking for a repository that supports Open Science practices, re3data offers concise information on repository openness via its icon system. A recent analysis showed that most repositories indexed in re3data are considered ‘open’ 39 .

Lessons learned

The extensive reuse of re3data metadata increases its overall value, and participatory phases allow for incorporating different perspectives and experiences.

Quality assurance

For re3data, quality assurance encompasses all processes to ensure a service that meets the needs of a global community, as well as verifiably high-quality information.

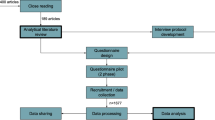

High-quality RDR descriptions are at the core of re3data. Therefore, continuous efforts ensure that re3data metadata describes RDRs appropriately and correctly. Figure 1 shows the editorial process in re3data. Anyone, for example RDR operators, can submit repositories to be indexed in re3data by providing the repository name, URL, and some other core properties via a web form 40 . The re3data editorial board checks whether the suggested RDR conforms with the re3data registration policy 40 . The policy requires that the RDR is operated by a legal entity, such as a library or university, and that the terms of use are clearly communicated. Additionally, the RDR must have a focus on storing and providing access to research data. If an RDR meets these requirements, it is indexed based on the re3data metadata schema. A member of the editorial board creates an initial RDR description, which is then reviewed by another editor. This approach has proven effective in resolving any inconsistencies in interpreting RDR characteristics. An indexing manual explains how the schema is to be applied and helps to ensure consistency between RDR descriptions. Once this review is complete, the RDR description is made publicly visible.

Figure 1. Schematic overview of the editorial process in re3data.
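The registration check and two-editor review described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not re3data's actual tooling: the `Submission` fields, the status labels, and the function names are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Submission:
    """A suggested repository; all field names are hypothetical."""
    name: str
    url: str
    operated_by_legal_entity: bool
    terms_of_use_communicated: bool
    focuses_on_research_data: bool


def meets_registration_policy(s: Submission) -> bool:
    # All three requirements named in the text must hold.
    return (
        s.operated_by_legal_entity
        and s.terms_of_use_communicated
        and s.focuses_on_research_data
    )


def editorial_status(
    s: Submission,
    described_by: Optional[str],
    reviewed_by: Optional[str],
) -> str:
    """Return the stage a submission has reached (labels are illustrative)."""
    if not meets_registration_policy(s):
        return "rejected"
    if described_by is None:
        return "awaiting description"
    # The review has to come from a second, different editor.
    if reviewed_by is None or reviewed_by == described_by:
        return "awaiting review"
    return "published"
```

Keeping the policy check separate from the review state makes it easy to see that a description only becomes publicly visible after both steps have passed.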

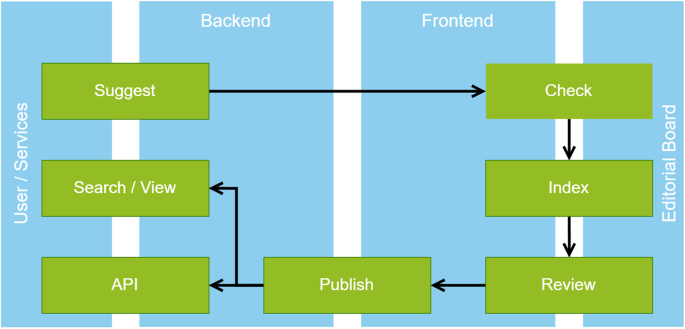

re3data applies a number of measures to ensure the long-term quality and consistency of RDR descriptions, including automated quality checks. For example, automated checks periodically test whether the URLs of an RDR still resolve; if not, the entry of the RDR is reexamined. Figure 2 shows a screenshot of a re3data RDR description.

Figure 2. Screenshot of the re3data description of the research data repository PANGAEA 97 .

The re3data metadata schema on which RDR descriptions are based is reviewed and updated regularly to ensure that users’ changing information needs are met. Operators of an RDR, as well as any other person, can suggest changes to RDR descriptions by submitting a change request. A link for filing a change request can be found at the bottom of each RDR description in re3data. Once a change request has been submitted, a member of the editorial board will review the proposed changes and verify them against information on the RDR website. If the change request is deemed valid, the RDR description will be adapted accordingly.

As part of the project re3data COREF, quality assurance practices at RDRs were systematically investigated. The aim was to understand how RDRs ensure high-quality data, and to better reflect these measures in the metadata schema. The results of the study 41 , which were based on a survey among RDR operators, show that approaches to quality assurance are diverse and depend on the mission and scope of the RDR. However, RDRs are key actors in enabling quality assurance. Furthermore, there is a path dependence of data review on the review process of textual publications. In addition to the study, a workshop 42 , 43 was held with CoreTrustSeal that focused on quality assurance measures RDRs have implemented. CoreTrustSeal is an RDR certification organization launched in 2017 that defines requirements for base-level certification for RDRs 44 .

Lessons learned

Combining manual and automated verification was shown to be most effective in ensuring that RDR descriptions remain consistent while meeting users’ diverse information needs.

Community engagement

For re3data, community engagement encompasses all activities that ensure interaction with the global RDR community in a participatory process.

Collaboration has always been a central principle for re3data. This is reflected in the fact that research communities, RDR providers, and other relevant stakeholders contribute significantly to the completeness and accuracy of the re3data metadata as well as its further technical and conceptual development. Examples include the participatory phase during the revision of the metadata schema, the involvement of important stakeholders in the development of the re3data Conceptual Model for User Stories 45 , 46 , or the activities that investigate data quality assurance at RDRs.

re3data engages in collaborations in various forms with diverse stakeholders, for example:

In collaboration with the Canadian Data Repositories Inventory Project and later with the Digital Research Alliance of Canada, both initiatives aiming at describing the Canadian landscape of RDRs comprehensively, descriptions of Canadian RDRs in re3data were improved, and additional RDRs were indexed 47 , 48 .

A collaboration initiative was initiated in Germany with the Helmholtz Metadata Collaboration (HMC). In this initiative, the descriptions of research data infrastructures within the Helmholtz Association are being reviewed and enhanced 49 .

re3data also engages in international networks, particularly within the Research Data Alliance (RDA). Activities focus on several RDA working and interest groups 50 , 51 , 52 that touch on topics relevant to RDR registries.

Lessons learned

Combining strategies of engagement connects the service to its stakeholders and creates opportunities for collaboration and innovation.

Interoperability

For re3data, interoperability means facilitating interactions and metadata exchange with the global RDR community by relying on established standards.

Interoperability is a necessary condition to integrate a service into a global network of diverse stakeholders. International standards must be implemented to achieve this, for example with the re3data API 53 . The API can be used to query various parameters of an RDR as expressed in the metadata schema. The API enables the machine readability and integration of re3data metadata into other services. The re3data API is based on the RESTful API concept and is well-documented. Applying the HATEOAS principles 54 enables the decoupling of clients and servers, and thus allows for independent development of server functionality. This results in a robust interface that promotes interoperability and reduces barriers to future use. Also, re3data supports OpenSearch, a standard that enables interaction with search results in a format suitable for syndication and aggregation.
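To illustrate how a client might consume a hypermedia-style listing of the kind described above, the sketch below parses a list of repositories and collects the `self` link of each entry, which a client would then follow for the full description. The XML shape, the element names, and the `registry.example.org` URLs are assumptions for illustration only; the re3data API documentation is the authority on the real response format.

```python
import xml.etree.ElementTree as ET
from typing import Dict, List

# Hand-written sample in an assumed shape; not real re3data output.
SAMPLE_LIST = """<list>
  <repository>
    <id>example-0001</id>
    <name>Example Repository A</name>
    <link href="https://registry.example.org/api/repository/example-0001" rel="self"/>
  </repository>
  <repository>
    <id>example-0002</id>
    <name>Example Repository B</name>
    <link href="https://registry.example.org/api/repository/example-0002" rel="self"/>
  </repository>
</list>"""


def parse_repository_list(xml_text: str) -> List[Dict[str, str]]:
    """Extract id, name, and the 'self' link of each listed repository."""
    root = ET.fromstring(xml_text)
    repositories = []
    for node in root.iter("repository"):
        link = node.find("link[@rel='self']")
        repositories.append(
            {
                "id": node.findtext("id", default=""),
                "name": node.findtext("name", default=""),
                # The client follows this link instead of building URLs itself.
                "self": link.get("href", "") if link is not None else "",
            }
        )
    return repositories
```

Because the client reads the detail URL from the response rather than constructing it, the server can change its URL layout without breaking such a client, which is the decoupling the HATEOAS principles aim for.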

Interoperability also guides the development of the metadata schema: Established vocabularies and standards are used to describe RDRs wherever possible. Examples of standards used in the metadata schema include:

ISO 639-3 for language information, for example the language of an RDR name

ISO 8601 for date information on an RDR

DFG Classification of Subject Areas for subject information on an RDR
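As a small illustration of why relying on such standards helps machine processing, the following sketch checks whether two values have the form the standards above prescribe. The function names are hypothetical, the language check only tests the three-letter shape of an ISO 639-3 code rather than looking it up in the official code table, and the date check covers only what Python's `date.fromisoformat` accepts.

```python
import re
from datetime import date

# ISO 639-3 identifiers are three lowercase letters; this tests shape only.
ISO_639_3_SHAPE = re.compile(r"[a-z]{3}")


def looks_like_iso_639_3(code: str) -> bool:
    """Return True if `code` has the three-letter shape of an ISO 639-3 code."""
    return ISO_639_3_SHAPE.fullmatch(code) is not None


def is_iso_8601_date(value: str) -> bool:
    """Return True if `value` parses as an ISO 8601 calendar date."""
    try:
        date.fromisoformat(value)
    except ValueError:
        return False
    return True
```

For example, `is_iso_8601_date("2012-05-28")` is true while `is_iso_8601_date("28.05.2012")` is false, so a consumer of the metadata never has to guess which date convention a record uses.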

In addition, re3data pursues interoperability by jointly working on a mapping between the DFG Classification of Subject Areas used by re3data and the OECD Fields of Science classification used by DataCite 55 .

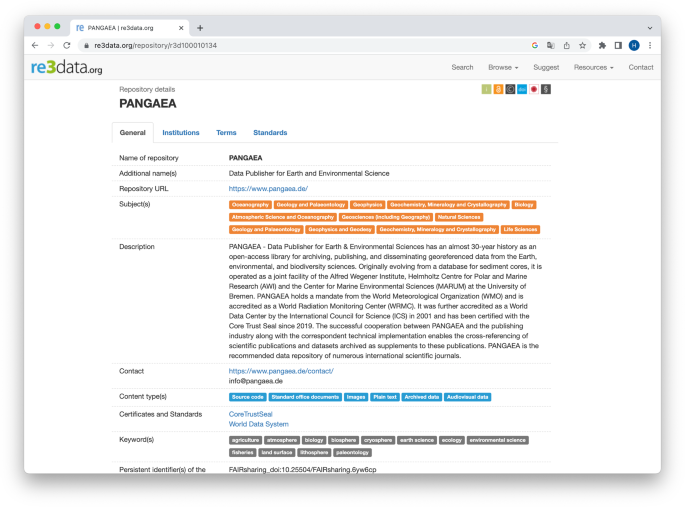

re3data records whether an RDR has obtained formal certification, for example by World Data System (WDS) or CoreTrustSeal. The certification status, along with other properties, is visualized by the re3data icon system that makes the core properties of RDRs easily accessible visually. The icon system provides information about the openness of the RDR and its data collection, the use of PID systems, as well as the certification status. The icon system can also be integrated into RDR websites via badges 56 . Figure 3 shows an example of a re3data badge.

Figure 3. The re3data badge integrated in the research data repository Health Atlas.

re3data captures information that might be relevant to metadata aggregator services, including API URLs, as well as the metadata standard(s) used. In offering this information in a standardized form, re3data fosters the development of services that span multiple collections, such as data portals. For example, as part of the FAIRsFAIR project work, re3data metadata has been integrated into DataCite Commons 57 to embed repository information in the DataCite PID Graph. This step not only improves the discoverability of repositories that support research data management in accordance with the FAIR principles but also serves as a basis for the development of new services such as the FAIR assessment tool F-UJI 35 , 58 .

Lessons learned

The adherence to established standards facilitates the reuse of re3data metadata and increases the integration of the service into the broader Open Science landscape.

Development

For re3data, continuous development ensures that the service is able to respond dynamically to evolving requirements of the global RDR community.

Maintaining a registry for an international community poses a significant challenge, particularly the continued provision of reliable technical operations and a governance structure capable of responding adequately to user demands. re3data has found suitable solutions to these challenges, which have enabled the service to be in operation for more than ten years. The long-standing collaboration with DataCite has contributed to this success. Participation in third-party-funded projects has facilitated the collaborative development of core service elements together with partners. Participation in committees such as those surrounding EOSC and RDA, as well as active engagement with the RDR community, have motivated discussions about changing requirements and led to the continuous evolution of the registry.

Responsibilities for specific tasks are divided among several entities, such as a working group responsible for guiding future directions of the service and the editorial board responsible for maintaining re3data metadata. In addition, there are teams responsible for technology as well as for outreach and communication. The working group includes experts from DataCite and other stakeholders, who discuss current requirements, prioritize developments, and ensure coordination with RDR operators worldwide. In addition to these entities, coordination with third-party-funded projects involving re3data is ongoing.

Lessons learned

Continuous and agile development addresses the users’ constantly evolving needs. Operating a registry that meets those needs in the long term requires flexibility.

Sustainability

For re3data, sustainability means ensuring a long-term and reliable service to the global RDR community.

Maintaining the sustainable operation of a service like re3data beyond an initial project phase is a challenge. For re3data, the consortium model has proven effective, as the service is supported by a wide range of scientific institutions. This model, which is embedded in the governance of re3data, allows the operation of the service to be sustained through self-funding while also enabling important developments to be undertaken within the scope of third-party projects. Thanks to funding received from the DFG (re3data COREF project) and the European Union’s Horizon 2020 program (FAIRsFAIR project), significant investments have been made in the IT infrastructure and overall advancement of the service in recent years.

Lessons learned

A strategy based on diverse revenue streams contributes to securing funding for the service long-term.

Policies

For re3data, being mentioned in policies comes with a responsibility for operating a reliable service and maintaining high-quality metadata for the global RDR community.

During the development of the re3data service, the partners engaged in dialogues with various stakeholders that were interested in using the registry to refer to RDRs in their policies. They might do this, for example, to recommend or mandate the use of RDRs in general for publishing research data, or the use of a specific RDR. Today, re3data is mentioned in the policies of several funding agencies, scientific institutions, and journals. These actors use re3data to identify RDRs that are operated by specific academic institutions, that were developed using funding from a funding organization, or that store data that are the basis of a journal article. Examples of policies and policy guidance documents that refer to re3data:

Academic institutions:

Brandon University, Canada 59

Technische Universität Berlin, Germany 60

University of Edinburgh, United Kingdom 61

University of Eastern Finland 62

Western Norway University of Applied Sciences 63

Funders:

Bill & Melinda Gates Foundation, USA 64

European Commission 65 and ERC, EU 66

National Science Foundation (NSF), USA 67

NIH, USA 68

Journals and Publishers:

Taylor & Francis, United Kingdom 69

Springer Nature, United Kingdom 70

Sage, United Kingdom 71

Wiley, Germany 72

Regular searches are conducted to track mentions of re3data in policies. On the re3data website, a list of policies referring to re3data is maintained and regularly updated 73 .

As a result of being mentioned in policies so frequently, re3data receives inquiries from researchers for information on listed RDRs almost daily. These inquiries are usually forwarded to the RDR directly.

Lessons learned

Policies represent firm support for research data management by academic institutions, funders, and journals and publishers. By facilitating the search for and referencing of RDRs in policies, re3data further promotes Open Science practices.

Data reuse

For re3data, data reuse is one of the main objectives, ensuring that third parties can rely on re3data metadata to build services that support the global RDR community.

Because re3data metadata is published as open data, third parties are free to integrate it into their systems. Several service operators have already taken advantage of this opportunity. In general, there are three types of services that work with re3data metadata:

Services for finding and describing RDRs: These services usually work with a subset of re3data metadata. Sometimes, the data is manually curated, and then integrated into external services based on specific parameters. Examples include:

DARIAH-EU has developed its Data Deposit Recommendation Service based on a subset of re3data metadata, which helps humanities researchers find suitable RDRs 74 , 75 .

The American Geophysical Union (AGU) has utilized re3data metadata to create a dedicated gateway for RDRs in the geosciences with its Repository Finder tool 76 , 77 , which was later incorporated into the DataCite Commons web search interface.

Services for monitoring the landscape of RDRs: These services analyze re3data metadata using specific parameters and visualize the results. Examples include:

OpenAIRE has integrated re3data metadata into its Open Science Observatory to provide information on RDRs that are part of OpenAIRE 78 .

The European Commission operates the Open Science Monitor, a dashboard that analyzes re3data metadata. The following metrics are displayed: number of RDRs by subject, number of RDRs by access type, and number of RDRs by country 79 , 80 .

Services for assessing RDRs: These services use re3data metadata and other data sources to evaluate RDRs more comprehensively. Examples include:

The F-UJI Automated FAIR Data Assessment Tool is a web-based service that assesses the degree to which individual datasets conform to the FAIR Data principles. The tool utilizes re3data metadata to evaluate characteristics of the RDRs that store the datasets 81 .

Charité Metrics Dashboard, a dashboard on responsible research practices from the Berlin Institute of Health at Charité in Berlin, Germany, builds on F-UJI data and combines this information with additional re3data metadata 82 .

These examples underscore the value Open Science tools like re3data generate by making their data openly available without restrictions. As a result of the permissive licensing, re3data metadata can be used for new and innovative applications, establishing re3data as a vital data provider for the global Open Science community.
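The kind of aggregate metrics that monitoring services derive from re3data metadata (number of RDRs by subject, by access type, and by country) can be sketched with a simple counter. The records below are invented for illustration and the field names are hypothetical; they do not reproduce the re3data metadata schema or any real entry.

```python
from collections import Counter
from typing import Any, Dict, Iterable

# Hypothetical, hand-written records; not real re3data entries.
RECORDS = [
    {"subjects": ["Natural Sciences"], "access": "open", "country": "DEU"},
    {
        "subjects": ["Natural Sciences", "Life Sciences"],
        "access": "restricted",
        "country": "USA",
    },
    {
        "subjects": ["Humanities and Social Sciences"],
        "access": "open",
        "country": "DEU",
    },
]


def count_by(records: Iterable[Dict[str, Any]], key: str) -> Counter:
    """Count records per value of `key`; list values count once per item."""
    counts: Counter = Counter()
    for record in records:
        value = record.get(key)
        # A property may hold a single value or a list of values.
        values = value if isinstance(value, list) else [value]
        counts.update(v for v in values if v is not None)
    return counts
```

Calling `count_by(RECORDS, "country")` on the sample yields two repositories for `DEU` and one for `USA`. Note that a repository with several subjects is counted once under each of them, so subject totals can exceed the number of repositories.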

Lessons learned

Permissive licensing and extensive collaboration have turned re3data into a key data provider in the Open Science ecosystem.

Metadata for research

For re3data, providing RDR descriptions also means offering metadata that enables analyses of the global RDR community.

In research disciplines studying data infrastructures, for example library and information science or science and technology studies, re3data is regularly used for information on the state of research infrastructures. As re3data has been mapping the landscape of data infrastructures for ten years, it has evolved into a tool that is used for monitoring Open Science activities, research data management, and other topics. Studies reusing re3data metadata include analyses of the overall RDR landscape, the landscape of RDRs in a specific domain, or the RDR landscape of a region or country. Some examples of studies reusing re3data metadata for research are:

Overall studies: Boyd 83 examined the extent to which RDRs exhibit properties of infrastructures. Khan & Ahangar 84 and Hansson & Dahlgren 85 focused on the openness of RDRs from a global perspective.

Regional studies: Bauer et al . 86 examined Austrian RDRs, Cho 87 Asian RDRs, Milzow et al . 88 Swiss RDRs, and Schöpfel 89 French RDRs.

Domain studies: Gómez et al . 90 and Li & Liu 91 investigated the landscape of RDRs in humanities and social science. Prashar & Chander 92 focused on computer science.

Members of the re3data team have also published studies reusing re3data metadata, including studies of the global state of RDRs 93 , openness 39 , and quality assurance of RDRs 41 .

In response to the demand for information on the RDR landscape, the re3data graphical user interface provides various visualizations of the current state of RDRs. For example, re3data metadata can be browsed visually by subject category and on a map. In addition, the metrics page of re3data shows how RDRs are distributed across central properties of the metadata schema 94 .

The start page of re3data includes a recommendation for how to cite the service if it was used as a source in papers:

re3data - Registry of Research Data Repositories. https://doi.org/10.17616/R3D last accessed: [date].

In citing the service, the use of re3data as a data source in research and the service in general becomes more visible.

Lessons learned

The increasing number of studies reusing re3data metadata shows a real demand for reliable information on the global RDR landscape.

Communications

For re3data, communication means engaging in dialogue with relevant stakeholders in the global RDR community.

Broad-based public relations are very important for a service catering to a global community. In recent years, re3data has pursued a communication strategy that includes the following elements:

Conference presentations: Representing the service at conferences has proven effective, paving new ways to engage with the community.

Mailing lists: The re3data team regularly informs members of a variety of mailing lists about news from the service.

Social media: re3data communicates current developments via Mastodon ( https://openbiblio.social/@re3data ) and Twitter ( https://twitter.com/re3data ).

Help desk: Communication via the help desk is essential for the re3data service. The help desk team answers questions about RDR descriptions, as well as general questions about data management. The number of general inquiries, e.g., for finding a suitable RDR, has increased over the years.

Blog: The project re3data COREF operates a blog that informs about developments in the project 95 . Some blog posts are also published in the DataCite Blog 96 .

Lessons learned

Establishing broad-based communication channels enables the service to reach and engage with relevant stakeholders in a variety of formats.

Over the past ten years, re3data has evolved into a reliable and valuable Open Science service. The service offers high-quality RDR descriptions from all disciplines and regions. re3data is managed cooperatively; new features are developed in third-party projects.

Four basic principles guide the development of re3data: openness, community engagement, high-quality metadata, and ongoing consideration of users’ needs. These principles ensure that the activities of the service align with the values and interests of its stakeholders. In the context of these principles, ten key issues for the operation of the service have emerged over the last ten years.

In the past two years, following in-depth conversations with diverse parties, a new conceptual model for re3data was developed 45 . This process contributed to a better understanding of the needs of RDR operators and other stakeholders. The conceptual model will guide developments of re3data, embedding the service further in the evolving ecosystem of Open Science services with the intention to support researchers, scientific institutions, funding organizations, publishers, and journals in implementing the FAIR principles and realizing an interconnected global research data ecosystem.

This article describes the history and current status of the global registry re3data. Based on operational experience, it reflects on some of the basic principles that have shaped the service since its inception.

Having been launched more than ten years ago, re3data is now the most comprehensive registry of RDRs. The service currently describes more than 3,000 RDRs and caters to a diverse user base including RDR operators, researchers, funding agencies, and publishers. Ten key issues that are relevant for operating an Open Science service like re3data are identified, discussed, and reflected upon: openness, quality assurance, community engagement, interoperability, development, sustainability, policies, data reuse, metadata for research, and communications. For each of the key issues, we provide a definition, explain the approach applied by the re3data service, and describe what the re3data team learned from working on each issue.

Among other aspects, the paper outlines the design, governance, and objectives of re3data, providing important background information on a service that has evolved into a central data source on the global RDR landscape.

Data availability

The re3data RDR descriptions are openly available via https://re3data.org under a CC0 deed.

Code availability

The source code of the directory is not publicly released. The re3data subject ontology and several Jupyter notebooks with examples for using the re3data API can be found at: https://github.com/re3data .

National Science Foundation Cyberinfrastructure Council. Cyberinfrastructure Vision for 21st Century Discovery 2007. https://www.nsf.gov/pubs/2007/nsf0728/nsf0728.pdf (2023).

National Science Foundation. Revolutionizing Science and Engineering through Cyberinfrastructure: Report of the National Science Foundation Blue-Ribbon Advisory Panel on Cyberinfrastructure 2003. https://www.nsf.gov/cise/sci/reports/atkins.pdf (2023).

How to encourage the right behaviour. Nature 416, 1–1 (2002).

Let Data Speak to Data. Nature 438, 531–531 (2005).

The Royal Society. Science as an Open Enterprise https://royalsociety.org/~/media/Royal_Society_Content/policy/projects/sape/2012-06-20-SAOE.pdf (2023).

Data for the masses. Nature 457, 129–129 (2009).

Data’s shameful neglect. Nature 461, 145–145 (2009).

Science Staff. Challenges and opportunities. Science 331, 692–693 (2011).


OECD. OECD Principles and Guidelines for Access to Research Data from Public Funding (2007).

European Commission. Commission Recommendation of 17 July 2012 on Access to and Preservation of Scientific Information (2012).

Wilkinson, M. D. et al. The FAIR guiding principles for scientific data management and stewardship. Scientific Data 3, 160018 (2016).

Pampel, H. & Bertelmann, R. in Handbuch Forschungsdatenmanagement (2011). https://opus4.kobv.de/opus4-fhpotsdam/frontdoor/index/index/docId/195 (2023).

European Commission. European Cloud Initiative - Building a Competitive Data and Knowledge Economy in Europe (2016).

Michener, W. et al. DataONE: Data observation network for earth - preserving data and enabling innovation in the biological and environmental sciences. D-Lib Magazine 17 (2011).

Parsons, M. A. The Research Data Alliance: Implementing the technology, practice and connections of a data infrastructure. Bul. Am. Soc. Info. Sci. Tech. 39, 33–36 (2013).

Borgman, C. L. Big Data, Little Data, No Data: Scholarship in the Networked World (The MIT Press, 2016).

Manghi, P., Manola, N., Horstmann, W. & Peters, D. An Infrastructure for Managing EC Funded Research Output - The OpenAIRE Project 2010. https://publications.goettingen-research-online.de/handle/2/57068 (2023).

Blanke, T., Bryant, M., Hedges, M., Aschenbrenner, A. & Priddy, M. Preparing DARIAH in 2011 IEEE Seventh International Conference on eScience, 158–165 (IEEE, 2011).

Hey, T. & Trefethen, A. in Scientific Collaboration on the Internet (eds Olson, G. M., Zimmerman, A. & Bos, N.) 14–31 (The MIT Press, 2008).

National Science Board. Digital Research Data Sharing and Management 2011. https://www.nsf.gov/nsb/publications/2011/nsb1124.pdf (2023).

Deutsche Forschungsgemeinschaft. Empfehlungen Zur Gesicherten Aufbewahrung Und Bereitstellung Digitaler Forschungsprimärdaten 2009. https://www.dfg.de/download/pdf/foerderung/programme/lis/ua_inf_empfehlungen_200901.pdf (2023).

Allianz der deutschen Wissenschaftsorganisationen. Grundsätze Zum Umgang Mit Forschungsdaten https://doi.org/10.2312/ALLIANZOA.019 (2010).

DINI Working Group Electronic Publishing. Positionspapier Forschungsdaten. https://doi.org/10.18452/1489 (2009).

OpenDOAR. https://beta.jisc.ac.uk/opendoar (2023).

Deutsche Forschungsgemeinschaft. Re3data.Org - Registry of Research Data Repositories. Community Building, Networking and Research Data Management Services GEPRIS. https://gepris.dfg.de/gepris/projekt/209240528?context=projekt&task=showDetail&id=209240528& (2023).

Pampel, H. et al. Making research data repositories visible: The re3data.org registry. PLoS ONE 8 (ed Suleman, H.) e78080 (2013).

Witt, M. Databib: Cataloging the World’s Data Repositories 2013. https://ir.inflibnet.ac.in:8443/ir/handle/1944/1778 (2023).

Witt, M. & Giarlo, M. Databib: IMLS LG-46-11-0091-11 Final Report (White Paper) 2012. https://docs.lib.purdue.edu/libreports/2 (2023).

Buys, M. Strategic Collaboration 2022. https://datacite.org/assets/re3data%20and%20DataCite_openHours.pdf (2023).

Vierkant, P. et al. Metadata Schema for the Description of Research Data Repositories: version 2.2. https://doi.org/10.2312/RE3.006 (2014).

Brase, J. DataCite - A Global Registration Agency for Research Data in 2009 Fourth International Conference on Cooperation and Promotion of Information Resources in Science and Technology, 257–261 (IEEE, 2009).

Witt, M. DataCite’s Re3data Wins Oberly Award from the American Libraries Association https://doi.org/10.5438/0001-0HN* .

Deutsche Forschungsgemeinschaft. Re3data – Offene Und Nutzerorientierte Referenz Für Forschungsdatenrepositorien (Re3data COREF) GEPRIS. https://gepris.dfg.de/gepris/projekt/422587133?context=projekt&task=showDetail&id=422587133& (2023).

re3data COREF. Re3data COREF Project https://coref.project.re3data.org/project (2023).

FAIRsFAIR. Repository Discovery in DataCite Commons https://www.fairsfair.eu/repository-discovery-datacite-commons (2023).

Strecker, D. et al. Metadata Schema for the Description of Research Data Repositories: version 3.1. https://doi.org/10.48440/RE3.010 (2021).

re3data. Examples for using the re3data API GitHub. https://github.com/re3data/using_the_re3data_API (2023).

Schabinger, R., Strecker, D., Wang, Y. & Weisweiler, N. L. Introducing Re3data – the Registry of Research Data Repositories. https://doi.org/10.5281/ZENODO.5592123 (2021).

re3data COREF. How Open Are Repositories in Re3data? https://coref.project.re3data.org/blog/how-open-are-repositories-in-re3data (2023).

re3data. Suggest https://www.re3data.org/suggest (2023).

Kindling, M. & Strecker, D. Data quality assurance at research data repositories. Data Science Journal 21, 18 (2022).

Kindling, M. et al. Report on re3data COREF/CoreTrustSeal workshop on quality management at research data repositories. Informationspraxis 8 (2022).

Kindling, M., Strecker, D. & Wang, Y. Data Quality Assurance at Research Data Repositories: Survey Data (Zenodo, 2022).

L’Hours, H., Kleemola, M. & De Leeuw, L. CoreTrustSeal: From academic collaboration to sustainable services. IASSIST Quarterly 43, 1–17 (2019).

Vierkant, P. et al. Re3data Conceptual Model for User Stories. https://doi.org/10.48440/RE3.012 (2021).

Weisweiler, N. L. et al. Re3data Stakeholder Survey and Workshop Report. https://doi.org/10.48440/RE3.013 (2021).

Webster, P. Integrating Discovery and Access to Canadian Data Sources. Contributing to Academic Library Data Services by Sharing Data Source Knowledge Nation Wide. In collab. with Haigh, S. (2017). https://library.ifla.org/id/eprint/2514/ (2023).

Dearborn, D. et al. Summary Report: Canadian Research Data Repositories and the Re3data Repository Registry. In collab. with Labrador, A. & Purcell, F. (2023).

Helmholtz Open Science Office. Community Building for Research Data Repositories in Helmholtz https://os.helmholtz.de/en/open-science-in-helmholtz/networking/community-building-research-data-repositories/ (2023).

Research Data Alliance. Libraries for Research Data IG https://www.rd-alliance.org/groups/libraries-research-data.html (2023).

Research Data Alliance. Data Repository Attributes WG https://www.rd-alliance.org/groups/data-repository-attributes-wg (2023).

Research Data Alliance. Data Granularity WG https://www.rd-alliance.org/groups/data-granularity-wg (2023).

re3data. API https://www.re3data.org/api/doc (2023).

HATEOAS https://en.wikipedia.org/w/index.php?title=HATEOAS&oldid=1141349344 (2023).

Ninkov, A. B. et al. Mapping Metadata - Improving Dataset Discipline Classification. https://doi.org/10.5281/ZENODO.6948238 (2022).

Pampel, H. Re3data.Org Reaches a Milestone and Begins Offering Badges https://doi.org/10.5438/KTR7-ZJJH .

DataCite. DataCite Commons https://commons.datacite.org/repositories (2023).

Wimalaratne, S. et al. D4.7 Tools for Finding and Selecting Certified Repositories for Researchers and Other Stakeholders. https://doi.org/10.5281/ZENODO.6090418 (2022).

Brandon University. Research Data Management Strategy https://www.brandonu.ca/research/files/Research-Data-Strategy.pdf (2023).

Technical University Berlin. Research Data Policy of TU Berlin https://www.tu.berlin/en/working-at-tu-berlin/important-documents/guidelinesdirectives/research-data-policy (2023).

The University of Edinburgh. Research Data Management Policy https://www.ed.ac.uk/information-services/about/policies-and-regulations/research-data-policy (2023).

University of Eastern Finland. Data management at the end of research https://www.uef.fi/en/datasupport/data-management-at-the-end-of-research (n. d.).

Western Norway University of Applied Sciences. Research Data https://www.hvl.no/en/library/research-and-publish/publishing/research-data/ (2023).

Gates Open Access Policy. Data Sharing Requirements https://openaccess.gatesfoundation.org/how-to-comply/data-sharing-requirements/ (2023).

European Commission. Horizon Europe (HORIZON) - Programme Guide 2022. https://ec.europa.eu/info/funding-tenders/opportunities/docs/2021-2027/horizon/guidance/programme-guide_horizon_en.pdf (2023).

European Research Council. Open Research Data and Data Management Plans - Information for ERC Grantee 2022. https://erc.europa.eu/sites/default/files/document/file/ERC_info_document-Open_Research_Data_and_Data_Management_Plans.pdf (2023).

National Science Foundation. Dear Colleague Letter: Effective Practices for Making Research Data Discoverable and Citable (Data Sharing) https://www.nsf.gov/pubs/2022/nsf22055/nsf22055.jsp (2023).

National Institutes of Health. Repositories for Sharing Scientific Data https://sharing.nih.gov/data-management-and-sharing-policy/sharing-scientific-data/repositories-for-sharing-scientific-data (2023).

Taylor and Francis. Understanding and Using Data Repositories https://authorservices.taylorandfrancis.com/data-sharing/share-your-data/repositories/ (2023).

Scientific Data. Data Repository Guidance https://www.nature.com/sdata/policies/repositories (2023).

SAGE. Research Data Sharing FAQs https://us.sagepub.com/en-us/nam/research-data-sharing-faqs (2023).

Wiley. Data Sharing Policy https://authorservices.wiley.com/author-resources/Journal-Authors/open-access/data-sharing-citation/data-sharing-policy.html (2023).

re3data. Publications https://www.re3data.org/publications (2023).

Buddenbohm, S., de Jong, M., Minel, J.-L. & Moranville, Y. Find research data repositories for the humanities - the data deposit recommendation service. Int. J. Digit. Hum. 1, 343–362 (2021).

DARIAH. DDRS https://ddrs-dev.dariah.eu/ddrs/ (2023).

Witt, M. et al. in Digital Libraries: Supporting Open Science (eds Manghi, P., Candela, L. & Silvello, G.) 86–96 (Springer, 2019).

DataCite. DataCite Repository Selector https://repositoryfinder.datacite.org/ (2023).

OpenAIRE. Open Science Observatory https://osobservatory.openaire.eu/home (2023).

The Lisbon Council. Open Science Monitor Methodological Note 2019. https://research-and-innovation.ec.europa.eu/system/files/2020-01/open_science_monitor_methodological_note_april_2019.pdf (2023).

European Commission. Facts and Figures for Open Research Data https://research-and-innovation.ec.europa.eu/strategy/strategy-2020-2024/our-digital-future/open-science/open-science-monitor/facts-and-figures-open-research-data_en (2023).

Devaraju, A. & Huber, R. F-UJI - An Automated FAIR Data Assessment Tool Zenodo. https://doi.org/10.5281/ZENODO.4063720 (2023).

Berlin Institute of Health. Charité Metrics Dashboard https://quest-dashboard.charite.de/#tabMethods (2023).

Boyd, C. Understanding research data repositories as infrastructures. P. J. Asso. for Info. Science & Tech. 58, 25–35 (2021).

Khan, N. A. & Ahangar, H. Emerging Trends in Open Research Data in 2017 9th International Conference on Information and Knowledge Technology, 141–146 (2017).

Hansson, K. & Dahlgren, A. Open research data repositories: Practices, norms, and metadata for sharing images. J. Asso. for Info. Science & Tech. 73, 303–316 (2022).

Bauer, B. & Ferus, A. Österreichische Repositorien in OpenDOAR und re3data.org: Entwicklung und Status von Infrastrukturen für Green Open Access und Forschungsdaten. Mitteilungen der VÖB 71, 70–86 (2018).

Cho, J. Study of Asian RDR based on re3data. EL 37, 302–313 (2019).

Milzow, K., von Arx, M., Sommer, C., Cahenzli, J. & Perini, L. Open Research Data: SNSF Monitoring Report 2017-2018. https://doi.org/10.5281/ZENODO.3618123 (2020).

Schöpfel, J. in Research Data Sharing and Valorization: Developments, Tendencies, Models (eds Schöpfel, J. & Rebouillat, V.) (Wiley, 2022).

Gómez, N.-D., Méndez, E. & Hernández-Pérez, T. Data and metadata research in the social sciences and humanities: An approach from data repositories in these disciplines. EPI 25, 545 (2016).

Li, Z. & Liu, W. Characteristics Analysis of Research Data Repositories in Humanities and Social Science - Based on Re3data.Org in 4th International Symposium on Social Science (Atlantis Press, 2018).

Prashar, P. & Chander, H. Research Data Management through Research Data Repositories in the Field of Computer Sciences https://ir.inflibnet.ac.in:8443/ir/bitstream/1944/2400/1/43.pdf (2023).