- Get new issue alerts Get alerts

- Submit a Manuscript

Secondary Logo

Journal logo.

Colleague's E-mail is Invalid

Your message has been successfully sent to your colleague.

Save my selection

Data Analysis in Qualitative Research

Ravindran, Vinitha 1,

1 College of Nursing, CMC, Vellore, Tamil Nadu, India

Address for correspondence: Dr. Vinitha Ravindran, College of Nursing, CMC, Vellore, Tamil Nadu, India. E-Mail: [email protected]

This is an open access journal, and articles are distributed under the terms of the Creative Commons Attribution-NonCommercial-ShareAlike 4.0 License, which allows others to remix, tweak, and build upon the work non-commercially, as long as appropriate credit is given and the new creations are licensed under the identical terms.

Data analysis in qualitative research is an iterative and complex process. The focus of analysis is to bring out tacit meanings that people attach to their actions and responses related to a phenomenon. Although qualitative data analysis softwares are available, the researcher is the primary instrument who attempts to bring out these meanings by a deep engagement with the data and the individuals who share their stories. Although different approaches are suggested in different qualitative methods, the basic steps of content analysis that includes preparing the data, reading and reflection, coding, categorising and developing themes are integral to all approaches. The analysis process moves the researcher from describing the phenomenon to conceptualisation and abstraction of themes without losing the voice of the participants which are represented by the findings.

INTRODUCTION

Qualitative data analysis appears simple to those who have limited knowledge of qualitative research approach, but for the seasoned qualitative researcher, it is one of the most difficult tasks. According to Thorn,[ 1 ] it is the complex and elusive part of the qualitative research process. Many challenges that are inherent in the research approach makes the analysis process demanding. The first challenge is to convert the data from visual or auditory recording to textual data. As qualitative approach includes data generation through sharing of experiences, it becomes fundamentally necessary to record data rather than writing down accounts as the stories are shared. Essential data which may become apparent or uncovered when reflecting on audiotaped interviews may be missed or overlooked if interviews are not recorded.[ 2 ] Although field notes are written they often augment the experiences conveyed by participants rather than being the primary data source. Therefore, the researcher needs to spend effort and time to record as well as transcribe data to texts which can be analysed.

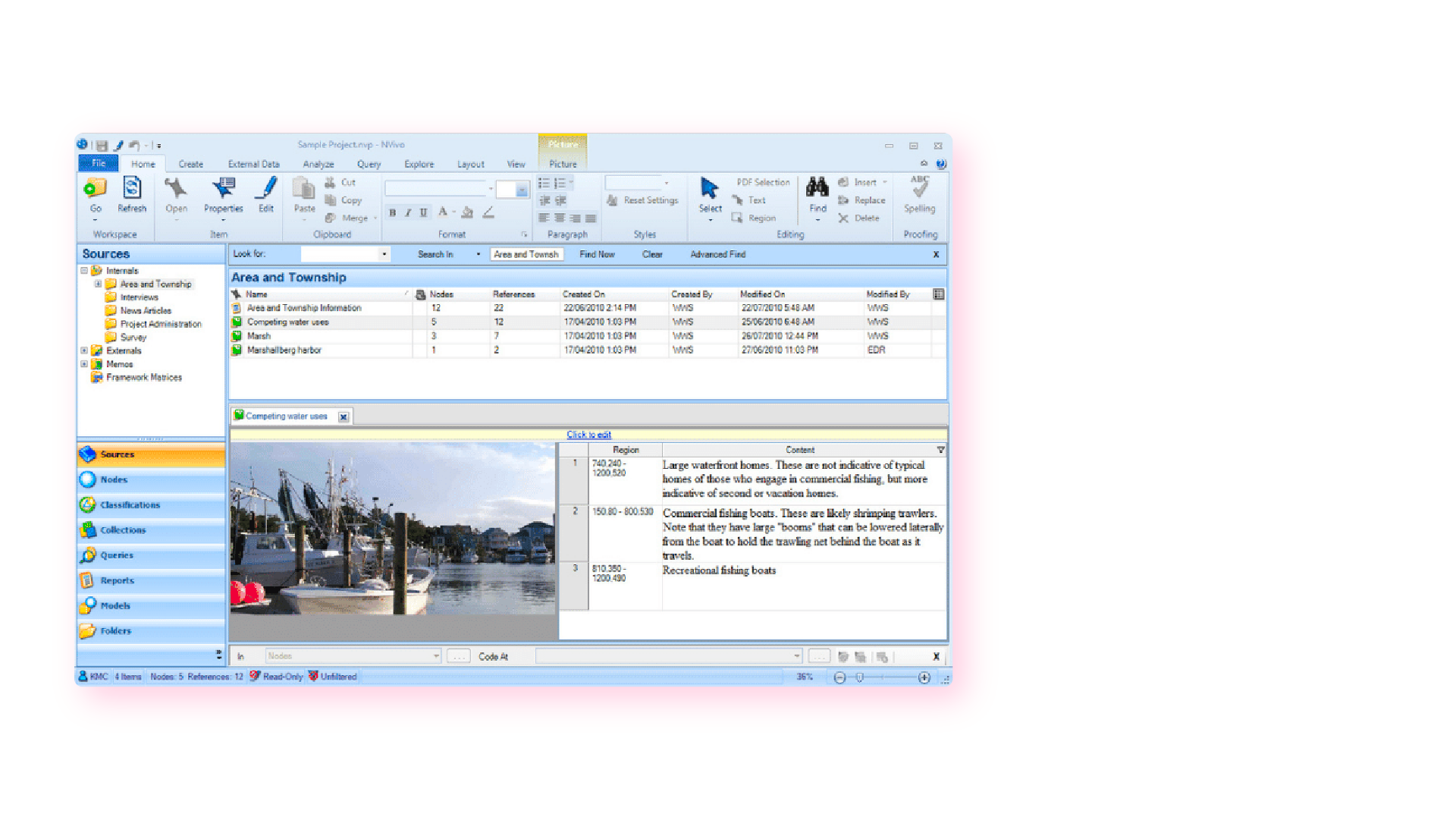

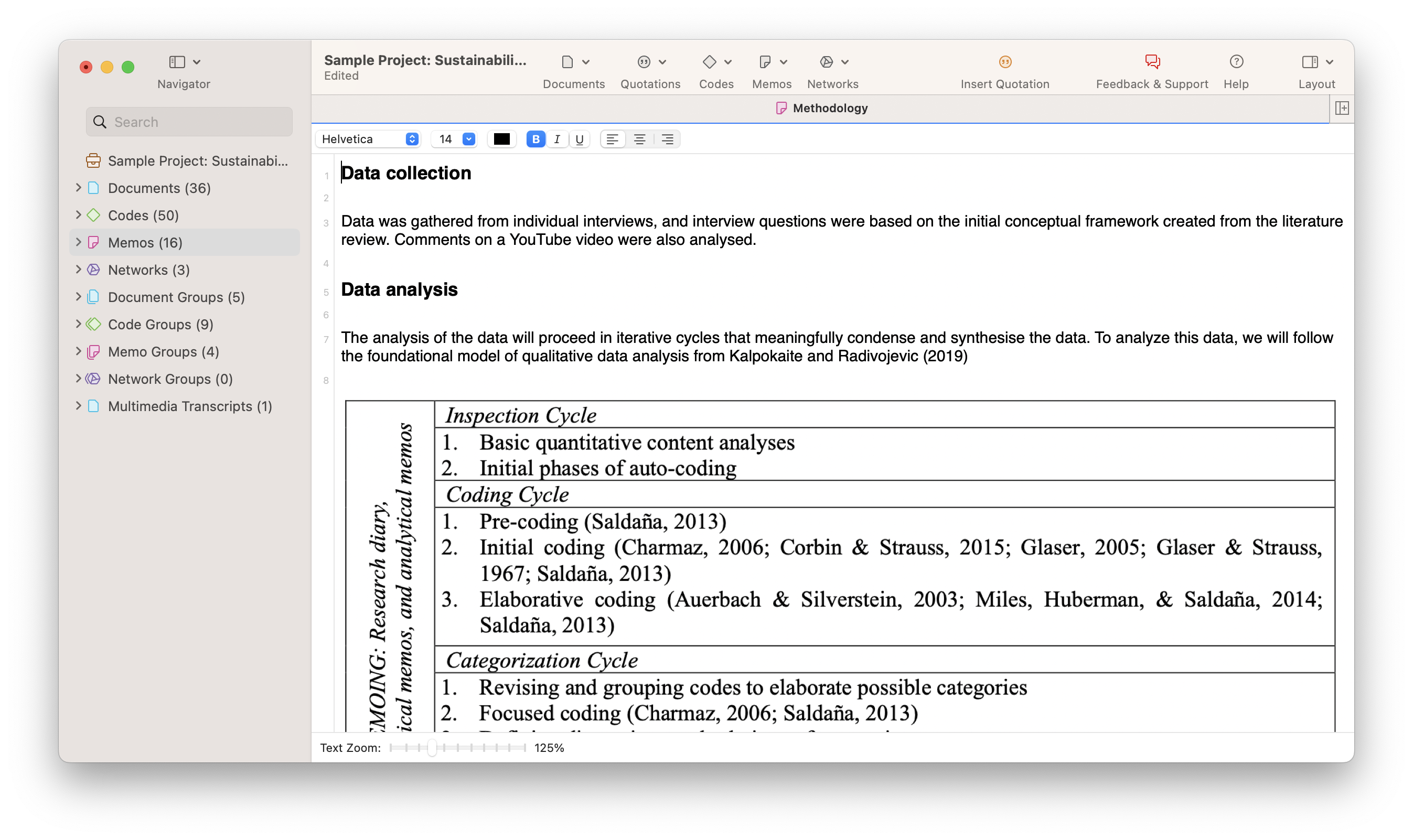

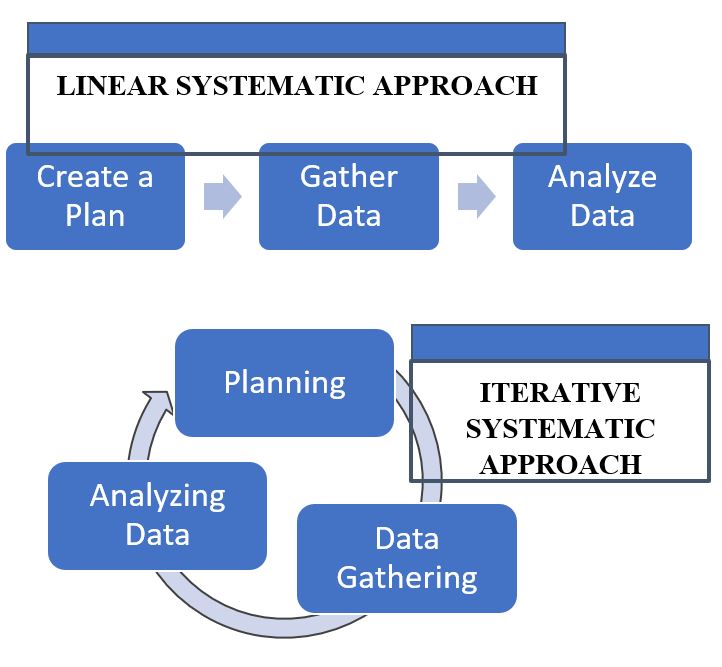

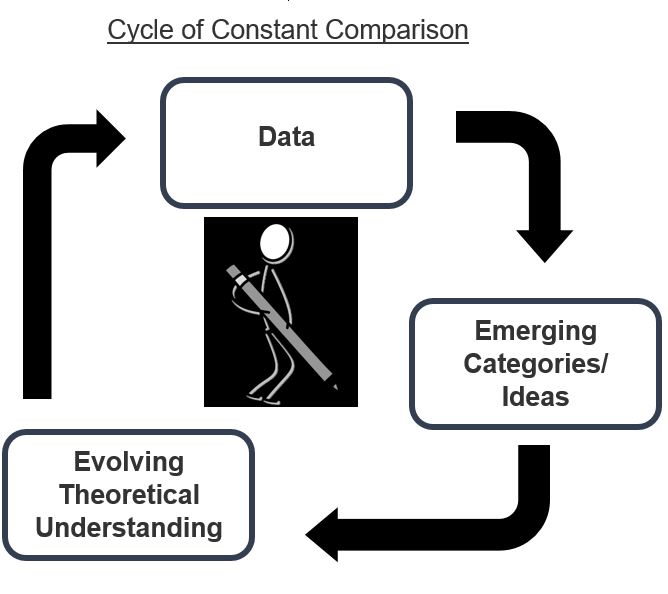

The second challenge is managing the quantum of textual data. One hour of interview may produce 20–40 pages of text. Even with fewer participants as generally is in qualitative research, the researcher may have many pages of data which need coding and analysing. Although software packages such as NVivo and Atlas-ti are available, they only help to organise, sort and categorise data and will not give meaning to the text.[ 3 ] The researcher has to read, reflect, compare and analyse data. The categories and themes have to be brought forth by the researcher. The third challenge is doing data generation and data analysis at the same time. Concurrent data generation and analysis is a predominant feature in qualitative research. An iterative or cyclic method of data collection and analysis is emphasised in qualitative approach. What it means is that as the researcher collects data, the analysis process is also initiated. The researcher does not wait to complete collecting data and then do analysis.[ 2 ] Iterative process enhances the researcher to focus on emerging concepts and categories in subsequent interviews and observations. It enables the researcher to address the gaps in the data and get information to saturate the gaps in subsequent contacts with earlier or new research participants. Sufficient time and resources are needed for sustaining the iterative process throughout the research process.

The above challenges are mentioned at the beginning of this article not to discourage the researchers but to emphasise the complexity of data analysis which has to be seriously considered by all researchers who are interested in doing qualitative research. In addition to the general challenges, data analysis in qualitative research also varies between different approaches and designs. There is also the possibility of flexibility and fluidity that enhances the researcher to choose different approaches to analysis, either one specific approach or a combination of approaches.[ 4 ] The framework for the analysis should, however, be made explicit at the beginning of the analysis. In qualitative research, the researcher is a bricoleur (weaver of stories) who is creating a bricolage.[ 5 ]

CHARACTERISTICS OF DATA ANALYSIS

In qualitative data analysis.

- Researcher attempts to understand the meaning behind actions and behaviours of participants

- Researcher becomes the instrument to generate data and ask analytical questions

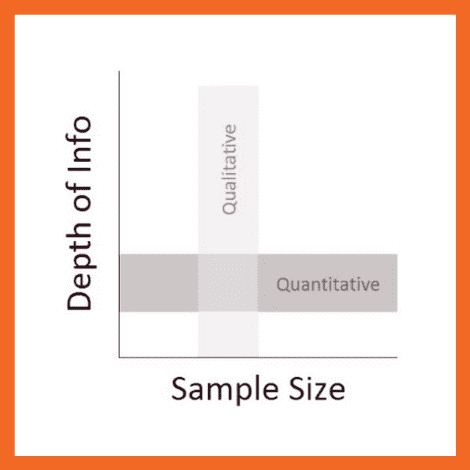

- Emphasis is given to quality and depth of narration about a phenomenon rather than the number of study participants

- The context and a holistic view of the participants' experience are stressed

- The research is sensitive to what the influence he/she has on the interpretation of data

- Analytical themes are projected as findings rather than quantified variables.

Process of data analysis

Qualitative data analysis can be both deductive and inductive. The deductive process, in which there is an attempt to establish causal relationships, is although associated with quantitative research, can be applied also in qualitative research as a deductive explanatory process or deductive category application.[ 6 ] When the researcher's interest is on specific aspects of the phenomenon, and the research question is focused and not general, a deductive approach to analysis may be used. For example, in a study done by Manoranjitham et al .,[ 7 ] focus group discussions were conducted to identify perceptions of suicide in terms of causes, methods of attempting suicide, impact of suicide and availability of support as perceived by family and community members and health-care professionals. Focused questions were asked to elicit information on what people thought about the above aspects of suicide. The answers from participants in focus groups were coded under each question, which was considered as categories and the number of responders and the responses were elaborated under the said questions as perceived by the participants. Deductive process in qualitative data analysis allows the researcher to be at a descriptive level where the results are closer to participants accounts, rather than moving to a more interpretive or conceptual level. This process is often used when qualitative research is used as a part of the mixed methods approach or as a part of an elaborate research study.

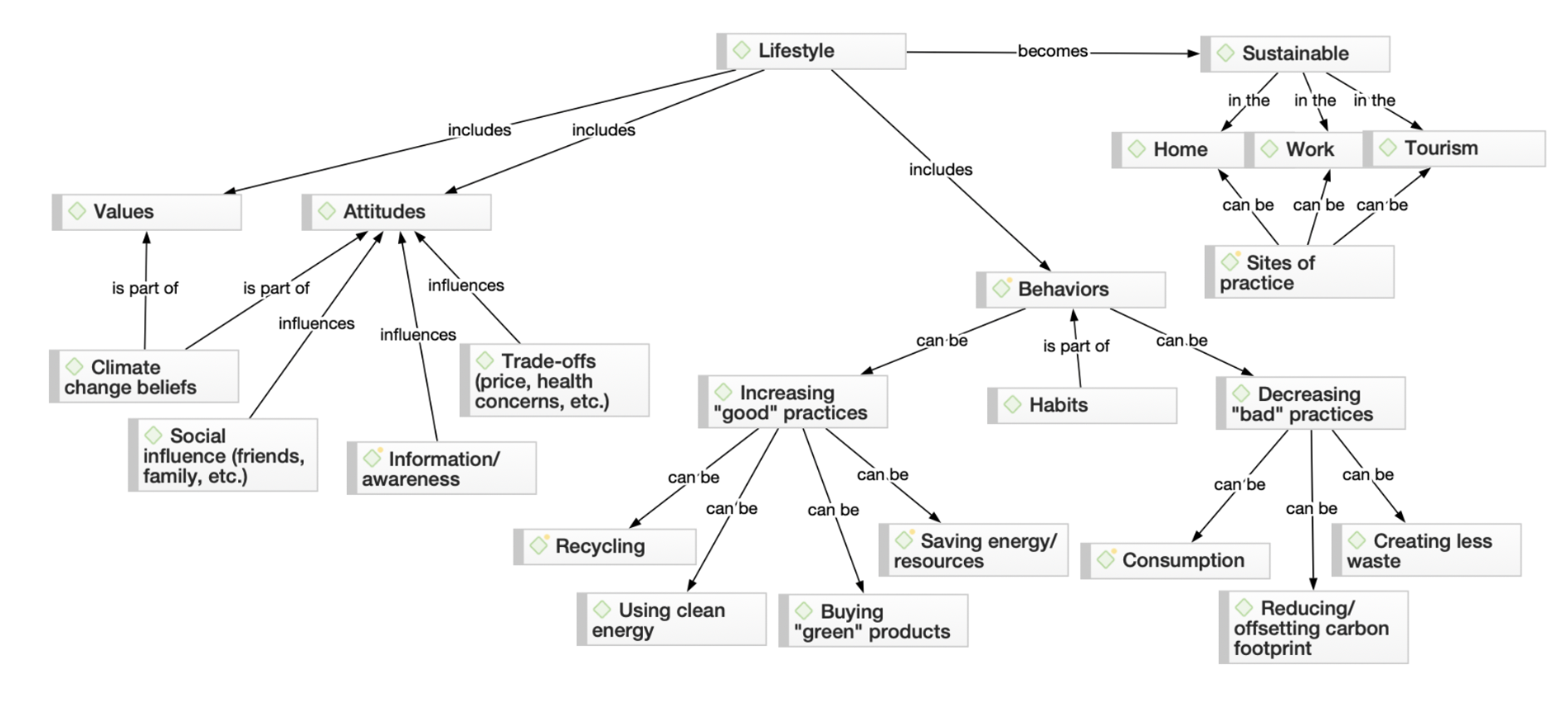

In contrast, the inductive process which is the hallmark of qualitative data analysis involves asking questions of the in-depth and vast data that have been generated from different sources regarding a phenomenon.[ 2 , 4 ] The inductive process is applicable to all qualitative research in which the research question has been more explorative and overarching in terms of understanding the phenomenon in peoples' lives. For example, in Rempel et al .'s[ 8 ] study on parenting children with life-threatening congenital heart disease, the researchers explored the process of parenting children with a lethal heart condition. Volumes of data generated through individual interviews with parents and grandparents were inductively analysed to understand the 'facets of parenting' children with heart disease. Inductive analysis motivates and enhances researchers to rise above describing what the participants say about their experience to interpretive conceptualisation and abstraction. The process of deduction and induction in qualitative data analysis is depicted in Figure 1 .

GENERAL STEPS IN DATA ANALYSIS

Although different analytical processes are proposed by different researchers, there are generally four basic steps to qualitative data analysis. These steps are similar to what is generally known as qualitative content analysis.[ 4 , 9 ] In any qualitative approach, the analysis starts with the steps of content analysis. The content analysis ends generally at an interpretive descriptive level. Further analysis to raise data to abstraction may be needed in some approaches such as grounded theory.

Preparation of data

Reading and reflecting, coding, categorising and memoing.

- Developing themes/conceptual models or theory.

As already discussed, the inductive process in qualitative research begins when data collection starts. Each recorded data set from individual interviews, focus groups or conversations should be first transcribed and edited. The researcher may decide on units of data that can be analysed to further help in organising.[ 10 ] The units can be the whole interview from one individual or interview transcripts from one family or data from different individuals connected with in a case (as in case study). On some occasions, the unit may consist of all answers to one question or one aspect of the phenomenon. Many researchers may not form any such units at the beginning of the analysis which is also accepted. The essential aspect of the preparation is to ensure that participants' accounts are truly represented in transcribing. Researchers who have a large amount of content will need assistance in transcription. One hour of interview may take 4–6 h to transcribe.[ 2 ] An official transcriber will do a good job than a researcher who may spend a long time in transcribing volumes of data. However, the researcher has to edit the transcription by listening to the audiotaped version and include words and connotations that are missed to maintain accuracy in transcription.[ 11 ] Another important point to note is to transcribe and prepare the data as soon as interviews are completed. This facilitates the iterative process of data collection and analysis. All data, including field notes, should be organised with date, time and identification number or pseudonym for easy retrieval.[ 2 ] Assigning numbers or pseudonyms help to maintain the confidentiality of the participants.

Reading the data as a whole, and reflecting on what the participants are sharing gives an initial understanding of the narrative. The reflection may start at the time of the interview itself. However, reading and rereading the transcribed text from an interview gives an understanding of context, situations, events and actions related to the phenomenon of interest before the data can be analysed for concepts and themes.[ 12 ] Reading and reflection help the researcher to get immersed in the data, understand the perspectives of participants and decide on an analytical framework for further data analysis.[ 13 ] As texts are read, the researcher may jot down points or questions that are striking or unusual or does or does not support assumptions. Such reflective notes assist the researcher to decide on questions to be asked in further interviews or look for similarities or differences in interview texts from other participants. These initial reflections do not complete analysis; rather, it provides a platform for the analysis to develop. An example of initial reflections when analysing interviews from a study on home care of children with chronic illness is given below.

Reflections-family 1 interview

'This family has a lot of issues related to home care. Their conversation is a list of complaints about the system and the personnel. Even though it appears that help is being rendered for support of child at home, nothing seems to satisfy the parents. The conversation revolves around how they have not been given their due in terms of material and personnel support rather than about their sick child or the siblings.

After a while, it became tedious for me to read this transcript as I resent the complaints (which I should not do I suppose). I wonder how other families perceive home care.'

The initial reflections also help to understand our position as a researcher and the assumptions the researcher brings to the study. It helps us to be aware of one's own professional and personal prejudices which may influence the interpretation of data.

For analysis to progress further the researcher has to decide on an organised way of sorting and categorising data to come to an understanding about the phenomenon or the concepts embedded in the phenomenon. Researchers may choose to analyse only the manifest content in a descriptive qualitative study or may move further to look for latent content in an analytical-qualitative study.[ 4 ] The manifest content analysis includes looking for specific words or phrases used by the participants and accounting for how many have expressed the same or similar words/phrases in the data. It looks at what is obvious. Latent content analysis, on the other hand, involves coding and categorising to identify patterns and themes that are implicit in the data.

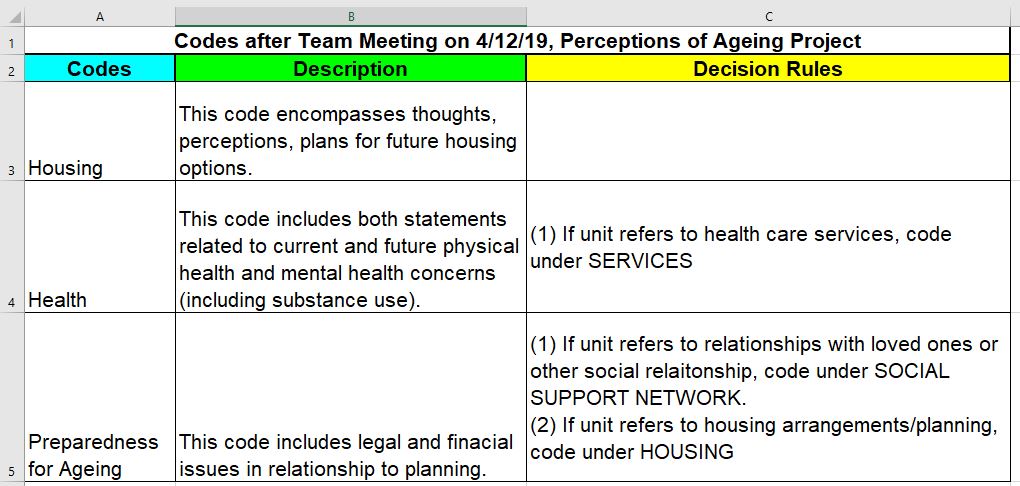

Coding is an essential first step in sorting and organising data.[ 4 ] Codes are labels given to phrases, expressions, behaviours, images and sentences as the researcher goes through the data.[ 13 ] It can be 'in vivo' codes or 'interpretive codes'. When participants' exact expressions itself are used as codes it is called ' in vivo ' codes.[ 14 ] If the researcher interprets the expression or behaviour of the participant depicted in the text, then it is called interpretive codes. In the grounded theory method, different levels of coding are suggested. The first level is called the 'open coding' that involves sifting through the initial data line by line and creating in vivo or interpretive codes. Questions such as what are this person saying or doing or what is happening here? will help in the initial coding of data. Initial coding may reveal gaps in the data or raise questions.[ 15 , 16 ] These gaps and questions will help the researcher to locate the sources from where further data are to be collected. The second level is known as 'focus or selective coding' will be used in subsequent interviews. Focused coding involves using the most frequent or most significant earlier codes to sift through large amounts of data. Focused codes are more directed, selective and conceptual and are employed to raise the sorting of data to an analytical level.[ 17 ] The first level of coding can be done manually or can be done using qualitative software packages. In other types of content analysis, the different levels of coding may not be followed instead the researcher engages in interpretive coding as the text is read. In a grounded theory study on parenting children with burn injury open codes such as scolded, accused, unwanted, guilt, nonsupport, difficult to care, terrible pain, blaming oneself and tired came up as the data were coded [ Table 1 ]. These codes gave the researcher an initial insight into the traumatic experiences that the parents undergo when caring for their burn-injured children. As texts were coded, the researcher attempted to understand further the struggles of parents in the successive interviews with other families.

Categorising

Categorising involves grouping similar codes together and formulating an understandable set within which related data can be clubbed. A category is 'a collection of similar data sorted into the same place' – the 'what', developed using content analysis and developing trajectories and relationships over time.[ 18 ] It is a group of content that shares commonality. Data can be categorised generally when the researcher realises that the same codes or codes that are relatively similar are emerging from the data. When categories are developed based on codes, they can be still at descriptive level or can be at an abstract level.[ 10 ] By developing categories a conceptual coding structure can be formulated. At this level, there is no need to continue line by line coding. Instead, the researcher uses the coding structure to sort data. In other words, parts of data that best fit the categories, and the codes are grouped appropriately from across the data sets. The grouping of data into categories is enabled by comparing and contrasting data from different sources or individuals.[ 19 ] As constant comparison continues[ 15 ] questions such as 'What is different between the accounts of two families? What are similar? Will help in grouping data into categories. As the researcher compares data, questions such as 'what if' may come up which will propel the researcher to return to participants to know more or even purposively include participants who will answer the question. The data under each category should be read again to ensure that they appropriately represent the category.[ 4 ] Qualitative software packages are very useful in sorting and organising data from this level. Any part of data which is not fitting into any category needs to be coded newly, and the new codes should be added. The emerging new codes may later fit into a category or form new categories. All data are thus accounted for during this phase of analysis.

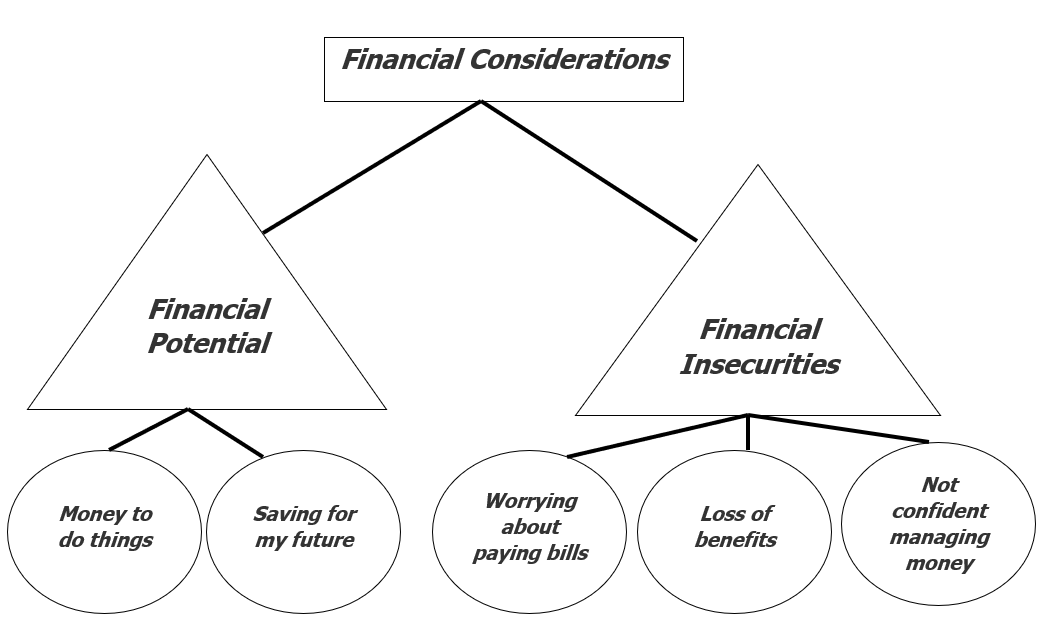

As analysis and grouping of further data continue, the researcher may rearrange data within categories or come up with subcategories.[ 4 ] The researcher may also go from data to codes, to sub-categories which then can be abstracted into categories.[ 10 ] In the burn study, similar codes that were repeated in many transcripts were grouped together. Grouping these codes helped in developing subcategories such as physical trauma, emotional trauma, self-blame and shame. The sub-categories were then grouped to develop meaningful categories such as facing blame and enduring the burn [ Table 2 ]. Creating categories thus assists the researcher to move from describing phenomenon to interpretation and abstraction.

Memoing is 'the researcher's record of analysis, thoughts, interpretations, questions and directions for further data collection' (pp 110).[ 20 ] Memos are elaborations of thoughts on data, codes and categories that are written down.[ 17 ] Simply put, memoing is writing down the reflections and ideas that arise from the data as data analysis progresses. As data are coded, the researcher writes down his/her thoughts on the codes and their relationships as they occur. Memo-writing is an on-going process, and memos lead to abstraction and theorising for the write-up of ideas.[ 15 ] Initial or early memos help in exploring and filling out the initial qualitative codes. It helps the researcher to understand what the participants are saying or doing and what is happening. Advanced memos help in the emergence of categories and identify the beliefs and assumptions that support the categories. Memos also help in looking at the categories and the data from different vantage points.[ 21 ] One of the early memos from burn study is given as an example.

Extensive wound

24 June, 2010, 10 pm – After coding interview texts from three families.

'I am struck by the enormity of a burn injury. I realize that family members cannot do many things for the child at home after discharge of a severely burned child because the injury is so big that even some clinics and doctors who are not familiar with burn care cannot manage care. These children need continuous attention of the health care professionals. They need professional assistance with dressing. They need professional assistance with splints and gadgets, and therapies. The injury is extensive that it is difficult for family members to do many things on their own. It is very hard, very hard for the parents to take up a role of the caregiver for children with burns because it involves large wound which has not healed or is in the process of early healing and the child suffers severe pain. The post burn care is very different from caring for other children with chronic illness or congenital defects which most often does not involve pain. The child's suffering makes it easy for the parents to view them as vulnerable. Yet the parents do their best. They try to follow the Health Care Professionals advice, they try to go for follow-up, but it seems simply not enough. I think the parents are doing all that they can within the context of severe injury, lack of finances, lack of resources in home town, or blame and ridicule from neighbors and others…'

Stopping to memo helps the researcher to reflect on data, move towards developing themes and models and lay the ground for discussion of findings later. Memos need to include the time, date, place and context at which they were written.

Developing themes, conceptual models and theory

Developing themes involves the 'threading together of the underlying meaning' that run through all the categories. It is the interpretation of the latent content in the texts.[ 10 ] Theming involves integrating all the categories and explicating the relationship in the categories.[ 4 ] In coding and categorising the researcher is involved in deconstructing or dividing the data to understand the feelings, behaviours and actions. In the phase of theming, the researcher is trying to connect the deconstructed part by understanding the implicit meaning that connects the behaviour, actions and reactions related to a phenomenon. To identify theme, the grounded theorist asks: What is the core issue which the participants are dealing with? The phenomenologist will ask about the central essence or structure of the lived experience related to the phenomenon of interest. The ethnographer may look at the cultural themes that link the categories. The researcher generally comes up with one to three themes.[ 4 ] Too many categories or themes may indicate that the analysis is prematurely closed and implies the need for the researcher to further interpret and conceptualise the data.[ 4 ] In the study on parenting children with burn injury, the researcher came up with the theme of 'Double Trauma' which explicated the experiences of parents living the burn with their children and also enduring the blame within the context of both the hospital and home [ Table 2 ].

In phenomenology and ethnography, the analysis may end with identifying themes. In other approaches, such as grounded theory and interpretive description, the analysis may progress further to developing theory or conceptual models. Identifying the core category/variable from the coding activity, memos and constant comparisons are the first step in moving towards theory development in grounded theory.[ 15 ] The core category is the main theme that the researcher identifies in the data. The next step in grounded theory is to identify the basic social process (BSP). The BSP evolves from understanding how participants are dealing with the core issue. In real-world situations, individuals develop their own strategies and process to deal with the core issue in any situation. Identifying this process is the stepping stone to theory development in grounded theory. In the example of burn study, the theme 'Double Trauma' was the core category and parenting in the burn study involved a dual process of 'embracing the survival' and 'enduring the blame'.[ 22 , 23 ] A conceptual model was developed based on these processes.

PITFALLS IN QUALITATIVE ANALYSIS

Large data sets for analysis.

As already explained, the amount of data text or field notes from observations and other sources in qualitative research can become overwhelming if data analysis is not initiated concurrently with data collection/generation. Coding large data text is tedious and takes much of the researcher's time. Postponing analysis to the end of data collection also prevents the researcher from becoming focused in subsequent interviews and filling gaps in data in further data collection. Therefore, deferring data analysis should be avoided.

Premature closure

Researcher should not hasten to conclude analysis with developing categories or themes. This may lead to 'premature closure' of the research and the danger that the participants' experiences are misunderstood or incompletely understood.[ 15 ] Qualitative data analysis involves in-depth interaction with the data and understanding the nuances in the experiences and the meanings behind actions. The researcher continues to generate data until all the categories are saturated, which means that the categories are mutually exclusive and can be explained from all aspects or angle.[ 21 ] In the burn study, although the table in this article appears simple, the codes and categories were developed from larger data sets representing multiple participant interviews and field notes. The category 'facing blame' was brought forth with parents' accounts of experiencing blame in almost all the families in one or multiple ways: from family members, health-care professionals, strangers and the child itself. The researcher needs to be reflexive and iteratively do data generation and analysis until there is no new information forthcoming in the data. Inferring conclusions too soon which is otherwise known as 'inferential leaps', will prevent the researcher from getting the whole picture of the phenomenon.[ 2 ]

Interpretation of meanings

During the analysis process as the researcher interprets and conceptualises the participants' experiences, he/she delves into the tacit meanings of actions and feelings expressed by participants or observed in various situations. The researcher endeavours to keep the interpretations as close to the participants' accounts as possible. However, it should be understood that the meanings are co-constructed by both the participant and researcher by collaborative effort which is also a hallmark of qualitative research.[ 2 ] In the process of co-construction, researcher should be cautious to not lose the voice of the participants. Discussion with peer at all steps of analysis or checks on codes and categories by others in the research team may help to avoid this problem.

Qualitative data analysis is a complex process that demands much of reading, thinking and reflection on the part of researcher. It is time-consuming as the researcher has to be constantly engaged with the texts to tease out the hidden meanings. Beyond the differences in data analysis in different qualitative methods, coding, categorising and developing themes are the essential phases of data analysis in most methods. Researchers should avoid premature conclusions and ensure that the findings are comprehensively represented by participants' accounts. Qualitative data analysis is an iterative process.

Financial support and sponsorship

Conflicts of interest.

There are no conflicts of interest.

- Cited Here |

- View Full Text | CrossRef

- PubMed | CrossRef

- View Full Text | PubMed | CrossRef

Categories; codes; data analysis; qualitative research; theme

- + Favorites

- View in Gallery

Readers Of this Article Also Read

Artificial intelligence in healthcare, q-methodology as a research design: a brief overview, scholarship in nursing, nursing management of patients with psychiatric emergencies, a study to assess the insight and motivation towards quitting alcohol among....

- Skip to main content

- Skip to primary sidebar

- Skip to footer

- QuestionPro

- Solutions Industries Gaming Automotive Sports and events Education Government Travel & Hospitality Financial Services Healthcare Cannabis Technology Use Case AskWhy Communities Audience Contactless surveys Mobile LivePolls Member Experience GDPR Positive People Science 360 Feedback Surveys

- Resources Blog eBooks Survey Templates Case Studies Training Help center

Home Market Research

Qualitative Data Analysis: What is it, Methods + Examples

In a world rich with information and narrative, understanding the deeper layers of human experiences requires a unique vision that goes beyond numbers and figures. This is where the power of qualitative data analysis comes to light.

In this blog, we’ll learn about qualitative data analysis, explore its methods, and provide real-life examples showcasing its power in uncovering insights.

What is Qualitative Data Analysis?

Qualitative data analysis is a systematic process of examining non-numerical data to extract meaning, patterns, and insights.

In contrast to quantitative analysis, which focuses on numbers and statistical metrics, the qualitative study focuses on the qualitative aspects of data, such as text, images, audio, and videos. It seeks to understand every aspect of human experiences, perceptions, and behaviors by examining the data’s richness.

Companies frequently conduct this analysis on customer feedback. You can collect qualitative data from reviews, complaints, chat messages, interactions with support centers, customer interviews, case notes, or even social media comments. This kind of data holds the key to understanding customer sentiments and preferences in a way that goes beyond mere numbers.

Importance of Qualitative Data Analysis

Qualitative data analysis plays a crucial role in your research and decision-making process across various disciplines. Let’s explore some key reasons that underline the significance of this analysis:

In-Depth Understanding

It enables you to explore complex and nuanced aspects of a phenomenon, delving into the ‘how’ and ‘why’ questions. This method provides you with a deeper understanding of human behavior, experiences, and contexts that quantitative approaches might not capture fully.

Contextual Insight

You can use this analysis to give context to numerical data. It will help you understand the circumstances and conditions that influence participants’ thoughts, feelings, and actions. This contextual insight becomes essential for generating comprehensive explanations.

Theory Development

You can generate or refine hypotheses via qualitative data analysis. As you analyze the data attentively, you can form hypotheses, concepts, and frameworks that will drive your future research and contribute to theoretical advances.

Participant Perspectives

When performing qualitative research, you can highlight participant voices and opinions. This approach is especially useful for understanding marginalized or underrepresented people, as it allows them to communicate their experiences and points of view.

Exploratory Research

The analysis is frequently used at the exploratory stage of your project. It assists you in identifying important variables, developing research questions, and designing quantitative studies that will follow.

Types of Qualitative Data

When conducting qualitative research, you can use several qualitative data collection methods , and here you will come across many sorts of qualitative data that can provide you with unique insights into your study topic. These data kinds add new views and angles to your understanding and analysis.

Interviews and Focus Groups

Interviews and focus groups will be among your key methods for gathering qualitative data. Interviews are one-on-one talks in which participants can freely share their thoughts, experiences, and opinions.

Focus groups, on the other hand, are discussions in which members interact with one another, resulting in dynamic exchanges of ideas. Both methods provide rich qualitative data and direct access to participant perspectives.

Observations and Field Notes

Observations and field notes are another useful sort of qualitative data. You can immerse yourself in the research environment through direct observation, carefully documenting behaviors, interactions, and contextual factors.

These observations will be recorded in your field notes, providing a complete picture of the environment and the behaviors you’re researching. This data type is especially important for comprehending behavior in their natural setting.

Textual and Visual Data

Textual and visual data include a wide range of resources that can be qualitatively analyzed. Documents, written narratives, and transcripts from various sources, such as interviews or speeches, are examples of textual data.

Photographs, films, and even artwork provide a visual layer to your research. These forms of data allow you to investigate what is spoken and the underlying emotions, details, and symbols expressed by language or pictures.

When to Choose Qualitative Data Analysis over Quantitative Data Analysis

As you begin your research journey, understanding why the analysis of qualitative data is important will guide your approach to understanding complex events. If you analyze qualitative data, it will provide new insights that complement quantitative methodologies, which will give you a broader understanding of your study topic.

It is critical to know when to use qualitative analysis over quantitative procedures. You can prefer qualitative data analysis when:

- Complexity Reigns: When your research questions involve deep human experiences, motivations, or emotions, qualitative research excels at revealing these complexities.

- Exploration is Key: Qualitative analysis is ideal for exploratory research. It will assist you in understanding a new or poorly understood topic before formulating quantitative hypotheses.

- Context Matters: If you want to understand how context affects behaviors or results, qualitative data analysis provides the depth needed to grasp these relationships.

- Unanticipated Findings: When your study provides surprising new viewpoints or ideas, qualitative analysis helps you to delve deeply into these emerging themes.

- Subjective Interpretation is Vital: When it comes to understanding people’s subjective experiences and interpretations, qualitative data analysis is the way to go.

You can make informed decisions regarding the right approach for your research objectives if you understand the importance of qualitative analysis and recognize the situations where it shines.

Qualitative Data Analysis Methods and Examples

Exploring various qualitative data analysis methods will provide you with a wide collection for making sense of your research findings. Once the data has been collected, you can choose from several analysis methods based on your research objectives and the data type you’ve collected.

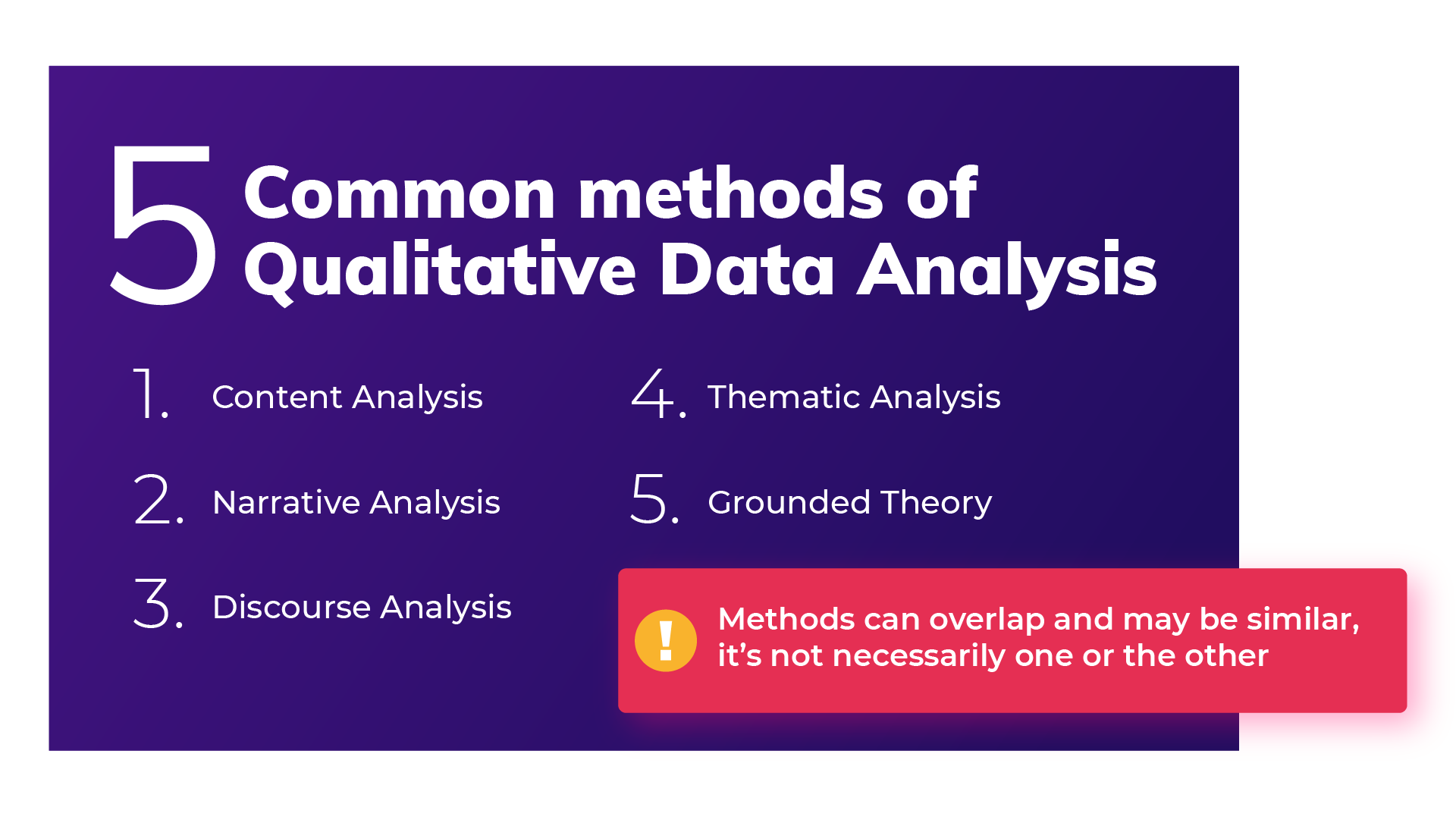

There are five main methods for analyzing qualitative data. Each method takes a distinct approach to identifying patterns, themes, and insights within your qualitative data. They are:

Method 1: Content Analysis

Content analysis is a methodical technique for analyzing textual or visual data in a structured manner. In this method, you will categorize qualitative data by splitting it into manageable pieces and assigning the manual coding process to these units.

As you go, you’ll notice ongoing codes and designs that will allow you to conclude the content. This method is very beneficial for detecting common ideas, concepts, or themes in your data without losing the context.

Steps to Do Content Analysis

Follow these steps when conducting content analysis:

- Collect and Immerse: Begin by collecting the necessary textual or visual data. Immerse yourself in this data to fully understand its content, context, and complexities.

- Assign Codes and Categories: Assign codes to relevant data sections that systematically represent major ideas or themes. Arrange comparable codes into groups that cover the major themes.

- Analyze and Interpret: Develop a structured framework from the categories and codes. Then, evaluate the data in the context of your research question, investigate relationships between categories, discover patterns, and draw meaning from these connections.

Benefits & Challenges

There are various advantages to using content analysis:

- Structured Approach: It offers a systematic approach to dealing with large data sets and ensures consistency throughout the research.

- Objective Insights: This method promotes objectivity, which helps to reduce potential biases in your study.

- Pattern Discovery: Content analysis can help uncover hidden trends, themes, and patterns that are not always obvious.

- Versatility: You can apply content analysis to various data formats, including text, internet content, images, etc.

However, keep in mind the challenges that arise:

- Subjectivity: Even with the best attempts, a certain bias may remain in coding and interpretation.

- Complexity: Analyzing huge data sets requires time and great attention to detail.

- Contextual Nuances: Content analysis may not capture all of the contextual richness that qualitative data analysis highlights.

Example of Content Analysis

Suppose you’re conducting market research and looking at customer feedback on a product. As you collect relevant data and analyze feedback, you’ll see repeating codes like “price,” “quality,” “customer service,” and “features.” These codes are organized into categories such as “positive reviews,” “negative reviews,” and “suggestions for improvement.”

According to your findings, themes such as “price” and “customer service” stand out and show that pricing and customer service greatly impact customer satisfaction. This example highlights the power of content analysis for obtaining significant insights from large textual data collections.

Method 2: Thematic Analysis

Thematic analysis is a well-structured procedure for identifying and analyzing recurring themes in your data. As you become more engaged in the data, you’ll generate codes or short labels representing key concepts. These codes are then organized into themes, providing a consistent framework for organizing and comprehending the substance of the data.

The analysis allows you to organize complex narratives and perspectives into meaningful categories, which will allow you to identify connections and patterns that may not be visible at first.

Steps to Do Thematic Analysis

Follow these steps when conducting a thematic analysis:

- Code and Group: Start by thoroughly examining the data and giving initial codes that identify the segments. To create initial themes, combine relevant codes.

- Code and Group: Begin by engaging yourself in the data, assigning first codes to notable segments. To construct basic themes, group comparable codes together.

- Analyze and Report: Analyze the data within each theme to derive relevant insights. Organize the topics into a consistent structure and explain your findings, along with data extracts that represent each theme.

Thematic analysis has various benefits:

- Structured Exploration: It is a method for identifying patterns and themes in complex qualitative data.

- Comprehensive knowledge: Thematic analysis promotes an in-depth understanding of the complications and meanings of the data.

- Application Flexibility: This method can be customized to various research situations and data kinds.

However, challenges may arise, such as:

- Interpretive Nature: Interpreting qualitative data in thematic analysis is vital, and it is critical to manage researcher bias.

- Time-consuming: The study can be time-consuming, especially with large data sets.

- Subjectivity: The selection of codes and topics might be subjective.

Example of Thematic Analysis

Assume you’re conducting a thematic analysis on job satisfaction interviews. Following your immersion in the data, you assign initial codes such as “work-life balance,” “career growth,” and “colleague relationships.” As you organize these codes, you’ll notice themes develop, such as “Factors Influencing Job Satisfaction” and “Impact on Work Engagement.”

Further investigation reveals the tales and experiences included within these themes and provides insights into how various elements influence job satisfaction. This example demonstrates how thematic analysis can reveal meaningful patterns and insights in qualitative data.

Method 3: Narrative Analysis

The narrative analysis involves the narratives that people share. You’ll investigate the histories in your data, looking at how stories are created and the meanings they express. This method is excellent for learning how people make sense of their experiences through narrative.

Steps to Do Narrative Analysis

The following steps are involved in narrative analysis:

- Gather and Analyze: Start by collecting narratives, such as first-person tales, interviews, or written accounts. Analyze the stories, focusing on the plot, feelings, and characters.

- Find Themes: Look for recurring themes or patterns in various narratives. Think about the similarities and differences between these topics and personal experiences.

- Interpret and Extract Insights: Contextualize the narratives within their larger context. Accept the subjective nature of each narrative and analyze the narrator’s voice and style. Extract insights from the tales by diving into the emotions, motivations, and implications communicated by the stories.

There are various advantages to narrative analysis:

- Deep Exploration: It lets you look deeply into people’s personal experiences and perspectives.

- Human-Centered: This method prioritizes the human perspective, allowing individuals to express themselves.

However, difficulties may arise, such as:

- Interpretive Complexity: Analyzing narratives requires dealing with the complexities of meaning and interpretation.

- Time-consuming: Because of the richness and complexities of tales, working with them can be time-consuming.

Example of Narrative Analysis

Assume you’re conducting narrative analysis on refugee interviews. As you read the stories, you’ll notice common themes of toughness, loss, and hope. The narratives provide insight into the obstacles that refugees face, their strengths, and the dreams that guide them.

The analysis can provide a deeper insight into the refugees’ experiences and the broader social context they navigate by examining the narratives’ emotional subtleties and underlying meanings. This example highlights how narrative analysis can reveal important insights into human stories.

Method 4: Grounded Theory Analysis

Grounded theory analysis is an iterative and systematic approach that allows you to create theories directly from data without being limited by pre-existing hypotheses. With an open mind, you collect data and generate early codes and labels that capture essential ideas or concepts within the data.

As you progress, you refine these codes and increasingly connect them, eventually developing a theory based on the data. Grounded theory analysis is a dynamic process for developing new insights and hypotheses based on details in your data.

Steps to Do Grounded Theory Analysis

Grounded theory analysis requires the following steps:

- Initial Coding: First, immerse yourself in the data, producing initial codes that represent major concepts or patterns.

- Categorize and Connect: Using axial coding, organize the initial codes, which establish relationships and connections between topics.

- Build the Theory: Focus on creating a core category that connects the codes and themes. Regularly refine the theory by comparing and integrating new data, ensuring that it evolves organically from the data.

Grounded theory analysis has various benefits:

- Theory Generation: It provides a one-of-a-kind opportunity to generate hypotheses straight from data and promotes new insights.

- In-depth Understanding: The analysis allows you to deeply analyze the data and reveal complex relationships and patterns.

- Flexible Process: This method is customizable and ongoing, which allows you to enhance your research as you collect additional data.

However, challenges might arise with:

- Time and Resources: Because grounded theory analysis is a continuous process, it requires a large commitment of time and resources.

- Theoretical Development: Creating a grounded theory involves a thorough understanding of qualitative data analysis software and theoretical concepts.

- Interpretation of Complexity: Interpreting and incorporating a newly developed theory into existing literature can be intellectually hard.

Example of Grounded Theory Analysis

Assume you’re performing a grounded theory analysis on workplace collaboration interviews. As you open code the data, you will discover notions such as “communication barriers,” “team dynamics,” and “leadership roles.” Axial coding demonstrates links between these notions, emphasizing the significance of efficient communication in developing collaboration.

You create the core “Integrated Communication Strategies” category through selective coding, which unifies new topics.

This theory-driven category serves as the framework for understanding how numerous aspects contribute to effective team collaboration. This example shows how grounded theory analysis allows you to generate a theory directly from the inherent nature of the data.

Method 5: Discourse Analysis

Discourse analysis focuses on language and communication. You’ll look at how language produces meaning and how it reflects power relations, identities, and cultural influences. This strategy examines what is said and how it is said; the words, phrasing, and larger context of communication.

The analysis is precious when investigating power dynamics, identities, and cultural influences encoded in language. By evaluating the language used in your data, you can identify underlying assumptions, cultural standards, and how individuals negotiate meaning through communication.

Steps to Do Discourse Analysis

Conducting discourse analysis entails the following steps:

- Select Discourse: For analysis, choose language-based data such as texts, speeches, or media content.

- Analyze Language: Immerse yourself in the conversation, examining language choices, metaphors, and underlying assumptions.

- Discover Patterns: Recognize the dialogue’s reoccurring themes, ideologies, and power dynamics. To fully understand the effects of these patterns, put them in their larger context.

There are various advantages of using discourse analysis:

- Understanding Language: It provides an extensive understanding of how language builds meaning and influences perceptions.

- Uncovering Power Dynamics: The analysis reveals how power dynamics appear via language.

- Cultural Insights: This method identifies cultural norms, beliefs, and ideologies stored in communication.

However, the following challenges may arise:

- Complexity of Interpretation: Language analysis involves navigating multiple levels of nuance and interpretation.

- Subjectivity: Interpretation can be subjective, so controlling researcher bias is important.

- Time-Intensive: Discourse analysis can take a lot of time because careful linguistic study is required in this analysis.

Example of Discourse Analysis

Consider doing discourse analysis on media coverage of a political event. You notice repeating linguistic patterns in news articles that depict the event as a conflict between opposing parties. Through deconstruction, you can expose how this framing supports particular ideologies and power relations.

You can illustrate how language choices influence public perceptions and contribute to building the narrative around the event by analyzing the speech within the broader political and social context. This example shows how discourse analysis can reveal hidden power dynamics and cultural influences on communication.

How to do Qualitative Data Analysis with the QuestionPro Research suite?

QuestionPro is a popular survey and research platform that offers tools for collecting and analyzing qualitative and quantitative data. Follow these general steps for conducting qualitative data analysis using the QuestionPro Research Suite:

- Collect Qualitative Data: Set up your survey to capture qualitative responses. It might involve open-ended questions, text boxes, or comment sections where participants can provide detailed responses.

- Export Qualitative Responses: Export the responses once you’ve collected qualitative data through your survey. QuestionPro typically allows you to export survey data in various formats, such as Excel or CSV.

- Prepare Data for Analysis: Review the exported data and clean it if necessary. Remove irrelevant or duplicate entries to ensure your data is ready for analysis.

- Code and Categorize Responses: Segment and label data, letting new patterns emerge naturally, then develop categories through axial coding to structure the analysis.

- Identify Themes: Analyze the coded responses to identify recurring themes, patterns, and insights. Look for similarities and differences in participants’ responses.

- Generate Reports and Visualizations: Utilize the reporting features of QuestionPro to create visualizations, charts, and graphs that help communicate the themes and findings from your qualitative research.

- Interpret and Draw Conclusions: Interpret the themes and patterns you’ve identified in the qualitative data. Consider how these findings answer your research questions or provide insights into your study topic.

- Integrate with Quantitative Data (if applicable): If you’re also conducting quantitative research using QuestionPro, consider integrating your qualitative findings with quantitative results to provide a more comprehensive understanding.

Qualitative data analysis is vital in uncovering various human experiences, views, and stories. If you’re ready to transform your research journey and apply the power of qualitative analysis, now is the moment to do it. Book a demo with QuestionPro today and begin your journey of exploration.

LEARN MORE FREE TRIAL

MORE LIKE THIS

Jotform vs SurveyMonkey: Which Is Best in 2024

Aug 15, 2024

360 Degree Feedback Spider Chart is Back!

Aug 14, 2024

Jotform vs Wufoo: Comparison of Features and Prices

Aug 13, 2024

Product or Service: Which is More Important? — Tuesday CX Thoughts

Other categories.

- Academic Research

- Artificial Intelligence

- Assessments

- Brand Awareness

- Case Studies

- Communities

- Consumer Insights

- Customer effort score

- Customer Engagement

- Customer Experience

- Customer Loyalty

- Customer Research

- Customer Satisfaction

- Employee Benefits

- Employee Engagement

- Employee Retention

- Friday Five

- General Data Protection Regulation

- Insights Hub

- Life@QuestionPro

- Market Research

- Mobile diaries

- Mobile Surveys

- New Features

- Online Communities

- Question Types

- Questionnaire

- QuestionPro Products

- Release Notes

- Research Tools and Apps

- Revenue at Risk

- Survey Templates

- Training Tips

- Tuesday CX Thoughts (TCXT)

- Uncategorized

- What’s Coming Up

- Workforce Intelligence

- AI & NLP

- Churn & Loyalty

- Customer Experience

- Customer Journeys

- Customer Metrics

- Feedback Analysis

- Product Experience

- Product Updates

- Sentiment Analysis

- Surveys & Feedback Collection

- Text Analytics

- Try Thematic

Welcome to the community

Qualitative Data Analysis: Step-by-Step Guide (Manual vs. Automatic)

When we conduct qualitative methods of research, need to explain changes in metrics or understand people's opinions, we always turn to qualitative data. Qualitative data is typically generated through:

- Interview transcripts

- Surveys with open-ended questions

- Contact center transcripts

- Texts and documents

- Audio and video recordings

- Observational notes

Compared to quantitative data, which captures structured information, qualitative data is unstructured and has more depth. It can answer our questions, can help formulate hypotheses and build understanding.

It's important to understand the differences between quantitative data & qualitative data . But unfortunately, analyzing qualitative data is difficult. While tools like Excel, Tableau and PowerBI crunch and visualize quantitative data with ease, there are a limited number of mainstream tools for analyzing qualitative data . The majority of qualitative data analysis still happens manually.

That said, there are two new trends that are changing this. First, there are advances in natural language processing (NLP) which is focused on understanding human language. Second, there is an explosion of user-friendly software designed for both researchers and businesses. Both help automate the qualitative data analysis process.

In this post we want to teach you how to conduct a successful qualitative data analysis. There are two primary qualitative data analysis methods; manual & automatic. We will teach you how to conduct the analysis manually, and also, automatically using software solutions powered by NLP. We’ll guide you through the steps to conduct a manual analysis, and look at what is involved and the role technology can play in automating this process.

More businesses are switching to fully-automated analysis of qualitative customer data because it is cheaper, faster, and just as accurate. Primarily, businesses purchase subscriptions to feedback analytics platforms so that they can understand customer pain points and sentiment.

We’ll take you through 5 steps to conduct a successful qualitative data analysis. Within each step we will highlight the key difference between the manual, and automated approach of qualitative researchers. Here's an overview of the steps:

The 5 steps to doing qualitative data analysis

- Gathering and collecting your qualitative data

- Organizing and connecting into your qualitative data

- Coding your qualitative data

- Analyzing the qualitative data for insights

- Reporting on the insights derived from your analysis

What is Qualitative Data Analysis?

Qualitative data analysis is a process of gathering, structuring and interpreting qualitative data to understand what it represents.

Qualitative data is non-numerical and unstructured. Qualitative data generally refers to text, such as open-ended responses to survey questions or user interviews, but also includes audio, photos and video.

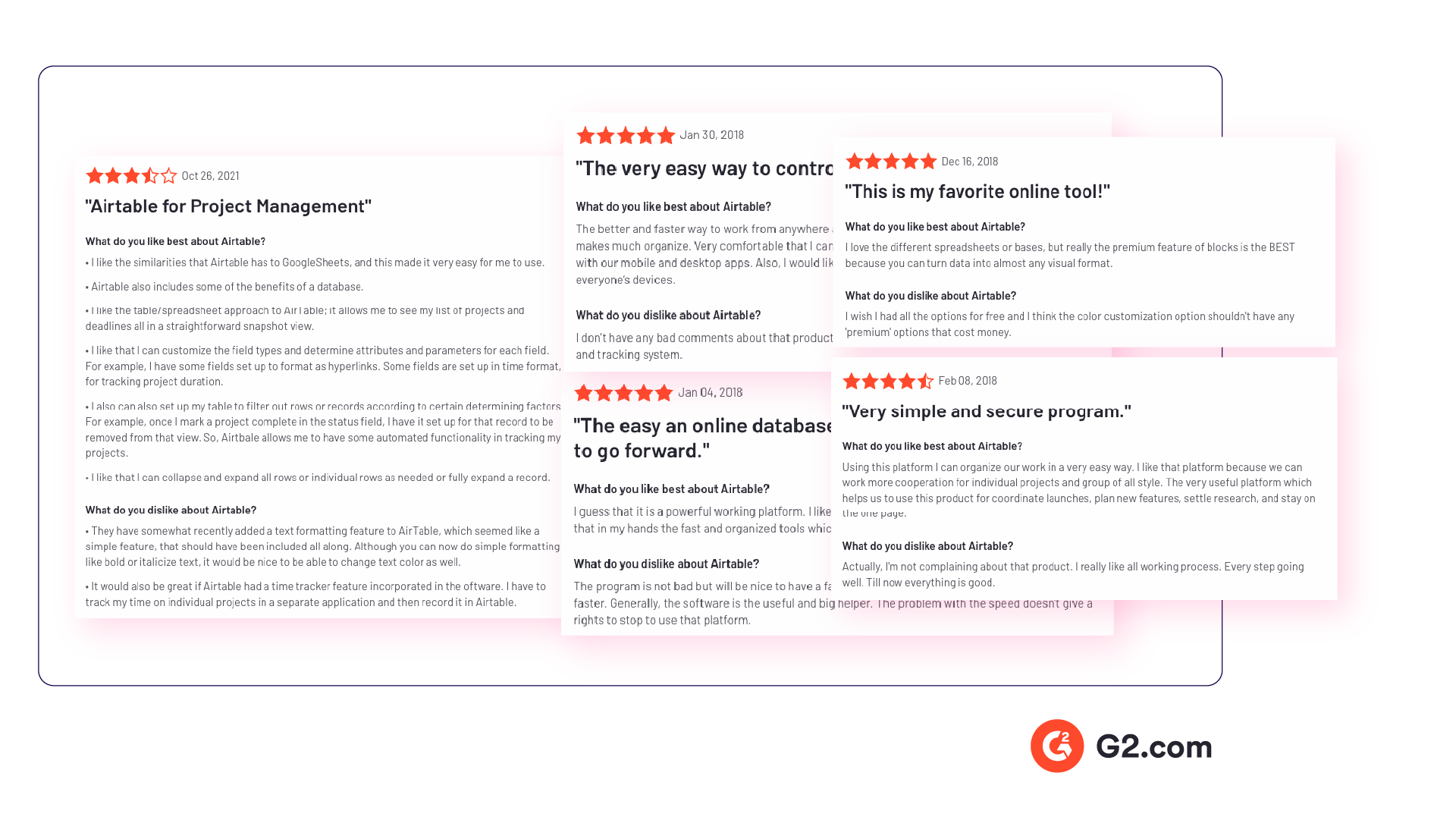

Businesses often perform qualitative data analysis on customer feedback. And within this context, qualitative data generally refers to verbatim text data collected from sources such as reviews, complaints, chat messages, support centre interactions, customer interviews, case notes or social media comments.

How is qualitative data analysis different from quantitative data analysis?

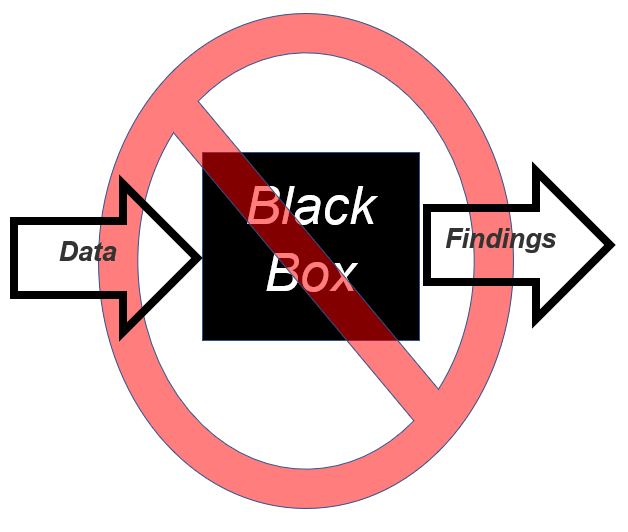

Understanding the differences between quantitative & qualitative data is important. When it comes to analyzing data, Qualitative Data Analysis serves a very different role to Quantitative Data Analysis. But what sets them apart?

Qualitative Data Analysis dives into the stories hidden in non-numerical data such as interviews, open-ended survey answers, or notes from observations. It uncovers the ‘whys’ and ‘hows’ giving a deep understanding of people’s experiences and emotions.

Quantitative Data Analysis on the other hand deals with numerical data, using statistics to measure differences, identify preferred options, and pinpoint root causes of issues. It steps back to address questions like "how many" or "what percentage" to offer broad insights we can apply to larger groups.

In short, Qualitative Data Analysis is like a microscope, helping us understand specific detail. Quantitative Data Analysis is like the telescope, giving us a broader perspective. Both are important, working together to decode data for different objectives.

Qualitative Data Analysis methods

Once all the data has been captured, there are a variety of analysis techniques available and the choice is determined by your specific research objectives and the kind of data you’ve gathered. Common qualitative data analysis methods include:

Content Analysis

This is a popular approach to qualitative data analysis. Other qualitative analysis techniques may fit within the broad scope of content analysis. Thematic analysis is a part of the content analysis. Content analysis is used to identify the patterns that emerge from text, by grouping content into words, concepts, and themes. Content analysis is useful to quantify the relationship between all of the grouped content. The Columbia School of Public Health has a detailed breakdown of content analysis .

Narrative Analysis

Narrative analysis focuses on the stories people tell and the language they use to make sense of them. It is particularly useful in qualitative research methods where customer stories are used to get a deep understanding of customers’ perspectives on a specific issue. A narrative analysis might enable us to summarize the outcomes of a focused case study.

Discourse Analysis

Discourse analysis is used to get a thorough understanding of the political, cultural and power dynamics that exist in specific situations. The focus of discourse analysis here is on the way people express themselves in different social contexts. Discourse analysis is commonly used by brand strategists who hope to understand why a group of people feel the way they do about a brand or product.

Thematic Analysis

Thematic analysis is used to deduce the meaning behind the words people use. This is accomplished by discovering repeating themes in text. These meaningful themes reveal key insights into data and can be quantified, particularly when paired with sentiment analysis . Often, the outcome of thematic analysis is a code frame that captures themes in terms of codes, also called categories. So the process of thematic analysis is also referred to as “coding”. A common use-case for thematic analysis in companies is analysis of customer feedback.

Grounded Theory

Grounded theory is a useful approach when little is known about a subject. Grounded theory starts by formulating a theory around a single data case. This means that the theory is “grounded”. Grounded theory analysis is based on actual data, and not entirely speculative. Then additional cases can be examined to see if they are relevant and can add to the original grounded theory.

Challenges of Qualitative Data Analysis

While Qualitative Data Analysis offers rich insights, it comes with its challenges. Each unique QDA method has its unique hurdles. Let’s take a look at the challenges researchers and analysts might face, depending on the chosen method.

- Time and Effort (Narrative Analysis): Narrative analysis, which focuses on personal stories, demands patience. Sifting through lengthy narratives to find meaningful insights can be time-consuming, requires dedicated effort.

- Being Objective (Grounded Theory): Grounded theory, building theories from data, faces the challenges of personal biases. Staying objective while interpreting data is crucial, ensuring conclusions are rooted in the data itself.

- Complexity (Thematic Analysis): Thematic analysis involves identifying themes within data, a process that can be intricate. Categorizing and understanding themes can be complex, especially when each piece of data varies in context and structure. Thematic Analysis software can simplify this process.

- Generalizing Findings (Narrative Analysis): Narrative analysis, dealing with individual stories, makes drawing broad challenging. Extending findings from a single narrative to a broader context requires careful consideration.

- Managing Data (Thematic Analysis): Thematic analysis involves organizing and managing vast amounts of unstructured data, like interview transcripts. Managing this can be a hefty task, requiring effective data management strategies.

- Skill Level (Grounded Theory): Grounded theory demands specific skills to build theories from the ground up. Finding or training analysts with these skills poses a challenge, requiring investment in building expertise.

Benefits of qualitative data analysis

Qualitative Data Analysis (QDA) is like a versatile toolkit, offering a tailored approach to understanding your data. The benefits it offers are as diverse as the methods. Let’s explore why choosing the right method matters.

- Tailored Methods for Specific Needs: QDA isn't one-size-fits-all. Depending on your research objectives and the type of data at hand, different methods offer unique benefits. If you want emotive customer stories, narrative analysis paints a strong picture. When you want to explain a score, thematic analysis reveals insightful patterns

- Flexibility with Thematic Analysis: thematic analysis is like a chameleon in the toolkit of QDA. It adapts well to different types of data and research objectives, making it a top choice for any qualitative analysis.

- Deeper Understanding, Better Products: QDA helps you dive into people's thoughts and feelings. This deep understanding helps you build products and services that truly matches what people want, ensuring satisfied customers

- Finding the Unexpected: Qualitative data often reveals surprises that we miss in quantitative data. QDA offers us new ideas and perspectives, for insights we might otherwise miss.

- Building Effective Strategies: Insights from QDA are like strategic guides. They help businesses in crafting plans that match people’s desires.

- Creating Genuine Connections: Understanding people’s experiences lets businesses connect on a real level. This genuine connection helps build trust and loyalty, priceless for any business.

How to do Qualitative Data Analysis: 5 steps

Now we are going to show how you can do your own qualitative data analysis. We will guide you through this process step by step. As mentioned earlier, you will learn how to do qualitative data analysis manually , and also automatically using modern qualitative data and thematic analysis software.

To get best value from the analysis process and research process, it’s important to be super clear about the nature and scope of the question that’s being researched. This will help you select the research collection channels that are most likely to help you answer your question.

Depending on if you are a business looking to understand customer sentiment, or an academic surveying a school, your approach to qualitative data analysis will be unique.

Once you’re clear, there’s a sequence to follow. And, though there are differences in the manual and automatic approaches, the process steps are mostly the same.

The use case for our step-by-step guide is a company looking to collect data (customer feedback data), and analyze the customer feedback - in order to improve customer experience. By analyzing the customer feedback the company derives insights about their business and their customers. You can follow these same steps regardless of the nature of your research. Let’s get started.

Step 1: Gather your qualitative data and conduct research (Conduct qualitative research)

The first step of qualitative research is to do data collection. Put simply, data collection is gathering all of your data for analysis. A common situation is when qualitative data is spread across various sources.

Classic methods of gathering qualitative data

Most companies use traditional methods for gathering qualitative data: conducting interviews with research participants, running surveys, and running focus groups. This data is typically stored in documents, CRMs, databases and knowledge bases. It’s important to examine which data is available and needs to be included in your research project, based on its scope.

Using your existing qualitative feedback

As it becomes easier for customers to engage across a range of different channels, companies are gathering increasingly large amounts of both solicited and unsolicited qualitative feedback.

Most organizations have now invested in Voice of Customer programs , support ticketing systems, chatbot and support conversations, emails and even customer Slack chats.

These new channels provide companies with new ways of getting feedback, and also allow the collection of unstructured feedback data at scale.

The great thing about this data is that it contains a wealth of valubale insights and that it’s already there! When you have a new question about user behavior or your customers, you don’t need to create a new research study or set up a focus group. You can find most answers in the data you already have.

Typically, this data is stored in third-party solutions or a central database, but there are ways to export it or connect to a feedback analysis solution through integrations or an API.

Utilize untapped qualitative data channels

There are many online qualitative data sources you may not have considered. For example, you can find useful qualitative data in social media channels like Twitter or Facebook. Online forums, review sites, and online communities such as Discourse or Reddit also contain valuable data about your customers, or research questions.

If you are considering performing a qualitative benchmark analysis against competitors - the internet is your best friend, and review analysis is a great place to start. Gathering feedback in competitor reviews on sites like Trustpilot, G2, Capterra, Better Business Bureau or on app stores is a great way to perform a competitor benchmark analysis.

Customer feedback analysis software often has integrations into social media and review sites, or you could use a solution like DataMiner to scrape the reviews.

Step 2: Connect & organize all your qualitative data

Now you all have this qualitative data but there’s a problem, the data is unstructured. Before feedback can be analyzed and assigned any value, it needs to be organized in a single place. Why is this important? Consistency!

If all data is easily accessible in one place and analyzed in a consistent manner, you will have an easier time summarizing and making decisions based on this data.

The manual approach to organizing your data

The classic method of structuring qualitative data is to plot all the raw data you’ve gathered into a spreadsheet.

Typically, research and support teams would share large Excel sheets and different business units would make sense of the qualitative feedback data on their own. Each team collects and organizes the data in a way that best suits them, which means the feedback tends to be kept in separate silos.

An alternative and a more robust solution is to store feedback in a central database, like Snowflake or Amazon Redshift .

Keep in mind that when you organize your data in this way, you are often preparing it to be imported into another software. If you go the route of a database, you would need to use an API to push the feedback into a third-party software.

Computer-assisted qualitative data analysis software (CAQDAS)

Traditionally within the manual analysis approach (but not always), qualitative data is imported into CAQDAS software for coding.

In the early 2000s, CAQDAS software was popularised by developers such as ATLAS.ti, NVivo and MAXQDA and eagerly adopted by researchers to assist with the organizing and coding of data.

The benefits of using computer-assisted qualitative data analysis software:

- Assists in the organizing of your data

- Opens you up to exploring different interpretations of your data analysis

- Allows you to share your dataset easier and allows group collaboration (allows for secondary analysis)

However you still need to code the data, uncover the themes and do the analysis yourself. Therefore it is still a manual approach.

Organizing your qualitative data in a feedback repository

Another solution to organizing your qualitative data is to upload it into a feedback repository where it can be unified with your other data , and easily searchable and taggable. There are a number of software solutions that act as a central repository for your qualitative research data. Here are a couple solutions that you could investigate:

- Dovetail: Dovetail is a research repository with a focus on video and audio transcriptions. You can tag your transcriptions within the platform for theme analysis. You can also upload your other qualitative data such as research reports, survey responses, support conversations ( conversational analytics ), and customer interviews. Dovetail acts as a single, searchable repository. And makes it easier to collaborate with other people around your qualitative research.

- EnjoyHQ: EnjoyHQ is another research repository with similar functionality to Dovetail. It boasts a more sophisticated search engine, but it has a higher starting subscription cost.

Organizing your qualitative data in a feedback analytics platform

If you have a lot of qualitative customer or employee feedback, from the likes of customer surveys or employee surveys, you will benefit from a feedback analytics platform. A feedback analytics platform is a software that automates the process of both sentiment analysis and thematic analysis . Companies use the integrations offered by these platforms to directly tap into their qualitative data sources (review sites, social media, survey responses, etc.). The data collected is then organized and analyzed consistently within the platform.

If you have data prepared in a spreadsheet, it can also be imported into feedback analytics platforms.

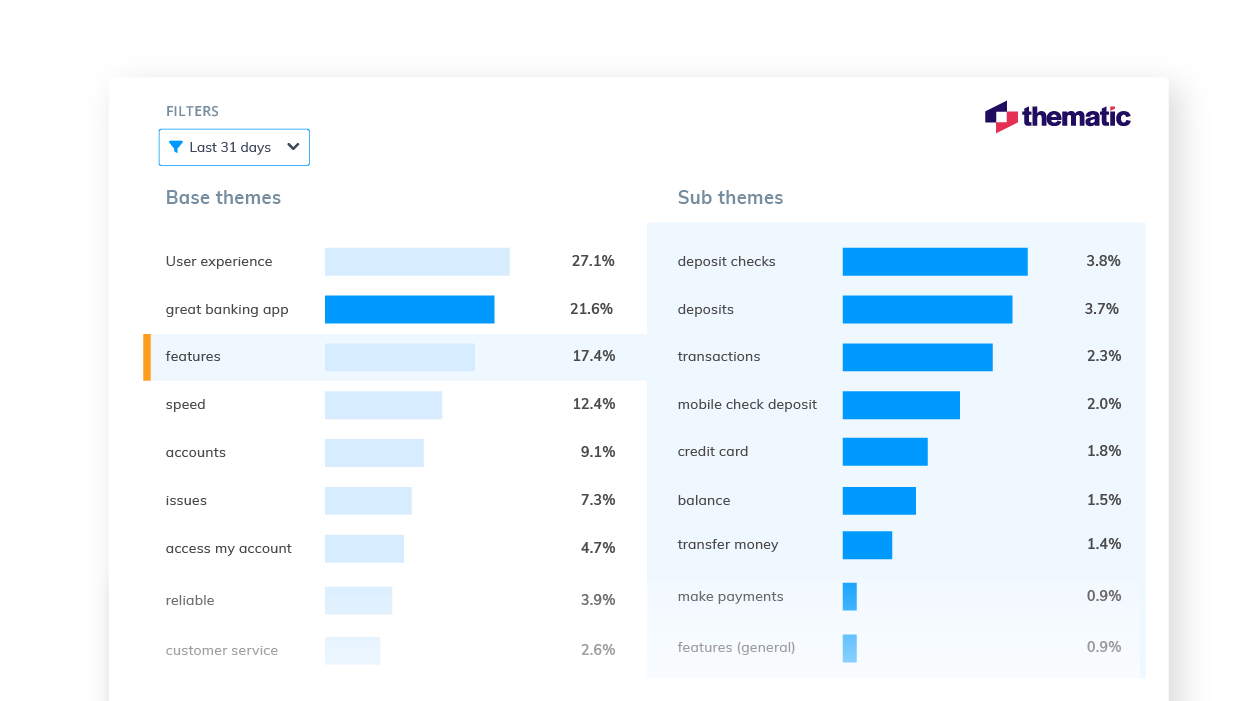

Once all this rich data has been organized within the feedback analytics platform, it is ready to be coded and themed, within the same platform. Thematic is a feedback analytics platform that offers one of the largest libraries of integrations with qualitative data sources.

Step 3: Coding your qualitative data

Your feedback data is now organized in one place. Either within your spreadsheet, CAQDAS, feedback repository or within your feedback analytics platform. The next step is to code your feedback data so we can extract meaningful insights in the next step.

Coding is the process of labelling and organizing your data in such a way that you can then identify themes in the data, and the relationships between these themes.

To simplify the coding process, you will take small samples of your customer feedback data, come up with a set of codes, or categories capturing themes, and label each piece of feedback, systematically, for patterns and meaning. Then you will take a larger sample of data, revising and refining the codes for greater accuracy and consistency as you go.

If you choose to use a feedback analytics platform, much of this process will be automated and accomplished for you.

The terms to describe different categories of meaning (‘theme’, ‘code’, ‘tag’, ‘category’ etc) can be confusing as they are often used interchangeably. For clarity, this article will use the term ‘code’.

To code means to identify key words or phrases and assign them to a category of meaning. “I really hate the customer service of this computer software company” would be coded as “poor customer service”.

How to manually code your qualitative data

- Decide whether you will use deductive or inductive coding. Deductive coding is when you create a list of predefined codes, and then assign them to the qualitative data. Inductive coding is the opposite of this, you create codes based on the data itself. Codes arise directly from the data and you label them as you go. You need to weigh up the pros and cons of each coding method and select the most appropriate.

- Read through the feedback data to get a broad sense of what it reveals. Now it’s time to start assigning your first set of codes to statements and sections of text.

- Keep repeating step 2, adding new codes and revising the code description as often as necessary. Once it has all been coded, go through everything again, to be sure there are no inconsistencies and that nothing has been overlooked.

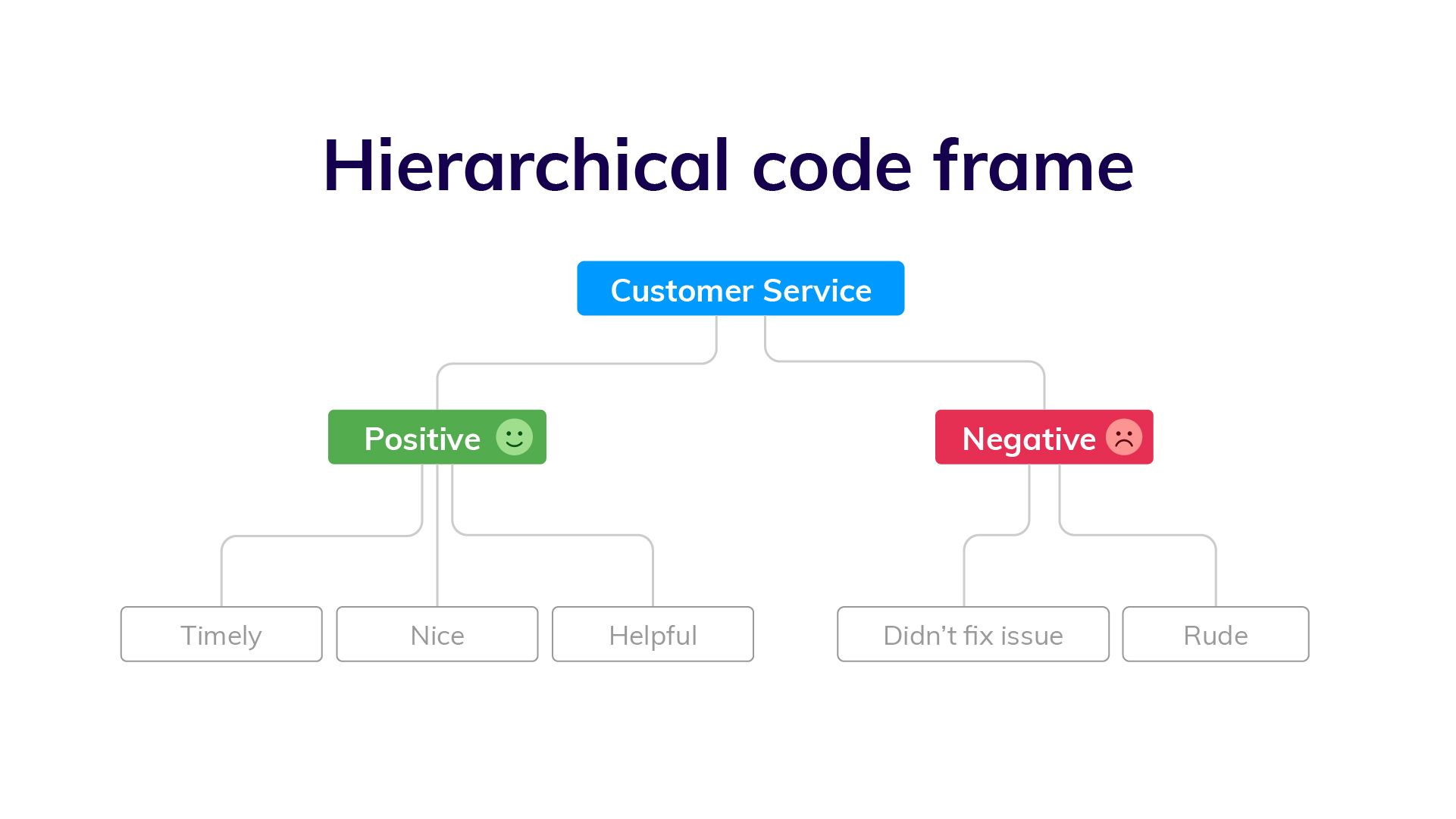

- Create a code frame to group your codes. The coding frame is the organizational structure of all your codes. And there are two commonly used types of coding frames, flat, or hierarchical. A hierarchical code frame will make it easier for you to derive insights from your analysis.

- Based on the number of times a particular code occurs, you can now see the common themes in your feedback data. This is insightful! If ‘bad customer service’ is a common code, it’s time to take action.

We have a detailed guide dedicated to manually coding your qualitative data .

Using software to speed up manual coding of qualitative data

An Excel spreadsheet is still a popular method for coding. But various software solutions can help speed up this process. Here are some examples.

- CAQDAS / NVivo - CAQDAS software has built-in functionality that allows you to code text within their software. You may find the interface the software offers easier for managing codes than a spreadsheet.

- Dovetail/EnjoyHQ - You can tag transcripts and other textual data within these solutions. As they are also repositories you may find it simpler to keep the coding in one platform.

- IBM SPSS - SPSS is a statistical analysis software that may make coding easier than in a spreadsheet.

- Ascribe - Ascribe’s ‘Coder’ is a coding management system. Its user interface will make it easier for you to manage your codes.

Automating the qualitative coding process using thematic analysis software

In solutions which speed up the manual coding process, you still have to come up with valid codes and often apply codes manually to pieces of feedback. But there are also solutions that automate both the discovery and the application of codes.

Advances in machine learning have now made it possible to read, code and structure qualitative data automatically. This type of automated coding is offered by thematic analysis software .

Automation makes it far simpler and faster to code the feedback and group it into themes. By incorporating natural language processing (NLP) into the software, the AI looks across sentences and phrases to identify common themes meaningful statements. Some automated solutions detect repeating patterns and assign codes to them, others make you train the AI by providing examples. You could say that the AI learns the meaning of the feedback on its own.

Thematic automates the coding of qualitative feedback regardless of source. There’s no need to set up themes or categories in advance. Simply upload your data and wait a few minutes. You can also manually edit the codes to further refine their accuracy. Experiments conducted indicate that Thematic’s automated coding is just as accurate as manual coding .

Paired with sentiment analysis and advanced text analytics - these automated solutions become powerful for deriving quality business or research insights.

You could also build your own , if you have the resources!

The key benefits of using an automated coding solution

Automated analysis can often be set up fast and there’s the potential to uncover things that would never have been revealed if you had given the software a prescribed list of themes to look for.

Because the model applies a consistent rule to the data, it captures phrases or statements that a human eye might have missed.

Complete and consistent analysis of customer feedback enables more meaningful findings. Leading us into step 4.

Step 4: Analyze your data: Find meaningful insights

Now we are going to analyze our data to find insights. This is where we start to answer our research questions. Keep in mind that step 4 and step 5 (tell the story) have some overlap . This is because creating visualizations is both part of analysis process and reporting.

The task of uncovering insights is to scour through the codes that emerge from the data and draw meaningful correlations from them. It is also about making sure each insight is distinct and has enough data to support it.

Part of the analysis is to establish how much each code relates to different demographics and customer profiles, and identify whether there’s any relationship between these data points.

Manually create sub-codes to improve the quality of insights

If your code frame only has one level, you may find that your codes are too broad to be able to extract meaningful insights. This is where it is valuable to create sub-codes to your primary codes. This process is sometimes referred to as meta coding.

Note: If you take an inductive coding approach, you can create sub-codes as you are reading through your feedback data and coding it.

While time-consuming, this exercise will improve the quality of your analysis. Here is an example of what sub-codes could look like.

You need to carefully read your qualitative data to create quality sub-codes. But as you can see, the depth of analysis is greatly improved. By calculating the frequency of these sub-codes you can get insight into which customer service problems you can immediately address.

Correlate the frequency of codes to customer segments

Many businesses use customer segmentation . And you may have your own respondent segments that you can apply to your qualitative analysis. Segmentation is the practise of dividing customers or research respondents into subgroups.

Segments can be based on:

- Demographic

- And any other data type that you care to segment by

It is particularly useful to see the occurrence of codes within your segments. If one of your customer segments is considered unimportant to your business, but they are the cause of nearly all customer service complaints, it may be in your best interest to focus attention elsewhere. This is a useful insight!

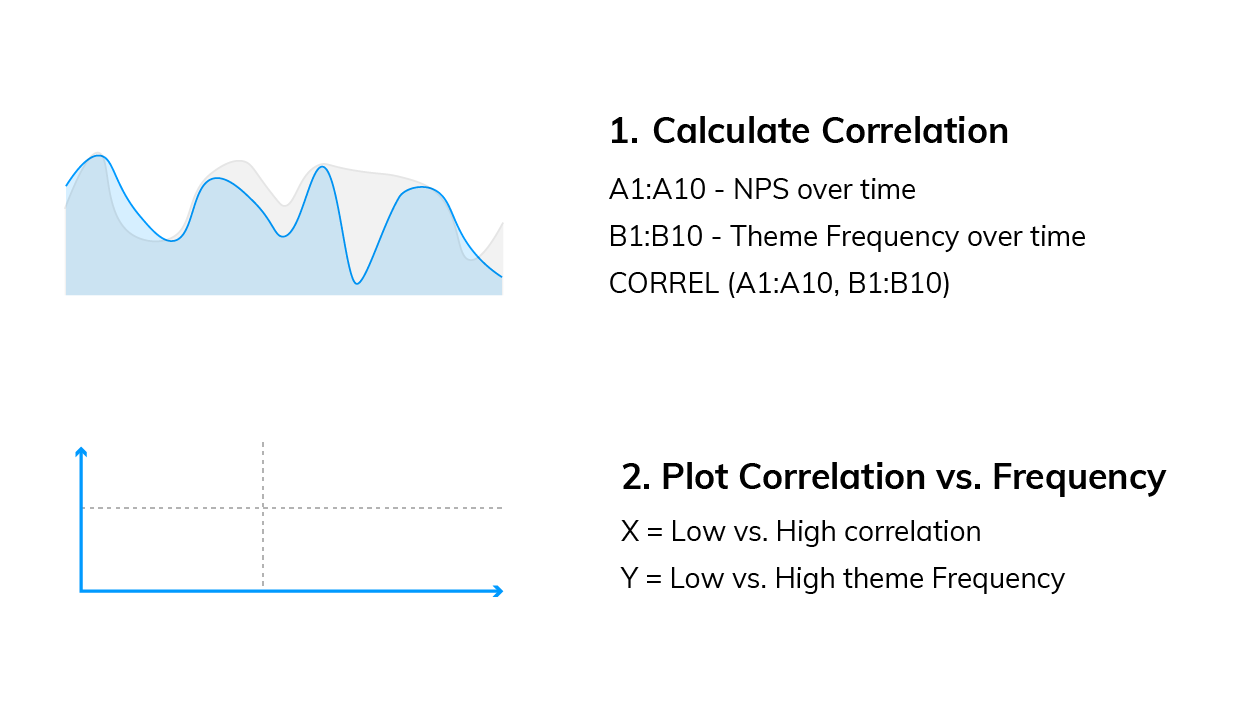

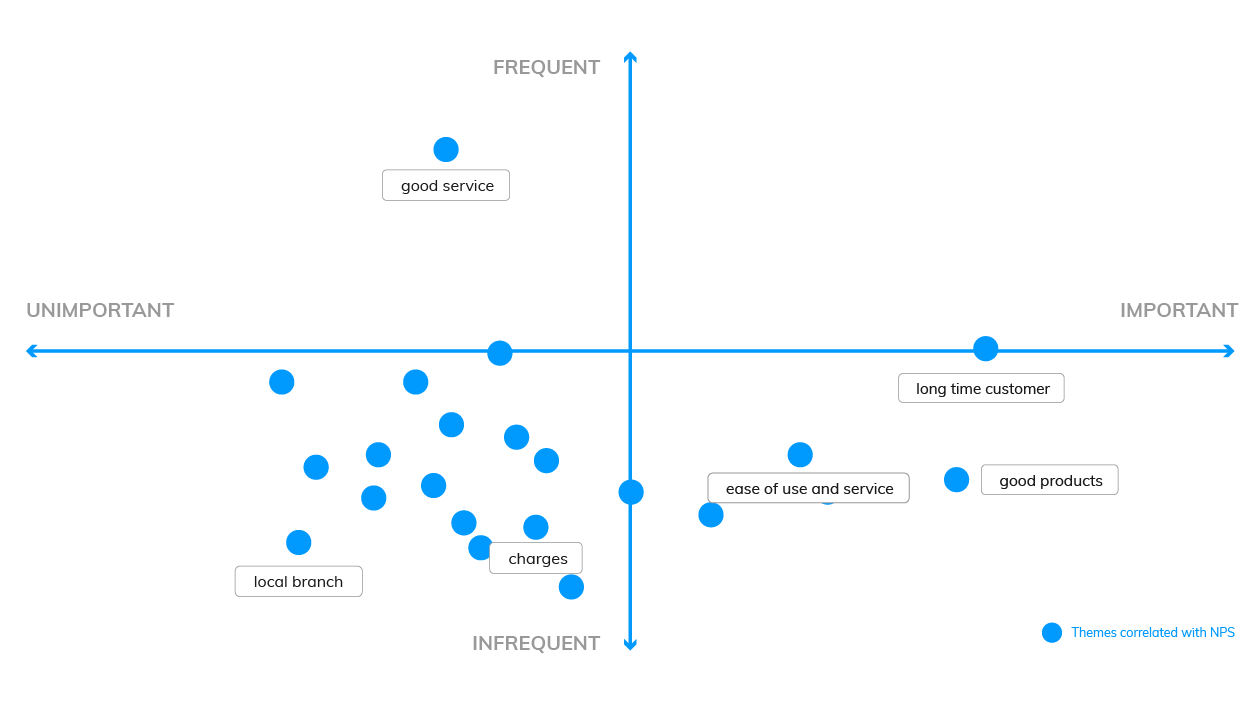

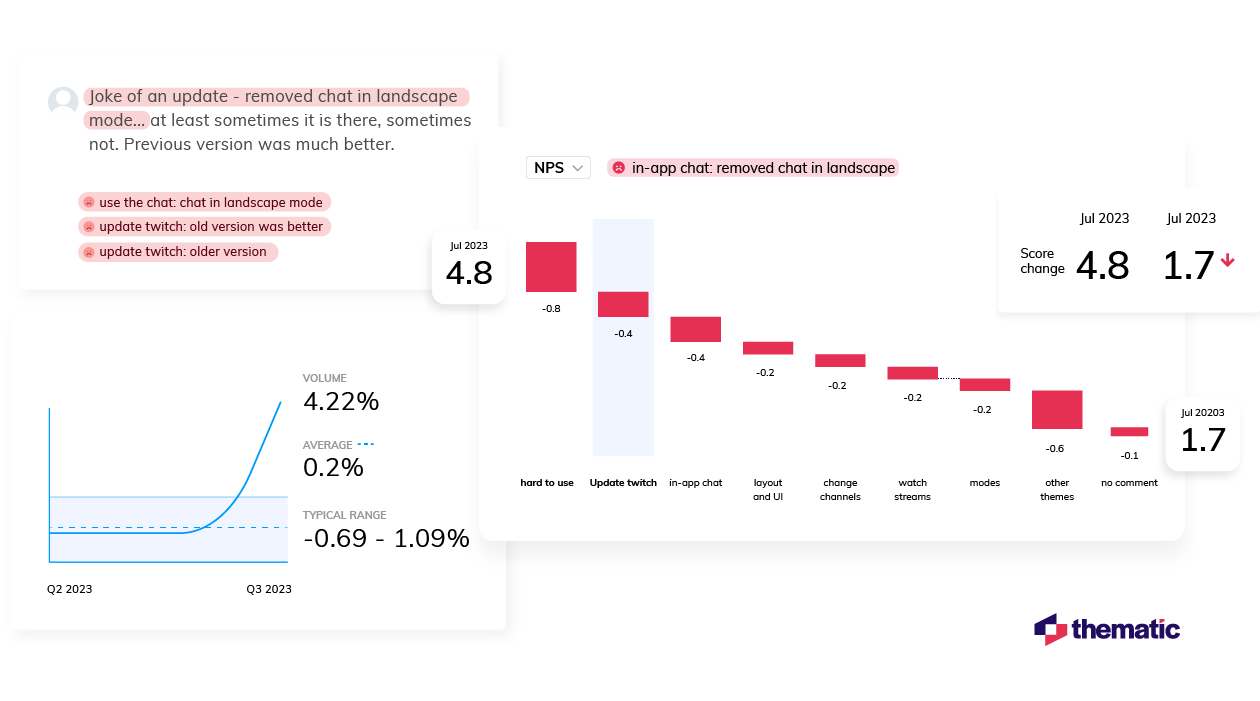

Manually visualizing coded qualitative data

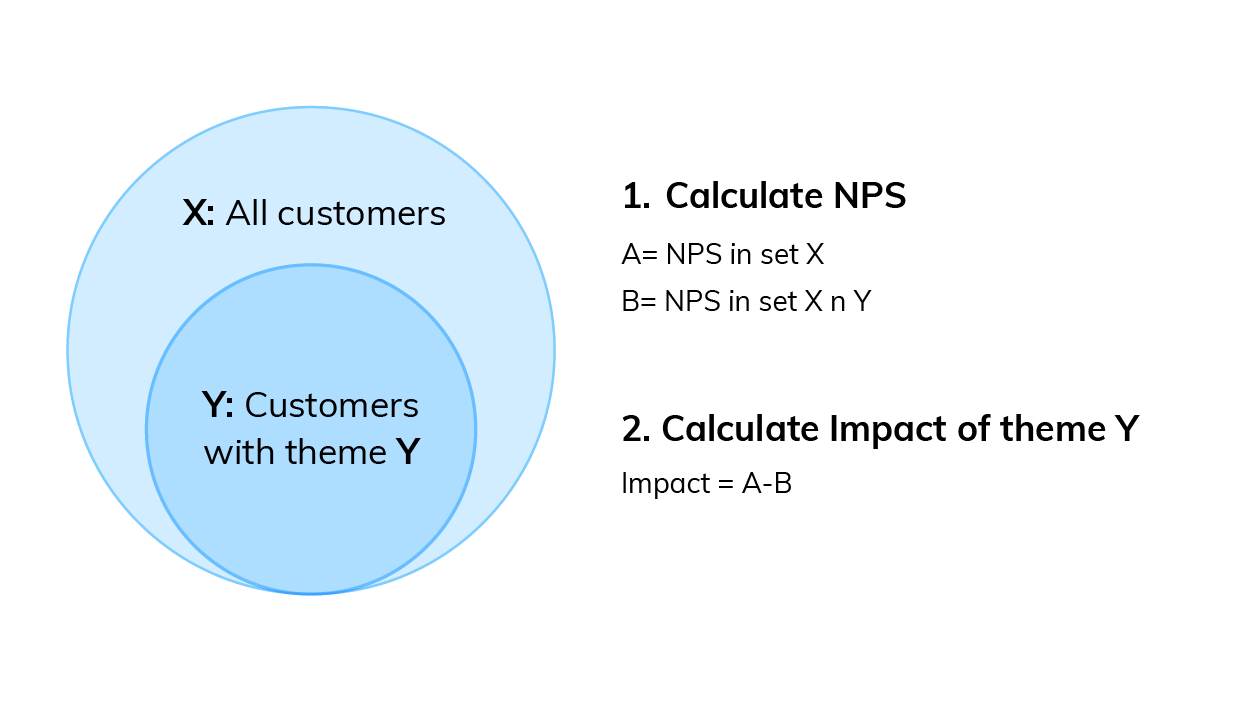

There are formulas you can use to visualize key insights in your data. The formulas we will suggest are imperative if you are measuring a score alongside your feedback.