Comprehensive Guide to Developing a Robust Monitoring and Evaluation Plan in 13 Steps


Developing a monitoring and evaluation (M&E) plan can be a complex process. These general steps can help you get started: define your project, identify key performance indicators, set targets, determine data collection methods, establish a timeline, analyze and interpret data, and use the findings to inform future decisions. A solid M&E plan can help ensure project success and improve resource allocation. Let’s get started!

Table of contents

- Step 1 Identify Your Evaluation Audience or Stakeholders

- Step 2 Identify Program Goals and Objectives

- Step 3 Define the Evaluation Questions

- Step 4 Developing Evaluation Objectives

- Step 5 Identify Monitoring Questions

- Step 6 Define Indicators to Include in the Evaluation Plan

- Step 7 Define Data Collection Methods and Timeline – Creating a Methodology

- Step 8 Identify M&E Roles and Responsibilities

- Step 9 Identify Who Is Responsible for Data Collection and Timelines

- Step 10 Create an Analysis Plan and Reporting Templates

- Step 11 Review the M&E Plan

- Step 12 Implementing and Monitoring the Evaluation Plan

- Step 13 Using Results to Make Informed Decisions (Plan for Dissemination and Donor Reporting)

Step 1 Identify Your Evaluation Audience or Stakeholders

The audience or stakeholders for an evaluation can vary depending on the program or project being evaluated. However, some common stakeholders that may be involved in an evaluation include:

- Program managers and staff: Program managers and staff are responsible for implementing the program and are often the primary users of evaluation findings. They may use evaluation findings to make program adjustments or improvements, and to report on program progress to other stakeholders.

- Funders: Funders provide the financial resources for a program and may require evaluations to ensure that the program is meeting its intended goals and outcomes. Evaluation findings can be used to inform funding decisions and may be included in funding reports or proposals.

- Beneficiaries: Beneficiaries are the individuals or communities that are directly impacted by the program. Their perspectives and feedback are important in evaluating the effectiveness of the program and can help to identify areas for improvement.

- Other stakeholders: Other stakeholders may include partners, collaborators, policymakers, and the broader community. They may have an interest in the program and its outcomes and may use evaluation findings to inform their own work or decision-making.

It is important to consider the needs and perspectives of all stakeholders when conducting an evaluation. Evaluation findings should be communicated in a way that is clear and accessible to all stakeholders and should be used to inform decision-making and improve program effectiveness.

Step 2 Identify Program Goals and Objectives

Program goals and objectives are critical components of a program design and evaluation. Program goals are broad statements that describe the overarching purpose or intended outcome of the program. Objectives, on the other hand, are more specific and measurable statements that describe the steps that will be taken to achieve the program goals.

Here are some examples of program goals and objectives:

Program Goal: To improve access to clean water in rural communities.

Program Objectives:

- To install 50 new water filtration systems in rural communities by the end of the year.

- To provide training on water sanitation and hygiene practices to 500 community members by the end of the year.

Program Goal: To reduce food insecurity in the local community.

- To establish a community garden program that will provide fresh produce to 100 families by the end of the year.

- To distribute food baskets to 200 families in need each month.

Program Goal: To improve academic performance of at-risk students.

- To provide after-school tutoring services to 50 at-risk students each week.

- To increase the graduation rate of at-risk students by 10% within the next 2 years.

Program Goal: To increase access to healthcare services in underserved communities.

- To establish 3 new health clinics in underserved communities within the next 3 years.

- To provide health education and screening services to 500 community members within the next year.

Program goals and objectives should be specific, measurable, achievable, relevant, and time-bound (SMART). These criteria help to ensure that the program is focused, achievable, and measurable, and that progress towards the goals and objectives can be tracked and evaluated.
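A percentage target is only measurable once the objective states what it is a percentage of. For example, "increase the graduation rate of at-risk students by 10%" can be read as a relative increase or as an increase of 10 percentage points. The short Python sketch below compares both readings; the function name and the 60% baseline are hypothetical, chosen only for illustration.

```python
def targets_from_increase(baseline_rate: float, increase: float) -> dict[str, float]:
    """Return both readings of 'increase the rate by X%'.

    Both arguments are proportions: 0.60 means 60%, 0.10 means 10%.
    """
    return {
        # Relative reading: the rate grows by 10% of its own baseline value.
        "relative": baseline_rate * (1 + increase),
        # Absolute reading: the rate grows by 10 percentage points.
        "percentage_points": baseline_rate + increase,
    }


if __name__ == "__main__":
    # Hypothetical baseline: 60% of at-risk students currently graduate.
    for reading, target in targets_from_increase(0.60, 0.10).items():
        print(f"{reading}: target graduation rate {target:.0%}")
```

With a 60% baseline, the relative reading sets a 66% target while the percentage-point reading sets 70%, so a SMART objective should say which one is meant.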

Step 3 Define the Evaluation Questions

Key questions to be asked to determine if an M&E plan is working include the following:

- Are the M&E activities progressing as planned?

- Are M&E questions being answered sufficiently? Are other data needed to answer these questions? How can such data be obtained?

- Should the M&E questions be re-framed? Have other M&E questions arisen that should be incorporated into the plan?

- Are there any methodological or evaluation design issues that need to be addressed? Are there any practical or political factors that need to be considered?

- Are any changes in the M&E plan needed at this time? How will these changes be made? Who will implement them?

- Are appropriate staff and funding still available to complete the evaluation plan?

- How are findings from M&E activities being used and disseminated? Should anything be done to enhance their application to programs?


Step 4 – Developing Evaluation Objectives

Developing evaluation objectives is a critical step in creating a comprehensive monitoring and evaluation plan. Evaluation objectives specify what the evaluation will assess and define the criteria for success.

Evaluation objectives should be aligned with the project’s overall goals and objectives, and they should be specific, measurable, achievable, relevant, and time-bound.

Here are some examples of evaluation objectives. Each example highlights one SMART criterion, although a well-written objective should meet all five:

- Specific: To assess the effectiveness of a new training program by measuring the increase in job performance and knowledge among participants.

- Measurable: To determine the impact of a community development project by measuring the increase in access to essential services such as healthcare, education, and clean water among the target population.

- Achievable: To increase website traffic by 20% within six months by optimizing website content and implementing a targeted digital marketing campaign.

- Relevant: To improve employee retention by 15% within one year by implementing new professional development opportunities and increasing employee engagement.

- Time-bound: To reduce customer complaints by 25% within the next quarter by improving customer service response times and implementing a customer feedback system.
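Only some SMART criteria can be checked mechanically. The Python sketch below assumes each objective is recorded with a hypothetical set of fields (indicator, baseline, target, deadline) and flags objectives that lack what they need to be measurable and time-bound. Whether an objective is specific, achievable, and relevant still requires human judgment.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class EvaluationObjective:
    # Hypothetical fields; adapt them to your own M&E plan template.
    statement: str
    indicator: Optional[str] = None    # what will be measured
    baseline: Optional[float] = None   # starting value of the indicator
    target: Optional[float] = None     # value to reach
    deadline: Optional[date] = None    # when the target should be reached


def missing_elements(obj: EvaluationObjective) -> list[str]:
    """List the mechanically checkable SMART gaps in an objective."""
    gaps = []
    if obj.indicator is None:
        gaps.append("no indicator (not measurable)")
    if obj.baseline is None or obj.target is None:
        gaps.append("no baseline or target value (not measurable)")
    if obj.deadline is None:
        gaps.append("no deadline (not time-bound)")
    return gaps


if __name__ == "__main__":
    vague = EvaluationObjective("Improve customer service")
    sharp = EvaluationObjective(
        "Reduce customer complaints by 25% within the next quarter",
        indicator="complaints received per month",
        baseline=120,                # hypothetical baseline
        target=90,                   # 25% below the baseline
        deadline=date(2025, 3, 31),  # hypothetical end of quarter
    )
    print(missing_elements(vague))   # three gaps
    print(missing_elements(sharp))   # []
```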

These are just a few examples of evaluation objectives, and they can be adapted to fit the specific needs and goals of each project or program.

By developing SMART evaluation objectives as a step in the development of a monitoring and evaluation plan, you can ensure that the evaluation process is focused, achievable, and aligned with the project’s overall goals and objectives. This helps make the monitoring and evaluation plan effective in assessing progress and providing valuable insights for project improvement and decision-making.

Step 5 Identify the monitoring questions

Monitoring questions are an important part of any project, as they help to ensure that the project is moving forward in the right direction. In the case of a project to improve customer service, the monitoring questions might include:

- How satisfied are customers with the current level of service?

- What areas need improvement?

- What resources are available to support customer service improvement?

- What processes are in place to ensure customer service is consistently meeting customer needs?

- What metrics are being used to measure customer service performance?

These questions will help identify areas for improvement and provide guidance on how best to implement changes. By revisiting these questions regularly, the project team can track whether customer service is improving and continuing to meet customer needs.

Step 6 Define Indicators to Include in the Evaluation Plan

Indicators are specific, measurable variables or metrics that can be used to assess progress towards achieving the objectives of a project or program. In an evaluation plan, indicators are essential components that help determine whether a project is achieving its intended outcomes and objectives. To define indicators to include in an evaluation plan, follow these steps:

- Identify the objectives of the project: Review the project’s goals and objectives to determine what specific outcomes the project is intended to achieve.

- Determine the data needed to measure progress: Identify the data needed to assess progress towards achieving each objective.

- Develop measurable indicators: Develop specific, measurable indicators that will allow you to track progress towards achieving each objective.

- Ensure that the indicators are relevant: Ensure that the indicators selected are relevant to the objectives of the project and that they provide meaningful information that can be used to inform decision-making.

- Consider data availability and collection methods: Ensure that data is available for the selected indicators and that collection methods are practical and cost-effective.

- Establish a baseline: Establish a baseline measurement for each indicator to determine the starting point for tracking progress.
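Once a baseline and a target exist, progress on an indicator is often reported as the share of the planned change achieved so far. A minimal Python sketch, assuming the simple formula (actual - baseline) / (target - baseline); the function name and the figures in the example are hypothetical.

```python
def percent_of_target_achieved(baseline: float, target: float, actual: float) -> float:
    """Return the share of the planned change (baseline -> target) achieved, in percent.

    The formula works for indicators that should rise and for indicators that
    should fall, because the sign of the planned change cancels out.
    """
    planned_change = target - baseline
    if planned_change == 0:
        raise ValueError("target equals baseline, so progress is undefined")
    return 100 * (actual - baseline) / planned_change


if __name__ == "__main__":
    # Hypothetical: 20 of 50 planned water filtration systems installed so far.
    print(percent_of_target_achieved(baseline=0, target=50, actual=20))     # 40.0
    # Hypothetical: complaints fell from 120 to 105 against a target of 90.
    print(percent_of_target_achieved(baseline=120, target=90, actual=105))  # 50.0
```

Because the baseline appears in the formula, an indicator without a baseline cannot be reported this way, which is one practical reason to establish baselines before implementation starts.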

Here are some examples of indicators that could be included in an evaluation plan:

Program Objective: To install 50 new water filtration systems in rural communities by the end of the year.

Indicators:

- Number of water filtration systems installed

- Number of community members with access to clean water

- Water quality test results

Program Objective: To establish a community garden program that will provide fresh produce to 100 families by the end of the year.

- Number of families participating in the community garden program

- Number of pounds of fresh produce harvested

- Number of families reporting improved food security

Program Objective: To provide after-school tutoring services to 50 at-risk students each week.

- Number of at-risk students attending tutoring sessions

- Average increase in grades of at-risk students

- Percentage of at-risk students passing core subjects

Program Objective: To establish 3 new health clinics in underserved communities within the next 3 years.

- Number of new health clinics established

- Number of community members served by the new health clinics

- Number of community members reporting improved access to healthcare services
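Each indicator needs an explicit calculation rule, especially averages and percentages, where the denominator must be defined. The Python sketch below computes the three tutoring indicators from hypothetical student records. It assumes that a student counts as attending after at least one session, and that both the average grade increase and the pass rate are calculated over attending students only; a program could reasonably choose a different denominator, such as all enrolled students.

```python
from statistics import mean

# Hypothetical records for students enrolled in the tutoring program.
STUDENTS = [
    {"id": "S01", "sessions": 10, "grade_before": 62, "grade_after": 70, "passed_core": True},
    {"id": "S02", "sessions": 0, "grade_before": 55, "grade_after": 54, "passed_core": False},
    {"id": "S03", "sessions": 8, "grade_before": 48, "grade_after": 58, "passed_core": True},
    {"id": "S04", "sessions": 12, "grade_before": 71, "grade_after": 75, "passed_core": False},
]


def tutoring_indicators(records: list[dict]) -> dict[str, float]:
    """Compute the three tutoring indicators from per-student records."""
    # Assumption: a student "attends" after at least one session.
    attending = [r for r in records if r["sessions"] > 0]
    if not attending:
        raise ValueError("no attending students; indicators are undefined")
    return {
        # Number of at-risk students attending tutoring sessions.
        "students_attending": len(attending),
        # Average increase in grades, over attending students only.
        "avg_grade_increase": mean(r["grade_after"] - r["grade_before"] for r in attending),
        # Percentage passing core subjects: passed / all attending students.
        "pct_passing_core": 100 * sum(r["passed_core"] for r in attending) / len(attending),
    }


if __name__ == "__main__":
    print(tutoring_indicators(STUDENTS))
```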

Overall, it is important to choose indicators that are meaningful, measurable, and aligned with program goals and objectives. This will help to ensure that the evaluation is able to accurately assess program effectiveness and identify areas for improvement.

By defining indicators to include in an evaluation plan, you can ensure that the evaluation process is focused and effective in providing valuable insights for project improvement and decision-making.

Step 7 Define Data Collection Methods and Timeline – Creating a Methodology

Creating a methodology is a crucial step in developing a monitoring and evaluation plan. In this step, you define the data collection methods and timeline for the evaluation. To do this, first identify the data that must be collected to assess progress towards each objective. Then select data collection methods that suit both the data being collected and the resources available. Common methods include surveys, interviews, focus groups, observation, and document review.

Once you have identified the data and the appropriate data collection methods, you can establish a timeline for data collection and evaluation that aligns with the project’s overall timeline and key milestones. This timeline should be realistic and consider the availability of resources and the time required to collect and analyze the data.
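A data collection timeline can be drafted by placing a baseline round at the start, routine rounds at a fixed interval, and an endline round at the end. The sketch below uses only Python's standard library; the function names, the quarterly interval, and the project dates are hypothetical. The helper clamps days after the 28th to the 28th so that every shifted date is valid in any month.

```python
from datetime import date


def add_months(d: date, months: int) -> date:
    """Shift a date by whole months; days after the 28th are clamped to the 28th."""
    month_index = d.month - 1 + months
    return date(d.year + month_index // 12, month_index % 12 + 1, min(d.day, 28))


def collection_schedule(start: date, end: date, every_months: int) -> list[date]:
    """Baseline at start, routine rounds every N months, endline at end."""
    if every_months <= 0 or end <= start:
        raise ValueError("need a positive interval and end after start")
    rounds = []
    current = start
    while current < end:
        rounds.append(current)
        current = add_months(current, every_months)
    rounds.append(end)  # endline round
    return rounds


if __name__ == "__main__":
    # Hypothetical project running through 2025 with quarterly monitoring.
    for round_date in collection_schedule(date(2025, 1, 15), date(2025, 12, 31), 3):
        print(round_date.isoformat())
```

The generated dates are only a starting point; they should then be adjusted to the project's key milestones and to when staff and resources are actually available.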

It is also important to assign responsibility for each aspect of the methodology, including data collection, analysis, and reporting. You should ensure that the individuals responsible have the necessary skills, resources, and support to carry out their assigned tasks effectively.

To ensure data quality, you should develop strategies to ensure that data is accurate, reliable, and valid, and that any biases or errors are minimized. Establishing a baseline measurement for each indicator is crucial to determine the starting point for tracking progress.

By creating a methodology that defines data collection methods and timeline, you can ensure that the monitoring and evaluation plan is effective in assessing progress and providing valuable insights for project improvement and decision-making.

Step 8 Identify M&E Roles and Responsibilities

This step involves defining the roles and responsibilities of individuals or teams involved in the monitoring and evaluation process to ensure that everyone knows what is expected of them.

To begin, it is important to identify the key stakeholders involved in the project and determine their respective roles and responsibilities in the M&E process. This includes identifying the project manager or coordinator, data collectors, data analysts, and decision-makers who will use the M&E findings to inform project decisions.

Once the stakeholders have been identified, it is necessary to define their specific roles and responsibilities. For example, the project manager or coordinator may be responsible for overall project management and ensuring that the M&E plan is implemented as intended. Data collectors may be responsible for collecting and managing data, while data analysts may be responsible for analyzing and interpreting data. Decision-makers may be responsible for using the M&E findings to inform project decisions.

It is also important to establish communication channels and protocols for sharing information and M&E findings among stakeholders. This includes defining the frequency and format of progress reports, as well as procedures for addressing any issues or challenges that arise during the M&E process.

By identifying M&E roles and responsibilities, you can ensure that everyone involved in the monitoring and evaluation process knows what is expected of them, which supports the effective implementation of the M&E plan and the project’s success.

Step 9 Identify who is Responsible for Data Collection and Timelines

This step involves determining the individuals or teams responsible for collecting and managing the data required to assess progress towards achieving project objectives, as well as defining the timelines for data collection.

To identify who is responsible for data collection, it is important to review the project goals and objectives and determine what data needs to be collected to assess progress. The individuals or teams responsible for data collection may include project staff, external consultants, or other stakeholders with relevant expertise.

Once the individuals or teams responsible for data collection have been identified, it is necessary to establish a timeline for data collection that aligns with the project’s overall timeline and key milestones. This timeline should be realistic and consider the availability of resources and the time required to collect and analyze the data.

In addition to establishing a timeline, it is also important to define the specific data collection methods that will be used and ensure that those responsible for data collection have the necessary resources and support to carry out their assigned tasks effectively. This may include providing training on data collection methods, ensuring access to necessary equipment or software, and establishing protocols for data management and quality control.
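Before data collection begins, it helps to confirm that every indicator has a method, a responsible person or team, and a collection frequency. The Python sketch below represents a data collection plan as a list of rows with hypothetical field names and reports any gaps.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PlanRow:
    # Hypothetical columns of a data collection plan.
    indicator: str
    method: Optional[str] = None       # e.g. survey, observation, document review
    responsible: Optional[str] = None  # person or team collecting the data
    frequency: Optional[str] = None    # e.g. "monthly", "quarterly"


def find_gaps(rows: list[PlanRow]) -> dict[str, list[str]]:
    """Map each incomplete indicator to the plan fields it is missing."""
    gaps = {}
    for row in rows:
        missing = [field for field in ("method", "responsible", "frequency")
                   if getattr(row, field) is None]
        if missing:
            gaps[row.indicator] = missing
    return gaps


if __name__ == "__main__":
    plan = [
        PlanRow("Number of water filtration systems installed",
                method="installation records", responsible="field officer",
                frequency="monthly"),
        PlanRow("Water quality test results", method="lab testing",
                frequency="quarterly"),  # no one assigned yet
    ]
    print(find_gaps(plan))
```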

By identifying who is responsible for data collection and timelines, you can ensure that data is collected in a timely and efficient manner, which is crucial for the effective implementation of the M&E plan and the success of the project.

Step 10 Create an Analysis Plan and Reporting Templates

This step involves determining how data will be analyzed and reported, including the selection of appropriate methods and the development of reporting templates to ensure that data is presented in a clear and concise manner.

To create an analysis plan, it is important to review the project objectives and identify the key performance indicators that will be used to assess progress. This will help determine what data needs to be analyzed and what statistical methods will be used to analyze the data. The analysis plan should include a detailed description of the statistical methods that will be used, including any assumptions or limitations associated with these methods.
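When the same participants are measured at baseline and endline, a paired comparison is one common analysis choice. The sketch below computes the mean change and a paired t statistic with Python's standard library; the function name and the test scores are hypothetical. Turning the statistic into a p-value requires a t distribution with n - 1 degrees of freedom, for example via SciPy's `scipy.stats.ttest_rel`, which performs the full paired t-test.

```python
from math import sqrt
from statistics import mean, stdev


def paired_change(baseline: list[float], endline: list[float]) -> dict[str, float]:
    """Mean change and paired t statistic for participants measured twice."""
    if len(baseline) != len(endline) or len(baseline) < 2:
        raise ValueError("need two equal-length lists with at least 2 participants")
    # Change for each participant (endline minus baseline).
    diffs = [after - before for before, after in zip(baseline, endline)]
    sd = stdev(diffs)
    if sd == 0:
        raise ValueError("all changes are identical; t statistic is undefined")
    standard_error = sd / sqrt(len(diffs))
    mean_diff = mean(diffs)
    return {
        "n": len(diffs),
        "mean_change": mean_diff,
        # Compare against a t distribution with n - 1 degrees of freedom.
        "t_statistic": mean_diff / standard_error,
    }


if __name__ == "__main__":
    # Hypothetical knowledge-test scores for five training participants.
    before = [50, 62, 58, 45, 70]
    after = [58, 65, 66, 50, 74]
    print(paired_change(before, after))
```

The analysis plan should record this kind of choice together with its assumptions; a paired t-test, for instance, assumes the individual changes are roughly normally distributed.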

Once the analysis plan has been developed, it is necessary to create reporting templates to ensure that data is presented in a clear and concise manner. Reporting templates should include the key performance indicators and the specific data that will be reported, as well as any graphs, charts, or tables that will be used to present the data. Reporting templates should be designed to provide a clear picture of progress towards achieving project objectives, and should be easy to read and understand.
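A reporting template can be as simple as a fixed table of indicators with baseline, target, actual, and progress columns, so that every report presents the same fields in the same order. The Python sketch below renders such a table as plain text; the column layout is an assumption and the figures in the example are hypothetical.

```python
def render_indicator_table(rows: list[tuple[str, float, float, float]]) -> str:
    """Render (indicator, baseline, target, actual) rows as a plain-text table."""
    header = (f"{'Indicator':<42}{'Baseline':>10}{'Target':>10}"
              f"{'Actual':>10}{'Progress':>10}")
    lines = [header, "-" * len(header)]
    for name, baseline, target, actual in rows:
        if target == baseline:
            progress = "n/a"  # no planned change, so progress is undefined
        else:
            # Share of the planned change (baseline -> target) achieved so far.
            progress = f"{100 * (actual - baseline) / (target - baseline):.0f}%"
        lines.append(f"{name:<42}{baseline:>10g}{target:>10g}"
                     f"{actual:>10g}{progress:>10}")
    return "\n".join(lines)


if __name__ == "__main__":
    print(render_indicator_table([
        ("Water filtration systems installed", 0, 50, 20),
        ("Families in community garden program", 0, 100, 65),
    ]))
```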

It is also important to establish protocols for data sharing and reporting to ensure that data is shared in a timely and effective manner. This may include establishing a timeline for reporting, identifying the stakeholders who will receive the reports, and determining the format and level of detail required for each report.

By creating an analysis plan and reporting templates, you can ensure that data is analyzed and reported in a systematic and standardized manner, which is crucial for the effective implementation of the M&E plan and the success of the project.

Step 11 Review the M&E Plan

This step involves a comprehensive review of the M&E plan to ensure that it is aligned with the project’s overall goals and objectives, and that it is practical and feasible to implement.

To review the M&E plan, it is necessary to first review the project’s goals and objectives and ensure that the M&E plan is aligned with these. This includes reviewing the performance indicators and ensuring that they are relevant, measurable, and appropriate for tracking progress towards achieving project objectives.

Next, it is necessary to review the data collection methods and analysis plan to ensure that they are practical and feasible to implement. This includes reviewing the data collection timeline, the individuals or teams responsible for data collection, and the resources required to collect and manage the data.

In addition to reviewing the M&E plan itself, it is also important to review the communication and reporting protocols to ensure that they are effective and efficient. This may include reviewing the reporting templates, the stakeholders who will receive the reports, and the frequency and format of progress reports.

Overall, the goal of reviewing the M&E plan is to ensure that it is practical, feasible, and effective in assessing progress towards achieving project objectives. This step is critical for the success of the project, as it ensures that the M&E plan is aligned with the project’s overall goals and objectives and that it is designed to provide valuable insights for project improvement and decision-making.

Step 12 Implementing and Monitoring the Evaluation Plan

This step involves carrying out the data collection, analysis, and reporting activities defined in the M&E plan, as well as monitoring progress towards achieving project objectives.

To implement the evaluation plan, it is necessary to follow the protocols and procedures defined in the M&E plan. This may include assigning responsibilities to individuals or teams involved in data collection, analysis, and reporting, and providing training and support as needed.

Monitoring progress towards project objectives involves tracking the performance indicators defined in the M&E plan and comparing them with their baseline measurements. This may involve preparing regular progress reports and reviewing the data for trends or issues that require further attention.
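Comparing indicators with their baselines is easier to act on when each indicator also has an expected value for the reporting date. The sketch below assumes, purely for illustration, that progress should accrue evenly between the start and end dates. It flags an indicator as behind when the share of the planned change achieved trails the share of time elapsed by more than a tolerance; the function name, the 10-percentage-point tolerance, and the dates are hypothetical.

```python
from datetime import date


def progress_status(baseline: float, target: float, actual: float,
                    start: date, end: date, as_of: date,
                    tolerance: float = 0.10) -> str:
    """Return 'on track' or 'behind' under a linear-trajectory assumption."""
    if end <= start or target == baseline:
        raise ValueError("need end after start and a target different from baseline")
    # Share of the project period elapsed, limited to the range 0..1.
    elapsed = (as_of - start).days / (end - start).days
    elapsed = min(max(elapsed, 0.0), 1.0)
    # Share of the planned change achieved so far.
    achieved = (actual - baseline) / (target - baseline)
    return "on track" if achieved >= elapsed - tolerance else "behind"


if __name__ == "__main__":
    # Hypothetical: 50 filtration systems planned during 2025, reviewed on 1 July.
    start, end, review = date(2025, 1, 1), date(2025, 12, 31), date(2025, 7, 1)
    print(progress_status(0, 50, 15, start, end, review))  # behind
    print(progress_status(0, 50, 25, start, end, review))  # on track
```

Many activities do not progress linearly (installations may cluster after procurement, for example), so in practice the expected trajectory would come from the project workplan rather than a straight line.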

Throughout the implementation and monitoring of the evaluation plan, it is important to maintain open lines of communication among stakeholders and to address any issues or challenges that arise in a timely manner. This may involve revising the M&E plan as needed to ensure that it remains aligned with project objectives and is effective in assessing progress towards achieving them.

Overall, implementing and monitoring the evaluation plan is critical for the success of the project, as it provides valuable insights for project improvement and decision-making. By following the protocols and procedures defined in the M&E plan and monitoring progress towards achieving project objectives, you can ensure that the project is on track to achieve its intended outcomes and objectives.

Step 13 Using Results to Make Informed Decisions

Using results to make informed decisions is a critical step in the monitoring and evaluation process. This step involves analyzing the data collected through the evaluation plan and using it to make informed decisions about the project’s future direction. It also involves disseminating the findings to relevant stakeholders, including donors, to demonstrate the project’s impact and to inform future funding decisions.

To plan for dissemination and donor reporting, it is important to first analyze the data collected through the evaluation plan and identify the key findings and insights. This includes identifying any successes or areas for improvement and determining what actions can be taken to address these.

Once the key findings have been identified, it is necessary to develop a plan for disseminating the findings to relevant stakeholders. This may include developing reports, presentations, or other materials that summarize the key findings and insights in a clear and concise manner. It may also involve identifying the stakeholders who will receive the findings and determining the best way to reach them.

In addition to disseminating the findings, it is important to report the results to donors to demonstrate the project’s impact and to inform future funding decisions. This may involve developing donor reports that summarize the key findings and insights and provide an overview of the project’s progress towards achieving its objectives.

Overall, using results to make informed decisions is critical for the success of the project, as it ensures that the project is on track to achieve its intended outcomes and objectives. By planning for dissemination and donor reporting, you can demonstrate the project’s impact and ensure that it receives continued support from donors and other stakeholders.

To Conclude

The 13 steps outlined in this article provide a comprehensive framework for developing a robust monitoring and evaluation plan that can effectively assess progress towards achieving project objectives and provide valuable insights for project improvement and decision-making.

Key steps in developing a monitoring and evaluation plan include defining the purpose and scope of the evaluation, identifying stakeholders and their roles and responsibilities, developing SMART evaluation objectives, identifying indicators to measure progress, defining data collection methods and timelines, creating an analysis plan and reporting templates, reviewing the M&E plan, implementing and monitoring the evaluation plan, using results to make informed decisions, and planning for dissemination and donor reporting.

By following these steps, organizations can ensure that their monitoring and evaluation plans are practical, feasible, and effective in assessing progress towards achieving project objectives. Additionally, the insights gained from monitoring and evaluation can help to inform decision-making and improve project outcomes, leading to greater success and impact. Ultimately, investing in monitoring and evaluation is crucial for any organization that wants to achieve its goals and have a meaningful impact in the world.

Finally, remember that no project plan is complete without sound monitoring and evaluation strategies. Without them, you cannot track the progress of your project or measure its success. Take the time to develop your monitoring and evaluation plan carefully, as it will help ensure that your project succeeds and achieves its desired outcomes.


Monitoring and Evaluation: Tools, Methods and Approaches

The purpose of this M&E Overview is to strengthen awareness and interest in M&E, and to clarify what it entails. You will find an overview of a sample of M&E tools, methods, and approaches outlined here, including their purpose and use; advantages and disadvantages; costs, skills, and time required; and key references. Those illustrated here include several data collection methods, analytical frameworks, and types of evaluation and review. The M&E Overview discusses:

- Performance indicators

- The logical framework approach

- Theory-based evaluation

- Formal surveys

- Rapid appraisal methods

- Participatory methods

- Public expenditure tracking surveys

- Cost-benefit and cost-effectiveness analysis

- Impact evaluation

This list is not comprehensive, nor is it intended to be. Some of these tools and approaches are complementary; some are substitutes. Some have broad applicability, while others are quite narrow in their uses. The choice of which is appropriate for any given context will depend on a range of considerations. These include the uses for which M&E is intended, the main stakeholders who have an interest in the M&E findings, the speed with which the information is needed, and the cost.


The Compass for SBC

Helping you Implement Effective Social and Behavior Change Projects

How-To-Guide

How to Develop a Monitoring and Evaluation Plan


Introduction


What is a Monitoring and Evaluation Plan?

A monitoring and evaluation (M&E) plan is a document that helps to track and assess the results of the interventions throughout the life of a program. It is a living document that should be referred to and updated on a regular basis. While the specifics of each program’s M&E plan will look different, they should all follow the same basic structure and include the same key elements.

An M&E plan will include some documents that may have been created during the program planning process, and some that will need to be newly created. For example, elements such as the logic model/logical framework, theory of change, and monitoring indicators may have already been developed with input from key stakeholders and/or the program donor. The M&E plan takes those documents and develops a further plan for their implementation.

Why develop a Monitoring and Evaluation Plan?

It is important to develop an M&E plan before beginning any monitoring activities so that there is a clear plan for what questions about the program need to be answered. It will help program staff decide how they are going to collect data to track indicators, how monitoring data will be analyzed, and how the results of data collection will be disseminated, both to the donor and internally among staff members for program improvement. Remember, M&E data alone is not useful until someone puts it to use! An M&E plan will help ensure that data is used efficiently to make programs as effective as possible and to report on results at the end of the program.

Who should develop a Monitoring and Evaluation Plan?

An M&E plan should be developed by the research team or staff with research experience, with inputs from program staff involved in designing and implementing the program.

When should a Monitoring and Evaluation Plan be developed?

An M&E plan should be developed at the beginning of the program when the interventions are being designed. This will ensure there is a system in place to monitor the program and evaluate success.

Who is this guide for?

This guide is designed primarily for program managers or personnel who are not trained researchers themselves but who need to understand the rationale and process of conducting research. It can help managers make the case for research and ensure that research staff have adequate resources to conduct it, so that the program is evidence-based and its results can be tracked over time and measured at the end of the program.

Learning Objectives

After completing the steps for developing an M&E plan, the team will:

- Identify the elements and steps of an M&E plan

- Explain how to create an M&E plan for an upcoming program

- Describe how to advocate for the creation and use of M&E plans for a program/organization

Estimated Time Needed

Developing an M&E plan can take up to a week, depending on the size of the team available to develop the plan, and whether a logic model and theory of change have already been designed.

Prerequisites

How to Develop a Logic Model

Step 1: Identify Program Goals and Objectives

The first step in creating an M&E plan is to identify the program goals and objectives. If the program already has a logic model or theory of change, the program goals are most likely already defined. If not, the M&E plan is a good place to define them.

Defining program goals starts with answering three questions:

- What problem is the program trying to solve?

- What steps are being taken to solve that problem?

- How will program staff know when the program has been successful in solving the problem?

Answering these questions will help identify what the program is expected to do and how staff will know whether or not it worked. For example, for a condom distribution program for adolescents, the answers might look like this:

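| Question | Answer |
| --- | --- |
| What problem is the program trying to solve? | High rates of unintended pregnancy and sexually transmitted infection (STI) transmission among youth ages 15-19 |
| What steps are being taken to solve that problem? | Promote and distribute free condoms in the community at youth-friendly locations |
| How will program staff know when the program has been successful? | Lower rates of unintended pregnancy and STI transmission among youth ages 15-19; a higher percentage of condom use among sexually active youth |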

From these answers, it can be seen that the overall program goal is to reduce the rates of unintended pregnancy and STI transmission in the community.

It is also necessary to develop intermediate outputs and objectives for the program to help track successful steps toward the overall program goal. More information about identifying these objectives can be found in the logic model guide.

Step 2: Define Indicators

Once the program’s goals and objectives are defined, it is time to define indicators for tracking progress towards achieving those goals. Program indicators should be a mix of those that measure process, or what is being done in the program, and those that measure outcomes.

Process indicators track the progress of the program. They help to answer the question, “Are activities being implemented as planned?” Some examples of process indicators are:

- Number of trainings held with health providers

- Number of outreach activities conducted at youth-friendly locations

- Number of condoms distributed at youth-friendly locations

- Percent of youth reached with condom use messages through the media

Outcome indicators track how successful program activities have been at achieving program objectives. They help to answer the question, “Have program activities made a difference?” Some examples of outcome indicators are:

- Percent of youth using condoms during first intercourse

- Number and percent of trained health providers offering family planning services to youth

- Number and percent of new STI infections among youth.

These are just a few examples of indicators that can be created to track a program’s success. More information about creating indicators can be found in the How to Develop Indicators guide.
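To keep the mix of process and outcome indicators visible as the list grows, each indicator can be recorded together with its type. The Python sketch below is a minimal illustration under that assumption; `IndicatorType`, `Indicator`, and `has_indicator_mix` are hypothetical names, not part of any M&E software, and the indicators are taken from the examples above.

```python
from dataclasses import dataclass
from enum import Enum


class IndicatorType(Enum):
    PROCESS = "process"   # "Are activities being implemented as planned?"
    OUTCOME = "outcome"   # "Have program activities made a difference?"


@dataclass(frozen=True)
class Indicator:
    name: str
    kind: IndicatorType


# Example indicators from the condom distribution program above.
INDICATORS = [
    Indicator("Number of trainings held with health providers", IndicatorType.PROCESS),
    Indicator("Number of condoms distributed at youth-friendly locations", IndicatorType.PROCESS),
    Indicator("Percent of youth using condoms during first intercourse", IndicatorType.OUTCOME),
    Indicator("Number and percent of new STI infections among youth", IndicatorType.OUTCOME),
]


def has_indicator_mix(indicators: list[Indicator]) -> bool:
    """Return True if the list contains at least one process and one outcome indicator."""
    kinds = {ind.kind for ind in indicators}
    return IndicatorType.PROCESS in kinds and IndicatorType.OUTCOME in kinds


if __name__ == "__main__":
    print(has_indicator_mix(INDICATORS))      # True
    print(has_indicator_mix(INDICATORS[:2]))  # False: process indicators only
```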

Step 3: Define Data Collection Methods and Timeline

After creating monitoring indicators, it is time to decide on methods for gathering data and how often each type of data will be recorded to track indicators. This should be a conversation among program staff, stakeholders, and donors. These decisions will have important implications for how data are collected and how the results will be reported.

The source of monitoring data depends largely on what each indicator is trying to measure. The program will likely need multiple data sources to answer all of the programming questions. The table below gives some examples of what data can be collected and how.

| What is being measured | Example data sources and methods |
| --- | --- |
| Implementation process and progress | Program-specific M&E tools |
| Service statistics | Facility logs, referral cards |
| Reach and success of the program intervention within audience subgroups or communities | Small surveys with primary audience(s), such as provider interviews or client exit interviews |
| Reach of media interventions involved in the program | Media ratings data, broadcaster logs, Google Analytics, omnibus surveys |
| Reach and success of the program intervention at the population level | Nationally representative surveys, omnibus surveys, DHS data |
| Qualitative data about the outcomes of the intervention | Focus groups, in-depth interviews, listener/viewer group discussions, individual media diaries, case studies |

Once it is determined how data will be collected, it is also necessary to decide how often it will be collected. This will be affected by donor requirements, available resources, and the timeline of the intervention. Some data will be continuously gathered by the program (such as the number of trainings), but these will be recorded every six months or once a year, depending on the M&E plan. Other types of data depend on outside sources, such as clinic and DHS data.

After all of these questions have been answered, a table like the one below can be made to include in the M&E plan. This table can be printed out and all staff working on the program can refer to it so that everyone knows what data is needed and when.

| Indicator | Data source | Timing |
| --- | --- | --- |
| Number of trainings held with health providers | Training attendance sheets | Every 6 months |
| Number of outreach activities conducted at youth-friendly locations | Activity sheet | Every 6 months |
| Number of condoms distributed at youth-friendly locations | Condom distribution sheet | Every 6 months |
| Percent of youth receiving condom use messages through the media | Population-based surveys | Annually |
| Percent of adolescents reporting condom use during first intercourse | DHS or other population-based survey | Annually |
| Number and percent of trained health providers offering family planning services to adolescents | Facility logs | Every 6 months |
| Number and percent of new STI infections among adolescents | DHS or other population-based survey | Annually |
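Once collection frequencies are recorded, a short script can list which indicators are due at each reporting point. This sketch assumes months are counted from the program start and that "Every 6 months" and "Annually" mean 6- and 12-month intervals; `SCHEDULE` and `indicators_due` are hypothetical names.

```python
# Collection schedule from the table above: indicator -> (data source, interval in months).
SCHEDULE = {
    "Number of trainings held with health providers": ("Training attendance sheets", 6),
    "Number of outreach activities conducted at youth-friendly locations": ("Activity sheet", 6),
    "Number of condoms distributed at youth-friendly locations": ("Condom distribution sheet", 6),
    "Percent of youth receiving condom use messages through the media": ("Population-based surveys", 12),
    "Percent of adolescents reporting condom use during first intercourse": ("DHS or other population-based survey", 12),
    "Number and percent of trained health providers offering family planning services to adolescents": ("Facility logs", 6),
    "Number and percent of new STI infections among adolescents": ("DHS or other population-based survey", 12),
}


def indicators_due(month: int) -> list[str]:
    """List indicators whose collection interval divides `month` (months since program start)."""
    if month <= 0:
        return []
    return [name for name, (_source, interval) in SCHEDULE.items() if month % interval == 0]


if __name__ == "__main__":
    for month in (6, 12):
        print(f"Month {month}: {len(indicators_due(month))} indicators due")
```

Run as a script, it prints `Month 6: 4 indicators due` and `Month 12: 7 indicators due`, because every indicator falls due at the annual point.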

Step 4: Identify M&E Roles and Responsibilities

The next element of the M&E plan is a section on roles and responsibilities. It is important to decide from the early planning stages who is responsible for collecting the data for each indicator. This will probably be a mix of M&E staff, research staff, and program staff. Everyone will need to work together to get data collected accurately and in a timely fashion.

Data management roles should be decided with input from all team members so everyone is on the same page and knows which indicators they are assigned. This way when it is time for reporting there are no surprises.

An easy way to add this to the M&E plan is to expand the indicators table with a column showing who is responsible for each indicator, as shown below.

| Indicator | Data source | Timing | Person responsible |
| --- | --- | --- | --- |
| Number of trainings held with health providers | Training attendance sheets | Every 6 months | Activity manager |
| Number of outreach activities conducted at youth-friendly locations | Activity sheet | Every 6 months | Activity manager |
| Number of condoms distributed at youth-friendly locations | Condom distribution sheet | Every 6 months | Activity manager |
| Percent of youth receiving condom use messages through the media | Population-based survey | Annually | Research assistant |
| Percent of adolescents reporting condom use during first intercourse | DHS or other population-based survey | Annually | Research assistant |
| Number and percent of trained health providers offering family planning services to adolescents | Facility logs | Every 6 months | Field M&E officer |
| Number and percent of new STI infections among adolescents | DHS or other population-based survey | Annually | Research assistant |
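At reporting time, each team member can be handed their own list of indicators by inverting the indicator-to-person column. The sketch below is a minimal Python illustration, assuming the responsibilities in the table above; `RESPONSIBLE` and `assignments_by_person` are hypothetical names.

```python
from collections import defaultdict

# Indicator -> staff member responsible, as in the expanded table above.
RESPONSIBLE = {
    "Number of trainings held with health providers": "Activity manager",
    "Number of outreach activities conducted at youth-friendly locations": "Activity manager",
    "Number of condoms distributed at youth-friendly locations": "Activity manager",
    "Percent of youth receiving condom use messages through the media": "Research assistant",
    "Percent of adolescents reporting condom use during first intercourse": "Research assistant",
    "Number and percent of trained health providers offering family planning services to adolescents": "Field M&E officer",
    "Number and percent of new STI infections among adolescents": "Research assistant",
}


def assignments_by_person(responsible: dict[str, str]) -> dict[str, list[str]]:
    """Invert an indicator -> person mapping so each person sees only their own indicators."""
    by_person: dict[str, list[str]] = defaultdict(list)
    for indicator, person in responsible.items():
        by_person[person].append(indicator)
    return dict(by_person)


if __name__ == "__main__":
    for person, indicators in assignments_by_person(RESPONSIBLE).items():
        print(f"{person}: {len(indicators)} indicator(s)")
```

For this table it reports three indicators each for the activity manager and the research assistant, and one for the field M&E officer.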

Step 5: Create an Analysis Plan and Reporting Templates

Once all of the data have been collected, someone will need to compile and analyze them to fill in a results table for internal review and external reporting. This is likely to be an in-house M&E manager or research assistant for the program.

The M&E plan should include a section with details about what data will be analyzed and how the results will be presented. Do research staff need to perform any statistical tests to get the needed answers? If so, what tests are they and what data will be used in them? What software program will be used to analyze data and make reporting tables? Excel? SPSS? These are important considerations.

Another good thing to include in the plan is a blank table for indicator reporting. These tables should outline the indicators, data, and time period of reporting. They can also include things like the indicator target, and how far the program has progressed towards that target. An example of a reporting table is below.

| Indicator | Baseline | Current value | Target | Progress toward target |
| --- | --- | --- | --- | --- |
| Number of trainings held with health providers | 0 | 5 | 10 | 50% |
| Number of outreach activities conducted at youth-friendly locations | 0 | 2 | 6 | 33% |
| Number of condoms distributed at youth-friendly locations | 0 | 25,000 | 50,000 | 50% |
| Percent of youth receiving condom use messages through the media | 5% | 35% | 75% | 47% |
| Percent of adolescents reporting condom use during first intercourse | 20% | 30% | 80% | 38% |
| Number and percent of trained health providers offering family planning services to adolescents | 20 | 106 | 250 | 80% |
| Number and percent of new STI infections among adolescents | 11,000 (22%) | 10,000 (20%) | 10% reduction in 5 years | 20% |
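In the first five rows of the reporting table, the last column appears to equal the current value divided by the target, rounded to a whole percent (for example, 35% / 75% ≈ 47%). The Python sketch below reproduces that calculation and, for comparison, a baseline-adjusted alternative, (current - baseline) / (target - baseline), which measures how much of the planned change has been achieved and also works for indicators that are meant to decrease. Which method is used is a choice to record in the analysis plan; the function names are hypothetical.

```python
def progress_toward_target(current: float, target: float) -> float:
    """Share of the target reached, computed as current / target."""
    if target == 0:
        raise ValueError("target must be non-zero")
    return current / target


def baseline_adjusted_progress(baseline: float, current: float, target: float) -> float:
    """Share of the planned change achieved: (current - baseline) / (target - baseline)."""
    if target == baseline:
        raise ValueError("target must differ from baseline")
    return (current - baseline) / (target - baseline)


if __name__ == "__main__":
    # Percent of youth receiving condom use messages through the media:
    # baseline 5%, current 35%, target 75%.
    print(f"{progress_toward_target(35, 75):.0%}")         # 47%
    print(f"{baseline_adjusted_progress(5, 35, 75):.0%}")  # 43%
```

The two methods agree whenever the baseline is zero, as in the first three rows; they diverge once the baseline is non-zero, so the plan should state which one is being reported.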

Step 6: Plan for Dissemination and Donor Reporting

The last element of the M&E plan describes how and to whom data will be disseminated. Data for data’s sake should not be the ultimate goal of M&E efforts. Data should always be collected for particular purposes.

Consider the following:

- How will M&E data be used to inform staff and stakeholders about the success and progress of the program?

- How will it be used to help staff make modifications and course corrections, as necessary?

- How will the data be used to move the field forward and make program practices more effective?

The M&E plan should include plans for internal dissemination among the program team, as well as wider dissemination among stakeholders and donors. For example, a program team may want to review data on a monthly basis to make programmatic decisions and develop future workplans, while meetings with the donor to review data and program progress might occur quarterly or annually. Dissemination of printed or digital materials might occur at more frequent intervals. These options should be discussed with stakeholders and your team to determine reasonable expectations for data review and to develop plans for dissemination early in the program. If these plans are in place from the beginning and become routine for the project, meetings and other kinds of periodic review have a much better chance of being productive ones that everyone looks forward to.

After following these 6 steps, the outline of the M&E plan should look something like this:

- Program goals and objectives

- Logic model, logical framework, and/or theory of change

- Table with data sources, collection timing, and staff member responsible

- Description of each staff member’s role in M&E data collection, analysis, and/or reporting

- Analysis plan

- Reporting template table

- Description of how and when M&E data will be disseminated internally and externally

M&E Planning: Template for Indicator Reporting

M&E Plan Indicators Table Template

M&E Plan: Data Sources Table Example

Tips & Recommendations

- Avoid over-promising what data can be collected. It is better to collect fewer data well than a lot of data poorly, so program staff should look carefully at the staff time and resource costs of data collection to see what is reasonable.

Glossary & Concepts

- Process indicators track how the implementation of the program is progressing. They help to answer the question, “Are activities being implemented as planned?”

- Outcome indicators track how successful program activities have been at achieving program goals. They help to answer the question, “Have program activities made a difference?”

Resources and References

Evaluation Toolbox. Step by Step Guide to Create your M&E Plan. Retrieved from: http://evaluationtoolbox.net.au/index.php?option=com_content&view=article&id=23:create-m-and-e-plan&catid=8:planning-your-evaluation&Itemid=44

infoDev. Developing a Monitoring and Evaluation Plan for ICT for Education. Retrieved from: https://www.infodev.org/infodev-files/resource/InfodevDocuments_287.pdf

FHI360. Developing a Monitoring and Evaluation Work Plan. Retrieved from: http://www.fhi360.org/sites/default/files/media/documents/Monitoring%20HIV-AIDS%20Programs%20(Facilitator)%20-%20Module%203.pdf

Banner Photo: © 2012 Akintunde Akinleye/NURHI, Courtesy of Photoshare

ABOUT HOW TO GUIDES

SBC How-to Guides are short guides that provide step-by-step instructions on how to perform core social and behavior change tasks. From formative research through monitoring and evaluation, these guides cover each step of the SBC process, offer useful hints, and include important resources and references.


Planning for Monitoring and Evaluation

Philanthropy University and fhi360 via Independent Help

How will you measure your project’s success? This course will help you answer this question by introducing the basics of monitoring and evaluation (M&E). In this course, you will learn how successful projects plan for data collection, management, analysis, and use. As you complete the course assignments, you will create an M&E plan for your own project.

Module 1: Introduction to Monitoring and Evaluation

Link M&E to your project’s design

Module 2: Linking M&E to Project Design

Define the indicators that you will measure

Module 3: Identifying Indicators & Targets

Choose appropriate data collection methods

Module 4: Data Collection

Create clear, useful data collection tools

Module 5: Roles & Responsibilities

Assign M&E roles and responsibilities


tools4dev Practical tools for international development

How to write a monitoring and evaluation (M&E) framework

Note: An M&E framework can also be called an evaluation matrix.

One of the most popular downloads on tools4dev is our M&E framework template. We’ve had lots of questions from people on how to use specific parts of the template, so we’ve decided to put together this short how-to guide.

Choose your indicators

The first step in writing an M&E framework is to decide which indicators you will use to measure the success of your program. This is a very important step, so you should try to involve as many people as possible to get different perspectives.

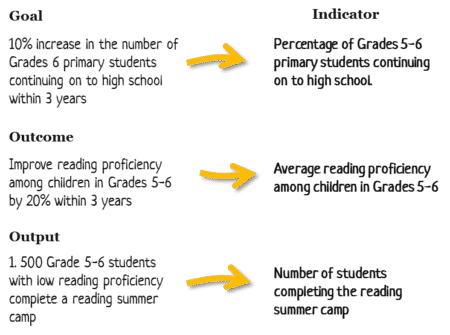

You need to choose indicators for each level of your program: outputs, outcomes and goal (for more information on these levels see our articles on how to design a program and logical frameworks). There can be more than one indicator for each level, although you should try to keep the total number of indicators manageable.

Each indicator should be:

- Directly related to the output, outcome or goal listed on the problem tree or logframe.

- Something that you can measure accurately, using either qualitative or quantitative methods, with your available resources.

- If possible, a standard indicator that is commonly used for this type of program. For example, poverty could be measured using the Progress Out of Poverty Index. Using standard indicators can be better because they are already well defined, there are tools available to measure them, and you will be able to compare your results to other programs or national statistics.

Here is an example of some indicators for the goal, outcome and output of an education program:

Some organisations have very strict rules about how the indicators must be written (for example, it must always start with a number, or must always contain an adjective). In my experience these rules usually lead to indicators that are convoluted or don’t make sense. My advice is just to make sure the indicators are written in a way where everyone involved in the project (including the donor) can understand them.

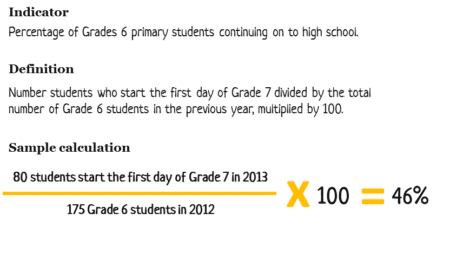

Define each indicator

Once you have chosen your indicators you need to write a definition for each one. The definition describes exactly how the indicator is calculated. If you don’t have definitions there is a serious risk that indicators might be calculated differently at different times, which means the results can’t be compared.

Here is an example of how one indicator in the education program is defined:

After writing the definition of each indicator you also need to identify where the data will come from (the “data source”). Common sources are baseline and endline surveys, monitoring reports, and existing information systems. You also need to decide how frequently it will be measured (monthly, quarterly, annually, etc.).
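A definition is easiest to apply the same way every time when it spells out the numerator and denominator alongside the data source and frequency. The Python sketch below encodes a hypothetical definition for the education example's indicator (the percentage of Grade 6 students continuing on to Grade 7); the `IndicatorDefinition` class, the wording of the numerator and denominator, the data source, and the counts are all illustrative assumptions, not the example's actual definition.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class IndicatorDefinition:
    """A written indicator definition: what is counted, where the data come from, and how often."""
    name: str
    numerator: str
    denominator: str
    data_source: str
    frequency: str

    def calculate(self, numerator_count: int, denominator_count: int) -> float:
        """Apply the definition to raw counts and return a percentage."""
        if denominator_count <= 0:
            raise ValueError("denominator must be positive")
        if not 0 <= numerator_count <= denominator_count:
            raise ValueError("numerator must be between 0 and the denominator")
        return 100 * numerator_count / denominator_count


# Hypothetical definition: the wording and data source are assumptions for illustration.
GRADE7_TRANSITION = IndicatorDefinition(
    name="Percentage of Grade 6 students continuing on to Grade 7",
    numerator="Students enrolled in Grade 7 this year who completed Grade 6 at a target school last year",
    denominator="Students who completed Grade 6 at a target school last year",
    data_source="School enrollment records",
    frequency="Annually",
)

if __name__ == "__main__":
    # Hypothetical counts: 150 of 200 Grade 6 completers enrolled in Grade 7.
    print(GRADE7_TRANSITION.calculate(150, 200))  # 75.0
```

Because the calculation lives next to the written definition, the baseline, midline and endline values are all computed the same way.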

Measure the baseline and set the target

Before you start your program you need to measure the starting value of each indicator – this is called the “baseline”. In the education example above that means you would need to measure the current percentage of Grade 6 students continuing on to Grade 7 (before you start your program).

In some cases you will need to do a survey to measure the baseline. In other cases you might have existing data available. In that case you need to make sure the existing data use the same definition that you use to calculate the indicator.

Once you know the baseline you need to set a target for improvement. Before you set the target it’s important to do some research on what a realistic target actually is. Many people set unachievable targets without realising it. For example, I once worked on a project where the target was a 25% reduction in the child mortality rate within 12 months. However, a brief review of other child health programs showed that even the best programs only managed a 10-20% reduction within 5 years.
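One rough way to compare targets set over different time spans is to convert each total reduction R over n years into an equivalent constant annual rate, r = 1 - (1 - R)^(1/n). The Python sketch below applies this to the child mortality example; treating the reduction as compounding at a constant yearly rate is a simplifying assumption, and the function names are hypothetical.

```python
def annual_reduction_rate(total_reduction: float, years: float) -> float:
    """Constant yearly reduction that compounds to `total_reduction` (e.g. 0.20 for 20%) over `years`."""
    if not 0 <= total_reduction < 1:
        raise ValueError("total_reduction must be in [0, 1)")
    if years <= 0:
        raise ValueError("years must be positive")
    return 1 - (1 - total_reduction) ** (1 / years)


def exceeds_benchmark(target_reduction: float, target_years: float,
                      best_reduction: float, best_years: float) -> bool:
    """True if the target implies a faster annual reduction than the best comparable programs achieved."""
    return annual_reduction_rate(target_reduction, target_years) > annual_reduction_rate(best_reduction, best_years)


if __name__ == "__main__":
    target = annual_reduction_rate(0.25, 1)  # 25% within 12 months
    best = annual_reduction_rate(0.20, 5)    # best programs: 20% within 5 years
    print(f"target {target:.1%} per year vs best {best:.1%} per year")
    print(exceeds_benchmark(0.25, 1, 0.20, 5))  # True
```

Here the target implies a 25.0% reduction per year, against roughly 4.4% per year for the best programs reviewed, which is a strong signal that the target needs revisiting.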

Identify who is responsible and where the results will be reported

The final step is to decide who will be responsible for measuring each indicator. Output indicators are often measured by field staff or program managers, while outcome and goal indicators may be measured by evaluation consultants or even national agencies.

You also need to decide where the results for each indicator will be reported. This could be in your monthly program reports, annual donor reports, or on your website. Indicator results are used to assess whether the program is working or not, so it’s very important that decision makers and stakeholders (not just the donor) have access to them as soon as possible.

Put it all into the template

Once you have completed all these steps, you’re now ready to put everything into the M&E framework template.

Download the M&E framework template and example


About Piroska Bisits Bullen


The Importance of Monitoring and Evaluation for Decision-Making

- First Online: 08 June 2021


- Nadini Persaud (ORCID: 0000-0003-1827-2867)

- Ruby Dagher (ORCID: 0000-0001-5211-2125)


Drawing on a discussion of the role of monitoring and evaluation (M&E), the differences between monitoring and evaluation, and the various types of evaluation that can be undertaken, this chapter reviews the tools that evaluators can use to assess advancements in the SDGs, lessons learned, accountability, and the power of the interconnected nature of the SDGs. It also critically examines the challenges that the evaluation field faces.



Author information

Authors and affiliations

Nadini Persaud, University of the West Indies, St. Michael, Barbados

Ruby Dagher, University of Ottawa, Ottawa, ON, Canada

Corresponding author: Nadini Persaud.


Copyright information

© 2021 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this chapter

Persaud, N., Dagher, R. (2021). The Importance of Monitoring and Evaluation for Decision-Making. In: The Role of Monitoring and Evaluation in the UN 2030 SDGs Agenda. Palgrave Macmillan, Cham. https://doi.org/10.1007/978-3-030-70213-7_4


DOI: https://doi.org/10.1007/978-3-030-70213-7_4

Published: 08 June 2021

Publisher Name: Palgrave Macmillan, Cham

Print ISBN: 978-3-030-70212-0

Online ISBN: 978-3-030-70213-7

eBook Packages: Political Science and International Studies, Political Science and International Studies (R0)


Designing Qualitative Research for Monitoring and Evaluation (M&E)


In this 2.5-hour Designing Qualitative Research for Monitoring and Evaluation (M&E) e-course, you will learn how to design and plan for a rigorous qualitative study or routine monitoring activity using the step-by-step guidance provided by the Qualitative Inquiry Planning Sheet (QuIPS).

After completing this course, you will be able to:

- design a qualitative inquiry that aligns with your project’s existing knowledge and evidence gaps;

- identify the appropriate qualitative methodology and sampling for your research;

- develop a plan for data collection, management, and analysis;

- address research limitations, risks, and your ethical review; and

- create a plan for engaging key stakeholders in the design and dissemination of findings.

The course includes:

Module 1: Pre-Design Work

- Identify evidence gaps based on source documents.

- Identify key people who can support, advise, and review your inquiry work.

- Identify key stakeholders who would benefit from the results of your qualitative inquiry.

Module 2: Defining the Purpose, Objectives, and Inquiry Questions

- Identify the purpose and objectives of your inquiry.

- Define the questions you hope to answer.

- Determine the type of data you will collect to answer these questions.

Module 3: Designing the Methodology

- Learn about a variety of qualitative inquiry methods.

- Identify which methods and tools are most appropriate for your qualitative inquiry.

- Learn how to choose a sampling strategy.

Module 4: Planning for Data Collection and Analysis

- Develop an implementation plan for data collection.

- Understand the process for qualitative data analysis and be able to articulate your data analysis plan.

- Understand the best way to share and use your qualitative findings.

Module 5: Anticipating Limitations and Risks and Ethical Review

- Document the constraints and ethical research protocol of your qualitative inquiry.

Developed by IDEAL in close partnership with the USAID Bureau for Humanitarian Assistance (BHA), this course is based on the Qualitative Design Toolkit, which focuses its guidance on completing the QuIPS. To learn more, visit the e-course page on the FSN website, or access the course directly on the Humanitarian Leadership Academy's Kaya platform.

Page last updated August 23, 2024

7 steps for setting up a Monitoring & Evaluation system

Designing a Monitoring and Evaluation (M&E) system is a complex task that usually involves staff from different units. This article describes the development of such a system in 7 steps (1). Each step is linked with key questions intended to stimulate discussion of the current state of the M&E system in a project or organization. The 7 steps are therefore not a strict chronological sequence for developing an M&E system; all steps should be considered from the beginning:

- Step 1: Define the purpose and scope of the M&E system

- Step 2: Agree on outcomes and objectives (theory of change, including indicators)

- Step 3: Plan data collection and analysis (including development of tools)

- Step 4: Plan the organization of the data

- Step 5: Plan the information flow and reporting requirements (how and for whom?)

- Step 6: Plan reflection processes and events

- Step 7: Plan the necessary resources and skills
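Because the seven steps are meant to be considered together from the start rather than worked through in order, a gap analysis can be as simple as recording, for each step, whether the current system already covers it. The sketch below illustrates that idea under stated assumptions: the `StepStatus` record, the `find_gaps` helper, and the yes/no scoring are hypothetical and are not the structure of the “M&E gap analysis” tool itself.

```python
from dataclasses import dataclass

# The seven steps as listed in the article.
STEPS = [
    "Define the purpose and scope of the M&E system",
    "Agree on outcomes and objectives (theory of change, including indicators)",
    "Plan data collection and analysis (including development of tools)",
    "Plan the organization of the data",
    "Plan the information flow and reporting requirements (how and for whom?)",
    "Plan reflection processes and events",
    "Plan the necessary resources and skills",
]


@dataclass
class StepStatus:
    # Hypothetical self-assessment record for one step.
    step: str
    addressed: bool
    note: str = ""


def find_gaps(statuses: list[StepStatus]) -> list[str]:
    """Return the steps the current system does not yet cover.

    Steps marked as not addressed and steps not assessed at all both count
    as gaps. Results follow the article's listing order for readability only;
    the steps are not a strict sequence.
    """
    addressed = {s.step for s in statuses if s.addressed}
    return [step for step in STEPS if step not in addressed]


if __name__ == "__main__":
    # Illustrative assessment for a hypothetical project (step 4 not assessed).
    assessment = [
        StepStatus(STEPS[0], True, "Scope agreed with project lead"),
        StepStatus(STEPS[1], True),
        StepStatus(STEPS[2], False, "No data collection tools yet"),
        StepStatus(STEPS[4], True),
        StepStatus(STEPS[5], False),
        StepStatus(STEPS[6], True),
    ]
    notes = {s.step: s.note for s in assessment}
    for i, step in enumerate(find_gaps(assessment), start=1):
        detail = notes.get(step, "")
        print(f"Gap {i}: {step}" + (f" ({detail})" if detail else ""))
```

Treating unassessed steps as gaps is a deliberate choice here: it keeps a step from being silently skipped just because nobody discussed it.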

Download the tool “M&E gap analysis” to work on these 7 steps.

For more information on designing a project-level database for your M&E activities, take a look at "The complete guide for a project level M&E database".

To see what a database for development assistance projects might look like, take a look at this database template.

To explore a database for indicator tracking in global M&E, see this database template.


This guide is also available in French and Spanish

Step 1: Define the purpose and scope of the M&E system

It is crucial to define the scope of the M&E system at the very beginning. A key question is whether the system should be impact-oriented, monitoring higher-level impacts, or whether the project team is satisfied with recording whether activities are properly implemented and what results they produce. Either choice can be appropriate, depending on the circumstances and on what is needed to improve the project as effectively as possible. High-level, results-oriented monitoring is usually preferable, but it can fail if the team lacks the capacity to carry it out. It should also be clear from the outset who will work with the M&E findings later on.
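The scoping decision can be made concrete by tagging each indicator with the level of the results chain it measures. The sketch below assumes a conventional four-level chain (activity, output, outcome, impact), which the article does not spell out, along with hypothetical indicator names and figures. An implementation-focused system keeps only the lower levels, while an impact-oriented one tracks all of them. Progress is computed as the share of the baseline-to-target distance achieved so far, which is one common convention among several.

```python
from dataclasses import dataclass
from enum import IntEnum


class Level(IntEnum):
    # Assumed results-chain levels, ordered from lowest to highest.
    ACTIVITY = 1
    OUTPUT = 2
    OUTCOME = 3
    IMPACT = 4


@dataclass
class Indicator:
    name: str
    level: Level
    baseline: float
    target: float
    actual: float

    def progress(self) -> float:
        """Share of the baseline-to-target distance achieved (1.0 means target met)."""
        if self.target == self.baseline:
            raise ValueError(f"{self.name}: target equals baseline")
        return (self.actual - self.baseline) / (self.target - self.baseline)


def in_scope(indicators: list[Indicator], highest: Level) -> list[Indicator]:
    """Keep only indicators at or below the highest level the system will monitor."""
    return [ind for ind in indicators if ind.level <= highest]


if __name__ == "__main__":
    # Hypothetical indicators for a farmer-training project.
    indicators = [
        Indicator("Training sessions held", Level.ACTIVITY, 0, 20, 15),
        Indicator("Farmers trained", Level.OUTPUT, 0, 400, 240),
        Indicator("Farmers adopting new practices (%)", Level.OUTCOME, 10, 50, 20),
        Indicator("Household income change (%)", Level.IMPACT, 0, 15, 3),
    ]
    # Implementation-focused scope: activities and outputs only.
    for ind in in_scope(indicators, Level.OUTPUT):
        print(f"{ind.name}: {ind.progress():.0%}")
    # Impact-oriented scope: every level of the chain.
    for ind in in_scope(indicators, Level.IMPACT):
        print(f"{ind.name}: {ind.progress():.0%}")
```

Widening the scope here costs nothing in code, but in practice each higher-level indicator needs its own data collection, which is why the article ties this choice to the team's available capabilities.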

A challenge in this first step is engaging staff and convincing them that the additional time and effort needed to set up an M&E system is worthwhile, because it improves project steering and thus the quality of project or program results. Many different activities can serve this purpose. For some project or program teams, a workshop or a presentation may help convince them of the usefulness of monitoring; in other cases, face-to-face discussions may be more appropriate. The approach must ultimately be decided by the person responsible for M&E and depends on the resources available and the key people involved. Consulting these key people beforehand can be useful.