Research Topics & Ideas: Data Science

50 Topic Ideas To Kickstart Your Research Project

If you’re just starting out exploring data science-related topics for your dissertation, thesis or research project, you’ve come to the right place. In this post, we’ll help kickstart your research by providing a hearty list of data science and analytics-related research ideas, including examples from recent studies.

PS – This is just the start…

We know it’s exciting to run through a list of research topics, but please keep in mind that this list is just a starting point. The topic ideas provided here are intentionally broad and generic, so you will need to develop them further. Nevertheless, they should inspire some ideas for your project.

To develop a suitable research topic, you’ll need to identify a clear and convincing research gap, and a viable plan to fill that gap. If this sounds foreign to you, check out our free research topic webinar that explores how to find and refine a high-quality research topic, from scratch. Alternatively, consider our 1-on-1 coaching service.

Data Science-Related Research Topics

- Developing machine learning models for real-time fraud detection in online transactions.

- The use of big data analytics in predicting and managing urban traffic flow.

- Investigating the effectiveness of data mining techniques in identifying early signs of mental health issues from social media usage.

- The application of predictive analytics in personalizing cancer treatment plans.

- Analyzing consumer behavior through big data to enhance retail marketing strategies.

- The role of data science in optimizing renewable energy generation from wind farms.

- Developing natural language processing algorithms for real-time news aggregation and summarization.

- The application of big data in monitoring and predicting epidemic outbreaks.

- Investigating the use of machine learning in automating credit scoring for microfinance.

- The role of data analytics in improving patient care in telemedicine.

- Developing AI-driven models for predictive maintenance in the manufacturing industry.

- The use of big data analytics in enhancing cybersecurity threat intelligence.

- Investigating the impact of sentiment analysis on brand reputation management.

- The application of data science in optimizing logistics and supply chain operations.

- Developing deep learning techniques for image recognition in medical diagnostics.

- The role of big data in analyzing climate change impacts on agricultural productivity.

- Investigating the use of data analytics in optimizing energy consumption in smart buildings.

- The application of machine learning in detecting plagiarism in academic works.

- Analyzing social media data for trends in political opinion and electoral predictions.

- The role of big data in enhancing sports performance analytics.

- Developing data-driven strategies for effective water resource management.

- The use of big data in improving customer experience in the banking sector.

- Investigating the application of data science in fraud detection in insurance claims.

- The role of predictive analytics in financial market risk assessment.

- Developing AI models for early detection of network vulnerabilities.

Data Science Research Ideas (Continued)

- The application of big data in public transportation systems for route optimization.

- Investigating the impact of big data analytics on e-commerce recommendation systems.

- The use of data mining techniques in understanding consumer preferences in the entertainment industry.

- Developing predictive models for real estate pricing and market trends.

- The role of big data in tracking and managing environmental pollution.

- Investigating the use of data analytics in improving airline operational efficiency.

- The application of machine learning in optimizing pharmaceutical drug discovery.

- Analyzing online customer reviews to inform product development in the tech industry.

- The role of data science in crime prediction and prevention strategies.

- Developing models for analyzing financial time series data for investment strategies.

- The use of big data in assessing the impact of educational policies on student performance.

- Investigating the effectiveness of data visualization techniques in business reporting.

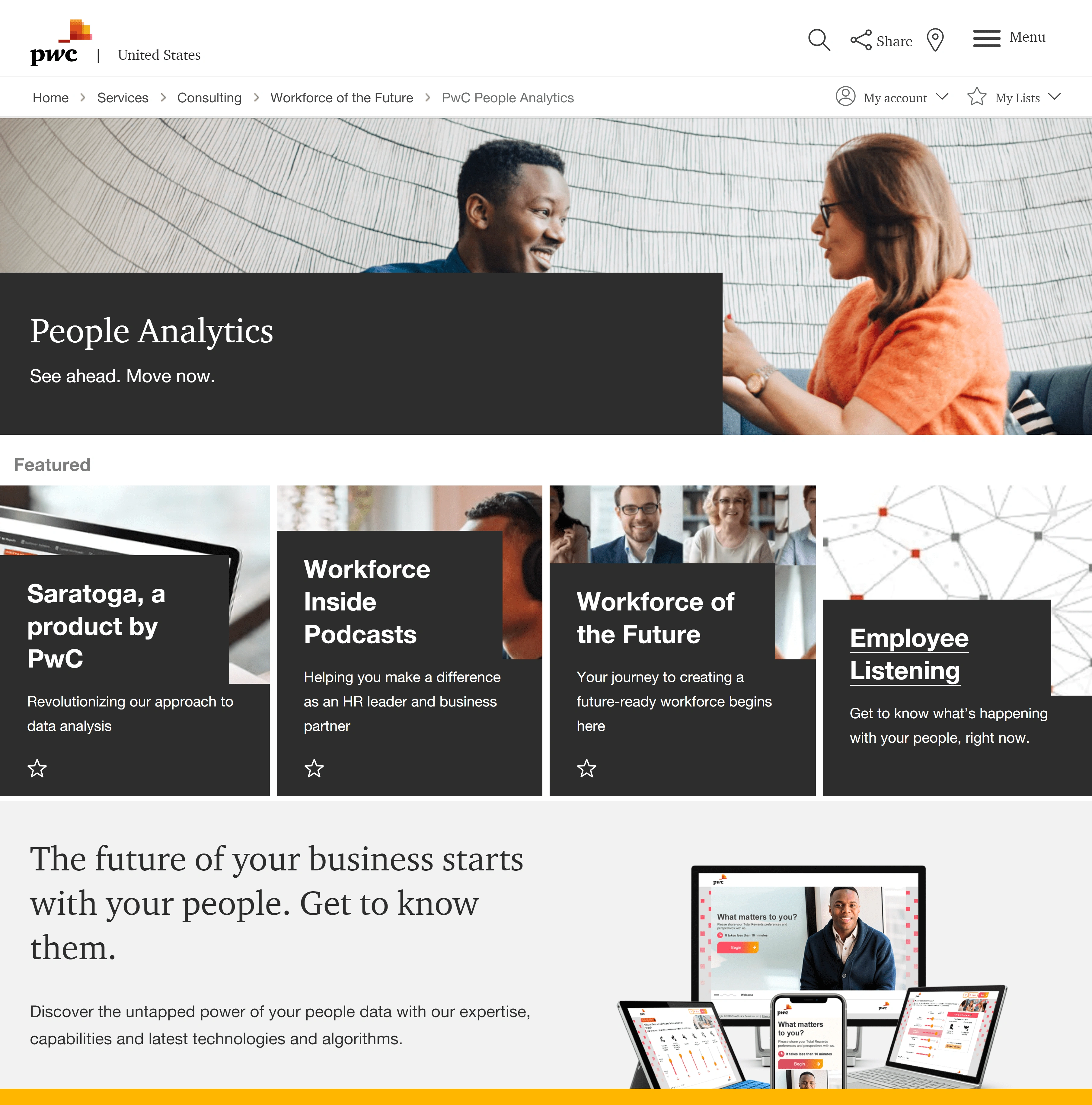

- The application of data analytics in human resource management and talent acquisition.

- Developing algorithms for anomaly detection in network traffic data.

- The role of machine learning in enhancing personalized online learning experiences.

- Investigating the use of big data in urban planning and smart city development.

- The application of predictive analytics in weather forecasting and disaster management.

- Analyzing consumer data to drive innovations in the automotive industry.

- The role of data science in optimizing content delivery networks for streaming services.

- Developing machine learning models for automated text classification in legal documents.

- The use of big data in tracking global supply chain disruptions.

- Investigating the application of data analytics in personalized nutrition and fitness.

- The role of big data in enhancing the accuracy of geological surveying for natural resource exploration.

- Developing predictive models for customer churn in the telecommunications industry.

- The application of data science in optimizing advertisement placement and reach.

Recent Data Science-Related Studies

While the ideas we’ve presented above are a decent starting point for finding a research topic, they are fairly generic and non-specific. So, it helps to look at actual studies in the data science and analytics space to see how this all comes together in practice.

Below, we’ve included a selection of recent studies to help refine your thinking. These are actual studies, so they can provide some useful insight as to what a research topic looks like in practice.

- Data Science in Healthcare: COVID-19 and Beyond (Hulsen, 2022)

- Auto-ML Web-application for Automated Machine Learning Algorithm Training and evaluation (Mukherjee & Rao, 2022)

- Survey on Statistics and ML in Data Science and Effect in Businesses (Reddy et al., 2022)

- Visualization in Data Science VDS @ KDD 2022 (Plant et al., 2022)

- An Essay on How Data Science Can Strengthen Business (Santos, 2023)

- A Deep study of Data science related problems, application and machine learning algorithms utilized in Data science (Ranjani et al., 2022)

- You Teach WHAT in Your Data Science Course?!? (Posner & Kerby-Helm, 2022)

- Statistical Analysis for the Traffic Police Activity: Nashville, Tennessee, USA (Tufail & Gul, 2022)

- Data Management and Visual Information Processing in Financial Organization using Machine Learning (Balamurugan et al., 2022)

- A Proposal of an Interactive Web Application Tool QuickViz: To Automate Exploratory Data Analysis (Pitroda, 2022)

- Applications of Data Science in Respective Engineering Domains (Rasool & Chaudhary, 2022)

- Jupyter Notebooks for Introducing Data Science to Novice Users (Fruchart et al., 2022)

- Towards a Systematic Review of Data Science Programs: Themes, Courses, and Ethics (Nellore & Zimmer, 2022)

- Application of data science and bioinformatics in healthcare technologies (Veeranki & Varshney, 2022)

- TAPS Responsibility Matrix: A tool for responsible data science by design (Urovi et al., 2023)

- Data Detectives: A Data Science Program for Middle Grade Learners (Thompson & Irgens, 2022)

- Machine Learning for Non-Majors: A White Box Approach (Mike & Hazzan, 2022)

- Components of Data Science and Its Applications (Paul et al., 2022)

- Analysis on the Application of Data Science in Business Analytics (Wang, 2022)

As you can see, these research topics are a lot more focused than the generic topic ideas we presented earlier. So, to develop a high-quality research topic, you’ll need to get laser-focused on a specific context with specific variables of interest.

Get 1-On-1 Help

If you’re still unsure about how to find a quality research topic, check out our Research Topic Kickstarter service, which is the perfect starting point for developing a unique, well-justified research topic.


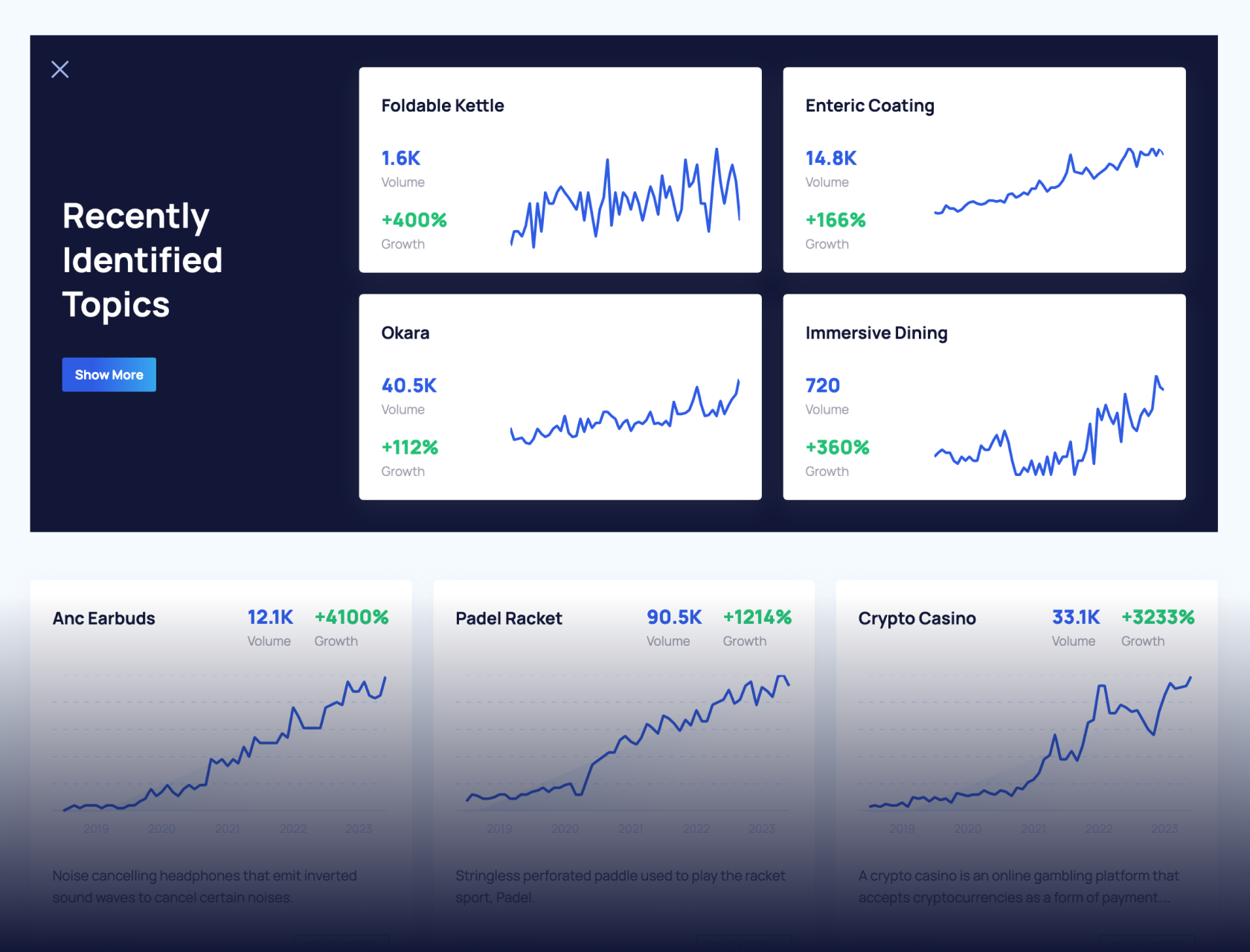

37 Research Topics In Data Science To Stay On Top Of

- February 22, 2024

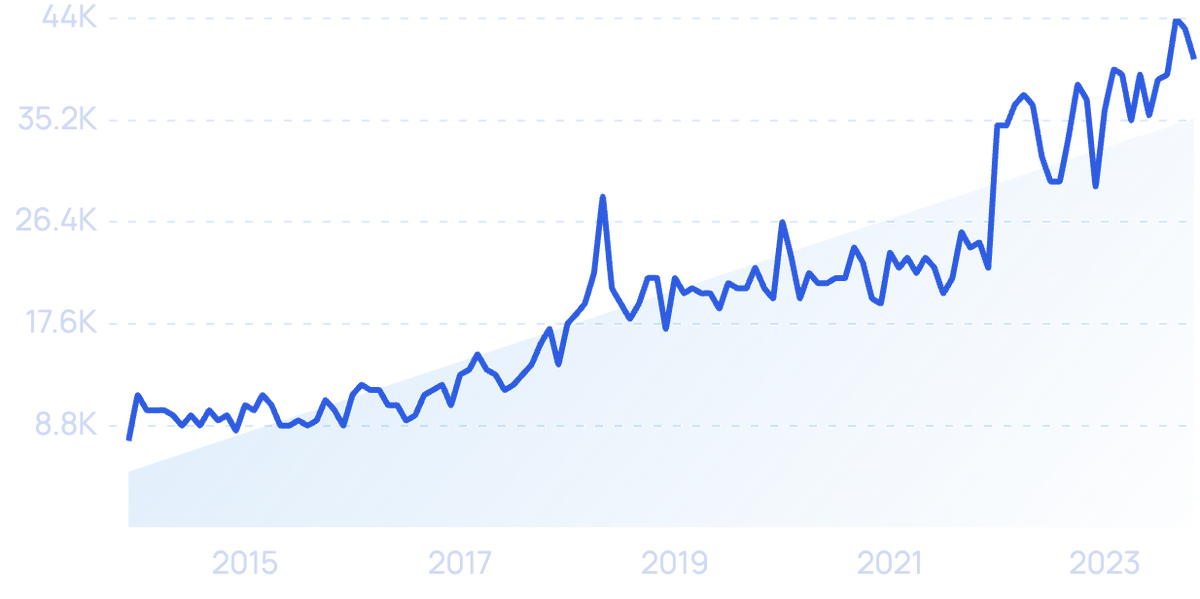

As a data scientist, staying on top of the latest research in your field is essential.

The data science landscape changes rapidly, and new techniques and tools are constantly being developed.

To keep up with the competition, you need to be aware of the latest trends and topics in data science research.

In this article, we will provide an overview of 37 hot research topics in data science.

We will discuss each topic in detail, including its significance and potential applications.

These topics could serve as ideas for a thesis or simply as topics you can research independently.

Stay tuned – this is one blog post you don’t want to miss!

37 Research Topics in Data Science

1.) Predictive Modeling

Predictive modeling is a significant portion of data science and a topic you must be aware of.

Simply put, it is the process of using historical data to build models that can predict future outcomes.

Predictive modeling has many applications, from marketing and sales to financial forecasting and risk management.

As businesses increasingly rely on data to make decisions, predictive modeling is becoming more and more important.

While it can be complex, predictive modeling is a powerful tool that gives businesses a competitive advantage.
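As a minimal sketch of the idea, the Python snippet below fits a straight-line trend to a short, made-up series of monthly sales figures with ordinary least squares and uses it to forecast the next month. The data and the function names (`fit_line`, `forecast`) are hypothetical. A real project would typically use a library such as scikit-learn or statsmodels, richer features than a time index, and held-out data to check accuracy.

```python
def fit_line(xs, ys):
    """Ordinary least squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    slope = cov_xy / var_x
    intercept = mean_y - slope * mean_x
    return slope, intercept

def forecast(history, steps_ahead=1):
    """Predict a future value from observations recorded at times 0, 1, 2, ..."""
    xs = list(range(len(history)))
    slope, intercept = fit_line(xs, history)
    next_x = len(history) - 1 + steps_ahead
    return slope * next_x + intercept

# Hypothetical monthly sales (units) for six months
sales = [100, 110, 120, 130, 140, 150]
print(forecast(sales))  # 160.0
```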

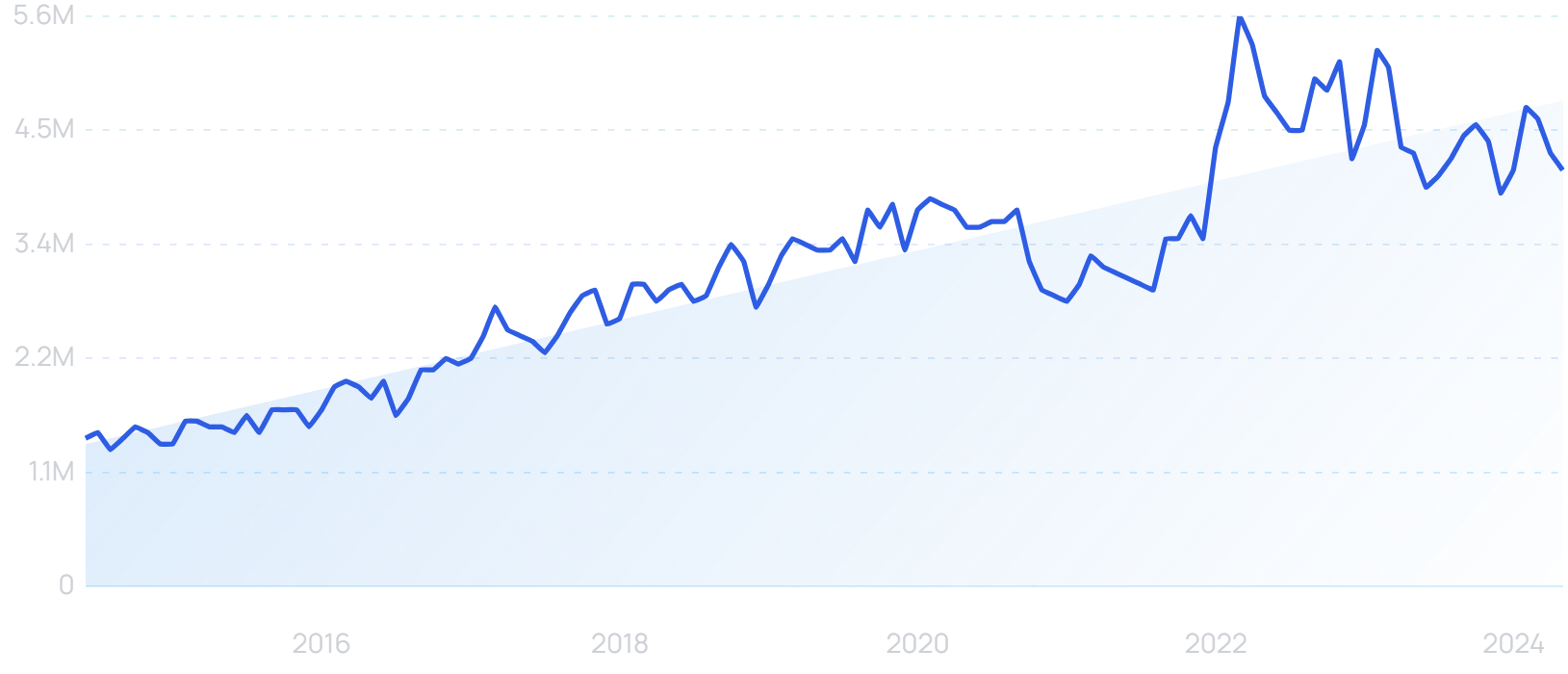

2.) Big Data Analytics

These days, it seems like everyone is talking about big data.

And with good reason – organizations of all sizes are sitting on mountains of data, and they’re increasingly turning to data scientists to help them make sense of it all.

But what exactly is big data? And what does it mean for data science?

Simply put, big data is a term used to describe datasets that are too large and complex for traditional data processing techniques.

Big data typically refers to datasets of a few terabytes or more.

But size (Volume, the amount of information) isn’t the only defining characteristic. Big data is also characterized by its high Velocity (the speed at which data is generated) and Variety (the different types of data). Together, these are often called the “three Vs” of big data.

Given the enormity of big data, it’s not surprising that organizations are struggling to make sense of it all.

That’s where data science comes in.

Data scientists use various methods to wrangle big data, including distributed computing and other decentralized technologies.

With the help of data science, organizations are beginning to unlock the hidden value in their big data.

By harnessing the power of big data analytics, they can improve their decision-making, better understand their customers, and develop new products and services.
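Frameworks such as Apache Hadoop and Apache Spark popularized the MapReduce pattern for distributed processing. The sketch below imitates that pattern in a single Python process on a hypothetical two-partition dataset. Each partition is counted independently (the map step, which a cluster would run on separate machines), and the partial counts are then merged (the reduce step). Names like `map_partition` are illustrative, not any framework's API.

```python
from collections import Counter
from functools import reduce

def map_partition(lines):
    """Map step: count words within one partition of the data."""
    counts = Counter()
    for line in lines:
        counts.update(line.lower().split())
    return counts

def merge_counts(a, b):
    """Reduce step: combine two partial word counts."""
    return a + b

def word_count(partitions):
    # On a real cluster, each map_partition call would run on a different machine
    partial_counts = [map_partition(p) for p in partitions]
    return reduce(merge_counts, partial_counts, Counter())

partitions = [  # hypothetical data split across two "nodes"
    ["big data is big", "data science"],
    ["science of data"],
]
totals = word_count(partitions)
print(totals["data"], totals["science"])  # 3 2
```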

3.) Automated Machine Learning (AutoML)

Automated machine learning (AutoML) is a research topic in data science concerned with automating the steps of building a model, such as choosing an algorithm, tuning its hyperparameters, and preparing features, with minimal human intervention.

This area of research is vital because it saves data scientists from hand-writing and hand-tuning a modeling pipeline for every dataset.

This frees us to focus on other tasks, such as framing the problem and validating the results.

AutoML systems can build models in a hands-off way for the data scientist while still providing valuable insights.

This makes them a valuable tool for data scientists who lack the time or specialist skills to build and tune models by hand.
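Real AutoML tools (auto-sklearn and Google Cloud AutoML are two examples) search over many algorithms, preprocessing steps, and hyperparameters. The toy sketch below shows only the core loop on a hypothetical one-dimensional dataset. It tries several values of `k` for a k-nearest-neighbours classifier, scores each with leave-one-out cross-validation, and keeps the best, so nobody has to pick `k` by hand.

```python
def knn_predict(train, x, k):
    """Predict a label for x by majority vote among its k nearest training points."""
    nearest = sorted(train, key=lambda point: abs(point[0] - x))[:k]
    votes = {}
    for _, label in nearest:
        votes[label] = votes.get(label, 0) + 1
    return max(votes, key=votes.get)

def loo_accuracy(data, k):
    """Leave-one-out cross-validation: hold out each point once and test on it."""
    correct = 0
    for i, (x, label) in enumerate(data):
        train = data[:i] + data[i + 1:]
        correct += knn_predict(train, x, k) == label
    return correct / len(data)

def auto_select_k(data, candidates=(1, 3, 5)):
    """The 'automated' part: score every candidate k and keep the best one."""
    scores = {k: loo_accuracy(data, k) for k in candidates}
    best_k = max(scores, key=scores.get)  # ties go to the candidate listed first
    return best_k, scores

# Hypothetical 1-D dataset of (feature value, class label); the point at 3.5 is noisy
data = [(1, "a"), (2, "a"), (3, "a"), (3.5, "b"), (4, "a"),
        (7, "b"), (8, "b"), (9, "b"), (10, "b")]
best_k, scores = auto_select_k(data)
print(best_k, scores)
```

Here `k = 1` is fooled by the noisy point, while larger values of `k` smooth it out, which is exactly the kind of choice AutoML tries to take off the data scientist's plate.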

4.) Text Mining

Text mining is a research topic in data science that deals with extracting useful information from text data.

This area of research is important because it allows us to get as much information as possible from the vast amount of text data available today.

Text mining techniques can extract information from text data, such as keywords, sentiments, and relationships.

This information can be used for various purposes, such as model building and predictive analytics.
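One classic way to pull keywords out of text is TF-IDF, which scores a word highly when it is frequent in one document but rare across the collection. Here is a minimal, dependency-free sketch on three hypothetical product reviews. It uses a naive whitespace tokenizer, whereas real text-mining pipelines usually rely on libraries such as scikit-learn or spaCy for tokenization and stop-word handling.

```python
import math
from collections import Counter

def tfidf_keywords(documents, top_n=2):
    """Return the top_n terms per document ranked by TF-IDF score."""
    tokenized = [doc.lower().split() for doc in documents]  # naive whitespace tokenizer
    n_docs = len(tokenized)
    # Document frequency: how many documents contain each term at least once
    df = Counter(term for tokens in tokenized for term in set(tokens))
    keywords = []
    for tokens in tokenized:
        tf = Counter(tokens)
        scores = {
            # term frequency in this document * inverse document frequency
            term: (count / len(tokens)) * math.log(n_docs / df[term])
            for term, count in tf.items()
        }
        # Highest score first; alphabetical order breaks ties deterministically
        ranked = sorted(scores, key=lambda term: (-scores[term], term))
        keywords.append(ranked[:top_n])
    return keywords

reviews = [  # hypothetical product reviews
    "the battery life of this phone is great",
    "the screen of this phone is too dim",
    "great battery great camera",
]
print(tfidf_keywords(reviews))
# [['life', 'battery'], ['dim', 'screen'], ['camera', 'great']]
```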

5.) Natural Language Processing

Natural language processing is a data science research topic that analyzes human language data.

This area of research is important because it allows us to understand and make sense of the vast amount of text data available today.

Natural language processing techniques can build predictive and interactive models from any language data.

Natural language processing is a broad field, and recent advances like GPT-3 have pushed this topic to the forefront.
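At its core, a language model predicts the next word from the words before it. GPT-3 does this with a very large transformer neural network. The sketch below does the same job with a far simpler bigram model that just counts which word follows which in a tiny, hypothetical corpus.

```python
from collections import Counter, defaultdict

def train_bigram_model(corpus):
    """Count how often each word is followed by each other word."""
    follows = defaultdict(Counter)
    for sentence in corpus:
        tokens = sentence.lower().split()
        for current, nxt in zip(tokens, tokens[1:]):
            follows[current][nxt] += 1
    return follows

def predict_next(model, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    candidates = model.get(word.lower())  # .get() does not create new entries
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]

corpus = [  # hypothetical training sentences
    "data science is fun",
    "data science is useful",
    "data engineering is hard",
]
model = train_bigram_model(corpus)
print(predict_next(model, "data"))     # science
print(predict_next(model, "quantum"))  # None
```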

6.) Recommender Systems

Recommender systems are an exciting topic in data science because they allow us to make better products, services, and content recommendations.

Businesses can better understand their customers and their needs by using recommender systems.

This, in turn, allows them to develop better products and services that meet the needs of their customers.

Recommender systems are also used to recommend content to users.

This can be done on an individual level or at a group level.

Think about Netflix, for example, always knowing what you want to watch!

Recommender systems are a valuable tool for businesses and users alike.
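Netflix's production system is far more elaborate, but a common textbook starting point is item-based collaborative filtering. Items that the same users rated similarly are treated as similar, and a user is recommended the unseen item most similar to what they already rated highly. The sketch below assumes a small, hypothetical ratings table and uses cosine similarity, treating missing ratings as zero.

```python
import math

# Hypothetical ratings: user -> {item: rating from 1 to 5}
ratings = {
    "ana":   {"Dune": 5, "Alien": 4},
    "ben":   {"Dune": 4, "Alien": 5, "Blade Runner": 5, "Up": 1},
    "chloe": {"Up": 5, "Heat": 4, "Dune": 1},
}

def item_vectors(ratings):
    """Re-index ratings as item -> {user: rating}."""
    vectors = {}
    for user, items in ratings.items():
        for item, score in items.items():
            vectors.setdefault(item, {})[user] = score
    return vectors

def cosine(a, b):
    """Cosine similarity of two sparse vectors stored as dicts (missing = 0)."""
    dot = sum(a[k] * b[k] for k in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b)

def recommend(user, ratings):
    """Return the unseen item most similar to what the user already rated."""
    vectors = item_vectors(ratings)
    seen = ratings[user]
    scores = {
        item: sum(cosine(vectors[item], vectors[rated]) * r for rated, r in seen.items())
        for item in vectors
        if item not in seen
    }
    return max(scores, key=scores.get) if scores else None

print(recommend("ana", ratings))  # Blade Runner
```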

7.) Deep Learning

Deep learning is a research topic in data science that deals with artificial neural networks.

These networks are composed of multiple layers, and each layer is made up of many nodes (artificial neurons).

Deep learning networks learn useful patterns directly from raw data, in a way loosely inspired by the human brain, although their performance still depends heavily on the quality and distribution of the training data.

This makes them a valuable tool for data scientists looking to build models that learn features on their own instead of relying on hand-crafted ones.

Deep learning has become very popular in recent years because of its ability to achieve state-of-the-art (SOTA) results on various tasks.

There seems to be a new SOTA deep learning algorithm research paper on https://arxiv.org/ every single day!
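To make “layers of nodes” concrete, here is a tiny two-layer network written out by hand. Each node computes a weighted sum of its inputs plus a bias, and the hidden layer applies a ReLU activation. The weights are hand-picked (an assumption for illustration) so that the network computes XOR, a function that a single-layer perceptron cannot represent. In real deep learning, the weights are learned from data with backpropagation and gradient descent.

```python
def relu(x):
    """Rectified linear unit: pass positive values through, zero out negatives."""
    return max(0.0, x)

def dense_layer(inputs, weights, biases, activation=None):
    """Fully connected layer: each node takes a weighted sum of all inputs plus a bias."""
    outputs = []
    for node_weights, bias in zip(weights, biases):
        total = sum(w * x for w, x in zip(node_weights, inputs)) + bias
        outputs.append(activation(total) if activation else total)
    return outputs

# Hand-picked (not learned) weights: 2 inputs -> 2 hidden nodes -> 1 output node
HIDDEN_W = [[1.0, 1.0], [1.0, 1.0]]
HIDDEN_B = [0.0, -1.0]
OUTPUT_W = [[1.0, -2.0]]
OUTPUT_B = [0.0]

def xor_network(x1, x2):
    hidden = dense_layer([x1, x2], HIDDEN_W, HIDDEN_B, activation=relu)
    return dense_layer(hidden, OUTPUT_W, OUTPUT_B)[0]

for a in (0, 1):
    for b in (0, 1):
        print(a, b, xor_network(a, b))
```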

8.) Reinforcement Learning

Reinforcement learning is a research topic in data science that deals with algorithms that learn by trial and error from interactions with their environment, receiving rewards or penalties for the actions they take.

This area of research is essential because it allows us to develop algorithms that learn non-greedy approaches to decision-making: they can give up a small immediate reward in exchange for a larger long-term one, helping businesses and companies win in the long term rather than the short term.
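The sketch below applies Q-learning, a classic reinforcement learning algorithm, to a made-up three-stage “cash out now or wait” problem. Cashing out early pays 1, while waiting until the final stage pays 10. A greedy agent would grab the immediate reward, but the learned Q-values favour waiting, because even after discounting by `gamma` the delayed payoff is worth more. The environment, rewards, and parameter values are all hypothetical.

```python
import random

# Hypothetical toy problem: at each of three stages an agent may "cash_out"
# (ending the episode) or "wait" (moving on to the next stage).
N_STAGES = 3
ACTIONS = ("cash_out", "wait")

def step(state, action):
    """Return (reward, next_state); next_state is None when the episode ends."""
    last = state == N_STAGES - 1
    if action == "cash_out":
        return (10.0 if last else 1.0), None
    return 0.0, (None if last else state + 1)

def q_learning(episodes=2000, alpha=0.5, gamma=0.9, seed=0):
    """Learn action values Q(state, action) from simulated experience."""
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STAGES) for a in ACTIONS}
    for _ in range(episodes):
        state = 0
        while state is not None:
            action = rng.choice(ACTIONS)  # explore at random; Q-learning is off-policy
            reward, next_state = step(state, action)
            best_future = 0.0 if next_state is None else max(
                q[(next_state, a)] for a in ACTIONS
            )
            # Nudge the estimate toward reward + discounted best future value
            target = reward + gamma * best_future
            q[(state, action)] += alpha * (target - q[(state, action)])
            state = next_state
    return q

def greedy_policy(q):
    """Pick the highest-valued action in every state."""
    return [max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_STAGES)]

q = q_learning()
print(greedy_policy(q))  # ['wait', 'wait', 'cash_out']
```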

9.) Data Visualization

Data visualization is an excellent research topic in data science because it allows us to see our data in a way that is easy to understand.

Data visualization techniques can be used to create charts, graphs, and other visual representations of data.

This allows us to see the patterns and trends hidden in our data.

Data visualization is also used to communicate results to others.

This allows us to share our findings with others in a way that is easy to understand.

There are many ways to contribute to and learn about data visualization.

Some ways include attending conferences, reading papers, and contributing to open-source projects.

10.) Predictive Maintenance

Predictive maintenance is a hot topic in data science because it allows us to prevent failures before they happen.

This is done using data analytics to predict when a failure will occur.

This allows us to take corrective action before the failure actually happens.

While this sounds simple, avoiding false positives (alerts for machines that were never going to fail) while keeping recall high (catching most real failures) is challenging and an area wide open for advancement.
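The tension between false positives and recall shows up as soon as you pick an alert threshold. In this sketch, a hypothetical model has produced a failure-risk score for eight machines, and we know which ones actually failed. Raising the threshold improves precision (fewer false alarms) but lowers recall (more missed failures).

```python
def precision_recall(scores, failed, threshold):
    """Precision and recall when we raise an alert for every score >= threshold."""
    alerts = [s >= threshold for s in scores]
    tp = sum(a and f for a, f in zip(alerts, failed))        # correct alerts
    fp = sum(a and not f for a, f in zip(alerts, failed))    # false alarms
    fn = sum((not a) and f for a, f in zip(alerts, failed))  # missed failures
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall

# Hypothetical risk scores from a model, and whether each machine actually failed
scores = [0.95, 0.80, 0.70, 0.60, 0.40, 0.30, 0.20, 0.10]
failed = [True, True, False, True, False, False, True, False]

for t in (0.9, 0.5, 0.15):
    p, r = precision_recall(scores, failed, t)
    print(f"threshold={t}: precision={p:.2f}, recall={r:.2f}")
```

With these made-up numbers, a threshold of 0.9 gives perfect precision but catches only a quarter of the failures, while 0.15 catches every failure at the cost of three false alarms.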

11.) Financial Analysis

Financial analysis is a long-established topic, but it is still a great field where new contributions can be felt.

Many current researchers focus on analyzing macroeconomic data to make better financial decisions.

This is done by analyzing the data to identify trends and patterns.

Financial analysts can use this information to make informed decisions about where to invest their money.

Financial analysis is also used to predict future economic trends.

This allows businesses and individuals to prepare for potential financial hardships and enables companies to build up cash reserves during good economic conditions.

Overall, financial analysis is a valuable tool for anyone looking to make better financial decisions.
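As a small example of spotting trends in price data, the sketch below compares a short and a long simple moving average of hypothetical closing prices, a common (and very simplistic) trend heuristic. The window lengths of 3 and 5 are arbitrary assumptions; real analyses use longer windows and far more careful testing.

```python
def moving_average(values, window):
    """Simple moving average: the mean of each run of `window` consecutive values."""
    if len(values) < window:
        raise ValueError("need at least `window` values")
    return [sum(values[i:i + window]) / window for i in range(len(values) - window + 1)]

def trend_signal(prices, short=3, long=5):
    """Label the latest trend by comparing short- and long-window averages."""
    short_ma = moving_average(prices, short)[-1]
    long_ma = moving_average(prices, long)[-1]
    if short_ma > long_ma:
        return "uptrend"
    if short_ma < long_ma:
        return "downtrend"
    return "flat"

prices = [100, 101, 99, 102, 105, 107, 110]  # hypothetical daily closing prices
print(trend_signal(prices))  # uptrend
```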

12.) Image Recognition

Image recognition is one of the hottest topics in data science because it allows us to identify objects in images.

This is done using artificial intelligence algorithms that can learn from data and understand what objects you’re looking for.

This allows us to build models that can accurately recognize objects in images and video.

This is a valuable tool for businesses and individuals who want to be able to identify objects in images.

Think about security, identification, routing, traffic, etc.

Image Recognition has gained a ton of momentum recently – for a good reason.

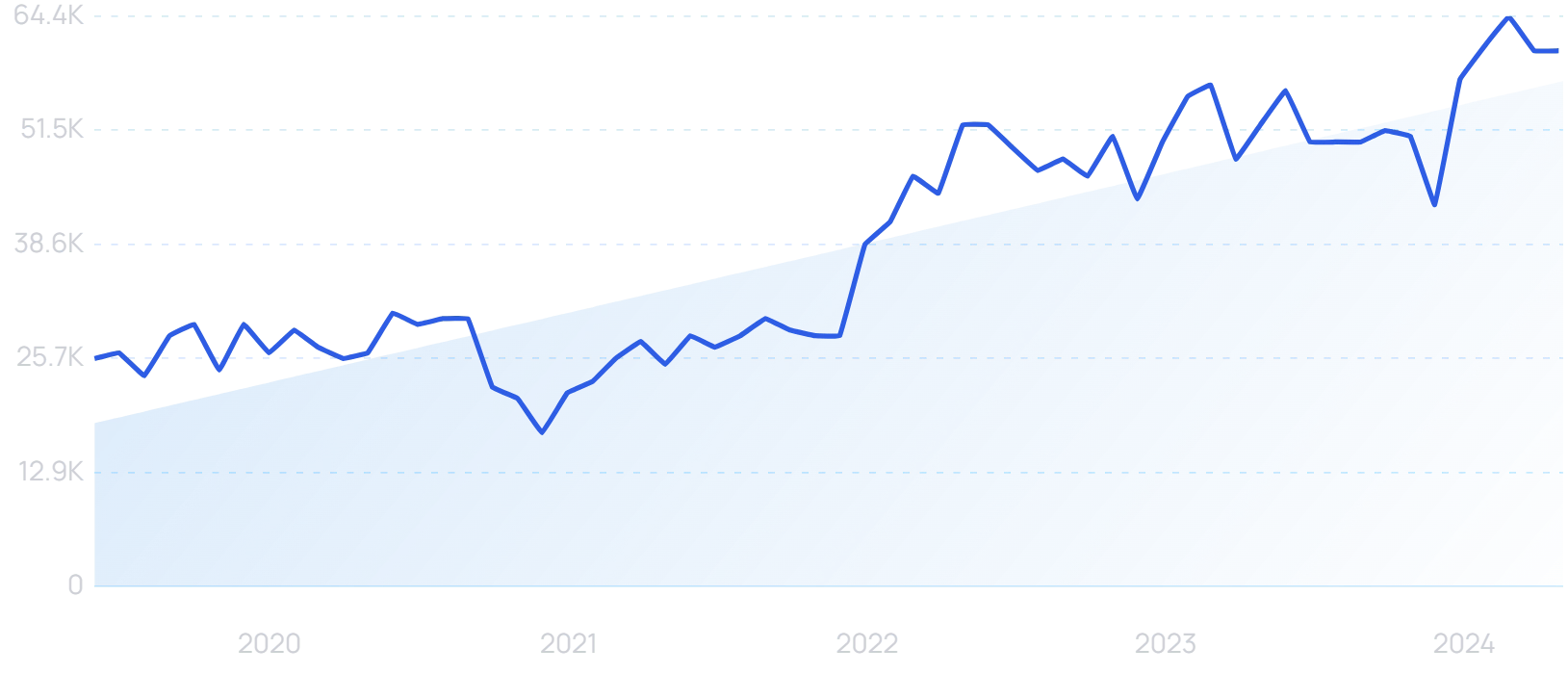

13.) Fraud Detection

Fraud detection is a great topic in data science because it allows us to identify fraudulent activity as it happens, ideally before any money is lost.

This is done by analyzing data to look for patterns and trends that may be associated with fraud.

Once a machine learning model has learned these patterns, it can score new transactions in real time and immediately flag suspicious ones.

This allows us to take corrective action, such as blocking a transaction, before the fraud succeeds.

Fraud detection is a valuable tool for anyone who wants to protect themselves from potential fraudulent activity.
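Production fraud systems typically use trained models over many features. As a simpler statistical baseline (not a machine learning model), the sketch below flags unusual transaction amounts with the modified z-score. It is based on the median and the median absolute deviation (MAD), so a single huge outlier cannot mask itself by inflating the mean and standard deviation. The 3.5 cutoff is a commonly used rule of thumb, and the transactions are hypothetical.

```python
import statistics

def flag_outliers(amounts, threshold=3.5):
    """Return the indices of amounts whose modified z-score exceeds `threshold`."""
    median = statistics.median(amounts)
    # Median absolute deviation: a spread measure that outliers barely affect
    mad = statistics.median([abs(a - median) for a in amounts])
    if mad == 0:
        return []  # no spread at all; this simple rule cannot score the data
    return [
        i for i, a in enumerate(amounts)
        if 0.6745 * abs(a - median) / mad > threshold
    ]

# Hypothetical card transactions for one customer, in dollars
history = [20, 25, 22, 30, 27, 24, 21, 950]
print(flag_outliers(history))  # [7]
```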

14.) Web Scraping

Web scraping is a controversial topic in data science because it allows us to collect data from the web, which is usually data you do not own.

This is done by extracting data from websites using scraping tools that are usually custom-programmed.

This allows us to collect data that would otherwise be inaccessible.

Web scraping can be a uniquely valuable tool, giving you data that may not be available through official datasets or APIs.

I think there is an excellent opportunity to create new and innovative ways to make scraping accessible for everyone, not just those who understand Selenium and Beautiful Soup.
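Beautiful Soup and Selenium are the usual tools, but the basic idea can be shown with Python's built-in `html.parser` module. The sketch below parses a hard-coded, hypothetical HTML snippet (no network request) and pulls out the prices from `<span>` elements whose class includes `price`. It assumes those spans contain plain text only; real pages need sturdier handling.

```python
from html.parser import HTMLParser

class PriceParser(HTMLParser):
    """Collect numeric prices from <span> elements whose class list includes 'price'."""

    def __init__(self):
        super().__init__()
        self.prices = []
        self._in_price = False  # True while inside a price <span>

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if tag == "span" and "price" in classes:
            self._in_price = True

    def handle_endtag(self, tag):
        if tag == "span":
            self._in_price = False

    def handle_data(self, data):
        text = data.strip()
        if self._in_price and text:
            self.prices.append(float(text.lstrip("$")))

page = """
<ul>
  <li>Laptop <span class="price">$999.00</span></li>
  <li>Mouse <span class="price sale">$19.99</span></li>
  <li>Cable <span class="sku">A-113</span></li>
</ul>
"""
parser = PriceParser()
parser.feed(page)
parser.close()
print(parser.prices)  # [999.0, 19.99]
```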

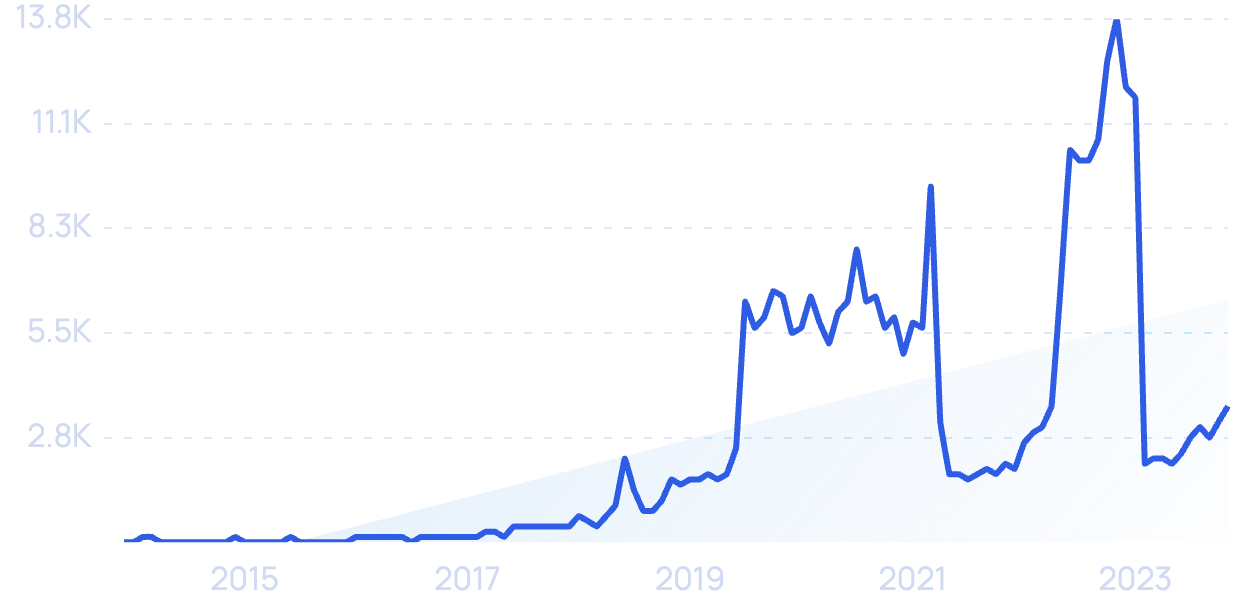

15.) Social Media Analysis

Social media analysis is not new; many people have already created exciting and innovative algorithms to study this.

However, it is still a great data science research topic because it allows us to understand how people interact on social media.

This is done by analyzing data from social media platforms to look for insights, bots, and recent societal trends.

Once we understand these practices, we can use this information to improve our marketing efforts.

For example, if we know that a particular demographic prefers a specific type of content, we can create more content that appeals to them.

Social media analysis is also used to understand how people interact with brands on social media.

This allows businesses to understand better what their customers want and need.

Overall, social media analysis is valuable for anyone who wants to improve their marketing efforts or understand how customers interact with brands.

16.) GPU Computing

GPU computing is a fun new research topic in data science because, for many workloads, it allows us to process data much faster than traditional CPUs.

Because GPUs contain thousands of cores that run many operations in parallel, they’re incredibly proficient at intense matrix operations, outperforming traditional CPUs by very high margins.

While the computation is fast, the coding is still tricky.

There is an excellent research opportunity to bring these innovations to non-traditional models, allowing data science to take advantage of GPU computing outside of deep learning.

17.) Quantum Computing

Quantum computing is a new research topic in data science and physics because, for certain problems, it promises to process data much faster than traditional computers.

It also opens the door to new types of data.

There are some problems that classical computers simply can’t solve in any practical amount of time.

For example, simulating how the many interacting particles in a molecule behave quickly overwhelms a classical computer, because the information required grows exponentially with the number of particles.

Quantum computers, which are themselves governed by quantum mechanics, are expected to handle these quantum mechanics problems far more efficiently.

This may be the “hottest” research topic on the planet right now, with some of the top researchers in computer science and physics worldwide working on it.

You could be too.
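One way to see why classical computers struggle with quantum problems: simulating n qubits exactly requires storing 2^n complex amplitudes, so 30 qubits already need about a billion numbers. The sketch below is a bare-bones state-vector simulator that applies a Hadamard gate to every qubit, producing an equal superposition over all 2^n basis states. The function names are illustrative, and real work would use a dedicated quantum computing framework.

```python
import math

def apply_hadamard(state, qubit):
    """Apply a Hadamard gate to one qubit of a state vector of 2**n amplitudes."""
    h = 1 / math.sqrt(2)
    new_state = state[:]
    mask = 1 << qubit
    for i in range(len(state)):
        if (i & mask) == 0:  # pair basis state i (qubit = 0) with j (qubit = 1)
            j = i | mask
            a, b = state[i], state[j]
            new_state[i] = h * (a + b)
            new_state[j] = h * (a - b)
    return new_state

def uniform_superposition(n_qubits):
    """Start in |00...0> and apply a Hadamard gate to every qubit."""
    state = [0j] * (2 ** n_qubits)  # memory grows exponentially with n_qubits
    state[0] = 1 + 0j
    for q in range(n_qubits):
        state = apply_hadamard(state, q)
    return state

state = uniform_superposition(3)
print(len(state))                              # 8
print([round(abs(a) ** 2, 3) for a in state])  # eight equal probabilities of 0.125
```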

18.) Genomics

Genomics may be the only research topic that can compete with quantum computing regarding the “number of top researchers working on it.”

Genomics is a fantastic intersection of data science and biology because it allows us to understand how genes work.

This is done by sequencing and analyzing the DNA of different organisms to gain insights into our own and other species.

Once we understand these patterns, we can use this information to improve our understanding of diseases and create new and innovative treatments for them.

Genomics is also used to study the evolution of different species.

Genomics is the future and a field begging for new and exciting research professionals to take it to the next step.
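Two of the most basic computations in DNA sequence analysis are GC content (the fraction of bases that are G or C) and k-mer counting (tallying every overlapping substring of length k), which underpin tasks such as genome assembly and sequence comparison. The sketch below computes both for a short, hypothetical sequence; real pipelines typically use tools such as Biopython on files containing millions of bases.

```python
from collections import Counter

def gc_content(sequence):
    """Fraction of bases in the sequence that are G or C."""
    seq = sequence.upper()
    return (seq.count("G") + seq.count("C")) / len(seq)

def kmer_counts(sequence, k):
    """Count every overlapping substring of length k (a 'k-mer')."""
    seq = sequence.upper()
    return Counter(seq[i:i + k] for i in range(len(seq) - k + 1))

dna = "ATGATGCCGATG"  # hypothetical short DNA sequence
print(gc_content(dna))                     # 0.5
print(kmer_counts(dna, 3).most_common(2))  # [('ATG', 3), ('GAT', 2)]
```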

19.) Location-based services

Location-based services are an old and time-tested research topic in data science.

Since GPS and 4G cell phone reception became widespread, we’ve been trying to stay informed about how humans interact with their environment.

This is done by analyzing data from GPS tracking devices, cell phone towers, and Wi-Fi routers to look for insights into how people move and interact.

Once we understand these practices, we can use this information to improve our geotargeting efforts, improve maps, find faster routes, and improve cohesion throughout a community.

Location-based services are used to understand the user, something every business could always use a little bit more of.

While a seemingly “stale” field, location-based services have seen a revival period with self-driving cars.
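A building block of most location-based services is computing the distance between two GPS coordinates. The sketch below uses the haversine formula, which treats the Earth as a sphere with a mean radius of 6,371 km (an approximation), to find which of two hypothetical store locations is nearest to a user.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius; the spherical model is an approximation

def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in km between two (latitude, longitude) points in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))

def nearest(user, places):
    """Return the name of the place closest to the user's (lat, lon)."""
    return min(places, key=lambda name: haversine_km(*user, *places[name]))

stores = {  # hypothetical store locations
    "downtown": (40.7128, -74.0060),
    "airport": (40.6413, -73.7781),
}
print(nearest((40.73, -73.99), stores))  # downtown
```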

20.) Smart City Applications

Smart city applications are all the rage in data science research right now.

By harnessing the power of data, cities can become more efficient and sustainable.

But what exactly are smart city applications?

In short, they are systems that use data to improve city infrastructure and services.

This can include anything from traffic management and energy use to waste management and public safety.

Data is collected from various sources, including sensors, cameras, and social media.

It is then analyzed to identify tendencies and habits.

This information can be used to make predictions about future needs and to optimize city resources.

As more and more cities strive to become “smart,” the demand for data scientists with expertise in smart city applications is only growing.

21.) Internet Of Things (IoT)

The Internet of Things, or IoT, is an exciting new data science and sustainability research topic.

IoT is a network of physical objects embedded with sensors and connected to the internet.

These objects can include everything from alarm clocks to refrigerators; they’re all connected to the internet.

That means that they can share data with computers.

And that’s where data science comes in.

Data scientists are using IoT data to learn everything from how people use energy to how traffic flows through a city.

They’re also using IoT data to predict when an appliance will break down or when a road will be congested.

Really, the possibilities are endless.

With such a wide-open field, it’s easy to see why IoT is being researched by some of the top professionals in the world.

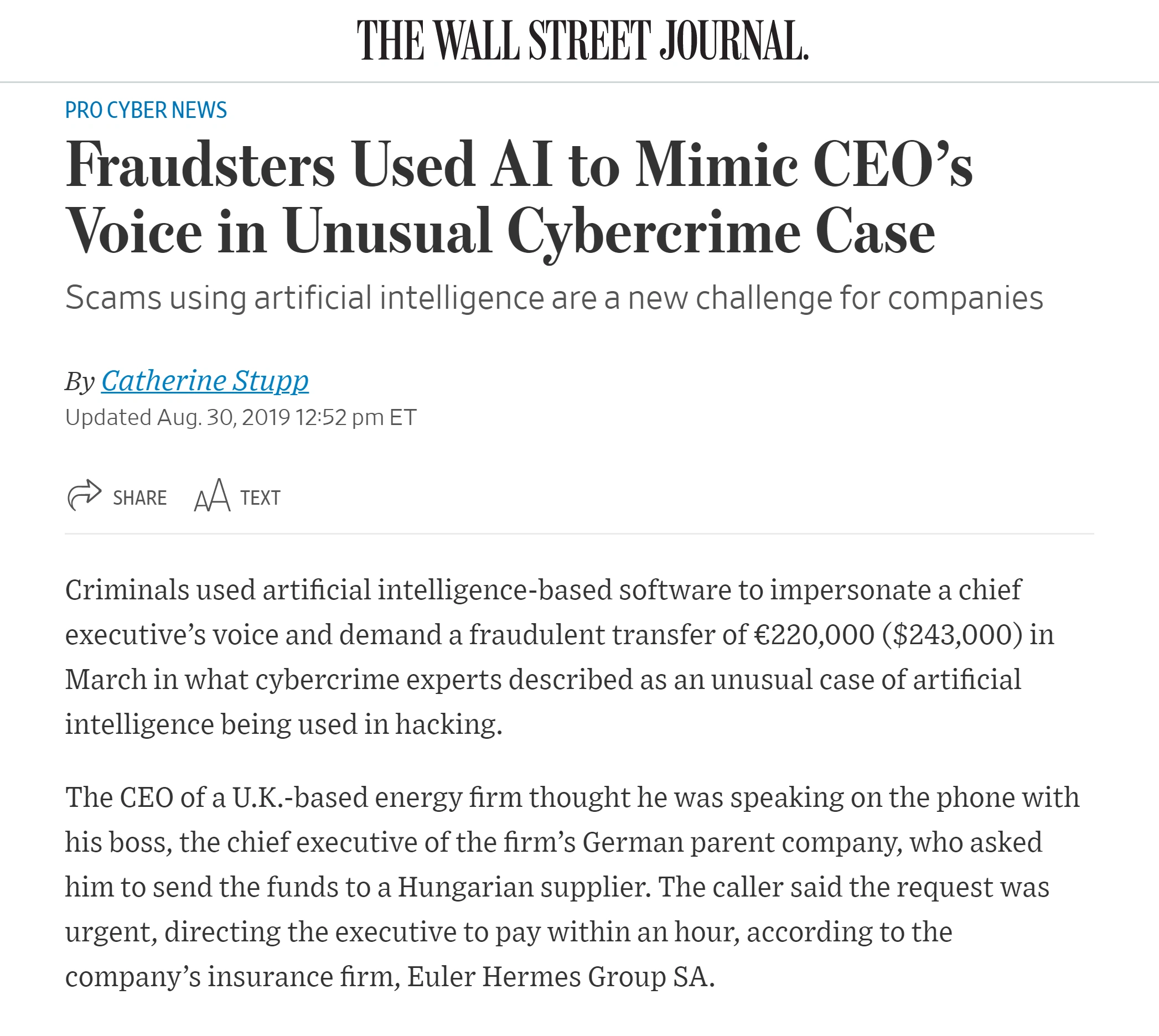

22.) Cybersecurity

Applying data science to cybersecurity is a relatively new research area, but it’s already garnering a lot of attention from businesses and organizations.

After all, with the increasing number of cyber attacks in recent years, it’s clear that we need to find better ways to protect our data.

While most of cybersecurity focuses on infrastructure, data scientists can leverage historical events to find potential exploits to protect their companies.

Sometimes, looking at a problem from a different angle helps, and that’s what data science brings to cybersecurity.

Also, data science can help to develop new security technologies and protocols.

As a result, cybersecurity is a crucial data science research area and one that will only become more important in the years to come.
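As a sketch of learning from historical events, the snippet below scans hypothetical login records and flags IP addresses with more than three failed logins inside any five-minute window, a classic sign of a brute-force attack. The threshold and window are assumptions; a data scientist might instead learn them from historical logs. The addresses come from the ranges reserved for documentation.

```python
from collections import defaultdict
from datetime import datetime, timedelta

def brute_force_suspects(events, max_failures=3, window=timedelta(minutes=5)):
    """Return IPs with more than max_failures failed logins inside any sliding window.

    events: iterable of (timestamp, ip, success) tuples.
    """
    failures = defaultdict(list)
    for ts, ip, success in sorted(events):  # chronological order
        if not success:
            failures[ip].append(ts)
    suspects = set()
    for ip, times in failures.items():
        start = 0
        for end, t in enumerate(times):
            # Shrink the window from the left until it spans at most `window`
            while t - times[start] > window:
                start += 1
            if end - start + 1 > max_failures:
                suspects.add(ip)
                break
    return suspects

t0 = datetime(2024, 1, 1, 12, 0)
events = (
    # five rapid failures from one address
    [(t0 + timedelta(seconds=20 * i), "203.0.113.7", False) for i in range(5)]
    # occasional failures spread over 30 minutes
    + [(t0 + timedelta(minutes=10 * i), "192.0.2.9", False) for i in range(4)]
    # a single typo followed by a successful login
    + [(t0, "198.51.100.2", False), (t0 + timedelta(minutes=1), "198.51.100.2", True)]
)
print(brute_force_suspects(events))  # {'203.0.113.7'}
```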

23.) Blockchain

Blockchain is an incredible new research topic in data science for several reasons.

First, it is a distributed database technology that enables secure, transparent, and tamper-resistant transactions.

Did someone say transmitting data?

This makes it an ideal platform for tracking data and transactions in various industries.

Second, blockchain is powered by cryptography, which not only makes it highly secure but is also familiar territory for data scientists.

Finally, blockchain is still in its early stages of development, so there is much room for research and innovation.

As a result, blockchain is a great new research topic in data science that promises to revolutionize how we store, transmit and manage data.
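The tamper resistance comes from chaining hashes: each block stores the hash of the previous block, so editing any earlier block breaks the link that follows it. The sketch below shows only that idea with Python's `hashlib` and hypothetical transactions. Real blockchains add digital signatures, a consensus mechanism, and a peer-to-peer network on top.

```python
import hashlib
import json

def block_hash(block):
    """SHA-256 of the block's contents, serialized deterministically."""
    payload = json.dumps(block, sort_keys=True).encode()
    return hashlib.sha256(payload).hexdigest()

def build_chain(records):
    """Link records so each block stores the hash of the previous block."""
    chain = []
    prev = "0" * 64  # placeholder hash for the first block
    for i, data in enumerate(records):
        block = {"index": i, "data": data, "prev_hash": prev}
        chain.append(block)
        prev = block_hash(block)
    return chain

def is_valid(chain):
    """Recompute each link; an edit to an earlier block breaks the next link."""
    for prev_block, block in zip(chain, chain[1:]):
        if block["prev_hash"] != block_hash(prev_block):
            return False
    return True

chain = build_chain(["alice pays bob 5", "bob pays carol 2", "carol pays dan 1"])
print(is_valid(chain))                   # True
chain[0]["data"] = "alice pays bob 500"  # tamper with history
print(is_valid(chain))                   # False
```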

24.) Sustainability

Sustainability is a relatively new research topic in data science, but it is gaining traction quickly.

To keep up with this demand, The Wharton School of the University of Pennsylvania has started to offer an MBA in Sustainability.

This demand isn’t shocking, and some of the reasons include the following:

- Sustainability is an important issue that is relevant to everyone.

- Datasets on sustainability are constantly growing and changing, making them an exciting challenge for data scientists.

- There hasn’t been a “set way” to approach sustainability from a data perspective, making it an excellent opportunity for interdisciplinary research.

As data science grows, sustainability will likely become an increasingly important research topic.

25.) Educational Data

Education has always been a great topic for research, and with the advent of big data, educational data has become an even richer source of information.

By studying educational data, researchers can gain insights into how students learn, what motivates them, and what barriers these students may face.

In addition, data science can be used to develop educational interventions tailored to individual students’ needs.

Imagine being the researcher who helps a struggling high schooler pass mathematics; what an incredible feeling.

With the increasing availability of educational data, data science has enormous potential to improve the quality of education.

26.) Politics

As data science continues to evolve, so does the scope of its applications.

Originally used primarily for business intelligence and marketing, data science is now applied to various fields, including politics.

By analyzing large data sets, political scientists (data scientists with a cooler name) can gain valuable insights into voting patterns, campaign strategies, and more.

Further, data science can be used to forecast election results and understand the effects of political events on public opinion.

With the wealth of data available, there is no shortage of research opportunities in this field.

As data science evolves, so does our understanding of politics and its role in our world.

27.) Cloud Technologies

Cloud technologies are a great research topic.

They allow organizations to outsource and share computing resources and applications over the internet.

This lets organizations save money on hardware and maintenance costs while providing employees access to the latest and greatest software and applications.

I believe there is an argument that AWS could be the greatest and most technologically advanced business ever built (Yes, I know it’s only part of the company).

In addition, cloud technologies can improve collaboration among team members by allowing them to share files and work on projects together in real time.

As more businesses adopt cloud technologies, data scientists must stay up-to-date on the latest trends in this area.

By researching cloud technologies, data scientists can help organizations to make the most of this new and exciting technology.

28.) Robotics

Robotics has recently become a household name, and it’s for a good reason.

First, robotics deals with controlling and planning physical systems, an inherently complex problem.

Second, robotics requires various sensors and actuators to interact with the world, making it an ideal application for machine learning techniques.

Finally, robotics is an interdisciplinary field that draws on various disciplines, such as computer science, mechanical engineering, and electrical engineering.

As a result, robotics is a rich source of research problems for data scientists.

29.) Healthcare

Healthcare is an industry that is ripe for data-driven innovation.

Hospitals, clinics, and health insurance companies generate a tremendous amount of data daily.

This data can be used to improve the quality of care and outcomes for patients.

This is perfect timing, as the healthcare industry is undergoing a significant shift towards value-based care, which means there is a greater need than ever for data-driven decision-making.

As a result, healthcare is an exciting new research topic for data scientists.

There are many different ways in which data can be used to improve healthcare, and there is a ton of room for newcomers to make discoveries.

30.) Remote Work

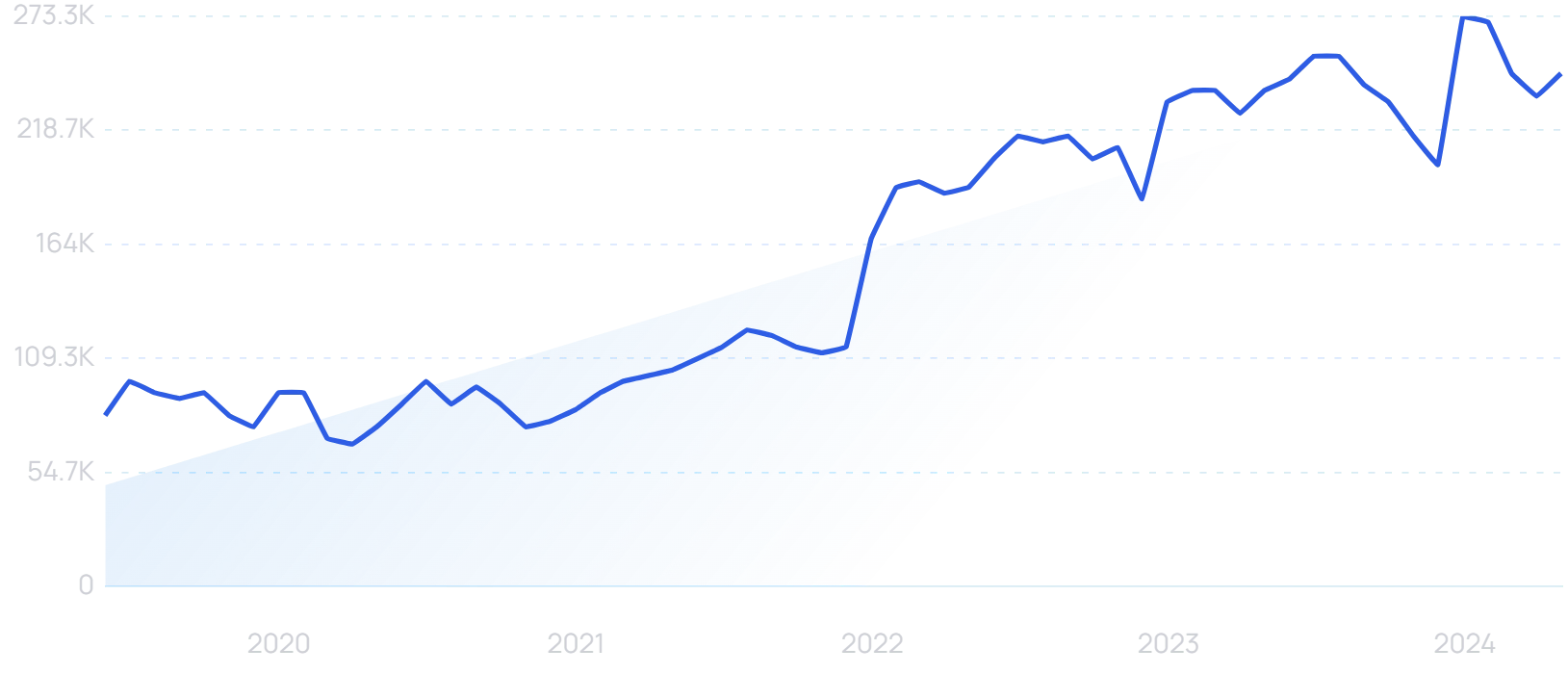

There’s no doubt that remote work is on the rise.

In today’s global economy, more and more businesses are allowing their employees to work from home or anywhere else they can get a stable internet connection.

But what does this mean for data science? Well, for one thing, it opens up a whole new field of research.

For example, how does remote work impact employee productivity?

What are the best ways to manage and collaborate on data science projects when team members are spread across the globe?

And what are the cybersecurity risks associated with working remotely?

These are just a few of the questions that data scientists will be able to answer with further research.

So if you’re looking for a new topic to sink your teeth into, remote work in data science is a great option.

31.) Data-Driven Journalism

Data-driven journalism is an exciting new field of research that combines the best of both worlds: the rigor of data science with the creativity of journalism.

By applying data analytics to large datasets, journalists can uncover stories that would otherwise be hidden.

And telling these stories compellingly can help people better understand the world around them.

Data-driven journalism is still in its infancy, but it has already had a major impact on how news is reported.

In the future, it will only become more important as data becomes increasingly accessible to journalists.

It is an exciting new topic and research field for data scientists to explore.

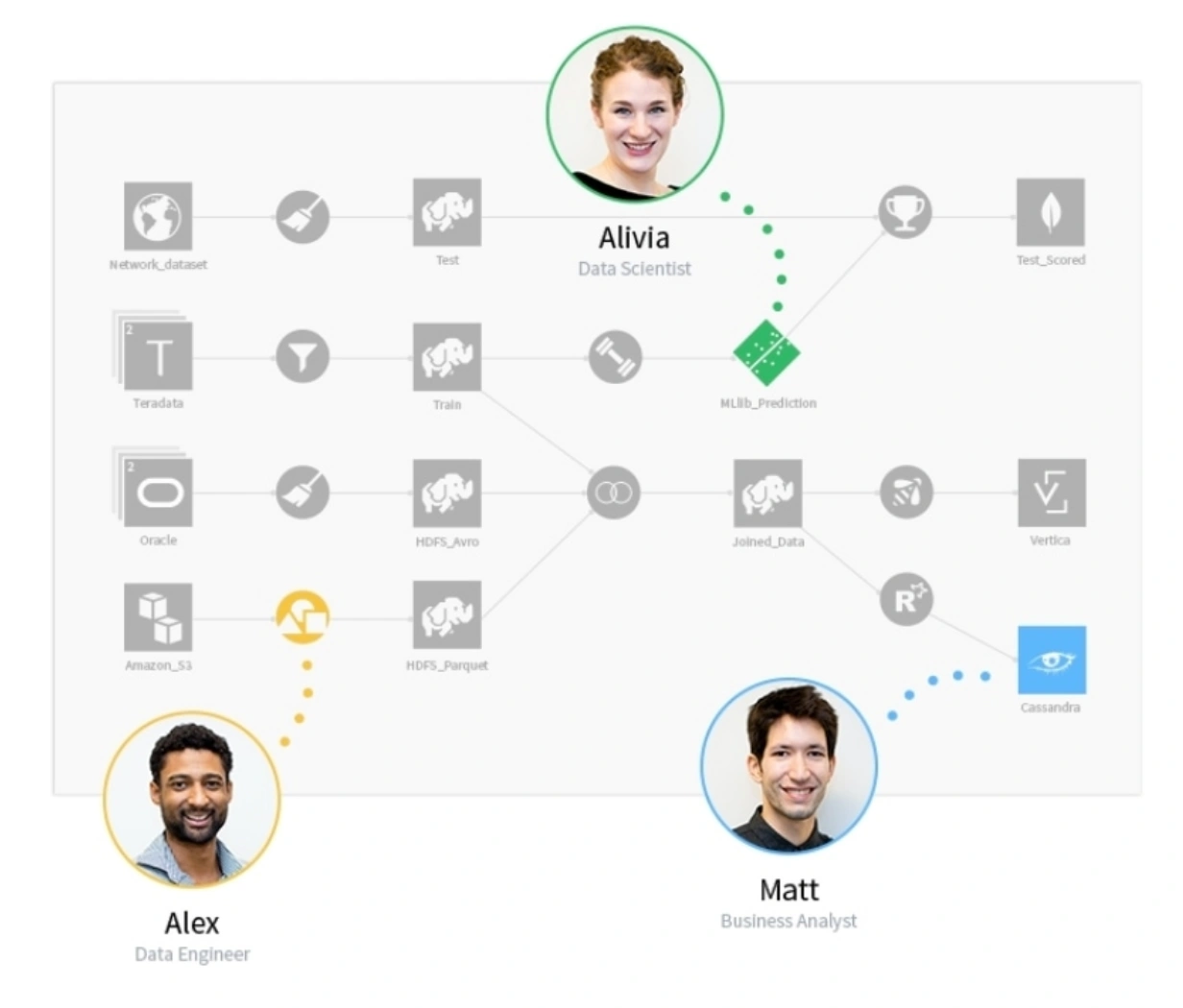

32.) Data Engineering

Data engineering is a staple in data science, focusing on efficiently managing data.

Data engineers are responsible for developing and maintaining the systems that collect, process, and store data.

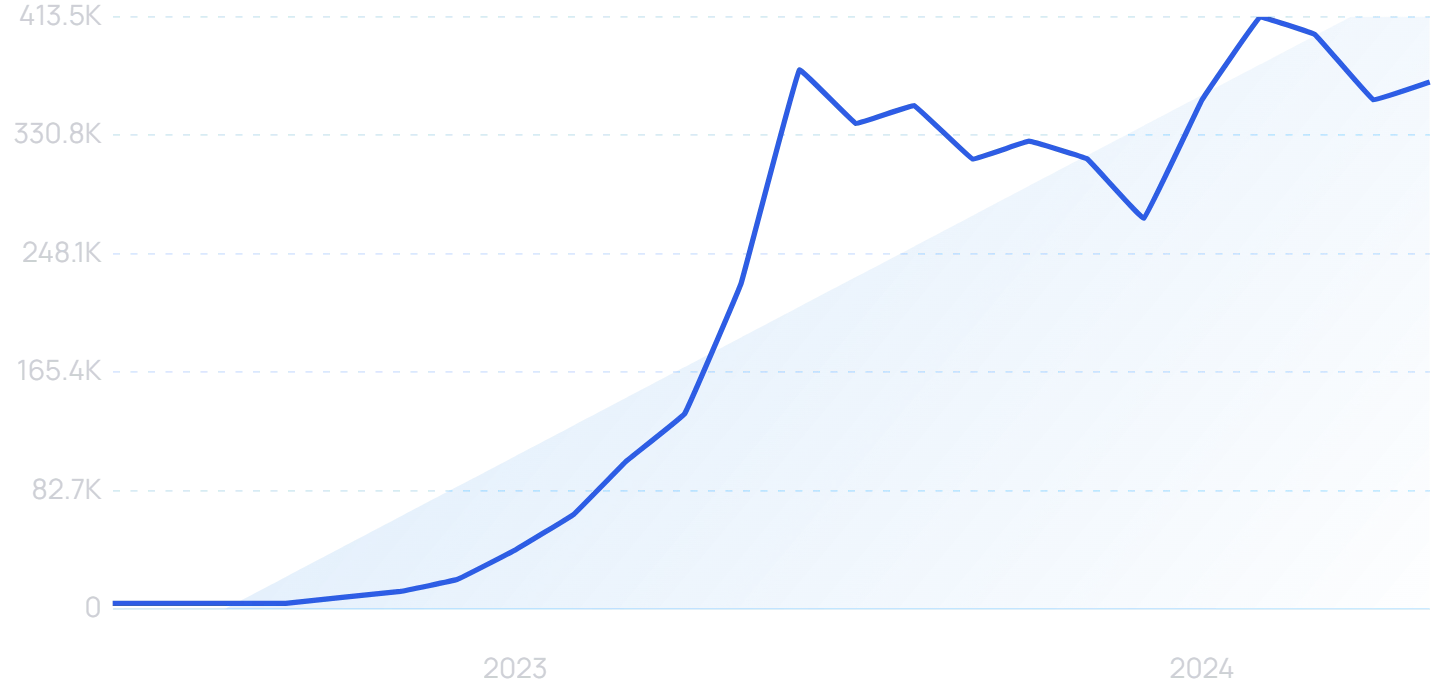

In recent years, there has been an increasing demand for data engineers as the volume of data generated by businesses and organizations has grown exponentially.

Data engineers must be able to design and implement efficient data-processing pipelines and have the skills to optimize and troubleshoot existing systems.

If you are looking for a challenging research topic that could have an immediate, worldwide impact, then improving existing data engineering approaches or creating new ones would be a good start.
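A data-processing pipeline is often described as extract, transform, load (ETL). The sketch below runs all three steps on a small, hypothetical CSV of orders. It parses the raw text, drops a row with a missing amount and normalizes country codes, then loads the result into an in-memory SQLite database standing in for a real data store.

```python
import csv
import io
import sqlite3

# Hypothetical raw export: one row is missing its amount, casing is inconsistent
RAW_CSV = """order_id,amount,country
1,19.99,us
2,,de
3,5.00,US
"""

def extract(text):
    """Extract: parse raw CSV text into a list of dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transform: skip rows without an amount, convert types, normalize casing."""
    clean = []
    for row in rows:
        if not row["amount"]:
            continue
        clean.append((int(row["order_id"]), float(row["amount"]), row["country"].upper()))
    return clean

def load(rows, conn):
    """Load: write the cleaned rows into a SQL table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, amount REAL, country TEXT)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()

conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)
print(conn.execute("SELECT country, SUM(amount) FROM orders GROUP BY country").fetchall())
```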

33.) Data Curation

Data curation has been a hot topic in the data science community for some time now.

Curating data involves organizing, managing, and preserving data so researchers can use it.

Data curation can help to ensure that data is accurate, reliable, and accessible.

It can also help to prevent research duplication and to facilitate the sharing of data between researchers.

Data curation is a vital part of data science. In recent years, there has been an increasing focus on data curation, as it has become clear that it is essential for ensuring data quality.

As a result, data curation is now a major research topic in data science.

There are numerous books and articles on the subject, and many universities offer courses on data curation.

Data curation is an integral part of data science and will only become more important in the future.
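Here is a small sketch of two routine curation checks mentioned above: flagging incomplete records and removing duplicates before data is shared. The records and the list of required fields are made up for illustration; real curation work involves much more than these two checks.

```python
# Hypothetical records collected by several researchers
RECORDS = [
    {"id": "s1", "species": "Quercus robur", "collected": "2021-05-02"},
    {"id": "s2", "species": "", "collected": "2021-05-03"},
    {"id": "s1", "species": "Quercus robur", "collected": "2021-05-02"},
    {"id": "s3", "species": "Fagus sylvatica", "collected": "2021-06-11"},
]

# Assumed schema: every record must have these fields filled in
REQUIRED_FIELDS = ("id", "species", "collected")


def curate(records):
    """Split records into a clean, de-duplicated set and a list of problems to review."""
    clean, issues, seen = [], [], set()
    for record in records:
        missing = [field for field in REQUIRED_FIELDS if not record.get(field)]
        if missing:
            issues.append((record.get("id"), "missing: " + ", ".join(missing)))
            continue
        key = tuple(record[field] for field in REQUIRED_FIELDS)
        if key in seen:
            issues.append((record["id"], "duplicate"))
            continue
        seen.add(key)
        clean.append(record)
    return clean, issues


clean, issues = curate(RECORDS)
print(len(clean), issues)
```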

34.) Meta-Learning

Meta-learning is gaining a ton of steam in data science. It’s learning how to learn.

So, if you can learn how to learn, you can learn anything much faster.

Meta-learning is most often applied in deep learning, as applications outside of it are generally harder.

In deep learning, many hyperparameters need to be tuned to get a good model, and there’s usually a lot of data.

You can save time and effort if you can automatically and quickly do this tuning.

In machine learning, meta-learning can improve models’ performance by sharing knowledge between different models.

For example, if you have several different models that all solve the same problem, you can use meta-learning to share knowledge between them and improve the group’s overall performance.

I don’t know how anyone looking for a research topic could stay away from this field; it’s what the Terminator warned us about!
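One simple flavor of meta-learning is warm-starting: reuse what worked on similar past tasks as the starting point for a new one. The sketch below assumes a hypothetical history of tasks described by two meta-features (row and feature counts) and returns the hyperparameters of the nearest past task. It illustrates the idea only and is not a specific published method.

```python
import math

# Hypothetical history: dataset meta-features paired with the
# hyperparameters that worked best on that dataset.
PAST_TASKS = [
    ({"n_rows": 1_000, "n_features": 10}, {"learning_rate": 0.1, "depth": 3}),
    ({"n_rows": 100_000, "n_features": 50}, {"learning_rate": 0.05, "depth": 6}),
    ({"n_rows": 5_000_000, "n_features": 200}, {"learning_rate": 0.01, "depth": 8}),
]


def task_distance(a, b):
    """Euclidean distance between meta-features on a log10 scale."""
    return math.sqrt(sum((math.log10(a[k]) - math.log10(b[k])) ** 2 for k in a))


def warm_start(new_task, history=PAST_TASKS):
    """Reuse the hyperparameters of the most similar past task as a starting point."""
    _, best_params = min(history, key=lambda item: task_distance(new_task, item[0]))
    return dict(best_params)


initial = warm_start({"n_rows": 80_000, "n_features": 40})
print(initial)
```

A real system would then refine these starting values with a search on the new task; the time saved comes from starting that search close to a good region.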

35.) Data Warehousing

A data warehouse is a system used for data analysis and reporting.

It is a central data repository created by combining data from multiple sources.

Data warehouses are often used to store historical data, such as sales data, financial data, and customer data.

This kind of data can be used to create reports and perform statistical analysis.

Data warehouses also store data that the organization is not currently using.

This type of data can be used for future research projects.
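Below is a minimal sketch of the warehouse idea described above, using Python's built-in `sqlite3` module: records from two hypothetical source systems with different layouts are normalized into one central `fact_sales` table, which is then queried for a report. The table name, columns, and data are assumptions for illustration; production warehouses run on dedicated systems with much richer schemas.

```python
import sqlite3

# Two hypothetical source systems with different record layouts
ONLINE_ORDERS = [("2024-01-03", "widget", 3, 9.99), ("2024-01-04", "gadget", 1, 24.50)]
STORE_SALES = [{"day": "2024-01-03", "sku": "widget", "qty": 2, "unit_price": 9.99}]


def build_warehouse():
    """Load both sources into one central fact table (an in-memory database here)."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE fact_sales ("
        "sale_date TEXT, product TEXT, channel TEXT, quantity INTEGER, revenue REAL)"
    )
    # Transform each source into the shared layout before loading it
    conn.executemany(
        "INSERT INTO fact_sales VALUES (?, ?, 'online', ?, ?)",
        [(day, product, qty, qty * price) for day, product, qty, price in ONLINE_ORDERS],
    )
    conn.executemany(
        "INSERT INTO fact_sales VALUES (?, ?, 'store', ?, ?)",
        [(r["day"], r["sku"], r["qty"], r["qty"] * r["unit_price"]) for r in STORE_SALES],
    )
    conn.commit()
    return conn


def revenue_by_product(conn):
    """A simple report: units sold and revenue per product across all channels."""
    return conn.execute(
        "SELECT product, SUM(quantity), ROUND(SUM(revenue), 2) "
        "FROM fact_sales GROUP BY product ORDER BY product"
    ).fetchall()


conn = build_warehouse()
print(revenue_by_product(conn))
```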

Data warehousing is an incredible research topic in data science because it offers a variety of benefits.

Data warehouses help organizations to save time and money by reducing the need for manual data entry.

They also help to improve the accuracy of reports and provide a complete picture of the organization’s performance.

Data warehousing feels like one of the weakest parts of the Data Science Technology Stack; if you want a research topic that could have a monumental impact – data warehousing is an excellent place to look.

36.) Business Intelligence

Business intelligence aims to collect, process, and analyze data to help businesses make better decisions.

Business intelligence can improve marketing, sales, customer service, and operations.

It can also be used to identify new business opportunities and track competition.

BI is another tool in your company’s toolbox for staying ahead in your market.

Data science is well suited to business intelligence because it combines statistics, computer science, and machine learning.

Data scientists can use business intelligence to answer questions like, “What are our customers buying?” or “What are our competitors doing?” or “How can we increase sales?”
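Questions like “What are our customers buying?” often start as simple aggregations. The sketch below assumes a hypothetical transaction log of `(customer_id, product)` pairs and answers two versions of that question: which products sell most often, and how many distinct customers buy each one.

```python
from collections import Counter

# Hypothetical transaction log: (customer_id, product)
TRANSACTIONS = [
    ("c1", "coffee"), ("c2", "tea"), ("c1", "coffee"),
    ("c3", "coffee"), ("c2", "muffin"), ("c3", "tea"),
]


def top_products(transactions, n=2):
    """The n products bought most often, with their purchase counts."""
    return Counter(product for _, product in transactions).most_common(n)


def distinct_buyers(transactions):
    """How many different customers bought each product."""
    buyers = {}
    for customer, product in transactions:
        buyers.setdefault(product, set()).add(customer)
    return {product: len(customers) for product, customers in buyers.items()}


print(top_products(TRANSACTIONS))
print(distinct_buyers(TRANSACTIONS))
```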

Business intelligence is a great way to improve your business’s bottom line and an excellent opportunity to dive deep into a well-respected research topic.

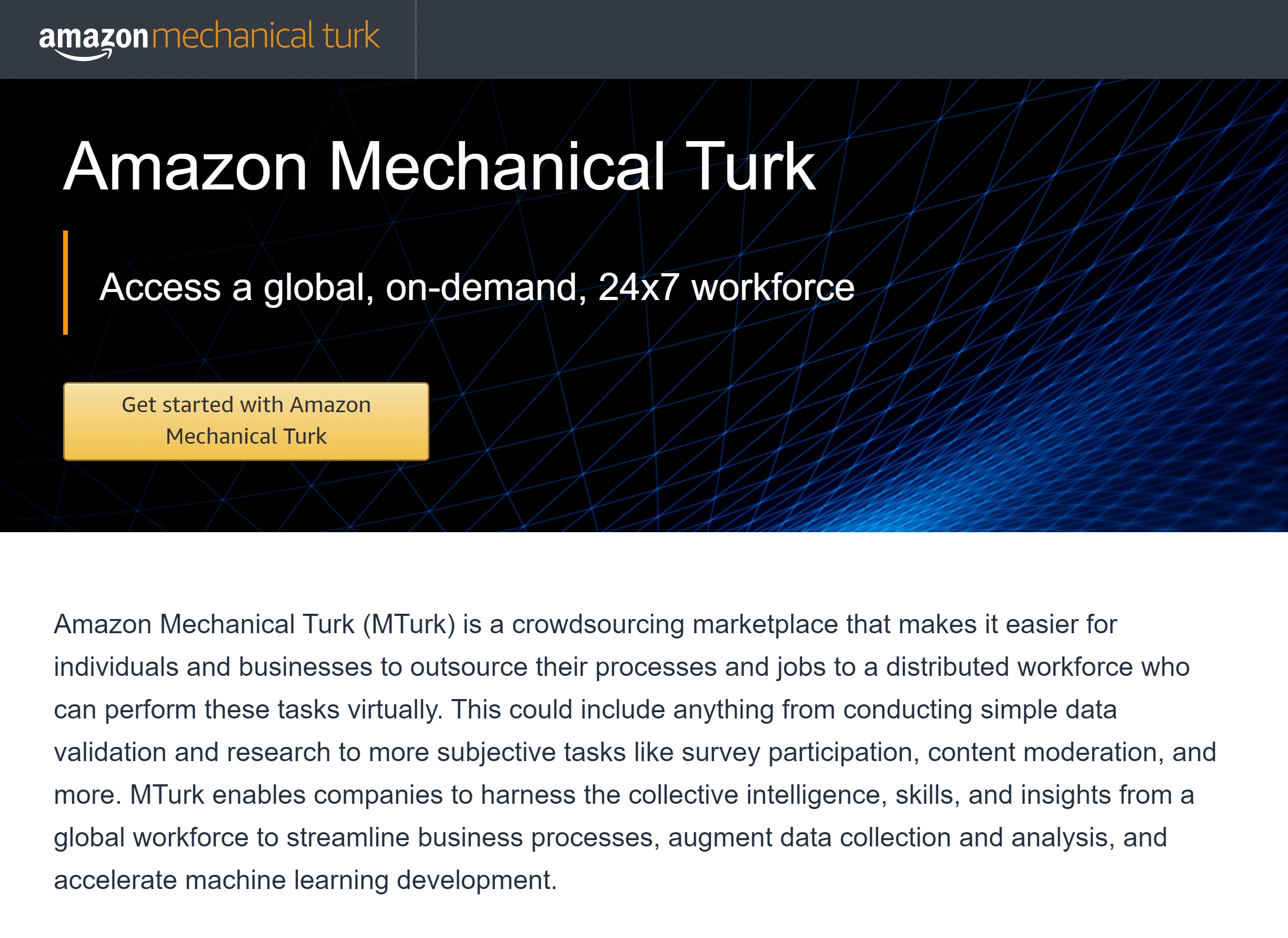

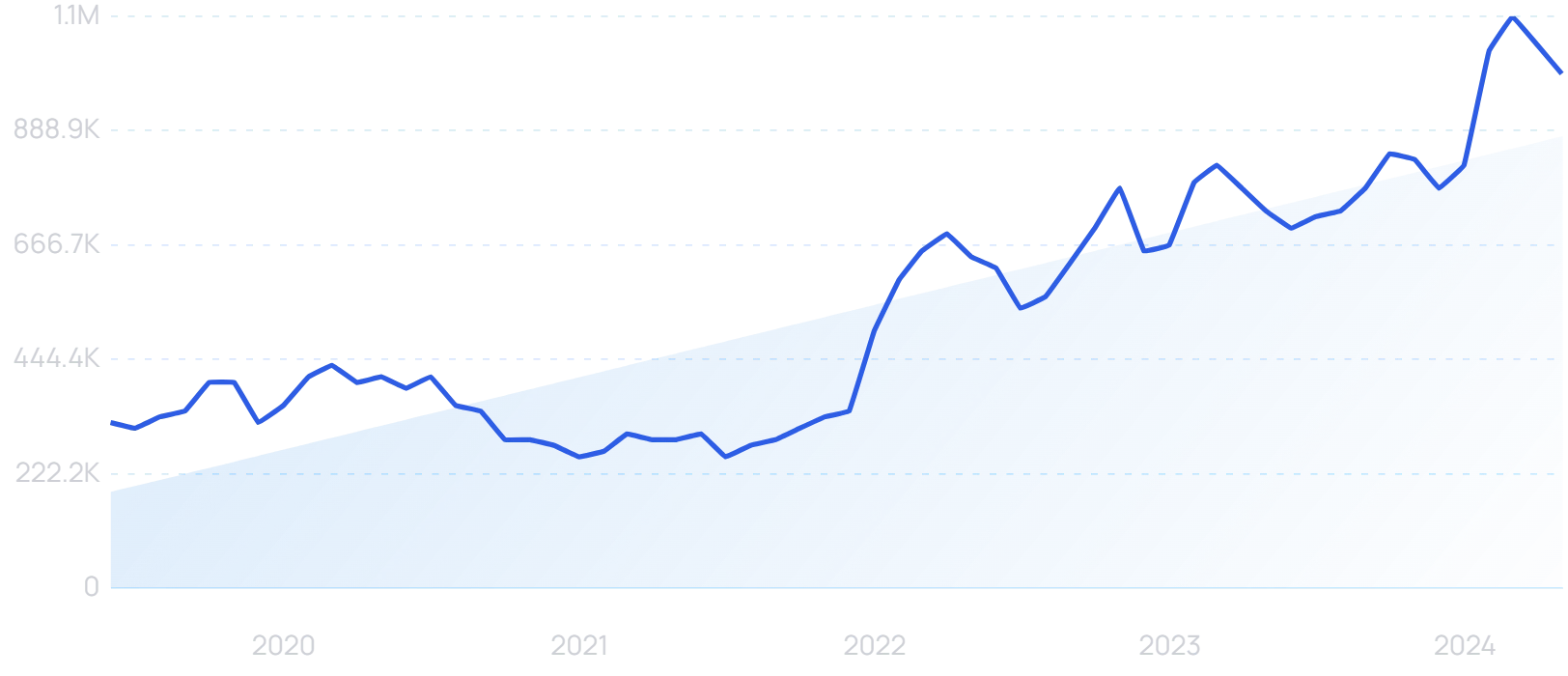

37.) Crowdsourcing

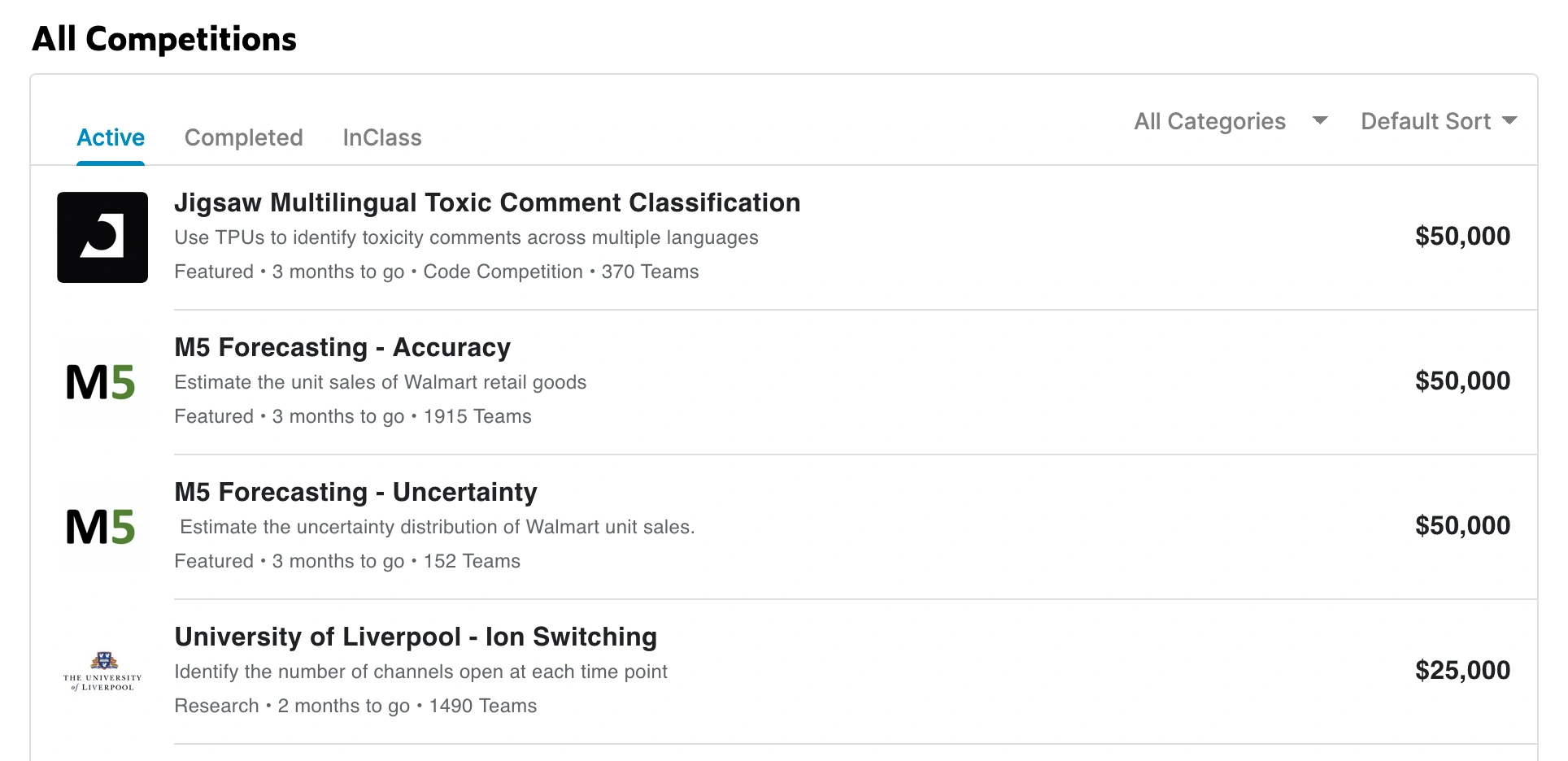

One of the newest areas of research in data science is crowdsourcing.

Crowdsourcing is a process of sourcing tasks or projects to a large group of people, typically via the internet.

This can be done for various purposes, such as gathering data, developing new algorithms, or even just for fun (think: online quizzes and surveys).

But what makes crowdsourcing so powerful is that it allows businesses and organizations to tap into a vast pool of talent and resources they wouldn’t otherwise have access to.

And with the rise of social media, it’s easier than ever to connect with potential crowdworkers worldwide.

Imagine if you could improve that process by finding innovative ways to help people work together.

That would have a huge effect.
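A common first question in crowdsourced data gathering is how to combine several workers’ answers for the same item. The simplest baseline is majority vote, sketched below with hypothetical image labels; the agreement score flags items where workers disagree. Research methods go further, for example by estimating each worker’s reliability, so treat this only as a starting point.

```python
from collections import Counter

# Hypothetical labels collected from several crowd workers for each item
CROWD_LABELS = {
    "img_001": ["cat", "cat", "dog"],
    "img_002": ["dog", "dog", "dog"],
    "img_003": ["cat", "dog"],
}


def majority_vote(labels_by_item):
    """Return each item's most common label and the fraction of workers who chose it."""
    result = {}
    for item, labels in labels_by_item.items():
        # most_common breaks ties by first appearance, so ties need manual review
        label, votes = Counter(labels).most_common(1)[0]
        result[item] = (label, votes / len(labels))
    return result


aggregated = majority_vote(CROWD_LABELS)
needs_review = [item for item, (_, agreement) in aggregated.items() if agreement <= 0.5]
print(aggregated, needs_review)
```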

Final Thoughts: Are These Research Topics In Data Science For You?

Thirty-seven different research topics in data science are a lot to take in, but we hope you found a research topic that interests you.

If not, don’t worry – there are plenty of other great topics to explore.

The important thing is to get started with your research and find ways to apply what you learn to real-world problems.

We wish you the best of luck as you begin your data science journey!

Other Data Science Articles

We love talking about data science; here are a couple of our favorite articles:

- Why Are You Interested In Data Science?


Research Areas


The world is being transformed by data, and data-driven analysis is rapidly becoming an integral part of science and society. Stanford Data Science is a collaborative effort across many departments in all seven schools. We strive to unite existing data science research initiatives and create interdisciplinary collaborations, connecting data science and related methodologists with disciplines that are being transformed by data science and computation.

Our work supports research in a variety of fields where incredible advances are being made through the facilitation of meaningful collaborations between domain researchers, with deep expertise in societal and fundamental research challenges, and methods researchers that are developing next-generation computational tools and techniques, including:

Data Science for Wildland Fire Research

In recent years, wildfire has gone from an infrequent and distant news item to a center-stage issue spanning many consecutive weeks for urban and suburban communities. Frequent wildfires are changing everyday life in California in numerous ways, from public safety power shutoffs to hazardous air quality, that seemed inconceivable as recently as 2015. Moreover, elevated wildfire risk in the western United States (and similar climates globally) is here to stay for the foreseeable future. There is a plethora of problems that need solutions in the wildland fire arena; many of them are well suited to a data-driven approach.


Data Science for Physics

Astrophysicists and particle physicists at Stanford and at the SLAC National Accelerator Laboratory are deeply engaged in studying the Universe at both the largest and smallest scales, with state-of-the-art instrumentation at telescopes and accelerator facilities.

Data Science for Economics

Many of the most pressing questions in empirical economics concern causal questions, such as the impact, both short and long run, of educational choices on labor market outcomes, and of economic policies on distributions of outcomes. This makes them conceptually quite different from the predictive type of questions that many of the recently developed methods in machine learning are primarily designed for.

Data Science for Education

Educational data spans K-12 school and district records, digital archives of instructional materials and gradebooks, and student responses on course surveys. Data science applied to actual classroom interaction is also of growing interest and increasingly feasible.

Data Science for Human Health

It is clear that data science will be a driving force in transitioning the world’s healthcare systems from reactive “sick-based” care to proactive, preventive care.

Data Science for Humanity

Our modern era is characterized by massive amounts of data documenting the behaviors of individuals, groups, organizations, cultures, and indeed entire societies. This wealth of data on modern humanity is accompanied by massive digitization of historical data, both textual and numeric, in the form of historic newspapers, literary and linguistic corpora, economic data, censuses, and other government data, gathered and preserved over centuries, and newly digitized, acquired, and provisioned by libraries, scholars, and commercial entities.

Data Science for Linguistics

The impact of data science on linguistics has been profound. All areas of the field depend on having a rich picture of the true range of variation, within dialects, across dialects, and among different languages. The subfield of corpus linguistics is arguably as old as the field itself and, with the advent of computers, gave rise to many core techniques in data science.

Data Science for Nature and Sustainability

Many key sustainability issues translate into decision and optimization problems and could greatly benefit from data-driven decision making tools. In fact, the impact of modern information technology has been highly uneven, mainly benefiting large firms in profitable sectors, with little or no benefit in terms of the environment. Our vision is that data-driven methods can — and should — play a key role in increasing the efficiency and effectiveness of the way we manage and allocate our natural resources.

Ethics and Data Science

With the emergence of new techniques of machine learning, and the possibility of using algorithms to perform tasks previously done by human beings, as well as to generate new knowledge, we again face a set of new ethical questions.

The Science of Data Science

The practice of data analysis has changed enormously. Data science needs to find new inferential paradigms that allow data exploration prior to the formulation of hypotheses.


214 Best Big Data Research Topics for Your Thesis Paper

Finding an ideal big data research topic can take a long time. Big data, IoT, and robotics have evolved rapidly, and future generations will be immersed in major technologies that make work easier. Work that was once done by 10 people may now be done by one person or a machine. This is exciting because, even though some jobs will be lost, more jobs will be created. It is a win-win for everyone.

Big data is a major topic that is being embraced globally. Data science and analytics are helping institutions, governments, and the private sector. We will share with you the best big data research topics.

On top of that, we can offer you the best writing tips to help you succeed academically. As a university student, you need to do proper research to get top grades, so consult us if you need research paper writing services.

Big Data Analytics Research Topics for your Research Project

Are you looking for an ideal big data analytics research topic? Once you choose a topic, consult your professor to evaluate whether it is a great topic. This will help you to get good grades.

- What are the best tools and software for big data processing?

- Evaluate the security issues that face big data.

- An analysis of large-scale data for social networks globally.

- The influence of big data storage systems.

- The best platforms for big data computing.

- The relation between business intelligence and big data analytics.

- The importance of semantics and visualization of big data.

- Analysis of big data technologies for businesses.

- The common methods used for machine learning in big data.

- The difference between self-tuning and symmetrical spectral clustering.

- The importance of information-based clustering.

- Evaluate the hierarchical clustering and density-based clustering application.

- How is data mining used to analyze transaction data?

- The major importance of dependency modeling.

- The influence of probabilistic classification in data mining.

Interesting Big Data Analytics Topics

Who said big data had to be boring? Here are some interesting big data analytics topics that you can try. They focus on how big data can be used to make the world a better place.

- Discuss the privacy issues in big data.

- Evaluate scalable storage systems for big data.

- The best big data processing software and tools.

- Popular data mining tools and techniques.

- Evaluate the scalable architectures for parallel data processing.

- The major natural language processing methods.

- What are the best big data tools and deployment platforms?

- The best algorithms for data visualization.

- Analyze anomaly detection in cloud servers.

- The scrutiny normally applied when recruiting for big data job profiles.

- Malicious user detection in big data collection.

- Learning long-term dependencies via Fourier recurrent units.

- Nomadic computing for big data analytics.

- The elementary estimators for graphical models.

- The memory-efficient kernel approximation.

Big Data Latest Research Topics

Do you know the latest research topics at the moment? These 15 topics will help you to dive into interesting research. You may even build on research done by other scholars.

- Evaluate the data mining process.

- The influence of the various dimension reduction methods and techniques.

- The best data classification methods.

- The simple linear regression modeling methods.

- Evaluate the logistic regression modeling.

- What are the commonly used theorems?

- The influence of cluster analysis methods in big data.

- The importance of smoothing methods analysis in big data.

- How is fraud detection done through AI?

- Analyze the use of GIS and spatial data.

- How important is artificial intelligence in the modern world?

- What is agile data science?

- Analyze the behavioral analytics process.

- Semantic analytics distribution.

- How is domain knowledge important in data analysis?

Big Data Debate Topics

If you want to prosper in the field of big data, you need to tackle even difficult topics. These big data debate topics are interesting and will help you gain a better understanding.

- The difference between big data analytics and traditional data analytics methods.

- Why do you think organizations should think beyond the Hadoop hype?

- Does the size of the data matter more than how recent the data is?

- Is it true that bigger data are not always better?

- The debate of privacy and personalization in maintaining ethics in big data.

- The relation between data science and privacy.

- Do you think data science is a rebranding of statistics?

- Who delivers better results: data scientists or domain experts?

- In your view, is data science dead?

- Do you think analytics teams need to be centralized or decentralized?

- The best methods to resource an analytics team.

- The best business case for investing in analytics.

- The societal implications of the use of predictive analytics within education.

- Is there a need for greater control to prevent experimentation on social media users without their consent?

- How is the government using big data: to improve public statistics or to control the population?

University Dissertation Topics on Big Data

Are you doing your Master’s or Ph.D. and wondering what the best dissertation or thesis topic is? Why not try any of these? They are interesting and based on various phenomena. While doing the research, make sure you relate the phenomenon to modern society.

- Machine learning algorithms used for fall recognition.

- The divergence and convergence of the Internet of Things.

- Reliable data movement using bandwidth provisioning strategies.

- How does big data analytics use artificial neural networks in cloud gaming?

- How are Twitter accounts classified using network-based features?

- How is online anomaly detection done in a collaborative cloud environment?

- Evaluate the public transportation insights provided by big data.

- Evaluate a paradigm that uses nursing EHR data to predict outcomes for cancer patients.

- Discuss current lossless data compression in the smart grid.

- How does online advertising traffic prediction help in boosting businesses?

- How is the hyperspectral classification done using the multiple kernel learning paradigm?

- The analysis of large data sets downloaded from websites.

- How does social media data help advertising companies globally?

- Which are the systems recognizing and enforcing ownership of data records?

- The alternate possibilities emerging for edge computing.

The Best Big Data Analysis Research Topics and Essays

There are a lot of issues that are associated with big data. Here are some of the research topics that you can use in your essays. These topics are ideal whether in high school or college.

- The various errors and uncertainty in making data decisions.

- The application of big data on tourism.

- Automation innovation with big data or related technology.

- The business models of big data ecosystems.

- Privacy awareness in the era of big data and machine learning.

- Data privacy for big automotive data.

- How is traffic managed in software-defined data center networks?

- Big data analytics for fault detection.

- The need for machine learning with big data.

- The innovative big data processing used in health care institutions.

- The money normalization and extraction from texts.

- How is text categorization done in AI?

- The opportunistic development of data-driven interactive applications.

- The use of data science and big data towards personalized medicine.

- The programming and optimization of big data applications.

The Latest Big Data Research Topics for your Research Proposal

Doing a research proposal can be hard at first unless you choose an ideal topic. If you are just diving into the big data field, you can use any of these topics to get a deeper understanding.

- The data-centric network of things.

- Big data management in supply chains using artificial intelligence.

- The big data analytics for maintenance.

- The high confidence network predictions for big biological data.

- The performance optimization techniques and tools for data-intensive computation platforms.

- The predictive modeling in the legal context.

- Analysis of large data sets in life sciences.

- How can mobility and transport modal disparities be understood using emerging data sources?

- How do you think data analytics can support asset management decisions?

- An analysis of travel patterns from cellular network data.

- The data-driven strategic planning for citywide building retrofitting.

- How is money normalization done in data analytics?

- Major techniques used in data mining.

- The big data adaptation and analytics of cloud computing.

- The predictive data maintenance for fault diagnosis.

Interesting Research Topics on A/B Testing In Big Data

A/B testing topics are different from the normal big data topics. However, you use a very similar methodology to find the reasons behind the issues. These topics are interesting and will help you gain a deeper understanding.

- How is ultra-targeted marketing done?

- The transition of A/B testing from digital to offline.

- How can big data and A/B testing be used to win an election?

- Evaluate the use of A/B testing on big data.

- Evaluate A/B testing as a randomized control experiment.

- How does A/B testing work?

- The mistakes to avoid while conducting the A/B testing.

- The best time to use A/B testing.

- The best way to interpret results for an A/B test.

- The major principles of A/B tests.

- Evaluate cluster randomization in big data.

- The best way to analyze A/B test results and the statistical significance.

- How is A/B testing used in boosting businesses?

- The importance of data analysis in conversion research.

- The importance of A/B testing in data science.
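Several of the topics above (A/B testing as a randomized controlled experiment, interpreting results, statistical significance) come down to comparing two conversion rates. A standard way to do this is the two-proportion z-test, sketched below with made-up visitor and conversion counts. It assumes users were randomly assigned to each variant and that samples are large enough for the normal approximation to hold.

```python
import math


def two_proportion_z_test(conversions_a, visitors_a, conversions_b, visitors_b):
    """Return (z, two-sided p-value) for the difference in conversion rates B - A."""
    p_a = conversions_a / visitors_a
    p_b = conversions_b / visitors_b
    # Pooled rate under the null hypothesis that both variants convert equally
    pooled = (conversions_a + conversions_b) / (visitors_a + visitors_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / visitors_a + 1 / visitors_b))
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal tail, written with erfc
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical experiment: 5,000 visitors per variant
z, p = two_proportion_z_test(200, 5000, 250, 5000)
print(f"z = {z:.2f}, p = {p:.3f}")
```

With 200 of 5,000 conversions for A and 250 of 5,000 for B, z comes out at about 2.4 and the two-sided p-value at about 0.016.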

Amazing Research Topics on Big Data and Local Governments

Governments are now using big data to make citizens’ lives better, both within government itself and across its various institutions. These topics are based on real-life experiences and on making the world better.

- Assess the benefits and barriers of big data in the public sector.

- The best approach to smart city data ecosystems.

- Big data analytics used for policymaking.

- Evaluate smart technology and the emergence of algorithmic bureaucracy.

- Evaluate the use of citizen scoring in public services.

- An analysis of the government administrative data globally.

- The public values found in the era of big data.

- Public engagement on local government data use.

- Data analytics use in policymaking.

- How are algorithms used in public sector decision-making?

- The democratic governance in the big data era.

- The best business model innovation to be used in sustainable organizations.

- How does the government use the collected data from various sources?

- The role of big data for smart cities.

- How does big data play a role in policymaking?

Easy Research Topics on Big Data

Who said big data topics had to be hard? Here are some of the easiest research topics. They are based on data management, research, and data retention. Pick one and try it!

- Who uses big data analytics?

- Evaluate structure machine learning.

- Explain the whole deep learning process.

- What are the best ways to manage platforms for enterprise analytics?

- What new technologies are used in data management?

- What is the importance of data retention?

- The best way to work with images when doing research.

- The best way to promote research outreach through data management.

- The best way to source and manage external data.

- Does machine learning improve the quality of data?

- Describe the security technologies that can be used in data protection.

- Evaluate token-based authentication and its importance.

- How can poor data security lead to the loss of information?

- How to determine whether data is secure.

- What is the importance of centralized key management?

Unique IoT and Big Data Research Topics

The Internet of Things has evolved, and many devices now use it. There are smart devices, smart cities, smart locks, and much more. Things can now be controlled at the touch of a button.

- Evaluate the 5G networks and IoT.

- Analyze the use of artificial intelligence in the modern world.

- How do ultra-low-power IoT technologies work?

- Evaluate the adaptive systems and models at runtime.

- How have smart cities and smart environments improved the living space?

- The importance of the IoT-based supply chains.

- How does smart agriculture influence water management?

- Naming and identifiers for internet applications.

- How does the smart grid influence energy management?

- What are the best design principles for IoT application development?

- The best human-device interactions for the Internet of Things.

- The relation between urban dynamics and crowdsourcing services.

- The best wireless sensor network for IoT security.

- The best intrusion detection in IoT.

- The importance of big data on the Internet of Things.

Big Data Database Research Topics You Should Try

Big data is broad and interesting. These big data database research topics will put you in a better place in your research. You also get to evaluate the roles of various phenomena.

- The best cloud computing platforms for big data analytics.

- The parallel programming techniques for big data processing.

- The importance of big data models and algorithms in research.

- Evaluate the role of big data analytics for smart healthcare.

- How is big data analytics used in business intelligence?

- The best machine learning methods for big data.

- Evaluate the Hadoop programming in big data analytics.

- What is privacy-preserving big data analytics?

- The best tools for massive big data processing.

- IoT deployment by governments and Internet service providers.

- How will IoT be used for future internet architectures?

- How does big data close the gap between research and implementation?

- What are the cross-layer attacks in IoT?

- The influence of big data and smart city planning in society.

- Why do you think user access control is important?

Big Data Scala Research Topics

Scala is a general-purpose programming language that runs on the Java Virtual Machine and is widely used in big data work; Apache Spark, for example, is written in Scala. It interoperates closely with Java. Here are some of the best Scala questions that you can research.

- What are the most used languages in big data?

- How is Scala used in big data research?

- Is Scala better than Java for big data?

- What makes Scala a concise programming language?

- How does Scala handle real-time stream processing?

- What are the various libraries for data science and data analysis?

- How does Scala allow imperative programming in data collection?

- Evaluate how Scala’s REPL supports interactive work.

- Evaluate Scala’s IDE support.

- The data catalog reference model.

- Evaluate the basics of data management and its influence on research.

- Discuss the behavioral analytics process.

- What is meant by the experience economy?

- The difference between agile data science and the Scala language.

- Explain the graph analytics process.

Independent Research Topics for Big Data

These independent research topics for big data are based on the various technologies and how they are related. Big data will become increasingly important for modern society.

- The biggest investments in big data analysis.

- Why are multi-cloud and hybrid settings taking deep root?

- Why do you think machine learning will be in focus for a long while?

- Discuss in-memory computing.

- What is the difference between edge computing and in-memory computing?

- The relation between the Internet of things and big data.

- How will digital transformation make the world a better place?

- How does data analysis help in social network optimization?

- How will complex big data be essential for future enterprises?

- Compare the various big data frameworks.

- The best way to gather and monitor traffic information using CCTV images.

- Evaluate the hierarchical structure of groups and clusters in the decision tree.

- What 3D mapping techniques are used for live streaming data?

- How does machine learning help to improve data analysis?

- Evaluate data stream management in task allocation.

- How is big data provisioned through edge computing?

- The model-based clustering of texts.

- The best ways to manage big data.

- The use of machine learning in big data.

Is Your Big Data Thesis Giving You Problems?

These are some of the best topics that you can use to prosper in your studies. Not only are they easy to research, but they also reflect current, real-world issues. Whether you are at a university or a college, you need to put enough effort into your studies to prosper. However, if you have time constraints, we can provide professional writing help. Are you looking for online expert writers? Look no further; we will provide quality work at a cheap price.



Ten Research Challenge Areas in Data Science

Although data science builds on knowledge from computer science, mathematics, statistics, and other disciplines, data science is a unique field with many mysteries to unlock: challenging scientific questions and pressing questions of societal importance.

Is data science a discipline?

Data science is a field of study: one can get a degree in data science, get a job as a data scientist, and get funded to do data science research. But is data science a discipline, or will it evolve to be one, distinct from other disciplines? Here are a few meta-questions about data science as a discipline.

- What is/are the driving deep question(s) of data science? Each scientific discipline (usually) has one or more “deep” questions that drive its research agenda: What is the origin of the universe (astrophysics)? What is the origin of life (biology)? What is computable (computer science)? Does data science inherit its deep questions from all its constituency disciplines or does it have its own unique ones?

- What is the role of the domain in the field of data science? People (including this author) (Wing, Janeja, Kloefkorn, & Erickson, 2018) have argued that data science is unique in that it is not just about methods, but about the use of those methods in the context of a domain: the domain of the data being collected and analyzed, and the domain in which the question that the data is meant to answer arises. Is the inclusion of a domain inherent in defining the field of data science? If so, is the way it is included unique to data science?

- What makes data science data science? Is there a problem unique to data science that one can convincingly argue would not be addressed or asked by any of its constituent disciplines, e.g., computer science and statistics?

Ten research areas

While answering the above meta-questions is still under lively debate, including within the pages of this journal, we can ask an easier question, one that also underlies any field of study: What are the research challenge areas that drive the study of data science? Here is a list of ten. They are not in any priority order, and some of them are related to each other. They are phrased as challenge areas, not challenge questions. They are not necessarily the “top ten” but they are a good ten to start the community discussing what a broad research agenda for data science might look like. 1

- Scientific understanding of learning, especially deep learning algorithms. As much as we admire the astonishing successes of deep learning, we still lack a scientific understanding of why deep learning works so well. We do not understand the mathematical properties of deep learning models. We do not know how to explain why a deep learning model produces one result and not another. We do not understand how robust or fragile they are to perturbations to input data distributions. We do not understand how to verify that deep learning will perform the intended task well on new input data. Deep learning is an example of where experimentation in a field is far ahead of any kind of theoretical understanding.

- Causal reasoning. Machine learning is a powerful tool to find patterns and examine correlations, particularly in large data sets. While the adoption of machine learning has opened many fruitful areas of research in economics, social science, and medicine, these fields require methods that move beyond correlational analyses and can tackle causal questions. A rich and growing area of current study is revisiting causal inference in the presence of large amounts of data. Economists are already revisiting causal reasoning by devising new methods at the intersection of economics and machine learning that make causal inference estimation more efficient and flexible (Athey, 2016), (Taddy, 2019). Data scientists are just beginning to explore multiple causal inference, not just to overcome some of the strong assumptions of univariate causal inference, but because most real-world observations are due to multiple factors that interact with each other (Wang & Blei, 2018).
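To see why purely correlational analysis can mislead, here is a small simulation in pure Python with entirely hypothetical numbers. A binary confounder (severity) makes patients both more likely to be treated and likely to have worse outcomes. The naive treated-versus-untreated comparison then gets even the sign of the effect wrong, while classic backdoor adjustment (compare within severity strata, then average by stratum size) recovers it. This is textbook adjustment for a single observed confounder, not the newer methods cited above.

```python
import random


def simulate(n=100_000, true_effect=2.0, seed=0):
    """Generate (severe, treated, outcome) rows where severity drives both."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        severe = rng.random() < 0.5
        # Severe cases are much more likely to receive treatment
        treated = rng.random() < (0.8 if severe else 0.2)
        # Severity lowers the outcome; treatment raises it by true_effect
        outcome = 10.0 - 5.0 * severe + true_effect * treated + rng.gauss(0, 1)
        rows.append((severe, treated, outcome))
    return rows


def mean(values):
    return sum(values) / len(values)


def naive_effect(rows):
    """Difference in mean outcome between treated and untreated, ignoring severity."""
    return mean([y for _, t, y in rows if t]) - mean([y for _, t, y in rows if not t])


def adjusted_effect(rows):
    """Backdoor adjustment: within-stratum differences weighted by stratum size."""
    total = 0.0
    for stratum in (True, False):
        group = [(t, y) for s, t, y in rows if s == stratum]
        diff = mean([y for t, y in group if t]) - mean([y for t, y in group if not t])
        total += diff * len(group) / len(rows)
    return total


rows = simulate()
print(round(naive_effect(rows), 2), round(adjusted_effect(rows), 2))
```

With these settings the naive estimate comes out near -1 even though the true effect is +2, while the adjusted estimate lands close to 2. The adjustment only works because the confounder is observed; much of the research described above concerns what to do when that assumption fails.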

- Precious data. Data can be precious for one of three reasons: the dataset is expensive to collect; the dataset contains a rare event (low signal-to-noise ratio); or the dataset is artisanal (small and task-specific). A good example of expensive data comes from large, one-of-a-kind, expensive scientific instruments, e.g., the Large Synoptic Survey Telescope, the Large Hadron Collider, and the IceCube Neutrino Detector at the South Pole. A good example of rare event data is data from sensors on physical infrastructure, such as bridges and tunnels; sensors produce a lot of raw data, but the disastrous event they are used to predict is (thankfully) rare. Rare data can also be expensive to collect. A good example of artisanal data is the tens of millions of court judgments that China has released online to the public since 2014 (Liebman, Roberts, Stern, & Wang, 2017), or the more than 2 million declassified US government documents collected by Columbia's History Lab (Connelly, Madigan, Jervis, Spirling, & Hicks, 2019). For each of these different kinds of precious data, we need new data science methods and algorithms that take into consideration the domain and intended uses of the data.

- Multiple, heterogeneous data sources. For some problems, we can collect lots of data from different data sources to improve our models. For example, to predict the effectiveness of a specific cancer treatment for a human, we might build a model based on 2-D cell lines from mice, more expensive 3-D cell lines from mice, and the costly DNA sequence of the cancer cells extracted from the human. State-of-the-art data science methods cannot as yet handle combining multiple, heterogeneous sources of data to build a single, accurate model. Since many of these data sources might be precious data, this challenge is related to the third challenge. Focused research in combining multiple sources of data will provide extraordinary impact.

- Inferring from noisy and/or incomplete data. The real world is messy and we often do not have complete information about every data point. Yet, data scientists want to build models from such data to do prediction and inference. A great example of a novel formulation of this problem is the planned use of differential privacy for Census 2020 data (Garfinkel, 2019), where noise is deliberately added to a query result to maintain the privacy of individuals participating in the census. Handling "deliberate" noise is particularly important for researchers working with small geographic areas such as census blocks, since the added noise can make the data uninformative at those levels of aggregation. How then can social scientists, who for decades have been drawing inferences from census data, make inferences from this "noisy" data, and how do they combine their past inferences with these new ones? Machine learning's ability to better separate noise from signal can improve the efficiency and accuracy of those inferences.

- Trustworthy AI. We have seen rapid deployment of systems using artificial intelligence (AI) and machine learning in critical domains such as autonomous vehicles, criminal justice, healthcare, hiring, housing, human resource management, law enforcement, and public safety, where decisions taken by AI agents directly impact human lives. Consequently, there is increasing concern about whether these decisions can be trusted to be correct, reliable, robust, safe, secure, and fair, especially under adversarial attacks. One approach to building trust is through providing explanations of the outcomes of a machine learned model. If we can interpret the outcome in a meaningful way, then the end user can better trust the model. Another approach is through formal methods, where one strives to prove once and for all that a model satisfies a certain property. New trust properties yield new tradeoffs for machine learned models, e.g., privacy versus accuracy, and robustness versus efficiency. There are actually multiple audiences for trustworthy models: the model developer, the model user, and the model customer. Ultimately, for widespread adoption of the technology, it is the public who must trust these automated decision systems.

- Computing systems for data-intensive applications. Traditional designs of computing systems have focused on computational speed and power: the more cycles, the faster the application can run. Today, the primary focus of applications, especially in the sciences (e.g., astronomy, biology, climate science, materials science), is data. Also, novel special-purpose processors, e.g., GPUs, FPGAs, TPUs, are now commonly found in large data centers. Even with all these data and all this fast and flexible computational power, it can still take weeks to build accurate predictive models; however, applications, whether from science or industry, want real-time predictions. Moreover, data-hungry and compute-hungry algorithms, e.g., deep learning, are energy hogs (Strubell, Ganesh, & McCallum, 2019). We should consider not only space and time, but also energy consumption, in our performance metrics. In short, we need to rethink computer systems design from first principles, with data (not compute) as the focus. New computing system designs need to consider: heterogeneous processing; efficient layout of massive amounts of data for fast access; the target domain, application, or even task; and energy efficiency.

- Automating front-end stages of the data life cycle. The excitement in data science is due largely to the successes of machine learning, and more specifically deep learning, but before we can use machine learning methods, we need to prepare the data for analysis. The early stages in the data life cycle (Wing, 2019) are still labor-intensive and tedious. Data scientists, drawing on both computational and statistical methods, need to devise automated methods that address data cleaning and data wrangling without losing other desired properties of the end model, e.g., accuracy, precision, and robustness. One example of emerging work in this area is the Data Analysis Baseline Library (Mueller, 2019), which provides a framework to simplify and automate data cleaning, visualization, model building, and model interpretation. The Snorkel project addresses the tedious task of data labeling (Ratner et al., 2018).

- Privacy. Today, the more data we have, the better the model we can build. One way to get more data is to share data, e.g., multiple parties pool their individual datasets to collectively build a better model than any one party can build alone. However, in many cases, due to regulation or privacy concerns, we need to preserve the confidentiality of each party's dataset. An example of this scenario is in building a model to predict whether someone has a disease or not. If multiple hospitals could share their patient records, we could build a better predictive model; but due to Health Insurance Portability and Accountability Act (HIPAA) privacy regulations, hospitals cannot share these records. We are only now exploring practical and scalable ways, using cryptographic and statistical methods, for multiple parties to share data and/or share models while preserving the privacy of each party's dataset. Industry and government are exploring and exploiting methods and concepts, such as secure multi-party computation, homomorphic encryption, zero-knowledge proofs, and differential privacy, each as a point solution to a point problem.

- Ethics. Data science raises new ethical issues. They can be framed along three axes: (1) the ethics of data: how data are generated, recorded, and shared; (2) the ethics of algorithms: how artificial intelligence, machine learning, and robots interpret data; and (3) the ethics of practices: devising responsible innovation and professional codes to guide this emerging science (Floridi & Taddeo, 2016), and defining Institutional Review Board (IRB) criteria and processes specific to data (Wing, Janeja, Kloefkorn, & Erickson, 2018). Example ethical questions include how to detect and eliminate racial, gender, socio-economic, or other biases in machine learning models.
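The causal reasoning challenge area contrasts correlational analysis with causal questions. The sketch below illustrates why the distinction matters, assuming a made-up data-generating process in which a single observed confounder `z` drives both a treatment `t` and an outcome `y`, and the true effect of `t` on `y` is 2.0. Regression adjustment is used here as one standard textbook technique; it is not the method of the works cited above.

```python
import numpy as np

# Hypothetical data-generating process (an assumption for illustration):
# confounder z raises both the chance of treatment t and the outcome y.
rng = np.random.default_rng(0)
n = 100_000
z = rng.normal(size=n)                           # observed confounder
t = (z + rng.normal(size=n) > 0).astype(float)   # treatment more likely when z is high
y = 2.0 * t + 3.0 * z + rng.normal(size=n)       # true causal effect of t is 2.0

# Correlational estimate: difference in mean outcome, treated vs. untreated.
naive = y[t == 1].mean() - y[t == 0].mean()

# Adjusted estimate: least-squares regression of y on [1, t, z].
X = np.column_stack([np.ones(n), t, z])
coef, *_ = np.linalg.lstsq(X, y, rcond=None)
adjusted = coef[1]

print(f"naive difference: {naive:.2f}")
print(f"adjusted effect:  {adjusted:.2f}")
```

Under these assumptions the naive difference lands well above 2 (around 5.4), because treated units also tend to have high `z`, while the adjusted coefficient is close to 2. The adjustment works only because the confounder is measured; real observational data rarely comes with every confounder recorded.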
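The precious data challenge area notes that rare events have a low signal-to-noise ratio. A short illustration, using a hypothetical sensor log with one failure among 10,000 readings (the rate is an assumption), shows how a standard metric such as accuracy can hide a model that never detects the event at all.

```python
# Hypothetical sensor log: 1 failure event among 10,000 readings (assumed rate).
labels = [0] * 9_999 + [1]

# A trivial "model" that always predicts "no failure".
predictions = [0] * len(labels)

accuracy = sum(p == y for p, y in zip(predictions, labels)) / len(labels)
true_positives = sum(p == 1 and y == 1 for p, y in zip(predictions, labels))
recall = true_positives / sum(labels)  # fraction of real failures detected

print(f"accuracy = {accuracy:.4f}")  # accuracy = 0.9999
print(f"recall   = {recall:.1f}")    # recall   = 0.0
```

The model looks nearly perfect by accuracy yet misses the only event that matters, which is one reason rare-event data calls for different evaluation metrics and methods.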
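The challenge area on noisy and incomplete data observes that deliberately added noise can make small-area counts uninformative. The sketch below uses the textbook Laplace mechanism for a count query (sensitivity 1, noise scale 1/ε) to show why. It is not the Census Bureau's actual disclosure-avoidance system, and the privacy budget `epsilon` and the two counts are hypothetical values chosen for illustration.

```python
import numpy as np

def laplace_release(true_count, epsilon, rng, size=1):
    """Add Laplace noise with scale 1/epsilon to a count query (sensitivity 1)."""
    return true_count + rng.laplace(loc=0.0, scale=1.0 / epsilon, size=size)

rng = np.random.default_rng(42)
epsilon = 0.5  # hypothetical privacy-loss budget for a single query

relative_error = {}
for true_count in (12, 120_000):  # illustrative: a small block vs. a large county
    releases = laplace_release(true_count, epsilon, rng, size=10_000)
    mean_abs_err = np.mean(np.abs(releases - true_count))  # about 1/epsilon = 2
    relative_error[true_count] = mean_abs_err / true_count
    print(f"true count {true_count:>7}: mean |error| {mean_abs_err:.2f}, "
          f"relative {relative_error[true_count]:.4%}")
```

The absolute noise is the same for both counts, roughly 2 on average here, so it amounts to a large fraction of a small block's count but is negligible for a large area.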
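The trustworthy AI challenge area mentions explaining a model's outcome. For a linear model, an exact explanation exists: the score decomposes into per-feature contributions w_i × x_i plus a bias. The weights, feature names, and applicant values below are made up for illustration. For non-linear models such as deep networks, attribution methods can only approximate this kind of decomposition.

```python
# Hypothetical linear scoring model; weights, bias, and features are made up.
weights = {"income": 0.8, "debt": -1.2, "years_employed": 0.3}
bias = -0.5

def explain(x):
    """Return the score and each feature's additive contribution w_i * x_i."""
    contributions = {name: weights[name] * x[name] for name in weights}
    score = bias + sum(contributions.values())
    return score, contributions

applicant = {"income": 2.0, "debt": 1.5, "years_employed": 4.0}
score, contributions = explain(applicant)

# List features from most to least influential for this particular applicant.
for name, c in sorted(contributions.items(), key=lambda kv: -abs(kv[1])):
    print(f"{name:>15}: {c:+.2f}")
print(f"{'score':>15}: {score:+.2f}")
```

Here debt pulls the score down by 1.80 while income and years employed push it up, which an end user can inspect directly.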
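The challenge area on automating the front end of the data life cycle cites Snorkel's approach to data labeling. The sketch below shows the general idea of weak supervision: several noisy, hypothetical labeling functions for spotting spam comments, combined by a simple majority vote. Snorkel's own label model is more sophisticated (it estimates how accurate and correlated the labeling functions are), so treat this as a simplified baseline rather than Snorkel's API.

```python
from collections import Counter

ABSTAIN, NEG, POS = -1, 0, 1

# Hypothetical labeling functions: each encodes one heuristic and may abstain.
def lf_contains_link(text):
    return POS if "http" in text.lower() else ABSTAIN

def lf_says_check_out(text):
    return POS if "check out" in text.lower() else ABSTAIN

def lf_short_reply(text):
    return NEG if len(text.split()) < 5 else ABSTAIN

LABELING_FUNCTIONS = [lf_contains_link, lf_says_check_out, lf_short_reply]

def majority_vote(text):
    """Combine non-abstaining votes; ties and all-abstain cases return ABSTAIN."""
    counts = Counter(v for v in (lf(text) for lf in LABELING_FUNCTIONS) if v != ABSTAIN)
    if not counts:
        return ABSTAIN
    ranked = counts.most_common()
    if len(ranked) > 1 and ranked[0][1] == ranked[1][1]:
        return ABSTAIN
    return ranked[0][0]

comments = [
    "Check out my channel http://example.com",
    "Nice video",
    "I learned a lot about gradient descent from this lecture, thanks",
]
print([majority_vote(c) for c in comments])  # [1, 0, -1]
```

Comments left unlabeled (ABSTAIN) are exactly where additional heuristics, or a human, would be needed.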
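The privacy challenge area lists secure multi-party computation among the candidate techniques. A minimal sketch of one of its building blocks, additive secret sharing, shows how several hospitals could learn a total count without revealing their individual counts. It assumes honest-but-curious parties that do not collude, and the counts are hypothetical. Training a full predictive model this way requires considerably more machinery than a sum.

```python
import secrets

MODULUS = 2**61 - 1  # public modulus agreed on by all parties (an assumption)

def share(value, n_parties):
    """Split value into n random shares that sum to value modulo MODULUS."""
    shares = [secrets.randbelow(MODULUS) for _ in range(n_parties - 1)]
    shares.append((value - sum(shares)) % MODULUS)
    return shares

# Hypothetical per-hospital counts of patients with a given diagnosis.
hospital_counts = [130, 47, 285]
n = len(hospital_counts)

# Each hospital splits its count and sends share j to party j.
all_shares = [share(c, n) for c in hospital_counts]

# Party j sums the shares it received; any single partial sum looks random.
partial_sums = [sum(all_shares[i][j] for i in range(n)) % MODULUS for j in range(n)]

# Combining the partial sums reveals only the total, not any one hospital's count.
total = sum(partial_sums) % MODULUS
print(total)  # 462
```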
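The ethics challenge area asks how to detect biases in machine learning models. One simple and widely used check is the demographic parity gap: the difference in positive-prediction rates between groups. The decisions and group labels below are hypothetical. Demographic parity is only one of several fairness criteria, and these criteria can conflict with one another, so a gap signals something worth investigating rather than proving bias on its own.

```python
def demographic_parity_gap(predictions, groups):
    """Return per-group positive-prediction rates and the max-min gap between them."""
    rates = {}
    for g in sorted(set(groups)):
        member_preds = [p for p, grp in zip(predictions, groups) if grp == g]
        rates[g] = sum(member_preds) / len(member_preds)
    return rates, max(rates.values()) - min(rates.values())

# Hypothetical model decisions (1 = approve) and a protected attribute.
predictions = [1, 1, 0, 1, 1, 0, 0, 1, 0, 0]
groups = ["a"] * 5 + ["b"] * 5

rates, gap = demographic_parity_gap(predictions, groups)
print(rates)               # {'a': 0.8, 'b': 0.2}
print(f"gap = {gap:.2f}")  # gap = 0.60
```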

Closing remarks

As many universities and colleges are creating new data science schools, institutes, centers, etc. (Wing, Janeja, Kloefkorn, & Erickson, 2018), it is worth reflecting on data science as a field. Will data science as an area of research and education evolve into its own discipline or be a field that cuts across all other disciplines? One could argue that computer science, mathematics, and statistics share this commonality: they are each their own discipline, but they each can be applied to (almost) every other discipline. What will data science be in 10 or 50 years?

Acknowledgements

I would like to thank Cliff Stein, Gerad Torats-Espinosa, Max Topaz, and Richard Witten for their feedback on earlier renditions of this article. Many thanks to all Columbia Data Science faculty who have helped me formulate and discuss these ten (and other) challenges during our Fall 2019 retreat.

References

Athey, S. (2016). Susan Athey on how economists can use machine learning to improve policy. Retrieved from https://siepr.stanford.edu/news/susan-athey-how-economists-can-use-machine-learning-improve-policy

Berger, J., He, X., Madigan, C., Murphy, S., Yu, B., & Wellner, J. (2019). Statistics at a Crossroad: Who is for the Challenge? NSF workshop report. Retrieved from https://hub.ki/groups/statscrossroad

Connelly, M., Madigan, D., Jervis, R., Spirling, A., & Hicks, R. (2019). The History Lab. Retrieved from http://history-lab.org/

Floridi, L., & Taddeo, M. (2016). What is Data Ethics? Philosophical Transactions of the Royal Society A, vol. 374, issue 2083, December 2016.

Garfinkel, S. (2019). Deploying Differential Privacy for the 2020 Census of Population and Housing. Privacy Enhancing Technologies Symposium, Stockholm, Sweden. Retrieved from http://simson.net/ref/2019/2019-07-16%20Deploying%20Differential%20Privacy%20for%20the%202020%20Census.pdf

Liebman, B.L., Roberts, M., Stern, R.E., & Wang, A. (2017). Mass Digitization of Chinese Court Decisions: How to Use Text as Data in the Field of Chinese Law. UC San Diego School of Global Policy and Strategy, 21st Century China Center Research Paper No. 2017-01; Columbia Public Law Research Paper No. 14-551. Retrieved from https://scholarship.law.columbia.edu/faculty_scholarship/2039

Mueller, A. (2019). Data Analysis Baseline Library. Retrieved from https://libraries.io/github/amueller/dabl

Ratner, A., Bach, S., Ehrenberg, H., Fries, J., Wu, S., & Ré, C. (2018). Snorkel: Rapid Training Data Creation with Weak Supervision. Proceedings of the 44th International Conference on Very Large Data Bases.

Strubell, E., Ganesh, A., & McCallum, A. (2019). Energy and Policy Considerations for Deep Learning in NLP. Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics (ACL).

Taddy, M. (2019). Business Data Science: Combining Machine Learning and Economics to Optimize, Automate, and Accelerate Business Decisions. McGraw-Hill.

Wang, Y., & Blei, D.M. (2018). The Blessings of Multiple Causes. Retrieved from https://arxiv.org/abs/1805.06826

Wing, J.M. (2019). The Data Life Cycle. Harvard Data Science Review, vol. 1, no. 1.

Wing, J.M., Janeja, V.P., Kloefkorn, T., & Erickson, L.C. (2018). Data Science Leadership Summit, Workshop Report, National Science Foundation. Retrieved from https://dl.acm.org/citation.cfm?id=3293458

J.M. Wing, "Ten Research Challenge Areas in Data Science," Voices, Data Science Institute, Columbia University, January 2, 2020. arXiv:2002.05658.

Jeannette M. Wing is Avanessians Director of the Data Science Institute and professor of computer science at Columbia University.


99 Best Data Science Dissertation Topics

Table of Contents

What is a Data Science Dissertation?