What Is Extrapolation?

Extrapolation is a statistical technique used in data science to estimate values of data points beyond the range of the known values in the data set.

Extrapolation is an inexpensive and effective method you can use to predict future values and trends in data, as well as gain insight into the behavior of complex environments. Extrapolation is especially helpful for time series and geospatial analysis due to the technique’s ability to take into account the impact of temporal and spatial factors on the data.

Using extrapolation techniques, you can calculate unobserved values by extending a known sequence of values in the data set.

Extrapolation vs. Interpolation: What’s the Difference?

Extrapolation is often mistaken for interpolation. The two use the same techniques to estimate unknown values but differ in where the estimate falls. If the estimated value lies between two known values, then it’s an interpolation. However, if the estimated value falls outside the range of the known values, then it’s an extrapolation.
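The distinction can be expressed as a simple range check. The sketch below is illustrative only: the function name `classify_estimate` and the list of observation years are assumptions, not part of any library or of the original article.

```python
def classify_estimate(x_known, x_query):
    """Label an estimate by where the query point falls relative to the known data."""
    lowest, highest = min(x_known), max(x_known)
    # Inside the observed range (endpoints included) -> interpolation.
    if lowest <= x_query <= highest:
        return "interpolation"
    # Anything outside the observed range -> extrapolation.
    return "extrapolation"


# Hypothetical years for which observations exist.
observed_years = [2018, 2019, 2021, 2022]

print(classify_estimate(observed_years, 2020))  # falls between known years
print(classify_estimate(observed_years, 2025))  # falls beyond the last known year
```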


Common Extrapolation Methods

There are different types of extrapolation for predicting and evaluating trends in data. The following two are the most widely used extrapolation methods.

- Linear Extrapolation: This is the most basic form of extrapolation. It uses a linear equation to predict future outcomes and is best suited for predictions close to the given data. We simply draw a tangent line from the last point to extend it beyond the known values.

- Polynomial Extrapolation: This method uses a polynomial equation to make predictions about future values. We use polynomial extrapolation when the data points exhibit a non-linear trend. It is more complex than linear extrapolation and can follow curved trends more closely, although higher-degree polynomials can diverge quickly once they leave the range of the data.


How Does Extrapolation Work?

Extrapolation is basically a forecasting method common in time series analysis. The following example uses linear extrapolation to predict sales.

Let’s take an example of a company’s sales in 2020 and 2021, then extrapolate what the sales will be in 2022.

To find the value of 2022 using extrapolation, with the given sales records of the past two years, we first need to calculate the slope.

m = (y2 - y1) / (x2 - x1)

After that, we apply a line equation.

y = y1 + m · (x - x1)

We can then find the extrapolated value for 2022 by plugging the values into the equations above. We conclude that sales will be $15,086.
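The two steps above (slope, then line equation) can be sketched in Python. The sales figures used here are hypothetical placeholders chosen for round arithmetic; they are not the figures behind the $15,086 result quoted above, and `linear_extrapolate` is an illustrative helper, not a library function.

```python
def linear_extrapolate(x1, y1, x2, y2, x):
    """Extend the straight line through (x1, y1) and (x2, y2) to the point x."""
    if x2 == x1:
        raise ValueError("x1 and x2 must differ to define a slope")
    m = (y2 - y1) / (x2 - x1)   # slope: m = (y2 - y1) / (x2 - x1)
    return y1 + m * (x - x1)    # line equation: y = y1 + m * (x - x1)


# Hypothetical sales records for the two known years.
sales_2020 = 10_000.0
sales_2021 = 12_500.0

forecast_2022 = linear_extrapolate(2020, sales_2020, 2021, sales_2021, 2022)
print(f"Extrapolated 2022 sales: ${forecast_2022:,.2f}")
```

With only two known points, the forecast simply repeats the last year-over-year change, which is why this method is most trustworthy close to the known data.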

What Are the Benefits of Extrapolation?

Extrapolating is a powerful tool to help us make data-informed predictions and understand trends. Here are some reasons why we use extrapolation methods.

- If we’re worried that expert forecasts are biased but we don’t know much about the situation, extrapolation might be the best option.

- Extrapolation is inexpensive and straightforward, which means you can run the modeling as much as you need to in order to have multiple predictions.

- When you’re looking at multiple scenarios that are an important element in the forecast, such as economic trends and policy changes, extrapolation can help the process.

- Extrapolation can help identify potential risks or future opportunities.

- Extrapolation helps you identify patterns in data and make informed decisions.

What Are the Risks of Extrapolation?

Although the process of extrapolation is simple and straightforward, its accuracy and reliability depend on the trends present in the data set. Thus, careful consideration of the data set and the values it contains can help you mitigate larger errors in forecasting future trends. It’s also essential to use various methods when extrapolating to help reduce errors and ensure that the extrapolation is based on a complete and accurate understanding of the data.


Extrapolation (from class: Causal Inference)

Extrapolation is the process of estimating unknown values by extending or projecting from known data points. This technique is crucial in understanding how results observed in a specific sample or experimental setting might apply to a broader population or different contexts, which relates closely to issues of external validity and the generalizability of findings.


5 Must Know Facts For Your Next Test

- Extrapolation can introduce errors if the relationship between variables changes outside the observed range of data, potentially leading to misleading conclusions.

- In machine learning for causal inference, extrapolation is often necessary when applying learned models to new datasets, but caution must be exercised to avoid overestimating the model's applicability.

- External validity is fundamentally linked to extrapolation, as it assesses whether study results are applicable to settings or populations beyond those studied.

- Understanding the limits of extrapolation is critical; for instance, applying results from a controlled environment directly to real-world situations can yield inaccurate predictions.

- The validity of extrapolated conclusions heavily depends on the robustness of the underlying causal assumptions made during analysis.
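The first point above, that errors appear when the relationship changes outside the observed range, can be illustrated with a small simulation. Everything here is an assumption made for illustration: the "true" response is a made-up function that rises linearly and then plateaus, and `fit_line` is a hand-written least-squares helper rather than a library call.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def true_response(x):
    """Hypothetical ground truth: linear growth that levels off at x = 10."""
    return float(min(x, 10))


# The study only observed x in 0..8, where the relationship looks perfectly linear.
observed_x = list(range(0, 9))
observed_y = [true_response(x) for x in observed_x]
slope, intercept = fit_line(observed_x, observed_y)

# Compare the model's predictions with the truth inside and outside that range.
errors = {}
for x in (8, 12, 20):
    predicted = slope * x + intercept
    errors[x] = abs(predicted - true_response(x))
    print(f"x={x}: predicted={predicted:.1f}, actual={true_response(x):.1f}, error={errors[x]:.1f}")
```

The fitted line is exact inside the observed range, yet its error grows steadily once the plateau begins, and nothing in the observed data warns of the change.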

Review Questions

- Extrapolation can significantly impact the reliability of findings because it involves making predictions about unobserved data based on known values. If the underlying relationships remain stable across contexts, then extrapolated conclusions may hold true. However, if those relationships change or do not apply outside the studied sample, it can lead to erroneous interpretations and flawed decision-making. Thus, careful consideration of the context and assumptions is vital when relying on extrapolated results.

- Extrapolating machine learning models poses several challenges, including overfitting and potential changes in underlying data distributions. When a model is overfit to training data, it may not perform well when applied to new datasets due to its lack of generalization. Strategies such as cross-validation, regularization techniques, and ensuring diverse training datasets can help improve model robustness and accuracy. Additionally, conducting sensitivity analyses can assess how variations in input affect output predictions, helping validate extrapolations.

- External validity is inherently linked to extrapolation as it assesses whether research findings can be applied beyond the specific conditions of a study. If researchers fail to establish strong external validity, their ability to extrapolate results confidently to broader populations or different contexts becomes compromised. This impacts generalizability since findings that cannot be reliably extrapolated may misrepresent real-world scenarios or lead to ineffective interventions. Therefore, establishing external validity through careful study design and consideration of contextual factors is crucial for valid extrapolation.

Related terms

Generalization: The process of applying findings from a study sample to a larger population, which relies on the assumption that the sample accurately represents the population.

Overfitting: A modeling error that occurs when a machine learning model learns the details and noise in the training data to the extent that it negatively impacts its performance on new data.

Transferability: The extent to which findings from one context can be applied to another, often assessed in qualitative research settings.

© 2024 Fiveable Inc. All rights reserved.

AP® and SAT® are trademarks registered by the College Board, which is not affiliated with, and does not endorse, this website.


The importance of statistics is often overlooked, but it's hard to argue that they don't play a vital role in our lives.

They help us make decisions and understand what's going on around us. We use them to calculate the risk of an operation or treatment, determine whether we need an umbrella today, and even decide what kind of ice cream flavor to get at the grocery store.

Statistics are everywhere, and they're essential because they allow us to make informed decisions about our lives.

Extrapolation is the process of inferring values outside the range of the existing data to make predictions. Extrapolation is one of the essential methods that data scientists use to predict future trends and outcomes.

When looking at a dataset, you can use extrapolation to predict what might happen in the future. For example, suppose you have historical data about how people vote for different political parties at election time. In that case, you could use that information to predict what will happen in upcoming elections.


Interpolation is the process of estimating a value between known values. Extrapolation is the process of estimating a value beyond known values.

For example, if you know how much money you made five years ago and how much you make now, you might use interpolation to estimate what you were making three years ago, since that point sits between two values you already know.

On the other hand, if you wanted to predict how many people will be using your product a few years from now, you would extrapolate from what you know now and project how that will change over time.

Interpolation estimates values inside the observed range, so the estimate is bounded by known data on both sides and is usually the more reliable of the two.

Extrapolation projects beyond the observed range, so its predictions depend on the assumption that the observed trend continues. The farther a prediction reaches from the available data, the less confidence it deserves.

Linear extrapolation estimates the value of a variable by extending a straight line fitted to the known data beyond the range of that data. It gives good results when the predicted value is close to the available data but becomes less accurate the farther it is from the available data.

This is because linear extrapolation assumes that there will be no change in the relationship between two variables as you go farther away from their known values.

Linear extrapolation can be done using a linear equation or function, which allows you to draw a tangent line at the endpoints of your graph and extend it beyond the limits of your data set.

In polynomial extrapolation, a polynomial curve is fitted either through all of the known values or only through those near the endpoints. The curve is typically built with Lagrange interpolation or with Newton's method of finite differences, which produces a Newton series that fits the data. The resulting polynomial can then be used to extrapolate the data.
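As a rough illustration of the Lagrange approach mentioned above, the sketch below builds the Lagrange polynomial through a few known points and evaluates it beyond the last one. The helper name `lagrange_eval` and the sample points (taken from y = x²) are assumptions chosen so the result is easy to check by hand.

```python
def lagrange_eval(xs, ys, x):
    """Evaluate the Lagrange polynomial through the points (xs[i], ys[i]) at x."""
    total = 0.0
    for i, (xi, yi) in enumerate(zip(xs, ys)):
        # Build the i-th basis polynomial: 1 at xi, 0 at every other known x.
        term = yi
        for j, xj in enumerate(xs):
            if j != i:
                term *= (x - xj) / (xi - xj)
        total += term
    return total


# Hypothetical known points lying on y = x^2.
known_x = [1.0, 2.0, 3.0]
known_y = [1.0, 4.0, 9.0]

# x = 4 lies outside the known range, so this is an extrapolation.
estimate = lagrange_eval(known_x, known_y, 4.0)
print(f"Extrapolated value at x=4: {estimate}")
```

Three points determine a quadratic exactly, so the extrapolation matches the underlying curve here. With noisy data or a higher-degree fit, the polynomial can swing far from the true trend outside the data range.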

In conic extrapolation, a conic section is constructed from five points near the end of the known data. If the resulting conic is a circle or an ellipse, the extrapolated curve loops back and rejoins itself. If it is a parabola or a hyperbola, the extrapolated curve will not rejoin itself, but it may curve back relative to the x-axis.

French Curve

French curve extrapolation is a method that uses an existing set of data to predict the variable's value at a point not included in the original data.

It is useful when there is a need to extrapolate from a small number of data points because it does not require any assumptions about the relationship between the variables.

Geometric Extrapolation With Error Prediction

Geometric extrapolation is a method of estimating the value of a variable at a time in the future based on how the variable's values have changed over time. It is typically used when the estimated variable has a known relationship to another variable and is often applied to stock prices.

Extrapolation Statistics are used to predict future behavior based on past data. They can be used to forecast the number of customers you might expect at a given time or place or how much money you will make in a given period. They are used in many fields, such as marketing, finance, and sports.

Extrapolation statistics use mathematical formulas that calculate the probability that a particular event will occur based on other events that have happened before. These events are called "input variables." The mathematical formula is then used to predict what will happen next or what will happen after the input variable has changed slightly.

These statistics can be handy when making important decisions about things like marketing campaigns, sales goals, or budgeting for equipment purchases.

Extrapolation Formula

In linear extrapolation, a point is calculated from two endpoints (x1, y1) and (x2, y2) on the linear graph. When the value of x is given, the following formula can be used:

Extrapolation formula for linear graph:

y(x) = y1 + ((x - x1) / (x2 - x1)) · (y2 - y1)

Extrapolation is taking a known quantity and projecting it into the future. It can be done when analyzing historical data or making predictions based on current events.

For example, if you wanted to know how much money will be spent on Christmas presents this year, you could use past data and extrapolate that into the future. You could also use current data, such as how many people have been purchasing gifts online, and extrapolate that into the future (for example, predicting that more people will shop online next year).

Extrapolation has two primary uses: forecasting and trend analysis. Forecasting involves predicting future outcomes based on past information and trends. Trend analysis consists of identifying data trends over time and using these trends to predict future results.


1. What Does Extrapolation Mean?

Extrapolation is the process of making predictions based on current or past data.

It's a way of using existing information to make an educated guess about what might happen in the future.

2. What is Extrapolation With an Example?

Extrapolation is a technique that uses reasoning to predict future events by extrapolating from past occurrences. For example, if you've been keeping track of the number of cups of coffee you drink per week, and it's been steadily increasing over time, you can use extrapolation to predict that you'll drink even more next week.

3. What is Extrapolation in Statistics?

Extrapolation is a statistical technique that predicts future trends based on existing data. Based on past data, it can predict future sales, profits, or other financial performance.

4. What is an Extrapolation on a Graph?

An extrapolation on a graph extends the plotted line or curve beyond the limits of the collected data. For example, if you're looking at a graph of stock prices over time and the prices rise steadily up to the last recorded date, an extrapolation would continue that trend line past the last data point to estimate the price at a later date.

5. What Is Another Word for Extrapolation?

Extrapolation is another word for prediction.

It describes the process of guessing what might happen in the future based on past events and other factors.

6. Why Do We Use Extrapolation?

An extrapolation is a trend-based approach to predicting what will happen in the future based on what has happened in the past.


What Is Generalizability in Research?

Generalizability is making sure the conclusions and recommendations from your research apply to more than just the population you studied. Think of it as a way to figure out if your research findings apply to a larger group, not just the small population you studied.

In this guide, we explore research generalizability, factors that influence it, how to assess it, and the challenges that come with it.

So, let’s dive into the world of generalizability in research!

Defining Generalizability

Generalizability refers to the extent to which a study’s findings can be extrapolated to a larger population. It’s about making sure that your findings apply to a large number of people, rather than just a small group.

Generalizability ensures research findings are credible and reliable. If your results are only true for a small group, they might not be valid.

Also, generalizability ensures your work is relevant to as many people as possible. For example, if you were to test a drug only on a small number of patients, you could put patients at risk by prescribing the drug to everyone before you are confident that it is safe for everyone.

Factors Influencing Generalizability

Here are some of the factors that determine if your research can be adapted to a large population or different objects:

1. Sample Selection and Size

The size of the group you study and how you choose those people can affect how well your results can be applied to others. Think of it like asking one person out of a friendship group of 16 if a game is fun: that single answer doesn’t accurately represent the opinion of the group.

2. Research Methods and Design

Different methods have different levels of generalizability. For example, if you only observe people in a particular city, your findings may not apply to other locations. But if you use multiple methods, you get a better idea of the big picture.

3. Population Characteristics

Not everyone is the same. People from different countries, different age groups, or different cultures may respond differently. That’s why the characteristics of the people you’re looking at have a significant impact on the generalizability of the results.

4. Context and Environment

Think of your research as a weather forecast. A forecast of sunny weather in one location may not be accurate in another. Context and environment play a role in how well your results translate to other environments or contexts.

Internal vs. External Validity

You can only generalize a study when it has high validity, but there are two types of validity: internal and external. Let’s see the role they play in generalizability:

1. Understanding Internal Validity

Internal validity is a measure of how well a study has ruled out alternative explanations for its findings. For example, if a study investigates the effects of a new drug on blood pressure, internal validity would be high if the study was designed to rule out other factors that could affect blood pressure, such as exercise, diet, and other medications.

2. Understanding External Validity

External validity is the extent to which a study’s findings can be generalized to other populations, settings, and times. It focuses on how well your study’s results apply to the real world.

For example, if a new blood pressure-lowering drug were to be studied in a laboratory with a sample of young healthy adults, the study’s external validity would be limited. This is because the study doesn’t consider people outside the population such as older adults, patients with other medical conditions, and more.

3. The Relationship Between Internal and External Validity

Internal validity and external validity are often inversely related. This means that studies with high internal validity may have lower external validity, and vice versa.

For example, a study that randomly assigns participants to different treatment groups may have high internal validity, but it may have lower external validity if the participants are not representative of the population of interest.

Strategies for Enhancing Generalizability

Several strategies enable you to enhance the generalizability of your findings. Here are some of them:

1. Random Sampling Techniques

This involves selecting participants from a population in a way that gives everyone an equal chance of being selected. This helps to ensure that the sample is representative of the population.

Let’s say you want to find out how people feel about a new policy. Randomly pick people from the list of people who registered to vote to ensure your sample is representative of the population.
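A minimal sketch of that voter example follows, using Python's standard `random` module. The voter list is fabricated for illustration and `draw_simple_random_sample` is a hypothetical helper; a real study would draw from the actual voter register.

```python
import random


def draw_simple_random_sample(population, sample_size, seed=None):
    """Pick sample_size members without replacement, each equally likely."""
    rng = random.Random(seed)  # a seed makes the draw reproducible for auditing
    return rng.sample(population, sample_size)


# Hypothetical register of 1,000 voters.
registered_voters = [f"voter_{i:04d}" for i in range(1, 1001)]

sample = draw_simple_random_sample(registered_voters, 50, seed=42)
print(f"Selected {len(sample)} of {len(registered_voters)} registered voters")
```

Sampling without replacement means no voter is surveyed twice, and fixing the seed lets someone else reproduce exactly the same selection.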

2. Diverse Sample Selection

Choose samples that are representative of different age groups, genders, races, ethnicities, and economic backgrounds. This helps to ensure that the findings are generalizable to a wider range of people.

3. Careful Research Design

Meticulously design your studies to minimize the risk of bias and confounding variables. A confounding variable is a factor that makes it hard to tell the real cause of your results.

For example, suppose you are studying the effect of a new drug on cholesterol levels. Even if you take a random sample of participants and randomly assign them to receive either the new drug or a placebo, your results could be misleading if you don’t account for the participants’ diets. You could be attributing a change in cholesterol to the drug when it is actually due to diet.

4. Robust Data Collection Methods

Use robust data collection methods to minimize the risk of errors and biases. This includes using well-validated measures and carefully training data collectors.

For instance, an online survey tool could be used to conduct online polls on how voters change their minds during an election cycle rather than relying on phone interviews, which would make it harder to get repeat voters to participate in the study and review their views over time.

Challenges to Generalizability

1. Sample Bias

Sample bias happens when the group you study doesn’t represent everyone you want to talk about. For example, if you’re researching ice cream preferences and only ask your friends, your results might not apply to everyone because your friends are not the only people who eat ice cream.

2. Ethical Considerations

Ethical considerations can limit your research’s generalizability because some studies that would broaden your sample wouldn’t be right or fair to carry out. For example, it’s not ethical to test a new medicine on people without their permission.

3. Resource Constraints

Having a limited budget for a project also restricts your research’s generalizability. For example, if you want to conduct a large-scale study but don’t have the resources, time, or personnel, you opt for a small-scale study, which could make your findings less likely to apply to a larger population.

4. Limitations of Research Methods

Tools are just as much a part of your research as the research itself. If you use an ineffective tool, you might not be able to apply what you’ve learned to other situations.

Assessing Generalizability

Evaluating generalizability allows you to understand the implications of your findings and make realistic recommendations. Here are some of the most effective ways to assess generalizability:

Statistical Measures and Techniques

Several statistical tools and methods allow you to assess the generalizability of your study. Here are the top two:

- Confidence Interval

A confidence interval is a range of values that is likely to contain the true population value. So if a researcher looks at a test and sees that the mean score is 78 with a 95% confidence interval of 70-80, they can be 95% confident that the actual population mean is between 70 and 80.

- P-Value

The p-value indicates the probability of obtaining the results of the study, or more extreme results, if the null hypothesis holds. A null hypothesis is the supposition that there is no association between the variables being analyzed.

A good example is a researcher surveying 1,000 college students to study the relationship between study habits and GPA. The researcher finds that students who study for more hours per week have higher GPAs.

A p-value below 0.05 indicates that there is a statistically significant association between study habits and GPA. This means that the findings of the study are unlikely to be due to chance alone.
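The confidence interval described above can be sketched with Python's standard `statistics` module. This is a simplified illustration under stated assumptions: the test scores are made up, `mean_confidence_interval` is a hypothetical helper, and the interval uses a normal approximation. For a small sample like this one, a t-distribution would give a somewhat wider interval.

```python
import math
import statistics


def mean_confidence_interval(values, confidence=0.95):
    """Normal-approximation confidence interval for the mean of values."""
    n = len(values)
    mean = statistics.fmean(values)
    std_err = statistics.stdev(values) / math.sqrt(n)  # standard error of the mean
    # Two-sided critical value, e.g. about 1.96 for 95% confidence.
    z = statistics.NormalDist().inv_cdf(0.5 + confidence / 2)
    return mean - z * std_err, mean + z * std_err


# Hypothetical test scores from a sample of 12 students.
scores = [72, 85, 78, 90, 66, 81, 74, 79, 88, 70, 77, 83]

low, high = mean_confidence_interval(scores)
print(f"Sample mean: {statistics.fmean(scores):.1f}")
print(f"95% confidence interval: {low:.1f} to {high:.1f}")
```

A narrower interval suggests the sample mean pins down the population mean more tightly, which is one signal, though not proof, that the finding may generalize.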

Peer Review and Expert Evaluation

Reviewers and experts can look at sample selection, study design, data collection, and analysis methods to spot areas for improvement. They can also look at the survey’s results to see if they’re reliable and if they match up with other studies.

Transparency in Reporting

Clearly and concisely report the survey design, sample selection, data collection methods, data analysis methods, and findings of the survey. This allows other researchers to assess the quality of the survey and to determine whether the results are generalizable.

The Balance Between Generalizability and Specificity

Generalizability refers to the degree to which the findings of a study can be applied to a larger population or context. Specificity, on the other hand, refers to the focus of a study on a particular population or context.

a. When Generalizability Matters Most

Generalizability comes into play when you want to make predictions about the world outside of your sample. For example, you want to look at the impact of a new viewing restrictions policy on the population as a whole.

b. Situations Where Specificity is Preferred

Specificity is important when researchers want to gain a deep understanding of a specific group or phenomenon. For example, a researcher may want to study the experiences of people with a rare disease.

Finding the Right Balance Between Generalizability and Specificity

The right balance between generalizability and specificity depends on the research question.

Case 1: Specificity over Generalizability

Sometimes, you have to give up some generalizability to get more specific results. For example, if you are studying a rare genetic condition, you might not be able to get a sample that’s representative of the population.

Case 2: Generalizability over Specificity

In other cases, you may need to sacrifice some specificity to achieve greater generalizability. For example, when studying the effects of a new drug, you need a sample that includes a wide range of people with different characteristics.

Keep in mind that generalizability and specificity are not mutually exclusive. You can design studies that are both generalizable and specific.

Real-World Examples

Here are a few real-world examples of studies that turned out to be generalizable, as well as some that are not:

1. Case Studies of Research with High Generalizability

We’ve been talking about how important a generalizable study is and how to tell if your research is generalizable. Let’s take a look at some studies that have achieved this:

a. The Framingham Heart Study

This is a long-running study that has been tracking the health of over 15,000 participants since 1948. The study has provided valuable insights into the risk factors for heart disease, stroke, and other chronic diseases.

The findings of the Framingham Heart Study are highly generalizable because the study participants were recruited from a representative sample of the general population.

b. The Cochrane Database of Systematic Reviews

This is a collection of systematic reviews that evaluate the evidence for the effectiveness of different healthcare interventions. The Cochrane Database of Systematic Reviews is a highly respected source of information for healthcare professionals and policymakers.

The findings of Cochrane reviews are highly generalizable because they are based on a comprehensive review of all available evidence.

2. Case Studies of Research with Limited Generalizability

Let’s look at some studies that would fail to prove their validity to the general population:

- A study that examines the effects of a new drug on a small sample of participants with a rare medical condition. The findings of this study would not be generalizable to the general population because the study participants were not representative of the general population.

- A study that investigates the relationship between culture and values using a sample of participants from a single country. The findings of this study would not be generalizable to other countries because the study participants were not representative of people from other cultures.

Implications of Generalizability in Different Fields

Research generalizability has significant effects in the real world. Here are some ways it applies across different fields:

1. Medicine and Healthcare

Generalizability is a key concept of medicine and healthcare. For example, a single study that found a new drug to be effective in treating a specific condition in a limited number of patients might not apply to all patients.

Healthcare professionals also leverage generalizability to create guidelines for clinical practice. For example, a guideline for the treatment of diabetes may not be generalizable to all patients with diabetes if it is based on research studies that only included patients with a particular type of diabetes or a particular level of severity.

2. Social Sciences

Generalizability allows you to make accurate inferences about the behavior and attitudes of large populations. People are influenced by multiple factors, including their culture, personality, and social environment.

For example, a study that finds that a particular educational intervention is effective in improving student achievement in one school may not be generalizable to all schools.

3. Business and Economics

Generalizability also allows companies to draw conclusions about how customers and competitors behave. However, factors like economic conditions, consumer tastes, and tech trends can change quickly, so it’s hard to generalize results from one study to the next.

For example, a study that finds that a new marketing campaign is effective in increasing sales of a product in one region may not be generalizable to other regions.

The Future of Generalizability in Research

Let’s take a look at new and future developments aimed at improving the generalizability of research:

1. Evolving Research Methods and Technologies

The evolution of research methods and technologies is changing the way that we think about generalizability. In the past, researchers were often limited to studying small samples of people in specific settings. This made it difficult to generalize the findings to the larger population.

Today, you can use various new techniques and technologies to gather data from a larger and more varied sample size. For example, online surveys provide you with a large sample size in a very short period.

2. The Growing Emphasis on Reproducibility

The growing emphasis on reproducibility is also changing the way that we think about generalizability. Reproducibility is the ability to reproduce the results of a study by following the same methods and using a similar sample.

For example, suppose you publish a study claiming that a new drug is effective in treating a certain disease. Two other researchers then replicate the study and confirm the findings. This replication helps to build confidence in the findings of the original study and makes it more likely that the drug will be approved for use.

3. The Ongoing Debate on Generalizability vs. Precision

Generalizability refers to the ability to apply the findings of a study to a wider population. Precision refers to the ability to accurately measure a particular phenomenon.

For some researchers, generalizability matters more than precision because it means their findings apply to a larger number of people and have an impact on the real world. For others, precision matters more than generalizability because it enables them to understand the underlying mechanisms of a phenomenon.

The debate over generalizability versus precision is likely to continue because both concepts are very important. However, it is important to note that the two concepts are not mutually exclusive. It is possible to achieve both generalizability and precision in research by using carefully designed methods and technologies.

Generalizability allows you to apply the findings of a study to a larger population. This is important for making informed decisions about policy and practice, identifying and addressing important social problems, and advancing scientific knowledge.


Extrapolate findings

An evaluation usually involves some level of generalising of the findings to other times, places or groups of people.

For many evaluations, this simply involves generalising from data about the current situation or the recent past to the future.

For example, an evaluation might report that a practice or program has been working well (finding), therefore it is likely to work well in the future (generalisation), and therefore we should continue to do it (recommendation). In this case, it is important to understand whether or not future times are likely to be similar to the time period of the evaluation. If the program had been successful because of support from another organisation, and this support was not going to continue, then it would not be correct to assume that the program would continue to succeed in the future.

For some evaluations, there are other types of generalising needed. Impact evaluations which aim to learn from the evaluation of a pilot to make recommendations about scaling up must be clear about the situations and people to whom results can be generalised.

There are often two levels of generalisation. For example, an evaluation of a new nutrition program in Ghana collected data from a random sample of villages. This allowed statistical generalisation to the larger population of villages in Ghana. In addition, because there was international interest in the nutrition program, many organisations, including governments in other countries, were interested to learn from the evaluation for possible implementation elsewhere.
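The statistical generalisation step in an example like this can be sketched in a few lines of Python. This is a minimal illustration only: the population of villages, the 60% success rate, the sample size, and the function name `proportion_confidence_interval` are all hypothetical, and the interval uses the simple normal approximation rather than whatever method the evaluation itself used.

```python
import math
import random


def proportion_confidence_interval(successes, n, z=1.96):
    """Normal-approximation interval for a population proportion.

    z=1.96 corresponds to an approximate 95% confidence level.
    """
    p_hat = successes / n
    se = math.sqrt(p_hat * (1 - p_hat) / n)
    return p_hat, max(0.0, p_hat - z * se), min(1.0, p_hat + z * se)


# Hypothetical population: 5,000 villages, 1 = outcome improved, 0 = not.
random.seed(42)
population = [1 if random.random() < 0.6 else 0 for _ in range(5000)]

# Draw a simple random sample of 200 villages and generalise to all villages.
sample = random.sample(population, 200)
p_hat, lower, upper = proportion_confidence_interval(sum(sample), len(sample))
print(f"Estimated share of villages improved: {p_hat:.2f} "
      f"(95% CI {lower:.2f} to {upper:.2f})")
```

The interval only describes the population the sample was randomly drawn from. Whether the findings transfer to other countries is a question for analytical generalisation, not for this calculation.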

Analytical generalisation involves making projections about the likely transferability of findings from an evaluation, based on a theoretical analysis of the factors producing outcomes and the effect of context.

Statistical generalisation involves statistically calculating the likely parameters of a population using data from a random sample of that population.

Horizontal evaluation is an approach that combines self-assessment by local participants and external review by peers.

Positive deviance (PD), a behavioural and social change approach, involves learning from those who find unique and successful solutions to problems despite facing the same challenges, constraints and resource deprivation as others.

Realist evaluation aims to identify the underlying generative causal mechanisms that explain how outcomes were caused and how context influences these.

This blog post and its associated replies, written by Jed Friedman for the World Bank, describe a process of using analytic methods to overcome some of the assumptions that must be made when extrapolating results from evaluations to other settings.


© 2022 BetterEvaluation. All right reserved.

5 Methods of Data Collection for Quantitative Research

In this blog, read up on five different ways to approach data collection for quantitative studies - online surveys, offline surveys, interviews, etc.

Jan 29, 2024


Quantitative research forms the basis for many business decisions. But what is quantitative data collection, why is it important, and which data collection methods are used in quantitative research?

Table of Contents:

- What is quantitative data collection?

- The importance of quantitative data collection

- Methods used for quantitative data collection

- Example of a survey showing quantitative data

- Strengths and weaknesses of quantitative data

What is quantitative data collection?

Quantitative data collection is the gathering of numeric data that puts consumer insights into a quantifiable context. It typically involves a large number of respondents - large enough to extract statistically reliable findings that can be extrapolated to a larger population.

The actual data collection process for quantitative findings is typically done using a quantitative online questionnaire that asks respondents yes/no questions, ranking scales, rating matrices, and other quantitative question types. With these results, researchers can generate data charts to summarize the quantitative findings and generate easily digestible key takeaways.


The importance of quantitative data collection

Quantitative data collection can confirm or deny a brand's hypothesis, guide product development, tailor marketing materials, and much more. It provides brands with reliable information on which to base decisions (e.g. 86% like lemon-lime flavor or just 12% are interested in a cinnamon-scented hand soap).

Compared to qualitative data collection, quantitative data collection typically involves larger base sizes, which makes it possible to compare results between groups and to test whether the differences are statistically significant. Brands can cut and analyze their dataset in a variety of ways, looking at their findings among different demographic groups, behavioral groups, and other segments of interest. It's also generally easier and quicker to collect quantitative data than it is to gather qualitative feedback, making it an important data collection tool for brands that need quick, reliable, concrete insights.
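To make the link between base size and statistical significance concrete, here is a minimal sketch of a two-proportion z-test using only the Python standard library. The function name `two_proportion_z_test` and the respondent counts are hypothetical and are not taken from any study mentioned here.

```python
import math


def two_proportion_z_test(x1, n1, x2, n2):
    """Two-sided z-test for a difference between two sample proportions.

    x1, x2: number of respondents choosing the answer in each group.
    n1, n2: base size of each group.
    """
    p1, p2 = x1 / n1, x2 / n2
    pooled = (x1 + x2) / (n1 + n2)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p1 - p2) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical example: the same 10-point gap (60% vs. 50%) at two base sizes.
z_small, p_small = two_proportion_z_test(30, 50, 25, 50)
z_large, p_large = two_proportion_z_test(600, 1000, 500, 1000)
print(f"n=50 per group:   z={z_small:.2f}, p={p_small:.3f}")
print(f"n=1000 per group: z={z_large:.2f}, p={p_large:.6f}")
```

With 50 respondents per group the gap is not significant at the usual 5% level, while the same gap with 1,000 respondents per group is.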

In order to make justified business decisions from quantitative data, brands need to recruit a high-quality sample that's reflective of their true target market (one that's comprised of all ages/genders rather than an isolated group). For example, a study into usage and attitudes around orange juice might include consumers who buy and/or drink orange juice at a certain frequency or who buy a variety of orange juice brands from different outlets.

Methods used for quantitative data collection

So, knowing what quantitative data collection is and why it's important, how does one go about researching a large, high-quality, representative sample?

Below are five examples of how to conduct your study through various data collection methods:

Online quantitative surveys

Online surveys are a common and effective way of collecting data from a large number of people. They tend to be made up of closed-ended questions so that responses across the sample are comparable; however, a small number of open-ended questions can be included as well (i.e. questions that require a written response rather than a selection from a closed-ended list). Open-ended questions are helpful for gathering the actual language respondents use on a certain issue or for collecting feedback on a view that might not appear in a set list of responses.

Online surveys are quick and easy to send out, typically done so through survey panels. They can also appear in pop-ups on websites or via a link embedded in social media. From the participant’s point of view, online surveys are convenient to complete and submit, using whichever device they prefer (mobile phone, tablet, or computer). Anonymity is also viewed as a positive: online survey software ensures respondents’ identities are kept completely confidential.

To gather respondents for online surveys, researchers have several options. Probability sampling is one route, where respondents are selected using a random selection method. As such, everyone within the population has a known, non-zero chance of being selected to participate (an equal chance, in the case of simple random sampling).

There are four common types of probability sampling.

- Simple random sampling is the most straightforward approach, which involves randomly selecting individuals from the population without any specific criteria or grouping.

- Stratified random sampling divides the population into subgroups (strata) and selects a random sample from each stratum. This is useful when a population includes subgroups that you want to be sure you cover in your research.

- Cluster sampling divides the population into clusters and then randomly selects some of the clusters to sample in their entirety. This is useful when a population is geographically dispersed and it would be impossible to include everyone.

- Systematic sampling begins with a random starting point and then selects every nth member of the population after that point (e.g. every 15th respondent).
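The four approaches above can be sketched with Python's standard `random` module. This is a simplified illustration rather than a production sampling routine: the respondent list, the grouping fields, and all function names are hypothetical.

```python
import random


def simple_random_sample(population, k, rng):
    """Select k units at random, with no grouping."""
    return rng.sample(population, k)


def stratified_sample(population, stratum_of, k_per_stratum, rng):
    """Split into strata, then draw a random sample from every stratum."""
    strata = {}
    for unit in population:
        strata.setdefault(stratum_of(unit), []).append(unit)
    sample = []
    for members in strata.values():
        sample.extend(rng.sample(members, min(k_per_stratum, len(members))))
    return sample


def cluster_sample(population, cluster_of, n_clusters, rng):
    """Randomly pick whole clusters and keep every unit inside them."""
    clusters = {}
    for unit in population:
        clusters.setdefault(cluster_of(unit), []).append(unit)
    chosen = rng.sample(sorted(clusters), n_clusters)
    return [unit for name in chosen for unit in clusters[name]]


def systematic_sample(population, step, rng):
    """Random starting point, then every step-th unit after it."""
    start = rng.randrange(step)
    return population[start::step]


rng = random.Random(0)
# Hypothetical respondents: (id, region, age group).
respondents = [
    (i, f"region_{i % 5}", "under_40" if i % 2 else "40_plus")
    for i in range(100)
]

print(len(simple_random_sample(respondents, 10, rng)))
print(len(stratified_sample(respondents, lambda r: r[2], 5, rng)))
print(len(cluster_sample(respondents, lambda r: r[1], 2, rng)))
print(len(systematic_sample(respondents, 15, rng)))
```

Note the structural difference between the middle two: stratified sampling takes some units from every subgroup, whereas cluster sampling takes every unit from some subgroups.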


While online surveys are by far the most common way to collect quantitative data in today’s modern age, there are still some harder-to-reach respondents where other mediums can be beneficial; for example, those who aren’t tech-savvy or who don’t have a stable internet connection. For these audiences, offline surveys may be needed.

Offline quantitative surveys

Offline surveys (though much rarer these days) are a way of gathering respondent feedback without digital means. Examples include postal questionnaires, which are sent out to a sample population with a request to return them by mail (like the Census), and telephone surveys, where questions are asked of respondents over the phone.

Offline surveys certainly take longer to collect data than online surveys and they can become expensive if the population is difficult to reach (requiring a higher incentive). As with online surveys, anonymity is protected, assuming the mail is not intercepted or lost.

Despite the major difference in how the data is collected compared with an online survey, offline survey data is still reported in an aggregated, numeric fashion.

Interviews

In-person interviews are another popular way of researching or polling a population. They can be thought of as a survey delivered verbally, either in person or in a virtual face-to-face format. The online format is becoming more popular, as it is cheaper and logistically easier to organize than in-person interviews, yet still allows the interviewer to see the respondent and hear from them in their own words.

Though many interviews are conducted for qualitative research, interviews can also be leveraged quantitatively; like a phone survey, an interviewer runs through a survey with the respondent, asking mainly closed-ended questions (yes/no, multiple choice questions, or questions with rating scales that ask how strongly the respondent agrees with statements).

The advantage of structured interviews is that the interviewer can pace the survey, making sure the respondent gives enough consideration to each question. It also adds a human touch, which can be more engaging for some respondents. On the other hand, for more sensitive issues, respondents may feel more inclined to complete a survey online for a greater sense of anonymity - so it all depends on your research questions, the survey topic, and the audience you're researching.

Observations

Observation studies in quantitative research are similar in nature to a qualitative ethnographic study (in which a researcher also observes consumers in their natural habitats), yet observation studies for quant research remain focused on the numbers - how many people do an action, how much of a product consumers pick up, etc.

For quantitative observations, researchers will record the number and types of people who do a certain action - such as choosing a specific product from a grocery shelf, speaking to a company representative at an event, or passing through a certain area within a given timeframe. Observation studies are generally structured, with the observer asked to note behavior using set parameters. Structured observation means that the observer has to home in on very specific behaviors, which can be quite nuanced. This requires the observer to use their own judgment about what type of behavior is being exhibited (e.g. reading labels on products before selecting them; considering different items before making the final choice; making a selection based on price).

Document reviews and secondary data sources

A fifth method of data collection for quantitative research is known as secondary research: reviewing existing research to see how it can contribute to understanding a new issue in question. This is in contrast to the primary research methods above, which are specially commissioned and carried out for a research project.

There are numerous secondary data sources that researchers can analyze such as public records, government research, company databases, existing reports, paid-for research publications, magazines, journals, case studies, websites, books, and more.

Aside from using secondary research alone, secondary research documents can also be used in anticipation of primary research, to understand which knowledge gaps need to be filled and to nail down the issues that might be important to explore further in a primary research study.

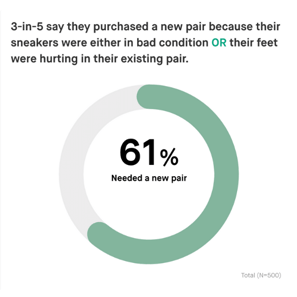

Example of a survey showing quantitative data

The study below shows what quantitative data might look like in a final study dashboard, taken from quantilope's Sneaker category insights study.

The study includes a variety of usage and attitude metrics around sneaker wear, sneaker purchases, seasonality of sneakers, and more. Check out some of the data charts below showing these quantitative data findings - the first of which even cuts the quantitative data findings by demographics.

Beyond these basic usage and attitude (or, descriptive) data metrics, quantitative data also includes advanced methods - such as implicit association testing. See what these quantitative data charts look like from the same sneaker study below:

These are just a few examples of how a researcher or insights team might show their quantitative data findings. However, there are many ways to visualize quantitative data in an insights study, including bar charts, column charts, pie charts, donut charts, spider charts, and more, depending on what best suits the story your data is telling.

Strengths and weaknesses of quantitative data collection

Quantitative data is a great way to capture informative insights about your brand, product, category, or competitors. It's relatively quick, depending on your sample audience, and more affordable than other data collection methods such as qualitative focus groups. With quantitative panels, it's easy to access nearly any audience you might need - from something as general as the US population to something as specific as cannabis users. There are many ways to visualize quantitative findings, making it a customizable form of insights - whether you want to show the data in a bar chart, pie chart, etc.

For those looking for quick, affordable, actionable insights, quantitative studies are the way to go.

Quantitative data collection, despite the many benefits outlined above, might also not be the right fit for your exact needs. For example, you often don't get as detailed and in-depth answers quantitatively as you would with an in-person interview, focus group, or ethnographic observation (all forms of qualitative research). When running a quantitative survey, it’s best practice to review your data for quality measures to ensure all respondents are ones you want to keep in your data set. Fortunately, there are a lot of precautions research providers can take to navigate these obstacles - such as automated data cleaners and data flags. Of course, the first step to ensuring high-quality results is to use a trusted panel provider.

Quantitative research typically needs to undergo statistical analysis for it to be useful and actionable to any business. It is therefore crucial that the method of data collection, sample size, and sample criteria are considered in light of the research questions asked.

quantilope’s online platform is ideal for quantitative research studies. The online format means a large sample can be reached easily and quickly through connected respondent panels that effectively reach the desired target audience. Response rates are high, as respondents can take their survey from anywhere, using any device with internet access.

Surveys are easy to build with quantilope’s online survey builder. Simply choose questions to include from pre-designed survey templates or build your own questions using the platform’s drag & drop functionality (both options are fully customizable). Once the survey is live, findings update in real-time so that brands can get an idea of consumer attitudes long before the survey is complete. In addition to basic usage and attitude questions, quantilope’s suite of advanced research methodologies provides an AI-driven approach to many types of research questions. These range from exploring the features of products that drive purchase through a Key Driver Analysis, compiling the ideal portfolio of products using a TURF, or identifying the optimal price point for a product or service using a Price Sensitivity Meter (PSM).

Depending on the type of data sought, it might be worth considering a mixed-method approach, including both qual and quant in a single research study. Alongside quantitative online surveys, quantilope’s video research solution, inColor, offers qualitative research in the form of videoed responses to survey questions. inColor’s qualitative data analysis includes an AI-driven read on respondent sentiment, keyword trends, and facial expressions.


Extrapolating baseline trend in single-case data: Problems and tentative solutions

- Published: 27 November 2018

- Volume 51, pages 2847–2869 (2019)


- Rumen Manolov (ORCID: orcid.org/0000-0002-9387-1926),

- Antonio Solanas &

- Vicenta Sierra

5481 Accesses

13 Citations

3 Altmetric

Explore all metrics

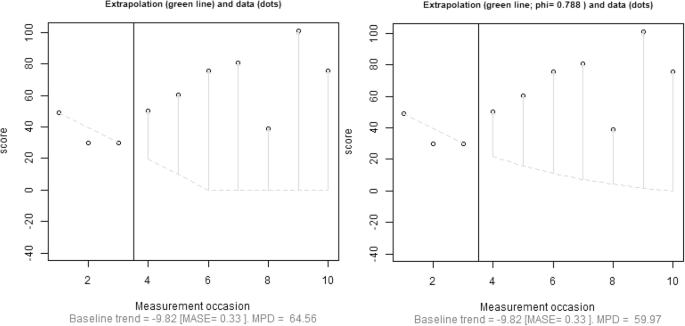

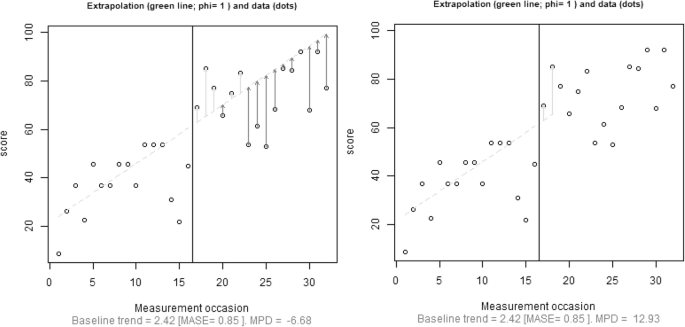

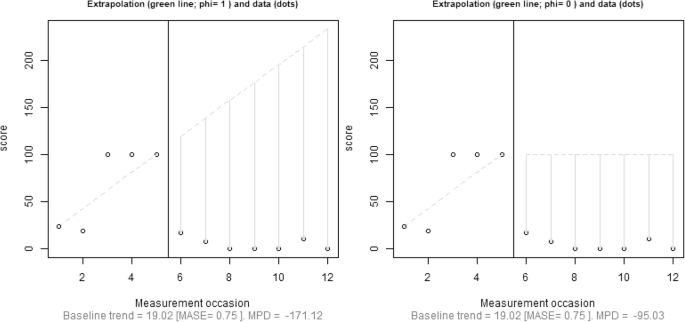

Single-case data often contain trends. Accordingly, to account for baseline trend, several data-analytical techniques extrapolate it into the subsequent intervention phase. Such extrapolation led to forecasts that were smaller than the minimal possible value in 40% of the studies published in 2015 that we reviewed. To avoid impossible predicted values, we propose extrapolating a damping trend, when necessary. Furthermore, we propose a criterion for determining whether extrapolation is warranted and, if so, how far out it is justified to extrapolate a baseline trend. This criterion is based on the baseline phase length and the goodness of fit of the trend line to the data. These proposals were implemented in a modified version of an analytical technique called Mean phase difference. We used both real and generated data to illustrate how unjustified extrapolations may lead to inappropriate quantifications of effect, whereas our proposals help avoid these issues. The new techniques are implemented in a user-friendly website via the Shiny application, offering both graphical and numerical information. Finally, we point to an alternative not requiring either trend line fitting or extrapolation.


Several features of single-case experimental design (SCED) data have been mentioned as potential reasons for the difficulty of analyzing such data quantitatively, for the lack of consensus regarding the most appropriate statistical analyses, and for the continued use of visual analysis (Campbell & Herzinger, 2010 ; Kratochwill, Levin, Horner, & Swoboda, 2014 ; Parker, Cryer, & Byrns, 2006 ; Smith, 2012 ). Some of the data features that have received the most attention are serial dependence (Matyas & Greenwood, 1997 ; Shadish, Rindskopf, Hedges, & Sullivan, 2013 ), the common use of counts or other outcome measures that are not continuous or normally distributed (Pustejovsky, 2015 ; Sullivan, Shadish, & Steiner, 2015 ), the shortness of the data series (Arnau & Bono, 1998 ; Huitema, McKean, & McKnight, 1999 ), and the presence of trends (Mercer & Sterling, 2012 ; Parker et al., 2006 ; Solomon, 2014 ). In the present article we focus on trends. The reason for this focus is that trend is a data feature whose presence, if not taken into account, can invalidate conclusions regarding an intervention’s effectiveness (Parker et al., 2006 ). Even when there is an intention to take the trend into account, several challenges arise. First, linear trend has been defined in several ways in the context of SCED data (Manolov, 2018 ). Second, there has been recent emphasis on the need to consider nonlinear trends (Shadish, Rindskopf, & Boyajian, 2016 ; Swan & Pustejovsky, 2018 ; Verboon & Peters, 2018 ). Third, some techniques for controlling trend may provide insufficient control (see Tarlow, 2017 , regarding Tau-U by Parker, Vannest, Davis, & Sauber, 2011 ), leading applied researchers to think that their results represent an intervention effect beyond baseline trend, which may not be justified. 
Fourth, other techniques may extrapolate baseline trend regardless of the degree to which the trend line is a good representation of the baseline data, and despite the possibility of impossible values being predicted (see Parker et al.’s, 2011 , comments on the regression model by Allison & Gorman, 1993 ). The latter two challenges compromise the interpretation of results.

Aim, focus, and organization of the article

The aim of the present article is to provide further discussion on four issues related to baseline trend extrapolation, based on the comments by Parker et al. ( 2011 ). As part of this discussion, we propose tentative solutions to the issues identified. Moreover, we specifically aim to improve one analytical procedure, which extrapolates baseline trend and compares this extrapolation to the actual intervention-phase data: the mean phase difference (MPD; Manolov & Solanas, 2013 ; see also the modification and extension in Manolov & Rochat, 2015 ).

Most single-case data-analytical techniques focus on linear trend, although there are certain exceptions. One exception is a regression-based analysis (Swaminathan, Rogers, Horner, Sugai, & Smolkowski, 2014 ), for which the possibility of modeling quadratic trend has been discussed explicitly. Another is Tau-U, developed by Parker et al. ( 2011 ), which deals more broadly with monotonic (not necessarily linear) trends. We stick here to linear trends and their extrapolation, a decision that reflects Chatfield’s ( 2000 ) statement that relatively simple forecasting methods are preferred, because they are potentially more easily understood. Moreover, this focus is well aligned with our willingness to improve the MPD, a procedure for fitting a linear trend line to baseline data. Despite this focus, three of the four issues identified by Parker et al. ( 2011 ), and the corresponding solutions we propose, are also applicable to nonlinear trends.

Organization

In the following sections, first we mention procedures that include extrapolating the trend line fitted in the baseline, and distinguish them from procedures that account for baseline trend but do not extrapolate it. Second, we perform a review of published research in order to explore how frequently trend extrapolation leads to out-of-bounds predicted values for the outcome variable. Third, we deal separately with the four main issues of extrapolating a baseline trend, as identified by Parker et al. ( 2011 ), and we offer tentative solutions to these issues. Fourth, on the basis of the proposals from the previous two points, we propose a modification of the MPD. In the same section, we also provide examples, based on previously published data, of the extent to which our modification helps avoid misleading results. Fifth, we include a small proof-of-concept simulation study.

Analytical techniques that entail extrapolating baseline trend

Visual analysis

When discussing how visual analysis should be carried out, Kratochwill et al. (2010) stated that “[t]he six visual analysis features are used collectively to compare the observed and projected patterns for each phase with the actual pattern observed after manipulation of the independent variable” (p. 18). Moreover, the conservative dual criteria for carrying out structured visual analysis (Fisher, Kelley, & Lomas, 2003) entail extrapolating split-middle trend in addition to extrapolating mean level. This procedure has received considerable attention recently as a means of improving decision accuracy (Stewart, Carr, Brandt, & McHenry, 2007; Wolfe & Slocum, 2015; Young & Daly, 2016).

Regression-based analyses

Among the procedures based on regression analysis, the last treatment day procedure (White, Rusch, Kazdin, & Hartmann, 1989 ) entails fitting ordinary least squares (OLS) trend lines to the baseline and intervention phases separately, and comparison between the two is performed for the last intervention phase measurement occasion. In the Allison and Gorman ( 1993 ) regression model, baseline trend is extrapolated before it is removed from both the A and B phases’ data. Apart from OLS regression, the generalized least squares proposal by Swaminathan et al. ( 2014 ) fits trend lines separately to the A and B phases, but baseline trend is still extrapolated for carrying out the comparisons. The overall effect size described by the authors entails comparing the treatment data as estimated from the treatment-phase trend line to the treatment data as estimated from the baseline-phase trend line.
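To illustrate what extrapolating an OLS baseline trend involves, and how it can yield impossible predictions, consider the following minimal Python sketch. The data are hypothetical counts with a floor of zero, and the helper names `fit_ols_line` and `extrapolate_baseline` are ours; the code is not an implementation of any of the specific procedures cited above.

```python
def fit_ols_line(y):
    """Return (intercept, slope) of the OLS line through (1, y_1), ..., (n, y_n)."""
    n = len(y)
    x = range(1, n + 1)
    x_mean = sum(x) / n
    y_mean = sum(y) / n
    sxx = sum((xi - x_mean) ** 2 for xi in x)
    sxy = sum((xi - x_mean) * (yi - y_mean) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return y_mean - slope * x_mean, slope


def extrapolate_baseline(baseline, n_intervention):
    """Project the baseline OLS trend onto the intervention-phase occasions."""
    intercept, slope = fit_ols_line(baseline)
    n_a = len(baseline)
    return [intercept + slope * t
            for t in range(n_a + 1, n_a + n_intervention + 1)]


# Hypothetical counts of a problem behaviour; the minimal possible value is 0.
baseline = [8, 6, 5, 3]
intervention = [2, 1, 1, 0, 0]

predicted = extrapolate_baseline(baseline, len(intervention))
impossible = [p for p in predicted if p < 0]
mean_gap = (sum(intervention) / len(intervention)
            - sum(predicted) / len(predicted))

print("Projected values:", [round(p, 2) for p in predicted])
print("Projections below the floor:", len(impossible))
print("Mean of actual minus projected:", round(mean_gap, 2))
```

With a steep improving baseline, most projections fall below zero, so the intervention data appear worse than the projection even though the behaviour has reached its floor.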

Apart from the procedures based on the general linear model (assuming normal errors), generalized linear models (Fox, 2016 ) need to be mentioned as well in the present subsection. Such models can deal with count data, which are ubiquitous in single-case research (Pustejovsky, 2018a ), specifying a Poisson model (rather than a normal one) for the conditional distribution of the response variable (Shadish, Kyse, & Rindskopf, 2013 ). Other useful models are based on the binomial distribution, specifying a logistic model (Shadish et al., 2016 ), when the data are proportions that have a natural floor (0) and ceiling (100). Despite dealing with certain issues arising from single-case data, these models are not flawless. Note that a Poisson model may present limitations when the data are more variable than expected (i.e., alternative models have been proposed for overdispersed count data; Fox, 2016 ), whereas a logistic model may present the difficulty of not knowing the floor or ceiling (i.e., the upper asymptote) or of forcing artificial limits. Finally, what is most relevant to the topic of the present text is that none of these generalized linear models necessarily includes an extrapolation of baseline trend. Actually, some of them (Rindskopf & Ferron, 2014 ; Verboon & Peters, 2018 ) consider the baseline data together with the intervention-phase data in order to detect when the greatest change is produced. Other models (Shadish, Kyse, & Rindskopf, 2013 ) include an interaction term between the dummy phase variable and the time variable, making possible the estimation of change in slope.

Nonregression procedures

MPD involves estimating baseline trend and extrapolating it into the intervention phase in order to compare the predictions with the actual intervention-phase data. Another nonregression procedure, slope and level change (SLC; Solanas, Manolov, & Onghena, 2010), involves estimating baseline trend and removing it from the whole series before quantifying the change in slope and the net change in level (hence, SLC). In one of the steps of the SLC, baseline trend is removed from the \( n_A \) baseline measurements and the \( n_B \) intervention-phase measurements by subtracting from each value (\( y_i \)) the slope estimate (\( b_1 \)), multiplied by the measurement occasion (\( i \)). Formally, \( \tilde{y}_i = y_i - i \times b_1;\ i = 1, 2, \dots, (n_A + n_B) \). This step does resemble extrapolating baseline trend, but there is no estimation of the intercept of the baseline trend line, and thus a trend line is not fitted to the baseline data and then extrapolated, which would lead to obtaining residuals as in Allison and Gorman’s (1993) model. Therefore, we consider that it is more accurate to conceptualize this step as removing baseline trend from the intervention-phase trend for the purpose of comparison.
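The detrending step described above can be sketched in a few lines of Python. This is only an illustration of the subtraction itself: the function name `remove_trend`, the data, and the slope value are hypothetical, and the slope is passed in as an argument rather than estimated, so that no particular estimator is implied.

```python
def remove_trend(series, slope):
    """Subtract i * slope from the i-th value (occasions numbered from 1) of the whole series."""
    return [y - i * slope for i, y in enumerate(series, start=1)]


# Hypothetical data: n_A = 3 baseline and n_B = 3 intervention-phase measurements.
baseline = [1, 2, 3]
intervention = [6, 7, 8]
b1 = 1.0  # assumed baseline slope estimate, supplied for illustration only

detrended = remove_trend(baseline + intervention, b1)
detrended_a = detrended[:len(baseline)]
detrended_b = detrended[len(baseline):]

# A simple difference in phase means after detrending (illustrative only).
mean_shift = sum(detrended_b) / len(detrended_b) - sum(detrended_a) / len(detrended_a)
```

No intercept appears anywhere in this computation, which is why the step is better described as trend removal than as fitting and extrapolating a trend line.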

Nonoverlap indices

Among nonoverlap indices, the percentage of data points exceeding median trend (Wolery, Busick, Reichow, & Barton, 2010 ) involves fitting a split-middle (i.e., bi-split) trend line and extrapolating it into the subsequent phase. Regarding Tau-U (Parker et al., 2011 ), it only takes into account the number of baseline measurements that improve previous baseline measurements, and this number is subtracted from the number of intervention-phase values that improve the baseline-phase values. Therefore, no intercept or slope is estimated, and no trend line is fitted or extrapolated, either. The way in which trend is controlled for in Tau-U cannot be described as trend extrapolation in a strict sense.

Two other nonoverlap indices also entail baseline trend control. According to the “additional output” calculated at http://ktarlow.com/stats/tau/ , the baseline-corrected Tau (Tarlow, 2017) removes baseline trend from the data using the expression \( \tilde{y}_i = y_i - i \times b_{1(TS)};\ i = 1, 2, \dots, (n_A + n_B) \), where \( b_{1(TS)} \) is the Theil–Sen estimate of slope. In the percentage of nonoverlapping corrected data (Manolov & Solanas, 2009), baseline trend is eliminated from the \( n \) values via the same expression as for baseline-corrected Tau, \( \tilde{y}_i = y_i - i \times b_{1(D)};\ i = 1, 2, \dots, (n_A + n_B) \), but slope is estimated via \( b_{1(D)} \) (see Appendix B) instead of via \( b_{1(TS)} \). Therefore, as we discussed above for SLC, there is actually no trend extrapolation in the baseline-corrected Tau or percentage-of-nonoverlapping-corrected data.
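To make the trend-removal expression concrete, the following Python sketch computes a Theil–Sen slope as the median of all pairwise slopes in the baseline and then subtracts the trend from the whole series. The function names and the data are hypothetical; the sketch illustrates only the expression given above and is not a reimplementation of the baseline-corrected Tau calculator.

```python
from itertools import combinations
from statistics import median


def theil_sen_slope(values):
    """Median of the slopes between all pairs of points, with occasions numbered from 1."""
    pairs = combinations(enumerate(values, start=1), 2)
    return median((yj - yi) / (j - i) for (i, yi), (j, yj) in pairs)


def remove_baseline_trend(baseline, intervention):
    """Subtract i * slope from every value, with the slope estimated from the baseline only."""
    slope = theil_sen_slope(baseline)
    series = list(baseline) + list(intervention)
    return [y - i * slope for i, y in enumerate(series, start=1)]


# Hypothetical data with a perfectly linear baseline (slope of 2).
corrected = remove_baseline_trend([2, 4, 6], [10, 12])

# With a noisier hypothetical baseline, the median makes the estimate resistant to outliers.
noisy_slope = theil_sen_slope([1, 3, 2, 5, 4])
```

As in the SLC step, only a slope is estimated: no intercept is computed and no fitted line is projected into the intervention phase.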

Procedures not extrapolating trend

The analytical procedures included in the present subsection do not extrapolate baseline trend, but they do take baseline trend into account. We decided to mention these techniques for three reasons. First, we wanted to provide a broader overview of analytical techniques applicable to single-case data. Second, we wanted to make it explicit that not all analytical procedures entail baseline trend extrapolation, and therefore, such extrapolation is not an indispensable step in single-case data analysis. Stated in other words, it is possible to deal with baseline trend without extrapolating it. Third, the procedures mentioned here were those more recently developed or suggested for single-case data analysis, and so they may be less widely known. Moreover, they can be deemed more sophisticated and more strongly grounded on statistical theory than is MPD, which is the focus of the present article.

The between-case standardized mean difference, also known as the d statistic (Shadish, Hedges, & Pustejovsky, 2014), assumes stable data, but the possibility of detrending has been mentioned (Marso & Shadish, 2015) if baseline trend is present. It is not clear that a regression model using time and its interaction with a dummy variable representing phase entails baseline trend extrapolation. Moreover, a different approach was suggested by Pustejovsky, Hedges, and Shadish (2014) for obtaining a d statistic, namely in relation to multilevel analysis. In multilevel analysis, also referred to as hierarchical linear models, the trend in each phase can be modeled separately, and the slopes can be compared (Ferron, Bell, Hess, Rendina-Gobioff, & Hibbard, 2009). Another statistical option is to use generalized additive models (GAMs; Sullivan et al., 2015), in which there is greater flexibility for modeling the exact shape of the trend in each phase, without the need to specify a particular model a priori. GAMs that have been specifically suggested include the use of cubic polynomial curves fitted to different portions of the data and joined at the specific places (called knots) that divide the data into portions. Just like when using multilevel models, trend lines are fitted separately to each phase, without the need to extrapolate baseline trend.

A review of research published in 2015

Aim of the review

It has already been stated (Parker et al., 2011) and illustrated (Tarlow, 2017) that baseline trend extrapolation can lead to impossible forecasts for the subsequent intervention-phase data. Accordingly, our research question was the following: In what percentage of studies does extrapolating the baseline trend of the data set (across several different techniques for fitting the trend line) lead to values that are below the lower bound or above the upper bound of the outcome variable?

Search strategy

We focused on the four journals that have published the most SCED research, according to the review by Shadish and Sullivan (2011). These journals are Journal of Applied Behavior Analysis, Behavior Modification, Research in Autism Spectrum Disorders, and Focus on Autism and Other Developmental Disabilities. Each of these four journals published more than ten SCED studies in 2008, and the 76 studies they published represent 67% of all studies included in the Shadish and Sullivan review. Given that the bibliographic search was performed in September 2016, we focused on the year 2015 and looked for any articles using phase designs (AB designs, variations, or extensions) or alternation designs with a baseline phase and providing a graphical representation of the data, with at least three measurements in the initial baseline condition.

Techniques for finding a best-fitting straight line

For the present review, we selected five techniques for finding a best-fitting straight line: OLS, split-middle, tri-split, Theil–Sen, and differencing. The motivation for this choice was that these five techniques are included in single-case data-analytical procedures (Manolov, 2018 ), and therefore, applied researchers can potentially use them. The R code used for checking whether out-of-bounds forecasts are obtained is available at https://osf.io/js3hk/ .

Upper and lower bounds

The data were retrieved using Plot Digitizer for Windows ( https://plotdigitizer.sourceforge.net ). We counted the number and percentage of studies in which values out of logical bounds were obtained after extrapolating the baseline trend, estimated either from an initial baseline phase or from a subsequent withdrawal phase (e.g., in ABAB designs) for at least one of the data sets reported graphically in the article. The “logical bounds” were defined as 0 as a minimum and 1 or 100 as a maximum, when the measurement provided was a proportion or a percentage, respectively. Additional upper bounds included the maximal scores obtainable for an exam (e.g., Cheng, Huang, & Yang, 2015; Knight, Wood, Spooner, Browder, & O’Brien, 2015), for the number of steps in a task (e.g., S. J. Gardner & Wolfe, 2015), for the number of trials in the session (Brandt, Dozier, Juanico, Laudont, & Mick, 2015; Cannella-Malone, Sabielny, & Tullis, 2015), or for the duration of transition between a stimulus and reaching a location (Siegel & Lien, 2015), or the total duration of a session, when quantifying latency (Hine, Ardoin, & Foster, 2015). We chose a conservative approach and did not speculate (see Footnote 1) about upper bounds for behaviors that were expressed as either a frequency (e.g., Fiske et al., 2015; Ledbetter-Cho et al., 2015) or a rate (e.g., Austin & Tiger, 2015; Fahmie, Iwata, & Jann, 2015; Rispoli et al., 2015; Saini, Greer, & Fisher, 2015; see Footnote 2).
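The kind of check described above can be sketched as follows. This Python example is an illustration under stated assumptions, not the R code used for the review: it uses only an OLS trend line (one of the five techniques considered), and the function names, the default bounds, and the data are hypothetical.

```python
def ols_line(xs, ys):
    """Return (intercept, slope) of the ordinary least squares line through (xs, ys)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


def first_out_of_bounds(baseline, n_intervention, lower=0.0, upper=None):
    """Return the first intervention-phase occasion (1-based) whose extrapolated
    baseline forecast falls outside [lower, upper], or None if no forecast does.
    Passing upper=None means that no upper bound is assumed."""
    n_a = len(baseline)
    intercept, slope = ols_line(list(range(1, n_a + 1)), baseline)
    for k in range(1, n_intervention + 1):
        forecast = intercept + slope * (n_a + k)  # baseline trend projected forward
        if forecast < lower or (upper is not None and forecast > upper):
            return k
    return None


# Hypothetical percentage data (logical bounds 0 and 100).
decreasing = first_out_of_bounds([30, 20, 10], n_intervention=5, lower=0, upper=100)
increasing = first_out_of_bounds([80, 90, 100], n_intervention=5, lower=0, upper=100)
stable = first_out_of_bounds([50, 50, 50], n_intervention=5, lower=0, upper=100)
```

For the decreasing baseline the forecast for the first intervention occasion is exactly 0, which is still within bounds, so the first impossible value appears at the second occasion; for the increasing baseline the very first forecast already exceeds 100.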

Results of the review

The numbers of articles included per journal are as follows. From the Journal of Applied Behavior Analysis, 27 SCED studies were included from the 46 “research articles” published (excluding three alternating-treatments designs without a baseline), and 20 more SCED studies were included from the 30 “reports” published (excluding two alternating-treatments designs without a baseline and one changing-criterion design). From Behavior Modification, eight SCED studies were included from the 39 “articles” published (excluding two alternating-treatments design studies without a baseline, two studies with other designs without phases, one study with phases but only two measurements in the baseline phase, meta-analyses of single cases, and data analysis for single-case articles). From Research in Autism Spectrum Disorders, seven SCED studies were included from the 67 “original research articles” published (excluding one SCED study that did not have a minimum of three measurements per phase, as per Kratochwill et al., 2010). From Focus on Autism and Other Developmental Disabilities, six SCED studies were included from the 21 “articles” published. The references to all 68 articles reviewed are available in Appendix A at https://osf.io/js3hk/ .

The results of this review are as follows. Extrapolation led to impossibly small values for all five trend estimators in 27 studies (39.71%), in contrast to 34 studies (50.00%) in which that did not happen for any of the trend estimators. Complementarily, extrapolation led to impossibly large values for all five trend estimators in eight studies (11.76%), in contrast to 56 studies (82.35%) in which that did not happen for any of the trend estimators. In terms of when the extrapolation led to an impossible value, a summary is provided in Table 1 . Note that this table refers to the data set in each article, including the earliest out-of-bounds forecast. Thus, it can be seen that for all trend-line-fitting techniques, it was most common to have out-of-bounds forecasts already before the third intervention phase measurement occasion. This is relevant, considering that an immediate effect can be understood to refer to the first three intervention data points (Kratochwill et al., 2010 ).

These results suggest that researchers using techniques to extrapolate baseline trend should be cautious about downward trends that would apparently lead to negative values, if continued. We do not claim that the four journals and the year 2015 are representative of all published SCED research, but the evidence obtained suggests that trend extrapolation may affect the meaningfulness of the quantitative operations performed with the predicted data frequently enough for it to be considered an issue worth investigating.

Main issues when extrapolating baseline trend, and tentative solutions

The main issues when extrapolating baseline trend that were identified by Parker et al. ( 2011 ) include (a) unreliable trend lines being fitted; (b) the assumption that trends will continue unabated; (c) no consideration of the baseline phase length; and (d) the possibility of out-of-bounds forecasts. In this section, we comment on each of these four issues identified by Parker et al. ( 2011 ) separately (although they are related), and we propose tentative solutions, based on the existing literature. However, we begin by discussing in brief how these issues could be avoided rather than simply addressed.

Avoiding the issues