Identifying Empirical Research Articles


What is Empirical Research?

An empirical research article reports the results of a study that uses data derived from actual observation or experimentation. Empirical research articles are examples of primary research. To learn more about the differences between primary and secondary research, see our related guide:

- Primary and Secondary Sources

By the end of this guide, you will be able to:

- Identify common elements of an empirical article

- Use a variety of search strategies to search for empirical articles within the library collection

Look for the IMRaD layout in the article to help identify empirical research. Sometimes the sections will be labeled differently, but the content will be similar.

- Introduction: why the article was written, research question or questions, hypothesis, literature review

- Methods: the overall research design and implementation, description of sample, instruments used, how the authors measured their experiment

- Results: output of the author's measurements, usually includes statistics of the author's findings

- Discussion: the author's interpretation and conclusions about the results, limitations of study, suggestions for further research

Parts of an Empirical Research Article


The screenshots below identify the basic IMRaD structure of an empirical research article.

Introduction

The introduction contains a literature review and the study's research hypothesis.

Method

The method section outlines the research design, participants, and measures used.

Results

The results section contains statistical data (charts, graphs, tables, etc.) and research participant quotes.

Discussion

The discussion section includes impacts, limitations, future considerations, and research.


- Last Updated: Nov 16, 2023 8:24 AM


Education: Identify Empirical Articles


How to Recognize Empirical Journal Articles

Definition of an empirical study: An empirical research article reports the results of a study that uses data derived from actual observation or experimentation. Empirical research articles are examples of primary research.

Parts of a standard empirical research article (articles will not necessarily use the exact terms listed below):

- Abstract ... A paragraph-length description of what the study includes.

- Introduction ... Includes a statement of the hypotheses for the research and a review of other research on the topic.

- Method ... Describes who the participants are, the design of the study, what the participants did, and what measures were used.

- Results ... Describes the outcomes of the measures of the study.

- Discussion ... Contains the interpretations and implications of the study.

- References ... Contains citation information on the material cited in the report (also called bibliography or works cited).

Characteristics of an Empirical Article:

- Empirical articles will include charts, graphs, or statistical analysis.

- Empirical research articles are usually substantial, typically 8 to 30 pages long.

- There is always a bibliography found at the end of the article.

Types of publications that publish empirical studies:

- Empirical research articles are published in scholarly or academic journals

- These journals are also called “peer-reviewed,” or “refereed” publications.

Examples of such publications include:

- American Educational Research Journal

- Computers & Education

- Journal of Educational Psychology

Databases that contain empirical research: (selected list only)

- List of other useful databases by subject area

This page is adapted from Eric Karkhoff's Sociology Research Guide: Identify Empirical Articles page (Cal State Fullerton Pollak Library).

Sample Empirical Articles

Roschelle, J., Feng, M., Murphy, R. F., & Mason, C. A. (2016). Online Mathematics Homework Increases Student Achievement. AERA Open.

Lester, J., Yamanaka, A., & Struthers, B. (2016). Gender microaggressions and learning environments: The role of physical space in teaching pedagogy and communication. Community College Journal of Research and Practice, 40(11), 909-926.


- Last Updated: Aug 15, 2024 2:42 PM

- URL: https://libguides.usc.edu/education

Penn State University Libraries

Empirical research in the social sciences and education.


Introduction: What is Empirical Research?

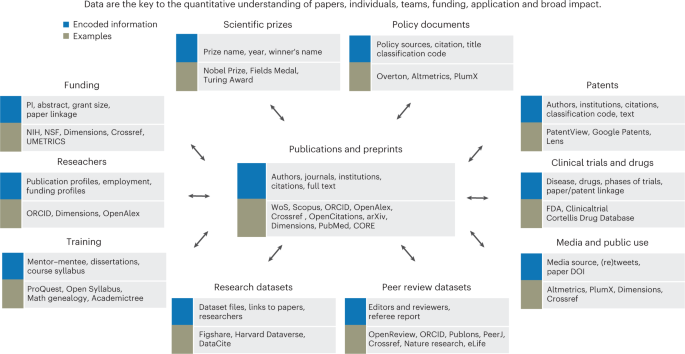

Empirical research is based on observed and measured phenomena and derives knowledge from actual experience rather than from theory or belief.

How do you know if a study is empirical? Read the subheadings within the article, book, or report and look for a description of the research "methodology." Ask yourself: Could I recreate this study and test these results?

Key characteristics to look for:

- Specific research questions to be answered

- Definition of the population, behavior, or phenomena being studied

- Description of the process used to study this population or phenomena, including selection criteria, controls, and testing instruments (such as surveys)

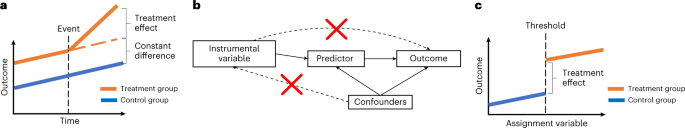

Another hint: some scholarly journals use a specific layout, called the "IMRaD" format, to communicate empirical research findings. Such articles typically have 4 components:

- Introduction: sometimes called "literature review" -- what is currently known about the topic -- usually includes a theoretical framework and/or discussion of previous studies

- Methodology: sometimes called "research design" -- how to recreate the study -- usually describes the population, research process, and analytical tools used in the present study

- Results: sometimes called "findings" -- what was learned through the study -- usually appears as statistical data or as substantial quotations from research participants

- Discussion: sometimes called "conclusion" or "implications" -- why the study is important -- usually describes how the research results influence professional practices or future studies
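Because section labels vary from journal to journal, it can help to treat the alternate names listed above as synonyms. The following is a minimal sketch, assuming you have already copied an article's section headings into a list; the function names and the synonym table are hypothetical, and "methods" and "method" are added as assumed variants of "methodology".

```python
# Alternate heading names drawn from the list above; real articles may use others.
# "methods" and "method" are assumed variants, not taken from the list itself.
IMRAD_SYNONYMS = {
    "introduction": {"introduction", "literature review"},
    "methodology": {"methodology", "methods", "method", "research design"},
    "results": {"results", "findings"},
    "discussion": {"discussion", "conclusion", "implications"},
}


def imrad_components(headings):
    """Return the IMRaD components matched by a list of section headings."""
    found = set()
    for heading in headings:
        normalized = heading.strip().lower()
        for component, names in IMRAD_SYNONYMS.items():
            if normalized in names:
                found.add(component)
    return found


def follows_imrad(headings):
    """Return True when all four IMRaD components appear among the headings."""
    return imrad_components(headings) == set(IMRAD_SYNONYMS)
```

A match only suggests that an article may be empirical; reading the methodology section is still the deciding step.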

Reading and Evaluating Scholarly Materials

Reading research can be a challenge. However, the tutorials and videos below can help. They explain what scholarly articles look like, how to read them, and how to evaluate them:

- CRAAP Checklist: A frequently used checklist that helps you examine the currency, relevance, authority, accuracy, and purpose of an information source.

- IF I APPLY: A newer model of evaluating sources that encourages you to think about your own biases as a reader, as well as concerns about the item you are reading.

- Credo Video: How to Read Scholarly Materials (4 min.)

- Credo Tutorial: How to Read Scholarly Materials

- Credo Tutorial: Evaluating Information

- Credo Video: Evaluating Statistics (4 min.)

- Credo Tutorial: Evaluating for Diverse Points of View


- Last Updated: Aug 13, 2024 3:16 PM

- URL: https://guides.libraries.psu.edu/emp

Free APA Journals™ Articles

Recently published articles from subdisciplines of psychology covered by more than 90 APA Journals™ publications.

For additional free resources (such as article summaries, podcasts, and more), please visit the Highlights in Psychological Research page.

- Basic / Experimental Psychology

- Clinical Psychology

- Core of Psychology

- Developmental Psychology

- Educational Psychology, School Psychology, and Training

- Forensic Psychology

- Health Psychology & Medicine

- Industrial / Organizational Psychology & Management

- Neuroscience & Cognition

- Social Psychology & Social Processes

- Moving While Black: Intergroup Attitudes Influence Judgments of Speed (PDF, 71KB) Journal of Experimental Psychology: General February 2016 by Andreana C. Kenrick, Stacey Sinclair, Jennifer Richeson, Sara C. Verosky, and Janetta Lun

- Recognition Without Awareness: Encoding and Retrieval Factors (PDF, 116KB) Journal of Experimental Psychology: Learning, Memory, and Cognition September 2015 by Fergus I. M. Craik, Nathan S. Rose, and Nigel Gopie

- The Tip-of-the-Tongue Heuristic: How Tip-of-the-Tongue States Confer Perceptibility on Inaccessible Words (PDF, 91KB) Journal of Experimental Psychology: Learning, Memory, and Cognition September 2015 by Anne M. Cleary and Alexander B. Claxton

- Cognitive Processes in the Breakfast Task: Planning and Monitoring (PDF, 146KB) Canadian Journal of Experimental Psychology / Revue canadienne de psychologie expérimentale September 2015 by Nathan S. Rose, Lin Luo, Ellen Bialystok, Alexandra Hering, Karen Lau, and Fergus I. M. Craik

- Searching for Explanations: How the Internet Inflates Estimates of Internal Knowledge (PDF, 138KB) Journal of Experimental Psychology: General June 2015 by Matthew Fisher, Mariel K. Goddu, and Frank C. Keil

- Client Perceptions of Corrective Experiences in Cognitive Behavioral Therapy and Motivational Interviewing for Generalized Anxiety Disorder: An Exploratory Pilot Study (PDF, 62KB) Journal of Psychotherapy Integration March 2017 by Jasmine Khattra, Lynne Angus, Henny Westra, Christianne Macaulay, Kathrin Moertl, and Michael Constantino

- Attention-Deficit/Hyperactivity Disorder Developmental Trajectories Related to Parental Expressed Emotion (PDF, 160KB) Journal of Abnormal Psychology February 2016 by Erica D. Musser, Sarah L. Karalunas, Nathan Dieckmann, Tara S. Peris, and Joel T. Nigg

- The Integrated Scientist-Practitioner: A New Model for Combining Research and Clinical Practice in Fee-For-Service Settings (PDF, 58KB) Professional Psychology: Research and Practice December 2015 by Jenna T. LeJeune and Jason B. Luoma

- Psychotherapists as Gatekeepers: An Evidence-Based Case Study Highlighting the Role and Process of Letter Writing for Transgender Clients (PDF, 76KB) Psychotherapy September 2015 by Stephanie L. Budge

- Perspectives of Family and Veterans on Family Programs to Support Reintegration of Returning Veterans With Posttraumatic Stress Disorder (PDF, 70KB) Psychological Services August 2015 by Ellen P. Fischer, Michelle D. Sherman, Jean C. McSweeney, Jeffrey M. Pyne, Richard R. Owen, and Lisa B. Dixon

- "So What Are You?": Inappropriate Interview Questions for Psychology Doctoral and Internship Applicants (PDF, 79KB) Training and Education in Professional Psychology May 2015 by Mike C. Parent, Dana A. Weiser, and Andrea McCourt

- Cultural Competence as a Core Emphasis of Psychoanalytic Psychotherapy (PDF, 81KB) Psychoanalytic Psychology April 2015 by Pratyusha Tummala-Narra

- The Role of Gratitude in Spiritual Well-Being in Asymptomatic Heart Failure Patients (PDF, 123KB) Spirituality in Clinical Practice March 2015 by Paul J. Mills, Laura Redwine, Kathleen Wilson, Meredith A. Pung, Kelly Chinh, Barry H. Greenberg, Ottar Lunde, Alan Maisel, Ajit Raisinghani, Alex Wood, and Deepak Chopra

- Nepali Bhutanese Refugees Reap Support Through Community Gardening (PDF, 104KB) International Perspectives in Psychology: Research, Practice, Consultation January 2017 by Monica M. Gerber, Jennifer L. Callahan, Danielle N. Moyer, Melissa L. Connally, Pamela M. Holtz, and Beth M. Janis

- Does Monitoring Goal Progress Promote Goal Attainment? A Meta-Analysis of the Experimental Evidence (PDF, 384KB) Psychological Bulletin February 2016 by Benjamin Harkin, Thomas L. Webb, Betty P. I. Chang, Andrew Prestwich, Mark Conner, Ian Kellar, Yael Benn, and Paschal Sheeran

- Youth Violence: What We Know and What We Need to Know (PDF, 388KB) American Psychologist January 2016 by Brad J. Bushman, Katherine Newman, Sandra L. Calvert, Geraldine Downey, Mark Dredze, Michael Gottfredson, Nina G. Jablonski, Ann S. Masten, Calvin Morrill, Daniel B. Neill, Daniel Romer, and Daniel W. Webster

- Supervenience and Psychiatry: Are Mental Disorders Brain Disorders? (PDF, 113KB) Journal of Theoretical and Philosophical Psychology November 2015 by Charles M. Olbert and Gary J. Gala

- Constructing Psychological Objects: The Rhetoric of Constructs (PDF, 108KB) Journal of Theoretical and Philosophical Psychology November 2015 by Kathleen L. Slaney and Donald A. Garcia

- Expanding Opportunities for Diversity in Positive Psychology: An Examination of Gender, Race, and Ethnicity (PDF, 119KB) Canadian Psychology / Psychologie canadienne August 2015 by Meghana A. Rao and Stewart I. Donaldson

- Racial Microaggression Experiences and Coping Strategies of Black Women in Corporate Leadership (PDF, 132KB) Qualitative Psychology August 2015 by Aisha M. B. Holder, Margo A. Jackson, and Joseph G. Ponterotto

- An Appraisal Theory of Empathy and Other Vicarious Emotional Experiences (PDF, 151KB) Psychological Review July 2015 by Joshua D. Wondra and Phoebe C. Ellsworth

- An Attachment Theoretical Framework for Personality Disorders (PDF, 100KB) Canadian Psychology / Psychologie canadienne May 2015 by Kenneth N. Levy, Benjamin N. Johnson, Tracy L. Clouthier, J. Wesley Scala, and Christina M. Temes

- Emerging Approaches to the Conceptualization and Treatment of Personality Disorder (PDF, 111KB) Canadian Psychology / Psychologie canadienne May 2015 by John F. Clarkin, Kevin B. Meehan, and Mark F. Lenzenweger

- A Complementary Processes Account of the Development of Childhood Amnesia and a Personal Past (PDF, 585KB) Psychological Review April 2015 by Patricia J. Bauer

- Terminal Decline in Well-Being: The Role of Social Orientation (PDF, 238KB) Psychology and Aging March 2016 by Denis Gerstorf, Christiane A. Hoppmann, Corinna E. Löckenhoff, Frank J. Infurna, Jürgen Schupp, Gert G. Wagner, and Nilam Ram

- Student Threat Assessment as a Standard School Safety Practice: Results From a Statewide Implementation Study (PDF, 97KB) School Psychology Quarterly June 2018 by Dewey Cornell, Jennifer L. Maeng, Anna Grace Burnette, Yuane Jia, Francis Huang, Timothy Konold, Pooja Datta, Marisa Malone, and Patrick Meyer

- Can a Learner-Centered Syllabus Change Students’ Perceptions of Student–Professor Rapport and Master Teacher Behaviors? (PDF, 90KB) Scholarship of Teaching and Learning in Psychology September 2016 by Aaron S. Richmond, Jeanne M. Slattery, Nathanael Mitchell, Robin K. Morgan, and Jared Becknell

- Adolescents' Homework Performance in Mathematics and Science: Personal Factors and Teaching Practices (PDF, 170KB) Journal of Educational Psychology November 2015 by Rubén Fernández-Alonso, Javier Suárez-Álvarez, and José Muñiz

- Teacher-Ready Research Review: Clickers (PDF, 55KB) Scholarship of Teaching and Learning in Psychology September 2015 by R. Eric Landrum

- Enhancing Attention and Memory During Video-Recorded Lectures (PDF, 83KB) Scholarship of Teaching and Learning in Psychology March 2015 by Daniel L. Schacter and Karl K. Szpunar

- The Alleged "Ferguson Effect" and Police Willingness to Engage in Community Partnership (PDF, 70KB) Law and Human Behavior February 2016 by Scott E. Wolfe and Justin Nix

- Randomized Controlled Trial of an Internet Cognitive Behavioral Skills-Based Program for Auditory Hallucinations in Persons With Psychosis (PDF, 92KB) Psychiatric Rehabilitation Journal September 2017 by Jennifer D. Gottlieb, Vasudha Gidugu, Mihoko Maru, Miriam C. Tepper, Matthew J. Davis, Jennifer Greenwold, Ruth A. Barron, Brian P. Chiko, and Kim T. Mueser

- Preventing Unemployment and Disability Benefit Receipt Among People With Mental Illness: Evidence Review and Policy Significance (PDF, 134KB) Psychiatric Rehabilitation Journal June 2017 by Bonnie O'Day, Rebecca Kleinman, Benjamin Fischer, Eric Morris, and Crystal Blyler

- Sending Your Grandparents to University Increases Cognitive Reserve: The Tasmanian Healthy Brain Project (PDF, 88KB) Neuropsychology July 2016 by Megan E. Lenehan, Mathew J. Summers, Nichole L. Saunders, Jeffery J. Summers, David D. Ward, Karen Ritchie, and James C. Vickers

- The Foundational Principles as Psychological Lodestars: Theoretical Inspiration and Empirical Direction in Rehabilitation Psychology (PDF, 68KB) Rehabilitation Psychology February 2016 by Dana S. Dunn, Dawn M. Ehde, and Stephen T. Wegener

- Feeling Older and Risk of Hospitalization: Evidence From Three Longitudinal Cohorts (PDF, 55KB) Health Psychology Online First Publication — February 11, 2016 by Yannick Stephan, Angelina R. Sutin, and Antonio Terracciano

- Anger Intensification With Combat-Related PTSD and Depression Comorbidity (PDF, 81KB) Psychological Trauma: Theory, Research, Practice, and Policy January 2016 by Oscar I. Gonzalez, Raymond W. Novaco, Mark A. Reger, and Gregory A. Gahm

- Special Issue on eHealth and mHealth: Challenges and Future Directions for Assessment, Treatment, and Dissemination (PDF, 32KB) Health Psychology December 2015 by Belinda Borrelli and Lee M. Ritterband

- Posttraumatic Growth Among Combat Veterans: A Proposed Developmental Pathway (PDF, 110KB) Psychological Trauma: Theory, Research, Practice, and Policy July 2015 by Sylvia Marotta-Walters, Jaehwa Choi, and Megan Doughty Shaine

- Racial and Sexual Minority Women's Receipt of Medical Assistance to Become Pregnant (PDF, 111KB) Health Psychology June 2015 by Bernadette V. Blanchfield and Charlotte J. Patterson

- An Examination of Generational Stereotypes as a Path Towards Reverse Ageism (PDF, 205KB) The Psychologist-Manager Journal August 2017 by Michelle Raymer, Marissa Reed, Melissa Spiegel, and Radostina K. Purvanova

- Sexual Harassment: Have We Made Any Progress? (PDF, 121KB) Journal of Occupational Health Psychology July 2017 by James Campbell Quick and M. Ann McFadyen

- Multidimensional Suicide Inventory-28 (MSI-28) Within a Sample of Military Basic Trainees: An Examination of Psychometric Properties (PDF, 79KB) Military Psychology November 2015 by Serena Bezdjian, Danielle Burchett, Kristin G. Schneider, Monty T. Baker, and Howard N. Garb

- Cross-Cultural Competence: The Role of Emotion Regulation Ability and Optimism (PDF, 100KB) Military Psychology September 2015 by Bianca C. Trejo, Erin M. Richard, Marinus van Driel, and Daniel P. McDonald

- The Effects of Stress on Prospective Memory: A Systematic Review (PDF, 149KB) Psychology & Neuroscience September 2017 by Martina Piefke and Katharina Glienke

- Don't Aim Too High for Your Kids: Parental Overaspiration Undermines Students' Learning in Mathematics (PDF, 164KB) Journal of Personality and Social Psychology November 2016 by Kou Murayama, Reinhard Pekrun, Masayuki Suzuki, Herbert W. Marsh, and Stephanie Lichtenfeld

- Sex Differences in Sports Interest and Motivation: An Evolutionary Perspective (PDF, 155KB) Evolutionary Behavioral Sciences April 2016 by Robert O. Deaner, Shea M. Balish, and Michael P. Lombardo

- Asian Indian International Students' Trajectories of Depression, Acculturation, and Enculturation (PDF, 210KB) Asian American Journal of Psychology March 2016 by Dhara T. Meghani and Elizabeth A. Harvey

- Cynical Beliefs About Human Nature and Income: Longitudinal and Cross-Cultural Analyses (PDF, 163KB) Journal of Personality and Social Psychology January 2016 by Olga Stavrova and Daniel Ehlebracht

- Annual Review of Asian American Psychology, 2014 (PDF, 384KB) Asian American Journal of Psychology December 2015 by Su Yeong Kim, Yishan Shen, Yang Hou, Kelsey E. Tilton, Linda Juang, and Yijie Wang

- Resilience in the Study of Minority Stress and Health of Sexual and Gender Minorities (PDF, 40KB) Psychology of Sexual Orientation and Gender Diversity September 2015 by Ilan H. Meyer

- Self-Reported Psychopathy and Its Association With Criminal Cognition and Antisocial Behavior in a Sample of University Undergraduates (PDF, 91KB) Canadian Journal of Behavioural Science / Revue canadienne des sciences du comportement July 2015 by Samantha J. Riopka, Richard B. A. Coupland, and Mark E. Olver


Research: Overview & Approaches


Introduction to Empirical Research


- Introductory Video: This video covers what empirical research is, what kinds of questions and methods empirical researchers use, and some tips for finding empirical research articles in your discipline.

- Guided Search: Finding Empirical Research Articles. This is a hands-on tutorial that will allow you to use your own search terms to find resources.

- Study on radiation transfer in human skin for cosmetics

- Long-Term Mobile Phone Use and the Risk of Vestibular Schwannoma: A Danish Nationwide Cohort Study

- Emissions Impacts and Benefits of Plug-In Hybrid Electric Vehicles and Vehicle-to-Grid Services

- Review of design considerations and technological challenges for successful development and deployment of plug-in hybrid electric vehicles

- Endocrine disrupters and human health: could oestrogenic chemicals in body care cosmetics adversely affect breast cancer incidence in women?


- Last Updated: Aug 13, 2024 12:18 PM

- URL: https://guides.lib.purdue.edu/research_approaches


Identify Empirical Research Articles


- Guide to the Successful Thesis and Dissertation: A Handbook for Students and Faculty

- Practical Guide to the Qualitative Dissertation

- Guide to Writing Empirical Papers, Theses, and Dissertations

What is a Literature Review--YouTube

Literature Review Guides

- How to write a literature review

- The Literature Review: a few steps on conducting it. Permission granted from Writing at the University of Toronto.

- Six steps for writing a literature review. This blog, written by Tanya Golash-Boza, PhD, an Associate Professor of Sociology at the University of California at Merced, offers simple, practical advice on how to write a literature review from the point of view of an experienced professional.

- The Writing Center, University of North Carolina at Chapel Hill. Permission granted to use this guide.

- Writing Center University of North Carolina

- Literature Reviews. Otago Polytechnic in New Zealand produced this guide and in my opinion, it is one of the best. NOTE: Except where otherwise noted, content on this site is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License. However, all images and Otago Polytechnic videos are copyrighted.

What Are Empirical Articles?

As a student at the University of La Verne, you may be instructed by faculty to read and analyze empirical articles when writing a research paper, a senior or master's project, or a doctoral dissertation. How can you recognize an empirical article in an academic discipline? An empirical research article is an article that reports research based on actual observations or experiments. The research may use quantitative research methods, which generate numerical data and seek to establish causal relationships between two or more variables.(1) Empirical research articles may also use qualitative research methods, which objectively and critically analyze behaviors, beliefs, feelings, or values with few or no numerical data available for analysis.(2)

How can I determine if I have found an empirical article?

When looking at an article or the abstract of an article, here are some guidelines to use to decide if an article is an empirical article.

- Is the article published in an academic, scholarly, or professional journal? Popular magazines such as Business Week or Newsweek do not publish empirical research articles; academic journals such as Business Communication Quarterly or Journal of Psychology may publish empirical articles. Some professional journals, such as JAMA: Journal of the American Medical Association, publish empirical research. Other professional journals, such as Coach & Athletic Director, publish articles of professional interest, but they do not publish research articles.

- Does the abstract of the article mention a study, an observation, an analysis or a number of participants or subjects? Was data collected, a survey or questionnaire administered, an assessment or measurement used, an interview conducted? All of these terms indicate possible methodologies used in empirical research.

- Introduction - The introduction provides a very brief summary of the research.

- Methodology - The method section describes how the research was conducted, including who the participants were, the design of the study, what the participants did, and what measures were used.

- Results - The results section describes the outcomes of the measures of the study.

- Discussion - The discussion section contains the interpretations and implications of the study.

- Conclusion -

- References - A reference section contains information about the articles and books cited in the report and should be substantial.

- How long is the article? An empirical article is usually substantial; it is normally seven or more pages long.

When in doubt if an article is an empirical research article, share the article citation and abstract with your professor or a librarian so that we can help you become better at recognizing the differences between empirical research and other types of scholarly articles.
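The guidelines above can be read as a simple checklist. The following is a minimal sketch, assuming you have an article's abstract, page count, and journal type on hand; the function names and the term list are hypothetical illustrations, and the result is a rough screening aid, not a substitute for reading the article.

```python
import re

# Terms drawn from the abstract guideline above; illustrative, not exhaustive.
METHOD_TERMS = frozenset({
    "study", "studies", "observation", "observations", "analysis",
    "participants", "subjects", "data", "survey", "questionnaire",
    "assessment", "measurement", "interview", "interviews",
})


def empirical_signals(abstract, page_count, in_scholarly_journal):
    """Check an article against three of the guidelines above."""
    words = set(re.findall(r"[a-z]+", abstract.lower()))
    return {
        "scholarly_journal": bool(in_scholarly_journal),
        "method_terms_in_abstract": bool(words & METHOD_TERMS),
        "seven_or_more_pages": page_count >= 7,
    }


def looks_empirical(abstract, page_count, in_scholarly_journal):
    """Return True only when every signal is present."""
    signals = empirical_signals(abstract, page_count, in_scholarly_journal)
    return all(signals.values())
```

Requiring every signal is a deliberately strict choice for this sketch; an article that fails one check may still be empirical, so treat a negative result as a prompt to look more closely.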

How can I search for empirical research articles using the electronic databases available through Wilson Library?

- A quick and somewhat superficial way to look for empirical research is to type your search terms into the database's search boxes, then type STUDY OR STUDIES in the final search box to look for studies on your topic area. Be certain to use the ability to limit your search to scholarly/professional journals if that is available on the database. Evaluate the results of your search using the guidelines above to determine if any of the articles are empirical research articles.

- In EbscoHost databases, such as Education Source, on the Advanced Search page you should see a PUBLICATION TYPE field; highlight the appropriate entry. Empirical research may not be the term used; look for a term that may be a synonym for empirical research. ERIC uses REPORTS-RESEARCH. Also find the field for INTENDED AUDIENCE and highlight RESEARCHER. PsycARTICLES and PsycINFO include a field for METHODOLOGY where you can highlight EMPIRICAL STUDY. National Criminal Justice Reference Service Abstracts has a field for DOCUMENT TYPE; highlight STUDIES/RESEARCH REPORTS. Then evaluate the articles you find using the guidelines above to determine if an article is empirical.

- In ProQuest databases, such as ProQuest Psychology Journals, on the Advanced Search page look under MORE SEARCH OPTIONS and click on the pull-down menu for DOCUMENT TYPE and highlight an appropriate type, such as REPORT or EVIDENCE BASED. Also look for the SOURCE TYPE field and highlight SCHOLARLY JOURNALS. Evaluate the search results using the guidelines to determine if an article is empirical.

- PubMed Central, Sage Premier, ScienceDirect, Wiley Interscience, and Wiley Interscience Humanities and Social Sciences consist of scholarly and professional journals which publish primarily empirical articles. After conducting a subject search in these databases, evaluate the items you find by using the guidelines above for deciding if an article is empirical.
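The quick search described in the first tip above amounts to combining your topic terms with STUDY OR STUDIES. The following is a minimal sketch of that combination as a single search string. It assumes the database joins separate search boxes with AND and accepts quoted phrases, which is common but not guaranteed; the function name `build_query` is hypothetical.

```python
def build_query(topic_terms, study_terms=("study", "studies")):
    """Combine topic terms with AND, then require one of the study terms.

    Multi-word topic terms are wrapped in quotes so they are searched
    as phrases (an assumption about the database's syntax).
    """
    topic = " AND ".join(
        f'"{term}"' if " " in term else term for term in topic_terms
    )
    studies = " OR ".join(study_terms)
    return f"({topic}) AND ({studies})"


# Example: one single-word term and one phrase.
query = build_query(["homework", "student achievement"])
print(query)  # (homework AND "student achievement") AND (study OR studies)
```

As with the manual approach, the results still need to be evaluated against the guidelines above, since the word "study" also appears in many non-empirical articles.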

(1) "Quantitative research." A Dictionary of Nursing. Oxford University Press, 2008. Oxford Reference Online. Oxford University Press. University of La Verne. 25 August 2009.

(2) "Qualitative analysis." A Dictionary of Public Health. Ed. John M. Last. Oxford University Press, 2007. Oxford Reference Online. Oxford University Press. University of La Verne. 25 August 2009.


- Last Updated: Jul 29, 2024 7:29 PM

- URL: https://laverne.libguides.com/empirical-articles

Identify Empirical Research Articles


Getting started

According to the APA, empirical research is defined as the following: "Study based on facts, systematic observation, or experiment, rather than theory or general philosophical principle." Empirical research articles are generally located in scholarly, peer-reviewed journals and often follow a specific layout known as IMRaD:

1) Introduction - This provides a theoretical framework and might discuss previous studies related to the topic at hand.

2) Methodology - This describes the analytical tools used, research process, and the populations included.

3) Results - Sometimes this is referred to as findings, and it typically includes statistical data.

4) Discussion - This can also be known as the conclusion to the study; it usually describes what was learned and how the results can impact future practices.

In addition to IMRaD, it's important to see a conclusion and references that can back up the author's claims.

Characteristics to look for

In addition to the IMRaD format mentioned above, empirical research articles contain several key characteristics for identification purposes:

- The length of empirical research is often substantial, usually eight to thirty pages long.

- You should see data of some kind, such as graphs, charts, or some kind of statistical analysis.

- There is always a bibliography found at the end of the article.

Publications

Empirical research articles can be found in scholarly or academic journals. These types of journals are often referred to as "peer-reviewed" publications; this means qualified members of an academic discipline review and evaluate an academic paper's suitability in order to be published.

The CRAAP Checklist can help you examine the currency, relevance, authority, accuracy, and purpose of an information resource. This checklist was developed by California State University's Meriam Library.

This page has been adapted from the Sociology Research Guide: Identify Empirical Articles at Cal State Fullerton Pollak Library.


- Last Updated: Feb 22, 2024 10:12 AM

- URL: https://paloaltou.libguides.com/empiricalresearch

Empirical Research

- Living reference work entry

- First Online: 22 May 2017

- Emeka Thaddues Njoku

The term “empirical” entails gathered data based on experience, observations, or experimentation. In empirical research, knowledge is developed from factual experience as opposed to theoretical assumption. It usually involves the use of data sources like datasets or fieldwork, but it can also be based on observations within a laboratory setting. Testing hypotheses or answering definite questions is a primary feature of empirical research. Empirical research, in other words, involves employing working hypotheses that are tested through experimentation or observation. Hence, empirical research is a method of uncovering empirical evidence.

Through the process of gathering valid empirical data, scientists from a variety of fields, ranging from the social to the natural sciences, have to carefully design their methods. This helps to ensure quality and accuracy of data collection and treatment. However, any error in empirical data collection process could inevitably render such...

Author information

Authors and affiliations.

Department of Political Science, University of Ibadan, Ibadan, Oyo, 200284, Nigeria

Emeka Thaddues Njoku

Corresponding author

Correspondence to Emeka Thaddues Njoku .

Editor information

Editors and affiliations.

Rhinebeck, New York, USA

David A. Leeming

Copyright information

© 2017 Springer-Verlag GmbH Germany

About this entry

Cite this entry.

Njoku, E.T. (2017). Empirical Research. In: Leeming, D. (eds) Encyclopedia of Psychology and Religion. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-642-27771-9_200051-1

DOI : https://doi.org/10.1007/978-3-642-27771-9_200051-1

Received : 01 April 2017

Accepted : 08 May 2017

Published : 22 May 2017

Publisher Name : Springer, Berlin, Heidelberg

Print ISBN : 978-3-642-27771-9

Online ISBN : 978-3-642-27771-9

eBook Packages : Springer Reference Behavioral Science and Psychology Reference Module Humanities and Social Sciences Reference Module Business, Economics and Social Sciences

Writing Empirical Articles: Transparency, Reproducibility, Clarity, and Memorability

Morton Ann Gernsbacher

Department of Psychology, University of Wisconsin–Madison

https://orcid.org/0000-0003-0397-3329

This article provides recommendations for writing empirical journal articles that enable transparency, reproducibility, clarity, and memorability. Recommendations for transparency include preregistering methods, hypotheses, and analyses; submitting registered reports; distinguishing confirmation from exploration; and showing your warts. Recommendations for reproducibility include documenting methods and results fully and cohesively, by taking advantage of open-science tools, and citing sources responsibly. Recommendations for clarity include writing short paragraphs, composed of short sentences; writing comprehensive abstracts; and seeking feedback from a naive audience. Recommendations for memorability include writing narratively; embracing the hourglass shape of empirical articles; beginning articles with a hook; and synthesizing, rather than Mad Libbing, previous literature.

They began three and a half centuries ago (Wells, 1998). Since then, they’ve been written and read; cited, abstracted, and extracted; paywalled and unpaywalled; pre-printed and reprinted. They arose as correspondences between pairs of scientists (Kronick, 1984), then morphed into publicly disseminated conference presentations (Schaffner, 1994). By the 20th century, they’d grown into the format we use today (Mack, 2015). They are empirical journal articles, and their raison d’être was and continues to be communicating science.

Many of us baby boomers honed our empirical-article writing skills by following Bem’s (1987) how-to guide. We applied Bem’s recommendations to our own articles, and we assigned his chapter to our students and postdocs. The 2004 reprint of Bem’s chapter retains a high recommendation from the American Psychological Association (2010) in its “Guide for New Authors”; it appears in scores of graduate and undergraduate course syllabi ( Gernsbacher, 2017a ); and its advice is offered by numerous universities’ writing centers (e.g., Harvard College, 2008 ; Purdue Online Writing Lab, 2012 ; University of Connecticut, n.d. ; University of Minnesota, n.d. ; University of Washington, 2010 ).

However, psychological scientists have recently confronted their questionable research practices ( John, Loewenstein, & Prelec, 2012 ), many of which arise during the writing (or revising) process ( Sacco, Bruton, & Brown, 2018 ). Questionable research practices include

- failing to report all the studies conducted, conditions manipulated, participants tested, data collected, or other “researcher degrees of freedom” ( Simmons, Nelson, & Simonsohn, 2011 , p. 1359);

- fishing through statistical analyses to report only those meeting a certain level of statistical significance, which is a practice known as p-hacking (Simonsohn, Nelson, & Simmons, 2014);

- reporting an unpredicted result as though it had been hypothesized all along, which is a practice known as hypothesizing after the results are known (often referred to as HARKing; Kerr, 1998 ); and

- promising that the reported results bear implications beyond the populations sampled or materials and tasks administered ( Simons, Shoda, & Lindsay, 2017 ).

Unfortunately, some of these questionable reporting practices seem to be sanctioned in Bem’s how-to guide (Devlin, 2017; Vazire, 2014). For example, Bem’s chapter seems to encourage authors to p-hack their data. Authors are advised to

examine [your data] from every angle. Analyze the sexes separately. Make up new composite indexes. If a datum suggests a new hypothesis, try to find additional evidence for it elsewhere in the data. If you see dim traces of interesting patterns, try to reorganize the data to bring them into bolder relief. If there are participants you don’t like, or trials, observers, or interviewers who gave you anomalous results, drop them (temporarily). Go on a fishing expedition for something — anything — interesting ( Bem, 1987 , p. 172; Bem, 2004 , pp. 186–187).

Bem’s chapter has also been interpreted as encouraging authors to hypothesize after the results are known (Wagenmakers, Wetzels, Borsboom, & van der Maas, 2011). After acknowledging “there are two possible articles you can write: (a) the article you planned to write when you designed your study or (b) the article that makes the most sense now that you have seen the results,” Bem noted the two potential articles “are rarely the same” and directed authors to write the latter article by “recentering your article around the new findings and subordinating or even ignoring your original hypotheses” (Bem, 1987, pp. 171–173; Bem, 2004, pp. 186–187).

This article provides recommendations for writing empirical journal articles that communicate research processes and products transparently with enough detail to allow replication and reproducibility. 1 Like Bem’s chapter, this article also provides recommendations for writing empirical articles that are clear and memorable.

Open materials for this article, which are available at https://osf.io/q3pna/ , include a list of publicly available course syllabi that mention Bem’s (1987 , 2004) “Writing the Empirical Journal Article” chapter and a tally of word and sentence counts, along with citation counts, for Clark and Clark (1939 , 1940 , 1947) , Harlow (1958) , Miller (1956) , and Tolman (1948) .

Recommendations for Transparency

Researchers write empirical journal articles to report and record why they conducted their studies, how they conducted their studies, and what they observed in their studies. The value of these archival records depends on how transparently researchers write their reports. Writing transparently means, as the vernacular connotes, writing frankly.

Preregister your study

The best way to write transparent empirical articles is through preregistration ( Chambers et al., 2013 ). Preregistering a study involves specifying the study’s motivation, hypothesis, method, materials, sample, and analysis plan—basically everything but the results and discussion of those results—before the study is conducted.

Preregistration is a “time-stamped research plan that you can point to after conducting a study to prove to yourself and others that you really are testing a predicted relationship” (Mellor, as cited in Graf, 2017 , para.3). Indeed, most of our common statistical tests rest on the assumption that we have preregistered, or at the least previously specified, our predictions ( Wagenmakers, Wetzels, Borsboom, van der Maas, & Kievit, 2012 ).

For more than 20 years, medical journals have required preregistration for researchers conducting clinical trials (Maxwell, Kelley, & Rausch, 2008). More recently, sites such as Open Science Framework and AsPredicted.org allow all types of researchers to document their preregistration, and preregistration is considered a best practice by psychologists of many stripes: cognitive (de Groot, 2014), clinical (Tackett et al., 2017), comparative (Stevens, 2017), developmental (Donnellan, Lucas, Fraley, & Roisman, 2013), social (van 't Veer & Giner-Sorolla, 2016), personality (Asendorpf et al., 2013), relationship (Campbell, Loving, & LeBel, 2014), neuroscience (Button et al., 2013), and neuroimaging (Poldrack et al., 2017).

The benefits of preregistration are plentiful, both to our sciences and to ourselves. As Mellor noted (cited in Graf, 2017 , para. 8), “Every step that goes into a preregistration: writing the hypotheses, defining the variables, and creating statistical tests, are steps that we all have to take at some point. Making them before data collection can improve the researcher’s study design.” Misconceptions about preregistration are also plentiful. For instance, some researchers mistakenly believe that if a study is preregistered, unpredicted analyses cannot be reported; they can, but they need to be identified as exploratory (see, e.g., Neuroskeptic, 2013 ). Other researchers worry that purely exploratory research cannot be preregistered; it can, but it needs to be identified as exploratory (see, e.g., McIntosh, 2017 ). Preregistration manifests transparency and is, therefore, one of the most important steps in conducting and reporting research transparently.

Submit a registered report

A further step in writing transparent articles is to submit a registered report. Registered reports are journal articles for which both the authors’ preregistrations and their subsequent manuscripts undergo peer review. (Preregistration outside of submission as a registered-report journal article does not require peer review, only documentation.)

Registered reports epitomize how most of us were trained to do research. For our dissertations and masters’ theses, even our senior theses, we submitted our work to review at two stages: after we designed the study (e.g., at our dissertation proposal meeting) and after we collected and analyzed the data and interpreted our results (e.g., at our final defense). The same two-stage review occurs with registered-report journal articles ( Nosek & Lakens, 2014 ). More and more journals are providing authors with the option to publish registered reports (for a list, see Center for Open Science, n.d. ). The beauty of registered reports is that, as with our dissertations, our success depends not on the shimmer of our results but on the soundness of our ideas and the competence of our execution.

Distinguish confirmation from exploration

Writing transparently means distinguishing confirmation from exploration. To be sure, exploration is a valid and important mode of scientific inquiry. The exploratory analyses Bem wrote about (“examine [your data] from every angle”) are vital for discovery—and should not be discouraged. However, it is also vital to distinguish exploratory from confirmatory analyses.

For example, clarify whether “additional exploratory analysis was conducted” ( Brockhaus, 1980 , p. 517), “data were derived from an exploratory questionnaire” ( Scogin & Bienias, 1988 , p. 335), or “results . . . should be interpreted cautiously because of their exploratory nature” ( Martin & Stelmaczonek, 1988 , p. 387). Entire research projects may be exploratory ( McIntosh, 2017 ), but they must be identified as such (e.g., “Prediction of Improvement in Group Therapy: An Exploratory Study,” Yalom, Houts, Zimerberg, & Rand, 1967 ; and “Personality and Probabilistic Thinking: An Exploratory Study,” Wright & Phillips, 1979 ).

Show your warts

Scientific reporting demands showing your work ( Vazire, 2017 ); transparent scientific reporting demands showing your warts. If participants were excluded, explain why and how many: for example, “Two of these subjects were excluded because of their inability to comply with the imagery instructions at least 75% of the time” ( Sadalla, Burroughs, & Staplin, 1980 , p. 521).

Similarly, if data were lost, explain why and how many: for example, “Ratings for two subjects were lost to equipment error” ( Vrana, Spence, & Lang, 1988 , p. 488) or “Because of experimenter error, processing times were not available for 11 subjects” ( McDaniel & Einstein, 1986 , p. 56).

If one or more pilot studies were conducted, state that. If experiments were conducted in an order different from the reported order, state that. If participants participated in more than one study, state that. If measures were recalculated, stimuli were refashioned, procedures were reconfigured, variables were dropped, or items were modified (in short, if anything transgressed the prespecified plan and approach), state that.

Writing transparently also requires acknowledging when results are unpredicted: for example, “An unexpected result of Experiment 1 was the lack of an age . . . effect . . . due to different presentation rates” ( Kliegl, Smith, & Bakes, 1989 , p. 251) or “Unexpectedly, the female preponderance in depressive symptoms is strongly demonstrated in every age group in this high school sample” ( Allgood-Merten, Lewinsohn, & Hops, 1990 , p. 59). Concede when hypotheses lack support: for example, “we were unable to demonstrate that free care benefited people with a high income” ( Brook et al., 1983 , p. 1431) or “we cannot reject the null hypothesis with any confidence” ( Tannenbaum & Smith, 1964 , p. 407).

Consider placing a Statement of Transparency in either your manuscript or your supplementary materials: for example, “Statement of Transparency: The data used in the present study were initially collected as part of a larger exploratory study” ( Werner & Milyavskaya, 2017 , p. 3) or “As described in the Statement of Transparency in our online supplemental materials, we also collected additional variables and conducted further analyses that we treat as exploratory” ( Gehlbach et al., 2016 , p. 344).

Consider ending your manuscript with a Constraints on Generality statement ( Simons et al., 2017 ), which “defines the scope of the conclusions that are justified by your data” and “clarifies which aspects of your sample of participants, materials, and procedures should be preserved in a direct replication” (p. 1125; see Simons et al., 2017 , for examples).

Recommendations for Reproducibility

The soul of science is that its results are reproducible. Reproducible results are repeatable, reliable, and replicable. But reproducing a result, or simply trying to reproduce it, requires knowing in detail how that previous result was obtained. Therefore, writing for reproducibility means providing enough detail so readers will know how each result was obtained.

Document your research fully

Many researchers appreciate that empirical studies need to be reported accurately and completely (in fact, fully enough to allow other researchers to reproduce them), but they encounter a barrier: Many journals enforce word limits; some even limit the number of tables and figures that can accompany each article or the number of sources that can be cited. Journals’ limits can stymie authors’ efforts to write for reproducibility.

After using the maximum number of words allowed for methods and results, turn to open-science tools. Repositories, such as Open Science Framework (osf.io), PubMed Central (ncbi.nlm.nih.gov/pmc/), and Mendeley Data (mendeley.com/datasets), allow researchers to make their materials and data publicly available, which is a best practice quickly becoming mandatory (Lindsay, 2017; Munafò et al., 2017; Nosek et al., 2015). These repositories also allow researchers to make detailed documentation of their methods and results publicly available.

For example, I recently analyzed 5 million books, 25 million abstracts, and 150 million journal articles to examine scholars’ use of person-first (e.g., person with a disability ) versus identity-first (e.g., disabled person ) language ( Gernsbacher, 2017b ). Because the journal that published my article limited me to 2,000 words, eight citations, and zero tables or figures, I created and posted on Open Science Framework an accompanying technical report ( Gernsbacher, 2016 ), which served as my open notebook. For the current article, I also created a technical report ( Gernsbacher, 2017a ) to document the course syllabi that assign Bem’s chapter (mentioned earlier) and the word counts that illustrate classic articles’ concision (mentioned later).

By taking advantage of open-science repositories, authors can document

- why they qualify for the 21-Word Solution, which is a statement authors can place in their Method section to verify they have “report[ed] how [they] determined [their] sample size, all data exclusions (if any), all manipulations, and all measures in the study” ( Simmons, Nelson, & Simonsohn, 2012 , p. 4);

- how they fulfilled the Preferred Reporting Items for Systematic Reviews and Meta-Analyses checklist (PRISMA, 2015); and

- that they have met other methodological or statistical criteria (e.g., they have provided their data, materials, and code; Lindsay, 2017 ).

An accompanying technical report can serve as a publicly accessible lab notebook, which also comes in handy for selfish reasons ( Markowetz, 2015 ; McKiernan et al., 2016 ). A tidy, publicly accessible lab notebook can be, like tidy computer documentation, “a love letter you write to your future self” ( Conway, 2005 , p. 143).

Document your research cohesively

Documentation should also be cohesive. For instance, rather than posting a slew of separate supplementary files, consider combining all the supporting text, summary data, and supplementary tables and figures into one composite file. More helpfully, annotate the composite file with a table of contents or a set of in-file bookmarks.

A well-indexed composite file can reduce the frustration readers (and reviewers) incur when required to open multiple supplementary files (often generically named Supp. Fig. 1, Supp. Fig. 2, etc.). Posting a well-indexed composite file on an open-science platform can also ensure that valuable information is available outside of journals’ paywalls, with guaranteed access beyond the life of an individual researcher’s or journal’s Web site (e.g., Open Science Framework guarantees their repository for 50 years).

Cite sources responsibly

As Simkin and Roychowdhury (2003) advised in the title of their study demonstrating high rates of erroneous citations, “read before you cite.” Avoid “drive by citations” ( Perrin, 2009 ), which reference a study so generically as to appear pro forma. Ensure that a specific connection exists between your claim and the source you cite to support that claim. Is the citation the original statement of the idea, a comprehensive review, an example of a similar study, or a counterclaim? If so, make that connection clear, rather than simply grabbing and citing the first article that pops up in a Google Scholar search.

Interrogate a reference before citing it, rather than citing it simply because other articles do. For example, I tallied hundreds of articles that mistakenly cited Rizzolatti et al. (1996) as providing empirical evidence for mirror neurons in humans—despite neither Rizzolatti et al.’s data nor their text supporting that claim ( Gallese, Gernsbacher, Heyes, Hickok, & Iacoboni, 2011 ).

Try to include a linked DOI (digital object identifier) for every reference you cite. Clicking on a linked DOI takes your readers directly to the original source, without having to search for it by its title, authors, journal, or the like. 2 Moreover, a DOI, like an ISBN, provides a permanent link to a published work; therefore, DOIs obviate link rot and guarantee greater longevity than standard URLs, even journal publishers’ URLs.
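As a rough illustration of what a "linked DOI" means in practice, the sketch below turns a bare DOI into a clickable link by prefixing the public resolver address, https://doi.org/. The function name `doi_to_url` and the sample DOI are placeholders chosen for this example; they do not come from the article.

```python
from urllib.parse import quote

DOI_RESOLVER = "https://doi.org/"

# Prefixes people commonly paste along with a DOI (assumed list, not exhaustive).
KNOWN_PREFIXES = (
    "https://doi.org/",
    "http://doi.org/",
    "https://dx.doi.org/",
    "http://dx.doi.org/",
    "doi:",
)


def doi_to_url(doi: str) -> str:
    """Return a resolver link for a DOI such as '10.1000/xyz123'."""
    doi = doi.strip()
    for prefix in KNOWN_PREFIXES:
        if doi.lower().startswith(prefix):
            doi = doi[len(prefix):].strip()
            break
    # Every DOI begins with the directory indicator "10."
    if not doi.startswith("10."):
        raise ValueError(f"not a DOI: {doi!r}")
    # Percent-encode characters that are unsafe in a URL path, keeping "/".
    return DOI_RESOLVER + quote(doi, safe="/")


if __name__ == "__main__":
    # Placeholder DOI used only for illustration.
    print(doi_to_url("doi:10.1000/xyz123"))
```

Running a reference list through a helper like this before submission is one way to make sure every entry carries a working link rather than a bare identifier.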

Recommendations for Clarity

Empirical articles are becoming more difficult to read, as an analysis of nearly three-quarters of a million articles in more than 100 high-impact journals recently demonstrated (Plavén-Sigray, Matheson, Schiffler, & Thompson, 2017). Sentences in empirical articles have grown longer, and vocabulary has grown more abstruse. Therefore, the primary recommendation for achieving clarity in empirical articles is simple: Write concisely using plain language (Box 1 provides additional suggestions and resources for clear writing).

Additional Recommendations for Clear Writing

Use precise terms.

Concision requires precision. Rather than writing that a dependent variable is related to, influenced by, or affected by the independent variable, state the exact relation between the two variables or the precise effect one variable has on another. Did manipulating the independent variable increase, decrease, improve, worsen, augment, diminish, negate, strengthen, weaken, delay, or accelerate the dependent variable?

Most important, use precise terms in your title. Follow the example of Parker, Garry, Engle, Harper, and Clifasefi (2008), who titled their article “Psychotropic Placebos Reduce the Misinformation Effect by Increasing Monitoring at Test” rather than “The Effects of Psychotropic Placebos on Memory.”

Omit Needless Words

Numerous wordy expressions can be replaced by one word. For example, replace “due to the fact that,” “for the reason that,” or “owing to the fact that” with “because”; replace “for the purpose of” with “for”; “have the capability of” with “can”; “in the event that” with “if”; “during the course of” with “during”; “fewer in number” with “fewer”; “in order to” with “to”; and “whether or not” with “whether.” And replace the well-worn and wordy expression that appears in numerous acknowledgements, “we wish to thank,” with simply “we thank.”
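The replacements listed above can be turned into a simple draft checker. The sketch below is only an illustration: the lookup table copies the phrases from this paragraph, while the function name `flag_wordy` and the sample sentence are invented for the example.

```python
import re

# Phrase -> shorter alternative, copied from the paragraph above.
WORDY = {
    "due to the fact that": "because",
    "for the reason that": "because",
    "owing to the fact that": "because",
    "for the purpose of": "for",
    "have the capability of": "can",
    "in the event that": "if",
    "during the course of": "during",
    "fewer in number": "fewer",
    "in order to": "to",
    "whether or not": "whether",
    "we wish to thank": "we thank",
}


def flag_wordy(text):
    """Return (matched phrase, suggestion, offset) tuples in reading order."""
    hits = []
    for phrase, suggestion in WORDY.items():
        pattern = r"\b" + re.escape(phrase) + r"\b"
        for match in re.finditer(pattern, text, flags=re.IGNORECASE):
            hits.append((match.group(0), suggestion, match.start()))
    return sorted(hits, key=lambda hit: hit[2])


if __name__ == "__main__":
    draft = "In order to test this idea, we wish to thank our reviewers."
    for phrase, suggestion, offset in flag_wordy(draft):
        print(f"{offset:>3}: '{phrase}' -> '{suggestion}'")
```

The checker flags phrases rather than rewriting them, because some substitutions (for example, “have the capability of” to “can”) also change the verb form that follows and need a human edit.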

Build Parallel Structures

Parallel structure aids comprehension (Frazier, Taft, Roeper, Clifton, & Ehrlich, 1984), whereas disjointed structure (e.g., “Time flies like an arrow; fruit flies like a banana”) impedes comprehension (Gernsbacher, 1997). Simons (2012) demonstrated how to build parallel structure with the example sentence “Active reconstruction of a past experience differs from passively hearing a story about it.” That sentence lacks parallel structure because the first half uses a noun phrase (“Active reconstruction”), whereas the second half uses a gerundive nominal (“passively hearing”). But the sentence can easily be made parallel: “Actively reconstructing a past experience differs from passively hearing a story about it.”

Listen to Your Writing

Try reading aloud what you have written (or use text-to-speech software). Listening to your writing is a great way to catch errors and get a feel for whether your writing is too stilted (and your sentences are too long).

Read About Writing

Read about how to write clearly in Pinker’s (2015) book, Zinsser’s (2016) book, Wagenmakers’s (2009) article, Simons’s (2012) guide, and Gernsbacher’s (2013) graduate-level open-access course. Try testing the clarity of your writing with online readability indices (e.g., https://readable.io/text or https://wordcounttools.com).

Write short sentences

Every writing guide, from Strunk and White’s (1959) venerable Elements of Style to the prestigious journal Nature’s (2014) guide, admonishes writers to use shorter, rather than longer, sentences. Shorter sentences are not only easier to understand, but also better at conveying complex information (Flesch, 1948).

The trick to writing short sentences is to restrict each sentence to one and only one idea. Resist the temptation to embed multiple clauses or parentheticals, which challenge comprehension. Instead, break long, rambling sentences into crisp, more concise ones. For example, write the previous three short sentences rather than the following long sentence: The trick to writing short sentences is to restrict each sentence to one and only one idea by breaking long, rambling sentences into crisp, more concise ones while resisting the temptation to embed multiple clauses or parentheticals, which challenge comprehension.

How short is short enough? The Oxford Guide to Plain English ( Cutts, 2013 ) recommends averaging no more than 15 to 20 words per sentence. Such short, crisp sentences have been the mainstay of many great psychological scientists, including Kenneth and Mamie Clark. Their 1939, 1940, and 1947 articles reporting young Black children’s racial identification and self-esteem have garnered more than 2,500 citations. These articles figured persuasively in Brown v. Board of Education (1954) . And these articles’ sentences averaged 16 words.
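The 15-to-20-word guideline is easy to check on a draft. The sketch below is a minimal example, not the method used for the tallies reported in this article: it splits text on sentence-final punctuation (a naive rule that over-splits abbreviations such as “e.g.”) and reports the mean number of words per sentence. The function names are invented for this illustration.

```python
import re


def split_sentences(text):
    """Naively split on '.', '!' or '?' followed by whitespace."""
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return [part for part in parts if part]


def mean_words_per_sentence(text):
    """Average count of whitespace-separated words per sentence."""
    sentences = split_sentences(text)
    if not sentences:
        return 0.0
    return sum(len(sentence.split()) for sentence in sentences) / len(sentences)


if __name__ == "__main__":
    draft = "Write short sentences. Keep one idea in each. Readers will thank you."
    average = mean_words_per_sentence(draft)
    print(f"{average:.1f} words per sentence")
    if average > 20:
        print("Consider breaking up the longest sentences.")
```

A mean hides outliers, so it is also worth listing the few longest sentences in a draft and revising those first.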

Write short paragraphs

Combine short sentences into short paragraphs. Aim for around five sentences per paragraph. Harlow’s “The Nature of Love” (1958) , Tolman’s “Cognitive Maps in Rats and Men” (1948) , and Miller’s “The Magical Number Seven, Plus or Minus Two” (1956) , which have been cited more than 2,000, 5,000, and 25,000 times, respectively, average five sentences per paragraph.

The prototypical five-sentence paragraph comprises a topic sentence, three supporting sentences, and a conclusion sentence. For example, a paragraph in Parker, Garry, Engle, Harper, and Clifasefi’s (2008 , p. 410) article begins with the following topic sentence: “One of the puzzles of human behaviour is how taking a substance that does nothing can cause something.” The paragraph continues with three (in this case, conjoined) supporting sentences: “Phoney painkillers can lessen our pain or make it worse; phoney alcohol can lead us to do things we might otherwise resist, and phoney feedback can even cause us to shed body fat.” The paragraph then concludes with the sentence “Perhaps Kirsch (2004, p. 341) said it best: ‘Placebos are amazing.’”

Write comprehensive abstracts

Compiling a technical report and placing it on an open-source platform can circumvent a journal’s word limit for a manuscript. However, a journal’s word limit for an abstract is more difficult to circumvent. That limit is firm, and an abstract can often be the sole content that is read, particularly if the rest of the article lies behind a paywall. Therefore, authors need to make the most of their 150 or 250 words so that an abstract can inform on its own ( Mensh & Kording, 2017 ).

A clear abstract states the study’s primary hypothesis; its major methodology, including its sample size and sampled population; its main findings, along with their summary statistics; and its key implications. A clear abstract is explicit, concrete, and comprehensive, which was advice offered by Bem (1987 , 2004) .

Seek naive feedback

One of the best ways to ensure that a message is clear is to assess its clarity according to a naive audience ( Traxler & Gernsbacher, 1995 ). Indeed, the more naive the audience, the more informative the feedback ( Traxler & Gernsbacher, 1992 , 1993 ).

Unfortunately, some researchers seek feedback on their manuscripts from only their coauthors or fellow lab members. But coauthors and fellow lab members are hardly naive. Better feedback can be obtained from readers who are unfamiliar with the research—and unfamiliar with even the research area. If those readers say the writing is unclear (or a figure or table is confusing), it is, by definition, unclear (or confusing); it is best to revise for clarity.

Recommendations for Memorability

Most researchers want their articles not only to be read but also to be remembered. The goal in writing a memorable article is not necessarily to pen a flashy article; rather, the goal is to compose an article that enables readers to remember what they have read days or months later, as well as paragraphs or pages later ( Gernsbacher, 1990 ).

Write narratively

The primary tool for increasing memorability is writing narratively ( Bruner, 1991 ). An empirical article should tell a story, not in the sense of a tall tale but in the spirit of a coherent and logical narrative.

Even authors who bristle at the notion of scholarly articles as stories must surely recognize that empirical articles resemble Aristotelian narratives: Introduction sections begin with a premise (the previous literature) that leads to an inciting incident (however, ...) and conclude with a therefore (the methods used to combat the inciting incident).

Thus, Introduction sections and Method sections are empirical articles’ Act One, their setups. Results sections are empirical articles’ Act Two, their confrontations. And Discussion sections are empirical articles’ Act Three, their resolutions.

Writing Act One (introduction and methods) prior to collecting data, as we would do if submitting a registered report, helps us adhere to Feynman’s (1974) warning not to fool ourselves (e.g., not to misremember what we did vs. did not predict and, consequently, which analyses are vs. are not confirmatory).

Writing all sections narratively, as setup, confrontation, and then resolution, should increase their short- and long-term memorability. Similarly, writing methods and results as sequences of events should increase their memorability.

For methods, Bem (1987, 2004) recommended leading readers through the procedure as if they were research participants, which is an excellent idea. For results, readers can be led through the analytic pipeline in the sequence in which it occurred.

Embrace the hourglass

Bem advised that an article should be written “in the shape of an hourglass. It begins with broad general statements, progressively narrows down to the specifics of your study, and then broadens out again to more general considerations” (Bem, 1987, p. 175; Bem, 2004, p. 189). That advice should also not be jettisoned (Devlin, 2017).

Whether it is called the hourglass or the “broad-narrow-broad” structure (Mensh & Kording, 2017, p. 4), the notion is that well-written empirical articles begin broadly (theories and questions), narrow to specifics (methods and results), and end broadly (implications). Authors who embrace the hourglass shape aid their readers, particularly readers who skim (Weinstein, 2016).

Begin with a hook

Journal editors advise that articles “should offer a clear, direct, and compelling story that first hooks the reader” (Rains, 2012, p. 497). For example, Oyserman et al. (2017) began their article with the following hook, which led directly to a statement articulating what their article was about (illustrated here in italics):

Will you be going to that networking lunch? Will you be tempted by a donut at 4 pm? Will you be doing homework at 9 pm? If, like many people, your responses are based on your gut sense of who you are—shy or outgoing, a treat lover or a dieter, studious or a procrastinator—you made three assumptions about identity: that motivation and behavior are identity based, that identities are chronically on the mind, and that identities are stable. (p. 139)

As another example, Newman et al. (2014) began their article with the following hook:

In its classic piece, “Clinton Deploys Vowels to Bosnia,” the satirical newspaper The Onion quoted Trszg Grzdnjkln, 44. “I have six children and none of them has a name that is understandable to me or to anyone else. Mr. Clinton, please send my poor, wretched family just one ‘E.’ Please.” The Onion was onto something when it suggested that people with hard to pronounce names suffer while their more pronounceable counterparts benefit. (p. 1, italics added)

As a third example, Jakimik and Glenberg (1990) began their article with the following hook:

You’re zipping through an article in your favorite journal when your reading stops with a thud. The author has just laid out two alternative hypotheses and then referred to one of them as “the former approach.” But now you are confused about which was first, which was second. You curse the author and your own lack of concentration, reread the setup rehearsing the order of the two hypotheses, and finally figure out which alternative the author was referring to. We have experienced this problem, too, and we do not think that it is simply a matter of lack of concentration. The subject of this article is the reason for difficulty with referring devices such as “the former approach.” (p. 582, italics added)

Synthesize previous literature (rather than Mad Lib it)

In the game of Mad Libs, one player generates a list of words from specified categories, for instance, a proper name, an activity, and a number. Then, the other player fills a template sentence with that list of generated terms.

In a similar way, some authors review the literature by Mad Libbing terms into sentence templates, for example, “_____ [author’s name] investigated _____ [research topic] with _____ [number] of participants and found a statistically significant effect of _____ [variable] on _____ [variable].”

A more memorable, albeit more difficult, way to review the literature is to synthesize it, as Aronson (1969) illustrated in his synthesis of previous studies on cognitive dissonance:

The research [on cognitive dissonance] has been as diverse as it has been plentiful; its range extends from maze running in rats (Lawrence and Festinger, 1962) to the development of values in children (Aronson and Carlsmith, 1963); from the hunger of college sophomores (Brehm et al., 1964) to the proselytizing behavior of religious zealots (Festinger et al., 1956). The proliferation of research testing and extending dissonance theory results from the generality and simplicity of the theory. (p. 1)

Notice that Aronson wrote a coherent narrative in which phenomena, not researchers, are the topics. That is what is meant by synthesizing, not Mad Libbing, previous literature.

Even technical literature can be synthesized rather than Mad Libbed, as Guillem et al. (2011) demonstrated:

Cortical acetylcholine (ACh) release from the basal forebrain is essential for proper sensory processing and cognition (1–3) and tunes neuronal and synaptic activity in the underlying cortical networks (4,5). Loss of cholinergic function during aging and Alzheimer’s disease results in cognitive decline, notably a loss of memory and the ability to sustain attention (6,7). Interfering with the cholinergic system strongly affects cognition (3,8–13). Rapid changes in prefrontal cortical ACh levels at the scale of seconds are correlated with attending and detecting cues (14,15). Various types of nicotinic ACh receptor (nAChR) subunits are expressed in the prefrontal cortex (PFC) (16–18) ... However, the causal relation between nAChR β2 subunits (henceforth β2-nAChRs) expressed in the medial PFC (mPFC) and attention performance has not yet been demonstrated. (p. 888)

Guillem et al. began with a premise (“Cortical acetylcholine (ACh) release from the basal forebrain is essential”), which they then supported with the literature. They further developed their premise (“Loss of cholinergic function during aging and Alzheimer’s disease results in cognitive decline,” “Interfering with the cholinergic system strongly affects cognition,” and “Rapid changes in prefrontal cortical ACh levels ... are correlated with attending and detecting cues”), and they concluded with their “However.” They synthesized the literature to tell a story.

Writing clearly and memorably need not be orthogonal to writing transparently and enabling reproducibility. For example, in their seminal article on false memories for words presented in lists, Roediger and McDermott (1995)

- documented their experimental procedure fully enough to allow replication, including most recently a preregistered replication (Zwaan et al., 2017);

- provided their research materials openly (in an appendix);

- told their story in short paragraphs (average length of 5.1 sentences) and short sentences (average length of 18 words);

- embraced an hourglass shape (e.g., their discussion began by relating their study to prior work, continued by contrasting experiments that measured false recall vs. false recognition, extended to discussing phenomenological experience, and broadened to articulating implications); and

- transparently acknowledged parallel efforts by another research team (“While working on this article, we learned that Don Read was conducting similar research, which is described briefly in Lindsay & Read, 1994,” p. 804).

A well-written empirical article that enables reproducibility and transparency can also be clear and memorable.
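Authors who want to check their own drafts against the paragraph and sentence lengths reported above could compute the same two averages with a short script. The following is only a minimal sketch: the function names are hypothetical, paragraphs are assumed to be separated by blank lines, and the sentence splitter is deliberately naive (it breaks on ., !, or ? followed by whitespace, so abbreviations such as "e.g." or "et al." will be miscounted). It is not the method used to obtain the figures cited above.

```python
import re


def split_sentences(paragraph):
    """Naively split a paragraph into sentences at ., !, or ? followed by whitespace."""
    parts = re.split(r"(?<=[.!?])\s+", paragraph.strip())
    return [part for part in parts if part]


def length_stats(text):
    """Return (mean sentences per paragraph, mean words per sentence).

    Assumes paragraphs are separated by one or more blank lines.
    """
    paragraphs = [p for p in re.split(r"\n\s*\n", text) if p.strip()]
    sentences = [s for p in paragraphs for s in split_sentences(p)]
    if not sentences:
        return (0.0, 0.0)
    mean_sentences = len(sentences) / len(paragraphs)
    mean_words = sum(len(s.split()) for s in sentences) / len(sentences)
    return (mean_sentences, mean_words)


if __name__ == "__main__":
    draft = "One two three. Four five.\n\nSix seven eight nine."
    print(length_stats(draft))
```

Because the splitter is so simple, the numbers are best read as rough indicators for comparing drafts rather than as exact counts.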

Barring extraordinary disruption, empirical journal articles are likely to survive at least a couple more decades. Authors will continue to write empirical articles to communicate why they did their studies, how they did their studies, what they observed, and what those observations mean. And readers will continue to read empirical articles to receive this communication. The most successful articles will continue to embody Grice’s (1975) maxims for communication: They will be informative, truthful, relevant, clear, and memorable.

1 Some researchers distinguish between replication, which they define as corroborating previous results by collecting new data, and reproduction, which they define as corroborating previous results by analyzing previous data (Peng, 2011). Other researchers consider the two terms to be synonymous (Shuttleworth, 2009), or they propose that the two terms should be used synonymously (Goodman, Fanelli, & Ioannidis, 2016).

2 To make a linked, or clickable, DOI, simply add the prefix https://doi.org/ to the alphanumeric string.
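As a minimal sketch of that step, the snippet below prepends the resolver address to a DOI string. The function name `doi_to_url` is hypothetical, and the handling of a leading "doi:" label is an added convenience, not part of the footnote's advice.

```python
DOI_RESOLVER = "https://doi.org/"


def doi_to_url(doi):
    """Turn a bare DOI such as '10.1086/448619' into a clickable link."""
    doi = doi.strip()
    # Drop a leading "doi:" label if the string was copied from a reference list.
    if doi.lower().startswith("doi:"):
        doi = doi[len("doi:"):].strip()
    return DOI_RESOLVER + doi


if __name__ == "__main__":
    print(doi_to_url("10.1086/448619"))
```

A DOI copied from a reference list may carry the sentence-ending period; remove it by hand before building the link.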

Author Contributions

Declaration of Conflicting Interests

The author(s) declared no conflicts of interest with respect to the authorship or the publication of this article.

Data and materials are available via Open Science Framework and can be accessed at https://osf.io/uxych. The Open Practices Disclosure for this article can be found at http://journals.sagepub.com/doi/suppl/10.1177/2515245918754485. This article has received badges for Open Data and Open Materials. More information about the Open Practices badges can be found at http://www.psychologicalscience.org/publications/badges.

- Allgood-Merten B, Lewinsohn PM, Hops H. Sex differences and adolescent depression. Journal of Abnormal Psychology. 1990;99:55–63. doi: 10.1037/0021-843X.99.1.55.

- American Psychological Association. Preparing manuscripts for publication in psychology journals: A guide for new authors. 2010. https://www.apa.org/pubs/authors/new-author-guide.pdf

- Aronson E. The theory of cognitive dissonance: A current perspective. In: Berkowitz L, editor. Advances in experimental social psychology. Vol. 4. New York, NY: Academic Press; 1969. pp. 1–34.

- Asendorpf JB, Conner M, De Fruyt F, De Houwer J, Denissen JJA, Fiedler K, Wicherts JM. Recommendations for increasing replicability in psychology. European Journal of Personality. 2013;27:108–119. doi: 10.1002/per.1919.

- Bem DJ. Writing the empirical journal article. In: Zanna MP, Darley JM, editors. The compleat academic: A practical guide for the beginning social scientist. Hillsdale, NJ: Erlbaum; 1987. pp. 171–201.

- Bem DJ. Writing the empirical journal article. In: Darley JM, Zanna MP, Roediger HL III, editors. The compleat academic: A practical guide for the beginning social scientist. 2nd ed. Washington, DC: American Psychological Association; 2004. pp. 185–219.

- Brockhaus RH. Risk taking propensity of entrepreneurs. Academy of Management Journal. 1980;23:509–520. doi: 10.5465/ambpp.1976.4975934.

- Brook RH, Ware JE Jr, Rogers WH, Keeler EB, Davies AR, Donald CA, Newhouse JP. Does free care improve adults’ health? Results from a randomized controlled trial. The New England Journal of Medicine. 1983;308:1426–1434. doi: 10.1056/NEJM198312083092305.

- Brown v. Board of Educ., 347 U.S. 483 (1954).

- Bruner J. The narrative construction of reality. Critical Inquiry. 1991;18:1–21. doi: 10.1086/448619.

- Button KS, Ioannidis JPA, Mokrysz C, Nosek BA, Flint J, Robinson ESJ, Munafò MR. Power failure: Why small sample size undermines the reliability of neuroscience. Nature Reviews Neuroscience. 2013;14:365–376. doi: 10.1038/nrn3475.

- Campbell L, Loving TJ, Lebelc EB. Enhancing transparency of the research process to increase accuracy of findings: A guide for relationship researchers. Personal Relationships. 2014;21:531–545. doi: 10.1111/pere.12053.

- Center for Open Science. Registered Reports: Peer review before results are known to align scientific values and practices. n.d. https://cos.io/rr/

- Chambers C, Munafo M, and more than 80 signatories. Trust in science would be improved by study pre-registration. The Guardian. 2013 Jun 5. https://www.theguardian.com/science/blog/2013/jun/05/trust-in-science-study-pre-registration

- Clark KB, Clark MK. The development of consciousness of self and the emergence of racial identification in Negro preschool children. Journal of Social Psychology. 1939;10:591–599. doi: 10.1080/00224545.1939.9713394.

- Clark KB, Clark MK. Skin color as a factor in racial identification of Negro preschool children. Journal of Social Psychology. 1940;11:159–169. doi: 10.1080/00224545.1940.9918741.

- Clark KB, Clark MK. Racial identification and preference in Negro children. In: Newcomb TM, Hartley EL, editors. Readings in social psychology. New York, NY: Henry Holt; 1947. pp. 169–178.