
What Is Peer Review? | Types & Examples

Published on December 17, 2021 by Tegan George. Revised on June 22, 2023.

Peer review, sometimes referred to as refereeing, is the process of evaluating submissions to an academic journal. Using strict criteria, a panel of reviewers in the same subject area decides whether to accept each submission for publication.

Peer-reviewed articles are considered a highly credible source due to the stringent process they go through before publication.

There are various types of peer review. The main difference between them is to what extent the authors, reviewers, and editors know each other’s identities. The most common types are:

- Single-blind review

- Double-blind review

- Triple-blind review

- Collaborative review

- Open review

Relatedly, peer assessment is a process where your peers provide you with feedback on something you’ve written, based on a set of criteria or benchmarks from an instructor. They then give constructive feedback, compliments, or guidance to help you improve your draft.


What is the purpose of peer review?

Many academic fields use peer review, largely to determine whether a manuscript is suitable for publication. Peer review enhances the credibility of the manuscript. For this reason, academic journals are among the most credible sources you can refer to.

However, peer review is also common in non-academic settings. The United Nations, the European Union, and many individual nations use peer review to evaluate grant applications. It is also widely used in medical and health-related fields as a teaching or quality-of-care measure.

Peer assessment is often used in the classroom as a pedagogical tool. Both receiving feedback and providing it are thought to enhance the learning process, helping students think critically and collaboratively.


Types of peer review

Depending on the journal, there are several types of peer review.

Single-blind peer review

The most common type of peer review is single-blind (or single anonymized) review. Here, the names of the reviewers are not known by the author.

While this gives the reviewers the ability to give feedback without the possibility of interference from the author, there has been substantial criticism of this method in the last few years. Many argue that single-blind reviewing can lead to poaching or intellectual theft or that anonymized comments cause reviewers to be too harsh.

Double-blind peer review

In double-blind (or double anonymized) review, both the author and the reviewers are anonymous.

Arguments for double-blind review highlight that this mitigates any risk of prejudice on the side of the reviewer, while protecting the nature of the process. In theory, it also leads to manuscripts being published on merit rather than on the reputation of the author.

Triple-blind peer review

While triple-blind (or triple anonymized) review, where the identities of the author, reviewers, and editors are all anonymized, does exist, it is difficult to carry out in practice.

Proponents of adopting triple-blind review for journal submissions argue that it minimizes potential conflicts of interest and biases. However, ensuring anonymity is logistically challenging, and current editing software is not always able to fully anonymize everyone involved in the process.

Collaborative review

In collaborative review, authors and reviewers interact with each other directly throughout the process. However, the identity of the reviewer is not known to the author. This gives all parties the opportunity to resolve any inconsistencies or contradictions in real time, and provides them a rich forum for discussion. It can mitigate the need for multiple rounds of editing and minimize back-and-forth.

Collaborative review can be time- and resource-intensive for the journal, however. For these collaborations to occur, there has to be a set system in place, often a technological platform, with staff monitoring and fixing any bugs or glitches.

Open review

Lastly, in open review, all parties know each other’s identities throughout the process. Often, open review can also include feedback from a larger audience, such as an online forum, or reviewer feedback included as part of the final published product.

While many argue that greater transparency prevents plagiarism or unnecessary harshness, there is also concern about the quality of future scholarship if reviewers feel they have to censor their comments.

The peer review process

In general, the peer review process includes the following steps:

- First, the author submits the manuscript to the editor.

- The editor then either:

  - Rejects the manuscript and sends it back to the author, or

  - Sends it onward to the selected peer reviewer(s).

- Next, the peer review process occurs. The reviewer provides feedback, addressing any major or minor issues with the manuscript, and gives their advice regarding what edits should be made.

- Lastly, the edited manuscript is sent back to the author. They input the edits and resubmit it to the editor for publication.

In an effort to be transparent, many journals are now disclosing who reviewed each article in the published product. There are also increasing opportunities for collaboration and feedback, with some journals allowing open communication between reviewers and authors.

Providing feedback to your peers

It can seem daunting at first to conduct a peer review or peer assessment. If you’re not sure where to start, there are several best practices you can use.

Summarize the argument in your own words

Summarizing the main argument helps the author see how their argument is interpreted by readers, and gives you a jumping-off point for providing feedback. If you’re having trouble doing this, it’s a sign that the argument needs to be clearer, more concise, or worded differently.

If the author sees that you’ve interpreted their argument differently than they intended, they have an opportunity to address any misunderstandings when they get the manuscript back.

Separate your feedback into major and minor issues

It can be challenging to keep feedback organized. One strategy is to start out with any major issues and then flow into the more minor points. It’s often helpful to keep your feedback in a numbered list, so the author has concrete points to refer back to.

Major issues typically consist of any problems with the style, flow, or key points of the manuscript. Minor issues include spelling errors, citation errors, or other smaller, easy-to-apply feedback.

Tip: Try not to focus too much on the minor issues. If the manuscript has a lot of typos, consider making a note that the author should address spelling and grammar issues, rather than going through and fixing each one.

The best feedback you can provide is anything that helps them strengthen their argument or resolve major stylistic issues.

Give the type of feedback that you would like to receive

No one likes being criticized, and it can be difficult to give honest feedback without sounding overly harsh or critical. One strategy you can use here is the “compliment sandwich,” where you “sandwich” your constructive criticism between two compliments.

Be sure you are giving concrete, actionable feedback that will help the author submit a successful final draft. While you shouldn’t tell them exactly what they should do, your feedback should help them resolve any issues they may have overlooked.

As a rule of thumb, your feedback should be:

- Easy to understand

- Constructive

Peer review example

Below is a brief research example of the kind a peer reviewer might be asked to give feedback on.

Influence of phone use on sleep

Studies show that teens from the US are getting less sleep than they were a decade ago (Johnson, 2019). On average, teens only slept for 6 hours a night in 2021, compared to 8 hours a night in 2011. Johnson mentions several potential causes, such as increased anxiety, changed diets, and increased phone use.

The current study focuses on the effect phone use before bedtime has on the number of hours of sleep teens are getting.

For this study, a sample of 300 teens was recruited using social media, such as Facebook, Instagram, and Snapchat. The first week, all teens were allowed to use their phone the way they normally would, in order to obtain a baseline.

The sample was then divided into 3 groups:

- Group 1 was not allowed to use their phone before bedtime.

- Group 2 used their phone for 1 hour before bedtime.

- Group 3 used their phone for 3 hours before bedtime.

All participants were asked to go to sleep around 10 p.m. to control for variation in bedtime. In the morning, their Fitbit showed the number of hours they’d slept. They kept track of these numbers themselves for 1 week.

Two independent t tests were used in order to compare Group 1 and Group 2, and Group 1 and Group 3. The first t test showed no significant difference (p > .05) between the number of hours for Group 1 (M = 7.8, SD = 0.6) and Group 2 (M = 7.0, SD = 0.8). The second t test showed a significant difference (p < .01) between the average difference for Group 1 (M = 7.8, SD = 0.6) and Group 3 (M = 6.1, SD = 1.5).

This shows that teens sleep fewer hours a night if they use their phone for over an hour before bedtime, compared to teens who use their phone for 0 to 1 hours.
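When reviewing a results section like the one above, it can help to recompute the reported statistics from the summary values. The following is a minimal Python sketch, not the study's actual analysis: it computes Welch's t statistic and approximate degrees of freedom from the reported means and standard deviations. The example does not state group sizes, so `N_PER_GROUP = 100` (300 participants split evenly) is an assumption, and the function name `welch_t` is purely illustrative.

```python
import math


def welch_t(m1, s1, n1, m2, s2, n2):
    """Return Welch's t statistic and approximate degrees of freedom
    computed from summary statistics (means, SDs, group sizes)."""
    v1 = s1 ** 2 / n1  # squared standard error of mean 1
    v2 = s2 ** 2 / n2  # squared standard error of mean 2
    t = (m1 - m2) / math.sqrt(v1 + v2)
    # Welch-Satterthwaite approximation for the degrees of freedom
    df = (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))
    return t, df


# ASSUMPTION: group sizes are not reported in the example; 300 teens
# split evenly into 3 groups would give 100 per group.
N_PER_GROUP = 100

# Group 1 (M = 7.8, SD = 0.6) vs. Group 2 (M = 7.0, SD = 0.8)
t12, df12 = welch_t(7.8, 0.6, N_PER_GROUP, 7.0, 0.8, N_PER_GROUP)

# Group 1 (M = 7.8, SD = 0.6) vs. Group 3 (M = 6.1, SD = 1.5)
t13, df13 = welch_t(7.8, 0.6, N_PER_GROUP, 6.1, 1.5, N_PER_GROUP)

print(f"Group 1 vs 2: t = {t12:.2f}, df = {df12:.1f}")
print(f"Group 1 vs 3: t = {t13:.2f}, df = {df13:.1f}")
```

Under the assumed group size, the first comparison yields a t statistic of about 8, which would be hard to reconcile with the reported p > .05. With much smaller groups the statistic shrinks, so a reviewer might reasonably ask the author to report group sizes and test statistics alongside the p values.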

Advantages of peer review

Peer review is an established and hallowed process in academia, dating back hundreds of years. It provides various fields of study with metrics, expectations, and guidance to ensure published work is consistent with predetermined standards.

- Protects the quality of published research

Peer review can stop obviously problematic, falsified, or otherwise untrustworthy research from being published. Any content that raises red flags for reviewers can be closely examined in the review stage, preventing plagiarized or duplicated research from being published.

- Gives you access to feedback from experts in your field

Peer review represents an excellent opportunity to get feedback from renowned experts in your field and to improve your writing through their feedback and guidance. Experts with knowledge about your subject matter can give you feedback on both style and content, and they may also suggest avenues for further research that you hadn’t yet considered.

- Helps you identify any weaknesses in your argument

Peer review acts as a first defense, helping you ensure your argument is clear and that there are no gaps, vague terms, or unanswered questions for readers who weren’t involved in the research process. This way, you’ll end up with a more robust, more cohesive article.

Criticisms of peer review

While peer review is a widely accepted metric for credibility, it’s not without its drawbacks.

- Reviewer bias

Double-blind review, which hides the author’s identity from reviewers, is not yet very common, which can lead to bias in reviewing. A common criticism is that an excellent paper by a new researcher may be declined, while an objectively lower-quality submission by an established researcher would be accepted.

- Delays in publication

The thoroughness of the peer review process can lead to significant delays in publishing time. Research that was current at the time of submission may not be as current by the time it’s published. There is also a high risk of publication bias, where journals are more likely to publish studies with positive findings than studies with negative findings.

- Risk of human error

By its very nature, peer review carries a risk of human error. In particular, falsification often cannot be detected, given that reviewers would have to replicate entire experiments to ensure the validity of results.


Frequently asked questions about peer reviews

Peer review is a process of evaluating submissions to an academic journal. Utilizing rigorous criteria, a panel of reviewers in the same subject area decides whether to accept each submission for publication. For this reason, academic journals are often considered among the most credible sources you can use in a research project, provided that the journal itself is trustworthy and well-regarded.


A credible source should pass the CRAAP test and follow these guidelines:

- The information should be up to date and current.

- The author and publication should be a trusted authority on the subject you are researching.

- The sources the author cited should be easy to find, clear, and unbiased.

- For a web source, the URL and layout should signify that it is trustworthy.

Cite this Scribbr article


George, T. (2023, June 22). What Is Peer Review? | Types & Examples. Scribbr. Retrieved August 12, 2024, from https://www.scribbr.com/methodology/peer-review/



Understanding Peer Review in Science

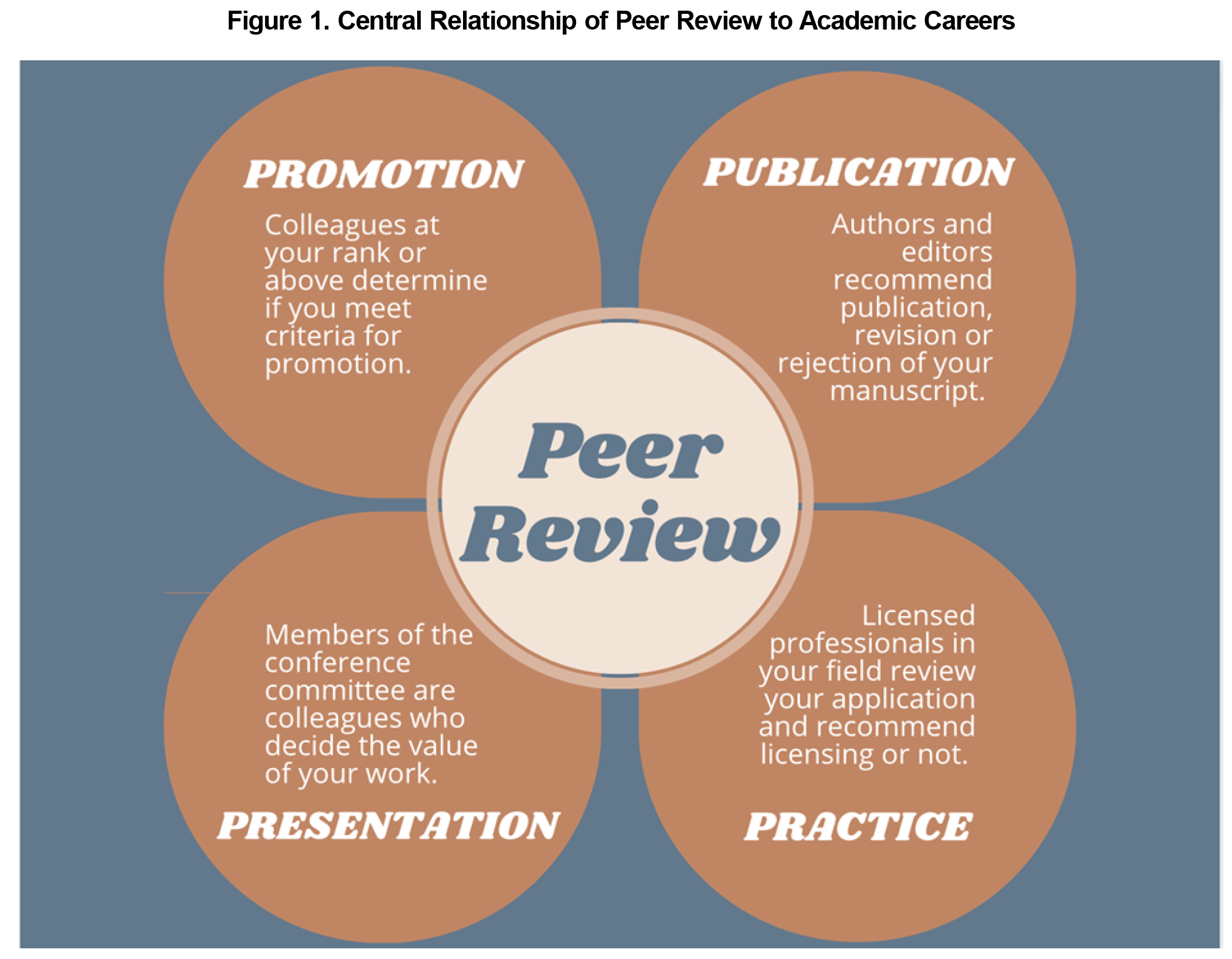

Peer review is an essential element of the scientific publishing process that helps ensure that research articles are evaluated, critiqued, and improved before release into the academic community. Take a look at the significance of peer review in scientific publications, the typical steps of the process, and how to approach peer review if you are asked to assess a manuscript.

What Is Peer Review?

Peer review is the evaluation of work by peers, who are people with comparable experience and competency. Peers assess each other’s work in educational settings, in professional settings, and in the publishing world. The goal of peer review is improving quality, defining and maintaining standards, and helping people learn from one another.

In the context of scientific publication, peer review helps editors determine which submissions merit publication and improves the quality of manuscripts prior to their final release.

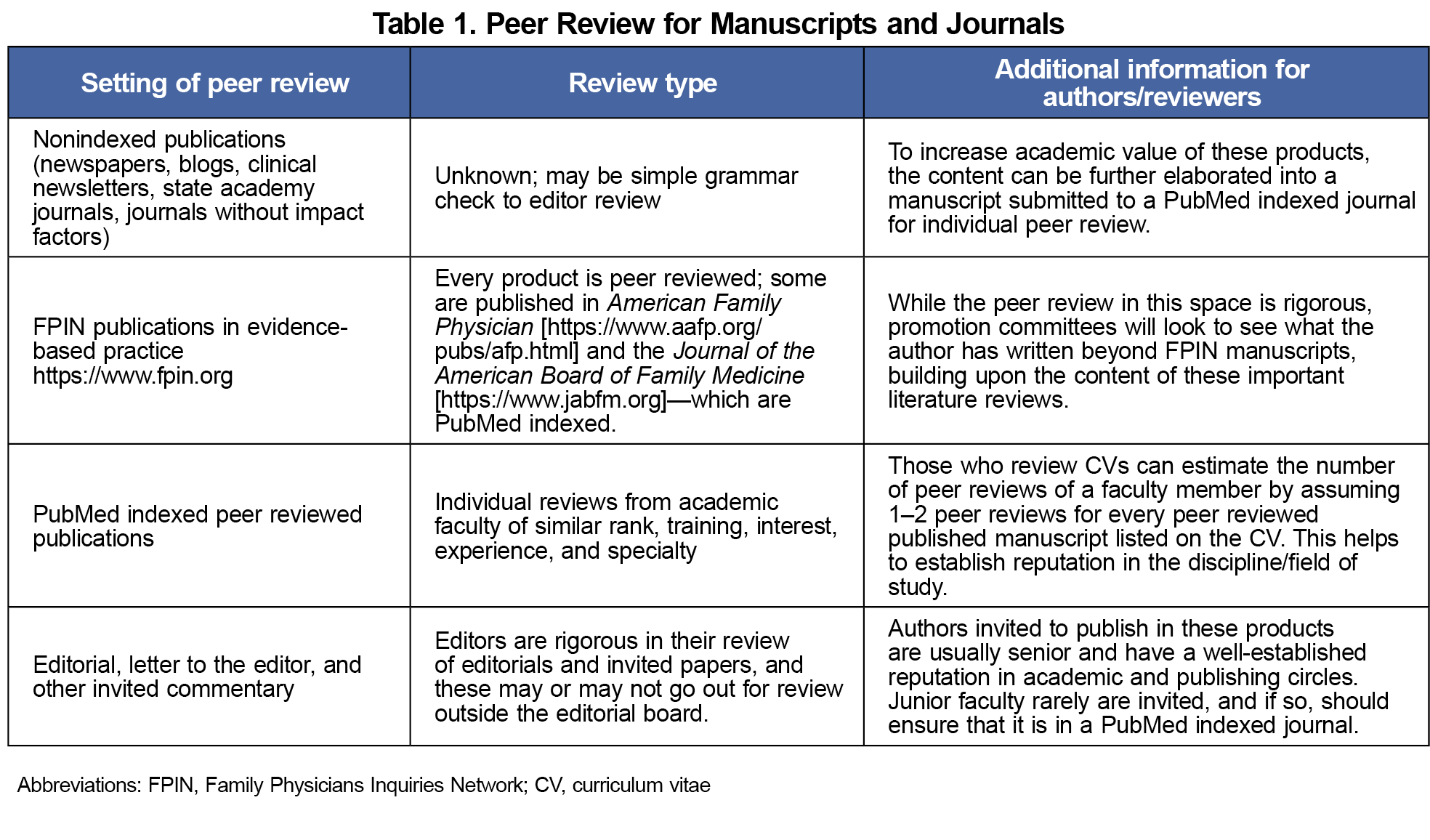

Types of Peer Review for Manuscripts

There are three main types of peer review:

- Single-blind review: The reviewers know the identities of the authors, but the authors do not know the identities of the reviewers.

- Double-blind review: Both the authors and reviewers remain anonymous to each other.

- Open peer review: The identities of both the authors and reviewers are disclosed, promoting transparency and collaboration.

There are advantages and disadvantages to each method. Anonymous reviews tend to reduce bias but also limit collaboration, while open reviews are more transparent but can increase bias.

Key Elements of Peer Review

Proper selection of a peer group improves the outcome of the process:

- Expertise: Reviewers should possess adequate knowledge and experience in the relevant field to provide constructive feedback.

- Objectivity: Reviewers assess the manuscript impartially and without personal bias.

- Confidentiality: The peer review process maintains confidentiality to protect intellectual property and encourage honest feedback.

- Timeliness: Reviewers provide feedback within a reasonable timeframe to ensure timely publication.

Steps of the Peer Review Process

The typical peer review process for scientific publications involves the following steps:

- Submission: Authors submit their manuscript to a journal that aligns with their research topic.

- Editorial assessment: The journal editor examines the manuscript and determines whether or not it is suitable for publication. If it is not, the manuscript is rejected.

- Peer review: If it is suitable, the editor sends the article to peer reviewers who are experts in the relevant field.

- Reviewer feedback: Reviewers provide feedback, critique, and suggestions for improvement.

- Revision and resubmission: Authors address the feedback and make necessary revisions before resubmitting the manuscript.

- Final decision: The editor makes a final decision on whether to accept or reject the manuscript based on the revised version and reviewer comments.

- Publication: If accepted, the manuscript undergoes copyediting and formatting before being published in the journal.

Pros and Cons

While the goal of peer review is improving the quality of published research, the process isn’t without its drawbacks.

Pros

- Quality assurance: Peer review helps ensure the quality and reliability of published research.

- Error detection: The process identifies errors and flaws that the authors may have overlooked.

- Credibility: The scientific community generally considers peer-reviewed articles to be more credible.

- Professional development: Reviewers can learn from the work of others and enhance their own knowledge and understanding.

Cons

- Time-consuming: The peer review process can be lengthy, delaying the publication of potentially valuable research.

- Bias: Personal biases of reviewers can affect their evaluation of the manuscript.

- Inconsistency: Different reviewers may provide conflicting feedback, making it challenging for authors to address all concerns.

- Limited effectiveness: Peer review does not always detect significant errors or misconduct.

- Poaching: Some reviewers take an idea from a submission and gain publication before the authors of the original research.

Steps for Conducting Peer Review of an Article

Generally, an editor provides guidance when you are asked to provide peer review of a manuscript. Here are typical steps of the process.

- Accept the right assignment: Accept invitations to review articles that align with your area of expertise to ensure you can provide well-informed feedback.

- Manage your time: Allocate sufficient time to thoroughly read and evaluate the manuscript, while adhering to the journal’s deadline for providing feedback.

- Read the manuscript multiple times: First, read the manuscript for an overall understanding of the research. Then, read it more closely to assess the details, methodology, results, and conclusions.

- Evaluate the structure and organization: Check if the manuscript follows the journal’s guidelines and is structured logically, with clear headings, subheadings, and a coherent flow of information.

- Assess the quality of the research: Evaluate the research question, study design, methodology, data collection, analysis, and interpretation. Consider whether the methods are appropriate, the results are valid, and the conclusions are supported by the data.

- Examine the originality and relevance: Determine if the research offers new insights, builds on existing knowledge, and is relevant to the field.

- Check for clarity and consistency: Review the manuscript for clarity of writing, consistent terminology, and proper formatting of figures, tables, and references.

- Identify ethical issues: Look for potential ethical concerns, such as plagiarism, data fabrication, or conflicts of interest.

- Provide constructive feedback: Offer specific, actionable, and objective suggestions for improvement, highlighting both the strengths and weaknesses of the manuscript. Don’t be mean.

- Organize your review: Structure your review with an overview of your evaluation, followed by detailed comments and suggestions organized by section (e.g., introduction, methods, results, discussion, and conclusion).

- Be professional and respectful: Maintain a respectful tone in your feedback, avoiding personal criticism or derogatory language.

- Proofread your review: Before submitting your review, proofread it for typos, grammar, and clarity.



What Is Peer Review and Why Is It Important?

Peer review is one of the major cornerstones of the academic process and critical to maintaining rigorous quality standards for research papers. Whichever side of the peer review process you’re on, we want to help you understand the steps involved.

This post is part of a series that provides practical information and resources for authors and editors.

Peer review – the evaluation of academic research by other experts in the same field – has been used by the scientific community as a method of ensuring novelty and quality of research for more than 300 years. It is a testament to the power of peer review that a scientific hypothesis or statement presented to the world is largely ignored by the scholarly community unless it is first published in a peer-reviewed journal.

It is also safe to say that peer review is a critical element of the scholarly publication process and one of the major cornerstones of the academic process. It acts as a filter, ensuring that research is properly verified before being published. And it arguably improves the quality of the research, as the rigorous review by like-minded experts helps to refine or emphasise key points and correct inadvertent errors.

Ideally, this process encourages authors to meet the accepted standards of their discipline and in turn reduces the dissemination of irrelevant findings, unwarranted claims, unacceptable interpretations, and personal views.

If you are a researcher, you will come across peer review many times in your career. But not every part of the process might be clear to you yet. So, let’s have a look together!

Types of Peer Review

Peer review comes in many different forms. With single-blind peer review, the names of the reviewers are hidden from the authors, while with double-blind peer review, both reviewers and authors remain anonymous. Then, there is open peer review, a term which offers more than one interpretation nowadays.

Open peer review can simply mean that reviewer and author identities are revealed to each other. It can also mean that a journal makes the reviewers’ reports and author replies of published papers publicly available (anonymized or not). The “open” in open peer review can even be a call for participation, where fellow researchers are invited to proactively comment on a freely accessible pre-print article. The latter two options are not yet widely used, but the Open Science movement, which strives for more transparency in scientific publishing, has been giving them a strong push over the last few years.

If you are unsure about what kind of peer review a specific journal conducts, check out its instructions for authors and/or its editorial policy on the journal’s home page.

Why Should I Even Review?

To answer that question, many reviewers would probably reply that it simply is their “academic duty” – a natural part of academia, an important mechanism to monitor the quality of published research in their field. This is of course why the peer-review system was developed in the first place – by academia rather than the publishers – but there are also benefits.


Besides a general interest in the field, reviewing also helps researchers keep up-to-date with the latest developments. They get to know about new research before everyone else does. It might help with their own research and/or stimulate new ideas. On top of that, reviewing builds relationships with prestigious journals and journal editors.

Clearly, reviewing is also crucial for the development of a scientific career, especially in the early stages. Relatively new services like Publons and ORCID Reviewer Recognition can support reviewers in getting credit for their efforts and making their contributions more visible to the wider community.

The Fundamentals of Reviewing

You have received an invitation to review? Before agreeing to do so, there are three pertinent questions you should ask yourself:

- Does the article you are being asked to review match your expertise?

- Do you have time to review the paper?

- Are there any potential conflicts of interest (e.g. of financial or personal nature)?

If you feel like you cannot handle the review for whatever reason, it is okay to decline. If you can think of a colleague who would be well suited for the topic, even better – suggest them to the journal’s editorial office.

But let’s assume that you have accepted the request. Here are some general things to keep in mind:

Please be aware that reviewer reports provide advice for editors to assist them in reaching a decision on a submitted paper. The final decision concerning a manuscript does not lie with you, but ultimately with the editor. It’s your expert guidance that is being sought.

Reviewing also needs to be conducted confidentially. The article you have been asked to review, including supplementary material, must never be disclosed to a third party. In the traditional single- or double-blind peer review process, your own anonymity will also be strictly preserved. Therefore, you should not communicate directly with the authors.

When writing a review, it is important to keep the journal’s guidelines in mind and to work along the building blocks of a manuscript (typically: abstract, introduction, methods, results, discussion, conclusion, references, tables, figures).

After initial receipt of the manuscript, you will be asked to supply your feedback within a specified period (usually 2-4 weeks). If at some point you notice that you are running out of time, get in touch with the editorial office as soon as you can and ask whether an extension is possible.

Some More Advice from a Journal Editor

- Be critical and constructive. An editor will find it easier to overturn very critical, unconstructive comments than to overturn favourable comments.

- Justify and specify all criticisms. Make specific references to the text of the paper (use line numbers!) or to published literature. Vague criticisms are unhelpful.

- Don’t repeat information from the paper, for example, the title and authors’ names, as this information already appears elsewhere in the review form.

- Check the aims and scope, which can be found on the journal’s home page. This will help ensure that your comments are in accordance with journal policy.

- Give a clear recommendation. Do not put “I will leave the decision to the editor” in your reply, unless you are genuinely unsure of your recommendation.

- Number your comments. This makes it easy for authors to refer to them.

- Be careful not to identify yourself. Check, for example, the file name of your report if you submit it as a Word file.

Sticking to these rules will make the author’s life and that of the editors much easier!


David Sleeman

David Sleeman worked as a Senior Journals Manager in the field of Physical Sciences at De Gruyter.


What is peer review?

Reviewers play a pivotal role in scholarly publishing. The peer review system exists to validate academic work, helps to improve the quality of published research, and increases networking possibilities within research communities. Despite criticisms, peer review is still the only widely accepted method for research validation and has continued successfully with relatively minor changes for some 350 years.

Elsevier relies on the peer review process to uphold the quality and validity of individual articles and the journals that publish them.

Peer review has been a formal part of scientific communication since the first scientific journals appeared more than 300 years ago. The Philosophical Transactions of the Royal Society is thought to be the first journal to formalize the peer review process, under the editorship of Henry Oldenburg (1618–1677).

Despite many criticisms about the integrity of peer review, the majority of the research community still believes peer review is the best form of scientific evaluation. This opinion was endorsed by the outcome of a survey Elsevier and Sense About Science conducted in 2009 and has since been further confirmed by other publisher and scholarly organization surveys. Furthermore, a 2015 survey by the Publishing Research Consortium saw 82% of researchers agreeing that “without peer review there is no control in scientific communication.”

To learn more about peer review, visit Elsevier’s free e-learning platform Researcher Academy and see our resources below.

The peer review process

Types of peer review

Peer review comes in different flavours. Each model has its own advantages and disadvantages, and often one type of review will be preferred by a subject community. Before submitting or reviewing a paper, you must therefore check which type is employed by the journal so you are aware of the respective rules. In case of questions regarding the peer review model employed by the journal for which you have been invited to review, consult the journal’s homepage or contact the editorial office directly.

Single anonymized review

In this type of review, the names of the reviewers are hidden from the author. This is the traditional method of reviewing and is the most common type by far. Points to consider regarding single anonymized review include:

Reviewer anonymity allows for impartial decisions, as the reviewers will not be influenced by potential criticism from the authors.

Authors may be concerned that reviewers in their field could delay publication, giving the reviewers a chance to publish first.

Reviewers may use their anonymity as justification for being unnecessarily critical or harsh when commenting on the authors’ work.

Double anonymized review

Both the reviewer and the author are anonymous in this model. Some advantages of this model are listed below.

Author anonymity limits reviewer bias, such as bias based on an author's gender, country of origin, academic status, or previous publication history.

Articles written by prestigious or renowned authors are considered based on the content of their papers, rather than their reputation.

But bear in mind that despite the above, reviewers can often identify the author through their writing style, subject matter, or self-citation – it is exceedingly difficult to guarantee total author anonymity. More information for authors can be found in our double-anonymized peer review guidelines.

Triple anonymized review

With triple anonymized review, reviewers are anonymous to the author, and the author's identity is unknown to both the reviewers and the editor. Articles are anonymized at the submission stage and are handled in a way to minimize any potential bias towards the authors. However, it should be noted that:

The complexities involved with anonymizing articles/authors to this level are considerable.

As with double anonymized review, there is still a possibility for the editor and/or reviewers to correctly identify the author(s) from their writing style, subject matter, citation patterns, or other methodologies.

Open review

Open peer review is an umbrella term for many different models aiming at greater transparency during and after the peer review process. The most common definition of open review is when both the reviewer and author are known to each other during the peer review process. Other types of open peer review consist of:

Publication of reviewers’ names on the article page

Publication of peer review reports alongside the article, either signed or anonymous

Publication of peer review reports (signed or anonymous) with authors’ and editors’ responses alongside the article

Publication of the paper after pre-checks and opening a discussion forum to the community who can then comment (named or anonymous) on the article

Many believe this is the best way to prevent malicious comments, stop plagiarism, prevent reviewers from following their own agenda, and encourage open, honest reviewing. Others see open review as a less honest process, in which politeness or fear of retribution may cause a reviewer to withhold or tone down criticism. For three years, five Elsevier journals experimented with publication of peer review reports (signed or anonymous) as articles alongside the accepted paper on ScienceDirect.

More transparent peer review

Transparency is the key to trust in peer review, and as such there are increasing calls for more transparency around the peer review process. In an effort to promote transparency in the peer review process, many Elsevier journals therefore publish the name of the handling editor of the published paper on ScienceDirect. Some journals also provide details about the number of reviewers who reviewed the article before acceptance. Furthermore, in order to provide updates and feedback to reviewers, most Elsevier journals inform reviewers about the editor’s decision and their peers’ recommendations.

Article transfer service: sharing reviewer comments

Elsevier authors may be invited to transfer their article submission from one journal to another for free if their initial submission was not successful.

As a referee, your review report (including all comments to the author and editor) will be transferred to the destination journal, along with the manuscript. The main benefit is that reviewers are not asked to review the same manuscript several times for different journals.

Tools and resources

Interesting reads

Chapter 2 of Academic and Professional Publishing, by Irene Hames, 2012

“Is Peer Review in Crisis?” Perspectives in Publishing No 2, August 2004, by Adrian Mulligan

“The history of the peer-review process” Trends in Biotechnology, 2002, by Ray Spier

Reviewers’ Update articles

Peer review using today’s technology

Lifting the lid on publishing peer review reports: an interview with Bahar Mehmani and Flaminio Squazzoni

How face-to-face peer review can benefit authors and journals alike

Innovation in peer review: introducing “volunpeers”

Results masked review: peer review without publication bias

Elsevier Researcher Academy modules

The certified peer reviewer course

Transparency in peer review


What Is Peer Review? | Types & Examples

Published on 6 May 2022 by Tegan George. Revised on 2 September 2022.

Peer review, sometimes referred to as refereeing, is the process of evaluating submissions to an academic journal. Using strict criteria, a panel of reviewers in the same subject area decides whether to accept each submission for publication.

Peer-reviewed articles are considered a highly credible source due to the stringent process they go through before publication.

There are various types of peer review. The main difference between them is to what extent the authors, reviewers, and editors know each other’s identities. The most common types are:

- Single-blind review

- Double-blind review

- Triple-blind review

- Collaborative review

- Open review

Relatedly, peer assessment is a process where your peers provide you with feedback on something you’ve written, based on a set of criteria or benchmarks from an instructor. They then give constructive feedback, compliments, or guidance to help you improve your draft.

Table of contents

- What is the purpose of peer review?

- Types of peer review

- The peer review process

- Providing feedback to your peers

- Peer review example

- Advantages of peer review

- Criticisms of peer review

- Frequently asked questions about peer review

What is the purpose of peer review?

Many academic fields use peer review, largely to determine whether a manuscript is suitable for publication. Peer review enhances the credibility of the manuscript. For this reason, academic journals are among the most credible sources you can refer to.

However, peer review is also common in non-academic settings. The United Nations, the European Union, and many individual nations use peer review to evaluate grant applications. It is also widely used in medical and health-related fields as a teaching or quality-of-care measure.

Peer assessment is often used in the classroom as a pedagogical tool. Both receiving feedback and providing it are thought to enhance the learning process, helping students think critically and collaboratively.


Types of peer review

Depending on the journal, there are several types of peer review.

Single-blind peer review

The most common type of peer review is single-blind (or single anonymised) review. Here, the names of the reviewers are not known by the author.

While this gives the reviewers the ability to give feedback without the possibility of interference from the author, there has been substantial criticism of this method in the last few years. Many argue that single-blind reviewing can lead to poaching or intellectual theft or that anonymised comments cause reviewers to be too harsh.

Double-blind peer review

In double-blind (or double anonymised) review, both the author and the reviewers are anonymous.

Arguments for double-blind review highlight that this mitigates any risk of prejudice on the side of the reviewer, while protecting the nature of the process. In theory, it also leads to manuscripts being published on merit rather than on the reputation of the author.

Triple-blind peer review

While triple-blind (or triple anonymised) review – where the identities of the author, reviewers, and editors are all anonymised – does exist, it is difficult to carry out in practice.

Proponents of adopting triple-blind review for journal submissions argue that it minimises potential conflicts of interest and biases. However, ensuring anonymity is logistically challenging, and current editing software is not always able to fully anonymise everyone involved in the process.

Collaborative review

In collaborative review, authors and reviewers interact with each other directly throughout the process. However, the identity of the reviewer is not known to the author. This gives all parties the opportunity to resolve any inconsistencies or contradictions in real time, and provides them a rich forum for discussion. It can mitigate the need for multiple rounds of editing and minimise back-and-forth.

Collaborative review can be time- and resource-intensive for the journal, however. For these collaborations to occur, there has to be a set system in place, often a technological platform, with staff monitoring and fixing any bugs or glitches.

Open review

Lastly, in open review, all parties know each other’s identities throughout the process. Often, open review can also include feedback from a larger audience, such as an online forum, or reviewer feedback included as part of the final published product.

While many argue that greater transparency prevents plagiarism or unnecessary harshness, there is also concern about the quality of future scholarship if reviewers feel they have to censor their comments.

The peer review process

In general, the peer review process includes the following steps:

- First, the author submits the manuscript to the editor.

- The editor can then either:

  - Reject the manuscript and send it back to the author, or

  - Send it onward to the selected peer reviewer(s)

- Next, the peer review process occurs. The reviewer provides feedback, addressing any major or minor issues with the manuscript, and gives their advice regarding what edits should be made.

- Lastly, the edited manuscript is sent back to the author. They input the edits and resubmit it to the editor for publication.

In an effort to be transparent, many journals are now disclosing who reviewed each article in the published product. There are also increasing opportunities for collaboration and feedback, with some journals allowing open communication between reviewers and authors.

Providing feedback to your peers

It can seem daunting at first to conduct a peer review or peer assessment. If you’re not sure where to start, there are several best practices you can use.

Summarise the argument in your own words

Summarising the main argument helps the author see how their argument is interpreted by readers, and gives you a jumping-off point for providing feedback. If you’re having trouble doing this, it’s a sign that the argument needs to be clearer, more concise, or worded differently.

If the author sees that you’ve interpreted their argument differently than they intended, they have an opportunity to address any misunderstandings when they get the manuscript back.

Separate your feedback into major and minor issues

It can be challenging to keep feedback organised. One strategy is to start out with any major issues and then flow into the more minor points. It’s often helpful to keep your feedback in a numbered list, so the author has concrete points to refer back to.

Major issues typically consist of any problems with the style, flow, or key points of the manuscript. Minor issues include spelling errors, citation errors, or other smaller, easy-to-apply feedback.

The best feedback you can provide is anything that helps them strengthen their argument or resolve major stylistic issues.

Give the type of feedback that you would like to receive

No one likes being criticised, and it can be difficult to give honest feedback without sounding overly harsh or critical. One strategy you can use here is the ‘compliment sandwich’, where you ‘sandwich’ your constructive criticism between two compliments.

Be sure you are giving concrete, actionable feedback that will help the author submit a successful final draft. While you shouldn’t tell them exactly what they should do, your feedback should help them resolve any issues they may have overlooked.

As a rule of thumb, your feedback should be:

- Easy to understand

- Constructive

Peer review example

Below is a brief research example.

Influence of phone use on sleep

Studies show that teens from the US are getting less sleep than they were a decade ago (Johnson, 2019). On average, teens only slept for 6 hours a night in 2021, compared to 8 hours a night in 2011. Johnson mentions several potential causes, such as increased anxiety, changed diets, and increased phone use.

The current study focuses on the effect phone use before bedtime has on the number of hours of sleep teens are getting.

For this study, a sample of 300 teens was recruited using social media, such as Facebook, Instagram, and Snapchat. The first week, all teens were allowed to use their phone the way they normally would, in order to obtain a baseline.

The sample was then divided into 3 groups:

- Group 1 was not allowed to use their phone before bedtime.

- Group 2 used their phone for 1 hour before bedtime.

- Group 3 used their phone for 3 hours before bedtime.

All participants were asked to go to sleep around 10 p.m. to control for variation in bedtime. In the morning, their Fitbit showed the number of hours they’d slept. They kept track of these numbers themselves for 1 week.

Two independent t tests were used in order to compare Group 1 and Group 2, and Group 1 and Group 3. The first t test showed no significant difference (p > .05) between the number of hours for Group 1 (M = 7.8, SD = 0.6) and Group 2 (M = 7.0, SD = 0.8). The second t test showed a significant difference (p < .01) between the average difference for Group 1 (M = 7.8, SD = 0.6) and Group 3 (M = 6.1, SD = 1.5).

This shows that teens sleep fewer hours a night if they use their phone for over an hour before bedtime, compared to teens who use their phone for 0 to 1 hours.

Advantages of peer review

Peer review is an established and hallowed process in academia, dating back hundreds of years. It provides various fields of study with metrics, expectations, and guidance to ensure published work is consistent with predetermined standards.

- Protects the quality of published research

Peer review can stop obviously problematic, falsified, or otherwise untrustworthy research from being published. Any content that raises red flags for reviewers can be closely examined in the review stage, preventing plagiarised or duplicated research from being published.

- Gives you access to feedback from experts in your field

Peer review represents an excellent opportunity to get feedback from renowned experts in your field and to improve your writing through their feedback and guidance. Experts with knowledge about your subject matter can give you feedback on both style and content, and they may also suggest avenues for further research that you hadn’t yet considered.

- Helps you identify any weaknesses in your argument

Peer review acts as a first defence, helping you ensure your argument is clear and that there are no gaps, vague terms, or unanswered questions for readers who weren’t involved in the research process. This way, you’ll end up with a more robust, more cohesive article.

Criticisms of peer review

While peer review is a widely accepted metric for credibility, it’s not without its drawbacks.

- Reviewer bias

The more transparent double-blind system is not yet very common, which can lead to bias in reviewing. A common criticism is that an excellent paper by a new researcher may be declined, while an objectively lower-quality submission by an established researcher would be accepted.

- Delays in publication

The thoroughness of the peer review process can lead to significant delays in publishing time. Research that was current at the time of submission may not be as current by the time it’s published.

- Risk of human error

By its very nature, peer review carries a risk of human error. In particular, falsification often cannot be detected, given that reviewers would have to replicate entire experiments to ensure the validity of results.

Frequently asked questions about peer review

Peer review is a process of evaluating submissions to an academic journal. Utilising rigorous criteria, a panel of reviewers in the same subject area decides whether to accept each submission for publication.

For this reason, academic journals are often considered among the most credible sources you can use in a research project – provided that the journal itself is trustworthy and well regarded.

Peer review can stop obviously problematic, falsified, or otherwise untrustworthy research from being published. It also represents an excellent opportunity to get feedback from renowned experts in your field.

It acts as a first defence, helping you ensure your argument is clear and that there are no gaps, vague terms, or unanswered questions for readers who weren’t involved in the research process.

Peer-reviewed articles are considered a highly credible source due to this stringent process they go through before publication.


Many academic fields use peer review , largely to determine whether a manuscript is suitable for publication. Peer review enhances the credibility of the published manuscript.


George, T. (2022, September 02). What Is Peer Review? | Types & Examples. Scribbr. Retrieved 12 August 2024, from https://www.scribbr.co.uk/research-methods/peer-reviews/


Peer review

Psychological Services reviewer guidelines

Psychological Services guidelines for reviewers.

Nick Bowman, PhD

Nick Bowman, PhD, associate editor for Technology, Mind, and Behavior, sheds light on registered reports, outlining key features, misconceptions, and benefits of this unique article type.

Part three, peer review

In this part of the series, we examine the role of peer reviewers.

How to become a journal editor

The psychology field is looking for fresh voices—why not add yours?

Join a reviewer mentorship program

Explore and join reviewer mentorship programs offered by various APA journals.

Learn how to review a manuscript

Peer review is an integral part of science and a valuable contribution to our field. Browse these resources and consider joining the community of APA reviewers.

Get recognized for peer review

Publons is a service that provides instant recognition for peer review and enables APA reviewers and action editors to maintain a verified record of their contributions for promotion and funding applications.

Little-known secrets for how to get published

Advice from seasoned psychologists for those seeking to publish in a journal for the first time

How to review a manuscript

Journal editors identify 10 key steps for would-be reviewers

How to find reviewer opportunities

What if you want to review journal manuscripts but the editors aren’t beating down your door?

Webinars and training

Standards, guidelines, and regulations

Guidelines for responsible conduct regarding scientific communication

APA Style Journal Article Reporting Standards

National Science Foundation (NSF) Grant Proposal Guide

The Proposal & Award Policies & Procedures Guide (PAPPG) is the source for information about NSF's proposal and award process. Each version of the PAPPG applies to all proposals or applications submitted while that version is effective.

National Institutes of Health (NIH) Peer Review Policies and Practices

NIH resources about the regulations and processes that govern peer review, including management of conflicts of interest, applicant and reviewer responsibilities in maintaining the integrity in peer review, appeals, and more.

Office of Management and Budget (OMB) Final Information Quality Bulletin for Peer Review

Peer review at APA Journals

APA Journals Peer Review Process

Like other scientific journals, APA journals utilize a peer review process to guide manuscript selection and publication decisions.

APA reviewers get recognized through Web of Science Reviewer Recognition Service

Web of Science Reviewer Recognition Service™ enables APA reviewers and action editors to maintain a verified record of their contributions.

What is peer review?

From a publisher’s perspective, peer review functions as a filter for content, directing better-quality articles to better-quality journals and so creating journal brands.

Running articles through the process of peer review adds value to them. For this reason publishers need to make sure that peer review is robust.

Editor Feedback

“Pointing out the specifics about flaws in the paper’s structure is paramount. Are methods valid, is data clearly presented, and are conclusions supported by data?” (Editor feedback)

“If an editor can read your comments and understand clearly the basis for your recommendation, then you have written a helpful review.” (Editor feedback)

Peer Review at Its Best

What peer review does best is improve the quality of published papers by motivating authors to submit good quality work – and helping to improve that work through the peer review process.

In fact, 90% of researchers feel that peer review improves the quality of their published paper (University of Tennessee and CIBER Research Ltd, 2013).

What the Critics Say

The peer review system is not without criticism. Studies show that some articles still contain inaccuracies even after peer review, and that most rejected papers go on to be published elsewhere.

However, these criticisms should be understood within the context of peer review as a human activity. The occasional errors of peer review are not reasons for abandoning the process altogether – the mistakes would be worse without it.

Improving Effectiveness

Some of the ways in which Wiley is seeking to improve the efficiency of the process include:

- Reducing the amount of repeat reviewing by innovating around transferable peer review

- Providing training and best practice guidance to peer reviewers

- Improving recognition of the contribution made by reviewers

Visit our Peer Review Process and Types of Peer Review pages for additional detailed information on peer review.

Transparency in Peer Review

Wiley is committed to increasing transparency in peer review, increasing accountability for the peer review process and giving recognition to the work of peer reviewers and editors. We are also actively exploring other peer review models to give researchers the options that suit them and their communities.

Special Issues

Special Issues are subject to extensive review, during which journal Editors or Editorial Board input is solicited for each proposal. Our approval process includes an assessment of the rationale and scope of the proposed topic(s), and the expertise of Guest Editors, if any are involved. Special Issue articles must follow the same policies as described in the journal's Author Guidelines.

Editor/Editorial Board papers

Papers authored by Editors or Editorial Board members of the title are sent to Editors who are unaffiliated with the author or institution and are monitored carefully to ensure there is no peer review bias.


Explore Information — Understanding & Recognizing Peer Review


What Do You Mean by Peer Reviewed Sources?

(Source: NCSU Libraries)

What's so great about peer review?

Peer reviewed articles are often considered the most reliable and reputable sources in a field of study. Peer reviewed articles have undergone review (hence the "peer-review") by fellow experts in that field, as well as an editorial review process. The purpose of this is to ensure that, as much as possible, the finished product meets the standards of the field.

Peer reviewed publications are one of the main ways researchers communicate with each other.

Most library databases have features to help you discover articles from scholarly journals. Most articles from scholarly journals have gone through the peer review process. Many scholarly journals will also publish book reviews or start off with an editorial, which are not peer reviewed - so don't be tricked!

So that means I can turn my brain off, right?

Nope! You still need to engage with what you find. Are there additional scholarly sources with research that supports the source you've found, or have you encountered an outlier in the research? Have others been able to replicate the results of the research? Is the information old and outdated? Was this study on toothpaste (for example) funded by Colgate?

You're engaging with the research - ultimately, you decide what belongs in your project, and what doesn't. You get to decide if a source is relevant or not. It's a lot of responsibility - but it's a lot of authority, too.

Understanding Types of Sources


Popular vs. scholarly articles

When looking for articles to use in your assignment, you should realize that there is a difference between "popular" and "scholarly" articles.

Popular sources, such as newspapers and magazines, are written by journalists or others for general readers (for example, Time, Rolling Stone, and National Geographic).

Scholarly sources are written for the academic community, including experts and students, on topics that are typically footnoted and based on research (for example, American Literature or New England Review). Scholarly journals are sometimes referred to as "peer-reviewed," "refereed" or "academic."

How do you find scholarly or "peer-reviewed" journal articles?

The option to select scholarly or peer-reviewed articles is typically available on the search page of each database. Just check the box or select the option. You can also search Ulrich's Periodical Directory to see if the journal is Refereed / Peer-reviewed.

Popular Sources (Magazines & Newspapers): Inform and entertain the general public.

- Are often written by journalists or professional writers for a general audience

- Use language easily understood by general readers

- Rarely give full citations for sources

- Written for the general public

- Tend to be shorter than journal articles

Scholarly or Academic Sources (Journals & Scholarly Books): Disseminate research and academic discussion among professionals in a discipline.

- Are written by and for faculty, researchers or scholars (chemists, historians, doctors, artists, etc.)

- Use scholarly or technical language

- Tend to be longer articles about research

- Include full citations for sources

- Are often refereed or peer reviewed (articles are reviewed by an editor and other specialists before being accepted for publication)

- Publications may include book reviews and editorials which are not considered scholarly articles

Trade Publications: Neither scholarly nor popular sources, but can be a combination of both. Allow practitioners in specific industries to share market and production information that improves their businesses.

- Not peer reviewed. Usually written by people in the field or with subject expertise

- Shorter articles that are practical

- Provide information about current events and trends

What might you find in a scholarly article?

- Title: what the article is about

- Authors and affiliations: the writer of the article and the professional affiliations. The credentials may appear below the name or in a footnote.

- Abstract: brief summary of the article. Gives you a general understanding before you read the whole thing.

- Introduction: general overview of the research topic or problem

- Literature Review: what others have found on the same topic

- Methods: information about how the authors conducted their research

- Results: key findings of the author's research

- Discussion/Conclusion: summary of the results or findings

- References: Citations to publications by other authors mentioned in the article

- Anatomy of a Scholarly Article This tutorial from the NCSU Libraries provides an interactive module for learning about the unique structure and elements of many scholarly articles.


- Last Updated: Aug 2, 2024 4:30 PM

- URL: https://guides.lib.uconn.edu/exploreinfo

Peer Reviewed Literature

- What is peer review?

- Terminology

- Peer review: what does that mean?

- What types of articles are peer-reviewed?

- What information is not peer-reviewed?

- What about Google Scholar?

This Guide was created by Carolyn Swidrak (retired).

Research findings are communicated in many ways. One of the most important ways is through publication in scholarly, peer-reviewed journals.

Research published in scholarly journals is held to a high standard. It must make a credible and significant contribution to the discipline. To ensure a very high level of quality, articles that are submitted to scholarly journals undergo a process called peer-review.

Once an article has been submitted for publication, it is reviewed by other independent, academic experts (at least two) in the same field as the authors. These are the peers. The peers evaluate the research and decide if it is good enough and important enough to publish. Usually there is a back-and-forth exchange between the reviewers and the authors, including requests for revisions, before an article is published.

Peer review is a rigorous process but the intensity varies by journal. Some journals are very prestigious and receive many submissions for publication. They publish only the very best, most highly regarded research.

The terms scholarly, academic, peer-reviewed and refereed are sometimes used interchangeably, although there are slight differences.

Scholarly and academic may refer to peer-reviewed articles, but not all scholarly and academic journals are peer-reviewed (although most are). For example, the Harvard Business Review is an academic journal, but it is editorially reviewed, not peer-reviewed.

Peer-reviewed and refereed are identical terms.

From Peer Review in 3 Minutes [Video], by the North Carolina State University Library, 2014, YouTube (https://youtu.be/rOCQZ7QnoN0).

Peer reviewed articles can include:

- Original research (empirical studies)

- Review articles

- Systematic reviews

- Meta-analyses

There is much excellent, credible information in existence that is NOT peer-reviewed. Peer-review is simply ONE MEASURE of quality.

Much of this information is referred to as "gray literature."

Government Agencies

Government websites such as the Centers for Disease Control and Prevention (CDC) publish high-level, trustworthy information, but most of it is not peer-reviewed. (Some of their publications are peer-reviewed; the journal Emerging Infectious Diseases, published by the CDC, is one example.)

Conference Proceedings

Papers from conference proceedings are not usually peer-reviewed. They may go on to become published articles in a peer-reviewed journal.

Dissertations

Dissertations are written by doctoral candidates, and while they are academic they are not peer-reviewed.

Many students like Google Scholar because it is easy to use. While the results from Google Scholar are generally academic, they are not necessarily peer-reviewed. Typically, you will find:

- Peer reviewed journal articles (although they are not identified as peer-reviewed)

- Unpublished scholarly articles (not peer-reviewed)

- Master's theses, doctoral dissertations, and other degree publications (not peer-reviewed)

- Book citations and links to some books (not necessarily peer-reviewed)

- Last Updated: Feb 12, 2024 9:39 AM

- URL: https://libguides.regiscollege.edu/peer_review

Understanding the peer review process

What is peer review? A guide for authors

The peer review process starts once you have submitted your paper to a journal.

After submission, your paper will be sent for assessment by independent experts in your field. The reviewers are asked to judge the validity, significance, and originality of your work.

Below we expand on what peer review is, and how it works.

What is peer review? And why is it important?

Peer review is the independent assessment of your research paper by experts in your field. The purpose of peer review is to evaluate the paper’s quality and suitability for publication.

As well as acting as a form of quality control for academic journals, peer review is a very useful source of feedback for you. The feedback can be used to improve your paper before it is published.

So at its best, peer review is a collaborative process, where authors engage in a dialogue with peers in their field, and receive constructive support to advance their work.

Use our free guide to discover how you can get the most out of the peer review process.

Why is peer review important?

Peer review is vitally important to uphold the high standards of scholarly communications, and maintain the quality of individual journals. It is also an important support for the researchers who author the papers.

Every journal depends on the hard work of reviewers, who are at the forefront of the peer review process and who test and refine each article before publication. Even for very specialist journals, the editor can’t be an expert in the topic of every article submitted. So, the feedback and comments of carefully selected reviewers are an essential guide to inform the editor’s decision on a research paper.

There are also practical reasons why peer review is beneficial to you, the author. The peer review process can alert you to any errors in your work, or gaps in the literature you may have overlooked.

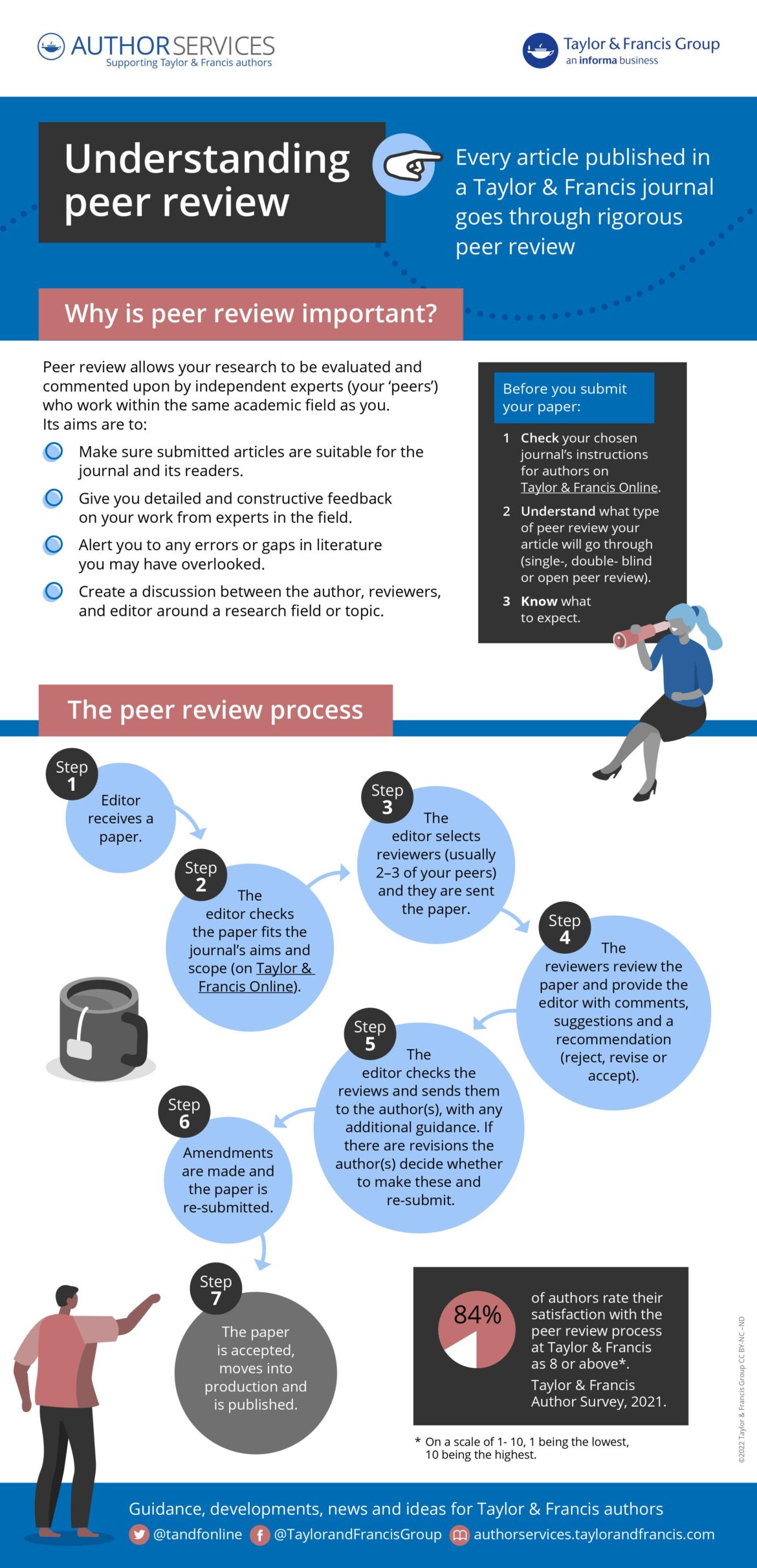

Researchers consistently tell us that their final published article is better than the version they submitted before peer review. 91% of respondents to a Sense about Science peer review survey said that their last paper was improved through peer review. A Taylor & Francis study supports this, finding that most researchers, across all subject areas, rated the contribution of peer review towards improving their article as 8 or above out of 10.

Choose the right journal for your research: Think. Check. Submit

We support Think. Check. Submit., an initiative launched by a coalition of scholarly communications organizations. It provides the tools to help you choose the right journal for your work.

Think. Check. Submit. was established because some journals do not provide the quality assurance and services that a reputable journal should deliver. In particular, many of these journals do not ensure that a thorough peer review or editor feedback process is in place.

That means, if you submit to one of these journals, you will not benefit from helpful article feedback from your peers. It may also lead to others being skeptical about the validity of your published results.

You should therefore make sure that you submit your work to a journal you can trust. By using the checklist provided on the Think. Check. Submit. website, you can make an informed choice.

Peer review integrity at Taylor & Francis

Every full research article published in a Taylor & Francis journal has been through peer review, as outlined in the journal’s aims & scope information. This means that the article’s quality, validity, and relevance have been assessed by independent peers within the research field.

We believe in the integrity of peer review with every journal we publish, subscribing to the following statement:

All published research articles in this journal have undergone rigorous peer review, based on initial editor screening, anonymous refereeing by independent expert referees, and consequent revision by article authors when required.

Different types of peer review

Peer review takes different forms, and each type has pros and cons. The type of peer review model used will often vary between journals, even those from the same publisher. So, check your chosen journal’s peer-review policy before you submit, to make sure you know what to expect and are comfortable with your paper being reviewed in that way.

Every Taylor & Francis journal publishes a statement describing the type of peer review used by the journal within the aims & scope section on Taylor & Francis Online.

Below we go through the most common types of peer review.

Common types of peer review

Single-anonymous peer review

This type of peer review is also called ‘single-blind review’. In this model, the reviewers know that you are the author of the article, but you don’t know the identities of the reviewers.

Single-anonymous review is most common for science and medicine journals.

Find out more about the pros and cons of single-anonymous peer review.

Double-anonymous peer review

In this model, which is also known as ‘double-blind review’, the reviewers don’t know that you are the author of the article, and you don’t know who the reviewers are either. Double-anonymous review is particularly common in humanities and some social science journals.

Discover more about the pros and cons of double-anonymous peer review.

If you are submitting your article for double-anonymous peer review, make sure you know how to make your article anonymous.

Open peer review

There is no one agreed definition of open peer review. In fact, a recent study identified 122 different definitions of the term. Typically, it will mean that the reviewers know you are the author and also that their identity will be revealed to you at some point during the review or publication process.

Find out more about open peer review.

Post-publication peer review

In post-publication peer review models, your paper may still go through one of the other types of peer review first. Alternatively, your paper may be published online almost immediately, after some basic checks. Either way, once it is published, there will then be an opportunity for invited reviewers (or even readers) to add their own comments or reviews.

You can learn about the pros and cons of post-publication peer review here.

Registered Reports

The Registered Reports process splits peer review into two parts.

The first round of peer review takes place after you’ve designed your study, but before you’ve collected or analyzed any data. This allows you to get feedback on both the question you’re looking to answer, and the experiment you’ve designed to test it.

If your manuscript passes peer review, the journal will give you an in-principle acceptance (IPA). This indicates that your article will be published as long as you successfully complete your study according to the pre-registered methods and submit an evidence-based interpretation of the results.

Explore Registered Reports at Taylor & Francis.

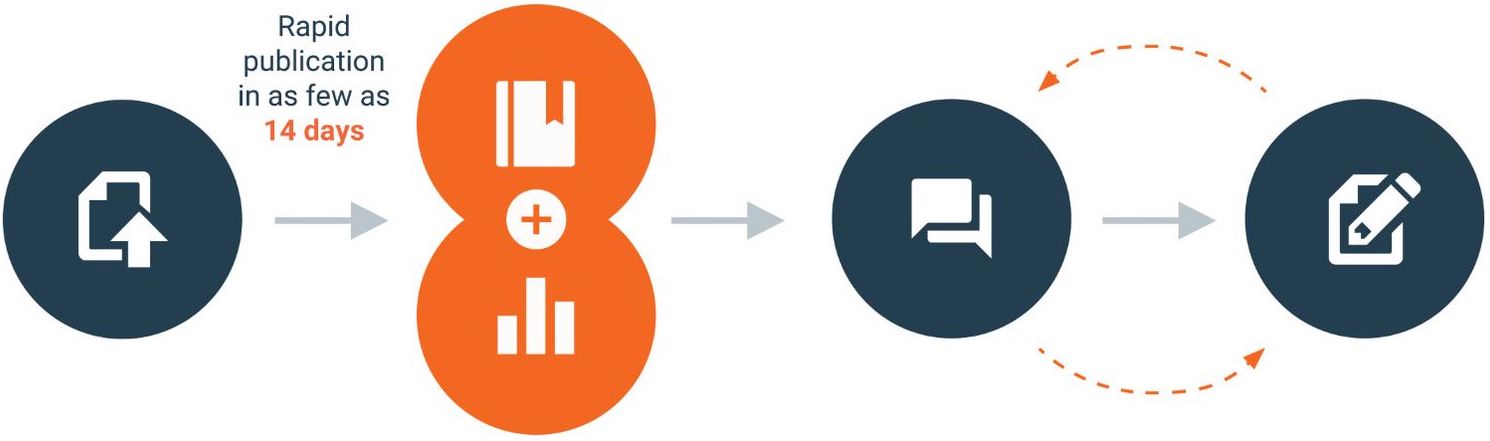

F1000Research: Open and post-publication peer review

F1000Research is part of the Taylor & Francis Group. It operates an innovative peer review process which is fully transparent and takes place after an article has been published.

How it works

Before publication, authors are asked to suggest at least five potential reviewers who are experts in the field. The reviewers also need to be able to provide unbiased reports on the article.

Submitted articles are published rapidly, after passing a series of pre-publication checks that assess originality, readability, author eligibility, and compliance with F1000Research’s policies and ethical guidelines.

Once the article is published, expert reviewers are formally invited to review.

The peer review process is entirely open and transparent. Each peer review report, plus the approval status selected by the reviewer, is published with the reviewer’s name and affiliation alongside the article.

Authors are encouraged to respond openly to the peer review reports and can publish revised versions of their article if they wish. New versions are clearly linked and easily navigable, so that readers and reviewers can quickly find the latest version of an article.

The article remains published regardless of the reviewers’ reports. Articles that pass peer review are indexed in Scopus, PubMed, Google Scholar and other bibliographic databases.

How our publishing process works for articles

1. Article submission

Submitting an article is easy with our single-page submission system.

The in-house editorial team carries out a basic check on each submission to ensure that all policies are adhered to.

2. Publication and data deposition

Once the authors have finalized the manuscript, the article (with its associated source data) is published within a week, enabling immediate viewing and citation.

3. Open peer review & user commenting

Expert reviewers are selected and invited. Their reports and names are published alongside the article, together with the authors’ responses and comments from registered users.

4. Article revision