What is a Research Hypothesis: How to Write it, Types, and Examples

Any research begins with a research question and a research hypothesis. A research question alone may not suffice to design the experiment(s) needed to answer it. A hypothesis is central to the scientific method. But what is a hypothesis? A hypothesis is a testable statement that proposes a possible explanation for a phenomenon, and it may include a prediction. Next, you may ask: what is a research hypothesis? Simply put, a research hypothesis is a prediction or educated guess about the relationship between the variables that you want to investigate.

It is important to be thorough when developing your research hypothesis. Shortcomings in the framing of a hypothesis can affect the study design and the results. A better understanding of the definition of a research hypothesis and the characteristics of a good hypothesis will make it easier for you to develop your own. Let’s look at the types of research hypothesis, how to write a research hypothesis, and some research hypothesis examples.

What is a hypothesis?

A hypothesis is based on the existing body of knowledge in a study area. Framed before the data are collected, a hypothesis states the tentative relationship between independent and dependent variables, along with a prediction of the outcome.

What is a research hypothesis?

Young researchers starting out on their journey are usually brimming with questions like “What is a hypothesis?” “What is a research hypothesis?” “How can I write a good research hypothesis?”

A research hypothesis is a statement that proposes a possible explanation for an observable phenomenon or pattern. It guides the direction of a study and predicts the outcome of the investigation. A research hypothesis is testable, i.e., it can be supported or disproven through experimentation or observation.

Characteristics of a good hypothesis

Here are the characteristics of a good hypothesis:

- Clearly formulated and free of language errors and ambiguity

- Concise and not unnecessarily verbose

- Has clearly defined variables

- Testable and stated in a way that allows for it to be disproven

- Can be tested using a research design that is feasible, ethical, and practical

- Specific and relevant to the research problem

- Rooted in a thorough literature search

- Can generate new knowledge or understanding.

How to create an effective research hypothesis

A study begins with the formulation of a research question. A researcher then performs background research. This background information forms the basis for building a good research hypothesis. The researcher then performs experiments, collects and analyzes the data, interprets the findings, and ultimately determines whether the findings support or negate the original hypothesis.

Let’s look at each step for creating an effective, testable, and good research hypothesis:

- Identify a research problem or question: Start by identifying a specific research problem.

- Review the literature: Conduct an in-depth review of the existing literature related to the research problem to grasp the current knowledge and gaps in the field.

- Formulate a clear and testable hypothesis: Based on the research question, use existing knowledge to form a clear and testable hypothesis. The hypothesis should state a predicted relationship between two or more variables that can be measured and manipulated. Refine the original draft until it is clear and meaningful.

- State the null hypothesis: The null hypothesis is a statement that there is no relationship between the variables you are studying.

- Define the population and sample: Clearly define the population you are studying and the sample you will be using for your research.

- Select appropriate methods for testing the hypothesis: Select appropriate research methods, such as experiments, surveys, or observational studies, which will allow you to test your research hypothesis.

Remember that creating a research hypothesis is an iterative process, i.e., you might have to revise it based on the data you collect. You may need to test and reject several hypotheses before answering the research problem.

How to write a research hypothesis

When you start writing a research hypothesis, you can use an “if–then” statement format, which states the predicted relationship between two or more variables. Clearly identify the independent variables (the variables being changed) and the dependent variables (the variables being measured), as well as the population you are studying. Review and revise your hypothesis as needed.

An example of a research hypothesis in this format is as follows:

“If [athletes] take [cold water showers daily], then their [endurance] increases.”

Population: athletes

Independent variable: daily cold water showers

Dependent variable: endurance
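The if–then template above can also be captured in a small data structure. The following is a minimal sketch, not a standard tool: the class name `IfThenHypothesis`, its fields, and the `verb` parameter are illustrative assumptions introduced here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class IfThenHypothesis:
    """Hypothetical container for the parts of an if-then hypothesis."""
    population: str            # who or what is studied
    verb: str                  # how the population receives the intervention
    independent_variable: str  # the variable being changed
    dependent_variable: str    # the variable being measured
    predicted_change: str      # the expected outcome, e.g. "increases"

    def statement(self) -> str:
        """Render the hypothesis as an if-then sentence."""
        return (
            f"If {self.population} {self.verb} {self.independent_variable}, "
            f"then their {self.dependent_variable} {self.predicted_change}."
        )


hypothesis = IfThenHypothesis(
    population="athletes",
    verb="take",
    independent_variable="cold water showers daily",
    dependent_variable="endurance",
    predicted_change="increases",
)
print(hypothesis.statement())
# prints: If athletes take cold water showers daily, then their endurance increases.
```

Writing the parts out separately makes it easy to spot a missing population or an undefined variable before the study is designed.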

You may have understood the characteristics of a good hypothesis. But note that a research hypothesis is not always confirmed; a researcher should be prepared to accept or reject the hypothesis based on the study findings.

Research hypothesis checklist

Following from above, here is a 10-point checklist for a good research hypothesis:

- Testable: It should be possible to test a research hypothesis via experimentation or observation.

- Specific: A research hypothesis should clearly state the relationship between the variables being studied.

- Based on prior research: A research hypothesis should be based on existing knowledge and previous research in the field.

- Falsifiable: It should be possible to disprove a research hypothesis through testing.

- Clear and concise: A research hypothesis should be stated in a clear and concise manner.

- Logical: A research hypothesis should be logical and consistent with current understanding of the subject.

- Relevant: A research hypothesis should be relevant to the research question and objectives.

- Feasible: A research hypothesis should be feasible to test within the scope of the study.

- Reflects the population: A research hypothesis should consider the population or sample being studied.

- Uncomplicated: A good research hypothesis is written in a way that is easy for the target audience to understand.

By following this research hypothesis checklist, you will be able to create a research hypothesis that is strong, well-constructed, and more likely to yield meaningful results.

Types of research hypothesis

Different types of research hypothesis are used in scientific research:

1. Null hypothesis:

A null hypothesis states that there is no change in the dependent variable due to changes in the independent variable. In other words, any observed difference is attributed to chance and is not considered significant. A null hypothesis is denoted as H0 and states the opposite of the alternative hypothesis.

Example: “The newly identified virus is not zoonotic.”

2. Alternative hypothesis:

This states that there is a significant difference or relationship between the variables being studied. It is denoted as H1 or Ha and is supported when the evidence leads to rejection of the null hypothesis.

Example: “The newly identified virus is zoonotic.”

3. Directional hypothesis:

This specifies the direction of the relationship or difference between variables; therefore, it tends to use terms like increase, decrease, positive, negative, more, or less.

Example: “The inclusion of intervention X decreases infant mortality compared to the original treatment.”

4. Non-directional hypothesis:

A non-directional hypothesis states that a relationship or difference exists between two variables but does not predict its direction, nature, or magnitude. A non-directional hypothesis may be used when there is no underlying theory or when findings contradict previous research.

Example: “Cats and dogs differ in the amount of affection they express.”
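In statistical practice, a directional hypothesis is typically evaluated with a one-tailed test and a non-directional hypothesis with a two-tailed test. The sketch below illustrates the difference with an exact sign test (a binomial test with success probability 0.5). The function names and the counts (9 of 10 paired comparisons favoring one group) are invented for illustration and do not come from any study.

```python
from math import comb


def binomial_upper_tail(n: int, k_min: int) -> float:
    """Return P(X >= k_min) for X ~ Binomial(n, 0.5)."""
    return sum(comb(n, k) for k in range(k_min, n + 1)) / 2 ** n


def sign_test_p_values(n: int, k: int) -> tuple[float, float]:
    """Return (one-tailed, two-tailed) p-values for k 'successes' out of n.

    The one-tailed value tests the directional claim "more than half";
    the two-tailed value tests the non-directional claim "not half".
    """
    upper = binomial_upper_tail(n, k)          # P(X >= k)
    lower = 1 - binomial_upper_tail(n, k + 1)  # P(X <= k)
    one_tailed = upper
    two_tailed = min(1.0, 2 * min(upper, lower))
    return one_tailed, two_tailed


# Invented data: 9 of 10 pairs favor the first group.
one_tailed, two_tailed = sign_test_p_values(n=10, k=9)
print(f"{one_tailed:.4f} {two_tailed:.4f}")
# prints: 0.0107 0.0215
```

The two-tailed p-value is larger because it also counts equally extreme results in the opposite direction, which is why the choice between a directional and a non-directional hypothesis should be made before the data are examined.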

5. Simple hypothesis:

A simple hypothesis predicts the relationship between one independent variable and one dependent variable.

Example: “Applying sunscreen every day slows skin aging.”

6. Complex hypothesis:

A complex hypothesis states the relationship or difference between two or more independent variables, two or more dependent variables, or both.

Example: “Applying sunscreen every day slows skin aging, reduces sunburn, and reduces the chances of skin cancer.” (Here, the three dependent variables are slowing skin aging, reducing sunburn, and reducing the chances of skin cancer.)
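The distinction between the two types comes down to counting variables: one independent and one dependent variable gives a simple hypothesis, and anything more gives a complex one. A minimal sketch of that rule follows; the function name `classify_hypothesis` is a hypothetical helper introduced here.

```python
def classify_hypothesis(independent_vars: list[str], dependent_vars: list[str]) -> str:
    """Label a hypothesis 'simple' or 'complex' from its variable counts."""
    if not independent_vars or not dependent_vars:
        raise ValueError("a hypothesis needs at least one variable of each kind")
    if len(independent_vars) == 1 and len(dependent_vars) == 1:
        return "simple"
    return "complex"


# The two sunscreen examples from the text.
print(classify_hypothesis(["daily sunscreen use"], ["skin aging"]))
# prints: simple
print(classify_hypothesis(
    ["daily sunscreen use"],
    ["skin aging", "sunburn", "chances of skin cancer"],
))
# prints: complex
```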

7. Associative hypothesis:

An associative hypothesis states that a change in one variable is accompanied by a change in the other variable. The associative hypothesis defines interdependency between variables, without claiming that one causes the other.

Example: “There is a positive association between physical activity levels and overall health.”

8. Causal hypothesis:

A causal hypothesis proposes a cause-and-effect interaction between variables.

Example: “Long-term alcohol use causes liver damage.”

Note that some of the types of research hypothesis mentioned above might overlap. The type of hypothesis chosen will depend on the research question and the objective of the study.

Research hypothesis examples

Here are some good research hypothesis examples:

“The use of a specific type of therapy will lead to a reduction in symptoms of depression in individuals with a history of major depressive disorder.”

“Providing educational interventions on healthy eating habits will result in weight loss in overweight individuals.”

“Plants that are exposed to certain types of music will grow taller than those that are not exposed to music.”

“The use of the plant growth regulator X will lead to an increase in the number of flowers produced by plants.”

Characteristics that make a research hypothesis weak are unclear variables, unoriginality, being too general or too vague, and being untestable. A weak hypothesis leads to weak research and improper methods.

Some bad research hypothesis examples (and the reasons why they are “bad”) are as follows:

“This study will show that treatment X is better than any other treatment.” (This statement is not testable, is too broad, and does not consider other treatments that may be effective.)

“This study will prove that this type of therapy is effective for all mental disorders.” (This statement is too broad and not testable, as mental disorders are complex and different disorders may respond differently to different types of therapy.)

“Plants can communicate with each other through telepathy.” (This statement is not testable and lacks a scientific basis.)

Importance of a testable hypothesis

If a research hypothesis is not testable, the results will not prove or disprove anything meaningful. The conclusions will be vague at best. A testable hypothesis helps a researcher focus on the study outcome and understand the implication of the question and the different variables involved. A testable hypothesis helps a researcher make precise predictions based on prior research.

For a hypothesis to be considered testable, there must be a way to show that it is true or false; further, the results of testing it must be reproducible.

Frequently Asked Questions (FAQs) on research hypothesis

1. What is the difference between a research question and a research hypothesis?

A research question defines the problem and helps outline the study objective(s). It is open-ended and exploratory or probing in nature. Therefore, it does not make predictions or assumptions. It helps a researcher identify what information to collect. A research hypothesis, however, is a specific, testable prediction about the relationship between variables. Accordingly, it guides the study design and data analysis approach.

2. When should the null hypothesis be rejected?

A null hypothesis should be rejected when the evidence from a statistical test shows that the observed data would be unlikely if it were true. This happens when the p-value from the test is less than the defined significance level (e.g., 0.05). Rejecting the null hypothesis does not necessarily mean that the alternative hypothesis is true; it simply means that the evidence found is not compatible with the null hypothesis.
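The decision rule can be made concrete with a short sketch. The example below uses an exact two-sided permutation test on the difference in group means, which is only one of many possible tests and is chosen here because it needs nothing beyond the standard library. The function names and the two small groups of scores are invented for illustration.

```python
from itertools import combinations


def exact_permutation_p_value(group_a: list[float], group_b: list[float]) -> float:
    """Two-sided exact permutation test on the difference in group means.

    Under H0 the group labels are interchangeable, so every way of
    splitting the pooled values into groups of the same sizes is
    equally likely.
    """
    pooled = list(group_a) + list(group_b)
    n_a, n_b = len(group_a), len(group_b)
    total = sum(pooled)

    def mean_diff(sum_a: float) -> float:
        return sum_a / n_a - (total - sum_a) / n_b

    observed = abs(mean_diff(sum(group_a)))
    extreme = 0
    n_splits = 0
    for indices in combinations(range(len(pooled)), n_a):
        n_splits += 1
        sum_a = sum(pooled[i] for i in indices)
        # Small tolerance guards against floating-point round-off.
        if abs(mean_diff(sum_a)) >= observed - 1e-12:
            extreme += 1
    return extreme / n_splits


def decide(p_value: float, alpha: float = 0.05) -> str:
    """Apply the rule: reject H0 only when p is below the significance level."""
    return "reject H0" if p_value < alpha else "fail to reject H0"


# Invented endurance scores for two small groups.
treated = [12, 14, 15, 16]
control = [8, 9, 10, 11]
p = exact_permutation_p_value(treated, control)
print(f"{p:.4f} {decide(p)}")
# prints: 0.0286 reject H0
```

Note that the function returns "fail to reject H0" rather than "accept H0" when the p-value is at or above the significance level: a non-significant result does not show that the null hypothesis is true.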

3. How can I be sure my hypothesis is testable?

A testable hypothesis should be specific and measurable, and it should state a clear relationship between variables that can be tested with data. To ensure that your hypothesis is testable, consider the following:

- Clearly define the key variables in your hypothesis. You should be able to measure and manipulate these variables in a way that allows you to test the hypothesis.

- The hypothesis should predict a specific outcome or relationship between variables that can be measured or quantified.

- You should be able to collect the necessary data within the constraints of your study.

- It should be possible for other researchers to replicate your study, using the same methods and variables.

- Your hypothesis should be testable by using appropriate statistical analysis techniques, so you can draw conclusions and make inferences about the population from the sample data.

- It should be possible to disprove or reject the hypothesis through the collection of data.

4. How do I revise my research hypothesis if my data does not support it?

If your data does not support your research hypothesis, you will need to revise it or develop a new one. You should examine your data carefully and identify any patterns or anomalies, re-examine your research question, and/or revisit your theory to look for any alternative explanations for your results. Based on your review of the data, literature, and theories, modify your research hypothesis to better align it with the results you obtained. Use your revised hypothesis to guide your research design and data collection. It is important to remain objective throughout the process.

5. I am performing exploratory research. Do I need to formulate a research hypothesis?

As opposed to “confirmatory” research, where a researcher has some idea about the relationship between the variables under investigation, exploratory research (or hypothesis-generating research) looks into a completely new topic about which limited information is available. Therefore, the researcher will not have any prior hypotheses. In such cases, a researcher will need to develop a post-hoc hypothesis, that is, a research hypothesis generated after the results are known.

6. How is a research hypothesis different from a research question?

A research question is an inquiry about a specific topic or phenomenon, typically expressed as a question. It seeks to explore and understand a particular aspect of the research subject. In contrast, a research hypothesis is a specific statement or prediction that suggests an expected relationship between variables. It is formulated based on existing knowledge or theories and guides the research design and data analysis.

7. Can a research hypothesis change during the research process?

Yes, research hypotheses can change during the research process. As researchers collect and analyze data, new insights and information may emerge that require modification or refinement of the initial hypotheses. This can be due to unexpected findings, limitations in the original hypotheses, or the need to explore additional dimensions of the research topic. Flexibility is crucial in research, allowing for adaptation and adjustment of hypotheses to align with the evolving understanding of the subject matter.

8. How many hypotheses should be included in a research study?

The number of research hypotheses in a research study varies depending on the nature and scope of the research. It is not necessary to have multiple hypotheses in every study. Some studies may have only one primary hypothesis, while others may have several related hypotheses. The number of hypotheses should be determined based on the research objectives, research questions, and the complexity of the research topic. It is important to ensure that the hypotheses are focused, testable, and directly related to the research aims.

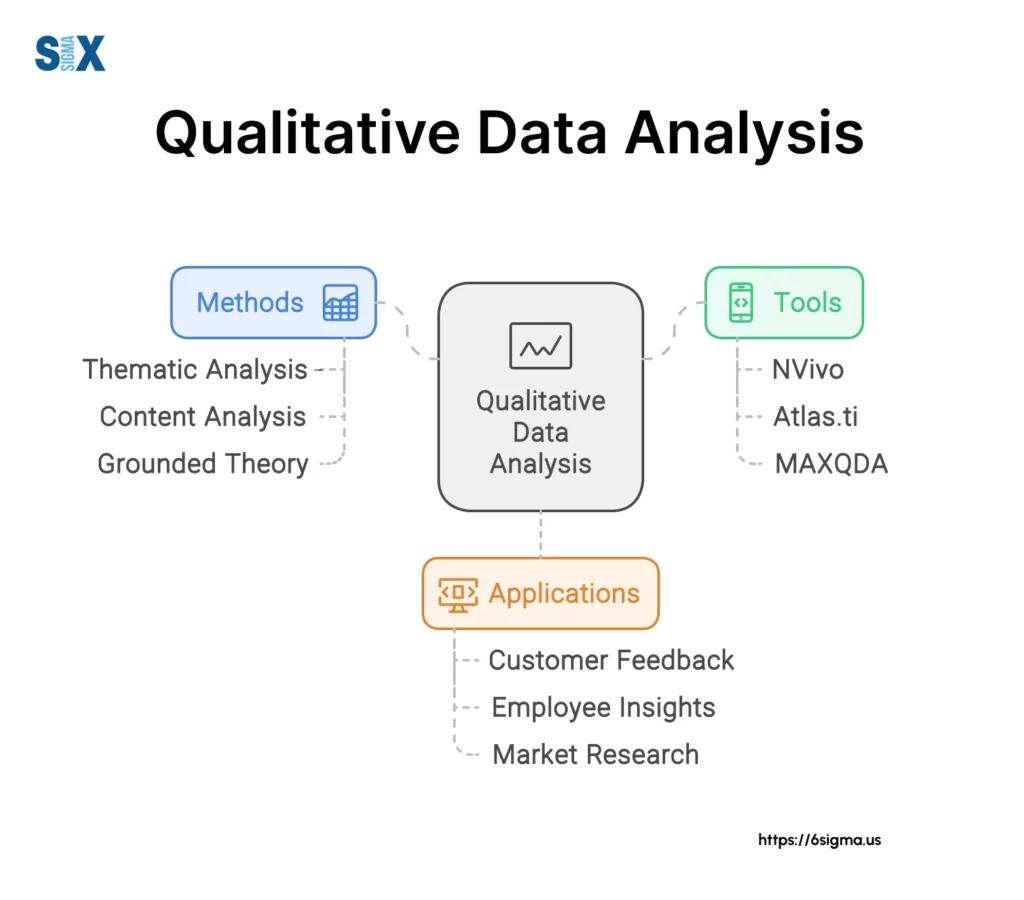

9. Can research hypotheses be used in qualitative research?

Yes, research hypotheses can be used in qualitative research, although they are more commonly associated with quantitative research. In qualitative research, hypotheses may be formulated as tentative or exploratory statements that guide the investigation. Instead of testing hypotheses through statistical analysis, qualitative researchers may use the hypotheses to guide data collection and analysis, seeking to uncover patterns, themes, or relationships within the qualitative data. The emphasis in qualitative research is often on generating insights and understanding rather than confirming or rejecting specific research hypotheses through statistical testing.

Visually Hypothesising in Scientific Paper Writing: Confirming and Refuting Qualitative Research Hypotheses Using Diagrams

1. Introduction

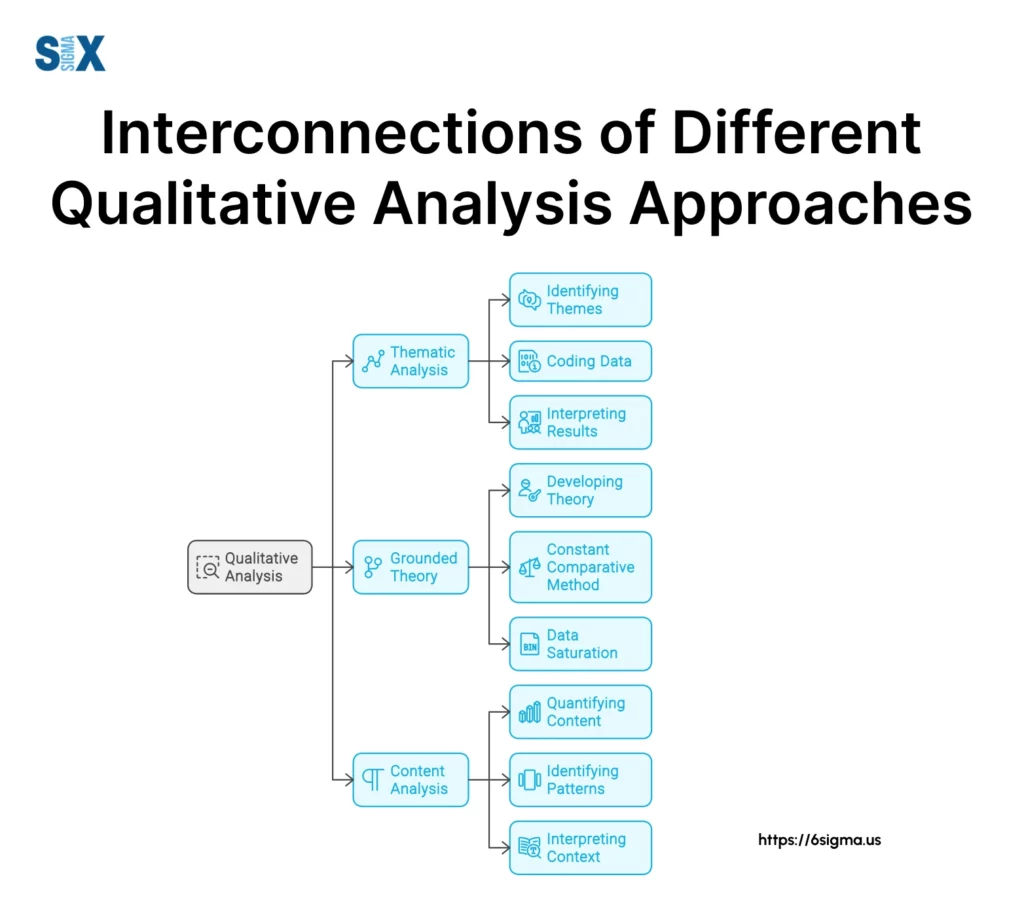

2. Overview of Visual Communication and Post-Positivist Research

Visual Communication in Post-Positivist Qualitative Research

3. Understanding Qualitative Research (and Hypotheses): Types, Notions, Contestations and Epistemological Underpinnings

3.1. What Is Qualitative Research?

3.2. Types of Qualitative Research and Their Epistemological Underpinnings

3.3. What Is a Research Hypothesis? Can It Be Used in Qualitative Research?

3.4. Analogical Arguments in Support of Using Hypotheses in Qualitative Research

3.5. Can a Hypothesis Be “Tested” in Qualitative Research?

4. The Process of Developing and Using Hypotheses in Qualitative Research

“Begin a research study without having to test a hypothesis. Instead, it allows them to develop hypotheses by listening to what the research participants say. Because the method involves developing hypotheses after the data are collected, it is called hypothesis-generating research rather than hypothesis-testing research. The grounded theory method uses two basic principles: (1) questioning rather than measuring, and (2) generating hypotheses using theoretical coding.”

4.1. Formulating the Qualitative Research Hypothesis

- The qualitative hypothesis should be based on a research problem derived from the research questions.

- It should be supported by literature evidence (on the relationship or association between variables).

- It should be informed by past research and observations.

- It must be falsifiable or disprovable (see Popper [77]).

- It should be analysable using data collected from the field or literature.

- It has to be testable or verifiable, provable, nullifiable, refutable, confirmable or disprovable based on the results of analysing data collected from the field or literature.

4.2. Refuting or Verifying a Qualitative Research Hypothesis Diagrammatically (with Illustration)

“A core development concern in Nigeria is the magnitude of challenges rural people face. Inefficient infrastructures, lack of employment opportunities and poor social amenities are some of these challenges. These challenges persist mainly due to ineffective approaches used in tackling them. This research argues that an approach based on territorial development would produce better outcomes. The reason is that territorial development adopts integrated policies and actions with a focus on places as opposed to sectoral approaches. The research objectives were to evaluate rural development approaches and identify a specific approach capable of activating poverty reduction. It addressed questions bordering on past rural development approaches and how to improve urban-rural linkages in rural areas. It also addressed questions relating to ways that rural areas can reduce poverty through territorial development…” [16], p. 1

“Nigeria has legal and institutional opportunities for comprehensive improvement of rural areas through territorial development. However, due to the absence of a concrete rural development plan and area-based rural development strategies, this has not been materialized”.

- Proposition 1: Legal and institutional opportunities that can lead to comprehensive improvement of rural areas through territorial development exist in Nigeria.

- Proposition 2: However, due to the absence of a concrete rural development plan and area-based rural development strategies, this has not been materialized.

- Independent variables: legal and institutional opportunities; incessant structural changes in its political history; and policy negligence.

- Dependent variable: comprehensive rural improvements through territorial development.

5. Discussion and Conclusion

Acknowledgments

Conflicts of Interest

1. Friesen, J.; Van Stan, J.T.; Elleuche, S. Communicating Science through Comics: A Method. Publications 2018, 6, 38.

2. Hammersley, M. The Dilemma of Qualitative Method: Herbert Blumer and the Chicago Tradition; Routledge: London, UK, 1989; ISBN 0-415-01772-6.

3. Ulichny, P. The Role of Hypothesis Testing in Qualitative Research. A Researcher Comments. TESOL Q. 1991, 25, 200–202.

4. Bluhm, D.J.; Harman, W.; Lee, T.W.; Mitchell, T.R. Qualitative research in management: A decade of progress. J. Manag. Stud. 2011, 48, 1866–1891.

5. Maudsley, G. Mixing it but not mixed-up: Mixed methods research in medical education (a critical narrative review). Med. Teach. 2011, 33, e92–e104.

6. Agee, J. Developing qualitative research questions: A reflective process. Int. J. Qual. Stud. Educ. 2009, 22, 431–447.

7. Yin, R.K. Qualitative Research from Start to Finish, 2nd ed.; Guilford Publications: New York, NY, USA, 2015; ISBN 9781462517978.

8. Alvesson, M.; Sköldberg, K. Reflexive Methodology: New Vistas for Qualitative Research; Sage: London, UK, 2017; ISBN 9781473964242.

9. Geertz, C. The Interpretation of Cultures: Selected Essays; Basic Books: New York, NY, USA, 1973; ISBN 465-03425-X.

10. John-Steiner, V.; Mahn, H. Sociocultural approaches to learning and development: A Vygotskian framework. Educ. Psychol. 1996, 31, 191–206.

11. Zeidler, D.L. STEM education: A deficit framework for the twenty first century? A sociocultural socioscientific response. Cult. Stud. Sci. Educ. 2016, 11, 11–26.

12. Koro-Ljungberg, M. Reconceptualizing Qualitative Research: Methodologies without Methodology; Sage Publications: Los Angeles, CA, USA, 2015; ISBN 9781483351711.

13. Chigbu, U.E. The Application of Weyarn’s (Germany) Rural Development Approach to Isuikwuato (Nigeria): An Appraisal of the Possibilities and Limitations. Unpublished Master’s Thesis, Technical University of Munich, Munich, Germany, 2009.

14. Ntiador, A.M. Development of an Effective Land (Title) Registration System through Inter-Agency Data Integration as a Basis for Land Management. Master’s Thesis, Technical University of Munich, Munich, Germany, 2009.

15. Sakaria, P. Redistributive Land Reform: Towards Accelerating the National Resettlement Programme of Namibia. Master’s Thesis, Technical University of Munich, Munich, Germany, 2016.

16. Chigbu, U.E. Territorial Development: Suggestions for a New Approach to Rural Development in Nigeria. Ph.D. Thesis, Technical University of Munich, Munich, Germany, 2013.

17. Miller, K. Communication Theories: Perspectives, Processes, and Contexts, 2nd ed.; Peking University Press: Beijing, China, 2007; ISBN 9787301124314.

18. Taylor, T.R.; Lindlof, B.C. Qualitative Communication Research Methods, 3rd ed.; SAGE: Thousand Oaks, CA, USA, 2011; ISBN 978-1412974738.

19. Bergman, M. Positivism. In The International Encyclopedia of Communication Theory and Philosophy; Wiley and Sons: Hoboken, NJ, USA, 2016; ISBN 9781118766804.

20. Robson, C. Real World Research. A Resource for Social Scientists and Practitioner-Researchers, 2nd ed.; Blackwell: Malden, MA, USA, 2002; ISBN 978-0-631-21305-5.

21. Bohn, R.; Short, J. Measuring Consumer Information. Int. J. Commun. 2012, 6, 980–1000.

22. Zacks, J.; Levy, E.; Tversky, B.; Schinao, D. Graphs in Print, Diagrammatic Representation and Reasoning; Springer: London, UK, 2002.

23. Merieb, E.N.; Hoehn, K. Human Anatomy & Physiology, 7th ed.; Pearson International: Cedar Rapids, IA, USA, 2007; ISBN 978-0132197991.

24. Semetko, H.; Scammell, M. The SAGE Handbook of Political Communication; SAGE Publications: London, UK, 2012; ISBN 9781446201015.

25. Thorpe, S.; Fize, D.; Marlot, C. Speed of processing in the human visual system. Nature 1996, 381, 520–522.

26. Holcomb, P.; Grainger, J. On the Time Course of Visual Word Recognition. J. Cognit. Neurosci. 2006, 18, 1631–1643.

27. Messaris, P. Visual Communication: Theory and Research. J. Commun. 2003, 53, 551–556.

28. Mirzoeff, M. An Introduction to Visual Culture; Routledge: New York, NY, USA, 1999.

29. Prosser, J. (Ed.) Image-Based Research: A Sourcebook for Qualitative Researchers; Routledge: New York, NY, USA, 1998.

30. Howells, R. Visual Culture: An Introduction; Polity Press: Cambridge, UK, 2002.

31. Thomson, P. (Ed.) Doing Visual Research with Children and Young People; Routledge: London, UK, 2009.

32. Jensen, K.B. (Ed.) A Handbook of Media and Communication Research: Qualitative and Quantitative Methodologies; Routledge: London, UK; New York, NY, USA, 2013.

33. Bestley, R.; Noble, I. Visual Research: An Introduction to Research Methods in Graphic Design, 3rd ed.; Bloomsbury Publishing: London, UK, 2016.

34. Wilke, R.; Hill, M. On New Forms of Science Communication and Communication in Science: A Videographic Approach to Visuality in Science Slams and Academic Group Talk. Qual. Inq. 2019.

35. Adam, F. Measuring National Innovation Performance: The Innovation Union Scoreboard Revisited. In SpringerBriefs in Economics; Springer: London, UK, 2014; Volume 5, ISBN 978-3-642-39464-5.

36. Flick, U. An Introduction to Qualitative Research, 5th ed.; Sage: Thousand Oaks, CA, USA, 2006.

37. Creswell, J.W. Five qualitative approaches to inquiry. Qual. Inq. Res. Des. Choos. Five Approaches 2007, 2, 53–80.

38. Auerbach, C.F.; Silverstein, L.B. Qualitative Data: An Introduction to Coding and Analysis; New York University Press: New York, NY, USA; London, UK, 2003; ISBN 9780814706954.

39. Adams, T.E. A review of narrative ethics. Qual. Inq. 2008, 14, 175–194.

40. Hancock, B.; Ockleford, B.; Windridge, K. An Introduction to Qualitative Research; NHS: Nottingham/Sheffield, UK, 2009.

41. Henwood, K. Qualitative research. Encycl. Crit. Psychol. 2014, 1611–1614.

42. Glaser, B.G.; Strauss, A.L. Discovery of Grounded Theory: Strategies for Qualitative Research; Routledge: New York, NY, USA; London, UK, 2017; ISBN 978-0202302607.

43. Ary, D.; Jacobs, L.C.; Irvine, C.K.S.; Walker, D. Introduction to Research in Education; Cengage Learning: Belmont, CA, USA, 2018; ISBN 978-1133596745.

44. Sullivan, G.; Sargeant, J. Qualities of Qualitative Research: Part I (Editorial). J. Grad. Med. Educ. 2011, 449–452.

45. Bryman, A. Social Research Methods, 5th ed.; Oxford University Press: Oxford, UK, 2015; ISBN 978-0199689453.

46. Creswell, J.W. Research Design: Qualitative, Quantitative, and Mixed Methods Approaches, 4th ed.; Sage: Thousand Oaks, CA, USA, 2013; ISBN 978-1452226101.

47. Taylor, S.J.; Robert, B.; DeVault, M. Introduction to Qualitative Research Methods: A Guidebook and Resource; John Wiley & Sons: Hoboken, NJ, USA, 2015; ISBN 978-1-118-76721-4.

48. Litosseliti, L. (Ed.) Research Methods in Linguistics; Bloomsbury Publishing: New York, NY, USA, 2010; ISBN 9780826489937.

49. Berger, A.A. Media and Communication Research Methods: An Introduction to Qualitative and Quantitative Approaches, 4th ed.; Sage Publications: Los Angeles, CA, USA, 2019; ISBN 9781483377568.

50. Creswell, J.W. Qualitative Inquiry and Research Design: Choosing among Five Approaches, 3rd ed.; SAGE: Thousand Oaks, CA, USA, 2012; ISBN 978-1412995306.

51. Saunders, B.; Sim, J.; Kingstone, T.; Baker, S.; Waterfield, J.; Bartlam, B.; Burroughs, H.; Jinks, C. Saturation in qualitative research: Exploring its conceptualization and operationalization. Qual. Quant. 2018, 52, 1893–1907.

52. Leonard, K. Six Types of Qualitative Research. Bizfluent, 22 January 2019. Available online: https://bizfluent.com/info-8580000-six-types-qualitative-research.html (accessed on 3 February 2019).

53. Campbell, D.T. Methodology and Epistemology for Social Science, Selected Papers; University of Chicago Press: Chicago, IL, USA, 1998.

54. Crotty, M. The Foundations of Social Research: Meanings and Perspectives in the Research Process; Sage Publications: London, UK, 1998.

55. Gilbert, N. Researching Social Life; Sage Publications: London, UK, 2008.

56. Kerlinger, F.N. The attitude structure of the individual: A Q-study of the educational attitudes of professors and laymen. Genet. Psychol. Monogr. 1956, 53, 283–329.

57. Ary, D.; Jacobs, L.C.; Razavieh, A. Introduction to Research in Education; Harcourt Brace College Publishers: Fort Worth, TX, USA, 1996; ISBN 0155009826.

58. Creswell, J.W. Research Design: Qualitative & Quantitative Approaches; Sage: Thousand Oaks, CA, USA, 1994; ISBN 978-0803952553.

59. Mourougan, S.; Sethuraman, K. Hypothesis Development and Testing. J. Bus. Manag. 2017, 19, 34–40.

- Green, J.L.; Camilli, G.; Elmore, P.B. (Eds.) Handbook of Complementary Methods in Education Research ; American Education Research Association: Washington, DC, USA, 2012; ISBN 978-0805859331. [ Google Scholar ]

- Pyrczak, F. Writing Empirical Research Reports: A Basic Guide for Students of the Social and Behavioral Sciences , 8th ed.; Routledge: New York, NY, USA, 2016; ISBN 978-1936523368. [ Google Scholar ]

- Gravetter, F.J.; Forzano, L.A.B. Research Methods for the Behavioral Sciences , 4th ed.; Wadsworth Cengage Learning: Belmont, CA, USA, 2018; ISBN 978-1111342258. [ Google Scholar ]

- Malterud, K. Qualitative research: Standards, challenges, and guidelines. Lancet 2001 , 358 , 483–488. [ Google Scholar ] [ CrossRef ]

- Malterud, K.; Hollnagel, H. Encouraging the strengths of women patients: A case study from general practice on empowering dialogues. Scand. J. Public Health 1999 , 27 , 254–259. [ Google Scholar ] [ CrossRef ]

- Flyvbjerg, B. Five misunderstandings about case-study research. Qual. Inq. 2006 , 12 , 219–245. [ Google Scholar ] [ CrossRef ]

- Mabikke, S. Improving Land and Water Governance in Uganda: The Role of Institutions in Secure Land and Water Rights in Lake Victoria Basin. Ph.D. Thesis, Technical University of Munich, Munich, Germany, 2014. [ Google Scholar ]

- Sabatier, P.A. The advocacy coalition framework: Revisions and relevance for Europe. J. Eur. Public Policy 1998 , 5 , 98–130. [ Google Scholar ] [ CrossRef ]

- Holloway, I.; Galvin, K. Qualitative Research in Nursing and Healthcare , 4th ed.; John Wiley & Sons: Hoboken, NJ, USA, 2016; ISBN 978-1-118-87449-3. [ Google Scholar ]

- Christensen, L.B.; Johnson, R.B.; Turner, L.A. Research Methods, Design and Analysis , 12th ed.; Pearson: London, UK; New York, NY, USA, 2011; ISBN 9780205961252. [ Google Scholar ]

- Smith, B.; McGannon, K.R. Developing rigor in qualitative research: Problems and opportunities within sport and exercise psychology. Int. Rev. Sport Exerc. Psychol. 2018 , 11 , 101–121. [ Google Scholar ] [ CrossRef ]

- Konijn, E.A.; van de Schoot, R.; Winter, S.D.; Ferguson, C.J. Possible solution to publication bias through Bayesian statistics, including proper null hypothesis testing. Commun. Methods Meas. 2015 , 9 , 280–302. [ Google Scholar ] [ CrossRef ]

- Pfister, L.; Kirchner, J.W. Debates—Hypothesis testing in hydrology: Theory and practice. Water Resour. Res. 2017 , 53 , 1792–1798. [ Google Scholar ] [ CrossRef ]

- Strotz, L.C.; Simões, M.; Girard, M.G.; Breitkreuz, L.; Kimmig, J.; Lieberman, B.S. Getting somewhere with the Red Queen: Chasing a biologically modern definition of the hypothesis. Biol. Lett. 2018 , 14 , 2017734. [ Google Scholar ] [ CrossRef ] [ PubMed ]

- Steger, M.F.; Owens, G.P.; Park, C.L. Violations of war: Testing the meaning-making model among Vietnam veterans. J. Clin. Psychol. 2015 , 71 , 105–116. [ Google Scholar ] [ CrossRef ] [ PubMed ]

- Garland, E.L.; Kiken, L.G.; Faurot, K.; Palsson, O.; Gaylord, S.A. Upward spirals of mindfulness and reappraisal: Testing the mindfulness-to-meaning theory with autoregressive latent trajectory modelling. Cognit. Ther. Res. 2017 , 41 , 381–392. [ Google Scholar ] [ CrossRef ]

- Gentsch, K.; Loderer, K.; Soriano, C.; Fontaine, J.R.; Eid, M.; Pekrun, R.; Scherer, K.R. Effects of achievement contexts on the meaning structure of emotion words. Cognit. Emot. 2018 , 32 , 379–388. [ Google Scholar ] [ CrossRef ]

- Popper, K. Conjectures and Refutations. In Readings in the Philosophy of Science ; Schick, T., Ed.; Routledge and Keagan Paul: London, UK, 1963; pp. 33–39. [ Google Scholar ]

- Chilisa, B. Indigenous Research Methodologies ; Sage: Thousand Oaks, CA, USA, 2011; ISBN 9781412958820. [ Google Scholar ]

- Wilson, S. Research as Ceremony: Indigenous Research Methods ; Fernwood Press: Black Point, NS, Canada, 2008; ISBN 9781552662816. [ Google Scholar ]

- Chigbu, U.E. Concept and Approach to Land Management Interventions for Rural Development in Africa. In Geospatial Technologies for Effective Land Governance ; El-Ayachi, M., El Mansouri, L., Eds.; IGI Global: Hershey, PA, USA, 2019; pp. 1–14. [ Google Scholar ]

- Kawulich, B.B. Gatekeeping: An ongoing adventure in research. Field Methods J. 2011 , 23 , 57–76. [ Google Scholar ] [ CrossRef ]

- Ntihinyurwa, P.D. An Evaluation of the Role of Public Participation in Land Use Consolidation (LUC) Practices in Rwanda and Its Improvement. Master’s Thesis, Technical University of Munich, Munich, Germany, 2016. [ Google Scholar ]

- Ntihinyurwa, P.D.; de Vries, W.T.; Chigbu, U.E.; Dukwiyimpuhwe, P.A. The positive impacts of farm land fragmentation in Rwanda. Land Use Policy 2019 , 81 , 565–581. [ Google Scholar ] [ CrossRef ]

- Chigbu, U.E. Masculinity, men and patriarchal issues aside: How do women’s actions impede women’s access to land? Matters arising from a peri-rural community in Nigeria. Land Use Policy 2019 , 81 , 39–48. [ Google Scholar ] [ CrossRef ]

- Gwaleba, M.J.; Masum, F. Participation of Informal Settlers in Participatory Land Use Planning Project in Pursuit of Tenure Security. Urban Forum 2018 , 29 , 169–184. [ Google Scholar ] [ CrossRef ]

- Sait, M.A.; Chigbu, U.E.; Hamiduddin, I.; De Vries, W.T. Renewable Energy as an Underutilised Resource in Cities: Germany’s ‘Energiewende’ and Lessons for Post-Brexit Cities in the United Kingdom. Resources 2019 , 8 , 7. [ Google Scholar ] [ CrossRef ]

- Chigbu, U.E.; Paradza, G.; Dachaga, W. Differentiations in Women’s Land Tenure Experiences: Implications for Women’s Land Access and Tenure Security in Sub-Saharan Africa. Land 2019 , 8 , 22. [ Google Scholar ] [ CrossRef ]

- Chigbu, U.E.; Alemayehu, Z.; Dachaga, W. Uncovering land tenure insecurities: Tips for tenure responsive land-use planning in Ethiopia. Dev. Pract. 2019 . [ Google Scholar ] [ CrossRef ]

- Handayani, W.; Rudianto, I.; Setyono, J.S.; Chigbu, U.E.; Sukmawati, A.N. Vulnerability assessment: A comparison of three different city sizes in the coastal area of Central Java, Indonesia. Adv. Clim. Chang. Res. 2017 , 8 , 286–296. [ Google Scholar ] [ CrossRef ]

- Maxwell, J. Qualitative Research: An Interactive Design , 3rd ed.; Sage: Thousand Oaks, CA, USA, 2005; ISBN 9781412981194. [ Google Scholar ]

- Banks, M. Using Visual Data in Qualitative Research , 2nd ed.; Sage: Thousand Oaks, CA, USA, 2018; Volume 5, ISBN 9781473913196. [ Google Scholar ]

| Types | Approach to Research or Enquiries | Data Collection Methods | Data Analysis Methods | Forms in Scientific Writing | Epistemological Foundations |
|---|---|---|---|---|---|
| Narrative | Explores situations, scenarios and processes | Interviews and documents | Storytelling, content review and theme (meaning) development | In-depth narration of events or situations | Objectivism, postmodernism, social constructionism, feminism and constructivism (including interpretive and reflexive) in positivist and post-positivist perspectives |
| Case study | Examination of episodic events with focus on answering “how” questions | Interviews, observations, document contents and physical inspections | Detailed identification of themes and development of narratives | In-depth study of possible lessons learned from a case or cases | |
| Grounded theory | Investigates procedures | Interviews and questionnaire | Data coding, categorisation of themes and description of implications | Theory and theoretical models | |
| Historical | Description of past events | Interviews, surveys and documents | Description of events development | Historical reports | |
| Phenomenological | Understand or explain experiences | Interviews, surveys and observations | Description of experiences, examination of meanings and theme development | Contextualisation and reporting of experience | |
| Ethnographic | Describes and interprets social grouping or cultural situation | Interviews, observations and active participation | Description and interpretation of data and theme development | Detailed reporting of interpreted data | |

Share and Cite

Chigbu, U.E. Visually Hypothesising in Scientific Paper Writing: Confirming and Refuting Qualitative Research Hypotheses Using Diagrams. Publications 2019 , 7 , 22. https://doi.org/10.3390/publications7010022

Qualitative vs. Quantitative Research — Here’s What You Need to Know

Will Mellor, Director of Surveys, GLG

Read Time: 0 Minutes

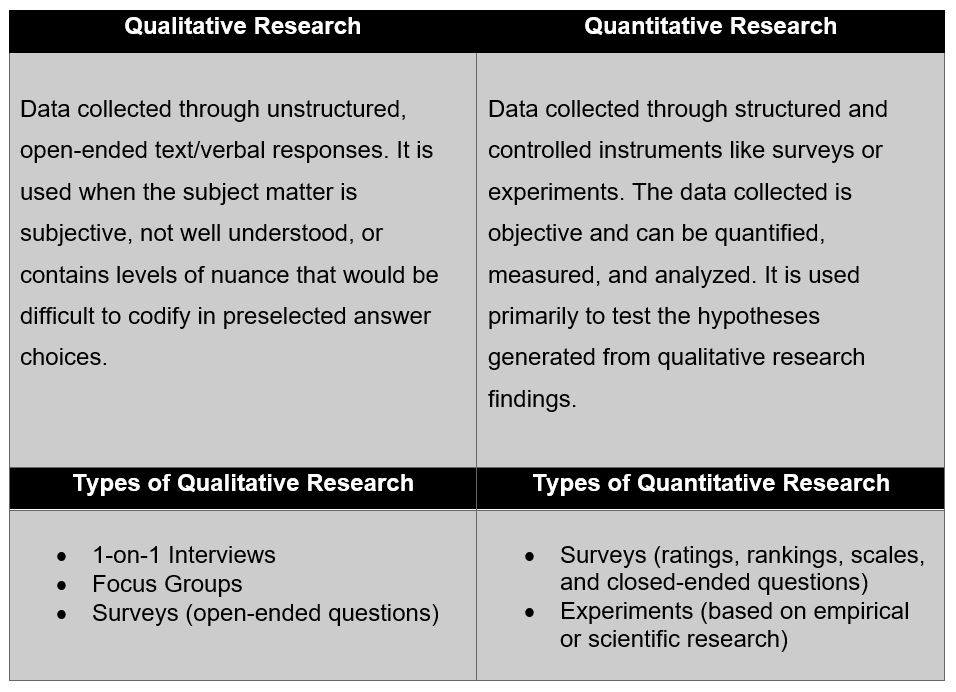

Qualitative vs. Quantitative — you’ve heard the terms before, but what do they mean? Here’s what you need to know on when to use them and how to apply them in your research projects.

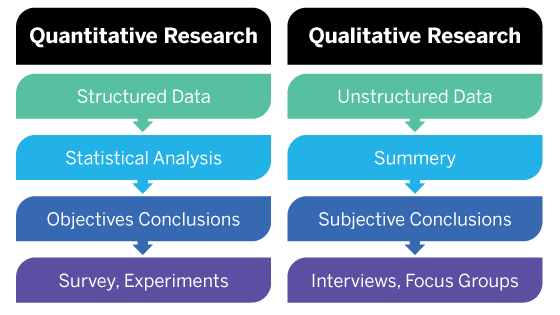

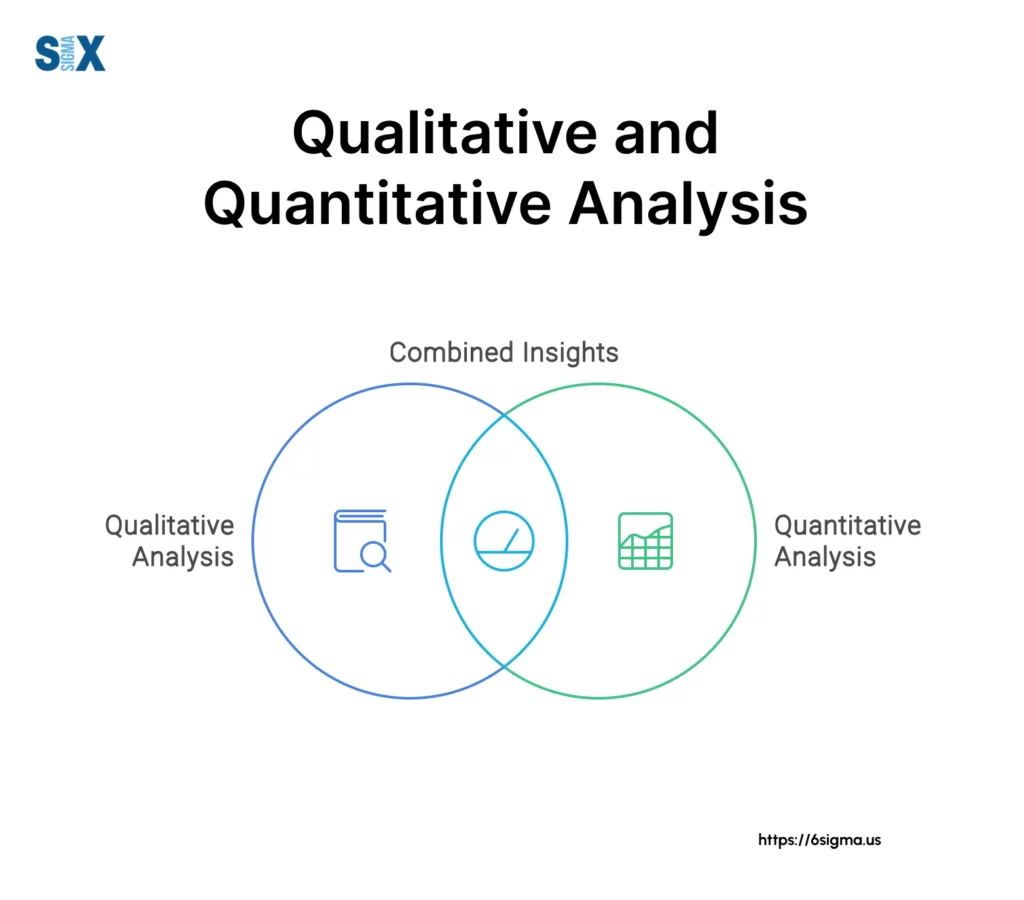

Most research projects you undertake will likely require some combination of qualitative and quantitative data. The magnitude of each will depend on what you need to accomplish. They are opposite in their approach, which makes them balanced in their outcomes.

When Are They Applied?

Qualitative

Qualitative research is used to formulate a hypothesis. If you need deeper information about a topic you know little about, qualitative research can help you uncover themes. For this reason, qualitative research often comes prior to quantitative. It allows you to get a baseline understanding of the topic and start to formulate hypotheses around correlation and causation.

Quantitative

Quantitative research is used to test or confirm a hypothesis. Qualitative research usually informs quantitative. You need to have enough understanding about a topic in order to develop a hypothesis you can test. Since quantitative research is highly structured, you first need to understand what the parameters are and how variable they are in practice. This allows you to create a research outline that is controlled in all the ways that will produce high-quality data.

In practice, the parameters are the factors you want to test against your hypothesis. If your hypothesis is that COVID is going to transform the way companies think about office space, some of your parameters might include the percent of your workforce working from home pre- and post-COVID, total square footage of office space held, and/or real-estate spend expectations by executive leadership. You would also want to know the variability of those parameters. In the COVID example, you will need to know standard ranges of square footage and real-estate expenditures so that you can create answer options that will capture relevant, high-quality, and easily actionable data.

Methods of Research

Often, qualitative research is conducted with a small sample size and includes many open-ended questions. The goal is to understand “Why?” and the thinking behind the decisions. The best way to facilitate this type of research is through one-on-one interviews, focus groups, and sometimes surveys. A major benefit of the interview and focus group formats is the ability to ask follow-up questions and dig deeper on answers that are particularly insightful.

Conversely, quantitative research is designed for larger sample sizes, which can garner perspectives across a wide spectrum of respondents. While not always necessary, sample sizes can sometimes be large enough to yield statistically significant results. The best way to facilitate this type of research is through surveys or large-scale experiments.

Unsurprisingly, the two different approaches will generate different types of data that will need to be analyzed differently.

For qualitative data, you’ll end up with data that will be highly textual in nature. You’ll be reading through the data and looking for key themes that emerge over and over. This type of research is also great at producing quotes that can be used in presentations or reports. Quotes are a powerful tool for conveying sentiment and making a poignant point.

For quantitative data, you’ll end up with a data set that can be analyzed, often with statistical software such as Excel, R, or SPSS. You can ask many different types of questions that produce this quantitative data, including rating/ranking questions, single-select, multiselect, and matrix table questions. These question types will produce data that can be analyzed to find averages, ranges, growth rates, percentage changes, minimums/maximums, and even time-series data for longer-term trend analysis.
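The kinds of summaries mentioned above can be sketched in a few lines of Python. This is a minimal illustration using only the standard library; the answer values, variable names, and helper functions are hypothetical and are not taken from any real survey.

```python
from statistics import mean

# Hypothetical answers to a 1-5 rating question (illustrative values only).
ratings = [4, 5, 3, 4, 2, 5, 4]

# Hypothetical annual spend per respondent for two survey waves.
spend_last_year = [10_000, 12_000, 8_000]
spend_this_year = [11_000, 15_000, 8_800]


def summarize(values):
    """Return the average, minimum, maximum, and range of a numeric answer set."""
    return {
        "average": mean(values),
        "minimum": min(values),
        "maximum": max(values),
        "range": max(values) - min(values),
    }


def percent_change(old, new):
    """Percentage change from old to new."""
    return (new - old) / old * 100


rating_summary = summarize(ratings)
spend_growth = percent_change(mean(spend_last_year), mean(spend_this_year))

print(rating_summary)
print(f"Average spend changed by {spend_growth:.1f}%")
```

The same calculations are available in Excel, R, or SPSS; the point here is only that closed-ended questions produce numbers that can be summarized directly.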

Mixed Methods Approach

You aren’t limited to just one approach. If you need both quantitative and qualitative data, then collect both. You can even collect both quantitative and qualitative data within one type of research instrument. In a survey, you can ask both open-ended questions about “Why?” as well as closed-ended, data-related questions. Even in an unstructured format, like an interview or focus group, you can ask numerical questions to capture analyzable data.

Just be careful. While qualitative themes can be generalized, it can be dangerous to generalize on such a small sample size of quantitative data. For instance, why companies like a certain software platform may fall into three to five key themes. How much they spend on that platform can be highly variable.
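One way to see why small quantitative samples are risky is to look at the standard error of the mean, which shrinks as the sample grows. The sketch below is an illustration under stated assumptions: the spend figures are hypothetical, and the "large sample" is simply the small one repeated, an artificial device to hold the spread of answers roughly constant while changing only the sample size.

```python
from math import sqrt
from statistics import stdev


def standard_error(values):
    """Standard error of the mean: sample standard deviation / sqrt(n)."""
    return stdev(values) / sqrt(len(values))


# Hypothetical annual platform spend (in thousands) from five interviews.
small_sample = [20, 150, 35, 400, 60]

# The same spread of answers, imagined across 100 times as many respondents.
large_sample = small_sample * 100

se_small = standard_error(small_sample)
se_large = standard_error(large_sample)

print(f"n={len(small_sample)}: standard error = {se_small:.1f}")
print(f"n={len(large_sample)}: standard error = {se_large:.1f}")
```

With only five respondents, the estimate of average spend is far less precise than it would be with several hundred, even when the answers are just as variable.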

The Takeaway

If you are unfamiliar with the topic you are researching, qualitative research is the best first approach. As you get deeper in your research, certain themes will emerge, and you’ll start to form hypotheses. From there, quantitative research can provide larger-scale data sets that can be analyzed to either support or refute the hypotheses you formulated earlier in your research. Most importantly, the two approaches are not mutually exclusive. You can have an eye for both themes and data throughout the research process. You’ll just be leaning more heavily to one or the other depending on where you are in your understanding of the topic.

About Will Mellor

Will Mellor leads a team of accomplished project managers who serve financial service firms across North America. His team manages end-to-end survey delivery from first draft to final deliverable. Will is an expert on GLG’s internal membership and consumer populations, as well as survey design and research. Before coming to GLG, he was the vice president of an economic consulting group, where he was responsible for designing economic impact models for clients in both the public sector and the private sector. Will has bachelor’s degrees in international business and finance and a master’s degree in applied economics.

What Is A Research (Scientific) Hypothesis? A plain-language explainer + examples

By: Derek Jansen (MBA) | Reviewed By: Dr Eunice Rautenbach | June 2020

If you’re new to the world of research, or it’s your first time writing a dissertation or thesis, you’re probably noticing that the words “research hypothesis” and “scientific hypothesis” are used quite a bit, and you’re wondering what they mean in a research context.

“Hypothesis” is one of those words that people use loosely, thinking they understand what it means. However, it has a very specific meaning within academic research. So, it’s important to understand the exact meaning before you start hypothesizing.

Research Hypothesis 101

- What is a hypothesis?

- What is a research hypothesis (scientific hypothesis)?

- Requirements for a research hypothesis

- Definition of a research hypothesis

- The null hypothesis

What is a hypothesis?

Let’s start with the general definition of a hypothesis (not a research hypothesis or scientific hypothesis), according to the Cambridge Dictionary:

Hypothesis: an idea or explanation for something that is based on known facts but has not yet been proved.

In other words, it’s a statement that provides an explanation for why or how something works, based on facts (or some reasonable assumptions), but that has not yet been specifically tested. For example, a hypothesis might look something like this:

Hypothesis: sleep impacts academic performance.

This statement predicts that academic performance will be influenced by the amount and/or quality of sleep a student engages in – sounds reasonable, right? It’s based on reasonable assumptions, underpinned by what we currently know about sleep and health (from the existing literature). So, loosely speaking, we could call it a hypothesis, at least by the dictionary definition.

But that’s not good enough…

Unfortunately, that’s not quite sophisticated enough to describe a research hypothesis (also sometimes called a scientific hypothesis), and it wouldn’t be acceptable in a dissertation, thesis or research paper. In the world of academic research, a statement needs a few more criteria to constitute a true research hypothesis.

What is a research hypothesis?

A research hypothesis (also called a scientific hypothesis) is a statement about the expected outcome of a study (for example, a dissertation or thesis). To constitute a quality hypothesis, the statement needs to have three attributes – specificity, clarity and testability.

Let’s take a look at these more closely.

Hypothesis Essential #1: Specificity & Clarity

A good research hypothesis needs to be extremely clear and articulate about both what’s being assessed (who or what variables are involved) and the expected outcome (for example, a difference between groups, a relationship between variables, etc.).

Let’s stick with our sleepy students example and look at how this statement could be more specific and clear.

Hypothesis: Students who sleep at least 8 hours per night will, on average, achieve higher grades in standardised tests than students who sleep less than 8 hours a night.

As you can see, the statement is very specific as it identifies the variables involved (sleep hours and test grades), the parties involved (two groups of students), as well as the predicted relationship type (a positive relationship). There’s no ambiguity or uncertainty about who or what is involved in the statement, and the expected outcome is clear.

Contrast that to the original hypothesis we looked at – “Sleep impacts academic performance” – and you can see the difference. “Sleep” and “academic performance” are both comparatively vague, and there’s no indication of what the expected relationship direction is (more sleep or less sleep). As you can see, specificity and clarity are key.

Hypothesis Essential #2: Testability (Provability)

A statement must be testable to qualify as a research hypothesis. In other words, there needs to be a way to prove (or disprove) the statement. If it’s not testable, it’s not a hypothesis – simple as that.

For example, consider the hypothesis we mentioned earlier:

Hypothesis: Students who sleep at least 8 hours per night will, on average, achieve higher grades in standardised tests than students who sleep less than 8 hours a night.

We could test this statement by undertaking a quantitative study involving two groups of students, one that gets 8 or more hours of sleep per night for a fixed period, and one that gets less. We could then compare the standardised test results for both groups to see if there’s a statistically significant difference.
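To make the comparison concrete, here is a minimal sketch of one possible test, a permutation test on the difference in mean scores. It is an illustration under stated assumptions, not the method the article prescribes: the scores are hypothetical, the function name and parameters are invented for this example, and a real study might use a different test (for instance a t-test) chosen to suit its design.

```python
import random
from statistics import mean


def permutation_test(group_a, group_b, n_permutations=10_000, seed=0):
    """One-sided permutation test: is mean(group_a) greater than mean(group_b)?

    Returns the observed difference in means and an approximate p-value.
    """
    rng = random.Random(seed)
    observed = mean(group_a) - mean(group_b)
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)

    at_least_as_extreme = 0
    for _ in range(n_permutations):
        # Under the null hypothesis the group labels are interchangeable,
        # so shuffle the pooled scores and split them into two new groups.
        rng.shuffle(pooled)
        diff = mean(pooled[:n_a]) - mean(pooled[n_a:])
        if diff >= observed:
            at_least_as_extreme += 1

    # Add-one correction so the estimated p-value is never exactly zero.
    p_value = (at_least_as_extreme + 1) / (n_permutations + 1)
    return observed, p_value


# Hypothetical standardised test scores (illustrative values only).
eight_plus_hours = [78, 85, 90, 72, 88, 81, 94, 79]
under_eight_hours = [70, 65, 80, 74, 68, 77, 72, 63]

difference, p_value = permutation_test(eight_plus_hours, under_eight_hours)
print(f"Observed difference in mean score: {difference:.2f}")
print(f"Approximate one-sided p-value: {p_value:.4f}")
```

A small p-value would suggest that a difference this large is unlikely to arise from chance alone, which would count as support for the hypothesis rather than proof of it.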

Again, if you compare this to the original hypothesis we looked at – “Sleep impacts academic performance” – you can see that it would be quite difficult to test that statement, primarily because it isn’t specific enough. How much sleep? By whom? What type of academic performance?

So, remember the mantra – if you can’t test it, it’s not a hypothesis 🙂

Defining A Research Hypothesis

You’re still with us? Great! Let’s recap and pin down a clear definition of a hypothesis.

A research hypothesis (or scientific hypothesis) is a statement about an expected relationship between variables, or explanation of an occurrence, that is clear, specific and testable.

So, when you write up hypotheses for your dissertation or thesis, make sure that they meet all these criteria. If you do, you’ll not only have rock-solid hypotheses but you’ll also ensure a clear focus for your entire research project.

What about the null hypothesis?

You may have also heard the terms null hypothesis, alternative hypothesis, or H-zero thrown around. At a simple level, the null hypothesis is the counter-proposal to the original hypothesis.

For example, if the hypothesis predicts that there is a relationship between two variables (for example, sleep and academic performance), the null hypothesis would predict that there is no relationship between those variables.

At a more technical level, the null hypothesis proposes that there is no real effect or relationship in the population being studied, and that any differences observed in the data are due to chance alone.
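For the sleep example used earlier, the two hypotheses could be written in symbols as follows. The notation is an assumption introduced here for illustration: $\mu_{\geq 8}$ and $\mu_{< 8}$ stand for the average standardised test grade of students who sleep at least 8 hours per night and of students who sleep less, respectively.

```latex
H_0:\ \mu_{\geq 8} = \mu_{< 8}
\qquad \text{versus} \qquad
H_1:\ \mu_{\geq 8} > \mu_{< 8}
```

Here $H_0$ is the null hypothesis (no difference between the groups) and $H_1$ is the research hypothesis from the example (the well-rested group scores higher on average).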

And there you have it – hypotheses in a nutshell.

If you have any questions, be sure to leave a comment below and we’ll do our best to help you. If you need hands-on help developing and testing your hypotheses, consider our private coaching service, where we hold your hand through the research journey.

17 Comments

Very useful information. I benefit more from getting more information in this regard.

Very great insight,educative and informative. Please give meet deep critics on many research data of public international Law like human rights, environment, natural resources, law of the sea etc

In a book I read a distinction is made between null, research, and alternative hypothesis. As far as I understand, alternative and research hypotheses are the same. Can you please elaborate? Best Afshin

This is a self explanatory, easy going site. I will recommend this to my friends and colleagues.

Very good definition. How can I cite your definition in my thesis? Thank you. Is a null hypothesis compulsory in research?

It’s a counter-proposal to be proven as a rejection

Please what is the difference between alternate hypothesis and research hypothesis?

It is a very good explanation. However, it limits hypotheses to statistically testable ideas. What about qualitative research, or other research that involves quantitative data but doesn’t need statistical tests?

In qualitative research, one typically uses propositions, not hypotheses.

could you please elaborate it more

I’ve benefited greatly from these notes, thank you.

This is very helpful

well articulated ideas are presented here, thank you for being reliable sources of information

Excellent. Thanks for being clear and sound about the research methodology and hypothesis (quantitative research)

I have only a simple question regarding the null hypothesis. – Is the null hypothesis (H0) known as the reverse of the alternative hypothesis (H1)? – How to test it in academic research?

this is very important note help me much more

Hi” best wishes to you and your very nice blog”

Chapter 1. Introduction

“Science is in danger, and for that reason it is becoming dangerous” -Pierre Bourdieu, Science of Science and Reflexivity

Why an Open Access Textbook on Qualitative Research Methods?

I have been teaching qualitative research methods to both undergraduates and graduate students for many years. Although there are some excellent textbooks out there, they are often costly, and none of them, to my mind, properly introduces qualitative research methods to the beginning student (whether undergraduate or graduate student). In contrast, this open-access textbook is designed as a (free) true introduction to the subject, with helpful, practical pointers on how to conduct research and how to access more advanced instruction.

Textbooks are typically arranged in one of two ways: (1) by technique (each chapter covers one method used in qualitative research); or (2) by process (chapters advance from research design through publication). But both of these approaches are necessary for the beginner student. This textbook will have sections dedicated to the process as well as the techniques of qualitative research. This is a true “comprehensive” book for the beginning student. In addition to covering techniques of data collection and data analysis, it provides a road map of how to get started and how to keep going and where to go for advanced instruction. It covers aspects of research design and research communication as well as methods employed. Along the way, it includes examples from many different disciplines in the social sciences.

The primary goal has been to create a useful, accessible, engaging textbook for use across many disciplines. And, let’s face it. Textbooks can be boring. I hope readers find this to be a little different. I have tried to write in a practical and forthright manner, with many lively examples and references to good and intellectually creative qualitative research. Woven throughout the text are short textual asides (in colored textboxes) by professional (academic) qualitative researchers in various disciplines. These short accounts by practitioners should help inspire students. So, let’s begin!

What is Research?

When we use the word research, what exactly do we mean by that? This is one of those words that everyone thinks they understand, but it is worth beginning this textbook with a short explanation. We use the term to refer to “empirical research,” which is actually a historically specific approach to understanding the world around us. Think about how you know things about the world. [1] You might know your mother loves you because she’s told you she does. Or because that is what “mothers” do by tradition. Or you might know because you’ve looked for evidence that she does, like taking care of you when you are sick or reading to you in bed or working two jobs so you can have the things you need to do OK in life. Maybe it seems churlish to look for evidence; you just take it “on faith” that you are loved.

Only one of the above comes close to what we mean by research. Empirical research is research (investigation) based on evidence. Conclusions can then be drawn from observable data. This observable data can also be “tested” or checked. If the data cannot be tested, that is a good indication that we are not doing research. Note that we can never “prove” conclusively, through observable data, that our mothers love us. We might have some “disconfirming evidence” (that time she didn’t show up to your graduation, for example) that could push you to question an original hypothesis, but no amount of “confirming evidence” will ever allow us to say with 100% certainty, “my mother loves me.” Faith and tradition and authority work differently. Our knowledge can be 100% certain using each of those alternative methods of knowledge, but our certainty in those cases will not be based on facts or evidence.

For many periods of history, those in power have been nervous about “science” because it uses evidence and facts as the primary source of understanding the world, and facts can be at odds with what power or authority or tradition want you to believe. That is why I say that scientific empirical research is a historically specific approach to understanding the world. You are in college or university now partly to learn how to engage in this historically specific approach.

In the sixteenth and seventeenth centuries in Europe, there was a newfound respect for empirical research, some of which was seriously challenging to the established church. Using observations and testing them, scientists found that the earth was not at the center of the universe, for example, but rather that it was but one planet of many which circled the sun. [2] For the next two centuries, the science of astronomy, physics, biology, and chemistry emerged and became disciplines taught in universities. All used the scientific method of observation and testing to advance knowledge. Knowledge about people , however, and social institutions, however, was still left to faith, tradition, and authority. Historians and philosophers and poets wrote about the human condition, but none of them used research to do so. [3]

It was not until the nineteenth century that “social science” really emerged, using the scientific method (empirical observation) to understand people and social institutions. New fields of sociology, economics, political science, and anthropology emerged. The first sociologists, people like Auguste Comte and Karl Marx, sought specifically to apply the scientific method of research to understand society. Engels famously claimed that Marx had done for the social world what Darwin did for the natural world, tracing its laws of development. Today we tend to take for granted the naturalness of science here, but it is actually a pretty recent and radical development.

To return to the question, “does your mother love you?” Well, this is actually not really how a researcher would frame the question, as it is too specific to your case. It doesn’t tell us much about the world at large, even if it does tell us something about you and your relationship with your mother. A social science researcher might ask, “do mothers love their children?” Or maybe they would be more interested in how this loving relationship might change over time (e.g., “do mothers love their children more now than they did in the 18th century when so many children died before reaching adulthood?”) or perhaps they might be interested in measuring quality of love across cultures or time periods, or even establishing “what love looks like” using the mother/child relationship as a site of exploration. All of these make good research questions because we can use observable data to answer them.

What is Qualitative Research?

“All we know is how to learn. How to study, how to listen, how to talk, how to tell. If we don’t tell the world, we don’t know the world. We’re lost in it, we die.” -Ursula LeGuin, The Telling

At its simplest, qualitative research is research about the social world that does not use numbers in its analyses. All those who fear statistics can breathe a sigh of relief – there are no mathematical formulae or regression models in this book! But this definition is less about what qualitative research can be and more about what it is not. To be honest, any simple statement will fail to capture the power and depth of qualitative research. One way of contrasting qualitative research to quantitative research is to note that the focus of qualitative research is less about explaining and predicting relationships between variables and more about understanding the social world. To use our mother love example, the question about “what love looks like” is a good question for the qualitative researcher while all questions measuring love or comparing incidences of love (both of which require measurement) are good questions for quantitative researchers. Patton writes,

Qualitative data describe. They take us, as readers, into the time and place of the observation so that we know what it was like to have been there. They capture and communicate someone else’s experience of the world in his or her own words. Qualitative data tell a story. ( Patton 2002:47 )

Qualitative researchers are asking different questions about the world than their quantitative colleagues. Even when researchers are employed in “mixed methods” research (both quantitative and qualitative), they are using different methods to address different questions of the study. I do a lot of research about first-generation and working-class college students. A quantitative researcher might ask, how many first-generation college students graduate from college within four years? Or does first-generation college status predict high student debt loads? A qualitative researcher, by contrast, might ask, how does the college experience differ for first-generation college students? What is it like to carry a lot of debt, and how does this impact the ability to complete college on time? Both sets of questions are important, but they can only be answered using specific tools tailored to those questions. For the former, you need large numbers to make adequate comparisons. For the latter, you need to talk to people, find out what they are thinking and feeling, and try to inhabit their shoes for a little while so you can make sense of their experiences and beliefs.

Examples of Qualitative Research

You have probably seen examples of qualitative research before, but you might not have paid particular attention to how they were produced or realized that the accounts you were reading were the result of hours, months, even years of research “in the field.” A good qualitative researcher will present the product of their hours of work in such a way that it seems natural, even obvious, to the reader. Because we are trying to convey what something is like, qualitative research is often presented as stories: stories about how people live their lives, go to work, raise their children, interact with one another. In some ways, this can seem like reading particularly insightful novels. But, unlike novels, there are very specific rules and guidelines that qualitative researchers follow to ensure that the “story” they are telling is accurate, a truthful rendition of what life is like for the people being studied. Most of this textbook will be spent conveying those rules and guidelines. Let’s take a look, first, however, at three examples of what the end product looks like. I have chosen these three examples to showcase very different approaches to qualitative research, and I will return to these examples throughout the book. They were all published as whole books (not chapters or articles), and they are worth the long read, if you have the time. I will also provide some information on how these books came to be and the length of time it takes to get them into book form. It is important you know about this process, and the rest of this textbook will help explain why it takes so long to conduct good qualitative research!

Example 1: The End Game (Ethnography + Interviews)

Corey Abramson is a sociologist who teaches at the University of Arizona. In 2015 he published The End Game: How Inequality Shapes our Final Years ( 2015 ). This book was based on the research he did for his dissertation at the University of California-Berkeley. The dissertation was completed in 2012, but the work that produced it took several years. The dissertation was entitled, “This is How We Live, This is How We Die: Social Stratification, Aging, and Health in Urban America” ( 2012 ). You can see how the book version, which was written for a more general audience, has a more engaging sound to it, but that the dissertation version, which is what academic faculty read and evaluate, has a more descriptive title. You can read the title and know that this is a study about aging and health and that the focus is going to be inequality and that the context (place) is going to be “urban America.” It’s a study about “how” people do something – in this case, how they deal with aging and death. This is the very first sentence of the dissertation, “From our first breath in the hospital to the day we die, we live in a society characterized by unequal opportunities for maintaining health and taking care of ourselves when ill. These disparities reflect persistent racial, socio-economic, and gender-based inequalities and contribute to their persistence over time” ( 1 ). What follows is a truthful account of how that is so.

Corey Abramson spent three years conducting his research in four different urban neighborhoods. We call the type of research he conducted “comparative ethnographic” because he designed his study to compare groups of seniors as they went about their everyday business. It’s comparative because he is comparing different groups (based on race, class, gender) and ethnographic because he is studying the culture/way of life of a group. [4] He had an educated guess, rooted in what previous research had shown and what social theory would suggest, that people’s experiences of aging differ by race, class, and gender. So, he set up a research design that would allow him to observe differences. He chose two primarily middle-class neighborhoods (one was racially diverse and the other was predominantly White) and two primarily poor neighborhoods (one was racially diverse and the other was predominantly African American). He hung out in senior centers and other places seniors congregated, watched them as they took the bus to get prescriptions filled, sat in doctors’ offices with them, and listened to their conversations with each other. He also conducted more formal conversations, what we call in-depth interviews, with sixty seniors from each of the four neighborhoods. As with a lot of fieldwork, as he got closer to the people involved, he both expanded and deepened his reach:

By the end of the project, I expanded my pool of general observations to include various settings frequented by seniors: apartment building common rooms, doctors’ offices, emergency rooms, pharmacies, senior centers, bars, parks, corner stores, shopping centers, pool halls, hair salons, coffee shops, and discount stores. Over the course of the three years of fieldwork, I observed hundreds of elders, and developed close relationships with a number of them. ( 2012:10 )

When Abramson rewrote the dissertation for a general audience and published his book in 2015, it got a lot of attention. It is a beautifully written book and it provided insight into a common human experience that we surprisingly know very little about. It won the Outstanding Publication Award from the American Sociological Association Section on Aging and the Life Course and was featured in the New York Times. The book was about aging, and specifically how inequality shapes the aging process, but it was also about much more than that. It helped show how inequality affects people’s everyday lives. For example, by observing the difficulties the poor had in setting up appointments, getting to them using public transportation, being made to wait to see a doctor (sometimes in standing-room-only situations) when they were unwell, and then being treated dismissively by hospital staff, Abramson allowed readers to feel the material reality of being poor in the US. Comparing these experiences with those of seniors with adequate supplemental insurance, who have the resources to hire car services or have others assist them in arranging care when they need it, jolts the reader into understanding and appreciating the difference money makes in the lives and circumstances of us all, and in a way that simply reading a statistic (“80% of the poor do not keep regular doctor’s appointments”) does not. Qualitative research can reach into spaces and places that often go unexamined and then report back to the rest of us what it is like in those spaces and places.

Example 2: Racing for Innocence (Interviews + Content Analysis + Fictional Stories)

Jennifer Pierce is a Professor of American Studies at the University of Minnesota. Trained as a sociologist, she has written a number of books about gender, race, and power. Her very first book, Gender Trials: Emotional Lives in Contemporary Law Firms, published in 1995, is a brilliant look at gender dynamics within two law firms. Pierce was a participant observer, working as a paralegal, and she observed how female lawyers and female paralegals struggled to obtain parity with their male colleagues.

Fifteen years later, she reexamined the context of the law firm to include an examination of racial dynamics, particularly how elite white men working in these spaces created and maintained a culture that made it difficult for both female attorneys and attorneys of color to thrive. Her book, Racing for Innocence: Whiteness, Gender, and the Backlash Against Affirmative Action, published in 2012, is an interesting and creative blending of interviews with attorneys, content analyses of popular films during this period, and fictional accounts of racial discrimination and sexual harassment. The law firm she chose to study had come under an affirmative action order and was in the process of implementing equitable policies and programs. She wanted to understand how recipients of white privilege (the elite white male attorneys) come to deny the role they play in reproducing inequality. Through interviews with attorneys who were present both before and during the affirmative action order, she created a historical record of the “bad behavior” that necessitated new policies and procedures and, more importantly, probed the participants’ understanding of this behavior. It should come as no surprise that most (but not all) of the white male attorneys saw little need for change, and that almost everyone else had accounts that were different if not sometimes downright harrowing.