What’s a Hypothesis Space?

Last updated: March 18, 2024


1. Introduction

Machine-learning algorithms come with implicit or explicit assumptions about the actual patterns in the data. Mathematically, this means that each algorithm can learn a specific family of models, and that family goes by the name of the hypothesis space.

In this tutorial, we’ll talk about hypothesis spaces and how to choose the right one for the data at hand.

2. Hypothesis Spaces

Let’s say that we have a binary classification task and that the data are two-dimensional. Our goal is to find a model that classifies objects as positive or negative. Applying Logistic Regression, we can get models of the form:

$$h(x_1, x_2) = \frac{1}{1 + e^{-(\theta_0 + \theta_1 x_1 + \theta_2 x_2)}} \qquad (1)$$

which estimate the probability that the object at hand is positive.

2.1. Hypotheses and Assumptions

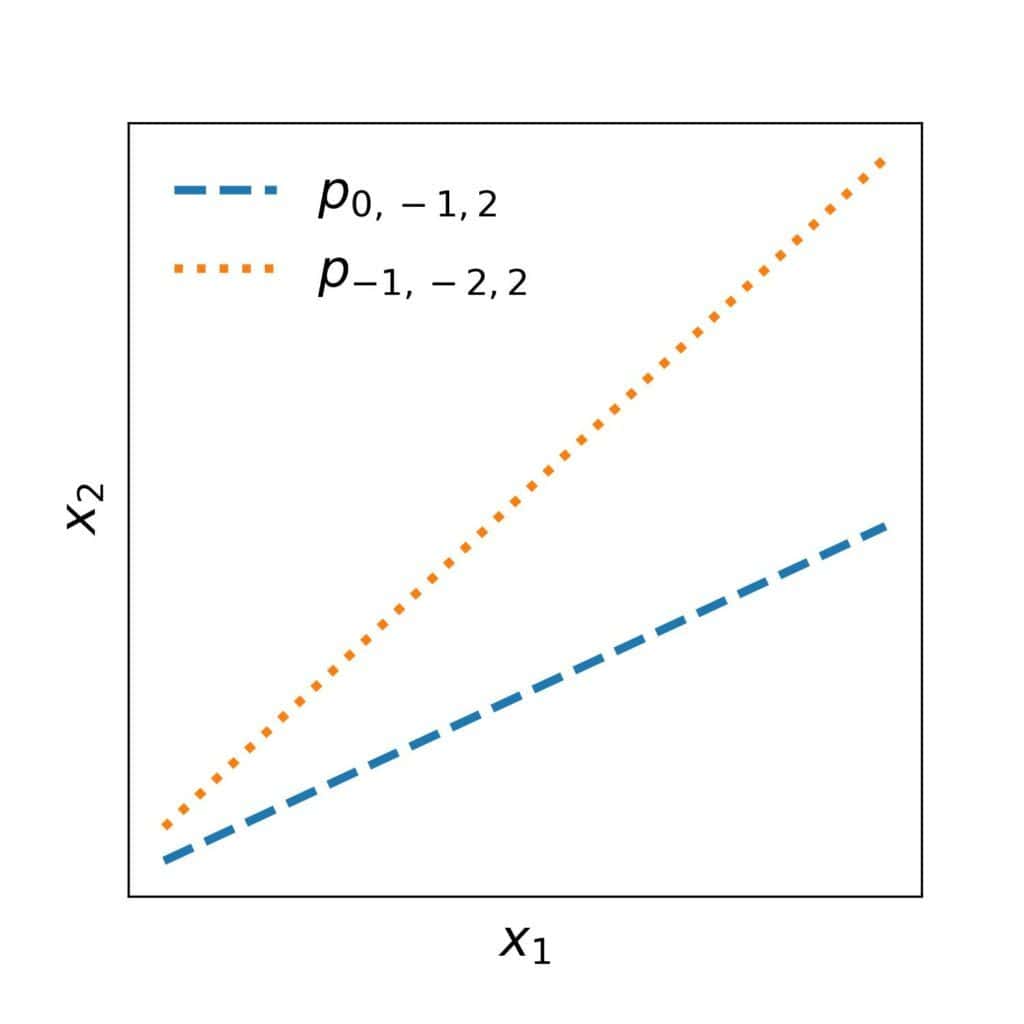

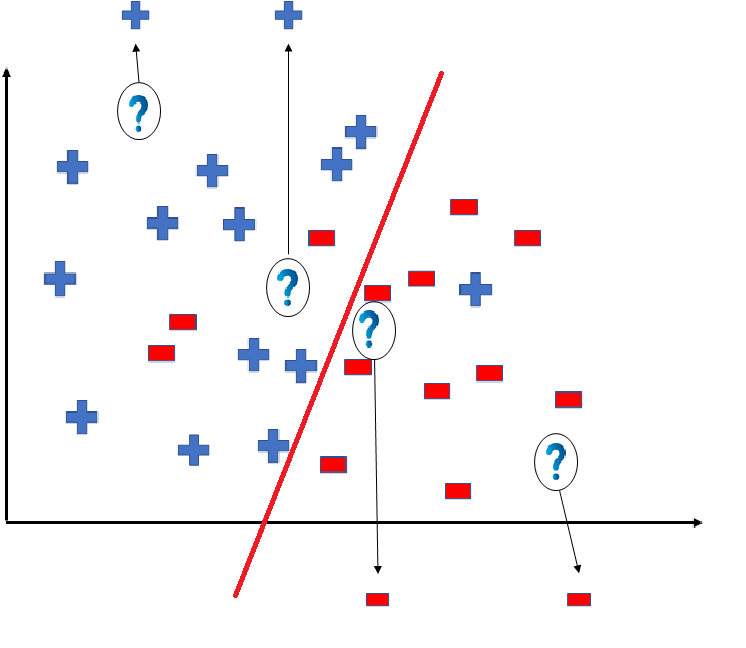

The underlying assumption of hypotheses (1) is that the boundary separating the positive from negative objects is a straight line. So, every hypothesis from this space corresponds to a straight line in a 2D plane. For instance:

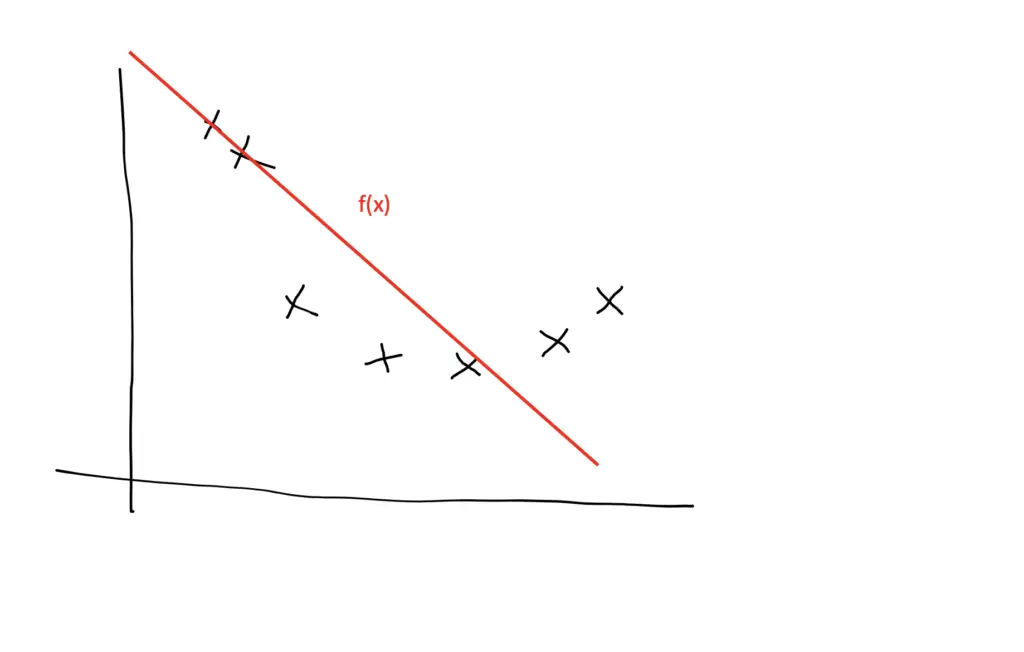
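As a minimal sketch of what one hypothesis from this space looks like in code, the snippet below builds a logistic model from three parameters and checks on which side of its straight-line boundary a few points fall. The parameter names `theta0`, `theta1`, `theta2` and their values are illustrative assumptions, not taken from the tutorial.

```python
import math

def make_logistic_hypothesis(theta0, theta1, theta2):
    """Return one hypothesis h(x1, x2) from the 2D logistic family."""
    def h(x1, x2):
        z = theta0 + theta1 * x1 + theta2 * x2
        return 1.0 / (1.0 + math.exp(-z))
    return h

# Hypothetical parameters: the boundary is the line -1 + 2*x1 + x2 = 0.
h = make_logistic_hypothesis(-1.0, 2.0, 1.0)

p_on_line = h(0.0, 1.0)   # point on the boundary: probability is 0.5
p_positive = h(1.0, 1.0)  # z = 2 > 0: probability above 0.5
p_negative = h(0.0, 0.0)  # z = -1 < 0: probability below 0.5
```

Each choice of the three parameters picks a different line, and the set of all such choices is the hypothesis space.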

2.2. Regression

3. Expressivity of a Hypothesis Space

We could informally say that one hypothesis space is more expressive than another if its hypotheses are more diverse and complex.

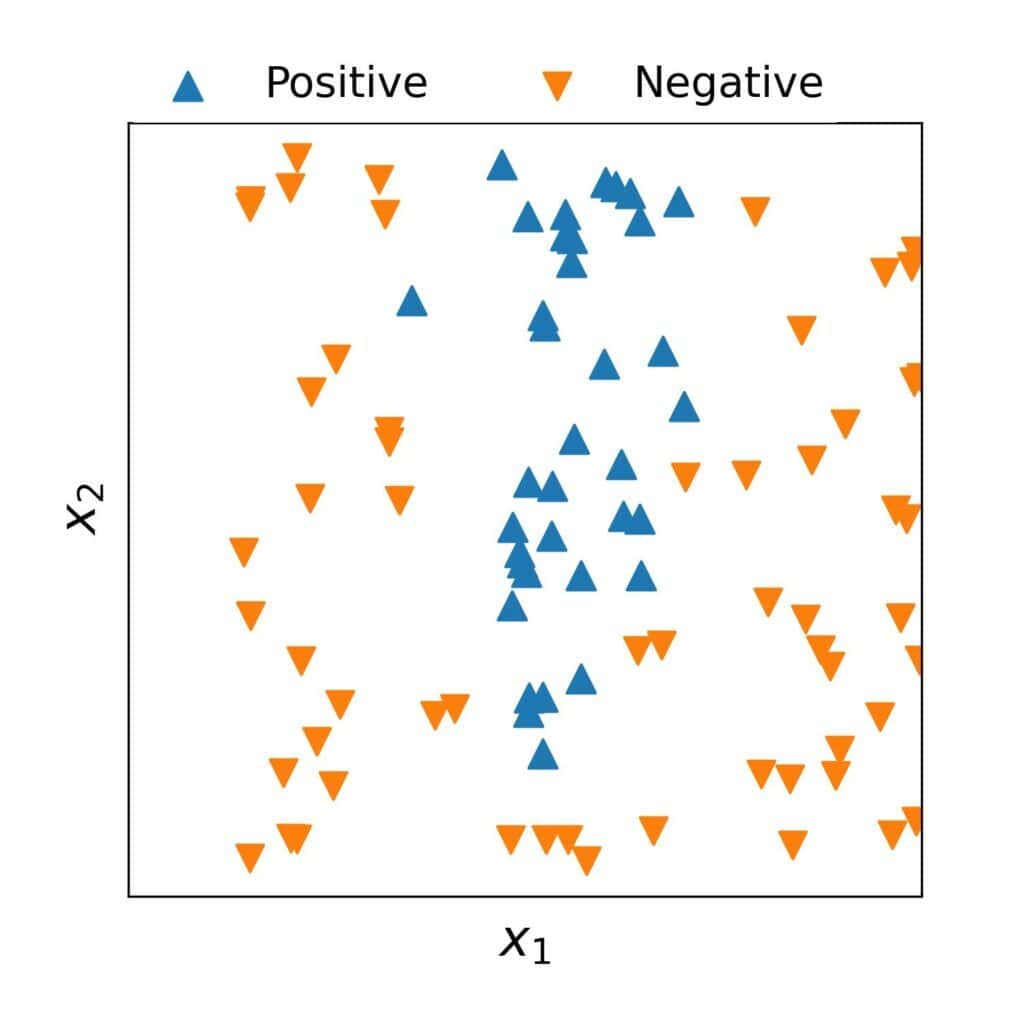

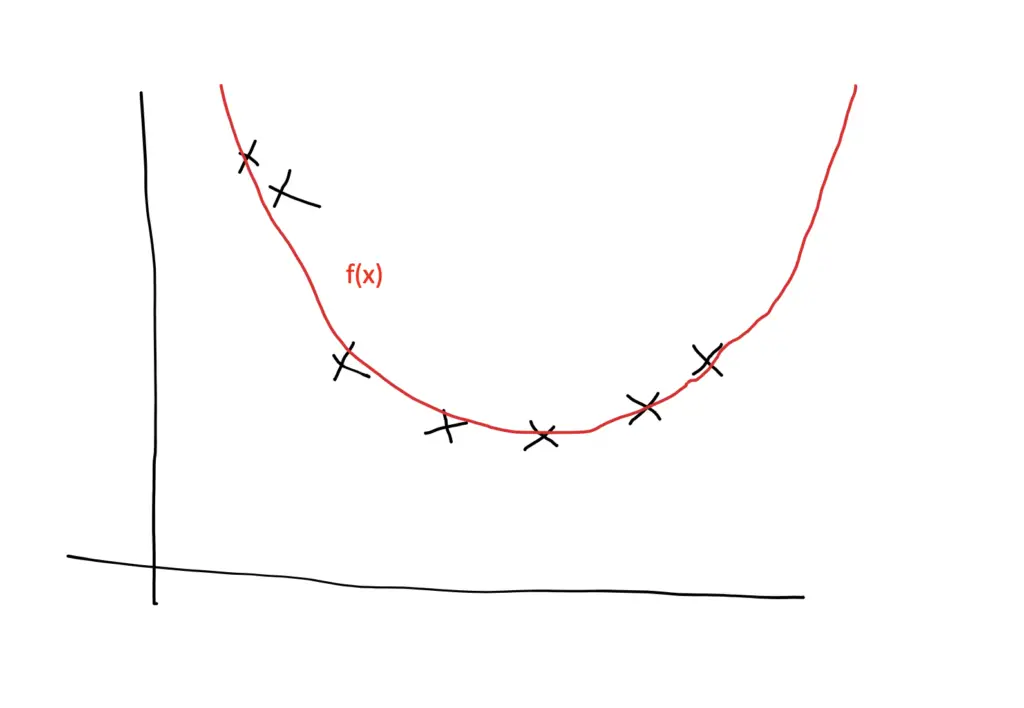

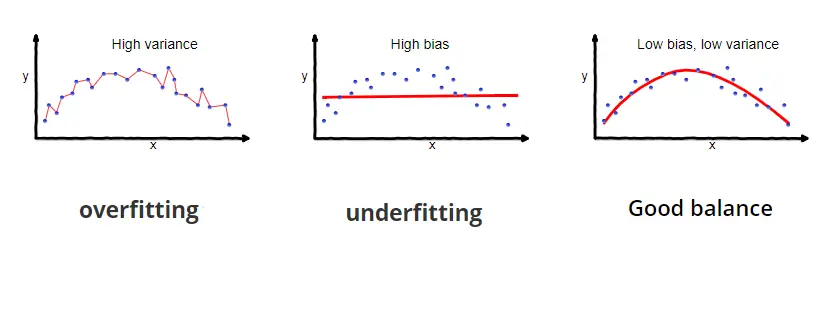

We may underfit the data if our algorithm’s hypothesis space isn’t expressive enough. For instance, linear hypotheses aren’t particularly good options if the actual data are extremely non-linear:

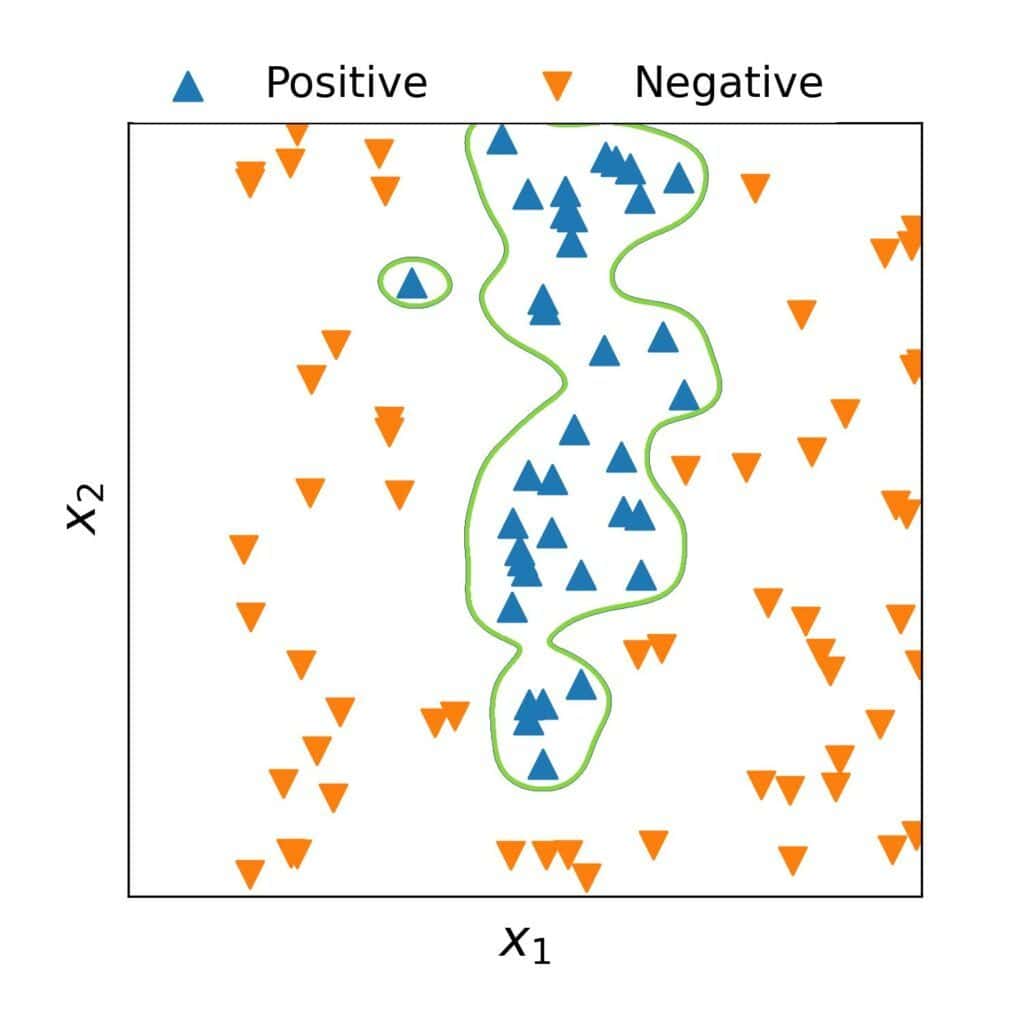

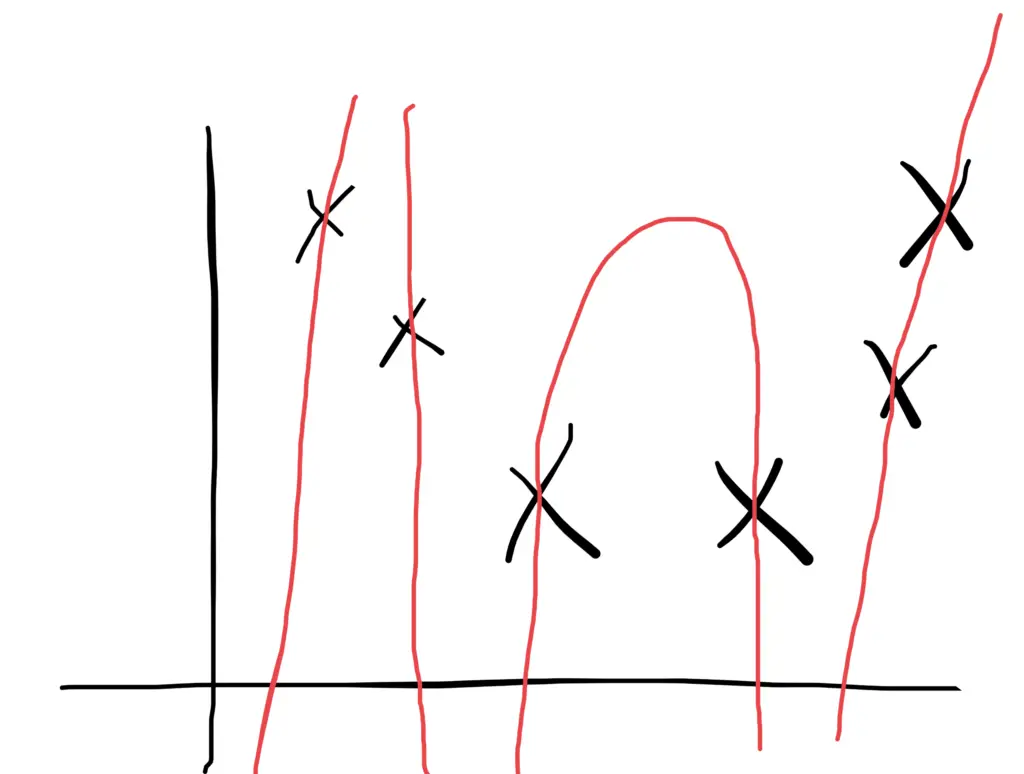

So, training an algorithm that has a very expressive space increases the chance of completely capturing the patterns in the data. However, it also increases the risk of overfitting. For instance, a space containing the hypotheses of the form:

would start modelling the noise, which we see from its decision boundary:

Such models would generalize poorly to unseen data.

3.1. Expressivity vs. Interpretability

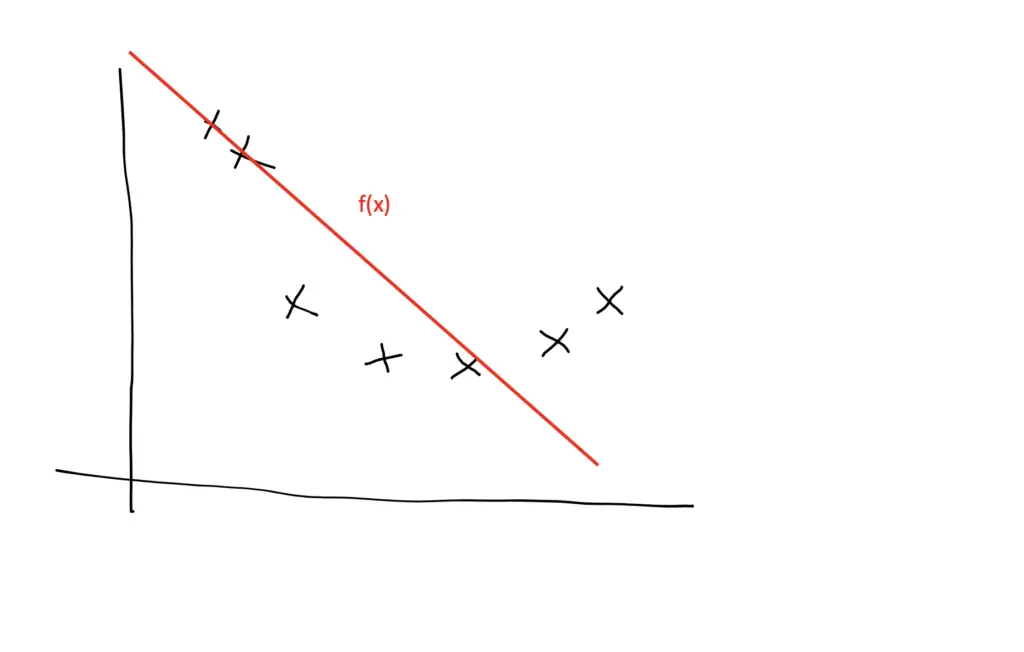

Additionally, even if a complex hypothesis generalizes well, it may be unusable in practice because it’s too complicated to understand or compute. What’s more, intricate hypotheses offer limited insight into the real-world process that generated the data. For example, a quadratic model:

4. How to Choose the Hypothesis Space?

We need to find the right balance between expressivity and simplicity. Unfortunately, that’s easier said than done. Most of the time, we need to rely on our intuition about the data.

So, we should start by exploring the dataset, using visualizations as much as possible. For instance, we can conclude that a straight line isn’t likely to be an adequate boundary for the above classification data. However, a high-order curve would probably be too complex even though it might split the dataset into two classes without an error.

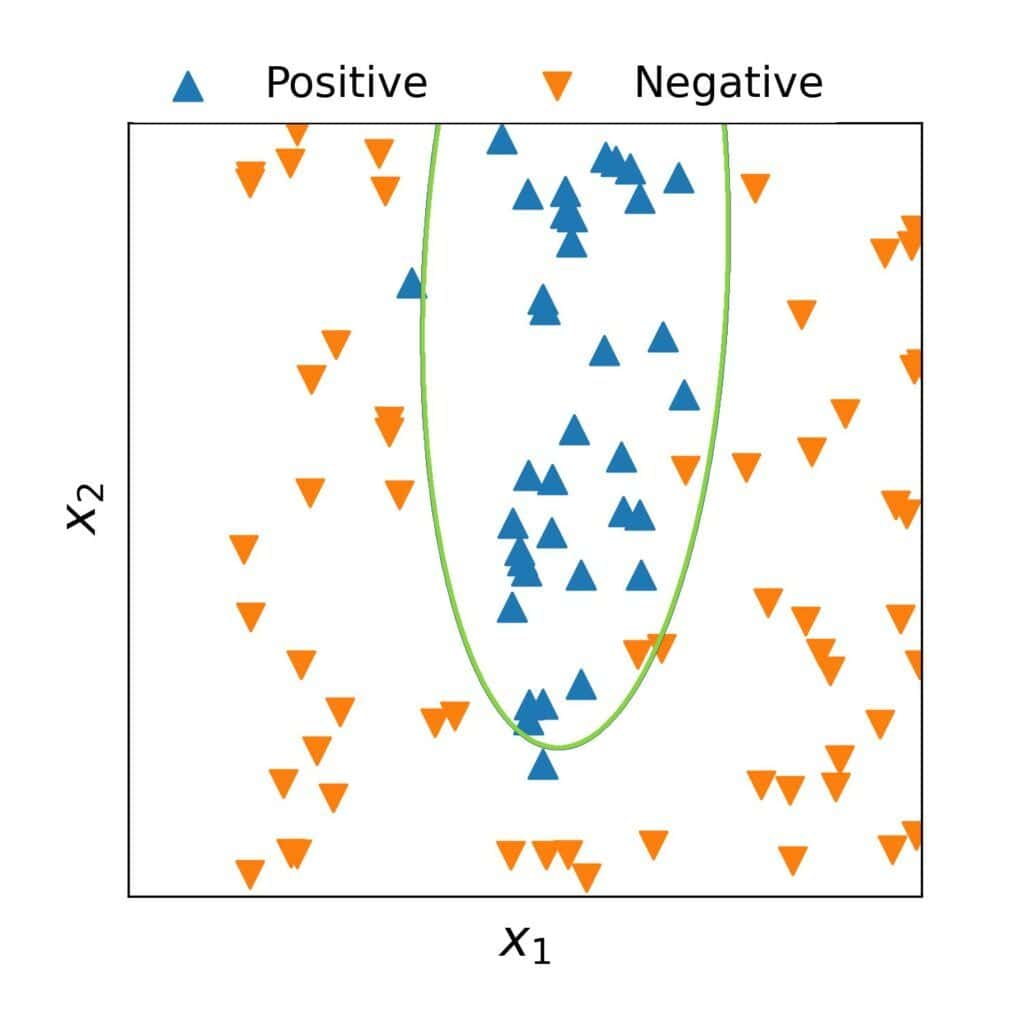

A second-degree curve might be the compromise we seek, but we aren’t sure. So, we start with the space of quadratic hypotheses:

We get a model whose decision boundary appears to be a good fit even though it misclassifies some objects:

Since we’re satisfied with the model, we can stop here. If that hadn’t been the case, we could have tried a space of cubic models. The idea would be to iteratively try incrementally complex families until finding a model that both performs well and is easy to understand.
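The iterative search described above can be sketched in a few lines of Python. The sketch below is a stand-in, not the tutorial's classifier: it uses one-dimensional polynomial regression, hypothetical noise-free data, and an arbitrary error tolerance, and it tries degrees 1, 2 and 3 in turn until the validation error is small enough.

```python
def fit_polynomial(xs, ys, degree):
    """Least-squares fit of a polynomial of the given degree (normal equations)."""
    n = degree + 1
    # Normal equations (A^T A) c = A^T y for the matrix A[i][j] = xs[i] ** j.
    ata = [[sum(x ** (i + j) for x in xs) for j in range(n)] for i in range(n)]
    aty = [sum(y * x ** i for x, y in zip(xs, ys)) for i in range(n)]
    # Gaussian elimination with partial pivoting.
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(ata[r][col]))
        ata[col], ata[pivot] = ata[pivot], ata[col]
        aty[col], aty[pivot] = aty[pivot], aty[col]
        for row in range(col + 1, n):
            factor = ata[row][col] / ata[col][col]
            for k in range(col, n):
                ata[row][k] -= factor * ata[col][k]
            aty[row] -= factor * aty[col]
    # Back substitution.
    coeffs = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(ata[i][k] * coeffs[k] for k in range(i + 1, n))
        coeffs[i] = (aty[i] - tail) / ata[i][i]
    return coeffs

def predict(coeffs, x):
    return sum(c * x ** j for j, c in enumerate(coeffs))

def mse(coeffs, xs, ys):
    return sum((predict(coeffs, x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

# Hypothetical noise-free data generated by a quadratic target.
def target(x):
    return 1.0 + 2.0 * x - 3.0 * x ** 2

train_x = [-2.0, -1.5, -1.0, -0.5, 0.0, 0.5, 1.0, 1.5, 2.0]
valid_x = [-1.75, -0.75, 0.25, 1.25]
train_y = [target(x) for x in train_x]
valid_y = [target(x) for x in valid_x]

selected_degree = None
for degree in (1, 2, 3):  # increasingly expressive hypothesis spaces
    coeffs = fit_polynomial(train_x, train_y, degree)
    if mse(coeffs, valid_x, valid_y) < 1e-6:  # arbitrary tolerance
        selected_degree = degree
        break
```

With these data the linear space is rejected and the search stops at the quadratic space, so the cubic space is never tried. On real, noisy data the stopping rule would have to be a judgment about acceptable validation error rather than a fixed tolerance.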

5. Conclusion

In this article, we talked about hypothesis spaces in machine learning. An algorithm’s hypothesis space contains all the models it can learn from any dataset.

Algorithms with overly expressive spaces can generalize poorly to unseen data and produce models that are too complex to understand, whereas those with overly simple hypotheses may underfit the data. So, when applying machine-learning algorithms in practice, we need to find the right balance between expressivity and simplicity.


Hypothesis in Machine Learning

The concept of a hypothesis is fundamental in Machine Learning and data science endeavours. In the realm of machine learning, a hypothesis serves as an initial assumption made by data scientists and ML professionals when attempting to address a problem. Machine learning involves conducting experiments based on past experiences, and these hypotheses are crucial in formulating potential solutions.

It’s important to note that in machine learning discussions, the terms “hypothesis” and “model” are sometimes used interchangeably. However, a hypothesis represents an assumption, while a model is a mathematical representation employed to test that hypothesis. This section on “Hypothesis in Machine Learning” explores key aspects related to hypotheses in machine learning and their significance.


How does a Hypothesis work?


A hypothesis in machine learning is the model’s presumption regarding the connection between the input features and the result. It is an illustration of the mapping function that the algorithm is attempting to discover using the training set. To minimize the discrepancy between the expected and actual outputs, the learning process involves modifying the weights that parameterize the hypothesis. The objective is to optimize the model’s parameters to achieve the best predictive performance on new, unseen data, and a cost function is used to assess the hypothesis’ accuracy.

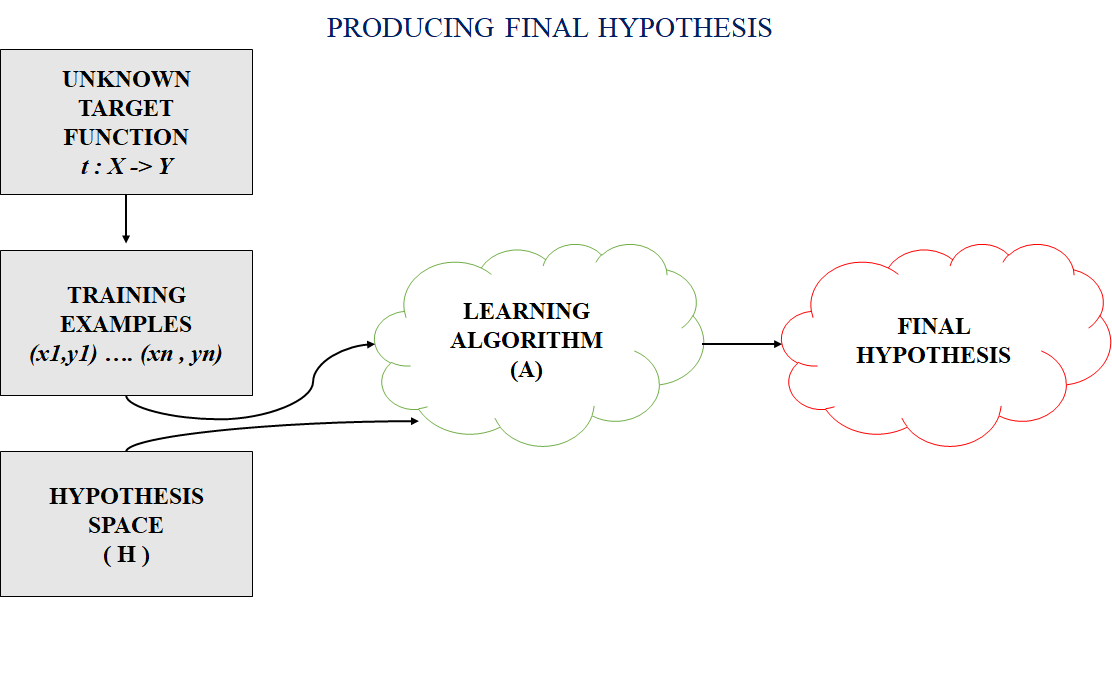

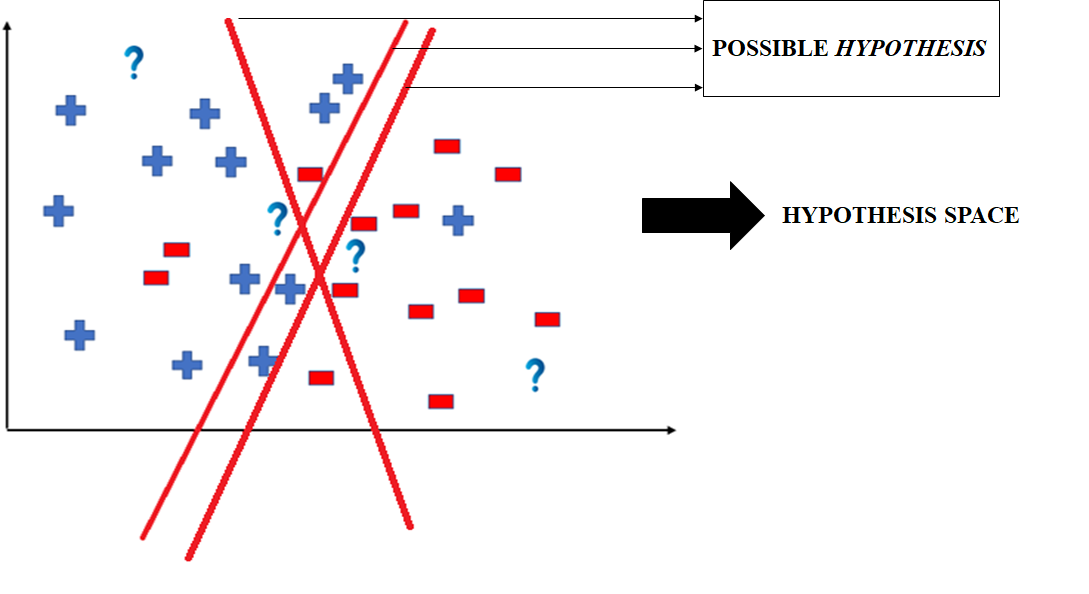

In most supervised machine learning algorithms, our main goal is to find a possible hypothesis from the hypothesis space that could map out the inputs to the proper outputs. The following figure shows the common method to find out the possible hypothesis from the Hypothesis space:

Hypothesis Space (H)

The hypothesis space is the set of all possible legal hypotheses. From this set, the machine learning algorithm selects the single hypothesis that best describes the target function or the outputs.

Hypothesis (h)

A hypothesis is a function that best describes the target in supervised machine learning. The hypothesis that an algorithm comes up with depends on the data and on the restrictions and bias that we have imposed on the data.

For simple linear regression, for example, the hypothesis can be written as:

[Tex]y = mx + b [/Tex]

- m = slope of the line

- b = intercept
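To make the relation between a single hypothesis and the hypothesis space concrete, here is a small Python sketch. It assumes a tiny, finite grid of candidate `(m, b)` pairs and hypothetical training points. Real linear regression searches a continuous space, so the grid is only an illustration.

```python
from itertools import product

# Hypothetical training points lying on the line y = 2x + 1.
data = [(0, 1), (1, 3), (2, 5), (3, 7)]

def mse(m, b):
    """Mean squared error of the hypothesis y = m*x + b on the data."""
    return sum((m * x + b - y) ** 2 for x, y in data) / len(data)

# A small, finite hypothesis space: every (m, b) pair on a coarse grid.
hypothesis_space = list(product([0, 1, 2, 3], [0, 1, 2]))

# "Learning" here means picking the hypothesis with the lowest error.
best_m, best_b = min(hypothesis_space, key=lambda mb: mse(*mb))
```

Each `(m, b)` pair is one hypothesis, and the list of all twelve pairs plays the role of the hypothesis space.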

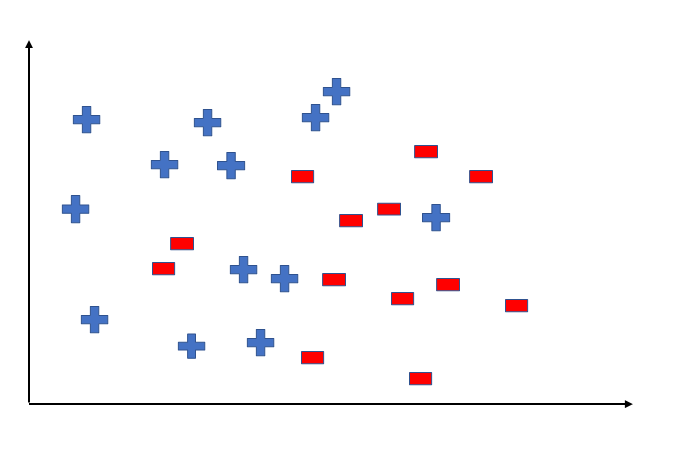

To better understand the Hypothesis Space and Hypothesis, consider the following coordinate plane that shows the distribution of some data:

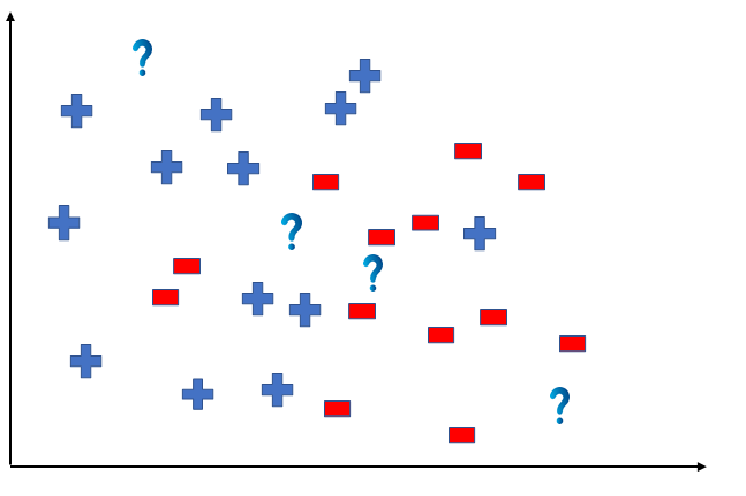

Suppose we have test data for which we have to determine the outputs or results. The test data is as shown below:

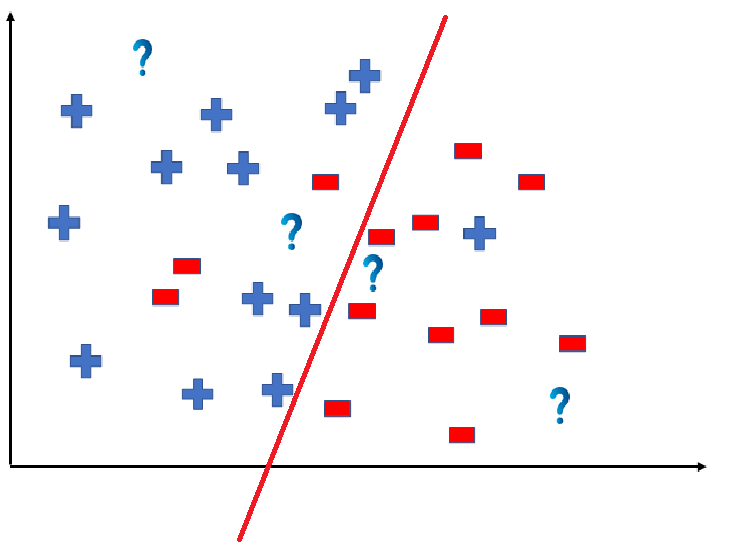

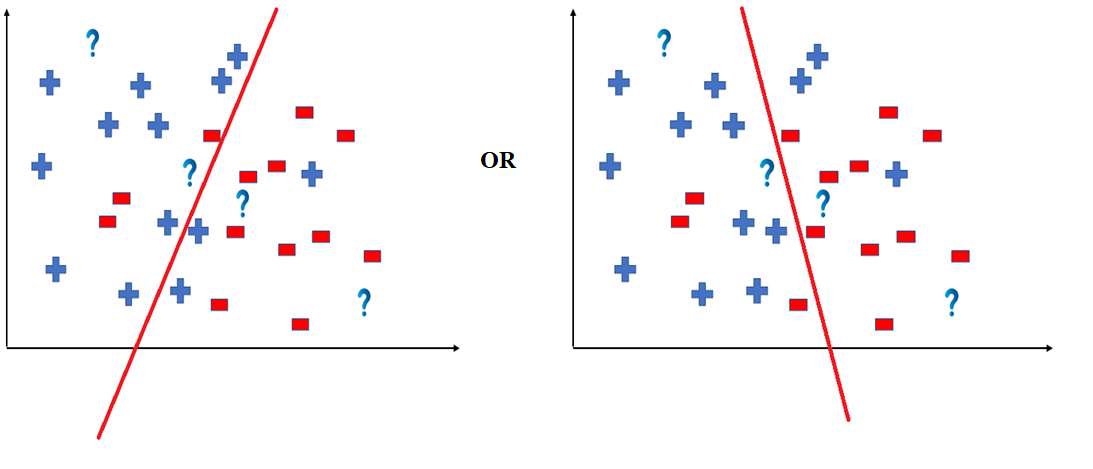

We can predict the outcomes by dividing the coordinate plane as shown below:

So the test data would yield the following result:

But note here that we could have divided the coordinate plane as:

The way in which the coordinate plane is divided depends on the data, algorithm and constraints.

- All the legal ways in which we can divide the coordinate plane to predict the outcome of the test data make up the Hypothesis Space.

- Each individual possible way is known as the hypothesis.
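The idea of dividing the plane can be sketched in Python as follows. The training points, the restriction to axis-aligned cuts, and the candidate thresholds are all illustrative assumptions. The sketch lists which candidate hypotheses agree with every training label.

```python
# Hypothetical labelled training points: (x, y, label).
train = [(1.0, 1.0, 0), (2.0, 3.0, 0), (4.0, 1.5, 1), (5.0, 4.0, 1)]

def make_split(axis, threshold):
    """Hypothesis: predict 1 when the chosen coordinate exceeds the threshold."""
    return lambda x, y: int((x if axis == "x" else y) > threshold)

# Candidate hypotheses: axis-aligned cuts at a few thresholds.
candidates = [(axis, t) for axis in ("x", "y") for t in (0.5, 1.25, 2.5, 3.0, 4.5)]

# Keep only the hypotheses that classify every training point correctly.
consistent = [
    (axis, t) for axis, t in candidates
    if all(make_split(axis, t)(x, y) == label for x, y, label in train)
]
```

Here `candidates` stands in for the hypothesis space, and more than one hypothesis may fit the training data equally well, which is why the algorithm and its constraints matter.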

Hence, in this example the hypothesis space would look like this:

The hypothesis space comprises all possible legal hypotheses that a machine learning algorithm can consider. Hypotheses are formulated based on various algorithms and techniques, including linear regression, decision trees, and neural networks. These hypotheses capture the mapping function transforming input data into predictions.

Hypothesis Formulation and Representation in Machine Learning

Hypotheses in machine learning are formulated based on various algorithms and techniques, each with its representation. For example:

- Linear Regression : [Tex]h(X) = \theta_0 + \theta_1 X_1 + \theta_2 X_2 + \dots + \theta_n X_n[/Tex]

- Decision Trees : [Tex]h(X) = \text{Tree}(X)[/Tex]

- Neural Networks : [Tex]h(X) = \text{NN}(X)[/Tex]

In the case of complex models like neural networks, the hypothesis may involve multiple layers of interconnected nodes, each performing a specific computation.

Hypothesis Evaluation:

The process of machine learning involves not only formulating hypotheses but also evaluating their performance. This evaluation is typically done using a loss function or an evaluation metric that quantifies the disparity between predicted outputs and ground truth labels. Common evaluation metrics include mean squared error (MSE), accuracy, precision, recall, F1-score, and others. By comparing the predictions of the hypothesis with the actual outcomes on a validation or test dataset, one can assess the effectiveness of the model.
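As a rough illustration of these metrics, the following Python sketch computes accuracy, precision, recall, and F1-score for a binary classifier. The labels and predictions are made-up values, and the function name is hypothetical.

```python
def classification_metrics(y_true, y_pred):
    """Accuracy, precision, recall and F1 for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    accuracy = (tp + tn) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}

# Made-up ground truth and predictions from some hypothesis.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]
metrics = classification_metrics(y_true, y_pred)
```

In this example there are three true positives, one false positive, one false negative, and three true negatives, so all four metrics come out at 0.75.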

Hypothesis Testing and Generalization:

Once a hypothesis is formulated and evaluated, the next step is to test its generalization capabilities. Generalization refers to the ability of a model to make accurate predictions on unseen data. A hypothesis that performs well on the training dataset but fails to generalize to new instances is said to suffer from overfitting. Conversely, a hypothesis that generalizes well to unseen data is deemed robust and reliable.

The process of hypothesis formulation, evaluation, testing, and generalization is often iterative in nature. It involves refining the hypothesis based on insights gained from model performance, feature importance, and domain knowledge. Techniques such as hyperparameter tuning, feature engineering, and model selection play a crucial role in this iterative refinement process.

Hypothesis in Statistics

In statistics, a hypothesis refers to a statement or assumption about a population parameter. It is a proposition or educated guess that helps guide statistical analyses. There are two types of hypotheses: the null hypothesis (H0) and the alternative hypothesis (H1 or Ha).

- Null Hypothesis (H0): This hypothesis suggests that there is no significant difference or effect, and any observed results are due to chance. It often represents the status quo or a baseline assumption.

- Alternative Hypothesis (H1 or Ha): This hypothesis contradicts the null hypothesis, proposing that there is a significant difference or effect in the population. It is what researchers aim to support with evidence.

FAQs on Hypothesis in Machine Learning

Q. How does the training process use the hypothesis?

The learning algorithm uses the hypothesis as a guide to minimise the discrepancy between expected and actual outputs by adjusting its parameters during training.

Q. How is the hypothesis’s accuracy assessed?

Usually, a cost function that calculates the difference between expected and actual values is used to assess accuracy. The aim is to optimise the model so that this cost is as low as possible.

Q. What is Hypothesis testing?

Hypothesis testing is a statistical method for deciding whether the observed data provide enough evidence to reject a null hypothesis. The hypothesis can be about two variables in a dataset, about an association between two groups, or about a situation.

Q. What distinguishes the null hypothesis from the alternative hypothesis in machine learning experiments?

The null hypothesis (H0) assumes no significant effect, while the alternative hypothesis (H1 or Ha) contradicts H0, suggesting a meaningful impact. Statistical testing is employed to decide between these hypotheses.


What is the difference between hypothesis space and representational capacity?

I am reading the Deep Learning book by Goodfellow et al. I found it difficult to understand the difference between the definition of the hypothesis space and the representational capacity of a model.

In Chapter 5, it is written about hypothesis space:

One way to control the capacity of a learning algorithm is by choosing its hypothesis space, the set of functions that the learning algorithm is allowed to select as being the solution.

And about representational capacity:

The model specifies which family of functions the learning algorithm can choose from when varying the parameters in order to reduce a training objective. This is called the representational capacity of the model.

If we take the linear regression model as an example and allow our output $y$ to take polynomial inputs, I understand the hypothesis space as the set of quadratic functions of the input $x$, i.e. $y = a_0 + a_1x + a_2x^2$.

How is it different from the definition of the representational capacity, where the parameters are $a_0$, $a_1$ and $a_2$?


3 Answers

Consider a target function $f: x \mapsto f(x)$ .

A hypothesis refers to an approximation of $f$ . A hypothesis space refers to the set of possible approximations that an algorithm can create for $f$ . The hypothesis space consists of the set of functions the model is limited to learn. For instance, linear regression can be limited to linear functions as its hypothesis space, or it can be expanded to learn polynomials.
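A short Python sketch may help here. It treats a hypothesis as a function and a hypothesis space as the family of functions reachable by varying the coefficients. The helper name `make_polynomial` and the coefficient values are illustrative assumptions.

```python
def make_polynomial(*coeffs):
    """Hypothesis h(x) = a_0 + a_1*x + a_2*x^2 + ... for the given coefficients."""
    return lambda x: sum(a * x ** k for k, a in enumerate(coeffs))

linear = make_polynomial(1.0, 2.0)          # a member of the linear space
quadratic = make_polynomial(1.0, 2.0, 3.0)  # needs the larger, quadratic space

# Setting a_2 = 0 recovers the linear hypothesis, so the linear space
# is contained in the quadratic one.
same_as_linear = make_polynomial(1.0, 2.0, 0.0)
```

Expanding the model from linear to polynomial features therefore enlarges the set of functions the algorithm can select from.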

The representational capacity of a model determines its flexibility, that is, its ability to fit a variety of functions (i.e. which functions the model is able to learn). It specifies the family of functions the learning algorithm can choose from.

- Does it mean that the set of functions described by the representational capacity is strictly included in the hypothesis space? By definition, is it possible to have functions in the hypothesis space NOT described in the representational capacity? – Qwarzix, Aug 23, 2018 at 8:43

- It's still pretty confusing to me. Most sources say that a "model" is an instance (after execution/training on data) of a "learning algorithm". How, then, can a model specify the family of functions the learning algorithm can choose from? It doesn't make sense to me. The authors of the book should've explained these concepts in more depth. – Talendar, Oct 9, 2020 at 13:09

A hypothesis space is defined as the set of functions $\mathcal H$ that can be chosen by a learning algorithm to minimize loss (in general).

$$\mathcal H = \{h_1, h_2, \dots, h_n\}$$

The hypothesis class can be finite or infinite. For example, a discrete set of shapes used to encircle a certain portion of the input space is a finite hypothesis space, whereas hypothesis spaces of parametrized functions like neural nets and linear regressors are infinite.

Although the term representational capacity is not in vogue, a rough definition would be: the representational capacity of a model is the ability of its hypothesis space to approximate a complex function with zero error; such a function can only be approximated by the (infinitely many) hypothesis spaces whose representational capacity equals or exceeds the capacity required to approximate it.

The most popular measure of representational capacity is the VC dimension of a model. For a finite hypothesis space, the upper bound for the VC dimension ($d$) of a model is: $$d \leq \log_2 |\mathcal H|$$ where $|\mathcal H|$ is the cardinality of the hypothesis space.
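The bound follows from a counting argument, sketched here for a finite $\mathcal H$. If $\mathcal H$ shatters a set of $d$ points, it must realize all $2^d$ possible labelings of those points, and each labeling requires a distinct hypothesis, so:

$$2^d \leq |\mathcal H| \quad \Longrightarrow \quad d \leq \log_2 |\mathcal H|$$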

A hypothesis space/class is the set of functions that the learning algorithm considers when picking one function to minimize some risk/loss functional.

The capacity of a hypothesis space is a number or bound that quantifies the size (or richness) of the hypothesis space, i.e. the number (and type) of functions that can be represented by the hypothesis space. So a hypothesis space has a capacity. The two most famous measures of capacity are VC dimension and Rademacher complexity.

In other words, the hypothesis class is the object and the capacity is a property (that can be measured or quantified) of this object, but there is not a big difference between hypothesis class and its capacity, in the sense that a hypothesis class naturally defines a capacity, but two (different) hypothesis classes could have the same capacity.

Note that representational capacity (not capacity, which is common!) is not a standard term in computational learning theory, while hypothesis space/class is commonly used. For example, this famous book on machine learning and learning theory uses the term hypothesis class in many places, but it never uses the term representational capacity.

Your book's definition of representational capacity is bad, in my opinion, if representational capacity is supposed to be a synonym for capacity, given that that definition also coincides with the definition of hypothesis class, so your confusion is understandable.

- I agree with you. The authors of the book should've explained these concepts in more depth. Most sources say that a "model" is an instance (after execution/training on data) of a "learning algorithm". How, then, can a model specify the family of functions the learning algorithm can choose from? Also, as you pointed out, the definition of the terms "hypothesis space" and "representational capacity" given by the authors are practically the same, although they use the terms as if they represent different concepts. – Talendar, Oct 9, 2020 at 13:18


What is: Hypothesis Space

What is Hypothesis Space?

The term “hypothesis space” refers to the set of all possible hypotheses that can be formulated to explain a given set of data within the context of statistical modeling, machine learning, and data science. In essence, it encompasses every potential model or function that can be used to make predictions or inferences based on the available data. The hypothesis space is crucial in determining the effectiveness of a learning algorithm, as it defines the boundaries within which the algorithm operates. A well-defined hypothesis space allows for better generalization, enabling the model to perform effectively on unseen data.


Components of Hypothesis Space

A hypothesis space is typically composed of various models, each representing a different assumption about the underlying data-generating process. These models can range from simple linear functions to complex non-linear algorithms, such as neural networks. The complexity and richness of the hypothesis space are influenced by several factors, including the choice of features, the type of model employed, and the regularization techniques applied. For instance, a linear regression model has a relatively simple hypothesis space, while a deep learning model can possess a vast and intricate hypothesis space due to its multiple layers and parameters.

Importance of Hypothesis Space in Machine Learning

In machine learning, the hypothesis space plays a pivotal role in the learning process. It directly impacts the model’s ability to learn from data and make accurate predictions. A larger hypothesis space may provide the flexibility needed to capture complex patterns in the data, but it also increases the risk of overfitting, where the model learns noise rather than the underlying distribution. Conversely, a smaller hypothesis space may lead to underfitting, where the model fails to capture essential patterns. Therefore, finding the right balance in the hypothesis space is critical for achieving optimal model performance.

Exploration of Hypothesis Space

Exploring the hypothesis space involves evaluating different models and their performance on the training data. Techniques such as cross-validation are commonly employed to assess how well a model generalizes to unseen data. By partitioning the data into training and validation sets, data scientists can iteratively test various hypotheses and refine their models. This exploration is essential for identifying the most suitable hypothesis that balances complexity and accuracy, ultimately leading to better predictive performance.
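The partitioning step behind k-fold cross-validation can be sketched in plain Python as below. The function name and the choice of 10 samples and 3 folds are illustrative assumptions. In practice the data are usually shuffled first, which this sketch leaves out.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_indices, validation_indices) for each of k folds."""
    indices = list(range(n_samples))
    # Spread any remainder over the first folds so sizes differ by at most 1.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0)
                  for i in range(k)]
    start = 0
    for size in fold_sizes:
        validation = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, validation
        start += size

folds = list(k_fold_indices(10, 3))
```

Each candidate hypothesis would be trained on the `train` indices of a fold and scored on its `validation` indices, and the scores are then averaged across folds.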

Hypothesis Space and Regularization

Regularization techniques are often employed to manage the complexity of the hypothesis space. These techniques, such as L1 (Lasso) and L2 (Ridge) regularization, add a penalty term to the loss function, discouraging overly complex models. By constraining the hypothesis space, regularization helps prevent overfitting, ensuring that the model remains generalizable. This is particularly important in high-dimensional datasets where the risk of overfitting is heightened due to the increased number of features relative to the number of observations.
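As a minimal sketch of how an L2 penalty constrains a model, consider a one-parameter model y = w·x without an intercept. Under that assumption, minimising the squared error plus `lam * w**2` has a closed-form solution. The data and the penalty strengths below are made up.

```python
def ridge_slope(xs, ys, lam):
    """Minimiser of sum((y - w*x)**2) + lam * w**2 for a no-intercept model."""
    sum_xy = sum(x * y for x, y in zip(xs, ys))
    sum_xx = sum(x * x for x in xs)
    return sum_xy / (sum_xx + lam)

# Made-up data lying exactly on y = 2x.
xs = [1.0, 2.0, 3.0]
ys = [2.0, 4.0, 6.0]

w_unregularized = ridge_slope(xs, ys, lam=0.0)   # 28 / 14 = 2.0
w_regularized = ridge_slope(xs, ys, lam=14.0)    # 28 / 28 = 1.0
```

A larger penalty pulls the learned weight toward zero, which effectively restricts the model to a smaller part of the hypothesis space.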

Dimensionality Reduction and Hypothesis Space

Dimensionality reduction techniques, such as Principal Component Analysis (PCA) and t-Distributed Stochastic Neighbor Embedding (t-SNE), can also influence the hypothesis space. By reducing the number of features, these techniques simplify the hypothesis space, making it easier for models to learn from the data. This simplification can lead to improved model performance, especially in cases where the original feature set contains redundant or irrelevant information. Consequently, dimensionality reduction serves as a valuable tool in the data preprocessing phase, enhancing the overall efficiency of the learning process.

Evaluating Hypothesis Space with Metrics

To assess the effectiveness of different hypotheses within the hypothesis space, various evaluation metrics are employed. Common classification metrics include accuracy, precision, recall, F1-score, and area under the ROC curve (AUC-ROC), while regression tasks typically use error measures such as mean squared error (MSE). These metrics provide insights into how well a model performs on the task at hand. By systematically evaluating different hypotheses against these metrics, data scientists can identify the most promising models and refine their approaches accordingly, ensuring that the selected hypothesis aligns with the desired outcomes.

Bayesian Perspective on Hypothesis Space

From a Bayesian perspective, the hypothesis space is treated probabilistically. Each hypothesis is assigned a prior probability, reflecting the belief in its validity before observing the data. As data is observed, these prior probabilities are updated to posterior probabilities using Bayes’ theorem. This approach allows for a more nuanced exploration of the hypothesis space, as it incorporates uncertainty and provides a framework for model comparison. Bayesian methods can be particularly useful in scenarios where prior knowledge is available, guiding the selection of hypotheses based on both empirical evidence and theoretical considerations.
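The update from prior to posterior can be illustrated with a tiny, finite hypothesis space. The sketch below assumes three candidate values for a coin's probability of heads, a uniform prior, and a made-up sequence of observations.

```python
# Three hypotheses about P(heads), each with equal prior probability.
hypotheses = [0.25, 0.5, 0.75]
prior = {h: 1.0 / len(hypotheses) for h in hypotheses}

observations = "HHT"  # made-up data: heads, heads, tails

def likelihood(p_heads, data):
    """Probability of the observed sequence under one hypothesis."""
    result = 1.0
    for outcome in data:
        result *= p_heads if outcome == "H" else 1.0 - p_heads
    return result

# Bayes' theorem: posterior is proportional to prior times likelihood.
unnormalized = {h: prior[h] * likelihood(h, observations) for h in hypotheses}
evidence = sum(unnormalized.values())
posterior = {h: value / evidence for h, value in unnormalized.items()}
```

After seeing two heads and one tail, the posterior probabilities are 0.15, 0.40, and 0.45 for the three hypotheses, so belief shifts toward the coin that favours heads without ruling out the others.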

Practical Applications of Hypothesis Space

In practical applications, understanding the hypothesis space is essential for various domains, including finance, healthcare, and marketing. For instance, in finance, different models may be hypothesized to predict stock prices based on historical data. In healthcare, hypothesis spaces can be constructed to identify risk factors for diseases based on patient data. In marketing, understanding customer behavior through various hypotheses can lead to more effective targeting strategies. By leveraging the concept of hypothesis space, practitioners can develop robust models that drive decision-making and enhance outcomes across diverse fields.

Programmathically

Introduction to the hypothesis space and the bias-variance tradeoff in machine learning.

In this post, we introduce the hypothesis space and discuss how machine learning models function as hypotheses. Furthermore, we discuss the challenges encountered when choosing an appropriate machine learning hypothesis and building a model, such as overfitting, underfitting, and the bias-variance tradeoff.

The hypothesis space in machine learning is a set of all possible models that can be used to explain a data distribution given the limitations of that space. A linear hypothesis space is limited to the set of all linear models. If the data distribution follows a non-linear distribution, the linear hypothesis space might not contain a model that is appropriate for our needs.

To understand the concept of a hypothesis space, we need to learn to think of machine learning models as hypotheses.

The Machine Learning Model as Hypothesis

Generally speaking, a hypothesis is a potential explanation for an outcome or a phenomenon. In scientific inquiry, we test hypotheses to figure out how well and if at all they explain an outcome. In supervised machine learning, we are concerned with finding a function that maps from inputs to outputs.

But machine learning is inherently probabilistic. It is the art and science of deriving useful hypotheses from limited or incomplete data. Our functions are not axioms that explain the data perfectly, and for most real-life problems, we will never have all the data that exists. Accordingly, we will not find the one true function that perfectly describes the data. Instead, we find a function through training a model to map from known training input to known training output. This way, the model gradually approximates the assumed true function that describes the distribution of the data. So we treat our model as a hypothesis that needs to be tested as to how well it explains the output from a given input. We do this using a test or validation data set.

The Hypothesis Space

During the training process, we select a model from a hypothesis space that is subject to our constraints. For example, a linear hypothesis space only provides linear models. We can still fit a model from the linear hypothesis space to data that follow a quadratic relationship, but the approximation will be rough.

On such data, a linear model will generally not match the predictive performance of a quadratic model, so we can adjust our hypothesis space to also include non-linear models or at least quadratic models.

The Data Generating Process

The data generating process describes a hypothetical process subject to some assumptions that make training a machine learning model possible. We need to assume that the data points are from the same distribution but are independent of each other. When these requirements are met, we say that the data is independent and identically distributed (i.i.d.).

Independent and Identically Distributed Data

How can we assume that a model trained on a training set will perform better than random guessing on new and previously unseen data? First of all, the training data needs to come from the same or at least a similar problem domain. If you want your model to predict stock prices, you need to train the model on stock price data or data that is similarly distributed. It wouldn’t make much sense to train it on weather data. Statistically, this means the data is identically distributed. But if data comes from the same problem, training data and test data might not be completely independent. To account for this, we need to make sure that the test data is not in any way influenced by the training data or vice versa. If you use a subset of the training data as your test set, the test data evidently is not independent of the training data. Statistically, we say the data must be independently distributed.
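One simple way to keep test data out of the training set is a hold-out split, sketched below in plain Python. The function name, the 25% test fraction, and the seed are illustrative assumptions. Note that a disjoint split alone does not guarantee statistical independence, for example when samples are correlated over time.

```python
import random

def train_test_split(samples, test_fraction=0.25, seed=0):
    """Shuffle the samples and split them into disjoint train and test lists."""
    rng = random.Random(seed)  # fixed seed so the split is reproducible
    shuffled = list(samples)
    rng.shuffle(shuffled)
    n_test = max(1, int(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]

train, test = train_test_split(range(20))
```

No sample appears in both lists, so the model is never evaluated on a point it was trained on.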

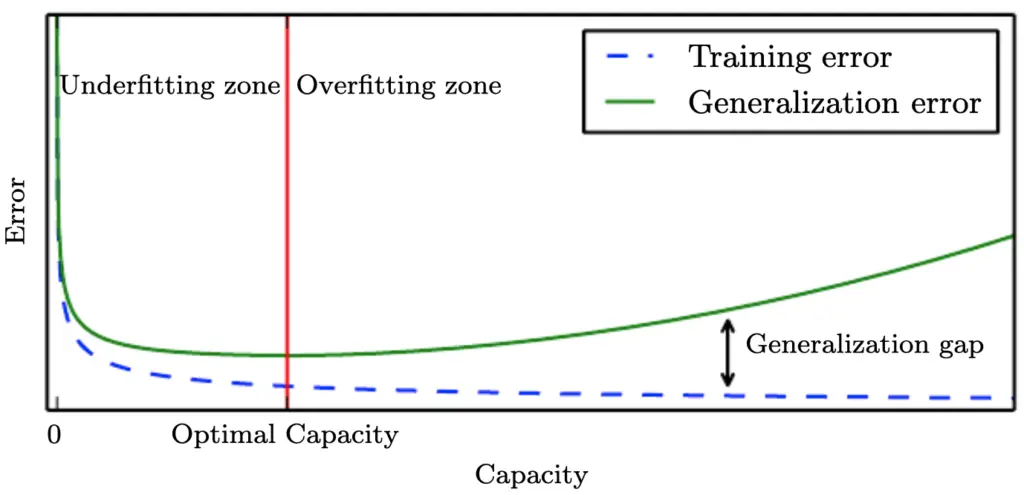

Overfitting and Underfitting

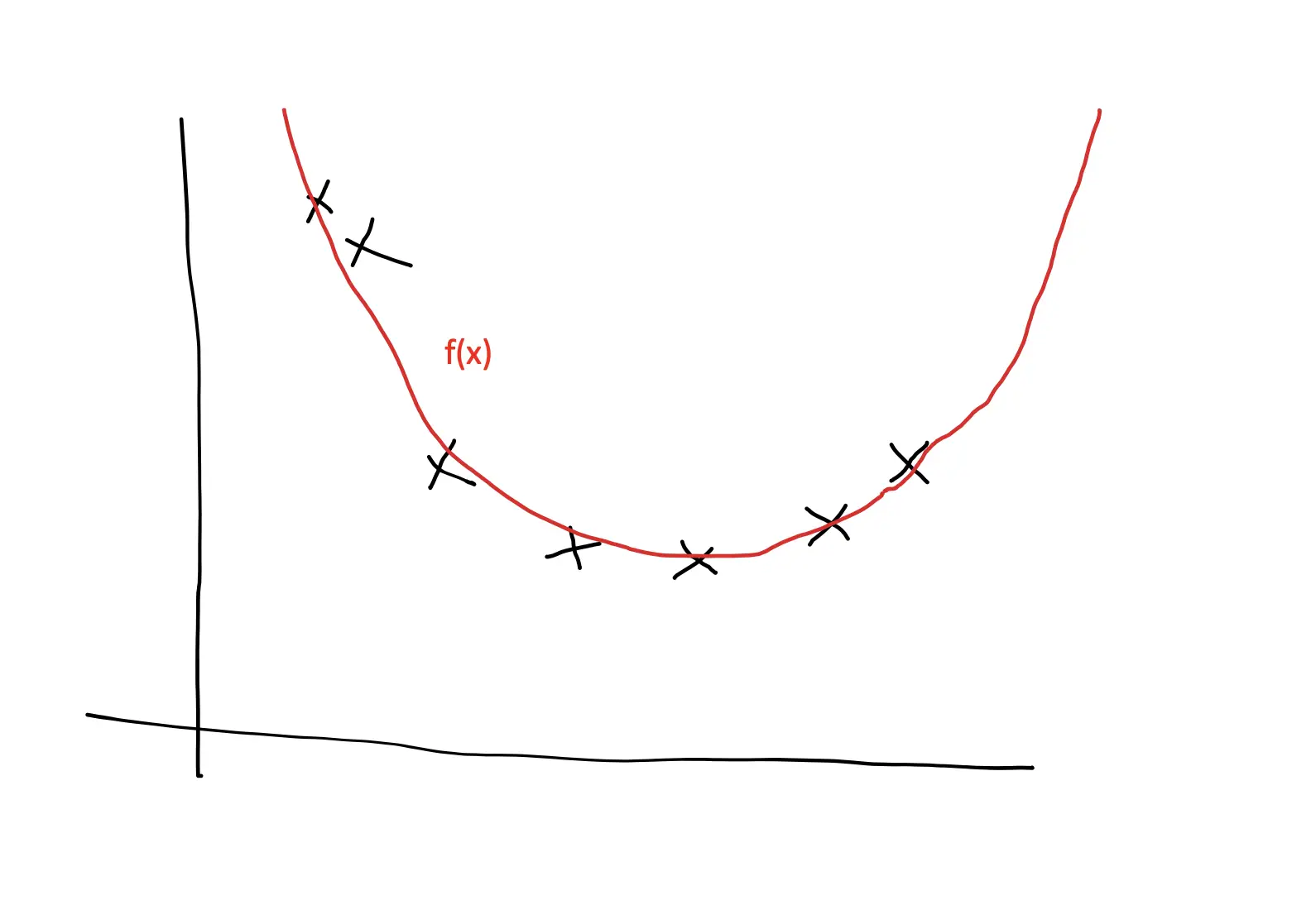

We want to select a model from the hypothesis space that explains the data sufficiently well. During training, we can make a model so complex that it perfectly fits every data point in the training dataset. But ultimately, the model should be able to predict outputs on previously unseen input data. The ability to do well when predicting outputs on previously unseen data is also known as generalization. There is an inherent conflict between those two requirements.

If we make the model so complex that it fits every point in the training data, it will pick up lots of noise and random variation specific to the training set, which might obscure the larger underlying patterns. As a result, it will be more sensitive to random fluctuations in new data and predict values that are far off. A model with this problem is said to overfit the training data and, as a result, to suffer from high variance .

To avoid the problem of overfitting, we can choose a simpler model or use regularization techniques to prevent the model from fitting the training data too closely. The model should then be less influenced by random fluctuations and instead, focus on the larger underlying patterns in the data. The patterns are expected to be found in any dataset that comes from the same distribution. As a consequence, the model should generalize better on previously unseen data.

But if we go too far, the model might become too simple or too constrained by regularization to accurately capture the patterns in the data. Then the model will neither generalize well nor fit the training data well. A model that exhibits this problem is said to underfit the data and to suffer from high bias . If the model is too simple to accurately capture the patterns in the data (for example, when using a linear model to fit non-linear data), its capacity is insufficient for the task at hand.

When training neural networks, for example, we go through multiple iterations of training in which the model learns to fit an increasingly complex function to the data. Typically, the training error decreases as training progresses and the model fits the data more closely. In the beginning, the training error decreases rapidly. In later training iterations, it typically flattens out as it approaches the minimum possible error. Your test or generalization error should initially decrease as well, albeit likely at a slower pace than the training error. As long as the generalization error is decreasing, your model is underfitting because it doesn’t live up to its full capacity. After a number of training iterations, the generalization error will likely reach a trough and start to increase again. Once it starts to increase, your model is overfitting, and it is time to stop training.
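The stopping rule described above is commonly implemented as early stopping with a patience window. The following is a minimal sketch under assumptions: the function name `best_stopping_epoch`, the `patience` parameter, and the list of validation errors are hypothetical, and in practice the error is usually measured on a held-out validation set rather than on the final test set.

```python
def best_stopping_epoch(validation_errors, patience=2):
    """Return the epoch with the lowest validation error seen before
    `patience` consecutive epochs pass without improvement."""
    best_epoch, best_error = 0, float("inf")
    epochs_without_improvement = 0
    for epoch, error in enumerate(validation_errors):
        if error < best_error:
            best_epoch, best_error = epoch, error
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                break  # the generalization error has stopped improving
    return best_epoch

# Hypothetical validation errors: falling at first, then rising again.
val_errors = [0.90, 0.70, 0.60, 0.55, 0.56, 0.58, 0.61, 0.50]
stop_at = best_stopping_epoch(val_errors, patience=2)
print(stop_at)
```

The patience window is a design choice: a small value stops quickly but may react to a temporary bump in the error, while a larger value trains longer before giving up. In this example the final value of 0.50 is never reached because training stops after two epochs without improvement.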

Ideally, you should stop training once your model reaches the lowest point of the generalization error. Part of the gap between the minimum generalization error and no error at all is an irreducible error term known as the Bayes error, which we won’t be able to get rid of in a probabilistic setting. But if the remaining error seems too large, you might be able to reduce it further by collecting more data, tuning your model’s hyperparameters, or picking a different model altogether.

Bias Variance Tradeoff

We’ve talked about bias and variance in the previous section. Now it is time to clarify what we actually mean by these terms.

Understanding Bias and Variance

In a nutshell, bias measures if there is any systematic deviation from the correct value in a specific direction. If we could repeat the same process of constructing a model several times over, and the results predicted by our model always deviate in a certain direction, we would call the result biased.

Variance measures how much the results vary between model predictions. If you repeat the modeling process several times over and the results are scattered all across the board, the model exhibits high variance.

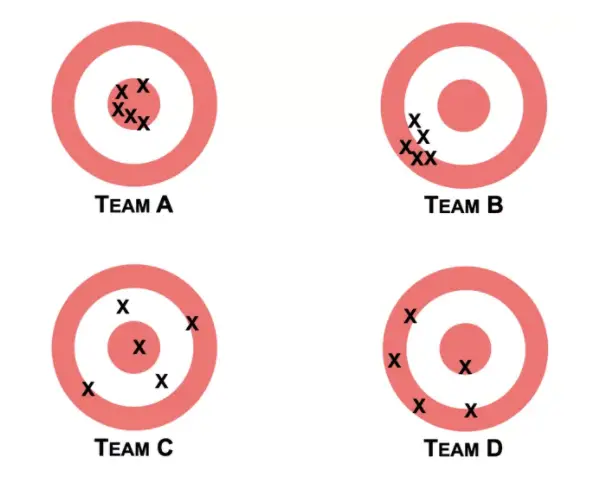

In their book “Noise”, Daniel Kahneman and his co-authors provide an intuitive example that helps to understand the concepts of bias and variance. Imagine you have four teams at the shooting range.

Team B is biased because the shots of its team members all deviate in a certain direction from the center. Team B also exhibits low variance because the shots of all the team members are relatively concentrated in one location. Team C has the opposite problem. The shots are scattered across the target with no discernible bias in a certain direction. Team D is both biased and has high variance. Team A would be the equivalent of a good model. The shots are in the center with little bias in one direction and little variance between the team members.

Generally speaking, linear models such as linear regression exhibit high bias and low variance. Nonlinear algorithms such as decision trees are more prone to overfitting the training data and thus exhibit high variance and low bias.

A linear model used with non-linear data would exhibit a bias to predict data points along a straight line instead of accommodating the curves. But linear models are not as susceptible to random fluctuations in the data. A nonlinear algorithm that is trained on noisy data with lots of deviations would be more capable of avoiding bias but more prone to incorporating the noise into its predictions. As a result, a small deviation in the test data might lead to very different predictions.
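The contrast between a linear model and a flexible nonlinear one can be checked with a small simulation. This sketch is an illustration under assumptions, not part of the original article: the data come from an assumed sine curve with Gaussian noise, the linear model is a least-squares line, and a 1-nearest-neighbour predictor stands in for the flexible nonlinear algorithm. The modeling process is repeated on many freshly sampled training sets, and the bias and variance of the predictions at one point `x0` are estimated.

```python
import math
import random

def true_function(x):
    # Assumed data-generating function, chosen only for illustration.
    return math.sin(2 * math.pi * x)

def sample_training_set(n, rng, noise=0.3):
    xs = [rng.random() for _ in range(n)]
    ys = [true_function(x) + rng.gauss(0.0, noise) for x in xs]
    return xs, ys

def fit_line_and_predict(xs, ys, x0):
    """Ordinary least squares for y = slope * x + intercept, evaluated at x0."""
    mean_x, mean_y = sum(xs) / len(xs), sum(ys) / len(ys)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    return mean_y + (sxy / sxx) * (x0 - mean_x)

def nearest_neighbour_predict(xs, ys, x0):
    """Return the target of the single training point closest to x0."""
    i = min(range(len(xs)), key=lambda j: abs(xs[j] - x0))
    return ys[i]

def bias_and_variance(predict, x0, repetitions=1000, n=20, seed=0):
    rng = random.Random(seed)
    preds = [predict(*sample_training_set(n, rng), x0) for _ in range(repetitions)]
    mean_pred = sum(preds) / len(preds)
    bias_sq = (mean_pred - true_function(x0)) ** 2
    variance = sum((p - mean_pred) ** 2 for p in preds) / len(preds)
    # Error against the noise-free target, so no irreducible term appears here.
    mse = sum((p - true_function(x0)) ** 2 for p in preds) / len(preds)
    return bias_sq, variance, mse

x0 = 0.25  # evaluation point, where sin(2*pi*x) peaks
line_bias_sq, line_var, line_mse = bias_and_variance(fit_line_and_predict, x0)
nn_bias_sq, nn_var, nn_mse = bias_and_variance(nearest_neighbour_predict, x0)
print(f"line: bias^2={line_bias_sq:.3f} variance={line_var:.3f}")
print(f"1-NN: bias^2={nn_bias_sq:.3f} variance={nn_var:.3f}")
```

In this setup the straight line cannot follow the peak of the curve, so its predictions are systematically off but stable across training sets. The nearest-neighbour predictor is nearly unbiased but passes the noise of a single training point straight into its prediction.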

To get our model to learn the patterns in data, we need to reduce the training error while at the same time reducing the gap between the training and the testing error. In other words, we want to reduce both bias and variance. To a certain extent, we can reduce both by picking an appropriate model, collecting enough training data, and selecting appropriate training features and hyperparameter values. At some point, we have to trade off minimizing bias against minimizing variance. How you balance this trade-off is up to you.

The Bias Variance Decomposition

Mathematically, the total error can be decomposed into the squared bias, the variance, and an irreducible error:

Total Error = Bias² + Variance + Irreducible Error

Remember that Bayes’ error is an error that cannot be eliminated.

Our machine learning model represents an estimating function $\hat f(X)$ for the true data-generating function $f(X)$, where $X$ represents the predictors and $Y$ the output values.

Now the mean squared error of our model is the expected value of the squared difference between the output produced by the estimating function $\hat f(X)$ and the true output $Y$.

The bias is a systematic deviation from the true value. We can measure it as the squared difference between the expected value produced by the estimating function (the model) and the values produced by the true data-generating function.

Of course, we don’t know the true data-generating function, but we do know the observed outputs $Y$, which correspond to the values generated by $f(X)$ plus an error term.

The variance of the model is the expected squared difference between the values the model actually predicts and the expected value of its predictions.

Now that we have the bias and the variance, we can add them up along with the irreducible error to get the total error.
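Written out in symbols, the quantities described in words above can be sketched as follows. The notation is an assumption for illustration: $\varepsilon$ denotes the error term with mean zero and variance $\sigma^2$, and the expectation is taken over training sets and noise.

```latex
% Observed outputs: true function plus a zero-mean error term
Y = f(X) + \varepsilon, \qquad
\mathbb{E}[\varepsilon] = 0, \quad \operatorname{Var}(\varepsilon) = \sigma^2

% Squared bias: systematic deviation of the average prediction from the truth
\operatorname{Bias}^2\big[\hat f(X)\big]
  = \Big( \mathbb{E}\big[\hat f(X)\big] - f(X) \Big)^2

% Variance: spread of the predictions around their own average
\operatorname{Var}\big[\hat f(X)\big]
  = \mathbb{E}\Big[ \big( \hat f(X) - \mathbb{E}\big[\hat f(X)\big] \big)^2 \Big]

% Total (mean squared) error
\mathbb{E}\Big[ \big( Y - \hat f(X) \big)^2 \Big]
  = \operatorname{Bias}^2\big[\hat f(X)\big]
  + \operatorname{Var}\big[\hat f(X)\big]
  + \sigma^2
```

The last line holds when the error term is independent of the fitted model, so that the cross terms vanish when the square is expanded; $\sigma^2$ is the irreducible part.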

A machine learning model represents an approximation to the hypothesized function that generated the data. The chosen model is a hypothesis since we hypothesize that this model represents the true data generating function.

We choose the hypothesis from a hypothesis space that may be subject to certain constraints. For example, we can constrain the hypothesis space to the set of linear models.

When choosing a model, we aim to reduce the bias and the variance to prevent our model from either overfitting or underfitting the data. In the real world, we cannot completely eliminate bias and variance, and we have to trade them off against each other. The total error produced by a model can be decomposed into the bias, the variance, and the irreducible (Bayes) error.


Eyke Hüllermeier, Thomas Fober & Marco Mernberger


In machine learning, the goal of a supervised learning algorithm is to perform induction, i.e., to generalize a (finite) set of observations (the training data) into a general model of the domain. In this regard, the hypothesis space is defined as the set of candidate models considered by the algorithm.

More specifically, consider the problem of learning a mapping (model) \( f \in F = Y^X \) from an input space \( X \) to an output space \( Y \), given a set of training data \( D = \{ (x_1, y_1), \ldots, (x_n, y_n) \} \subset X \times Y \). A learning algorithm \( A \) takes \( D \) as an input and produces a function (model, hypothesis) \( f \in H \subset F \) as an output, where \( H \) is the hypothesis space. This subset is determined by the formalism used to represent models (e.g., as logical formulas, linear functions, or non-linear functions implemented as artificial neural networks or decision trees). Thus, the choice of the hypothesis space produces a representation...
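The roles of the training data D, the hypothesis space H, and the learning algorithm A can be sketched in a few lines. Everything concrete here is an assumption for illustration: the toy data, the choice of threshold classifiers as the hypothesis space, and the use of the lowest training error as the selection rule.

```python
# Hypothetical 1D training data D: (x, y) pairs with labels in {0, 1}.
D = [(0.5, 0), (1.0, 0), (1.5, 0), (2.5, 1), (3.0, 1), (3.5, 1)]

def make_threshold_classifier(t):
    """One hypothesis f in H: predict 1 when x exceeds the threshold t."""
    return lambda x: 1 if x > t else 0

# The hypothesis space H: a finite family of threshold classifiers.
thresholds = [0.0, 1.0, 2.0, 3.0, 4.0]
H = {t: make_threshold_classifier(t) for t in thresholds}

def training_error(f, data):
    """Fraction of training examples that f labels incorrectly."""
    return sum(f(x) != y for x, y in data) / len(data)

def learn(data, hypothesis_space):
    """The learning algorithm A: pick the hypothesis with the lowest training error."""
    best_t = min(hypothesis_space,
                 key=lambda t: training_error(hypothesis_space[t], data))
    return best_t, hypothesis_space[best_t]

best_threshold, f = learn(D, H)
print(best_threshold, training_error(f, D))
```

Because H contains only five threshold functions, the algorithm can never return, say, a classifier that labels an interval as positive. That restriction is what the choice of hypothesis space means in practice.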


Author information

Authors and affiliations.

Philipps-Universität Marburg, Hans-Meerwein-Straße, Marburg, Germany

Eyke Hüllermeier, Thomas Fober & Marco Mernberger


Corresponding author

Correspondence to Eyke Hüllermeier .

Editor information

Editors and affiliations.

Biomedical Sciences Research Institute, University of Ulster, Coleraine, UK

Werner Dubitzky

Department of Computer Science, University of Rostock, Rostock, Germany

Olaf Wolkenhauer

Department of Bio and Brain Engineering, Korea Advanced Institute of Science and Technology (KAIST), Daejeon, Republic of Korea

Kwang-Hyun Cho

Department of Biomedical Engineering, Rensselaer Polytechnic Institute, Troy, NY, USA

Hiroki Yokota


Copyright information

© 2013 Springer Science+Business Media, LLC

About this entry

Cite this entry.

Hüllermeier, E., Fober, T., Mernberger, M. (2013). Hypothesis Space. In: Dubitzky, W., Wolkenhauer, O., Cho, KH., Yokota, H. (eds) Encyclopedia of Systems Biology. Springer, New York, NY. https://doi.org/10.1007/978-1-4419-9863-7_926


DOI : https://doi.org/10.1007/978-1-4419-9863-7_926

Publisher Name : Springer, New York, NY

Print ISBN : 978-1-4419-9862-0

Online ISBN : 978-1-4419-9863-7

eBook Packages : Biomedical and Life Sciences Reference Module Biomedical and Life Sciences



Hypothesis is a common term in machine learning and data science projects. Machine learning is a powerful technology that helps us predict results based on past experience. Data scientists and ML professionals conduct experiments that aim to solve a problem, and they start from an initial assumption about the solution. In machine learning, this assumption is known as a hypothesis.

In machine learning, the terms hypothesis and model are often used interchangeably. However, a hypothesis is an assumption made by scientists, whereas a model is a mathematical representation that is used to test the hypothesis. In this topic, "Hypothesis in Machine Learning," we will discuss a few important concepts related to hypotheses in machine learning and their importance. So, let's start with a quick introduction to the hypothesis.

A hypothesis is a guess based on some known facts that has not yet been proven. A good hypothesis is testable, which means it can turn out to be either true or false.

Example: Let's understand the hypothesis with a common example. Suppose a scientist claims that ultraviolet (UV) light can damage the eyes, and we go on to assume that it may also cause blindness. The scientist only claims that UV rays are harmful to the eyes; that they may cause blindness is our assumption, which may or may not be true. Assumptions of this type are called hypotheses.

The hypothesis is one of the commonly used concepts of statistics in machine learning. It is specifically used in supervised machine learning, where an ML model learns a function that best maps the inputs to the corresponding outputs with the help of an available dataset.

There are some common methods for finding a possible hypothesis in the hypothesis space, where the hypothesis space is represented by H and a hypothesis by h. These are defined as follows:

- Hypothesis space (H): It is used by supervised machine learning algorithms to determine the best possible hypothesis to describe the target function, that is, the one that best maps inputs to outputs. It is often constrained by the framing of the problem, the choice of model, and the choice of model configuration.

- Hypothesis (h): It is primarily based on the data as well as the bias and restrictions applied to the data. Hence, a hypothesis (h) is a single candidate function that maps inputs to outputs and can be evaluated as well as used to make predictions.

The hypothesis (h) can be formulated in machine learning as follows:

y = mx + c

Where:

- y: range

- m: slope of the line, i.e., the change in y divided by the change in x

- x: domain

- c: intercept (constant)

Example: Let's understand the hypothesis (h) and hypothesis space (H) with a two-dimensional coordinate plane showing the distribution of data. The hypothesis space (H) is the set of all legal ways to divide the coordinate plane so that inputs are mapped to outputs. Each individual way of dividing the plane is called a hypothesis (h).

A hypothesis in statistics is, like a hypothesis in machine learning, an assumption about the outcome. However, it is falsifiable, which means it can be rejected in the presence of sufficient evidence. Unlike in machine learning, a statistical hypothesis is never accepted as proven: because the evidence is probabilistic, we can only reject it or fail to reject it. Before starting to work on an experiment, we must be aware of two important types of hypotheses:

- Null hypothesis: A null hypothesis is a type of statistical hypothesis which states that no statistically significant effect exists in the given set of observations. It is also known as a conjecture and is used in quantitative analysis to test theories about markets, investment, and finance to decide whether an idea is true or false.

- Alternative hypothesis: An alternative hypothesis is a direct contradiction of the null hypothesis, which means that if one of the two hypotheses is true, then the other must be false. In other words, an alternative hypothesis is a type of statistical hypothesis which states that some significant effect exists in the given set of observations.

The significance level must be set before starting an experiment. It defines the tolerance for error, that is, the level at which an effect is considered significant. A common choice is a 95% confidence level, which corresponds to a significance level of 5% (0.05). The significance level also serves as the critical or threshold value for the p-value. For example, if the confidence level in an experiment is set to 98%, then the threshold is 0.02.

The p-value in statistics quantifies the evidence against a null hypothesis. In other words, the p-value is the probability, assuming the null hypothesis is true, of observing data at least as extreme as the data actually observed. The smaller the p-value, the stronger the evidence against the null hypothesis. It is always represented in decimal form, such as 0.035.

Whenever a statistical test is carried out to find the p-value, the result is compared with the threshold set by the significance level. If the p-value is less than the threshold, the effect is considered significant, and the null hypothesis can be rejected. If it is higher than the threshold, there is no significant effect, and we fail to reject the null hypothesis.

In supervised machine learning, which maps instances of inputs to outputs, the hypothesis is a very useful concept that helps to approximate a target function. It is used in all analytics domains and is also considered one of the important factors in deciding whether a change should be introduced or not. It relates the training data to the efficiency as well as the performance of the models. In this topic, we have covered various important concepts related to the hypothesis in machine learning and statistics, along with some important parameters such as the p-value and the significance level, to understand hypothesis concepts in a better way.
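The relationship between the null hypothesis, the significance level, and the p-value discussed in this topic can be illustrated with an exact binomial test. The scenario is hypothetical: a coin is flipped 100 times and lands heads 60 times, and the null hypothesis is that the coin is fair. The function names are assumptions; only `math.comb` from the standard library is used.

```python
from math import comb

def binomial_pmf(k, n, p):
    """Probability of exactly k successes in n independent trials."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)

def two_sided_binomial_p_value(successes, n, p_null=0.5):
    """Probability, under the null hypothesis, of an outcome that is
    at most as likely as the observed one."""
    observed = binomial_pmf(successes, n, p_null)
    tolerance = 1e-12  # guards the comparison against floating-point noise
    total = sum(
        binomial_pmf(k, n, p_null)
        for k in range(n + 1)
        if binomial_pmf(k, n, p_null) <= observed * (1 + tolerance)
    )
    return min(1.0, total)

alpha = 0.05  # significance level, chosen before the experiment
p_value = two_sided_binomial_p_value(successes=60, n=100)
reject_null = p_value < alpha
print(f"p-value = {p_value:.4f}, reject null hypothesis: {reject_null}")
```

In this example the p-value lies slightly above 0.05, so at the 5% significance level we fail to reject the null hypothesis that the coin is fair. That does not prove the coin is fair; it only means the evidence against fairness is not strong enough at the chosen level.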


Could anyone explain the terms "Hypothesis space", "sample space", "parameter space", and "feature space" in machine learning with one concrete example?

I am confused by these machine learning terms and am trying to distinguish them with one concrete example.

For instance, use logistic regression to classify a bunch of cat images.

Assume there are 1,000 images with labels indicating whether the corresponding image is a cat image.

Each image has a size of 100*100.

Given the above, is my following understanding right?

The sample space is the 1,000 images.

The feature space is the 100*100 pixels.

The parameter space is a vector that has a length of 100*100+1.

The hypothesis space is the set of all possible hyperplanes that have some attribute that I have no idea about.


2 Answers

People are a bit loose with their definitions (meaning different people will use different definitions, depending on the context), but let me put what I would say. I will do so more in the context of modern computer vision.

First, more generally, define $X$ as the space of the input data, and $Y$ as the output label space (some subset of the integers or equivalently one-hot vectors). A dataset is then $D=\{ d=(x,y)\in X\times Y \}$, where $d\sim P_{X\times Y}$ is sampled from some joint distribution over the input and output space.

Now, let $\mathcal{H}$ be a set of functions such that an element $f \in \mathcal{H}$ is a map $f: X\rightarrow Y$. This is the space of functions we will consider for our problem. And finally, let $g_\theta \in \mathcal{H}$ be some specific function with parameters $\theta\in\mathbb{R}^n$, such that we denote $\widehat{y} = g_\theta(x|\theta)$.

Finally, let's assume that any $f\in\mathcal{H}$ consists of a sequence of mappings $f=f_\ell\circ f_{\ell-1}\circ\ldots\circ f_2\circ f_1$, where $f_i: F_{i}\rightarrow F_{i+1}$ and $F_1 = X, \, F_{\ell+1}=Y$.

Ok, now for the definitions:

- Hypothesis space (HS): the HS is the abstract function space you consider in solving your problem. Here it is denoted $\mathcal{H}$. I find that this term does not appear very often in applied ML; rather, it is mostly used in theoretical contexts (e.g., PAC theory).

- Sample space (SS): the sample space is simply the input (or instance) space $X$. This is the same as in probability theory, regarding each training input as a random sample instance (see note 1).

- Parameter space (PS): for a fixed classifier $g_\theta$, the PS is simply the space of possible values of $\theta$. It defines the space covered by the single architecture that you train (see note 2). Usually it does not include hyper-parameters when people say it.

- Feature space (FS): for many models, there are multiple feature spaces. I've denoted them here as $F_2,\ldots, F_\ell$. They are essentially the intermediate outputs due to the model's layered processing (but see note 1). For CNNs, these "feature maps" at different layers are often used for different things, hence the distinction is important.

For your example:

The HS is almost the same as the PS once you've chosen logistic regression (except that the HS includes the models arising from different hyper-parameters as well, whereas the PS is fixed for a given set of hyper-parameters). Indeed, here, the HS is the set of all hyperplanes (and the PS could be as well, depending on the presence of e.g. regularization parameters).

The sample space is the set of all possible cat images; i.e., $X$ . It is not usually restricted in meaning to be $D$ , which is usually just called the training set.

The feature space in your case is indeed $F_1 = X$, assuming that you feed the raw pixels to the logistic regression (so $\ell = 1$; see note 3).

1 Some people treat some processed form of the input as the input. E.g., replacing an image $I$ with its HOG or wavelet features $u(I)$ . Then they define the sample space $X_u = \{ u(I_k) \;\forall\; k \}$ , i.e., as the features rather than the images. However, I would argue that you should leave $I\in X$ and simply set $F_1 = X_u$ , i.e., treat it as the first feature space.

2 Note that each $\theta$ defines a different trained model, which is in the HS. However, not all members of $\mathcal{H}$ can be reached by varying the parameter vector. For instance, you might search over the number of layers in a CNN, but the parameter space of a single CNN will not cover that. (Though note again that $\mathcal{H}$ tends to be used more in theoretical contexts). One distinction between HS and PS appears in the context of error decompositions of approximation vs estimation noise .

3 Normally (in "older" computer vision) you would extract features from the image and feed that to e.g. logistic regression. The modern version of this is attaching a fully connected (linear) layer with a softmax at the end of a CNN.
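The relationship between the parameter space and the hypothesis space for the cat-image example can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the names `make_hypothesis`, `NUM_FEATURES`, and `NUM_PARAMETERS` are hypothetical, and the images are assumed to be flattened into lists of 100*100 raw pixel values.

```python
import math
from typing import Callable, List

IMAGE_SIDE = 100
NUM_FEATURES = IMAGE_SIDE * IMAGE_SIDE   # dimension of the feature space F_1 = X
NUM_PARAMETERS = NUM_FEATURES + 1        # one weight per pixel plus a bias

def make_hypothesis(theta: List[float]) -> Callable[[List[float]], float]:
    """Map one point theta of the parameter space to one hypothesis g_theta."""
    if len(theta) != NUM_PARAMETERS:
        raise ValueError(f"theta must have length {NUM_PARAMETERS}")
    bias, weights = theta[0], theta[1:]

    def g_theta(x: List[float]) -> float:
        # Linear score followed by a numerically stable sigmoid.
        z = bias + sum(w * xi for w, xi in zip(weights, x))
        if z >= 0:
            return 1.0 / (1.0 + math.exp(-z))
        e = math.exp(z)
        return e / (1.0 + e)

    return g_theta

# Two different parameter vectors give two different members of the
# hypothesis space, both applied to the same point of the sample space.
image = [0.5] * NUM_FEATURES                      # a stand-in "image"
g_zero = make_hypothesis([0.0] * NUM_PARAMETERS)
g_biased = make_hypothesis([2.0] + [0.0] * NUM_FEATURES)
print(g_zero(image), g_biased(image))
```

Each call to `make_hypothesis` picks one function out of the hypothesis space, while the list passed to it is a single point of the parameter space.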

I'll approach this from a more colloquial point of view:

The sample space consists of your sample-level input data, which are instances of specific values in feature space. In your example, your sample space consists of 1000 images.

The feature space consists of the individual components that make up a sample, and potentially intermediate, derived features that express combinations of the raw features. In your example, the feature space is the 10,000 pixels and the color values they can take.

The hypothesis space covers all potential solutions that you could arrive at with your choice of model. A model that draws a linear boundary in feature space, for example, does not have any nonlinear solutions in its hypothesis space. In most cases, you can't enumerate the hypothesis space, but it's useful to know what types of solutions it's even possible for your model to generate.
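To make the point about linear boundaries concrete, here is a small sketch that searches a coarse grid of linear classifiers on the XOR pattern, a standard case that no straight line can separate. The XOR data, the grid of weights, and the function names are assumptions chosen for illustration.

```python
from itertools import product
from typing import List, Tuple

# XOR: the label is 1 exactly when the two inputs differ.
XOR_DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

def linear_predict(w1: float, w2: float, b: float, x: Tuple[int, int]) -> int:
    """Classify x as positive when it lies on the positive side of the line."""
    return 1 if w1 * x[0] + w2 * x[1] + b > 0 else 0

def best_linear_accuracy(grid: List[float]) -> int:
    """Return the largest number of XOR points any grid hypothesis gets right."""
    best = 0
    for w1, w2, b in product(grid, repeat=3):
        correct = sum(linear_predict(w1, w2, b, x) == y for x, y in XOR_DATA)
        best = max(best, correct)
    return best

GRID = [i / 2 for i in range(-4, 5)]  # -2.0, -1.5, ..., 2.0
print(best_linear_accuracy(GRID), "of", len(XOR_DATA), "points classified correctly")
```

The grid only samples the hypothesis space, but the outcome does not depend on the sampling: no linear boundary classifies all four XOR points, so the best count stays below four however fine the grid is.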

The parameter space covers the possible values that the model parameters can take, which will vary depending on your model. A logistic regression, for example, will have a weight parameter for every feature that varies between -Inf and +Inf. You could also build a coin flip model that guesses "cat" randomly with probability X, where X is the single parameter that varies from 0 to 100.



6 - Searching the hypothesis space

Published online by Cambridge University Press: 05 August 2012

In Chapter 5 we introduced the main notions of machine learning, with particular regard to hypothesis and data representation, and we saw that concept learning can be formulated as a search problem in the hypothesis space H. As H is in general very large, or even infinite, well-designed strategies are required to search efficiently for good hypotheses. In this chapter we will discuss these general ideas about search in more depth.

When concepts are represented using a symbolic or logical language, algorithms for searching the hypothesis space rely on two basic features:

- a criterion for checking the quality (performance) of a hypothesis;

- an algorithm for comparing two hypotheses with respect to the generality relation.

In this chapter we will discuss the above features in both the propositional and the relational settings, with specific attention to the covering test.

Guiding the search in the hypothesis space

If the hypothesis space is endowed with the more-general-than relation (as is always the case in symbolic learning), hypotheses can be organized into a lattice, as represented in Figure 5.6. This lattice can be explored by moving from more general to more specific hypotheses (top-down strategies) or from more specific to more general ones (bottom-up strategies) or by a combination of the two. Both directions of search rely on the definition of suitable operators, namely, generalization operators for moving up in the lattice and specialization operators for moving down.
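A bottom-up strategy of this kind can be sketched for a very simple propositional setting. The representation below (hypotheses as tuples of attribute values, with `"?"` matching any value) and all function names are assumptions chosen for illustration; they are not taken from the chapter.

```python
from typing import Sequence, Tuple

WILDCARD = "?"  # matches any attribute value
Hypothesis = Tuple[str, ...]

def covers(h: Hypothesis, example: Sequence[str]) -> bool:
    """Covering test: does hypothesis h accept the example?"""
    return all(a == WILDCARD or a == v for a, v in zip(h, example))

def more_general_or_equal(h1: Hypothesis, h2: Hypothesis) -> bool:
    """Generality relation: h1 accepts everything that h2 accepts."""
    return all(a == WILDCARD or a == b for a, b in zip(h1, h2))

def minimal_generalization(h: Hypothesis, example: Sequence[str]) -> Hypothesis:
    """Generalization operator: relax only the attributes that disagree."""
    return tuple(a if a == v else WILDCARD for a, v in zip(h, example))

def bottom_up_search(positives: Sequence[Sequence[str]]) -> Hypothesis:
    """Start from the most specific hypothesis and move up the lattice."""
    h: Hypothesis = tuple(positives[0])
    for example in positives[1:]:
        if not covers(h, example):
            h = minimal_generalization(h, example)
    return h

positives = [("sunny", "warm", "high"), ("sunny", "warm", "low")]
learned = bottom_up_search(positives)
print(learned)
```

A top-down strategy would start from the all-wildcard hypothesis instead and apply specialization operators, using the same covering test to decide when to stop.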


- Searching the hypothesis space

- Lorenza Saitta , Università degli Studi del Piemonte Orientale Amedeo Avogadro , Attilio Giordana , Università degli Studi del Piemonte Orientale Amedeo Avogadro , Antoine Cornuéjols

- Book: Phase Transitions in Machine Learning

- Online publication: 05 August 2012

- Chapter DOI: https://doi.org/10.1017/CBO9780511975509.008
