
The Importance of Variance Analysis

This critical topic is too often taught to only a handful of students—or neglected in the b-school curriculum altogether.

Variance analysis is an essential tool for business graduates to have in their toolkits as they enter the workforce. Over our decades of experience in executive education, we’ve observed that managers across all industries and functions use variance analysis to measure the ability of their organizations to meet their commitments.

Because variance analysis is such a powerful risk management tool, there is a strong case for including it in the finance portion of any MBA curriculum. Yet fewer than half of finance professors believe they should be teaching this subject; they view it as a topic more typically taught in accounting classes. At the same time, in practice, variance analysis is such a cross-functional tool that it could be taught throughout the business school curriculum—but it’s not. We perceive a worrisome disconnect between the way variance analysis is taught and the way it is used in real life.

Variance Analysis and Its Applications

There are three periods in the life of a business plan: prior period to plan, plan to actual, and prior period to actual. For instance, if a business plan is being formulated for 2019, the “prior period” would be 2018. “Prior period to plan” compares 2018 results with the 2019 budget, “plan to actual” compares the 2019 budget with what really happens in 2019, and “prior period to actual” compares 2018 results with actual 2019 results. These three stages are also referred to as planning, meeting commitments, and growth.

For each of these periods, variance analysis looks at the deviations between the targeted objective and the actual outcome. The most common variances are found in price, volume, cost, and productivity. When executives conduct an operational review, they will need to explain why there were positive or negative variances in any of these areas. For instance, did the company miss a target because it lost an anticipated national account or failed to lock in a price contract due to competitive pressure?

Executives who understand variances will improve their risk management, make better decisions, and be more likely to meet commitments. In the process, they’ll produce outcomes that can give an organization a real competitive advantage and, ultimately, create shareholder value.

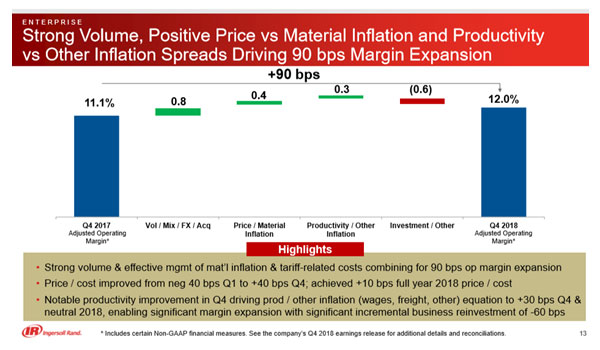

Most businesses apply variance analysis at the operating income level to determine what they projected and what they achieved. The variances usually are displayed in the form of floating bar charts—also known as walk, bridge, or waterfall charts. These graphics are often used in internal corporate documents as well as in investor-facing documents such as quarterly earnings presentations.
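To make the walk (bridge) chart idea concrete, here is a minimal Python sketch that splits the change in operating income between a base (the prior period or the plan) and actual results into price, volume, and cost bars. It assumes a single product whose operating income is volume × (price - variable unit cost) - fixed costs; the `Period` class, the `operating_income_walk` function, and all figures are hypothetical. Valuing the volume bar at base-period margin and the price and cost bars at actual volume is one common convention, not necessarily the one any given company uses; other orderings split the same total differently.

```python
from dataclasses import dataclass


@dataclass
class Period:
    """Hypothetical single-product results for one period."""
    volume: float      # units sold
    price: float       # average selling price per unit
    unit_cost: float   # variable cost per unit
    fixed_cost: float  # fixed operating costs for the period

    def operating_income(self) -> float:
        return self.volume * (self.price - self.unit_cost) - self.fixed_cost


def operating_income_walk(base: Period, actual: Period) -> list[tuple[str, float]]:
    """Bars of a walk (bridge) chart from base to actual operating income."""
    price = (actual.price - base.price) * actual.volume                     # price change at actual volume
    volume = (actual.volume - base.volume) * (base.price - base.unit_cost)  # extra units at base margin
    cost = (base.unit_cost - actual.unit_cost) * actual.volume              # unit cost savings at actual volume
    fixed = base.fixed_cost - actual.fixed_cost                             # lower fixed costs raise income
    return [
        ("Base operating income", base.operating_income()),
        ("Price", price),
        ("Volume", volume),
        ("Variable cost", cost),
        ("Fixed cost", fixed),
        ("Actual operating income", actual.operating_income()),
    ]


prior = Period(volume=10_000, price=50.0, unit_cost=30.0, fixed_cost=120_000)
current = Period(volume=11_000, price=49.0, unit_cost=29.5, fixed_cost=125_000)

bars = operating_income_walk(prior, current)
for label, amount in bars:
    print(f"{label:<25}{amount:>12,.0f}")

# The floating bars bridge exactly from the starting total to the ending total.
middle = sum(amount for _, amount in bars[1:-1])
assert abs(bars[0][1] + middle - bars[-1][1]) < 1e-6
```

Because the bars are defined so that they add up to the change in operating income, the final check holds for any inputs; that bridging property is what lets a walk chart connect the starting and ending totals.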

While variance analysis can be applied in many functional areas, it is used most often in finance-related fields. Yet, the majority of finance programs at both the graduate and undergraduate levels don’t cover it at all. We surveyed finance faculty in 2013 and accounting faculty in 2017 to determine how they teach and use variance analysis. Among other things, we learned that:

- More than 80 percent of accounting faculty believe that variance analysis is important to a finance career, and they are far more likely to teach it than their finance faculty colleagues.

- Only 59 percent of finance faculty and 48 percent of accounting faculty are familiar with examples of walk charts from real-world companies. Yet these visual portrayals of operating margin variances are commonplace in quarterly earnings presentations and readily found on investor relations websites.

Because universities mostly fail to teach this important topic, corporate educators have been left to fill the learning gap. Many global organizations, in fact, make variance analysis a key subject in their development programs for entry-level financial professionals.

The University Response

We believe it’s critical for universities to better align their curricula with the skills that today’s employers seek in the graduates they hire. Not only do we think variance analysis should be included in the business curriculum, but we could even make an argument for running it as a capstone business course. We offer these suggestions for ways that faculty could integrate this powerful tool across the business school program:

- Both accounting and finance faculty should, as much as is practical, incorporate variance analysis into their classes, particularly focusing on financial planning and analysis. We acknowledge that a dearth of corporate finance texts on the topic will make this a challenge for finance professors. The two of us employ teaching materials in our graduate business and undergraduate finance classes based on experience in the corporate world, and we would be glad to share them with others.

- Faculty who use case studies should always include a case specific to variance analysis tools. Students who pursue careers in corporate finance will almost certainly be required to use such tools, particularly as data and predictive analytics applications are enhanced to improve forecasting accuracy. Two sources of such case studies are TRI Corporation and Harvard Business Publishing.

- Professors can introduce students to real-world applications of variance analysis by showing how it is used in investor relations (IR) pitches. As instructors, the two of us routinely search IR sites for applications of variance analysis. We specifically look for operating margin variance walks (floating bars, brick charts) for visual applications that can make the topic come to life for students. Ingersoll Rand’s investor materials offer one such example.

- Faculty from accounting and finance programs should collaborate on when, where, and how to teach variance analysis. At the very least, this will ensure that students gain an understanding of the topic from either a finance or an accounting perspective, but the ideal would be for them to benefit from both perspectives for a holistic understanding. At Fairfield University, accounting programs introduce students to the theory of variance analysis. Then finance programs take an operational and cross-functional approach that addresses planning, meeting commitments, and growth.

- Both accounting and finance faculty should help finance majors understand variance analysis from a practitioner’s standpoint. Discussions about pricing, supply chain, manufacturing costs, risk management, and inflation and deflation around cost inputs can help students grasp the necessity of making trade-offs and balancing short-term and long-term business goals. To make sure students understand the practitioner’s viewpoint, we use corporate business simulations that are more operationally focused, as opposed to being academic in tone.

- To extend the topic to all majors, not just finance and accounting students, faculty from disciplines such as strategy and operations could also incorporate variance analysis into their classes. For instance, if they use business simulations for their capstone courses, they could add a component that covers variance analysis. At Fairfield, we use a variety of competitive business simulations from the corporate world.

- Finally, professors can bring in guest speakers from almost any business functional area and ask them to explain, as part of their presentations, how variance analysis is relevant in their fields. As an example, we often have senior finance executives from Stanley Black & Decker, a company well known for its ability to grow and meet its commitments via variance analysis, present to our graduate program. We tap other companies from Fairfield County as well.

In the graduate classes we teach at Fairfield University, we have always tried to connect theory with practice. And we’ve long believed that creating a culture of meeting and exceeding commitments requires aligning interaction across functions in the workplace. With this article, we hope that, at the very least, we can start a larger discussion about the need for cross-disciplinary teaching of variance analysis.

For more about variance analysis materials, contact us at [email protected] or [email protected].


What is Variance Analysis: Types, Examples and Formula


Key Takeaways

- Variance analysis compares actual and expected cash flows and tracks your business’s financial metrics.

- Each variance analysis formula measures a specific financial metric, providing insight into a specific aspect of performance.

- Leveraging AI capabilities to analyze differences helps stakeholders achieve a better understanding of the finances and make well-informed decisions.

Introduction

In any business, a grasp of projected cash flows and available cash is crucial for daily financial operations. Enterprises use variance to measure the disparity between expected and actual cash flow.

Variance analysis involves assessing the reasons for variances and understanding their impact on financial performance. In today’s volatile global economy, variance is more important than ever for enterprises to track: it shows how accurate your cash forecasts are and whether you need to adjust your financial plans or take corrective action.

By the end of this blog, you will understand variance analysis, its importance, and how to calculate it, so you can use your cash effectively and make strategic, informed business decisions.

What is Variance Analysis?

Variance analysis measures the difference between the forecasted cash position and the actual cash position. A positive variance occurs when actual cash flow surpasses the forecasted amount, while a negative variance indicates the opposite. Variance analysis helps you understand where you went over or under budget and why.
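As a minimal sketch of this definition, the Python snippet below compares forecast and actual cash by category and reports each variance in dollars and as a share of the forecast amount. The category names, amounts, and the `cash_variances` helper are hypothetical. Recording outflows as negative numbers (an assumption of this sketch) preserves the sign convention described above, so a positive variance always means more cash than forecast.

```python
# Hypothetical cash categories for one period. Inflows are positive, outflows negative,
# so a positive variance always means more cash than forecast.
forecast = {"Customer receipts": 500_000, "Supplier payments": -320_000, "Payroll": -110_000}
actual = {"Customer receipts": 470_000, "Supplier payments": -300_000, "Payroll": -112_000}


def cash_variances(forecast: dict[str, float], actual: dict[str, float]) -> dict[str, tuple[float, float]]:
    """Return {category: (variance, variance as a fraction of the forecast amount)}."""
    result = {}
    for category, planned in forecast.items():
        variance = actual[category] - planned
        share = variance / abs(planned) if planned else float("nan")
        result[category] = (variance, share)
    return result


variances = cash_variances(forecast, actual)
for category, (variance, share) in variances.items():
    print(f"{category:<18}{variance:>+10,}  {share:+.1%}")

net_variance = sum(v for v, _ in variances.values())
print(f"{'Net cash flow':<18}{net_variance:>+10,}")
```

Here lower receipts and slightly higher payroll outweigh the smaller supplier payments, leaving net cash below forecast; the category breakdown is what points to the reasons behind the variance.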

This analysis provides insights into budget deviations and their underlying causes. It holds significance by enabling financial performance monitoring, trend identification, and informed decision-making for future planning. Through variance analysis, you can stay aligned with financial objectives and progressively enhance your profitability.

Types of Variance Analysis

Different types of variances can occur in the cash forecasting process due to reasons such as changes in market scenarios, customer behavior, and timing issues, among other factors. These variances can impact both sales revenue and expenses. By understanding the core impacts of these variances, companies can make necessary adjustments to their budgets, mitigate risks, and improve their overall financial performance.

Broadly, variances can be classified into two major categories:

- Materials, Labor, and Variable Overhead Variances

- Fixed Overhead Variances

Materials, labor, and variable overhead variances

These include price/rate variances and efficiency and quantity variances. Price/rate variances show the differences between industry-standard costs and actual pricing for materials, while efficiency variances and quantity variances refer to the differences between actual input values and the expected input values specified. This analysis plays a crucial role in managing procurement costs, making informed decisions, optimizing cost structures, and maintaining positive cash flow.

Fixed overhead variances

Fixed overhead variances include volume variances and budget variances. Volume variances measure the difference between actual revenue and budgeted revenue that is derived solely from changes in sales volume. Meanwhile, budget variances indicate the differences between actual and budgeted amounts. These variances help businesses understand the influence of sales volume fluctuations on financial performance, provide insights into the effectiveness of financial planning, and identify areas of overperformance or underperformance.

Budget variances

Budget variances can be divided into two subgroups: expense variances and revenue variances. Expense variances compare actual costs with budgeted costs, while revenue variances compare actual revenue with budgeted revenue. Positive revenue variances represent revenue that exceeds expectations, while negative revenue variances represent revenue that falls short of expectations.

Budget variance analysis is important for understanding the reasons behind deviations from budgeted amounts. It helps identify avenues for enhancing business processes, boosting revenue, and cutting costs. By examining revenue variances, you can uncover possibilities for long-term efficiency improvements and increased business value.

Let’s take a look at an example of variance in budgeting:

Let’s say that your enterprise sells gadgets, and you’ve projected that you’ll sell $1 million worth of gadgets in the next quarter. However, at the end of the quarter, you find that you’ve only sold $800,000 worth of gadgets. That’s a negative variance of $200,000, or 20% below your original plan. By analyzing this variance, you can figure out what went wrong and take steps to improve your sales performance in the next quarter. Here, variance analysis is the vital tool that lets you quickly identify such changes and adjust your strategies to manage financial performance and optimize cash forecasting.

Role of Variance Analysis

In periods of market instability, your business could face unforeseen fluctuations in revenue, costs, or other financial indicators. In such cases, one of the most crucial tools in your financial management system is variance analysis.

Variance analysis allows you to track the financial performance of your organization and implement proactive measures to decrease risks and enhance financial health. It enables businesses to compare their expected cash flow with their actual cash flow and to identify the root reasons for any discrepancies. Businesses can acquire an important understanding of their cash flow performance and decide on appropriate actions in response to fluctuating market conditions.

For instance, if a company realizes its cash inflows are lower than forecast, it can cut costs or alter its pricing strategy to stay profitable. Likewise, if its actual cash outflows exceed the forecast because of unforeseen costs, it can modify its financial plan or explore other funding options.

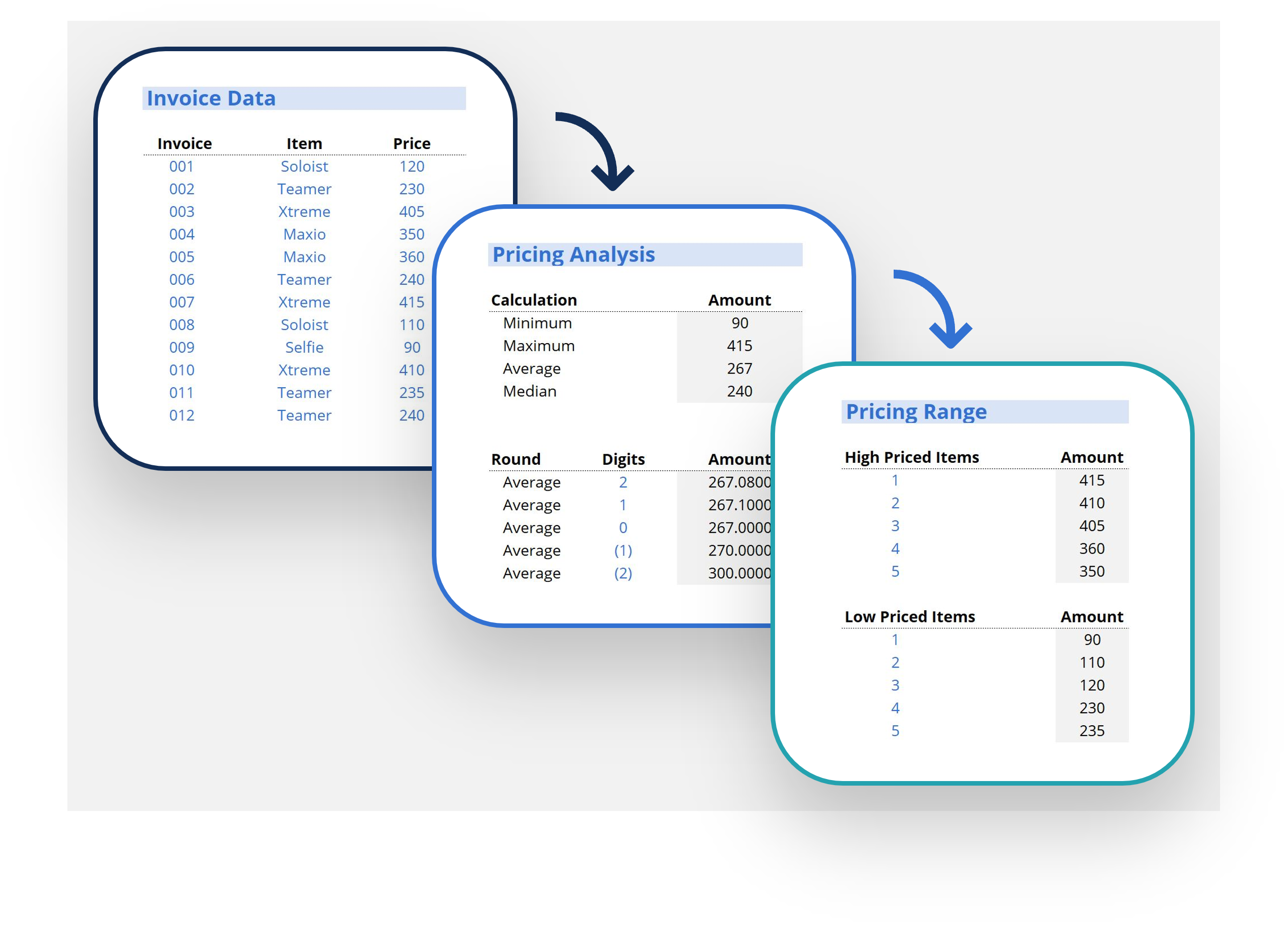

Variance Analysis Formula

The key components of variance are relatively straightforward: actuals vs. expected. Let’s look at the key variance analysis formulas, each of which focuses on a specific financial metric. These formulas reveal gaps between expected and actual results, providing insights into specific aspects of performance.

Cost variance formula

Cost variance measures the difference between actual costs and budgeted costs. The cost variance formula is:

Cost Variance = Actual Costs – Budgeted Costs

This formula helps identify cost control issues, inefficiencies, and opportunities for improvement.

Efficiency variance formula

Efficiency variance measures the difference between actual input values (e.g., labor hours, machine hours) and budgeted or standard input values. The efficiency variance formula is:

Efficiency Variance = (Actual Input – Budgeted Input) × Standard Rate

This formula helps organizations identify variations in productivity and pinpoint areas for improvement.

Volume variance formula

Volume variance, also known as sales volume variance, measures the impact of changes in sales volume on revenue compared to the budgeted volume. The volume variance formula is:

Volume Variance = (Actual Sales Volume – Budgeted Sales Volume) × Budgeted Selling Price

This formula helps organizations to understand the contribution of sales volume to revenue performance.

Budget variance formula

Budget variance compares actual revenue with budgeted revenue. The budget variance formula is:

Budget Variance = Actual Revenue – Budgeted Revenue

This formula aids in evaluating pricing strategies, market demand, and sales effectiveness.
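The four formulas translate directly into code. The Python functions below are a minimal sketch with hypothetical names; note the sign convention they inherit from the formulas: for costs and inputs a positive result is unfavorable, while for revenue and volume a positive result is favorable. The labor-hour inputs in the second usage line are invented for illustration.

```python
def cost_variance(actual_costs: float, budgeted_costs: float) -> float:
    """Cost Variance = Actual Costs - Budgeted Costs (negative = spent less than budget)."""
    return actual_costs - budgeted_costs


def efficiency_variance(actual_input: float, budgeted_input: float, standard_rate: float) -> float:
    """Efficiency Variance = (Actual Input - Budgeted Input) x Standard Rate."""
    return (actual_input - budgeted_input) * standard_rate


def volume_variance(actual_volume: float, budgeted_volume: float, budgeted_price: float) -> float:
    """Volume Variance = (Actual Sales Volume - Budgeted Sales Volume) x Budgeted Selling Price."""
    return (actual_volume - budgeted_volume) * budgeted_price


def budget_variance(actual_revenue: float, budgeted_revenue: float) -> float:
    """Budget Variance = Actual Revenue - Budgeted Revenue (negative = revenue shortfall)."""
    return actual_revenue - budgeted_revenue


# The gadget example from earlier in the article: $800,000 of sales against a $1,000,000 plan.
shortfall = budget_variance(800_000, 1_000_000)
print(f"{shortfall:,.0f} ({shortfall / 1_000_000:.0%} of plan)")  # -200,000 (-20% of plan)

# Hypothetical inputs: 1,050 labor hours used vs. 1,000 budgeted at a $40 standard rate.
print(efficiency_variance(1_050, 1_000, 40.0))  # 2000.0 (unfavorable: more hours than budgeted)
```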

Examples of Variance Analysis

For instance, consider a company that plans to create a new mobile app with a projected cost of $50,000. The expected timeline for completion is 4 months, with a budgeted labor cost of $10,000 per month. The target is to release the application with 10 key features. The following examples demonstrate different types of variances under this scenario:

Cost variance:

During the development process, the company implements cost-saving measures and efficient resource allocation, resulting in lower actual costs. The actual cost of the project at completion is $45,000. The cost variance can be calculated as follows:

Cost Variance = $45,000 – $50,000 = -$5,000

Here, the negative cost variance of -$5,000 indicates that the company has achieved cost savings of $5,000 compared to the budgeted cost for the project.

Efficiency variance:

The project is efficiently managed, and the team completes the development in 3.5 months instead of the budgeted 4 months. Assuming a budgeted labor cost of $10,000 per month, the efficiency variance can be calculated as follows:

Efficiency Variance = (3.5 months – 4 months) × $10,000 = -$5,000

The negative efficiency variance of -$5,000 indicates that the project was completed ahead of schedule, resulting in labor cost savings of $5,000.

Volume variance:

The final version of the mobile application is released with 12 key features instead of the budgeted 10 features. Assuming a budgeted revenue of $2,000 per feature, the volume variance can be calculated as follows:

Volume Variance = (12 features – 10 features) × $2,000 per feature = $4,000

The positive volume variance of $4,000 indicates that the company delivered additional features, resulting in increased revenue of $4,000 compared to the budgeted amount.

Budget variance:

Applying the same actual-minus-budget comparison to an expense line: the company spent $8,000 on marketing and promotional activities for the mobile application launch, while the budgeted amount was $10,000. The budget variance can be calculated as follows:

Budget Variance = $8,000 – $10,000 = -$2,000

The negative budget variance of -$2,000 indicates that the company spent $2,000 less than the budgeted amount for marketing and promotional activities.

In these scenarios, the company achieved cost savings, enhanced efficiency, delivered additional features, and spent less than the budgeted amount on marketing expenses. These variances provide insights into cost management, efficiency, revenue generation, and budget adherence within the given software development project scenario.
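A short Python sketch can reproduce the four results above from the scenario’s figures. The `SCENARIO` table and the `variance` helper are illustrative names, not part of any tool; the favorable/unfavorable labels follow the reading given in the text, where lower cost, time, and spend are good and more features are good.

```python
# (actual, budget, multiplier, higher_is_better) for each variance in the mobile app scenario.
SCENARIO = {
    "Cost": (45_000, 50_000, 1, False),     # total project cost in $
    "Efficiency": (3.5, 4, 10_000, False),  # months worked x $10,000 budgeted labor per month
    "Volume": (12, 10, 2_000, True),        # features x $2,000 budgeted revenue per feature
    "Budget": (8_000, 10_000, 1, False),    # marketing spend in $
}


def variance(actual: float, budget: float, multiplier: float) -> float:
    """Actual minus budget, scaled by the standard rate or price (1 for dollar amounts)."""
    return (actual - budget) * multiplier


results = {}
for name, (actual, budget, multiplier, higher_is_better) in SCENARIO.items():
    value = variance(actual, budget, multiplier)
    favorable = value > 0 if higher_is_better else value < 0
    results[name] = value
    print(f"{name:<11}{value:>+10,.0f}  {'favorable' if favorable else 'unfavorable'}")
```

Keeping the "is higher better?" flag next to each line avoids the common mistake of reading every negative variance as bad news.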

Benefits of Conducting Variance Analysis

Let’s take a look at the top 4 benefits enterprises can reap by conducting variance analysis for cash forecasting:

Identify discrepancies

Variance analysis helps identify discrepancies between the actual cash inflows and outflows and the forecasted amounts. By comparing the forecasted cash flow with the actual cash flow, it is easier to identify any discrepancies, enabling the stakeholders to take corrective measures.

Refine cash forecasting techniques

Conducting variance analysis allows for a review of past forecasts to identify any errors or biases that may have affected accuracy. This information can be used to refine forecasting techniques, improve future forecasts, adjust existing forecast templates, or build new ones.

Improve financial decision-making

Understanding the reasons for variances can provide valuable insights that can help improve financial decision-making, which is critical in a volatile market. For example, if a variance is caused by unexpected expenses, management may decide to reduce expenses or explore cost-saving measures.

Better cash management

By analyzing variances, companies can identify areas where cash management can be improved. This can include better management of accounts receivable or accounts payable, more effective inventory management, or renegotiating payment terms with the suppliers.

Role of AI in Variance Analysis for Cash Forecasting

Amid turbulent market conditions, as companies prepare for 2024 and beyond, finance chiefs are recommending various enhancements to improve decision-making. According to the Deloitte CFO Signals Survey, the most commonly mentioned improvements are the adoption of digital technologies, AI, and automation, and the enhancement of forecasting, scenario planning, and consistency in measuring key performance indicators.

This shows the significance of adopting advanced technologies such as AI for companies preparing for uncertain markets. The challenge with traditional variance analysis is that treasurers who rely on manual methods and spreadsheets to handle large volumes of data struggle to produce low-variance cash flow forecasts. Manual approaches also tend to leave variances high and are time-consuming, labor-intensive, and expensive, which delays decision-making.

Here’s how AI addresses this challenge and takes variance analysis to the next level:

- AI-based cash forecasting software supports variance analysis with additional steps aimed at raising cash forecast accuracy to 90-95%.

- It enables organizations to continuously improve the forecast by understanding the key drivers of variance.

- It compares cash forecasts to actual results to check for variances, aligning the forecast with other aspects such as monthly, quarterly, and yearly forecasts, thus, ensuring that the forecast is accurate across various scenarios.

- AI also analyzes the accuracy of cash forecasts through a line item analysis across multiple horizons and makes tweaks to the algorithm through an AI-assisted review process.

- Finally, AI fine-tunes the forecast model and enhances the data as needed to achieve the desired level of forecast accuracy.

Benefits of Leveraging AI in Variance Analysis

Here are some of the key benefits you can achieve by conducting variance analysis with AI in cash forecasting:

Automated reporting

AI can streamline the process of reporting cash flow discrepancies by delivering consistent reports that highlight developments and patterns. Automating reporting saves time and resources that would otherwise be spent on manual work, keeps reports consistent, and reduces the chance of human error. By receiving frequent updates on cash flow discrepancies as they occur, you can monitor your business’s cash flow effectively and pinpoint opportunities to improve your financial results.

Faster, data-driven decision-making

AI can assist in making quicker, better-informed decisions about managing cash flow by providing in-depth insights on cash forecasts in real time. This can assist companies in promptly addressing fluctuations in cash flow and implementing necessary measures. This is especially crucial in periods of market volatility when cash flow trends can quickly fluctuate and unforeseen circumstances may arise.

Real-time cash analysis & better liquidity management

With AI at its core, cash flow forecasting software can learn from industry-wide seasonal fluctuations to improve forecasting accuracy. AI-powered cash forecasting software that enables variance analysis can also create snapshots of different forecasts and variances and compare them for detailed, category-level analysis. Such comprehensive visibility helps you respond quickly to changes in cash flow, take corrective action as needed, and manage your enterprise’s liquidity better.

Improved cash forecasting accuracy with real-time cash analysis

AI streamlines your examination of cash flow by delving deeply into and analyzing a large volume of data from various sources, such as past cash flow information, market trends, and economic indicators, in real time. Therefore, it allows for an immediate understanding of discrepancies in cash flow. This can offer a more in-depth assessment of cash flow discrepancies, enabling the recognition of trends and patterns that may not be visible through manual review.

How HighRadius Can Help Automate Variance Analysis in Cash Forecasting

In a rapidly evolving business landscape, market uncertainties and disruptions can have a significant impact on an enterprise’s financial stability. That’s why having a robust cash forecasting system with AI at its core is essential for businesses to conduct automated variance analysis. HighRadius’ cash forecasting software enables more advanced and sophisticated variance analysis that helps you achieve up to 95% global cash flow forecast accuracy.

By leveraging its AI capabilities in data analysis, pattern recognition, real-time integration, and predictive modeling, it empowers finance teams to gain deeper insights, improve accuracy, and make more informed decisions to manage cash flow effectively. Furthermore, our solution helps continuously improve the forecast by understanding the key drivers of variance. The AI algorithm learns from historical data and feedback, continuously improving its accuracy and effectiveness over time. This iterative learning process enhances the quality of variance analysis results.

Our AI-based cash forecasting solution supports drilling down into variances across cash flow categories, geographies, and entities to perform root cause analysis, and helps achieve up to 98% automated cash flow category tagging.

1) What is the difference between standard costing and variance analysis?

Standard costing sets an estimated (standard) cost for metrics such as input quantities, materials, labor, and overhead based on industry trends and historical data. Variance analysis focuses on analyzing and interpreting the differences (variances) between actual costs and standard costs.

2) What are the three main sources of variance in an analysis?

In variance analysis, the three main sources of variance are material variances (differences in material usage or cost), labor variances (variations in labor productivity or wage rates), and overhead variances (deviations in overhead costs).

3) What is P&L variance analysis?

P&L (profit & loss) variance analysis is the process of comparing actual financial results to expected results in order to identify differences or variances. This type of variance analysis is typically performed on a company’s income statement, which shows its revenues, expenses, and net profit or loss over a specific period of time.

4) Why is the analysis of variance important?

Variance analysis is important because it tells you about the financial health of your business. With proper variance analysis, you can measure your business’s financial performance, track over- and under-performing financial metrics, and identify areas for improvement.


HighRadius Corporation 2107 CityWest Blvd, Suite 1100, Houston, TX 77042


principlesofaccounting.com

Variance Analysis


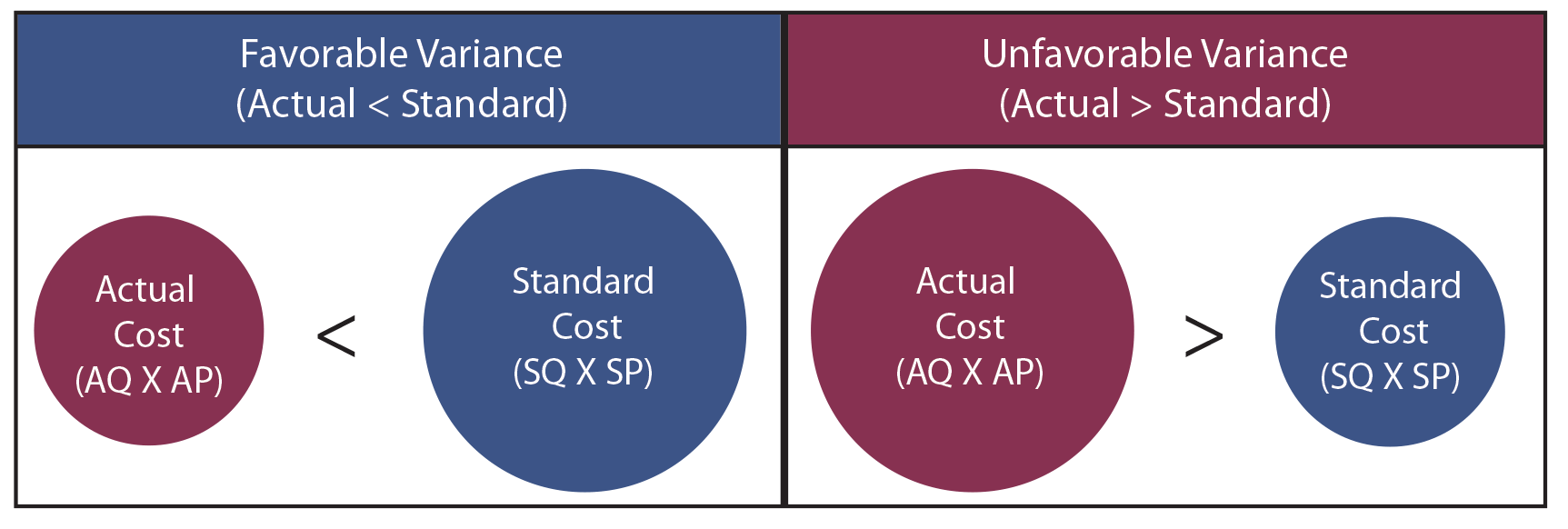

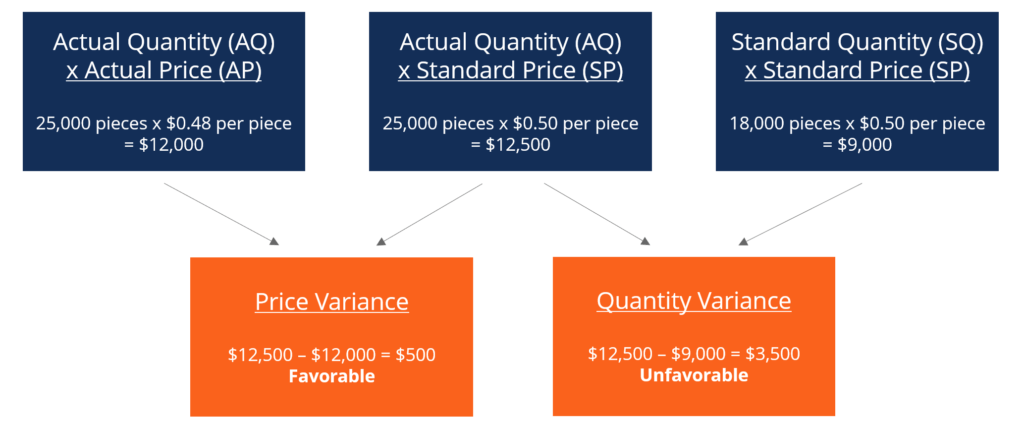

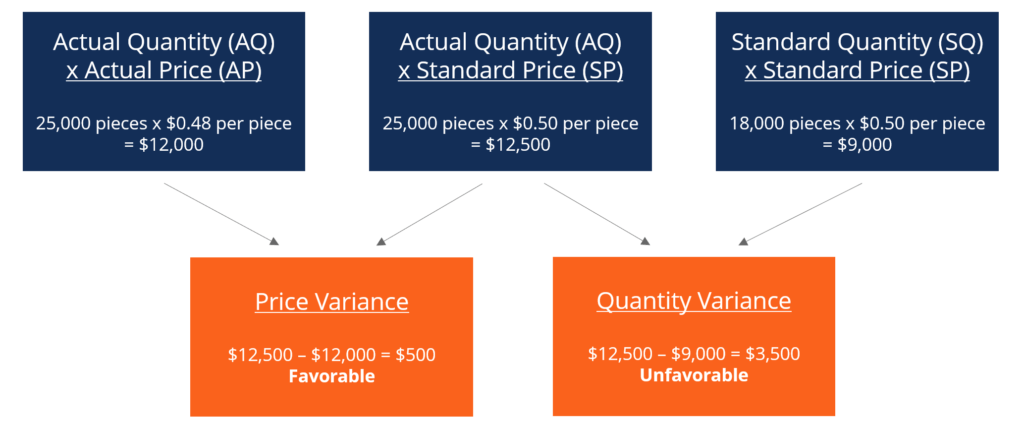

Standard costs provide information that is useful in performance evaluation. Standard costs are compared to actual costs, and mathematical deviations between the two are termed variances. Favorable variances result when actual costs are less than standard costs, and vice versa. The following illustration is intended to demonstrate the very basic relationship between actual cost and standard cost. AQ means the “actual quantity” of input used to produce the output. AP means the “actual price” of the input used to produce the output. SQ and SP refer to the “standard” quantity and price that were anticipated. Variance analysis can be conducted for material, labor, and overhead.

Direct Material Variances

- Materials Price Variance : A variance that reveals the difference between the standard price for materials purchased and the amount actually paid for those materials [(standard price – actual price) X actual quantity].

- Materials Quantity Variance : A variance that compares the standard quantity of materials that should have been used to the actual quantity of materials used. The quantity variation is measured at the standard price per unit [(standard quantity – actual quantity) X standard price].

Note that there are several ways to perform the underlying variance calculations. One can compute the values for the red, blue, and green balls and note the differences. Or, one can perform the algebraic calculations for the price and quantity variances. Note that unfavorable variances (negative) offset favorable (positive) variances. A total variance could be zero, resulting from favorable pricing that was wiped out by waste. A good manager would want to take corrective action but would be unaware of the problem if relying only on an overall budget-versus-actual comparison.
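The algebraic route can be sketched in Python. The function below is a hypothetical helper that applies the two formulas above with the text’s sign convention (favorable positive, unfavorable negative); the example inputs are invented to show how a favorable price variance can be wiped out by waste, leaving a total variance of zero.

```python
def material_variances(sq: float, sp: float, aq: float, ap: float) -> dict[str, float]:
    """Direct material variances; positive results are favorable, negative unfavorable."""
    price = (sp - ap) * aq       # (standard price - actual price) x actual quantity
    quantity = (sq - aq) * sp    # (standard quantity - actual quantity) x standard price
    total = sq * sp - aq * ap    # standard cost allowed minus actual cost
    return {"price": price, "quantity": quantity, "total": total}


# Hypothetical case: material bought $2 below standard, but 250 extra units wasted.
v = material_variances(sq=1_000, sp=10.0, aq=1_250, ap=8.0)
print(v)  # {'price': 2500.0, 'quantity': -2500.0, 'total': 0.0}

# The two parts always add up to the total: (SP - AP)AQ + (SQ - AQ)SP = SQ*SP - AQ*AP.
assert v["price"] + v["quantity"] == v["total"]
```

An overall budget-versus-actual check would show nothing here, which is exactly the blind spot the price and quantity split is meant to expose.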

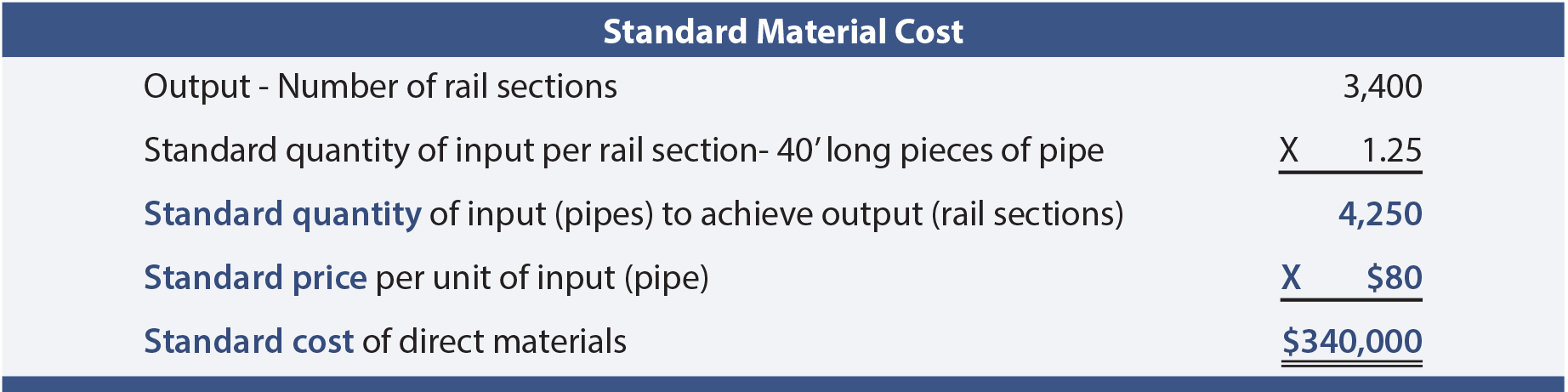

Blue Rail measures its output in “sections.” Each section consists of one post and four rails. The sections are 10’ in length and the posts average 4’ each. Some overage and waste is expected due to the need for an extra post at the end of a set of sections, faulty welds, and bad pipe cuts. The company has adopted an achievable standard of 1.25 pieces of raw pipe (50’) per section of rail. During August, Blue Rail produced 3,400 sections of railing. It was anticipated that pipe would cost $80 per 40’ piece. Standard material cost for this level of output is computed as follows:

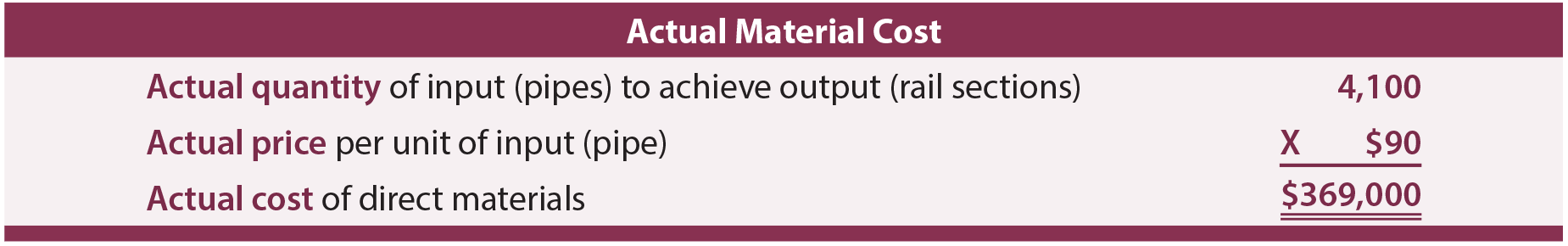

The production manager was disappointed to receive the monthly performance report revealing actual material cost of $369,000. A closer examination of the actual cost of materials follows.

The total direct material variance was unfavorable $29,000 ($340,000 vs. $369,000). However, this unfavorable outcome was driven by higher prices for raw material, not waste, as follows:

MATERIALS PRICE VARIANCE

$$(SP - AP) \times AQ = (\$80 - \$90) \times 4{,}100 = -\$41{,}000 \ \text{(unfavorable)}$$

Materials usage was favorable since less material was used (4,100 pieces of pipe) than was standard (4,250 pieces of pipe). This resulted in a favorable materials quantity variance:

MATERIALS QUANTITY VARIANCE

$$(SQ - AQ) \times SP = (4{,}250 - 4{,}100) \times \$80 = \$12{,}000 \ \text{(favorable)}$$
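The Blue Rail material figures can be checked with a short script. It uses only numbers given in the example (3,400 sections, 1.25 pieces per section, $80 standard and $90 actual per piece, 4,100 pieces used) and, like the text, assumes every piece purchased was put into production. The final assertion confirms that the price and quantity variances reconcile to the $29,000 unfavorable total.

```python
# Blue Rail, August: inputs taken from the example above.
SECTIONS = 3_400
STD_PIPE_PER_SECTION = 1.25   # pieces of 40' pipe per section
SP = 80.0                     # standard price per piece
AQ = 4_100                    # pieces actually used (all purchases assumed used)
AP = 90.0                     # actual price per piece

sq = SECTIONS * STD_PIPE_PER_SECTION   # 4,250 standard pieces allowed
standard_cost = sq * SP                # $340,000
actual_cost = AQ * AP                  # $369,000

price_variance = (SP - AP) * AQ        # unfavorable: paid $10 more per piece
quantity_variance = (sq - AQ) * SP     # favorable: 150 fewer pieces used
total_variance = price_variance + quantity_variance

# The two components must reconcile to standard cost minus actual cost.
assert total_variance == standard_cost - actual_cost
print(f"price {price_variance:,.0f}  quantity {quantity_variance:,.0f}  total {total_variance:,.0f}")
```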

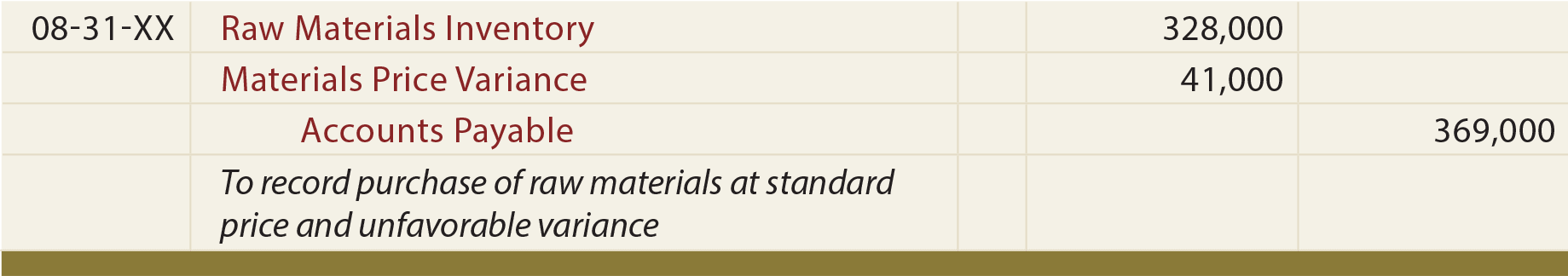

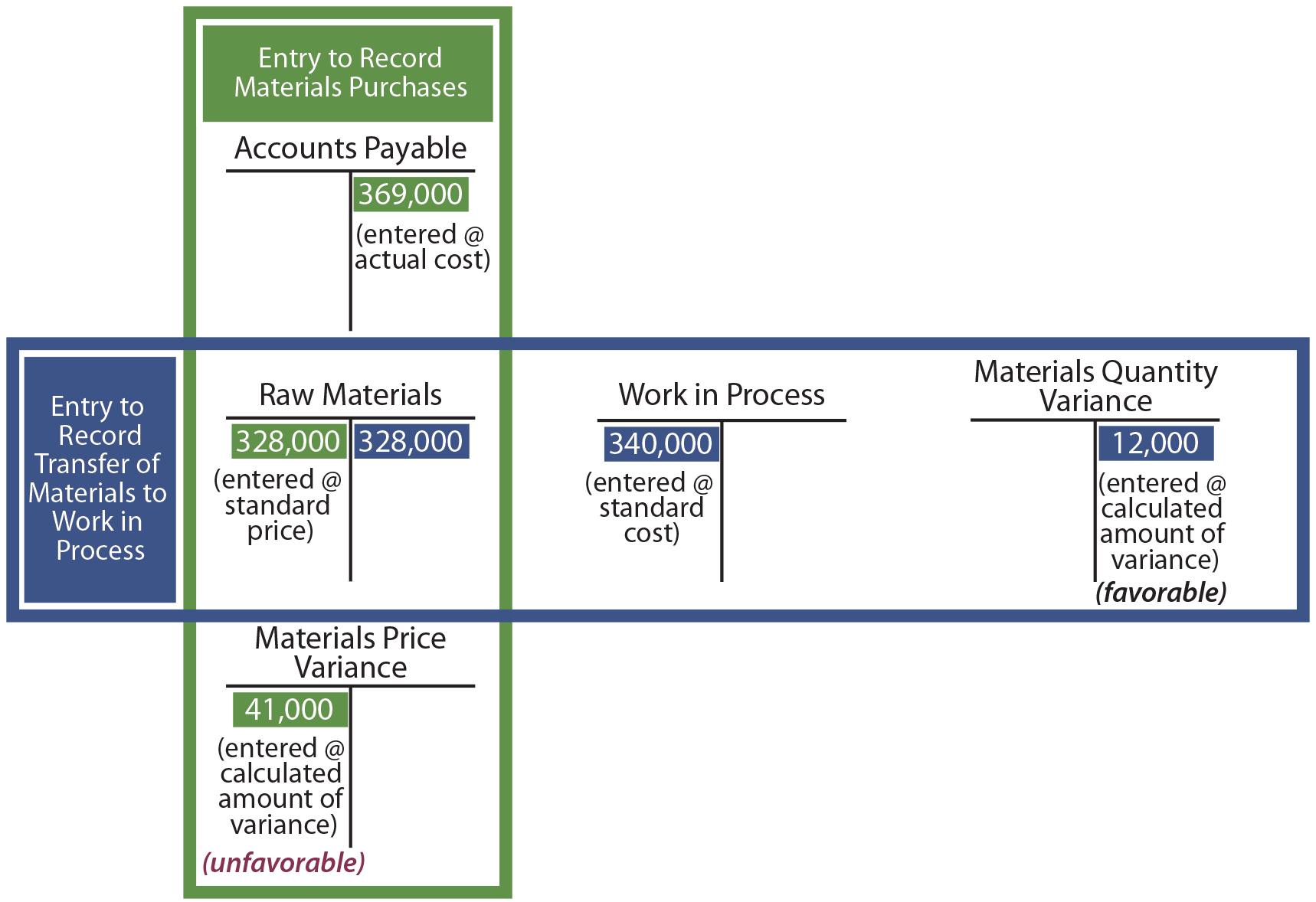

A company may desire to adapt its general ledger accounting system to capture and report variances. Do not lose sight of the very simple fact that the amount of money to account for is still the money that was actually spent ($369,000). To the extent the price paid for materials differs from standard, the variance is debited (unfavorable) or credited (favorable) to a Materials Price Variance account. This results in the Raw Materials Inventory account carrying only the standard price of materials, no matter the price paid:

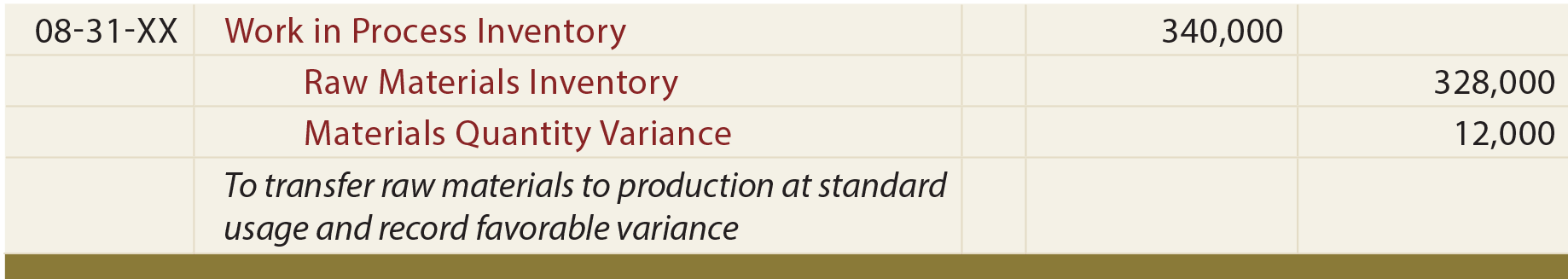

Work in Process is debited for the standard cost of the standard quantity that should be used for the productive output achieved, no matter how much is used. Any difference between standard and actual raw material usage is debited (unfavorable) or credited (favorable) to the Materials Quantity Variance account:

The price and quantity variances are generally reported by decreasing income (if unfavorable debits) or increasing income (if favorable credits), although other outcomes are possible. Examine the following diagram and notice the $369,000 of cost is ultimately attributed to work in process ($340,000 debit), materials price variance ($41,000 debit), and materials quantity variance ($12,000 credit). This illustration presumes that all raw materials purchased are put into production. If this were not the case, the price variance would be based on the quantity purchased, while the quantity variance would be based on the quantity actually used compared with the standard quantity allowed for the output achieved.
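The two journal entries described above can be modeled as lists of debits and credits to confirm that each entry balances and that the $369,000 ends up split among Work in Process and the two variance accounts. This is a sketch only: the account names follow the text, except that crediting Accounts Payable for the purchase is an assumption (the text does not name the offsetting account), and the amounts assume all 4,100 pieces purchased were used.

```python
from collections import defaultdict

# (account, debit, credit). "Accounts Payable" is an assumed offsetting account.
purchase = [
    ("Raw Materials Inventory", 4_100 * 80, 0),    # actual quantity at standard price
    ("Materials Price Variance", 41_000, 0),       # unfavorable variance -> debit
    ("Accounts Payable", 0, 4_100 * 90),           # amount actually owed
]
issue_to_production = [
    ("Work in Process", 4_250 * 80, 0),            # standard quantity at standard price
    ("Materials Quantity Variance", 0, 12_000),    # favorable variance -> credit
    ("Raw Materials Inventory", 0, 4_100 * 80),    # actual quantity at standard price
]

ledger = defaultdict(int)  # net debit balance per account
for entry in (purchase, issue_to_production):
    debits = sum(debit for _, debit, _ in entry)
    credits = sum(credit for _, _, credit in entry)
    assert debits == credits, "every journal entry must balance"
    for account, debit, credit in entry:
        ledger[account] += debit - credit

# The $369,000 actually spent is attributed to WIP and the two variance accounts.
attributed = (ledger["Work in Process"] + ledger["Materials Price Variance"]
              + ledger["Materials Quantity Variance"])
print(attributed, ledger["Raw Materials Inventory"])
```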

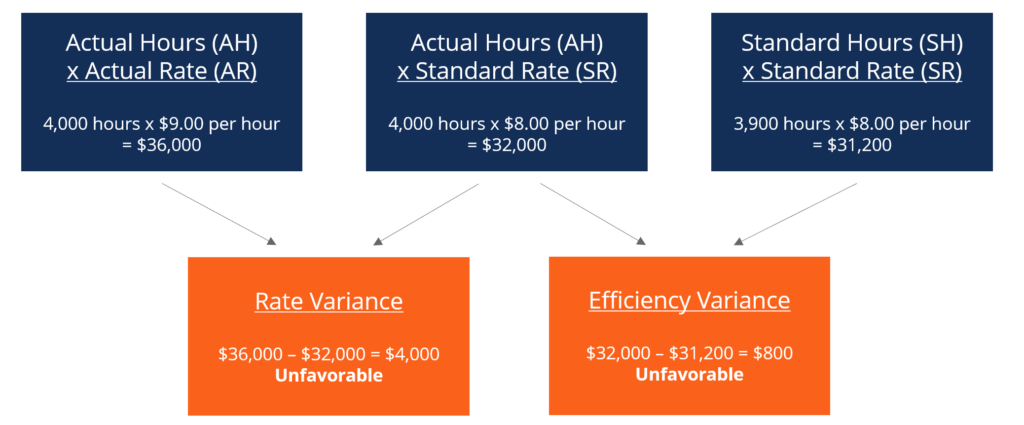

Direct Labor Variances

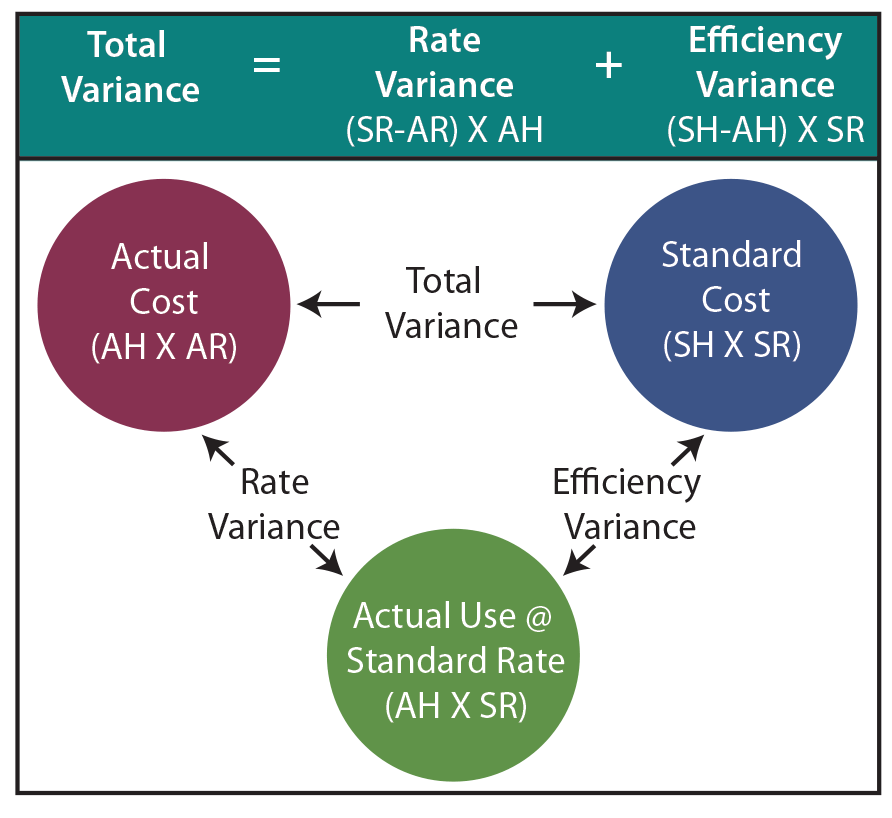

In this illustration, AH is the actual hours worked, AR is the actual labor rate per hour, SR is the standard labor rate per hour, and SH is the standard hours for the output achieved.

The Total Direct Labor Variance consists of:

- Labor Rate Variance: A variance that reveals the difference between the standard rate and actual rate for the actual labor hours worked [(standard rate – actual rate) X actual hours].

- Labor Efficiency Variance: A variance that compares the standard hours of direct labor that should have been used to the actual hours worked. The efficiency variance is measured at the standard rate per hour [(standard hours – actual hours) X standard rate].

As with material variances, there are several ways to perform the intrinsic labor variance calculations. One can compute the values for the red, blue, and green balls. Or, one can perform the noted algebraic calculations for the rate and efficiency variances.

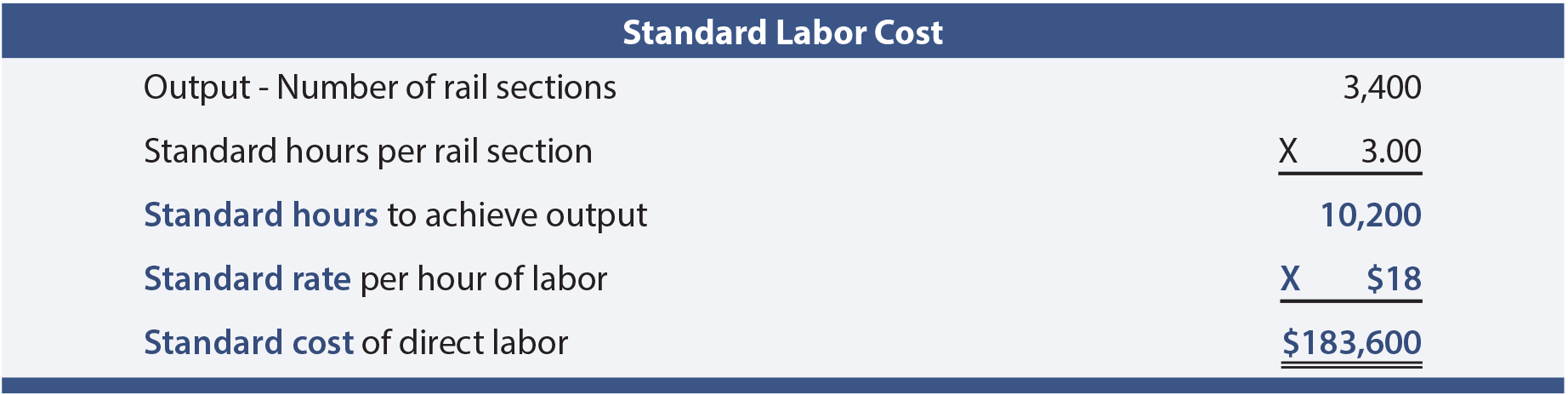

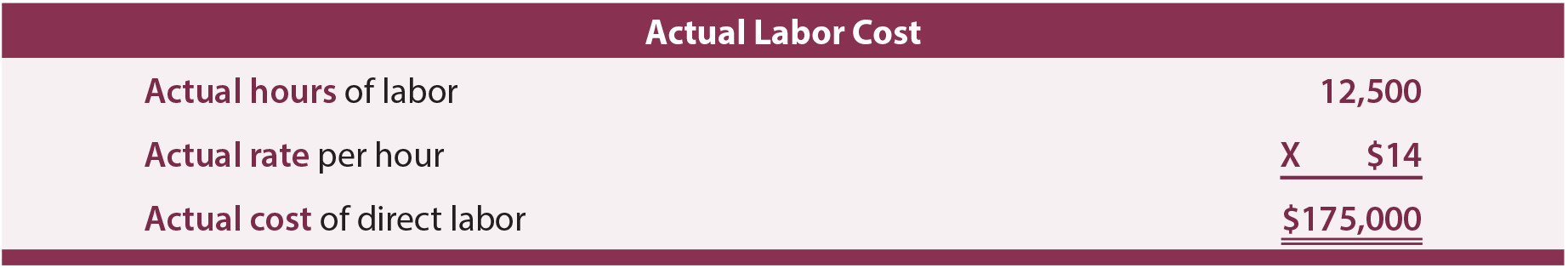

Recall that Blue Rail Manufacturing had to custom cut, weld, sand, and paint each section of railing. The company has adopted a standard of 3 labor hours for each section of rail. Skilled labor is anticipated to cost $18 per hour. As noted earlier, Blue Rail produced 3,400 sections of railing during August. Therefore, the standard labor cost for August is calculated as 3,400 sections × 3 hours × $18 per hour = $183,600.

The monthly performance report revealed actual labor cost of $175,000. A closer examination of the actual cost of labor revealed the following:

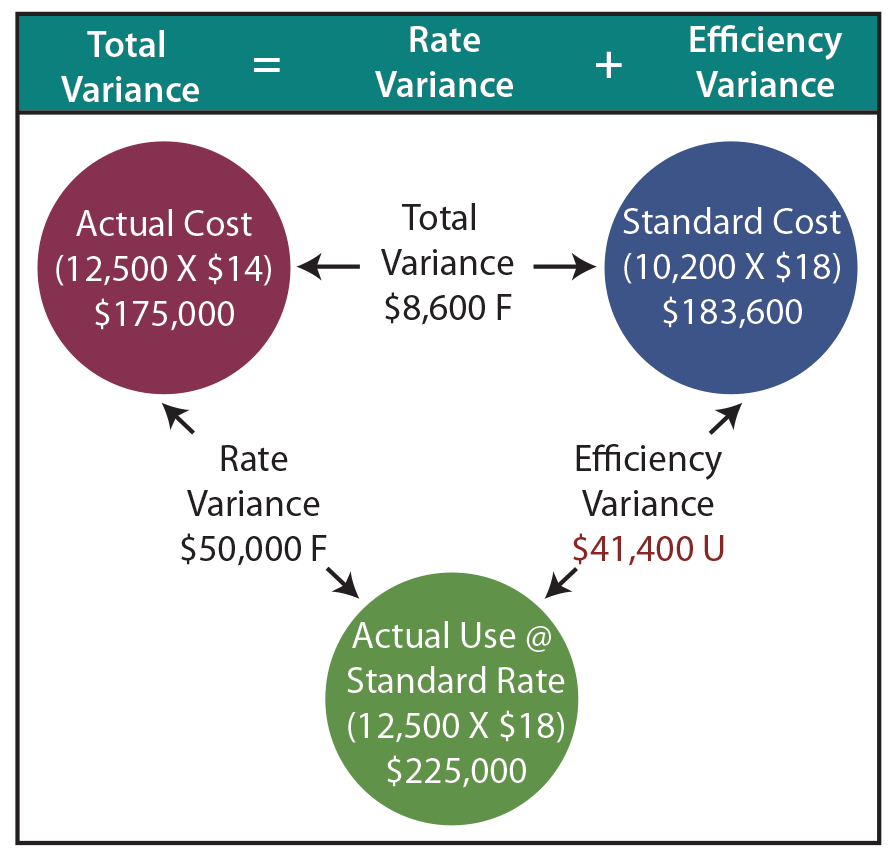

The total direct labor variance was favorable $8,600 ($183,600 vs. $175,000). However, detailed variance analysis is necessary to fully assess the nature of the labor variance. As will be shown, Blue Rail experienced a very favorable labor rate variance, but this was offset by significant unfavorable labor efficiency.

LABOR RATE VARIANCE

$$(SR - AR) \times AH = (\$18 - \$14) \times 12{,}500 = \$50{,}000 \ \text{(favorable)}$$

The hourly wage rate was lower because of a shortage of skilled welders. Less-experienced welders were paid less per hour, but they also worked slower. This inefficiency shows up in the unfavorable labor efficiency variance:

LABOR EFFICIENCY VARIANCE

$$(SH - AH) \times SR = (10{,}200 - 12{,}500) \times \$18 = -\$41{,}400 \ \text{(unfavorable)}$$
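The labor figures can be reproduced the same way. The script below uses only the example's inputs (3,400 sections, 3 standard hours per section, $18 standard rate, 12,500 actual hours, $175,000 actual cost); the $14 actual rate is derived as $175,000 ÷ 12,500 hours.

```python
SECTIONS = 3_400
STD_HOURS_PER_SECTION = 3
SR = 18.0                  # standard rate per hour
AH = 12_500                # actual hours worked
ACTUAL_LABOR = 175_000.0
AR = ACTUAL_LABOR / AH     # actual rate: $14 per hour

sh = SECTIONS * STD_HOURS_PER_SECTION   # 10,200 standard hours allowed
standard_cost = sh * SR                 # $183,600

rate_variance = (SR - AR) * AH          # favorable: cheaper, less experienced welders
efficiency_variance = (sh - AH) * SR    # unfavorable: 2,300 extra hours
total = rate_variance + efficiency_variance

assert total == standard_cost - ACTUAL_LABOR  # net favorable
print(f"rate {rate_variance:,.0f}  efficiency {efficiency_variance:,.0f}  total {total:,.0f}")
```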

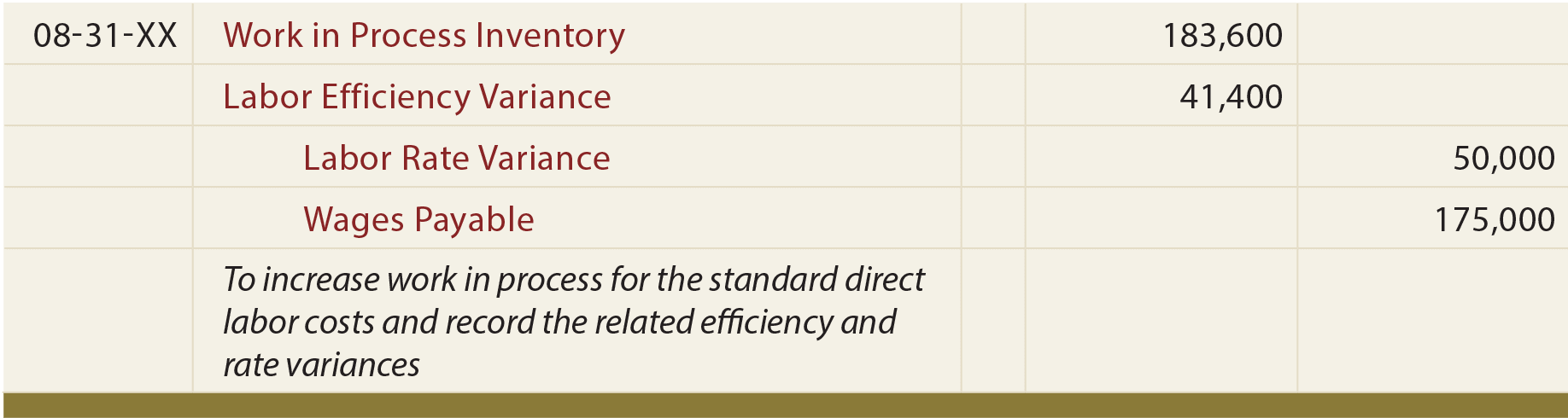

If Blue Rail desires to capture labor variances in its general ledger accounting system, the entry might look something like this:

Once again, debits reflect unfavorable variances, and vice versa. Such variance amounts are generally reported as decreases (unfavorable) or increases (favorable) in income, with the standard cost going to the Work in Process Inventory account.

The following diagram shows the impact within the general ledger accounts:

Factory Overhead Variances

Variance analysis should also be performed to evaluate spending and utilization for factory overhead. Overhead variances are a bit more challenging to calculate and evaluate. As a result, the techniques for factory overhead evaluation vary considerably from company to company. To begin, recall that overhead has both variable and fixed components (unlike direct labor and direct material that are exclusively variable in nature). The variable components may consist of items like indirect material, indirect labor, and factory supplies. Fixed factory overhead might include rent, depreciation, insurance, maintenance, and so forth. Because variable and fixed costs behave in a completely different manner, it stands to reason that proper evaluation of variances between expected and actual overhead costs must take into account the intrinsic cost behavior. As a result, variance analysis for overhead is split between variances related to variable overhead and variances related to fixed overhead.

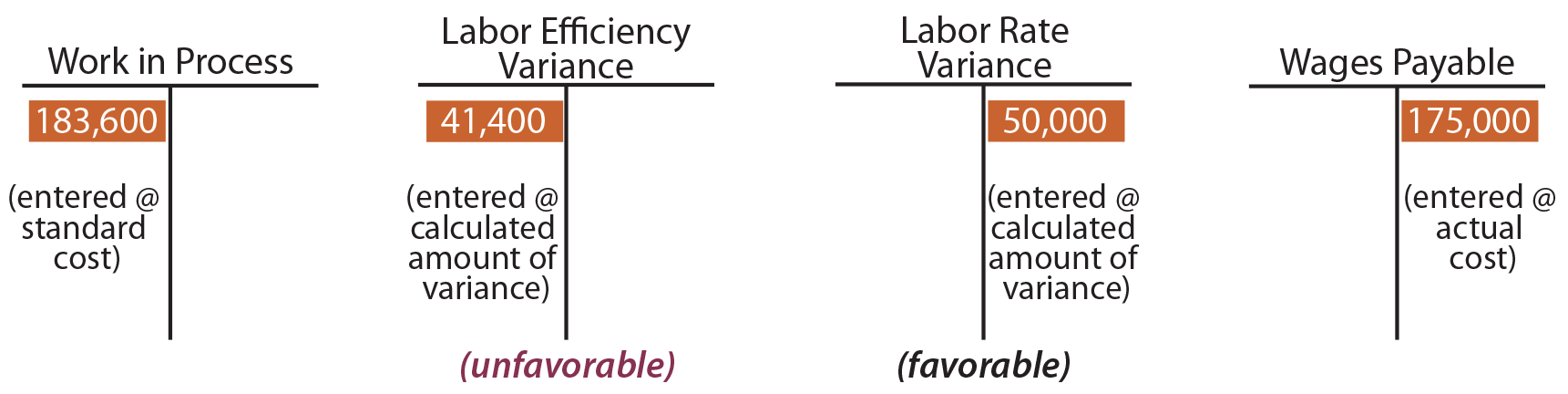

Variable Factory Overhead Variances

The cost behavior for variable factory overhead is not unlike direct material and direct labor, and the variance analysis is quite similar. The goal will be to account for the total “actual” variable overhead by applying: (1) the “standard” amount to work in process and (2) the “difference” to appropriate variance accounts.

Review the following graphic and notice that more is spent on actual variable factory overhead than is applied based on standard rates. This scenario produces unfavorable variances (also known as “underapplied overhead” since not all that is spent is applied to production). As monies are spent on overhead (wages, utilization of supplies, etc.), the cost (xx) is transferred to the Factory Overhead account. As production occurs, overhead is applied/transferred to Work in Process (yyy). When more is spent than applied, the balance (zz) is transferred to variance accounts representing the unfavorable outcome.

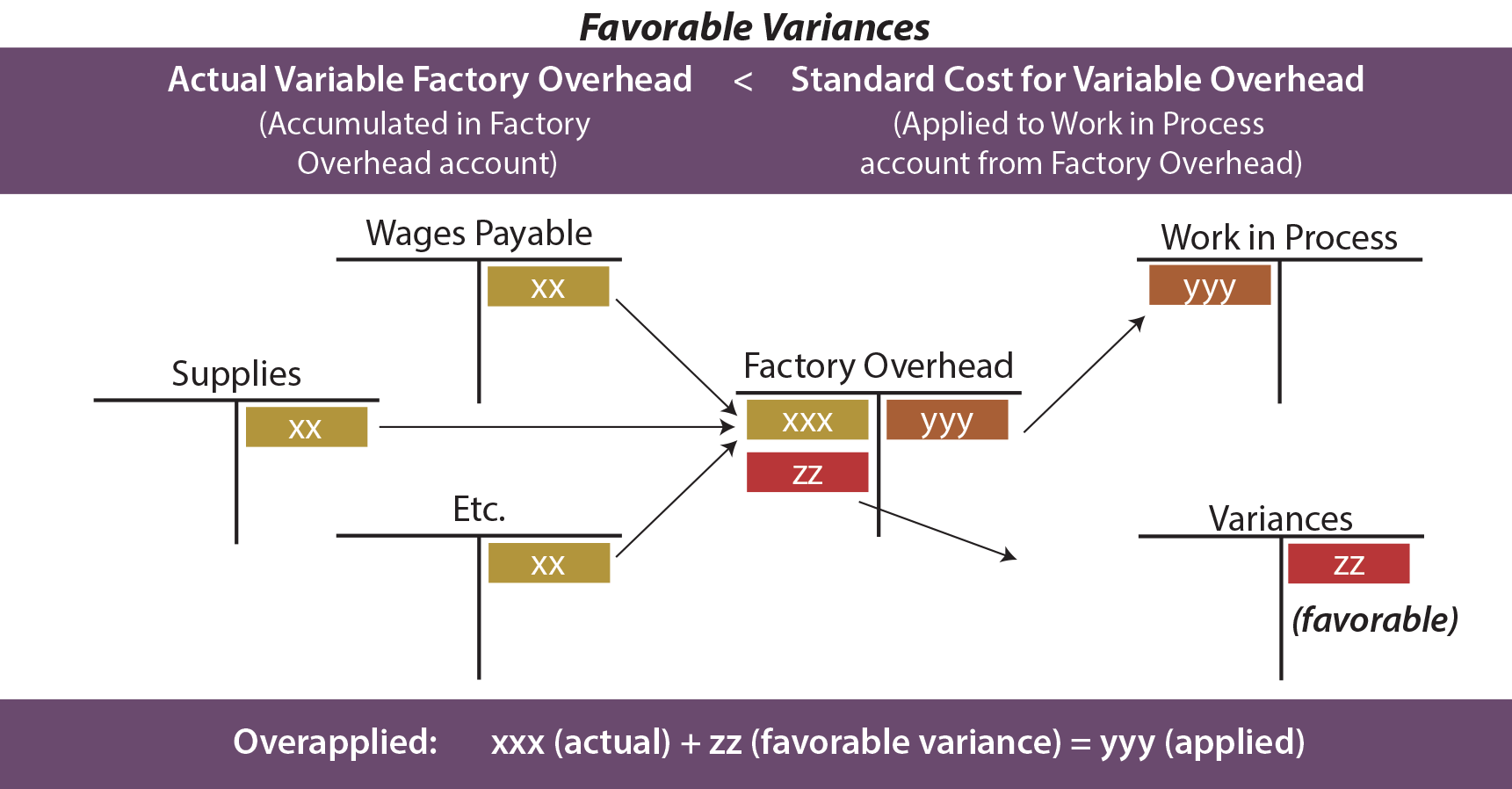

The next illustration is the opposite scenario. When less is spent than applied, the balance (zz) represents the favorable overall variances. Favorable overhead variances are also known as “overapplied overhead” since more cost is applied to production than was actually incurred.

A good manager will want to explore the nature of variances relating to variable overhead. It is not sufficient to simply conclude that more or less was spent than intended. As with direct material and direct labor, it is possible that the prices paid for underlying components deviated from expectations (a variable overhead spending variance). On the other hand, it is possible that the company’s productive efficiency drove the variances (a variable overhead efficiency variance). Thus, the Total Variable Overhead Variance can be divided into a Variable Overhead Spending Variance and a Variable Overhead Efficiency Variance.

Before looking closer at these variances, it is first necessary to recall that overhead is usually applied based on a predetermined rate, such as $X per direct labor hour. This means that the amount debited to work in process is driven by the overhead application approach. This will become clearer with the following illustration.

Blue Rail’s variable factory overhead for August consisted primarily of indirect materials (welding rods, grinding disks, paint, etc.), indirect labor (inspector time, shop foreman, etc.), and other items. Extensive budgeting and analysis had been performed, and it was estimated that variable factory overhead should be applied at $10 per direct labor hour. During August, $105,000 was actually spent on variable factory overhead items. The standard cost for August’s production was 10,200 standard hours (3,400 sections × 3 hours) × $10 per hour = $102,000.

A closer look, however, reveals that overhead spending was quite favorable, while overhead efficiency was not so good. Remember that 12,500 hours were actually worked.

Since variable overhead is consumed at the presumed rate of $10 per hour, $125,000 of variable overhead (actual hours X standard rate) would be expected for the 12,500 hours actually worked. Comparing this figure ($125,000) to the standard cost for the output achieved ($102,000) reveals an unfavorable variable overhead efficiency variance of $23,000. However, this inefficiency was significantly offset by the $20,000 favorable variable overhead spending variance ($105,000 actually spent vs. $125,000 expected).
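A short sketch of the same split, using the example's figures ($10 per direct labor hour, 10,200 standard hours, 12,500 actual hours, $105,000 actual spending). The under/overapplied label follows the earlier description: spending more than is applied leaves overhead underapplied.

```python
SH = 10_200            # standard hours allowed for 3,400 sections
AH = 12_500            # actual direct labor hours
VOH_RATE = 10.0        # standard variable overhead per direct labor hour
ACTUAL_VOH = 105_000.0

applied = SH * VOH_RATE                    # standard cost charged to WIP
expected_at_actual_hours = AH * VOH_RATE   # overhead expected for hours actually worked

spending_variance = expected_at_actual_hours - ACTUAL_VOH   # positive = favorable
efficiency_variance = (SH - AH) * VOH_RATE                  # negative = unfavorable
total = spending_variance + efficiency_variance

status = "underapplied (unfavorable)" if ACTUAL_VOH > applied else "overapplied (favorable)"
assert total == applied - ACTUAL_VOH
print(total, status)
```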

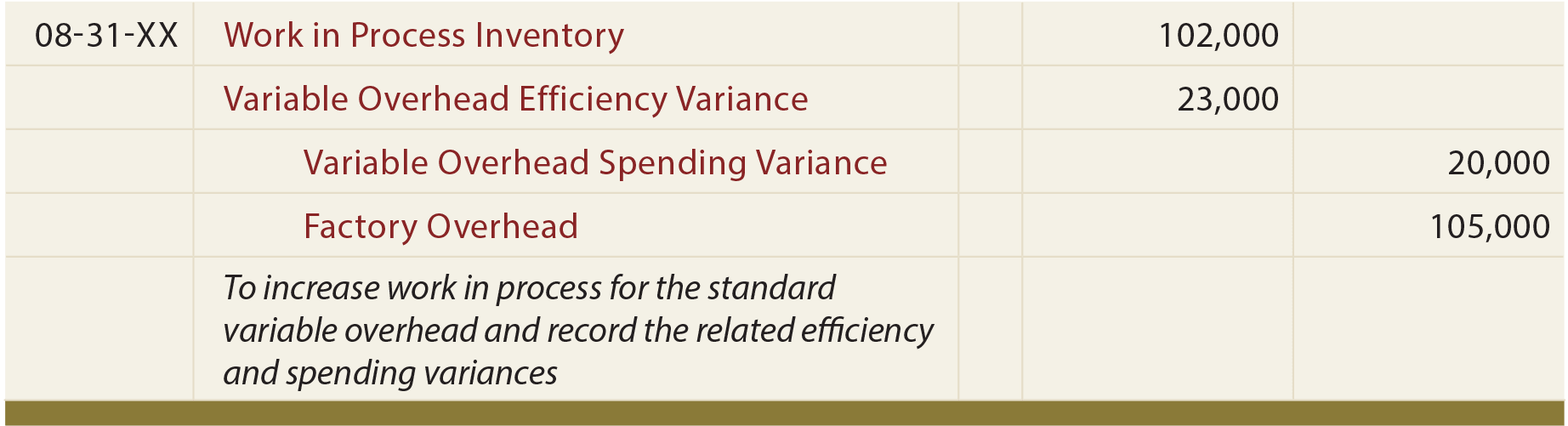

This entry applies variable factory overhead to production and records the related variances:

The variable overhead efficiency variance can be confusing as it may reflect efficiencies or inefficiencies experienced with the base used to apply overhead. For Blue Rail, remember that the total number of hours was “high” because of inexperienced labor. These welders may have used more welding rods and had sloppier welds requiring more grinding. While the overall variance calculations provide signals about these issues, a manager would actually need to drill down into individual cost components to truly find areas for improvement.

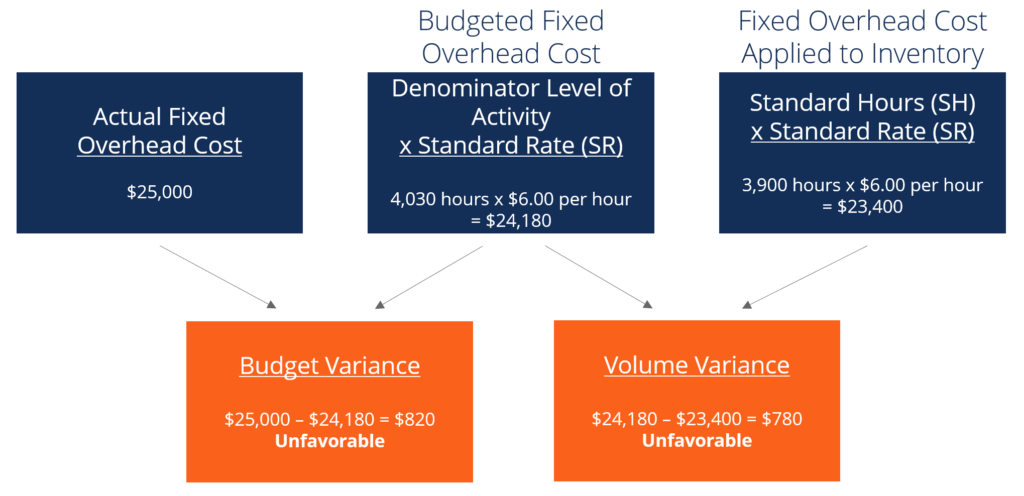

Fixed Factory Overhead Variances

Actual fixed factory overhead may show little variation from budget. This results because of the intrinsic nature of a fixed cost. For instance, rent is usually subject to a lease agreement that is relatively certain. Depreciation on factory equipment can be calculated in advance. The costs of insurance policies are tied to a contract. Even though budget and actual numbers may differ little in the aggregate, the underlying fixed overhead variances are nevertheless worthy of close inspection.

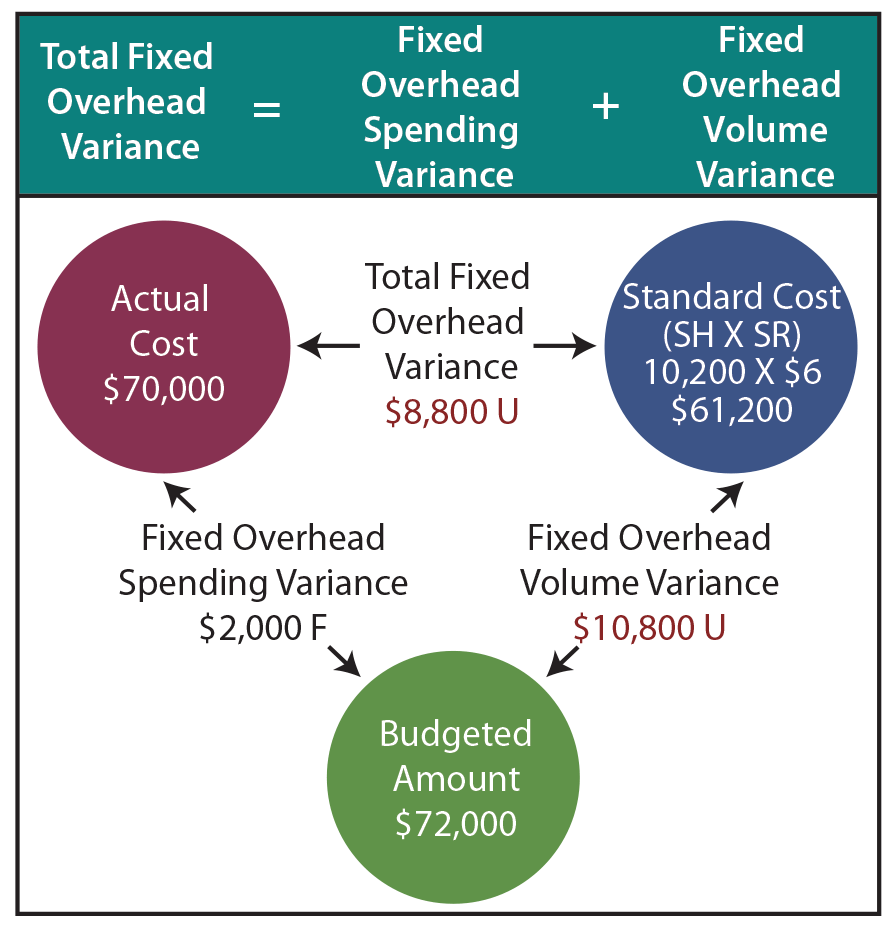

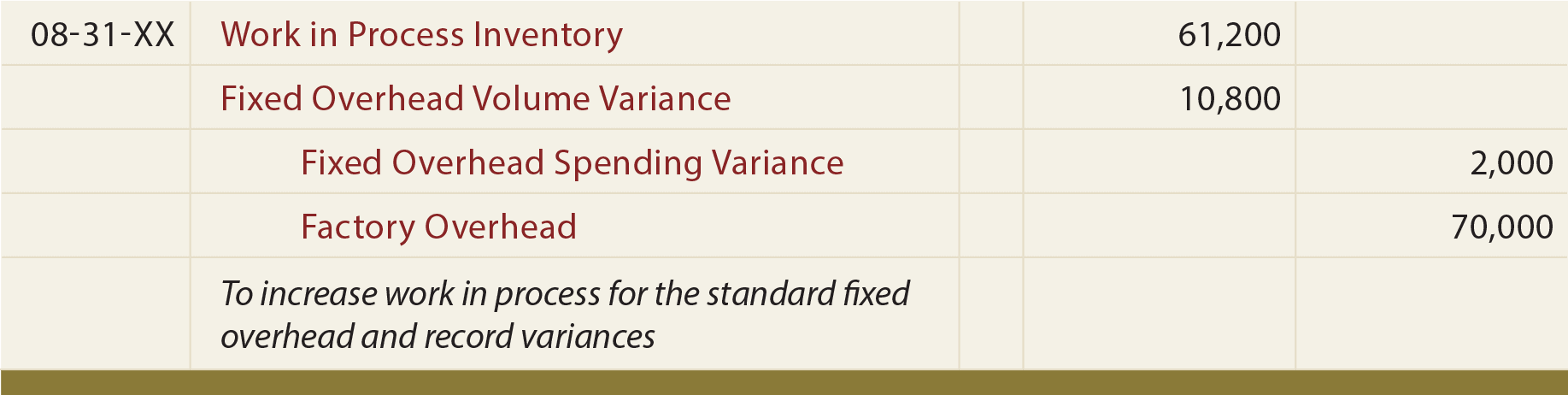

As illustrated, $61,200 should be allocated to work in process. This reflects the standard cost allocation of fixed overhead (i.e., 10,200 hours should be used to produce 3,400 units). Notice that this differs from the budgeted fixed overhead by $10,800, representing an unfavorable Fixed Overhead Volume Variance.

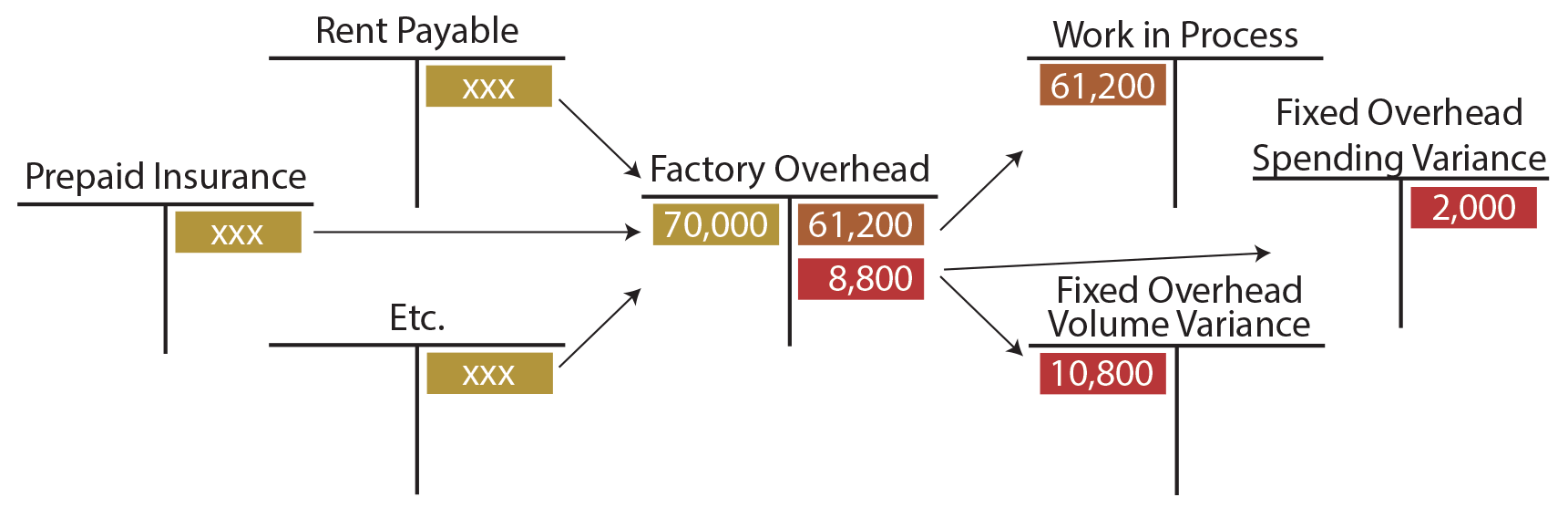

Since production did not rise to the anticipated level of 4,000 units, much of the fixed cost (that was in place to support 4,000 units) was “under-utilized.” For Blue Rail, the volume variance is offset by the favorable Fixed Overhead Spending Variance of $2,000; $70,000 was spent versus the budgeted $72,000. Following is an illustration showing the flow of fixed costs into the Factory Overhead account, and on to Work in Process and the related variances.
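The fixed overhead figures can be reproduced as follows. The $6 per-hour fixed overhead rate is not stated explicitly in the text; it is inferred here from the $72,000 budget spread over the 12,000 standard hours needed for the anticipated 4,000 sections (4,000 × 3 hours), which is consistent with the $61,200 applied to 10,200 standard hours.

```python
BUDGETED_FOH = 72_000.0
DENOMINATOR_SECTIONS = 4_000   # anticipated output
STD_HOURS_PER_SECTION = 3
ACTUAL_FOH = 70_000.0
ACTUAL_SECTIONS = 3_400

denominator_hours = DENOMINATOR_SECTIONS * STD_HOURS_PER_SECTION   # 12,000 hours
foh_rate = BUDGETED_FOH / denominator_hours                        # inferred $6 per hour
applied = ACTUAL_SECTIONS * STD_HOURS_PER_SECTION * foh_rate       # charged to WIP

spending_variance = BUDGETED_FOH - ACTUAL_FOH   # positive = favorable
volume_variance = applied - BUDGETED_FOH        # negative: capacity under-utilized

assert spending_variance + volume_variance == applied - ACTUAL_FOH
print(spending_variance, volume_variance)
```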

Following is the entry to apply fixed factory overhead to production and record related volume and spending variances:

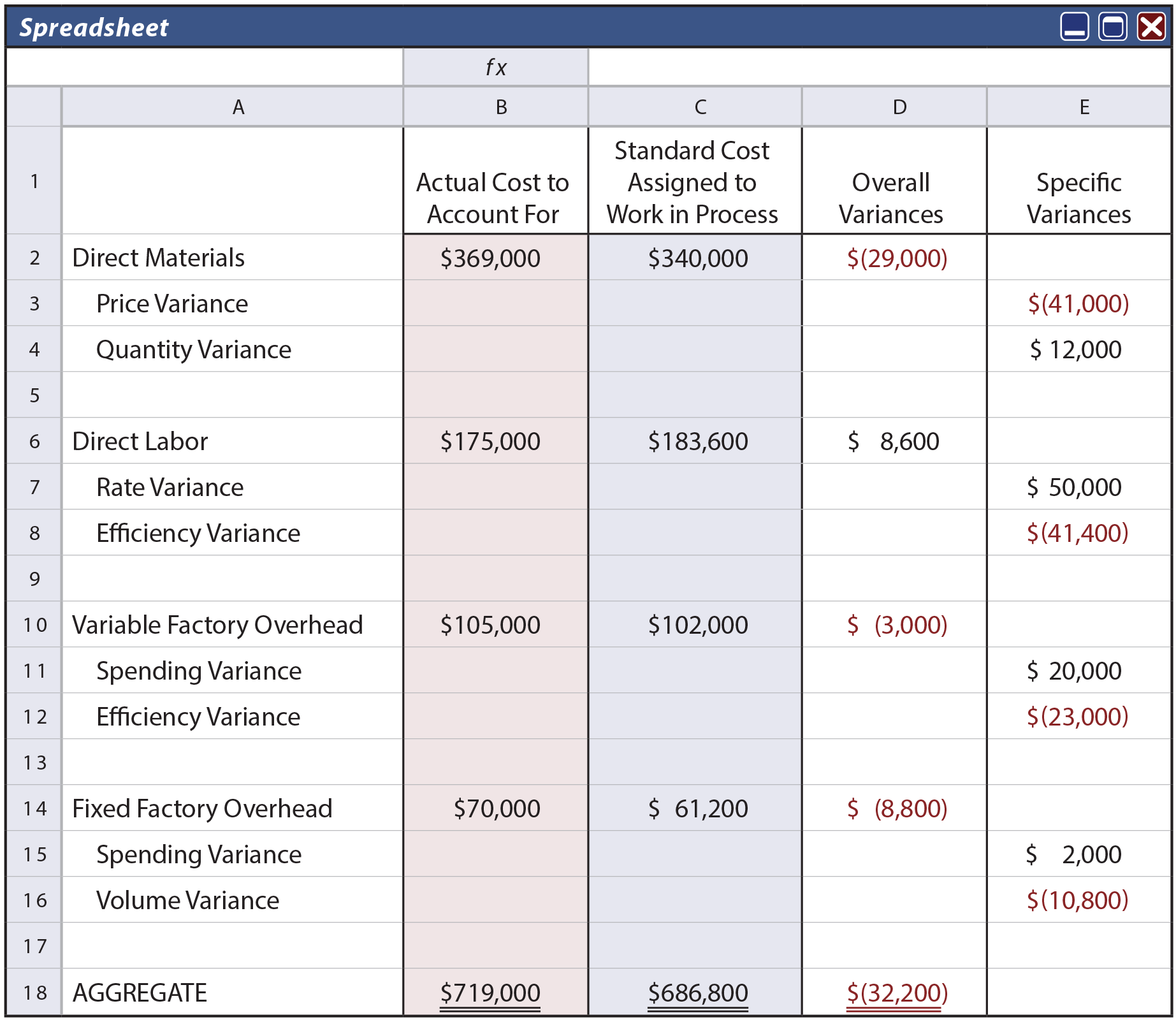

The following spreadsheet summarizes the Blue Rail case study. Carefully trace amounts in the spreadsheet back to the illustrations.

Notice that the standard cost of $686,800 corresponds to the amounts assigned to work in process inventory via the various journal entries, while the net unfavorable variances of $32,200 were charged or credited to specific variance accounts. In this way, the full $719,000 actually spent is accounted for in the records of Blue Rail.
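The reconciliation in the spreadsheet can be checked with a few lines of Python. The (standard, actual) pairs below are the totals from the four Blue Rail illustrations; a positive variance is favorable, a negative one unfavorable.

```python
# (standard cost applied to WIP, actual cost) per category, from the Blue Rail example.
costs = {
    "Direct materials": (340_000, 369_000),
    "Direct labor": (183_600, 175_000),
    "Variable overhead": (102_000, 105_000),
    "Fixed overhead": (61_200, 70_000),
}

total_standard = sum(std for std, _ in costs.values())
total_actual = sum(act for _, act in costs.values())
net_variance = total_standard - total_actual   # negative = net unfavorable

for name, (std, act) in costs.items():
    print(f"{name:<18} standard {std:>9,}  actual {act:>9,}  variance {std - act:>+9,}")

# Standard cost plus the net unfavorable variance accounts for every dollar spent.
assert total_standard - net_variance == total_actual
```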

Examining Variances

Not all variances need to be analyzed. One must consider the circumstances under which the variances resulted and the materiality of amounts involved. One should also understand that not all unfavorable variances are bad. For example, buying raw materials of superior quality (at higher than anticipated prices) may be offset by reduction in waste and spoilage. Likewise, favorable variances are not always good. Blue Rail’s very favorable labor rate variance resulted from using inexperienced, less expensive labor. Was this the reason for the unfavorable outcomes in efficiency and volume? Perhaps! The challenge for a good manager is to take the variance information, examine the root causes, and take necessary corrective measures to fine tune business operations.

In closing this discussion of standards and variances, be mindful that care should be taken in examining variances. If the original standards are not accurate and fair, the resulting variance signals will themselves prove quite misleading.

| Did you learn? |
|---|
| What is a variance? |
| Be able to calculate and explain material, labor, and overhead variances. |
| When should variances be investigated? |

Variance Analysis

Analysis of the difference between planned and actual numbers

What is Variance Analysis?

Variance analysis can be summarized as an analysis of the difference between planned and actual numbers. The sum of all variances gives a picture of the overall over-performance or under-performance for a particular reporting period. For each item, companies assess favorability by comparing actual costs with standard costs.

For example, if the actual cost is lower than the standard cost for raw materials, assuming the same volume of materials, it would lead to a favorable price variance (i.e., cost savings). However, if the standard quantity was 10,000 pieces of material and 15,000 pieces were required in production, this would be an unfavorable quantity variance because more materials were used than anticipated.


The Role of Variance Analysis

When standards are compared to actual performance numbers, the difference is what we call a “variance.” Variances are computed for both the price and quantity of materials, labor, and variable overhead and are reported to management. However, not all variances are important.

Management should only pay attention to those that are unusual or particularly significant. Often, by analyzing these variances, companies are able to use the information to identify a problem so that it can be fixed or simply to improve overall company performance.

Types of Variances

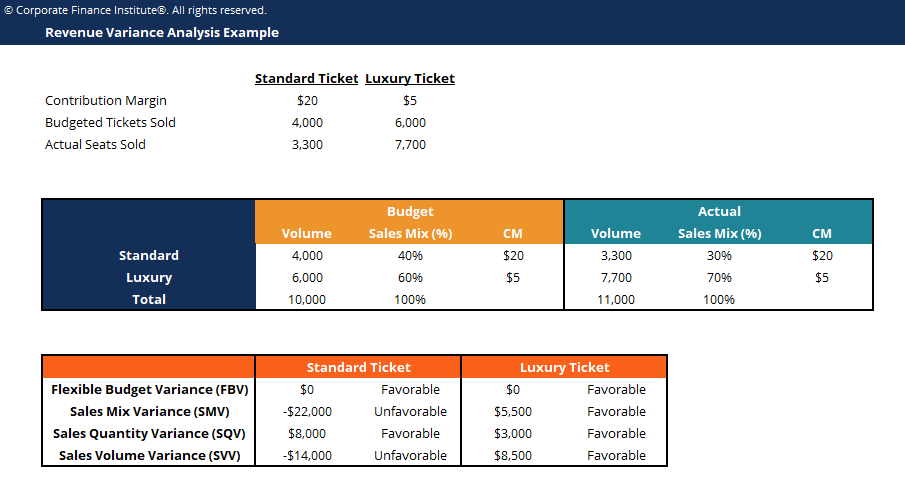

As mentioned above, materials, labor, and variable overhead consist of price and quantity/efficiency variances. Fixed overhead, however, includes a volume variance and a budget variance.

The Column Method for Variance Analysis

When calculating variances, the simplest way is to follow the column method and input all the relevant information. This method is best shown through the example below:

XYZ Company produces gadgets. Overhead is applied to products based on direct labor hours. The denominator level of activity is 4,030 hours. The company’s standard cost card is below:

Direct materials: 6 pieces per gadget at $0.50 per piece

Direct labor: 1.3 hours per gadget at $8 per hour

Variable manufacturing overhead: 1.3 hours per gadget at $4 per hour

Fixed manufacturing overhead: 1.3 hours per gadget at $6 per hour

In January, the company produced 3,000 gadgets. The fixed overhead expense budget was $24,180. Actual costs in January were as follows:

Direct materials: 25,000 pieces purchased at a cost of $0.48 per piece

Direct labor: 4,000 hours were worked at a total cost of $36,000

Variable manufacturing overhead: Actual cost was $17,000

Fixed manufacturing overhead: Actual cost was $25,000

Materials Variance

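Assuming all 25,000 pieces purchased were used in production, adding the price variance ($500 favorable: ($0.50 – $0.48) X 25,000 pieces) and the quantity variance ($3,500 unfavorable: (18,000 – 25,000) X $0.50) together, we get an overall variance of $3,000 (unfavorable). Management should look at this variance and seek to improve it. Although the price variance is favorable, management may want to consider why the company needed more materials than the standard of 18,000 pieces (3,000 gadgets X 6 pieces). It may be due to the company acquiring defective materials or having problems or malfunctions with machinery.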
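The column method for materials can be written as three "columns" whose differences give the variances. This sketch assumes, as the discussion implies, that all 25,000 pieces purchased were used; the function name is illustrative only. Results are rounded to cents because $0.48 has no exact binary floating-point representation.

```python
def materials_columns(aq: float, ap: float, sq: float, sp: float) -> tuple[float, float]:
    """Column method: return (price variance, quantity variance); positive = favorable."""
    col1 = aq * ap   # actual quantity at actual price
    col2 = aq * sp   # actual quantity at standard price
    col3 = sq * sp   # standard quantity allowed for actual output, at standard price
    return col2 - col1, col3 - col2


GADGETS = 3_000
price, quantity = materials_columns(aq=25_000, ap=0.48, sq=GADGETS * 6, sp=0.50)
print(round(price, 2), round(quantity, 2), round(price + quantity, 2))
```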

Labor Variance

Adding the labor rate variance ($4,000 unfavorable: ($8 – $9) X 4,000 hours) and the labor efficiency variance ($800 unfavorable: (3,900 – 4,000) X $8) together, we get an overall variance of $4,800 (unfavorable). This is another variance that management should look at. Management should address why the actual labor rate ($36,000 ÷ 4,000 hours = $9) is a dollar higher than the standard and why 100 more hours than the standard 3,900 (3,000 gadgets X 1.3 hours) were required for production. The same column method can also be applied to variable overhead costs. It is similar to the labor format because variable overhead is applied based on labor hours in this example.
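Because variable overhead is applied on labor hours here, one helper can serve both labor and variable overhead. The sketch uses the cost card ($8 labor rate, $4 variable overhead rate, 1.3 hours per gadget) and January's actuals; the function name is hypothetical.

```python
def hours_based_columns(actual_cost: float, ah: float, sh: float, sr: float) -> tuple[float, float]:
    """Return (rate or spending variance, efficiency variance); positive = favorable."""
    col1 = actual_cost   # actual hours at actual rate
    col2 = ah * sr       # actual hours at standard rate
    col3 = sh * sr       # standard hours allowed for actual output, at standard rate
    return col2 - col1, col3 - col2


SH = 3_900   # 3,000 gadgets x 1.3 standard hours
AH = 4_000   # actual hours worked

labor_rate, labor_eff = hours_based_columns(36_000.0, AH, SH, sr=8.0)
voh_spending, voh_eff = hours_based_columns(17_000.0, AH, SH, sr=4.0)
print(labor_rate, labor_eff, voh_spending, voh_eff)
```

The variable overhead result ($1,000 unfavorable spending plus $400 unfavorable efficiency) is not stated in the text; it simply follows from applying the same columns to the $17,000 actual cost.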

Fixed Overhead Variance

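Adding the budget variance ($820 unfavorable: $24,180 budgeted vs. $25,000 actual) and the volume variance ($780 unfavorable: $24,180 budgeted vs. $23,400 applied, i.e., 3,900 standard hours X $6), we get a total unfavorable variance of $1,600. Once again, this is something that management may want to look at.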
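For fixed overhead, the predetermined rate is the budget divided by the denominator activity ($24,180 ÷ 4,030 hours = $6, matching the cost card). A minimal sketch of the budget and volume variances:

```python
BUDGET = 24_180.0          # fixed overhead expense budget
DENOMINATOR_HOURS = 4_030  # denominator level of activity
ACTUAL_FOH = 25_000.0
SH = 3_900                 # standard hours allowed for 3,000 gadgets

rate = BUDGET / DENOMINATOR_HOURS        # $6 per direct labor hour
applied = SH * rate                      # fixed overhead applied to production
budget_variance = BUDGET - ACTUAL_FOH    # positive = favorable
volume_variance = applied - BUDGET       # negative: activity below the denominator level
print(budget_variance, volume_variance, budget_variance + volume_variance)
```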


The Role of Standards in Variance Analysis

In cost accounting , a standard is a benchmark or a “norm” used in measuring performance. In many organizations, standards are set for both the cost and quantity of materials, labor, and overhead needed to produce goods or provide services.

Quantity standards indicate how much labor (i.e., in hours) or materials (i.e., in kilograms) should be used in manufacturing a unit of a product. In contrast, cost standards indicate what the actual cost of the labor hour or material should be. Standards, in essence, are estimated prices or quantities that a company will incur.

This has been CFI’s guide to Variance Analysis.


Variance Analysis Impacting Company Financials


Variance analysis stands as a cornerstone in financial management, providing key insights into operational performance and financial health. This analysis aids the Office of Finance in pinpointing the reasons behind the deviations of actual results from budgeted figures. Through a meticulous breakdown of price, volume, and mix variances, companies can fine-tune their strategies, enhance efficiency, and optimize profitability.

What is variance analysis?

At its core, variance analysis involves the process of segmenting the difference between planned financial outcomes and actual financial performance into distinct components. This allows for precise identification of the sources of variance, which can be categorized as favorable or unfavorable. Understanding these distinctions is critical for strategic decision-making and effective financial management.

The significance of price variance

Price variance reflects the impact of the difference between actual and expected costs on the company's finances. In the context of sales, it would pertain to the variance caused by selling goods at a price different from the planned price. For expenses, it relates to purchasing materials or services at costs diverging from those budgeted. Analyzing price variances helps organizations adjust their pricing strategies and manage procurement practices more effectively.

Consider a manufacturing company that produces electronic goods. The budgeted cost of copper, a key material, was set at $5 per pound with an expected need of 10,000 pounds per month. However, due to fluctuations in the commodities market, the actual price paid was $5.50 per pound. The price variance, in this case, is unfavorable as the company ended up spending $0.50 more per pound than planned, totaling an additional $5,000 per month. This type of analysis prompts the company to either renegotiate supplier contracts or find alternative materials to mitigate such cost overruns in the future.

Volume variance and its implications

Volume variance is crucial in understanding how the quantity of goods sold or produced affects financial outcomes. This analysis helps ascertain whether financial performance variations are due to selling more or less than anticipated or producing at levels different from the plan. Insights derived from volume variance analysis enable management to align production schedules and sales strategies with market demands and operational capacities.

For example, a company specializing in home appliances planned to sell 50,000 units of a newly launched mixer-grinder but managed to sell only 40,000 units due to a delay in seasonal sales promotions. This resulted in an unfavorable volume variance, leading to lower-than-expected revenue. Learning from this, the company can better align promotional activities with peak buying times to ensure target volumes are met.

Navigating through mix variances

Mix variances occur when the proportion of products sold or resources used differs from the expected mix. This type of variance is particularly important in companies with diverse product lines or multiple cost centers. By analyzing mix variances, financial leaders can uncover inefficiencies in product mix strategies and resource allocation, leading to more informed strategic planning.

In the construction industry, a project budget might allocate costs based on a planned mix of labor and materials, such as higher proportions of skilled labor versus unskilled labor. If a project ends up using more unskilled labor due to the unavailability of skilled laborers, it could lead to a mix variance. This could affect the project’s profitability if unskilled labor is less efficient, requiring more hours to complete the same tasks. This insight would be crucial for future labor planning and contract negotiations.
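One common way to quantify an input mix variance is to compare the actual hours of each labor grade with the hours the standard mix would have allocated out of the same total, valuing the difference at standard rates. The sketch below uses that formulation with entirely hypothetical numbers (a 60/40 skilled/unskilled standard mix at $40 and $20 per hour); other texts define mix variances somewhat differently.

```python
# Hypothetical standard: share of total hours and standard rate per hour.
standard_mix = {"skilled": (0.60, 40.0), "unskilled": (0.40, 20.0)}
actual_hours = {"skilled": 400, "unskilled": 600}   # hypothetical actuals

total_hours = sum(actual_hours.values())

# (hours at standard mix - actual hours) x standard rate; positive = favorable.
mix_variance = sum(
    (total_hours * share - actual_hours[grade]) * rate
    for grade, (share, rate) in standard_mix.items()
)
print(round(mix_variance, 2))
```

Here the shift toward cheaper unskilled hours produces a favorable mix variance. As the paragraph notes, the real cost may show up elsewhere: if unskilled workers need more total hours, that appears as an unfavorable efficiency (yield) effect, which this calculation does not capture.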

Harnessing favorable and unfavorable variances

Identifying whether variances are favorable or unfavorable is essential for effective financial stewardship. Favorable variances indicate better-than-expected performance, providing opportunities to capitalize on successful strategies. Conversely, unfavorable variances signal areas needing improvement, prompting immediate attention and corrective actions.

Managing favorable variance: Manufacturing sector

A chemical manufacturer might project the cost of raw materials based on historical prices and consumption rates. Suppose the company manages to negotiate a better deal with suppliers, resulting in a lower cost than budgeted while maintaining the same quality and quantity. This favorable price variance can provide additional budgetary leeway, allowing the company to allocate funds to other areas such as R&D or marketing to strengthen its market position.

Addressing unfavorable variance: Healthcare sector

A hospital anticipated certain operational costs for a fiscal year based on average prices and usage rates of medical supplies. However, a sudden increase in patient admissions due to a local health crisis caused an unfavorable variance as the use of medical supplies exceeded forecasts. This scenario would highlight the need for more flexible procurement strategies to quickly adjust to unexpected increases in demand without significantly impacting operational budgets.

Strategies for managing variance

Effective management of variances involves several strategic actions:

- Continuous monitoring: Regular analysis of variances helps in maintaining control over financial performance and in initiating timely adjustments.

- Integrative communication: Facilitating open communication channels across departments ensures that variance insights are integrated into operational planning and decision-making.

- Adaptive planning: Flexibility in financial planning enables companies to adjust their strategies based on variance analysis findings, enhancing responsiveness to market changes.

Variance analysis is not just about numbers; it's a strategic tool that, when used wisely, can significantly influence a company's financial trajectory. For CFOs and the Office of Finance, mastering variance analysis is essential for fostering robust financial health and steering their companies toward sustained profitability.

By understanding the nuances of price, volume, and mix variances, and effectively managing these variances, financial leaders can ensure that their organizations remain competitive, adaptive, and financially stable in a dynamic business environment. Integrating a sophisticated financial management tool like Centage can be a game-changer in this process.


- DOI: 10.1506/8172-1165-6601-3L37

- Corpus ID: 153683027

A Case Study of a Variance Analysis Framework for Managing Distribution Costs

- Kevin C. Gaffney, V. Gladkikh, R. Webb

- Published 1 May 2007

- Accounting Perspectives



Analysis of Variance

- First Online: 29 June 2023


- Klaus Backhaus, Bernd Erichson, Sonja Gensler, Rolf Weiber & Thomas Weiber


Analysis of variance is a procedure that examines the effect of one (or more) independent variable(s) on one (or more) dependent variable(s). For the independent variables, which are also called factors or treatments, only a nominal scaling is required, while the dependent variable (also called target variable) is scaled metrically. The analysis of variance is the most important multivariate method for the detection of mean differences across more than two groups and is thus particularly useful for the evaluation of experiments. The chapter deals with both the one-factorial (one dependent and one independent variable) and the two-factorial (one dependent and two independent variables) analysis of variance and extends the considerations in the case study to the analysis with two (nominally scaled) independent factors and two (metrically scaled) covariates. Furthermore, contrast analysis and post-hoc testing are also covered.
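The core computation of a one-factorial ANOVA, splitting variation into between-group and within-group sums of squares and forming the ratio of their mean squares, can be sketched in plain Python. The data below are hypothetical (three groups of five observations, which gives the same degrees of freedom, 2 and 12, as a three-group design with 15 observations), not the chapter's example data.

```python
def one_way_anova_f(groups: list[list[float]]) -> tuple[float, int, int]:
    """Return (F statistic, df_between, df_within) for a one-way ANOVA."""
    k = len(groups)
    n = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n
    means = [sum(g) / len(g) for g in groups]

    # Variation explained by the factor (between groups) and residual (within groups).
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    ss_within = sum(sum((x - m) ** 2 for x in g) for g, m in zip(groups, means))

    df_between, df_within = k - 1, n - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


f_stat, df_b, df_w = one_way_anova_f([[1, 2, 3, 4, 5], [3, 4, 5, 6, 7], [5, 6, 7, 8, 9]])
print(f_stat, df_b, df_w)
```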


This is called inferential statistics and has to be distinguished from descriptive statistics. Inferential statistics makes inferences and predictions about a population based on a sample drawn from the studied population.

In our example, only 5 observations per group and thus a total of 15 observations were chosen in order to make the subsequent calculations easier to understand. The literature usually recommends a minimum of 20 observations per group.

On the website www.multivariate-methods.info we provide supplementary material (e.g., Excel files) to deepen the reader’s understanding of the methodology.

For a brief summary of the basics of statistical testing see Sect. 1.3 .

For a detailed explanation on degrees of freedom (df) see Sect. 1.2.1 .

The user can also choose other values for α . However, α = 5% is a kind of “gold” standard in statistics and goes back to R. A. Fisher (1890–1962) who developed the F-distribution. However, the user must also consider the consequences (costs) of a wrong decision when making a decision.

The p-value can also be calculated with Excel by using the function F.DIST.RT( F emp ;df1;df2). For our application example, we get: F.DIST.RT(38.09;2;12) = 0.0000064 or 0.00064%. The reader will also find a detailed explanation of the p-value in Sect. 1.3.1.2 .

Guidance on testing the assumption of multivariate normal distribution is given in Sect. 3.5 . A detailed description of the testing of variance homogeneity using the Levene test is given in Sect. 3.4.3 .

The alpha error (type I error) is the probability of rejecting the null hypothesis although it is true. For type I and type II errors, see the basics of statistical testing in Sect. 1.3.

SPSS offers a total of 18 variants of post-hoc tests. See Fig. 3.16 in Sect. 3.3.3.2.

Please note that 5 observations per group, and thus a total of 30 observations, were chosen to make the subsequent calculations easier to follow. In the literature, at least 20 observations per group are usually recommended for a two-way ANOVA.

Here, the different types of interaction are illustrated graphically. The interaction effects in the application example correspond to those in the case study and are shown and explained in Fig. 3.15 .

For didactic reasons, the data of the extended example are also used in the case study (cf. Sect. 3.2.2.1; Table 3.9). Note that the case study is thus based on only 30 cases in total. In the literature, at least 20 observations per group are usually recommended.

For the types of interaction effects and the calculation of the interaction effect in the case study, see the explanations in Sect. 3.2.2.1.

The p-value can also be calculated in Excel with the function F.DIST.RT(F_emp;df1;df2). For the example in Sect. 3.2.1.1, we obtain F.DIST.RT(0.062;2;12) = 0.9402. A detailed explanation of the p-value is given in Sect. 1.3.1.2.

Bray, J. H., & Maxwell, S. E. (1985). Multivariate analysis of variance. Sage.

Brown, S. R., Collins, R. L., & Schmidt, G. W. (1990). Experimental design and analysis. Sage.

Christensen, R. (1996). Analysis of variance, design, and regression: Applied statistical methods. CRC Press.

Haase, R. F., & Ellis, M. V. (1987). Multivariate analysis of variance. Journal of Counseling Psychology, 34(4), 404–413.

Kahn, J. (2011). Validation in marketing experiments revisited. Journal of Business Research, 64(7), 687–692.

Leigh, J. H., & Kinnear, T. C. (1980). On interaction classification. Educational and Psychological Measurement, 40(4), 841–843.

Levene, H. (1960). Robust tests for equality of variances. In I. Olkin (Ed.), Contributions to probability and statistics: Essays in honor of Harold Hotelling (pp. 278–292). Stanford University Press.

Levy, K. I. (1980). A Monte Carlo study of analysis of covariance under violations of the assumptions of normality and equal regression slopes. Educational and Psychological Measurement, 40(4), 835–840.

Moore, D. S. (2010). The basic practice of statistics (5th ed.). Freeman.

Perdue, B., & Summers, J. (1986). Checking the success of manipulations in marketing experiments. Journal of Marketing Research, 23(4), 317–326.

Perreault, W. D., & Darden, W. R. (1975). Unequal cell sizes in marketing experiments: Use of the general linear hypothesis. Journal of Marketing Research, 12(3), 333–342.

Pituch, K. A., & Stevens, J. P. (2016). Applied multivariate statistics for the social sciences (6th ed.). Routledge.

Shingala, M. C., & Rajyaguru, A. (2015). Comparison of post hoc tests for unequal variance. Journal of New Technologies in Science and Engineering, 2(5), 22–33.

Smith, R. A. (1971). The effect of unequal group size on Tukey’s HSD procedure. Psychometrika, 36(1), 31–34.

Warne, R. T. (2014). A primer on multivariate analysis of variance (MANOVA) for behavioral scientists. Practical Assessment, Research & Evaluation, 19(17), 1–10.

Further Reading

Gelman, A. (2005). Analysis of variance: Why it is more important than ever. The Annals of Statistics, 33(1), 1–53.

Ho, R. (2006). Handbook of univariate and multivariate data analysis and interpretation with SPSS. CRC Press.

Sawyer, S. F. (2009). Analysis of variance: The fundamental concepts. Journal of Manual & Manipulative Therapy, 17(2), 27–38.

Scheffé, H. (1999). The analysis of variance. Wiley.

Turner, J. R., & Thayer, J. (2001). Introduction to analysis of variance: Design, analysis & interpretation. Sage Publications.


Author information

Authors and Affiliations

University of Münster, Münster, Nordrhein-Westfalen, Germany

Klaus Backhaus

Otto-von-Guericke-University Magdeburg, Magdeburg, Sachsen-Anhalt, Germany

Bernd Erichson

Sonja Gensler

University of Trier, Trier, Rheinland-Pfalz, Germany

Rolf Weiber

Munich, Bayern, Germany

Thomas Weiber


Corresponding author

Correspondence to Rolf Weiber.


Copyright information

© 2023 Springer Fachmedien Wiesbaden GmbH, part of Springer Nature

About this chapter

Backhaus, K., Erichson, B., Gensler, S., Weiber, R., Weiber, T. (2023). Analysis of Variance. In: Multivariate Analysis. Springer Gabler, Wiesbaden. https://doi.org/10.1007/978-3-658-40411-6_3


DOI : https://doi.org/10.1007/978-3-658-40411-6_3

Published : 29 June 2023

Publisher Name : Springer Gabler, Wiesbaden

Print ISBN : 978-3-658-40410-9

Online ISBN : 978-3-658-40411-6

eBook Packages : Business and Economics (German Language)


Case Study on Variance Analysis

Variance analysis case study

It is important to predict the total size of the differences between planned and actual figures in order to plan the company's further development and improve its strategies in light of these variances in expenditures. Variance analysis is especially important for small, growing firms, because every dollar saved is a plus and an opportunity for further improvement.

Variance analysis is a useful practice that can make business development faster and more effective: if a company produces similar goods, it can predict the variance across all of its production and use the money saved effectively.

The topic of variance analysis is interesting and important for every future accountant. A student asked to complete a successful variance analysis case study will have to read widely on the topic to deepen his knowledge of the problem under research. Many reliable sources can help in the writing process and make a student aware of the aspects and principles of variance analysis. A student is expected to investigate the topic professionally, collect the appropriate data for the research, and draw sound conclusions at the end. One should research the case site, discover the nature of the problem, and identify its causes and effects.


A student is expected to brainstorm effective solutions to the suggested problem on variance analysis to demonstrate his professional skills. Writing a case study is treated as a complicated process, because this type of paper has its own peculiarities and rules that must be followed. With the writing assistance available on the Internet, it is possible to prepare a good paper yourself by consulting a free example case study on variance analysis. A well-organized assignment can be completed after looking through a good free sample case study on standard costing and variance analysis prepared by an expert and published on the web.


Acasestudy.com © 2007-2019 All rights reserved.


Cost Variance in Project Management: How to Calculate It

Lucija Bakić

August 28, 2024

Cost variance in project management helps project managers keep their cost baseline under control.

This is why cost variance analysis is one of the core project management metrics. In this article, we’ll discuss how you can calculate your cost variance, what you can use it for, different types of project costs, and how you can minimize them.

What Is Cost Variance in Project Management?

Cost variance is a metric that shows the difference between your expected (budgeted) cost for the work completed and your actual cost at a certain point in time. A positive cost variance means that your project is under budget, a cost variance of zero means it is exactly on budget, and a negative variance signifies a budget overrun.

How to Calculate Cost Variance?

The basic formula for cost variance is:

projected cost – actual cost = cost variance

Projected cost is also sometimes called earned value (EV), since it reflects the budgeted value of the work completed. To give a practical example, let’s say your original budget is $10,000 and you’ve completed 50% of the project; your projected cost, or earned value, would then amount to $5,000. However, you’ve actually spent only $4,000. Your cost variance is therefore $5,000 – $4,000 = $1,000, meaning that you’re not only within your budget but have spent less than expected. For the cost variance percentage, use the following formula:

cost variance / projected cost x 100 = cost variance percentage

Using the example above, $1,000 / $5,000 x 100 = 20%, meaning that you have spent 20% less than the budgeted cost of the work completed.
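The two formulas can be checked with a short sketch that reproduces the example figures. The function names are hypothetical, and the "under/over budget" label simply follows the sign convention described above (positive means under budget).

```python
def cost_variance(earned_value: float, actual_cost: float) -> float:
    """CV = projected cost (EV) - actual cost; positive means under budget."""
    return earned_value - actual_cost


def cost_variance_pct(earned_value: float, actual_cost: float) -> float:
    """Cost variance as a percentage of the projected cost (earned value)."""
    return cost_variance(earned_value, actual_cost) * 100 / earned_value


# Figures from the example: $10,000 budget, 50% complete, $4,000 actually spent
budget = 10_000.0
percent_complete = 0.50
earned_value = budget * percent_complete  # projected cost of the work done so far
actual_cost = 4_000.0

cv = cost_variance(earned_value, actual_cost)
cv_pct = cost_variance_pct(earned_value, actual_cost)
status = "under budget" if cv > 0 else "over budget" if cv < 0 else "on budget"
print(f"CV = ${cv:,.0f} ({cv_pct:.0f}%), {status}")
```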

Automate your project performance insights with Productive

If you don’t want to do manual calculations, you can use project management software like Productive for real-time data access. Head over to our section on tracking cost variance with Productive to learn more.

Types of Cost Variance Formulas

There are also several ways to measure your budget spend and get insights from multiple perspectives, including:

- Point-in-time cost variance: compares planned and actual costs within a chosen period of time

- Cumulative cost variance: considers variance from the start of the project up to a chosen point in time

- Variance at completion: considers variance from the start of the project up to its completion
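The three perspectives can be illustrated with a short sketch using hypothetical monthly figures, chosen so that their totals match the earlier example ($5,000 earned value, $4,000 actual cost). The article gives no formula for variance at completion; here it is assumed to follow the common earned value management definition VAC = BAC - EAC (budget at completion minus estimate at completion), with EAC forecast as BAC / CPI, which is only one of several forecasting conventions. All names are hypothetical.

```python
from itertools import accumulate

# Hypothetical monthly figures for a project with a $10,000 budget
BUDGET_AT_COMPLETION = 10_000.0
earned_value_per_period = [2_000.0, 1_500.0, 1_500.0]  # budgeted cost of work done each month
actual_cost_per_period = [1_800.0, 1_200.0, 1_000.0]   # money actually spent each month


def point_in_time_cv(ev, ac):
    """Cost variance of each individual period."""
    return [e - a for e, a in zip(ev, ac)]


def cumulative_cv(ev, ac):
    """Cost variance from project start up to the end of each period."""
    return list(accumulate(point_in_time_cv(ev, ac)))


def variance_at_completion(bac, ev, ac):
    """VAC = BAC - EAC, with EAC = BAC / CPI (an assumed EVM forecasting convention)."""
    cpi = sum(ev) / sum(ac)  # cost performance index to date
    eac = bac / cpi          # forecast total cost if current cost efficiency holds
    return bac - eac


print(point_in_time_cv(earned_value_per_period, actual_cost_per_period))
print(cumulative_cv(earned_value_per_period, actual_cost_per_period))
print(variance_at_completion(BUDGET_AT_COMPLETION, earned_value_per_period, actual_cost_per_period))
```

With these made-up numbers, the cumulative variance after three months is $1,000, the same as the single calculation in the earlier example, and the forecast VAC of $2,000 would mean finishing $2,000 under budget if the current cost efficiency persisted.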

A full understanding of cost variance requires using all three methods. Point-in-time and cumulative cost variance help project managers take proactive measures to correct unfavorable cost variance, while variance at completion is necessary for delivering budget reports and promoting cost-efficient workflows.

The Importance of Cost Variance Analysis

Cost variance analysis helps project managers identify exactly the point at which budgets begin to diverge from expectations. This allows them to pinpoint specific roadblocks and address them before the project gets derailed, which makes cost variance an essential part of project financial management. Additionally, building a better understanding of how to control actual cost supports future projects: it helps set better estimates and measures for monitoring your budget in stages. Cost variance also supports accountability and transparency between project stakeholders. Project managers who have a handle on their finances across various time periods can present this data to clients, which helps manage expectations, control scope creep, and increase client satisfaction overall.

We also love the ability to invite our clients into the projects. It takes the middleman out of the equation; there’s no need to go back and forth via e-mail, we can get all of the feedback within Productive. This also lets the client see how much work is actually going into the project, and you can see that they have a greater appreciation for what we do.

Alex Streltsov, General Manager at Prolex Media

Learn how to optimize your project cost control and collaboration with Productive.

Types of Project Costs

There are two main types of project costs: direct and indirect costs. Direct costs are closely tied to the production of goods and services; in the case of professional services, they usually include employee salaries. Indirect costs, also known as overhead, are tied to activities that don’t directly produce revenue. Overhead can in turn be classified into two types:

1. Fixed overhead, which includes costs that remain constant and don’t change with business activity levels. For example:

- Facility costs (office space rent, equipment, etc.)