Sample Size Calculator

Find out the sample size.

This calculator computes the minimum number of necessary samples to meet the desired statistical constraints.


Find Out the Margin of Error

This calculator computes the margin of error, or confidence interval, of an observation or survey.


In statistics, information is often inferred about a population by studying a finite number of individuals from that population, i.e. the population is sampled, and it is assumed that characteristics of the sample are representative of the overall population. For the following, it is assumed that there is a population of individuals where some proportion, p, of the population is distinguishable from the other 1-p in some way; e.g., p may be the proportion of individuals who have brown hair, while the remaining 1-p have black, blond, red, etc. Thus, to estimate p in the population, a sample of n individuals could be taken from the population, and the sample proportion, p̂, calculated for sampled individuals who have brown hair. Unfortunately, unless the full population is sampled, the estimate p̂ most likely won't equal the true value p, since p̂ suffers from sampling noise, i.e. it depends on the particular individuals that were sampled. However, sampling statistics can be used to calculate what are called confidence intervals, which are an indication of how close the estimate p̂ is to the true value p.

Statistics of a Random Sample

The uncertainty in a given random sample (namely, that the proportion estimate, p̂, is expected to be a good, but not perfect, approximation of the true proportion p) can be summarized by saying that the estimate p̂ is approximately normally distributed with mean p and variance p(1-p)/n. For an explanation of why the sample estimate is normally distributed, study the Central Limit Theorem. As defined below, confidence level, confidence intervals, and sample sizes are all calculated with respect to this sampling distribution. In short, the confidence interval gives an interval around p in which an estimate p̂ is "likely" to be. The confidence level gives just how "likely" this is; e.g., a 95% confidence level indicates that an estimate p̂ is expected to lie in the confidence interval for 95% of the random samples that could be taken. The confidence interval depends on the sample size, n (the variance of the sampling distribution is inversely proportional to n, meaning that the estimate gets closer to the true proportion as n increases); thus, an acceptable error in the estimate, called the margin of error, ε, can be set, and the sample size required for the chosen confidence interval to be smaller than ε can be solved for, a calculation known as "sample size calculation."
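The normal approximation described above can be checked with a short Monte Carlo experiment. This is a minimal sketch in Python, not part of the calculator: the true proportion p = 0.3, the sample size n = 400, the 2,000 repetitions, and the seed are arbitrary illustrative assumptions. It draws many samples, computes p̂ for each, and compares the spread of those estimates with p(1-p)/n.

```python
import random
import statistics


def simulate_p_hat(p: float, n: int, repetitions: int, seed: int = 1) -> list[float]:
    """Draw `repetitions` samples of size n from a population with proportion p
    and return the sample proportion p-hat of each sample."""
    rng = random.Random(seed)  # fixed seed so the experiment is reproducible
    estimates = []
    for _ in range(repetitions):
        successes = sum(1 for _ in range(n) if rng.random() < p)
        estimates.append(successes / n)
    return estimates


p, n = 0.3, 400  # illustrative values (assumptions, not from the text)
estimates = simulate_p_hat(p, n, repetitions=2000)

print("mean of p-hat:    ", statistics.fmean(estimates))     # should be close to p
print("variance of p-hat:", statistics.variance(estimates))  # should be close to p(1-p)/n
print("theory p(1-p)/n:  ", p * (1 - p) / n)
```

The mean of the simulated p̂ values should sit very close to p, and their variance close to 0.3 × 0.7 / 400 = 0.000525; doubling n roughly halves that variance, which is the inverse proportionality described above.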

Confidence Level

The confidence level is a measure of certainty regarding how accurately a sample reflects the population being studied within a chosen confidence interval. The most commonly used confidence levels are 90%, 95%, and 99%. Each confidence level has a corresponding z-score, which can be computed from the standard normal distribution or read from widely available tables like the one provided below. Note that using z-scores assumes that the sampling distribution is normal, as described above in "Statistics of a Random Sample." If an experiment or survey were repeated many times, the confidence level indicates the percentage of the resulting intervals that would contain the true result.

| Confidence Level | z-score (±) |
|---|---|
| 0.70 | 1.04 |
| 0.75 | 1.15 |
| 0.80 | 1.28 |
| 0.85 | 1.44 |
| 0.90 | 1.645 |
| 0.92 | 1.75 |
| 0.95 | 1.96 |
| 0.96 | 2.05 |
| 0.98 | 2.33 |
| 0.99 | 2.58 |
| 0.999 | 3.29 |
| 0.9999 | 3.89 |
| 0.99999 | 4.42 |
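The z-scores in this table are two-sided critical values of the standard normal distribution. A minimal Python sketch using only the standard library's `statistics.NormalDist` (Python 3.8+) reproduces them; the function name `z_score` is illustrative.

```python
from statistics import NormalDist


def z_score(confidence: float) -> float:
    """Two-sided critical value z such that P(-z < Z < z) = confidence
    for a standard normal variable Z."""
    if not 0 < confidence < 1:
        raise ValueError("confidence must be strictly between 0 and 1")
    alpha = 1 - confidence
    # Put alpha/2 in each tail, so look up the (1 - alpha/2) quantile.
    return NormalDist().inv_cdf(1 - alpha / 2)


for level in (0.70, 0.90, 0.95, 0.99, 0.999):
    print(f"{level}: {z_score(level):.3f}")
```

Rounded to two decimals, the printed values agree with the table; for example, 0.95 gives 1.960 and 0.99 gives 2.576.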

Confidence Interval

In statistics, a confidence interval is an estimated range of likely values for a population parameter, for example, 40 ± 2 or 40 ± 5%. Taking the commonly used 95% confidence level as an example, if the same population were sampled multiple times, and interval estimates made on each occasion, in approximately 95% of the cases, the true population parameter would be contained within the interval. Note that the 95% probability refers to the reliability of the estimation procedure and not to a specific interval. Once an interval is calculated, it either contains or does not contain the population parameter of interest. Some factors that affect the width of a confidence interval include: size of the sample, confidence level, and variability within the sample.
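The repeated-sampling interpretation can be illustrated by simulation. The Python sketch below assumes the simple normal-approximation (Wald) interval p̂ ± z·sqrt(p̂(1-p̂)/n) for a proportion; the true p = 0.3, n = 200, number of repetitions, and seed are illustrative assumptions. It counts how often the interval built from each simulated sample contains the true p.

```python
import math
import random


def wald_interval(successes: int, n: int, z: float) -> tuple[float, float]:
    """Normal-approximation interval p-hat +/- z * sqrt(p-hat * (1 - p-hat) / n)."""
    p_hat = successes / n
    margin = z * math.sqrt(p_hat * (1 - p_hat) / n)
    return p_hat - margin, p_hat + margin


def coverage(p: float, n: int, z: float, repetitions: int, seed: int = 7) -> float:
    """Fraction of simulated samples whose interval contains the true p."""
    rng = random.Random(seed)  # fixed seed for reproducibility
    hits = 0
    for _ in range(repetitions):
        successes = sum(1 for _ in range(n) if rng.random() < p)
        low, high = wald_interval(successes, n, z)
        if low <= p <= high:
            hits += 1
    return hits / repetitions


# Illustrative assumptions: true p = 0.3, n = 200, z = 1.96 (95% level).
print(coverage(p=0.3, n=200, z=1.96, repetitions=2000))
```

With these settings the fraction should land near 0.95. For small n, or p close to 0 or 1, this simple interval is known to contain the true value less often than the nominal level.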

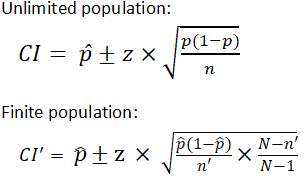

There are different equations that can be used to calculate confidence intervals depending on factors such as whether the standard deviation is known or smaller samples (n<30) are involved, among others. The calculator provided on this page calculates the confidence interval for a proportion and uses the following equations:

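Unlimited population:

\[ \hat{p} \pm z\sqrt{\frac{\hat{p}(1-\hat{p})}{n}} \]

Finite population:

\[ \hat{p} \pm z\sqrt{\frac{\hat{p}(1-\hat{p})}{n}\cdot\frac{N-n}{N-1}} \]

where z is the z-score, p̂ is the population proportion (as estimated from the sample), n is the sample size, and N is the population size.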

Within statistics, a population is a set of events or elements that have some relevance regarding a given question or experiment. It can refer to an existing group of objects, systems, or even a hypothetical group of objects. Most commonly, however, population is used to refer to a group of people, whether they are the number of employees in a company, number of people within a certain age group of some geographic area, or number of students in a university's library at any given time.

It is important to note that the equation needs to be adjusted when considering a finite population, as shown above. The (N-n)/(N-1) term in the finite population equation is referred to as the finite population correction factor, and is necessary because it cannot be assumed that all individuals in a sample are independent. For example, if the study population involves 10 people in a room with ages ranging from 1 to 100, and one of those chosen has an age of 100, the next person chosen is more likely to have a lower age. The finite population correction factor accounts for factors such as these. Refer below for an example of calculating a confidence interval with an unlimited population.

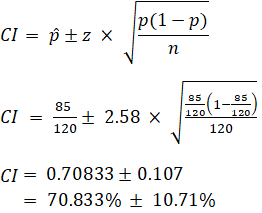

EX: Given that 120 people work at Company Q, 85 of which drink coffee daily, find the 99% confidence interval of the true proportion of people who drink coffee at Company Q on a daily basis.

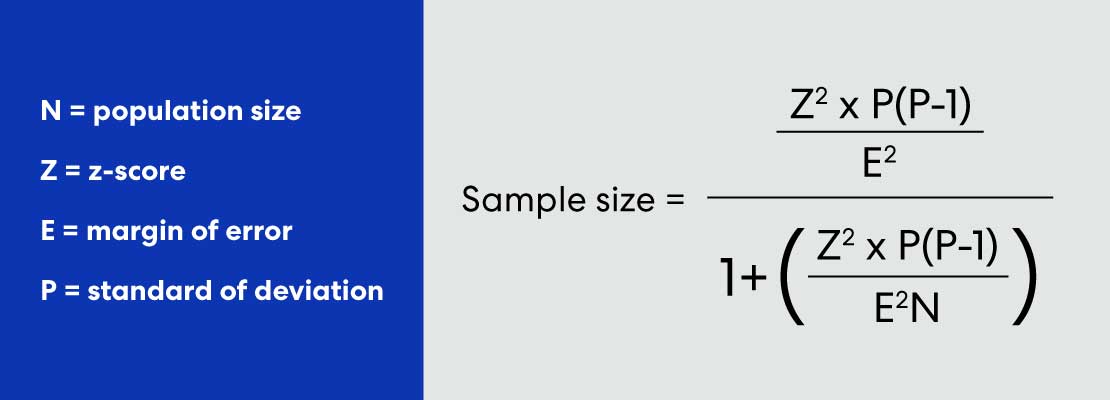
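The Company Q example can be worked with the unlimited-population equation for the confidence interval of a proportion. This Python sketch implements p̂ ± z·sqrt(p̂(1-p̂)/n) and, optionally, multiplies the variance by the finite population correction factor (N-n)/(N-1); the function name `proportion_ci` and its parameters are illustrative, not the calculator's code.

```python
import math
from typing import Optional


def proportion_ci(successes: int, n: int, z: float,
                  population: Optional[int] = None) -> tuple[float, float]:
    """Normal-approximation confidence interval for a proportion.

    If `population` (N) is given, the variance is multiplied by the finite
    population correction factor (N - n) / (N - 1).
    """
    if n <= 0 or not 0 <= successes <= n:
        raise ValueError("need n > 0 and 0 <= successes <= n")
    p_hat = successes / n
    variance = p_hat * (1 - p_hat) / n
    if population is not None:
        if population <= 1 or n > population:
            raise ValueError("need N > 1 and n <= N")
        variance *= (population - n) / (population - 1)
    margin = z * math.sqrt(variance)
    return p_hat - margin, p_hat + margin


# Company Q example: 85 of 120 drink coffee daily, 99% confidence (z = 2.58),
# treated as a sample from an unlimited population.
low, high = proportion_ci(85, 120, z=2.58)
print(f"{low:.3f} to {high:.3f}")
```

This prints an interval of roughly 0.601 to 0.815, i.e. about 0.708 ± 0.107. If the 120 employees were instead treated as the entire finite population (`population=120`), the correction factor becomes zero and the interval collapses to the single value 85/120, since every individual has been observed.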

Sample Size Calculation

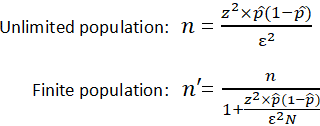

Sample size is a statistical concept that involves determining the number of observations or replicates (the repetition of an experimental condition used to estimate the variability of a phenomenon) that should be included in a statistical sample. It is an important aspect of any empirical study requiring that inferences be made about a population based on a sample. Essentially, sample sizes are used to represent parts of a population chosen for any given survey or experiment. To carry out this calculation, set the margin of error, ε, or the maximum distance desired for the sample estimate to deviate from the true value. To do this, use the confidence interval equation above, but set the term to the right of the ± sign equal to the margin of error, and solve the resulting equation for sample size, n. The equation for calculating sample size is shown below.

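Unlimited population:

\[ n = \frac{z^2\,\hat{p}(1-\hat{p})}{\varepsilon^2} \]

Finite population:

\[ n' = \frac{n}{1 + \frac{n-1}{N}} \]

where z is the z-score, ε is the margin of error, N is the population size, p̂ is the population proportion, n is the sample size for an unlimited population, and n' is the sample size adjusted for a finite population.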

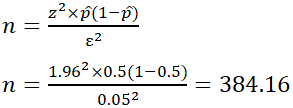

EX: Determine the sample size necessary to estimate the proportion of people shopping at a supermarket in the U.S. that identify as vegan with 95% confidence, and a margin of error of 5%. Assume a population proportion of 0.5, and unlimited population size. Remember that z for a 95% confidence level is 1.96. Refer to the table provided in the confidence level section for z scores of a range of confidence levels.

Thus, for the case above, a sample size of at least 385 people would be necessary. In the above example, some studies estimate that approximately 6% of the U.S. population identify as vegan, so rather than assuming 0.5 for p̂, 0.06 would be used. If it were known that 40 out of 500 people who entered a particular supermarket on a given day were vegan, p̂ would then be 0.08.
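The sample size calculation can be sketched in Python as follows. It assumes the unlimited-population formula n = z²·p̂(1-p̂)/ε², rounded up, and, when a population size N is supplied, the adjustment n' = n / (1 + (n-1)/N), which is what solving the finite-population margin of error (with its (N-n)/(N-1) factor) for the sample size gives. The function name and the N = 1,000 case are illustrative assumptions.

```python
import math
from typing import Optional


def sample_size_proportion(z: float, margin: float, p: float = 0.5,
                           population: Optional[int] = None) -> int:
    """Smallest n with z * sqrt(p * (1 - p) / n) <= margin, rounded up.

    With a finite population N, apply n' = n / (1 + (n - 1) / N).
    """
    n = z ** 2 * p * (1 - p) / margin ** 2
    if population is not None:
        n = n / (1 + (n - 1) / population)
    return math.ceil(n)


print(sample_size_proportion(1.96, 0.05))                   # vegan example, p = 0.5
print(sample_size_proportion(1.96, 0.05, p=0.06))           # using the 6% estimate
print(sample_size_proportion(1.96, 0.05, population=1000))  # hypothetical N = 1,000
```

The first call reproduces the 385 from the vegan example; using p̂ = 0.06 instead gives 87, and restricting the population to a hypothetical 1,000 shoppers gives 278.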


SAMPLE SIZE CALCULATION FOR THESIS (MD/MS/DNB)

Sample Size Calculator

Determination of sample size from number of cases in pilot study.

Determining sample size from a pilot study is the easiest way to determine the sample size for your study. In this type of sample size determination, three values can be obtained from the pilot study data:

(1) Standard Error of Mean, (2) Precision, (3) Sample Size

The formula used for this type of sample size determination is

\( N = \dfrac{Z_\alpha^2 \times SD^2}{d^2} \)

\(Z_\alpha\) – statistical constant (1.96 for a 95% confidence level)

SD – expected standard deviation (which can be obtained from previous studies or a pilot study)

d – precision / allowable error (corresponding to effect size)
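A minimal Python sketch of the pilot-study approach: estimate SD from the pilot measurements, report the standard error of the mean (SD/√n), and apply N = Z²·SD²/d². The pilot values and the precision d = 0.5 are made-up illustrative numbers, using the sample standard deviation (n - 1 denominator) is an assumption, and precision is taken as an input because the page does not show how it derives that value.

```python
import math
import statistics


def pilot_sample_size(pilot_values: list[float], precision: float,
                      z: float = 1.96) -> tuple[float, float, int]:
    """Return (SD, standard error of the mean, required sample size)."""
    if len(pilot_values) < 2:
        raise ValueError("need at least two pilot observations")
    sd = statistics.stdev(pilot_values)                # sample SD from the pilot
    sem = sd / math.sqrt(len(pilot_values))            # standard error of the mean
    n = math.ceil(z ** 2 * sd ** 2 / precision ** 2)   # N = Z^2 * SD^2 / d^2
    return sd, sem, n


# Hypothetical pilot measurements and allowable error d = 0.5 (same units).
pilot = [12, 15, 11, 14, 13, 16, 10, 14]
sd, sem, n = pilot_sample_size(pilot, precision=0.5)
print(f"SD = {sd:.3f}, SEM = {sem:.3f}, required sample size = {n}")
```

Halving the allowable error d quadruples the required sample size, because d enters the formula squared.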

Keep the following general guidelines in mind when determining sample size from a pilot study.

1. Use a pilot study that deals with the same topic as yours. For example, if your study is about neonatal seizures, then the pilot study must also deal with neonatal seizures.

2. Use a pilot study in which the number of cases is close to the number of cases you want to include in your paper. For example, if you want to include 80 cases in your study, then use a pilot study in which close to 80 patients have been included.


Disclaimer!

There are many methods of sample size determination, and choosing one is among the first hurdles when someone starts writing a thesis. I have tried to give the simplest way of determining sample size. Show the method to your PG teacher and get their confirmation before you include it in your thesis.

Sample Size Calculator

Sample size estimation in clinical research: from randomized controlled trials to observational studies.

Introduction

Wang, X. and Ji, X., 2020. Sample size estimation in clinical research: from randomized controlled trials to observational studies. Chest, 158(1), pp.S12-S20.

Wang, X. and Ji, X., 2020. Sample size formulas for different study designs: supplement document for sample size estimation in clinical research.

- Continuous Outcome

- Dichotomous Outcome

- Time-to-event

Reference Example

Chow S-C, Shao J, Wang H, Lokhnygina Y. Sample Size Calculations in Clinical Research. Third ed: Chapman and Hall/CRC; 2017.

Type I error rate, \(\alpha\)

Power, \(1-\beta\)

Ratio of case to control, \(k\)

Allowable difference, \(d=\mu_T-\mu_C\)

Expected population standard deviation, \(\text{SD}\)

\(\delta (>0)\)

Drop rate (%, 0 ~ 99)

Margin on risk difference scale (\(\delta \geq 0\))

Margin for log-scale odds ratio (\(\delta > 0\))

Schoenfeld D. The Asymptotic Properties of Nonparametric-Tests for Comparing Survival Distributions. Biometrika. 1981;68(1):316-319.

Schoenfeld D. Sample-Size Formula for the Proportional-Hazards Regression-Model. Biometrics. 1983;39(2):499-503.

Margin for log-scale hazard ratio (\(\delta > 0\))

Accrual time period, \(T_a\)

Follow-up time period, \(T_b\)

Hazard for the control group, \(\lambda_C\)

Fleiss JL, Levin B, Paik MC. Statistical Methods for Rates and Proportions. Third ed: John Wiley & Sons; 2013.

A case-control study of the relationship between smoking and CHD is planned. A sample of men with newly diagnosed CHD will be compared for smoking status with a sample of controls, assuming an equal number of cases and controls (i.e., \(k = 1\)). Previous surveys have shown that around 0.40 of males without CHD are smokers (i.e., \(p_0 = 0.4\)). To achieve 90% power (i.e., \(1-\beta = 0.9\)) at the 5% level of significance (i.e., \(\alpha = 0.05\)), the sample size needed to detect an odds ratio of 1.5 (i.e., \(OR = 1.5\) or \(p_1 = 0.5\)) is \(519\) cases and \(519\) controls, or \(538\) cases and \(538\) controls when the continuity correction is incorporated.
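The numbers in this example can be reproduced with the usual normal-approximation formula for comparing two independent proportions, together with the Fleiss, Tytun and Ury continuity correction cited on this page. This Python sketch is an assumption about the method, not the calculator's own code; `two_proportion_sample_size` and its parameters are illustrative names, and group 0 (controls) is taken to be k times the size of group 1 (cases).

```python
import math
from statistics import NormalDist


def two_proportion_sample_size(p0: float, p1: float, alpha: float = 0.05,
                               power: float = 0.8, k: float = 1.0,
                               two_sided: bool = True,
                               continuity: bool = False) -> tuple[int, int]:
    """Sizes (n1, n0) for comparing two independent proportions.

    p1 is the proportion in group 1 (e.g. cases) and p0 in group 0
    (e.g. controls); group 0 is k times as large as group 1.
    """
    if p0 == p1:
        raise ValueError("p0 and p1 must differ")
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2) if two_sided else z.inv_cdf(1 - alpha)
    z_beta = z.inv_cdf(power)
    delta = abs(p1 - p0)
    p_bar = (p1 + k * p0) / (1 + k)  # pooled proportion under the null
    n1 = (z_alpha * math.sqrt((1 + 1 / k) * p_bar * (1 - p_bar))
          + z_beta * math.sqrt(p1 * (1 - p1) + p0 * (1 - p0) / k)) ** 2 / delta ** 2
    if continuity:
        # Continuity correction of Fleiss, Tytun and Ury (1980)
        n1 = n1 / 4 * (1 + math.sqrt(1 + 2 * (k + 1) / (n1 * k * delta))) ** 2
    return math.ceil(n1), math.ceil(k * n1)


# Case-control example: p0 = 0.4 (controls), p1 = 0.5 (cases), 90% power.
print(two_proportion_sample_size(0.4, 0.5, power=0.9))
print(two_proportion_sample_size(0.4, 0.5, power=0.9, continuity=True))
```

The two calls return (519, 519) and (538, 538). With `two_sided=False`, the same function also reproduces the one-sided smoking-prevalence survey example on this page (9158 per group, or 9257 with the continuity correction).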

Dupont WD. Power calculations for matched case-control studies. Biometrics. 1988;44(4):1157-1168.

Suppose a researcher conducts a matched case-control study to assess whether bladder cancer may be associated with past exposure to cigarette smoking. Cases will be patients with bladder cancer and controls will be patients hospitalised for injury. One case will be matched to one control (i.e., \(k = 1\)), and the correlation between case and control exposures for matched pairs is estimated to be 0.01 (low, i.e., \(r = 0.01\)). It is assumed that 20% of controls will be smokers or past smokers (i.e., \(p_0 = 0.2\)), and the researcher wishes to detect an odds ratio of 2 (i.e., \(OR = 2\) or \(p_1 = 0.67\)) with 90% power (i.e., \(1-\beta = 0.9\)). The sample size needed for cases and controls is \(16\) and \(16\), respectively.

- Independent

- Proportional Outcome

Woodward M. Formulae for sample size, power and minimum detectable relative risk in medical studies. Journal of the Royal Statistical Society: Series D (The Statistician). 1992;41(2):185-196.

Fleiss JL, Tytun A, Ury HK. A simple approximation for calculating sample sizes for comparing independent proportions. Biometrics. 1980;36(2):343-346.

A government initiative has decided to reduce the prevalence of male smoking to 30% (i.e., \(p_1 = 0.3\)). A sample survey is planned to test, at the 0.05 level (i.e., \(\alpha = 0.05\)), the hypothesis that the percentage of smokers in the male population is 30% against the one-sided alternative that it is greater. The survey should be able to detect a prevalence of 32% (i.e., \(p_0 = 0.32\)), when it is true, with 0.90 power (i.e., \(1-\beta=0.9\)). The survey needs to sample \(9158\) males before the initiative and \(9158\) males after the government initiative (or \(9257\) and \(9257\) when the continuity correction is incorporated).

Ratio of unexposed to exposed, \(k\)

Woodward M (2005). Epidemiology Study Design and Data Analysis. Chapman & Hall/CRC, New York, pp. 381 - 426.

Suppose we wish to test, at the 5% level of significance (i.e., \(\alpha = 0.05\)), the hypothesis that cholesterol means in a population are equal in two study years against the one-sided alternative that the mean is higher in the second of the two years. Suppose that equal-sized samples will be taken in each year (i.e., \(k=1\)), but that these will not necessarily be from the same individuals (i.e., the two samples are drawn independently). Our test is to have a power of 0.95 (i.e., \(1-\beta = 0.95\)) at detecting a difference of 0.5 mmol/L (i.e., \(m_0 = 0, m_1 = 0.5\)). The standard deviation of serum cholesterol in humans is assumed to be 1.4 mmol/L (i.e., \(SD = 1.4\)). We need to test \(170\) people in the first year and \(170\) in the second year.
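This example fits the standard normal-approximation formula for comparing two independent means with a common standard deviation, n₂ = (z_α + z_β)²·SD²·(1 + 1/k)/d² with n₁ = k·n₂. The Python sketch below assumes that formula (the page does not print it, but it reproduces the quoted sizes); the function name and parameters are illustrative.

```python
import math
from statistics import NormalDist


def two_mean_sample_size(diff: float, sd: float, alpha: float = 0.05,
                         power: float = 0.8, k: float = 1.0,
                         two_sided: bool = True) -> tuple[int, int]:
    """Sizes (n1, n2) for comparing two independent means with common SD,
    where group 1 is k times the size of group 2."""
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2) if two_sided else z.inv_cdf(1 - alpha)
    z_beta = z.inv_cdf(power)
    # Var(mean1 - mean2) = sd^2 * (1/n1 + 1/n2) = sd^2 * (1 + 1/k) / n2
    n2 = (z_alpha + z_beta) ** 2 * sd ** 2 * (1 + 1 / k) / diff ** 2
    return math.ceil(k * n2), math.ceil(n2)


# Cholesterol example: one-sided 5% test, 95% power, difference 0.5, SD 1.4.
print(two_mean_sample_size(0.5, 1.4, power=0.95, two_sided=False))
```

This returns (170, 170). With `k=2`, `diff=3`, and `sd=15.6`, the same call reproduces the blood-pressure example on this page: (878, 439).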

Breslow NE, Day NE, Heseltine E, Breslow NE. Statistical Methods in Cancer Research: The Design and Analysis of Cohort Studies. International Agency for Research on Cancer; 1987.

A matched cohort study is to be conducted to quantify the association between exposure A and outcome B. Assume the prevalence of the event in the unexposed group is 0.60 (i.e., \(p_0 = 0.6\)) and the correlation between exposed and unexposed for matched pairs is 0.20 (moderate, i.e., \(r = 0.2\)). To detect a relative risk of 0.75 (i.e., \(RR=0.75\) or \(p_1 = 0.45\)) with 0.80 power (i.e., \(1-\beta = 0.8\)) using a two-sided 0.05 test (i.e., \(\alpha=0.05\)), \(1543\) unexposed and \(1543\) exposed participants are needed.

Rubinstein LV, Gail MH, Santner TJ. Planning the duration of a comparative clinical trial with loss to follow-up and a period of continued observation. J Chronic Dis. 1981;34(9-10):469-479.

Suppose a two-arm prospective cohort study with a 1-year accrual time period (the period during which patients enter the study, \(T_a = 1\)) and a 1-year follow-up time period (the period after accrual has ended before the final analysis is conducted, \(T_b=1\)). Assume the hazard for the unexposed group is constant over time at 0.5 (i.e., \(\lambda_0 = 0.5\)). To achieve 80% power (i.e., \(1-\beta=0.8\)) to detect a hazard ratio of 2 (i.e., \(HR = 2\)) in the hazard of the exposed group using a two-sided 0.05-level log-rank test (i.e., \(\alpha=0.05\)), the required sample size is \(53\) for the unexposed group and \(53\) for the exposed group.
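The page does not print the formula behind this example. One approach that reproduces the quoted 53 per group, offered here as an assumption rather than as the calculator's or the cited paper's exact method, computes each arm's probability of an observed event under exponential survival with uniform accrual over \(T_a\), further follow-up \(T_b\), and no loss to follow-up, then uses n = (z₁₋α/₂ + z₁₋β)²·(1/P₀ + 1/P₁)/(ln HR)² per group. The function names are illustrative.

```python
import math
from statistics import NormalDist


def event_probability(hazard: float, accrual: float, follow_up: float) -> float:
    """P(event observed) under exponential survival, uniform entry over
    [0, accrual], analysis at accrual + follow_up, and no dropout."""
    return 1 - (math.exp(-hazard * follow_up)
                - math.exp(-hazard * (accrual + follow_up))) / (hazard * accrual)


def survival_sample_size(hazard0: float, hazard_ratio: float, accrual: float,
                         follow_up: float, alpha: float = 0.05,
                         power: float = 0.8) -> int:
    """Per-group size for a two-sided comparison of two exponential hazards."""
    if hazard_ratio <= 0 or hazard_ratio == 1:
        raise ValueError("hazard_ratio must be positive and different from 1")
    z = NormalDist()
    z_sum = z.inv_cdf(1 - alpha / 2) + z.inv_cdf(power)
    p0 = event_probability(hazard0, accrual, follow_up)
    p1 = event_probability(hazard0 * hazard_ratio, accrual, follow_up)
    # Var(log HR estimate) is roughly 1/(n * p0) + 1/(n * p1) per group.
    n = z_sum ** 2 * (1 / p0 + 1 / p1) / math.log(hazard_ratio) ** 2
    return math.ceil(n)


print(survival_sample_size(0.5, 2, accrual=1, follow_up=1))
```

This prints 53. Here P₀ ≈ 0.523 and P₁ ≈ 0.767: a participant followed for a uniformly distributed time between \(T_b\) and \(T_a + T_b\) has event probability 1 − [e^(−λT_b) − e^(−λ(T_a+T_b))]/(λT_a).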

Hazard for the unexposed group, \(\lambda_0\)

Woodward M. Formulae for sample size, power and minimum detectable relative risk in medical studies. Journal of the Royal Statistical Society: Series D (The Statistician). 1992;41(2):185-196

Suppose that the primary interest lies in comparing systolic blood pressure between the two cities. Assume that simple random sampling from among 40-44-year-old men is to be used in each city, with twice as many sampled from City 1 as from City 2, so that \(k=2\). Systolic blood pressure is to be compared using a one-sided 5% significance test (i.e., \(\alpha = 0.05\)). The medical investigators wish to be 95% sure of detecting when the average blood pressure in City 1 exceeds that in City 2 by 3 mm Hg (i.e., \(1-\beta=0.95\) and \(m_1 = 3\), \(m_2 = 0\)). From published literature (Smith et al. 1989), the standard deviation of systolic blood pressure is likely to be 15.6 mm Hg (i.e., \(SD=15.6\)). The sample size required is \(878\) for City 1 and \(439\) for City 2.

Ratio of first samples to second samples, \(k\)

Suppose the estimated prevalence of smoking is higher among male students (around 50%, i.e., \(p_1 = 0.5\)) than among female students (around 35%, i.e., \(p_2 = 0.35\)). To be 80% certain (i.e., \(1-\beta=0.8\)) of detecting a prevalence ratio of \(RR = 0.50 / 0.35 \approx 1.43\) using a 0.05 level of significance (i.e., \(\alpha = 0.05\)) with equal numbers of recruited males and females, the study needs to enroll \(170\) males and \(170\) females.

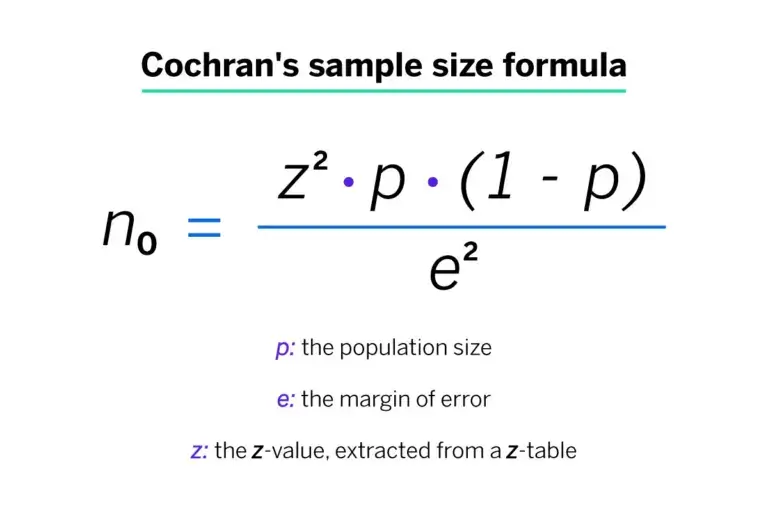

Cochran WG. Sampling Techniques. John Wiley & Sons; 1977.

Kotrlik, J. W., & Higgins, C. C. (2001). Organizational research: Determining appropriate sample size in survey research. Information Technology, Learning, and Performance Journal, 19(1), 43.

Suppose the researcher treats a seven-point (\(7\)) scaled survey as continuous data. Suppose that, for the continuous variable, the level of acceptable error is 3% (i.e., \(d = 0.21\)), and the estimated standard deviation of the scale is 1.167 (i.e., \(SD = 1.167\)). At the 5% Type I error rate (i.e., \(\alpha = 0.05\)), the sample size of the survey is \(119\).
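Assuming the Cochran-style formula for a continuous variable, \(n_0 = z^2 \, SD^2 / d^2\) with \(z = 1.96\) for \(\alpha = 0.05\), and reading the 3% acceptable error as 3% of the seven scale points, the example works out as:

\[
d = 0.03 \times 7 = 0.21, \qquad
n_0 = \frac{1.96^2 \times 1.167^2}{0.21^2} = \frac{3.8416 \times 1.361889}{0.0441} \approx 118.6 \;\Rightarrow\; 119
\]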

Standard deviation of outcome, \(SD\)

Absolute error or precision, \(d\)

Suppose that, for the proportional variable, the level of acceptable error is 5% (i.e., \(d = 0.05\)), and the expected proportion in the population is 0.5 (i.e., \(p = 0.5\)). At the 5% Type I error rate (i.e., \(\alpha = 0.05\)), the sample size of the survey is \(385\).

Expected proportion in population, \(p\)

- Binary Outcome

- Time-to-event Outcome

Riley R D, Ensor J, Snell K I E, Harrell F E, Martin G P, Reitsma J B et al. (2020). Calculating the sample size required for developing a clinical prediction model. BMJ, m441. doi: 10.1136/bmj.m441

Expected value of the (Cox-Snell) R-squared of the new model

Number of candidate predictor parameters for potential inclusion in the new model

Level of shrinkage desired at internal validation after developing the new model

Overall outcome proportion (for a prognostic model) or overall prevalence (for a diagnostic model)

C-statistic reported in an existing prediction model study

Overall event rate in the population of interest

Timepoint of interest for prediction in follow-up

Average (mean) follow-up time anticipated for individuals

Average outcome value in the population of interest

Standard deviation (SD) of outcome values in the population

Multiplicative margin of error (MMOE) acceptable for calculation of the intercept
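As an illustration of how some of the listed parameters enter the calculation, one of the criteria in Riley et al. targets the desired shrinkage S and sets n = p / ((S − 1)·ln(1 − R²_CS / S)), where p is the number of candidate predictor parameters and R²_CS the anticipated Cox-Snell R-squared. This Python sketch covers only that single criterion; the paper's other criteria (for example, precise estimation of the overall outcome proportion or mean) can require a larger n, and the largest n across criteria should be used. The inputs (10 parameters, R²_CS = 0.1, S = 0.9) are illustrative assumptions.

```python
import math


def shrinkage_criterion_n(n_params: int, r2_cs: float,
                          shrinkage: float = 0.9) -> int:
    """n = p / ((S - 1) * ln(1 - R2_cs / S)), rounded up."""
    if not 0 < r2_cs < shrinkage < 1:
        raise ValueError("need 0 < R2_cs < S < 1")
    # (S - 1) < 0 and ln(1 - R2_cs / S) < 0, so the denominator is positive.
    return math.ceil(n_params / ((shrinkage - 1) * math.log(1 - r2_cs / shrinkage)))


# Illustrative inputs: 10 candidate parameters, R2_cs = 0.1, target shrinkage 0.9.
print(shrinkage_criterion_n(10, 0.1))
```

This prints 850, i.e. 85 participants per candidate predictor parameter under these assumptions.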

Lu, Grace, "Sample Size Formulas For Estimating Areas Under the Receiver Operating Characteristic Curves With Precision and Assurance" (2021). Electronic Thesis and Dissertation Repository. 8045. https://ir.lib.uwo.ca/etd/8045

Area under ROC curve

Null hypothesis AUC value

Prevalence (ratio of positive cases / total sample size)

Power, 1-\(\beta\)

Sample Size Calculator

Determines the minimum number of subjects for adequate study power.

Study Group Design

Two independent study groups

One study group vs. population

Primary Endpoint

Dichotomous (yes/no)

Continuous (means)

Statistical Parameters

- Group 1 / Group 2: the standard deviation is determined by examining previous literature of a similar patient population.

- Enrollment ratio: for most studies, the enrollment ratio is 1 (i.e., equal enrollment between both groups). Some studies will have different enrollment ratios (2:1, 3:1) for additional safety data.

- Known population / Study group: the known population's value (for a continuous endpoint, its mean and standard deviation) is determined by examining previous literature of a similar patient population.

- Alpha: most medical literature uses a value of 0.05.

- Power: most medical literature uses a value of 80-90% power (β of 0.1-0.2).

Dichotomous Endpoint, Two Independent Sample Study

| Sample Size | |
|---|---|
| Group 1 | 690 |
| Group 2 | 690 |
| Total | 1380 |

| Study Parameters | |
|---|---|
| Incidence, group 1 | 35% |
| Incidence, group 2 | 28% |
| Alpha | 0.05 |
| Beta | 0.2 |
| Power | 0.8 |

About This Calculator

This calculator uses a number of different equations to determine the minimum number of subjects that need to be enrolled in a study in order to have sufficient statistical power to detect a treatment effect. 1

Before a study is conducted, investigators need to determine how many subjects should be included. By enrolling too few subjects, a study may not have enough statistical power to detect a difference (type II error). Enrolling too many patients can be unnecessarily costly or time-consuming.

Generally speaking, statistical power is determined by the following variables:

- Baseline Incidence: If an outcome occurs infrequently, many more patients are needed in order to detect a difference.

- Population Variance: The higher the variance (standard deviation), the more patients are needed to demonstrate a difference.

- Treatment Effect Size: If the difference between two treatments is small, more patients will be required to detect a difference.

- Alpha: The probability of a type-I error -- finding a difference when a difference does not exist. Most medical literature uses an alpha cut-off of 5% (0.05) -- indicating a 5% chance of declaring a significant difference when no true difference exists.

- Beta: The probability of a type-II error -- not detecting a difference when one actually exists. Beta is directly related to study power (Power = 1 - β). Most medical literature uses a beta cut-off of 20% (0.2) -- indicating a 20% chance that a true difference of the assumed size is missed.

Post-Hoc Power Analysis

To calculate the post-hoc statistical power of an existing trial, please visit the post-hoc power analysis calculator.

References and Additional Reading

- Rosner B. Fundamentals of Biostatistics . 7th ed. Boston, MA: Brooks/Cole; 2011.


Sample Size Calculator

You can use this free sample size calculator to determine the sample size of a given survey per the sample proportion, margin of error, and required confidence level.


What is Sample Size?

Some basic terms are of interest when calculating sample size. These are as follows:

Margin of Error: Margin of error is measured in percentage terms. It indicates the extent to which results from the sample are expected to reflect the overall population. The lower the margin of error, the closer the researcher is to an accurate response at a given confidence level. To determine the margin of error, take a look at our margin of error calculator.

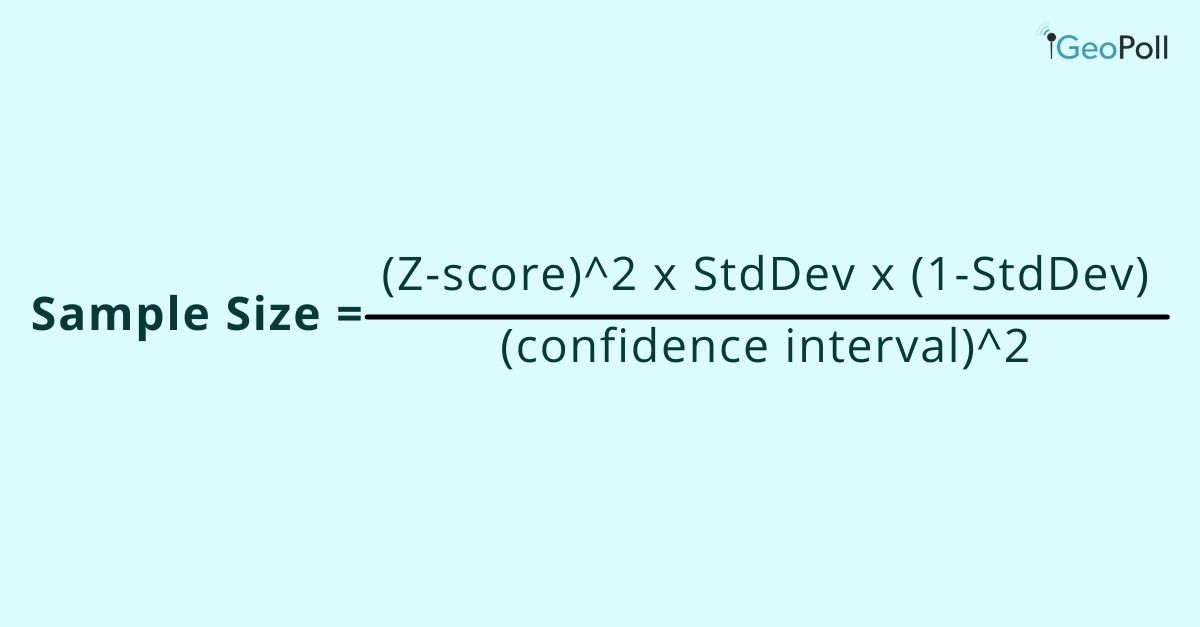

Sample Size Formula

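The Sample Size Calculator uses the following formula:

\[ n = \frac{z^2 \, p(1-p)}{e^2} \]

where z is the z-score associated with a level of confidence, p is the sample proportion (expressed as a decimal), and e is the margin of error.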

Example of a Sample Size Calculation: Suppose we want to estimate the proportion of patients discharged from a given hospital who are happy with the level of care they received while hospitalized, to within 4% at a 90% confidence level. What sample size would we require?

Here z = 1.645 for a 90% confidence level, p = proportion (expressed as a decimal), and e = margin of error (0.04).
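The example does not state an expected proportion, so assuming the most conservative value p = 0.5 (an assumption, not given in the example), the formula n = z²·p(1-p)/e² gives:

\[
n = \frac{1.645^2 \times 0.5 \times (1 - 0.5)}{0.04^2} = \frac{2.706025 \times 0.25}{0.0016} \approx 422.8 \;\Rightarrow\; 423
\]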

| Desired Confidence Level | Z-Score |
|---|---|
| 70% | 1.04 |
| 75% | 1.15 |
| 80% | 1.28 |
| 85% | 1.44 |
| 90% | 1.645 |
| 91% | 1.70 |
| 92% | 1.75 |
| 93% | 1.81 |
| 94% | 1.88 |
| 95% | 1.96 |
| 96% | 2.05 |
| 97% | 2.17 |
| 98% | 2.33 |
| 99% | 2.576 |
| 99.5% | 2.807 |
| 99.9% | 3.29 |
| 99.99% | 3.89 |

Reference: Daniel WW (1999). Biostatistics: A Foundation for Analysis in the Health Sciences. 7th edition. New York: John Wiley & Sons.

Sample Size Calculators

For designing clinical research.


This project was supported by the National Center for Advancing Translational Sciences, National Institutes of Health, through UCSF-CTSI Grant Numbers UL1 TR000004 and UL1 TR001872. Its contents are solely the responsibility of the authors and do not necessarily represent the official views of the NIH.

Please cite this site wherever used in published work:

Kohn MA, Senyak J. Sample Size Calculators [website]. UCSF CTSI. 12 June 2024. Available at https://www.sample-size.net/ [Accessed 23 August 2024]

This site was last updated on June 12, 2024.


Sample Size Calculator

This calculator allows you to determine an appropriate sample size for your study, given different combinations of confidence, precision and variability.

For large populations, it uses Cochran's equation to perform the calculation.

For small populations of a known size, it uses Cochran's equation together with a population correction to calculate sample size.

Instructions

The default values we provide below will work well for many scenarios.

Precision Level is the margin of error you're prepared to tolerate - e.g., 5% means a result that is within 5 percentage points of the true population value.

Confidence Level is a measure of confidence in the precision of the result. For example, selecting 5% as the level of precision, and 95% as the confidence level, indicates a result that is within 5% of the real population value 95% of the time.

Estimated Proportion is a measure of variability. We suggest you leave this at 0.5 - maximum variability - unless you have prior knowledge about the population from which you are drawing your sample.

The final thing to note is that if you know the size of the population from which you wish to take a sample, you can select the Small Population option, and specify population size. This will result in a smaller sample.


Sample Size Calculation and Sample Size Justification

Sample size calculation is concerned with how much data we need to reach a correct decision in a particular study. With more data, our decision will be more accurate and the parameter estimate will have less error. This doesn't necessarily mean that more is always better. A statistician with expertise in sample size calculation applies the appropriate statistical techniques and formulas to find the correct sample size.

There are some basic formulas for sample size calculation, although sample size calculation differs from technique to technique. For example, when we are comparing the means of two populations, the t-test is commonly used if the sample size is less than 30, and the z-test if the sample size is greater than 30. If the population is small and of known size, a finite population correction reduces the required sample size, although the sample then makes up a larger fraction of the population; for large populations, the required sample size levels off. Sample size calculation will also differ with different margins of error.


Statistical consulting provides a priori sample size calculation, to tell you how many participants you need, and sample size justification, to justify the sample you can obtain. Knowing the appropriate number of participants for your particular study and being able to justify your sample size is important to meet your power and effect size requirements. Using the appropriate power and establishing the effect size will tell you how many people you need to find statistically significant results. Power and effect size measurements are also important for lending credibility to your study and are easily calculated by the experts at Statistics Solutions. Sample size justification is as important as the sample size calculation. If the sample size cannot be accurately justified, the researcher will not be able to make a valid inference. Statistics Solutions can assist with determining the sample size / power analysis for your research study. To learn more, visit our webpage on sample size / power analysis, or contact us today.

Tools to Calculate Sample Size and Power Analysis

Statistics Solutions offers tools to calculate sample size for populations and power analysis for your dissertation or research study. Our sample size for populations calculator is available using the Intellectus Statistics application. The Sample Size/Power Analysis Calculator with Write-up is a tool for anyone struggling with power analysis. Simply identify the test to be conducted and the degrees of freedom where applicable (explained in the document), and the sample size/power analysis calculator will calculate your sample size for a power of .80 and an alpha of .05 for small, medium, and large effect sizes. The sample size/power analysis calculator then presents the write-up with references, which can easily be integrated into your dissertation document. For questions about these or any of our products and services, call 877-437-8622.

Additional Resource Pages Related to Sample Size Calculation and Sample Size Justification:

- Sample Size / Power Analysis

- Statistical Power Analysis

- Monte Carlo Methods

- Sample Size Formula

- Standard Error


Sample Size Calculator

What is sample size?

Sample size refers to the number of observations or participants in a product or web experiment. It plays a crucial role in the reliability and accuracy of tests, ensuring that results effectively represent the population you're studying.

How to calculate sample size

Calculating sample size involves considering several factors, including your confidence level, minimum detectable effect, and baseline conversion rate. Inputting these parameters into a sample size calculator helps you determine the minimum number of participants you need to detect a meaningful effect with a certain degree of certainty. This calculation ensures that your experiments are adequately powered to yield statistically significant results, providing reliable insights for decision making.
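As a rough illustration of how these inputs combine, here is a minimal sketch using the textbook fixed-sample formula for comparing two conversion rates. The function name, the 80% power and 5% significance defaults, and treating the minimum detectable effect as a relative lift are assumptions for this example; a commercial calculator may use different statistics (for example, sequential testing) and return different numbers.

```python
from math import ceil
from statistics import NormalDist


def ab_sample_size_per_variant(baseline_rate: float, relative_mde: float,
                               alpha: float = 0.05, power: float = 0.80) -> int:
    """Visitors needed in EACH variant of a two-sided, fixed-horizon A/B test.

    Hypothetical helper based on the two-proportion z-test approximation.
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_mde)        # rate we want to be able to detect
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance threshold
    z_beta = NormalDist().inv_cdf(power)           # quantile for the desired power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    n = (z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2
    return ceil(n)  # round up to whole visitors


# Example: 10% baseline conversion, detect a 10% relative lift (10% -> 11%)
print(ab_sample_size_per_variant(0.10, 0.10))
print(ab_sample_size_per_variant(0.10, 0.05))  # smaller effect, much larger sample
```

Halving the detectable lift roughly quadruples the required sample, which is why small effects are expensive to detect.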


How to Determine Sample Size for a Research Study

Frankline Kibuacha | Apr. 06, 2021 | 3 min read

This article discusses what to consider when determining your sample size and how to calculate it.

Confidence Interval and Confidence Level

As we have noted before, when selecting a sample there are multiple factors that can impact the reliability and validity of results, including sampling and non-sampling errors. When thinking about sample size, the two measures of error most closely tied to it are the confidence interval and the confidence level.

Confidence Interval (Margin of Error)

Confidence intervals measure the degree of uncertainty in a sampling method, that is, how much uncertainty there is in any particular statistic. In simple terms, the confidence interval tells you how closely the results from a study are likely to reflect what you would find if it were possible to survey the entire population being studied. The confidence interval is usually a plus or minus (±) figure. For example, if your confidence interval is ±6 percentage points and 60% of your sample picks an answer, you can be confident, at your chosen confidence level, that if you had asked the entire population, between 54% (60-6) and 66% (60+6) would have picked that answer.

Confidence Level

The confidence level refers to the probability, or certainty, that the confidence interval contains the true population parameter when you draw a random sample many times. It is expressed as a percentage and represents how often the true percentage of the population who would pick an answer lies within the confidence interval. For example, a 99% confidence level means that if you repeated an experiment or survey over and over again, the intervals you computed would contain the true population value 99 percent of the time.
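The "repeat the survey many times" idea can be checked with a small simulation. This sketch assumes simple random sampling of yes/no answers, a hypothetical true proportion of 60%, and the usual normal-approximation interval; it counts how often the 95% interval from each simulated survey contains the true value.

```python
import random
from math import sqrt


def coverage_rate(true_p: float, n: int, z: float = 1.96,
                  trials: int = 2000, seed: int = 1) -> float:
    """Share of simulated surveys whose interval contains true_p (hypothetical helper)."""
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    hits = 0
    for _ in range(trials):
        # One simulated survey: n yes/no answers with true "yes" rate true_p
        yes = sum(rng.random() < true_p for _ in range(n))
        p_hat = yes / n
        moe = z * sqrt(p_hat * (1 - p_hat) / n)  # normal-approximation margin of error
        if p_hat - moe <= true_p <= p_hat + moe:
            hits += 1
    return hits / trials


print(coverage_rate(true_p=0.6, n=400))
```

The printed share should land close to 0.95: roughly 95 of every 100 intervals catch the true value, though any single interval either does or does not.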

The larger your sample size, the more confident you can be that their answers truly reflect the population. In other words, the larger your sample for a given confidence level, the smaller your confidence interval.
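To see how sample size drives the width of the interval, this sketch computes the margin of error for a proportion, z·sqrt(p(1-p)/n), assuming the worst-case p = 0.5 and a 95% confidence level (z = 1.96). The function name is illustrative.

```python
from math import sqrt


def margin_of_error(n: int, p: float = 0.5, z: float = 1.96) -> float:
    """Half-width of the normal-approximation interval for a proportion."""
    return z * sqrt(p * (1 - p) / n)


for n in (100, 400, 1600):
    print(n, round(100 * margin_of_error(n), 2), "percentage points")
```

The output is about 9.8, 4.9 and 2.45 points: quadrupling the sample only halves the margin of error, so extra precision gets expensive quickly.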

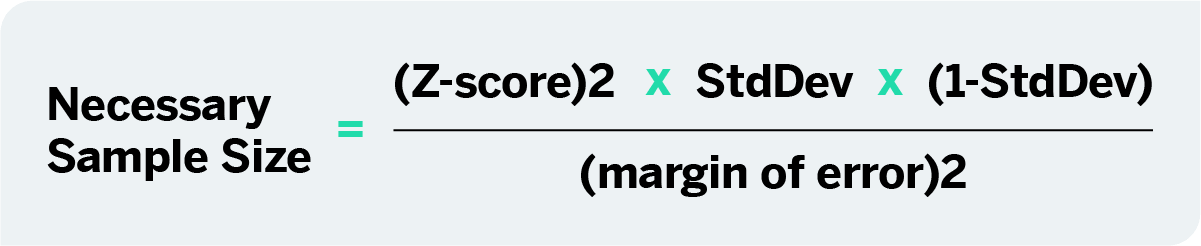

Standard Deviation

Another critical measure when determining the sample size is the standard deviation, which measures how spread out a data set's values are around its mean. In calculating the sample size, the standard deviation is useful for estimating how much the responses you receive will vary from each other and from the mean, and the standard deviation of a sample can be used to approximate the standard deviation of a population.

The greater the variability in the data, the greater the standard deviation. For example, before you send out your survey, consider how much variation you expect in the responses: that expected spread is what the standard deviation captures.

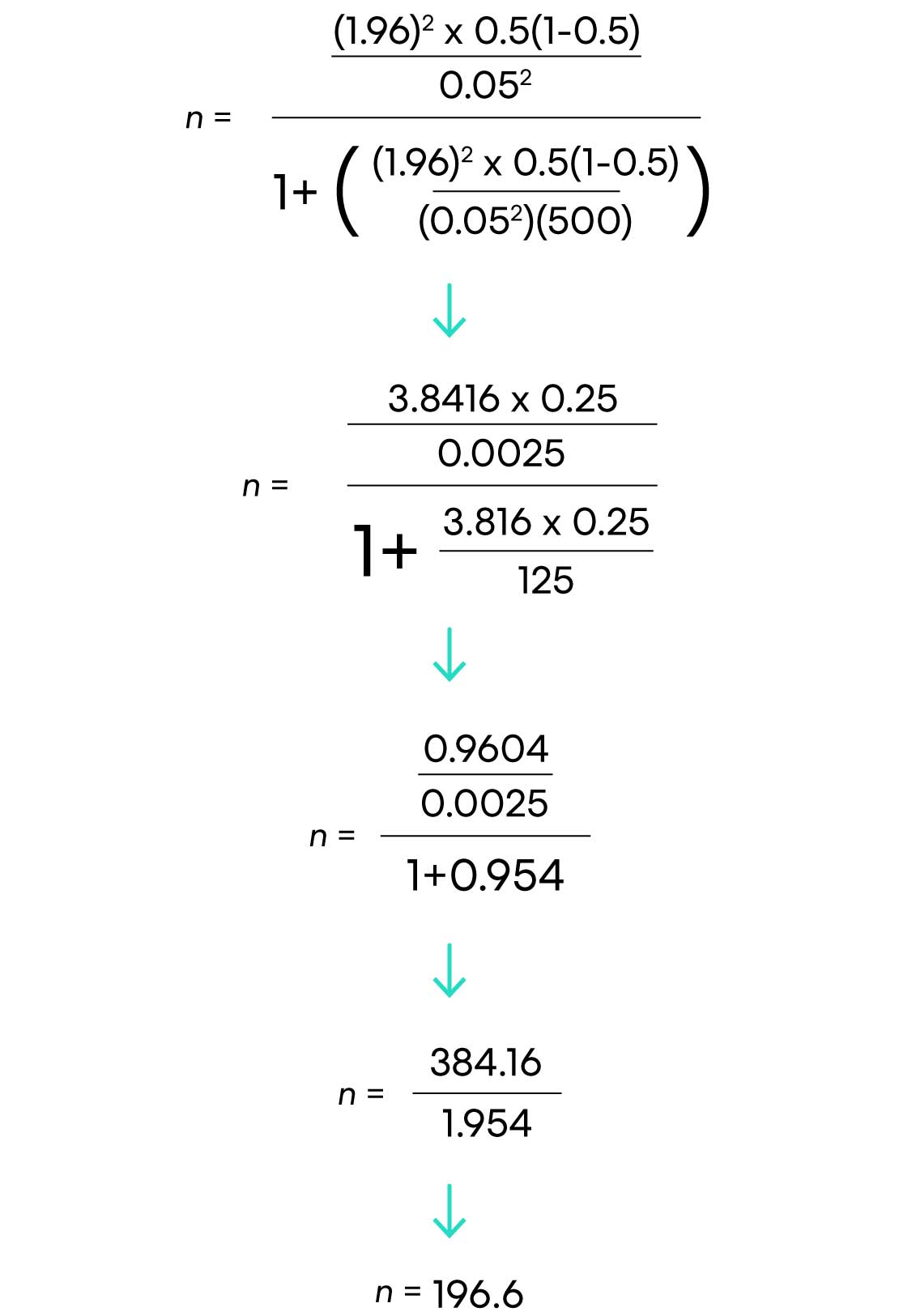

Population Size

As demonstrated through the calculation below, a sample size of about 385 is sufficient to draw conclusions about nearly any population size at the 95% confidence level with a 5% margin of error, which is why samples of 400 and 500 are often used in research. However, if you are looking to draw comparisons between different sub-groups, for example, provinces within a country, a larger sample size is required. GeoPoll typically recommends a sample size of 400 per country as the minimum viable sample for a research project, 800 per country for conducting a study with analysis by a second-level breakdown such as females versus males, and 1200+ per country for doing third-level breakdowns such as males aged 18-24 in Nairobi.
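The article does not show how population size enters the calculation. One common approach, assumed here, is the finite population correction n = n0 / (1 + n0/N), where n0 is the infinite-population result (384.16 for 95% confidence, a ±5% margin and a standard deviation of 0.5) and N is the population size. This sketch, with an illustrative function name, shows the required sample levelling off near 385 as N grows.

```python
from math import ceil


def finite_population_sample(n0: float, population: int) -> int:
    """Shrink an infinite-population sample size n0 for a population of N people."""
    return ceil(n0 / (1 + n0 / population))  # round up to whole respondents


N0 = 384.16  # unrounded result for 95% confidence, ±5% margin, SD 0.5
for population in (1_000, 10_000, 100_000, 1_000_000):
    print(f"{population:>9,}: {finite_population_sample(N0, population)}")
```

For populations of a few thousand the correction matters; beyond roughly 100,000 it barely changes the answer.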

How to Calculate Sample Size

Now that we have defined all the necessary terms, let us briefly look at how to determine the sample size using a formula often called Andrew Fisher's Formula (the same calculation is widely known as Cochran's formula).

- Determine the population size (if known).

- Determine the confidence interval.

- Determine the confidence level.

- Determine the standard deviation (a standard deviation of 0.5 is a safe choice where the figure is unknown).

- Convert the confidence level into a Z-Score. This table shows the z-scores for the most common confidence levels:

| Confidence level | Z-score |
|---|---|
| 80% | 1.28 |
| 85% | 1.44 |
| 90% | 1.65 |
| 95% | 1.96 |
| 99% | 2.58 |

- Put these figures into the sample size formula to get your sample size.
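Instead of a lookup table, the z-score for any two-sided confidence level can be computed from the inverse of the standard normal CDF. This sketch uses Python's standard-library `statistics.NormalDist`; the `z_score` name is illustrative.

```python
from statistics import NormalDist


def z_score(confidence_level: float) -> float:
    """Two-sided critical value: leaves (1 - level) / 2 in each tail."""
    return NormalDist().inv_cdf(0.5 + confidence_level / 2)


for level in (0.80, 0.85, 0.90, 0.95, 0.99):
    print(f"{level:.0%}: {z_score(level):.3f}")
```

This reproduces the z-score table to two decimals, except that the 90% value, 1.645, is conventionally rounded up to 1.65.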

Here is an example calculation:

Say you choose to work with a 95% confidence level, a standard deviation of 0.5, and a confidence interval (margin of error) of ±5%. Substitute these values into the formula:

$$n = \frac{1.96^2 \times 0.5 \times 0.5}{0.05^2} = \frac{3.8416 \times 0.25}{0.0025} = \frac{0.9604}{0.0025} = 384.16$$

Rounding up to the next whole respondent, your sample size should be 385.
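The same arithmetic as a short function, assuming the formula n = Z²σ²/e² used in the example and rounding up, since you cannot survey a fraction of a respondent. The function name is illustrative.

```python
from math import ceil


def sample_size(z: float, std_dev: float, margin: float) -> int:
    """Minimum respondents for a large population: n = z^2 * sd^2 / margin^2."""
    n = (z ** 2) * (std_dev ** 2) / (margin ** 2)
    return ceil(n)  # round up: you cannot survey a fraction of a person


print(sample_size(1.96, 0.5, 0.05))  # 385
print(sample_size(1.96, 0.5, 0.03))  # a tighter ±3% margin needs far more
```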

Fortunately, several online tools can help with this calculation, such as the sample size calculator from Easy Calculation: enter the confidence level, population size, and confidence interval, and it calculates the sample size for you.

GeoPoll’s Sampling Techniques

With the largest mobile panel in Africa, Asia, and Latin America, and reliable mobile technologies, GeoPoll develops unique samples that accurately represent any population. See our country coverage, or contact our team to discuss your upcoming project.


How to calculate sample size using a sample size formula.

Learn how to calculate sample size with a margin of error using these simple sample size formulas for your market research.

Anika Nishat

March 22, 2024

What is sample size determination, and why is it important?

Finding an appropriate sample size, otherwise known as sample size determination, is a crucial first step in market research. Understanding why sample size is important is equally crucial. The answer: it ensures the robustness, reliability, and believability of your research findings. But how is sample size determined?

Calculating your sample size

During the course of your market research, you may be unable to reach the entire population you want to gather data about. While larger sample sizes bring you closer to a 1:1 representation of your target population, working with them can be time-consuming, expensive, and inconvenient. However, small samples risk yielding results that aren't representative of the target population. Striking the right balance can be tricky, but it matters: the ideal sample size for statistical significance is what ensures your research yields reliable and actionable insights.

Luckily, you can easily identify an ideal subset that represents the population and produces strong, statistically significant results that don’t gobble up all of your resources. In this article, we’ll teach you how to calculate sample size with a margin of error to identify that subset.

Five steps to finding your sample size

- Define population size or number of people

- Designate your margin of error

- Determine your confidence level

- Predict expected variance

- Finalize your sample size

What counts as a good statistical sample size varies with your research goals. But by following these five steps, you'll ensure you get the right sample size for your research needs.


Define the size of your population.

Your sample size needs will differ depending on the true population size, or the total number of people you want to draw conclusions about. That's why defining your population is an important first step toward the minimum sample size for statistical significance.

Defining the size of your population can be easier said than done. While there is a lot of population data available, you may be targeting a complex population, or one for which no reliable data currently exists.

Knowing the size of your population is more important when dealing with relatively small, easy-to-measure groups of people. If you're dealing with a larger population, take your best estimate, and roll with it.

Population size is the first input to a sample size formula, which yields more accurate results than a simple estimate and better reflects the population.

Designate your margin of error

Random sample errors are inevitable whenever you're using a subset of your total population. Be confident that your results are accurate by designating how much error you intend to permit: that's your margin of error.

Sometimes called a "confidence interval," a margin of error indicates how much you're willing for your sample mean to differ from your population mean. It's often expressed alongside statistics as a plus-minus (±) figure, indicating a range you can be relatively certain about.

For example, say you survey a sample of your colleagues with a designated 3% margin of error and find that 65% of your office uses some form of voice recognition technology at home. If you were to ask your entire office, you could be reasonably confident that the true figure lies between 62% and 68%.

Determine how confident you can be

Your confidence level reveals how certain you can be that the true proportion of the total population would pick an answer within a particular range. The most common confidence levels are 90%, 95%, and 99%. Researchers most often employ a 95% confidence level.

Don't confuse confidence levels with confidence intervals (i.e., margin of error). The confidence level is how sure you want to be; the confidence interval is the range you are that sure about.

In our example from the previous step, when you put confidence levels and intervals together, you can say you're 95% certain that the true percentage of your colleagues who use voice recognition technology at home is within ± three percentage points of the sample proportion of 65%, or between 62% and 68%.

Your confidence level corresponds to something called a "z-score." A z-score indicates how many standard deviations a value lies above or below the mean of a normal distribution; the z-score for a confidence level marks the bounds, ±z, that enclose that percentage of the distribution (for example, ±1.96 encloses the middle 95%).

Z-scores for the most common confidence levels are:

- 90% = 1.645

- 95% = 1.96

- 99% = 2.576

While not as commonly used, the z-score for an 80% confidence level is approximately 1.28. If you're using a different confidence level, look up its value in a z-score table or use a z-score calculator.

Predict variance by calculating standard deviation in a sample

The last thing you'll want to consider when calculating your sample size is the amount of variance you expect to see among participant responses.

The standard deviation in a sample measures how much individual data points deviate from the sample average.

Don't know how much variance to expect? Use a standard deviation of 0.5, the most conservative choice for a yes/no question, to make sure your group is large enough.


Finding your ideal sample size.

Now that you know what goes into determining sample size, you can easily calculate sample size online. Consider using a sample size calculator to ensure accuracy. Or, calculate it the old-fashioned way: by hand.

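Below, find two sample size calculations: one for a known population size and one for an unknown (or very large) population.

Sample size for known population

$$n = \frac{z^2 \, p(1-p) / e^2}{1 + z^2 \, p(1-p) / (e^2 N)}$$

Sample size for unknown population

$$n = \frac{z^2 \, p(1-p)}{e^2}$$

Here z is the z-score for your confidence level, p is the expected proportion (0.5 when unsure, matching the standard deviation of 0.5 above), e is the margin of error, and N is the population size.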

Here's how the calculations work out for our voice recognition technology example in an office of 500 people, with a 95% confidence level and 5% margin of error.

There you have it! 197 respondents are needed.
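The calculation behind the 197 figure is not shown in the text, and the stated inputs leave out the expected proportion p. As a sketch using the standard formulas for a known population (N = 500, z = 1.96, e = 0.05; the function name is illustrative), the conservative p = 0.5 gives 218 respondents, while an expected proportion of 0.7 gives 197, so the result is sensitive to the proportion you assume.

```python
from math import ceil


def sample_size_known_population(population: int, z: float = 1.96,
                                 margin: float = 0.05, p: float = 0.5) -> int:
    n0 = z ** 2 * p * (1 - p) / margin ** 2  # size for an unknown/very large population
    n = n0 / (1 + n0 / population)           # finite population correction
    return ceil(n)                           # round up to whole respondents


for p in (0.5, 0.7):
    print(f"p = {p}: {sample_size_known_population(500, p=p)} respondents")
```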

You can tweak some things if that number is too big to swallow.

Try increasing your margin of error or decreasing your confidence level. This will reduce the number of respondents necessary but, unfortunately, increase the chances of errors. Even so, understanding why trade-offs are necessary in sample size determination can help you make informed decisions.
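To see the size of these trade-offs, this sketch tabulates the infinite-population sample size for three confidence levels and three margins of error, assuming p = 0.5. It ignores the finite population correction, so for a small group like a 500-person office the real numbers would be lower.

```python
from math import ceil
from statistics import NormalDist


def sample_size(confidence: float, margin: float, p: float = 0.5) -> int:
    z = NormalDist().inv_cdf(0.5 + confidence / 2)  # two-sided z-score
    return ceil(z ** 2 * p * (1 - p) / margin ** 2)


for confidence in (0.90, 0.95, 0.99):
    row = [sample_size(confidence, m) for m in (0.03, 0.05, 0.10)]
    print(f"{confidence:.0%}", row)
```

Moving from ±5% to ±10% cuts the requirement by roughly three quarters, while dropping from 99% to 90% confidence cuts it by a bit more than half.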

Summing Up Sample Size

Calculating sample size sounds complicated, but a simple sample size formula, or one of the calculators now available, makes this tedious part of market research faster.

Once you've determined your sample size, you're ready to create and distribute your sample market research survey. This can be done through methods like running a focus group or even a customer satisfaction survey. Whatever you decide, you now have the information needed to make decisions with confidence.


©All Rights Reserved 2024. Read our Privacy policy


Determining sample size: how to make sure you get the correct sample size.

16 min read. Sample size can make or break your research project. Here's how to master the delicate art of choosing the right sample size.

What is sample size?

Sample size is the beating heart of any research project. It’s the invisible force that gives life to your data, making your findings robust, reliable and believable.

Sample size is what determines if you see a broad view or a focus on minute details; the art and science of correctly determining it involves a careful balancing act. Finding an appropriate sample size demands a clear understanding of the level of detail you wish to see in your data and the constraints you might encounter along the way.

Remember, whether you’re studying a small group or an entire population, your findings are only ever as good as the sample you choose.


Let’s delve into the world of sampling and uncover the best practices for determining sample size for your research.

How to determine sample size

"How much sample do we need?" is one of the most commonly asked questions and stumbling points in the early stages of research design. Finding the right answer to it requires first understanding and answering two other questions:

How important is statistical significance to you and your stakeholders?

What are your real-world constraints?

At the heart of this question is the goal to confidently differentiate between groups, by describing meaningful differences as statistically significant. Statistical significance isn’t a difficult concept, but it needs to be considered within the unique context of your research and your measures.

First, you should consider when you deem a difference to be meaningful in your area of research. While the standards for statistical significance are universal, the standards for “meaningful difference” are highly contextual.

For example, a 10% difference between groups might not be enough to merit a change in a marketing campaign for a breakfast cereal, but a 10% difference in efficacy of breast cancer treatments might quite literally be the difference between life and death for hundreds of patients. The exact same magnitude of difference has very little meaning in one context, but has extraordinary meaning in another. You ultimately need to determine the level of precision that will help you make your decision.

Within sampling, the lowest amount of magnification – or smallest sample size – could make the most sense, given the level of precision needed, as well as timeline and budgetary constraints.

If you're able to detect statistical significance at a difference of 10%, and 10% is a meaningful difference, there is no need for a larger sample size, or higher magnification. However, if the study will only be useful if a significant difference is detected for smaller differences, say, a difference of 5%, the sample size must be larger to accommodate this needed precision. Similarly, if 5% is enough and 3% is unnecessary, there is no need for the larger sample that detecting a 3% difference would require.

You should also consider how much you expect your responses to vary. The more variability there is in responses, the more sample it takes to be confident that a difference between groups is statistically significant rather than noise.

For instance, if you ask, "What month do you think Christmas is in?", nearly everybody will give the exact same answer, so even a small sample pins down the result. If you ask, "How many miles are there between the Earth and the moon?", answers will vary widely, and it will take a lot more sample to find statistically significant differences between groups. Simply put, when your variables have a lot of variance, larger sample sizes make sense.
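In formula terms, the required sample grows with the square of the standard deviation. This sketch uses the standard formula for estimating a mean within ±E, n = (zσ/E)²; the spreads, the margin and the function name are hypothetical. The same σ² term appears in formulas for comparing the means of two groups.

```python
from math import ceil


def sample_size_for_mean(std_dev: float, margin: float, z: float = 1.96) -> int:
    """Respondents needed to estimate a mean within ±margin (same units as std_dev)."""
    return ceil((z * std_dev / margin) ** 2)


# Hypothetical answers: tightly clustered (SD 2) vs widely spread (SD 20)
print(sample_size_for_mean(std_dev=2, margin=1))
print(sample_size_for_mean(std_dev=20, margin=1))
```

A tenfold increase in spread raises the requirement about a hundredfold, from 16 to 1,537 respondents.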

Statistical significance

The determination that a result is unlikely to have occurred by chance alone if there were no real effect, suggesting a genuine effect or relationship between variables.

Magnitude of difference

The size or extent of the difference between two or more groups or variables, providing a measure of the effect size or practical significance of the results.

Actionable insights

Valuable findings or conclusions drawn from data analysis that can be directly applied or implemented in decision-making processes or strategies to achieve a particular goal or outcome.

It’s crucial to understand the differences between the concepts of “statistical significance”, “magnitude of difference” and “actionable insights” – and how they can influence each other:

- Even if there is a statistically significant difference, it doesn’t mean the magnitude of the difference is large: with a large enough sample, a 3% difference could be statistically significant

- Even if the magnitude of the difference is large, it doesn’t guarantee that this difference is statistically significant: with a small enough sample, an 18% difference might not be statistically significant

- Even if there is a large, statistically significant difference, it doesn’t mean there is a story, or that there are actionable insights
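A quick way to see the first two points is a two-proportion z-test on made-up numbers: a 3-point gap measured on 5,000 people per group, and an 18-point gap measured on only 20 per group. This sketch uses the pooled normal approximation with equal group sizes, which is rough at n = 20 (an exact test would be more appropriate there); the function name is illustrative.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_p_value(p1: float, p2: float, n_per_group: int) -> float:
    """Two-sided p-value of a pooled two-proportion z-test (equal group sizes)."""
    pooled = (p1 + p2) / 2  # pooled rate; a simple average works for equal sizes
    se = sqrt(pooled * (1 - pooled) * 2 / n_per_group)
    z = abs(p1 - p2) / se
    return 2 * (1 - NormalDist().cdf(z))


print(two_proportion_p_value(0.50, 0.53, 5_000))  # small gap, large sample
print(two_proportion_p_value(0.40, 0.58, 20))     # large gap, small sample
```

The first p-value comes out well below 0.05 and the second well above it, even though the second difference is six times larger.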

There is no way to guarantee statistically significant differences at the outset of a study – and that is a good thing.

Even with a sample size of a million, there simply may not be any differences – at least, any that could be described as statistically significant. And there are times when a lack of significance is positive.

Imagine if your main competitor ran a multi-million dollar ad campaign in a major city and a huge pre-post study to detect campaign effects, only to discover that there were no statistically significant differences in brand awareness. This may be terrible news for your competitor, but it would be great news for you.

As you determine your sample size, you should consider the real-world constraints to your research.

Factors revolving around timings, budget and target population are among the most common constraints, impacting virtually every study. But by understanding and acknowledging them, you can definitely navigate the practical constraints of your research when pulling together your sample.

Timeline constraints

Gathering a larger sample size naturally requires more time. This is particularly true for elusive audiences, those hard-to-reach groups that require special effort to engage. Your timeline could become an obstacle if it is particularly tight, causing you to rethink your sample size to meet your deadline.

Budgetary constraints

Every sample, whether large or small, inexpensive or costly, signifies a portion of your budget. Samples could be like an open market; some are inexpensive, others are pricey, but all have a price tag attached to them.

Population constraints

Sometimes the individuals or groups you’re interested in are difficult to reach; other times, they’re a part of an extremely small population. These factors can limit your sample size even further.

What’s a good sample size?

A good sample size really depends on the context and goals of the research. In general, a good sample size is one that accurately represents the population and allows for reliable statistical analysis.

Larger sample sizes are typically better because they reduce the likelihood of sampling errors and provide a more accurate representation of the population. However, larger sample sizes often increase the impact of practical considerations, like time, budget and the availability of your audience. Ultimately, you should be aiming for a sample size that provides a balance between statistical validity and practical feasibility.

4 tips for choosing the right sample size

Choosing the right sample size is an intricate balancing act, but following these four tips can take away a lot of the complexity.

1) Start with your goal

The foundation of your research is a clearly defined goal. You need to determine what you’re trying to understand or discover, and use your goal to guide your research methods – including your sample size.

If your aim is to get a broad overview of a topic, a larger, more diverse sample may be appropriate. However, if your goal is to explore a niche aspect of your subject, a smaller, more targeted sample might serve you better. You should always align your sample size with the objectives of your research.

2) Know that you can’t predict everything

Research is a journey into the unknown. While you may have hypotheses and predictions, it’s important to remember that you can’t foresee every outcome – and this uncertainty should be considered when choosing your sample size.

A larger sample size can help to mitigate some of the risks of unpredictability, providing a more diverse range of data and potentially more accurate results. However, you shouldn’t let the fear of the unknown push you into choosing an impractically large sample size.

3) Plan for a sample that meets your needs and considers your real-life constraints

Every research project operates within certain boundaries – commonly budget, timeline and the nature of the sample itself. When deciding on your sample size, these factors need to be taken into consideration.

Be realistic about what you can achieve with your available resources and time, and always tailor your sample size to fit your constraints – not the other way around.

4) Use best practice guidelines to calculate sample size

There are many established guidelines and formulas that can help you in determining the right sample size.

The easiest way to define your sample size is using a sample size calculator, or you can use a manual sample size calculation if you want to test your math skills. Cochran's formula is perhaps the most well-known equation for calculating sample size, and is widely used when the population is large or unknown.

Beyond the formula, it’s vital to consider the confidence interval, which plays a significant role in determining the appropriate sample size – especially when working with a random sample – and the sample proportion. This represents the expected ratio of the target population that has the characteristic or response you’re interested in, and therefore has a big impact on your correct sample size.

If your population is small, or its variance is unknown, there are steps you can still take to determine the right sample size. Common approaches here include conducting a small pilot study to gain initial estimates of the population variance, and taking a conservative approach by assuming a larger variance to ensure a more representative sample size.
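The pilot-study approach can be sketched as follows: compute the sample standard deviation from pilot responses, optionally pad it to be conservative, and plug it into n = (zσ/E)². The pilot ratings, the ±0.25-point margin, the function name and the `inflation` factor are hypothetical choices for illustration, not an established rule.

```python
from math import ceil
from statistics import stdev


def sample_size_from_pilot(pilot: list[float], margin: float, z: float = 1.96,
                           inflation: float = 1.0) -> int:
    """Plan a main study from a pilot's sample standard deviation.

    `inflation` > 1 pads the pilot SD, since a small pilot can easily
    underestimate the true spread (a conservative, hypothetical adjustment).
    """
    s = stdev(pilot) * inflation
    return ceil((z * s / margin) ** 2)


pilot_scores = [6, 7, 5, 8, 7, 6, 9, 4, 7, 6]  # hypothetical 0-10 ratings
print(sample_size_from_pilot(pilot_scores, margin=0.25))
print(sample_size_from_pilot(pilot_scores, margin=0.25, inflation=1.2))
```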


Learn how to determine sample size

To choose the correct sample size, you need to consider a few different factors that affect your research, and gain a basic understanding of the statistics involved. You’ll then be able to use a sample size formula to bring everything together and sample confidently, knowing that there is a high probability that your survey is statistically accurate.

The steps that follow are framed for continuous data, i.e. data that is measured numerically. Note, though, that the worked example below, with a standard deviation of .5 and a margin of error of ±5%, is the standard calculation for a yes/no question (a proportion), where .5 × .5 = .25 is the largest possible variance.

Stage 1: Consider your sample size variables

Before you can calculate a sample size, you need to determine a few things about the target population and the level of accuracy you need:

1. Population size

How many people are you talking about in total? To find this out, you need to be clear about who does and doesn't fit into your group. For example, if you want to know about dog owners, you'll include everyone who currently owns at least one dog. (You may include or exclude those who owned a dog in the past, depending on your research goals.) Don't worry if you're unable to calculate the exact number. It's common to have an unknown number or an estimated range.

2. Margin of error (confidence interval)

Errors are inevitable – the question is how much error you'll allow. The margin of error, AKA confidence interval, is expressed in terms of mean numbers. You can set how much difference you'll allow between the mean number of your sample and the mean number of your population. If you've ever seen a political poll on the news, you've seen a confidence interval and how it's expressed. It will look something like this: "68% of voters said yes to Proposition Z, with a margin of error of +/- 5%."

3. Confidence level

This is separate from the similarly named confidence interval in step 2. It deals with how confident you want to be that the actual mean falls within your margin of error. The most common confidence levels are 90%, 95%, and 99%.

4. Standard deviation

This step asks you to estimate how much the responses you receive will vary from each other and from the mean. A low standard deviation means that the values will be clustered around the mean, whereas a high standard deviation means they are spread out across a much wider range, with very small and very large outlying figures. Since you haven’t run your survey yet, you need an estimate. If your responses are on a 0 to 1 scale (for example, percentages expressed as fractions), the largest possible standard deviation is 0.5, so choosing 0.5 is a safe way to make sure your sample size is large enough.
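To see why 0.5 is a safe upper bound, here is a small sketch using made-up responses on a 0 to 1 scale (the data is purely illustrative). The spread is largest when answers split evenly between the two extremes, which gives exactly 0.5, and much smaller when answers cluster near the middle. It uses `pstdev` from Python's standard `statistics` module.

```python
from statistics import pstdev

# Made-up responses on a 0-to-1 scale (for illustration only).
extreme = [0.0, 1.0] * 50         # answers split evenly between both ends
clustered = [0.4, 0.5, 0.6] * 20  # answers bunched near the middle

print(pstdev(extreme))    # 0.5, the largest spread possible on a 0-1 scale
print(pstdev(clustered))  # about 0.08
```

If your real responses turn out to be less spread out than 0.5, a sample sized with 0.5 will simply be larger than strictly necessary, which errs on the safe side.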

Stage 2: Calculate sample size

Now that you’ve got answers for steps 1 – 4, you’re ready to calculate the sample size you need. This can be done using an online sample size calculator or with paper and pencil.

1. Find your Z-score

Next, you need to turn your confidence level into a Z-score. Here are the Z-scores for the most common confidence levels:

- 90% – Z-score = 1.645

- 95% – Z-score = 1.96

- 99% – Z-score = 2.576

If you chose a different confidence level, use this Z-score table (a resource owned and hosted by SJSU.edu) to find your score.
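If you would rather compute the Z-score than look it up, Python's standard library (3.8 or later) can invert the standard normal distribution with `statistics.NormalDist().inv_cdf`. This is a minimal sketch; the helper name `z_score` is our own. For a two-sided interval, the leftover probability (1 minus the confidence level) is split evenly between the two tails.

```python
from statistics import NormalDist


def z_score(confidence: float) -> float:
    """Two-sided critical value for a confidence level given as a fraction, e.g. 0.95."""
    if not 0 < confidence < 1:
        raise ValueError("confidence must be strictly between 0 and 1")
    # Put half of the leftover probability in each tail of the normal curve.
    return NormalDist().inv_cdf((1 + confidence) / 2)


for level in (0.90, 0.95, 0.99):
    print(f"{level:.0%}: {z_score(level):.3f}")
```

Rounded to three decimals, this reproduces the table values of 1.645, 1.960, and 2.576.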

2. Use the sample size formula

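Plug your Z-score, standard deviation, and margin of error into the sample size calculator, or use this sample size formula to work it out yourself, where n is the sample size, z is the Z-score, σ is your estimated standard deviation, and e is the margin of error (in the same units as σ):

$$n = \frac{z^2 \times \sigma^2}{e^2}$$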

This equation is for an unknown population size or a very large population size. If your population is smaller and known, just use the sample size calculator.
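The formula translates directly into code. For a small, known population, one common textbook approach, assumed here and not necessarily what any particular online calculator uses, is Cochran's finite population correction, n = n₀ / (1 + (n₀ − 1) / N), applied to the large-population result n₀. A minimal sketch; `sample_size` and its parameter names are hypothetical.

```python
import math
from typing import Optional


def sample_size(z: float, sigma: float, margin: float,
                population: Optional[int] = None) -> int:
    """Minimum sample size for estimating a mean to within +/- margin.

    z          -- Z-score for the chosen confidence level (1.96 for 95%)
    sigma      -- estimated standard deviation, in the same units as margin
    margin     -- margin of error, e.g. 0.05 for +/- 5%
    population -- known population size N, or None if unknown or very large
    """
    n0 = (z ** 2) * (sigma ** 2) / (margin ** 2)  # large-population formula
    if population is not None:
        # Cochran's finite population correction shrinks n0 for small N.
        n0 = n0 / (1 + (n0 - 1) / population)
    return math.ceil(n0)  # round up: you can't survey part of a person


print(sample_size(1.96, 0.5, 0.05))                   # 385
print(sample_size(1.96, 0.5, 0.05, population=1000))  # 278
```

For very large populations the correction has almost no effect, which is why the plain formula is used when the population size is unknown.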

What does that look like in practice?

Here’s a worked example, assuming you chose a 95% confidence level, .5 standard deviation, and a margin of error (confidence interval) of +/- 5%.

$$n = \frac{(1.96)^2 \times 0.5 \times 0.5}{(0.05)^2} = \frac{3.8416 \times 0.25}{0.0025} = \frac{0.9604}{0.0025} = 384.16$$

Rounding up to the next whole person, 385 respondents are needed.

Voila! You’ve just determined your sample size.
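To see how your choices drive the result, the same formula can be tabulated for a few confidence levels and margins of error. This sketch assumes the standard deviation of 0.5 from the worked example and computes exact Z-scores with `statistics.NormalDist` instead of the rounded table values, which still gives 385 for 95% confidence and +/- 5%. The helper `required_n` is a hypothetical name.

```python
import math
from statistics import NormalDist

SIGMA = 0.5  # conservative standard deviation, as in the worked example


def required_n(confidence: float, margin: float, sigma: float = SIGMA) -> int:
    """Sample size n = z^2 * sigma^2 / e^2, rounded up (large population)."""
    z = NormalDist().inv_cdf((1 + confidence) / 2)  # two-sided Z-score
    return math.ceil(z ** 2 * sigma ** 2 / margin ** 2)


margins = (0.03, 0.05, 0.10)
print("confidence", *(f"+/-{m:.0%}" for m in margins))
for confidence in (0.90, 0.95, 0.99):
    print(f"{confidence:.0%}", *(required_n(confidence, m) for m in margins))
```

For example, tightening the margin of error from 5% to 3% at 95% confidence raises the requirement from 385 to 1,068 respondents.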


- Open access

- Published: 23 August 2024

Disrupted brain functional connectivity as early signature in cognitively healthy individuals with pathological CSF amyloid/tau

- Abdulhakim Al-Ezzi 1 ,

- Rebecca J. Arechavala ORCID: orcid.org/0000-0002-9799-2610 2 ,

- Ryan Butler 1 ,

- Anne Nolty 3 ,

- Jimmy J. Kang 4 ,

- Shinsuke Shimojo ORCID: orcid.org/0000-0002-1290-5232 5 ,

- Daw-An Wu ORCID: orcid.org/0000-0003-4296-3369 5 ,

- Alfred N. Fonteh 1 ,

- Michael T. Kleinman 2 ,

- Robert A. Kloner 1 , 6 &

- Xianghong Arakaki 1

Communications Biology volume 7, Article number: 1037 (2024)


- Cerebrospinal fluid proteins

- Cognitive control

- Diagnostic markers

- Neurophysiology

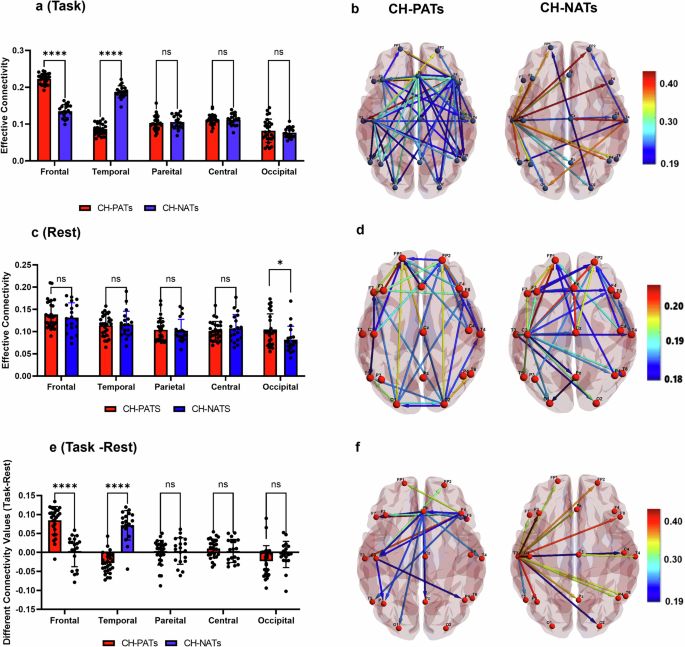

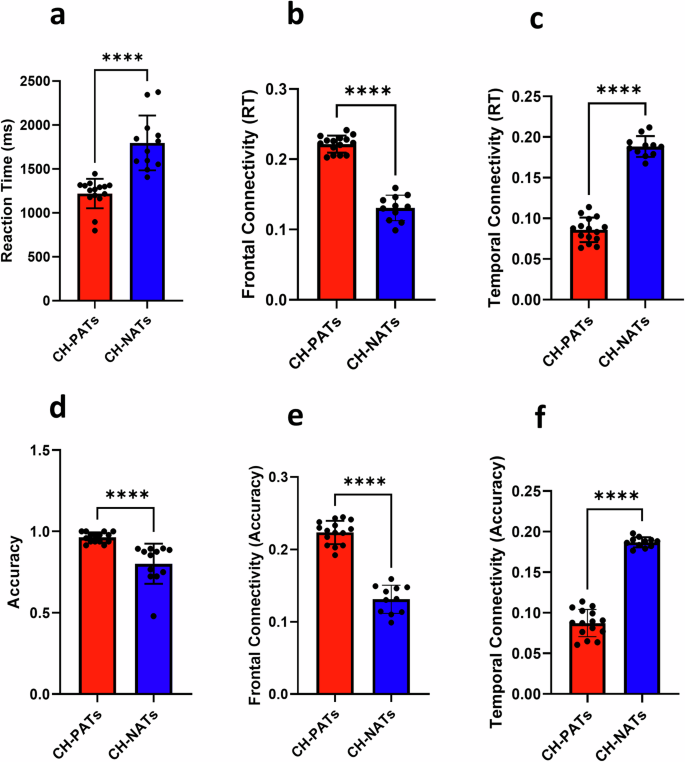

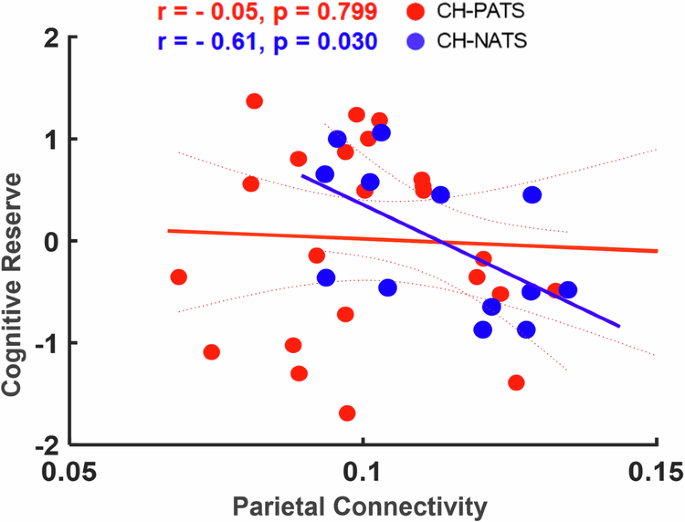

Alterations in functional connectivity (FC) have been observed in individuals with Alzheimer’s disease (AD) with elevated amyloid (Aβ) and tau. However, it is not yet known whether directed FC is already influenced by Aβ and tau load in cognitively healthy (CH) individuals. A 21-channel electroencephalogram (EEG) was recorded from 46 CH individuals, who were classified by their cerebrospinal fluid (CSF) Aβ/tau ratio as pathological (CH-PAT) or normal (CH-NAT). Directed FC was estimated with Partial Directed Coherence in frontal, temporal, parietal, central, and occipital regions. We also examined the correlations between directed FC and various functional metrics, including neuropsychology, cognitive reserve, MRI volumetrics, and heart rate variability, in both groups. Compared to CH-NATs, the CH-PATs showed decreased FC from the temporal regions, indicating a loss of relative functional importance of the temporal regions. In addition, frontal regions showed enhanced FC in the CH-PATs compared to CH-NATs, suggesting neural compensation for the damage caused by the pathology. Moreover, CH-PATs showed greater FC in the frontal and occipital regions than CH-NATs. Our findings provide a useful, non-invasive, EEG-based method for identifying alterations in brain connectivity in CH individuals with a pathological versus normal CSF Aβ/tau ratio.

Introduction

Alzheimer’s disease (AD) is a neurological disorder in which progressive neurodegeneration and synaptic dysfunction result in impairments in a range of cognitive domains. With the continual rise of the global population and life expectancy, the prevalence of neurocognitive disorders or dementia is anticipated to increase substantially, reaching an estimated 74.7 million individuals by 2030 and more than 131.5 million by 2050 worldwide 1 , 2 . Recent research reported early impairments in executive functions and memory among individuals with Aβ and/or tau pathologies 3 , 4 , 5 . These findings support the notion that executive functions and episodic memory 6 , 7 , 8 are affected during the initial stages of AD, primarily due to alteration or pathology of the frontal and temporal cortices 9 , 10 . More specifically, inhibitory abilities 11 , attentional processes 12 , 13 , and visuospatial functions 14 appear to be particularly compromised. A defining feature of AD progression is a reduction in CSF amyloid-β (Aβ) levels together with a rise in biomarkers of neuronal degeneration (such as increased CSF total tau and phosphorylated tau). The reduction in CSF Aβ levels occurs early in the progression of AD, becoming apparent more than twenty years before the onset of any clinical symptoms 15 . In individuals with AD or those at risk of developing AD, the amyloid-β-to-tau ratio is often low, indicating an accumulation of Aβ plaques and/or tau tangles in the brain 6 , 16 , 17 . Lei Wang et al. found that lower CSF Aβ42 levels and higher tau/Aβ42 ratios were strongly correlated with a reduction in hippocampal volume and with indicators of progressive atrophy of the cornu ammonis subfield in pre-clinical AD individuals, but not in cognitively healthy (CH) individuals 18 .
Compared to Aβ42 and/or tau alone, the Aβ42/tau ratio demonstrated greater sensitivity in detecting pre-symptomatic AD and distinguishing it from frontotemporal dementia 19 . Consequently, the Aβ42/tau ratio may be a more sensitive biomarker than individual biomarkers for detecting the earliest stages of preclinical AD. Preclinical investigations offer robust evidence supporting functional connectivity as a probable intermediary mechanism linking Aβ to tau secretion and accumulation 20 . Despite the significant research dedicated to unraveling AD pathogenesis, there is currently a lack of sensitive, specific, reliable, objective, and easily scalable biomarkers or endpoints to guide clinical trials and facilitate early risk detection in clinical settings.

Several large prospective studies have attempted to characterize early diagnostic criteria in people at risk of developing AD. These assessments include pathological markers (Aβ and tau pathologies) 21 , neuropsychological scores (Montreal Cognitive Assessment (MoCA) and Mini-Mental State Examination (MMSE)) 22 , neuroimaging (magnetic resonance imaging (MRI), magnetoencephalography (MEG), and electroencephalography (EEG)) 23 , and heart rate variability (HRV) 24 . EEG brain connectivity, MRI brain structures, neuropsychological assessments, and HRV are intricately correlated measures that can predict early AD pathology. For example, a recent examination of HRV from the Multi-Ethnic Study of Atherosclerosis revealed a correlation between higher HRV and superior cognitive function across various cognitive domains 25 . We previously reported a significant association between high resting heart rate (HR) and less negative alpha event-related desynchronization (ERD) during Stroop testing in individuals with pathological Aβ/tau, compared with those with normal Aβ/tau 26 . These findings prompt further investigation into the brain connectivity associated with pathological Aβ/tau during task switching. Therefore, in this study we aim to integrate brain activity, neuropsychology, and HRV assessments to facilitate early detection of AD risk, understand disease mechanisms, and ultimately help improve outcomes for individuals affected by AD by addressing the multifaceted nature of the disease.

Abnormal brain connectivity, measured by MRI in regions with early Aβ burden (e.g., the default mode network (DMN)), has been shown when Aβ fibrils just start to accumulate 27 . However, to our knowledge, this abnormality has not been reported or tested in EEG investigations. Both Aβ and tau pathologies have been shown to affect the structural and functional connectivity of brain networks 28 . Abnormal FC has been consistently identified in the early stages of AD, before the appearance of clinical symptoms or brain structural changes 29 . For instance, a recent study achieved 90% accuracy in classifying brain Aβ and tau pathology in subjective cognitive decline from mild cognitive impairment (MCI) individuals using EEG coherence 30 . FC studies have found that abnormal CSF levels of phosphorylated tau and Aβ in early AD are linked with disrupted cortical networks involving the anterior and posterior cingulate cortex and the temporal and frontal cortices 31 . We previously reported that alpha-band ERD increased in CH individuals with a pathological Aβ/tau ratio (CH-PATs) 32 , compared to CH individuals with a normal Aβ/tau ratio (CH-NATs). Using regional interconnectivity methods, a previous study found that the connection between temporal and frontal regions is a characteristic pattern of the pathological transition from normal cognition to MCI, and that the density of edges in these networks differs between healthy controls (HC) and MCI 33 . The decreased patterns of regional hemispheric interconnectivity in the metabolic network depend on pathology severity 33 . Therefore, EEG is a promising, noninvasive diagnostic method with high temporal resolution, offering a cost-effective and easily accessible biomarker to track and predict the severity of cognitive dysfunction in degenerative diseases.
As memory (predominantly localized in the temporal region) and executive functions (mainly associated with the frontal region) are the two sensitive cognitive domains that are abnormal in early AD 9 , 10 , it is compelling to study frontal and temporal FC in the early stages of the AD spectrum.

In the present study, we aimed to: (1) compare effective connectivity (EC) between CH-NATs and CH-PATs during task switching, and explore the potential contribution of task difficulty levels; and (2) study the links between EC and neuropsychological measures, structural MRI brain volumes, and HRV.

Participant characteristics

The demographic and clinical characteristics of our subjects have been reported in our previous work 32 . The ages of CH-PATs and CH-NATs were comparable, and both groups also had similar educational levels in terms of mean years of education. There were no differences in cognitive reserve (CR) and intelligence quotient (IQ) scores between CH-NATs and CH-PATs.

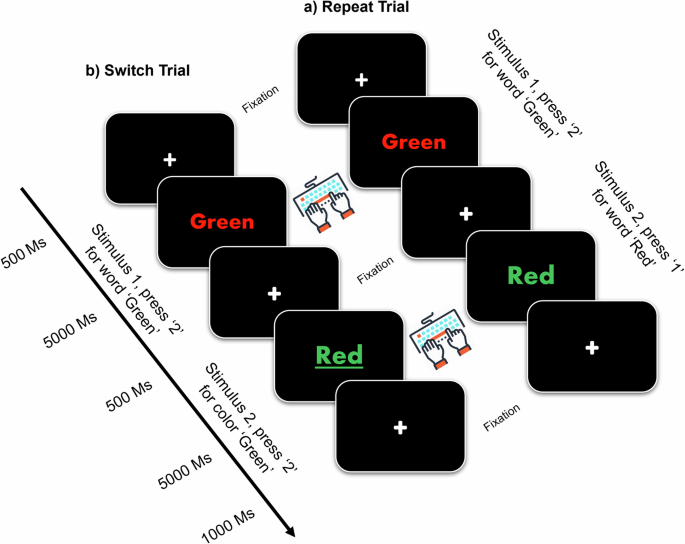

Behavioral analysis

Trial type (repeat or switch) had a notable effect on both accuracy (ACC) and reaction time (RT): RT was significantly faster (p < 0.0001) and ACC significantly higher (p = 0.048) during repeat trials than during switch trials. In addition, there was no significant group × trial type interaction between the CH-PAT and CH-NAT participants for RT, F(1, 108) = 0.0001, p = 0.991, or for ACC, F(1, 108) = 0.003, p = 0.960. A comparison of the main effect of trial type and the group × trial type interaction between CH-NATs and CH-PATs was reported previously in our work 32 .

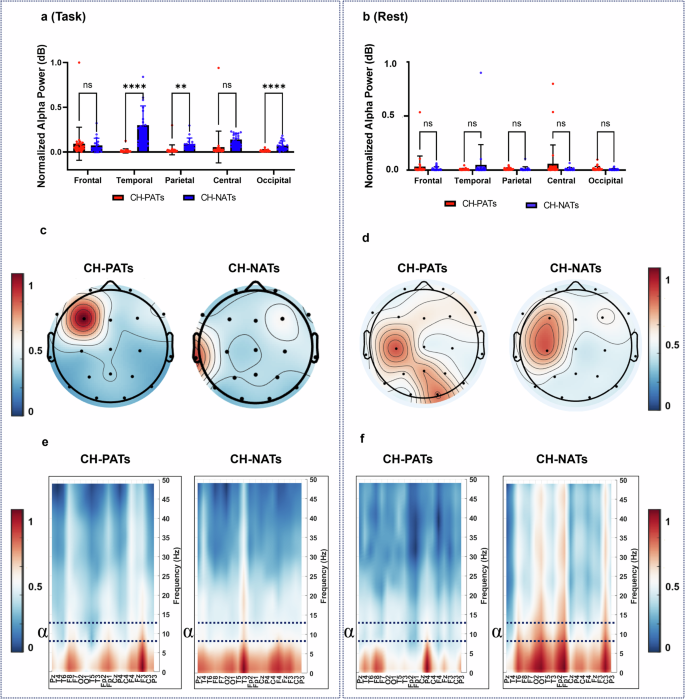

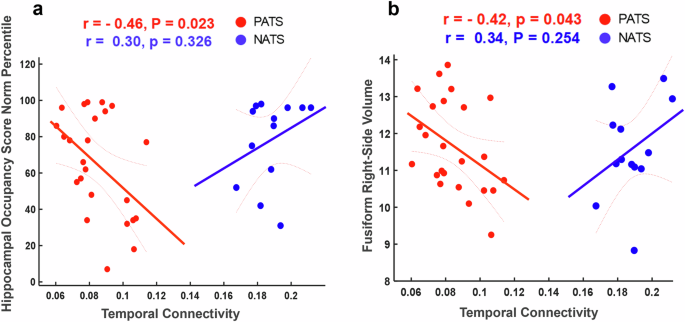

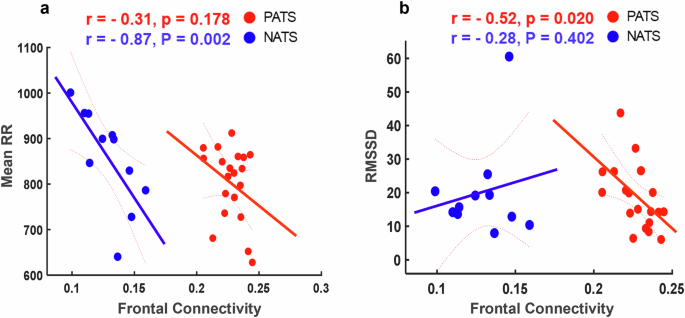

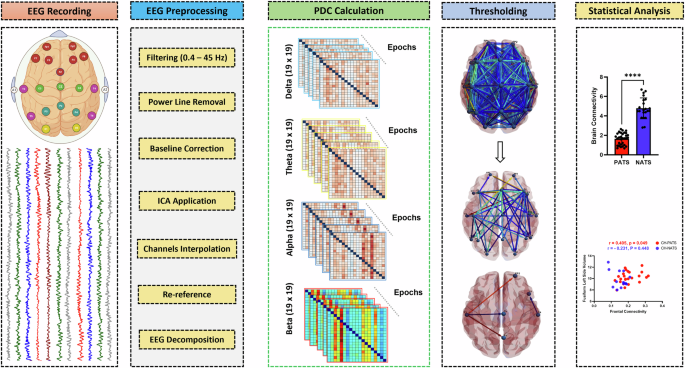

EEG power spectral density