- Tuple Assignment

Introduction

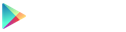

Tuples are a built-in data type in Python. A tuple is an ordered collection of elements, which may be of different data types. We write a tuple by listing its elements inside parentheses, separated by commas. A tuple behaves like a list that cannot be changed after it is created, which is why we call tuples immutable. We access elements by index, starting from zero. Here, we will study tuple assignment, which is a very useful feature in Python.

Python lets us create a tuple in various ways, and it also lets us assign the elements of a tuple to several variables at once. This feature is called tuple assignment, and it is also known as tuple unpacking.

The process of assigning values to a tuple is known as packing. Unpacking, or tuple assignment, on the other hand, is the process that assigns the values on the right-hand side to the variables on the left-hand side. In unpacking, we extract the values of the tuple into separate variables, one variable per element.

Moreover, while performing tuple assignments we should keep in mind that the number of variables on the left-hand side and the number of values on the right-hand side should be equal. Or in other words, the number of variables on the left-hand side and the number of elements in the tuple should be equal. Let us look at a few examples of packing and unpacking.

Tuple Packing (Creating Tuples)

A tuple can contain elements that all share one data type, or elements of mixed data types, so there are many ways to create one. Let us look at a few examples of creating tuples in Python, which we refer to as packing.

Example 1: Tuple with integers as elements

Example 2: Tuple with mixed data type

Example 3: Tuple with a tuple as an element

Example 4: Tuple with a list as an element
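The four cases listed above might look like the following. This is only a minimal sketch: the variable names and values are illustrative assumptions, not the original examples.

```python
# Example 1: tuple with integers as elements
numbers = (1, 2, 3)

# Example 2: tuple with mixed data types
mixed = (1, "two", 3.0)

# Example 3: tuple with a tuple as an element
nested = (1, (2, 3), 4)

# Example 4: tuple with a list as an element
with_list = (1, [2, 3], 4)

print(numbers, mixed, nested, with_list)
```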

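If a tuple has only a single element, we must end it with a comma. Writing just the element inside parentheses does not create a tuple, because Python treats the parentheses as ordinary grouping and the result keeps the type of the element itself.

For example, (5) is the integer 5, not a tuple. The correct way to define a tuple with a single element is (5,), with a trailing comma.

Moreover, if you write any sequence of values separated by commas, Python considers it a tuple, even without parentheses.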
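A quick way to check both rules is to inspect the resulting types. The names and values here are only illustrative.

```python
not_a_tuple = (5)   # parentheses are just grouping here
single = (5,)       # the trailing comma makes it a tuple
bare = 1, 2, 3      # commas alone are enough to build a tuple

print(type(not_a_tuple))  # <class 'int'>
print(type(single))       # <class 'tuple'>
print(bare)               # (1, 2, 3)
```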

Tuple Assignment (Unpacking)

Unpacking, or tuple assignment, is the process that assigns the values on the right-hand side to the variables on the left-hand side. In unpacking, we extract the values of the tuple into separate variables, one per element.
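As a small sketch of packing followed by unpacking (the name `point` and its values are assumptions for illustration):

```python
point = (10, 20, 30)  # packing: three values stored in one tuple
x, y, z = point       # unpacking: one variable per element
print(x, y, z)        # 10 20 30
```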

Frequently Asked Questions (FAQs)

Q1. State true or false:

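Inserting elements in a tuple is unpacking.

A1. False. Putting values into a tuple is packing; unpacking extracts the values of a tuple into variables.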

Q2. What is the other name for tuple assignment?

A2. Unpacking

Q3. In unpacking what is the important condition?

A3. The number of variables on the left-hand side and the number of elements in the tuple should be equal.

Q4. Which error displays when the above condition fails?

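A4. ValueError: not enough values to unpack (when there are more variables than values), or ValueError: too many values to unpack (when there are more values than variables).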

Python Tuple: How to Create, Use, and Convert

A Python tuple is one of Python’s three built-in sequence data types, the others being lists and range objects. A Python tuple shares a lot of properties with the more commonly known Python list:

- It can hold multiple values in a single variable

- It’s ordered: the order of items is preserved

- A tuple can have duplicate values

- It’s indexed: you can access items numerically

- A tuple can have an arbitrary length

But there are significant differences:

- A tuple is immutable; it can not be changed once you have defined it.

- A tuple is defined using optional parentheses () instead of square brackets []

- Since a tuple is immutable, it can be hashed (as long as all of its elements are hashable), and thus it can act as the key in a dictionary

Table of Contents

- 1 Creating a Python tuple

- 2 Multiple assignment using a Python tuple

- 3 Indexed access

- 4 Append to a Python Tuple

- 5 Get tuple length

- 6 Python Tuple vs List

- 7 Python Tuple vs Set

- 8 Converting Python tuples

Creating a Python tuple

We create tuples from individual values using optional parentheses (round brackets) like this:
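A minimal sketch of both spellings, with made-up values:

```python
t1 = (1, 2, 3)   # with parentheses
t2 = 1, 2, 3     # the parentheses are optional
print(t1 == t2)  # True
```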

Like everything in Python, tuples are objects and have a class that defines them. We can also create a tuple by using the tuple() constructor from that class. It allows any Python iterable type as an argument. In the following example, we create a tuple from a list:
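For instance, assuming a small list named `my_list` (an illustrative name):

```python
my_list = [1, 2, 3]
t = tuple(my_list)  # tuple() accepts any iterable
print(t)            # (1, 2, 3)
```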

Now you also know how to convert a Python list to a tuple!

Which method is best?

It’s not always easy for Python to infer if you’re using regular parentheses or if you’re trying to create a tuple. To demonstrate, let’s define a tuple holding only one item:
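The two attempts could look like this; the variable names are assumptions used only to tell the tries apart.

```python
first_try = (1)    # just the integer 1 in redundant parentheses
second_try = (1,)  # the comma makes this a one-element tuple

print(type(first_try))   # <class 'int'>
print(type(second_try))  # <class 'tuple'>
```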

Python sees the number one, surrounded by useless parentheses on the first try, so Python strips down the expression to the number 1. However, we added a comma in the second try, explicitly signaling to Python that we are creating a tuple with just one element.

A tuple with just one item is useless for most use cases, but it demonstrates how Python recognizes a tuple: because of the comma.

If we can use tuple(), why is there a second method as well? The other notation is more concise, and it has another advantage: you can use it to unpack multiple lists into a single tuple like this:
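A short sketch with two hypothetical lists, `l1` and `l2`:

```python
l1 = [1, 2, 3]
l2 = [4, 5, 6]
t = (*l1, *l2)  # each * spreads one list into the new tuple
print(t)        # (1, 2, 3, 4, 5, 6)
```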

The leading * operator unpacks the lists into individual elements. It’s as if you would have typed them individually at that spot. This unpacking trick works for all iterable types if you were wondering!

Multiple assignment using a Python tuple

You’ve seen something called tuple unpacking in the previous topic. There’s another way to unpack a tuple, called multiple assignment. It’s something that you see used a lot, especially when returning data from a function, so it’s worth taking a look at this.

Multiple assignment works like this:
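A minimal sketch, using an invented `person` tuple:

```python
person = ("Erik", 38, True)
name, age, registered = person  # one variable per element, in order
print(name)                     # Erik
print(age)                      # 38
```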

Like using the *, this type of unpacking works for all iterable types in Python, including lists and strings.

As I explained in the Python trick on returning multiple values from a Python function, unpacking tuples works great in conjunction with a function that returns multiple values. It’s a neat way of returning more than one value without having to resort to data classes or dictionaries :
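As a rough illustration, here is a hypothetical function `get_user` (not from the original article) that returns two values, which the caller unpacks in one statement:

```python
def get_user():
    # Hypothetical helper: the comma packs both values into one tuple
    return "alice", 42

name, user_id = get_user()  # unpack the returned tuple
print(name, user_id)        # alice 42
```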

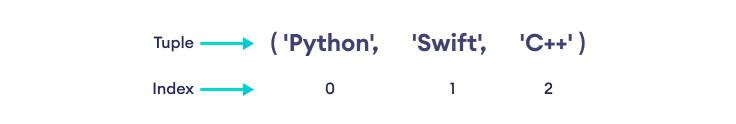

Indexed access

We can access a tuple using index numbers like [0] and [1] :
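For example, with an illustrative tuple of three strings:

```python
t = ("a", "b", "c")
print(t[0])   # a
print(t[1])   # b
print(t[-1])  # c (negative indexes count from the end)
```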

Append to a Python Tuple

Because a tuple is immutable, you can not append data to a tuple after creating it. For the same reason, you can’t remove data from a tuple either. You can, of course, create a new tuple from the old one and append the extra item(s) to it this way:
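A sketch of that approach; the starting values and the two strings are assumptions, since the original snippet is not shown here.

```python
t = (1, 2, 3)
original = t

t = (*t, "one", "two")  # builds a brand-new tuple and rebinds the name t
print(t)                # (1, 2, 3, 'one', 'two')
print(original)         # (1, 2, 3): the old tuple itself never changed
```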

What we did was unpack t, create a new tuple with the unpacked values and two different strings, and assign the result to t again.

Get tuple length

The len() function works on Python tuples just like it works on all other iterable types like lists and strings:
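For example:

```python
t = (1, 2, 3)
print(len(t))        # 3
print(len("hello"))  # 5, len() works the same way on a string
```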

Python Tuple vs List

The most significant difference between a Python tuple and a Python list is that a list is mutable, while a tuple is not. After defining a tuple, you can not add or remove values. In contrast, a list allows you to add or remove values at will. This property can be an advantage; you can see it as write protection. If a piece of data is not meant to change, using a tuple can prevent errors. After all, six months from now, you might have forgotten that you should not change the data. Using a tuple prevents mistakes.

Another advantage is that tuples are faster, or at least that is what people say. I have not seen proof, but it makes sense. Since it’s an immutable data type, a tuple’s internal implementation can be simpler than a list’s. After all, a tuple doesn’t need ways to grow larger or insert elements at arbitrary positions. In CPython, a list is a dynamic array that over-allocates room so it can grow, while a tuple uses a simple fixed-size array.

Python Tuple vs Set

The most significant difference between tuples and Python sets is that a tuple can have duplicates while a set can’t. The entire purpose of a set is its inability to contain duplicates. It’s an excellent tool for deduplicating your data.

Converting Python tuples

Convert tuple to list

Python lists are mutable, while tuples are not. If you need to, you can convert a tuple to a list with one of the following methods.

The cleanest and most readable way is to use the list() constructor:
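A minimal sketch (the name `as_list` is illustrative):

```python
t = (1, 2, 3)
as_list = list(t)
as_list.append(4)  # the list can change; the tuple stays as it was
print(as_list)     # [1, 2, 3, 4]
print(t)           # (1, 2, 3)
```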

A more concise but less readable method is to use unpacking. This unpacking can sometimes come in handy because it allows you to unpack multiple tuples into one list or add some extra values otherwise:
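For instance, assuming two small tuples `t1` and `t2`:

```python
t1 = (1, 2, 3)
t2 = (4, 5)
merged = [*t1, *t2, 6]  # two tuples plus one extra value
print(merged)           # [1, 2, 3, 4, 5, 6]
```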

Convert tuple to set

Analogous to the conversion to a list, we can use set() to convert a tuple to a set:
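A short sketch with a tuple that contains a duplicate:

```python
t = (1, 2, 2, 3)
s = set(t)
print(s == {1, 2, 3})  # True: the duplicate 2 is dropped
```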

Here, too, we can use unpacking:
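The unpacking form, again with illustrative values:

```python
t = (1, 2, 2, 3)
s = {*t, 4}               # unpack the tuple and add one more value
print(s == {1, 2, 3, 4})  # True
```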

Convert tuple to string

Like most objects in Python, a tuple has a so-called dunder method, called __str__ , which converts the tuple into a string. When you want to print a tuple, you don’t need to do so explicitly. Python’s print function will call this method on any object that is not a string. In other cases, you can use the str() constructor to get the string representation of a tuple:
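For example:

```python
t = (1, 2, 3)
text = str(t)  # explicit conversion
print(text)    # (1, 2, 3)
print(t)       # print() converts the tuple for us
```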

13.3. Tuple Assignment with Unpacking

Python has a very powerful tuple assignment feature that allows a tuple of variable names on the left of an assignment statement to be assigned values from a tuple on the right of the assignment. Another way to think of this is that the tuple of values is unpacked into the variable names.
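A sketch of such an assignment with seven names on the left; the record and its field names are invented for illustration and are not the textbook's own data.

```python
# Hypothetical seven-field record
record = ("Sam", "Lee", 1990, "Oslo", "Norway", "Engineer", "Acme")

(first, last, birth_year, city, country, job, employer) = record
print(first, birth_year, employer)  # Sam 1990 Acme
```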

This does the equivalent of seven assignment statements, all on one easy line.

Naturally, the number of variables on the left and the number of values on the right have to be the same.

Unpacking into multiple variable names also works with lists, or any other sequence type, as long as there is exactly one value for each variable. For example, you can write x, y = [3, 4] .

13.3.1. Swapping Values between Variables

This feature is used to enable swapping the values of two variables. With conventional assignment statements, we have to use a temporary variable. For example, to swap a and b :
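The conventional three-step swap might look like this (the starting values are assumptions):

```python
a = 1
b = 2

temp = a  # remember a before it is overwritten
a = b
b = temp
print(a, b)  # 2 1
```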

Tuple assignment solves this problem neatly:
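The same swap written as a tuple assignment, with illustrative starting values:

```python
a = 1
b = 2

(a, b) = (b, a)  # the right side is fully evaluated before assigning
print(a, b)      # 2 1
```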

The left side is a tuple of variables; the right side is a tuple of values. Each value is assigned to its respective variable. All the expressions on the right side are evaluated before any of the assignments. This feature makes tuple assignment quite versatile.

13.3.2. Unpacking Into Iterator Variables

Multiple assignment with unpacking is particularly useful when you iterate through a list of tuples. You can unpack each tuple into several loop variables. For example:
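A sketch of such a loop. Only the first tuple comes from the text; the other two names are placeholders.

```python
authors = [("Paul", "Resnick"), ("Jane", "Doe"), ("John", "Smith")]

for first_name, last_name in authors:
    # each tuple is unpacked into the two loop variables
    print("first name:", first_name, "last name:", last_name)
```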

On the first iteration the tuple ('Paul', 'Resnick') is unpacked into the two variables first_name and last_name. On the second iteration, the next tuple is unpacked into those same loop variables.

13.3.3. The Pythonic Way to Enumerate Items in a Sequence

When we first introduced the for loop, we provided an example of how to iterate through the indexes of a sequence, and thus enumerate the items and their positions in the sequence.

We are now prepared to understand a more pythonic approach to enumerating items in a sequence. Python provides a built-in function enumerate . It takes a sequence as input and returns a sequence of tuples. In each tuple, the first element is an integer and the second is an item from the original sequence. (It actually produces an “iterable” rather than a list, but we can use it in a for loop as the sequence to iterate over.)

The pythonic way to consume the results of enumerate, however, is to unpack the tuples while iterating through them, so that the code is easier to understand.
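The three styles discussed in this section can be compared side by side. The list `fruits` is an assumed example.

```python
fruits = ["apple", "pear", "apricot"]

# Index-based loop
for n in range(len(fruits)):
    print(n, fruits[n])

# enumerate yields (index, item) tuples
for item in enumerate(fruits):
    print(item[0], item[1])

# Pythonic: unpack each tuple in the loop header
for idx, fruit in enumerate(fruits):
    print(idx, fruit)
```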

Check your Understanding

Consider the following alternative way to swap the values of variables x and y. What’s wrong with it?
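The snippet this question refers to is not reproduced here. Judging from the answer options that follow, it is presumably the naive two-statement swap, sketched below as an assumption with made-up starting values:

```python
x = 1
y = 2

# Assumed reconstruction of the swap attempt under discussion
y = x
x = y
print(x, y)
```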

- You can't use different variable names on the left and right side of an assignment statement.

- Sure you can; you can use any variable on the right-hand side that already has a value.

- At the end, x still has its original value instead of y's original value.

- Once you assign x's value to y, y's original value is gone.

- Actually, it works just fine!

With only one line of code, assign the variables water , fire , electric , and grass to the values “Squirtle”, “Charmander”, “Pikachu”, and “Bulbasaur”

With only one line of code, assign four variables, v1 , v2 , v3 , and v4 , to the following four values: 1, 2, 3, 4.

If you remember, the .items() dictionary method produces a sequence of tuples. Keeping this in mind, we have provided you a dictionary called pokemon . For every key value pair, append the key to the list p_names , and append the value to the list p_number . Do not use the .keys() or .values() methods.

The .items() method produces a sequence of key-value pair tuples. With this in mind, write code to create a list of keys from the dictionary track_medal_counts and assign the list to the variable name track_events . Do NOT use the .keys() method.

Tuple Assignment Python [With Examples]

Tuple assignment is a feature that allows you to assign multiple variables simultaneously by unpacking the values from a tuple (or other iterable) into those variables.

Tuple assignment is a concise and powerful way to assign values to multiple variables in a single line of code.

Here’s how it works:
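A minimal sketch, assuming `my_tuple` holds three integers:

```python
my_tuple = (1, 2, 3)
a, b, c = my_tuple
print(a, b, c)  # 1 2 3
```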

In this example, the values from the my_tuple tuple are unpacked and assigned to the variables a , b , and c in the same order as they appear in the tuple.

Tuple assignment is not limited to tuples; it can also work with other iterable types like lists:
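For example, with an illustrative list:

```python
my_list = [10, 20, 30]
x, y, z = my_list  # a list on the right works the same way
print(x, y, z)     # 10 20 30
```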

Tuple assignment can be used to swap the values of two variables without needing a temporary variable:
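A short sketch with assumed starting values:

```python
a = 5
b = 10
a, b = b, a
print(a, b)  # 10 5
```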

Tuple assignment is a versatile feature in Python and is often used when you want to work with multiple values at once, making your code more readable and concise.

Tuple Assignment Python Example

Here are some examples of tuple assignment in Python:

Example 1: Basic Tuple Assignment

Example 2: Multiple Variables Assigned at Once

Example 3: Swapping Values

Example 4: Unpacking a Tuple Inside a Loop

Example 5: Ignoring Unwanted Values
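The five examples named above could be sketched as follows. All names and values are illustrative assumptions rather than the article's original code.

```python
# Example 1: basic tuple assignment
x, y = (1, 2)

# Example 2: multiple variables assigned at once
name, age, city = "Alice", 30, "Paris"

# Example 3: swapping values
x, y = y, x

# Example 4: unpacking a tuple inside a loop
totals = []
for left, right in [(1, 2), (3, 4)]:
    totals.append(left + right)

# Example 5: ignoring unwanted values with the conventional _ name
first, _, third = (10, 20, 30)

print(x, y, totals, first, third)  # 2 1 [3, 7] 10 30
```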

These examples demonstrate various uses of tuple assignment in Python, from basic variable assignment to more advanced scenarios like swapping values or ignoring unwanted elements in the tuple. Tuple assignment is a powerful tool for working with structured data in Python.

Aniket Singh holds a B.Tech in Computer Science & Engineering from Oriental University. He is a skilled programmer with a strong coding background, having hands-on experience in developing advanced projects, particularly in Python and the Django framework. Aniket has worked on various real-world industry projects and has a solid command of Python, Django, REST API, PostgreSQL, as well as proficiency in C and C++. He is eager to collaborate with experienced professionals to further enhance his skills.

Unpacking in Python: Beyond Parallel Assignment

- Introduction

Unpacking in Python refers to an operation that consists of assigning an iterable of values to a tuple (or list) of variables in a single assignment statement. As a complement, the term packing can be used when we collect several values in a single variable using the iterable unpacking operator, *.

Historically, Python developers have generically referred to this kind of operation as tuple unpacking . However, since this Python feature has turned out to be quite useful and popular, it's been generalized to all kinds of iterables. Nowadays, a more modern and accurate term would be iterable unpacking .

In this tutorial, we'll learn what iterable unpacking is and how we can take advantage of this Python feature to make our code more readable, maintainable, and pythonic.

Additionally, we'll also cover some practical examples of how to use the iterable unpacking feature in the context of assignments operations, for loops, function definitions, and function calls.

- Packing and Unpacking in Python

Python allows a tuple (or list ) of variables to appear on the left side of an assignment operation. Each variable in the tuple can receive one value (or more, if we use the * operator) from an iterable on the right side of the assignment.

For historical reasons, Python developers used to call this tuple unpacking. However, since this feature has been generalized to all kinds of iterables, a more accurate term is iterable unpacking, and that's what we'll call it in this tutorial.

Unpacking operations have been quite popular among Python developers because they can make our code more readable and elegant. Let's take a closer look at unpacking in Python and see how this feature can improve our code.

- Unpacking Tuples

In Python, we can put a tuple of variables on the left side of an assignment operator ( = ) and a tuple of values on the right side. The values on the right will be automatically assigned to the variables on the left according to their position in the tuple . This is commonly known as tuple unpacking in Python. Check out the following example:
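A minimal sketch of this:

```python
(a, b, c) = (1, 2, 3)
print(a)  # 1
print(b)  # 2
print(c)  # 3
```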

When we put tuples on both sides of an assignment operator, a tuple unpacking operation takes place. The values on the right are assigned to the variables on the left according to their relative position in each tuple . As you can see in the above example, a will be 1 , b will be 2 , and c will be 3 .

To create a tuple object, we don't need to use a pair of parentheses () as delimiters. This also works for tuple unpacking, so the following syntaxes are equivalent:
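The equivalent spellings can be sketched like this:

```python
(a, b, c) = 1, 2, 3
print(a, b, c)  # 1 2 3

a, b, c = (1, 2, 3)
print(a, b, c)  # 1 2 3

a, b, c = 1, 2, 3
print(a, b, c)  # 1 2 3
```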

Since all these variations are valid Python syntax, we can use any of them, depending on the situation. Arguably, the last syntax is more commonly used when it comes to unpacking in Python.

When we are unpacking values into variables using tuple unpacking, the number of variables on the left side tuple must exactly match the number of values on the right side tuple . Otherwise, we'll get a ValueError .

For example, in the following code, we use two variables on the left and three values on the right. This will raise a ValueError telling us that there are too many values to unpack:
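A sketch of that failure, wrapped in try/except so the script keeps running. The exact wording of the message can vary between Python versions.

```python
try:
    a, b = 1, 2, 3  # two variables, three values
except ValueError as err:
    message = str(err)
    print("ValueError:", message)
```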

Note: The only exception to this is when we use the * operator to pack several values in one variable as we'll see later on.

On the other hand, if we use more variables than values, then we'll get a ValueError but this time the message says that there are not enough values to unpack:
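The opposite case can be sketched the same way (the message text may differ slightly across Python versions):

```python
try:
    a, b, c = 1, 2  # three variables, two values
except ValueError as err:
    message = str(err)
    print("ValueError:", message)
```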

If we use a different number of variables and values in a tuple unpacking operation, then we'll get a ValueError . That's because Python needs to unambiguously know what value goes into what variable, so it can do the assignment accordingly.

- Unpacking Iterables

The tuple unpacking feature got so popular among Python developers that the syntax was extended to work with any iterable object. The only requirement is that the iterable yields exactly one item per variable in the receiving tuple (or list ).

Check out the following examples of how iterable unpacking works in Python:
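A few illustrative cases follow. The particular iterables are assumptions chosen for the sketch.

```python
a, b, c = "123"                       # a string yields its characters
print(a, b, c)                        # 1 2 3

d, e, f = [1, 2, 3]                   # a list
print(d, e, f)                        # 1 2 3

g, h, i = (x ** 2 for x in range(3))  # a generator expression
print(g, h, i)                        # 0 1 4

j, k = {"one": 1, "two": 2}           # a dict iterates over its keys
print(j, k)                           # one two
```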

When it comes to unpacking in Python, we can use any iterable on the right side of the assignment operator. The left side can be filled with a tuple or with a list of variables. Check out the following example in which we use a tuple on the right side of the assignment statement:
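For instance, a list of variables on the left and a tuple on the right:

```python
[a, b, c] = 1, 2, 3
print(a, b, c)  # 1 2 3
```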

It works the same way if we use the range() iterator:
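A minimal sketch:

```python
x, y, z = range(3)
print(x, y, z)  # 0 1 2
```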

Even though this is valid Python syntax, it's not commonly used in real code and may be a little confusing for beginner Python developers.

Finally, we can also use set objects in unpacking operations. However, since sets are unordered collections, the order of the assignments can be unpredictable and can lead to subtle bugs. Check out the following example:
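A small sketch with a set of strings. No expected output is shown because the order is not guaranteed.

```python
a, b, c = {"x", "y", "z"}
print(a, b, c)  # some ordering of x, y, z; it may differ between runs
```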

If we use sets in unpacking operations, then the final order of the assignments can be quite different from what we want and expect. So, it's best to avoid using sets in unpacking operations unless the order of assignment isn't important to our code.

- Packing With the * Operator

The * operator is known, in this context, as the tuple (or iterable) unpacking operator . It extends the unpacking functionality to allow us to collect or pack multiple values in a single variable. In the following example, we pack a tuple of values into a single variable by using the * operator:
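A minimal sketch of packing with a single starred target:

```python
*a, = 1, 2  # the trailing comma makes the left side a tuple
print(a)    # [1, 2]
```

Note that the packed values always end up in a list, even though the right side is a tuple.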

For this code to work, the left side of the assignment must be a tuple (or a list ). That's why we use a trailing comma. This tuple can contain as many variables as we need. However, it can only contain one starred expression .

We can form a starred expression using the unpacking operator, *, along with a valid Python identifier, just like the *a in the above code. The rest of the variables in the left side tuple are called mandatory variables because they must be filled with concrete values; otherwise, we'll get an error. Here's how this works in practice.

Packing the trailing values in b :

Packing the starting values in a :

Packing one value in a because b and c are mandatory:

Packing no values in a ( a defaults to [] ) because b , c , and d are mandatory:

Supplying no value for a mandatory variable ( e ), so an error occurs:
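The five cases above can be sketched in one script. The values 1, 2, 3 are assumptions chosen to match the descriptions.

```python
# Packing the trailing values in b
a, *b = 1, 2, 3
print(a, b)  # 1 [2, 3]

# Packing the starting values in a
*a, b = 1, 2, 3
print(a, b)  # [1, 2] 3

# Packing one value in a because b and c are mandatory
*a, b, c = 1, 2, 3
print(a, b, c)  # [1] 2 3

# Packing no values in a because b, c, and d are mandatory
*a, b, c, d = 1, 2, 3
print(a, b, c, d)  # [] 1 2 3

# No value is left for the mandatory variable e, so ValueError is raised
try:
    *a, b, c, d, e = 1, 2, 3
except ValueError as err:
    unpack_error = err
    print("ValueError:", err)
```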

Packing values in a variable with the * operator can be handy when we need to collect the elements of a generator in a single variable without using the list() function. In the following examples, we use the * operator to pack the elements of a generator expression and a range object into a single variable:
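A sketch using the names `gen`, `g`, `ran`, and `r` that the surrounding text mentions. The particular generator expression is an assumption.

```python
gen = (2 ** x for x in range(5))
*g, = gen
print(g)  # [1, 2, 4, 8, 16]

ran = range(5)
*r, = ran
print(r)  # [0, 1, 2, 3, 4]
```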

In these examples, the * operator packs the elements in gen and ran into g and r respectively. With this syntax, we avoid the need to call list() to create a list of values from a range object, a generator expression, or a generator function.

Notice that we can't use the unpacking operator, * , to pack multiple values into one variable without adding a trailing comma to the variable on the left side of the assignment. So, the following code won't work:

If we want to use the * operator to pack several values into a single variable, then we need to use the singleton tuple syntax. For example, to make the above example work, we just need to add a comma after the variable r, like in *r, = range(10).
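Both forms can be checked in one sketch. Because the invalid form is a syntax error, it is passed to the built-in compile() as a string here so the rest of the script can still run. That is a choice made for this illustration only.

```python
# Valid: the trailing comma turns the target into a one-element tuple
*r, = range(10)
print(r)  # [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]

# Invalid: a bare starred target is rejected before any code runs
try:
    compile("*r = range(10)", "<example>", "exec")
except SyntaxError as err:
    error_text = err.msg
    print("SyntaxError:", error_text)
```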

- Using Packing and Unpacking in Practice

Packing and unpacking operations can be quite useful in practice. They can make your code clear, readable, and pythonic. Let's take a look at some common use-cases of packing and unpacking in Python.

- Assigning in Parallel

One of the most common use-cases of unpacking in Python is what we can call parallel assignment . Parallel assignment allows you to assign the values in an iterable to a tuple (or list ) of variables in a single and elegant statement.

For example, let's suppose we have a database about the employees in our company and we need to assign each item in the list to a descriptive variable. If we don't know how iterable unpacking works in Python, we may find ourselves writing code like this:
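A sketch of the index-based version, using an invented `employee` record:

```python
employee = ["John Doe", 40, "Software Engineer"]

name = employee[0]
age = employee[1]
job = employee[2]
print(name, age, job)  # John Doe 40 Software Engineer
```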

Even though this code works, the index handling can be clumsy, hard to type, and confusing. A cleaner, more readable, and pythonic solution can be coded as follows:
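The unpacking version, with the same invented `employee` record:

```python
employee = ["John Doe", 40, "Software Engineer"]

name, age, job = employee
print(name, age, job)  # John Doe 40 Software Engineer
```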

Using unpacking in Python, we can solve the problem of the previous example with a single, straightforward, and elegant statement. This tiny change makes our code easier to read and understand for newcomer developers.

- Swapping Values Between Variables

Another elegant application of unpacking in Python is swapping values between variables without using a temporary or auxiliary variable. For example, let's suppose we need to swap the values of two variables a and b . To do this, we can stick to the traditional solution and use a temporary variable to store the value to be swapped as follows:
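The traditional approach might look like this (the starting values are assumptions):

```python
a = 100
b = 200

temp = a  # step 1: save a
a = b     # step 2: overwrite a with b
b = temp  # step 3: restore the saved value into b
print(a, b)  # 200 100
```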

This procedure takes three steps and a new temporary variable. If we use unpacking in Python, then we can achieve the same result in a single and concise step:
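The single-step version, with the same assumed starting values:

```python
a = 100
b = 200

a, b = b, a
print(a, b)  # 200 100
```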

In statement a, b = b, a , we're reassigning a to b and b to a in one line of code. This is a lot more readable and straightforward. Also, notice that with this technique, there is no need for a new temporary variable.

- Collecting Multiple Values With *

When we're working with some algorithms, there may be situations in which we need to split the values of an iterable or a sequence into chunks of values for further processing. The following example shows how to use a list and slicing operations to do so:
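A sketch of the slicing approach, assuming a four-item list named `seq`:

```python
seq = [1, 2, 3, 4]

first, body, last = seq[0], seq[1:3], seq[-1]
print(first, body, last)  # 1 [2, 3] 4
```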

Even though this code works as we expect, dealing with indices and slices can be a little bit annoying, difficult to read, and confusing for beginners. It has also the drawback of making the code rigid and difficult to maintain. In this situation, the iterable unpacking operator, * , and its ability to pack several values in a single variable can be a great tool. Check out this refactoring of the above code:
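The refactored version, using the same assumed four-item `seq`:

```python
seq = [1, 2, 3, 4]

first, *body, last = seq
print(first, body, last)  # 1 [2, 3] 4
```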

The line first, *body, last = seq does the magic here. The iterable unpacking operator, *, collects the elements in the middle of seq in body. This makes our code more readable, maintainable, and flexible. You may be thinking, why more flexible? Well, suppose that seq changes its length down the road and you still need to collect the middle elements in body. In this case, since we're using unpacking in Python, no changes are needed for our code to work. Check out this example:
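With a longer `seq` (the values are illustrative), the same line still works:

```python
seq = [1, 2, 3, 4, 5, 6]

first, *body, last = seq  # unchanged, even though seq grew
print(first, body, last)  # 1 [2, 3, 4, 5] 6
```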

If we were using sequence slicing instead of iterable unpacking in Python, then we would need to update our indices and slices to correctly catch the new values.

The use of the * operator to pack several values in a single variable can be applied in a variety of configurations, provided that Python can unambiguously determine what element (or elements) to assign to each variable. Take a look at the following examples:
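A few possible placements of the starred target are sketched below. The variable names are assumptions.

```python
*head, a, b = range(5)
print(head, a, b)  # [0, 1, 2] 3 4

c, *middle, d = range(5)
print(c, middle, d)  # 0 [1, 2, 3] 4

e, f, *tail = range(5)
print(e, f, tail)  # 0 1 [2, 3, 4]
```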

We can move the * operator in the tuple (or list) of variables to collect the values according to our needs. The only condition is that Python can determine which variable each value is assigned to.

It's important to note that we can't use more than one starred expression in the assignment. If we do so, then we'll get a SyntaxError as follows:
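Since a syntax error would stop the whole script from running, this sketch hands the offending statement to the built-in compile() as a string. That is an illustration device, not something the original example necessarily did.

```python
try:
    compile("*a, *b = range(5)", "<example>", "exec")
except SyntaxError as err:
    error_text = err.msg
    print("SyntaxError:", error_text)
```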

If we use two or more * in an assignment expression, then we'll get a SyntaxError telling us that more than one starred expression was found. That's because Python can't unambiguously determine what value (or values) we want to assign to each variable.

- Dropping Unneeded Values With *

Another common use-case of the * operator is to use it with a dummy variable name to drop some useless or unneeded values. Check out the following example:

For a more insightful example of this use-case, suppose we're developing a script that needs to determine the Python version we're using. To do this, we can use the sys.version_info attribute . This attribute returns a tuple containing the five components of the version number: major , minor , micro , releaselevel , and serial . But we just need major , minor , and micro for our script to work, so we can drop the rest. Here's an example:

Now, we have three new variables with the information we need. The rest of the information is stored in the dummy variable _, which can be ignored by our program. This can make it clear to newcomer developers that we don't want to (or need to) use the information stored in _ because this character has no apparent meaning.

Note: By default, the underscore character _ is used by the Python interpreter to store the resulting value of the statements we run in an interactive session. So, in this context, the use of this character to identify dummy variables can be ambiguous.

- Returning Tuples in Functions

Python functions can return several values separated by commas. Since we can define tuple objects without using parentheses, this kind of operation can be interpreted as returning a tuple of values. If we code a function that returns multiple values, then we can perform iterable packing and unpacking operations with the returned values.

Check out the following example in which we define a function to calculate the square and cube of a given number:
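One possible version of such a function follows. The name `powers` and the call with 2 are assumptions for this sketch.

```python
def powers(number):
    # The comma builds a tuple: (square, cube)
    return number ** 2, number ** 3

result = powers(2)        # keep the packed tuple
print(result)             # (4, 8)

square, cube = powers(2)  # unpack into two variables
print(square, cube)       # 4 8

*both, = powers(2)        # pack the returned values into a list
print(both)               # [4, 8]
```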

If we define a function that returns comma-separated values, then we can do any packing or unpacking operation on these values.

- Merging Iterables With the * Operator

Another interesting use-case for the unpacking operator, * , is the ability to merge several iterables into a final sequence. This functionality works for lists, tuples, and sets. Take a look at the following examples:
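A sketch of several merges. The contents of `my_tuple`, `my_list`, `my_set`, and `my_str` are assumed values for illustration.

```python
my_tuple = (1, 2, 3)
my_list = [1, 2, 3, 4]
my_set = {1, 2, 3}
my_str = "123"

print((0, *my_tuple, 4))           # (0, 1, 2, 3, 4)
print([*my_tuple, *my_list])       # [1, 2, 3, 1, 2, 3, 4]
print({*my_set, *my_list} == {1, 2, 3, 4})  # True

# Merge everything into one list
merged = [*my_set, *my_list, *my_tuple, *range(1, 4), *my_str]
print(len(merged))                 # 16
```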

We can use the iterable unpacking operator, * , when defining sequences to unpack the elements of a subsequence (or iterable) into the final sequence. This will allow us to create sequences on the fly from other existing sequences without calling methods like append() , insert() , and so on.

The last two examples show that this is also a more readable and efficient way to concatenate iterables. Instead of writing list(my_set) + my_list + list(my_tuple) + list(range(1, 4)) + list(my_str) we just write [*my_set, *my_list, *my_tuple, *range(1, 4), *my_str] .

- Unpacking Dictionaries With the ** Operator

In the context of unpacking in Python, the ** operator is called the dictionary unpacking operator. The use of this operator was extended by PEP 448. Now, we can use it any number of times in function calls and inside dictionary displays.

A basic use-case for the dictionary unpacking operator is to merge multiple dictionaries into one final dictionary with a single expression. Let's see how this works:
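A minimal sketch with two invented dictionaries:

```python
numbers = {"one": 1, "two": 2}
letters = {"a": "A", "b": "B"}

combination = {**numbers, **letters}
print(combination)  # {'one': 1, 'two': 2, 'a': 'A', 'b': 'B'}
```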

If we use the dictionary unpacking operator inside a dictionary display, then we can unpack dictionaries and combine them to create a final dictionary that includes the key-value pairs of the original dictionaries, just like we did in the above code.

An important point to note is that, if the dictionaries we're trying to merge have repeated or common keys, then the values of the right-most dictionary will override the values of the left-most dictionary. Here's an example:
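A sketch of the override behaviour. The dictionary contents are assumptions chosen so that the key a appears in both.

```python
letters = {"a": "A", "b": "B"}
vowels = {"a": "a", "e": "e"}

merged = {**letters, **vowels}  # vowels is right-most, so its "a" wins
print(merged)                   # {'a': 'a', 'b': 'B', 'e': 'e'}
```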

Since the a key is present in both dictionaries, the value that prevails comes from vowels, which is the right-most dictionary. This happens because Python adds the key-value pairs from left to right. If, in the process, Python finds a key that already exists, then the interpreter updates that key with the new value. That's why the value of the a key is lowercase in the above example.

- Unpacking in For-Loops

We can also use iterable unpacking in the context of for loops. When we run a for loop, the loop assigns one item of its iterable to the target variable in every iteration. If the item to be assigned is an iterable, then we can use a tuple of target variables. The loop will unpack the iterable at hand into the tuple of target variables.

As an example, let's suppose we have a file containing data about the sales of a company as follows:

| Product | Price | Sold Units |
|---|---|---|
| Pencil | 0.25 | 1500 |
| Notebook | 1.30 | 550 |
| Eraser | 0.75 | 1000 |
| ... | ... | ... |

From this table, we can build a list of three-element tuples. Each tuple will contain the name of the product, the price, and the sold units. With this information, we want to calculate the income of each product. To do this, we can use a for loop like this:

This code works as expected. However, we're using indices to access the individual elements of each tuple . This can be difficult for newcomer developers to read and understand.

Let's take a look at an alternative implementation using unpacking in Python:

We're now using iterable unpacking in our for loop. This makes our code way more readable and maintainable because we're using descriptive names to identify the elements of each tuple . This tiny change will allow a newcomer developer to quickly understand the logic behind the code.
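Both versions of the loop can be sketched like this, using the three rows shown in the table. The names `sales`, `incomes`, `product`, `price`, and `sold_units` are assumptions chosen for readability:

```python
# Rows taken from the table above: (product, price, sold units)
sales = [("Pencil", 0.25, 1500), ("Notebook", 1.30, 550), ("Eraser", 0.75, 1000)]

# Index-based version: works, but item[1] and item[2] say little about their meaning
for item in sales:
    print(f"Income for {item[0]} is: {item[1] * item[2]}")

# Unpacking version: each element of the tuple gets a descriptive name
incomes = {}
for product, price, sold_units in sales:
    incomes[product] = price * sold_units
    print(f"Income for {product} is: {incomes[product]}")
```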

It's also possible to use the * operator in a for loop to pack several items in a single target variable:

In this for loop, we're catching the first element of each sequence in first . Then the * operator catches a list of values in its target variable rest .
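A small sketch of this pattern, assuming a hypothetical list of sequences of different lengths:

```python
# Hypothetical data: sequences of different lengths
sequences = [(1, 2, 3), [1, 2, 3, 4]]

collected = []
for first, *rest in sequences:
    # first gets the leading item; rest is always a list of what remains
    collected.append((first, rest))
    print("First:", first, "Rest:", rest)
```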

Finally, the structure of the target variables must agree with the structure of the iterable. Otherwise, we'll get an error. Take a look at the following example:

In the first loop, the structure of the target variables, (a, b), c , agrees with the structure of the items in the iterable, ((1, 2), 2) . In this case, the loop works as expected. In contrast, the second loop uses a structure of target variables that doesn't agree with the structure of the items in the iterable, so the loop fails and raises a ValueError .
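The two loops could look roughly like the sketch below. The second item in `data` is an assumption added for illustration, and the failing loop is wrapped in `try`/`except` only so the snippet runs to the end:

```python
data = [((1, 2), 2), ((2, 3), 3)]

# The target structure (a, b), c matches each item ((x, y), z)
for (a, b), c in data:
    print(a, b, c)

# The target structure a, b, c does not match: each item has only two elements
error_message = None
try:
    for a, b, c in data:
        print(a, b, c)
except ValueError as error:
    error_message = str(error)
    print("ValueError:", error_message)
```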

- Packing and Unpacking in Functions

We can also use Python's packing and unpacking features when defining and calling functions. This is a quite useful and popular use-case of packing and unpacking in Python.

In this section, we'll cover the basics of how to use packing and unpacking in Python functions either in the function definition or in the function call.

Note: For more detailed material on these topics, check out Variable-Length Arguments in Python with *args and **kwargs .

- Defining Functions With * and **

We can use the * and ** operators in the signature of Python functions. This will allow us to call the function with a variable number of positional arguments ( * ), a variable number of keyword arguments ( ** ), or both. Let's consider the following function:

The above function requires at least one argument called required . It can accept a variable number of positional and keyword arguments as well. In this case, the * operator collects or packs extra positional arguments in a tuple called args and the ** operator collects or packs extra keyword arguments in a dictionary called kwargs . Both args and kwargs are optional and automatically default to () and {} respectively.
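A function with this shape can be sketched as follows. The name `func` and the argument values are assumptions; the parameter names `required`, `args`, and `kwargs` follow the description above:

```python
def func(required, *args, **kwargs):
    # args is a tuple holding any extra positional arguments
    # kwargs is a dict holding any extra keyword arguments
    return required, args, kwargs

print(func("Welcome to..."))
print(func("Welcome to...", 1, 2, 3))
print(func("Welcome to...", 1, 2, site="example.com"))
```

When no extra arguments are passed, `args` is an empty tuple and `kwargs` is an empty dictionary.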

Even though the names args and kwargs are widely used by the Python community, they're not a requirement for these techniques to work. The syntax just requires * or ** followed by a valid identifier. So, if you can give meaningful names to these arguments, then do it. That will certainly improve your code's readability.

- Calling Functions With * and **

When calling functions, we can also benefit from the use of the * and ** operators to unpack collections of arguments into separate positional or keyword arguments respectively. This is the inverse of using * and ** in the signature of a function. In the signature, the operators mean collect or pack a variable number of arguments in one identifier. In the call, they mean unpack an iterable into several arguments.

Here's a basic example of how this works:

Here, the * operator unpacks sequences like ["Welcome", "to"] into positional arguments. Similarly, the ** operator unpacks dictionaries into arguments whose names match the keys of the unpacked dictionary.
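As a hedged sketch of such a call, assume a hypothetical function `func` with three parameters named `welcome`, `to`, and `site`:

```python
def func(welcome, to, site):
    return f"{welcome} {to} {site}"

# * unpacks the list into the first two positional arguments;
# ** unpacks the dictionary into the keyword argument site
message = func(*["Welcome", "to"], **{"site": "example.com"})
print(message)  # Welcome to example.com
```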

We can also combine this technique and the one covered in the previous section to write quite flexible functions. Here's an example:

The use of the * and ** operators, when defining and calling Python functions, will give them extra capabilities and make them more flexible and powerful.
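Combining both techniques might look like the following sketch, where `func` is a hypothetical function that packs its extra arguments and the call site unpacks a tuple and a dictionary:

```python
def func(required, *args, **kwargs):
    # Packing happens here, in the signature
    return required, args, kwargs

# Unpacking happens here, in the call
result = func("Welcome to...", *(1, 2, 3), **{"site": "example.com"})
print(result)  # ('Welcome to...', (1, 2, 3), {'site': 'example.com'})
```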

Iterable unpacking turns out to be a pretty useful and popular feature in Python. This feature allows us to unpack an iterable into several variables. On the other hand, packing consists of catching several values into one variable using the unpacking operator, * .

In this tutorial, we've learned how to use iterable unpacking in Python to write more readable, maintainable, and pythonic code.

With this knowledge, we are now able to use iterable unpacking in Python to solve common problems like parallel assignment and swapping values between variables. We're also able to use this Python feature in other structures like for loops, function calls, and function definitions.


Leodanis is an industrial engineer who loves Python and software development. He is a self-taught Python programmer with 5+ years of experience building desktop applications with PyQt.


© 2013- 2024 Stack Abuse. All rights reserved.


Python Unpacking Tuple

Summary : in this tutorial, you’ll learn how to unpack tuples in Python.

Reviewing Python tuples

Python defines a tuple using commas ( , ), not parentheses () . For example, the following defines a tuple with two elements:

Python uses the parentheses to make the tuple clearer:

Python also uses the parentheses to create an empty tuple:

In addition, you can use the tuple() constructor like this:

To define a tuple with only one element, you still need to use a comma. The following example illustrates how to define a tuple with one element:

It’s equivalent to the following:

Note that the following is an integer , not a tuple:
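The definitions described above can be sketched together as follows. The variable names are assumptions chosen for illustration:

```python
pair = 1, 2            # commas alone define a tuple
same_pair = (1, 2)     # parentheses make the tuple easier to spot
empty = ()             # parentheses alone create an empty tuple
also_empty = tuple()   # the tuple() constructor does the same
single = 1,            # a one-element tuple still needs the comma
same_single = (1,)     # equivalent, with parentheses
not_a_tuple = (1)      # no comma: this is just the integer 1

print(type(pair), type(empty), type(single), type(not_a_tuple))
```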

Unpacking a tuple

Unpacking a tuple means splitting the tuple’s elements into individual variables . For example, consider the assignment x, y = (1, 2) .

The left side, x, y , is a tuple of two variables x and y .

The right side is also a tuple of two integers 1 and 2 .

The expression assigns the tuple elements on the right side (1, 2) to each variable on the left side (x, y) based on the relative position of each element.

In the above example, x will take 1 and y will take 2 .

See another example:

The right side is a tuple of three integers 10 , 20 , and 30 . You can quickly check its type as follows:

In the above example, the x , y , and z variables will take the values 10 , 20 , and 30 respectively.
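The two unpacking examples described in this section can be sketched as:

```python
# Two variables on the left, a tuple of two integers on the right
x, y = (1, 2)
print(x, y)  # 1 2

# Three comma-separated values on the right also form a tuple
print(type((10, 20, 30)))  # <class 'tuple'>
x, y, z = 10, 20, 30
print(x, y, z)  # 10 20 30
```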

Using unpacking tuple to swap values of two variables

Traditionally, to swap the values of two variables, you would use a temporary variable like this:

In Python, you can use the unpacking tuple syntax to achieve the same result:

The following expression swaps the values of two variables, x and y.

In this expression, Python evaluates the right-hand side first and then assigns the values from the right-hand side to the variables on the left-hand side.
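Both ways of swapping can be sketched as follows, assuming the starting values 10 and 20 and a temporary variable named `tmp`:

```python
x, y = 10, 20

# Traditional swap with a temporary variable
tmp = x
x = y
y = tmp
print(x, y)  # 20 10

# Swap with tuple unpacking: the right side (y, x) is built first
x, y = y, x
print(x, y)  # 10 20
```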

ValueError: too many values to unpack

The following example unpacks the elements of a tuple into variables. However, it’ll result in an error:

This error is because the right-hand side returns three values while the left-hand side only has two variables.

To fix this, you can add a _ variable:

The _ variable is a regular variable in Python. By convention, it’s called a dummy variable.

Typically, you use the dummy variable in unpacking when you don’t care about a value and won’t use it afterward.
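A sketch of the error and the fix, assuming the values 10, 20, and 30. The failing assignment is wrapped in `try`/`except` only so that the snippet runs to the end:

```python
error_message = None
try:
    x, y = 10, 20, 30  # three values, but only two variables
except ValueError as error:
    error_message = str(error)
    print("ValueError:", error_message)

# The dummy variable _ absorbs the value you do not need
x, y, _ = 10, 20, 30
print(x, y)  # 10 20
```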

Extended unpacking using the * operator

Sometimes, you don’t want to unpack every single item in a tuple. For example, you may want to unpack the first and second elements. In this case, you can use the * operator. For example:

In this example, Python assigns 192 to r , 210 to g . Also, Python packs the remaining elements 100 and 0.5 into a list and assigns it to the other variable.

Notice that you can only use the * operator once on the left-hand side of an unpacking assignment.

The following example results in error:
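Here is a sketch of extended unpacking with the values mentioned above, followed by the failing form. Two starred targets are rejected when the code is compiled, so the sketch passes the faulty statement to `compile()` as a string to stay runnable. The faulty statement itself is an assumed example:

```python
# r and g take the first two elements; other collects the rest as a list
r, g, *other = (192, 210, 100, 0.5)
print(r, g, other)  # 192 210 [100, 0.5]

# Using * twice on the left-hand side is a syntax error
syntax_error = None
try:
    compile("x, y, *z, *t = (10, 20, 30, 40)", "<example>", "exec")
except SyntaxError as error:
    syntax_error = error
    print("SyntaxError:", error.msg)
```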

Using the * operator on the right-hand side

Python allows you to use the * operator on the right-hand side. Suppose that you have two tuples:

The following example uses the * operator to unpack those tuples and merge them into a single tuple:
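A minimal sketch, assuming two hypothetical tuples named `odd_numbers` and `even_numbers`:

```python
odd_numbers = (1, 3, 5)
even_numbers = (2, 4, 6)

# Unpack both tuples inside a new tuple display
numbers = (*odd_numbers, *even_numbers)
print(numbers)  # (1, 3, 5, 2, 4, 6)
```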

- Python uses the commas ( , ) to define a tuple, not parentheses.

- Unpacking tuples means assigning individual elements of a tuple to multiple variables.

- Use the * operator to pack the remaining elements of an unpacking assignment into a list and assign that list to a variable.


Python Tuple


A tuple is a collection similar to a Python list . The primary difference is that we cannot modify a tuple once it is created.

- Create a Python Tuple

We create a tuple by placing items inside parentheses () . For example,

More on Tuple Creation

We can also create a tuple using a tuple() constructor. For example,

Here are the different types of tuples we can create in Python.

Empty Tuple

Tuple of different data types

Tuple of mixed data types
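The ways of creating tuples listed above can be sketched as follows. All names and values here are assumptions chosen for illustration:

```python
# Items placed inside parentheses
numbers = (1, 2, -5)

# The tuple() constructor accepts any iterable
from_list = tuple([1, 2, -5])

# Empty tuple
empty_tuple = ()

# Tuple whose items share one data type
names = ("James", "Jack", "Eva")

# Tuple of mixed data types
mixed = (2, "Hello", 3.5)

print(numbers, from_list, empty_tuple, names, mixed)
```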

Tuple Characteristics

Tuples are:

- Ordered - They maintain the order of elements.

- Immutable - They cannot be changed after creation.

- Allow duplicates - They can contain duplicate values.

- Access Tuple Items

Each item in a tuple is associated with a number, known as an index .

The index always starts from 0 , meaning the first item of a tuple is at index 0 , the second item is at index 1, and so on.

Access Items Using Index

We use index numbers to access tuple items. For example,
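A short sketch of index access, assuming a hypothetical tuple named `languages`:

```python
languages = ("Python", "Swift", "C++")

print(languages[0])  # Python
print(languages[2])  # C++
```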

Tuple Cannot be Modified

Python tuples are immutable (unchangeable). We cannot add, change, or delete items of a tuple.

If we try to modify a tuple, we will get an error. For example,
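The error can be sketched as follows, assuming a hypothetical tuple named `cars`. The assignment is wrapped in `try`/`except` only so that the snippet runs to the end:

```python
cars = ("BMW", "Tesla", "Ford", "Toyota")

error_message = None
try:
    cars[0] = "Nissan"  # item assignment is not supported by tuples
except TypeError as error:
    error_message = str(error)
    print("TypeError:", error_message)
```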

- Python Tuple Length

We use the len() function to find the number of items present in a tuple. For example,
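For instance, with a hypothetical tuple named `cars`:

```python
cars = ("BMW", "Tesla", "Ford", "Toyota")

total_items = len(cars)
print("Total Items:", total_items)  # Total Items: 4
```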

- Iterate Through a Tuple

We use the for loop to iterate over the items of a tuple. For example,
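A minimal sketch, assuming a hypothetical tuple named `fruits`:

```python
fruits = ("apple", "banana", "orange")

seen = []
for fruit in fruits:
    # Items are visited in the order they were stored
    seen.append(fruit)
    print(fruit)
```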

More on Python Tuple

We use the in keyword to check if an item exists in the tuple. For example,

- yellow is not present in colors , so, 'yellow' in colors evaluates to False

- red is present in colors , so, 'red' in colors evaluates to True
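The membership checks described above can be sketched like this. The exact contents of `colors` are an assumption; the text only states that it contains red and not yellow:

```python
colors = ("red", "green", "blue")

print("yellow" in colors)  # False
print("red" in colors)     # True
```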

Python Tuples are immutable - we cannot change the items of a tuple once created.

If we try to do so, we will get an error. For example,

We cannot delete individual items of a tuple. However, we can delete the tuple itself using the del statement. For example,

Here, we have deleted the animals tuple.
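A sketch of both cases, assuming hypothetical contents for the `animals` tuple. The failing operations are wrapped in `try`/`except` only so that the snippet runs to the end:

```python
animals = ("dog", "cat", "rat")

# Deleting a single item is not allowed
item_error = None
try:
    del animals[0]
except TypeError as error:
    item_error = str(error)
    print("TypeError:", item_error)

# Deleting the whole tuple is allowed
del animals

# The name no longer exists afterward
deleted = False
try:
    print(animals)
except NameError:
    deleted = True
    print("animals is no longer defined")
```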

When we want to create a tuple with a single item, we might do the following:

But this would not create a tuple; instead, it would be considered a string .

To solve this, we need to include a trailing comma after the item. For example,
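The difference can be sketched as follows, using assumed variable names:

```python
var = ("Hello")         # no trailing comma: this is a string
var_tuple = ("Hello",)  # trailing comma: this is a one-item tuple

print(type(var))        # <class 'str'>
print(type(var_tuple))  # <class 'tuple'>
```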

- Python Tuple Methods


Before we wrap up, let’s put your knowledge of Python tuple to the test! Can you solve the following challenge?

Write a function to modify a tuple by adding an element at the end of it.

- For inputs with tuple (1, 2, 3) and element 4 , the return value should be (1, 2, 3, 4) .

- Hint: You need to first convert the tuple to another data type, such as a list.


Python's Assignment Operator: Write Robust Assignments


Python’s assignment operators allow you to define assignment statements . This type of statement lets you create, initialize, and update variables throughout your code. Variables are a fundamental cornerstone in every piece of code, and assignment statements give you complete control over variable creation and mutation.

Learning about the Python assignment operator and its use for writing assignment statements will arm you with powerful tools for writing better and more robust Python code.

In this tutorial, you’ll:

- Use Python’s assignment operator to write assignment statements

- Take advantage of augmented assignments in Python

- Explore assignment variants, like assignment expressions and managed attributes

- Become aware of illegal and dangerous assignments in Python

You’ll dive deep into Python’s assignment statements. To get the most out of this tutorial, you should be comfortable with several basic topics, including variables , built-in data types , comprehensions , functions , and Python keywords . Before diving into some of the later sections, you should also be familiar with intermediate topics, such as object-oriented programming , constants , imports , type hints , properties , descriptors , and decorators .


Assignment Statements and the Assignment Operator

One of the most powerful programming language features is the ability to create, access, and mutate variables . In Python, a variable is a name that refers to a concrete value or object, allowing you to reuse that value or object throughout your code.

To create a new variable or to update the value of an existing one in Python, you’ll use an assignment statement . This statement has the following three components:

- A left operand, which must be a variable

- The assignment operator ( = )

- A right operand, which can be a concrete value , an object , or an expression

Here’s how an assignment statement will generally look in Python:

Here, variable represents a generic Python variable, while expression represents any Python object that you can provide as a concrete value—also known as a literal —or an expression that evaluates to a value.

To execute an assignment statement like the above, Python runs the following steps:

- Evaluate the right-hand expression to produce a concrete value or object . This value will live at a specific memory address in your computer.

- Store the object’s memory address in the left-hand variable . This step creates a new variable if the current one doesn’t already exist or updates the value of an existing variable.

The second step shows that variables work differently in Python than in other programming languages. In Python, variables aren’t containers for objects. Python variables point to a value or object through its memory address. They store memory addresses rather than objects.

This behavior difference directly impacts how data moves around in Python, which is always by reference . In most cases, this difference is irrelevant in your day-to-day coding, but it’s still good to know.

The central component of an assignment statement is the assignment operator . This operator is represented by the = symbol, which separates two operands:

- A variable

- A value or an expression that evaluates to a concrete value

Operators are special symbols that perform mathematical , logical , and bitwise operations in a programming language. The objects (or object) on which an operator operates are called operands .

Unary operators, like the not Boolean operator, operate on a single object or operand, while binary operators act on two. That means the assignment operator is a binary operator.

Note: Like C , Python uses == for equality comparisons and = for assignments. Unlike C, Python doesn’t allow you to accidentally use the assignment operator ( = ) in an equality comparison.

Equality is a symmetrical relationship, and assignment is not. For example, the expression a == 42 is equivalent to 42 == a . In contrast, the statement a = 42 is correct and legal, while 42 = a isn’t allowed. You’ll learn more about illegal assignments later on.

The right-hand operand in an assignment statement can be any Python object, such as a number , list , string , dictionary , or even a user-defined object. It can also be an expression. In the end, expressions always evaluate to concrete objects, which is their return value.

Here are a few examples of assignments in Python:

The first two sample assignments in this code snippet use concrete values, also known as literals , to create and initialize number and greeting . The third example assigns the result of a math expression to the total variable, while the last example uses a Boolean expression.
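The four assignments described above could look like the following sketch. The names `number`, `greeting`, and `total` come from the text, while `is_true` and all the values are assumptions:

```python
number = 42                 # literal integer
greeting = "Hello, World!"  # literal string
total = 15 + 25             # result of a math expression
is_true = 42 < 84           # result of a Boolean expression

print(number, greeting, total, is_true)
```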

Note: You can use the built-in id() function to inspect the memory address stored in a given variable.

Here’s a short example of how this function works:

The number in your output represents the memory address stored in number . Through this address, Python can access the content of number , which is the integer 42 in this example.

If you run this code on your computer, then you’ll get a different memory address because this value varies from execution to execution and computer to computer.
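A minimal sketch of inspecting a variable with `id()`. No expected output is shown because the number differs between runs and machines, and treating it as a memory address is a CPython detail:

```python
number = 42

# id() returns an integer that identifies the object number refers to
address = id(number)
print(address)
```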

Unlike expressions, assignment statements don’t have a return value because their purpose is to make the association between the variable and its value. That’s why the Python interpreter doesn’t issue any output in the above examples.

Now that you know the basics of how to write an assignment statement, it’s time to tackle why you would want to use one.

The assignment statement is the explicit way for you to associate a name with an object in Python. You can use this statement for two main purposes:

- Creating and initializing new variables

- Updating the values of existing variables

When you use a variable name as the left operand in an assignment statement for the first time, you’re creating a new variable. At the same time, you’re initializing the variable to point to the value of the right operand.

On the other hand, when you use an existing variable in a new assignment, you’re updating or mutating the variable’s value. Strictly speaking, every new assignment will make the variable refer to a new value and stop referring to the old one. Python will garbage-collect all the values that are no longer referenced by any existing variable.

Assignment statements not only assign a value to a variable but also determine the data type of the variable at hand. This additional behavior is another important detail to consider in this kind of statement.

Because Python is a dynamically typed language, successive assignments to a given variable can change the variable’s data type. Changing the data type of a variable during a program’s execution is considered bad practice and highly discouraged. It can lead to subtle bugs that can be difficult to track down.

Unlike in math equations, in Python assignments, the left operand must be a variable rather than an expression or a value. For example, the following construct is illegal, and Python flags it as invalid syntax:

In this example, you have expressions on both sides of the = sign, and this isn’t allowed in Python code. The error message suggests that you may be confusing the equality operator with the assignment one, but that’s not the case. You’re really running an invalid assignment.

To correct this construct and convert it into a valid assignment, you’ll have to do something like the following:

In this code snippet, you first import the sqrt() function from the math module. Then you isolate the hypotenuse variable in the original equation by using the sqrt() function. Now your code works correctly.
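The invalid construct and its correction can be sketched as follows. The exact form of the invalid statement and the side lengths `a` and `b` are assumptions. The invalid statement is passed to `compile()` as a string so that the rest of the snippet still runs:

```python
from math import sqrt

a, b = 3.0, 4.0  # hypothetical side lengths

# Expressions on both sides of = are rejected as invalid syntax
syntax_error = None
try:
    compile("hypotenuse ** 2 = a ** 2 + b ** 2", "<example>", "exec")
except SyntaxError as error:
    syntax_error = error
    print("SyntaxError:", error.msg)

# Valid version: a plain variable on the left, an expression on the right
hypotenuse = sqrt(a ** 2 + b ** 2)
print(hypotenuse)  # 5.0
```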

Now you know what kind of syntax is invalid. But don’t get the idea that assignment statements are rigid and inflexible. In fact, they offer lots of room for customization, as you’ll learn next.

Python’s assignment statements are pretty flexible and versatile. You can write them in several ways, depending on your specific needs and preferences. Here’s a quick summary of the main ways to write assignments in Python:

Up to this point, you’ve mostly learned about the base assignment syntax in the above code snippet. In the following sections, you’ll learn about multiple, parallel, and augmented assignments. You’ll also learn about assignments with iterable unpacking.
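As a rough, runnable sketch of these assignment forms (all names and values are placeholders rather than a fixed syntax reference):

```python
# Base assignment
variable = 10

# Multiple assignment: both names refer to the same object
first = second = 0

# Parallel assignment
x, y = 1, 2

# Augmented assignment
variable += 5

# Assignment with iterable unpacking
head, *tail = [1, 2, 3]

print(variable, first, second, x, y, head, tail)  # 15 0 0 1 2 1 [2, 3]
```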

Read on to see the assignment statements in action!

Assignment Statements in Action

You’ll find and use assignment statements everywhere in your Python code. They’re a fundamental part of the language, providing an explicit way to create, initialize, and mutate variables.

You can use assignment statements with plain names, like number or counter . You can also use assignments in more complicated scenarios, such as with:

- Qualified attribute names , like user.name

- Indices and slices of mutable sequences, like a_list[i] and a_list[i:j]

- Dictionary keys , like a_dict[key]

This list isn’t exhaustive. However, it gives you some idea of how flexible these statements are. You can even assign multiple values to an equal number of variables in a single line, commonly known as parallel assignment . Additionally, you can simultaneously assign the values in an iterable to a comma-separated group of variables in what’s known as an iterable unpacking operation.

In the following sections, you’ll dive deeper into all these topics and a few other exciting things that you can do with assignment statements in Python.

The most elementary use case of an assignment statement is to create a new variable and initialize it using a particular value or expression:

All these statements create new variables, assigning them initial values or expressions. For an initial value, you should always use the most sensible and least surprising value that you can think of. For example, initializing a counter to something different from 0 may be confusing and unexpected because counters almost always start having counted no objects.

Updating a variable’s current value or state is another common use case of assignment statements. In Python, assigning a new value to an existing variable doesn’t modify the variable’s current value. Instead, it causes the variable to refer to a different value. The previous value will be garbage-collected if no other variable refers to it.

Consider the following examples:

These examples run two consecutive assignments on the same variable. The first one assigns the string "Hello, World!" to a new variable named greeting .

The second assignment updates the value of greeting by reassigning it the "Hi, Pythonistas!" string. In this example, the original value of greeting —the "Hello, World!" string— is lost and garbage-collected. From this point on, you can’t access the old "Hello, World!" string.
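The two consecutive assignments described above can be sketched as:

```python
greeting = "Hello, World!"
print(greeting)  # Hello, World!

# Reassignment makes greeting refer to a different string object
greeting = "Hi, Pythonistas!"
print(greeting)  # Hi, Pythonistas!
```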

Even though running multiple assignments on the same variable during a program’s execution is common practice, you should use this feature with caution. Changing the value of a variable can make your code difficult to read, understand, and debug. To comprehend the code fully, you’ll have to remember all the places where the variable was changed and the sequential order of those changes.

Because assignments also define the data type of their target variables, it’s also possible for your code to accidentally change the type of a given variable at runtime. A change like this can lead to breaking errors, like AttributeError exceptions. Remember that strings don’t have the same methods and attributes as lists or dictionaries, for example.

In Python, you can make several variables reference the same object in a multiple-assignment line. This can be useful when you want to initialize several similar variables using the same initial value:

In this example, you chain two assignment operators in a single line. This way, your two variables refer to the same initial value of 0 . Note how both variables hold the same memory address, so they point to the same instance of 0 .
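A sketch of such a multiple-assignment line, assuming two hypothetical counters named `letter_counter` and `word_counter`:

```python
# Both names are bound to the same object in one statement
letter_counter = word_counter = 0

print(id(letter_counter) == id(word_counter))  # True
```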

When it comes to integer variables, Python exhibits a curious behavior. It provides a numeric interval where multiple assignments behave the same as independent assignments. Consider the following examples:

To create n and m , you use independent assignments. Therefore, they should point to different instances of the number 42 . However, both variables hold the same object, which you confirm by comparing their corresponding memory addresses.

Now check what happens when you use a greater initial value:

Now n and m hold different memory addresses, which means they point to different instances of the integer number 300 . In contrast, when you use multiple assignments, both variables refer to the same object. This tiny difference can save you small bits of memory if you frequently initialize integer variables in your code.

The implicit behavior of making independent assignments point to the same integer number is actually an optimization called interning . It consists of globally caching the most commonly used integer values in day-to-day programming.

Under the hood, Python defines a numeric interval in which interning takes place. That’s the interning interval for integer numbers. You can determine this interval using a small script like the following:

This script helps you determine the interning interval by comparing integer numbers from -10 to 500 . If you run the script from your command line, then you’ll get an output like the following:

This output means that if you use a single number between -5 and 256 to initialize several variables in independent statements, then all these variables will point to the same object, which will help you save small bits of memory in your code.

In contrast, if you use a number that falls outside of the interning interval, then your variables will point to different objects instead. Each of these objects will occupy a different memory spot.
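The following sketch shows one way to probe this behavior. Interning is a CPython implementation detail, so the result may differ on other interpreters or versions, and no interval is hard-coded here. The integers are produced at runtime because literals compiled together may share a single object regardless of interning:

```python
# Two integers built independently at runtime
n = int("42")
m = int("42")
print(n is m)  # identity, not equality; depends on the interpreter

# Collect the values for which two separately produced integers
# turn out to be the very same object
interned = [x for x, y in zip(range(-10, 500), range(-10, 500)) if x is y]

if interned:
    print(f"Interning interval: ({interned[0]}, {interned[-1]})")
else:
    print("No interned integers detected in this range")
```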

You can use the assignment operator to mutate the value stored at a given index in a Python list. The operator also works with list slices . The syntax to write these types of assignment statements is the following:

In the first construct, expression can return any Python object, including another list. In the second construct, expression must return a series of values as a list, tuple, or any other sequence. You’ll get a TypeError if expression returns a single value.

Note: When creating slice objects, you can use up to three arguments. These arguments are start , stop , and step . They define the number that starts the slice, the number at which the slicing must stop retrieving values, and the step between values.

Here’s an example of updating an individual value in a list:

In this example, you update the value at index 2 using an assignment statement. The original number at that index was 7 , and after the assignment, the number is 3 .
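A sketch of this update. The list contents other than the 7 at index 2 are assumptions:

```python
numbers = [1, 2, 7, 4, 5]

# Replace the item at index 2 in place
numbers[2] = 3
print(numbers)  # [1, 2, 3, 4, 5]
```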

Note: Using indices and the assignment operator to update a value in a tuple or a character in a string isn’t possible because tuples and strings are immutable data types in Python.

Their immutability means that you can’t change their items in place :

You can’t use the assignment operator to change individual items in tuples or strings. These data types are immutable and don’t support item assignments.
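The failing assignments can be sketched as follows, assuming a hypothetical tuple and string. Each attempt is wrapped in `try`/`except` only so that the snippet runs to the end:

```python
letters = ("A", "B", "C")
text = "Hello"

errors = []
try:
    letters[0] = "a"  # tuples do not support item assignment
except TypeError as error:
    errors.append(str(error))

try:
    text[0] = "h"  # strings do not support item assignment either
except TypeError as error:
    errors.append(str(error))

for message in errors:
    print("TypeError:", message)
```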

It’s important to note that you can’t add new values to a list by using indices that don’t exist in the target list:

In this example, you try to add a new value to the end of numbers by using an index that doesn’t exist. This assignment isn’t allowed because there’s no way to guarantee that new indices will be consecutive. If you ever want to add a single value to the end of a list, then use the .append() method.
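A sketch of the failure and the `.append()` alternative, assuming a three-item list named `numbers`:

```python
numbers = [1, 2, 3]

error_message = None
try:
    numbers[3] = 4  # index 3 does not exist yet
except IndexError as error:
    error_message = str(error)
    print("IndexError:", error_message)

# Use .append() to add a value to the end of the list
numbers.append(4)
print(numbers)  # [1, 2, 3, 4]
```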

If you want to update several consecutive values in a list, then you can use slicing and an assignment statement:

In the first example, you update the letters between indices 1 and 3 without including the letter at 3 . The second example updates the letters from index 3 until the end of the list. Note that this slicing appends a new value to the list because the target slice is shorter than the assigned values.

Also note that the new values were provided through a tuple, which means that this type of assignment allows you to use other types of sequences to update your target list.

The third example assigns to a slice where both indices are equal. Because such a slice is empty, the assignment doesn’t replace anything and instead inserts a new item into your target list at that position.

In the final example, you use a step of 2 to replace alternating letters with their lowercase counterparts. This slicing starts at index 1 and runs through the whole list, stepping by two items each time.
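The four slice assignments described above could be sketched as follows. The concrete letters are assumptions chosen to match the description, not necessarily the values used originally.

```python
letters = ["A", "b", "c", "D"]

# 1. Replace the items at indices 1 and 2 (index 3 is excluded).
letters[1:3] = ["B", "C"]
print(letters)  # ['A', 'B', 'C', 'D']

# 2. Replace everything from index 3 onward with a tuple of two values.
#    The slice holds one item, so the list grows by one.
letters[3:] = ("F", "G")
print(letters)  # ['A', 'B', 'C', 'F', 'G']

# 3. Assign to an empty slice (both indices equal) to insert an item.
letters[3:3] = ["D"]
print(letters)  # ['A', 'B', 'C', 'D', 'F', 'G']

# 4. Replace every second item, starting at index 1, with lowercase letters.
#    With a step, the number of new values must match the slice length.
letters[1::2] = ["b", "d", "g"]
print(letters)  # ['A', 'b', 'C', 'd', 'F', 'g']
```

Note that the stepped slice in the last statement is stricter than the others: assigning a different number of values than the slice selects raises a ValueError.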

Updating the value of an existing key or adding new key-value pairs to a dictionary is another common use case of assignment statements. To do these operations, you can use the following syntax:

The first construct helps you update the current value of an existing key, while the second construct allows you to add a new key-value pair to the dictionary.

For example, to update an existing key, you can do something like this:

In this example, you update the current inventory of oranges in your store using an assignment. The left operand is the existing dictionary key, and the right operand is the desired new value.

While you can’t add new values to a list by assigning to an index that doesn’t exist, dictionaries do allow you to add new key-value pairs using the assignment operator. In the example below, you add a lemon key to inventory:

In this example, you successfully add a new key-value pair to your inventory with 100 units. This addition is possible because dictionaries don’t have consecutive indices but unique keys, which are safe to add by assignment.
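Both dictionary operations can be sketched together. The initial inventory contents and the new orange count are illustrative assumptions. Only the orange and lemon keys and the 100 units of lemons come from the text.

```python
# Hypothetical starting inventory.
inventory = {"apple": 100, "orange": 80, "banana": 120}

# Update the value of an existing key.
inventory["orange"] = 140

# Add a new key-value pair with the same syntax.
inventory["lemon"] = 100

print(inventory)
# {'apple': 100, 'orange': 140, 'banana': 120, 'lemon': 100}
```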

The assignment statement does more than assign the result of a single expression to a single variable. It can also cope nicely with assigning multiple values to multiple variables simultaneously in what’s known as a parallel assignment.

Here’s the general syntax for parallel assignments in Python:

Note that the left side of the statement can be either a tuple or a list of variables. Remember that to create a tuple, you just need a series of comma-separated elements. In this case, these elements must be variables.

The right side of the statement must be a sequence or iterable of values or expressions. In any case, the number of elements in the right operand must match the number of variables on the left. Otherwise, you’ll get a ValueError exception.
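The rules above can be illustrated with a short sketch. All variable names here are placeholders chosen for the example.

```python
# Targets can be a bare tuple, a parenthesized tuple, or a list of variables.
a, b = 1, 2
(c, d) = [3, 4]
[e, f] = "xy"  # any iterable works on the right side, including a string

# A mismatch between targets and values raises ValueError at runtime.
try:
    g, h = 1, 2, 3  # three values for two targets
except ValueError as exc:
    message = str(exc)

print(a, b, c, d, e, f)  # 1 2 3 4 x y
print(message)
```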

In the following example, you compute the two solutions of a quadratic equation using a parallel assignment:

In this example, you first import sqrt() from the math module. Then you initialize the equation’s coefficients in a parallel assignment.

The equation’s solution is computed in another parallel assignment. The left operand contains a tuple of two variables, x1 and x2. The right operand consists of a tuple of expressions that compute the solutions for the equation. Note how each result is assigned to each variable by position.
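A sketch of this computation, assuming the coefficients of x² - 3x + 2 = 0 (the coefficient values are an assumption made for illustration), might look like this:

```python
from math import sqrt

# Hypothetical coefficients of a*x**2 + b*x + c = 0, set in one parallel assignment.
a, b, c = 1, -3, 2

# Both solutions are computed on the right and assigned by position.
x1, x2 = (
    (-b + sqrt(b**2 - 4 * a * c)) / (2 * a),
    (-b - sqrt(b**2 - 4 * a * c)) / (2 * a),
)

print(x1, x2)  # 2.0 1.0
```

This sketch only handles equations with real solutions, because math.sqrt() raises a ValueError for a negative discriminant.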

A classical use case of parallel assignment is to swap values between variables:

The parallel assignment does the magic and swaps the values of previous_value and next_value at the same time. Note that in a programming language that doesn’t support this kind of assignment, you’d have to use a temporary variable to produce the same effect:

In this example, instead of using parallel assignment to swap values between variables, you use a new variable to temporarily store the value of previous_value to avoid losing its reference.
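Both approaches to swapping can be sketched side by side. The starting values 42 and 43 and the name temp are illustrative assumptions.

```python
previous_value = 42
next_value = 43

# Parallel assignment: the right side is fully evaluated before unpacking.
previous_value, next_value = next_value, previous_value
after_parallel_swap = (previous_value, next_value)

# The same swap with a temporary variable, which restores the original order.
temp = previous_value
previous_value = next_value
next_value = temp
after_temp_swap = (previous_value, next_value)

print(after_parallel_swap)  # (43, 42)
print(after_temp_swap)      # (42, 43)
```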

For a concrete example of when you’d need to swap values between variables, say you’re learning how to implement the bubble sort algorithm, and you come up with the following function:

In this function, you use a parallel assignment to swap values in place if the current value is greater than the next value in the input list, which sorts the list in ascending order. To dive deeper into the bubble sort algorithm and into sorting algorithms in general, check out Sorting Algorithms in Python.
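One possible bubble sort of this kind is sketched below. It assumes an ascending sort, in which case adjacent items are swapped whenever the current value is greater than the next one. The function name bubble_sort_list and the early-exit flag are assumptions of this sketch.

```python
def bubble_sort_list(a_list):
    """Sort a_list in place in ascending order and return it."""
    n = len(a_list)
    for i in range(n):
        is_sorted = True
        # After each pass, the last i items are already in their final place.
        for j in range(n - i - 1):
            if a_list[j] > a_list[j + 1]:
                # Parallel assignment swaps the two neighbors in place.
                a_list[j], a_list[j + 1] = a_list[j + 1], a_list[j]
                is_sorted = False
        if is_sorted:
            break  # no swaps in this pass, so the list is sorted
    return a_list


print(bubble_sort_list([1, 3, 2, 4, 7, 6, 3, 8, 9, 10]))
# [1, 2, 3, 3, 4, 6, 7, 8, 9, 10]
```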

You can use assignment statements for iterable unpacking in Python. Unpacking an iterable means assigning its values to a series of variables one by one. The iterable must be the right operand in the assignment, while the variables must be the left operand.

Like in parallel assignments, the variables must come as a tuple or list. The number of variables must match the number of values in the iterable. Alternatively, you can use the unpacking operator (*) to grab several values in a variable if the number of variables doesn’t match the iterable length.

Here’s the general syntax for iterable unpacking in Python:

Iterable unpacking is a powerful feature that you can use all around your code. It can help you write more readable and concise code. For example, you may find yourself doing something like this:

Whenever you do something like this in your code, go ahead and replace it with a more readable iterable unpacking using a single and elegant assignment, like in the following code snippet:

The numbers list on the right side contains four values. The assignment operator unpacks these values into the four variables on the left side of the statement. The values in numbers get assigned to variables in the same order that they appear in the iterable. The assignment is done by position.
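The contrast between index-based assignments and a single unpacking statement can be sketched like this. The variable names and list values are illustrative.

```python
numbers = [1, 2, 3, 4]

# Index-based version: one assignment per value.
one = numbers[0]
two = numbers[1]
three = numbers[2]
four = numbers[3]
by_index = (one, two, three, four)

# Unpacking version: a single assignment, with values assigned by position.
one, two, three, four = numbers

print(one, two, three, four)  # 1 2 3 4
```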

Note: Because Python sets are also iterables, you can use them in an iterable unpacking operation. However, it won’t be clear which value goes to which variable because sets are unordered data structures.

The above example shows the most common form of iterable unpacking in Python. The main condition for the example to work is that the number of variables matches the number of values in the iterable.

What if you don’t know the iterable length upfront? Will the unpacking work? It’ll work if you use the * operator to pack several values into one of your target variables.

For example, say that you want to unpack the first and second values in numbers into two different variables. Additionally, you would like to pack the rest of the values in a single variable conveniently called rest. In this case, you can use the unpacking operator like in the following code:

In this example, first and second hold the first and second values in numbers, respectively. These values are assigned by position. The * operator packs all the remaining values in the input iterable into rest.
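A minimal sketch of this pattern, assuming a four-item numbers list, is shown below:

```python
numbers = [1, 2, 3, 4]

# first and second are assigned by position, and rest collects what's left.
first, second, *rest = numbers

print(first)   # 1
print(second)  # 2
print(rest)    # [3, 4]
```

The starred variable always receives a list, even when the input is a tuple or when no values are left over, in which case the list is empty.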

The unpacking operator (*) can appear at any position in your series of target variables. However, you can only use one instance of the operator:

The iterable unpacking operator works in any position in your list of variables, but only once per assignment. Using more than one unpacking operator isn’t allowed and raises a SyntaxError.
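The sketch below shows the operator in the leading and middle positions. Because a statement with two starred targets fails before the program runs, the invalid case is passed to the built-in compile() function as a string so that the error can be caught. The variable names are illustrative.

```python
numbers = [1, 2, 3, 4]

# Starred target at the beginning.
*head, last = numbers
print(head, last)  # [1, 2, 3] 4

# Starred target in the middle.
first, *middle, final = numbers
print(first, middle, final)  # 1 [2, 3] 4

# Two starred targets in one assignment are rejected at compile time.
try:
    compile("*a, *b = [1, 2, 3, 4]", "<example>", "exec")
except SyntaxError:
    two_stars_rejected = True
else:
    two_stars_rejected = False

print(two_stars_rejected)  # True
```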

Dropping away unwanted values from the iterable is a common use case for the iterable unpacking operator. Consider the following example:

In Python, if you want to signal that a variable won’t be used, then you use an underscore (_) as the variable’s name. In this example, useful holds the only value that you need to use from the input iterable. The _ variable is a placeholder that guarantees that the unpacking works correctly. You won’t use the values that end up in this disposable variable.

Note: In the example above, if your target iterable is a sequence data type, such as a list or tuple, then it’s best to access its last item directly.

To do this, you can use the -1 index:

Using -1 gives you access to the last item of any sequence data type. In contrast, if you’re dealing with iterators, then you won’t be able to use indices. That’s when the *_ syntax comes to your rescue.
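The following sketch compares the options discussed above. The input values are illustrative assumptions.

```python
values = [1, 2, 3]

# Keep only the last value, and discard the rest into the _ placeholder.
*_, useful = values
print(useful)  # 3

# For a sequence, direct indexing with -1 is simpler.
print(values[-1])  # 3

# An iterator doesn't support indexing, so *_ unpacking is the way to go.
iterator = iter(values)
try:
    iterator[-1]
except TypeError:
    indexing_failed = True

*_, last_from_iterator = iterator
print(last_from_iterator)  # 3
```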

The pattern used in the above example comes in handy when you have a function that returns multiple values, and you only need a few of these values in your code. The os.walk() function may provide a good example of this situation.

This function allows you to iterate over the content of a directory recursively. The function returns a generator object that yields three-item tuples. Each tuple contains the following items:

- The path to the current directory as a string

- The names of all the immediate subdirectories as a list of strings

- The names of all the files in the current directory as a list of strings

Now say that you want to iterate over your home directory and list only the files. You can do something like this:

This code will issue a long output depending on the current content of your home directory. Note that you need to provide a string with the path to your user folder for the example to work. The _ placeholder variable will hold the unwanted data.

In contrast, the filenames variable will hold the list of files in the current directory, which is the data that you need. The code will print the list of filenames. Go ahead and give it a try!
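A sketch of this pattern appears below. To keep the output short and the example self-contained, it walks a small temporary directory instead of a home directory. The helper name list_filenames and the file names are assumptions. You could pass the path to your own user folder instead.

```python
import os
import tempfile


def list_filenames(root):
    """Return one list of file names per directory found under root."""
    found = []
    # Only the third item of each tuple is needed, so the first two go to _.
    for _, _, filenames in os.walk(root):
        found.append(filenames)
    return found


# Build a tiny directory tree to walk: root/a.txt and root/sub/b.txt.
with tempfile.TemporaryDirectory() as root:
    os.mkdir(os.path.join(root, "sub"))
    for relative_path in ("a.txt", os.path.join("sub", "b.txt")):
        with open(os.path.join(root, relative_path), "w") as file:
            file.write("demo")
    result = list_filenames(root)

print(result)  # [['a.txt'], ['b.txt']]
```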

The assignment operator also comes in handy when you need to provide default argument values in your functions and methods. Default argument values allow you to define functions that take arguments with sensible defaults. These defaults allow you to call the function with specific values or to simply rely on the defaults.

As an example, consider the following function:

This function takes one argument, called name. This argument has a sensible default value that’ll be used when you call the function without arguments. To provide this sensible default value, you use an assignment.

Note: According to PEP 8, the style guide for Python code, you shouldn’t use spaces around the assignment operator when providing default values for unannotated arguments in function definitions.

Here’s how the function works:

If you don’t provide a name during the call to greet(), then the function uses the default value provided in the definition. If you provide a name, then the function uses it instead of the default one.
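A function along these lines could be sketched as follows. The default value "World", the greeting text, and the argument "Pythonista" are assumptions, since only the names greet() and name appear in the text.

```python
def greet(name="World"):
    """Return a greeting, falling back to the default name when none is given."""
    return f"Hello, {name}!"


print(greet())              # Hello, World!
print(greet("Pythonista"))  # Hello, Pythonista!
```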

Up to this point, you’ve learned a lot about the Python assignment operator and how to use it for writing different types of assignment statements. In the following sections, you’ll dive into a great feature of assignment statements in Python. You’ll learn about augmented assignments.

Augmented Assignment Operators in Python

Python supports what are known as augmented assignments. An augmented assignment combines the assignment operator with another operator to make the statement more concise. Most Python math and bitwise operators have an augmented assignment variation that looks something like this:

Note that $ isn’t a valid Python operator. In the general form variable $= expression, it’s a placeholder for a generic operator. This statement works as follows:

- Evaluate expression to produce a value.

- Run the operation defined by the operator that prefixes the = sign, using the previous value of variable and the return value of expression as operands.

- Assign the resulting value back to variable.

In practice, an augmented assignment like the above is roughly equivalent to the statement variable = variable $ expression.

As you can conclude, augmented assignments are syntactic sugar. They provide a shorthand notation for a specific and popular kind of assignment.
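The shorthand can be compared with its expanded form in a short sketch. The variable names and values are illustrative, and the comparison is shown for integers only.

```python
# Augmented form.
x = 10
x += 5

# Expanded form with the same result.
y = 10
y = y + 5

print(x, y)  # 15 15

# The same pattern applies to other math and bitwise operators.
total = 7
total *= 3       # same result as total = total * 3
flags = 0b0101
flags |= 0b0010  # same result as flags = flags | 0b0010

print(total, flags)  # 21 7
```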

For example, say that you need to define a counter variable to count some stuff in your code. You can use the += operator to increment counter by 1 using the following code:

In this example, the += operator, known as augmented addition, adds 1 to the previous value in counter each time you run the statement counter += 1.
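As a small sketch of a counter in use, the loop below counts the even numbers in a list. The list and the counting condition are assumptions made for illustration.

```python
counter = 0  # the counter must exist before you can increment it

for value in [3, 8, 5, 12, 7]:
    if value % 2 == 0:
        counter += 1  # add 1 to the previous value of counter

print(counter)  # 2
```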

It’s important to note that unlike regular assignments, augmented assignments don’t create new variables. They only allow you to update existing variables. If you use an augmented assignment with an undefined variable, then you get a NameError:

Python evaluates the right side of the statement before assigning the resulting value back to the target variable. In this specific example, when Python tries to compute x + 1, it finds that x isn’t defined.
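The failure can be reproduced with a short sketch. The name undefined_total is hypothetical and is deliberately left undefined.

```python
try:
    undefined_total += 1  # undefined_total was never assigned a value
except NameError as exc:
    message = str(exc)

print(message)
```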

Great! You now know that an augmented assignment consists of combining the assignment operator with another operator, like a math or bitwise operator. To continue this discussion, you’ll learn which math operators have an augmented variation in Python.

An equation like x = x + b only holds in math when b is zero. But in programming, a statement like x = x + b is perfectly valid and can be extremely useful. It adds b to x and reassigns the result back to x.