6 - Draw conclusions and make recommendations

from Part 1 - The research process

Published online by Cambridge University Press: 09 June 2018

This is the point everything has been leading up to. Having carried out the research and marshalled all the evidence, you are now faced with the problem of making sense of it all. Here you need to distinguish clearly between three different things: results, conclusions and recommendations.

Results are what you have found through the research. They are more than just the raw data that you have collected. They are the processed findings of the work – what you have been analysing and striving to understand. In total, the results form the picture that you have uncovered through your research. Results are neutral. They clearly depend on the nature of the questions asked but, given a particular set of questions, the results should not be contentious – there should be no debate about whether or not 63 per cent of respondents said ‘yes’ to question 16.

When you consider the results you can draw conclusions based on them. These are less neutral as you are putting your interpretation on the results and thus introducing a degree of subjectivity. Some research is simply descriptive – the final report merely presents the results. In most cases, though, you will want to interpret them, saying what they mean for you – drawing conclusions.

These conclusions might arise from a comparison between your results and the findings of other studies. They will, almost certainly, be developed with reference to the aim and objectives of the research. While there will be no debate over the results, the conclusions could well be contentious. Someone else might interpret the results differently, arriving at different conclusions. For this reason you need to support your conclusions with structured, logical reasoning.

Having drawn your conclusions you can then make recommendations. These should flow from your conclusions. They are suggestions about action that might be taken by people or organizations in the light of the conclusions that you have drawn from the results of the research. Like the conclusions, the recommendations may be open to debate. You may feel that, on the basis of your conclusions, the organization you have been studying should do this, that or the other.

- Draw conclusions and make recommendations

- Book: How to Do Research

- Online publication: 09 June 2018

- Chapter DOI: https://doi.org/10.29085/9781856049825.007

Writing a Research Paper Conclusion | Step-by-Step Guide

Published on October 30, 2022 by Jack Caulfield. Revised on April 13, 2023.

- Restate the problem statement addressed in the paper

- Summarize your overall arguments or findings

- Suggest the key takeaways from your paper

The content of the conclusion varies depending on whether your paper presents the results of original empirical research or constructs an argument through engagement with sources.

Table of contents

- Step 1: Restate the problem

- Step 2: Sum up the paper

- Step 3: Discuss the implications

- Research paper conclusion examples

- Frequently asked questions about research paper conclusions

The first task of your conclusion is to remind the reader of your research problem. You will have discussed this problem in depth throughout the body, but now the point is to zoom back out from the details to the bigger picture.

While you are restating a problem you’ve already introduced, you should avoid phrasing it identically to how it appeared in the introduction. Ideally, you’ll find a novel way to circle back to the problem from the more detailed ideas discussed in the body.

For example, an argumentative paper advocating new measures to reduce the environmental impact of agriculture might restate its problem as follows:

Meanwhile, an empirical paper studying the relationship of Instagram use with body image issues might present its problem like this:

“In conclusion …”

Avoid starting your conclusion with phrases like “In conclusion” or “To conclude,” as this can come across as too obvious and make your writing seem unsophisticated. The content and placement of your conclusion should make its function clear without the need for additional signposting.

Having zoomed back in on the problem, it’s time to summarize how the body of the paper went about addressing it, and what conclusions this approach led to.

Depending on the nature of your research paper, this might mean restating your thesis and arguments, or summarizing your overall findings.

Argumentative paper: Restate your thesis and arguments

In an argumentative paper, you will have presented a thesis statement in your introduction, expressing the overall claim your paper argues for. In the conclusion, you should restate the thesis and show how it has been developed through the body of the paper.

Briefly summarize the key arguments made in the body, showing how each of them contributes to proving your thesis. You may also mention any counterarguments you addressed, emphasizing why your thesis holds up against them, particularly if your argument is a controversial one.

Don’t go into the details of your evidence or present new ideas; focus on outlining in broad strokes the argument you have made.

Empirical paper: Summarize your findings

In an empirical paper, this is the time to summarize your key findings. Don’t go into great detail here (you will have presented your in-depth results and discussion already), but do clearly express the answers to the research questions you investigated.

Describe your main findings, even if they weren’t necessarily the ones you expected or hoped for, and explain the overall conclusion they led you to.

Having summed up your key arguments or findings, the conclusion ends by considering the broader implications of your research. This means expressing the key takeaways, practical or theoretical, from your paper—often in the form of a call for action or suggestions for future research.

Argumentative paper: Strong closing statement

An argumentative paper generally ends with a strong closing statement. In the case of a practical argument, make a call for action: What actions do you think should be taken by the people or organizations concerned in response to your argument?

If your topic is more theoretical and unsuitable for a call for action, your closing statement should express the significance of your argument—for example, in proposing a new understanding of a topic or laying the groundwork for future research.

Empirical paper: Future research directions

In a more empirical paper, you can close by either making recommendations for practice (for example, in clinical or policy papers), or suggesting directions for future research.

Whatever the scope of your own research, there will always be room for further investigation of related topics, and you’ll often discover new questions and problems during the research process.

Finish your paper on a forward-looking note by suggesting how you or other researchers might build on this topic in the future and address any limitations of the current paper.

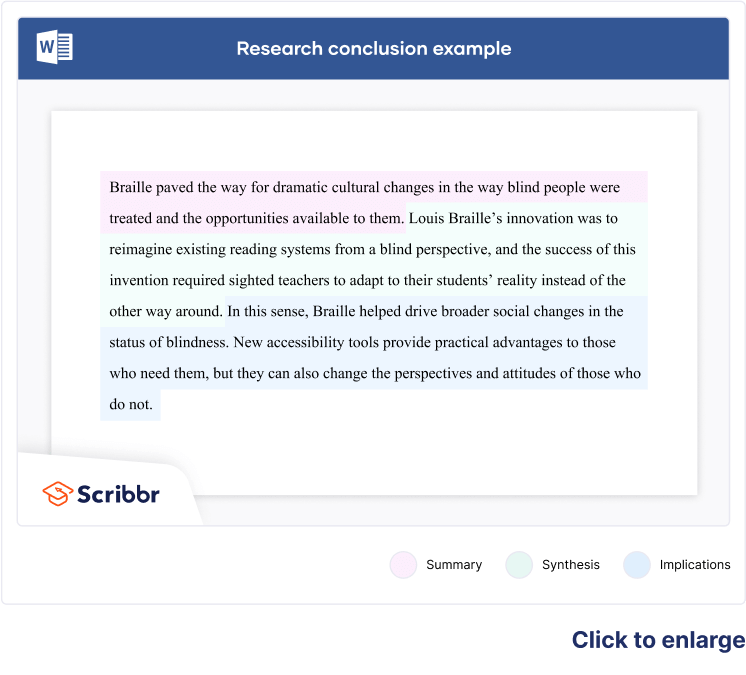

Full examples of research paper conclusions are shown below: the first for an argumentative paper, the second for an empirical paper.

While the role of cattle in climate change is by now common knowledge, countries like the Netherlands continually fail to confront this issue with the urgency it deserves. The evidence is clear: To create a truly futureproof agricultural sector, Dutch farmers must be incentivized to transition from livestock farming to sustainable vegetable farming. As well as dramatically lowering emissions, plant-based agriculture, if approached in the right way, can produce more food with less land, providing opportunities for nature regeneration areas that will themselves contribute to climate targets. Although this approach would have economic ramifications, from a long-term perspective, it would represent a significant step towards a more sustainable and resilient national economy. Transitioning to sustainable vegetable farming will make the Netherlands greener and healthier, setting an example for other European governments. Farmers, policymakers, and consumers must focus on the future, not just on their own short-term interests, and work to implement this transition now.

As social media becomes increasingly central to young people’s everyday lives, it is important to understand how different platforms affect their developing self-conception. By testing the effect of daily Instagram use among teenage girls, this study established that highly visual social media does indeed have a significant effect on body image concerns, with a strong correlation between the amount of time spent on the platform and participants’ self-reported dissatisfaction with their appearance. However, the strength of this effect was moderated by pre-test self-esteem ratings: Participants with higher self-esteem were less likely to experience an increase in body image concerns after using Instagram. This suggests that, while Instagram does impact body image, it is also important to consider the wider social and psychological context in which this usage occurs: Teenagers who are already predisposed to self-esteem issues may be at greater risk of experiencing negative effects. Future research into Instagram and other highly visual social media should focus on establishing a clearer picture of how self-esteem and related constructs influence young people’s experiences of these platforms. Furthermore, while this experiment measured Instagram usage in terms of time spent on the platform, observational studies are required to gain more insight into different patterns of usage—to investigate, for instance, whether active posting is associated with different effects than passive consumption of social media content.

If you’re unsure about the conclusion, it can be helpful to ask a friend or fellow student to read your conclusion and summarize the main takeaways.

- Do they understand from your conclusion what your research was about?

- Are they able to summarize the implications of your findings?

- Can they answer your research question based on your conclusion?

The conclusion of a research paper has several key elements you should make sure to include:

- A restatement of the research problem

- A summary of your key arguments and/or findings

- A short discussion of the implications of your research

It’s not appropriate to present new arguments or evidence in the conclusion. While you might be tempted to save a striking argument for last, research papers follow a more formal structure than this.

All your findings and arguments should be presented in the body of the text (more specifically in the results and discussion sections if you are following a scientific structure). The conclusion is meant to summarize and reflect on the evidence and arguments you have already presented, not introduce new ones.

Cite this Scribbr article

Caulfield, J. (2023, April 13). Writing a Research Paper Conclusion | Step-by-Step Guide. Scribbr. Retrieved August 23, 2024, from https://www.scribbr.com/research-paper/research-paper-conclusion/

Online Guide to Writing and Research

Planning and Writing a Research Paper

Draw Conclusions

As a writer, you are presenting your viewpoint, opinions, evidence, etc. for others to review, so you must take on this task with maturity, courage and thoughtfulness. Remember, you are adding to the discourse community with every research paper that you write. This is a privilege and an opportunity to share your point of view with the world at large in an academic setting.

Because research generates further research, the conclusions you draw from your research are important. As a researcher, you depend on the integrity of the research that precedes your own efforts, and researchers depend on each other to draw valid conclusions.

To test the validity of your conclusions, you will have to review both the content of your paper and the way in which you arrived at the content. You may ask yourself questions, such as the ones presented below, to detect any weak areas in your paper, so you can then make those areas stronger. Notice that some of the questions relate to your process, others to your sources, and others to how you arrived at your conclusions.

Checklist for Evaluating Your Conclusions

| Checked | Questions |
| --- | --- |
| ✓ | Does the evidence in my paper evolve from a stated thesis or topic statement? |
| ✓ | Do all of my resources for evidence agree with each other? Are there conflicts, and have I identified them as conflicts? |
| ✓ | Have I offered enough evidence for every conclusion I have drawn? Are my conclusions based on empirical studies, expert testimony, or data, or all of these? |
| ✓ | Are all of my sources credible? Is anyone in my audience likely to challenge them? |
| ✓ | Have I presented circular reasoning or illogical conclusions? |
| ✓ | Am I confident that I have covered most of the major sources of information on my topic? If not, have I stated this as a limitation of my research? |
| ✓ | Have I discovered further areas for research and identified them in my paper? |
| ✓ | Have others to whom I have shown my paper perceived the validity of my conclusions? |
| ✓ | Are my conclusions strong? If not, what causes them to be weak? |

Key Takeaways

- Because research generates further research, the conclusions you draw from your research are important.

- To test the validity of your conclusions, you will have to review both the content of your paper and the way in which you arrived at the content.

This work is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License. © 2022 UMGC.

How to Write a Conclusion for Research Papers (with Examples)

The conclusion of a research paper is a crucial section that shapes the overall impact and effectiveness of the paper. However, it typically receives less attention than the introduction and the body. The conclusion provides a concise summary of the key findings, their significance, and their implications, and gives the study a sense of closure. Discussing how the findings can be applied in real-world scenarios or inform policy, practice, or decision-making is especially valuable to practitioners and policymakers. The conclusion also gives other researchers clear insights that they can build on in their own work, contributing to the advancement of knowledge in the field.

The research paper conclusion should explain the significance of your findings within the broader context of your field. It should state how your results contribute to the existing body of knowledge and whether they confirm or challenge existing theories or hypotheses. By identifying unanswered questions or areas requiring further investigation, you also demonstrate your awareness of the broader research landscape.

Remember to tailor the research paper conclusion to the specific needs and interests of your intended audience, which may include researchers, practitioners, policymakers, or a combination of these.

Table of Contents

- What is a conclusion in a research paper?

- Summarizing conclusion

- Editorial conclusion

- Externalizing conclusion

- Importance of a good research paper conclusion

- How to write a conclusion for your research paper

- Research paper conclusion examples

- How to write a research paper conclusion with Paperpal?

- Frequently Asked Questions

A conclusion in a research paper is the final section where you summarize and wrap up your research, presenting the key findings and insights derived from your study. The research paper conclusion is not the place to introduce new information or data that was not discussed in the main body of the paper. When working on how to conclude a research paper, remember to stick to summarizing and interpreting existing content. The research paper conclusion serves the following purposes: 1

- Warn readers of the possible consequences of not attending to the problem.

- Recommend specific course(s) of action.

- Restate key ideas to drive home the ultimate point of your research paper.

- Provide a “take-home” message that you want the readers to remember about your study.

Types of conclusions for research papers

In research papers, the conclusion provides closure to the reader. The type of research paper conclusion you choose depends on the nature of your study, your goals, and your target audience. Three common types are described below.

A summarizing conclusion is the most common type of conclusion in research papers. It involves summarizing the main points, reiterating the research question, and restating the significance of the findings. This common type of research paper conclusion is used across different disciplines.

An editorial conclusion is less common but can be used in research papers that are focused on proposing or advocating for a particular viewpoint or policy. It involves presenting a strong editorial or opinion based on the research findings and offering recommendations or calls to action.

An externalizing conclusion is a type of conclusion that extends the research beyond the scope of the paper by suggesting potential future research directions or discussing the broader implications of the findings. This type of conclusion is often used in more theoretical or exploratory research papers.

The conclusion in a research paper serves several important purposes:

- Offers Implications and Recommendations: Your research paper conclusion is an excellent place to discuss the broader implications of your research and suggest potential areas for further study. It’s also an opportunity to offer practical recommendations based on your findings.

- Provides Closure: A good research paper conclusion provides a sense of closure to your paper. It should leave the reader with a feeling that they have reached the end of a well-structured and thought-provoking research project.

- Leaves a Lasting Impression: Writing a well-crafted research paper conclusion leaves a lasting impression on your readers. It’s your final opportunity to leave them with a new idea, a call to action, or a memorable quote.

Writing a strong conclusion for your research paper is essential to leave a lasting impression on your readers. Here’s a step-by-step process to help you decide what to put in the conclusion of a research paper: 2

- Research Statement: Begin your research paper conclusion by restating your research statement. This reminds the reader of the main point you’ve been trying to prove throughout your paper. Keep it concise and clear.

- Key Points: Summarize the main arguments and key points you’ve made in your paper. Avoid introducing new information in the research paper conclusion. Instead, provide a concise overview of what you’ve discussed in the body of your paper.

- Address the Research Questions: If your research paper is based on specific research questions or hypotheses, briefly address whether you’ve answered them or achieved your research goals. Discuss the significance of your findings in this context.

- Significance: Highlight the importance of your research and its relevance in the broader context. Explain why your findings matter and how they contribute to the existing knowledge in your field.

- Implications: Explore the practical or theoretical implications of your research. How might your findings impact future research, policy, or real-world applications? Consider the “so what?” question.

- Future Research: Offer suggestions for future research in your area. What questions or aspects remain unanswered or warrant further investigation? This shows that your work opens the door for future exploration.

- Closing Thought: Conclude your research paper conclusion with a thought-provoking or memorable statement. This can leave a lasting impression on your readers and wrap up your paper effectively. Avoid introducing new information or arguments here.

- Proofread and Revise: Carefully proofread your conclusion for grammar, spelling, and clarity. Ensure that your ideas flow smoothly and that your conclusion is coherent and well-structured.

Remember that a well-crafted research paper conclusion reflects the strength of your research and your ability to communicate its significance effectively. It should leave a lasting impression on your readers and tie together all the threads of your paper. Now that you know how to start the conclusion of a research paper and what elements to include to make it impactful, let’s look at some sample research paper conclusions.

| Type of conclusion | Research topic | Example conclusion |
| --- | --- | --- |
| Summarizing Conclusion | Impact of social media on adolescents’ mental health | In conclusion, our study has shown that increased usage of social media is significantly associated with higher levels of anxiety and depression among adolescents. These findings highlight the importance of understanding the complex relationship between social media and mental health to develop effective interventions and support systems for this vulnerable population. |
| Editorial Conclusion | Environmental impact of plastic waste | In light of our research findings, it is clear that we are facing a plastic pollution crisis. To mitigate this issue, we strongly recommend a comprehensive ban on single-use plastics, increased recycling initiatives, and public awareness campaigns to change consumer behavior. The responsibility falls on governments, businesses, and individuals to take immediate actions to protect our planet and future generations. |
| Externalizing Conclusion | Exploring applications of AI in healthcare | While our study has provided insights into the current applications of AI in healthcare, the field is rapidly evolving. Future research should delve deeper into the ethical, legal, and social implications of AI in healthcare, as well as the long-term outcomes of AI-driven diagnostics and treatments. Furthermore, interdisciplinary collaboration between computer scientists, medical professionals, and policymakers is essential to harness the full potential of AI while addressing its challenges. |

How to write a research paper conclusion with Paperpal?

A research paper conclusion is not just a summary of your study, but a synthesis of the key findings that ties the research together and places it in a broader context. A research paper conclusion should be concise, typically around one paragraph in length. However, some complex topics may require a longer conclusion to ensure the reader is left with a clear understanding of the study’s significance. Paperpal, an AI writing assistant trusted by over 800,000 academics globally, can help you write a well-structured conclusion for your research paper.

- Sign Up or Log In: Create a new Paperpal account or log in with your details.

- Navigate to Features: Once logged in, head over to the features side navigation pane. Click on Templates and you’ll find a suite of generative AI features to help you write better, faster.

- Generate an outline: Under Templates, select ‘Outlines’. Choose ‘Research article’ as your document type.

- Select your section: Since you’re focusing on the conclusion, select this section when prompted.

- Choose your field of study: Identifying your field of study allows Paperpal to provide more targeted suggestions, ensuring the relevance of your conclusion to your specific area of research.

- Provide a brief description of your study: Enter details about your research topic and findings. This information helps Paperpal generate a tailored outline that aligns with your paper’s content.

- Generate the conclusion outline: After entering all necessary details, click on ‘generate’. Paperpal will then create a structured outline for your conclusion, to help you start writing and build upon the outline.

- Write your conclusion: Use the generated outline to build your conclusion. The outline serves as a guide, ensuring you cover all critical aspects of a strong conclusion, from summarizing key findings to highlighting the research’s implications.

- Refine and enhance: Paperpal’s ‘Make Academic’ feature can be particularly useful in the final stages. Select any paragraph of your conclusion and use this feature to elevate the academic tone, ensuring your writing is aligned to the academic journal standards.

By following these steps, Paperpal not only simplifies the process of writing a research paper conclusion but also ensures it is impactful, concise, and aligned with academic standards.

The research paper conclusion is a crucial part of your paper as it provides the final opportunity to leave a strong impression on your readers. In the research paper conclusion, summarize the main points of your research paper by restating your research statement, highlighting the most important findings, addressing the research questions or objectives, explaining the broader context of the study, discussing the significance of your findings, providing recommendations if applicable, and emphasizing the takeaway message. The main purpose of the conclusion is to remind the reader of the main point or argument of your paper and to provide a clear and concise summary of the key findings and their implications. All these elements should feature on your list of what to put in the conclusion of a research paper to create a strong final statement for your work.

A strong conclusion is a critical component of a research paper, as it provides an opportunity to wrap up your arguments, reiterate your main points, and leave a lasting impression on your readers. Here are the key elements of a strong research paper conclusion:

1. Conciseness: A research paper conclusion should be concise and to the point. It should not introduce new information or ideas that were not discussed in the body of the paper.
2. Summarization: The research paper conclusion should be comprehensive enough to give the reader a clear understanding of the research’s main contributions.
3. Relevance: Ensure that the information included in the research paper conclusion is directly relevant to the research paper’s main topic and objectives; avoid unnecessary details.
4. Connection to the Introduction: A well-structured research paper conclusion often revisits the key points made in the introduction and shows how the research has addressed the initial questions or objectives.
5. Emphasis: Highlight the significance and implications of your research. Why is your study important? What are the broader implications or applications of your findings?
6. Call to Action: Include a call to action or a recommendation for future research or action based on your findings.

The length of a research paper conclusion can vary depending on several factors, including the overall length of the paper, the complexity of the research, and the specific journal requirements. While there is no strict rule for the length of a conclusion, it’s generally advisable to keep it relatively short. A typical research paper conclusion might be around 5-10% of the paper’s total length. For example, if your paper is 10 pages long, the conclusion might be roughly half a page to one page in length.

In general, you do not need to include citations in the research paper conclusion. Citations are typically reserved for the body of the paper to support your arguments and provide evidence for your claims. However, there may be some exceptions to this rule:

1. If you are drawing a direct quote or paraphrasing a specific source in your research paper conclusion, you should include a citation to give proper credit to the original author.
2. If your conclusion refers to or discusses specific research, data, or sources that are crucial to the overall argument, citations can be included to reinforce your conclusion’s validity.

The conclusion of a research paper serves several important purposes:

1. Summarize the Key Points
2. Reinforce the Main Argument
3. Provide Closure
4. Offer Insights or Implications
5. Engage the Reader
6. Reflect on Limitations

Remember that the primary purpose of the research paper conclusion is to leave a lasting impression on the reader, reinforcing the key points and providing closure to your research. It’s often the last part of the paper that the reader will see, so it should be strong and well-crafted.

Drawing Conclusions in Psychological Research: From Data to Insights

Have you ever wondered how a hunch transforms into a scientific understanding? In the realm of psychological research, this transformation hinges on a crucial step: drawing conclusions. This step is not about making wild guesses but about making sense of the data collected through meticulous research and testing hypotheses. It’s the moment where pieces of the puzzle come together, providing answers and sometimes raising even more questions. Let’s embark on a journey to understand how researchers in psychology navigate from raw data to insightful conclusions that can influence theories, practices, and our very understanding of human behavior.

What happens after data analysis in psychological research?

Once the numbers have been crunched and the analyses are complete, researchers stand at a critical juncture. They must interpret the data in a meaningful way. This process involves looking at the results from various angles, considering alternative explanations, and determining the relevance of the findings to the original research questions. It’s a step that requires not just statistical know-how but also a deep understanding of human psychology and the theories that frame our understanding of it.

How are conclusions synthesized in psychology?

The synthesis of conclusions in psychological research is an art as much as it is a science. Researchers meticulously review their findings, considering the context of the study, the limitations of their methods, and the patterns that have emerged. They may discover that their results support their initial hypothesis, or they may be taken by surprise by what the data reveals. In either case, they must construct a narrative that aligns with the evidence and contributes to the broader conversation in the field of psychology.

The reflective process of drawing conclusions

Drawing conclusions is inherently reflective. It’s a time for researchers to look back at their work and ask crucial questions. Did the study design work as intended? Were the methods appropriate? Is there a need for further research? This reflection is not only about assessing the success of the study but also about understanding its place within the larger body of psychological research. It’s about taking the new knowledge gleaned and fitting it into the existing puzzle—or realizing that perhaps the puzzle itself needs to be redefined.

Relating outcomes to existing theories

Every conclusion drawn from a psychological study has the potential to affirm, challenge, or refine existing theories. This is where research moves beyond data points and becomes part of the ongoing dialogue that shapes our understanding of the mind and behavior. Researchers must consider how their conclusions align with or diverge from the predictions made by current theories and what this means for the field. Does the study reinforce the credibility of a theory, or does it suggest that revisions are necessary?

Potentially modifying theories based on new evidence

When data introduce new perspectives or contradict prevailing theories, it’s a sign that the field may be on the cusp of change. Researchers must be prepared to propose modifications to existing theories or even suggest new ones. This can be a contentious process, as it challenges the status quo and requires a strong foundation of evidence. However, it’s through this process that psychology continues to evolve and refine its understanding of human behavior.

Understanding the implications of research

The conclusions of a psychological study are not confined to academic papers; they have real-world implications. Researchers must consider how their findings can inform clinical practices, educational strategies, or even policy decisions. They need to communicate the relevance of their work to various audiences, from fellow scientists to practitioners and policymakers. The implications can be profound, influencing how we approach mental health, learning, and social interaction.

The role of peer review in drawing conclusions

Peer review acts as a critical checkpoint in the process of drawing conclusions. Other experts in the field scrutinize the study to ensure that the methodology is sound, the analysis is robust, and the conclusions are justified. This collaborative effort helps to maintain the integrity of psychological research and ensures that conclusions are based on a solid foundation of evidence.

Drawing conclusions is a defining moment in psychological research. It’s the culmination of a complex process that starts with a question and, through careful design and analysis, ends with insights that can deepen our understanding of the human mind and behavior. This step is not the end of the journey; it’s a bridge to further inquiry, discussion, and discovery that propels the field forward. As researchers continue to piece together the vast puzzle of psychology, each study adds another piece, slowly bringing into focus the intricate picture of human nature.

How do you think the process of drawing conclusions in research affects the way we understand human behavior? And, what role do you believe new evidence should play in challenging or reinforcing existing psychological theories?

Cochrane Training

Chapter 15: Interpreting results and drawing conclusions

Holger J Schünemann, Gunn E Vist, Julian PT Higgins, Nancy Santesso, Jonathan J Deeks, Paul Glasziou, Elie A Akl, Gordon H Guyatt; on behalf of the Cochrane GRADEing Methods Group

Key Points:

- This chapter provides guidance on interpreting the results of synthesis in order to communicate the conclusions of the review effectively.

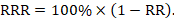

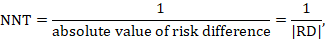

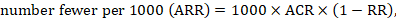

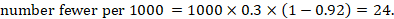

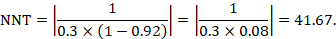

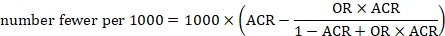

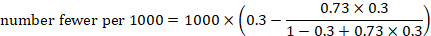

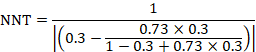

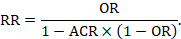

- Methods are presented for computing, presenting and interpreting relative and absolute effects for dichotomous outcome data, including the number needed to treat (NNT).

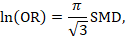

- For continuous outcome measures, review authors can present summary results for studies using natural units of measurement or as minimal important differences when all studies use the same scale. When studies measure the same construct but with different scales, review authors will need to find a way to interpret the standardized mean difference, or to use an alternative effect measure for the meta-analysis such as the ratio of means.

- Review authors should not describe results as ‘statistically significant’, ‘not statistically significant’ or ‘non-significant’ or unduly rely on thresholds for P values, but report the confidence interval together with the exact P value.

- Review authors should not make recommendations about healthcare decisions, but they can – after describing the certainty of evidence and the balance of benefits and harms – highlight different actions that might be consistent with particular patterns of values and preferences and other factors that determine a decision such as cost.
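The key point above about reporting the confidence interval together with the exact P value can be illustrated with a minimal Python sketch. It assumes a risk ratio analysed on the log scale with a normal approximation; the function name `summarize_ratio` and the input values (a risk ratio of 0.80 with a standard error of 0.10 on the log scale) are hypothetical and chosen only for illustration, not taken from the Handbook.

```python
import math


def summarize_ratio(log_rr: float, se: float, z_crit: float = 1.959964):
    """Return (RR, CI lower, CI upper, two-sided P) from a log risk ratio and its SE.

    Assumes the log risk ratio is approximately normally distributed.
    z_crit = 1.959964 gives a 95% confidence interval.
    """
    rr = math.exp(log_rr)
    lower = math.exp(log_rr - z_crit * se)
    upper = math.exp(log_rr + z_crit * se)
    z = log_rr / se
    # Two-sided P value from the standard normal distribution.
    p = math.erfc(abs(z) / math.sqrt(2))
    return rr, lower, upper, p


# Hypothetical inputs: RR = 0.80, SE of log(RR) = 0.10.
rr, lower, upper, p = summarize_ratio(log_rr=math.log(0.80), se=0.10)
print(f"RR {rr:.2f} (95% CI {lower:.2f} to {upper:.2f}), P = {p:.3f}")
```

The printed line reports the estimate, its interval and the exact P value side by side, which is one way of avoiding a bare 'significant' or 'not significant' label.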

Cite this chapter as: Schünemann HJ, Vist GE, Higgins JPT, Santesso N, Deeks JJ, Glasziou P, Akl EA, Guyatt GH. Chapter 15: Interpreting results and drawing conclusions. In: Higgins JPT, Thomas J, Chandler J, Cumpston M, Li T, Page MJ, Welch VA (editors). Cochrane Handbook for Systematic Reviews of Interventions version 6.4 (updated August 2023). Cochrane, 2023. Available from www.training.cochrane.org/handbook.

15.1 Introduction

The purpose of Cochrane Reviews is to facilitate healthcare decisions by patients and the general public, clinicians, guideline developers, administrators and policy makers. They also inform future research. A clear statement of findings, a considered discussion and a clear presentation of the authors’ conclusions are, therefore, important parts of the review. In particular, the following issues can help people make better informed decisions and increase the usability of Cochrane Reviews:

- information on all important outcomes, including adverse outcomes;

- the certainty of the evidence for each of these outcomes, as it applies to specific populations and specific interventions; and

- clarification of the manner in which particular values and preferences may bear on the desirable and undesirable consequences of the intervention.

A ‘Summary of findings’ table, described in Chapter 14, Section 14.1, provides key pieces of information about health benefits and harms in a quick and accessible format. It is highly desirable that review authors include a ‘Summary of findings’ table in Cochrane Reviews alongside a sufficient description of the studies and meta-analyses to support its contents. This description includes the rating of the certainty of evidence, also called the quality of the evidence or confidence in the estimates of the effects, which is expected in all Cochrane Reviews.

‘Summary of findings’ tables are usually supported by full evidence profiles which include the detailed ratings of the evidence (Guyatt et al 2011a, Guyatt et al 2013a, Guyatt et al 2013b, Santesso et al 2016). The Discussion section of the text of the review provides space to reflect and consider the implications of these aspects of the review’s findings. Cochrane Reviews include five standard subheadings to ensure the Discussion section places the review in an appropriate context: ‘Summary of main results (benefits and harms)’; ‘Potential biases in the review process’; ‘Overall completeness and applicability of evidence’; ‘Certainty of the evidence’; and ‘Agreements and disagreements with other studies or reviews’. Following the Discussion, the Authors’ conclusions section is divided into two standard subsections: ‘Implications for practice’ and ‘Implications for research’. The assessment of the certainty of evidence facilitates a structured description of the implications for practice and research.

Because Cochrane Reviews have an international audience, the Discussion and Authors’ conclusions should, so far as possible, assume a broad international perspective and provide guidance for how the results could be applied in different settings, rather than being restricted to specific national or local circumstances. Cultural differences and economic differences may both play an important role in determining the best course of action based on the results of a Cochrane Review. Furthermore, individuals within societies have widely varying values and preferences regarding health states, and use of societal resources to achieve particular health states. For all these reasons, and because information that goes beyond that included in a Cochrane Review is required to make fully informed decisions, different people will often make different decisions based on the same evidence presented in a review.

Thus, review authors should avoid specific recommendations that inevitably depend on assumptions about available resources, values and preferences, and other factors such as equity considerations, feasibility and acceptability of an intervention. The purpose of the review should be to present information and aid interpretation rather than to offer recommendations. The discussion and conclusions should help people understand the implications of the evidence in relation to practical decisions and apply the results to their specific situation. Review authors can aid this understanding of the implications by laying out different scenarios that describe certain value structures.

In this chapter, we address first one of the key aspects of interpreting findings that is also fundamental in completing a ‘Summary of findings’ table: the certainty of evidence related to each of the outcomes. We then provide a more detailed consideration of issues around applicability and around interpretation of numerical results, and provide suggestions for presenting authors’ conclusions.

15.2 Issues of indirectness and applicability

15.2.1 The role of the review author

“A leap of faith is always required when applying any study findings to the population at large” or to a specific person. “In making that jump, one must always strike a balance between making justifiable broad generalizations and being too conservative in one’s conclusions” (Friedman et al 1985). In addition to issues about risk of bias and other domains determining the certainty of evidence, this leap of faith is related to how well the identified body of evidence matches the posed PICO (Population, Intervention, Comparator(s) and Outcome) question. As to the population, no individual can be entirely matched to the population included in research studies. At the time of decision, there will always be differences between the study population and the person or population to whom the evidence is applied; sometimes these differences are slight, sometimes large.

The terms applicability, generalizability, external validity and transferability are related, sometimes used interchangeably and have in common that they lack a clear and consistent definition in the classic epidemiological literature (Schünemann et al 2013). However, all of the terms describe one overarching theme: whether or not available research evidence can be directly used to answer the health and healthcare question at hand, ideally supported by a judgement about the degree of confidence in this use (Schünemann et al 2013). GRADE’s certainty domains include a judgement about ‘indirectness’ to describe all of these aspects including the concept of direct versus indirect comparisons of different interventions (Atkins et al 2004, Guyatt et al 2008, Guyatt et al 2011b).

To address adequately the extent to which a review is relevant for the purpose to which it is being put, there are certain things the review author must do, and certain things the user of the review must do to assess the degree of indirectness. Cochrane and the GRADE Working Group suggest using a very structured framework to address indirectness. We discuss here and in Chapter 14 what the review author can do to help the user. Cochrane Review authors must be extremely clear on the population, intervention and outcomes that they intend to address. Chapter 14, Section 14.1.2, also emphasizes a crucial step: the specification of all patient-important outcomes relevant to the intervention strategies under comparison.

In considering whether the effect of an intervention applies equally to all participants, and whether different variations on the intervention have similar effects, review authors need to make a priori hypotheses about possible effect modifiers, and then examine those hypotheses (see Chapter 10, Section 10.10 and Section 10.11). If they find apparent subgroup effects, they must ultimately decide whether or not these effects are credible (Sun et al 2012). Differences between subgroups, particularly those that correspond to differences between studies, should be interpreted cautiously. Some chance variation between subgroups is inevitable so, unless there is good reason to believe that there is an interaction, review authors should not assume that the subgroup effect exists. If, despite due caution, review authors judge subgroup effects in terms of relative effect estimates as credible (i.e. the effects differ credibly), they should conduct separate meta-analyses for the relevant subgroups, and produce separate ‘Summary of findings’ tables for those subgroups.

The user of the review will be challenged with ‘individualization’ of the findings, whether they seek to apply the findings to an individual patient or a policy decision in a specific context. For example, even if relative effects are similar across subgroups, absolute effects will differ according to baseline risk. Review authors can help provide this information by identifying groups of people with varying baseline risks in the ‘Summary of findings’ tables, as discussed in Chapter 14, Section 14.1.3. Users can then identify their specific case or population as belonging to a particular risk group, if relevant, and assess their likely magnitude of benefit or harm accordingly. A brief scenario describing the identifying prognostic or baseline risk factors (e.g. age or gender) will help users of a review further.
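To make the dependence of absolute effects on baseline risk concrete, the following minimal Python sketch applies one relative effect to several baseline risks and derives the absolute risk reduction and the number needed to treat (NNT = 1 / absolute risk reduction). The risk ratio of 0.80, the three baseline risks and the function name `absolute_effect` are hypothetical values chosen for illustration. The sketch also assumes that the risk ratio is constant across risk groups and that the intervention reduces risk.

```python
import math


def absolute_effect(baseline_risk: float, risk_ratio: float):
    """Return (risk with intervention, absolute risk reduction, NNT).

    Assumes the same risk ratio applies at every baseline risk.
    If there is no risk reduction, NNT is returned as infinity.
    """
    risk_with = baseline_risk * risk_ratio
    arr = baseline_risk - risk_with
    nnt = 1 / arr if arr > 0 else float("inf")
    return risk_with, arr, nnt


RISK_RATIO = 0.80  # hypothetical relative effect
BASELINE_RISKS = {"low": 0.05, "moderate": 0.10, "high": 0.25}  # hypothetical

for group, risk in BASELINE_RISKS.items():
    risk_with, arr, nnt = absolute_effect(risk, RISK_RATIO)
    # NNT is conventionally rounded up; the small tolerance guards
    # against floating-point error pushing an exact value over.
    nnt_rounded = math.ceil(nnt - 1e-9)
    print(f"{group} risk: {arr * 1000:.0f} fewer per 1000, NNT {nnt_rounded}")
```

With the relative effect held fixed, the group with the higher baseline risk shows the larger absolute benefit and the smaller NNT, which is why presenting several risk groups helps users locate their own case.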

Another decision users must make is whether their individual case or population of interest is so different from those included in the studies that they cannot use the results of the systematic review and meta-analysis at all. Rather than rigidly applying the inclusion and exclusion criteria of studies, it is better to ask whether or not there are compelling reasons why the evidence should not be applied to a particular patient. Review authors can sometimes help decision makers by identifying important variation where divergence might limit the applicability of results (Rothwell 2005, Schünemann et al 2006, Guyatt et al 2011b, Schünemann et al 2013), including biologic and cultural variation, and variation in adherence to an intervention.

In addressing these issues, review authors cannot be aware of, or address, the myriad of differences in circumstances around the world. They can, however, address differences of known importance to many people and, importantly, they should avoid assuming that other people’s circumstances are the same as their own in discussing the results and drawing conclusions.

15.2.2 Biological variation

Issues of biological variation that may affect the applicability of a result to a reader or population include divergence in pathophysiology (e.g. biological differences between women and men that may affect responsiveness to an intervention) and divergence in a causative agent (e.g. for infectious diseases such as malaria, which may be caused by several different parasites). The discussion of the results in the review should make clear whether the included studies addressed all or only some of these groups, and whether any important subgroup effects were found.

15.2.3 Variation in context

Some interventions, particularly non-pharmacological interventions, may work in some contexts but not in others; the situation has been described as program by context interaction (Hawe et al 2004). Contextual factors might pertain to the host organization in which an intervention is offered, such as the expertise, experience and morale of the staff expected to carry out the intervention, the competing priorities for the clinician’s or staff’s attention, the local resources such as services and facilities made available to the program and the status or importance given to the program by the host organization. Broader context issues might include aspects of the system within which the host organization operates, such as the fee or payment structure for healthcare providers and the local insurance system. Some interventions, in particular complex interventions (see Chapter 17), can be only partially implemented in some contexts, and this requires judgements about indirectness of the intervention and its components for readers in that context (Schünemann 2013).

Contextual factors may also pertain to the characteristics of the target group or population, such as cultural and linguistic diversity, socio-economic position and rural or urban setting. These factors may mean that a particular style of care or relationship evolves between service providers and consumers that may or may not match the values and technology of the program.

For many years these aspects have been acknowledged when decision makers have argued that results of evidence reviews from other countries do not apply in their own country or setting. Whilst some programmes/interventions have been successfully transferred from one context to another, others have not (Resnicow et al 1993, Lumley et al 2004, Coleman et al 2015). Review authors should be cautious when making generalizations from one context to another. They should report on the presence (or otherwise) of context-related information in intervention studies, where this information is available.

15.2.4 Variation in adherence

Variation in the adherence of the recipients and providers of care can limit the certainty in the applicability of results. Predictable differences in adherence can be due to divergence in how recipients of care perceive the intervention (e.g. the importance of side effects), economic conditions or attitudes that make some forms of care inaccessible in some settings, such as in low-income countries (Dans et al 2007). It should not be assumed that high levels of adherence in closely monitored randomized trials will translate into similar levels of adherence in normal practice.

15.2.5 Variation in values and preferences

Decisions about healthcare management strategies and options involve trading off health benefits and harms. The right choice may differ for people with different values and preferences (i.e. the importance people place on the outcomes and interventions), and it is important that decision makers ensure that decisions are consistent with a patient or population’s values and preferences. The importance placed on outcomes, together with other factors, will influence whether the recipients of care will or will not accept an option that is offered (Alonso-Coello et al 2016) and, thus, can be one factor influencing adherence. In Section 15.6, we describe how the review author can help this process and the limits of supporting decision making based on intervention reviews.

15.3 Interpreting results of statistical analyses

15.3.1 Confidence intervals

Results for both individual studies and meta-analyses are reported with a point estimate together with an associated confidence interval. For example, ‘The odds ratio was 0.75 with a 95% confidence interval of 0.70 to 0.80’. The point estimate (0.75) is the best estimate of the magnitude and direction of the experimental intervention’s effect compared with the comparator intervention. The confidence interval describes the uncertainty inherent in any estimate, and describes a range of values within which we can be reasonably sure that the true effect actually lies. If the confidence interval is relatively narrow (e.g. 0.70 to 0.80), the effect size is known precisely. If the interval is wider (e.g. 0.60 to 0.93) the uncertainty is greater, although there may still be enough precision to make decisions about the utility of the intervention. Intervals that are very wide (e.g. 0.50 to 1.10) indicate that we have little knowledge about the effect and this imprecision affects our certainty in the evidence, and that further information would be needed before we could draw a more certain conclusion.
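As a rough illustration of how a point estimate and its confidence interval arise, the sketch below computes an odds ratio and a Wald-type interval on the log scale from a single 2×2 table. The counts are hypothetical, the function name is an assumption, and the normal approximation used here is only one of several possible methods.

```python
import math
from statistics import NormalDist


def odds_ratio_ci(a, b, c, d, level=0.95):
    """Odds ratio with a Wald-type confidence interval computed on the log scale.

    a, b: events and non-events in the experimental group
    c, d: events and non-events in the comparator group
    """
    log_or = math.log((a * d) / (b * c))
    se = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)  # standard error of the log odds ratio
    z = NormalDist().inv_cdf(0.5 + level / 2)      # about 1.96 for a 95% interval
    return math.exp(log_or), math.exp(log_or - z * se), math.exp(log_or + z * se)


# Hypothetical study: 30/100 events with the intervention, 40/100 with the comparator.
estimate, lower, upper = odds_ratio_ci(30, 70, 40, 60)
print(f"OR {estimate:.2f}, 95% CI {lower:.2f} to {upper:.2f}")
```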

A 95% confidence interval is often interpreted as indicating a range within which we can be 95% certain that the true effect lies. This statement is a loose interpretation, but is useful as a rough guide. The strictly correct interpretation of a confidence interval is based on the hypothetical notion of considering the results that would be obtained if the study were repeated many times. If a study were repeated infinitely often, and on each occasion a 95% confidence interval calculated, then 95% of these intervals would contain the true effect (see Section 15.3.3 for further explanation).
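The repeated-sampling interpretation can be checked with a small simulation. This is a sketch under simplifying assumptions: each hypothetical study estimates a mean from normally distributed data with a known standard deviation, and all parameter values are invented for illustration.

```python
import random
from statistics import NormalDist


def coverage(true_effect=0.5, sd=1.0, n=50, repeats=2000, level=0.95, seed=1):
    """Proportion of simulated confidence intervals that contain the true effect."""
    rng = random.Random(seed)               # fixed seed so the run is reproducible
    z = NormalDist().inv_cdf(0.5 + level / 2)
    se = sd / n ** 0.5                      # standard error of the mean, sd assumed known
    hits = 0
    for _ in range(repeats):
        estimate = sum(rng.gauss(true_effect, sd) for _ in range(n)) / n
        if estimate - z * se <= true_effect <= estimate + z * se:
            hits += 1
    return hits / repeats


print(f"Proportion of 95% intervals containing the true effect: {coverage():.3f}")
```

With a finite number of repetitions the proportion will be close to, but not exactly, 0.95.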

The width of the confidence interval for an individual study depends to a large extent on the sample size. Larger studies tend to give more precise estimates of effects (and hence have narrower confidence intervals) than smaller studies. For continuous outcomes, precision depends also on the variability in the outcome measurements (i.e. how widely individual results vary between people in the study, measured as the standard deviation); for dichotomous outcomes it depends on the risk of the event (more frequent events allow more precision, and narrower confidence intervals), and for time-to-event outcomes it also depends on the number of events observed. All these quantities are used in computation of the standard errors of effect estimates from which the confidence interval is derived.

The width of a confidence interval for a meta-analysis depends on the precision of the individual study estimates and on the number of studies combined. In addition, for random-effects models, precision will decrease with increasing heterogeneity and confidence intervals will widen correspondingly (see Chapter 10, Section 10.10.4). As more studies are added to a meta-analysis the width of the confidence interval usually decreases. However, if the additional studies increase the heterogeneity in the meta-analysis and a random-effects model is used, it is possible that the confidence interval width will increase.
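The effect of heterogeneity on precision can be sketched with inverse-variance pooling. The example below assumes the DerSimonian and Laird moment estimator for the between-study variance, which is one common choice rather than the only one; the study estimates (log odds ratios) and standard errors are hypothetical.

```python
import math


def pooled(estimates, ses, tau2=0.0):
    """Inverse-variance pooled estimate and its standard error.

    tau2 = 0 gives the fixed-effect result; tau2 > 0 gives a random-effects result.
    """
    weights = [1 / (se ** 2 + tau2) for se in ses]
    estimate = sum(w * y for w, y in zip(weights, estimates)) / sum(weights)
    return estimate, math.sqrt(1 / sum(weights))


def dl_tau2(estimates, ses):
    """DerSimonian and Laird moment estimate of the between-study variance."""
    weights = [1 / se ** 2 for se in ses]
    fixed, _ = pooled(estimates, ses)
    q = sum(w * (y - fixed) ** 2 for w, y in zip(weights, estimates))  # heterogeneity statistic
    c = sum(weights) - sum(w ** 2 for w in weights) / sum(weights)
    return max(0.0, (q - (len(estimates) - 1)) / c)


# Hypothetical log odds ratios and standard errors from four studies.
log_ors = [-0.6, -0.1, 0.2, -0.9]
ses = [0.15, 0.20, 0.18, 0.25]

tau2 = dl_tau2(log_ors, ses)
for label, t2 in (("fixed-effect", 0.0), ("random-effects", tau2)):
    est, se = pooled(log_ors, ses, t2)
    lower, upper = math.exp(est - 1.96 * se), math.exp(est + 1.96 * se)
    print(f"{label}: OR {math.exp(est):.2f}, 95% CI {lower:.2f} to {upper:.2f}")
```

Because these hypothetical studies disagree with one another, the estimated between-study variance is greater than zero and the random-effects interval is wider than the fixed-effect interval.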

Confidence intervals and point estimates have different interpretations in fixed-effect and random-effects models. While the fixed-effect estimate and its confidence interval address the question ‘what is the best (single) estimate of the effect?’, the random-effects estimate assumes there to be a distribution of effects, and the estimate and its confidence interval address the question ‘what is the best estimate of the average effect?’ A confidence interval may be reported for any level of confidence (although they are most commonly reported for 95%, and sometimes 90% or 99%). For example, the odds ratio of 0.80 could be reported with an 80% confidence interval of 0.73 to 0.88; a 90% interval of 0.72 to 0.89; and a 95% interval of 0.70 to 0.92. As the confidence level increases, the confidence interval widens.
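The widening of the interval with the confidence level can be sketched as follows. The standard error of the log odds ratio is back-calculated from the 95% interval quoted above, which is an assumption; because the quoted limits are rounded, the computed 80% and 90% limits need not match the quoted ones exactly.

```python
import math
from statistics import NormalDist


def ratio_ci(estimate, se_log, level):
    """Confidence interval for a ratio measure, computed on the log scale."""
    z = NormalDist().inv_cdf(0.5 + level / 2)
    return estimate * math.exp(-z * se_log), estimate * math.exp(z * se_log)


# Assumption: standard error recovered from the quoted 95% interval of 0.70 to 0.92.
se_log = (math.log(0.92) - math.log(0.70)) / (2 * NormalDist().inv_cdf(0.975))

for level in (0.80, 0.90, 0.95, 0.99):
    lower, upper = ratio_ci(0.80, se_log, level)
    print(f"{level:.0%} CI: {lower:.2f} to {upper:.2f}")
```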

There is logical correspondence between the confidence interval and the P value (see Section 15.3.3). The 95% confidence interval for an effect will exclude the null value (such as an odds ratio of 1.0 or a risk difference of 0) if and only if the test of significance yields a P value of less than 0.05. If the P value is exactly 0.05, then either the upper or lower limit of the 95% confidence interval will be at the null value. Similarly, the 99% confidence interval will exclude the null if and only if the test of significance yields a P value of less than 0.01.
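This correspondence can be illustrated by back-calculating a two-sided P value from a confidence interval. The sketch assumes a ratio measure whose interval is symmetric on the log scale and a Wald-type test based on the normal distribution; the function name is hypothetical, and the intervals are taken from the examples in this section plus one constructed to end exactly at the null.

```python
import math
from statistics import NormalDist


def p_from_ci(lower, upper, null=1.0, level=0.95):
    """Two-sided P value back-calculated from a ratio measure's confidence interval."""
    z_crit = NormalDist().inv_cdf(0.5 + level / 2)
    se = (math.log(upper) - math.log(lower)) / (2 * z_crit)
    log_estimate = (math.log(upper) + math.log(lower)) / 2  # midpoint on the log scale
    z = (log_estimate - math.log(null)) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


for lower, upper in ((0.70, 0.92), (0.60, 1.00), (0.50, 1.10)):
    print(f"95% CI {lower:.2f} to {upper:.2f}: P = {p_from_ci(lower, upper):.3f}")
```

An interval that excludes 1.0 gives a P value below 0.05, an interval with one limit exactly at 1.0 gives a P value of 0.05, and an interval that includes 1.0 gives a P value above 0.05.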

Together, the point estimate and confidence interval provide information to assess the effects of the intervention on the outcome. For example, suppose that we are evaluating an intervention that reduces the risk of an event and we decide that it would be useful only if it reduced the risk of an event from 30% by at least 5 percentage points to 25% (these values will depend on the specific clinical scenario and outcomes, including the anticipated harms). If the meta-analysis yielded an effect estimate of a reduction of 10 percentage points with a tight 95% confidence interval, say, from 7 to 13 percentage points, we would be able to conclude that the intervention was useful since both the point estimate and the entire range of the interval exceed our criterion of a reduction of 5 percentage points for net health benefit. However, if the meta-analysis reported the same risk reduction of 10 percentage points but with a wider interval, say, from 2 to 18 percentage points, although we would still conclude that our best estimate of the intervention effect is that it provides net benefit, we could not be so confident as we still entertain the possibility that the effect could be between 2 and 5 percentage points. If the confidence interval was wider still, and included the null value of a difference of 0 percentage points, we would still consider the possibility that the intervention has no effect on the outcome whatsoever, and would need to be even more sceptical in our conclusions.

Review authors may use the same general approach to conclude that an intervention is not useful. Continuing with the above example where the criterion for an important difference that should be achieved to provide more benefit than harm is a risk difference of 5 percentage points, an effect estimate of 2 percentage points with a 95% confidence interval of 1 to 4 percentage points suggests that the intervention does not provide net health benefit.
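The reasoning in the two preceding paragraphs can be summarized as a comparison of the confidence limits with the decision threshold. The sketch below is illustrative only: the function name and the wording of the returned labels are assumptions, and in practice the judgement also depends on harms, certainty of evidence and other considerations.

```python
def interpret(lower, upper, threshold=5.0):
    """Compare a 95% confidence interval for a risk reduction with a decision threshold.

    All values are risk reductions in percentage points; larger means more benefit.
    """
    if lower >= threshold:
        return "useful: whole interval meets the threshold"
    if upper < threshold:
        return "not useful: whole interval falls short of the threshold"
    if lower <= 0:
        return "uncertain: interval includes no effect"
    return "uncertain: interval spans the threshold"


# Intervals from the worked example, plus one hypothetical interval that includes zero.
for lower, upper in ((7, 13), (2, 18), (-1, 21), (1, 4)):
    print(f"{lower} to {upper} percentage points -> {interpret(lower, upper)}")
```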

15.3.2 P values and statistical significance

A P value is the standard result of a statistical test, and is the probability of obtaining the observed effect (or larger) under a ‘null hypothesis’. In the context of Cochrane Reviews there are two commonly used statistical tests. The first is a test of overall effect (a Z-test), and its null hypothesis is that there is no overall effect of the experimental intervention compared with the comparator on the outcome of interest. The second is the Chi² test for heterogeneity, and its null hypothesis is that there are no differences in the intervention effects across studies.

A P value that is very small indicates that the observed effect is very unlikely to have arisen purely by chance, and therefore provides evidence against the null hypothesis. It has been common practice to interpret a P value by examining whether it is smaller than particular threshold values. In particular, P values less than 0.05 are often reported as ‘statistically significant’, and interpreted as being small enough to justify rejection of the null hypothesis. However, the 0.05 threshold is an arbitrary one that became commonly used in medical and psychological research largely because P values were determined by comparing the test statistic against tabulations of specific percentage points of statistical distributions. If review authors decide to present a P value with the results of a meta-analysis, they should report a precise P value (as calculated by most statistical software), together with the 95% confidence interval. Review authors should not describe results as ‘statistically significant’, ‘not statistically significant’ or ‘non-significant’ or unduly rely on thresholds for P values, but report the confidence interval together with the exact P value (see MECIR Box 15.3.a).

We discuss interpretation of the test for heterogeneity in Chapter 10, Section 10.10.2; the remainder of this section refers mainly to tests for an overall effect. For tests of an overall effect, the computation of P involves both the effect estimate and precision of the effect estimate (driven largely by sample size). As precision increases, the range of plausible effects that could occur by chance is reduced. Correspondingly, the statistical significance of an effect of a particular magnitude will usually be greater (the P value will be smaller) in a larger study than in a smaller study.
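The influence of sample size on the P value can be seen in a small sketch. It assumes a Wald-type Z-test for a risk difference with equal group sizes; the risks and sample sizes are hypothetical and the function name is an assumption.

```python
import math
from statistics import NormalDist


def p_value_risk_difference(risk_exp, risk_comp, n_per_arm):
    """Two-sided P value from a Wald Z-test for a risk difference, equal arm sizes."""
    diff = risk_exp - risk_comp
    se = math.sqrt(risk_exp * (1 - risk_exp) / n_per_arm
                   + risk_comp * (1 - risk_comp) / n_per_arm)
    z = diff / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


# Same observed risks (25% versus 30%) in studies of increasing size.
for n in (100, 400, 1000):
    p = p_value_risk_difference(0.25, 0.30, n)
    print(f"n = {n} per arm: P = {p:.3f}")
```

The observed effect is identical in all three hypothetical studies; only the precision, and therefore the P value, changes.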

P values are commonly misinterpreted in two ways. First, a moderate or large P value (e.g. greater than 0.05) may be misinterpreted as evidence that the intervention has no effect on the outcome. There is an important difference between this statement and the correct interpretation that there is a high probability that the observed effect on the outcome is due to chance alone. To avoid such a misinterpretation, review authors should always examine the effect estimate and its 95% confidence interval.