Data Representation in Computer: Number Systems, Characters, Audio, Image and Video

What is data representation in computer.

A computer uses a fixed number of bits to represent a piece of data which could be a number, a character, image, sound, video, etc. Data representation is the method used internally to represent data in a computer. Let us see how various types of data can be represented in computer memory.

Before discussing data representation of numbers, let us see what a number system is.

Number Systems

Number systems are the technique to represent numbers in the computer system architecture, every value that you are saving or getting into/from computer memory has a defined number system.

The number 289 is pronounced as two hundred and eighty-nine and it consists of the symbols 2, 8, and 9. Similarly, there are other number systems. Each has its own symbols and method for constructing a number.

Let us discuss some of the number systems. Computer architecture supports the following number of systems:

Binary Number System

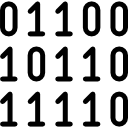

A Binary number system has only two digits that are 0 and 1. Every number (value) represents 0 and 1 in this number system. The base of the binary number system is 2 because it has only two digits.

Octal Number System

Decimal number system.

The decimal number system has only ten (10) digits from 0 to 9. Every number (value) represents with 0,1,2,3,4,5,6, 7,8 and 9 in this number system. The base of decimal number system is 10, because it has only 10 digits.

Hexadecimal Number System

Data representation of characters.

There are different methods to represent characters . Some of them are discussed below:

The code called ASCII (pronounced ‘’.S-key”), which stands for American Standard Code for Information Interchange, uses 7 bits to represent each character in computer memory. The ASCII representation has been adopted as a standard by the U.S. government and is widely accepted.

Since there are exactly 128 unique combinations of 7 bits, this 7-bit code can represent only128 characters. Another version is ASCII-8, also called extended ASCII, which uses 8 bits for each character, can represent 256 different characters.

If ASCII-coded data is to be used in a computer that uses EBCDIC representation, it is necessary to transform ASCII code to EBCDIC code. Similarly, if EBCDIC coded data is to be used in an ASCII computer, EBCDIC code has to be transformed to ASCII.

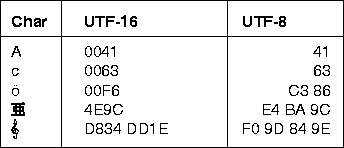

Using 8-bit ASCII we can represent only 256 characters. This cannot represent all characters of written languages of the world and other symbols. Unicode is developed to resolve this problem. It aims to provide a standard character encoding scheme, which is universal and efficient.

Data Representation of Audio, Image and Video

In most cases, we may have to represent and process data other than numbers and characters. This may include audio data, images, and videos. We can see that like numbers and characters, the audio, image, and video data also carry information.

For example, an image is most popularly stored in Joint Picture Experts Group (JPEG ) file format. An image file consists of two parts – header information and image data. Information such as the name of the file, size, modified data, file format, etc. is stored in the header part.

Numerous such techniques are used to achieve compression. Depending on the application, images are stored in various file formats such as bitmap file format (BMP), Tagged Image File Format (TIFF), Graphics Interchange Format (GIF), Portable (Public) Network Graphic (PNG).

Similarly, video is also stored in different files such as AVI (Audio Video Interleave) – a file format designed to store both audio and video data in a standard package that allows synchronous audio with video playback, MP3, JPEG-2, WMV, etc.

FAQs About Data Representation in Computer

What is number system with example, you might also like, what is c++ programming language c++ character set, c++ tokens, what is artificial intelligence functions, 6 benefits, applications of ai, what is microprocessor evolution of microprocessor, types, features, 10 evolution of computing machine, history, what are decision making statements in c types, what are c++ keywords set of 59 keywords in c ++, what is cloud computing classification, characteristics, principles, types of cloud providers, generations of computer first to fifth, classification, characteristics, features, examples, types of computer software: systems software, application software, what are operators in c different types of operators in c, types of storage devices, advantages, examples, 10 types of computers | history of computers, advantages, what is operating system functions, types, types of user interface, advantages and disadvantages of operating system, advantages and disadvantages of flowcharts, what is flowchart in programming symbols, advantages, preparation, what is problem solving algorithm, steps, representation, what are data types in c++ types, data and information: definition, characteristics, types, channels, approaches, what are expressions in c types, leave a reply cancel reply.

- Computer Concepts Tutorial

- Computer Concepts - Home

- Introduction to Computer

- Introduction to GUI based OS

- Elements of Word Processing

- Spread Sheet

- Introduction to Internet, WWW, Browsers

- Communication & Collaboration

- Application of Presentations

- Application of Digital Financial Services

- Computer Concepts Resources

- Computer Concepts - Quick Guide

- Computer Concepts - Useful Resources

- Computer Concepts - Discussion

- Selected Reading

- UPSC IAS Exams Notes

- Developer's Best Practices

- Questions and Answers

- Effective Resume Writing

- HR Interview Questions

- Computer Glossary

Representation of Data/Information

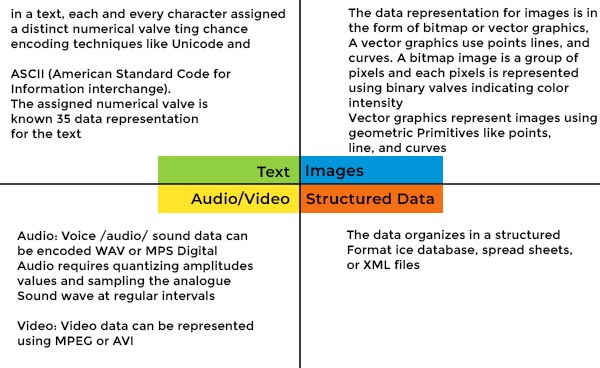

Computers do not understand human language; they understand data within the prescribed form. Data representation is a method to represent data and encode it in a computer system. Generally, a user inputs numbers, text, images, audio, and video etc types of data to process but the computer converts this data to machine language first and then processes it.

Some Common Data Representation Methods Include

Data representation plays a vital role in storing, process, and data communication. A correct and effective data representation method impacts data processing performance and system compatibility.

Computers represent data in the following forms

Number system.

A computer system considers numbers as data; it includes integers, decimals, and complex numbers. All the inputted numbers are represented in binary formats like 0 and 1. A number system is categorized into four types −

- Binary − A binary number system is a base of all the numbers considered for data representation in the digital system. A binary number system consists of only two values, either 0 or 1; so its base is 2. It can be represented to the external world as (10110010) 2 . A computer system uses binary digits (0s and 1s) to represent data internally.

- Octal − The octal number system represents values in 8 digits. It consists of digits 0,12,3,4,5,6, and 7; so its base is 8. It can be represented to the external world as (324017) 8 .

- Decimal − Decimal number system represents values in 10 digits. It consists of digits 0, 12, 3, 4, 5, 6, 7, 8, and 9; so its base is 10. It can be represented to the external world as (875629) 10 .

The below-mentioned table below summarises the data representation of the number system along with their Base and digits.

| Number System | ||

|---|---|---|

| System | Base | Digits |

| Binary | 2 | 0 1 |

| Octal | 8 | 0 1 2 3 4 5 6 7 |

| Decimal | 10 | 0 1 2 3 4 5 6 7 8 9 |

| Hexadecimal | 16 | 0 1 2 3 4 5 6 7 8 9 A B C D E F |

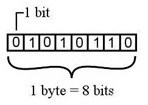

Bits and Bytes

A bit is the smallest data unit that a computer uses in computation; all the computation tasks done by the computer systems are based on bits. A bit represents a binary digit in terms of 0 or 1. The computer usually uses bits in groups. It's the basic unit of information storage and communication in digital computing.

A group of eight bits is called a byte. Half of a byte is called a nibble; it means a group of four bits is called a nibble. A byte is a fundamental addressable unit of computer memory and storage. It can represent a single character, such as a letter, number, or symbol using encoding methods such as ASCII and Unicode.

Bytes are used to determine file sizes, storage capacity, and available memory space. A kilobyte (KB) is equal to 1,024 bytes, a megabyte (MB) is equal to 1,024 KB, and a gigabyte (GB) is equal to 1,024 MB. File size is roughly measured in KBs and availability of memory space in MBs and GBs.

The following table shows the conversion of Bits and Bytes −

| Byte Value | Bit Value |

|---|---|

| 1 Byte | 8 Bits |

| 1024 Bytes | 1 Kilobyte |

| 1024 Kilobytes | 1 Megabyte |

| 1024 Megabytes | 1 Gigabyte |

| 1024 Gigabytes | 1 Terabyte |

| 1024 Terabytes | 1 Petabyte |

| 1024 Petabytes | 1 Exabyte |

| 1024 Exabytes | 1 Zettabyte |

| 1024 Zettabytes | 1 Yottabyte |

| 1024 Yottabytes | 1 Brontobyte |

| 1024 Brontobytes | 1 Geopbytes |

A Text Code is a static code that allows a user to insert text that others will view when they scan it. It includes alphabets, punctuation marks and other symbols. Some of the most commonly used text code systems are −

Extended ASCII

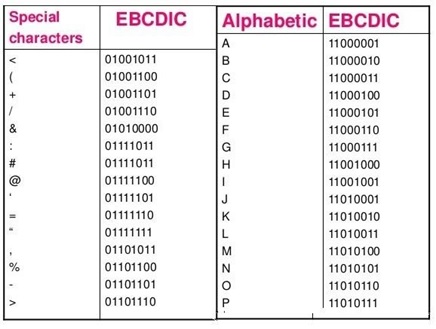

EBCDIC stands for Extended Binary Coded Decimal Interchange Code. IBM developed EBCDIC in the early 1960s and used it in their mainframe systems like System/360 and its successors. To meet commercial and data processing demands, it supports letters, numbers, punctuation marks, and special symbols. Character codes distinguish EBCDIC from other character encoding methods like ASCII. Data encoded in EBCDIC or ASCII may not be compatible with computers; to make them compatible, we need to convert with systems compatibility. EBCDIC encodes each character as an 8-bit binary code and defines 256 symbols. The below-mentioned table depicts different characters along with their EBCDIC code.

ASCII stands for American Standard Code for Information Interchange. It is an 8-bit code that specifies character values from 0 to 127. ASCII is a standard for the Character Encoding of Numbers that assigns numerical values to represent characters, such as letters, numbers, exclamation marks and control characters used in computers and communication equipment that are using data.

ASCII originally defined 128 characters, encoded with 7 bits, allowing for 2^7 (128) potential characters. The ASCII standard specifies characters for the English alphabet (uppercase and lowercase), numerals from 0 to 9, punctuation marks, and control characters for formatting and control tasks such as line feed, carriage return, and tab.

| ASCII Tabular column | ||

|---|---|---|

| ASCII Code | Decimal Value | Character |

| 0000 0000 | 0 | Null prompt |

| 0000 0001 | 1 | Start of heading |

| 0000 0010 | 2 | Start of text |

| 0000 0011 | 3 | End of text |

| 0000 0100 | 4 | End of transmit |

| 0000 0101 | 5 | Enquiry |

| 0000 0110 | 6 | Acknowledge |

| 0000 0111 | 7 | Audible bell |

| 0000 1000 | 8 | Backspace |

| 0000 1001 | 9 | Horizontal tab |

| 0000 1010 | 10 | Line Feed |

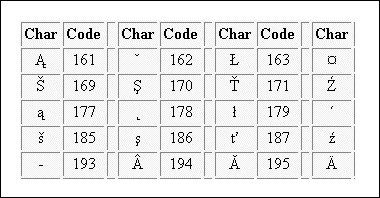

Extended American Standard Code for Information Interchange is an 8-bit code that specifies character values from 128 to 255. Extended ASCII encompasses different character encoding normal ASCII character set, consisting of 128 characters encoded in 7 bits, some additional characters that utilise full 8 bits of a byte; there are a total of 256 potential characters.

Different extended ASCII exist, each introducing more characters beyond the conventional ASCII set. These additional characters may encompass symbols, letters, and special characters to a specific language or location.

Extended ASCII Tabular column

It is a worldwide character standard that uses 4 to 32 bits to represent letters, numbers and symbols. Unicode is a standard character encoding which is specifically designed to provide a consistent way to represent text in nearly all of the world's writing systems. Every character is assigned a unique numeric code, program, or language. Unicode offers a wide variety of characters, including alphabets, ideographs, symbols, and emojis.

Unicode Tabular Column

Data representation

Computers use binary - the digits 0 and 1 - to store data. A binary digit, or bit, is the smallest unit of data in computing. It is represented by a 0 or a 1. Binary numbers are made up of binary digits (bits), eg the binary number 1001. The circuits in a computer's processor are made up of billions of transistors. A transistor is a tiny switch that is activated by the electronic signals it receives. The digits 1 and 0 used in binary reflect the on and off states of a transistor. Computer programs are sets of instructions. Each instruction is translated into machine code - simple binary codes that activate the CPU. Programmers write computer code and this is converted by a translator into binary instructions that the processor can execute. All software, music, documents, and any other information that is processed by a computer, is also stored using binary. [1]

To include strings, integers, characters and colours. This should include considering the space taken by data, for instance the relation between the hexadecimal representation of colours and the number of colours available.

This video is superb place to understand this topic

- 1 How a file is stored on a computer

- 2 How an image is stored in a computer

- 3 The way in which data is represented in the computer.

- 6 Standards

- 7 References

How a file is stored on a computer [ edit ]

How an image is stored in a computer [ edit ]

The way in which data is represented in the computer. [ edit ].

To include strings, integers, characters and colours. This should include considering the space taken by data, for instance the relation between the hexadecimal representation of colours and the number of colours available [3] .

This helpful material is used with gratitude from a computer science wiki under a Creative Commons Attribution 3.0 License [4]

Sound [ edit ]

- Let's look at an oscilloscope

- The BBC has an excellent article on how computers represent sound

See Also [ edit ]

Standards [ edit ].

- Outline the way in which data is represented in the computer.

References [ edit ]

- ↑ http://www.bbc.co.uk/education/guides/zwsbwmn/revision/1

- ↑ https://ujjwalkarn.me/2016/08/11/intuitive-explanation-convnets/

- ↑ IBO Computer Science Guide, First exams 2014

- ↑ https://compsci2014.wikispaces.com/2.1.10+Outline+the+way+in+which+data+is+represented+in+the+computer

A unit of abstract mathematical system subject to the laws of arithmetic.

A natural number, a negative of a natural number, or zero.

Give a brief account.

- Computer organization

- Very important ideas in computer science

Teach Computer Science

Data Representation

Topics include binary, decimal, and hexadecimal numbers, and the conversions between them.

Computational Thinking

We may think that computers “think” and that they outsmart humans “just like that”. However that’s not the case, computers do exactly what we humans tell them to do, or better said, what we program them to do. Once programmed a computer can only execute problems and produce solutions more efficiently than humans. Computational thinking …

For Data Units, candidates should be able to: define the terms bit, nibble, byte, kilobyte, megabyte, gigabyte, terabyte understand that data needs to be converted into a binary format to be processed by a computer. Data units in computer systems Bit This is a single unit of memory and can only store 2 possible binary …

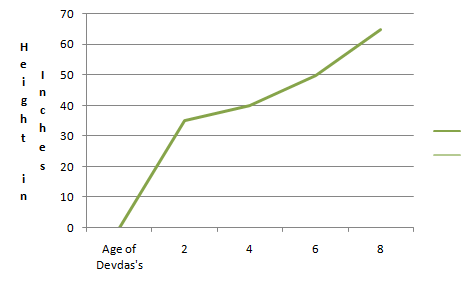

Image Representation

For Image Representation, candidates should be able to: explain the representation of an image as a series of pixels represented in binary explain the need for metadata to be included in the file such as height, width and colour depth discuss the effect of colour depth and resolution on the size of an image file. …

Sound Representation

For Sound Representation, candidates should be able to: explain how sound can be sampled and stored in digital form explain how sampling intervals and other considerations affect the size of a sound file and the quality of its playback. How can sound be sampled and stored in digital form? A microphone converts sound waves into …

Number Systems

For Number Systems, candidates should be able to: convert positive denary whole numbers (0-255) into 8-bit binary numbers and vice versa add two 8-bit binary integers and explain overflow errors which may occur convert positive denary whole numbers (0-255) into 2-digit hexadecimal numbers and vice versa convert between binary and hexadecimal equivalents of the same …

Character Sets

Candidates should be able to: explain the use of binary codes to represent characters explain the term character set describe with examples (for example ASCII and Unicode) the relationship between the number of bits per character in a character set and the number of characters which can be represented. How are binary codes used to …

Computer Instructions

In regards to computer instructions, candidates should be able to: explain how instructions are coded as bit patterns explain how the computer distinguishes between instructions and data. Computer Instructions: How are program instructions coded? Machine code instructions are binary numbers and are coded as bit patterns, for example, a 16 bit machine code instruction could …

Converting Decimal to Binary

Converting Decimal to Binary: The Decimal Numbering System Decimal is a base 10 numbering Binary Numbering System Binary is a base 2 numbering system that is made up of two numbers: 0 and 1. 0 means OFF and 1 means ON. The computer’s central processing unit (CPU) only recognizes these two states – ON and …

Converting Hexadecimal to Decimal

Hexadecimal Numbering System Hexadecimal is a base 16 numbering system that is made up of 16 digits: 0 – 9 and six more, which is A through F. Uses of Hexadecimal The hexadecimal numbering system is often used by programmers to simplify the binary numbering system. Since 16 is equivalent to 24, there is a …

Converting Hexadecimal to Binary

Converting Hexadecimal to Binary Hexadecimal Numbering System Hexadecimal is a base 16 numbering system which is made up of 16 digits: 0 – 9 and six more, which is A through F. Uses of Hexadecimal Hexadecimal numbering system is often used by programmers to simplify the binary numbering system. Since 16 is equivalent to 24, …

Converting Decimal to Hexadecimal

Converting Decimal to Hexadecimal: The Decimal Numbering System Decimal is a base 10 numbering system that is made up of 10 numbers: 0, 1, 2, 3, 4, 5, 6, 7, 8, and 9. It is the most commonly used numbering system. The reason behind that is convenience. We have 10 fingers that we use for …

Uses of Hexadecimal

Hexadecimal Numbering System Hexadecimal is a base 16 numbering system that is made up of 16 digits: 0 – 9 and six more, which is A through F.The table below shows how the hexadecimal system works and its equivalent decimal number: Hexadecimal Decimal Hexadecimal Decimal 0 0 11 = (1 x 16) + …

Converting Binary to Hexadecimal

Binary Numbering System Binary is a base 2 numbering system which is made up of two numbers: 0 and 1. 0 means OFF and 1 means ON. The computer’s central processing unit (CPU) only recognizes these two states – ON and OFF. It is the foundation for all binary code, which is used in computer …

Converting Binary to Decimal

Converting Binary to Decimal: The Binary Numbering System Binary is a base 2 numbering system that is made up of two numbers: 0 and 1. 0 means OFF and 1 means ON. The computer’s central processing unit (CPU) only recognizes these two states – ON and OFF. It is the foundation for all binary code, …

Bitmap Image and Colour Depth Quiz

Further Readings: Bitmap Color depth

Colour Depth Gap Fill Exercise

Further Readings: Color depth

Data Units Gap Fill Exercise

Further Readings: Units of information

Data Units Multi-Choice Quiz

Colour mapping and direct colour.

Pupil Resources – EXTENSION TOPIC What is the difference between Colour Mapping and Direct Colour? Colour mapping With low colour depths (up to 8-bit) it is practical to map every colour to a binary code. 1-bit colour mapping – (2 colours) monochrome, often black and white. 2-bit colour mapping – (4 colours) CGA – used …

Instructions

Candidates should be able to: explain how instructions are coded as bit patterns explain how the computer distinguishes between instructions and data. How are program instructions coded? Machine code instructions are binary numbers and are coded as bit patterns, for example, a 16-bit machine code instruction could be coded as 001010101101001011. In machine code, the …

Sound – Quality, Size & Storing Solutions

Candidates should be able to: How can sound be sampled and stored in digital form? A microphone converts sound waves into voltage changes. If a microphone is plugged into a sound card then the voltage can be sampled at regular intervals (the sample rate) and each value converted into a binary number. This digitising of …

- Data representation

Bytes of memory

- Abstract machine

Unsigned integer representation

Signed integer representation, pointer representation, array representation, compiler layout, array access performance, collection representation.

- Consequences of size and alignment rules

Uninitialized objects

Pointer arithmetic, undefined behavior.

- Computer arithmetic

Arena allocation

This course is about learning how computers work, from the perspective of systems software: what makes programs work fast or slow, and how properties of the machines we program impact the programs we write. We want to communicate ideas, tools, and an experimental approach.

The course divides into six units:

- Assembly & machine programming

- Storage & caching

- Kernel programming

- Process management

- Concurrency

The first unit, data representation , is all about how different forms of data can be represented in terms the computer can understand.

Computer memory is kind of like a Lite Brite.

A Lite Brite is big black backlit pegboard coupled with a supply of colored pegs, in a limited set of colors. You can plug in the pegs to make all kinds of designs. A computer’s memory is like a vast pegboard where each slot holds one of 256 different colors. The colors are numbered 0 through 255, so each slot holds one byte . (A byte is a number between 0 and 255, inclusive.)

A slot of computer memory is identified by its address . On a computer with M bytes of memory, and therefore M slots, you can think of the address as a number between 0 and M −1. My laptop has 16 gibibytes of memory, so M = 16×2 30 = 2 34 = 17,179,869,184 = 0x4'0000'0000 —a very large number!

The problem of data representation is the problem of representing all the concepts we might want to use in programming—integers, fractions, real numbers, sets, pictures, texts, buildings, animal species, relationships—using the limited medium of addresses and bytes.

Powers of ten and powers of two. Digital computers love the number two and all powers of two. The electronics of digital computers are based on the bit , the smallest unit of storage, which a base-two digit: either 0 or 1. More complicated objects are represented by collections of bits. This choice has many scale and error-correction advantages. It also refracts upwards to larger choices, and even into terminology. Memory chips, for example, have capacities based on large powers of two, such as 2 30 bytes. Since 2 10 = 1024 is pretty close to 1,000, 2 20 = 1,048,576 is pretty close to a million, and 2 30 = 1,073,741,824 is pretty close to a billion, it’s common to refer to 2 30 bytes of memory as “a giga byte,” even though that actually means 10 9 = 1,000,000,000 bytes. But for greater precision, there are terms that explicitly signal the use of powers of two. 2 30 is a gibibyte : the “-bi-” component means “binary.”

Virtual memory. Modern computers actually abstract their memory spaces using a technique called virtual memory . The lowest-level kind of address, called a physical address , really does take on values between 0 and M −1. However, even on a 16GiB machine like my laptop, the addresses we see in programs can take on values like 0x7ffe'ea2c'aa67 that are much larger than M −1 = 0x3'ffff'ffff . The addresses used in programs are called virtual addresses . They’re incredibly useful for protection: since different running programs have logically independent address spaces, it’s much less likely that a bug in one program will crash the whole machine. We’ll learn about virtual memory in much more depth in the kernel unit ; the distinction between virtual and physical addresses is not as critical for data representation.

Most programming languages prevent their users from directly accessing memory. But not C and C++! These languages let you access any byte of memory with a valid address. This is powerful; it is also very dangerous. But it lets us get a hands-on view of how computers really work.

C++ programs accomplish their work by constructing, examining, and modifying objects . An object is a region of data storage that contains a value, such as the integer 12. (The standard specifically says “a region of data storage in the execution environment, the contents of which can represent values”.) Memory is called “memory” because it remembers object values.

In this unit, we often use functions called hexdump to examine memory. These functions are defined in hexdump.cc . hexdump_object(x) prints out the bytes of memory that comprise an object named x , while hexdump(ptr, size) prints out the size bytes of memory starting at a pointer ptr .

For example, in datarep1/add.cc , we might use hexdump_object to examine the memory used to represent some integers:

This display reports that a , b , and c are each four bytes long; that a , b , and c are located at different, nonoverlapping addresses (the long hex number in the first column); and shows us how the numbers 1, 2, and 3 are represented in terms of bytes. (More on that later.)

The compiler, hardware, and standard together define how objects of different types map to bytes. Each object uses a contiguous range of addresses (and thus bytes), and objects never overlap (objects that are active simultaneously are always stored in distinct address ranges).

Since C and C++ are designed to help software interface with hardware devices, their standards are transparent about how objects are stored. A C++ program can ask how big an object is using the sizeof keyword. sizeof(T) returns the number of bytes in the representation of an object of type T , and sizeof(x) returns the size of object x . The result of sizeof is a value of type size_t , which is an unsigned integer type large enough to hold any representable size. On 64-bit architectures, such as x86-64 (our focus in this course), size_t can hold numbers between 0 and 2 64 –1.

Qualitatively different objects may have the same data representation. For example, the following three objects have the same data representation on x86-64, which you can verify using hexdump :

In C and C++, you can’t reliably tell the type of an object by looking at the contents of its memory. That’s why tricks like our different addf*.cc functions work.

An object can have many names. For example, here, local and *ptr refer to the same object:

The different names for an object are sometimes called aliases .

There are five objects here:

- ch1 , a global variable

- ch2 , a constant (non-modifiable) global variable

- ch3 , a local variable

- ch4 , a local variable

- the anonymous storage allocated by new char and accessed by *ch4

Each object has a lifetime , which is called storage duration by the standard. There are three different kinds of lifetime.

- static lifetime: The object lasts as long as the program runs. ( ch1 , ch2 )

- automatic lifetime: The compiler allocates and destroys the object automatically as the program runs, based on the object’s scope (the region of the program in which it is meaningful). ( ch3 , ch4 )

- dynamic lifetime: The programmer allocates and destroys the object explicitly. ( *allocated_ch )

Objects with dynamic lifetime aren’t easy to use correctly. Dynamic lifetime causes many serious problems in C programs, including memory leaks, use-after-free, double-free, and so forth. Those serious problems cause undefined behavior and play a “disastrously central role” in “our ongoing computer security nightmare” . But dynamic lifetime is critically important. Only with dynamic lifetime can you construct an object whose size isn’t known at compile time, or construct an object that outlives the function that created it.

The compiler and operating system work together to put objects at different addresses. A program’s address space (which is the range of addresses accessible to a program) divides into regions called segments . Objects with different lifetimes are placed into different segments. The most important segments are:

- Code (also known as text or read-only data ). Contains instructions and constant global objects. Unmodifiable; static lifetime.

- Data . Contains non-constant global objects. Modifiable; static lifetime.

- Heap . Modifiable; dynamic lifetime.

- Stack . Modifiable; automatic lifetime.

The compiler decides on a segment for each object based on its lifetime. The final compiler phase, which is called the linker , then groups all the program’s objects by segment (so, for instance, global variables from different compiler runs are grouped together into a single segment). Finally, when a program runs, the operating system loads the segments into memory. (The stack and heap segments grow on demand.)

We can use a program to investigate where objects with different lifetimes are stored. (See cs61-lectures/datarep2/mexplore0.cc .) This shows address ranges like this:

Object declaration | Lifetime | Segment | Example address range |

|---|---|---|---|

Constant global | Static | Code (or Text) | 0x40'0000 (≈1 × 2 ) |

Global | Static | Data | 0x60'0000 (≈1.5 × 2 ) |

Local | Automatic | Stack | 0x7fff'448d'0000 (≈2 = 2 × 2 ) |

Anonymous, returned by | Dynamic | Heap | 0x1a0'0000 (≈8 × 2 ) |

Constant global data and global data have the same lifetime, but are stored in different segments. The operating system uses different segments so it can prevent the program from modifying constants. It marks the code segment, which contains functions (instructions) and constant global data, as read-only, and any attempt to modify code-segment memory causes a crash (a “Segmentation violation”).

An executable is normally at least as big as the static-lifetime data (the code and data segments together). Since all that data must be in memory for the entire lifetime of the program, it’s written to disk and then loaded by the OS before the program starts running. There is an exception, however: the “bss” segment is used to hold modifiable static-lifetime data with initial value zero. Such data is common, since all static-lifetime data is initialized to zero unless otherwise specified in the program text. Rather than storing a bunch of zeros in the object files and executable, the compiler and linker simply track the location and size of all zero-initialized global data. The operating system sets this memory to zero during the program load process. Clearing memory is faster than loading data from disk, so this optimization saves both time (the program loads faster) and space (the executable is smaller).

Abstract machine and hardware

Programming involves turning an idea into hardware instructions. This transformation happens in multiple steps, some you control and some controlled by other programs.

First you have an idea , like “I want to make a flappy bird iPhone game.” The computer can’t (yet) understand that idea. So you transform the idea into a program , written in some programming language . This process is called programming.

A C++ program actually runs on an abstract machine . The behavior of this machine is defined by the C++ standard , a technical document. This document is supposed to be so precisely written as to have an exact mathematical meaning, defining exactly how every C++ program behaves. But the document can’t run programs!

C++ programs run on hardware (mostly), and the hardware determines what behavior we see. Mapping abstract machine behavior to instructions on real hardware is the task of the C++ compiler (and the standard library and operating system). A C++ compiler is correct if and only if it translates each correct program to instructions that simulate the expected behavior of the abstract machine.

This same rough series of transformations happens for any programming language, although some languages use interpreters rather than compilers.

A bit is the fundamental unit of digital information: it’s either 0 or 1.

C++ manages memory in units of bytes —8 contiguous bits that together can represent numbers between 0 and 255. C’s unit for a byte is char : the abstract machine says a byte is stored in char . That means an unsigned char holds values in the inclusive range [0, 255].

The C++ standard actually doesn’t require that a byte hold 8 bits, and on some crazy machines from decades ago , bytes could hold nine bits! (!?)

But larger numbers, such as 258, don’t fit in a single byte. To represent such numbers, we must use multiple bytes. The abstract machine doesn’t specify exactly how this is done—it’s the compiler and hardware’s job to implement a choice. But modern computers always use place–value notation , just like in decimal numbers. In decimal, the number 258 is written with three digits, the meanings of which are determined both by the digit and by their place in the overall number:

\[ 258 = 2\times10^2 + 5\times10^1 + 8\times10^0 \]

The computer uses base 256 instead of base 10. Two adjacent bytes can represent numbers between 0 and \(255\times256+255 = 65535 = 2^{16}-1\) , inclusive. A number larger than this would take three or more bytes.

\[ 258 = 1\times256^1 + 2\times256^0 \]

On x86-64, the ones place, the least significant byte, is on the left, at the lowest address in the contiguous two-byte range used to represent the integer. This is the opposite of how decimal numbers are written: decimal numbers put the most significant digit on the left. The representation choice of putting the least-significant byte in the lowest address is called little-endian representation. x86-64 uses little-endian representation.

Some computers actually store multi-byte integers the other way, with the most significant byte stored in the lowest address; that’s called big-endian representation. The Internet’s fundamental protocols, such as IP and TCP, also use big-endian order for multi-byte integers, so big-endian is also called “network” byte order.

The C++ standard defines five fundamental unsigned integer types, along with relationships among their sizes. Here they are, along with their actual sizes and ranges on x86-64:

Type | Size | Size | Range |

|---|---|---|---|

| 1 | 1 | [0, 255] = [0, 2 −1] |

| ≥1 | 2 | [0, 65,535] = [0, 2 −1] |

| ≥ | 4 | [0, 4,294,967,295] = [0, 2 −1] |

| ≥ | 8 | [0, 18,446,744,073,709,551,615] = [0, 2 −1] |

| ≥ | 8 | [0, 18,446,744,073,709,551,615] = [0, 2 −1] |

Other architectures and operating systems implement different ranges for these types. For instance, on IA32 machines like Intel’s Pentium (the 32-bit processors that predated x86-64), sizeof(long) was 4, not 8.

Note that all values of a smaller unsigned integer type can fit in any larger unsigned integer type. When a value of a larger unsigned integer type is placed in a smaller unsigned integer object, however, not every value fits; for instance, the unsigned short value 258 doesn’t fit in an unsigned char x . When this occurs, the C++ abstract machine requires that the smaller object’s value equals the least -significant bits of the larger value (so x will equal 2).

In addition to these types, whose sizes can vary, C++ has integer types whose sizes are fixed. uint8_t , uint16_t , uint32_t , and uint64_t define 8-bit, 16-bit, 32-bit, and 64-bit unsigned integers, respectively; on x86-64, these correspond to unsigned char , unsigned short , unsigned int , and unsigned long .

This general procedure is used to represent a multi-byte integer in memory.

- Write the large integer in hexadecimal format, including all leading zeros required by the type size. For example, the unsigned value 65534 would be written 0x0000FFFE . There will be twice as many hexadecimal digits as sizeof(TYPE) .

- Divide the integer into its component bytes, which are its digits in base 256. In our example, they are, from most to least significant, 0x00, 0x00, 0xFF, and 0xFE.

In little-endian representation, the bytes are stored in memory from least to most significant. If our example was stored at address 0x30, we would have:

In big-endian representation, the bytes are stored in the reverse order.

Computers are often fastest at dealing with fixed-length numbers, rather than variable-length numbers, and processor internals are organized around a fixed word size . A word is the natural unit of data used by a processor design . In most modern processors, this natural unit is 8 bytes or 64 bits , because this is the power-of-two number of bytes big enough to hold those processors’ memory addresses. Many older processors could access less memory and had correspondingly smaller word sizes, such as 4 bytes (32 bits).

The best representation for signed integers—and the choice made by x86-64, and by the C++20 abstract machine—is two’s complement . Two’s complement representation is based on this principle: Addition and subtraction of signed integers shall use the same instructions as addition and subtraction of unsigned integers.

To see what this means, let’s think about what -x should mean when x is an unsigned integer. Wait, negative unsigned?! This isn’t an oxymoron because C++ uses modular arithmetic for unsigned integers: the result of an arithmetic operation on unsigned values is always taken modulo 2 B , where B is the number of bits in the unsigned value type. Thus, on x86-64,

-x is simply the number that, when added to x , yields 0 (mod 2 B ). For example, when unsigned x = 0xFFFFFFFFU , then -x == 1U , since x + -x equals zero (mod 2 32 ).

To obtain -x , we flip all the bits in x (an operation written ~x ) and then add 1. To see why, consider the bit representations. What is x + (~x + 1) ? Well, (~x) i (the i th bit of ~x ) is 1 whenever x i is 0, and vice versa. That means that every bit of x + ~x is 1 (there are no carries), and x + ~x is the largest unsigned integer, with value 2 B -1. If we add 1 to this, we get 2 B . Which is 0 (mod 2 B )! The highest “carry” bit is dropped, leaving zero.

Two’s complement arithmetic uses half of the unsigned integer representations for negative numbers. A two’s-complement signed integer with B bits has the following values:

- If the most-significant bit is 1, the represented number is negative. Specifically, the represented number is – (~x + 1) , where the outer negative sign is mathematical negation (not computer arithmetic).

- If every bit is 0, the represented number is 0.

- If the most-significant but is 0 but some other bit is 1, the represented number is positive.

The most significant bit is also called the sign bit , because if it is 1, then the represented value depends on the signedness of the type (and that value is negative for signed types).

Another way to think about two’s-complement is that, for B -bit integers, the most-significant bit has place value 2 B –1 in unsigned arithmetic and negative 2 B –1 in signed arithmetic. All other bits have the same place values in both kinds of arithmetic.

The two’s-complement bit pattern for x + y is the same whether x and y are considered as signed or unsigned values. For example, in 4-bit arithmetic, 5 has representation 0b0101 , while the representation 0b1100 represents 12 if unsigned and –4 if signed ( ~0b1100 + 1 = 0b0011 + 1 == 4). Let’s add those bit patterns and see what we get:

Note that this is the right answer for both signed and unsigned arithmetic : 5 + 12 = 17 = 1 (mod 16), and 5 + -4 = 1.

Subtraction and multiplication also produce the same results for unsigned arithmetic and signed two’s-complement arithmetic. (For instance, 5 * 12 = 60 = 12 (mod 16), and 5 * -4 = -20 = -4 (mod 16).) This is not true of division. (Consider dividing the 4-bit representation 0b1110 by 2. In signed arithmetic, 0b1110 represents -2, so 0b1110/2 == 0b1111 (-1); but in unsigned arithmetic, 0b1110 is 14, so 0b1110/2 == 0b0111 (7).) And, of course, it is not true of comparison. In signed 4-bit arithmetic, 0b1110 < 0 , but in unsigned 4-bit arithmetic, 0b1110 > 0 . This means that a C compiler for a two’s-complement machine can use a single add instruction for either signed or unsigned numbers, but it must generate different instruction patterns for signed and unsigned division (or less-than, or greater-than).

There are a couple quirks with C signed arithmetic. First, in two’s complement, there are more negative numbers than positive numbers. A representation with sign bit is 1, but every other bit 0, has no positive counterpart at the same bit width: for this number, -x == x . (In 4-bit arithmetic, -0b1000 == ~0b1000 + 1 == 0b0111 + 1 == 0b1000 .) Second, and far worse, is that arithmetic overflow on signed integers is undefined behavior .

Type | Size | Size | Range |

|---|---|---|---|

| 1 | 1 | [−128, 127] = [−2 , 2 −1] |

| = | 2 | [−32,768, 32,767] = [−2 , 2 −1] |

| = | 4 | [−2,147,483,648, 2,147,483,647] = [−2 , 2 −1] |

| = | 8 | [−9,223,372,036,854,775,808, 9,223,372,036,854,775,807] = [−2 , 2 −1] |

| = | 8 | [−9,223,372,036,854,775,808, 9,223,372,036,854,775,807] = [−2 , 2 −1] |

The C++ abstract machine requires that signed integers have the same sizes as their unsigned counterparts.

We distinguish pointers , which are concepts in the C abstract machine, from addresses , which are hardware concepts. A pointer combines an address and a type.

The memory representation of a pointer is the same as the representation of its address value. The size of that integer is the machine’s word size; for example, on x86-64, a pointer occupies 8 bytes, and a pointer to an object located at address 0x400abc would be stored as:

The C++ abstract machine defines an unsigned integer type uintptr_t that can hold any address. (You have to #include <inttypes.h> or <cinttypes> to get the definition.) On most machines, including x86-64, uintptr_t is the same as unsigned long . Cast a pointer to an integer address value with syntax like (uintptr_t) ptr ; cast back to a pointer with syntax like (T*) addr . Casts between pointer types and uintptr_t are information preserving, so this assertion will never fail:

Since it is a 64-bit architecture, the size of an x86-64 address is 64 bits (8 bytes). That’s also the size of x86-64 pointers.

To represent an array of integers, C++ and C allocate the integers next to each other in memory, in sequential addresses, with no gaps or overlaps. Here, we put the integers 0, 1, and 258 next to each other, starting at address 1008:

Say that you have an array of N integers, and you access each of those integers in order, accessing each integer exactly once. Does the order matter?

Computer memory is random-access memory (RAM), which means that a program can access any bytes of memory in any order—it’s not, for example, required to read memory in ascending order by address. But if we run experiments, we can see that even in RAM, different access orders have very different performance characteristics.

Our arraysum program sums up all the integers in an array of N integers, using an access order based on its arguments, and prints the resulting delay. Here’s the result of a couple experiments on accessing 10,000,000 items in three orders, “up” order (sequential: elements 0, 1, 2, 3, …), “down” order (reverse sequential: N , N −1, N −2, …), and “random” order (as it sounds).

| order | trial 1 | trial 2 | trial 3 |

|---|---|---|---|

| , up | 8.9ms | 7.9ms | 7.4ms |

| , down | 9.2ms | 8.9ms | 10.6ms |

| , random | 316.8ms | 352.0ms | 360.8ms |

Wow! Down order is just a bit slower than up, but random order seems about 40 times slower. Why?

Random order is defeating many of the internal architectural optimizations that make memory access fast on modern machines. Sequential order, since it’s more predictable, is much easier to optimize.

Foreshadowing. This part of the lecture is a teaser for the Storage unit, where we cover access patterns and caching, including the processor caches that explain this phenomenon, in much more depth.

The C++ programming language offers several collection mechanisms for grouping subobjects together into new kinds of object. The collections are arrays, structs, and unions. (Classes are a kind of struct. All library types, such as vectors, lists, and hash tables, use combinations of these collection types.) The abstract machine defines how subobjects are laid out inside a collection. This is important, because it lets C/C++ programs exchange messages with hardware and even with programs written in other languages: messages can be exchanged only when both parties agree on layout.

Array layout in C++ is particularly simple: The objects in an array are laid out sequentially in memory, with no gaps or overlaps. Assume a declaration like T x[N] , where x is an array of N objects of type T , and say that the address of x is a . Then the address of element x[i] equals a + i * sizeof(T) , and sizeof(a) == N * sizeof(T) .

Sidebar: Vector representation

The C++ library type std::vector defines an array that can grow and shrink. For instance, this function creates a vector containing the numbers 0 up to N in sequence:

Here, v is an object with automatic lifetime. This means its size (in the sizeof sense) is fixed at compile time. Remember that the sizes of static- and automatic-lifetime objects must be known at compile time; only dynamic-lifetime objects can have varying size based on runtime parameters. So where and how are v ’s contents stored?

The C++ abstract machine requires that v ’s elements are stored in an array in memory. (The v.data() method returns a pointer to the first element of the array.) But it does not define std::vector ’s layout otherwise, and C++ library designers can choose different layouts based on their needs. We found these to hold for the std::vector in our library:

sizeof(v) == 24 for any vector of any type, and the address of v is a stack address (i.e., v is located in the stack segment).

The first 8 bytes of the vector hold the address of the first element of the contents array—call it the begin address . This address is a heap address, which is as expected, since the contents must have dynamic lifetime. The value of the begin address is the same as that of v.data() .

Bytes 8–15 hold the address just past the contents array—call it the end address . Its value is the same as &v.data()[v.size()] . If the vector is empty, then the begin address and the end address are the same.

Bytes 16–23 hold an address greater than or equal to the end address. This is the capacity address . As a vector grows, it will sometimes outgrow its current location and move its contents to new memory addresses. To reduce the number of copies, vectors usually to request more memory from the operating system than they immediately need; this additional space, which is called “capacity,” supports cheap growth. Often the capacity doubles on each growth spurt, since this allows operations like v.push_back() to execute in O (1) time on average.

Compilers must also decide where different objects are stored when those objects are not part of a collection. For instance, consider this program:

The abstract machine says these objects cannot overlap, but does not otherwise constrain their positions in memory.

On Linux, GCC will put all these variables into the stack segment, which we can see using hexdump . But it can put them in the stack segment in any order , as we can see by reordering the declarations (try declaration order i1 , c1 , i2 , c2 , c3 ), by changing optimization levels, or by adding different scopes (braces). The abstract machine gives the programmer no guarantees about how object addresses relate. In fact, the compiler may move objects around during execution, as long as it ensures that the program behaves according to the abstract machine. Modern optimizing compilers often do this, particularly for automatic objects.

But what order does the compiler choose? With optimization disabled, the compiler appears to lay out objects in decreasing order by declaration, so the first declared variable in the function has the highest address. With optimization enabled, the compiler follows roughly the same guideline, but it also rearranges objects by type—for instance, it tends to group char s together—and it can reuse space if different variables in the same function have disjoint lifetimes. The optimizing compiler tends to use less space for the same set of variables. This is because it’s arranging objects by alignment.

The C++ compiler and library restricts the addresses at which some kinds of data appear. In particular, the address of every int value is always a multiple of 4, whether it’s located on the stack (automatic lifetime), the data segment (static lifetime), or the heap (dynamic lifetime).

A bunch of observations will show you these rules:

| Type | Size | Address restrictions | ( ) |

|---|---|---|---|

| ( , ) | 1 | No restriction | 1 |

| ( ) | 2 | Multiple of 2 | 2 |

| ( ) | 4 | Multiple of 4 | 4 |

| ( ) | 8 | Multiple of 8 | 8 |

| 4 | Multiple of 4 | 4 | |

| 8 | Multiple of 8 | 8 | |

| 16 | Multiple of 16 | 16 | |

| 8 | Multiple of 8 | 8 |

These are the alignment restrictions for an x86-64 Linux machine.

These restrictions hold for most x86-64 operating systems, except that on Windows, the long type has size and alignment 4. (The long long type has size and alignment 8 on all x86-64 operating systems.)

Just like every type has a size, every type has an alignment. The alignment of a type T is a number a ≥1 such that the address of every object of type T must be a multiple of a . Every object with type T has size sizeof(T) —it occupies sizeof(T) contiguous bytes of memory; and has alignment alignof(T) —the address of its first byte is a multiple of alignof(T) . You can also say sizeof(x) and alignof(x) where x is the name of an object or another expression.

Alignment restrictions can make hardware simpler, and therefore faster. For instance, consider cache blocks. CPUs access memory through a transparent hardware cache. Data moves from primary memory, or RAM (which is large—a couple gigabytes on most laptops—and uses cheaper, slower technology) to the cache in units of 64 or 128 bytes. Those units are always aligned: on a machine with 128-byte cache blocks, the bytes with memory addresses [127, 128, 129, 130] live in two different cache blocks (with addresses [0, 127] and [128, 255]). But the 4 bytes with addresses [4n, 4n+1, 4n+2, 4n+3] always live in the same cache block. (This is true for any small power of two: the 8 bytes with addresses [8n,…,8n+7] always live in the same cache block.) In general, it’s often possible to make a system faster by leveraging restrictions—and here, the CPU hardware can load data faster when it can assume that the data lives in exactly one cache line.

The compiler, library, and operating system all work together to enforce alignment restrictions.

On x86-64 Linux, alignof(T) == sizeof(T) for all fundamental types (the types built in to C: integer types, floating point types, and pointers). But this isn’t always true; on x86-32 Linux, double has size 8 but alignment 4.

It’s possible to construct user-defined types of arbitrary size, but the largest alignment required by a machine is fixed for that machine. C++ lets you find the maximum alignment for a machine with alignof(std::max_align_t) ; on x86-64, this is 16, the alignment of the type long double (and the alignment of some less-commonly-used SIMD “vector” types ).

We now turn to the abstract machine rules for laying out all collections. The sizes and alignments for user-defined types—arrays, structs, and unions—are derived from a couple simple rules or principles. Here they are. The first rule applies to all types.

1. First-member rule. The address of the first member of a collection equals the address of the collection.

Thus, the address of an array is the same as the address of its first element. The address of a struct is the same as the address of the first member of the struct.

The next three rules depend on the class of collection. Every C abstract machine enforces these rules.

2. Array rule. Arrays are laid out sequentially as described above.

3. Struct rule. The second and subsequent members of a struct are laid out in order, with no overlap, subject to alignment constraints.

4. Union rule. All members of a union share the address of the union.

In C, every struct follows the struct rule, but in C++, only simple structs follow the rule. Complicated structs, such as structs with some public and some private members, or structs with virtual functions, can be laid out however the compiler chooses. The typical situation is that C++ compilers for a machine architecture (e.g., “Linux x86-64”) will all agree on a layout procedure for complicated structs. This allows code compiled by different compilers to interoperate.

That next rule defines the operation of the malloc library function.

5. Malloc rule. Any non-null pointer returned by malloc has alignment appropriate for any type. In other words, assuming the allocated size is adequate, the pointer returned from malloc can safely be cast to T* for any T .

Oddly, this holds even for small allocations. The C++ standard (the abstract machine) requires that malloc(1) return a pointer whose alignment is appropriate for any type, including types that don’t fit.

And the final rule is not required by the abstract machine, but it’s how sizes and alignments on our machines work.

6. Minimum rule. The sizes and alignments of user-defined types, and the offsets of struct members, are minimized within the constraints of the other rules.

The minimum rule, and the sizes and alignments of basic types, are defined by the x86-64 Linux “ABI” —its Application Binary Interface. This specification standardizes how x86-64 Linux C compilers should behave, and lets users mix and match compilers without problems.

Consequences of the size and alignment rules

From these rules we can derive some interesting consequences.

First, the size of every type is a multiple of its alignment .

To see why, consider an array with two elements. By the array rule, these elements have addresses a and a+sizeof(T) , where a is the address of the array. Both of these addresses contain a T , so they are both a multiple of alignof(T) . That means sizeof(T) is also a multiple of alignof(T) .

We can also characterize the sizes and alignments of different collections .

- The size of an array of N elements of type T is N * sizeof(T) : the sum of the sizes of its elements. The alignment of the array is alignof(T) .

- The size of a union is the maximum of the sizes of its components (because the union can only hold one component at a time). Its alignment is also the maximum of the alignments of its components.

- The size of a struct is at least as big as the sum of the sizes of its components. Its alignment is the maximum of the alignments of its components.

Thus, the alignment of every collection equals the maximum of the alignments of its components.

It’s also true that the alignment equals the least common multiple of the alignments of its components. You might have thought lcm was a better answer, but the max is the same as the lcm for every architecture that matters, because all fundamental alignments are powers of two.

The size of a struct might be larger than the sum of the sizes of its components, because of alignment constraints. Since the compiler must lay out struct components in order, and it must obey the components’ alignment constraints, and it must ensure different components occupy disjoint addresses, it must sometimes introduce extra space in structs. Here’s an example: the struct will have 3 bytes of padding after char c , to ensure that int i2 has the correct alignment.

Thanks to padding, reordering struct components can sometimes reduce the total size of a struct. Padding can happen at the end of a struct as well as the middle. Padding can never happen at the start of a struct, however (because of Rule 1).

The rules also imply that the offset of any struct member —which is the difference between the address of the member and the address of the containing struct— is a multiple of the member’s alignment .

To see why, consider a struct s with member m at offset o . The malloc rule says that any pointer returned from malloc is correctly aligned for s . Every pointer returned from malloc is maximally aligned, equalling 16*x for some integer x . The struct rule says that the address of m , which is 16*x + o , is correctly aligned. That means that 16*x + o = alignof(m)*y for some integer y . Divide both sides by a = alignof(m) and you see that 16*x/a + o/a = y . But 16/a is an integer—the maximum alignment is a multiple of every alignment—so 16*x/a is an integer. We can conclude that o/a must also be an integer!

Finally, we can also derive the necessity for padding at the end of structs. (How?)

What happens when an object is uninitialized? The answer depends on its lifetime.

- static lifetime (e.g., int global; at file scope): The object is initialized to 0.

- automatic or dynamic lifetime (e.g., int local; in a function, or int* ptr = new int ): The object is uninitialized and reading the object’s value before it is assigned causes undefined behavior.

Compiler hijinks

In C++, most dynamic memory allocation uses special language operators, new and delete , rather than library functions.

Though this seems more complex than the library-function style, it has advantages. A C compiler cannot tell what malloc and free do (especially when they are redefined to debugging versions, as in the problem set), so a C compiler cannot necessarily optimize calls to malloc and free away. But the C++ compiler may assume that all uses of new and delete follow the rules laid down by the abstract machine. That means that if the compiler can prove that an allocation is unnecessary or unused, it is free to remove that allocation!

For example, we compiled this program in the problem set environment (based on test003.cc ):

The optimizing C++ compiler removes all calls to new and delete , leaving only the call to m61_printstatistics() ! (For instance, try objdump -d testXXX to look at the compiled x86-64 instructions.) This is valid because the compiler is explicitly allowed to eliminate unused allocations, and here, since the ptrs variable is local and doesn’t escape main , all allocations are unused. The C compiler cannot perform this useful transformation. (But the C compiler can do other cool things, such as unroll the loops .)

One of C’s more interesting choices is that it explicitly relates pointers and arrays. Although arrays are laid out in memory in a specific way, they generally behave like pointers when they are used. This property probably arose from C’s desire to explicitly model memory as an array of bytes, and it has beautiful and confounding effects.

We’ve already seen one of these effects. The hexdump function has this signature (arguments and return type):

But we can just pass an array as argument to hexdump :

When used in an expression like this—here, as an argument—the array magically changes into a pointer to its first element. The above call has the same meaning as this:

C programmers transition between arrays and pointers very naturally.

A confounding effect is that unlike all other types, in C arrays are passed to and returned from functions by reference rather than by value. C is a call-by-value language except for arrays. This means that all function arguments and return values are copied, so that parameter modifications inside a function do not affect the objects passed by the caller—except for arrays. For instance: void f ( int a[ 2 ]) { a[ 0 ] = 1 ; } int main () { int x[ 2 ] = { 100 , 101 }; f(x); printf( "%d \n " , x[ 0 ]); // prints 1! } If you don’t like this behavior, you can get around it by using a struct or a C++ std::array . #include <array> struct array1 { int a[ 2 ]; }; void f1 (array1 arg) { arg.a[ 0 ] = 1 ; } void f2 (std :: array < int , 2 > a) { a[ 0 ] = 1 ; } int main () { array1 x = {{ 100 , 101 }}; f1(x); printf( "%d \n " , x.a[ 0 ]); // prints 100 std :: array < int , 2 > x2 = { 100 , 101 }; f2(x2); printf( "%d \n " , x2[ 0 ]); // prints 100 }

C++ extends the logic of this array–pointer correspondence to support arithmetic on pointers as well.

Pointer arithmetic rule. In the C abstract machine, arithmetic on pointers produces the same result as arithmetic on the corresponding array indexes.

Specifically, consider an array T a[n] and pointers T* p1 = &a[i] and T* p2 = &a[j] . Then:

Equality : p1 == p2 if and only if (iff) p1 and p2 point to the same address, which happens iff i == j .

Inequality : Similarly, p1 != p2 iff i != j .

Less-than : p1 < p2 iff i < j .

Also, p1 <= p2 iff i <= j ; and p1 > p2 iff i > j ; and p1 >= p2 iff i >= j .

Pointer difference : What should p1 - p2 mean? Using array indexes as the basis, p1 - p2 == i - j . (But the type of the difference is always ptrdiff_t , which on x86-64 is long , the signed version of size_t .)

Addition : p1 + k (where k is an integer type) equals the pointer &a[i + k] . ( k + p1 returns the same thing.)

Subtraction : p1 - k equals &a[i - k] .

Increment and decrement : ++p1 means p1 = p1 + 1 , which means p1 = &a[i + 1] . Similarly, --p1 means p1 = &a[i - 1] . (There are also postfix versions, p1++ and p1-- , but C++ style prefers the prefix versions.)

No other arithmetic operations on pointers are allowed. You can’t multiply pointers, for example. (You can multiply addresses by casting the pointers to the address type, uintptr_t —so (uintptr_t) p1 * (uintptr_t) p2 —but why would you?)

From pointers to iterators

Let’s write a function that can sum all the integers in an array.

This function can compute the sum of the elements of any int array. But because of the pointer–array relationship, its a argument is really a pointer . That allows us to call it with subarrays as well as with whole arrays. For instance:

This way of thinking about arrays naturally leads to a style that avoids sizes entirely, using instead a sentinel or boundary argument that defines the end of the interesting part of the array.

These expressions compute the same sums as the above:

Note that the data from first to last forms a half-open range . iIn mathematical notation, we care about elements in the range [first, last) : the element pointed to by first is included (if it exists), but the element pointed to by last is not. Half-open ranges give us a simple and clear way to describe empty ranges, such as zero-element arrays: if first == last , then the range is empty.

Note that given a ten-element array a , the pointer a + 10 can be formed and compared, but must not be dereferenced—the element a[10] does not exist. The C/C++ abstract machines allow users to form pointers to the “one-past-the-end” boundary elements of arrays, but users must not dereference such pointers.

So in C, two pointers naturally express a range of an array. The C++ standard template library, or STL, brilliantly abstracts this pointer notion to allow two iterators , which are pointer-like objects, to express a range of any standard data structure—an array, a vector, a hash table, a balanced tree, whatever. This version of sum works for any container of int s; notice how little it changed:

Some example uses:

Addresses vs. pointers

What’s the difference between these expressions? (Again, a is an array of type T , and p1 == &a[i] and p2 == &a[j] .)

The first expression is defined analogously to index arithmetic, so d1 == i - j . But the second expression performs the arithmetic on the addresses corresponding to those pointers. We will expect d2 to equal sizeof(T) * d1 . Always be aware of which kind of arithmetic you’re using. Generally arithmetic on pointers should not involve sizeof , since the sizeof is included automatically according to the abstract machine; but arithmetic on addresses almost always should involve sizeof .

Although C++ is a low-level language, the abstract machine is surprisingly strict about which pointers may be formed and how they can be used. Violate the rules and you’re in hell because you have invoked the dreaded undefined behavior .

Given an array a[N] of N elements of type T :

Forming a pointer &a[i] (or a + i ) with 0 ≤ i ≤ N is safe.

Forming a pointer &a[i] with i < 0 or i > N causes undefined behavior.

Dereferencing a pointer &a[i] with 0 ≤ i < N is safe.

Dereferencing a pointer &a[i] with i < 0 or i ≥ N causes undefined behavior.

(For the purposes of these rules, objects that are not arrays count as single-element arrays. So given T x , we can safely form &x and &x + 1 and dereference &x .)

What “undefined behavior” means is horrible. A program that executes undefined behavior is erroneous. But the compiler need not catch the error. In fact, the abstract machine says anything goes : undefined behavior is “behavior … for which this International Standard imposes no requirements.” “Possible undefined behavior ranges from ignoring the situation completely with unpredictable results, to behaving during translation or program execution in a documented manner characteristic of the environment (with or without the issuance of a diagnostic message), to terminating a translation or execution (with the issuance of a diagnostic message).” Other possible behaviors include allowing hackers from the moon to steal all of a program’s data, take it over, and force it to delete the hard drive on which it is running. Once undefined behavior executes, a program may do anything, including making demons fly out of the programmer’s nose.

Pointer arithmetic, and even pointer comparisons, are also affected by undefined behavior. It’s undefined to go beyond and array’s bounds using pointer arithmetic. And pointers may be compared for equality or inequality even if they point to different arrays or objects, but if you try to compare different arrays via less-than, like this:

that causes undefined behavior.

If you really want to compare pointers that might be to different arrays—for instance, you’re writing a hash function for arbitrary pointers—cast them to uintptr_t first.

Undefined behavior and optimization

A program that causes undefined behavior is not a C++ program . The abstract machine says that a C++ program, by definition, is a program whose behavior is always defined. The C++ compiler is allowed to assume that its input is a C++ program. (Obviously!) So the compiler can assume that its input program will never cause undefined behavior. Thus, since undefined behavior is “impossible,” if the compiler can prove that a condition would cause undefined behavior later, it can assume that condition will never occur.

Consider this program:

If we supply a value equal to (char*) -1 , we’re likely to see output like this:

with no assertion failure! But that’s an apparently impossible result. The printout can only happen if x + 1 > x (otherwise, the assertion will fail and stop the printout). But x + 1 , which equals 0 , is less than x , which is the largest 8-byte value!

The impossible happens because of undefined behavior reasoning. When the compiler sees an expression like x + 1 > x (with x a pointer), it can reason this way:

“Ah, x + 1 . This must be a pointer into the same array as x (or it might be a boundary pointer just past that array, or just past the non-array object x ). This must be so because forming any other pointer would cause undefined behavior.

“The pointer comparison is the same as an index comparison. x + 1 > x means the same thing as &x[1] > &x[0] . But that holds iff 1 > 0 .

“In my infinite wisdom, I know that 1 > 0 . Thus x + 1 > x always holds, and the assertion will never fail.

“My job is to make this code run fast. The fastest code is code that’s not there. This assertion will never fail—might as well remove it!”

Integer undefined behavior

Arithmetic on signed integers also has important undefined behaviors. Signed integer arithmetic must never overflow. That is, the compiler may assume that the mathematical result of any signed arithmetic operation, such as x + y (with x and y both int ), can be represented inside the relevant type. It causes undefined behavior, therefore, to add 1 to the maximum positive integer. (The ubexplore.cc program demonstrates how this can produce impossible results, as with pointers.)

Arithmetic on unsigned integers is much safer with respect to undefined behavior. Unsigned integers are defined to perform arithmetic modulo their size. This means that if you add 1 to the maximum positive unsigned integer, the result will always be zero.

Dividing an integer by zero causes undefined behavior whether or not the integer is signed.

Sanitizers, which in our makefiles are turned on by supplying SAN=1 , can catch many undefined behaviors as soon as they happen. Sanitizers are built in to the compiler itself; a sanitizer involves cooperation between the compiler and the language runtime. This has the major performance advantage that the compiler introduces exactly the required checks, and the optimizer can then use its normal analyses to remove redundant checks.

That said, undefined behavior checking can still be slow. Undefined behavior allows compilers to make assumptions about input values, and those assumptions can directly translate to faster code. Turning on undefined behavior checking can make some benchmark programs run 30% slower [link] .

Signed integer undefined behavior

File cs61-lectures/datarep5/ubexplore2.cc contains the following program.

What will be printed if we run the program with ./ubexplore2 0x7ffffffe 0x7fffffff ?

0x7fffffff is the largest positive value can be represented by type int . Adding one to this value yields 0x80000000 . In two's complement representation this is the smallest negative number represented by type int .

Assuming that the program behaves this way, then the loop exit condition i > n2 can never be met, and the program should run (and print out numbers) forever.

However, if we run the optimized version of the program, it prints only two numbers and exits:

The unoptimized program does print forever and never exits.

What’s going on here? We need to look at the compiled assembly of the program with and without optimization (via objdump -S ).

The unoptimized version basically looks like this:

This is a pretty direct translation of the loop.

The optimized version, though, does it differently. As always, the optimizer has its own ideas. (Your compiler may produce different results!)

The compiler changed the source’s less than or equal to comparison, i <= n2 , into a not equal to comparison in the executable, i != n2 + 1 (in both cases using signed computer arithmetic, i.e., modulo 2 32 )! The comparison i <= n2 will always return true when n2 == 0x7FFFFFFF , the maximum signed integer, so the loop goes on forever. But the i != n2 + 1 comparison does not always return true when n2 == 0x7FFFFFFF : when i wraps around to 0x80000000 (the smallest negative integer), then i equals n2 + 1 (which also wrapped), and the loop stops.

Why did the compiler make this transformation? In the original loop, the step-6 jump is immediately followed by another comparison and jump in steps 1 and 2. The processor jumps all over the place, which can confuse its prediction circuitry and slow down performance. In the transformed loop, the step-7 jump is never followed by a comparison and jump; instead, step 7 goes back to step 4, which always prints the current number. This more streamlined control flow is easier for the processor to make fast.

But the streamlined control flow is only a valid substitution under the assumption that the addition n2 + 1 never overflows . Luckily (sort of), signed arithmetic overflow causes undefined behavior, so the compiler is totally justified in making that assumption!

Programs based on ubexplore2 have demonstrated undefined behavior differences for years, even as the precise reasons why have changed. In some earlier compilers, we found that the optimizer just upgraded the int s to long s—arithmetic on long s is just as fast on x86-64 as arithmetic on int s, since x86-64 is a 64-bit architecture, and sometimes using long s for everything lets the compiler avoid conversions back and forth. The ubexplore2l program demonstrates this form of transformation: since the loop variable is added to a long counter, the compiler opportunistically upgrades i to long as well. This transformation is also only valid under the assumption that i + 1 will not overflow—which it can’t, because of undefined behavior.

Using unsigned type prevents all this undefined behavior, because arithmetic overflow on unsigned integers is well defined in C/C++. The ubexplore2u.cc file uses an unsigned loop index and comparison, and ./ubexplore2u and ./ubexplore2u.noopt behave exactly the same (though you have to give arguments like ./ubexplore2u 0xfffffffe 0xffffffff to see the overflow).

Computer arithmetic and bitwise operations

Basic bitwise operators.

Computers offer not only the usual arithmetic operators like + and - , but also a set of bitwise operators. The basic ones are & (and), | (or), ^ (xor/exclusive or), and the unary operator ~ (complement). In truth table form:

| (and) | ||

|---|---|---|

| 0 | 0 | |

| 0 | 1 |

| (or) | ||

|---|---|---|

| 0 | 1 | |

| 1 | 1 |

| (xor) | ||

|---|---|---|

| 0 | 1 | |

| 1 | 0 |

| (complement) | |

|---|---|

| 1 | |

| 0 |

In C or C++, these operators work on integers. But they work bitwise: the result of an operation is determined by applying the operation independently at each bit position. Here’s how to compute 12 & 4 in 4-bit unsigned arithmetic:

These basic bitwise operators simplify certain important arithmetics. For example, (x & (x - 1)) == 0 tests whether x is zero or a power of 2.

Negation of signed integers can also be expressed using a bitwise operator: -x == ~x + 1 . This is in fact how we define two's complement representation. We can verify that x and (-x) does add up to zero under this representation:

Bitwise "and" ( & ) can help with modular arithmetic. For example, x % 32 == (x & 31) . We essentially "mask off", or clear, higher order bits to do modulo-powers-of-2 arithmetics. This works in any base. For example, in decimal, the fastest way to compute x % 100 is to take just the two least significant digits of x .

Bitwise shift of unsigned integer

x << i appends i zero bits starting at the least significant bit of x . High order bits that don't fit in the integer are thrown out. For example, assuming 4-bit unsigned integers

Similarly, x >> i appends i zero bits at the most significant end of x . Lower bits are thrown out.

Bitwise shift helps with division and multiplication. For example:

A modern compiler can optimize y = x * 66 into y = (x << 6) + (x << 1) .

Bitwise operations also allows us to treat bits within an integer separately. This can be useful for "options".

For example, when we call a function to open a file, we have a lot of options:

- Open for reading?

- Open for writing?

- Read from the end?

- Optimize for writing?

We have a lot of true/false options.

One bad way to implement this is to have this function take a bunch of arguments -- one argument for each option. This makes the function call look like this:

The long list of arguments slows down the function call, and one can also easily lose track of the meaning of the individual true/false values passed in.

A cheaper way to achieve this is to use a single integer to represent all the options. Have each option defined as a power of 2, and simply | (or) them together and pass them as a single integer.

Flags are usually defined as powers of 2 so we set one bit at a time for each flag. It is less common but still possible to define a combination flag that is not a power of 2, so that it sets multiple bits in one go.

File cs61-lectures/datarep5/mb-driver.cc contains a memory allocation benchmark. The core of the benchmark looks like this: