- Ways to Give

- Contact an Expert

- Explore WRI Perspectives

Filter Your Site Experience by Topic

Applying the filters below will filter all articles, data, insights and projects by the topic area you select.

- All Topics Remove filter

- Climate filter site by Climate

- Cities filter site by Cities

- Energy filter site by Energy

- Food filter site by Food

- Forests filter site by Forests

- Freshwater filter site by Freshwater

- Ocean filter site by Ocean

- Business filter site by Business

- Economics filter site by Economics

- Finance filter site by Finance

- Equity & Governance filter site by Equity & Governance

Search WRI.org

Not sure where to find something? Search all of the site's content.

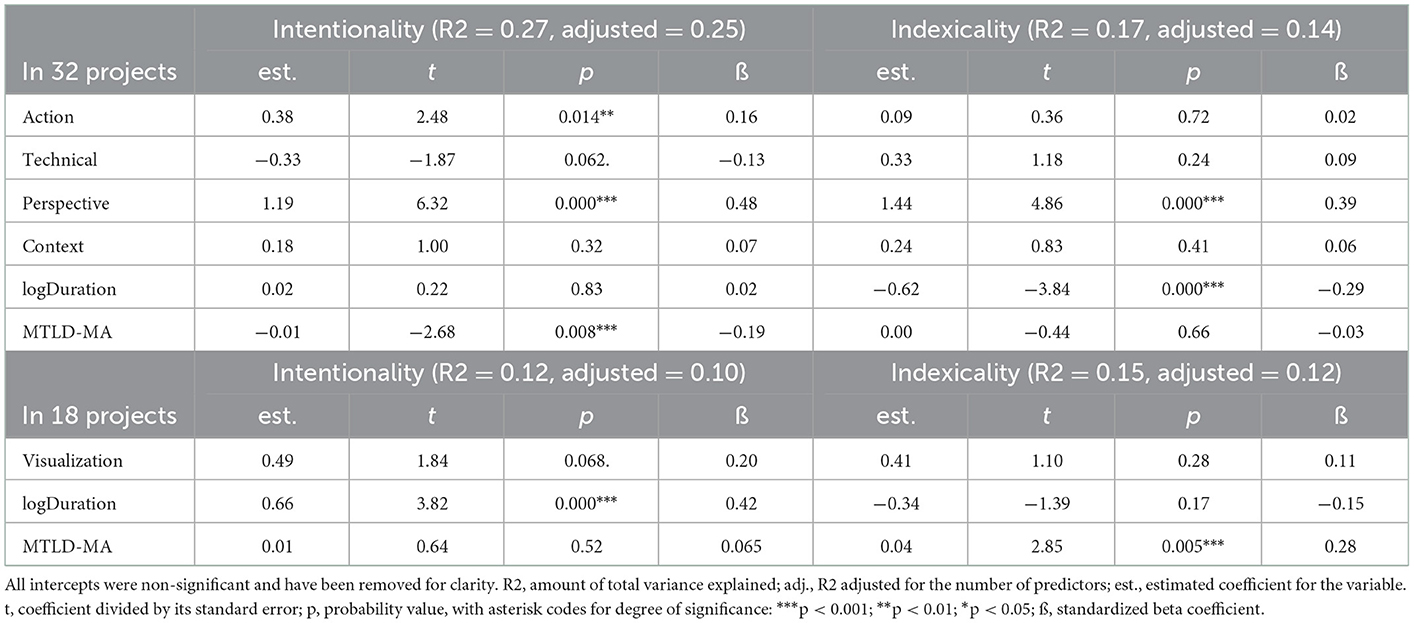

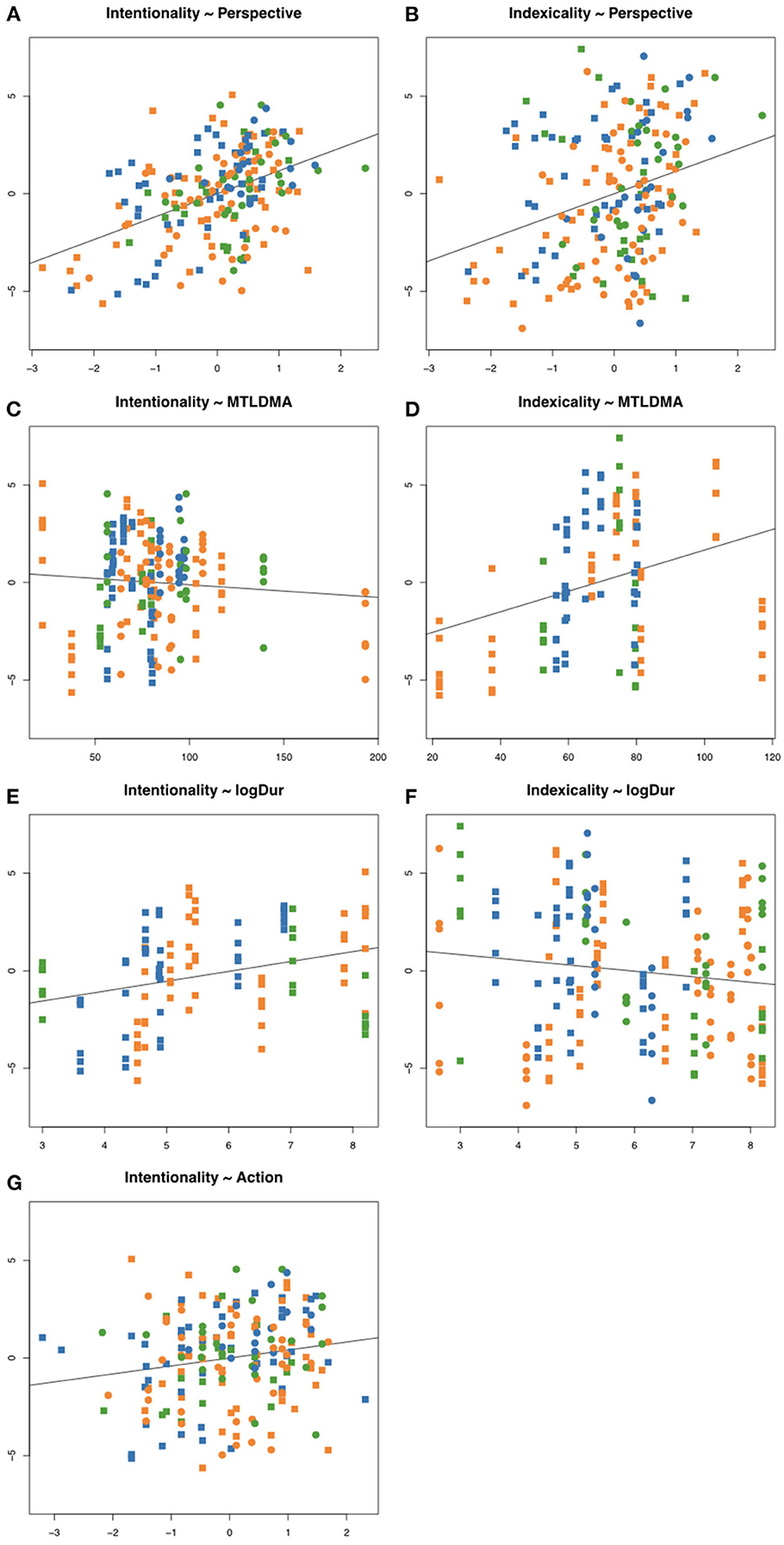

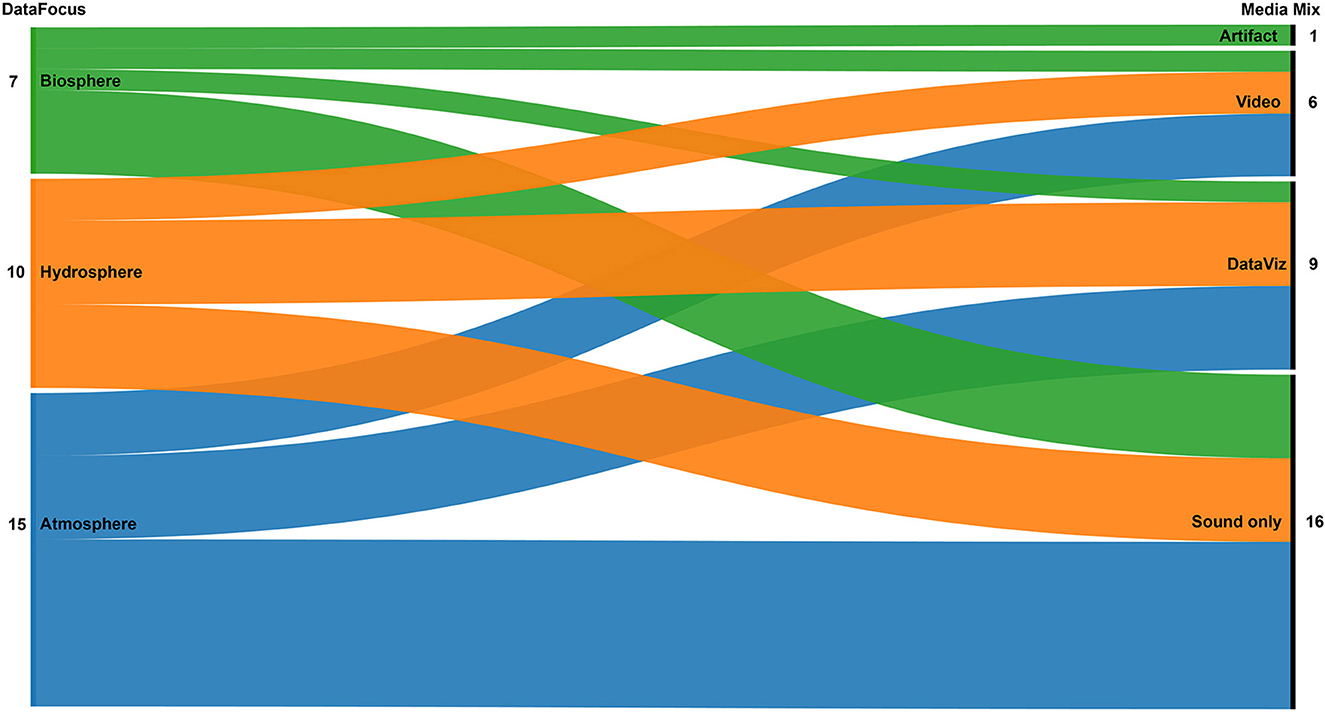

Overview of 100+ Climate Data Platforms

This Data Visualization is part of Climate Watch. Reach out to Irene Berman-Vaporis for more information.

- Irene Berman-Vaporis

From country to city-level emissions, measuring adaptation vs. mitigation, tracking climate finance from the public and private sectors, understanding global climate goals and the action needed to meet them requires a wealth of information. Data exists for many aspects of climate change, but with hundreds of platforms and countless datasets, it can be difficult to distinguish the best information for a particular need, or to find where data gaps exist.

This interactive visual shows a matrix of more than 100 major climate data platforms, displayed by topic (x-axis) and geographic level/scale (y-axis). Users can also view this data as a filterable table or download it directly.

Potential use cases include:

- Climate data analysts can find the most relevant platform for their specific topic and the level at which they work.

- Funders and data creators can pinpoint data gaps, check if there are existing platforms on a subject to avoid redundancy of new work, or leverage synergies by building on existing work.

- City planners can find data on peer cities’ emissions and climate actions, as well as how those actions might relate to national-level targets.

- Decision makers looking for specific data on policy, finance or other topics can find relevant data for various scales, comparing policy or investment from various cities, countries or regions.
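In the matrix, each platform sits in a cell defined by its topic and its geographic scale. A data gap is therefore an empty cell, and a crowded cell hints at redundant platforms. The sketch below shows that idea using a handful of made-up records. The platform names, topics and scales are hypothetical placeholders, not rows from the actual dataset.

```python
from collections import Counter
from itertools import product

# Hypothetical records: (platform name, topic, geographic scale).
# These are illustrative placeholders, not rows from the real dataset.
platforms = [
    ("Platform A", "Mitigation", "Country"),
    ("Platform B", "Mitigation", "Country"),
    ("Platform C", "Finance", "Country"),
    ("Platform D", "Mitigation", "City"),
    ("Platform E", "Adaptation", "Global"),
]

topics = ["Mitigation", "Adaptation", "Finance"]
scales = ["Global", "Country", "City"]

# Count how many platforms fall into each (topic, scale) cell of the matrix.
coverage = Counter((topic, scale) for _, topic, scale in platforms)

# A gap is any cell of the topic x scale grid with no platform in it.
gaps = [cell for cell in product(topics, scales) if coverage[cell] == 0]

# Cells with more than one platform may indicate overlapping datasets.
crowded = {cell: n for cell, n in coverage.items() if n > 1}

print("Gaps:", gaps)
print("Crowded cells:", crowded)
```

With real data, the same two lookups answer the funders' question ("where is nothing?") and the redundancy question ("where is there already a lot?").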

Some of the notable patterns found in curating this dataset include: an overarching focus on mitigation, energy and country-level data; numerous platforms showcasing similar datasets; and a general lack of maintenance, even on carefully built datasets. These all underscore a clear need to improve the world’s climate-related data infrastructure.


Primary Contact

Head of Communications, Systems Change Lab and Climate Watch


© 2024 World Resources Institute


- MOTIVATION.

- LINKING COMMENTARY TO CLIMATE DATA.

- TECHNICAL ARCHITECTURE.

- EXPLORING COMMENTARY IN SPACE AND TIME.

- FUTURE PLANS FOR CHARME.

Berrick, S. W., G. Leptoukh, J. D. Farley, and H. Rui, 2009: Giovanni: A web service workflow-based data visualization and analysis system. IEEE Trans. Geosci. Remote Sens., 47, 106–113, doi:10.1109/TGRS.2008.2003183.

Blower, J. D., and Coauthors, 2014: Understanding climate data through commentary metadata: The CHARMe project. Theory and Practice of Digital Libraries-TPDL 2013 Selected Workshops, L. Bolikowski et al., Eds., Springer, 28–39, doi:10.1007/978-3-319-08425-1_4.

Dowell, M., and Coauthors, 2013: Strategy towards an architecture for climate monitoring from space. 39 pp. [Available online at www.wmo.int/pages/prog/sat/documents/ARCH_strategy-climate-architecture-space.pdf.]

Kershaw, P., 2014: Data model for commentary metadata (d400.1). Tech. Rep., The CHARMe Project.

Nagni, M., and P. Kershaw, 2014: Concrete encodings of commentary metadata (d400.3). Tech. Rep., The CHARMe Project.

Rood, R., and P. Edwards, 2014: Climate informatics: Human experts and the end-to-end system. Earthzine. [Available online at www.earthzine.org/2014/05/22/climate-informatics-human-experts-and-the-end-to-end-system/.]

Fig. 1. An example of an annotation that describes a dataset found via the CMSAF website.

Fig. 2. The basic Open Annotation (OA) data model showing how an annotation links one piece of information (held in the body) with another (the target), and is described using the Resource Description Framework (RDF).

Fig. 3. The CHARMe data model being used to link a comment about a conference paper to the data it cites (in this example, the “ATSR2lb product”). The links are encoded using standard ontologies for describing resources such as RDF and Dublin Core (dc); see the For Further Reading section for more details.

Fig. 4. Overview of CHARMe client-server architecture, with the JavaScript plug-in shown as an example of a client program. More details on the technologies used can be found in the technical documentation listed in the For Further Reading section. The CHARMe node (blue box) provides a range of web interfaces (green box). SPARQL and RESTful (OpenSearch) query interfaces are provided for structured queries using RDF metadata. A REST API allows for submission, deletion, or modification of annotations and supports a JSON-LD serialization. The security layer provides access control via an OAuth 2.0 interface. Validation middleware checks the format of what has been submitted. A web admin interface allows users with the appropriate privileges to log in to the node directly (e.g., as a moderator) or to set up new data providers. The triple store and its interface (gray box) are based on Apache Jena and Fuseki, respectively. The triple store is augmented with a NoSQL plug-in to index information and improve search performance.

Fig. 5. The screenshots show a user viewing an annotation via (left) a browser or (right) the plug-in. If the user is the creator of the annotation, or has moderator or superuser privileges, the interface will include a "Delete" button, as highlighted, with the further ability to "Modify" an annotation via the plug-in.

Fig. 6. The Significant Events Viewer being used to explore time series of global ozone in two reanalysis datasets (ERA-40 and ERA-Int), with a significant event timeline plotted below. The right-hand panel, “Event Information,” describes the selected significant event (indicated by the yellow bubble). The clear CHARMe icon (top right) indicates that there are currently no user annotations recorded for the selected significant event.

Fig. 7. A screenshot of the CHARMe Maps tool, highlighting the “fine-grained commentary” capability. Here, the user is visualizing two variables from a dataset (sea surface temperature and its associated error field) along with comments that have been attached to specific points or regions within the dataset. Note that each variable is associated with a different set of commentary.

Fig. 8. A screenshot of the CHARMe Maps tool, highlighting the “intercomparison” capability. Two different albedo datasets are being visualized (left), with the two right-hand columns showing the commentary metadata that have been attached to each dataset. The dataset described in the right-most column (corresponding to the lower one in the visualization panel) has a number of publications attached to it.

Fig. 9. Simplified representation of the data model for fine-grained commentary illustrating the use of Open Annotation's capability to annotate spatial and temporal subsets of a resource (in this case, a climate dataset). The full data model for fine-grained commentary includes more properties of the SubsetSelector.


Capturing and Sharing Our Collective Expertise on Climate Data: The CHARMe Project


For users of climate services, the ability to quickly determine the datasets that best fit one’s needs would be invaluable. The volume, variety, and complexity of climate data make this judgment difficult. The ambition of CHARMe (Characterization of metadata to enable high-quality climate services) is to give a wider interdisciplinary community access to a range of supporting information, such as journal articles, technical reports, or feedback on previous applications of the data. The capture and discovery of this “commentary” information, often created by data users rather than data providers, and currently not linked to the data themselves, has not been significantly addressed previously. CHARMe applies the principles of Linked Data and open web standards to associate, record, search, and publish user-derived annotations in a way that can be read both by users and automated systems. Tools have been developed within the CHARMe project that enable annotation capability for data delivery systems already in wide use for discovering climate data. In addition, the project has developed advanced tools for exploring data and commentary in innovative ways, including an interactive data explorer and comparator (“CHARMe Maps”), and a tool for correlating climate time series with external “significant events” (e.g., instrument failures or large volcanic eruptions) that affect the data quality. Although the project focuses on climate science, the concepts are general and could be applied to other fields. All CHARMe system software is open-source and released under a liberal license, permitting future projects to reuse the source code as they wish.

Increasingly, people gather inputs and make decisions through websites that offer evolving commentary from others at the point of decision or purchase; think of Amazon, TripAdvisor, Yelp, and countless others. Introducing an analogous capability into Earth science data selection and acquisition has the potential to turn what is currently a solitary exploration into one where the user’s decisions are informed by a broad community.

Today, science users in search of relevant datasets for their investigations find these data in a variety of ways: filtering by climate parameter, crawling or browsing data servers, or through some other sophisticated means. Regardless of how a user arrived there, ultimately she is presented with a list of links to data sources (files) through some data system interface. These are what the science user accesses to compute, analyze, and visualize information, yet they often lack up-to-date ancillary information: for example, pointers to documentation on the dataset, contact information for the dataset engineer or the responsible science lead, information on how others have used the data, or whether there are known problems. In all of these cases the interested user must navigate away from the search results to discover the information she requires, and it is not always obvious how to go about it, or whether the information she discovers is valid for, or even relevant to, the particular dataset of interest.

The European collaborative project CHARMe (Characterization of metadata to enable high-quality climate services) has developed a system that avoids these navigation and presentation issues by providing crowd-sourced user commentary directly next to the data download link. The connection is immediate and obvious, and content continues to expand as the data get more use. Users can post notes about issues and questions to the data providers and other users, and the data engineers responsible for the dataset know exactly what data are involved when answering questions. The technology allows for this knowledge capture to stay connected to the dataset itself, no matter how the user arrives at the download link.

As an example, Fig. 1 shows CHARMe being used via the website of the Climate Monitoring Satellite Applications Facility (CMSAF), hosted by Deutscher Wetterdienst: www.cmsaf.eu/doi. In this case the user is browsing a list of datasets and, having clicked on the blue “C” icon, is viewing an annotation linking the selected dataset to a validation report. The tags “describing” and “linking” (as well as others available to the user when submitting the annotation) help subsequent users to understand why the comment was made, and to discover the comment using a facility known as a “faceted search.”
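Tags such as “describing” and “linking” work as facets. A faceted search counts the annotations that carry each tag value and lets the user narrow the results by selecting one of those values. The following is a minimal sketch that assumes annotations are held as plain dictionaries. The field names (`target`, `motivations`) and the sample values are hypothetical and do not reflect the CHARMe schema.

```python
from collections import Counter

# Hypothetical annotation records; field names are illustrative only.
annotations = [
    {"id": "a1", "target": "dataset-1", "motivations": ["describing", "linking"]},
    {"id": "a2", "target": "dataset-1", "motivations": ["questioning"]},
    {"id": "a3", "target": "dataset-2", "motivations": ["linking"]},
]

def facet_counts(records, field):
    """Count how many records carry each value of a multi-valued field."""
    return Counter(value for r in records for value in r[field])

def filter_by_facet(records, field, value):
    """Keep only the records whose multi-valued field contains `value`."""
    return [r for r in records if value in r[field]]

print(facet_counts(annotations, "motivations"))
linking = filter_by_facet(annotations, "motivations", "linking")
print([r["id"] for r in linking])
```

A real faceted interface would issue these counts and filters as queries to the node rather than filtering a local list.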

Citation: Bulletin of the American Meteorological Society 97, 4; 10.1175/BAMS-D-14-00189.1


This article describes the application of this system to climate data, the underlying technology and data model, and the tools that have been developed to demonstrate use of this commentary to explore climate data in new ways.

Climate data are diverse, encompassing in situ and remotely sensed observations, the results of numerical models, and the combination of models and observations in reanalysis programs. A particular feature of climate data is that their intrinsic value grows over time, but there is a risk that the expertise in the use of the data is lost as people move on and expert teams disperse. End products are often derived from a variety of sources, making it difficult to issue simple statements about a product’s quality, and impossible to label a particular dataset as “the best.” Instead, users need to weigh up a range of features to judge a dataset’s fitness for their specific purpose. The importance of this “knowledge around the data” is recognized by the international Strategy Towards an Architecture for Climate Monitoring from Space (Dowell et al. 2013): it is just as important to preserve this knowledge about the data as it is to preserve the measurements themselves.

There are many international collaborations and initiatives already gathering the information users need about climate data. For instance, the European project “Coordinating Earth Observation data for reanalysis for climate services: CORE-CLIMAX” (www.coreclimax.eu/) is bringing together the data and information to support reanalyses of past climate. The international Obs4MIPS (Observations for Model Intercomparisons; www.earthsystemcog.org/projects/obs4mips/) activity is making observational products more accessible for climate model intercomparisons, partly through the generation of technical notes that describe the characteristics of the observational data in a way that is tailored to the needs of climate modelers.

The Climate Data Guide from the National Center for Atmospheric Research (https://climatedataguide.ucar.edu/) allows users to compare the attributes, strengths, and limitations of multiple datasets. However, the website specifically states, “The Climate Data Guide generally does not distribute data sets. It is your responsibility to find and process the data that you need.” Again, the data and supporting information are in different locations, and it is up to the user to navigate between them.

In addition, every Earth science researcher and climate data user around the world will be generating commentary as they go about their work. It is surely the case that the same advances and dead-ends are being discovered time and again. Traditionally, such knowledge is captured in narrative form and shared through human-readable means such as papers, articles, and presentations; a great deal of extra value can be gained by sharing this information in a machine-readable, searchable way.

The term “Linked Data” refers to a set of best-practice techniques that describe how one can make data available on the web and interconnect it with other data, with the aim of increasing its value for applications and users. The CHARMe project applies the principles of Linked Data to climate data commentary: the representation of the commentary within a formal data model is a critical part of the CHARMe design. Open Annotation, a W3C effort to develop a common approach to annotating digital resources, provides the underlying concept (www.openannotation.org/). It offers a simple and general data model for recording annotations about objects. An annotation associates a piece of information (the body) with a subject (the target), as shown in Fig. 2. Although applied largely to arts- and humanities-related applications thus far, the model has shown itself to be versatile and readily adaptable to Earth observation and climate science use. For example, Open Annotation provides a means of specifying subsets of a given target, such as a character range to reference a given piece of text from a document. Building on these concepts in the framework, it is possible to define extensions to describe geographic subsets of datasets, which are described further in the section below, “Exploring Commentary in Space and Time.”
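To make the body/target idea concrete, the sketch below builds a single annotation as a JSON-LD document. It uses terms from the Open Annotation vocabulary (`oa:Annotation`, `oa:hasBody`, `oa:hasTarget`, `oa:motivatedBy`). The dataset and report URLs are hypothetical placeholders. CHARMe's own JSON-LD serialization may be shaped differently; the encoding reports listed under For Further Reading are the authority on that.

```python
import json

# Open Annotation namespace (W3C Community Group vocabulary).
OA = "http://www.w3.org/ns/oa#"

def make_annotation(annotation_id, body_uri, target_uri, motivation):
    """Build a minimal Open Annotation as a JSON-LD dictionary.

    The body carries the commentary (here, a link to a report) and the
    target is the thing being commented on (here, a dataset).
    """
    return {
        "@context": {"oa": OA},
        "@id": annotation_id,
        "@type": "oa:Annotation",
        "oa:hasBody": {"@id": body_uri},
        "oa:hasTarget": {"@id": target_uri},
        "oa:motivatedBy": {"@id": "oa:" + motivation},
    }

# Hypothetical identifiers, for illustration only.
ann = make_annotation(
    "http://example.org/annotations/1",
    "http://example.org/reports/validation-report.pdf",
    "http://example.org/datasets/cloud-fraction",
    "linking",
)
print(json.dumps(ann, indent=2))
```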

In a data portal, a target is typically a dataset (or subset of a dataset), while the annotation body holds the commentary. The overall design offers flexibility: a single comment body can be associated with many data targets, or the body of one annotation can be the target of another. This is illustrated in Fig. 3, which shows how the CHARMe data model represents a comment on a conference paper, also capturing a link to the dataset cited by the paper. In this way the CHARMe system begins to link the user to a “web of knowledge” about the data they are interested in.
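One annotation's body can itself be the target of another annotation, so the annotations form a graph. The sketch below stores hypothetical annotations as (id, body, target) triples. Starting from a dataset, it walks outward to collect every resource linked to that dataset, whether directly or indirectly. The resource names loosely echo Fig. 3 but are invented for illustration.

```python
from collections import defaultdict, deque

# Hypothetical annotations as (annotation id, body, target) triples.
annotations = [
    ("ann1", "conference-paper", "dataset-ATSR2lb"),  # paper cites the dataset
    ("ann2", "user-comment", "conference-paper"),     # comment about the paper
    ("ann3", "user-comment", "dataset-other"),        # same body, second target
]

# Index: for each target, which bodies point at it.
bodies_by_target = defaultdict(set)
for _, body, target in annotations:
    bodies_by_target[target].add(body)

def linked_resources(start):
    """Breadth-first walk from `start`, following body -> target links in
    reverse, returning every resource that comments on `start` directly
    or indirectly."""
    seen, queue = set(), deque([start])
    while queue:
        node = queue.popleft()
        for body in bodies_by_target[node]:
            if body not in seen:
                seen.add(body)
                queue.append(body)
    return seen

print(sorted(linked_resources("dataset-ATSR2lb")))
```

Here the user comment on the paper is reachable from the dataset even though it never names the dataset. This is the kind of indirect link the “web of knowledge” is meant to surface.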

The GeoViQua project (www.geoviqua.org/) has developed a data model for user feedback on datasets in the Global Earth Observation System of Systems. This model shares some conceptual similarities with the CHARMe data model, with the main difference being that the CHARMe model is built on Linked Data principles and the RDF (Resource Description Framework; www.w3.org/RDF/) data model, whereas the GeoViQua model is based around a UML (Unified Modeling Language; www.uml.org/) model and a derived XML encoding. These two approaches are complementary. UML models describe information in a relatively fixed, rigid fashion; this allows data producers and consumers to interoperate closely because the consumer knows exactly what data structure to expect. The disadvantage of this is that data models, once fixed and agreed upon, can be hard to apply to situations that were not expected at design time. By contrast, RDF models enable the data producer to structure data more flexibly, enabling new requirements to be more smoothly integrated. However, it can be difficult to write data-consuming software that can handle all the possibilities afforded by this high degree of flexibility. Discussions are ongoing within the Open Geospatial Consortium to harmonize the CHARMe and GeoViQua models at the conceptual level, enabling implementers to apply the encoding they feel is most appropriate to the application.
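A few lines of code can illustrate the trade-off between a fixed schema and an open graph. In the sketch below, a dataclass stands in for a rigid UML-derived structure, and a set of subject-predicate-object tuples stands in for RDF. Both representations and all property names are simplified, hypothetical stand-ins, not the GeoViQua or CHARMe encodings.

```python
from dataclasses import dataclass

# Fixed schema: every consumer knows exactly which fields exist,
# but adding a new kind of information means changing the class.
@dataclass
class Feedback:
    dataset: str
    rating: int
    comment: str

fb = Feedback(dataset="dataset-1", rating=4, comment="Good coverage over Europe")

# Triple-style graph: new predicates can be added without a schema change...
triples = {
    ("fb1", "about", "dataset-1"),
    ("fb1", "rating", 4),
    ("fb1", "comment", "Good coverage over Europe"),
    ("fb1", "citesPaper", "doi:10.1000/example"),  # added later, no schema edit
}

def values(graph, subject, predicate):
    """Return all objects for (subject, predicate); may be empty or multiple."""
    return [o for s, p, o in graph if s == subject and p == predicate]

# ...but the consumer must cope with missing or repeated properties.
print(values(triples, "fb1", "citesPaper"))
print(values(triples, "fb1", "usedInReport"))  # property absent: empty list
```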

Rather than create a new web portal to expose climate data commentary to users, CHARMe has developed a plug-in that is simple to include in existing data-access portals. The plug-in highlights to users the existence of commentary on their datasets of interest and allows them to make comments of their own. A third-party data provider is “CHARMe enabled” by integrating the JavaScript for the plug-in in their website. As shown in Fig. 1, CHARMe also provides a convenient way to share information from the data provider (e.g., dataset provenance, updates, or corrections) at the point of access.

CHARMe has been implemented as a client-server architecture. On the server side, there is currently a single repository (a CHARMe “node”) that stores all the annotation information and has interfaces to support many clients. Figure 4 shows the architecture of a CHARMe node, with the plug-in as an example of a client application. In most cases, the node does not store the target information itself (for instance, the actual dataset and conference paper in Fig. 3) but instead stores a link to the target; it is an important principle of Linked Data that these targets have persistent identifiers, such as a Digital Object Identifier (DOI) or persistent Uniform Resource Locator (pURL). This is critical not only for the CHARMe system to work but also for the wider adoption of data citation in general.
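The node stores only a link to each target. A client or node might therefore check that a target identifier looks persistent before accepting an annotation. The sketch below accepts DOIs, either bare or as `https://doi.org/...` URLs, and purl.org URLs. This acceptance rule is a hypothetical policy for illustration only; CHARMe may validate identifiers differently or not at all, and the DOI pattern is deliberately simplified.

```python
import re
from urllib.parse import urlparse

# Simplified DOI syntax: "10." + registrant code, "/", then a suffix.
DOI_PATTERN = re.compile(r"^10\.\d{4,9}/\S+$")

def is_persistent_identifier(identifier: str) -> bool:
    """Return True if `identifier` looks like a DOI or a purl.org URL."""
    if DOI_PATTERN.match(identifier):
        return True
    parsed = urlparse(identifier)
    if parsed.scheme in ("http", "https"):
        host = parsed.netloc.lower()
        if host in ("doi.org", "dx.doi.org"):
            return bool(DOI_PATTERN.match(parsed.path.lstrip("/")))
        if host in ("purl.org", "www.purl.org"):
            return parsed.path not in ("", "/")
    return False

for candidate in [
    "10.1175/BAMS-D-14-00189.1",
    "https://doi.org/10.1109/TGRS.2008.2003183",
    "http://purl.org/example/dataset",
    "http://example.org/some/page.html",
]:
    print(candidate, is_persistent_identifier(candidate))
```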

The philosophy underlying the technical implementation was to develop a generic piece of software that can be configured to work with different underlying “off-the-shelf” technologies, and for the information held in the CHARMe node to be accessible via a range of web service interfaces. For example, CHARMe provides an open, read-only web service endpoint (using the SPARQL protocol: www.w3.org/TR/rdf-sparql-query/) allowing potentially complex data mining and analysis for data scientists, as well as a simpler (but more limited) OpenSearch interface (www.opensearch.org/). The faceted search facility in the plug-in is effectively a graphical user interface for queries to this OpenSearch interface. As these interfaces are built on open standards, it is possible for third parties to build other applications that produce and consume CHARMe commentary, with the CHARMe information syndicated to multiple end-user applications.
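The read-only endpoint speaks the standard SPARQL protocol, so any HTTP client can query it. The sketch below only builds the GET request URL for a query that lists the annotations targeting a given dataset. The endpoint URL and dataset URI are hypothetical placeholders. The query also assumes that annotations use the Open Annotation `oa:hasTarget` and `oa:hasBody` properties.

```python
from urllib.parse import urlencode, urlparse, parse_qs

# Hypothetical endpoint address; a real CHARMe node publishes its own URL.
ENDPOINT = "https://charme-node.example.org/sparql"

def build_target_query(dataset_uri: str) -> str:
    """Return a SPARQL SELECT listing annotations (and bodies) on a dataset."""
    return (
        "PREFIX oa: <http://www.w3.org/ns/oa#>\n"
        "SELECT ?annotation ?body WHERE {\n"
        f"  ?annotation oa:hasTarget <{dataset_uri}> ;\n"
        "              oa:hasBody ?body .\n"
        "}"
    )

def sparql_get_url(endpoint: str, query: str) -> str:
    """Encode the query as the `query` parameter of a SPARQL protocol GET."""
    return endpoint + "?" + urlencode({"query": query})

query = build_target_query("http://example.org/datasets/cloud-fraction")
url = sparql_get_url(ENDPOINT, query)
print(url)
```

A client could then fetch this URL with any HTTP library, asking for a result format such as `application/sparql-results+json` in the `Accept` header.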

CHARMe uses the popular OAuth 2.0 framework (http://oauth.net/2/) to secure interactions between client programs and the node. The user authenticates and delegates permission to the client program to execute any secured operations on their behalf. All annotations submitted are publicly accessible in read-only mode; add, delete, and modify functions are secured and require login. Users have authority to modify or delete only the annotations that they themselves have submitted. In addition, there are two elevated sets of privileges, for “moderators” and “superusers,” which require registration with the node. Moderators are able to modify and delete any annotation originating from their client program(s), while superuser privileges can be granted to an overall administrator or administrators for the node. The client program is identified by an ID assigned by the node to the instance of the program (such as the plug-in) when it is deployed, and the moderator has oversight of content entered from their deployment of CHARMe. Superusers, in contrast, can modify or delete any annotation, from any source. This is provided as a second line of support for the moderation function used by individual clients. Example interfaces from a browser or the plug-in are pictured in Fig. 5.
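The permission rules described above can be summarized as a single check. The sketch assumes that each annotation records its creator and the ID of the client program that submitted it. It is a hypothetical illustration; the class and field names are not taken from the CHARMe code. Authentication itself, handled by OAuth 2.0 in CHARMe, is out of scope here.

```python
from dataclasses import dataclass, field

@dataclass
class User:
    name: str
    is_superuser: bool = False
    # IDs of client deployments this user moderates (empty for ordinary users).
    moderated_clients: set = field(default_factory=set)

@dataclass
class Annotation:
    creator: str
    client_id: str  # ID assigned by the node to the submitting client program

def can_modify(user: User, annotation: Annotation) -> bool:
    """Superusers may edit anything, moderators may edit annotations from
    their own client programs, and anyone may edit their own annotations."""
    if user.is_superuser:
        return True
    if annotation.client_id in user.moderated_clients:
        return True
    return user.name == annotation.creator

# Reading needs no check at all: every annotation is publicly readable.
ann = Annotation(creator="alice", client_id="cmsaf-plugin")
print(can_modify(User("alice"), ann))                                      # True
print(can_modify(User("bob"), ann))                                        # False
print(can_modify(User("carol", moderated_clients={"cmsaf-plugin"}), ann))  # True
print(can_modify(User("root", is_superuser=True), ann))                    # True
```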

As part of the CHARMe project, the European Centre for Medium-Range Weather Forecasts (ECMWF) has developed a web-based graphical tool for associating features in climate time-series data with commentary that indicates “significant events” that may have affected the data, such as volcanic eruptions or satellite instrument failures. This “Significant Events Viewer” has been developed to work with ECMWF’s reanalysis datasets and internal observation and events databases, but is designed to be general enough to be extended to other datasets and user needs. The viewer provides users with an opportunity to become more familiar with the variety of observations that feed into the reanalysis, and to determine whether the variability and features seen in the dataset are likely to be artifacts of the measurements or processing steps, or real changes in the environment. A registered CHARMe user can also add commentary to the significant event. Figure 6 shows an example of the Significant Events Viewer being used to explore ECMWF’s reanalysis datasets ERA-40 and ERA-Interim alongside the significant events timeline. The tool is publicly available, following a simple and free registration, at http://apps.ecmwf.int/significant-events/.
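A simple way to relate a time series to significant events is to list the events dated within the period a user is inspecting, optionally filtered by event type. The sketch below does this with hypothetical event records. It is not the ECMWF viewer's implementation, and the dates and descriptions are placeholders.

```python
from dataclasses import dataclass
from datetime import date

@dataclass
class Event:
    when: date
    kind: str         # e.g. "volcanic eruption", "instrument failure"
    description: str

# Hypothetical event records for illustration.
events = [
    Event(date(1991, 6, 15), "volcanic eruption", "Large eruption A"),
    Event(date(2003, 1, 10), "instrument failure", "Satellite sensor B offline"),
    Event(date(2010, 4, 1), "instrument failure", "Satellite sensor C drift"),
]

def events_in_window(events, start, end, kind=None):
    """Return events dated within [start, end], optionally of one kind,
    sorted in time so they can be drawn along a timeline."""
    selected = [
        e for e in events
        if start <= e.when <= end and (kind is None or e.kind == kind)
    ]
    return sorted(selected, key=lambda e: e.when)

for e in events_in_window(events, date(2000, 1, 1), date(2012, 12, 31),
                          kind="instrument failure"):
    print(e.when.isoformat(), e.description)
```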

To explore commentary in geographic space, CHARMe has developed a further prototype tool, “CHARMe Maps,” that demonstrates the use of commentary metadata in an interactive mapping interface. The tool has two main capabilities, which are illustrated in Figs. 7 and 8:

the ability to attach annotations to a specific subset of a dataset—for example, a particular geographic region (we refer to this as “fine-grained commentary”), and

the ability to compare two datasets both visually and by the commentary that has been attached to them (we refer to this as intercomparison).

The linking of annotations to a subset of a dataset requires a modification to the general data model in Fig. 3. Fortunately, this kind of capability was anticipated by the designers of the Open Annotation specification. The properties of the subset include a geographic extent (defined as a 2D geometry), a temporal extent (defined by start and end times), and a vertical extent (not used in this prototype). Additionally, the definition of a subset allows the user to specify exactly which variables within a dataset are considered to be part of the subset; in this way, the user can attach a comment to a very specific part of a multivariate, multidimensional climate dataset. Figure 9 illustrates this data model for fine-grained commentary.
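A subset of this kind can be modeled as a spatial region, a time interval and a set of variable names. The sketch below uses a longitude/latitude bounding box in place of a general 2D geometry and ignores the vertical extent. It checks whether a given point, time and variable fall inside the annotated subset. The class and field names are hypothetical and much simpler than the CHARMe SubsetSelector.

```python
from dataclasses import dataclass
from datetime import datetime

@dataclass(frozen=True)
class SubsetSelector:
    # Simplification: a lon/lat bounding box stands in for a general 2D
    # geometry; boxes crossing the antimeridian are not handled.
    min_lon: float
    min_lat: float
    max_lon: float
    max_lat: float
    start: datetime
    end: datetime
    variables: frozenset  # names of the variables the comment applies to

    def covers(self, lon: float, lat: float, when: datetime, variable: str) -> bool:
        """True if the point, time, and variable all fall inside this subset."""
        in_space = (self.min_lon <= lon <= self.max_lon
                    and self.min_lat <= lat <= self.max_lat)
        in_time = self.start <= when <= self.end
        return in_space and in_time and variable in self.variables

# Hypothetical comment region: North Atlantic, summer 2010, SST only.
selector = SubsetSelector(-60.0, 30.0, -10.0, 60.0,
                          datetime(2010, 6, 1), datetime(2010, 8, 31),
                          frozenset({"sea_surface_temperature"}))

print(selector.covers(-30.0, 45.0, datetime(2010, 7, 15), "sea_surface_temperature"))  # True
print(selector.covers(-30.0, 45.0, datetime(2010, 7, 15), "sst_uncertainty"))          # False
```

The second call returns False because the comment was attached only to the temperature variable. This mirrors Fig. 7, where the temperature field and its error field carry separate commentary.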

The CHARMe project has built a system to support the creation, modification, and archiving of comments linked to climate datasets and other targets. The success of the CHARMe tools in achieving the vision set out at the start of this article will depend not only on further technology development, but also on the cultivation of a community of users who will build up the web of annotations and links over time. Although the project focuses on climate science, the technologies and concepts are very general and could be applied to other fields.

Data providers can enable CHARMe functionality in their websites by installing the JavaScript plug-in. It is also possible for institutions to host their own CHARMe node to store annotation information, but there is as yet no capability to federate searches across multiple nodes, so this is more appropriate if an institution wishes to keep annotations internal rather than public.

The node provides a standard API from which it is hoped many different client applications could be developed, of which the Maps tool is just one example that demonstrates the possibilities. One potential reuse of CHARMe that is under consideration is integration into NASA’s Giovanni tool, a web-based tool designed to enable visual data exploration and comparison of data offered by the Earth Observing System Data and Information System (http://giovanni.gsfc.nasa.gov/giovanni/). In the most recent Giovanni architecture, service workflows are specified as URLs that encode the service request and a specification of the data subset to be visualized, comprising the data variables, spatial region, and temporal range. That is, the URL includes the same information to specify a data subset as the specification in the CHARMe Maps data model. As such, the open machine-accessible architecture would allow a fairly straightforward incorporation of the CHARMe Maps ability to support commentary on data subsets.
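A Giovanni-style request URL carries the variables, spatial region and time range. Translating it into a CHARMe-style subset is therefore mostly a matter of parsing query parameters. In the sketch below, the parameter names (`variables`, `bbox`, `starttime`, `endtime`) and the host are hypothetical stand-ins, not Giovanni's documented interface.

```python
from datetime import datetime
from urllib.parse import urlparse, parse_qs

def url_to_subset(url: str) -> dict:
    """Extract variables, bounding box, and time range from a request URL.

    Assumes hypothetical query parameters:
      variables=a,b   bbox=west,south,east,north   starttime=/endtime= ISO 8601
    """
    params = parse_qs(urlparse(url).query)
    west, south, east, north = (float(v) for v in params["bbox"][0].split(","))
    return {
        "variables": params["variables"][0].split(","),
        "bbox": (west, south, east, north),
        "start": datetime.fromisoformat(params["starttime"][0]),
        "end": datetime.fromisoformat(params["endtime"][0]),
    }

example = ("https://giovanni.example.org/service?variables=AOD,SST"
           "&bbox=-10,30,40,60&starttime=2010-06-01T00:00:00"
           "&endtime=2010-08-31T23:59:59")
subset = url_to_subset(example)
print(subset)
```

The resulting dictionary holds the same three ingredients (variables, region, time range) as the fine-grained commentary data model. This is why the two could be connected without much glue.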

Another NASA system that could explore CHARMe integration is the related Regional Climate Model Evaluation System (RCMES; https://rcmes.jpl.nasa.gov/). RCMES is both a database for observations and an analytical toolkit allowing regridding, metrics computation, and visualization demonstrating comparisons of observations and climate model outputs. We envision an augmented RCMES in which users leverage CHARMe to explain the results of their model evaluations and to record science workbench notes that are not currently captured in the RCMES tool. There is also strong interest in using CHARMe in the Earth System CoG collaboration environment (www.earthsystemcog.org/). CoG provides a search interface to the Earth System Grid Federation climate data archive, which houses climate model output and other widely used datasets, along with wikis, forums, and other tools for distributed discussion and analysis. Here, CHARMe could be integrated into the data search as a way to build an online knowledge base available at the point of download.

A further need for development is around moderation tools and policy. It does not appear that the social media world has solved the issue of controversial annotations. The main risk to a CHARMe annotation is not so much the controversy it sparks, as the possibility that the debate becomes irrelevant to the initial annotation and overwhelms substantive commenting, with the value of the commentary lost in the noise. This risk is likely relatively small in the beginning while the community is also small, but can become problematic as the community grows. We are currently surveying social media implementations and literature for promising approaches—such as upvoting/downvoting, reputation scoring, and sort/group mechanisms—to mitigate this risk.
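The sketch below illustrates one of the ranking approaches mentioned above. It is not something CHARMe implements. Comments are sorted by the lower bound of the Wilson score confidence interval on their fraction of up-votes. With this ordering, a comment with a single up-vote does not outrank one with many consistently positive votes. The vote counts are made up.

```python
import math

def wilson_lower_bound(upvotes: int, downvotes: int, z: float = 1.96) -> float:
    """Lower bound of the Wilson score interval for the fraction of up-votes.

    z = 1.96 corresponds to roughly 95% confidence. Returns 0 with no votes.
    """
    n = upvotes + downvotes
    if n == 0:
        return 0.0
    p = upvotes / n
    centre = p + z * z / (2 * n)
    margin = z * math.sqrt((p * (1 - p) + z * z / (4 * n)) / n)
    return (centre - margin) / (1 + z * z / n)

# Hypothetical comments with (upvotes, downvotes).
comments = {"c1": (1, 0), "c2": (40, 5), "c3": (10, 10)}
ranked = sorted(comments, key=lambda c: wilson_lower_bound(*comments[c]), reverse=True)
print(ranked)
```

Compared with sorting by the raw up-vote fraction, this penalizes comments with very few votes. The community-size concern above suggests this property matters.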

The CHARMe code and user manuals are available at https://github.com/charme-project. The CHARMe system software is open-source, released under a BSD license, permitting future projects to reuse the source code as they wish. The Maps prototype is not currently accessible as an operational tool, but we would be happy to collaborate with anyone wishing to develop this capability in their own system.

ACKNOWLEDGMENTS.

The CHARMe project was coordinated by the University of Reading, and project partners were Airbus Defence and Space, CGI, Deutscher Wetterdienst (DWD), the European Centre for Medium-Range Weather Forecasts, the Royal Netherlands Meteorological Institute (KNMI), the Science and Technology Facilities Council, Terraspatium, and the UK Met Office. The project received funding from the European Union’s Seventh Framework Programme for research, technological development, and demonstration under Grant Agreement 312541.

FOR FURTHER READING


CMIP6 climate data extraction and treatment by multi-polygon shapefile using ESGF NetCDF and WORLDCLIM datasets

Hydroenvironment/CMIP6-WORLDCLIM-HANDLING


CMIP6-WORLDCLIM-HANDLING

🌏 DAILY AND MONTHLY DATA TREATMENT FROM GENERAL CIRCULATION MODELS (GCMs) BY CMIP6 PHASE

✅The 2013 IPCC Fifth Assessment Report (AR5) featured climate models from CMIP5, while the upcoming 2021 IPCC Sixth Assessment Report (AR6) will feature new state-of-the-art CMIP6 models.

✅CMIP6 will consist of the “runs” from around 100 distinct climate models being produced across 49 different modelling groups. While the results from only around 40 CMIP6 models have been published so far, it is already evident that a number of them have a notably higher climate sensitivity than models in CMIP5.

✅The overview paper on the CMIP6 experimental design and organization has now been published in GMD (Eyring et al., 2016). This CMIP6 overview paper presents the background and rationale for the new structure of CMIP, provides a detailed description of the CMIP Diagnostic, Evaluation and Characterization of Klima (DECK) experiments and CMIP6 historical simulations, and includes a brief introduction to the 23 CMIP6-Endorsed MIPs.

✅The IPCC AR5 featured four Representative Concentration Pathways (RCPs) that examined different possible future greenhouse gas emissions. These scenarios – RCP2.6, RCP4.5, RCP6.0, and RCP8.5 – have new versions in CMIP6. These updated scenarios are called SSP1-2.6, SSP2-4.5, SSP4-6.0, and SSP5-8.5, each of which results in a 2100 radiative forcing level similar to that of its AR5 predecessor.
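The scenario labels above encode their forcing target. As a hedged sketch, assuming the convention that the number after the hyphen is the nominal 2100 radiative forcing in W/m², the helper below maps each of the four listed SSP scenarios to the AR5 RCP with the same level. The regex and `rcp_predecessor` are illustrative and not part of this repository's code; other SSP scenarios (for example SSP3-7.0) are rejected because no AR5 RCP shares their forcing level.

```python
import re

# SSP labels such as "SSP2-4.5": pathway number, a hyphen, then the nominal
# radiative forcing (W/m^2) reached by 2100.
SSP_LABEL = re.compile(r"^SSP(?P<pathway>[1-5])-(?P<forcing>\d+\.\d)$")

# Forcing levels shared with the four AR5 RCPs.
RCP_FORCINGS = {"2.6", "4.5", "6.0", "8.5"}


def rcp_predecessor(ssp_label: str) -> str:
    """Return the AR5 RCP whose 2100 forcing level matches the SSP scenario."""
    match = SSP_LABEL.match(ssp_label)
    if match is None:
        raise ValueError(f"not an SSP scenario label: {ssp_label!r}")
    forcing = match.group("forcing")
    if forcing not in RCP_FORCINGS:
        raise ValueError(f"{ssp_label} has no AR5 RCP counterpart")
    return f"RCP{forcing}"


for label in ["SSP1-2.6", "SSP2-4.5", "SSP4-6.0", "SSP5-8.5"]:
    print(label, "->", rcp_predecessor(label))
```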

A brief summary can be found in the following overview presentation: https://www.wcrp-climate.org/images/modelling/WGCM/CMIP/CMIP6FinalDesign_GMD_180329.pdf

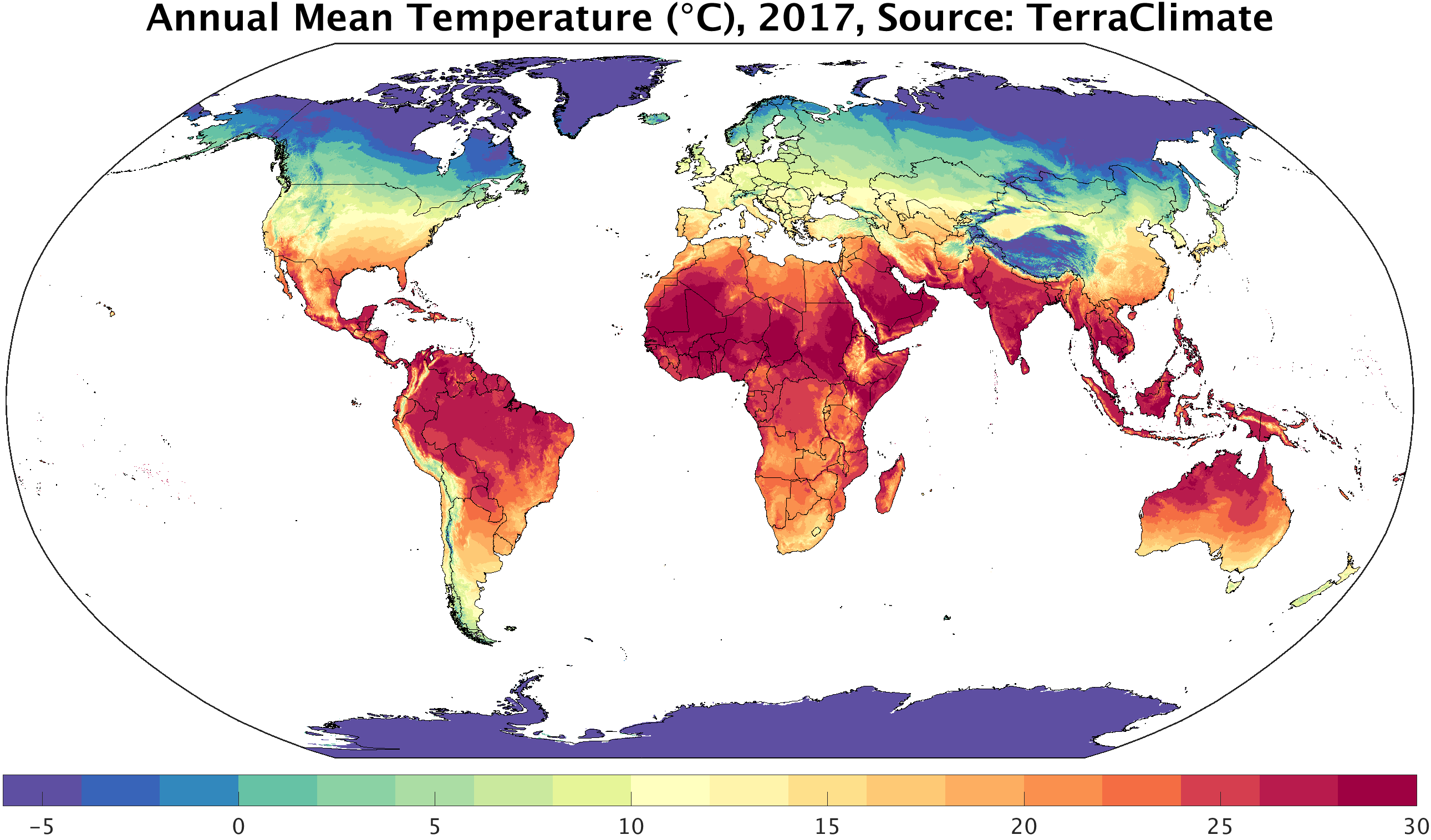

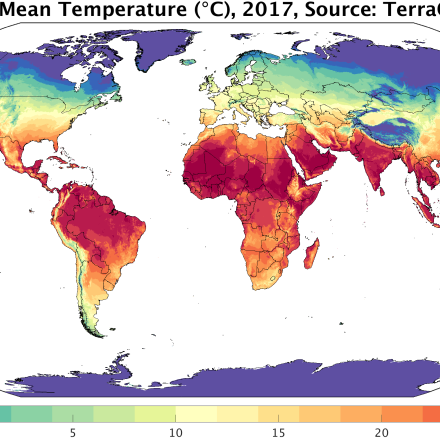

Data Snapshots

An up-to-date archive of freely available climate maps, ready to use in websites, presentations, or broadcasts. Click on the thumbnails below to access image galleries.

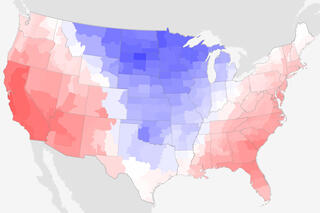

Temperature

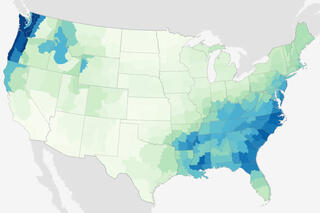

Precipitation

Projections

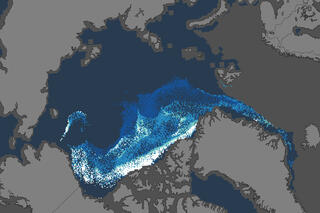

Ice & Snow

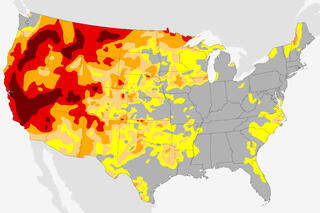

Severe Weather


Big Data Challenges in Climate Science

John L. Schnase

NASA Goddard Space Flight Center, Greenbelt, MD 20771 USA

Tsengdar J. Lee

NASA Headquarters, Washington, DC 20546 USA

Chris A. Mattmann

NASA Jet Propulsion Laboratory, Pasadena, CA 91109 USA

Christopher S. Lynnes

Luca Cinquini, Paul M. Ramirez, Andre F. Hart, Dean N. Williams

Lawrence Livermore National Laboratory, Livermore, CA 94550 USA

Duane Waliser

Pamela Rinsland

NASA Langley Research Center, Hampton, VA 23681 USA

W. Philip Webster

Daniel Q. Duffy, Mark A. McInerney, Glenn S. Tamkin, Gerald L. Potter, Laura Carrier

The knowledge we gain from research in climate science depends on the generation, dissemination, and analysis of high-quality data. This work comprises technical practice as well as social practice, both of which are distinguished by their massive scale and global reach. As a result, the amount of data involved in climate research is growing at an unprecedented rate. Climate model intercomparison (CMIP) experiments, the integration of observational data and climate reanalysis data with climate model outputs, as seen in the Obs4MIPs, Ana4MIPs, and CREATE-IP activities, and the collaborative work of the Intergovernmental Panel on Climate Change (IPCC) provide examples of the types of activities that increasingly require an improved cyberinfrastructure for dealing with large amounts of critical scientific data. This paper provides an overview of some of climate science’s big data problems and the technical solutions being developed to advance data publication, climate analytics as a service, and interoperability within the Earth System Grid Federation (ESGF), the primary cyberinfrastructure currently supporting global climate research activities.

I. Introduction

The term “big data” is used to describe data sets that are too large or complex to be worked with using commonly available tools [ 1 ]. Climate science represents a big data domain that is experiencing unprecedented growth [ 2 ]. Some of the major big data technical challenges facing climate science are easy to understand: large repositories mean that the data sets themselves cannot easily be moved, so analytical operations must migrate to where the data reside; complex analyses over large repositories require high-performance computing; large amounts of information increase the importance of metadata, provenance management, and discovery; migrating codes and analytic products within a growing network of storage and computational resources creates a need for fast networks, intermediation, and resource balancing; and, importantly, the ability to respond quickly to customer demands for new and often unanticipated uses for climate data requires greater agility in building and deploying applications [ 3 ].

In addressing these challenges, it is important to recognize that the work of climate science comprises social practice as well as technical practice [ 4 , 5 ]. There are established human processes for creating, sharing, and analyzing scientific data sets, often in a highly collaborative mode. The work is both valued by society and subject to intense critical scrutiny. It informs national and international policy decisions. Collectively, these social factors add urgency and complexity to our efforts to build an effective cyberinfrastructure to support climate science.

This paper provides an overview of some of climate science’s big data problems and the technical solutions being developed to improve data publication, analysis, and accessibility. This material combines the contributions of those who participated in the 2014 Big Data From Space Conference (BiDS ’14) session titled “Big Data Challenges in Climate Science” [ 6 – 8 ]. We use the work being done by the Intergovernmental Panel on Climate Change as the context for our presentation, with particular focus on the global climate research community’s Earth System Grid Federation collaborative infrastructure and the community’s Climate Model Intercomparison efforts.

II. Background

Our understanding of the Earth’s processes is based on a combination of observational data records and mathematical models. The size of our space-based observational data sets is growing dramatically as new missions come online. However, a potentially bigger data challenge is posed by the work of climate scientists, whose models are producing data sets of hundreds of terabytes or more [ 9 ].

There are two major challenges posed by the data-intensive nature of climate science. The first is the need to provide effective means for publishing large-scale scientific data collections. This capability is the foundation upon which a variety of data services can be provided, from supporting active research to large-scale data federation, data distribution, and archival storage.

The second data-intensive challenge concerns how these large data sets are used: data analytics, the capacity to perform useful scientific analyses over large quantities of data in reasonable amounts of time. In many respects this is the biggest challenge, for without effective means for transforming large scientific data collections into meaningful scientific knowledge, our climate science mission fails.

In order to gain a perspective on the big data challenges in climate science and the efforts that are underway to address those challenges, it is helpful to examine four elements operating at the core of global-scale climate research: (1) the Intergovernmental Panel on Climate Change, which has responsibility for integrating scientific results and presenting them in meaningful ways to policy makers throughout the world; (2) climate model intercomparison experiments that coordinate research on general circulation models, arguably the most important tools available to scientists who study the climate; (3) the Earth System Grid Federation, which provides the distributed infrastructure for publishing climate model outputs, sharing scientific knowledge, and supporting global-scale collaboration; and (4) a new wave of data publication activities aimed at integrating observational data and reanalysis data into the Earth System Grid Federation. In this section, we take a closer look at each of these elements.

A. Intergovernmental Panel on Climate Change

The Intergovernmental Panel on Climate Change (IPCC) is the leading international body for the assessment of climate change [ 10 ]. It was established by the United Nations Environment Programme (UNEP) and the World Meteorological Organization (WMO) in 1988 to provide the world with a clear scientific view on the current state of knowledge about climate change and its potential environmental and socio-economic impacts.

The IPCC is open to all member countries of the UN and WMO. Currently 195 countries are members of the IPCC. Governments participate in the review process and the plenary sessions, where main decisions about the IPCC work program are taken and reports are accepted, adopted, and approved. Thousands of scientists from all over the world contribute to the work of the IPCC on a voluntary basis. Review is an essential part of the IPCC process, to ensure an objective and complete assessment of current information. IPCC aims to reflect a range of views and expertise. The IPCC Secretariat coordinates all the IPCC work and liaises with Governments.

Because of its scientific and intergovernmental nature, the IPCC embodies a unique opportunity to provide rigorous and balanced scientific information to decision makers. By endorsing the IPCC reports, governments acknowledge the authority of their scientific content. The work of the organization is therefore policy-relevant and yet policy-neutral, never policy-prescriptive.

B. Climate Model Intercomparison

Climate model intercomparison is one of the most important lines of research contributing to our understanding of the climate, and it plays a significant role in the work of the IPCC [ 11 , 12 ]. The World Climate Research Programme’s (WCRP) Working Group on Coupled Modelling (WGCM) established the Coupled Model Intercomparison Project (CMIP) as a standard experimental protocol for studying the output of coupled atmosphere-ocean general circulation models (GCMs). CMIP provides a community-based infrastructure in support of climate model diagnosis, validation, intercomparison, documentation, and data access. This framework enables a diverse community of scientists to analyze GCMs in a systematic fashion, a process that serves to facilitate model improvement. Virtually the entire international climate modeling community has participated in this project since its inception in 1995. The Program for Climate Model Diagnosis and Intercomparison (PCMDI) archives much of the CMIP data and is one of a number of international climate data repositories that provide support for CMIP. PCMDI’s CMIP effort is funded by the Regional and Global Climate Modeling (RGCM) Program of the Climate and Environmental Sciences Division of the US Department of Energy Office of Science’s Biological and Environmental Research (BER) program.

Coupled atmosphere-ocean general circulation models allow the simulated climate to adjust to changes in climate forcing, such as increasing atmospheric carbon dioxide. CMIP began in 1995 by collecting output from model “control runs” in which climate forcing is held constant. Later versions of CMIP collected output from an idealized scenario of global warming, with atmospheric CO2 increasing at the rate of 1% per year until it doubles at about Year 70. CMIP output is available for study by diagnostic sub-projects, academic users, and the public.
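The “about Year 70” figure follows from compound growth: at 1% per year, the doubling time is ln 2 / ln 1.01. A minimal check, where `doubling_time` is simply an illustrative helper name:

```python
import math


def doubling_time(rate: float) -> float:
    """Years for a quantity growing at a constant compound annual rate to double."""
    return math.log(2) / math.log(1 + rate)


print(round(doubling_time(0.01), 1))  # 69.7
print(round(1.01 ** 70, 3))           # 2.007, i.e. CO2 is about twice its initial value
```

The concentration first exceeds twice its starting value between years 69 and 70, which is why the idealized 1%-per-year experiment is described as reaching doubling at about year 70.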

Climate model intercomparison has proven to be an effective method both to improve climate models in general and to provide the basis for preparing ensembles to improve climate prediction. In the past, preparation of the data for such activities was the responsibility of the individual researcher. Recently, however, large international collaborative projects such as CMIP3 and CMIP5 have agreed to share model output through the Earth System Grid Federation.

C. Earth System Grid Federation

The climate research community uses the Earth System Grid Federation (ESGF) as the primary mechanism for publishing and sharing IPCC data as well as the ancillary observational and reanalysis products described below [ 13 , 14 ]. ESGF is an international collaboration with a focus on serving the coupled model intercomparison projects and supporting climate and environmental science in general. The ESGF grew out of the larger Global Organization for Earth System Science Portals (GO-ESSP) community and reflects a broad array of contributions from its collaborating partners.

ESGF combines features found in a variety of grid computing approaches. ESGF is a peer-to-peer content distribution network in which geographically distributed collections can be accessed by the climate research community through a certificate authority mechanism. Published ESGF data, regardless of source, conforms to the community-defined CMIP5 Data Reference Syntax and Controlled Vocabularies standard. The trust relationship set up by the authority mechanism essentially creates a virtual organization of producers and consumers of ESGF products.
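The CMIP5 Data Reference Syntax also fixes how individual files are named, which is what lets tools recover a file's facets without opening it. As a minimal sketch, assuming the simplified filename template in the code comment (the optional geographical-subset suffix and the directory-level DRS components are ignored), a filename can be split back into its parts. The example filename is illustrative, and `DrsFilename` and `parse_drs_filename` are hypothetical names, not part of the ESGF publisher.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Simplified CMIP5 DRS filename template:
#   <variable>_<MIP table>_<model>_<experiment>_<ensemble member>[_<temporal subset>].nc
DRS_FILENAME = re.compile(
    r"^(?P<variable>[^_]+)_(?P<table>[^_]+)_(?P<model>[^_]+)_"
    r"(?P<experiment>[^_]+)_(?P<ensemble>r\d+i\d+p\d+)"
    r"(?:_(?P<period>\d+-\d+))?\.nc$"
)


@dataclass
class DrsFilename:
    variable: str
    table: str
    model: str
    experiment: str
    ensemble: str
    period: Optional[str]  # None for time-independent (e.g. fx) files


def parse_drs_filename(name: str) -> DrsFilename:
    """Split a DRS-style filename into its facets, or raise ValueError."""
    match = DRS_FILENAME.match(name)
    if match is None:
        raise ValueError(f"does not follow the CMIP5 DRS filename template: {name}")
    return DrsFilename(**match.groupdict())


info = parse_drs_filename("tas_Amon_HadGEM2-ES_rcp45_r1i1p1_200512-203011.nc")
print(info.model, info.experiment, info.period)  # HadGEM2-ES rcp45 200512-203011
```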

Reformatting the model output to a common standard and distributing the data through a common portal has proven to be an innovative approach, giving thousands of additional researchers access to data previously limited to a much more technically sophisticated audience [ 6 , 7 ]. For example, IPCC Working Group Two, which focused on climate change impacts, adaptation, and vulnerability, and Working Group Three, which dealt with the mitigation of climate change, made extensive use of the CMIP3 and CMIP5 archives in the preparation of recent IPCC Assessment Reports. This approach to data distribution has proven so successful that other climate-related projects have emerged to provide CMIP-relevant observations and reanalysis. More than 1300 scientific papers have been written using these data. Distributing satellite observations and reanalysis products for use by the climate research community is the next step.

D. Obs4MIPs, Ana4MIPs, and CREATE-IP

Observations tailored for use by the climate science community have long been a dream of many climate modeling scientists and their graduate students [ 15 ]. When science teams associated with Earth observation missions, such as the Earth Radiation Budget Experiment (ERBE), produced new Level 3 products in the 1980s, it was a challenge for climate researchers to customize the data so that they could be used to validate the models’ top-of-atmosphere (TOA) energy balance and cloud radiative properties. Once they mastered the format, each scientist obtained their own copy of the data and used it for model evaluation, a process that individual scientists still repeat over and over today. As the processing of satellite data became more sophisticated, accessing the data became more onerous because of the proliferation of versions, levels of processing, and other features. As a result, the IPCC’s Third Assessment Report, released in 2001, devoted only minimal discussion to model validation using observations.

By 2013, IPCC’s Fifth Assessment Report included more extensive use of observational data, facilitated in part by the efforts to make satellite data more accessible in the intervening years. This was accompanied by a growing interest in the use of reanalysis data, another application of observational data of particular value to climate monitoring and research. Reanalyses assimilate historical observational data spanning an extended period of time using a single, constant assimilation scheme. They ingest all available observational data every 6–12 hours over the period being analyzed and produce a dynamically consistent estimate of the climate state at each time interval. Reanalysis data sets can span decades, going as far back as the beginning of the satellite era [ 2 ].
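To illustrate what cycling “a single, constant assimilation scheme” means, here is a toy scalar sketch. It swaps in textbook optimal interpolation with fixed error variances and a persistence forecast; operational reanalyses use far more elaborate variational or ensemble schemes on full model states. All names, variances, and observation values are made up for illustration.

```python
from typing import Callable, List


def analysis_update(background: float, observation: float,
                    var_b: float, var_o: float) -> float:
    """Blend a forecast (background) with an observation, weighting each by
    the inverse of its error variance (scalar optimal interpolation)."""
    gain = var_b / (var_b + var_o)
    return background + gain * (observation - background)


def run_reanalysis(initial: float, observations: List[float],
                   forecast: Callable[[float], float],
                   var_b: float = 1.0, var_o: float = 1.0) -> List[float]:
    """Cycle one fixed scheme through a time-ordered series of observations.

    Each cycle (e.g. every 6 hours) forecasts from the previous analysis and
    then corrects that forecast with the observation valid at the same time.
    Because the forecast model, gain, and error variances never change, changes
    in the analyses reflect the observations rather than changes to the scheme.
    """
    state = initial
    analyses = []
    for obs in observations:
        background = forecast(state)
        state = analysis_update(background, obs, var_b, var_o)
        analyses.append(state)
    return analyses


# Toy run: persistence forecast and three made-up 6-hourly observations.
print(run_reanalysis(10.0, [12.0, 12.0, 14.0], forecast=lambda x: x))
# [11.0, 11.5, 12.75]
```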

Because of this growing need to use observations in the IPCC process, the Observations for Model Intercomparison Projects (Obs4MIPs), Analysis for Model Intercomparison Projects (Ana4MIPs), and the Collaborative REAnalysis Technical Environment-Intercomparison Project (CREATE-IP) [ 7 ] have been created to provide a new way to distribute observational data and reanalyses for use by climate scientists. The objective of these projects is to prepare observational data (currently mostly satellite data) and selected reanalysis products in the same way as the CMIP model data have been reformatted and tagged for inclusion into ESGF. The preparation involves ensuring that the data files are in NetCDF (https://www.unidata.ucar.edu/software/netcdf/docs/) format and that the data adhere to the Climate and Forecast (CF) metadata conventions, in addition to other formatting procedures agreed upon by the World Climate Research Programme (WCRP) Working Group on Coupled Modelling (WGCM). To aid with these formatting procedures, a software utility, the Climate Model Output Rewriter (CMOR), is available that ensures adherence to the standard formatting. Software is also available to display and analyze the data in 2D and 3D.
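To make the CF requirement more concrete, the sketch below applies a deliberately simplified subset of CF-style rules. It is not CMOR and not a full CF checker: it represents file metadata as plain dictionaries instead of reading a NetCDF file, and it checks only for a global `Conventions` attribute naming a CF version, a `units` attribute on every variable (CF requires units for dimensional quantities; requiring them everywhere is a simplification), and at least one of `standard_name` or `long_name` (which CF strongly recommends). `check_cf_basics` is a hypothetical name.

```python
from typing import Dict, List


def check_cf_basics(global_attrs: Dict[str, str],
                    variables: Dict[str, Dict[str, str]]) -> List[str]:
    """Return the problems found by a few simplified CF-style checks."""
    problems = []
    if not global_attrs.get("Conventions", "").startswith("CF-"):
        problems.append("global attribute 'Conventions' should name a CF version")
    for name, attrs in variables.items():
        if "units" not in attrs:
            problems.append(f"{name}: missing 'units'")
        if "standard_name" not in attrs and "long_name" not in attrs:
            problems.append(f"{name}: needs 'standard_name' or 'long_name'")
    return problems


header = {"Conventions": "CF-1.4"}
variables = {
    "tas": {"standard_name": "air_temperature", "units": "K"},
    "clt": {"long_name": "Total Cloud Fraction"},  # units deliberately omitted
}
print(check_cf_basics(header, variables))  # ["clt: missing 'units'"]
```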

Data entered into the projects must have a history of peer-reviewed publications, be version controlled, and reside in a long-term archive. For example, a WCRP Data Advisory Council (WDAC) Obs4MIPs task team has been established to govern the data inclusion process. For inclusion into the Obs4MIPs archive, a data producer submits a proposal to the WDAC task team with the detailed information required above. The first step in preparation of the data is generally done in consultation with the individual science teams, who identify specifics about the data, including the appropriate processing version, citations, and other details. Documentation and error estimates are also required.

Table 1 shows a current list of the observational data products available through ESGF. Because of the strict NetCDF file format and CF-compliance requirements, one limitation that is still being resolved is the desire of some climate modeling researchers to include data that have no corresponding variable in the CMIP archive but are of significant value to the climate research community. For instance, the Moderate Resolution Imaging Spectroradiometer (MODIS) produces several dozen products, yet only a few variables have a corresponding CMIP variable and are thus eligible for publication under the present guidelines. Another limitation is the restricted ability to include uncertainty information in the Obs4MIPs-formatted files.

Table 1. Obs4MIPs and CREATE-IP Variables Available in ESGF

| Obs4MIPs | |
|---|---|
| Air Temperature Standard Error | Northward Wind |
| Ambient Aerosol Optical Thickness at 550 nm | Number of CloudSat Profiles Contributing to the Calculation |
| Ambient Aerosol Optical Thickness at 550 nm Observations | Number of MISR Samples |
| Ambient Aerosol Optical Thickness at 550 nm Standard Deviation | PARASOL Reflectance |
| CALIPSO 3D Clear fraction | Precipitation - monthly and 3h |
| CALIPSO 3D Undefined fraction | Precipitation Standard Error |
| CALIPSO Clear Cloud Fraction | Sea Surface Height Above Geoid |
| CALIPSO Cloud Fraction | Sea Surface Height Above Geoid Observations |
| CALIPSO High Level Cloud Fraction | Sea Surface Height Above Geoid Standard Error |
| CALIPSO Low Level Cloud Fraction | Sea Surface Temperature |
| CALIPSO Mid Level Cloud Fraction | Sea Surface Temperature Number of Observations |
| CALIPSO Scattering Ratio | Sea Surface Temperature Standard Error |
| CALIPSO Total Cloud Fraction | Specific Humidity |
| Cloud Fraction retrieved by MISR | Specific Humidity Number of Observations |
| CloudSat 94 GHz radar Total Cloud Fraction | Specific Humidity Standard Error |
| CloudSat Radar Reflectivity CFAD | Surface Downwelling Clear-Sky Longwave Radiation |
| Eastward Near-Surface Wind | Surface Downwelling Clear-Sky Shortwave Radiation |
| Eastward Near-Surface Wind Number of Observations | Surface Downwelling Longwave Radiation |
| Eastward Near-Surface Wind Standard Error | Surface Downwelling Shortwave Radiation |
| Eastward Wind | Surface Upwelling Clear-Sky Shortwave Radiation |
| Fraction of Absorbed Photosynthetically Active Radiation | Surface Upwelling Longwave Radiation |
| ISCCP Cloud Area Fraction | Surface Upwelling Shortwave Radiation |
| ISCCP Mean Cloud Albedo (day) | TOA Incident Shortwave Radiation |
| ISCCP Mean Cloud Top Pressure (day) | TOA Outgoing Clear-Sky Longwave Radiation |
| ISCCP Mean Cloud Top Temperature (day) | TOA Outgoing Clear-Sky Shortwave Radiation |
| ISCCP Total Cloud Fraction (daytime only) | TOA Outgoing Longwave Radiation |
| Leaf Area Index | TOA Outgoing Shortwave Radiation |
| Mole Fraction of O3 | Total Cloud Fraction |
| Mole Fraction of O3 Number of Observations | Total Cloud Fraction Number of Observations |
| Mole Fraction of O3 Standard Error | Total Cloud Fraction Standard Deviation |
| Near-Surface Wind Speed | Water Vapor Path |
| Near-Surface Wind Speed Number of Observations | Sea Surface Temperature |
| Near-Surface Wind Speed Standard Error | Solar Zenith Angle |

| CREATE-IP | |
|---|---|
| Air Temperature | Specific Humidity |
| Condensed Water Path | Surface Air Pressure |
| Convective Precipitation | Surface Downward Eastward Wind Stress |
| Eastward Near-Surface Wind | Surface Downward Northward Wind Stress |
| Eastward Wind | Surface Downwelling Longwave Radiation |
| Evaporation | Surface Downwelling Shortwave Radiation |
| Geopotential Height | Surface Temperature |
| Ice Water Path | Surface Upward Latent Heat Flux |
| Near-Surface Air Temperature | Surface Upward Sensible Heat Flux |
| Near-Surface Wind Speed | Surface Upwelling Longwave Radiation |
| Northward Near-Surface Wind | Surface Upwelling Shortwave Radiation |
| Northward Wind | TOA Incident Shortwave Radiation |
| Precipitation | TOA Outgoing Clear-Sky Longwave Radiation |
| Relative Humidity | TOA Outgoing Longwave Radiation |
| Sea Level Pressure | Total Cloud Fraction |
| Snowfall Flux | Water Vapor Path |
| Omega (=dp/dt) | |

Reanalysis data are extremely useful for many issues relating to climate models [ 16 , 17 ]. The Ana4MIPs effort focuses on providing a select set of reanalysis variables to climate model intercomparison efforts. This project provides only variables that match the CMIP5 protocol and are of particular use to researchers who need reanalysis data as a baseline for model and model ensemble evaluation. It has become apparent, however, that there is strong interest in making a more expansive set of atmospheric reanalysis data available to the community via the ESGF. In response, NASA has initiated the CREATE-IP project. CREATE-IP includes reanalysis products from the European Centre for Medium-Range Weather Forecasts (ECMWF), the National Oceanic and Atmospheric Administration (NOAA)/National Centers for Environmental Prediction (NCEP), the NOAA/Earth System Research Laboratory (ESRL), NASA, and the Japan Meteorological Agency (JMA). Each reanalysis has been repackaged in a form similar to the CMIP and Obs4MIPs projects. Table 1 shows the current CREATE-IP variables.

III. Next Generation Cyberinfrastructure for Climate Data Publication

Because of the fundamental importance of high-quality, readily accessible data, an effective cyberinfrastructure for climate science requires improved ways to generate and disseminate data. Institutions that host ESGF servers are responsible for correctly formatting and registering their data contributions. IPCC data are produced in forms that are directly compatible with the ESGF CMIP5 standard. As described above, data products from other sources, such as Obs4MIPs, generally require reformatting. This alignment, moving from the frame of reference defined by the observational community to the one used by the climate community, is often a mixed process of automatic and manual conversion and contributes significantly to the data preparation overhead of the Obs4MIPs activities. It is at the heart of the Obs4MIPs, Ana4MIPs, and CREATE-IP data challenge [ 18 ].

Efforts are underway to develop a cyberinfrastructure that overcomes these challenges [ 6 ]. The new capabilities will provide automatic conversion of NASA HDF-EOS/HDF datasets into NetCDF/CF datasets compatible with the ESGF, the ability to perform model checking on those converted datasets using the Climate Model Output Rewriter, and the ability to automatically publish remote sensing data into the ESGF.

We are working with three NASA Distributed Active Archive Centers (DAACs) to identify requirements for the various ad hoc data publication pipelines used in the Obs4MIPs projects and then standardize them into a toolkit. The publication infrastructure is now part of a core project called Open Climate Workbench (OCW) [ 19 ] stewarded at the Apache Software Foundation (ASF), the world’s largest open source organization and home to some of the Web’s most widely used software systems. For example, its flagship HTTPD web server serves 53% of the Web requests on the Internet.

A. Architecture

A notional architecture for a next-generation publishing cyberinfrastructure is shown in Fig. 1. As originally conceived, remote sensing data would enter the system from the bottom left of the figure. Remote sensing data used for comparison with climate models are generally gridded, though the system could also handle swath data through its transformation process, as described below.

The NASA ESGF cyberinfrastructure shown (upper left) is responsible for publishing remote sensing datasets to the ESGF portal (upper right). Automated data generation and dissemination workflows substantially improve the efficiency and accuracy of the data publication process.

In an initial step (Fig. 1, Step 1), the architecture would leverage a technology such as OPeNDAP (http://www.opendap.org/) to access and subset the data. This provides input to the next step (Fig. 1, Step 2), where data wrappers encapsulate the mission-specific transformations needed to yield a variable (e.g., sea ice), along with its latitude and longitude in WGS84 format, time in ISO 8601 format, and an optional height value. This five-tuple of (variable value, latitude, longitude, time, height) would then be passed to a regridding step (Fig. 1, Step 3), where the data would be spatially and temporally aligned with the desired climate model output and written to a NetCDF/CF-compliant file with the necessary metadata (Fig. 1, Step 4). Finally, the data would be validated using the Climate Model Output Rewriter (Fig. 1, Step 5) and published to the ESGF (Fig. 1, Step 6).
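Steps 2 and 3 can be sketched as a five-tuple record plus an aggregation onto a regular grid. This is a hedged sketch, not the project's implementation: it swaps in simple bin-averaging onto a regular latitude/longitude grid by calendar month, whereas real alignment would target the specific grid of the model output and might use bilinear or conservative interpolation. `Sample` and `regrid_monthly` are hypothetical names, the optional height is carried but ignored, and timestamps are assumed to be ISO 8601 strings without a trailing `Z` (which `datetime.fromisoformat` rejects before Python 3.11).

```python
import math
from collections import defaultdict
from datetime import datetime
from typing import Dict, Iterable, NamedTuple, Optional, Tuple


class Sample(NamedTuple):
    """The five-tuple produced by a data wrapper."""
    value: float
    lat: float                      # degrees north, WGS84
    lon: float                      # degrees east, WGS84
    time: str                       # ISO 8601, e.g. "2005-01-15T12:00:00"
    height: Optional[float] = None  # carried through but ignored below


def regrid_monthly(samples: Iterable[Sample], cell_deg: float = 1.0
                   ) -> Dict[Tuple[str, int, int], float]:
    """Average samples into (YYYY-MM, lat-cell index, lon-cell index) bins."""
    sums: Dict[Tuple[str, int, int], float] = defaultdict(float)
    counts: Dict[Tuple[str, int, int], int] = defaultdict(int)
    for s in samples:
        month = datetime.fromisoformat(s.time).strftime("%Y-%m")
        key = (month, math.floor(s.lat / cell_deg), math.floor(s.lon / cell_deg))
        sums[key] += s.value
        counts[key] += 1
    return {key: sums[key] / counts[key] for key in sums}


samples = [
    Sample(271.0, 10.2, 20.7, "2005-01-03T06:00:00"),
    Sample(273.0, 10.8, 20.1, "2005-01-20T18:00:00"),
    Sample(280.0, 10.5, 20.5, "2005-02-01T00:00:00"),
]
print(regrid_monthly(samples))
# {('2005-01', 10, 20): 272.0, ('2005-02', 10, 20): 280.0}
```

The two January samples fall in the same 1° cell and are averaged; the February sample lands in its own time bin, which is the temporal half of the alignment.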

The right side of Fig. 1 shows what a user would do once the remote sensing data are available in the ESGF. Here again, OPeNDAP provides user and application access to published ESGF data (Fig. 1, Step 7). The architecture also creates opportunities to combine OPeNDAP with other community-oriented tools, such as the Regional Climate Model Evaluation System (RCMES; https://rcmes.jpl.nasa.gov/), a Web-accessible database of remote sensing observations and an analytical toolkit for computing climate metrics (Fig. 1, Steps 8–9).
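Once observations and model output share a grid, evaluation reduces to metrics computed over matched cells. As an illustration of the kind of metric such toolkits compute (this is not the RCMES API), here are mean bias and root-mean-square error over two aligned, flattened fields. The function names and values are hypothetical.

```python
import math
from typing import Sequence


def _check_aligned(model: Sequence[float], obs: Sequence[float]) -> None:
    if len(model) != len(obs) or len(model) == 0:
        raise ValueError("model and obs must be non-empty and cell-aligned")


def bias(model: Sequence[float], obs: Sequence[float]) -> float:
    """Mean of (model - observation) over matched grid cells."""
    _check_aligned(model, obs)
    return sum(m - o for m, o in zip(model, obs)) / len(model)


def rmse(model: Sequence[float], obs: Sequence[float]) -> float:
    """Root-mean-square error over matched grid cells."""
    _check_aligned(model, obs)
    return math.sqrt(sum((m - o) ** 2 for m, o in zip(model, obs)) / len(model))


model = [2.0, 4.0, 6.0, 8.0]
obs = [1.0, 5.0, 5.0, 9.0]
print(bias(model, obs), rmse(model, obs))  # 0.0 1.0
```

The example is chosen to show why both metrics are useful: errors of opposite sign cancel in the bias, so it is zero, while the RMSE still reports a typical error magnitude of 1.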

B. Technologies and Implementation

Fig. 2 shows how we have implemented the notional architecture described above. We standardized on the use of a few technologies to implement the architecture, and we simplified the process by collapsing Steps 1–4 into Data Extraction and Data Conversion steps. The extraction steps are provided by OPeNDAP and Apache’s Object Oriented Data Technology (OODT) framework via the framework’s core services and three of its client tools, the Crawler, Workflow Manager, and File Manager.

The as-implemented architecture of the NASA ESGF cyberinfrastructure comprises a series of workflow stages that combine Apache OODT software, NetCDF Operators, OPeNDAP, Apache Solr, and the ESGF publishing toolkit.

The Workflow Manager encapsulates control and data flow and allows a user to model a series of steps in the scientific process as well as the input and output passed between steps. The File Manager tracks a file’s key information, including its metadata, provenance, location, and Multipurpose Internet Mail Extensions (MIME) type, and it provides data movement capabilities. The Crawler provides automated methods for ingesting, locating, selecting, and interactively extracting files and metadata managed by the File Manager, while simultaneously notifying the Workflow Manager that pipelines need to be executed.

The Crawler is seeded with an initial data staging area or a non-local OPeNDAP directory of remote sensing data. The Crawler extracts file and HDF metadata, which it subsequently presents to the File Manager for ingestion. At the same time, the Crawler notifies the Workflow Manager that the conversion pipeline should be initiated for the variable of interest. Data Extraction is kicked off, and the required five-tuple of information is extracted. Any necessary conversion is performed in the Data Conversion step using the NetCDF Operators package, which then writes a new NetCDF file based on the extracted five-tuple. The resulting output is sent to the Data Validation step that in turn calls a Python Web service that applies the CMOR checker. If the validation is successful, Metadata Harvesting collects the NetCDF information into a Thematic Real-Time Environmental Distributed Data Services (THREDDS) data server, publishes it to Apache Solr, and, ultimately, delivers it to the Earth System Grid Federation in the Publishing to ESGF step.
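The control flow of the pipeline described above can be sketched as an ordered list of stages with a validation gate. Everything here is hypothetical: OODT itself is a Java framework with its own APIs, and each stage below is a stub standing in for the real component (NetCDF Operators, the CMOR-checker web service, THREDDS/Solr harvesting, ESGF publication). The point is only the ordering and that a file failing validation never reaches the publishing stage; the `.hdf` rule and the variable name `sic` are placeholders.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Product:
    """One file moving through the publication stages (hypothetical)."""
    path: str
    metadata: Dict[str, str] = field(default_factory=dict)
    log: List[str] = field(default_factory=list)


class ValidationError(Exception):
    """Raised when a converted file fails the compliance check."""


def extract(p: Product) -> Product:
    # Data Extraction stub: in this sketch only HDF granules yield a variable.
    if p.path.endswith(".hdf"):
        p.metadata["variable"] = "sic"  # placeholder variable name
    p.log.append("extracted")
    return p


def convert(p: Product) -> Product:
    # Data Conversion stub (NetCDF Operators in the real system).
    p.path = p.path.rsplit(".", 1)[0] + ".nc"
    p.log.append("converted")
    return p


def validate(p: Product) -> Product:
    # Data Validation stub standing in for the CMOR-checker web service.
    if "variable" not in p.metadata:
        raise ValidationError(f"{p.path}: no variable extracted")
    p.log.append("validated")
    return p


def harvest_and_publish(p: Product) -> Product:
    # Stub for THREDDS harvesting, Solr indexing, and publishing to ESGF.
    p.log.append("published")
    return p


STAGES: List[Callable[[Product], Product]] = [extract, convert, validate, harvest_and_publish]


def crawl(paths: List[str]) -> Dict[str, Product]:
    """Crawler stub: run each discovered file through every stage in order.

    Returns the products that made it through every stage; files that fail
    validation are reported and never reach the publishing stage.
    """
    published: Dict[str, Product] = {}
    for path in paths:
        product = Product(path)
        try:
            for stage in STAGES:
                product = stage(product)
        except ValidationError as err:
            print("skipped:", err)
            continue
        published[path] = product
    return published


catalog = crawl(["granule_001.hdf", "README.txt"])
print(sorted(catalog))                 # ['granule_001.hdf']
print(catalog["granule_001.hdf"].log)  # ['extracted', 'converted', 'validated', 'published']
```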

Publishing remote sensing data alongside climate model output enables better comparisons and understanding that, in turn, more completely inform those who study the climate and those who make crucial policy decisions affecting the climate. Our expectation is that using automated workflows to streamline the publication of high-quality data will significantly improve this crucial activity.

IV. Next Generation Cyberinfrastructure for Climate Data Analytics

Climate model input and output data provide the basis for intellectual work in climate science. As these data sets grow in size, new approaches to data analysis are needed. In efforts to address the big data challenges of climate science, some researchers are moving toward a notion of Climate Analytics-as-a-Service (CAaaS). CAaaS combines high-performance computing and server-side analytics with scalable data management, cloud computing, a notion of adaptive analytics, and domain-specific APIs to improve the accessibility and usability of large collections of climate data [ 3 , 8 ]. In this section we take a closer look at these concepts and a specific implementation of CAaaS in NASA’s MERRA Analytic Services project.

A. High-performance server-side analytics

At its core, CAaaS must bring together data storage and high-performance computing in order to perform analyses over data where the data reside. MapReduce has been of particular interest because it provides an approach to high-performance analytics that is proving to be useful in many data-intensive domains [ 3 ]. MapReduce enables distributed computing over large data sets using high-end computer clusters. It is an analysis paradigm that combines distributed storage and retrieval with distributed, parallel computation: analytical operations are allocated to the data repository, and only their reduced outputs are passed to applications and interfaces that may reside elsewhere. Because MapReduce implements repositories as storage clusters, data set size and system scalability are limited only by the number of nodes in the clusters.

MapReduce distributes computations across large data sets using a large number of computers (nodes). In the “map” step, a head node takes the input, partitions it into smaller sub-problems, and distributes them to data nodes. A data node may in turn partition its sub-problem again, leading to a multi-level tree structure. Each data node processes its smaller problem and passes the answer to a reducer node. In the “reduce” step, the reducer node collects the answers to all the sub-problems and combines them to form the output: an answer to the problem it was originally trying to solve.
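The map, partition, and reduce steps described above can be sketched as a single-process Python simulation. This illustrates the paradigm only; it is not Hadoop code or the MERRA/AS implementation, and the record format, the strided partitioning, and the function names are assumptions made for this example. Mappers emit partial (sum, count) pairs rather than partial means, so the reducer's combination stays correct even when partitions have different sizes.

```python
from collections import defaultdict
from functools import reduce


def map_partition(records):
    """Map step: emit (key, (sum, count)) partial results for one partition."""
    partial = defaultdict(lambda: (0.0, 0))
    for name, value in records:
        s, c = partial[name]
        partial[name] = (s + value, c + 1)
    return list(partial.items())


def shuffle(mapped_outputs):
    """Group intermediate pairs by key, as the framework does between map and reduce."""
    groups = defaultdict(list)
    for pairs in mapped_outputs:
        for key, value in pairs:
            groups[key].append(value)
    return groups


def reduce_average(partials):
    """Reduce step: combine (sum, count) pairs into a single mean."""
    total, count = reduce(lambda a, b: (a[0] + b[0], a[1] + b[1]), partials, (0.0, 0))
    return total / count


def map_reduce_average(records, n_partitions):
    # Head node: split the input into smaller sub-problems (strided partitions).
    partitions = [records[i::n_partitions] for i in range(n_partitions)]
    # Data nodes: each partition is mapped independently (sequentially here).
    mapped = [map_partition(p) for p in partitions]
    # Reducer: collect the answers to the sub-problems and combine them per key.
    return {key: reduce_average(vals) for key, vals in shuffle(mapped).items()}
```

For the records `[("T", 280.0), ("T", 290.0), ("U", 5.0), ("T", 300.0), ("U", 7.0)]`, the result is the same for any partition count: 290.0 for "T" and 6.0 for "U".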

While MapReduce has proven effective for large repositories of textual data, its use in data-intensive science applications has been limited, because many scientific data sets are inherently complex, have high dimensionality, and use binary formats. Adapting MapReduce to complex, binary data types has therefore been a major advance in these efforts. Because of MapReduce's importance in large-scale analytics and its widespread use, there has been significant private-sector investment in recent years aimed at improving the performance and applicability of the technology, and these improvements benefit science communities that are becoming more involved in analytics.

B. Workflow-stratified adaptive analytics

The relationship between data and workflows shapes the way we think about data analytics. Data-intensive analysis workflows generally bridge a largely unstructured mass of archived scientific data and the highly structured, tailored, reduced, and refined analytic products that individual scientists use and that form the basis of intellectual work in the domain. The initial steps of an analysis, those operations that first interact with a data repository, tend to be the most general, while data manipulations closer to the client tend to be the most tailored: specialized to the individual, to the domain, or to the science question under study. The amount of data being operated on also tends to be larger on the repository side of the workflow and smaller toward the client-side end products.

This stratification can be used to optimize data-intensive workflows. We believe that the first job of an analytics system is to implement a set of near-archive, early-stage operations that are a common starting point in many of these analysis workflows. For example, a system must be able to compute maximum, minimum, sum, count, average, variance, and difference operations, such as an operation that returns the average value of a variable when given its name, a temporal extent, and a spatial extent.

Because of their widespread use, these simple operations (microservices, if you will) function as “canonical operations” from which more complex operations can be built. This is an active area of research with many analytic frameworks in development [ 20 – 22 ]. However, our work is distinctive in its current focus on workflow stratification, microservices, and the client-side construction of complex operations from server-side microservices [ 23 ]. And, by implementing basic descriptive statistics and other primitive operations over data in a high-performance compute-storage environment using powerful analytical software, the system is able to support more complex analyses, such as the predictive modeling, machine learning, and neural network approaches often associated with advanced analytics.
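A minimal sketch of such canonical operations, assuming a small in-memory (time, lat, lon) NumPy array stands in for the archive. Every operation takes the arguments named in the text (a variable name, a temporal extent, and a spatial extent); the `GriddedStore` class, the extent tuple layouts, and the `canonical` and `difference` helpers are hypothetical and do not reproduce the MERRA/AS service interface.

```python
import numpy as np


class GriddedStore:
    """Hypothetical in-memory stand-in for a gridded reanalysis collection."""

    def __init__(self, times, lats, lons, variables):
        self.times = np.asarray(times)
        self.lats = np.asarray(lats)
        self.lons = np.asarray(lons)
        self.variables = variables  # name -> ndarray shaped (time, lat, lon)

    def subset(self, name, temporal_extent, spatial_extent):
        """Select `name` within [t0, t1] and the box (lat0, lat1, lon0, lon1)."""
        t0, t1 = temporal_extent
        lat0, lat1, lon0, lon1 = spatial_extent
        t = (self.times >= t0) & (self.times <= t1)
        la = (self.lats >= lat0) & (self.lats <= lat1)
        lo = (self.lons >= lon0) & (self.lons <= lon1)
        # np.ix_ builds an open mesh so each axis is filtered independently.
        return self.variables[name][np.ix_(t, la, lo)]


# Canonical reductions, all applied to the same kind of space-time subset.
CANONICAL_OPS = {
    "max": np.max,
    "min": np.min,
    "sum": np.sum,
    "count": np.size,
    "avg": np.mean,
    "var": np.var,  # population variance (ddof=0)
}


def canonical(store, op, name, temporal_extent, spatial_extent):
    """Run one canonical reduction over a variable's space-time subset."""
    return float(CANONICAL_OPS[op](store.subset(name, temporal_extent, spatial_extent)))


def difference(store, name_a, name_b, temporal_extent, spatial_extent):
    """Element-wise difference of two variables over the same subset."""
    return (store.subset(name_a, temporal_extent, spatial_extent)
            - store.subset(name_b, temporal_extent, spatial_extent))
```

Keeping the argument signature identical across operations is what lets these small pieces serve as interchangeable building blocks for client-side composition.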

C. Domain-specific application programming interfaces

CAaaS capabilities are exposed to clients through a RESTful Web services interface. To make these capabilities easier to use, we are building a client-side Climate Data Services (CDS) application programming interface (API) that wraps the REST interface’s Web service endpoints and presents them to client applications as a library of Python methods. With this arrangement, application developers can either code against the REST interface directly or use the CDS API’s Python library with its more familiar method syntax.
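The wrapping arrangement can be illustrated with a thin Python client that turns a method call into a REST request. Everything here is a hypothetical sketch: the class name, the `/avg` endpoint, the parameter names, and the JSON response shape are assumptions, not the actual CDS API. The transport is injectable so that URL construction can be exercised without a live service.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen


class ClimateDataServicesClient:
    """Hypothetical method-style wrapper over a REST analytics endpoint."""

    def __init__(self, base_url, transport=None):
        self.base_url = base_url.rstrip("/")
        # transport(url) -> response body as str; defaults to a real HTTP GET.
        self._transport = transport or self._http_get

    @staticmethod
    def _http_get(url):
        with urlopen(url) as resp:
            return resp.read().decode("utf-8")

    def build_url(self, operation, **params):
        """Build GET <base>/<operation>?k=v&... with keys in sorted order."""
        return f"{self.base_url}/{operation}?{urlencode(sorted(params.items()))}"

    def avg(self, variable, start, end, bbox):
        """Average of `variable` over [start, end] within bbox; returns parsed JSON."""
        url = self.build_url(
            "avg",
            variable=variable,
            start=start,
            end=end,
            bbox=",".join(str(v) for v in bbox),
        )
        return json.loads(self._transport(url))
```

A developer who prefers the REST interface could issue the same GET request directly; the wrapper only hides URL construction and response parsing behind ordinary method syntax.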

APIs can take many forms, but the goal of every API is to make it easier to build on the abstract capabilities of a system. In building the CDS API, we are trying to provide for climate science a uniform semantic treatment of the combined functionality of large-scale data management and server-side analytics. We do this by combining concepts from the Open Archival Information System (OAIS) reference model, highly dynamic object-oriented programming APIs, and Web 2.0 resource-oriented APIs.

The OAIS reference model, defined by the Consultative Committee for Space Data Systems (CCSDS), addresses the full range of archival information preservation functions, including ingest, archival storage, data management, access, and dissemination; that is, full information lifecycle management. OAIS provides examples and some best-practice recommendations, and it defines a minimal set of responsibilities for an archive to be called an OAIS [ 25 ]. These high-level services provide a vocabulary that we have adopted for the CDS Reference Model and its associated Library and API.

The CDS Reference Model is a logical specification that presents a single abstract data and analytic services model to calling applications. The Reference Model can be implemented using various technologies; in all cases, however, actions are based on the following six primitives:

- Submit data to a service
- Retrieve data from a service (synchronous)
- Request data from a service (asynchronous)
- Retrieve data from a service
- Track progress of service activity
- Initiate a service-definable extension
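One way to see how the six primitives fit together is a toy in-memory service that implements each of them. The method names are hypothetical labels chosen for this sketch, and reading the fourth primitive as collecting the result of an earlier asynchronous request is our assumption, based on its position after the asynchronous request primitive. `run_pending` stands in for whatever background work a real service would perform.

```python
import itertools


class InMemoryService:
    """Toy service illustrating the six primitives (hypothetical method names)."""

    def __init__(self):
        self._data = {}                     # stored items, keyed by name
        self._jobs = {}                     # job id -> job record
        self._job_ids = itertools.count(1)  # monotonically increasing job ids
        self._extensions = {}               # extension name -> callable

    # Submit data to a service.
    def submit(self, key, value):
        self._data[key] = value

    # Retrieve data from a service (synchronous): the answer comes back at once.
    def retrieve_sync(self, key):
        return self._data[key]

    # Request data from a service (asynchronous): only a job id comes back.
    def request_async(self, key):
        job_id = next(self._job_ids)
        self._jobs[job_id] = {"key": key, "state": "queued", "result": None}
        return job_id

    # Retrieve data from a service: collect the result of a finished request.
    def retrieve_result(self, job_id):
        job = self._jobs[job_id]
        if job["state"] != "done":
            raise RuntimeError(f"job {job_id} is still {job['state']}")
        return job["result"]

    # Track progress of service activity.
    def track(self, job_id):
        return self._jobs[job_id]["state"]

    # Initiate a service-definable extension.
    def extend(self, name, *args):
        return self._extensions[name](self, *args)

    def register_extension(self, name, func):
        self._extensions[name] = func

    def run_pending(self):
        """Stand-in for background processing on the service side."""
        for job in self._jobs.values():
            if job["state"] == "queued":
                job["result"] = self._data[job["key"]]
                job["state"] = "done"
```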

Within this OAIS-inspired framework, the Python-based CDS Library sits atop a RESTful Web services client that encapsulates inbound and outbound interactions with various climate data services ( Fig. 3 ). These provide the foundation upon which we have also built a CDS command line interpreter (CLI) that supports interactive sessions. In addition, Python scripts and full Python applications can use methods imported from the API. The resulting client stack can be distributed as a software package or used to build a cloud-based service (SaaS) or distributable cloud image (PaaS).

Notional architecture of a CAaaS system. Applications have the option of reaching services directly through the system’s Web service REST interface or through the CDS API’s Python libraries.
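The command line interpreter mentioned above supports interactive sessions; Python's standard `cmd` module is one plausible way to build such a shell on top of a client library. In this hypothetical sketch, the `CDSShell` class, the `avg` command, and its argument order are illustrative, and `backend` can be any object exposing an `avg(variable, start, end)` method.

```python
import cmd


class CDSShell(cmd.Cmd):
    """Hypothetical interactive shell over a client library."""

    prompt = "cds> "

    def __init__(self, backend, stdout=None):
        super().__init__(stdout=stdout)  # stdout=None falls back to sys.stdout
        self.backend = backend

    def do_avg(self, arg):
        """avg VARIABLE START END -- print the average of VARIABLE over [START, END]."""
        parts = arg.split()
        if len(parts) != 3:
            self.stdout.write("usage: avg VARIABLE START END\n")
            return
        variable, start, end = parts
        self.stdout.write(f"{self.backend.avg(variable, start, end)}\n")

    def do_quit(self, arg):
        """quit -- end the session."""
        return True  # a true return value stops cmdloop()
```

Calling `CDSShell(backend).cmdloop()` would start an interactive `cds>` prompt; `onecmd` runs a single command, which is convenient for scripting and testing.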

Unlike other APIs, our approach focuses on the specific analytic requirements of climate science and unites the language and abstractions of collections management with those of high-performance analytics. Doing so reflects at the application level the confluence of storage and computation that is driving big data architectures of the future.

D. MERRA Analytic Services

The MERRA Analytic Services (MERRA/AS) project brings these elements together in an end-to-end demonstration of CAaaS ( Fig. 4 ). MERRA/AS enables MapReduce analytics over NASA’s Modern-Era Retrospective Analysis for Research and Applications (MERRA) data collection. The MERRA reanalysis integrates observational data with numerical models to produce a global, temporally and spatially consistent synthesis of key climate variables [ 25 ]. The effectiveness of MERRA/AS has been demonstrated in several applications, and the work is contributing new ideas about how a next-generation cyberinfrastructure for climate data analytics might be designed.

The MERRA Analytic Service provides an end-to-end demonstration of the principles underlying Climate Analytics-as-a-Service: important data embedded in a high-performance storage-compute environment, with analytic services exposed via Web services to client applications through an easy-to-use API tailored to the climate research community.

In simple terms, our vision for MERRA/AS is that MERRA data are stored in a Hadoop Distributed File System (HDFS) on a MERRA/AS cluster, and functionality is exposed through the CDS API. The API exposes a basic set of operations that can be combined into arbitrarily complex workflows and assembled into more complex operations, which can in turn be folded back into the API and the MERRA/AS service as further extensions. The complexities of the underlying mapper and reducer code for the basic operations are encapsulated and abstracted away from the user, making these common operations easier to use.
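The idea of assembling basic operations into a more complex one, and then folding it back into the service as an extension, can be sketched as follows. This is a hypothetical toy in plain Python: the `AnalyticService` class, the per-year data layout, and the `anomaly` operation (a period mean minus a baseline mean) are assumptions for illustration, and `avg` merely stands in for a server-side MapReduce job.

```python
from statistics import fmean


class AnalyticService:
    """Toy service: one canonical operation plus user-registered extensions."""

    def __init__(self, series):
        self.series = series  # hypothetical layout: variable -> {year: value}
        self.extensions = {}

    def avg(self, name, start, end):
        """Canonical operation (stand-in for a server-side MapReduce job)."""
        return fmean(v for year, v in self.series[name].items() if start <= year <= end)

    def register(self, op_name, func):
        """Fold a client-built composite operation back into the service."""
        self.extensions[op_name] = func

    def call(self, op_name, *args):
        return self.extensions[op_name](self, *args)


def anomaly(service, name, period, baseline):
    """Composite operation built on the client from two canonical calls."""
    return service.avg(name, *period) - service.avg(name, *baseline)
```

Once registered, `service.call("anomaly", "T2M", (2010, 2011), (2000, 2001))` behaves like any other named operation, which is the sense in which composites extend the API.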

The Apache Hadoop software library is the classic framework for MapReduce distributed analytics. We are using Cloudera’s 100% open source, enterprise-ready distribution of Apache Hadoop, which is integrated with configuration and administration tools and related open source packages. The total size of the MERRA/AS HDFS repository is approximately 480 TB. Currently, MERRA/AS runs on a 36-node Dell cluster with 576 Intel 2.6 GHz Sandy Bridge cores, 1300 TB of raw storage, 1250 GB of RAM, and an 11.7 TF theoretical peak compute capacity. Nodes communicate through a Fourteen Data Rate (FDR) InfiniBand network with peak TCP/IP speeds in excess of 20 Gbps.

The canonical operations that implement MERRA/AS’s maximum, minimum, count, sum, difference, average, and variance calculations are Java MapReduce programs that are ultimately exposed as simple references to CDS Library methods or as Web service endpoints. A substantial code base lies behind these apparently simple operations: nearly 6000 lines of Java code that are offloaded from the user to the MERRA/AS service.
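The MERRA/AS canonical operations are Java MapReduce programs; as a rough Python analogue, the sketch below follows the line-oriented convention of Hadoop Streaming, in which a mapper writes tab-separated key/value lines and the framework sorts them by key before the reducer sees them. The comma-separated input record layout is an assumption, and this is not the authors' code.

```python
from itertools import groupby


def mapper(lines):
    """Emit 'variable<TAB>value' for each hypothetical record 'variable,time,lat,lon,value'."""
    for line in lines:
        variable, _time, _lat, _lon, value = line.strip().split(",")
        yield f"{variable}\t{value}"


def reducer(sorted_lines):
    """Input arrives sorted by key; emit 'variable<TAB>max' once per key."""
    pairs = (line.rstrip("\n").split("\t", 1) for line in sorted_lines)
    for variable, group in groupby(pairs, key=lambda kv: kv[0]):
        yield f"{variable}\t{max(float(v) for _, v in group)}"
```

Under Hadoop Streaming, each function would be wrapped in a small script that reads `sys.stdin` and prints its output; locally, piping the mapper's output through `sort` before the reducer reproduces the shuffle step.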

E. MERRA/AS in use

MERRA/AS currently is in beta testing with about two dozen partners across a wide range of organizations and topic areas. It operates at a NASA Technology Readiness Level of seven (TRL 7) as a prototype deployed in an operational environment at or near scale of the production system, with most functions available for demonstration and test. While the system is not available for open beta testing to the general public, arrangements can be made to test the system through NASA’s Climate Model Data Services [ 27 ].

In one beta application, MERRA/AS’s Web service provides data to the RECOVER wildfire decision support system, which is used for post-fire rehabilitation planning by Burned Area Emergency Response (BAER) teams within the US Department of the Interior and the US Forest Service [ 28 ]. This capability has led to the development of new data products based on climate reanalysis data that until now were not available to the wildfire community.

In our largest deployment exercise to date, the CDS Client Distribution Package and the CDS API have been used by the iPlant Collaborative to integrate MERRA data and MERRA/AS functionality into the iPlant Discovery Environment. iPlant is a virtual organization created by a cooperative agreement funded by the US National Science Foundation (NSF) to create cyberinfrastructure for the plant sciences. The project develops computing systems and software that combine computing resources, like those of TeraGrid, and bioinformatics and computational biology software. Its goal is easier collaboration among researchers with improved data access and processing efficiency. Primarily centered in the US, it collaborates internationally and includes a wide range of governmental and private-sector partners [ 29 ].

Initial results have shown that analytic engine optimizations can yield near real-time performance of MERRA/AS’s canonical operations and that the total time required to assemble relevant data for many applications can be significantly reduced, often by as much as two to three orders of magnitude [ 24 ].

V. Next Generation Cyberinfrastructure for Enhanced Interoperability

Big data challenges are sometimes viewed as problems of large-scale data management where solutions are offered through an array of traditional storage and archive theories and technologies. These approaches tend to view big data as an issue of storing and managing large amounts of structured data for the purpose of finding subsets of interest. Alternatively, big data challenges can be viewed as knowledge management problems where solutions are offered through an array of analytic techniques and technologies. These approaches tend to view big data as an issue of extracting meaningful patterns from large amounts of unstructured data for the purpose of finding insights of interest.

As the ESGF community grapples with its scaling challenges, it seeks to find a balance between these competing views. This is evident in the charge that the ESGF Compute Working Team (ESGF-CWT), the international team of collaborators responsible for designing ESGF’s next-generation architecture, has laid out for itself. The Team’s overarching goal is to increase the analytical capabilities of the enterprise, primarily by exposing high-performance computing resources and analysis tools to the community through Web services [ 30 ]. Ideally, ESGF data from the Federation’s distributed collections would be united with the Web-accessible tools and compute resources needed to perform advanced analytics at the scale needed for IPCC’s increasingly complex work.

However, integrating high-performance computing and high-performance analytics—finding an optimal storage-compute balance in ESGF’s ecosystem of distributed resources—is not a trivial exercise. ESGF’s technical heritage is that of a large-scale distributed archive. Its nodes basically store and distribute data. They typically support compute resources sufficient to stream data out of storage onto the network for client consumption, and the behaviors implemented and exposed by ESGF’s Web service interface are the basic discovery and download operations of an archive.