9.1 Null and Alternative Hypotheses

The actual test begins by considering two hypotheses. They are called the null hypothesis and the alternative hypothesis. These hypotheses contain opposing viewpoints.

H 0, the null hypothesis: a statement of no difference between sample means or proportions, or no difference between a sample mean or proportion and a population mean or proportion. In other words, the difference equals 0.

H a, the alternative hypothesis: a claim about the population that is contradictory to H 0 and what we conclude when we reject H 0.

Since the null and alternative hypotheses are contradictory, you must examine evidence to decide if you have enough evidence to reject the null hypothesis or not. The evidence is in the form of sample data.

After you have determined which hypothesis the sample supports, you make a decision. There are two options for a decision: reject H 0 if the sample information favors the alternative hypothesis, or do not reject H 0 (decline to reject H 0) if the sample information is insufficient to reject the null hypothesis.

Mathematical Symbols Used in H 0 and H a:

| H 0 | H a |
| --- | --- |
| equal (=) | not equal (≠), greater than (>), or less than (<) |
| greater than or equal to (≥) | less than (<) |
| less than or equal to (≤) | more than (>) |

H 0 always has a symbol with an equal in it. H a never has a symbol with an equal in it. The choice of symbol depends on the wording of the hypothesis test. However, be aware that many researchers use = in the null hypothesis, even with > or < as the symbol in the alternative hypothesis. This practice is acceptable because we only make the decision to reject or not reject the null hypothesis.
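The pairing rule in the symbols table can be written down as a small lookup. The following is only a sketch: the names `ALTERNATIVES` and `is_valid_pair` are made up for illustration and do not come from the text.

```python
# Each null-hypothesis symbol mapped to the alternative symbols the table allows.
# The dictionary and function names are illustrative, not from the textbook.
ALTERNATIVES = {
    "=": ["≠", ">", "<"],
    "≥": ["<"],
    "≤": [">"],
}

def is_valid_pair(null_symbol, alt_symbol):
    """Return True if alt_symbol may accompany null_symbol according to the table."""
    return alt_symbol in ALTERNATIVES.get(null_symbol, [])

print(is_valid_pair("≤", ">"))  # the pairing used when testing for "more than"
print(is_valid_pair("≥", ">"))  # not a contradictory pair, so not allowed
```

Note that the null symbol always contains an equality and the alternative never does, which is why "≥" can only be paired with "<".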

Example 9.1

H 0 : No more than 30 percent of the registered voters in Santa Clara County voted in the primary election. p ≤ 0.30

H a : More than 30 percent of the registered voters in Santa Clara County voted in the primary election. p > 0.30

A medical trial is conducted to test whether or not a new medicine reduces cholesterol by 25 percent. State the null and alternative hypotheses.

Example 9.2

We want to test whether the mean GPA of students in American colleges is different from 2.0 (out of 4.0). The null and alternative hypotheses are the following: H 0 : μ = 2.0 H a : μ ≠ 2.0

We want to test whether the mean height of eighth graders is 66 inches. State the null and alternative hypotheses. Fill in the correct symbol (=, ≠, ≥, <, ≤, >) for the null and alternative hypotheses.

- H 0 : μ __ 66

- H a : μ __ 66

Example 9.3

We want to test if college students take fewer than five years to graduate from college, on the average. The null and alternative hypotheses are the following: H 0 : μ ≥ 5 H a : μ < 5

We want to test if it takes fewer than 45 minutes to teach a lesson plan. State the null and alternative hypotheses. Fill in the correct symbol (=, ≠, ≥, <, ≤, >) for the null and alternative hypotheses.

- H 0 : μ __ 45

- H a : μ __ 45

Example 9.4

An article on school standards stated that about half of all students in France, Germany, and Israel take advanced placement exams and a third of the students pass. The same article stated that 6.6 percent of U.S. students take advanced placement exams and 4.4 percent pass. Test if the percentage of U.S. students who take advanced placement exams is more than 6.6 percent. State the null and alternative hypotheses. H 0 : p ≤ 0.066 H a : p > 0.066

On a state driver’s test, about 40 percent pass the test on the first try. We want to test if more than 40 percent pass on the first try. Fill in the correct symbol (=, ≠, ≥, <, ≤, >) for the null and alternative hypotheses.

- H 0 : p __ 0.40

- H a : p __ 0.40

Collaborative Exercise

Bring to class a newspaper, some news magazines, and some internet articles. In groups, find articles from which your group can write null and alternative hypotheses. Discuss your hypotheses with the rest of the class.

This book may not be used in the training of large language models or otherwise be ingested into large language models or generative AI offerings without OpenStax's permission.

Want to cite, share, or modify this book? This book uses the Creative Commons Attribution License and you must attribute Texas Education Agency (TEA). The original material is available at: https://www.texasgateway.org/book/tea-statistics . Changes were made to the original material, including updates to art, structure, and other content updates.

Access for free at https://openstax.org/books/statistics/pages/1-introduction

- Authors: Barbara Illowsky, Susan Dean

- Publisher/website: OpenStax

- Book title: Statistics

- Publication date: Mar 27, 2020

- Location: Houston, Texas

- Book URL: https://openstax.org/books/statistics/pages/1-introduction

- Section URL: https://openstax.org/books/statistics/pages/9-1-null-and-alternative-hypotheses

© Apr 16, 2024 Texas Education Agency (TEA). The OpenStax name, OpenStax logo, OpenStax book covers, OpenStax CNX name, and OpenStax CNX logo are not subject to the Creative Commons license and may not be reproduced without the prior and express written consent of Rice University.


Null & Alternative Hypotheses | Definitions, Templates & Examples

Published on May 6, 2022 by Shaun Turney . Revised on June 22, 2023.

The null and alternative hypotheses are two competing claims that researchers weigh evidence for and against using a statistical test:

- Null hypothesis (H 0): There’s no effect in the population.

- Alternative hypothesis (H a or H 1): There’s an effect in the population.


The null and alternative hypotheses offer competing answers to your research question. When the research question asks “Does the independent variable affect the dependent variable?”:

- The null hypothesis ( H 0 ) answers “No, there’s no effect in the population.”

- The alternative hypothesis ( H a ) answers “Yes, there is an effect in the population.”

The null and alternative hypotheses are always claims about the population. That’s because the goal of hypothesis testing is to make inferences about a population based on a sample. Often, we infer whether there’s an effect in the population by looking at differences between groups or relationships between variables in the sample. It’s critical for your research to write strong hypotheses.

You can use a statistical test to decide whether the evidence favors the null or alternative hypothesis. Each type of statistical test comes with a specific way of phrasing the null and alternative hypothesis. However, the hypotheses can also be phrased in a general way that applies to any test.


The null hypothesis is the claim that there’s no effect in the population.

If the sample provides enough evidence against the claim that there’s no effect in the population (p ≤ α), then we can reject the null hypothesis. Otherwise, we fail to reject the null hypothesis.

Although “fail to reject” may sound awkward, it’s the only wording that statisticians accept. Be careful not to say you “prove” or “accept” the null hypothesis.

Null hypotheses often include phrases such as “no effect,” “no difference,” or “no relationship.” When written in mathematical terms, they always include an equality (usually =, but sometimes ≥ or ≤).

You can never know with complete certainty whether there is an effect in the population. Some percentage of the time, your inference about the population will be incorrect. When you incorrectly reject the null hypothesis, it’s called a type I error. When you incorrectly fail to reject it, it’s a type II error.
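A short simulation can make the type I error rate concrete. The sketch below draws many samples from a population in which the null hypothesis is true by construction and counts how often a test at α = 0.05 rejects it anyway. It assumes a two-sided z test with a known population standard deviation, chosen only because it needs nothing beyond the Python standard library; the function names, sample size, and seed are illustrative choices.

```python
import random
from statistics import NormalDist, mean

def two_sided_z_p_value(sample, mu0, sigma):
    """Two-sided p-value for H0: mu = mu0, assuming a known population sigma."""
    z = (mean(sample) - mu0) / (sigma / len(sample) ** 0.5)
    return 2 * (1 - NormalDist().cdf(abs(z)))

def type_i_error_rate(alpha=0.05, n=25, trials=2000, seed=0):
    """Share of trials that reject H0 even though H0 is true."""
    rng = random.Random(seed)
    rejections = 0
    for _ in range(trials):
        # Data are generated with mu = 0, so H0: mu = 0 holds by construction.
        sample = [rng.gauss(0.0, 1.0) for _ in range(n)]
        if two_sided_z_p_value(sample, mu0=0.0, sigma=1.0) <= alpha:
            rejections += 1
    return rejections / trials

rate = type_i_error_rate()
print(f"Observed type I error rate: {rate:.3f}")  # should land near alpha
```

Every rejection in this simulation is a type I error, so the observed rate should sit close to the chosen α.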

Examples of null hypotheses

The table below gives examples of research questions and null hypotheses. There’s always more than one way to answer a research question, but these null hypotheses can help you get started.

| Research question | General null hypothesis (H 0) | Test-specific null hypothesis (H 0) |
| --- | --- | --- |
| Does tooth flossing affect the number of cavities? | Tooth flossing has no effect on the number of cavities. | t test: The mean number of cavities per person does not differ between the flossing group (µ 1) and the non-flossing group (µ 2) in the population; µ 1 = µ 2. |
| Does the amount of text highlighted in the textbook affect exam scores? | The amount of text highlighted in the textbook has no effect on exam scores. | Linear regression: There is no relationship between the amount of text highlighted and exam scores in the population; β = 0. |
| Does daily meditation decrease the incidence of depression? | Daily meditation does not decrease the incidence of depression.* | Two-proportions test: The proportion of people with depression in the daily-meditation group (p 1) is greater than or equal to the no-meditation group (p 2) in the population; p 1 ≥ p 2. |

*Note that some researchers prefer to always write the null hypothesis in terms of “no effect” and “=”. It would be fine to say that daily meditation has no effect on the incidence of depression and p 1 = p 2 .

The alternative hypothesis (H a) is the other answer to your research question. It claims that there’s an effect in the population.

Often, your alternative hypothesis is the same as your research hypothesis. In other words, it’s the claim that you expect or hope will be true.

The alternative hypothesis is the complement to the null hypothesis. Null and alternative hypotheses are exhaustive, meaning that together they cover every possible outcome. They are also mutually exclusive, meaning that only one can be true at a time.

Alternative hypotheses often include phrases such as “an effect,” “a difference,” or “a relationship.” When alternative hypotheses are written in mathematical terms, they always include an inequality (usually ≠, but sometimes < or >). As with null hypotheses, there are many acceptable ways to phrase an alternative hypothesis.

Examples of alternative hypotheses

The table below gives examples of research questions and alternative hypotheses to help you get started with formulating your own.

| Research question | General alternative hypothesis (H a) | Test-specific alternative hypothesis (H a) |
| --- | --- | --- |
| Does tooth flossing affect the number of cavities? | Tooth flossing has an effect on the number of cavities. | t test: The mean number of cavities per person differs between the flossing group (µ 1) and the non-flossing group (µ 2) in the population; µ 1 ≠ µ 2. |
| Does the amount of text highlighted in a textbook affect exam scores? | The amount of text highlighted in the textbook has an effect on exam scores. | Linear regression: There is a relationship between the amount of text highlighted and exam scores in the population; β ≠ 0. |
| Does daily meditation decrease the incidence of depression? | Daily meditation decreases the incidence of depression. | Two-proportions test: The proportion of people with depression in the daily-meditation group (p 1) is less than the no-meditation group (p 2) in the population; p 1 < p 2. |

Null and alternative hypotheses are similar in some ways:

- They’re both answers to the research question.

- They both make claims about the population.

- They’re both evaluated by statistical tests.

However, there are important differences between the two types of hypotheses, summarized in the following table.

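| | Null hypothesis (H 0) | Alternative hypothesis (H a) |
| --- | --- | --- |
| Definition | A claim that there is no effect in the population. | A claim that there is an effect in the population. |
| Also known as | H 0 | H a, H 1 |
| Typical phrases | No effect, no difference, no relationship | An effect, a difference, a relationship |
| Symbols used | Equality symbol (=, ≥, or ≤) | Inequality symbol (≠, <, or >) |
| When p ≤ α | Rejected | Supported |
| When p > α | Failed to reject | Not supported |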


To help you write your hypotheses, you can use the template sentences below. If you know which statistical test you’re going to use, you can use the test-specific template sentences. Otherwise, you can use the general template sentences.

General template sentences

The only things you need to know to use these general template sentences are your dependent and independent variables. To write your research question, null hypothesis, and alternative hypothesis, fill in the following sentences with your variables:

Does independent variable affect dependent variable?

- Null hypothesis ( H 0 ): Independent variable does not affect dependent variable.

- Alternative hypothesis ( H a ): Independent variable affects dependent variable.
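Filling in the general template sentences above is mechanical, so it can be sketched as a small helper. The function name `general_hypotheses` and the dictionary keys are invented for this illustration.

```python
def general_hypotheses(independent, dependent):
    """Fill the general template sentences with the two variable names."""
    # Capitalize only the first letter so the rest of the phrase is untouched.
    subject = independent[0].upper() + independent[1:]
    return {
        "question": f"Does {independent} affect {dependent}?",
        "null": f"{subject} does not affect {dependent}.",
        "alternative": f"{subject} affects {dependent}.",
    }

for label, sentence in general_hypotheses(
    "tooth flossing", "the number of cavities"
).items():
    print(f"{label}: {sentence}")
```

The helper only handles wording. Choosing the variables, and checking that the sentences read naturally for them, is still up to you.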

Test-specific template sentences

Once you know the statistical test you’ll be using, you can write your hypotheses in a more precise and mathematical way specific to the test you chose. The table below provides template sentences for common statistical tests.

| Statistical test | Null hypothesis (H 0) | Alternative hypothesis (H a) |
| --- | --- | --- |
| t test with two groups | The mean dependent variable does not differ between group 1 (µ 1) and group 2 (µ 2) in the population; µ 1 = µ 2. | The mean dependent variable differs between group 1 (µ 1) and group 2 (µ 2) in the population; µ 1 ≠ µ 2. |
| ANOVA with three groups | The mean dependent variable does not differ between group 1 (µ 1), group 2 (µ 2), and group 3 (µ 3) in the population; µ 1 = µ 2 = µ 3. | The mean dependent variables of group 1 (µ 1), group 2 (µ 2), and group 3 (µ 3) are not all equal in the population. |
| Correlation | There is no correlation between independent variable and dependent variable in the population; ρ = 0. | There is a correlation between independent variable and dependent variable in the population; ρ ≠ 0. |
| Linear regression | There is no relationship between independent variable and dependent variable in the population; β = 0. | There is a relationship between independent variable and dependent variable in the population; β ≠ 0. |
| Two-proportions test | The dependent variable expressed as a proportion does not differ between group 1 (p 1) and group 2 (p 2) in the population; p 1 = p 2. | The dependent variable expressed as a proportion differs between group 1 (p 1) and group 2 (p 2) in the population; p 1 ≠ p 2. |

Note: The template sentences above assume that you’re performing two-tailed tests, because the alternative hypotheses use ≠ rather than < or >. Two-tailed tests are appropriate for most studies.


Hypothesis testing is a formal procedure for investigating our ideas about the world using statistics. It is used by scientists to test specific predictions, called hypotheses, by calculating how likely it is that a pattern or relationship between variables could have arisen by chance.

Null and alternative hypotheses are used in statistical hypothesis testing. The null hypothesis of a test always predicts no effect or no relationship between variables, while the alternative hypothesis states your research prediction of an effect or relationship.

The null hypothesis is often abbreviated as H 0 . When the null hypothesis is written using mathematical symbols, it always includes an equality symbol (usually =, but sometimes ≥ or ≤).

The alternative hypothesis is often abbreviated as H a or H 1 . When the alternative hypothesis is written using mathematical symbols, it always includes an inequality symbol (usually ≠, but sometimes < or >).

A research hypothesis is your proposed answer to your research question. The research hypothesis usually includes an explanation (“ x affects y because …”).

A statistical hypothesis, on the other hand, is a mathematical statement about a population parameter. Statistical hypotheses always come in pairs: the null and alternative hypotheses. In a well-designed study, the statistical hypotheses correspond logically to the research hypothesis.

Cite this Scribbr article


Turney, S. (2023, June 22). Null & Alternative Hypotheses | Definitions, Templates & Examples. Scribbr. Retrieved September 4, 2024, from https://www.scribbr.com/statistics/null-and-alternative-hypotheses/


Independent t-test for two samples

Introduction.

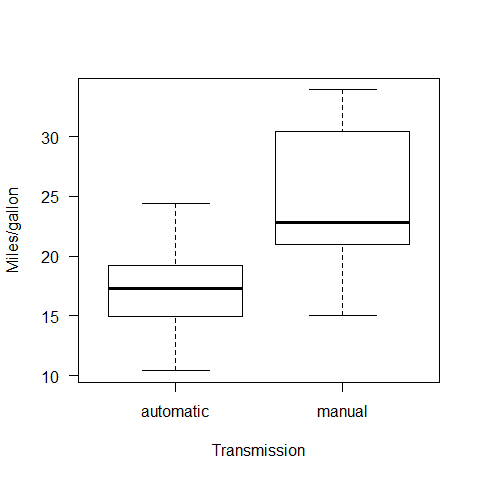

The independent t-test, also called the two-sample t-test, independent-samples t-test or Student's t-test, is an inferential statistical test that determines whether there is a statistically significant difference between the means in two unrelated groups.

Null and alternative hypotheses for the independent t-test

The null hypothesis for the independent t-test is that the population means from the two unrelated groups are equal:

H 0 : µ 1 = µ 2

In most cases, we are looking to see if we can show that we can reject the null hypothesis and accept the alternative hypothesis, which is that the population means are not equal:

H A : µ 1 ≠ µ 2

To do this, we need to set a significance level (also called alpha) that allows us to either reject or accept the alternative hypothesis. Most commonly, this value is set at 0.05.

What do you need to run an independent t-test?

In order to run an independent t-test, you need the following:

- One independent, categorical variable that has two levels/groups.

- One continuous dependent variable.

Unrelated groups

Unrelated groups, also called unpaired groups or independent groups, are groups in which the cases (e.g., participants) in each group are different. Often we are investigating differences in individuals, which means that when comparing two groups, an individual in one group cannot also be a member of the other group and vice versa. An example would be gender - an individual would have to be classified as either male or female – not both.

Assumption of normality of the dependent variable

The independent t-test requires that the dependent variable is approximately normally distributed within each group.

Note: Technically, it is the residuals that need to be normally distributed, but for an independent t-test, both will give you the same result.

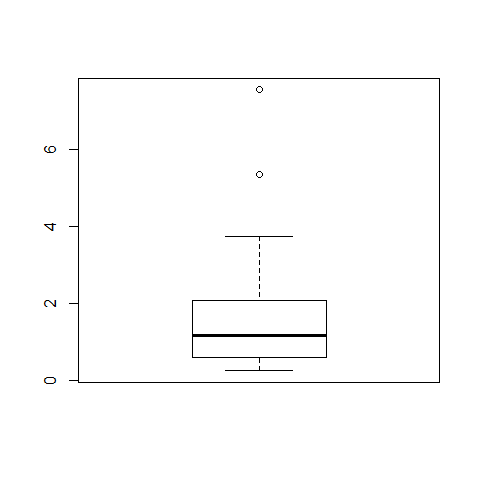

You can test for this using a number of different tests, but the Shapiro-Wilk test of normality or a graphical method, such as a Q-Q Plot, are very common. You can run these tests using SPSS Statistics, the procedure for which can be found in our Testing for Normality guide. However, the t-test is described as a robust test with respect to the assumption of normality. This means that some deviation away from normality does not have a large influence on Type I error rates. The exception to this is if the ratio of the largest to the smallest group size is greater than 1.5.

What to do when you violate the normality assumption

If you find that the data for one or both of your groups are not approximately normally distributed and group sizes differ greatly, you have two options: (1) transform your data so that the data become normally distributed (to do this in SPSS Statistics, see our guide on Transforming Data), or (2) run the Mann-Whitney U test, which is a non-parametric test that does not require the assumption of normality (to run this test in SPSS Statistics, see our guide on the Mann-Whitney U Test).

Assumption of homogeneity of variance

The independent t-test assumes the variances of the two groups you are measuring are equal in the population. If your variances are unequal, this can affect the Type I error rate. The assumption of homogeneity of variance can be tested using Levene's Test of Equality of Variances, which is produced in SPSS Statistics when running the independent t-test procedure. If you have run Levene's Test of Equality of Variances in SPSS Statistics, you will get a result similar to that below:

This test for homogeneity of variance provides an F -statistic and a significance value ( p -value). We are primarily concerned with the significance value – if it is greater than 0.05 (i.e., p > .05), our group variances can be treated as equal. However, if p < 0.05, we have unequal variances and we have violated the assumption of homogeneity of variances.

Overcoming a violation of the assumption of homogeneity of variance

If the Levene's Test for Equality of Variances is statistically significant, which indicates that the group variances are unequal in the population, you can correct for this violation by not using the pooled estimate for the error term for the t -statistic, but instead using an adjustment to the degrees of freedom using the Welch-Satterthwaite method. In all reality, you will probably never have heard of these adjustments because SPSS Statistics hides this information and simply labels the two options as "Equal variances assumed" and "Equal variances not assumed" without explicitly stating the underlying tests used. However, you can see the evidence of these tests as below:

From the result of Levene's Test for Equality of Variances, we can reject the null hypothesis that there is no difference in the variances between the groups and accept the alternative hypothesis that there is a statistically significant difference in the variances between groups. The effect of not being able to assume equal variances is evident in the final column of the above figure where we see a reduction in the value of the t -statistic and a large reduction in the degrees of freedom (df). This has the effect of increasing the p -value above the critical significance level of 0.05. In this case, we therefore fail to reject the null hypothesis and conclude that the difference between the means is not statistically significant. This would not have been our conclusion had we not tested for homogeneity of variances.
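To see why the degrees of freedom shrink when equal variances are not assumed, the two versions of the t-statistic can be computed side by side. The sketch below uses only the Python standard library; the function names and both data sets are hypothetical and are not the data behind the SPSS Statistics output discussed above.

```python
from statistics import mean, variance

def pooled_t_and_df(a, b):
    """Student's t-statistic with a pooled variance estimate (equal variances assumed)."""
    na, nb = len(a), len(b)
    pooled_var = ((na - 1) * variance(a) + (nb - 1) * variance(b)) / (na + nb - 2)
    t = (mean(a) - mean(b)) / (pooled_var * (1 / na + 1 / nb)) ** 0.5
    return t, na + nb - 2

def welch_t_and_df(a, b):
    """Welch's t-statistic with Welch-Satterthwaite degrees of freedom."""
    va, vb = variance(a) / len(a), variance(b) / len(b)
    t = (mean(a) - mean(b)) / (va + vb) ** 0.5
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df

# Hypothetical groups with clearly different spreads.
group_a = [10, 11, 9, 10, 10, 11, 9, 10]
group_b = [4, 16, 8, 14, 2, 18]

print("Equal variances assumed:     t = %.3f, df = %.3f" % pooled_t_and_df(group_a, group_b))
print("Equal variances not assumed: t = %.3f, df = %.3f" % welch_t_and_df(group_a, group_b))
```

The Welch-Satterthwaite degrees of freedom never exceed the pooled value of n 1 + n 2 − 2, which is why the adjusted test is the more conservative of the two when variances differ.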

Reporting the result of an independent t-test

When reporting the result of an independent t-test, you need to include the t -statistic value, the degrees of freedom (df) and the significance value of the test ( p -value). The format of the test result is: t (df) = t -statistic, p = significance value. Therefore, for the example above, you could report the result as t (7.001) = 2.233, p = 0.061.
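The reporting format described above can be produced with a small formatting helper. This is a sketch only; the name `format_t_result` is an assumption made for illustration.

```python
def format_t_result(t_stat, df, p_value):
    """Format a t-test result as 't(df) = t-statistic, p = significance value'."""
    # ':g' keeps whole-number df as '38' and fractional df as '7.001'.
    return f"t({df:g}) = {t_stat:.3f}, p = {p_value:.3f}"

print(format_t_result(2.233, 7.001, 0.061))
```

Fractional degrees of freedom, such as 7.001, appear when the "Equal variances not assumed" result is the one being reported.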

Fully reporting your results

In order to provide enough information for readers to fully understand the results when you have run an independent t-test, you should include the result of normality tests, Levene's Equality of Variances test, the two group means and standard deviations, the actual t-test result and the direction of the difference (if any). In addition, you might also wish to include the difference between the groups along with a 95% confidence interval. For example:

Inspection of Q-Q Plots revealed that cholesterol concentration was normally distributed for both groups and that there was homogeneity of variance as assessed by Levene's Test for Equality of Variances. Therefore, an independent t-test was run on the data with a 95% confidence interval (CI) for the mean difference. It was found that after the two interventions, cholesterol concentrations in the dietary group (6.15 ± 0.52 mmol/L) were significantly higher than the exercise group (5.80 ± 0.38 mmol/L) ( t (38) = 2.470, p = 0.018) with a difference of 0.35 (95% CI, 0.06 to 0.64) mmol/L.

To know how to run an independent t-test in SPSS Statistics, see our SPSS Statistics Independent-Samples T-Test guide. Alternatively, you can carry out an independent-samples t-test using Excel, R and RStudio.

Step-by-step guide to hypothesis testing in statistics

Hypothesis testing in statistics helps us use data to make informed decisions. It starts with an assumption or guess about a group or population—something we believe might be true. We then collect sample data to check if there is enough evidence to support or reject that guess. This method is useful in many fields, like science, business, and healthcare, where decisions need to be based on facts.

Learning how to do hypothesis testing in statistics step-by-step can help you better understand data and make smarter choices, even when things are uncertain. This guide will take you through each step, from creating your hypothesis to making sense of the results, so you can see how it works in practical situations.

What is Hypothesis Testing?


Hypothesis testing is a method for determining whether data supports a certain idea or assumption about a larger group. It starts by making a guess, like an average or a proportion, and then uses a small sample of data to see if that guess seems true or not.

For example, if a company wants to know if its new product is more popular than its old one, it can use hypothesis testing. They start with a statement like “The new product is not more popular than the old one” (this is the null hypothesis) and compare it with “The new product is more popular” (this is the alternative hypothesis). Then, they look at customer feedback to see if there’s enough evidence to reject the first statement and support the second one.

Simply put, hypothesis testing is a way to use data to help make decisions and understand what the data is really telling us, even when we don’t have all the answers.

Importance Of Hypothesis Testing In Decision-Making And Data Analysis

Hypothesis testing is important because it helps us make smart choices and understand data better. Here’s why it’s useful:

- Reduces Guesswork : It helps us see if our guesses or ideas are likely correct, even when we don’t have all the details.

- Uses Real Data : Instead of just guessing, it checks if our ideas match up with real data, which makes our decisions more reliable.

- Avoids Errors : It helps us avoid mistakes by carefully checking if our ideas are right so we don’t make costly errors.

- Shows What to Do Next : It tells us if our ideas work or not, helping us decide whether to keep, change, or drop something. For example, a company might test a new ad and decide what to do based on the results.

- Confirms Research Findings : It makes sure that research results are accurate and not just random chance so that we can trust the findings.

Here’s a simple guide to understanding hypothesis testing, with an example:

1. Set Up Your Hypotheses

Explanation: Start by defining two statements:

- Null Hypothesis (H0): This is the idea that there is no change or effect. It’s what you assume is true.

- Alternative Hypothesis (H1): This is what you want to test. It suggests there is a change or effect.

Example: Suppose a company says their new batteries last an average of 500 hours. To check this:

- Null Hypothesis (H0): The average battery life is 500 hours.

- Alternative Hypothesis (H1): The average battery life is not 500 hours.

2. Choose the Test

Explanation: Pick a statistical test that fits your data and your hypotheses. Different tests are used for various kinds of data.

Example: Since you’re comparing the average battery life, you use a one-sample t-test.

3. Set the Significance Level

Explanation: Decide how much risk you’re willing to take of rejecting the null hypothesis when it is actually true. This is called the significance level (alpha), often set at 0.05 or 5%.

Example: You choose a significance level of 0.05, meaning you’re okay with a 5% chance of rejecting the null hypothesis when it is in fact true.

4. Gather and Analyze Data

Explanation: Collect your data and perform the test. Calculate the test statistic to see how far your sample result is from what you assumed.

Example: You test 30 batteries and find they last an average of 485 hours. You then calculate how this average compares to the claimed 500 hours using the t-test.
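The test statistic for this one-sample t-test is the distance between the sample mean and the claimed mean, measured in standard errors. The sketch below uses the numbers from the example (485, 500, and 30 batteries); the sample standard deviation of 18 hours is a hypothetical value, since the example does not state one, and the function name is illustrative.

```python
from math import sqrt

def one_sample_t(sample_mean, mu0, sample_sd, n):
    """t-statistic for H0: mu = mu0, computed from summary statistics."""
    standard_error = sample_sd / sqrt(n)
    return (sample_mean - mu0) / standard_error

# 485, 500, and n = 30 come from the example above.
# sample_sd = 18 is an assumed value used only to make the sketch runnable.
t_stat = one_sample_t(sample_mean=485, mu0=500, sample_sd=18, n=30)
print(f"t = {t_stat:.2f} with {30 - 1} degrees of freedom")
```

A different standard deviation would give a different t-statistic, so the printed value illustrates the calculation rather than reproducing the example's result.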

5. Find the p-Value

Explanation: The p-value tells you the probability of getting a result at least as extreme as yours if the null hypothesis is true.

Example: You find a p-value of 0.0001. This means there’s a very small chance (0.01%) of getting a sample average at least 15 hours away from 500 hours, in either direction, if the true average is 500 hours.

6. Make Your Decision

Explanation: Compare the p-value to your significance level. If the p-value is smaller, you reject the null hypothesis. If it’s larger, you do not reject it.

Example: Since 0.0001 is much less than 0.05, you reject the null hypothesis. This means the data suggests the average battery life is different from 500 hours.
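The decision step is a single comparison, which can be sketched as follows. The function name `decide` is illustrative, and treating a p-value exactly equal to alpha as a rejection is a convention assumed here.

```python
def decide(p_value, alpha=0.05):
    """Compare a p-value with the significance level and state the decision."""
    if not 0 <= p_value <= 1:
        raise ValueError("p_value must be between 0 and 1")
    # Never report "accept H0": the only outcomes are reject or fail to reject.
    return "reject H0" if p_value <= alpha else "fail to reject H0"

print(decide(0.0001))  # the battery example
print(decide(0.061))   # a p-value above 0.05
```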

7. Report Your Findings

Explanation: Summarize what the results mean. State whether you rejected the null hypothesis and what that implies.

Example: You conclude that the average battery life is likely different from 500 hours. This suggests the company’s claim might not be accurate.

Hypothesis testing is a way to use data to check if your guesses or assumptions are likely true. By following these steps—setting up your hypotheses, choosing the right test, deciding on a significance level, analyzing your data, finding the p-value, making a decision, and reporting results—you can determine if your data supports or challenges your initial idea.

Understanding Hypothesis Testing: A Simple Explanation

Hypothesis testing is a way to use data to make decisions. Here’s a straightforward guide:

1. What Are the Null and Alternative Hypotheses?

- Null Hypothesis (H0): This is your starting assumption. It says that nothing has changed or that there is no effect. It’s what you assume to be true until your data shows otherwise. Example: If a company says their batteries last 500 hours, the null hypothesis is: “The average battery life is 500 hours.” This means you think the claim is correct unless you find evidence to prove otherwise.

- Alternative Hypothesis (H1): This is what you want to find out. It suggests that there is an effect or a difference. It’s what you are testing to see if it might be true. Example: To test the company’s claim, you might say: “The average battery life is not 500 hours.” This means you think the average battery life might be different from what the company says.

2. One-Tailed vs. Two-Tailed Tests

- One-Tailed Test: This test checks for an effect in only one direction. You use it when you’re only interested in finding out if something is either more or less than a specific value. Example: If you think the battery lasts longer than 500 hours, you would use a one-tailed test to see if the battery life is significantly more than 500 hours.

- Two-Tailed Test: This test checks for an effect in both directions. Use this when you want to see if something is different from a specific value, whether it’s more or less. Example: If you want to see if the battery life is different from 500 hours, whether it’s more or less, you would use a two-tailed test. This checks for any significant difference, regardless of the direction.
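The difference between the two kinds of test shows up in how the p-value is computed from the same test statistic. The sketch below uses a z statistic and the standard normal distribution from the Python standard library as a simplifying assumption; a t-test would use the t distribution instead. The function name and dictionary keys are illustrative.

```python
from statistics import NormalDist

def p_values_from_z(z):
    """One-tailed and two-tailed p-values for a standard normal test statistic."""
    lower = NormalDist().cdf(z)   # P(Z <= z), for H1: parameter is less
    upper = 1 - lower             # P(Z >= z), for H1: parameter is greater
    return {
        "less": lower,
        "greater": upper,
        "two-sided": 2 * min(lower, upper),  # H1: parameter is different
    }

for alternative, p in p_values_from_z(1.96).items():
    print(f"{alternative}: p = {p:.4f}")
```

For the same statistic, the two-tailed p-value is twice the smaller one-tailed p-value, so a two-tailed test needs stronger evidence to reach the same significance level.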

3. Common Misunderstandings

- Clarification: Hypothesis testing doesn’t prove that the null hypothesis is true. It just helps you decide if you should reject it. If there isn’t enough evidence against it, you don’t reject it, but that doesn’t mean it’s definitely true.

- Clarification: A small p-value shows that your data is unlikely if the null hypothesis is true. It suggests that the alternative hypothesis might be right, but it doesn’t prove the null hypothesis is false.

- Clarification: The significance level (alpha) is a set threshold, like 0.05, that helps you decide how much risk you’re willing to take for making a wrong decision. It should be chosen carefully, not randomly.

- Clarification: Hypothesis testing helps you make decisions based on data, but it doesn’t guarantee your results are correct. The quality of your data and the right choice of test affect how reliable your results are.

Benefits and Limitations of Hypothesis Testing

- Clear Decisions: Hypothesis testing helps you make clear decisions based on data. It shows whether the evidence supports or goes against your initial idea.

- Objective Analysis: It relies on data rather than personal opinions, so your decisions are based on facts rather than feelings.

- Concrete Numbers: You get specific numbers, like p-values, to understand how strong the evidence is against your idea.

- Control Risk: You can set a risk level (alpha level) to manage the chance of making an error, which helps avoid incorrect conclusions.

- Widely Used: It can be used in many areas, from science and business to social studies and engineering, making it a versatile tool.

Limitations

- Sample Size Matters: The results can be affected by the size of the sample. Small samples might give unreliable results, while large samples might find differences that aren’t meaningful in real life.

- Risk of Misinterpretation: A small p-value means the results are unlikely if the null hypothesis is true, but it doesn’t show how important the effect is.

- Needs Assumptions: Hypothesis testing requires certain conditions, like data being normally distributed. If these aren’t met, the results might not be accurate.

- Simple Decisions: It often results in a basic yes or no decision without giving detailed information about the size or impact of the effect.

- Can Be Misused: Sometimes, people misuse hypothesis testing, tweaking data to get a desired result or focusing only on whether the result is statistically significant.

- No Absolute Proof: Hypothesis testing doesn’t prove that your hypothesis is true. It only helps you decide if there’s enough evidence to reject the null hypothesis, so the conclusions are based on likelihood, not certainty.
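The first two limitations can be illustrated with a short sketch. It assumes a hypothetical observed mean that is only 0.5 hours above the claimed 500 hours, a known standard deviation of 20 hours, and a two-tailed z-test; only the sample size changes.

```python
from math import sqrt
from statistics import NormalDist

def two_tailed_p(x_bar, mu_0, sigma, n):
    """Two-tailed z-test p-value for a sample mean (sigma assumed known)."""
    z = (x_bar - mu_0) / (sigma / sqrt(n))
    return 2 * (1 - NormalDist().cdf(abs(z)))

# Same tiny difference (0.5 hours) at increasingly large sample sizes.
for n in (100, 10_000, 1_000_000):
    p = two_tailed_p(500.5, 500.0, 20.0, n)
    print(f"n = {n:>9}: p-value = {p:.4g}")
```

The difference stays at half an hour in every row, yet the p-value shrinks as the sample grows. A small p-value therefore says nothing about whether the difference matters in practice.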

Final Thoughts

Hypothesis testing helps you make decisions based on data. It involves setting up your initial idea, picking a significance level, doing the test, and looking at the results. By following these steps, you can make sure your conclusions are based on solid information, not just guesses.

This approach lets you see if the evidence supports or contradicts your initial idea, helping you make better decisions. But remember that hypothesis testing isn’t perfect. Things like sample size and assumptions can affect the results, so it’s important to be aware of these limitations.

In simple terms, using a step-by-step guide for hypothesis testing is a great way to better understand your data. Follow the steps carefully and keep in mind the method’s limits.

What is the difference between one-tailed and two-tailed tests?

A one-tailed test assesses the probability of the observed data in one direction (either greater than or less than a certain value). In contrast, a two-tailed test looks at both directions (greater than and less than) to detect any significant deviation from the null hypothesis.

How do you choose the appropriate test for hypothesis testing?

The choice of test depends on the type of data you have and the hypotheses you are testing. Common tests include t-tests, chi-square tests, and ANOVA. For more details about ANOVA, see Complete Details on What is ANOVA in Statistics? It’s important to match the test to the data characteristics and the research question.

What is the role of sample size in hypothesis testing?

Sample size affects the reliability of hypothesis testing. Larger samples provide more reliable estimates and can detect smaller effects, while smaller samples may lead to less accurate results and reduced power.
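As a rough illustration of how sample size drives power, the sketch below computes the power of a right-tailed z-test. The function name, the effect of 5 hours, and the standard deviation of 20 hours are assumptions made for this example.

```python
from math import sqrt
from statistics import NormalDist

def power_right_tailed_z(effect, sigma, n, alpha=0.05):
    """Power of a right-tailed z-test when the true mean is mu_0 + effect."""
    std_normal = NormalDist()
    z_crit = std_normal.inv_cdf(1 - alpha)      # rejection threshold for z
    # Under the alternative, z is shifted by effect * sqrt(n) / sigma.
    return 1 - std_normal.cdf(z_crit - effect * sqrt(n) / sigma)

# Hypothetical: true battery life is 5 hours above the claim, sd = 20 hours.
for n in (10, 25, 100, 400):
    print(f"n = {n:>3}: power = {power_right_tailed_z(5.0, 20.0, n):.3f}")
```

Power rises with the sample size, so a larger sample is more likely to detect the same true effect.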

Can hypothesis testing prove that a hypothesis is true?

Hypothesis testing cannot prove that a hypothesis is true. It can only tell you whether the data provide enough evidence to reject the null hypothesis. A result can indicate whether the data is consistent with the null hypothesis or not, but it does not prove the alternative hypothesis with certainty.


t-test Calculator


Welcome to our t-test calculator! Here you can not only easily perform one-sample t-tests, but also two-sample t-tests, as well as paired t-tests.

Do you prefer to find the p-value from t-test, or would you rather find the t-test critical values? Well, this t-test calculator can do both! 😊

What does a t-test tell you? Take a look at the text below, where we explain what actually gets tested when various types of t-tests are performed. Also, we explain when to use t-tests (in particular, whether to use the z-test vs. t-test) and what assumptions your data should satisfy for the results of a t-test to be valid. If you've ever wanted to know how to do a t-test by hand, we provide the necessary t-test formula, as well as tell you how to determine the number of degrees of freedom in a t-test.

When to use a t-test?

A t-test is one of the most popular statistical tests for location, i.e., it deals with the population(s) mean value(s).

There are different types of t-tests that you can perform:

- A one-sample t-test;

- A two-sample t-test; and

- A paired t-test.

In the next section, we explain when to use which. Remember that a t-test can only be used for one or two groups. If you need to compare three (or more) means, use the analysis of variance (ANOVA) method.

The t-test is a parametric test, meaning that your data has to fulfill some assumptions:

- The data points are independent; AND

- The data, at least approximately, follow a normal distribution.

If your sample doesn't fit these assumptions, you can resort to nonparametric alternatives. Visit our Mann–Whitney U test calculator or the Wilcoxon rank-sum test calculator to learn more. Other possibilities include the Wilcoxon signed-rank test or the sign test.

Which t-test?

Your choice of t-test depends on whether you are studying one group or two groups:

One sample t-test

Choose the one-sample t-test to check if the mean of a population is equal to some pre-set hypothesized value.

The average volume of a drink sold in 0.33 l cans — is it really equal to 330 ml?

The average weight of people from a specific city — is it different from the national average?

Two-sample t-test

Choose the two-sample t-test to check if the difference between the means of two populations is equal to some pre-determined value when the two samples have been chosen independently of each other.

In particular, you can use this test to check whether the two groups are different from one another.

The average difference in weight gain in two groups of people: one group was on a high-carb diet and the other on a high-fat diet.

The average difference in the results of a math test from students at two different universities.

This test is sometimes referred to as an independent samples t-test, or an unpaired samples t-test.

Paired t-test

A paired t-test is used to investigate the change in the mean of a population before and after some experimental intervention, based on a paired sample, i.e., when each subject has been measured twice: before and after treatment.

In particular, you can use this test to check whether, on average, the treatment has had any effect on the population.

The change in student test performance before and after taking a course.

The change in blood pressure in patients before and after administering some drug.

How to do a t-test?

So, you've decided which t-test to perform. These next steps will tell you how to calculate the p-value from t-test or its critical values, and then which decision to make about the null hypothesis.

Decide on the alternative hypothesis:

Use a two-tailed t-test if you only care whether the population's mean (or, in the case of two populations, the difference between the populations' means) agrees or disagrees with the pre-set value.

Use a one-tailed t-test if you want to test whether this mean (or difference in means) is greater/less than the pre-set value.

Compute your T-score value:

Formulas for the test statistic in t-tests include the sample size, as well as its mean and standard deviation. The exact formula depends on the t-test type; check the sections dedicated to each particular test for more details.

Determine the degrees of freedom for the t-test:

The degrees of freedom are the number of observations in a sample that are free to vary as we estimate statistical parameters. In the simplest case, the number of degrees of freedom equals your sample size minus the number of parameters you need to estimate. Again, the exact formula depends on the t-test you want to perform; check the sections below for details.

The degrees of freedom are essential, as they determine the distribution followed by your T-score (under the null hypothesis). If there are d degrees of freedom, then the distribution of the test statistic is the t-Student distribution with d degrees of freedom. This distribution has a shape similar to N(0,1) (bell-shaped and symmetric) but has heavier tails. If the number of degrees of freedom is large (>30), which typically happens for large samples, the t-Student distribution is practically indistinguishable from N(0,1).
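The heavier tails can be checked numerically. The sketch below evaluates the standard closed-form density of the t-Student distribution at a point in the tail and compares it with the N(0,1) density. The function name `t_pdf` and the evaluation point t = 3 are choices made for this illustration.

```python
from math import exp, lgamma, pi, sqrt
from statistics import NormalDist

def t_pdf(t, d):
    """Density of the t-Student distribution with d degrees of freedom."""
    # lgamma keeps the ratio of gamma functions stable for large d.
    coef = exp(lgamma((d + 1) / 2) - lgamma(d / 2)) / sqrt(d * pi)
    return coef * (1 + t * t / d) ** (-(d + 1) / 2)

normal_density = NormalDist().pdf(3.0)
for d in (3, 30, 300):
    print(f"d = {d:>3}: t density at 3 = {t_pdf(3.0, d):.5f}")
print(f"N(0,1) density at 3 = {normal_density:.5f}")
```

For few degrees of freedom the density far from zero is clearly larger than for N(0,1), and the gap closes as d grows.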

💡 The t-Student distribution owes its name to William Sealy Gosset, who, in 1908, published his paper on the t-test under the pseudonym "Student". Gosset worked at the famous Guinness Brewery in Dublin, Ireland, and devised the t-test as an economical way to monitor the quality of beer. Cheers! 🍺🍺🍺

p-value from t-test

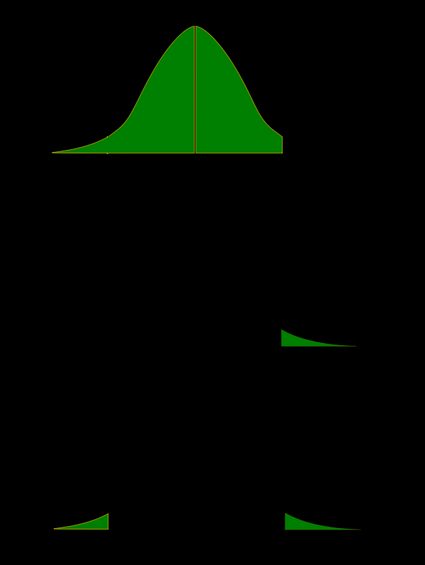

Recall that the p-value is the probability (calculated under the assumption that the null hypothesis is true) that the test statistic will produce values at least as extreme as the T-score produced for your sample . As probabilities correspond to areas under the density function, p-value from t-test can be nicely illustrated with the help of the following pictures:

The following formulae say how to calculate p-value from t-test. By cdf t,d we denote the cumulative distribution function of the t-Student distribution with d degrees of freedom:

p-value from left-tailed t-test:

$\text{p-value} = \mathrm{cdf}_{t,d}(t_{\text{score}})$

p-value from right-tailed t-test:

$\text{p-value} = 1 - \mathrm{cdf}_{t,d}(t_{\text{score}})$

p-value from two-tailed t-test:

$\text{p-value} = 2 \times \mathrm{cdf}_{t,d}(-|t_{\text{score}}|)$

or, equivalently: $\text{p-value} = 2 - 2 \times \mathrm{cdf}_{t,d}(|t_{\text{score}}|)$

However, the cdf of the t-distribution is given by a somewhat complicated formula. To find the p-value by hand, you would need to resort to statistical tables, where approximate cdf values are collected, or to specialized statistical software. Fortunately, our t-test calculator determines the p-value from t-test for you in the blink of an eye!
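If you are curious how software might evaluate these formulas, here is a minimal standard-library sketch. It is not how this calculator works internally; it is one possible approach that computes the cdf by numerical integration (Simpson's rule) after the substitution t = sqrt(d) * tan(theta). The names `t_cdf` and `p_value`, the number of integration steps, and the restriction to d ≥ 1 are assumptions of this example.

```python
from math import atan, cos, exp, lgamma, pi, sqrt

def t_cdf(t, d, steps=2000):
    """cdf of the t-Student distribution with d >= 1 degrees of freedom.

    With t = sqrt(d) * tan(theta) the density becomes a constant times
    cos(theta) ** (d - 1), integrated here with Simpson's rule
    (steps must be even).
    """
    upper = atan(t / sqrt(d))
    h = upper / steps
    total = 1.0 + cos(upper) ** (d - 1)     # integrand at both endpoints
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * cos(i * h) ** (d - 1)
    coef = exp(lgamma((d + 1) / 2) - lgamma(d / 2)) / sqrt(pi)
    return 0.5 + coef * total * h / 3

def p_value(t_score, d, tail="two"):
    """p-value for a left-, right-, or two-tailed t-test."""
    if tail == "left":
        return t_cdf(t_score, d)
    if tail == "right":
        return 1 - t_cdf(t_score, d)
    if tail == "two":
        return 2 * t_cdf(-abs(t_score), d)
    raise ValueError("tail must be 'left', 'right', or 'two'")

for tail in ("left", "right", "two"):
    print(f"{tail:>5}-tailed p-value: {p_value(2.0, 10, tail):.4f}")
```

In everyday work you would normally rely on an established statistics package rather than hand-written integration; the sketch only shows that the three formulas are simple once the cdf is available.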

t-test critical values

Recall that in the critical values approach to hypothesis testing, you need to set a significance level, α, before computing the critical values, which in turn give rise to critical regions (a.k.a. rejection regions).

Formulas for critical values employ the quantile function of t-distribution, i.e., the inverse of the cdf:

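Critical value for left-tailed t-test: $\mathrm{cdf}_{t,d}^{-1}(\alpha)$

critical region:

$(-\infty, \mathrm{cdf}_{t,d}^{-1}(\alpha)]$

Critical value for right-tailed t-test: $\mathrm{cdf}_{t,d}^{-1}(1-\alpha)$

critical region:

$[\mathrm{cdf}_{t,d}^{-1}(1-\alpha), \infty)$

Critical values for two-tailed t-test: $\pm\mathrm{cdf}_{t,d}^{-1}(1-\alpha/2)$

critical region:

$(-\infty, -\mathrm{cdf}_{t,d}^{-1}(1-\alpha/2)] \cup [\mathrm{cdf}_{t,d}^{-1}(1-\alpha/2), \infty)$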

To decide the fate of the null hypothesis, just check if your T-score lies within the critical region:

If your T-score belongs to the critical region, reject the null hypothesis and accept the alternative hypothesis.

If your T-score is outside the critical region, then you don't have enough evidence to reject the null hypothesis.
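The critical values approach can be sketched in code as well. The example below is an illustration under stated assumptions, not the calculator's implementation: the cdf is computed by numerical integration and then inverted by bisection, and the names `t_cdf`, `t_quantile`, and `in_critical_region` are made up for this sketch.

```python
from math import atan, cos, exp, lgamma, pi, sqrt

def t_cdf(t, d, steps=2000):
    """cdf of the t-Student distribution (d >= 1) via Simpson's rule."""
    upper = atan(t / sqrt(d))               # substitution t = sqrt(d)*tan(theta)
    h = upper / steps
    total = 1.0 + cos(upper) ** (d - 1)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * cos(i * h) ** (d - 1)
    coef = exp(lgamma((d + 1) / 2) - lgamma(d / 2)) / sqrt(pi)
    return 0.5 + coef * total * h / 3

def t_quantile(p, d):
    """Inverse of the cdf for 0 < p < 1, found by bisection."""
    lo, hi = -1.0, 1.0
    while t_cdf(lo, d) > p:                 # widen the bracket to the left
        lo *= 2
    while t_cdf(hi, d) < p:                 # widen the bracket to the right
        hi *= 2
    for _ in range(60):                     # halve the bracket repeatedly
        mid = (lo + hi) / 2
        if t_cdf(mid, d) < p:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2

def in_critical_region(t_score, alpha, d, tail="two"):
    """True if the T-score falls in the rejection region."""
    if tail == "left":
        return t_score <= t_quantile(alpha, d)
    if tail == "right":
        return t_score >= t_quantile(1 - alpha, d)
    if tail == "two":
        return abs(t_score) >= t_quantile(1 - alpha / 2, d)
    raise ValueError("tail must be 'left', 'right', or 'two'")

critical = t_quantile(0.975, 10)
print(f"Two-tailed critical values (alpha = 0.05, d = 10): +/-{critical:.3f}")
print("Reject H0 for T-score 2.5?", in_critical_region(2.5, 0.05, 10))
```

Bisection is slow but easy to verify, which makes it a reasonable choice for a teaching example. Both approaches lead to the same decision: the T-score lies in the critical region exactly when the p-value is at most α.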

How to use our t-test calculator

Choose the type of t-test you wish to perform:

A one-sample t-test (to test the mean of a single group against a hypothesized mean);

A two-sample t-test (to compare the means for two groups); or

A paired t-test (to check how the mean from the same group changes after some intervention).

Decide on the alternative hypothesis:

Two-tailed;

Left-tailed; or

Right-tailed.

This t-test calculator allows you to use either the p-value approach or the critical regions approach to hypothesis testing!

Enter your T-score and the number of degrees of freedom. If you don't know them, provide some data about your sample(s): sample size, mean, and standard deviation, and our t-test calculator will compute the T-score and degrees of freedom for you.

Once all the parameters are present, the p-value, or critical region, will immediately appear underneath the t-test calculator, along with an interpretation!

One-sample t-test

The null hypothesis is that the population mean is equal to some value $\mu_0$.

The alternative hypothesis is that the population mean is:

- different from $\mu_0$;

- smaller than $\mu_0$; or

- greater than $\mu_0$.

One-sample t-test formula:

$$t = \frac{\bar{x} - \mu_0}{s} \cdot \sqrt{n}$$

where:

- $\mu_0$: mean postulated in the null hypothesis;

- $n$: sample size;

- $\bar{x}$: sample mean; and

- $s$: sample standard deviation.

Number of degrees of freedom in t-test (one-sample) = $n - 1$.
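Here is a short Python sketch of the one-sample computation, following the drink-can example from earlier. The eight measured volumes are invented for illustration, and `one_sample_t` is a hypothetical helper name.

```python
from math import sqrt
from statistics import mean, stdev

def one_sample_t(sample, mu_0):
    """Return the T-score and degrees of freedom for a one-sample t-test."""
    n = len(sample)
    # stdev() is the sample standard deviation (divides by n - 1).
    t_score = (mean(sample) - mu_0) / stdev(sample) * sqrt(n)
    return t_score, n - 1

# Hypothetical volumes (ml) measured in eight cans labeled 330 ml.
volumes_ml = [326, 328, 328, 328, 329, 329, 331, 333]
t_score, dof = one_sample_t(volumes_ml, 330)
print(f"T-score = {t_score:.4f}, degrees of freedom = {dof}")
```

The resulting T-score and degrees of freedom are the two numbers you need to find a p-value or to compare against critical values.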

Two-sample t-test

The null hypothesis is that the actual difference between the two groups' means, $\mu_1$ and $\mu_2$, is equal to some pre-set value, $\Delta$.

The alternative hypothesis is that the difference $\mu_1 - \mu_2$ is:

- Different from $\Delta$;

- Smaller than $\Delta$; or

- Greater than $\Delta$.

In particular, if this pre-determined difference is zero ($\Delta = 0$):

The null hypothesis is that the population means are equal.

The alternative hypothesis is one of the following:

- $\mu_1$ and $\mu_2$ are different from one another;

- $\mu_1$ is smaller than $\mu_2$; or

- $\mu_1$ is greater than $\mu_2$.

Formally, to perform a t-test, we should additionally assume that the variances of the two populations are equal (this assumption is called the homogeneity of variance).

There is a version of a t-test that can be applied without the assumption of homogeneity of variance: it is called a Welch's t-test. For your convenience, we describe both versions.

Two-sample t-test if variances are equal

Use this test if you know that the two populations' variances are the same (or very similar).

Two-sample t-test formula (with equal variances):

$$t = \frac{\bar{x}_1 - \bar{x}_2 - \Delta}{s_p \cdot \sqrt{\frac{1}{n_1} + \frac{1}{n_2}}}$$

where $s_p$ is the so-called pooled standard deviation, which we compute as:

$$s_p = \sqrt{\frac{(n_1 - 1) s_1^2 + (n_2 - 1) s_2^2}{n_1 + n_2 - 2}}$$

and:

- $\Delta$: mean difference postulated in the null hypothesis;

- $n_1$: first sample size;

- $\bar{x}_1$: mean for the first sample;

- $s_1$: standard deviation in the first sample;

- $n_2$: second sample size;

- $\bar{x}_2$: mean for the second sample; and

- $s_2$: standard deviation in the second sample.

Number of degrees of freedom in t-test (two samples, equal variances) = $n_1 + n_2 - 2$.
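The equal-variance test can be sketched from summary statistics alone. The code below uses the standard pooled-variance form of the T-score; the helper name `pooled_two_sample_t` and the diet figures are hypothetical.

```python
from math import sqrt

def pooled_two_sample_t(mean1, s1, n1, mean2, s2, n2, delta=0.0):
    """T-score and degrees of freedom for the equal-variance two-sample t-test."""
    dof = n1 + n2 - 2
    # Pooled standard deviation: variances weighted by their degrees of freedom.
    s_p = sqrt(((n1 - 1) * s1 ** 2 + (n2 - 1) * s2 ** 2) / dof)
    t_score = (mean1 - mean2 - delta) / (s_p * sqrt(1 / n1 + 1 / n2))
    return t_score, dof

# Hypothetical weight gain (kg): high-carb group vs. high-fat group.
t_score, dof = pooled_two_sample_t(5.0, 2.0, 10, 3.0, 2.0, 10)
print(f"T-score = {t_score:.4f}, degrees of freedom = {dof}")
```

Working from means, standard deviations, and sample sizes mirrors the inputs the calculator asks for when you don't have the T-score yet.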

Two-sample t-test if variances are unequal (Welch's t-test)

Use this test if the variances of your populations are different.

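Two-sample Welch's t-test formula if variances are unequal:

$$t = \frac{\bar{x}_1 - \bar{x}_2 - \Delta}{\sqrt{\frac{s_1^2}{n_1} + \frac{s_2^2}{n_2}}}$$

where $\Delta$, $n_1$, $n_2$, $\bar{x}_1$, and $\bar{x}_2$ have the same meaning as in the equal-variance test, and:

- $s_1$: standard deviation in the first sample;

- $s_2$: standard deviation in the second sample.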

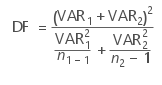

The number of degrees of freedom in a Welch's t-test (two-sample t-test with unequal variances) is difficult to determine exactly. We can approximate it with the help of the following Satterthwaite formula:

$$d \approx \frac{\left(\frac{s_1^2}{n_1} + \frac{s_2^2}{n_2}\right)^2}{\frac{\left(s_1^2/n_1\right)^2}{n_1 - 1} + \frac{\left(s_2^2/n_2\right)^2}{n_2 - 1}}$$

Alternatively, you can take the smaller of $n_1 - 1$ and $n_2 - 1$ as a conservative estimate for the number of degrees of freedom.

🔎 The Satterthwaite formula for the degrees of freedom can be rewritten as a scaled weighted harmonic mean of the degrees of freedom of the respective samples, $n_1 - 1$ and $n_2 - 1$, with weights that depend on $s_1^2/n_1$ and $s_2^2/n_2$, the estimated variances of the two sample means.
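A minimal sketch of Welch's test from summary statistics follows. It uses the standard Welch T-score and the Welch–Satterthwaite approximation for the degrees of freedom; the helper name `welch_t` and the sample figures are hypothetical.

```python
from math import sqrt

def welch_t(mean1, s1, n1, mean2, s2, n2, delta=0.0):
    """T-score, Satterthwaite degrees of freedom, and conservative estimate."""
    v1, v2 = s1 ** 2 / n1, s2 ** 2 / n2      # estimated variances of the means
    t_score = (mean1 - mean2 - delta) / sqrt(v1 + v2)
    dof = (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))
    conservative_dof = min(n1 - 1, n2 - 1)
    return t_score, dof, conservative_dof

# Hypothetical samples with clearly different spreads and sizes.
t_score, dof, conservative_dof = welch_t(12.0, 1.0, 5, 10.0, 3.0, 20)
print(f"T-score = {t_score:.4f}")
print(f"Satterthwaite degrees of freedom = {dof:.2f}")
print(f"Conservative degrees of freedom = {conservative_dof}")
```

Note that the Satterthwaite value is usually not an integer. It always lies between the conservative estimate and $n_1 + n_2 - 2$, the value used in the equal-variance test.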

Paired t-test

As we commonly perform a paired t-test when we have data about the same subjects measured twice (before and after some treatment), let us adopt the convention of referring to the samples as the pre-group and post-group.

The null hypothesis is that the true difference between the means of pre- and post-populations is equal to some pre-set value, $\Delta$.

The alternative hypothesis is that the actual difference between these means is:

- Different from $\Delta$;

- Smaller than $\Delta$; or

- Greater than $\Delta$.

Typically, this pre-determined difference is zero. We can then reformulate the hypotheses as follows:

The null hypothesis is that the pre- and post-means are the same, i.e., the treatment has no impact on the population.

The alternative hypothesis:

- The pre- and post-means are different from one another (treatment has some effect);

- The pre-mean is smaller than the post-mean (treatment increases the result); or

- The pre-mean is greater than the post-mean (treatment decreases the result).

Paired t-test formula

In fact, a paired t-test is technically the same as a one-sample t-test! Let us see why it is so. Let $x_1, \ldots, x_n$ be the pre observations and $y_1, \ldots, y_n$ the respective post observations. That is, $x_i, y_i$ are the before and after measurements of the $i$-th subject.

For each subject, compute the difference, $d_i := x_i - y_i$. All that happens next is just a one-sample t-test performed on the sample of differences $d_1, \ldots, d_n$. Take a look at the formula for the T-score:

$$t = \frac{\bar{x} - \Delta}{s} \cdot \sqrt{n}$$

where:

- $\Delta$: mean difference postulated in the null hypothesis;

- $n$: size of the sample of differences, i.e., the number of pairs;

- $\bar{x}$: mean of the sample of differences; and

- $s$: standard deviation of the sample of differences.

Number of degrees of freedom in t-test (paired): $n - 1$.
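The reduction to a one-sample test is easy to see in code. In the sketch below, the blood pressure readings are invented and `paired_t` is a hypothetical helper name; the differences are taken as pre minus post, matching the definition of the differences above.

```python
from math import sqrt
from statistics import mean, stdev

def paired_t(pre, post, delta=0.0):
    """T-score and degrees of freedom for a paired t-test."""
    if len(pre) != len(post):
        raise ValueError("pre and post must contain the same number of subjects")
    diffs = [x - y for x, y in zip(pre, post)]     # d_i = x_i - y_i
    n = len(diffs)
    # From here on this is a one-sample t-test on the differences.
    t_score = (mean(diffs) - delta) / stdev(diffs) * sqrt(n)
    return t_score, n - 1

# Hypothetical blood pressure (mmHg) before and after taking a drug.
pre = [150, 142, 138, 160, 155]
post = [144, 140, 135, 150, 151]
t_score, dof = paired_t(pre, post)
print(f"T-score = {t_score:.4f}, degrees of freedom = {dof}")
```

A positive T-score here means the pre-mean is greater than the post-mean, i.e., the readings went down on average after treatment.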

t-test vs Z-test

We use a Z-test when we want to test the population mean of a normally distributed dataset, which has a known population variance. If the number of degrees of freedom is large, then the t-Student distribution is very close to N(0,1).

Hence, if there are many data points (at least 30), you may swap a t-test for a Z-test, and the results will be almost identical. However, for small samples with unknown variance, remember to use the t-test because, in such cases, the t-Student distribution differs significantly from the N(0,1)!
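To see how close the two tests get, the sketch below computes the two-tailed p-value for the same score under N(0,1) and under the t-Student distribution with various degrees of freedom. The score of 2.0 and the numerical integration used for the t cdf are choices made for this illustration.

```python
from math import atan, cos, exp, lgamma, pi, sqrt
from statistics import NormalDist

def t_cdf(t, d, steps=2000):
    """cdf of the t-Student distribution (d >= 1) via Simpson's rule."""
    upper = atan(t / sqrt(d))               # substitution t = sqrt(d)*tan(theta)
    h = upper / steps
    total = 1.0 + cos(upper) ** (d - 1)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * cos(i * h) ** (d - 1)
    coef = exp(lgamma((d + 1) / 2) - lgamma(d / 2)) / sqrt(pi)
    return 0.5 + coef * total * h / 3

def two_tailed_p_t(score, d):
    """Two-tailed p-value from the t-Student distribution."""
    return 2 * t_cdf(-abs(score), d)

def two_tailed_p_z(score):
    """Two-tailed p-value from the standard normal distribution."""
    return 2 * NormalDist().cdf(-abs(score))

score = 2.0
for d in (5, 30, 1000):
    print(f"t-test, d = {d:>4}: p-value = {two_tailed_p_t(score, d):.4f}")
print(f"Z-test:           p-value = {two_tailed_p_z(score):.4f}")
```

For small d the t-test gives a noticeably larger p-value, so using a Z-test there would overstate the evidence against the null hypothesis.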

🙋 Have you concluded you need to perform the z-test? Head straight to our z-test calculator!

What is a t-test?

A t-test is a widely used statistical test that analyzes the means of one or two groups of data. For instance, a t-test is performed on medical data to determine whether a new drug really helps.

What are different types of t-tests?

Different types of t-tests are:

- One-sample t-test;

- Two-sample t-test; and

- Paired t-test.

How to find the t value in a one sample t-test?

To find the t-value:

- Subtract the null hypothesis mean from the sample mean value.

- Divide the difference by the standard deviation of the sample.

- Multiply the result by the square root of the sample size.