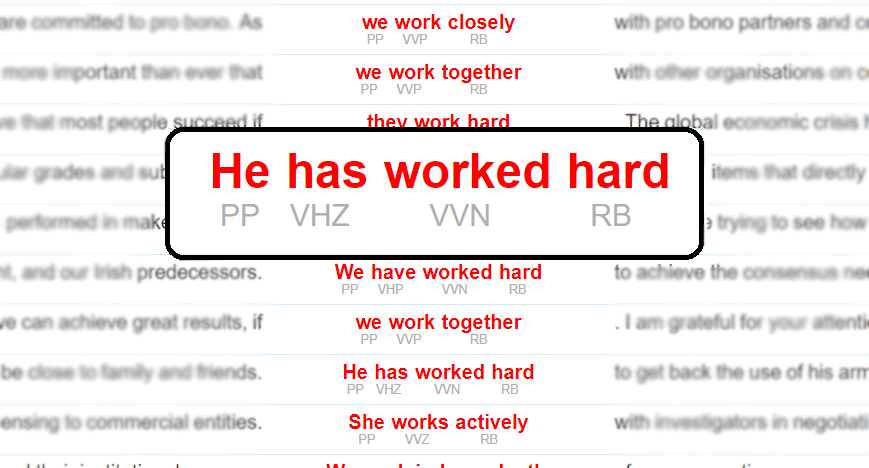

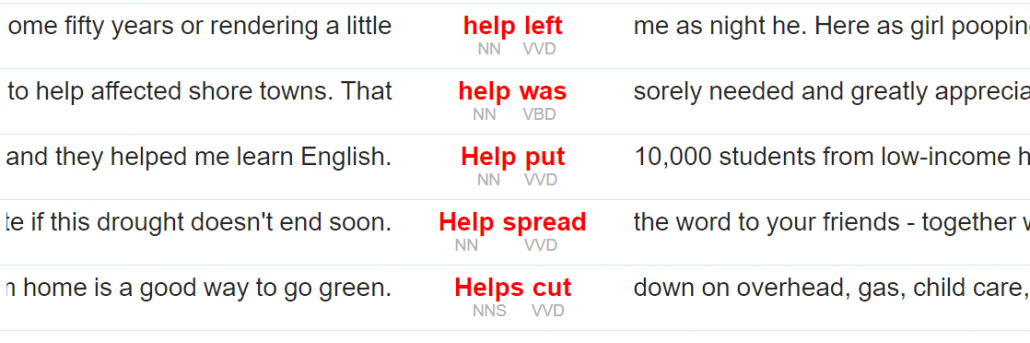

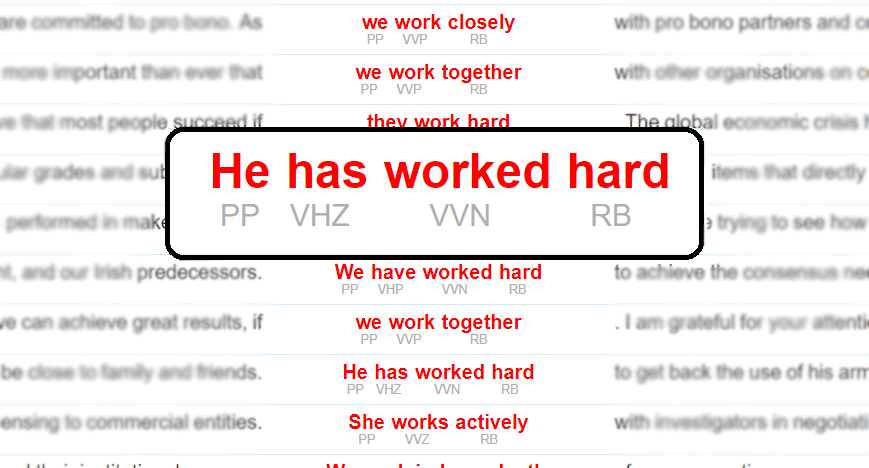

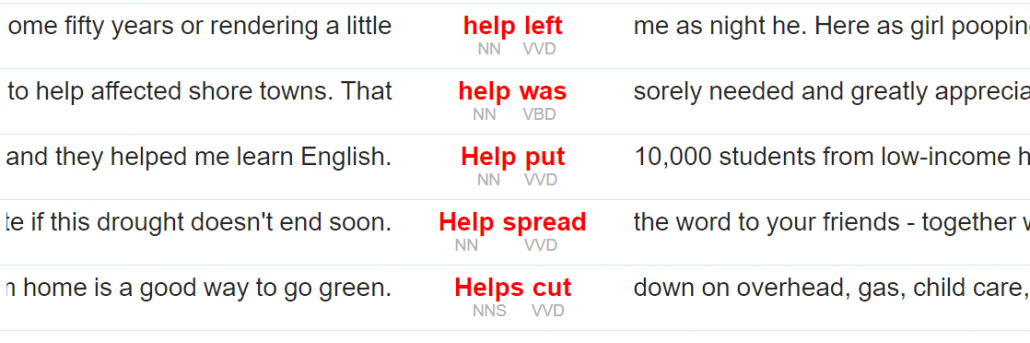

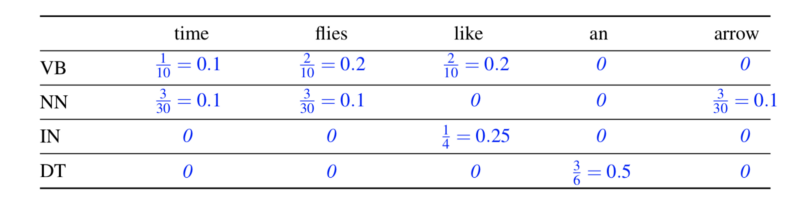

| b. | | *He sometimes to the cafe. | We can easily imagine a tagset in which the four distinct grammatical forms just discussed were all tagged as VB . Although this would be adequate for some purposes, a more fine-grained tagset provides useful information about these forms that can help other processors that try to detect patterns in tag sequences. The Brown tagset captures these distinctions, as summarized in 7.1 . | Form | Category | Tag |

|---|

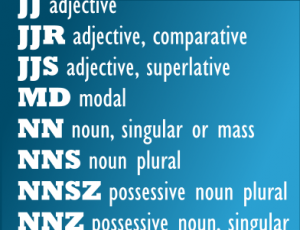

| go | base | VB | | goes | 3rd singular present | VBZ | | gone | past participle | VBN | | going | gerund | VBG | | went | simple past | VBD | Table 7.1: Some morphosyntactic distinctions in the Brown tagset In addition to this set of verb tags, the various forms of the verb to be have special tags: be/BE, being/BEG, am/BEM, are/BER, is /BEZ, been/BEN, were/BED and was/BEDZ (plus extra tags for negative forms of the verb). All told, this fine-grained tagging of verbs means that an automatic tagger that uses this tagset is effectively carrying out a limited amount of morphological analysis . Most part-of-speech tagsets make use of the same basic categories, such as noun, verb, adjective, and preposition. However, tagsets differ both in how finely they divide words into categories, and in how they define their categories. For example, is might be tagged simply as a verb in one tagset; but as a distinct form of the lexeme be in another tagset (as in the Brown Corpus). This variation in tagsets is unavoidable, since part-of-speech tags are used in different ways for different tasks. In other words, there is no one 'right way' to assign tags, only more or less useful ways depending on one's goals. 8 Summary- Words can be grouped into classes, such as nouns, verbs, adjectives, and adverbs. These classes are known as lexical categories or parts of speech. Parts of speech are assigned short labels, or tags, such as NN , VB ,

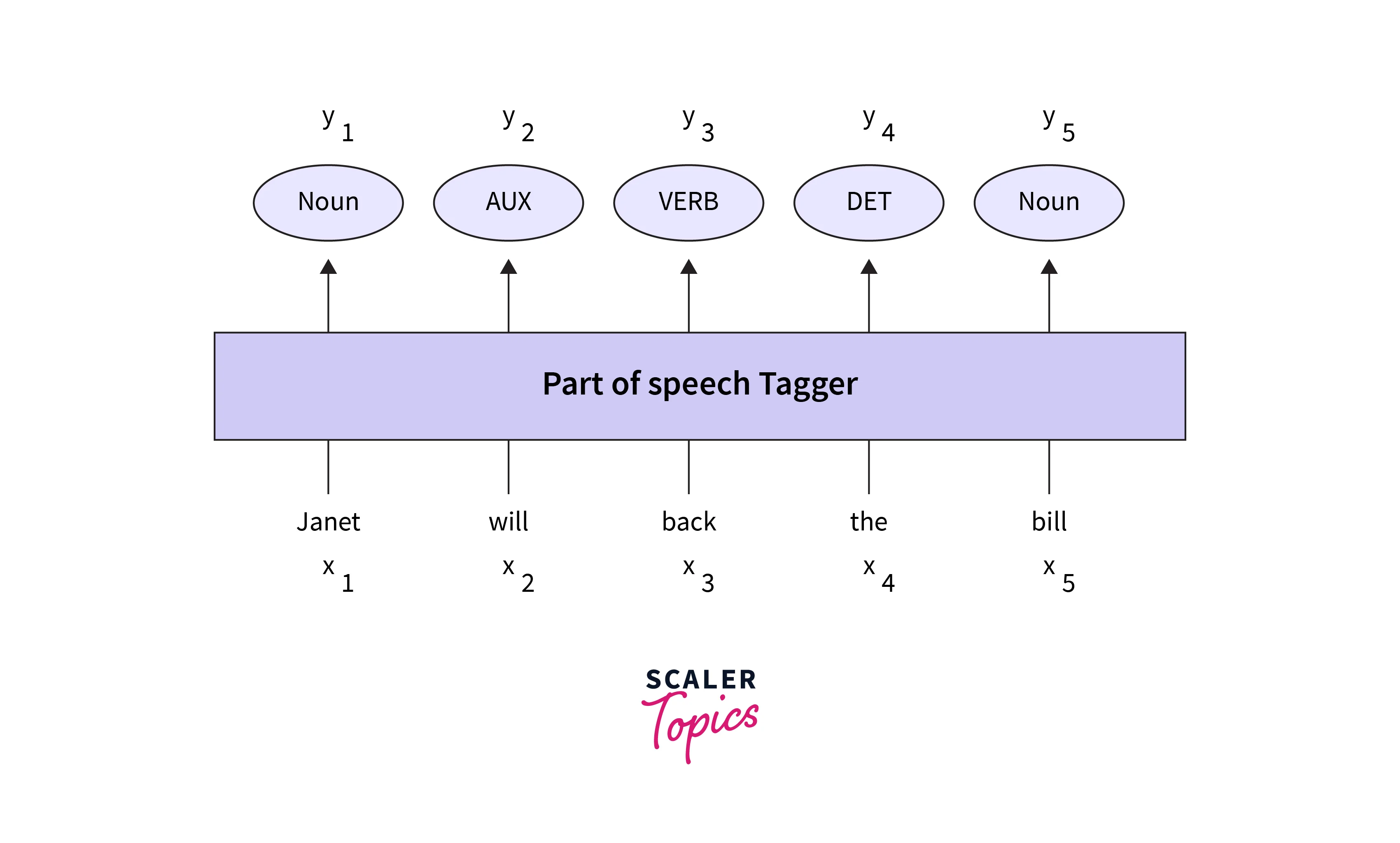

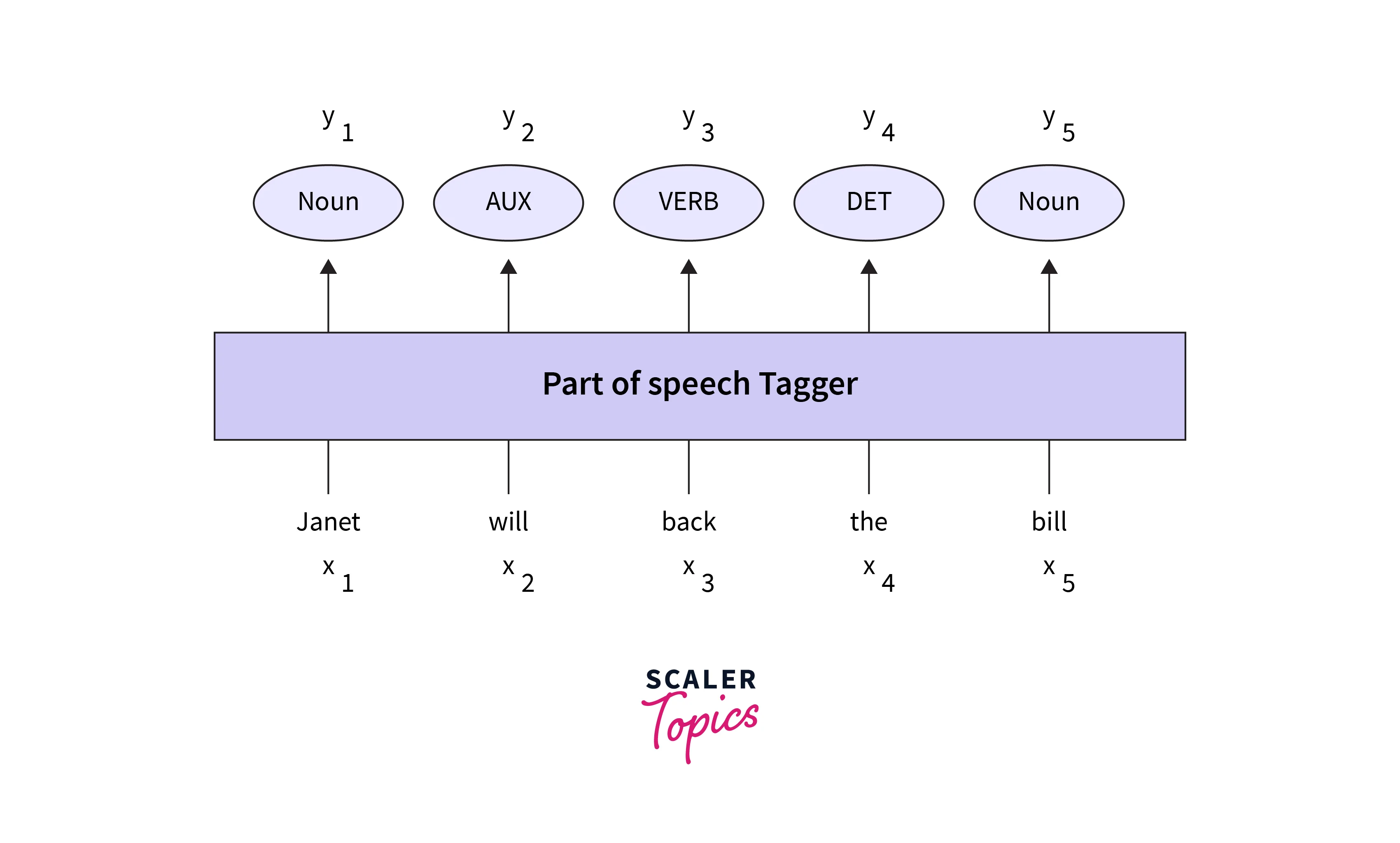

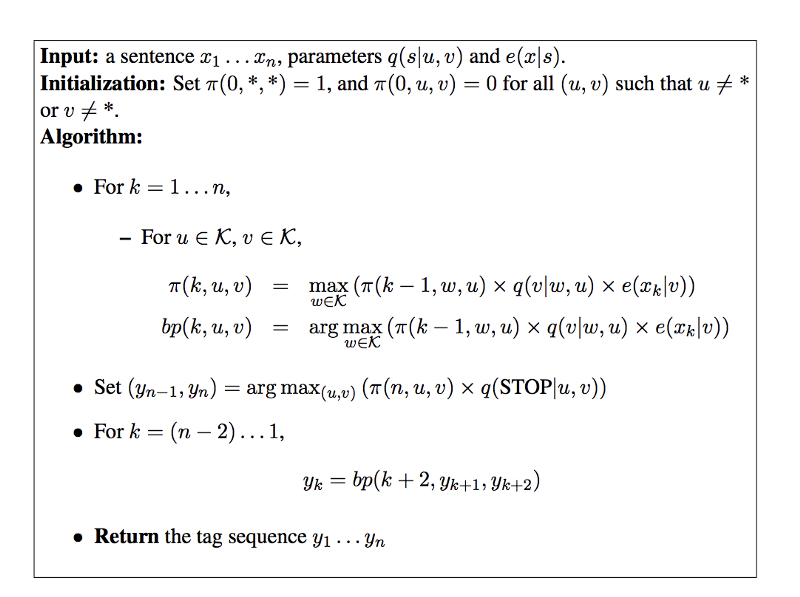

- The process of automatically assigning parts of speech to words in text is called part-of-speech tagging, POS tagging, or just tagging.

- Automatic tagging is an important step in the NLP pipeline, and is useful in a variety of situations including: predicting the behavior of previously unseen words, analyzing word usage in corpora, and text-to-speech systems.

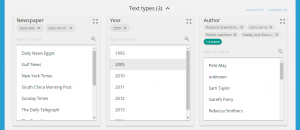

- Some linguistic corpora, such as the Brown Corpus, have been POS tagged.

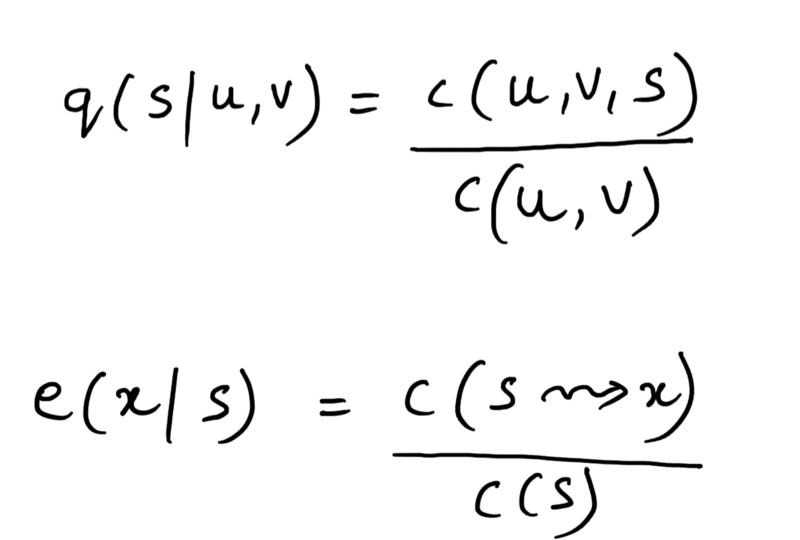

- A variety of tagging methods are possible, e.g. default tagger, regular expression tagger, unigram tagger and n-gram taggers. These can be combined using a technique known as backoff.

- Taggers can be trained and evaluated using tagged corpora.

- Backoff is a method for combining models: when a more specialized model (such as a bigram tagger) cannot assign a tag in a given context, we backoff to a more general model (such as a unigram tagger).

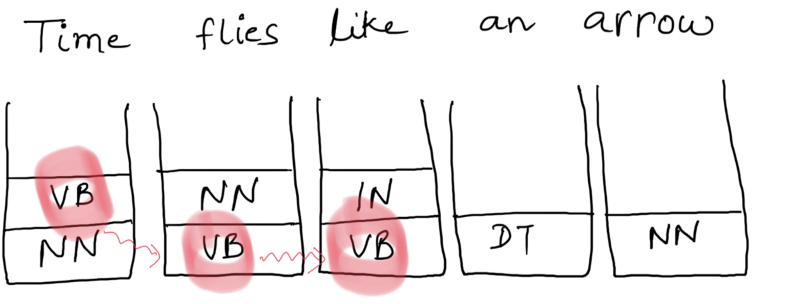

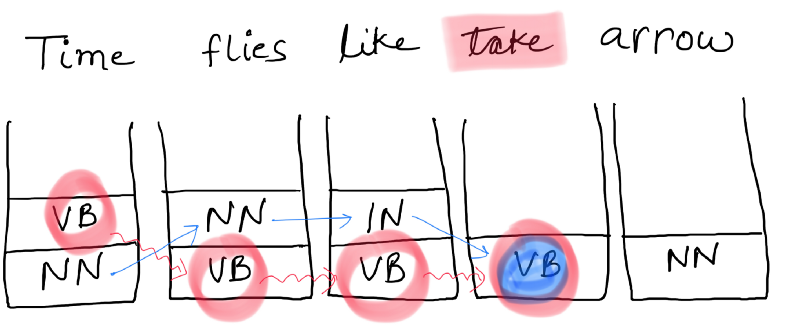

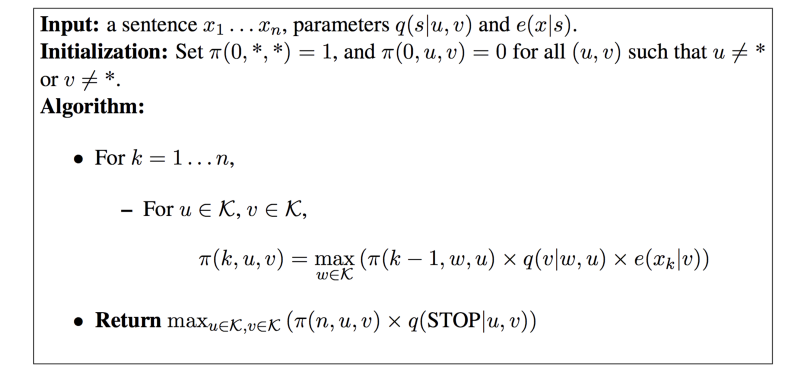

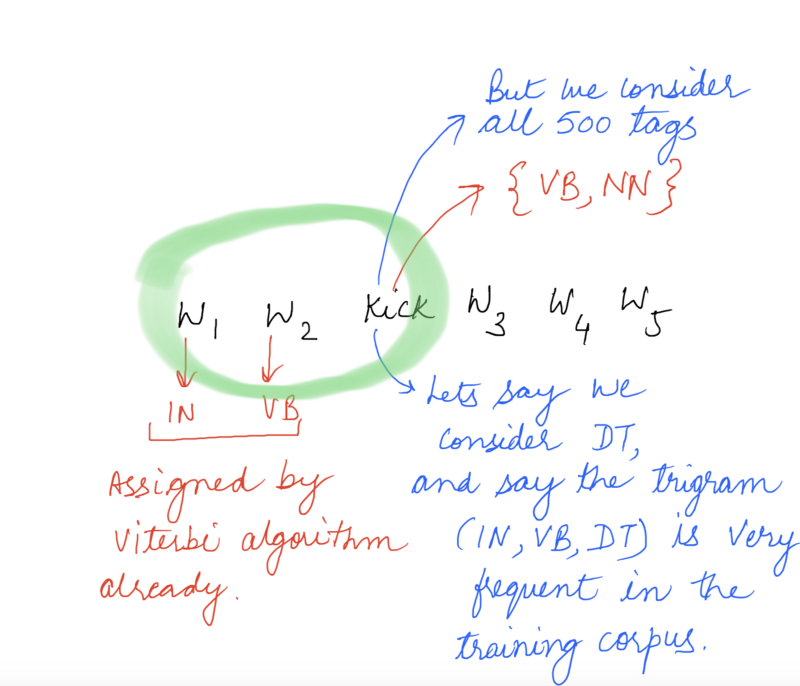

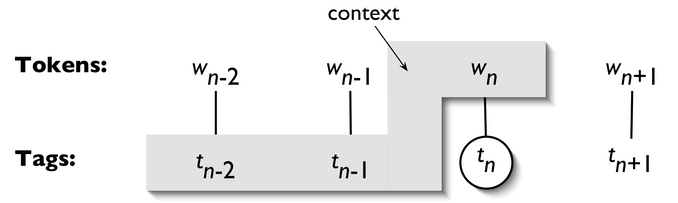

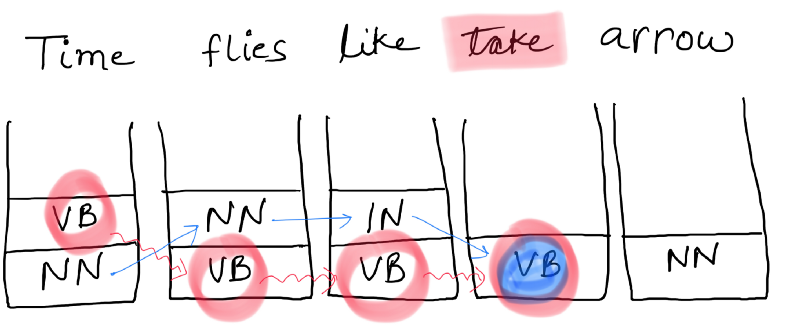

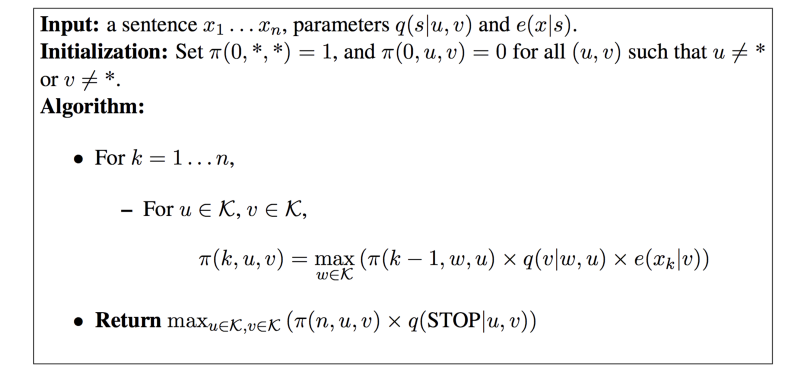

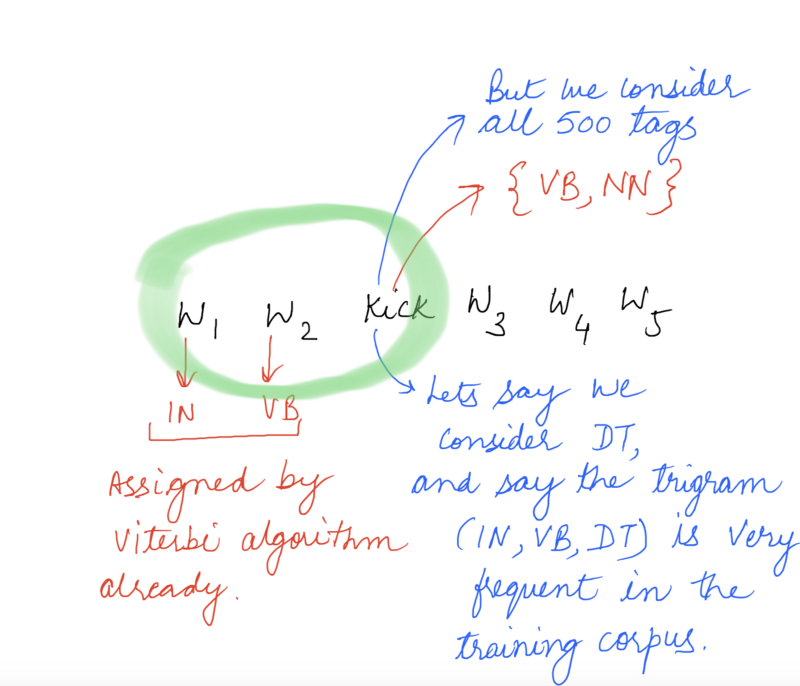

- Part-of-speech tagging is an important, early example of a sequence classification task in NLP: a classification decision at any one point in the sequence makes use of words and tags in the local context.

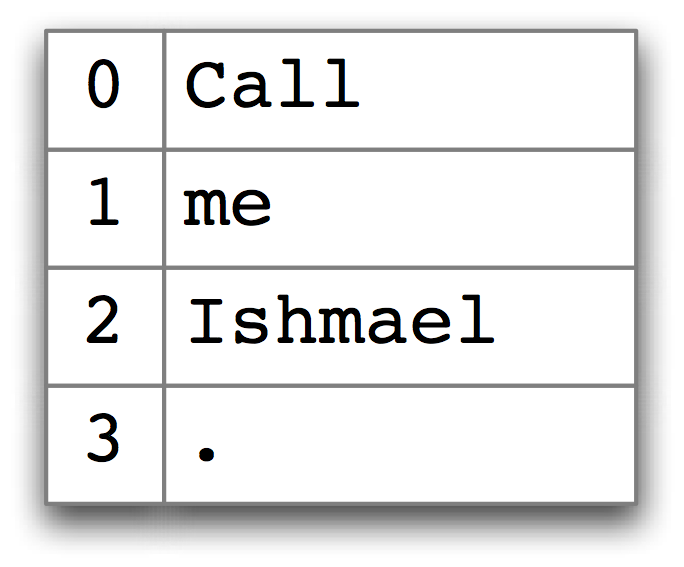

- A dictionary is used to map between arbitrary types of information, such as a string and a number: freq[ 'cat' ] = 12 . We create dictionaries using the brace notation: pos = {} , pos = { 'furiously' : 'adv' , 'ideas' : 'n' , 'colorless' : 'adj' } .

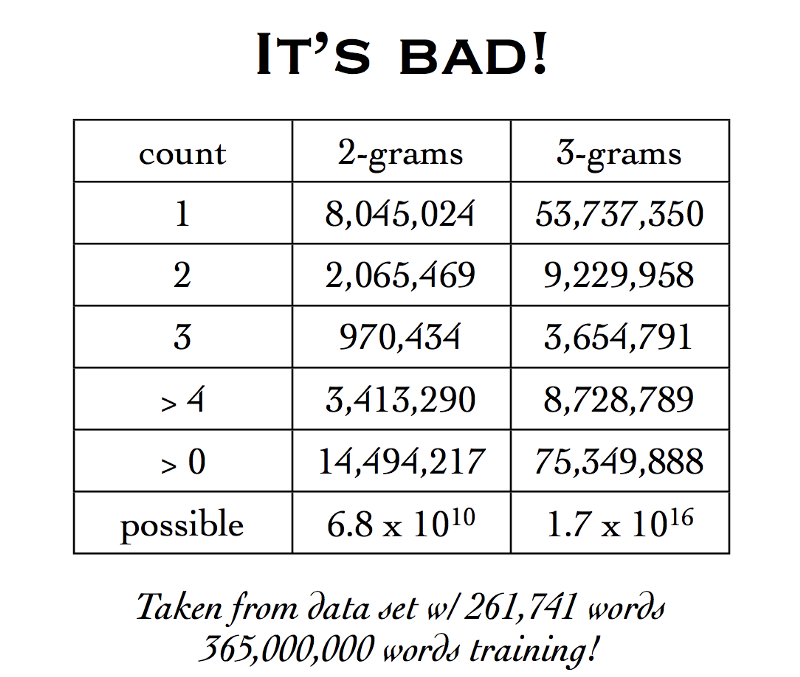

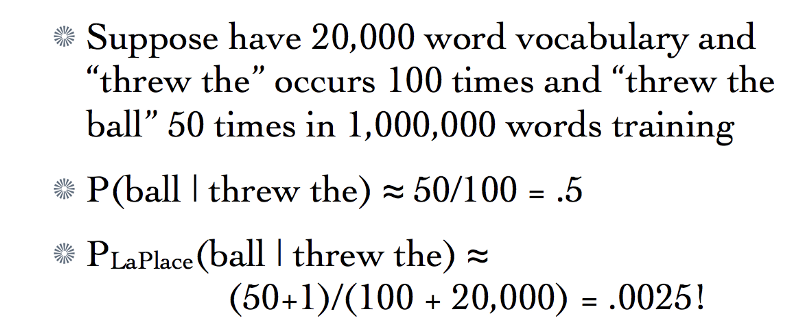

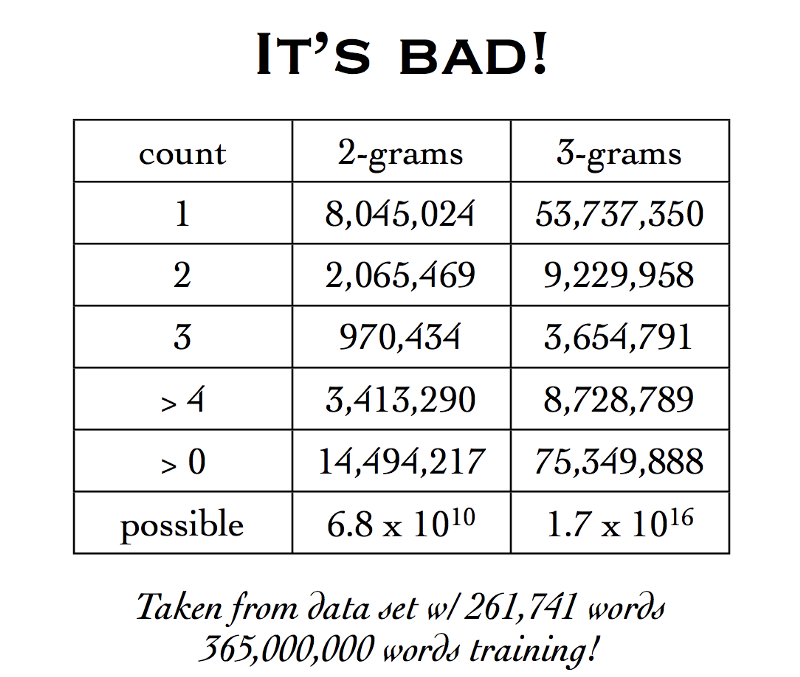

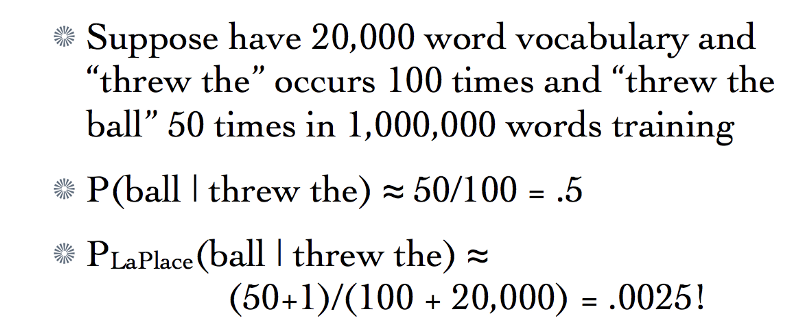

- N-gram taggers can be defined for large values of n , but once n is larger than 3 we usually encounter the sparse data problem; even with a large quantity of training data we only see a tiny fraction of possible contexts.

- Transformation-based tagging involves learning a series of repair rules of the form "change tag s to tag t in context c ", where each rule fixes mistakes and possibly introduces a (smaller) number of errors.

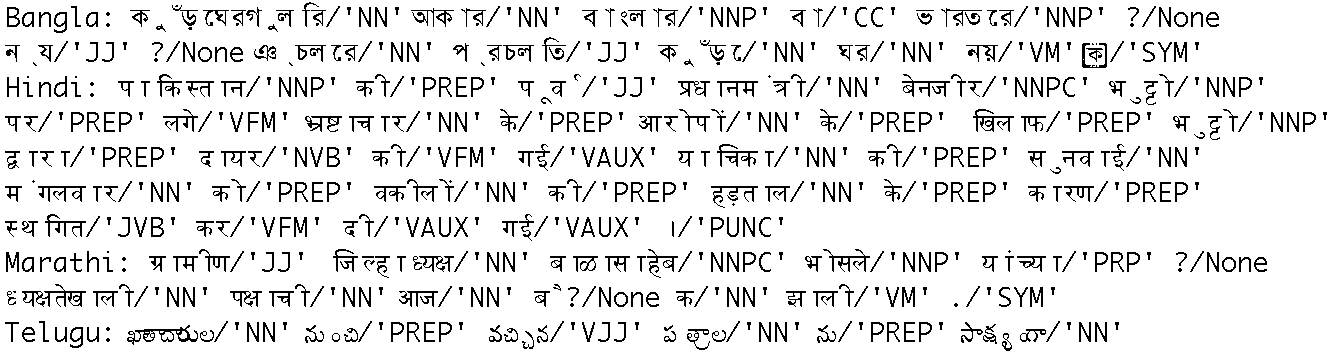

9 Further ReadingExtra materials for this chapter are posted at http://nltk.org/ , including links to freely available resources on the web. For more examples of tagging with NLTK, please see the Tagging HOWTO at http://nltk.org/howto . Chapters 4 and 5 of (Jurafsky & Martin, 2008) contain more advanced material on n-grams and part-of-speech tagging. The "Universal Tagset" is described by (Petrov, Das, & McDonald, 2012) . Other approaches to tagging involve machine learning methods ( chap-data-intensive ). In 7. we will see a generalization of tagging called chunking in which a contiguous sequence of words is assigned a single tag. For tagset documentation, see nltk.help.upenn_tagset() and nltk.help.brown_tagset() . Lexical categories are introduced in linguistics textbooks, including those listed in 1. . There are many other kinds of tagging. Words can be tagged with directives to a speech synthesizer, indicating which words should be emphasized. Words can be tagged with sense numbers, indicating which sense of the word was used. Words can also be tagged with morphological features. Examples of each of these kinds of tags are shown below. For space reasons, we only show the tag for a single word. Note also that the first two examples use XML-style tags, where elements in angle brackets enclose the word that is tagged. - Speech Synthesis Markup Language (W3C SSML): That is a <emphasis>big</emphasis> car!

- SemCor: Brown Corpus tagged with WordNet senses: Space in any <wf pos= "NN" lemma= "form" wnsn= "4" >form</wf> is completely measured by the three dimensions. (Wordnet form/nn sense 4: "shape, form, configuration, contour, conformation")

- Morphological tagging, from the Turin University Italian Treebank: E ' italiano , come progetto e realizzazione , il primo (PRIMO ADJ ORDIN M SING) porto turistico dell' Albania .

Note that tagging is also performed at higher levels. Here is an example of dialogue act tagging, from the NPS Chat Corpus (Forsyth & Martell, 2007) included with NLTK. Each turn of the dialogue is categorized as to its communicative function: 10 Exercises- ☼ Search the web for "spoof newspaper headlines", to find such gems as: British Left Waffles on Falkland Islands , and Juvenile Court to Try Shooting Defendant . Manually tag these headlines to see if knowledge of the part-of-speech tags removes the ambiguity.

- ☼ Working with someone else, take turns to pick a word that can be either a noun or a verb (e.g. contest ); the opponent has to predict which one is likely to be the most frequent in the Brown corpus; check the opponent's prediction, and tally the score over several turns.

- ☼ Tokenize and tag the following sentence: They wind back the clock, while we chase after the wind . What different pronunciations and parts of speech are involved?

- ☼ Review the mappings in 3.1 . Discuss any other examples of mappings you can think of. What type of information do they map from and to?

- ☼ Using the Python interpreter in interactive mode, experiment with the dictionary examples in this chapter. Create a dictionary d , and add some entries. What happens if you try to access a non-existent entry, e.g. d[ 'xyz' ] ?

- ☼ Try deleting an element from a dictionary d , using the syntax del d[ 'abc' ] . Check that the item was deleted.

- ☼ Create two dictionaries, d1 and d2 , and add some entries to each. Now issue the command d1.update(d2) . What did this do? What might it be useful for?

- ☼ Create a dictionary e , to represent a single lexical entry for some word of your choice. Define keys like headword , part-of-speech , sense , and example , and assign them suitable values.

- ☼ Satisfy yourself that there are restrictions on the distribution of go and went , in the sense that they cannot be freely interchanged in the kinds of contexts illustrated in (3d) in 7 .

- ☼ Train a unigram tagger and run it on some new text. Observe that some words are not assigned a tag. Why not?

- ☼ Learn about the affix tagger (type help(nltk.AffixTagger) ). Train an affix tagger and run it on some new text. Experiment with different settings for the affix length and the minimum word length. Discuss your findings.

- ☼ Train a bigram tagger with no backoff tagger, and run it on some of the training data. Next, run it on some new data. What happens to the performance of the tagger? Why?

- ☼ We can use a dictionary to specify the values to be substituted into a formatting string. Read Python's library documentation for formatting strings http://docs.python.org/lib/typesseq-strings.html and use this method to display today's date in two different formats.

- ◑ Use sorted() and set() to get a sorted list of tags used in the Brown corpus, removing duplicates.

- Which nouns are more common in their plural form, rather than their singular form? (Only consider regular plurals, formed with the -s suffix.)

- Which word has the greatest number of distinct tags. What are they, and what do they represent?

- List tags in order of decreasing frequency. What do the 20 most frequent tags represent?

- Which tags are nouns most commonly found after? What do these tags represent?

- What happens to the tagger performance for the various model sizes when a backoff tagger is omitted?

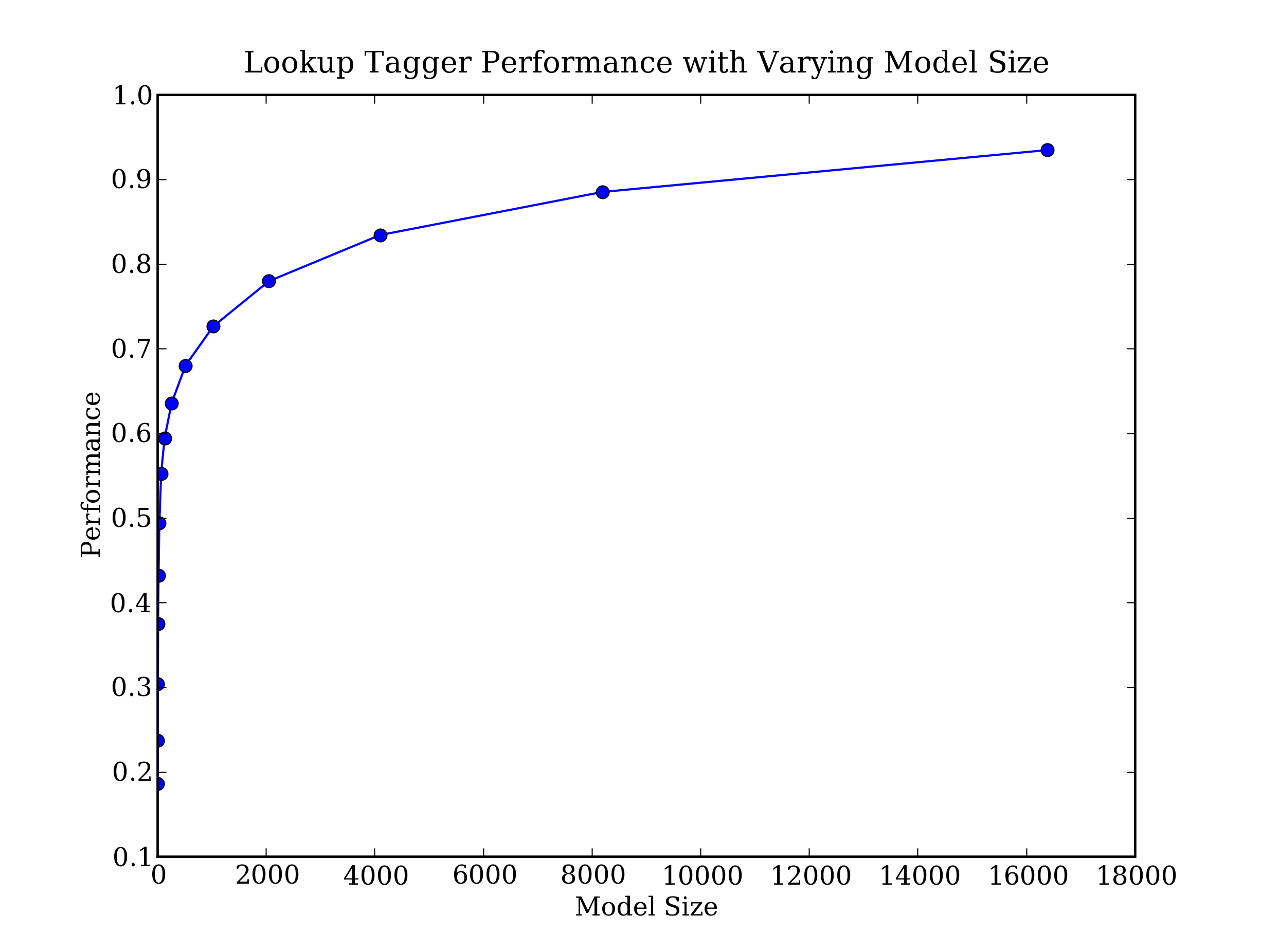

- Consider the curve in 4.2 ; suggest a good size for a lookup tagger that balances memory and performance. Can you come up with scenarios where it would be preferable to minimize memory usage, or to maximize performance with no regard for memory usage?

- ◑ What is the upper limit of performance for a lookup tagger, assuming no limit to the size of its table? (Hint: write a program to work out what percentage of tokens of a word are assigned the most likely tag for that word, on average.)

- What proportion of word types are always assigned the same part-of-speech tag?

- How many words are ambiguous, in the sense that they appear with at least two tags?

- What percentage of word tokens in the Brown Corpus involve these ambiguous words?

- A tagger t takes a list of words as input, and produces a list of tagged words as output. However, t.evaluate() is given correctly tagged text as its only parameter. What must it do with this input before performing the tagging?

- Once the tagger has created newly tagged text, how might the evaluate() method go about comparing it with the original tagged text and computing the accuracy score?

- Now examine the source code to see how the method is implemented. Inspect nltk.tag.api.__file__ to discover the location of the source code, and open this file using an editor (be sure to use the api.py file and not the compiled api.pyc binary file).

- Produce an alphabetically sorted list of the distinct words tagged as MD .

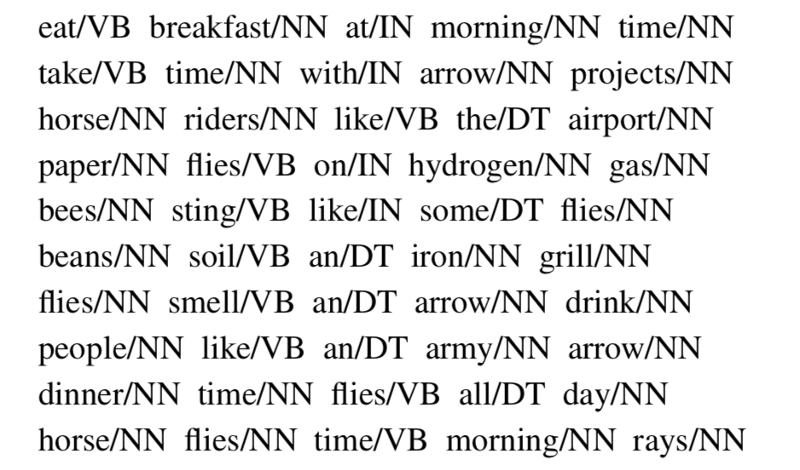

- Identify words that can be plural nouns or third person singular verbs (e.g. deals , flies ).

- Identify three-word prepositional phrases of the form IN + DET + NN (eg. in the lab ).

- What is the ratio of masculine to feminine pronouns?

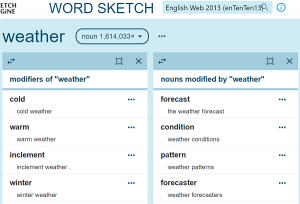

- ◑ In 3.1 we saw a table involving frequency counts for the verbs adore , love , like , prefer and preceding qualifiers absolutely and definitely . Investigate the full range of adverbs that appear before these four verbs.

- ◑ We defined the regexp_tagger that can be used as a fall-back tagger for unknown words. This tagger only checks for cardinal numbers. By testing for particular prefix or suffix strings, it should be possible to guess other tags. For example, we could tag any word that ends with -s as a plural noun. Define a regular expression tagger (using RegexpTagger() ) that tests for at least five other patterns in the spelling of words. (Use inline documentation to explain the rules.)

- ◑ Consider the regular expression tagger developed in the exercises in the previous section. Evaluate the tagger using its accuracy() method, and try to come up with ways to improve its performance. Discuss your findings. How does objective evaluation help in the development process?

- ◑ How serious is the sparse data problem? Investigate the performance of n-gram taggers as n increases from 1 to 6. Tabulate the accuracy score. Estimate the training data required for these taggers, assuming a vocabulary size of 10 5 and a tagset size of 10 2 .

- ◑ Obtain some tagged data for another language, and train and evaluate a variety of taggers on it. If the language is morphologically complex, or if there are any orthographic clues (e.g. capitalization) to word classes, consider developing a regular expression tagger for it (ordered after the unigram tagger, and before the default tagger). How does the accuracy of your tagger(s) compare with the same taggers run on English data? Discuss any issues you encounter in applying these methods to the language.

- ◑ 4.1 plotted a curve showing change in the performance of a lookup tagger as the model size was increased. Plot the performance curve for a unigram tagger, as the amount of training data is varied.

- ◑ Inspect the confusion matrix for the bigram tagger t2 defined in 5 , and identify one or more sets of tags to collapse. Define a dictionary to do the mapping, and evaluate the tagger on the simplified data.

- ◑ Experiment with taggers using the simplified tagset (or make one of your own by discarding all but the first character of each tag name). Such a tagger has fewer distinctions to make, but much less information on which to base its work. Discuss your findings.

- ◑ Recall the example of a bigram tagger which encountered a word it hadn't seen during training, and tagged the rest of the sentence as None . It is possible for a bigram tagger to fail part way through a sentence even if it contains no unseen words (even if the sentence was used during training). In what circumstance can this happen? Can you write a program to find some examples of this?

- ◑ Preprocess the Brown News data by replacing low frequency words with UNK , but leaving the tags untouched. Now train and evaluate a bigram tagger on this data. How much does this help? What is the contribution of the unigram tagger and default tagger now?

- ◑ Modify the program in 4.1 to use a logarithmic scale on the x -axis, by replacing pylab.plot() with pylab.semilogx() . What do you notice about the shape of the resulting plot? Does the gradient tell you anything?

- ◑ Consult the documentation for the Brill tagger demo function, using help(nltk.tag.brill.demo) . Experiment with the tagger by setting different values for the parameters. Is there any trade-off between training time (corpus size) and performance?

- ◑ Write code that builds a dictionary of dictionaries of sets. Use it to store the set of POS tags that can follow a given word having a given POS tag, i.e. word i → tag i → tag i+1 .

- Print a table with the integers 1..10 in one column, and the number of distinct words in the corpus having 1..10 distinct tags in the other column.

- For the word with the greatest number of distinct tags, print out sentences from the corpus containing the word, one for each possible tag.

- ★ Write a program to classify contexts involving the word must according to the tag of the following word. Can this be used to discriminate between the epistemic and deontic uses of must ?

- Create three different combinations of the taggers. Test the accuracy of each combined tagger. Which combination works best?

- Try varying the size of the training corpus. How does it affect your results?

- Create a new kind of unigram tagger that looks at the tag of the previous word, and ignores the current word. (The best way to do this is to modify the source code for UnigramTagger() , which presumes knowledge of object-oriented programming in Python.)

- Add this tagger to the sequence of backoff taggers (including ordinary trigram and bigram taggers that look at words), right before the usual default tagger.

- Evaluate the contribution of this new unigram tagger.

- ★ Consider the code in 5 which determines the upper bound for accuracy of a trigram tagger. Review Abney's discussion concerning the impossibility of exact tagging (Church, Young, & Bloothooft, 1996) . Explain why correct tagging of these examples requires access to other kinds of information than just words and tags. How might you estimate the scale of this problem?

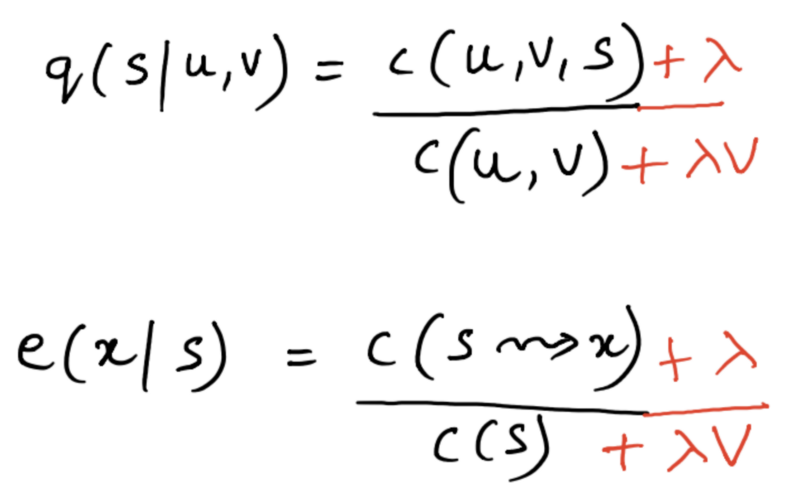

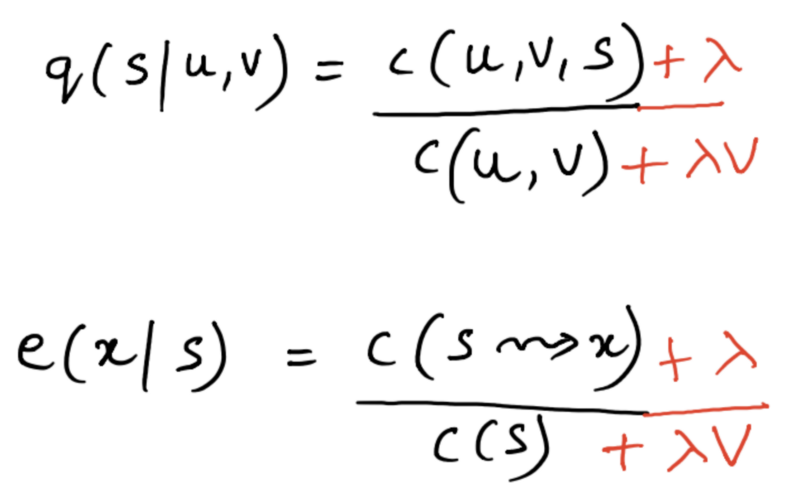

- ★ Use some of the estimation techniques in nltk.probability , such as Lidstone or Laplace estimation, to develop a statistical tagger that does a better job than n-gram backoff taggers in cases where contexts encountered during testing were not seen during training.

- ★ Inspect the diagnostic files created by the Brill tagger rules.out and errors.out . Obtain the demonstration code by accessing the source code (at http://www.nltk.org/code ) and create your own version of the Brill tagger. Delete some of the rule templates, based on what you learned from inspecting rules.out . Add some new rule templates which employ contexts that might help to correct the errors you saw in errors.out .

- ★ Develop an n-gram backoff tagger that permits "anti-n-grams" such as [ "the" , "the" ] to be specified when a tagger is initialized. An anti-ngram is assigned a count of zero and is used to prevent backoff for this n-gram (e.g. to avoid estimating P( the | the ) as just P( the )).

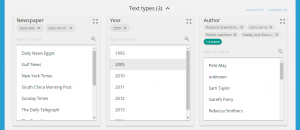

- ★ Investigate three different ways to define the split between training and testing data when developing a tagger using the Brown Corpus: genre ( category ), source ( fileid ), and sentence. Compare their relative performance and discuss which method is the most legitimate. (You might use n-fold cross validation, discussed in 3 , to improve the accuracy of the evaluations.)

- ★ Develop your own NgramTagger class that inherits from NLTK's class, and which encapsulates the method of collapsing the vocabulary of the tagged training and testing data that was described in this chapter. Make sure that the unigram and default backoff taggers have access to the full vocabulary.

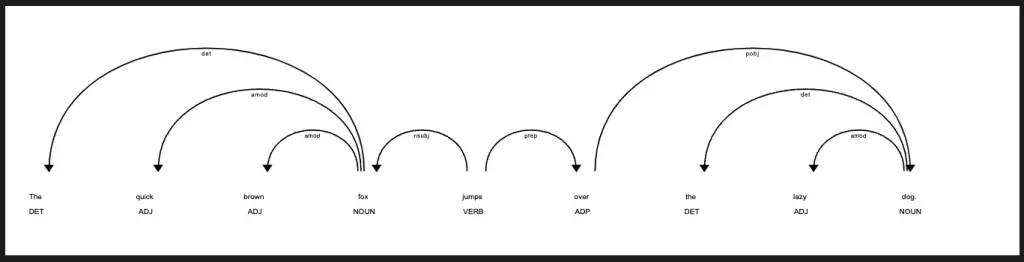

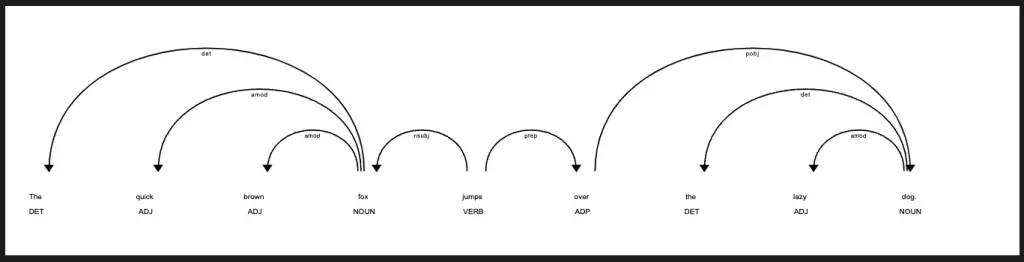

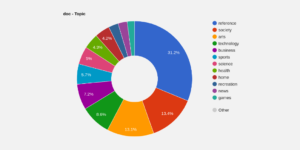

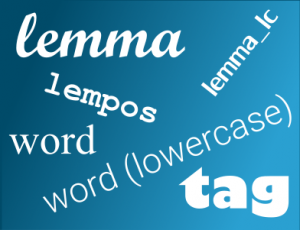

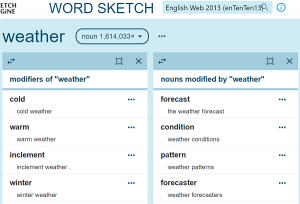

About this document... UPDATED FOR NLTK 3.0. This is a chapter from Natural Language Processing with Python , by Steven Bird , Ewan Klein and Edward Loper , Copyright © 2019 the authors. It is distributed with the Natural Language Toolkit [ http://nltk.org/ ], Version 3.0, under the terms of the Creative Commons Attribution-Noncommercial-No Derivative Works 3.0 United States License [ http://creativecommons.org/licenses/by-nc-nd/3.0/us/ ]. This document was built on Wed 4 Sep 2019 11:40:48 ACST  Part-of-speech tagging in NLP (with Python Examples)April 18, 2023  Part-of-speech (POS) tagging is a process that assigns a part of speech (noun, verb, adjective, etc.) to each word in a given text. This technique is used to understand the role of words in a sentence and is a critical component of many natural language processing (NLP) applications. In this article, we will explore the basics of POS tagging, its importance, and the techniques and tools used for it.  What is POS tagging?POS tagging is a process of labeling each word in a text with its corresponding part of speech. The goal is to assign the correct POS tag to each word based on its context. For example, in the sentence “The cat is sleeping,” the word “cat” is a noun, “is” is a verb, and “sleeping” is an adjective. POS tagging allows us to identify these roles and understand the meaning of the sentence. Getting StartedFor this Part-of-speech tagging tutorial, you will need to install Python along with the most popular natural language processing libraries used in this guide. Open the Terminal and type (might take a while to run): Understand POS Visually with PythonThis code will output the part-of-speech tagging and dependency parsing results for the text “Barack Obama was born in Hawaii”, using the pre-trained English model in Spacy. The first loop will print out each token in the text along with its part-of-speech tag, detailed part-of-speech tag, dependency relation and the head of the current token. The second part of the code will visualize the dependency parsing results in the text using the displacy module, which will display an interactive visualization of the syntactic dependencies between words in the sentence. The visualization will be rendered in the Jupyter notebook.  Importance of POS taggingPOS tagging is essential for various NLP tasks, including text-to-speech conversion, sentiment analysis , and machine translation. It helps in disambiguating the meaning of words in a sentence by identifying the context and their respective parts of speech. Accurate POS tagging can improve the accuracy of NLP models, leading to better results in many applications. Techniques for POS taggingThere are several techniques for POS tagging, including rule-based approaches, stochastic models, and deep learning . Rule-based approaches use hand-crafted rules to assign POS tags based on the word’s context, such as its surrounding words and the sentence structure. Stochastic models use probability distributions to predict the most likely POS tag for each word based on training data. Deep learning approaches, such as recurrent neural networks (RNNs) and convolutional neural networks (CNNs), can learn the context and relationships between words to predict POS tags. Tools for POS taggingThere are several Python libraries available for POS tagging, including NLTK, spaCy, and TextBlob. NLTK provides several algorithms for POS tagging, including rule-based and stochastic models. spaCy uses a combination of rule-based and deep learning techniques for POS tagging, providing fast and accurate results. TextBlob is a simpler library that provides an easy-to-use interface for POS tagging and other NLP tasks. Challenges of POS taggingPOS tagging is a complex task that requires dealing with the ambiguity of natural language. Words can have multiple meanings, and their parts of speech can change depending on the context. In addition, some languages, such as Chinese and Japanese, do not have spaces between words, making it difficult to identify word boundaries. POS tagging also requires large amounts of annotated training data to achieve high accuracy . Useful Python Libraries for Part-of-speech tagging- NLTK: pos_tag()

- Spacy: pos_tag()

- TextBlob: tags, noun_phrases()

POS Tagging in NLTKVisualize Part-of-Speech Tagging.  If you don’t know what these tags mean, here is a full list of Part-of-speech tags in NLTK. POS Tagging in SpaCy If you don’t know what these tags mean, here is a full list of Part-of-speech tags in spaCy. POS Tagging in TextBlobIn this example, we create a TextBlob object containing the text to be tagged, and then call the tags property on the TextBlob object to perform POS tagging. The resulting tags variable contains a list of tuples, where each tuple contains a word and its corresponding POS tag. Finally, we print out the tags for each word in the text using a for loop. The output will be something like:  If you don’t know what these tags mean, here is a full list of Part-of-speech tags in TextBlob. Datasets useful for Part-of-speech taggingPenn treebank, universal dependencies, to know before you learn part-of-speech tagging. - Basic understanding of machine learning algorithms

- Familiarity with Python programming language

- Knowledge of text pre-processing techniques such as tokenization and stemming

- Understanding of parts of speech and their roles in a sentence.

Important Concepts in Part-of-speech tagging- Language grammar rules

- POS tag sets and their definitions

- The ambiguity problem in POS tagging

- The role of machine learning in POS tagging

- Commonly used POS tagging algorithms

What’s Next?- Named Entity Recognition (NER)

- Chunking and Shallow Parsing

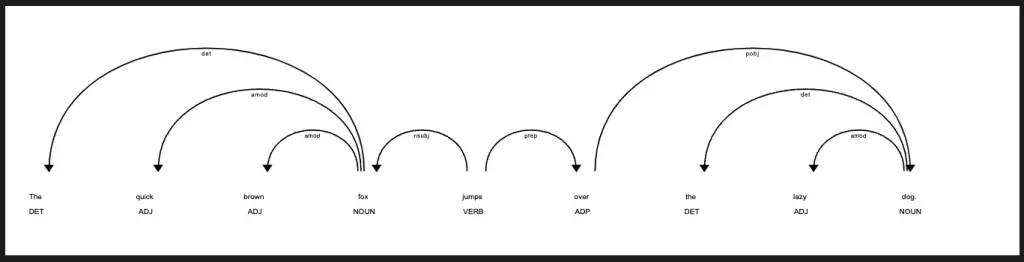

- Dependency Parsing

- Natural Language Understanding (NLU)

- Sentiment Analysis

- Text Classification

Relevant Entities| Entity | Properties |

|---|

| Text | Sequence of words to be tagged with parts of speech | | Part-of-speech tag | Label assigned to a word indicating its grammatical category | | Tagset | A collection of part-of-speech tags | | Corpus | A large collection of text used for training and evaluating POS taggers | | Tokenization | The process of breaking a text into words or tokens |

Frequently Asked QuestionsIdentification of word type Improve text understanding Noun, verb, adjective Improved accuracy Different objectives Ambiguity, context In conclusion, POS tagging is a crucial component of NLP applications that helps in identifying the role of words in a sentence. It allows us to disambiguate the meaning of words and understand the context of a text. There are several techniques and tools available for POS tagging, each with its strengths and weaknesses. While POS tagging can be challenging, accurate results can significantly improve the performance of NLP models. - NLTK documentation on Part-of-speech tagging: https://www.nltk.org/book/ch05.html

- spaCy documentation on Part-of-speech tagging: https://spacy.io/usage/linguistic-features#pos-tagging

- Stanford CoreNLP documentation on Part-of-speech tagging: https://stanfordnlp.github.io/CoreNLP/pos.html

- Part-of-speech tagging with Hidden Markov Models in Python: https://towardsdatascience.com/part-of-speech-tagging-with-hidden-markov-models-python-for-language-processing-56c9a0ab07d9

- A Comprehensive Guide to Part-of-speech Tagging: https://www.analyticsvidhya.com/blog/2021/05/a-comprehensive-guide-to-part-of-speech-tagging/

Related posts:SpaCy Part-of-Speech Tags- Named Entity Recognition in NLP (with Python Examples)

- Word Embeddings in NLP (with Python Examples)

Natural Language Processing (NLP) with Python ExamplesPython for NLP: Parts of Speech Tagging and Named Entity Recognition This is the 4th article in my series of articles on Python for NLP. In my previous article , I explained how the spaCy library can be used to perform tasks like vocabulary and phrase matching. In this article, we will study parts of speech tagging and named entity recognition in detail. We will see how the spaCy library can be used to perform these two tasks. - Parts of Speech (POS) Tagging

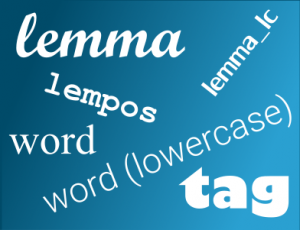

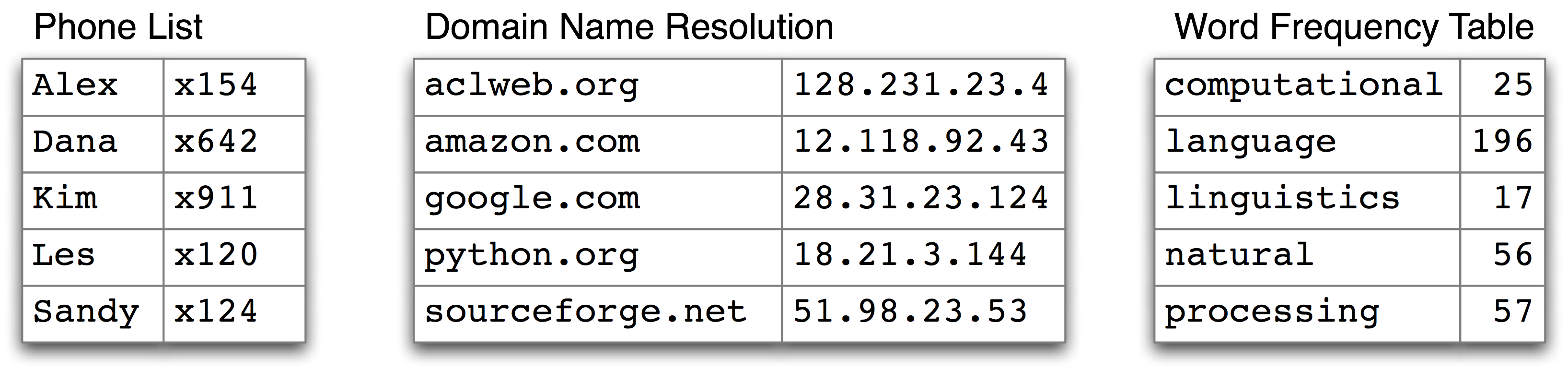

Parts of speech tagging simply refers to assigning parts of speech to individual words in a sentence, which means that, unlike phrase matching, which is performed at the sentence or multi-word level, parts of speech tagging is performed at the token level. Let's take a very simple example of parts of speech tagging. As usual, in the script above we import the core spaCy English model. Next, we need to create a spaCy document that we will be using to perform parts of speech tagging. The spaCy document object has several attributes that can be used to perform a variety of tasks. For instance, to print the text of the document, the text attribute is used. Similarly, the pos_ attribute returns the coarse-grained POS tag. To obtain fine-grained POS tags, we could use the tag_ attribute. And finally, to get the explanation of a tag, we can use the spacy.explain() method and pass it the tag name. Let's see this in action: The above script simply prints the text of the sentence. The output looks like this: Next, let's see pos_ attribute. We will print the POS tag of the word "hated", which is actually the seventh token in the sentence. You can see that POS tag returned for "hated" is a "VERB" since "hated" is a verb. Now let's print the fine-grained POS tag for the word "hated". To see what VBD means, we can use spacy.explain() method as shown below: The output shows that VBD is a verb in the past tense. Let's print the text, coarse-grained POS tags, fine-grained POS tags, and the explanation for the tags for all the words in the sentence. In the script above we improve the readability and formatting by adding 12 spaces between the text and coarse-grained POS tag and then another 10 spaces between the coarse-grained POS tags and fine-grained POS tags. A complete tag list for the parts of speech and the fine-grained tags, along with their explanation, is available at spaCy official documentation. - Why POS Tagging is Useful?

POS tagging can be really useful, particularly if you have words or tokens that can have multiple POS tags. For instance, the word "google" can be used as both a noun and verb, depending upon the context. While processing natural language, it is important to identify this difference. Fortunately, the spaCy library comes pre-built with machine learning algorithms that, depending upon the context (surrounding words), it is capable of returning the correct POS tag for the word. Let's see this in action. Execute the following script: In the script above we create spaCy document with the text "Can you google it?" Here the word "google" is being used as a verb. Next, we print the POS tag for the word "google" along with the explanation of the tag. The output looks like this: From the output, you can see that the word "google" has been correctly identified as a verb. Let's now see another example: Here in the above script the word "google" is being used as a noun as shown by the output: - Finding the Number of POS Tags

You can find the number of occurrences of each POS tag by calling the count_by on the spaCy document object. The method takes spacy.attrs.POS as a parameter value. In the output, you can see the ID of the POS tags along with their frequencies of occurrence. The text of the POS tag can be displayed by passing the ID of the tag to the vocabulary of the actual spaCy document. Now in the output, you will see the ID, the text, and the frequency of each tag as shown below: - Visualizing Parts of Speech Tags

Visualizing POS tags in a graphical way is extremely easy. The displacy module from the spacy library is used for this purpose. To visualize the POS tags inside the Jupyter notebook, you need to call the render method from the displacy module and pass it the spacy document, the style of the visualization, and set the jupyter attribute to True as shown below: Check out our hands-on, practical guide to learning Git, with best-practices, industry-accepted standards, and included cheat sheet. Stop Googling Git commands and actually learn it! In the output, you should see the following dependency tree for POS tags.  You can clearly see the dependency of each token on another along with the POS tag. If you want to visualize the POS tags outside the Jupyter notebook, then you need to call the serve method. The plot for POS tags will be printed in the HTML form inside your default browser. Execute the following script: Once you execute the above script, you will see the following message: To view the dependency tree, type the following address in your browser: http://127.0.0.1:5000/ . You will see the following dependency tree:  Named entity recognition refers to the identification of words in a sentence as an entity e.g. the name of a person, place, organization, etc. Let's see how the spaCy library performs named entity recognition. Look at the following script: In the script above we created a simple spaCy document with some text. To find the named entity we can use the ents attribute, which returns the list of all the named entities in the document. You can see that three named entities were identified. To see the detail of each named entity, you can use the text , label , and the spacy.explain method which takes the entity object as a parameter. In the output, you will see the name of the entity along with the entity type and a small description of the entity as shown below: You can see that "Manchester United" has been correctly identified as an organization, company, etc. Similarly, "Harry Kane" has been identified as a person and finally, "$90 million" has been correctly identified as an entity of type Money. You can also add new entities to an existing document. For instance in the following example, "Nesfruita" is not identified as a company by the spaCy library. From the output, you can see that only India has been identified as an entity. Now to add "Nesfruita" as an entity of type "ORG" to our document, we need to execute the following steps: First, we need to import the Span class from the spacy.tokens module. Next, we need to get the hash value of the ORG entity type from our document. After that, we need to assign the hash value of ORG to the span. Since "Nesfruita" is the first word in the document, the span is 0-1. Finally, we need to add the new entity span to the list of entities. Now if you execute the following script, you will see "Nesfruita" in the list of entities. The output of the script above looks like this: In the case of POS tags, we could count the frequency of each POS tag in a document using a special method sen.count_by . However, for named entities, no such method exists. We can manually count the frequency of each entity type. Suppose we have the following document along with its entities: To count the person type entities in the above document, we can use the following script: In the output, you will see 2 since there are 2 entities of type PERSON in the document. - Visualizing Named Entities

Like the POS tags, we can also view named entities inside the Jupyter notebook as well as in the browser. To do so, we will again use the displacy object. Look at the following example: You can see that the only difference between visualizing named entities and POS tags is that here in case of named entities we passed ent as the value for the style parameter. The output of the script above looks like this:  You can see from the output that the named entities have been highlighted in different colors along with their entity types. You can also filter which entity types to display. To do so, you need to pass the type of the entities to display in a list, which is then passed as a value to the ents key of a dictionary. The dictionary is then passed to the options parameter of the render method of the displacy module as shown below: In the script above, we specified that only the entities of type ORG should be displayed in the output. The output of the script above looks like this:  Finally, you can also display named entities outside the Jupyter notebook. The following script will display the named entities in your default browser. Execute the following script: Now if you go to the address http://127.0.0.1:5000/ in your browser, you should see the named entities. Parts of speech tagging and named entity recognition are crucial to the success of any NLP task. In this article, we saw how Python's spaCy library can be used to perform POS tagging and named entity recognition with the help of different examples. You might also like...- Python for NLP: Tokenization, Stemming, and Lemmatization with SpaCy Library

- Python for NLP: Vocabulary and Phrase Matching with SpaCy

- Simple NLP in Python with TextBlob: N-Grams Detection

- Sentiment Analysis in Python With TextBlob

- Python for NLP: Creating Bag of Words Model from Scratch

Improve your dev skills!Get tutorials, guides, and dev jobs in your inbox. No spam ever. Unsubscribe at any time. Read our Privacy Policy. Programmer | Blogger | Data Science Enthusiast | PhD To Be | Arsenal FC for Life In this article Monitor with Ping BotReliable monitoring for your app, databases, infrastructure, and the vendors they rely on. Ping Bot is a powerful uptime and performance monitoring tool that helps notify you and resolve issues before they affect your customers.  Vendor Alerts with Ping BotGet detailed incident alerts about the status of your favorite vendors. Don't learn about downtime from your customers, be the first to know with Ping Bot.  © 2013- 2024 Stack Abuse. All rights reserved.  Document text extractionOnline Tool To Extract Text From PDFs & Images nlp consultingBuilding Advanced Natural Language Processing (NLP) Applications API & custom applicationsCustom Machine Learning Models Extract Just What You Need AI for legal documentsThe Doc Hawk, Our Custom Application For Legal Documents log in to extract Natural Language ProcessingMachine learning, deep learning, neural networks, large language models, pre-processing, optimisation, learning types, part-of-speech (pos) tagging in nlp: 4 python how to tutorials. by Neri Van Otten | Jan 24, 2023 | Data Science , Natural Language Processing What is Part-of-speech (POS) tagging?Part-of-speech (POS) tagging is fundamental in natural language processing (NLP) and can be done in Python. It involves labelling words in a sentence with their corresponding POS tags. POS tags indicate the grammatical category of a word, such as noun, verb, adjective, adverb, etc. The goal of POS tagging is to determine a sentence’s syntactic structure and identify each word’s role in the sentence. Table of Contents There are two main types of POS tagging in NLP, and several Python libraries can be used for POS tagging, including NLTK, spaCy, and TextBlob. This article discusses the different types of POS taggers, the advantages and disadvantages of each, and provides code examples for the three most commonly used libraries in Python.  Several libraries do POS tagging in Python. Types of Part-of-speech (POS) tagging in NLPThere are two main types of part-of-speech (POS) tagging in natural language processing (NLP): - Rule-based POS tagging uses a set of linguistic rules and patterns to assign POS tags to words in a sentence. This method relies on a predefined set of grammatical rules, a dictionary of words, and their POS tags. The NLTK library’s pos_tag() function is an example of a rule-based POS tagger that uses the Penn Treebank POS tag set.

- Statistical POS tagging uses machine learning algorithms, such as Hidden Markov Models (HMM) or Conditional Random Fields (CRF), to predict POS tags based on the context of the words in a sentence. This method requires a large amount of training data to create models. The SpaCy library’s POS tagger is an example of a statistical POS tagger that uses a neural network-based model trained on the OntoNotes 5 corpus .

Both rule-based and statistical POS tagging have their advantages and disadvantages. Rule-based taggers are simpler to implement and understand but less accurate than statistical taggers. Statistical taggers, however, are more accurate but require a large amount of training data and computational resources. Advantages and disadvantages of the different types of Part-of-speech (POS) tagging for NLP in PythonRule-based part-of-speech (POS) taggers and statistical POS taggers are two different approaches to POS tagging in natural language processing (NLP). Each method has its advantages and disadvantages. The benefits of rule-based Part-of-speech (POS) tagging:- Simple to implement and understand

- It doesn’t require a lot of computational resources or training data

- It can be easily customized to specific domains or languages

Disadvantages of rule-based Part-of-speech (POS) tagging:- Less accurate than statistical taggers

- Limited by the quality and coverage of the rules

- It can be difficult to maintain and update

The Benefits of Statistical Part-of-speech (POS) Tagging:- More accurate than rule-based taggers

- Don’t require a lot of human-written rules

- Can learn from large amounts of training data

Disadvantages of statistical Part-of-speech (POS) Tagging:- Requires more computational resources and training data

- It can be difficult to interpret and debug

- Can be sensitive to the quality and diversity of the training data

In general, for most of the real-world use cases, it’s recommended to use statistical POS taggers, which are more accurate and robust. However, in some cases, the rule-based POS tagger is still useful, for example, for small or specific domains where the training data is unavailable or for specific languages that are not well-supported by existing statistical models. Rule-based Part-of-speech (POS) tagging for NLP in Python code1. nltk part-of-speech (pos) tagging. One common way to perform POS tagging in Python using the NLTK library is to use the pos_tag() function, which uses the Penn Treebank POS tag set. For example: This will make a list of tuples, each with a word and the POS tag that goes with it. It’s also possible to use other POS taggers, like Stanford POS Tagger, or others with better performance, like SpaCy POS Tagger, but they require additional setup and processing. NLTK POS tagger abbreviationsHere is a list of the available abbreviations and their meaning. | Abbreviation | Meaning |

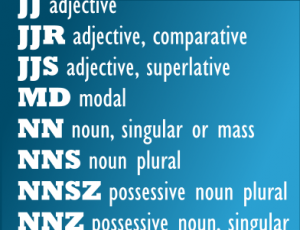

|---|

| CC | coordinating conjunction | | CD | cardinal digit | | DT | determiner | | EX | existential there | | FW | foreign word | | IN | preposition/subordinating conjunction | | JJ | This NLTK POS Tag is an adjective (large) | | JJR | adjective, comparative (larger) | | JJS | adjective, superlative (largest) | | LS | list market | | MD | modal (could, will) | | NN | noun, singular (cat, tree) | | NNS | noun plural (desks) | | NNP | proper noun, singular (sarah) | | NNPS | proper noun, plural (indians or americans) | | PDT | predeterminer (all, both, half) | | POS | possessive ending (parent\ ‘s) | | PRP | personal pronoun (hers, herself, him, himself) | | PRP$ | possessive pronoun (her, his, mine, my, our ) | | RB | adverb (occasionally, swiftly) | | RBR | adverb, comparative (greater) | | RBS | adverb, superlative (biggest) | | RP | particle (about) | | TO | infinite marker (to) | | UH | interjection (goodbye) | | VB | verb (ask) | | VBG | verb gerund (judging) | | VBD | verb past tense (pleaded) | | VBN | verb past participle (reunified) | | VBP | verb, present tense not 3rd person singular(wrap) | | VBZ | verb, present tense with 3rd person singular (bases) | | WDT | wh-determiner (that, what) | | WP | wh- pronoun (who) | | WRB | wh- adverb (how) |

2. TextBlob Part-of-speech (POS) taggingHere is an example of how to use the part-of-speech (POS) tagging functionality in the TextBlob library in Python: This will output a list of tuples, where each tuple contains a word and its corresponding POS tag, using the pattern-based POS tagger. TextBlob also can tag using a statistical POS tagger. To use the NLTK POS Tagger, you can pass pos_tagger attribute to TextBlob, like this: Keep in mind that when using the NLTK POS Tagger, the NLTK library needs to be installed and the pos tagger downloaded. TextBlob is a useful library for conveniently performing everyday NLP tasks, such as POS tagging, noun phrase extraction, sentiment analysis, etc. It is built on top of NLTK and provides a simple and easy-to-use API. Statistical Part-of-speech (POS) tagging for NLP in Python code3. spacy part-of-speech (pos) tagging. Here is an example of how to use the part-of-speech (POS) tagging functionality in the spaCy library in Python: This will output the token text and the POS tag for each token in the sentence: The spaCy library’s POS tagger is based on a statistical model trained on the OntoNotes 5 corpus, and it can tag the text with high accuracy. It also can tag other features, like lemma, dependency, ner, etc. Note that before running the code, you need to download the model you want to use, in this case, en_core_web_sm . You can do this by running !python -m spacy download en_core_web_sm on your command line. 4. NLTK Part-of-speech (POS) taggingThe Averaged Perceptron Tagger in NLTK is a statistical part-of-speech (POS) tagger that uses a machine learning algorithm called Averaged Perceptron. Here is an example of how to use it in Python: This will output a list of tuples, where each tuple contains a word and its corresponding POS tag, using the Averaged Perceptron Tagger You can see that the output tags are different from the previous example because the Averaged Perceptron Tagger uses the universal POS tagset, which is different from the Penn Treebank POS tagset. The averaged perceptron tagger is trained on a large corpus of text, which makes it more robust and accurate than the default rule-based tagger provided by NLTK. It also allows you to specify the tagset, which is the set of POS tags that can be used for tagging; in this case, it’s using the ‘universal’ tagset, which is a cross-lingual tagset, useful for many NLP tasks in Python. It’s important to note that the Averaged Perceptron Tagger requires loading the model before using it, which is why it’s necessary to download it using the nltk.download() function. In conclusion, part-of-speech (POS) tagging is essential in natural language processing (NLP) and can be easily implemented using Python. The process involves labelling words in a sentence with their corresponding POS tags. There are two main types of POS tagging: rule-based and statistical. Rule-based POS taggers use a set of linguistic rules and patterns to assign POS tags to words in a sentence. They are simple to implement and understand but less accurate than statistical taggers. The NLTK library’s pos_tag() function is an example of a rule-based POS tagger that uses the Penn Treebank POS tag set. Statistical POS taggers use machine learning algorithms, such as Hidden Markov Models (HMM) or Conditional Random Fields (CRF), to predict POS tags based on the context of the words in a sentence. They are more accurate but require much training data and computational resources. The SpaCy library’s POS tagger is an example of a statistical POS tagger that uses a neural network-based model trained on the OntoNotes 5 corpus. Both rule-based and statistical POS tagging have their advantages and disadvantages. Rule-based taggers are simpler to implement and understand but less accurate than statistical taggers. Statistical taggers, however, are more accurate but require a large amount of training data and computational resources. In general, for most of the real-world use cases, it’s recommended to use statistical POS taggers, which are more accurate and robust. About the Author Neri Van OttenNeri Van Otten is the founder of Spot Intelligence, a machine learning engineer with over 12 years of experience specialising in Natural Language Processing (NLP) and deep learning innovation. Dedicated to making your projects succeed.  Neri Van Otten is a machine learning and software engineer with over 12 years of Natural Language Processing (NLP) experience. Dedicated to making your projects succeed. Popular posts Top 7 Ways of Implementing Document & Text Similarity Top 10 Open Source Large Language Models (LLM) Top 8 Most Useful Anomaly Detection Algorithms For Time Series Self-attention Made Easy And How To Implement It Top 3 Easy Ways To Remove Stop Word In Python Connect with us Join the NLP CommunityStay Updated With Our Newsletter Recent Articles Precision And Recall In Machine Learning Made Simple: How To Handle The Trade-offWhat is Precision and Recall? When evaluating a classification model's performance, it's crucial to understand its effectiveness at making predictions. Two essential...  Confusion Matrix: A Beginners Guide & How To Tutorial In PythonWhat is a Confusion Matrix? A confusion matrix is a fundamental tool used in machine learning and statistics to evaluate the performance of a classification model. At...  Understand Ordinary Least Squares: How To Beginner’s Guide [Tutorials In Python, R & Excell]What is Ordinary Least Squares (OLS)? Ordinary Least Squares (OLS) is a fundamental technique in statistics and econometrics used to estimate the parameters of a linear...  METEOR Metric In NLP: How It Works & How To Tutorial In PythonWhat is the METEOR Score? The METEOR score, which stands for Metric for Evaluation of Translation with Explicit ORdering, is a metric designed to evaluate the text...  BERTScore – A Powerful NLP Evaluation Metric Explained & How To Tutorial In PythonWhat is BERTScore? BERTScore is an innovative evaluation metric in natural language processing (NLP) that leverages the power of BERT (Bidirectional Encoder...  Perplexity In NLP: Understand How To Evaluate LLMs [Practical Guide]Introduction to Perplexity in NLP In the rapidly evolving field of Natural Language Processing (NLP), evaluating the effectiveness of language models is crucial. One of...  BLEU Score In NLP: What Is It & How To Implement In PythonWhat is the BLEU Score in NLP? BLEU, Bilingual Evaluation Understudy, is a metric used to evaluate the quality of machine-generated text in NLP, most commonly in...  ROUGE Metric In NLP: Complete Guide & How To Tutorial In PythonWhat is the ROUGE Metric? ROUGE, which stands for Recall-Oriented Understudy for Gisting Evaluation, is a set of metrics used to evaluate the quality of summaries and...  Normalised Discounted Cumulative Gain (NDCG): Complete How To GuideWhat is Normalised Discounted Cumulative Gain (NDCG)? Normalised Discounted Cumulative Gain (NDCG) is a popular evaluation metric used to measure the effectiveness of... Submit a Comment Cancel replyYour email address will not be published. Required fields are marked * Save my name, email, and website in this browser for the next time I comment. Submit Comment  2024 NLP Expert Trend PredictionsGet a FREE PDF with expert predictions for 2024. How will natural language processing (NLP) impact businesses? What can we expect from the state-of-the-art models? Find out this and more by subscribing* to our NLP newsletter. You have Successfully Subscribed!* Unsubscribe to our weekly newsletter at any time. We comply with GDPR and do not share your data. By subscribing you agree to our terms & conditions. - Interview Questions

- Free Courses

- Career Guide

Recommended AI Courses  MIT No Code AI and Machine Learning ProgramLearn Artificial Intelligence & Machine Learning from University of Texas. Get a completion certificate and grow your professional career.  AI and ML Program from UT AustinEnroll in the PG Program in AI and Machine Learning from University of Texas McCombs. Earn PG Certificate and and unlock new opportunities What is Part of Speech (POS) tagging?Techniques for pos tagging, pos tagging with hidden markov model, optimizing hmm with viterbi algorithm , implementation using python, part of speech (pos) tagging with hidden markov model.  Back in elementary school, we have learned the differences between the various parts of speech tags such as nouns, verbs, adjectives, and adverbs. Associating each word in a sentence with a proper POS (part of speech) is known as POS tagging or POS annotation. POS tags are also known as word classes, morphological classes, or lexical tags. Back in the days, the POS annotation was manually done by human annotators but being such a laborious task, today we have automatic tools that are capable of tagging each word with an appropriate POS tag within a context. Nowadays, manual annotation is typically used to annotate a small corpus to be used as training data for the development of a new automatic POS tagger. Annotating modern multi-billion-word corpora manually is unrealistic and automatic tagging is used instead. POS tags give a large amount of information about a word and its neighbors. Their applications can be found in various tasks such as information retrieval, parsing, Text to Speech (TTS) applications, information extraction, linguistic research for corpora. They are also used as an intermediate step for higher-level NLP tasks such as parsing, semantics analysis, translation, and many more, which makes POS tagging a necessary function for advanced NLP applications. In this, you will learn how to use POS tagging with the Hidden Makrow model. Alternatively, you can also follow this link to learn a simpler way to do POS tagging. If you want to learn NLP, do check out our Free Course on Natural Language Processing at Great Learning Academy . There are various techniques that can be used for POS tagging such as - Rule-based POS tagging : The rule-based POS tagging models apply a set of handwritten rules and use contextual information to assign POS tags to words. These rules are often known as context frame rules. One such rule might be: “If an ambiguous/unknown word ends with the suffix ‘ing’ and is preceded by a Verb, label it as a Verb”.

- Transformation Based Tagging: The transformation-based approaches use a pre-defined set of handcrafted rules as well as automatically induced rules that are generated during training.

- Deep learning models : Various Deep learning models have been used for POS tagging such as Meta-BiLSTM which have shown an impressive accuracy of around 97 percent.

- Stochastic (Probabilistic) tagging : A stochastic approach includes frequency, probability or statistics. The simplest stochastic approach finds out the most frequently used tag for a specific word in the annotated training data and uses this information to tag that word in the unannotated text. But sometimes this approach comes up with sequences of tags for sentences that are not acceptable according to the grammar rules of a language. One such approach is to calculate the probabilities of various tag sequences that are possible for a sentence and assign the POS tags from the sequence with the highest probability. Hidden Markov Models (HMMs) are probabilistic approaches to assign a POS Tag.

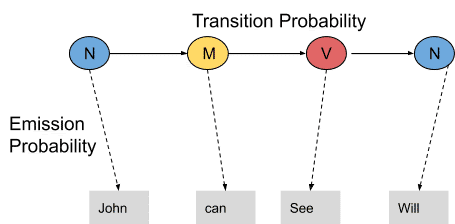

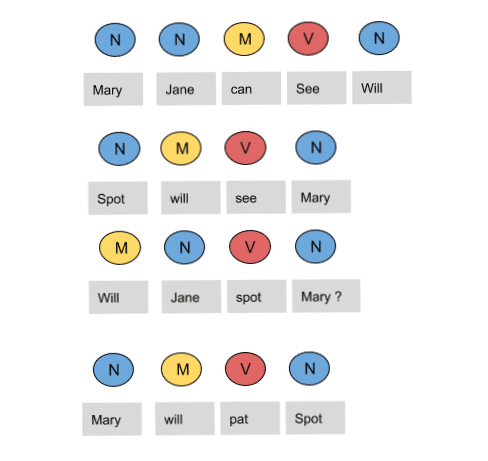

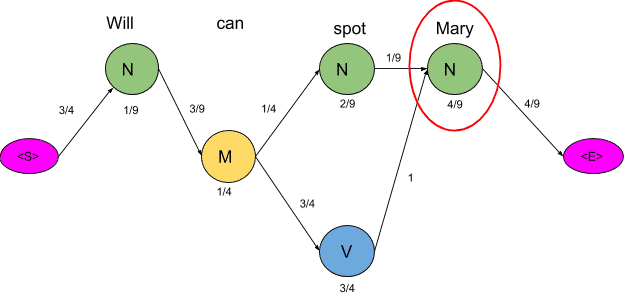

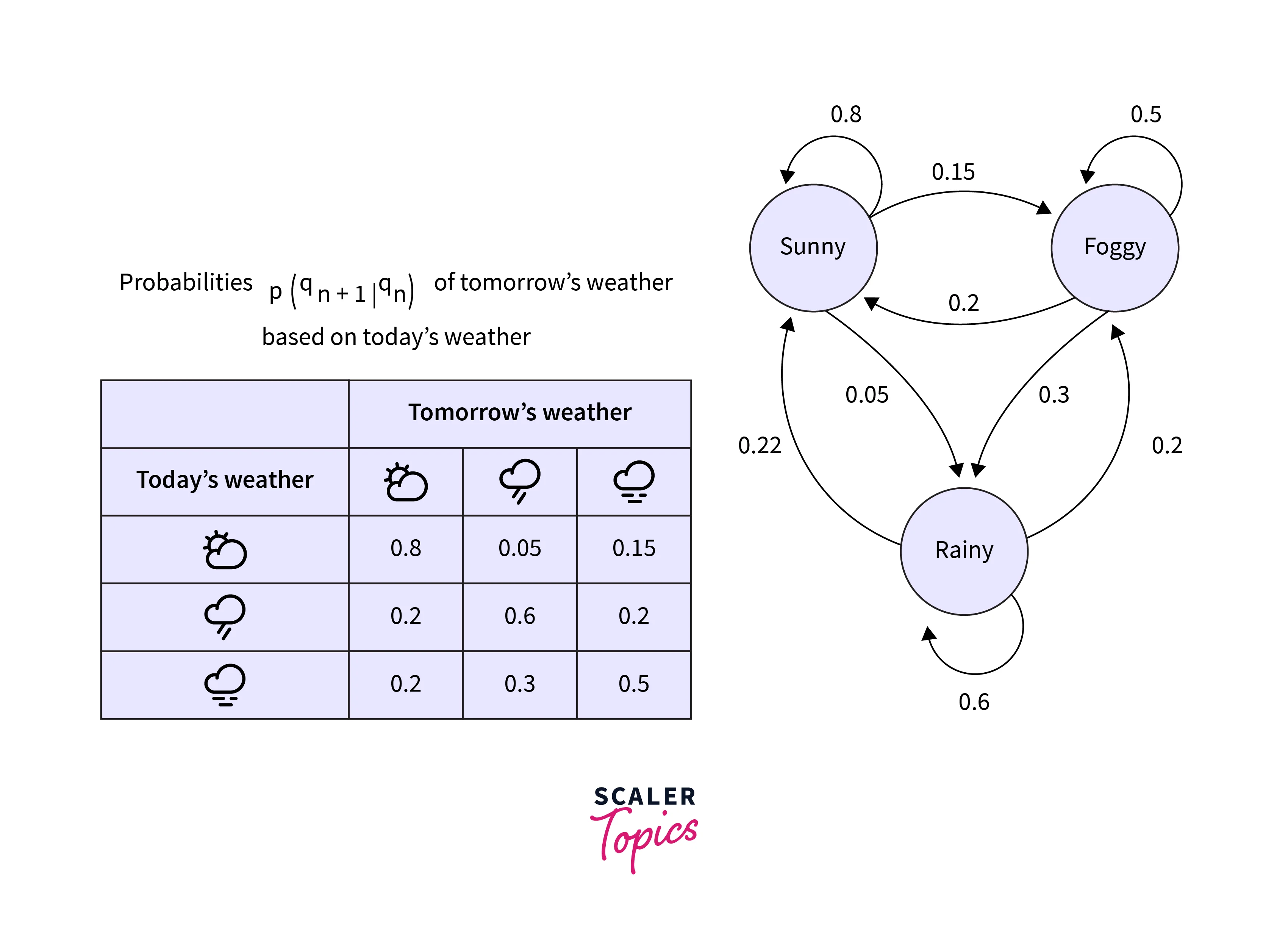

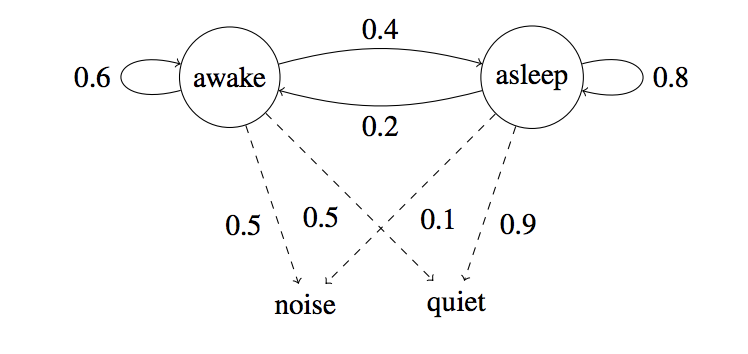

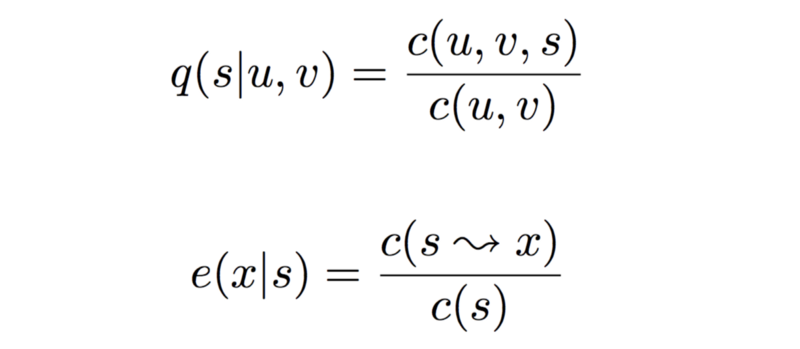

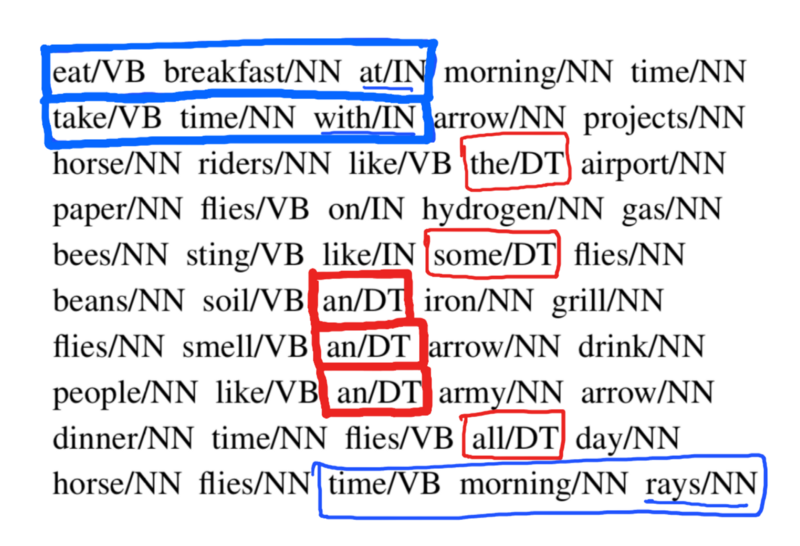

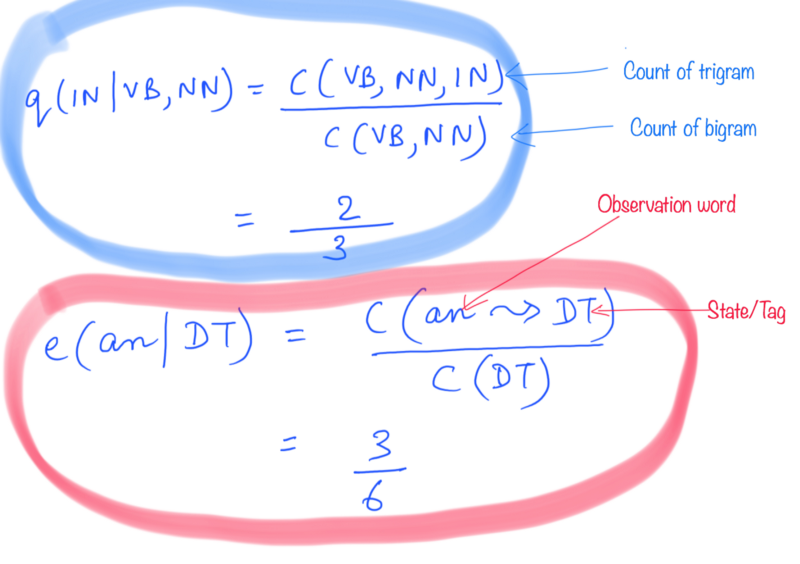

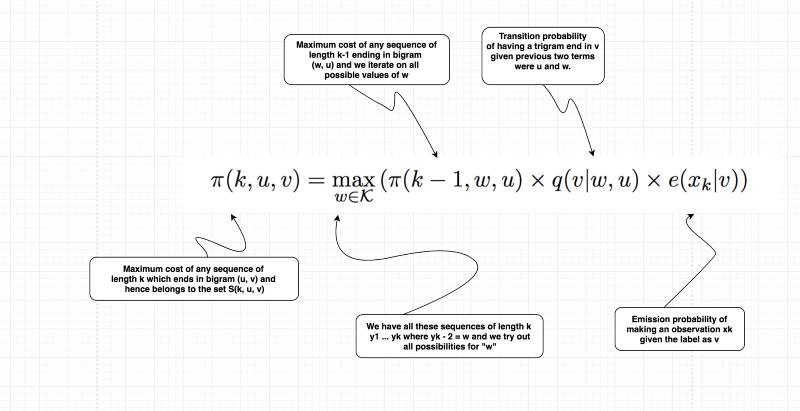

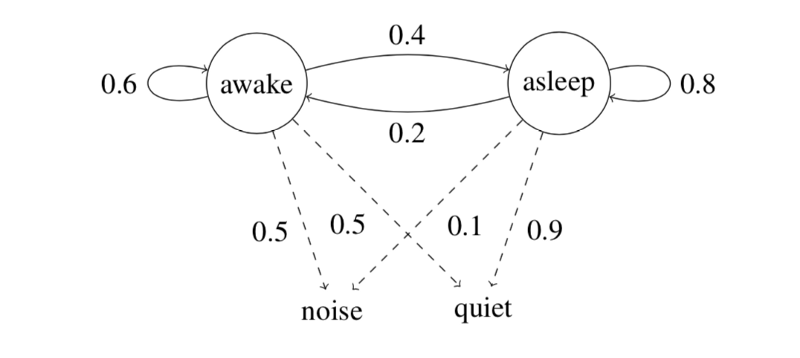

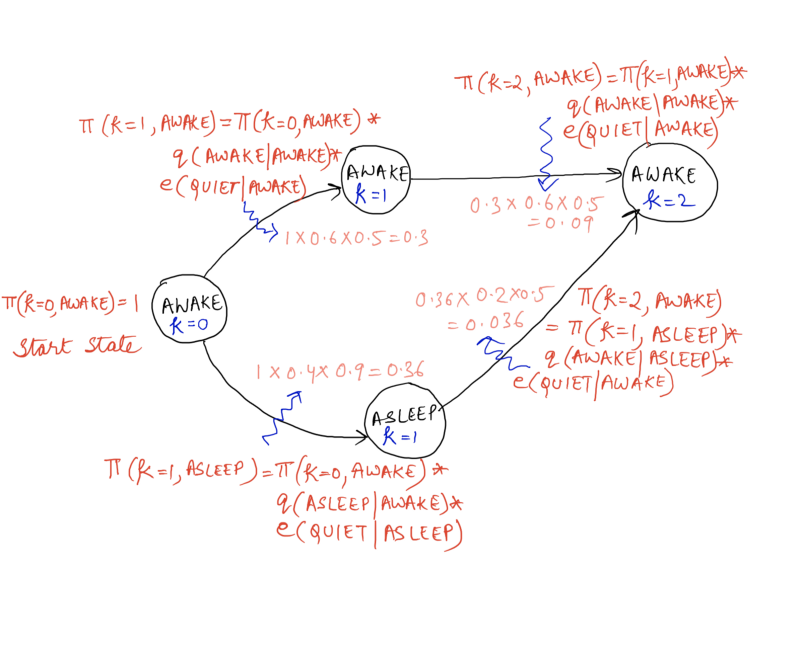

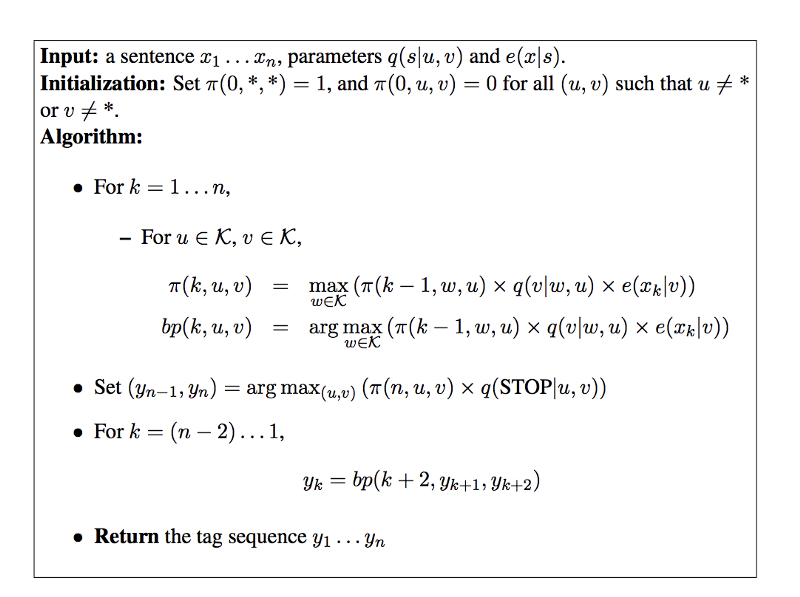

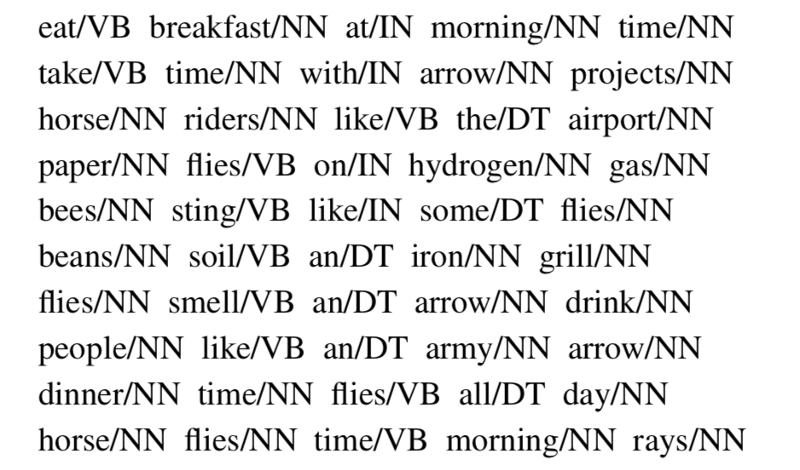

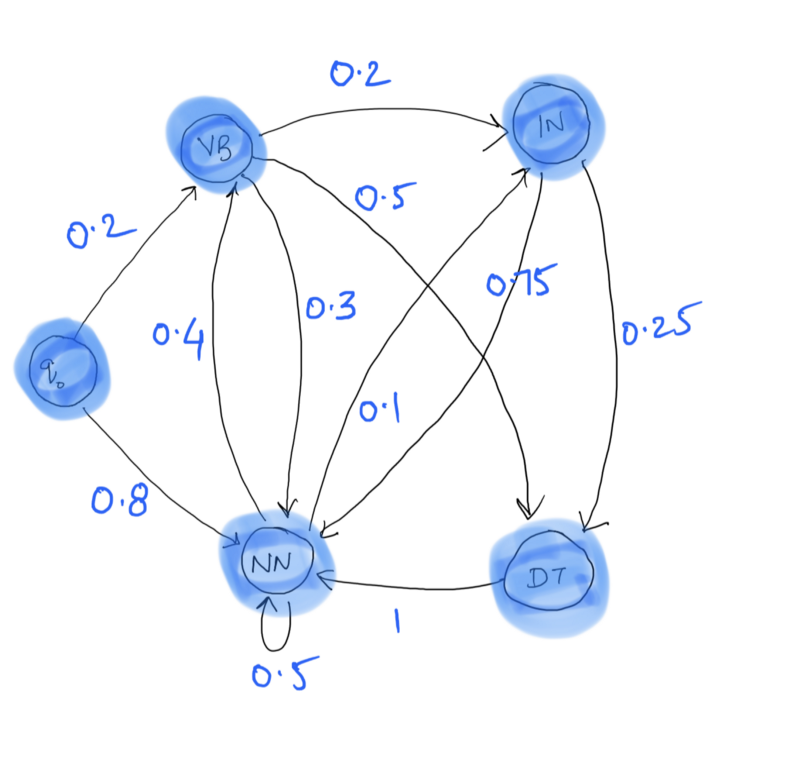

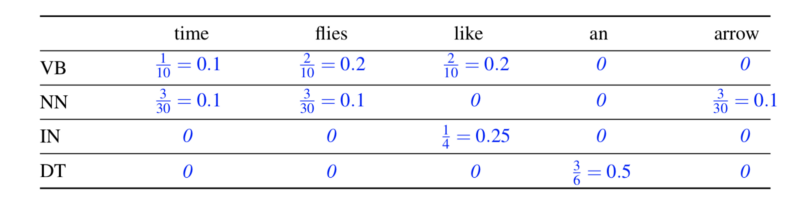

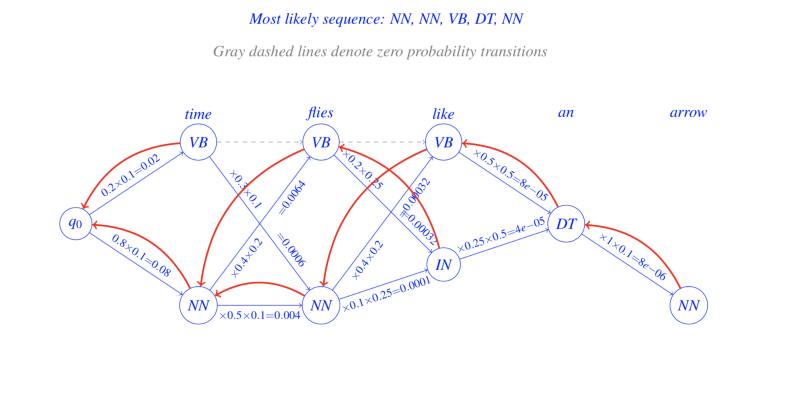

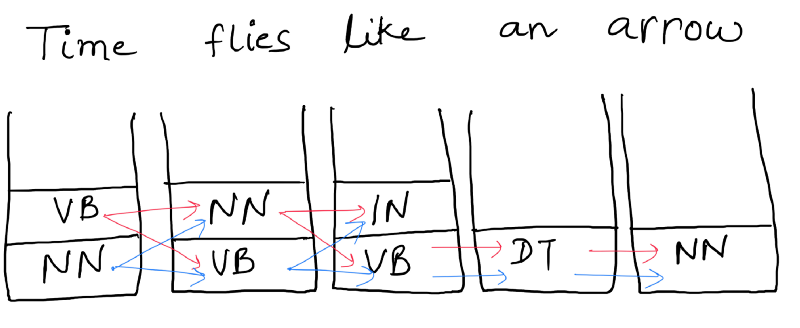

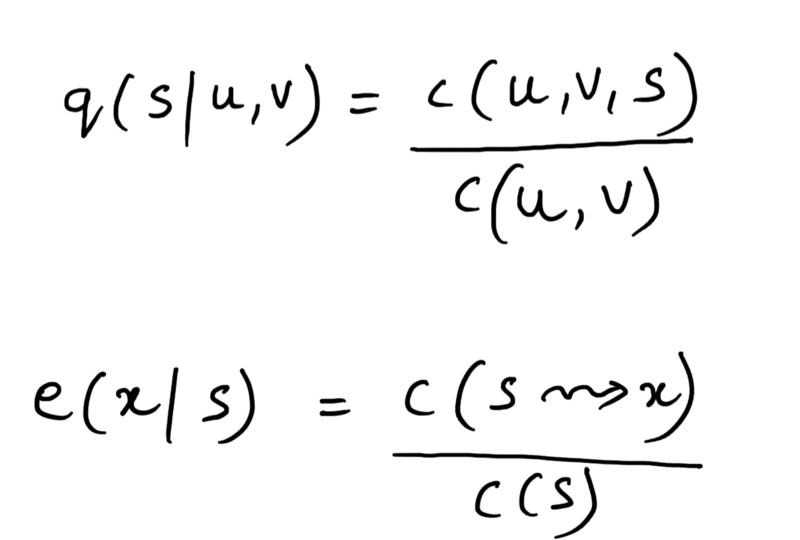

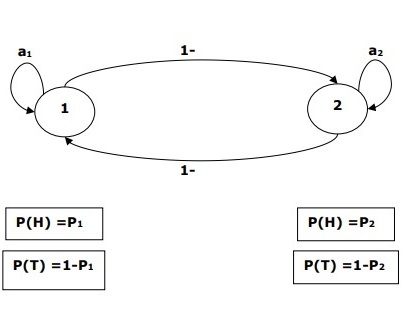

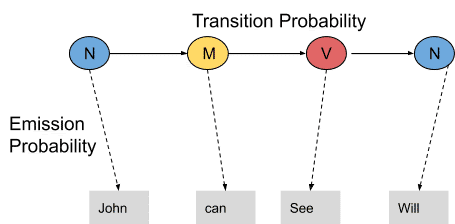

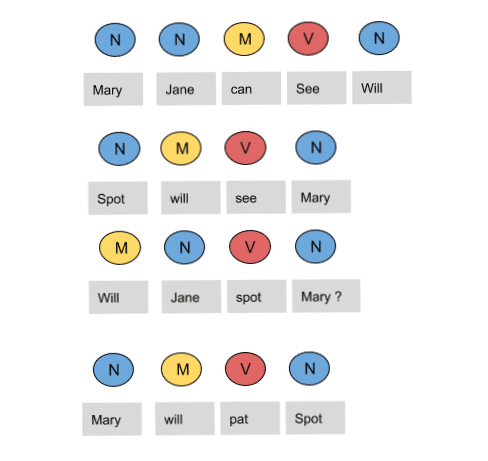

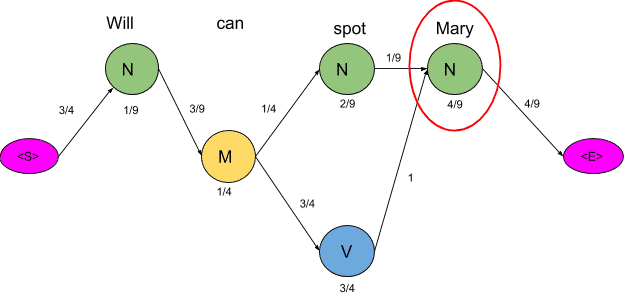

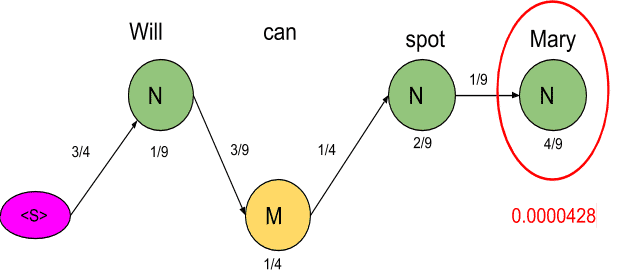

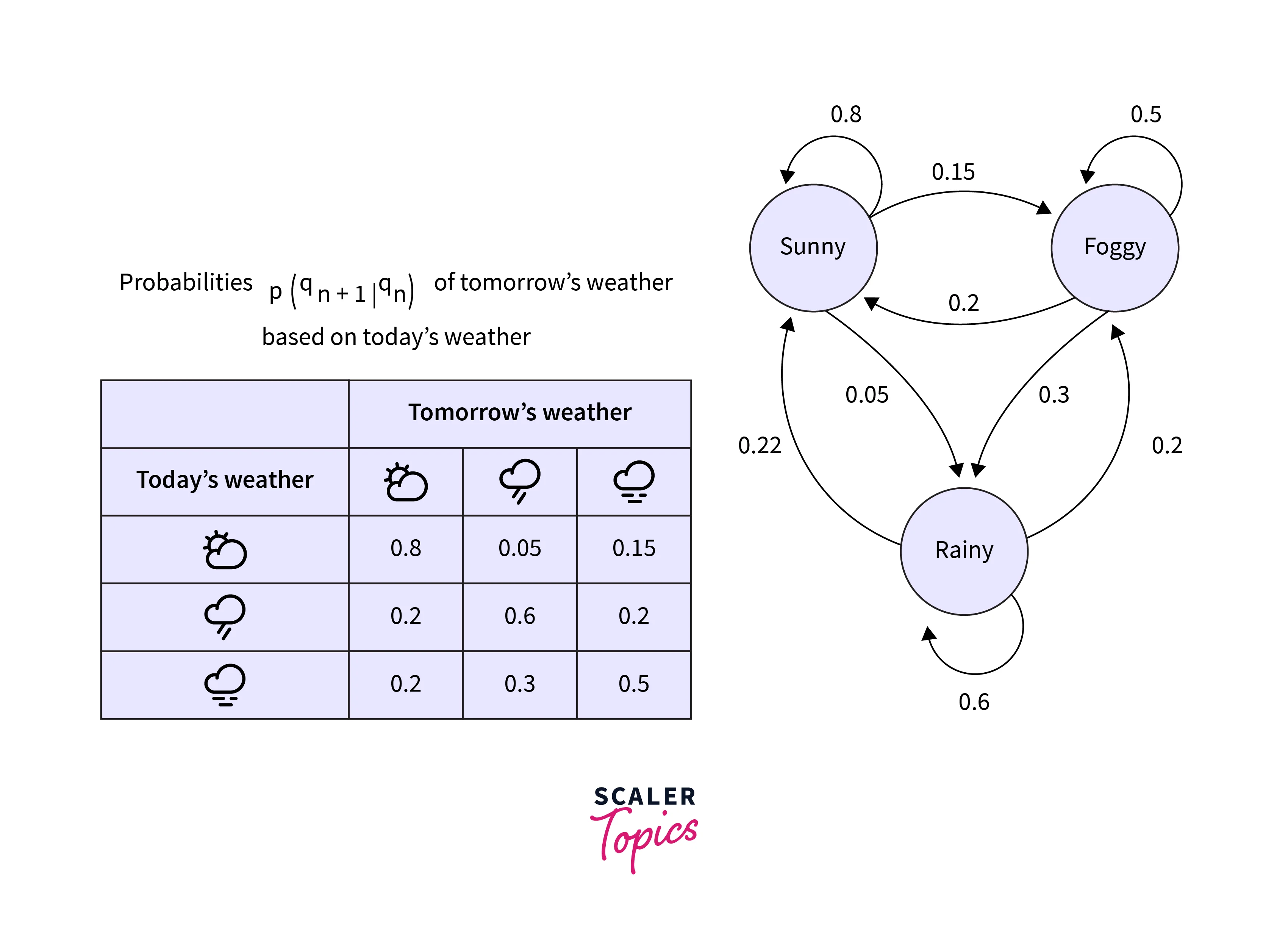

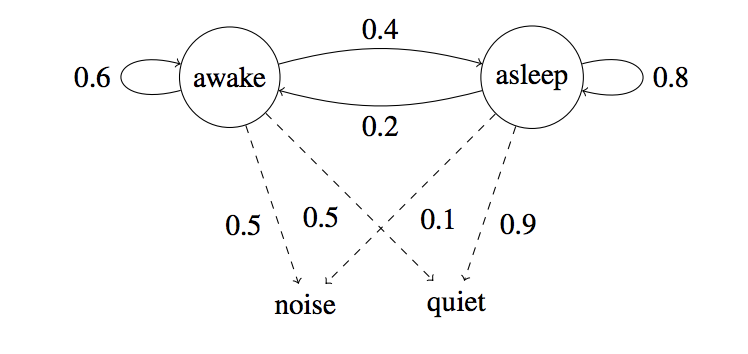

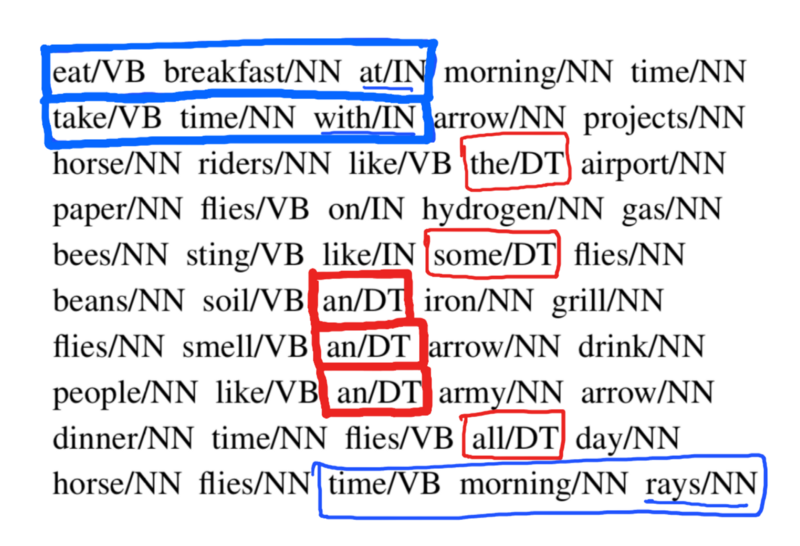

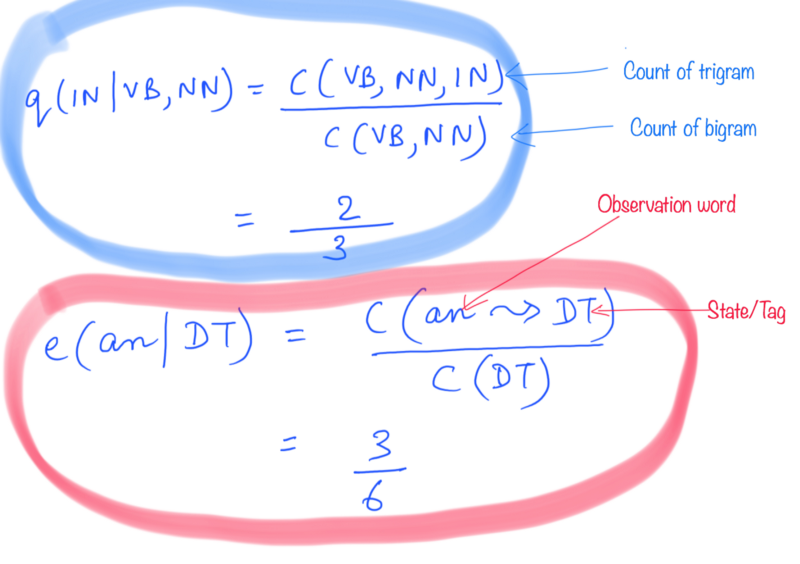

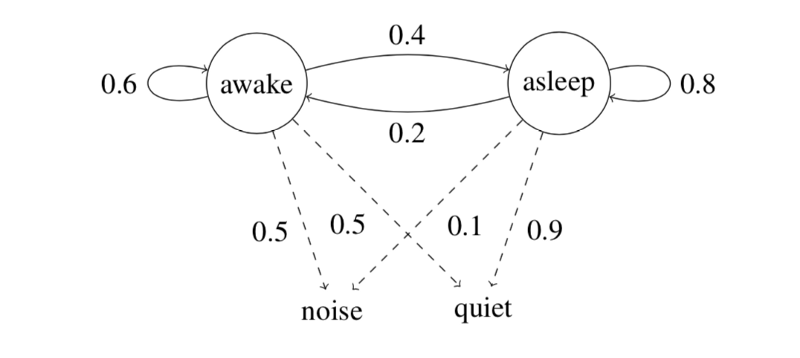

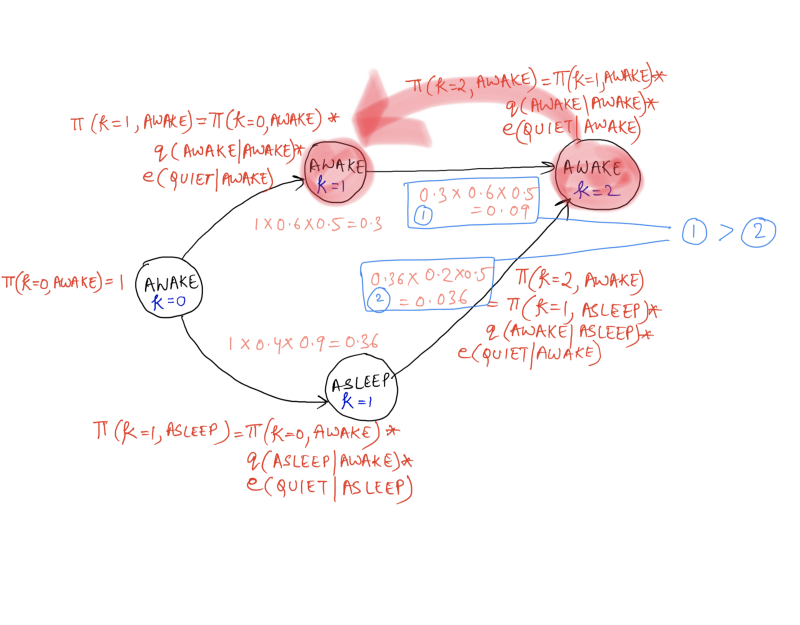

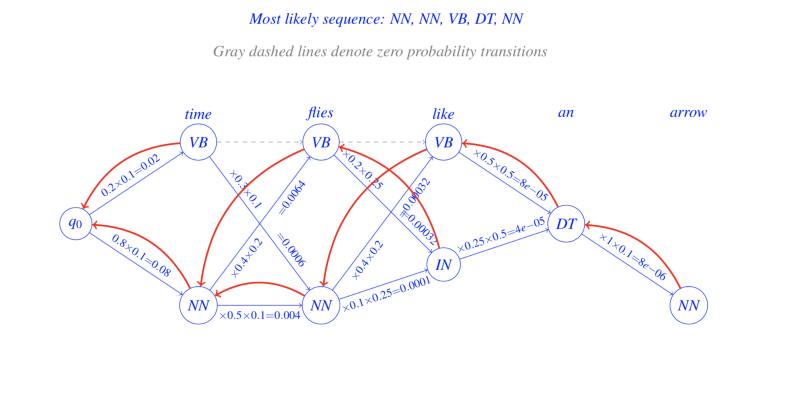

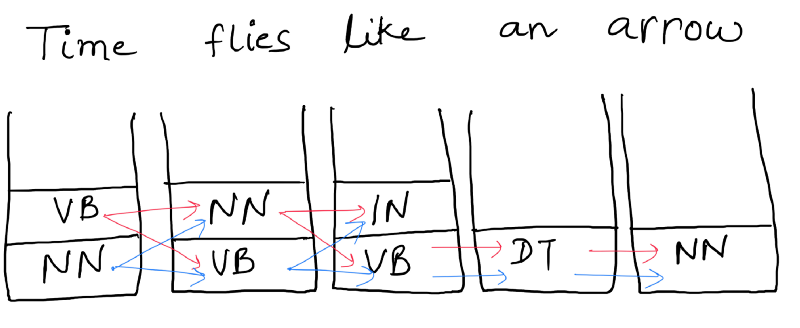

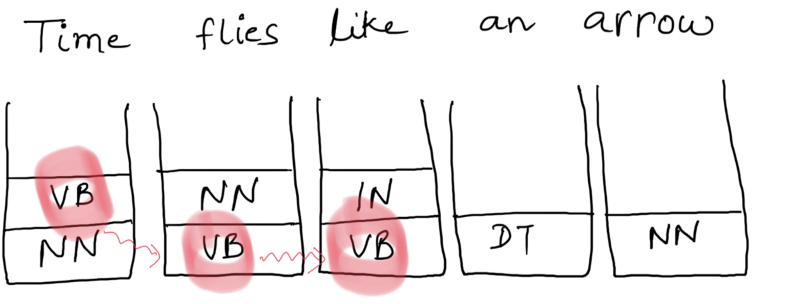

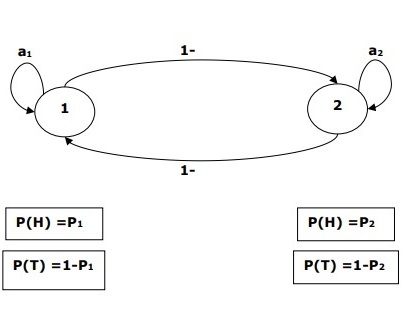

HMM (Hidden Markov Model) is a Stochastic technique for POS tagging. Hidden Markov models are known for their applications to reinforcement learning and temporal pattern recognition such as speech, handwriting, gesture recognition, musical score following, partial discharges, and bioinformatics. Let us consider an example proposed by Dr.Luis Serrano and find out how HMM selects an appropriate tag sequence for a sentence.  In this example, we consider only 3 POS tags that are noun, model and verb. Let the sentence “ Ted will spot Will ” be tagged as noun, model, verb and a noun and to calculate the probability associated with this particular sequence of tags we require their Transition probability and Emission probability. The transition probability is the likelihood of a particular sequence for example, how likely is that a noun is followed by a model and a model by a verb and a verb by a noun. This probability is known as Transition probability. It should be high for a particular sequence to be correct. Now, what is the probability that the word Ted is a noun, will is a model, spot is a verb and Will is a noun. These sets of probabilities are Emission probabilities and should be high for our tagging to be likely. Let us calculate the above two probabilities for the set of sentences below - Mary Jane can see Will

- Spot will see Mary

- Will Jane spot Mary?

- Mary will pat Spot

Note that Mary Jane, Spot, and Will are all names.  In the above sentences, the word Mary appears four times as a noun. To calculate the emission probabilities, let us create a counting table in a similar manner. | Words | Noun | Model | Verb | | Mary | 4 | 0 | 0 | | Jane | 2 | 0 | 0 | | Will | 1 | 3 | 0 | | Spot | 2 | 0 | 1 | | Can | 0 | 1 | 0 | | See | 0 | 0 | 2 | | pat | 0 | 0 | 1 |

Now let us divide each column by the total number of their appearances for example, ‘noun’ appears nine times in the above sentences so divide each term by 9 in the noun column. We get the following table after this operation. | Words | Noun | Model | Verb | | Mary | 4/9 | 0 | 0 | | Jane | 2/9 | 0 | 0 | | Will | 1/9 | 3/4 | 0 | | Spot | 2/9 | 0 | 1/4 | | Can | 0 | 1/4 | 0 | | See | 0 | 0 | 2/4 | | pat | 0 | 0 | 1 |

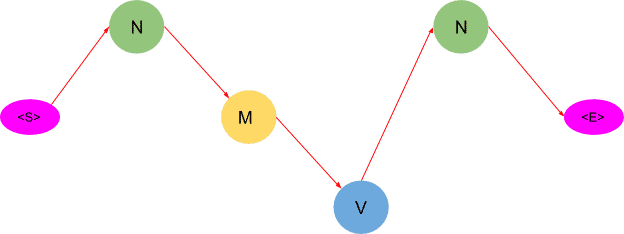

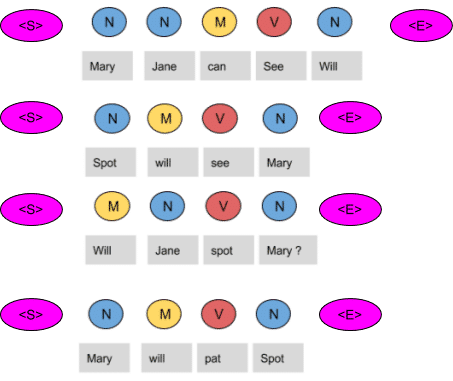

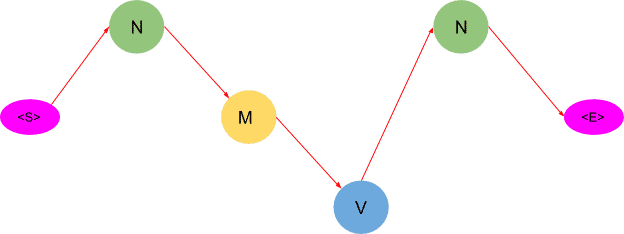

From the above table, we infer that The probability that Mary is Noun = 4/9 The probability that Mary is Model = 0 The probability that Will is Noun = 1/9 The probability that Will is Model = 3/4 In a similar manner, you can figure out the rest of the probabilities. These are the emission probabilities. Next, we have to calculate the transition probabilities, so define two more tags <S> and <E>. <S> is placed at the beginning of each sentence and <E> at the end as shown in the figure below.  Let us again create a table and fill it with the co-occurrence counts of the tags.

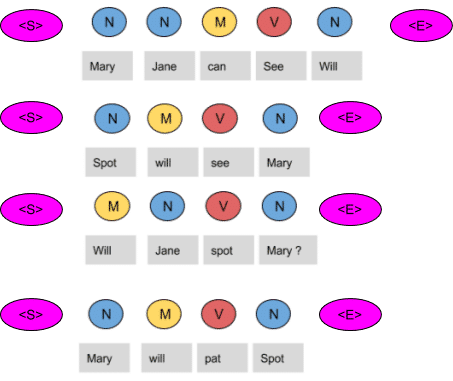

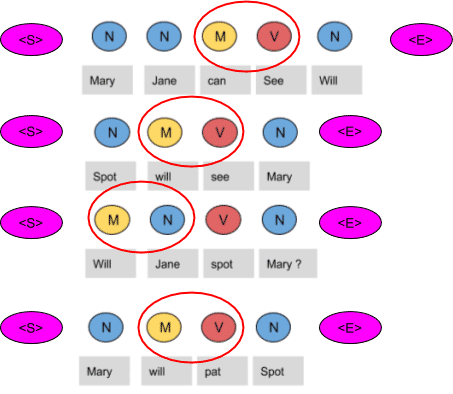

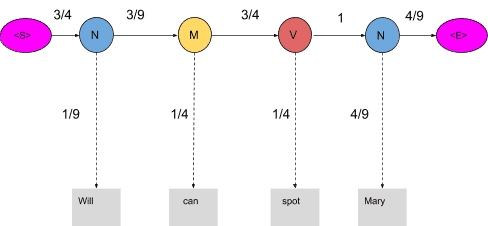

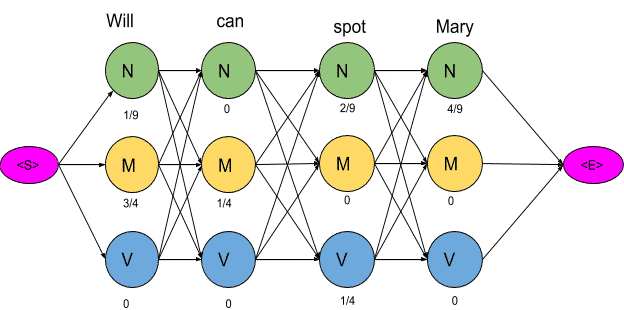

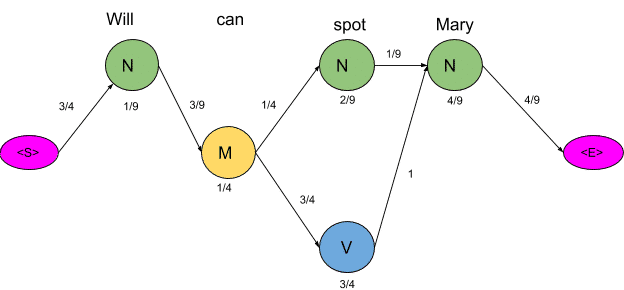

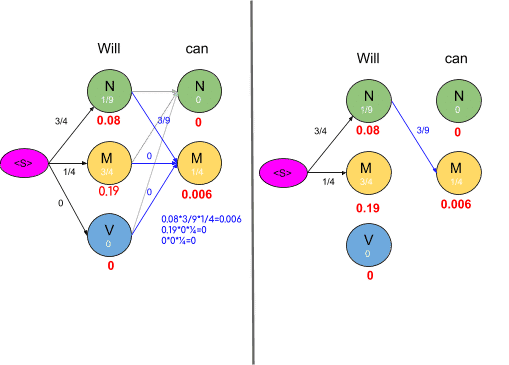

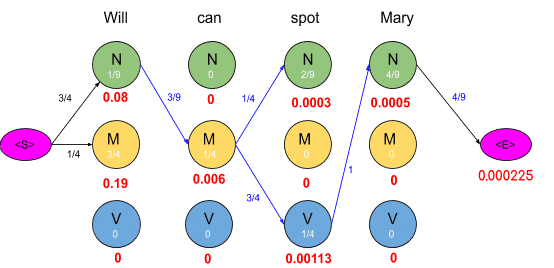

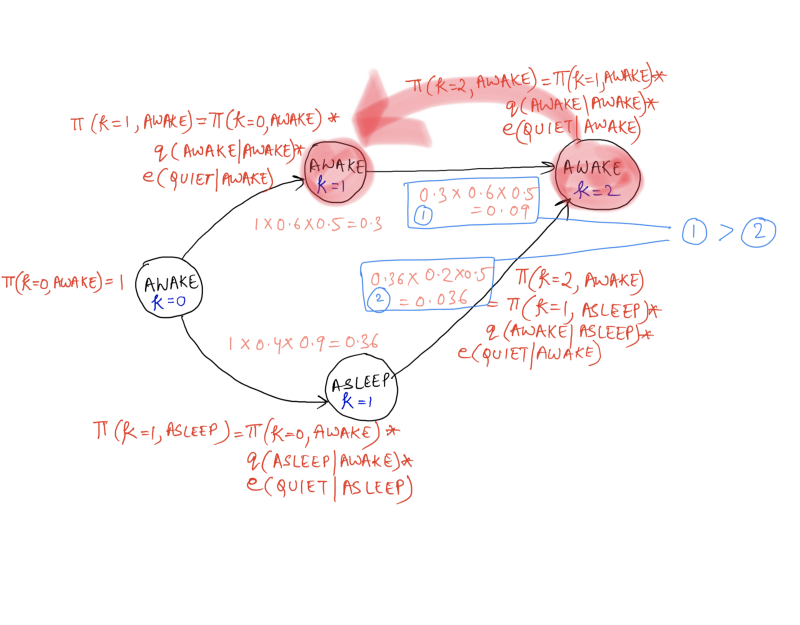

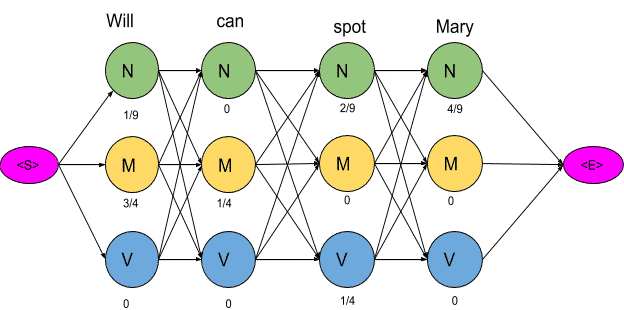

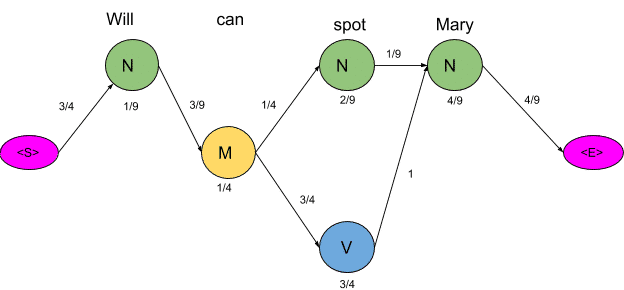

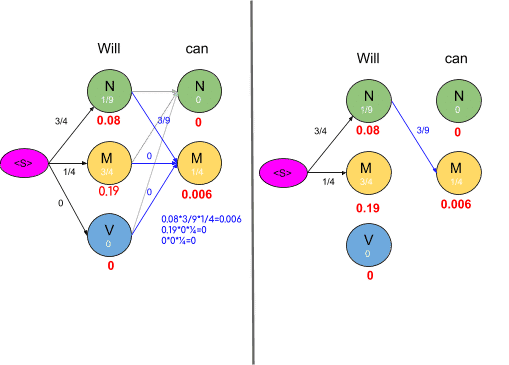

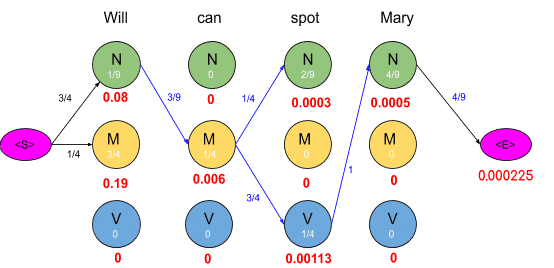

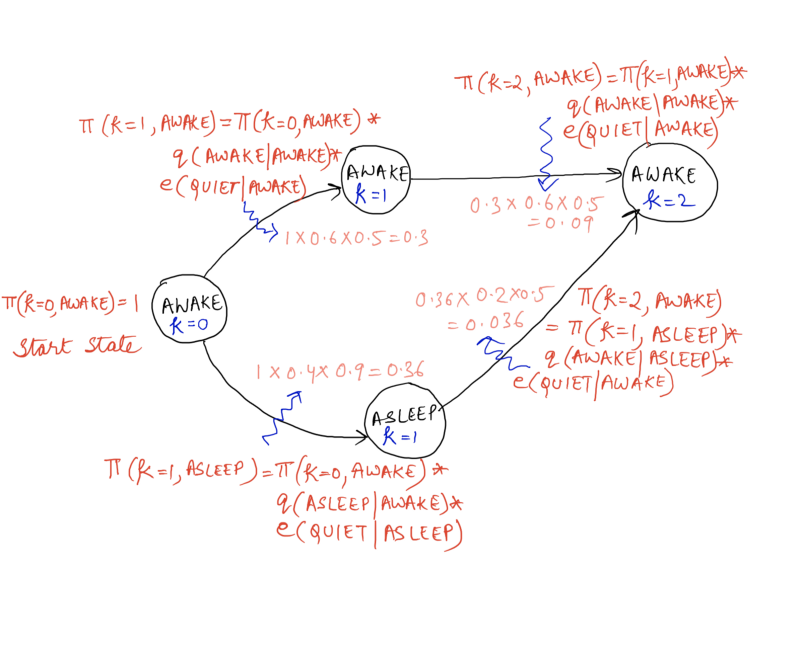

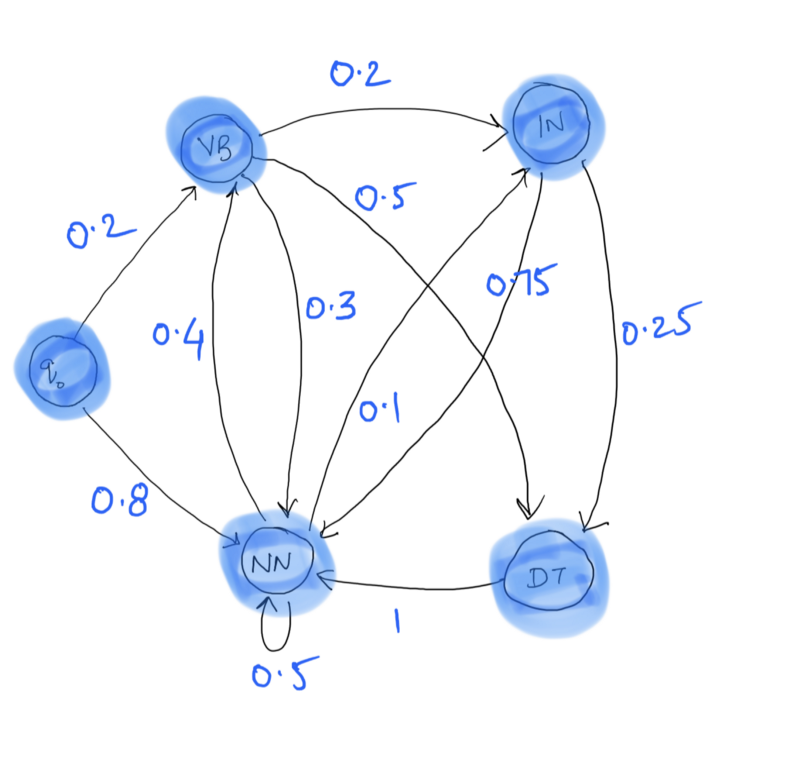

| N | M | V | <E> | | <S> | 3 | 1 | 0 | 0 | | N | 1 | 3 | 1 | 4 | | M | 1 | 0 | 3 | 0 | | V | 4 | 0 | 0 | 0 |

In the above figure, we can see that the <S> tag is followed by the N tag three times, thus the first entry is 3.The model tag follows the <S> just once, thus the second entry is 1. In a similar manner, the rest of the table is filled. Next, we divide each term in a row of the table by the total number of co-occurrences of the tag in consideration, for example, The Model tag is followed by any other tag four times as shown below, thus we divide each element in the third row by four.

| N | M | V | <E> | | <S> | 3/4 | 1/4 | 0 | 0 | | N | 1/9 | 3/9 | 1/9 | 4/9 | | M | 1/4 | 0 | 3/4 | 0 | | V | 4/4 | 0 | 0 | 0 |

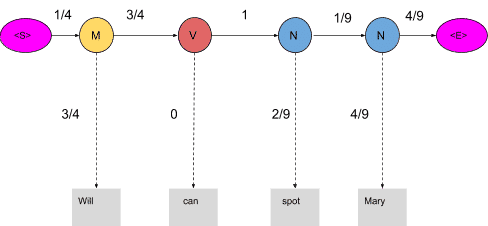

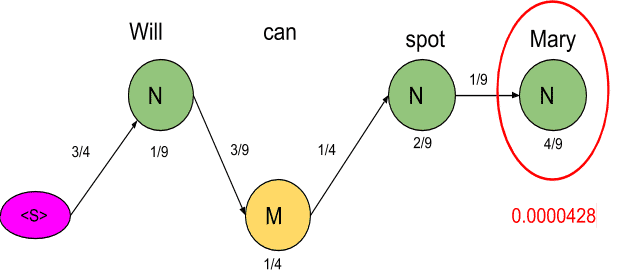

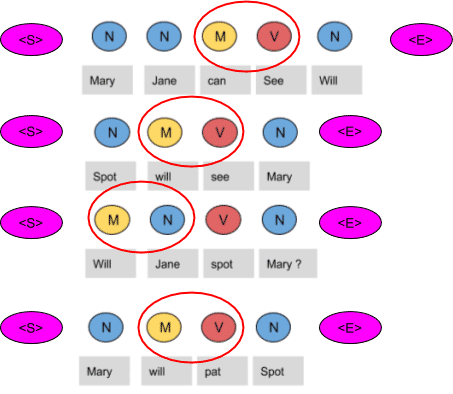

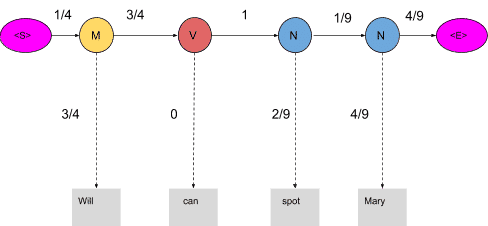

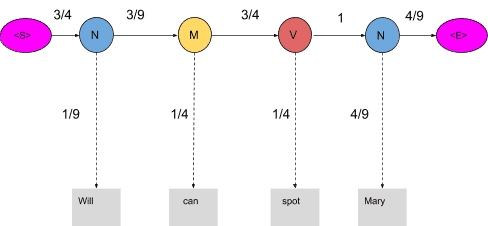

These are the respective transition probabilities for the above four sentences. Now how does the HMM determine the appropriate sequence of tags for a particular sentence from the above tables? Let us find it out. Take a new sentence and tag them with wrong tags. Let the sentence, ‘ Will can spot Mary’ be tagged as- - Will as a model

- Can as a verb

- Spot as a noun

- Mary as a noun

Now calculate the probability of this sequence being correct in the following manner.  The probability of the tag Model (M) comes after the tag <S> is ¼ as seen in the table. Also, the probability that the word Will is a Model is 3/4. In the same manner, we calculate each and every probability in the graph. Now the product of these probabilities is the likelihood that this sequence is right. Since the tags are not correct, the product is zero. 1/4*3/4*3/4*0*1*2/9*1/9*4/9*4/9=0 When these words are correctly tagged, we get a probability greater than zero as shown below  Calculating the product of these terms we get, 3/4*1/9*3/9*1/4*3/4*1/4*1*4/9*4/9= 0.00025720164 For our example, keeping into consideration just three POS tags we have mentioned, 81 different combinations of tags can be formed. In this case, calculating the probabilities of all 81 combinations seems achievable. But when the task is to tag a larger sentence and all the POS tags in the Penn Treebank project are taken into consideration, the number of possible combinations grows exponentially and this task seems impossible to achieve. Now let us visualize these 81 combinations as paths and using the transition and emission probability mark each vertex and edge as shown below.  The next step is to delete all the vertices and edges with probability zero, also the vertices which do not lead to the endpoint are removed. Also, we will mention-  Now there are only two paths that lead to the end, let us calculate the probability associated with each path. <S>→N→M→N→N→<E> = 3/4*1/9*3/9*1/4*1/4*2/9*1/9*4/9*4/9= 0.00000846754 <S>→N→M→N→V→<E>= 3/4*1/9*3/9*1/4*3/4*1/4*1*4/9*4/9= 0.00025720164 Clearly, the probability of the second sequence is much higher and hence the HMM is going to tag each word in the sentence according to this sequence. The Viterbi algorithm is a dynamic programming algorithm for finding the most likely sequence of hidden states—called the Viterbi path—that results in a sequence of observed events, especially in the context of Markov information sources and hidden Markov models (HMM). Source: Wikipedia In the previous section, we optimized the HMM and bought our calculations down from 81 to just two. Now we are going to further optimize the HMM by using the Viterbi algorithm. Let us use the same example we used before and apply the Viterbi algorithm to it.  Consider the vertex encircled in the above example. There are two paths leading to this vertex as shown below along with the probabilities of the two mini-paths.  Now we are really concerned with the mini path having the lowest probability. The same procedure is done for all the states in the graph as shown in the figure below  As we can see in the figure above, the probabilities of all paths leading to a node are calculated and we remove the edges or path which has lower probability cost. Also, you may notice some nodes having the probability of zero and such nodes have no edges attached to them as all the paths are having zero probability. The graph obtained after computing probabilities of all paths leading to a node is shown below:  To get an optimal path, we start from the end and trace backward, since each state has only one incoming edge, This gives us a path as shown below  As you may have noticed, this algorithm returns only one path as compared to the previous method which suggested two paths. Thus by using this algorithm, we saved us a lot of computations. After applying the Viterbi algorithm the model tags the sentence as following- - Will as a noun

- Can as a model

- Spot as a verb